CDA 3101 Spring 2016 Introduction to Computer Organization


CDA 3101 Spring 2016 Introduction to Computer Organization Physical Memory, Virtual Memory and Cache 22, 29 March 2016

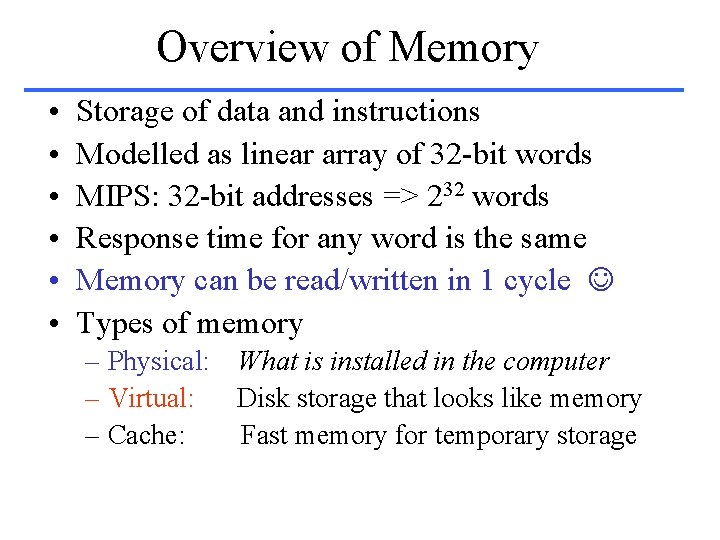

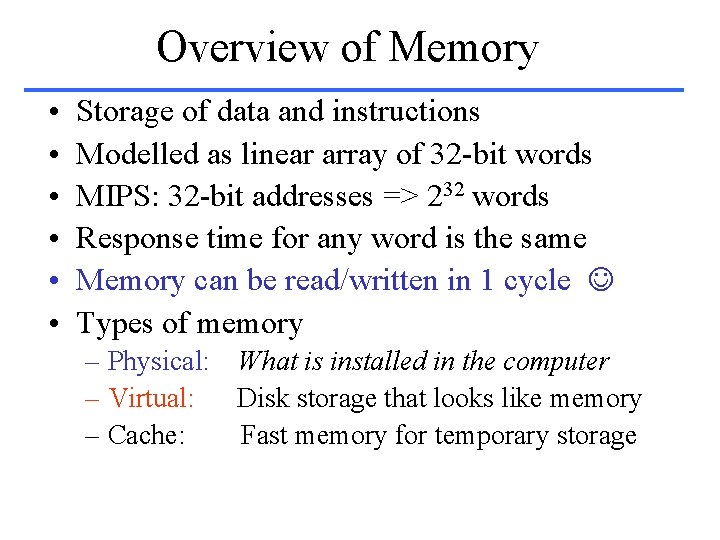

Overview of Memory

- Storage of data and instructions
- Modelled as a linear array of 32-bit words
- MIPS: 32-bit byte addresses => 2^32 bytes (2^30 words)
- Response time for any word is the same
- Memory can be read/written in 1 cycle
- Types of memory
  - Physical: what is installed in the computer
  - Virtual: disk storage that looks like memory
  - Cache: fast memory for temporary storage

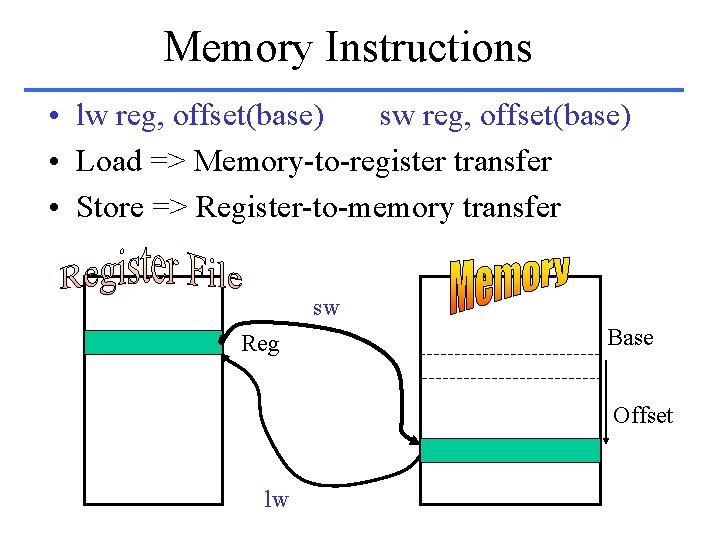

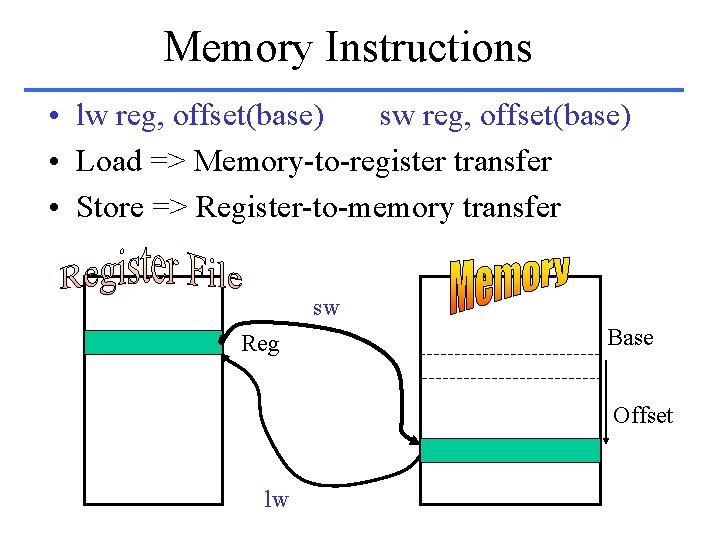

Memory Instructions

- `lw reg, offset(base)` and `sw reg, offset(base)`
- Load => memory-to-register transfer
- Store => register-to-memory transfer
- The memory address is the contents of the base register plus the constant offset
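To make `offset(base)` addressing concrete, here is a minimal Python sketch of `lw` and `sw` acting on a byte-addressed memory. It assumes 4-byte big-endian words and enforces word alignment (as MIPS does); the `Memory` class, the dict used as a register file, and the register values are hypothetical illustrations, not a real MIPS simulator API.

```python
class Memory:
    """Byte-addressed memory holding 4-byte big-endian words (assumed layout)."""

    def __init__(self, size_bytes: int) -> None:
        self.data = bytearray(size_bytes)

    def _check(self, addr: int) -> None:
        # MIPS requires word accesses to be 4-byte aligned.
        if addr % 4 != 0:
            raise ValueError(f"unaligned word address {addr:#x}")
        if not 0 <= addr <= len(self.data) - 4:
            raise IndexError(f"address {addr:#x} out of range")

    def load_word(self, addr: int) -> int:
        self._check(addr)
        return int.from_bytes(self.data[addr:addr + 4], "big")

    def store_word(self, addr: int, value: int) -> None:
        self._check(addr)
        self.data[addr:addr + 4] = (value & 0xFFFFFFFF).to_bytes(4, "big")


def lw(regs: dict, mem: Memory, rt: str, offset: int, base: str) -> None:
    """lw rt, offset(base): memory-to-register transfer."""
    regs[rt] = mem.load_word(regs[base] + offset)


def sw(regs: dict, mem: Memory, rt: str, offset: int, base: str) -> None:
    """sw rt, offset(base): register-to-memory transfer."""
    mem.store_word(regs[base] + offset, regs[rt])


mem = Memory(256)
regs = {"$t0": 0x1234, "$t1": 0, "$sp": 64}
sw(regs, mem, "$t0", -8, "$sp")   # Memory[64 - 8] <- $t0
lw(regs, mem, "$t1", -8, "$sp")   # $t1 <- Memory[56]
print(hex(regs["$t1"]))           # prints 0x1234
```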

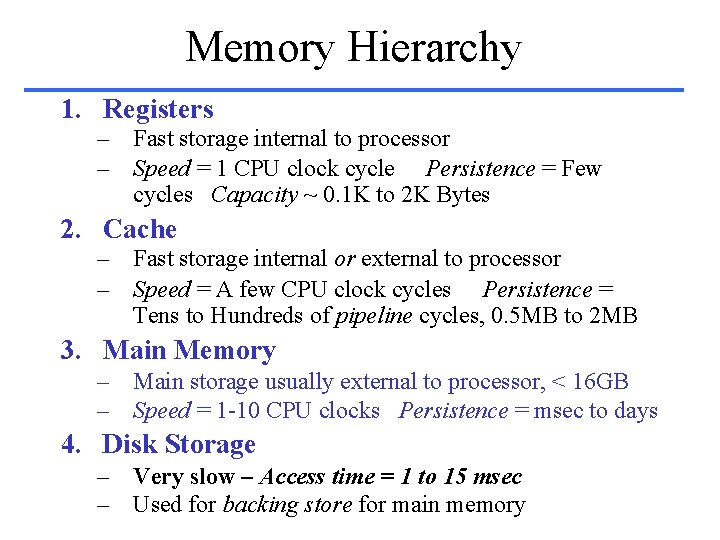

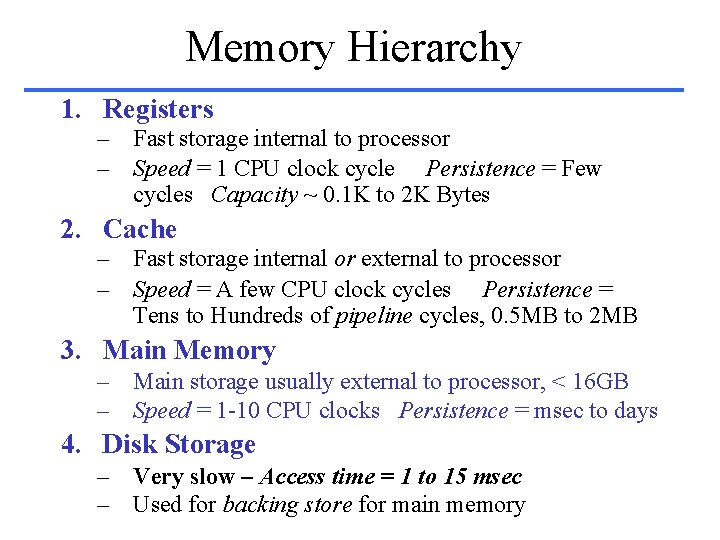

Memory Hierarchy

1. Registers
   - Fast storage internal to processor
   - Speed = 1 CPU clock cycle; Persistence = few cycles; Capacity ~ 0.1 K to 2 K bytes
2. Cache
   - Fast storage internal or external to processor
   - Speed = a few CPU clock cycles; Persistence = tens to hundreds of pipeline cycles; Capacity ~ 0.5 MB to 2 MB
3. Main Memory
   - Main storage, usually external to processor, < 16 GB
   - Speed = 1-10 CPU clocks; Persistence = msec to days
4. Disk Storage
   - Very slow: access time = 1 to 15 msec
   - Used as backing store for main memory

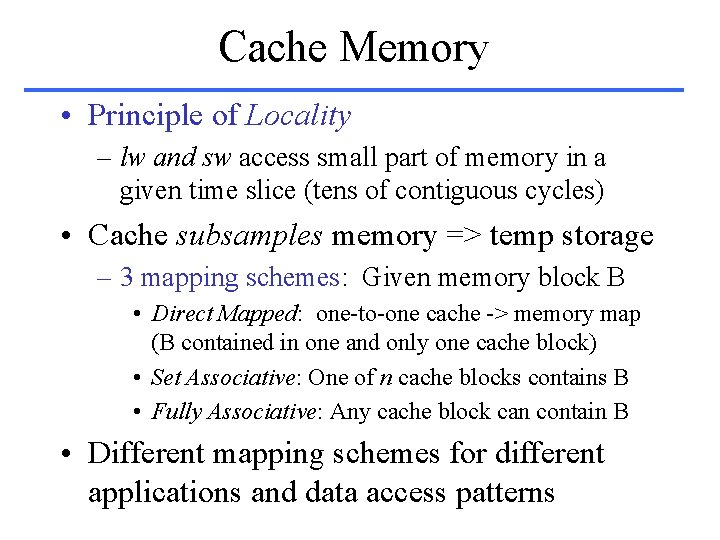

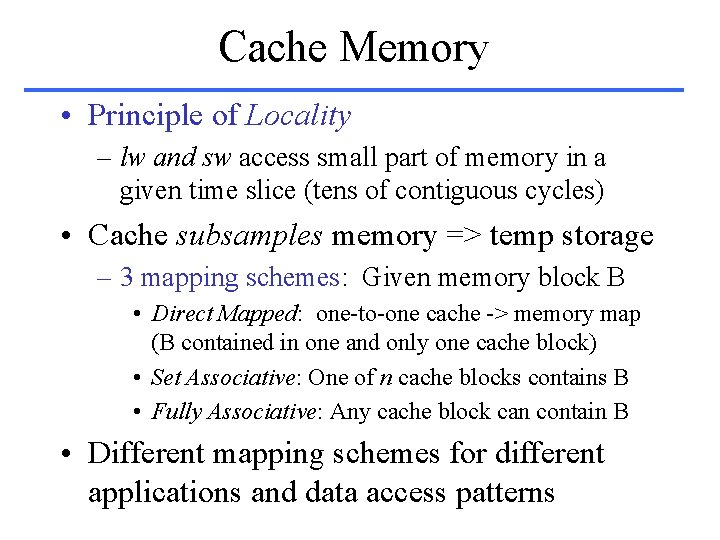

Physical vs. Virtual Memory

- Physical Memory
  - Installed in the computer: 4-32 GB RAM in a PC
  - Limited by size and power supply of the processor
  - Potential extent limited by size of the address space
- Virtual Memory
  - How to put a full 2^32-byte address space in your PC? Not as RAM: not enough space or power
  - Make the CPU believe it has the whole address space
  - Have the main memory act as a long-term cache
  - Page the main memory contents to/from disk
  - The page table (address system) works with the Memory Management Unit to control page storage/retrieval

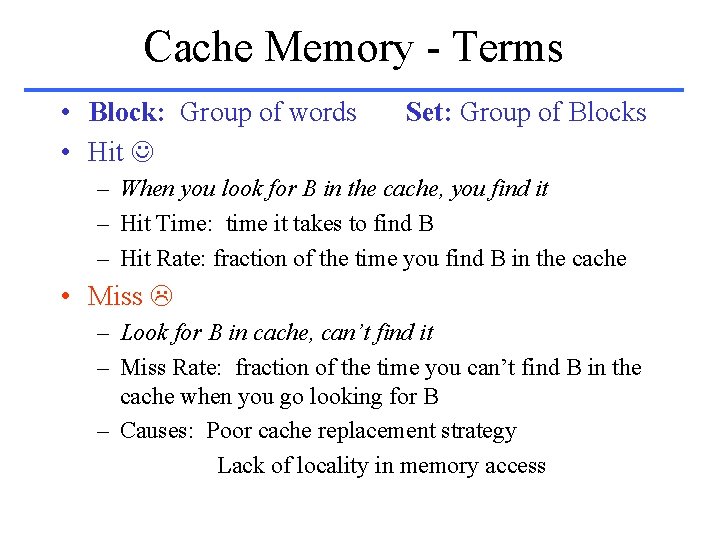

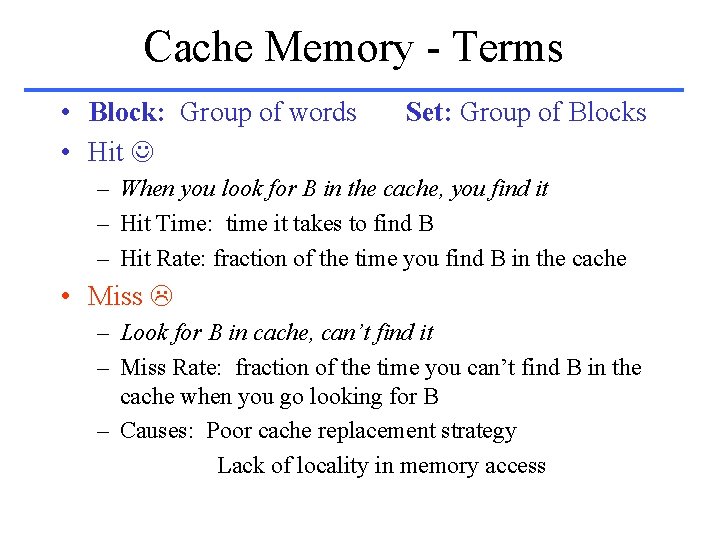

Cache Memory

- Principle of Locality
  - lw and sw access a small part of memory in a given time slice (tens of contiguous cycles)
- Cache subsamples memory => temporary storage
- 3 mapping schemes, given memory block B:
  - Direct Mapped: B can be placed in one and only one cache block (many memory blocks share each cache block)
  - Set Associative: B can be placed in any of the n cache blocks of one set
  - Fully Associative: any cache block can contain B
- Different mapping schemes suit different applications and data access patterns
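The three mapping schemes differ only in how many cache slots a memory block B may occupy. The hypothetical helper below treats all three as special cases of set-associative placement, with set = B mod (number of sets). It assumes the ways of set s occupy slots s*ways through s*ways + ways - 1; that slot numbering is an illustrative choice, not how hardware labels its blocks.

```python
def candidate_slots(block: int, num_blocks: int, ways: int) -> list:
    """Cache slots that may hold memory block `block` in a cache of
    `num_blocks` blocks organised as `ways`-way set associative.

    ways == 1          -> direct mapped (exactly one slot)
    ways == num_blocks -> fully associative (any slot)
    """
    if ways < 1 or num_blocks % ways != 0:
        raise ValueError("num_blocks must be a positive multiple of ways")
    num_sets = num_blocks // ways
    s = block % num_sets                      # the only set B may go into
    return [s * ways + w for w in range(ways)]


# Memory block 12 in an 8-block cache:
print(candidate_slots(12, 8, 1))  # direct mapped: [4]
print(candidate_slots(12, 8, 2))  # 2-way set associative, set 0: [0, 1]
print(candidate_slots(12, 8, 8))  # fully associative: all slots 0 to 7
```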

Cache Memory - Terms

- Block: group of words
- Set: group of blocks
- Hit: when you look for B in the cache, you find it
  - Hit Time: time it takes to find B
  - Hit Rate: fraction of the time you find B in the cache
- Miss: you look for B in the cache and can't find it
  - Miss Rate: fraction of the time you can't find B in the cache when you go looking for it (Miss Rate = 1 - Hit Rate)
  - Causes: poor cache replacement strategy; lack of locality in memory access

Set Associative Mapping

- Generalizes all cache mapping schemes
  - Assume the cache contains N blocks
  - 1-way SA cache: direct mapping
  - M-way SA cache: if M = N, then fully associative
- Advantage
  - Decreases miss rate (more places to find B)
- Disadvantage
  - Increases hit time (more places to look for B)
  - More complicated hardware
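A small simulation shows the advantage: with more ways, blocks that collide in one set can coexist, so the miss rate drops. This sketch assumes LRU replacement and a trace of memory block addresses; the function name `simulate` and the trace are hypothetical illustrations. It reports hit and miss rates as defined in the terms slide.

```python
from collections import OrderedDict


def simulate(trace, num_blocks, ways):
    """Return (hits, misses) for a trace of memory block addresses in a
    num_blocks-block, ways-way set-associative cache with LRU replacement."""
    num_sets = num_blocks // ways
    # One OrderedDict per set: keys are tags, ordered least -> most recently used.
    sets = [OrderedDict() for _ in range(num_sets)]
    hits = 0
    for block in trace:
        index, tag = block % num_sets, block // num_sets
        s = sets[index]
        if tag in s:
            hits += 1
            s.move_to_end(tag)           # now the most recently used
        else:
            if len(s) == ways:
                s.popitem(last=False)    # evict the least recently used block
            s[tag] = None
    return hits, len(trace) - hits


trace = [0, 8, 0, 6, 8]
for ways in (1, 2, 4):                   # direct mapped, 2-way, fully associative
    hits, misses = simulate(trace, num_blocks=4, ways=ways)
    print(f"{ways}-way: hit rate {hits / len(trace):.0%}, "
          f"miss rate {misses / len(trace):.0%}")
```

On this trace the misses fall from 5 (direct mapped) to 4 (2-way) to 3 (fully associative), at the price of comparing more tags per lookup.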

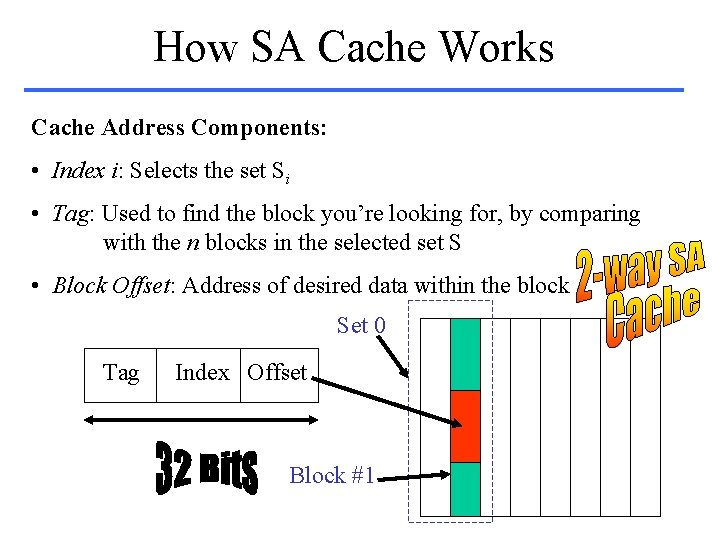

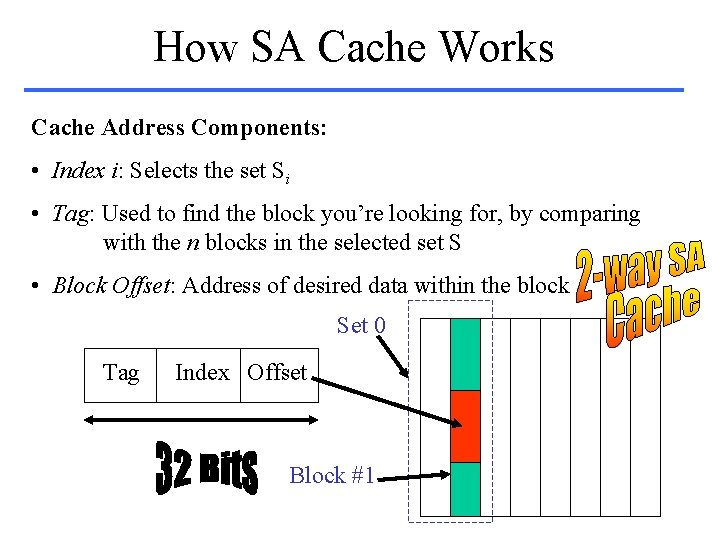

How SA Cache Works

Cache address components (Tag | Index | Offset):
- Index i: selects the set Si
- Tag: used to find the block you're looking for, by comparing it with the tags of the n blocks in the selected set Si
- Block Offset: address of the desired data within the block
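The Tag | Index | Offset split is just bit slicing of the address. The sketch below assumes byte addresses, 16-byte blocks (4 offset bits) and 256 sets (8 index bits, matching the 2-way example that follows); `split_address` is a hypothetical helper name.

```python
def split_address(addr: int, offset_bits: int, index_bits: int):
    """Split a byte address into (tag, index, offset) fields."""
    offset = addr & ((1 << offset_bits) - 1)                 # low bits: byte within block
    index = (addr >> offset_bits) & ((1 << index_bits) - 1)  # middle bits: which set
    tag = addr >> (offset_bits + index_bits)                 # remaining high bits
    return tag, index, offset


# 16-byte blocks -> 4 offset bits; 256 sets -> 8 index bits.
tag, index, offset = split_address(0x12345678, offset_bits=4, index_bits=8)
print(hex(tag), hex(index), hex(offset))  # 0x12345 0x67 0x8
```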

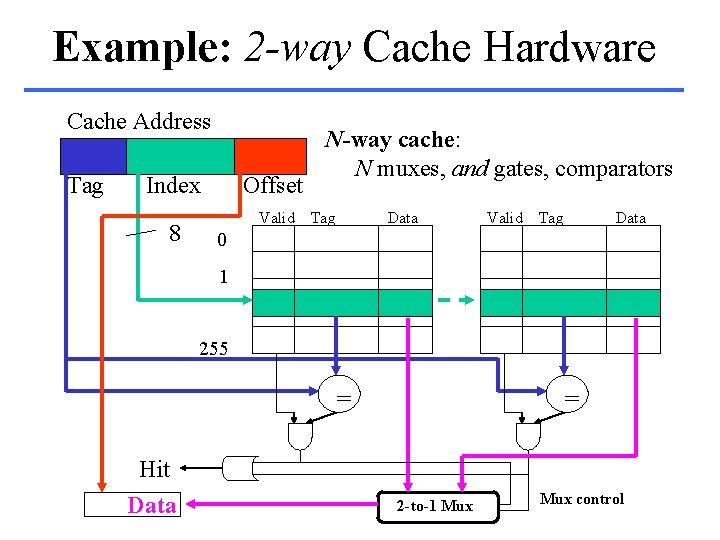

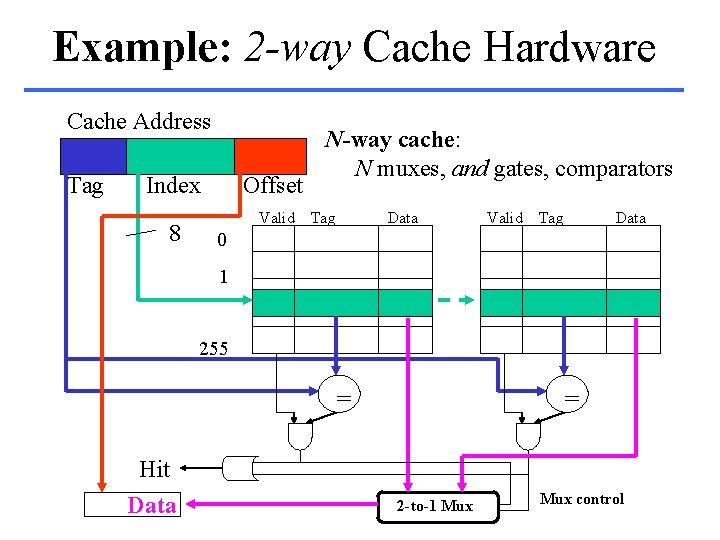

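Example: 2-way Cache Hardware

[Figure: the cache address is split into Tag, Index (8 bits) and Offset. The index selects one of 256 sets (0 to 255); each of the 2 ways holds Valid, Tag and Data fields. Each way's stored tag is compared (=) with the address tag and ANDed with its valid bit; the results form Hit and control a 2-to-1 mux that selects the Data. An N-way cache needs N comparators and AND gates, plus a mux to choose among N data outputs.]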
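The datapath in the figure can be mirrored in software: per-way comparators ANDed with the valid bit, an OR to form Hit, and a mux choosing the data. This is a behavioral sketch only, assuming 256 sets and 2 ways as in the figure; `Line`, `lookup`, and storing a single integer as a block's data are simplifications made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Line:
    valid: bool = False
    tag: int = 0
    data: int = 0          # stands in for a whole block of data


NUM_SETS, WAYS = 256, 2    # 8 index bits, 2-way


def lookup(cache, tag, index):
    """Behavioral model of an N-way set lookup: returns (hit, data)."""
    ways = cache[index]                                   # index selects the set
    match = [w.valid and w.tag == tag for w in ways]      # comparator AND valid, per way
    hit = any(match)                                      # OR of the per-way signals
    data = next((w.data for m, w in zip(match, ways) if m), None)  # the data mux
    return hit, data


cache = [[Line() for _ in range(WAYS)] for _ in range(NUM_SETS)]
cache[5][1] = Line(valid=True, tag=0x3A, data=42)
print(lookup(cache, 0x3A, 5))  # (True, 42)
print(lookup(cache, 0x3B, 5))  # (False, None)
```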

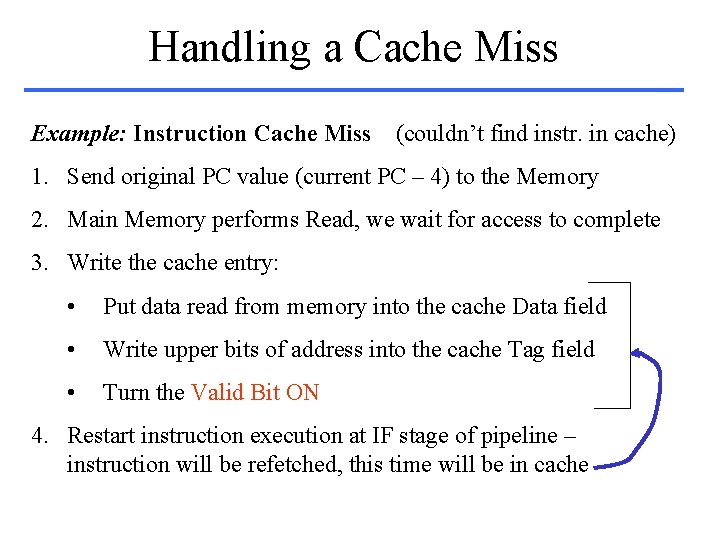

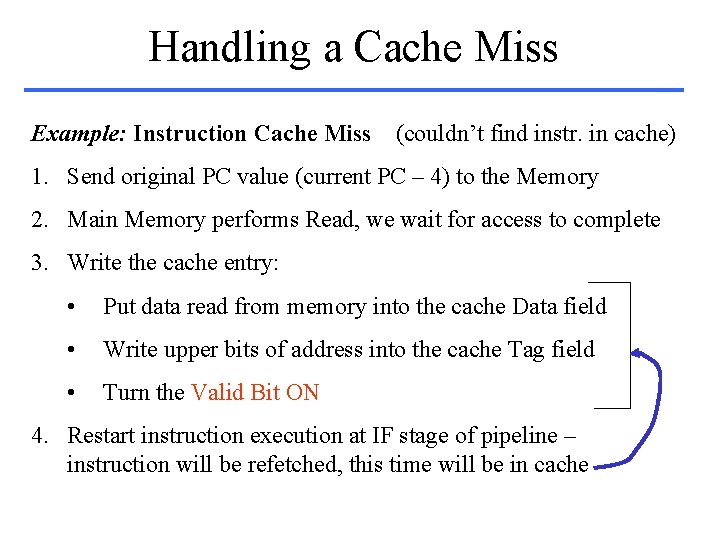

Handling a Cache Miss

Example: instruction cache miss (couldn't find the instruction in the cache)
1. Send the original PC value (current PC - 4) to the memory
2. Main memory performs a read; we wait for the access to complete
3. Write the cache entry:
   - Put the data read from memory into the cache Data field
   - Write the upper bits of the address into the cache Tag field
   - Turn the Valid bit ON
4. Restart instruction execution at the IF stage of the pipeline: the instruction will be refetched, and this time it will be in the cache
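The four steps can be sketched for a direct-mapped instruction cache with one-word blocks. Assumptions, all hypothetical: memory is a dict from byte address to instruction word, the cache has 256 entries, and `fetch` receives the address of the instruction being fetched, so it does not need to subtract 4 from an already-incremented PC as step 1 does.

```python
def fetch(icache, memory, pc, index_bits=8):
    """Fetch the word at byte address pc; returns (word, was_hit)."""
    index = (pc >> 2) & ((1 << index_bits) - 1)   # one-word (4-byte) blocks
    tag = pc >> (2 + index_bits)                  # upper bits of the address
    entry = icache[index]
    if entry["valid"] and entry["tag"] == tag:
        return entry["data"], True
    word = memory[pc]            # steps 1-2: send address to memory, wait for the read
    entry["data"] = word         # step 3: fill the Data field,
    entry["tag"] = tag           #         write the upper address bits into Tag,
    entry["valid"] = True        #         and turn the Valid bit on
    word, _ = fetch(icache, memory, pc, index_bits)  # step 4: refetch; now it hits
    return word, False


icache = [{"valid": False, "tag": 0, "data": 0} for _ in range(256)]
memory = {0x00400000: 0x2008000A}
print(fetch(icache, memory, 0x00400000))  # first fetch misses
print(fetch(icache, memory, 0x00400000))  # second fetch hits
```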

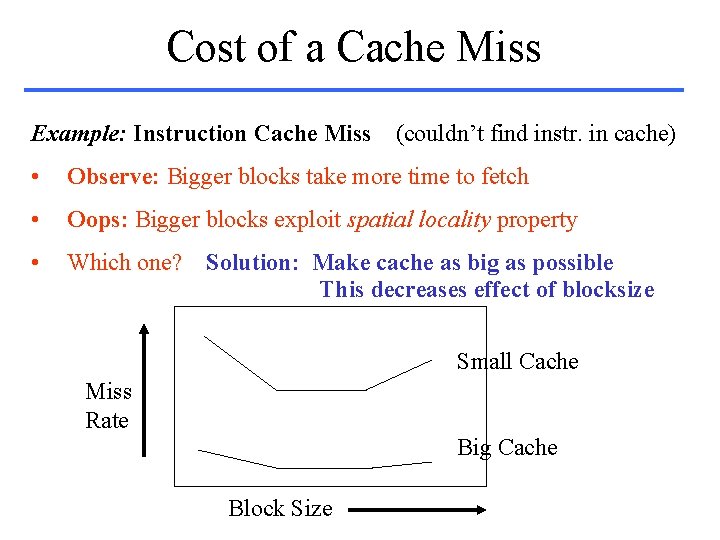

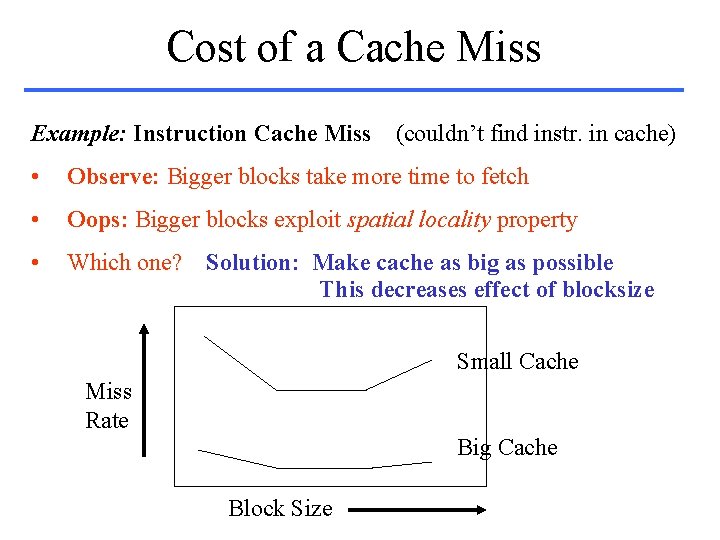

Cost of a Cache Miss

Example: instruction cache miss (couldn't find the instruction in the cache)
- Observe: bigger blocks take more time to fetch, which raises the miss penalty
- But: bigger blocks exploit the spatial locality property, which lowers the miss rate
- Which one wins? Solution: make the cache as big as possible; this decreases the effect of block size

[Figure: miss rate vs. block size, for a small cache and a big cache]
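The trade-off can be put into numbers with the average memory access time formula, AMAT = hit time + miss rate × miss penalty. The sketch assumes a simple miss-penalty model (a fixed memory latency plus one transfer cycle per word); every number below, including both miss rates, is made up for illustration rather than measured.

```python
def miss_penalty(block_words: int, latency: int, cycles_per_word: int) -> int:
    """Assumed model: fixed access latency plus one transfer per word."""
    return latency + block_words * cycles_per_word


def amat(hit_time: float, miss_rate: float, penalty: float) -> float:
    """Average memory access time, in cycles."""
    return hit_time + miss_rate * penalty


# Hypothetical cache: 1-cycle hit, 15-cycle latency, 1 cycle per word.
for block_words, miss_rate in ((4, 0.05), (16, 0.03)):   # made-up miss rates
    p = miss_penalty(block_words, latency=15, cycles_per_word=1)
    print(f"{block_words:2}-word blocks: penalty {p} cycles, "
          f"AMAT {amat(1, miss_rate, p):.2f} cycles")
```

With these assumed numbers the larger penalty (31 vs. 19 cycles) is almost exactly offset by the lower miss rate, which is why the outcome depends on how much the miss rate actually drops for a given cache size.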

Block Write Strategies

1) Write-Through
   - Write the data to (a) the cache and (b) the block in main memory
   - Advantage: misses are simpler and cheaper because you don't have to write the block back to a lower level
   - Advantage: easier implementation; only a write buffer is needed
2) Write-Back
   - Write the data only to the cache block; write to memory only when the block is replaced
   - Advantage: writes are limited only by the cache write rate
   - Advantage: multi-word writes are supported, since only one (efficient) write to main memory is made per block
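Counting main-memory writes shows why write-back saves memory traffic when the same block is written repeatedly. This is a sketch under stated assumptions: a direct-mapped cache, a trace containing only stores (given as block addresses), write-allocate on a miss, and dirty blocks flushed at the end; `memory_writes` is a hypothetical name.

```python
def memory_writes(store_trace, num_blocks, policy):
    """Count main-memory writes for a trace of store block addresses
    (direct-mapped cache, write-allocate, dirty blocks flushed at the end)."""
    if policy not in ("write-through", "write-back"):
        raise ValueError(f"unknown policy {policy!r}")
    tags = [None] * num_blocks
    dirty = [False] * num_blocks
    writes = 0
    for block in store_trace:
        i, tag = block % num_blocks, block // num_blocks
        if tags[i] != tag:                    # miss: allocate the block
            if dirty[i]:
                writes += 1                   # write back the dirty victim
            tags[i], dirty[i] = tag, False
        if policy == "write-through":
            writes += 1                       # every store also goes to memory
        else:
            dirty[i] = True                   # defer the memory write
    return writes + sum(dirty)                # flush blocks still dirty


trace = [0, 0, 0, 0, 4, 4, 0]                 # blocks 0 and 4 share cache block 0
for policy in ("write-through", "write-back"):
    print(policy, memory_writes(trace, 4, policy))  # 7 vs. 3 memory writes
```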

![Know these Concepts Techniques OVERVIEW pp 374 382 PattersonHennessey 5 th Ed Know these Concepts & Techniques OVERVIEW: pp. 374 -382 [Patterson&Hennessey, 5 th Ed. ]](https://slidetodoc.com/presentation_image_h2/6ed4ce3cb547aa68dd9847e76b9789fc/image-14.jpg)

Know these Concepts & Techniques [Patterson & Hennessy, 5th Ed.]
- OVERVIEW: pp. 374-382
- Design of Memory to Support Cache: pp. 383-397
- Measuring and Improving Cache Performance: pp. 398-417
- Dependable Memory Hierarchy: pp. 418-423
- Cache Coherence: pp. 466-469
- Cache Controllers: pp. 461-465, p. 470

READINGS FROM OUR COURSE WEB PAGE: http://www.cise.ufl.edu/~mssz/Comp.Org/CDA-mem.html
- 6.1. Overview of Memory Hierarchies
- 6.2. Basics of Cache and Virtual Memory
- 6.3. Memory Systems Performance Analysis & Metrics

New Topic: Virtual Memory (VM)

Observe: the cache is a cache for main memory. **Main memory is a cache for disk storage.**

Justification:
- VM allows efficient and safe sharing of memory among multiple programs (multiprogramming support)
- VM removes the programming headaches of a small amount of physical memory
- VM simplifies loading the program by supporting relocation

History: VM was developed first, then cache. Cache is based on VM technology, not conversely.

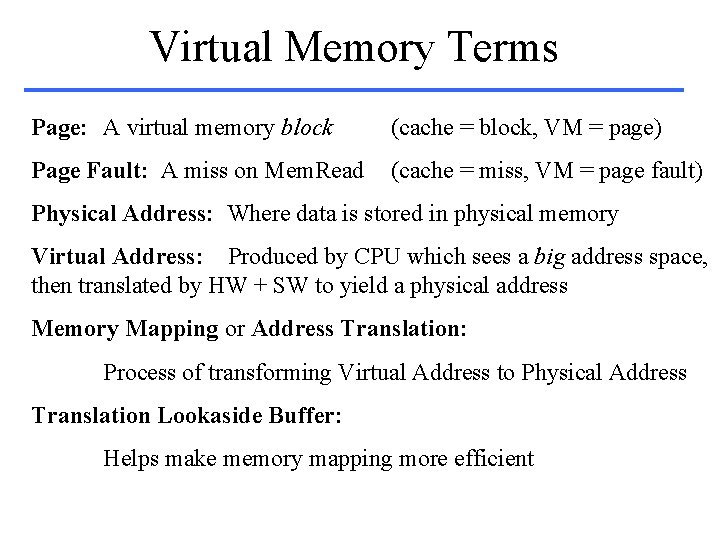

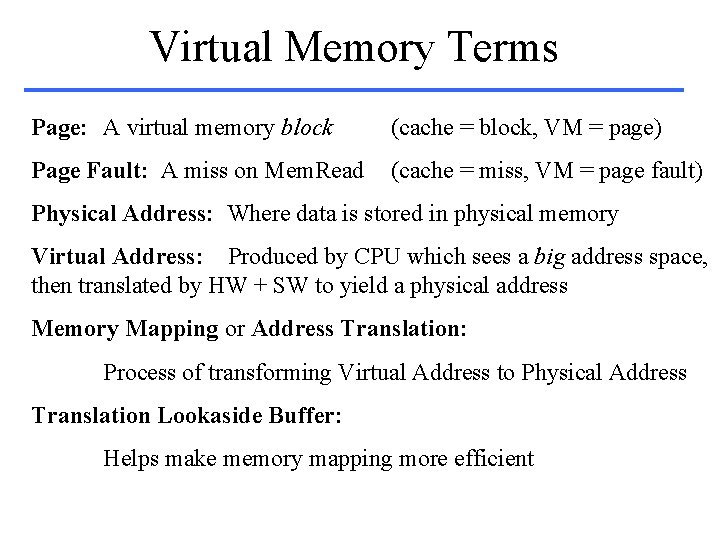

Virtual Memory Terms

- Page: a virtual memory block (cache = block, VM = page)
- Page Fault: a miss in main memory, i.e., the accessed page is not resident (cache = miss, VM = page fault)
- Physical Address: where data is stored in physical memory
- Virtual Address: produced by the CPU, which sees a big address space; translated by HW + SW to yield a physical address
- Memory Mapping or Address Translation: the process of transforming a virtual address into a physical address
- Translation Lookaside Buffer (TLB): a cache of recent address translations that makes memory mapping more efficient
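A TLB is itself a small cache whose "data" are address translations. The sketch below models a fully associative TLB with LRU replacement in front of a page table; the class name, the 2-entry size, and the dict standing in for the page table are hypothetical, and a missing page-table entry (a page fault) simply raises `KeyError` here.

```python
from collections import OrderedDict


class TLB:
    """Fully associative TLB with LRU replacement (illustrative model)."""

    def __init__(self, entries: int, page_table: dict) -> None:
        self.entries = entries
        self.page_table = page_table        # VPN -> PPN for resident pages
        self.cached = OrderedDict()         # VPN -> PPN, least recently used first
        self.hits = self.misses = 0

    def lookup(self, vpn: int) -> int:
        if vpn in self.cached:              # TLB hit: no page-table access
            self.hits += 1
            self.cached.move_to_end(vpn)
            return self.cached[vpn]
        self.misses += 1                    # TLB miss: walk the page table
        ppn = self.page_table[vpn]          # KeyError here models a page fault
        if len(self.cached) == self.entries:
            self.cached.popitem(last=False) # evict the least recently used entry
        self.cached[vpn] = ppn
        return ppn


tlb = TLB(entries=2, page_table={0: 7, 1: 3, 2: 9})
ppns = [tlb.lookup(vpn) for vpn in (0, 0, 1, 0, 2, 1)]
print(ppns, f"hits={tlb.hits}, misses={tlb.misses}")  # [7, 7, 3, 7, 9, 3] hits=2, misses=4
```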

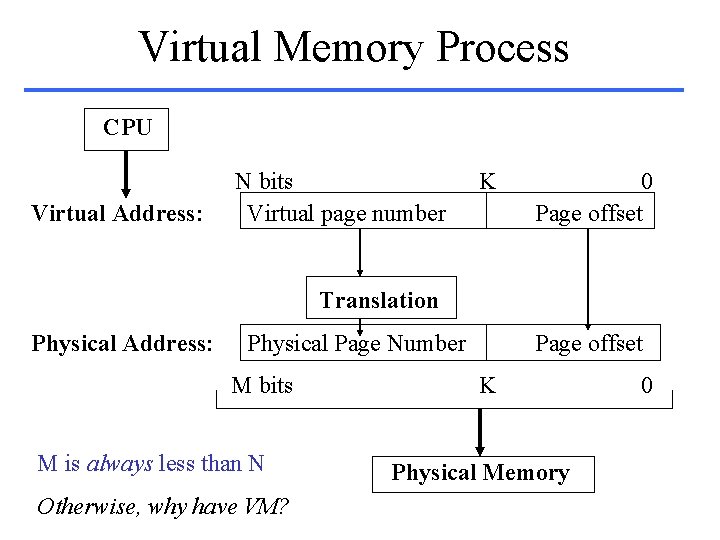

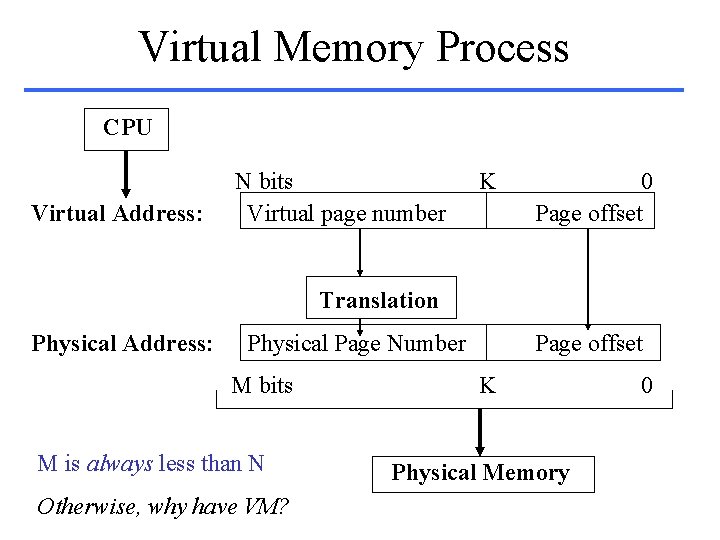

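Virtual Memory Process

[Figure: the CPU issues an N-bit virtual address made of a virtual page number (upper bits) and a K-bit page offset (bits K-1 to 0). Translation replaces the virtual page number with a physical page number, giving an M-bit physical address with the same K-bit page offset, which indexes physical memory. Typically M < N (otherwise, why have VM?).]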
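The translation step amounts to replacing the virtual page number and keeping the page offset. The sketch assumes 4 KiB pages (K = 12) and a dict mapping resident virtual page numbers to physical page numbers; both, and the `PageFault` exception, are illustrative assumptions.

```python
K = 12  # page offset bits: assumed 4 KiB pages


class PageFault(Exception):
    """Raised when the virtual page is not resident in physical memory."""


def translate(vaddr: int, page_table: dict) -> int:
    vpn = vaddr >> K                      # virtual page number (upper bits)
    offset = vaddr & ((1 << K) - 1)       # page offset passes through unchanged
    if vpn not in page_table:
        raise PageFault(f"VPN {vpn:#x} not in memory")
    return (page_table[vpn] << K) | offset


page_table = {0x12345: 0x2A}              # hypothetical resident page
print(hex(translate(0x12345678, page_table)))  # 0x2a678
```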

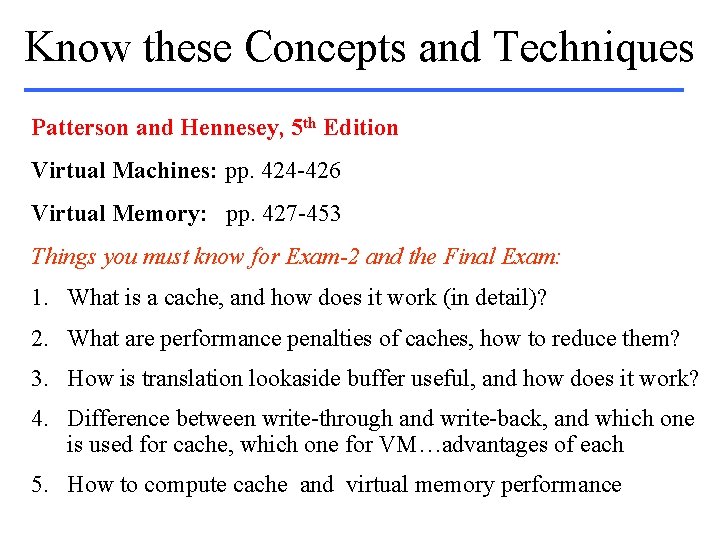

Virtual Memory Cost

Page fault: re-fetch the data from disk (millisecond latency)

Reducing VM cost:
- Make pages large enough to amortize the high disk access time
- Allow fully associative page placement to reduce the page fault rate
- Handle page faults in software, because there is a lot of time between disk accesses (versus cache, which is very fast)
- Use clever software algorithms to place and replace pages (we have the time), e.g., Least Recently Used versus random page replacement
- Use write-back (faster) instead of write-through (too slow, since every write would go to disk)
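The replacement policies mentioned above can be compared by counting page faults for a page reference string. This is a minimal sketch assuming `frames` physical page frames and either LRU or random replacement; the function name and the reference string are hypothetical, and random replacement is seeded so runs are repeatable.

```python
import random
from collections import OrderedDict


def count_page_faults(refs, frames, policy="lru", seed=0):
    """Count page faults for a page reference string with `frames` page frames."""
    rng = random.Random(seed)
    resident = OrderedDict()              # pages in memory, least recently used first
    faults = 0
    for page in refs:
        if page in resident:
            resident.move_to_end(page)    # a hit makes the page most recently used
            continue
        faults += 1                       # page fault: must fetch from disk
        if len(resident) == frames:
            if policy == "lru":
                victim = next(iter(resident))          # least recently used page
            elif policy == "random":
                victim = rng.choice(list(resident))    # any resident page
            else:
                raise ValueError(f"unknown policy {policy!r}")
            del resident[victim]
        resident[page] = None
    return faults


refs = [1, 2, 3, 1, 4, 1, 5, 2]
print("LRU faults:", count_page_faults(refs, frames=3))   # LRU faults: 6
print("Random faults:", count_page_faults(refs, 3, policy="random"))
```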

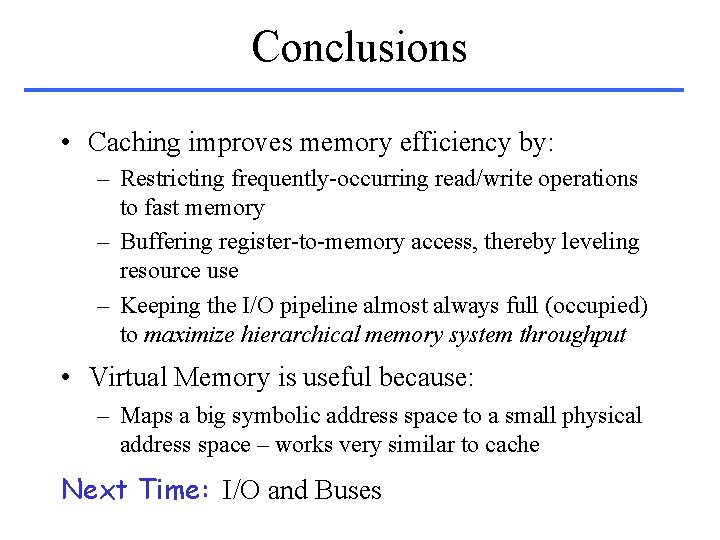

Know these Concepts and Techniques [Patterson and Hennessy, 5th Edition]
- Virtual Machines: pp. 424-426
- Virtual Memory: pp. 427-453

Things you must know for Exam-2 and the Final Exam:
1. What is a cache, and how does it work (in detail)?
2. What are the performance penalties of caches, and how can they be reduced?
3. How is the translation lookaside buffer useful, and how does it work?
4. What is the difference between write-through and write-back, which one is used for cache and which for VM, and what are the advantages of each?
5. How to compute cache and virtual memory performance

Conclusions

- Caching improves memory efficiency by:
  - Restricting frequently occurring read/write operations to fast memory
  - Buffering register-to-memory access, thereby leveling resource use
  - Keeping the I/O pipeline almost always full (occupied) to maximize hierarchical memory system throughput
- Virtual memory is useful because it:
  - Maps a big symbolic address space to a small physical address space
  - Works very similarly to a cache

Next Time: I/O and Buses

Think: Exam-2 + Weekend!!