

Caching
IRT 0180 Multimedia Technologies
Marika Kulmar, 5.10.2015

Multimedia service categories based on the scheduling policies of data delivery and on the degree of interactivity
• No VOD: similar to the broadcast TV service, where a user is a passive participant in the system and has no control over the video session. In this case, users do not request videos.
• Pay-per-view: similar to cable TV pay-per-view. Users sign up and pay for specific services scheduled at predetermined times.
• True VOD (TVOD): TVOD systems allow users to request and view any video at any time, with full VCR capabilities. The user has complete control over the video session.
• Near VOD (NVOD): users requesting the same video are served by one video stream to minimize the demand on server bandwidth. The server therefore controls when the video is served. VCR capabilities can be provided by using many channels that deliver the different portions of the same video requested by different users.
• Quasi-VOD (QVOD): QVOD is a threshold-based NVOD. The server delivers a video when the number of user requests for the video exceeds a predefined threshold. The throughput of QVOD systems is usually greater than that of NVOD systems.
http://ieeexplore.ieee.org/xpls/icp.jsp?arnumber=1323291
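The QVOD rule can be made concrete with a small scheduler. This is a minimal Python sketch, not taken from any real VOD system: the class name, the `threshold` parameter and the per-video request queue are illustrative assumptions. Requests for the same video are queued, and a single shared stream is started for the whole queue as soon as its length exceeds the threshold.

```python
from collections import defaultdict


class QvodServer:
    """Hypothetical QVOD scheduler: one shared stream per batch of requests."""

    def __init__(self, threshold: int) -> None:
        self.threshold = threshold          # assumed predefined threshold
        self.pending = defaultdict(list)    # video id -> waiting user ids
        self.streams_started = []           # (video id, users served) per stream

    def request(self, video: str, user: str) -> bool:
        """Queue a request; return True if it triggered a new shared stream."""
        self.pending[video].append(user)
        if len(self.pending[video]) > self.threshold:
            # Serve every waiting user of this video with a single stream.
            self.streams_started.append((video, self.pending.pop(video)))
            return True
        return False


server = QvodServer(threshold=2)
for user in ["u1", "u2", "u3", "u4"]:
    server.request("video 1", user)
print(server.streams_started)  # [('video 1', ['u1', 'u2', 'u3'])]
```

With a threshold of 2, the third request starts one stream for three users, and the fourth user waits for the next batch. A plain NVOD server would instead start streams on its own schedule, regardless of how many users are waiting.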

Methods to improve media services
• Media caching: a copy of a file is stored locally, or at least closer to the end-user device, so that it is available for reuse.
• Multicast delivery: one-to-many or many-to-many group communication in which information is addressed to a group of destination computers simultaneously.

Caching
• In conventional systems, caching is used to improve program performance.
• In video servers, caching is used to increase server capacity.
• Separate servers, called caching proxy servers, are used.
Proxy caching is effective to:
• reduce service delays
• reduce wide area network load
• reduce video server load
• provide better playback quality.

What to cache – caching policies
• Prefetching: works if the proxy can accurately predict users' access patterns; continuous media has a strong tendency to be accessed sequentially. The media file is divided into segments of blocks, with segment size increasing from the beginning of the file, and segments are cached. The later segments, if not cached, can be prefetched after the request is received.
• LRU (least recently used): based on the time since the last access of the object. Blocks retrieved by one client can be reused by other clients that closely follow it.
• LFU (least frequently used): based on the number of times the object is accessed. Typical video accesses follow the 80-20 rule (i.e., 80% of requests access 20% of video objects).
• Size: the replacement value is proportional to the size of the clip raised to some exponent.
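The difference between LRU and LFU is easiest to see on a short request sequence. The following Python sketch assumes unit-size objects and a capacity counted in objects. Access counts are kept even after an object is evicted, and LFU ties go to the least recently used object. Both choices are assumptions, since LFU has several variants.

```python
from collections import Counter, OrderedDict


def simulate(requests, capacity, policy):
    """Return (hits, cache contents) for unit-size objects under LRU or LFU."""
    cache = OrderedDict()   # keys ordered from least to most recently used
    freq = Counter()        # access counts, kept across evictions (assumed)
    hits = 0
    for obj in requests:
        freq[obj] += 1
        if obj in cache:
            hits += 1
            cache.move_to_end(obj)          # now the most recently used
            continue
        if len(cache) >= capacity:
            if policy == "LRU":
                victim = next(iter(cache))  # least recently used object
            elif policy == "LFU":
                # min() scans in recency order, so ties evict the older entry
                victim = min(cache, key=lambda k: freq[k])
            else:
                raise ValueError(f"unknown policy {policy!r}")
            del cache[victim]
        cache[obj] = True
    return hits, list(cache)


requests = ["A", "A", "B", "C", "A"]
print(simulate(requests, 2, "LRU"))  # (1, ['C', 'A'])
print(simulate(requests, 2, "LFU"))  # (2, ['C', 'A'])
```

LRU evicts the popular object A when C arrives because B was used more recently. LFU keeps A because it has been requested twice, which matches the 80-20 access pattern mentioned above.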

Proxy servers
• Single video proxy:
  – Cache allocation policy determines which portion of which video to cache in proxy storage.
  – Cache replacement policy determines which cache units, and how many of them, to purge when the current cache space is not enough to store new video data.
• Collaborative proxy caching: proxy servers are organized either as a peer group or as a cache hierarchy. Example: CDN.

Caching procedures
• Cache hit: the client request can be served by the caching proxy.
• Cache miss: the client request cannot be served by the caching proxy. The proxy sends the request to the server, relays the reply to the client and caches the reply.
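The hit and miss procedures map directly onto a request handler. This is a minimal Python sketch with a hypothetical `fetch_from_server` callable standing in for the origin server. It leaves out any replacement policy, so the cache grows without bound.

```python
from typing import Callable, Dict, List


class CachingProxy:
    """Minimal caching proxy: serve hits locally, fetch and cache on a miss."""

    def __init__(self, fetch_from_server: Callable[[str], bytes]) -> None:
        self.fetch_from_server = fetch_from_server  # hypothetical origin call
        self.cache: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def handle(self, url: str) -> bytes:
        if url in self.cache:                # cache hit: the proxy serves the client
            self.hits += 1
            return self.cache[url]
        self.misses += 1                     # cache miss: ask the server,
        reply = self.fetch_from_server(url)  # cache the reply and relay it
        self.cache[url] = reply
        return reply


origin_calls: List[str] = []


def origin(url: str) -> bytes:
    origin_calls.append(url)
    return f"content of {url}".encode()


proxy = CachingProxy(origin)
proxy.handle("/video1")
proxy.handle("/video1")
print(proxy.hits, proxy.misses, origin_calls)  # 1 1 ['/video1']
```

The second request for the same URL never reaches the origin. This is where the reductions in server load and wide area traffic listed earlier come from.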

Taxonomy of cache replacement policies
• Recency of access: locality of reference
• Frequency-based: hot sets with independent accesses
• Optimal: knowledge of the time of next access
• Size-based: objects of different sizes
• Miss-cost-based: different times to fetch objects
• Resource-based: resource usage of different object classes

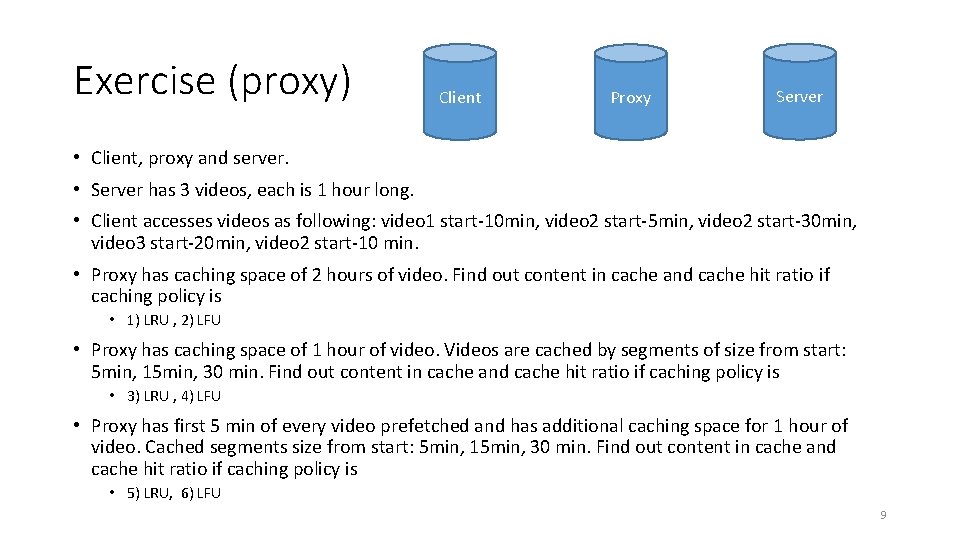

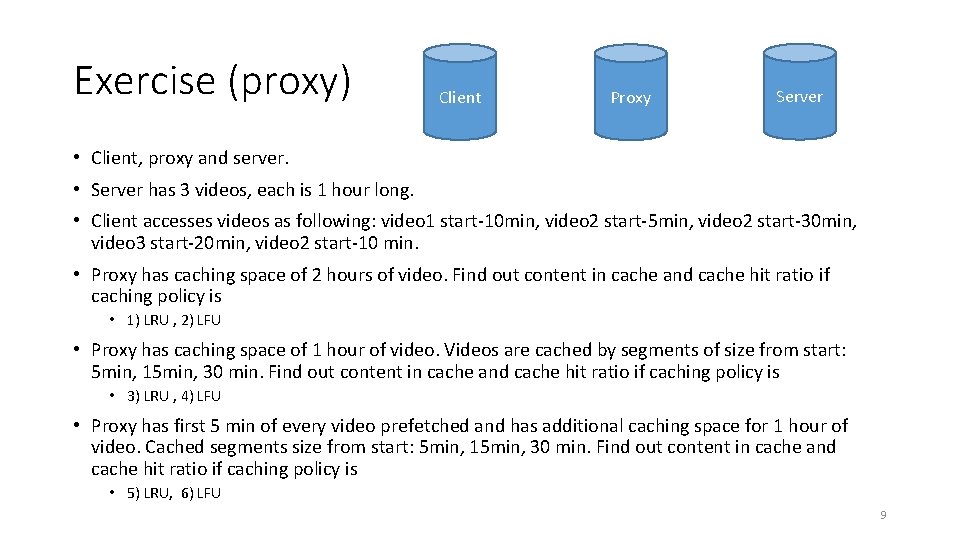

Exercise (proxy)
• Client, proxy and server.
• The server has 3 videos, each 1 hour long.
• The client accesses videos as follows: video 1 start-10 min, video 2 start-5 min, video 2 start-30 min, video 3 start-20 min, video 2 start-10 min.
• The proxy has caching space for 2 hours of video. Determine the cache content and the cache hit ratio when the caching policy is 1) LRU, 2) LFU.
• The proxy has caching space for 1 hour of video. Videos are cached in segments whose sizes, from the start, are 5 min, 15 min and 30 min. Determine the cache content and the cache hit ratio when the caching policy is 3) LRU, 4) LFU.
• The proxy has the first 5 min of every video prefetched and additional caching space for 1 hour of video. Cached segment sizes from the start: 5 min, 15 min, 30 min. Determine the cache content and the cache hit ratio when the caching policy is 5) LRU, 6) LFU.

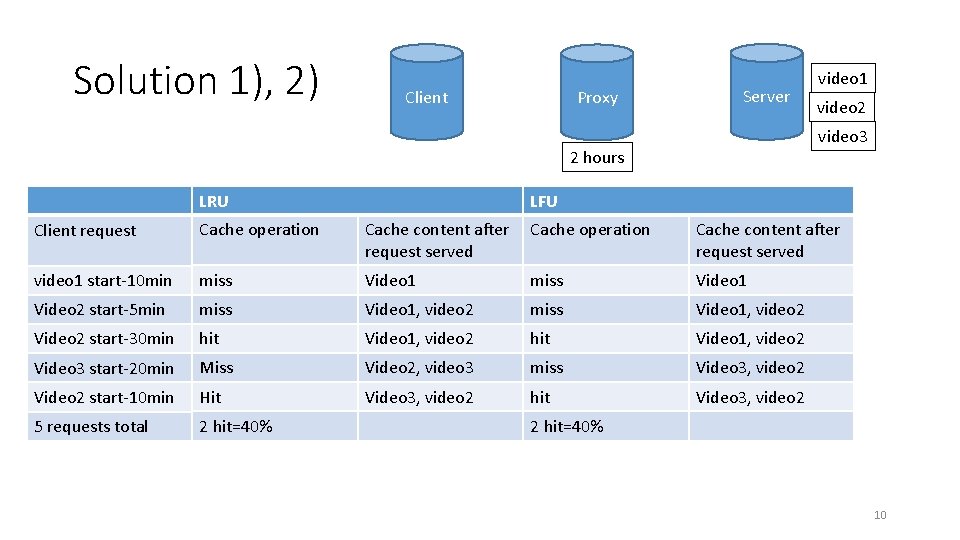

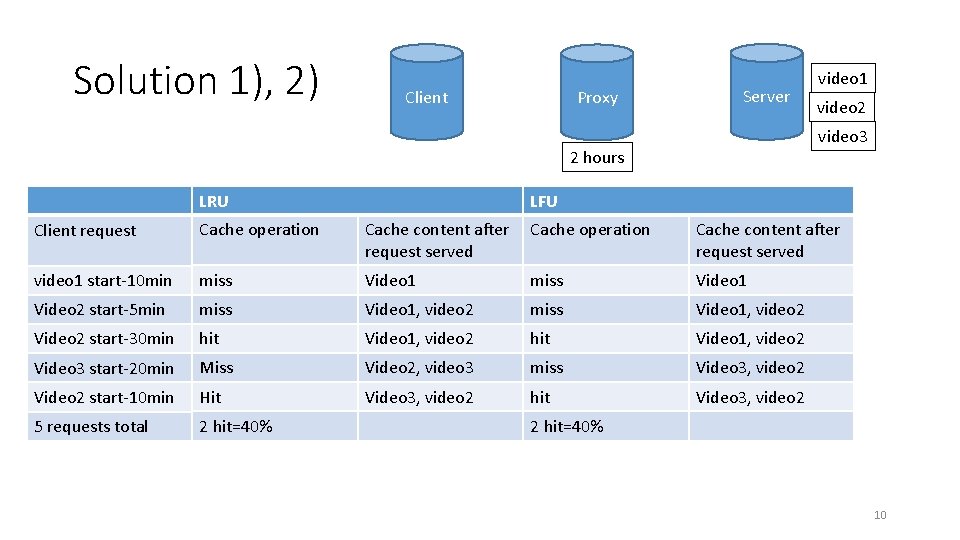

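Solution 1), 2): proxy cache of 2 hours

| Client request | LRU: cache operation | LRU: cache content after request served | LFU: cache operation | LFU: cache content after request served |
|---|---|---|---|---|
| video 1 start-10 min | miss | video 1 | miss | video 1 |
| video 2 start-5 min | miss | video 1, video 2 | miss | video 1, video 2 |
| video 2 start-30 min | hit | video 1, video 2 | hit | video 1, video 2 |
| video 3 start-20 min | miss | video 2, video 3 | miss | video 3, video 2 |
| video 2 start-10 min | hit | video 3, video 2 | hit | video 3, video 2 |

5 requests in total, 2 hits: hit ratio = 40% for both LRU and LFU.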

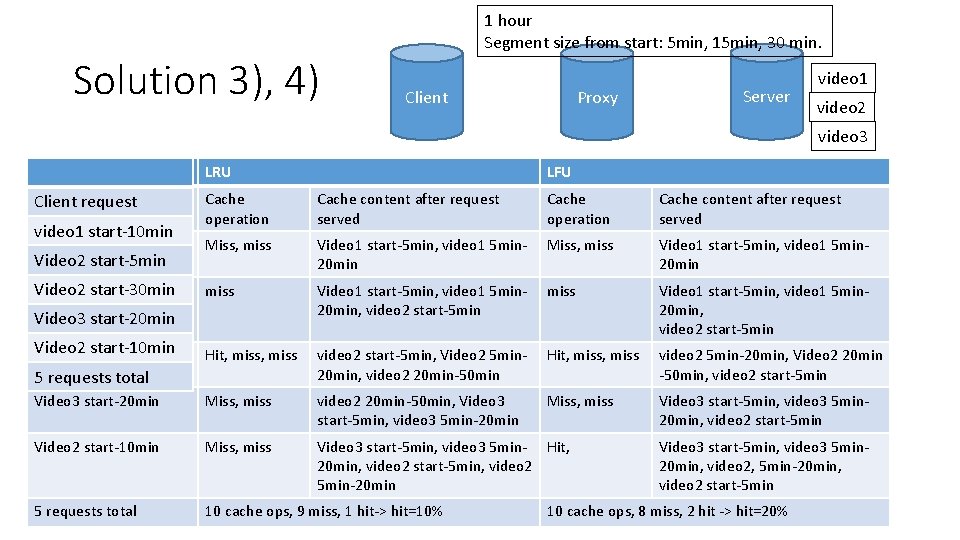

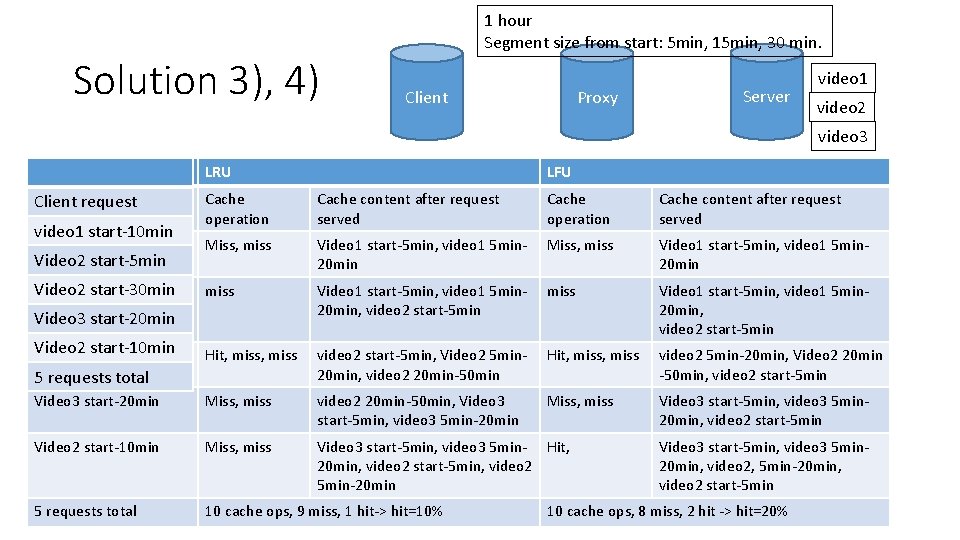

Solution 3), 4): proxy cache of 1 hour; segment sizes from the start: 5 min, 15 min, 30 min

| Client request | LRU: cache operation | LRU: cache content after request served | LFU: cache operation | LFU: cache content after request served |
|---|---|---|---|---|
| video 1 start-10 min | miss, miss | video 1 start-5 min, video 1 5-20 min | miss, miss | video 1 start-5 min, video 1 5-20 min |
| video 2 start-5 min | miss | video 1 start-5 min, video 1 5-20 min, video 2 start-5 min | miss | video 1 start-5 min, video 1 5-20 min, video 2 start-5 min |
| video 2 start-30 min | hit, miss, miss | video 2 start-5 min, video 2 5-20 min, video 2 20-50 min | hit, miss, miss | video 2 5-20 min, video 2 20-50 min, video 2 start-5 min |
| video 3 start-20 min | miss, miss | video 2 20-50 min, video 3 start-5 min, video 3 5-20 min | miss, miss | video 3 start-5 min, video 3 5-20 min, video 2 start-5 min |
| video 2 start-10 min | miss, miss | video 3 start-5 min, video 3 5-20 min, video 2 start-5 min, video 2 5-20 min | hit, miss | video 3 start-5 min, video 3 5-20 min, video 2 5-20 min, video 2 start-5 min |

• LRU: 5 requests, 10 cache operations, 9 misses, 1 hit: hit ratio = 10%.
• LFU: 5 requests, 10 cache operations, 8 misses, 2 hits: hit ratio = 20%.

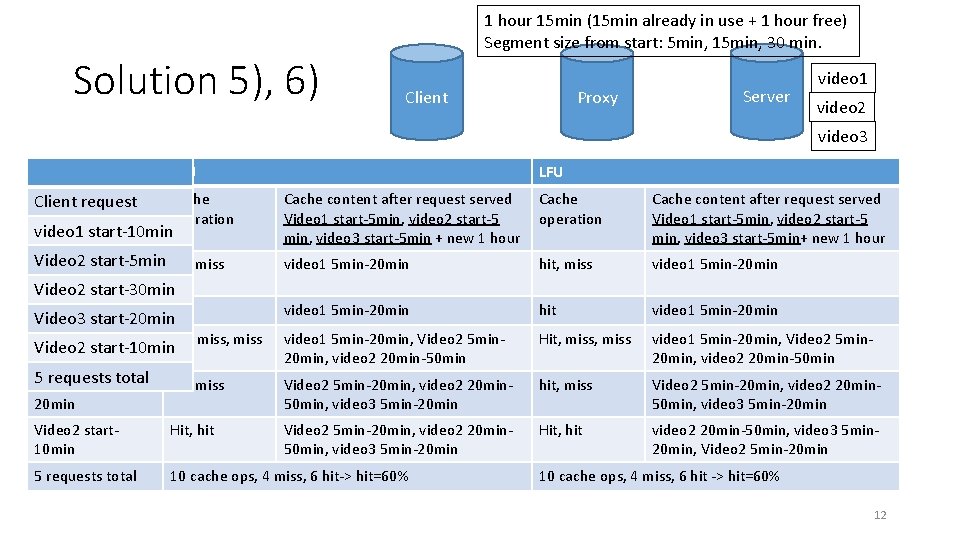

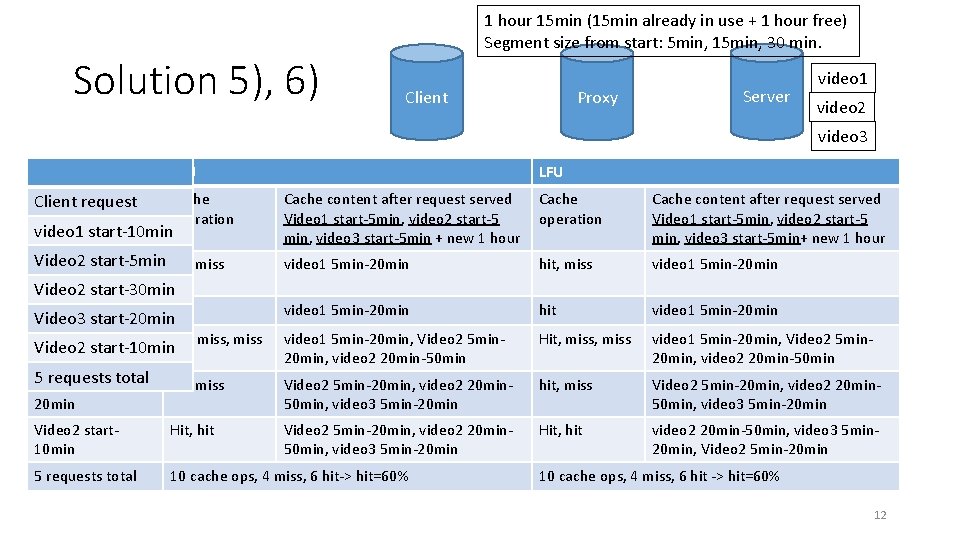

Solution 5), 6): proxy cache of 1 hour 15 min (15 min already in use by prefetched segments + 1 hour free); segment sizes from the start: 5 min, 15 min, 30 min

Initial cache content (both policies): video 1 start-5 min, video 2 start-5 min, video 3 start-5 min + 1 hour of free space. The table lists the content of the 1-hour space.

| Client request | LRU: cache operation | LRU: cache content after request served | LFU: cache operation | LFU: cache content after request served |
|---|---|---|---|---|
| video 1 start-10 min | hit, miss | video 1 5-20 min | hit, miss | video 1 5-20 min |
| video 2 start-5 min | hit | video 1 5-20 min | hit | video 1 5-20 min |
| video 2 start-30 min | hit, miss, miss | video 1 5-20 min, video 2 5-20 min, video 2 20-50 min | hit, miss, miss | video 1 5-20 min, video 2 5-20 min, video 2 20-50 min |
| video 3 start-20 min | hit, miss | video 2 5-20 min, video 2 20-50 min, video 3 5-20 min | hit, miss | video 2 5-20 min, video 2 20-50 min, video 3 5-20 min |
| video 2 start-10 min | hit, hit | video 2 5-20 min, video 2 20-50 min, video 3 5-20 min | hit, hit | video 2 20-50 min, video 3 5-20 min, video 2 5-20 min |

5 requests, 10 cache operations, 4 misses, 6 hits: hit ratio = 60% for both LRU and LFU.
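The hit ratios of the proxy exercise can be cross-checked with a small simulation. This Python sketch rests on several assumptions:

- every request plays the video from the start;
- each segment that overlaps the watched interval counts as one cache operation;
- the unrequested tail of a 60-min video (50-60 min) forms a final segment;
- eviction frees only as much space as the new segment needs;
- LFU breaks ties by evicting the least recently used segment.

Under these assumptions it reproduces 40%/40%, 10%/20% and 60%/60%. Intermediate cache contents can differ from the tables when ties are broken differently.

```python
from collections import Counter, OrderedDict

VIDEO_MIN = 60  # every video is 1 hour long


def segment_bounds(sizes):
    """(start, end) minutes of each segment; the rest of the video is the last one."""
    bounds, start = [], 0
    for size in sizes:
        bounds.append((start, min(start + size, VIDEO_MIN)))
        start += size
    if start < VIDEO_MIN:
        bounds.append((start, VIDEO_MIN))
    return bounds


def run(requests, sizes, capacity_min, policy, prefix_pinned=False):
    """Return (hits, cache operations) for a segment-level proxy cache.

    requests: (video, minutes watched from the start) pairs.
    capacity_min: replaceable cache space in minutes of video.
    prefix_pinned: first segment of every video is prefetched and never evicted.
    """
    segments = segment_bounds(sizes)
    cache = OrderedDict()   # (video, segment index) -> size, least recently used first
    freq = Counter()        # access count per segment (kept after eviction)
    hits = ops = 0
    for video, watched in requests:
        for i, (lo, hi) in enumerate(segments):
            if lo >= watched:
                break                       # segment not needed by this request
            ops += 1
            key = (video, i)
            freq[key] += 1
            if prefix_pinned and i == 0:
                hits += 1                   # prefetched prefix is always cached
            elif key in cache:
                hits += 1
                cache.move_to_end(key)      # refresh recency
            else:
                size = hi - lo
                # Free just enough space; LFU ties go to the least recently used.
                while cache and sum(cache.values()) + size > capacity_min:
                    if policy == "LRU":
                        victim = next(iter(cache))
                    else:
                        victim = min(cache, key=lambda k: freq[k])
                    del cache[victim]
                cache[key] = size
    return hits, ops


REQUESTS = [(1, 10), (2, 5), (2, 30), (3, 20), (2, 10)]
SCENARIOS = [
    ("1)/2) whole videos, 2 h", [60], 120, False),
    ("3)/4) 5/15/30 min segments, 1 h", [5, 15, 30], 60, False),
    ("5)/6) first 5 min prefetched + 1 h", [5, 15, 30], 60, True),
]
for label, sizes, capacity, pinned in SCENARIOS:
    for policy in ("LRU", "LFU"):
        hits, ops = run(REQUESTS, sizes, capacity, policy, pinned)
        print(f"{label} {policy}: {hits}/{ops} = {hits / ops:.0%}")
```

Whole videos are modeled as a single 60-min segment, so one function covers all six cases.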

Content Delivery Network or Content Distribution Network (CDN)
• A CDN is a large distributed system of proxy servers deployed in multiple data centers across the Internet. The goal of a CDN is to serve content to end users with high availability and high performance.
• Content (potentially in multiple copies) may reside on several servers. When a user makes a request to a CDN hostname, DNS resolves it to an optimized server (chosen by location, availability, cost and other metrics), and that server handles the request.
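The server choice made during DNS resolution can be illustrated with a weighted score. This Python sketch is hypothetical throughout: the server names, fields and weights are assumptions, and production CDN mapping systems are far more elaborate. Unavailable servers are filtered out, and the lowest combined score of network distance, load and cost wins.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EdgeServer:
    name: str
    rtt_ms: float     # stand-in for location / network distance
    available: bool   # health-check result
    load: float       # utilisation in [0, 1]
    cost: float       # relative delivery cost


def pick_server(servers: List[EdgeServer], w_rtt: float = 1.0,
                w_load: float = 50.0, w_cost: float = 10.0) -> EdgeServer:
    """Return the available server with the lowest weighted score (hypothetical weights)."""
    candidates = [s for s in servers if s.available]
    if not candidates:
        raise LookupError("no available edge server")
    return min(candidates,
               key=lambda s: w_rtt * s.rtt_ms + w_load * s.load + w_cost * s.cost)


servers = [
    EdgeServer("edge-tallinn", rtt_ms=5, available=False, load=0.2, cost=1.0),
    EdgeServer("edge-helsinki", rtt_ms=12, available=True, load=0.5, cost=1.0),
    EdgeServer("edge-frankfurt", rtt_ms=35, available=True, load=0.1, cost=0.8),
]
print(pick_server(servers).name)  # edge-helsinki (score 47 vs 48)
```

The nearest server is skipped because it fails the availability check. Among the remaining servers, a lightly loaded but distant server can win if the load weight is raised.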

Applications
• MBone (Multicast Backbone), started in 1992, is a virtual network on top of the Internet that connects multicast-capable routers and end hosts. However, more than ten years after its initial deployment, the MBone remained limited to a very small number of universities and research labs.
• Akamai, started in 1998, is a content delivery overlay network that delivers both web and streaming media content. For streaming media, it uses overlay multicast with a dedicated-infrastructure model and has thousands of infrastructure nodes deployed all over the world.
• real

Multicast delivery
• Static multicast: a video server uses one server channel to serve a batch of requests for the same video that arrive within a short period.
• Dynamic multicast: extends the static multicast approach by allowing late-coming requests to join a batch currently being served, extending the multicast tree to include the newly arriving client.
• Periodic broadcast: a video is fragmented into a number of segments, and each segment is periodically broadcast on a dedicated channel.
• Hybrid broadcast: popular videos are periodically broadcast, and less requested videos are served using batching.
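The simplest form of periodic broadcast shows why it scales. This Python sketch uses an assumed equal-segment scheme that the slide does not specify:

- the video is cut into as many equal segments as there are channels;
- segment i loops on channel i;
- all channels restart at the same instants.

A client waits for the next start of segment 1 and then picks up each following segment from its channel just as it is needed.

```python
import math


def startup_wait_min(arrival_min: float, video_min: float, channels: int) -> float:
    """Minutes a client arriving at `arrival_min` waits before playback starts.

    Assumes equal segments of video_min / channels, each looping on its own
    channel, with all channels aligned in time.
    """
    segment_min = video_min / channels
    next_start = math.ceil(arrival_min / segment_min) * segment_min
    return next_start - arrival_min


def server_bandwidth_kbps(channels: int, bitrate_kbps: float) -> float:
    """Server load depends on the number of channels, not on the audience size."""
    return channels * bitrate_kbps


# A 60-minute video on 6 channels: segments are 10 minutes long.
print(startup_wait_min(23, 60, 6))    # 7.0 (next segment-1 start is at minute 30)
print(server_bandwidth_kbps(6, 300))  # 1800
```

The worst-case start-up delay is one segment length (video length / number of channels). Adding channels shortens the wait, while the server load stays fixed no matter how many clients tune in.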

Static and dynamic multicast
Batching
• Batching groups clients that request the same video object within a short period of time.
• Policies for selecting which batch to serve first when a server channel becomes available:
  – First-come-first-serve (FCFS): as soon as some server bandwidth becomes free, the batch holding the oldest request, i.e. the one with the longest waiting time, is served next.
  – Maximum-queue-length-first (MQLF): the batch with the largest number of pending requests (i.e., the longest queue) is chosen for service.
  – Maximum-factored-queue-length-first (MFQLF): attempts to provide reasonable fairness as well as high server throughput.
Patching
• Patching assumes multicast transmission; clients that arrive late miss the start of the main transmission.
• These late clients immediately receive the main transmission and store it temporarily in a buffer.
• In parallel, each late client connects to the server via unicast and receives (patches) the missing start of the video, which can be played immediately.
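The three batch-selection policies differ only in the key they maximize or minimize. In this Python sketch the `Batch` fields are assumptions. The MFQLF factor, queue length divided by the square root of the video's access frequency, is one commonly cited formulation and is also an assumption, since the slide does not give it.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Batch:
    video: str
    oldest_arrival: float  # arrival time of the earliest waiting request
    queue_length: int      # number of pending requests in the batch
    access_freq: float     # long-run request rate of this video (assumed known)


def fcfs(batches: List[Batch]) -> Batch:
    return min(batches, key=lambda b: b.oldest_arrival)


def mqlf(batches: List[Batch]) -> Batch:
    return max(batches, key=lambda b: b.queue_length)


def mfqlf(batches: List[Batch]) -> Batch:
    # Assumed factor: queue length scaled by 1/sqrt(access frequency), so
    # popular videos do not always win over long-waiting unpopular ones.
    return max(batches, key=lambda b: b.queue_length / math.sqrt(b.access_freq))


waiting = [
    Batch("popular", oldest_arrival=3.0, queue_length=9, access_freq=9.0),
    Batch("niche", oldest_arrival=1.0, queue_length=2, access_freq=0.25),
]
for policy in (fcfs, mqlf, mfqlf):
    print(policy.__name__, policy(waiting).video)
```

MQLF always favors the popular video, which maximizes throughput but can starve rare requests. The factored version gives the niche batch a score of 4 against 3 and serves it first.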

Dynamic multicast approach
• Adaptive piggybacking: the server slows down the delivery rate of the video stream to an earlier client and speeds up the delivery rate of the stream to a new client until both reach the same play point in the video. At that point, the server merges the two video streams and uses only one channel to serve both clients.
• Patching: patching schemes let a new client join an ongoing multicast and still receive the entire video data stream. For a new request for the same video, the server delivers only the missing portion of the requested video in a separate patching stream. The client downloads the data from the patching stream and displays it immediately. Concurrently, the client downloads and caches the later portion of the video from the multicast stream. When it finishes playing back the data in the patching stream, the client switches to playing back the video data in its local buffer.
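Both dynamic multicast techniques reduce to simple arithmetic. This is a Python sketch under stated assumptions.

- Piggybacking: the earlier stream plays at (1 − slow_down) and the later one at (1 + speed_up). The gap between their play points therefore shrinks by (slow_down + speed_up) seconds of video per second. The 5% rate changes in the example are illustrative values only.
- Patching: a client that joins t minutes late needs a t-minute patch stream and must buffer t minutes of the multicast.

```python
def piggyback_merge_time(gap_s: float, slow_down: float, speed_up: float) -> float:
    """Wall-clock seconds until two streams reach the same play point.

    gap_s: how far (in seconds of video) the earlier client is ahead.
    slow_down / speed_up: assumed fractional rate changes, e.g. 0.05 = 5 %.
    """
    return gap_s / (slow_down + speed_up)


def patch_plan(late_by_min: float, video_min: float) -> dict:
    """Patching for a client that joins a multicast `late_by_min` after it began."""
    if not 0 <= late_by_min < video_min:
        raise ValueError("client must join while the multicast is running")
    return {
        "patch_stream_min": late_by_min,   # missing start, sent by unicast
        "buffer_min": late_by_min,         # multicast data buffered meanwhile
        "from_multicast_min": video_min - late_by_min,
    }


print(piggyback_merge_time(gap_s=30, slow_down=0.05, speed_up=0.05))
print(patch_plan(late_by_min=4, video_min=60))
```

With a 30 s gap and ±5% rate changes, the streams merge after about 300 s, and from then on one channel serves both clients. For patching, the unicast cost grows with how late the client arrives, which is why late arrivals are often better served by starting a new multicast.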

Application Layer Multicast (ALM), overlay multicast
• End hosts implement multicast services at the application layer, assuming only IP unicast at the network layer.
• Infrastructure-based approach: a set of dedicated machines called overlay nodes act as software routers with multicast functionality. Video content is transmitted from a source to a group of receivers on a multicast tree consisting only of the overlay nodes. A new receiver joins an existing multicast group by connecting to its nearest overlay node.
• P2P approach, i.e. chaining:
  – P2P with prerecorded video streaming
  – P2P with live streaming
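The infrastructure-based join step can be sketched as follows. In this Python example the overlay tree is a plain parent → children dictionary, and "nearest" means the smallest measured round-trip time. The node names and RTT values are hypothetical. Each parent → child edge of the overlay tree is an ordinary IP unicast connection.

```python
from typing import Dict, List, Tuple


def join_nearest(rtts: Dict[str, float], tree: Dict[str, List[str]], receiver: str) -> str:
    """Attach a new receiver to the overlay node with the smallest measured RTT."""
    parent = min(rtts, key=rtts.get)
    tree.setdefault(parent, []).append(receiver)
    return parent


def unicast_links(tree: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Each parent->child edge of the overlay tree is one IP unicast connection."""
    return [(parent, child) for parent, children in tree.items() for child in children]


tree = {"source": ["overlay-A", "overlay-B"], "overlay-A": [], "overlay-B": []}
parent = join_nearest({"overlay-A": 40.0, "overlay-B": 8.0}, tree, "receiver-1")
print(parent)               # overlay-B
print(unicast_links(tree))
```

The network layer only ever sees unicast flows. Replication happens inside the overlay nodes, which is what lets ALM work without IP multicast support in the routers.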

Exercise (multicast)
• Assume a server that delivers a live media stream at a bitrate of 300 kb/s.
1. How many receivers can it handle with its 1 Gb/s Ethernet connection?
2. How fast an access link does the server need to support 1000 receivers?
3. If multicast can be used, how can the network load be reduced if 30% of receivers are located outside the local network?
4. Where is the most effective position for a caching proxy server in this network? How much can the server load be reduced? How much can the network load be reduced?

Solution 1)
• The server delivers a live media stream at a bitrate of 300 kb/s.
1. How many receivers can it handle with its 1 Gb/s Ethernet connection?
• 1 Gb/s / 300 kb/s ≈ 3333, i.e. roughly 3000 clients.

Solution 2)
• The server delivers a live media stream at a bitrate of 300 kb/s.
2. How fast an access link does the server need to support 1000 receivers?
• 1000 × 300 kb/s = 300 000 kb/s = 300 Mb/s
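The unicast arithmetic of solutions 1) and 2) in a few lines of Python. The sketch assumes decimal units (1 kb/s = 1000 b/s, 1 Gb/s = 10^9 b/s) and ignores protocol overhead, which in practice lowers the usable client count.

```python
BITRATE_BPS = 300_000        # 300 kb/s live stream
LINK_BPS = 1_000_000_000     # 1 Gb/s Ethernet, overhead ignored


def max_unicast_clients(link_bps: int, bitrate_bps: int) -> int:
    """Each unicast client needs its own full-rate stream."""
    return link_bps // bitrate_bps


def required_link_bps(clients: int, bitrate_bps: int) -> int:
    return clients * bitrate_bps


print(max_unicast_clients(LINK_BPS, BITRATE_BPS))          # 3333
print(required_link_bps(1000, BITRATE_BPS) / 1e6, "Mb/s")  # 300.0 Mb/s
```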

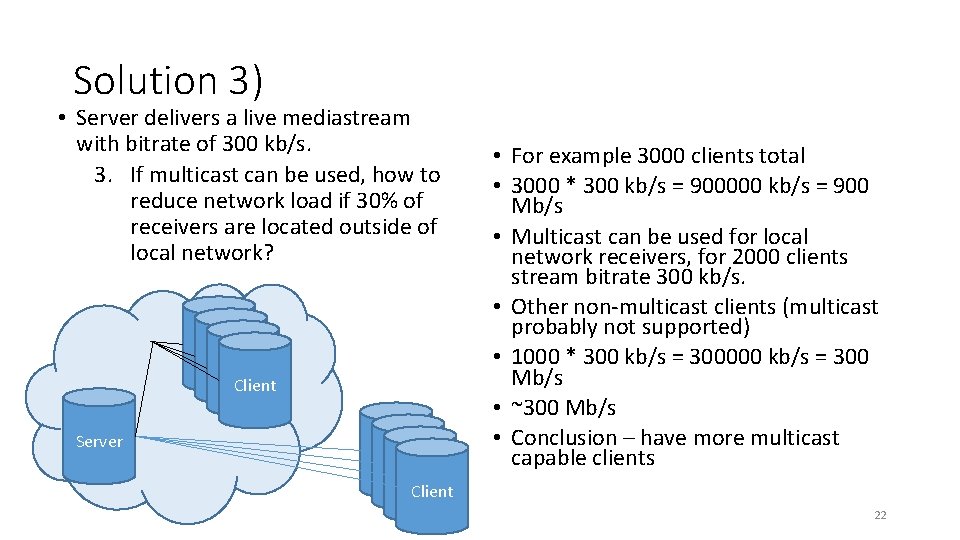

Solution 3)
• The server delivers a live media stream at a bitrate of 300 kb/s.
3. If multicast can be used, how can the network load be reduced if 30% of receivers are located outside the local network?
• Example: 3000 clients in total.
• Unicast only: 3000 × 300 kb/s = 900 000 kb/s = 900 Mb/s.
• Multicast can be used for the receivers on the local network (70%, i.e. 2100 clients): a single 300 kb/s stream serves all of them.
• The other 900 clients (30%) need unicast, since multicast is probably not supported outside the local network: 900 × 300 kb/s = 270 000 kb/s = 270 Mb/s.
• Total ≈ 270 Mb/s + 0.3 Mb/s ≈ 270 Mb/s instead of 900 Mb/s.
• Conclusion: the more clients are multicast-capable, the lower the load.
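The load comparison for solution 3) can be written as one function. This Python sketch takes the question's 30% of receivers outside the local network and an example audience of 3000 clients. It assumes that all local clients share one multicast stream and that every remote client needs its own unicast stream.

```python
def server_load_bps(clients: int, bitrate_bps: int, multicast_share: float) -> int:
    """Outgoing server load when a share of clients is reachable by multicast.

    Local (multicast-capable) clients share one stream; every other client
    needs its own unicast stream.
    """
    local = round(clients * multicast_share)
    remote = clients - local
    multicast_streams = 1 if local > 0 else 0
    return (multicast_streams + remote) * bitrate_bps


print(server_load_bps(3000, 300_000, 0.0) / 1e6)  # 900.0 (unicast only)
print(server_load_bps(3000, 300_000, 0.7) / 1e6)  # 270.3 (70% local via multicast)
```

The remaining load is dominated by the unicast remote clients. Raising `multicast_share` towards 1 drives the load down to a single 300 kb/s stream.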

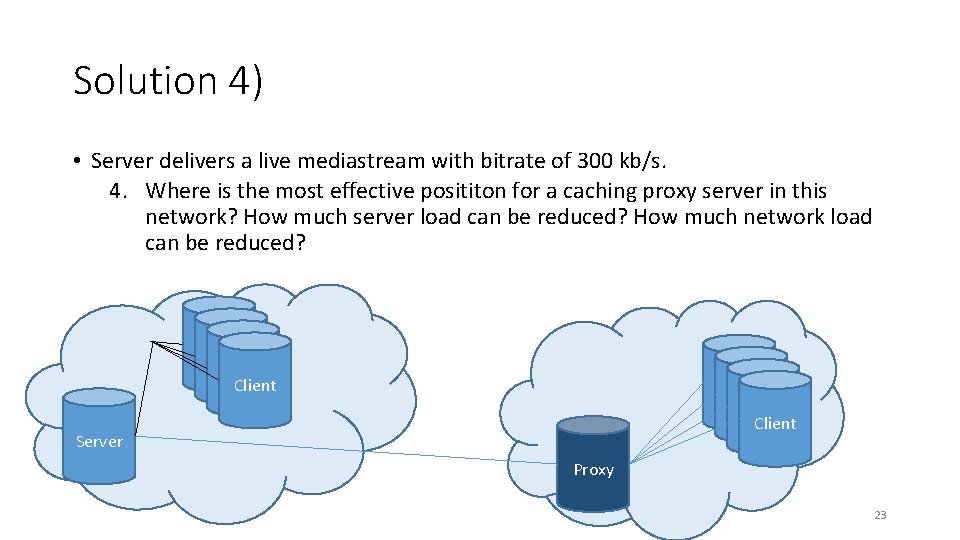

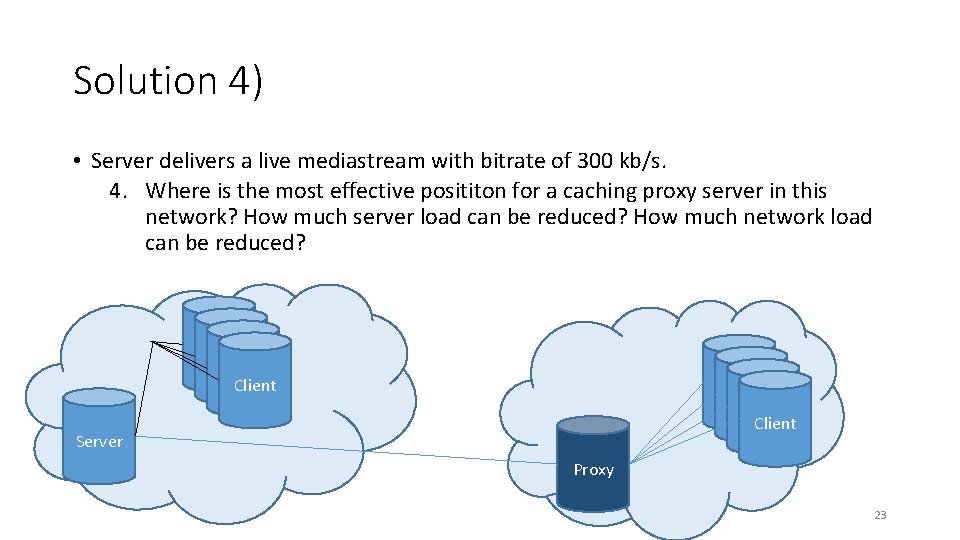

Solution 4)
• The server delivers a live media stream at a bitrate of 300 kb/s.
4. Where is the most effective position for a caching proxy server in this network? How much can the server load be reduced? How much can the network load be reduced?

Further reading
• Hua, Kien A.; Tantaoui, Mounir A.; Tavanapong, Wallapak, Video delivery technologies for large-scale deployment of multimedia applications, Proceedings of the IEEE, Sept. 2004, http://ieeexplore.ieee.org/xpls/icp.jsp?arnumber=1323291
• Liu, Jiangchuan; Xu, Jianliang, Proxy caching for media streaming over the Internet, IEEE Communications Magazine, Aug. 2004, http://ieeexplore.ieee.org/xpls/icp.jsp?arnumber=1321397
• Ganjam, Aditya; Zhang, Hui, Internet Multicast Video Delivery, Proceedings of the IEEE, Jan. 2005, http://ieeexplore.ieee.org/xpls/icp.jsp?arnumber=1369706
• Nygren, Erik; Sitaraman, Ramesh K.; Sun, Jennifer, The Akamai Network: A Platform for High-Performance Internet Applications, http://www.akamai.com/dl/technical_publications/network_overview_osr.pdf
• Ahmad, K.; Begen, A. C., IPTV and video networks in the 2015 timeframe: The evolution to medianets, IEEE Communications Magazine, Dec. 2009, http://ieeexplore.ieee.org/xpls/icp.jsp?arnumber=5350371