
To IRT or not to IRT: how to decide

STANAG 6001 Testing Workshop – Kranjska Gora, Slovenia, 4–6 September 2018

Ülle Türk

What are we going to talk about?

- How to statistically analyse tests.
- How it is done in jMetrik.

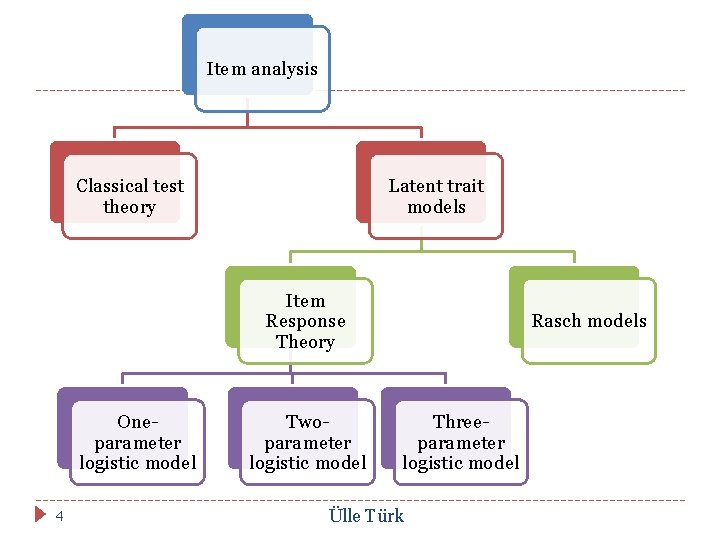

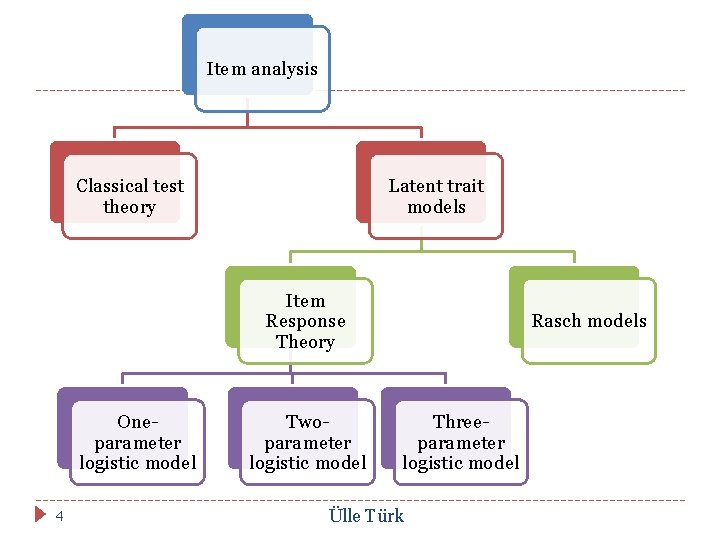

Item analysis

- Classical test theory
- Latent trait models
  - Item Response Theory
    - One-parameter logistic model
    - Two-parameter logistic model
    - Three-parameter logistic model
  - Rasch models

Why ‘latent trait’?

Latent trait = unobservable ability or trait.

- Latent traits or latent variables are constructs that in principle are “hidden” and cannot be measured directly.
- They can be measured only indirectly, through observed behaviours or responses (“indicators”).

What is the name of the latent trait measured by a test?

- Classical Test Theory (CTT): “True Score” (T)
- Item Response Theory (IRT): “Theta” (θ)

Fundamental difference in approach

CTT: the unit of analysis is the WHOLE TEST (item sum or mean).

- Sum = latent trait, so items and persons are inherently tied together (= bad!).

IRT: the unit of analysis is the ITEM.

- A model of how the item response relates to a separately estimated latent trait.
- Provides for separation of item and person properties (= good!).

Classical Test Theory

Overview

- Originated from the work of Spearman in 1904.
- Also known as true score theory: observed score (X) = true score (T) + error (E).
- Central concern: reliability of measures.
- Limitations:
  - Item difficulty and item discrimination depend on the particular examinee sample.
  - The standard error of measurement is assumed to be the same for all examinees.
  - The focus is on test-level information to the exclusion of item-level information.
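The true score decomposition can be written out as follows. This is the standard textbook formulation rather than something shown on the slide; the symbols $\rho_{XX'}$ (reliability) and $\mathrm{SEM}$ (standard error of measurement) are introduced here for illustration.

$$
X = T + E, \qquad
\rho_{XX'} = \frac{\sigma_T^2}{\sigma_X^2}, \qquad
\mathrm{SEM} = \sigma_X \sqrt{1 - \rho_{XX'}}
$$

For instance, taking the standard deviation of 7.918 and the KR-21 reliability of 0.88 reported for the whole sample in the example later in this deck, $\mathrm{SEM} \approx 7.918 \times \sqrt{0.12} \approx 2.74$ score points. Under CTT this single value applies to every examinee, which is the second limitation listed above.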

Statistics used

Test level

- Reliability (alpha, split-half, KR-20, KR-21)
- Measures of central tendency: mean, median, mode
- Measures of dispersion: range, variance, standard deviation

Item level

- Item facility (IF)
- Item discrimination (ID)
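As a rough illustration of the item-level statistics and one of the reliability coefficients named above, the sketch below computes item facility, item discrimination and KR-20 for a small made-up response matrix. The data are hypothetical, and discrimination is taken here as the correlation between the item and the rest score (total minus the item), which is one common definition; jMetrik may compute it differently.

```python
from statistics import mean, pvariance

# Hypothetical scored responses: rows are test-takers, columns are items (1 = correct).
data = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]

def item_facility(responses, item):
    """Proportion of test-takers who answered the item correctly."""
    return mean(row[item] for row in responses)

def pearson(x, y):
    """Pearson correlation of two equally long lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def item_discrimination(responses, item):
    """Correlation between the item score and the score on the remaining items."""
    scores = [row[item] for row in responses]
    rest = [sum(row) - row[item] for row in responses]
    return pearson(scores, rest)

def kr20(responses):
    """Kuder-Richardson formula 20 for dichotomously scored items."""
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    pq = sum(p * (1 - p) for p in (item_facility(responses, i) for i in range(k)))
    return k / (k - 1) * (1 - pq / pvariance(totals))

for i in range(len(data[0])):
    print(i, round(item_facility(data, i), 2), round(item_discrimination(data, i), 2))
print("KR-20:", round(kr20(data), 2))
```

The population variance is used for the total scores so that it matches the p(1 − p) item variances; a sample-variance version would give slightly different values.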

Example

- A reading test consisting of 37 items (six tasks)
- Taken by 139 test-takers
- File: reading_pretest_b.csv
- Key: reading_pretest_b_key.doc

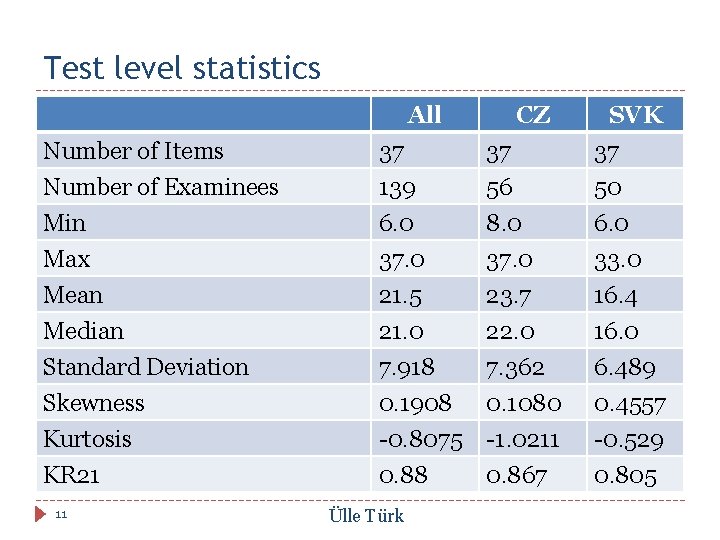

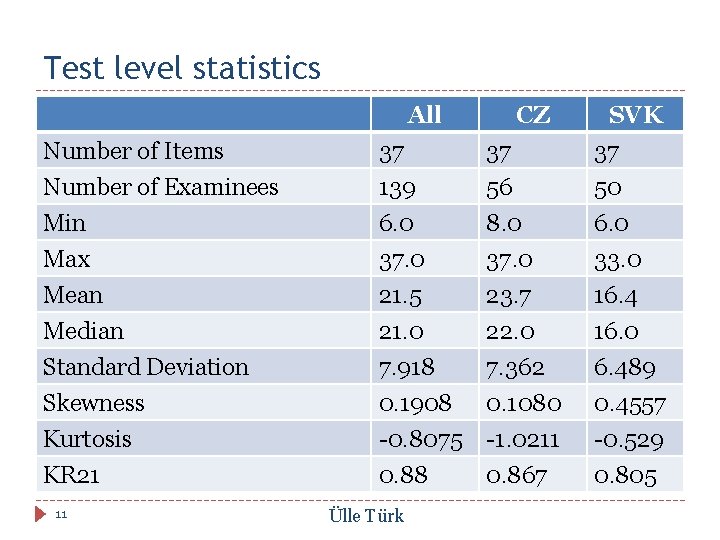

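Test level statistics

| Statistic | All | CZ | SVK |
|---|---|---|---|
| Number of items | 37 | 37 | 37 |
| Number of examinees | 139 | 56 | 50 |
| Min | 6.0 | 8.0 | 6.0 |
| Mean | 21.5 | 23.7 | 16.4 |
| Median | 21.0 | 22.0 | 16.0 |
| Standard deviation | 7.918 | 7.362 | 6.489 |
| Skewness | 0.1908 | 0.1080 | 0.4557 |
| Kurtosis | -0.8075 | -1.0211 | -0.529 |
| KR-21 | 0.88 | 0.867 | 0.805 |

Max: 37.0 and 33.0 (only two of the three values survive in the source, and their columns cannot be recovered).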
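The KR-21 values can be approximately reproduced from the number of items, the mean and the standard deviation alone. The sketch below uses the standard KR-21 formula with the rounded figures reported for the three groups; small differences in the third decimal are to be expected because the means are rounded.

```python
def kr21(k, mean_score, sd):
    """Kuder-Richardson formula 21 from test length, mean and standard deviation."""
    variance = sd ** 2
    return k / (k - 1) * (1 - mean_score * (k - mean_score) / (k * variance))

# Group label, mean and standard deviation as reported above; all groups took 37 items.
groups = [("All", 21.5, 7.918), ("CZ", 23.7, 7.362), ("SVK", 16.4, 6.489)]

for label, mean_score, sd in groups:
    print(label, round(kr21(37, mean_score, sd), 3))
```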

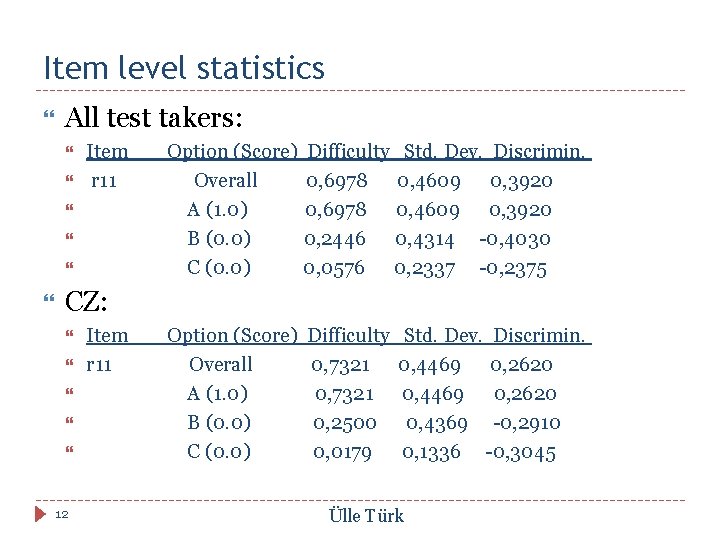

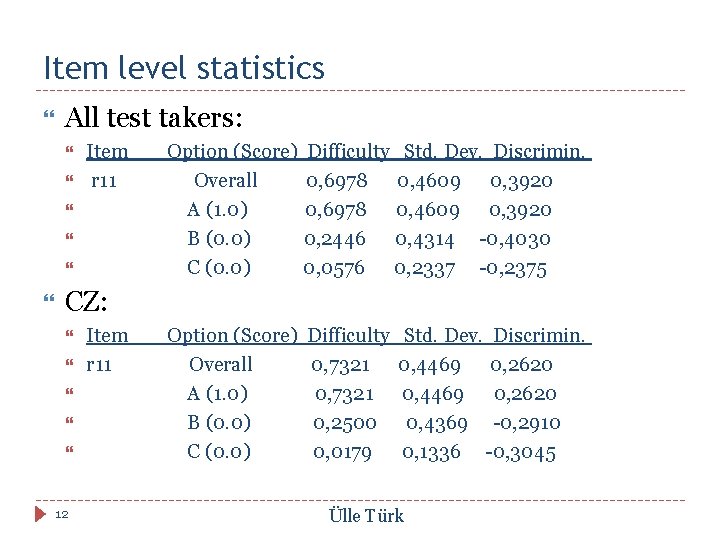

Item level statistics

All test takers, item r11:

| Option (Score) | Difficulty | Std. Dev. | Discrimin. |
|---|---|---|---|
| Overall / A (1.0) | 0.6978 | 0.4609 | 0.3920 |
| B (0.0) | 0.2446 | 0.4314 | -0.4030 |
| C (0.0) | 0.0576 | 0.2337 | -0.2375 |

CZ, item r11:

| Option (Score) | Difficulty | Std. Dev. | Discrimin. |
|---|---|---|---|
| Overall / A (1.0) | 0.7321 | 0.4469 | 0.2620 |
| B (0.0) | 0.2500 | 0.4369 | -0.2910 |
| C (0.0) | 0.0179 | 0.1336 | -0.3045 |

Item Response Theory

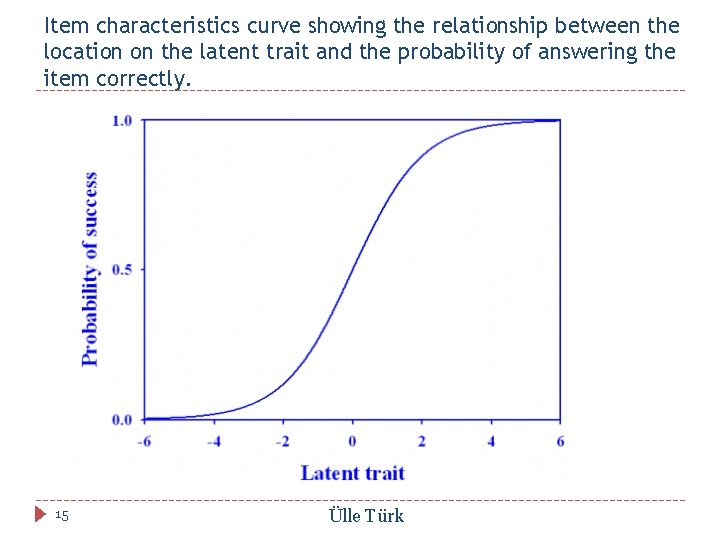

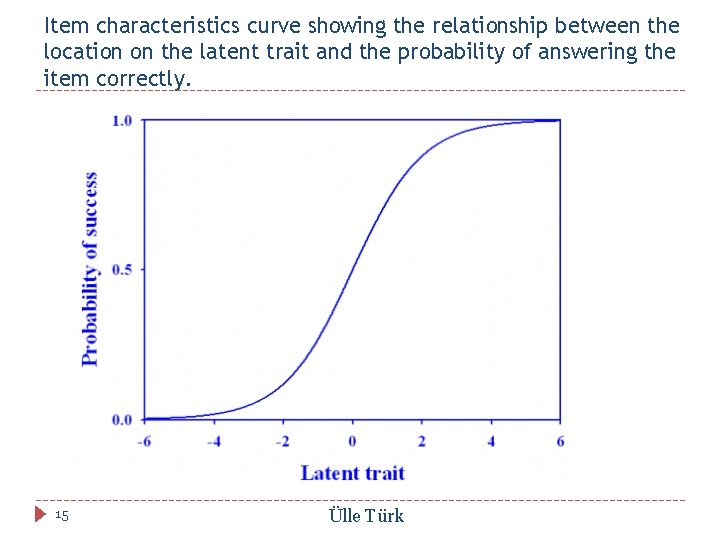

Overview

- Originated with the work of Rasch in the 1960s and Lord in the 1950s.
- IRT methods model the probability of an individual's response to an item.
- The relationship between the probability of success on an item and the latent trait (e.g. ability) is described by a function called the item characteristic curve (ICC), which is S-shaped.

Item characteristic curve showing the relationship between the location on the latent trait and the probability of answering the item correctly.
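To make the S-shape concrete, the sketch below prints a crude text version of an item characteristic curve. It assumes the one-parameter logistic form with an item difficulty of 0; the function name `rasch_probability` is made up for this illustration.

```python
import math

def rasch_probability(theta, b):
    """Probability of a correct response for ability theta and item difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

# One bar per ability level; the bar length is proportional to the probability.
for theta in (-3, -2, -1, 0, 1, 2, 3):
    p = rasch_probability(theta, b=0.0)
    print(f"theta={theta:+d}  P={p:.2f}  " + "#" * round(p * 40))
```

The probability is 0.5 where ability equals item difficulty and flattens towards 0 and 1 at the extremes.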

A family of models

- Latent ability = theta (θ)
- Parameters:
  - b: item location (i.e. difficulty)
  - a: discrimination
  - c: guessing
- The Rasch model: the probability of a correct response is modelled as a logistic function of the difference between the person parameter and the item parameter.
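In the usual notation, which is not shown on the slide and is given here as the standard textbook form, the three-parameter logistic model for item $i$ is:

$$
P(X_i = 1 \mid \theta) = c_i + (1 - c_i)\,\frac{e^{a_i(\theta - b_i)}}{1 + e^{a_i(\theta - b_i)}}
$$

Setting $c_i = 0$ gives the two-parameter model, and additionally fixing the discrimination at $a_i = 1$ gives the Rasch model:

$$
P(X_i = 1 \mid \theta) = \frac{e^{\theta - b_i}}{1 + e^{\theta - b_i}}
$$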

Rasch model: 2 assumptions and a ‘problem’

The data must fit the model, and the following two assumptions must be met:

- The test measures a single latent trait (i.e. it is unidimensional).
- Items are locally independent.

A problem: scale indeterminacy

- The latent scale is completely arbitrary.
- Indeterminacy is resolved through either person centering or item centering.
- jMetrik uses item centering.
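Scale indeterminacy can be seen directly: adding the same constant to every person and item parameter leaves all response probabilities unchanged. The sketch below uses hypothetical parameter values and resolves the indeterminacy by item centering, that is, by shifting the scale so that the mean item difficulty is 0. It illustrates the idea only and is not jMetrik's implementation.

```python
import math

def rasch_probability(theta, b):
    """Probability of a correct response under the Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def centre_items(thetas, difficulties):
    """Shift persons and items so that the mean item difficulty becomes 0."""
    shift = sum(difficulties) / len(difficulties)
    return [t - shift for t in thetas], [b - shift for b in difficulties]

thetas = [-0.5, 0.3, 1.2]        # hypothetical person abilities
difficulties = [0.4, 1.0, 1.6]   # hypothetical item difficulties
new_thetas, new_difficulties = centre_items(thetas, difficulties)

# The probabilities before and after centering agree for every person-item pair.
for t_old, t_new in zip(thetas, new_thetas):
    for b_old, b_new in zip(difficulties, new_difficulties):
        before = rasch_probability(t_old, b_old)
        after = rasch_probability(t_new, b_new)
        print(round(before, 4), round(after, 4))
```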

Rasch model: benefits

Parameter invariance

- Item parameters do not depend on the distribution of examinees.
- Person parameters do not depend on the distribution of items.

Specific objectivity

- Comparisons between individuals are independent of which particular items within the class considered have been used.
- It is possible to compare items measuring the same thing independently of which particular individuals within the class considered answered them.

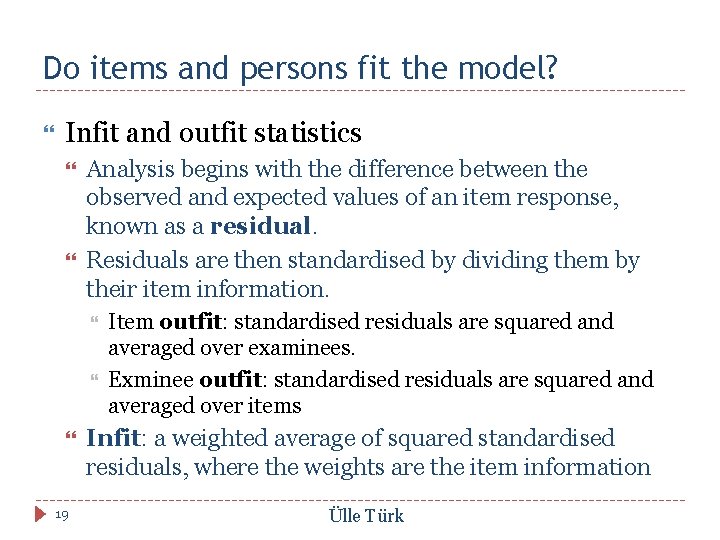

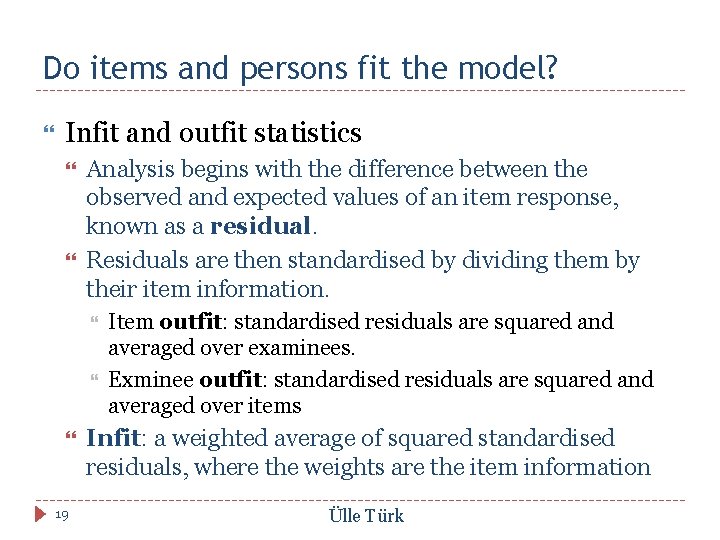

Do items and persons fit the model?

Infit and outfit statistics

- Analysis begins with the difference between the observed and expected values of an item response, known as a residual.
- Residuals are then standardised by dividing them by their standard deviation (the square root of the item information).
- Item outfit: standardised residuals are squared and averaged over examinees.
- Examinee outfit: standardised residuals are squared and averaged over items.
- Infit: a weighted average of squared standardised residuals, where the weights are the item information.
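The definitions above can be sketched for a single item as follows. The abilities, the item difficulty and the two response patterns are hypothetical, and the code assumes a dichotomous Rasch item, for which the item information equals the response variance P(1 − P). It is a minimal illustration, not jMetrik's implementation.

```python
import math

def rasch_probability(theta, b):
    """Probability of a correct response under the Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def fit_statistics(responses, thetas, b):
    """Return (outfit, infit) mean squares for one item."""
    squared_z, information = [], []
    for x, theta in zip(responses, thetas):
        p = rasch_probability(theta, b)
        w = p * (1 - p)                       # item information = response variance
        squared_z.append((x - p) ** 2 / w)    # squared standardised residual
        information.append(w)
    outfit = sum(squared_z) / len(squared_z)
    infit = sum(w * z2 for w, z2 in zip(information, squared_z)) / sum(information)
    return outfit, infit

thetas = [-2.0, -1.0, 0.0, 1.0, 2.0]                       # hypothetical abilities
expected = fit_statistics([0, 0, 1, 1, 1], thetas, 0.0)    # orderly pattern
surprising = fit_statistics([1, 0, 1, 1, 1], thetas, 0.0)  # weakest examinee succeeds

print("expected pattern:   outfit %.2f, infit %.2f" % expected)
print("surprising pattern: outfit %.2f, infit %.2f" % surprising)
```

The single unexpected success by the lowest-ability examinee raises outfit more than infit, because infit down-weights responses from poorly matched person-item pairs.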

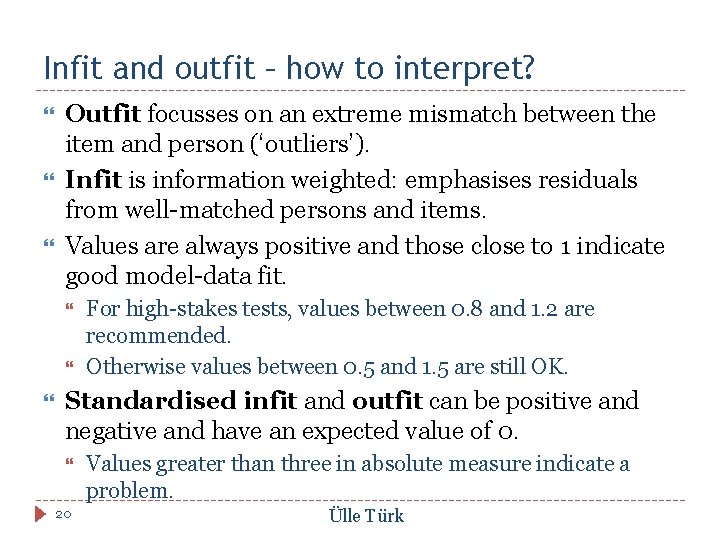

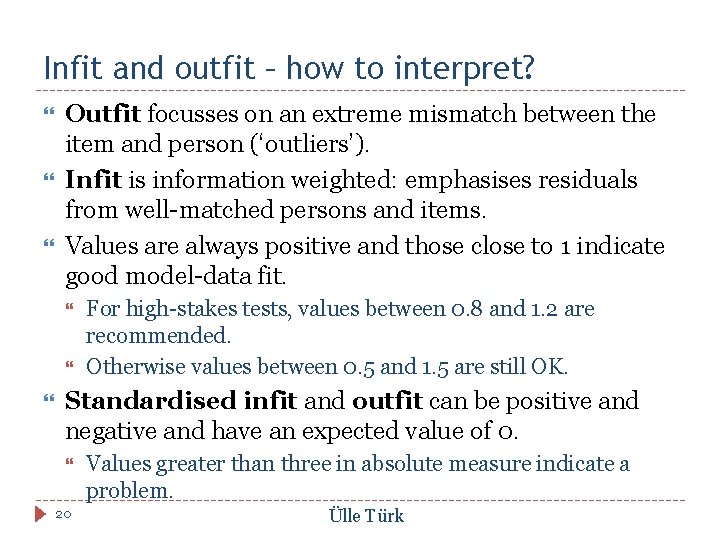

Infit and outfit – how to interpret?

- Outfit focuses on extreme mismatches between item and person (‘outliers’).
- Infit is information weighted: it emphasises residuals from well-matched persons and items.
- Values are always positive, and those close to 1 indicate good model-data fit.
  - For high-stakes tests, values between 0.8 and 1.2 are recommended.
  - Otherwise values between 0.5 and 1.5 are still OK.
- Standardised infit and outfit can be positive or negative and have an expected value of 0.
  - Values greater than 3 in absolute value indicate a problem.
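These rules of thumb are easy to turn into a screening helper. The sketch below encodes only the thresholds given above; the function names and the example values are made up for illustration.

```python
def classify_mean_square(value, high_stakes=True):
    """Label an infit or outfit mean square using the ranges given above."""
    low, high = (0.8, 1.2) if high_stakes else (0.5, 1.5)
    return "acceptable" if low <= value <= high else "review"

def flag_standardised(z):
    """True when a standardised fit statistic exceeds 3 in absolute value."""
    return abs(z) > 3

# Hypothetical fit values for three items: (mean square, standardised value).
items = {"item_a": (1.05, 0.4), "item_b": (1.35, 2.1), "item_c": (1.80, 3.6)}

for name, (mean_square, z) in items.items():
    print(
        name,
        classify_mean_square(mean_square, high_stakes=True),
        classify_mean_square(mean_square, high_stakes=False),
        flag_standardised(z),
    )
```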

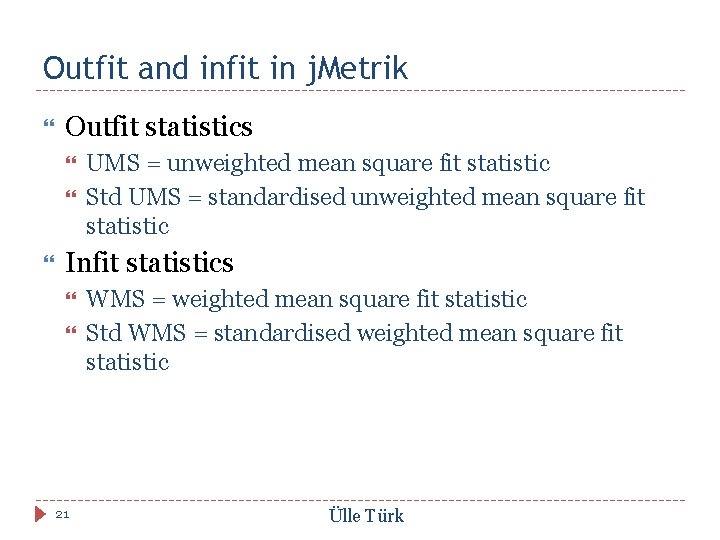

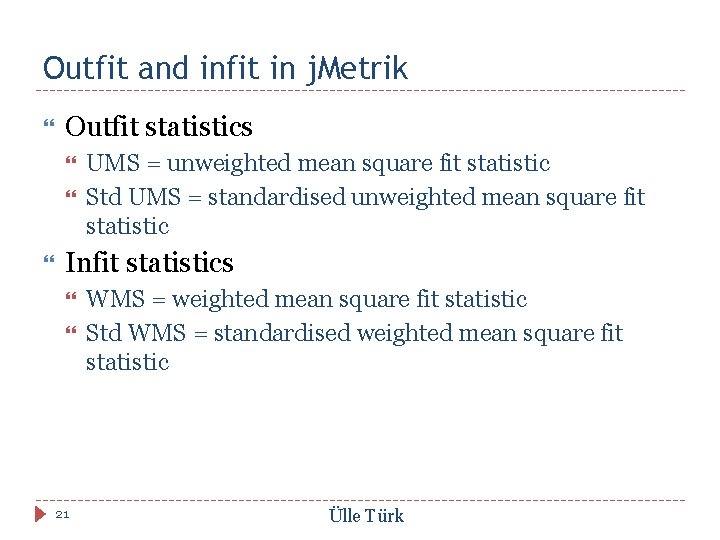

Outfit and infit in jMetrik

Outfit statistics

- UMS = unweighted mean square fit statistic
- Std UMS = standardised unweighted mean square fit statistic

Infit statistics

- WMS = weighted mean square fit statistic
- Std WMS = standardised weighted mean square fit statistic

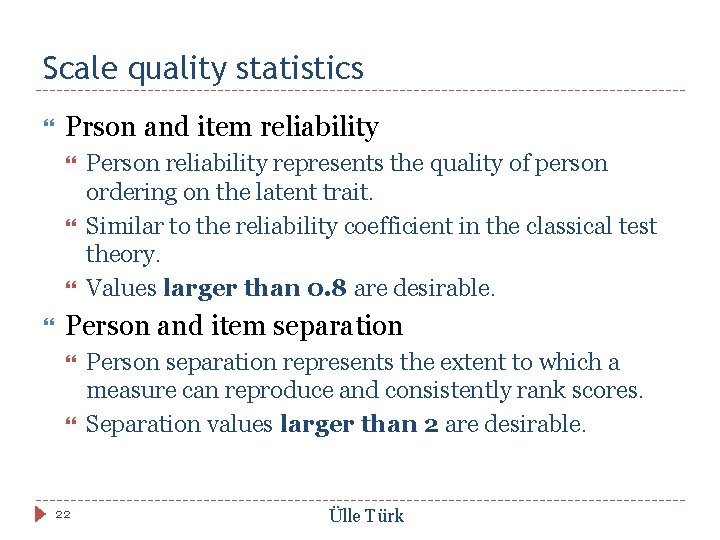

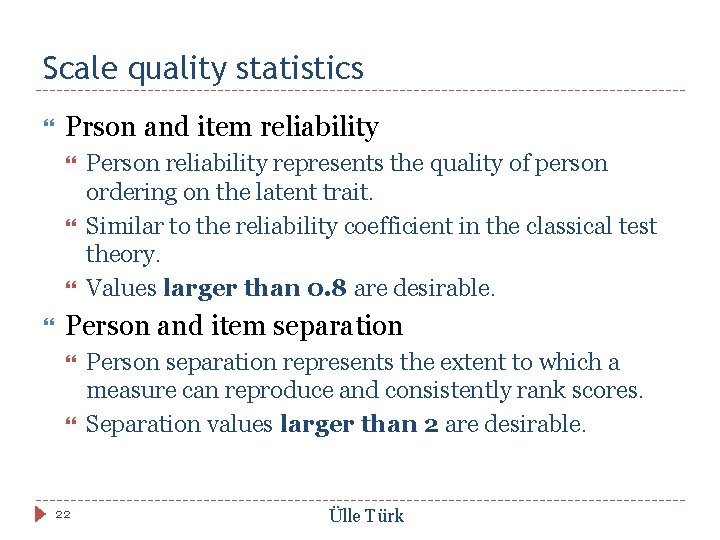

Scale quality statistics

Person and item reliability

- Person reliability represents the quality of person ordering on the latent trait.
- Similar to the reliability coefficient in classical test theory.
- Values larger than 0.8 are desirable.

Person and item separation

- Person separation represents the extent to which a measure can reproduce and consistently rank scores.
- Separation values larger than 2 are desirable.
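The two thresholds above are two views of the same quantity. In Rasch measurement the separation index G and the reliability R are commonly related by G = sqrt(R / (1 − R)), so a reliability of 0.8 corresponds to a separation of 2. The sketch below assumes that standard relationship; the function names are made up for illustration.

```python
import math

def separation_from_reliability(r):
    """Separation index implied by a reliability coefficient (0 <= r < 1)."""
    return math.sqrt(r / (1 - r))

def reliability_from_separation(g):
    """Reliability coefficient implied by a separation index."""
    return g * g / (1 + g * g)

for r in (0.5, 0.8, 0.9):
    print(r, round(separation_from_reliability(r), 2))
```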

Using jMetrik to do Rasch analyses

A quick introduction to jMetrik

jMetrik™ is free and open-source psychometric software. Psychometric methods include:

- classical item analysis,
- reliability estimation,
- test scaling,
- differential item functioning,
- nonparametric item response theory,
- Rasch measurement models,
- item response models (e.g. 3PL, 4PL, GPCM), and
- item response theory linking and equating.

Getting to know jMetrik

- Can be downloaded from the Psychomeasurement Systems website.
- A Quick Start Guide and a somewhat more detailed User Guide are available on the website.
- There is also a short video, Getting started with jMetrik.
- We are going to use the file reading_pretest_b.csv (a semicolon-delimited file).

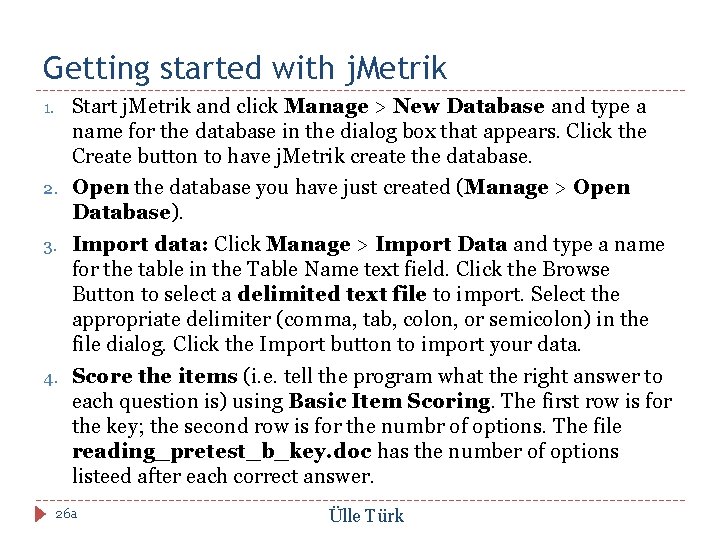

Getting started with jMetrik

1. Start jMetrik, click Manage > New Database and type a name for the database in the dialog box that appears. Click the Create button to have jMetrik create the database.
2. Open the database you have just created (Manage > Open Database).
3. Import data:
   - Click Manage > Import Data and type a name for the table in the Table Name text field.
   - Click the Browse button to select a delimited text file to import.
   - Select the appropriate delimiter (comma, tab, colon, or semicolon) in the file dialog.
   - Click the Import button to import your data.
4. Score the items (i.e. tell the program what the right answer to each question is) using Basic Item Scoring. The first row is for the key; the second row is for the number of options. The file reading_pretest_b_key.doc has the number of options listed after each correct answer.
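What the import and scoring steps accomplish can be sketched outside jMetrik as well. The example below reads a small semicolon-delimited table and scores each response against a key. The column names, the responses and the key are invented for illustration (the layout of reading_pretest_b.csv is not shown in these slides), and scoring a missing response as 0 is an assumption.

```python
import csv
import io

# Hypothetical semicolon-delimited data standing in for a real response file.
raw = io.StringIO("id;r1;r2;r3\n101;A;C;B\n102;B;C;B\n103;A;A;\n")

# Hypothetical answer key: item name -> correct option.
key = {"r1": "A", "r2": "C", "r3": "B"}

def score_rows(handle, answer_key, delimiter=";"):
    """Return one dict of 0/1 item scores per examinee."""
    reader = csv.DictReader(handle, delimiter=delimiter)
    scored = []
    for row in reader:
        # A missing (empty) response never matches the key, so it is scored 0.
        scored.append({item: int(row[item] == answer) for item, answer in answer_key.items()})
    return scored

scored = score_rows(raw, key)
for examinee in scored:
    print(examinee, "total:", sum(examinee.values()))
```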

Running the Rasch analysis in jMetrik

NB! You do not need to score examinees’ answers (Test Scaling) before running the Rasch analyses, as the program calculates the total score for each examinee.

- Click Analyze > Rasch Models (JMLE). The Rasch Models dialog box appears.
- Move the items you want to include in the analysis to the box on the right.
- The lower portion of the box includes three tabs:
  - Global
  - Item
  - Person

Running the Rasch analysis in jMetrik (2)

Global tab

- Leave the numbers in the Global Estimation panel the way they are.
- In the Options panel, decide how to treat missing data.
- Ignore the Linear Transformation panel at this point.

Item tab

- Select the Save item estimates checkbox to save item parameter estimates to a new database table.

Person tab

- Choosing the Save person estimates checkbox will add five new variables to the data table: the sum score (sum), the valid sum score (vsum), the latent trait estimate (theta), the standard error of the latter (stderr), and whether the examinee had an extreme score (extreme).

Running the Rasch analysis in jMetrik (3)

Person tab (continued)

- When you select the Save person fit statistics checkbox, jMetrik will add four new variables to the data table: infit (wms), standardised infit (stdwms), outfit (ums) and standardised outfit (stdums).
- Selecting the Save residuals checkbox will produce a new table that contains residual values. This table can be used to check the assumption of local independence using Yen’s Q3 statistic.

Now you can run the analyses by clicking Run.
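Yen’s Q3 is the correlation between the residuals of two items across examinees; large values suggest that the pair is not locally independent. The sketch below computes Q3 for every item pair from a small residual table. The residual values and item names are hypothetical, and the layout of the table saved by jMetrik may differ.

```python
from itertools import combinations
from statistics import mean

def pearson(x, y):
    """Pearson correlation of two equally long lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def q3_pairs(residuals):
    """Q3 for each item pair; residuals maps item name -> residuals per examinee."""
    return {
        (i, j): pearson(residuals[i], residuals[j])
        for i, j in combinations(residuals, 2)
    }

# Hypothetical residuals for five examinees (same examinee order in every list).
residuals = {
    "r1": [0.30, -0.55, 0.20, -0.40, 0.45],
    "r2": [0.28, -0.50, 0.25, -0.35, 0.40],   # moves together with r1
    "r3": [-0.20, 0.10, 0.35, -0.45, 0.15],
}

q3 = q3_pairs(residuals)
for pair, value in q3.items():
    print(pair, round(value, 2))
```

In this made-up example the pair (r1, r2) stands out with a Q3 close to 1, the kind of pattern that would prompt a closer look at whether the two items depend on each other.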

Creating an item map

- Item maps summarise the distribution of person ability and the distribution of item difficulty for a whole test.
- They are useful for determining whether items align with the examinee population and for identifying parts of the scale that are in need of additional items.

To create an item map:

- Select the table that contains the person ability estimates.
- Click Graph > Item Map to start the Item Map dialog.
- In the Variable Selection panel at the top of the dialog, select theta (person ability).
- In the Item Parameter Table panel, click the Select button to choose the item parameter table.
- Click the Run button to create the item map.

CTT and IRT compared

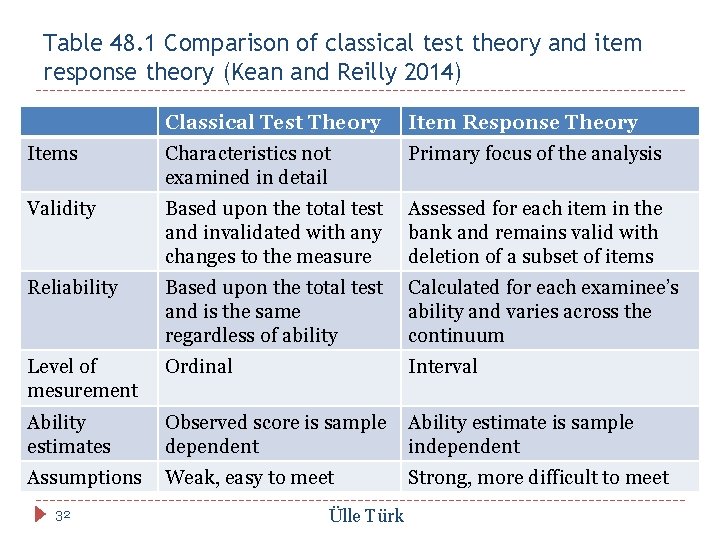

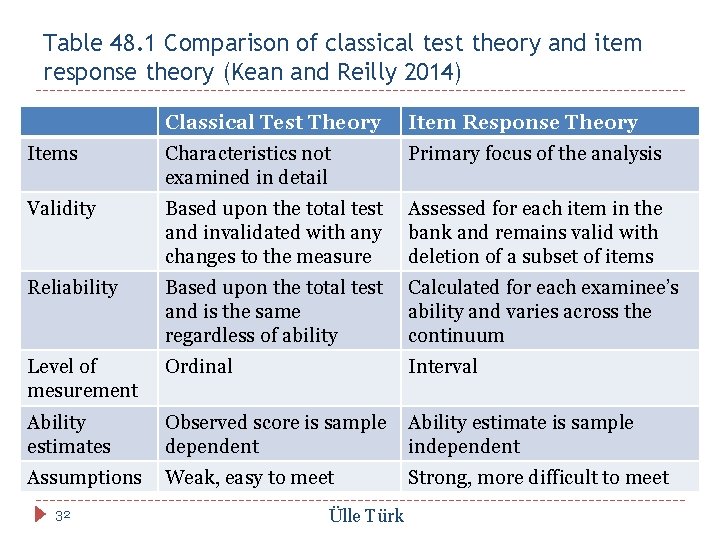

Table 48.1 Comparison of classical test theory and item response theory (Kean and Reilly 2014)

| | Classical Test Theory | Item Response Theory |
|---|---|---|
| Items | Characteristics not examined in detail | Primary focus of the analysis |
| Validity | Based upon the total test and invalidated with any changes to the measure | Assessed for each item in the bank and remains valid with deletion of a subset of items |
| Reliability | Based upon the total test and is the same regardless of ability | Calculated for each examinee’s ability and varies across the continuum |
| Level of measurement | Ordinal | Interval |
| Ability estimates | Observed score is sample dependent | Ability estimate is sample independent |
| Assumptions | Weak, easy to meet | Strong, more difficult to meet |

Main advantages of IRT over CTT

- IRT allows item banking, which means that each candidate can be given a completely different set of items and the test will still provide an equally accurate estimate of ability.
- IRT allows for adaptive testing, in which a test gets more or less difficult depending on the performance of the candidate, tailoring the test to their ability.

If you want to create a proper item bank or start using adaptive testing, learn how to use IRT.
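The core step of adaptive testing can be sketched in a few lines: given the current ability estimate, pick the unused item from the bank that is most informative at that ability. The sketch assumes a Rasch-calibrated bank, where information is P(1 − P) and is largest for the item whose difficulty is closest to the ability estimate. The bank contents and function names are hypothetical, and a real adaptive test would also re-estimate ability after each response.

```python
import math

def rasch_probability(theta, b):
    """Probability of a correct response under the Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def item_information(theta, b):
    """Rasch item information at ability theta."""
    p = rasch_probability(theta, b)
    return p * (1 - p)

def next_item(theta, bank, administered):
    """Name of the most informative item not yet administered."""
    candidates = {name: b for name, b in bank.items() if name not in administered}
    return max(candidates, key=lambda name: item_information(theta, candidates[name]))

# Hypothetical calibrated item bank: item name -> difficulty.
bank = {"easy": -1.5, "medium": 0.0, "hard": 1.4}

print(next_item(1.0, bank, set()))       # candidate currently estimated as strong
print(next_item(-1.0, bank, set()))      # candidate currently estimated as weak
print(next_item(1.0, bank, {"hard"}))    # the best-matching item was already used
```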

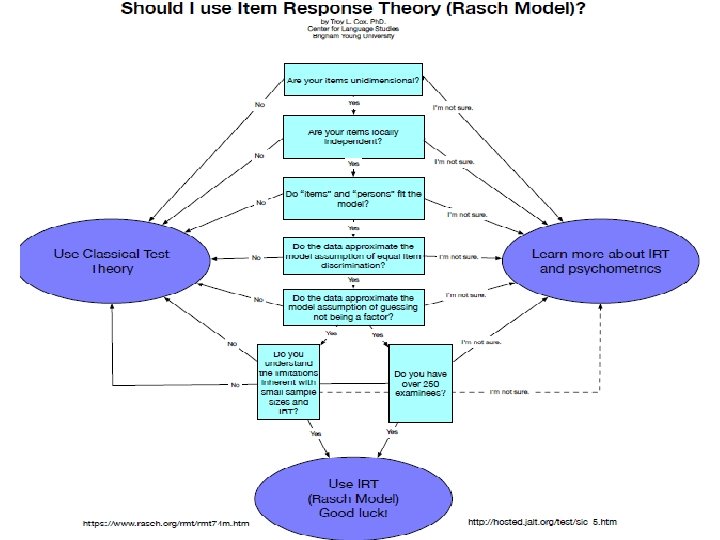

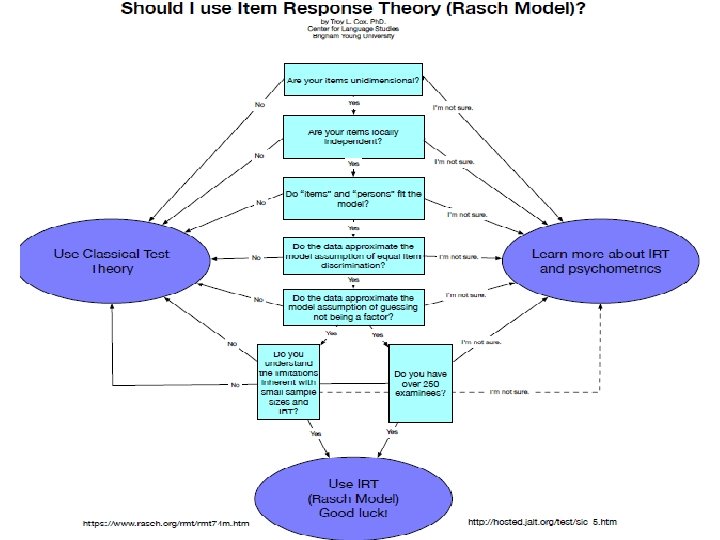

Are you ready for IRT? Troy L. Cox

Sources

References

- Kean, Jacob and Jamie Reilly. 2014. Classical Test Theory. In: F. M. Hammond, J. F. Malec, T. Nick, & R. Buschbacher (Eds.) Handbook for Clinical Research: Design, Statistics and Implementation. Chapter 48, pp. 192–194. New York, NY: Demos Medical Publishing.
- Kean, Jacob and Jamie Reilly. 2014. Item Response Theory. In: F. M. Hammond, J. F. Malec, T. Nick, & R. Buschbacher (Eds.) Handbook for Clinical Research: Design, Statistics and Implementation. Chapter 49, pp. 195–198. New York, NY: Demos Medical Publishing.

Some useful sources

- Janssen, Gerriet, Valerie Meier & Jonathan Trace. 2014. Classical Test Theory and Item Response Theory: Two understandings of one high-stakes performance exam. Colombian Applied Linguistics Journal, 16(2), 167–184.
- Thompson, Nathan. 2016. What is item response theory? From Assessment Systems.
- Item Response Theory from Columbia University Mailman School of Public Health.

Irt 5433

Irt 5433 Irt to amd

Irt to amd Irt lab

Irt lab Cppb-irt adalah

Cppb-irt adalah Irt software

Irt software Lori vanscoy

Lori vanscoy Nvu tutorial

Nvu tutorial Sadlier vocabulary workshop level d unit 1

Sadlier vocabulary workshop level d unit 1 Just right scale

Just right scale We will not be shaken we will not be moved

We will not be shaken we will not be moved Attention is not explanation

Attention is not explanation Ears that hear and eyes that see

Ears that hear and eyes that see Love is not all imagery

Love is not all imagery Not a rustling leaf not a bird

Not a rustling leaf not a bird Too broad too narrow

Too broad too narrow Pp ran

Pp ran If you are not confused you're not paying attention

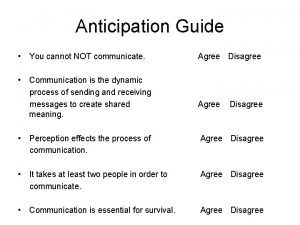

If you are not confused you're not paying attention You cannot not communicate

You cannot not communicate Negation of if

Negation of if If you can't measure it it doesn't exist

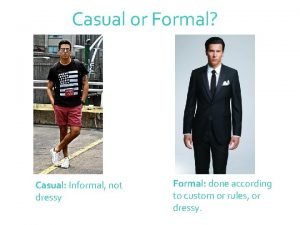

If you can't measure it it doesn't exist Not dressy

Not dressy Bud not buddy chapter 6 summary

Bud not buddy chapter 6 summary When god created woman he was working late

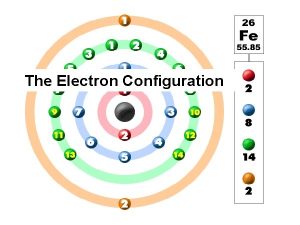

When god created woman he was working late Germanium electron configuration

Germanium electron configuration One to one and onto

One to one and onto The divine masculine

The divine masculine Staj mazeret izin dilekçesi

Staj mazeret izin dilekçesi Why does west tell sis not to play in the sun parlor?

Why does west tell sis not to play in the sun parlor? Why does curley wear a vaseline-filled glove on one hand

Why does curley wear a vaseline-filled glove on one hand Jlex does not deal with:

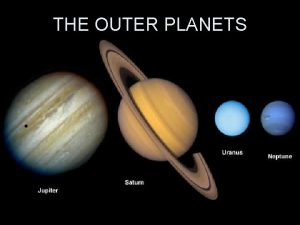

Jlex does not deal with: The first four planets

The first four planets Communication is inescapable

Communication is inescapable Control structure

Control structure Lirik lagu harap akan tuhan

Lirik lagu harap akan tuhan Heart not beating

Heart not beating Why not tri

Why not tri You see jody's new dog yesterday?

You see jody's new dog yesterday? Characteristics of broadcast writing

Characteristics of broadcast writing Cycloheptatriene cation is aromatic or not

Cycloheptatriene cation is aromatic or not