An Approach for Implementing Efficient Superscalar CISC Processors



Shiliang Hu, Ilhyun Kim, Mikko Lipasti, James Smith

HPCA 2006, Austin, TX

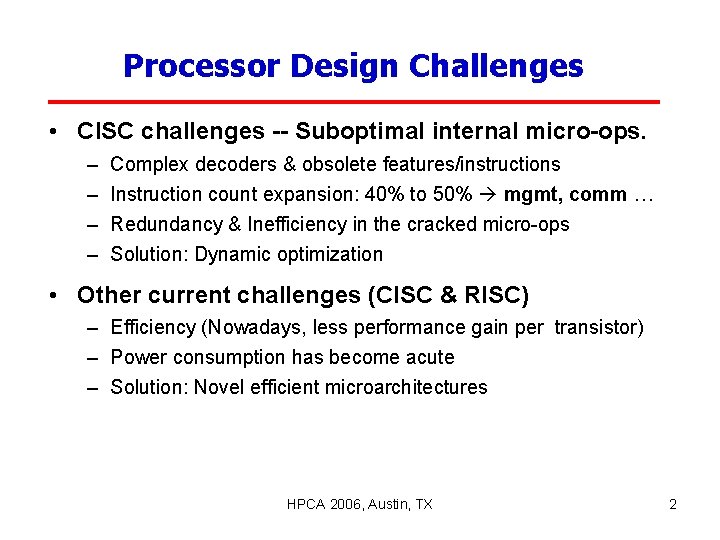

Processor Design Challenges

- CISC challenges: suboptimal internal micro-ops
  - Complex decoders and obsolete features/instructions
  - Instruction count expansion: 40% to 50% (mgmt, comm …)
  - Redundancy and inefficiency in the cracked micro-ops
  - Solution: dynamic optimization
- Other current challenges (CISC and RISC)
  - Efficiency: less performance gain per transistor nowadays
  - Power consumption has become acute
  - Solution: novel, efficient microarchitectures

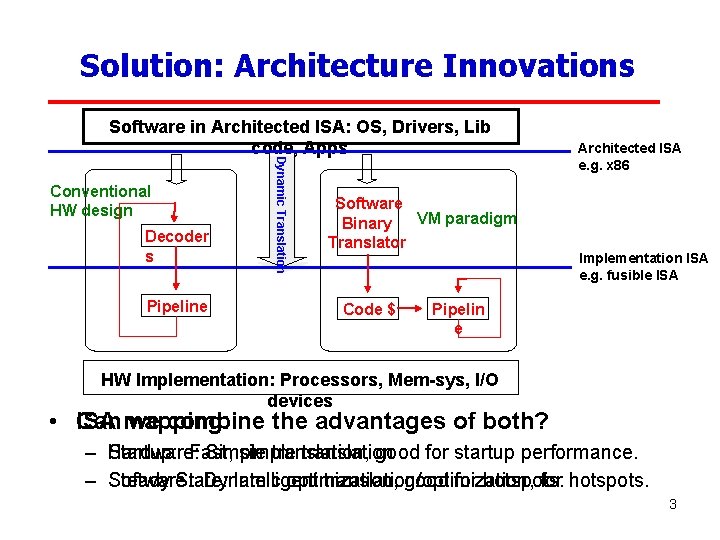

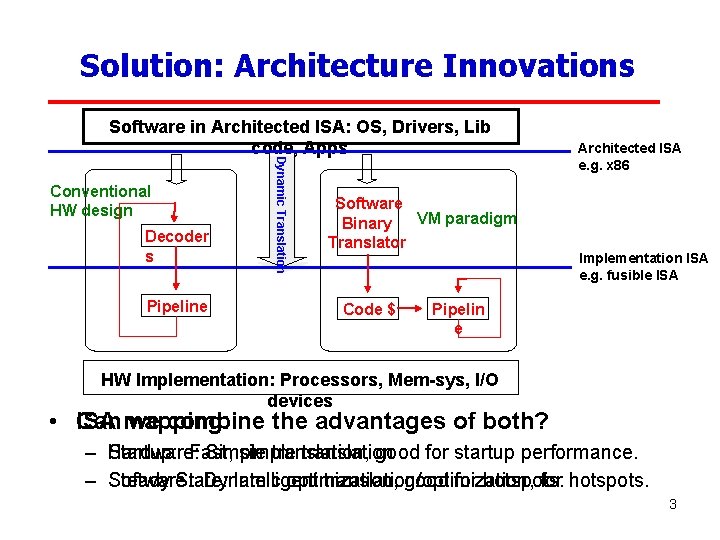

Solution: Architecture Innovations

Figure: software in the architected ISA (OS, drivers, library code, apps) running on the hardware implementation (processors, memory system, I/O devices). Conventional HW design: decoders feed the pipeline. VM paradigm: a software binary translator with a code $ maps the architected ISA (e.g. x86) to the implementation ISA (e.g. fusible ISA), which feeds the pipeline.

- ISA mapping:
  - Hardware: simple translation, good for startup performance.
  - Software: dynamic optimization, good for hotspots.
- Can we combine the advantages of both?
  - Startup: fast, simple translation.
  - Steady state: intelligent translation/optimization for hotspots.

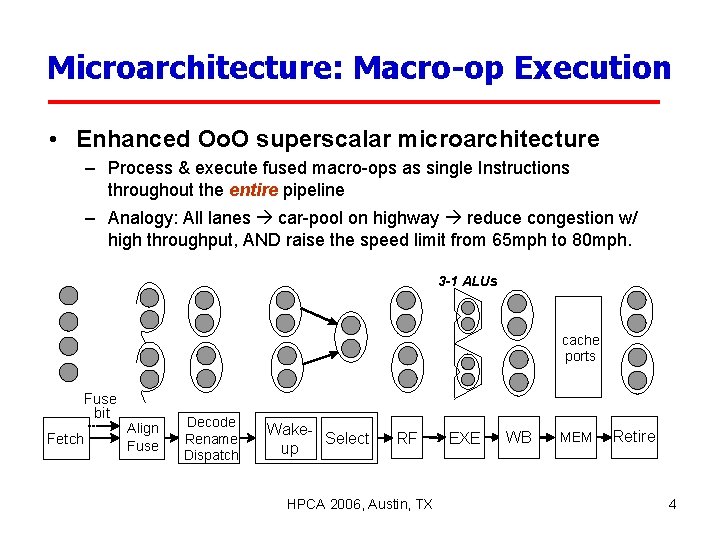

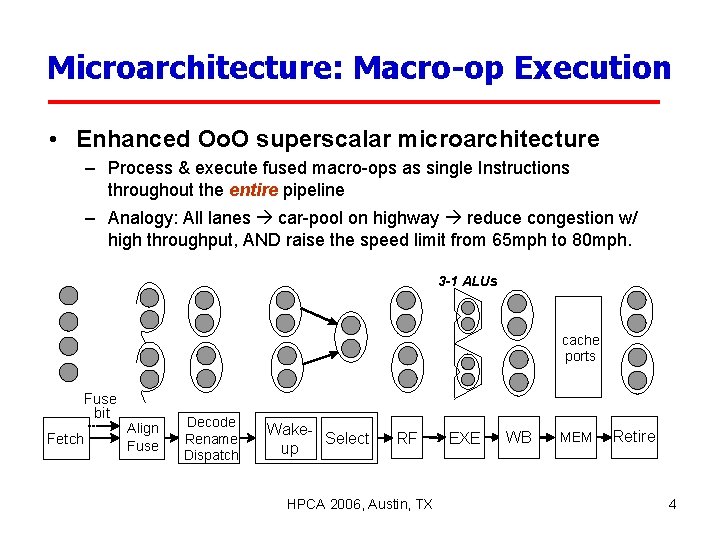

Microarchitecture: Macro-op Execution

- Enhanced OoO superscalar microarchitecture
  - Process and execute fused macro-ops as single instructions throughout the entire pipeline.
  - Analogy: if all lanes car-pool on the highway, congestion drops at high throughput, AND the speed limit can be raised from 65 mph to 80 mph.

Figure: macro-op pipeline (Fetch, Align/Fuse, Decode, Rename, Dispatch, Wakeup, Select, RF, EXE, WB/MEM, Retire) with a fuse bit, collapsed 3-1 ALUs, and cache ports.

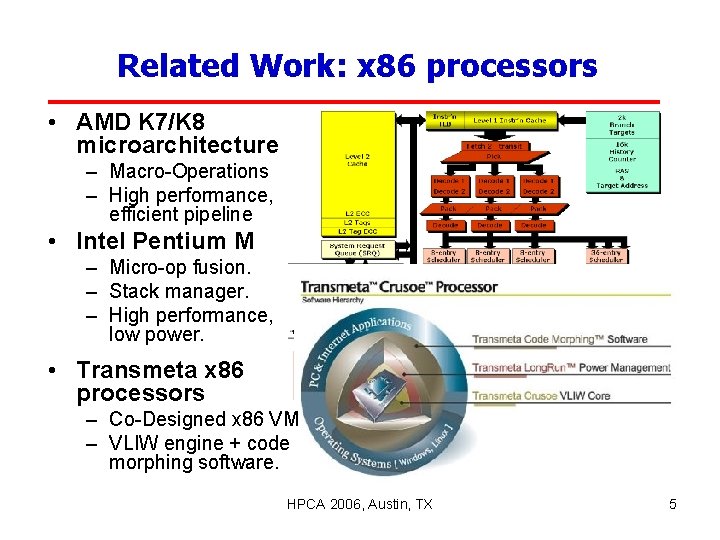

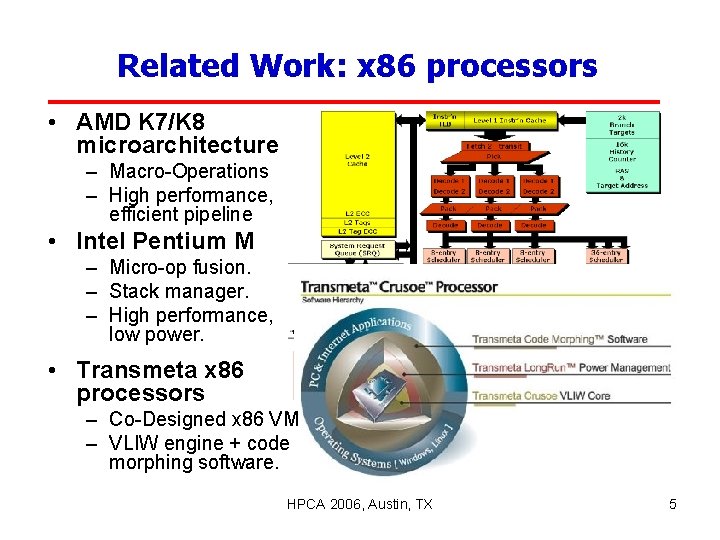

Related Work: x86 processors

- AMD K7/K8 microarchitecture
  - Macro-operations
  - High-performance, efficient pipeline
- Intel Pentium M
  - Micro-op fusion
  - Stack manager
  - High performance, low power
- Transmeta x86 processors
  - Co-designed x86 VM
  - VLIW engine + code morphing software

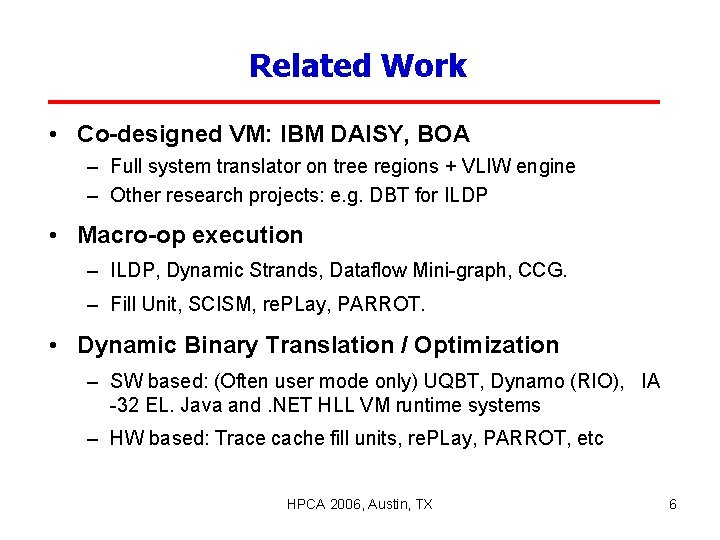

Related Work

- Co-designed VM: IBM DAISY, BOA
  - Full-system translator on tree regions + VLIW engine
  - Other research projects: e.g. DBT for ILDP
- Macro-op execution
  - ILDP, Dynamic Strands, Dataflow Mini-graph, CCG
  - Fill Unit, SCISM, rePLay, PARROT
- Dynamic binary translation / optimization
  - SW based (often user mode only): UQBT, Dynamo (RIO), IA-32 EL; Java and .NET HLL VM runtime systems
  - HW based: trace cache fill units, rePLay, PARROT, etc.

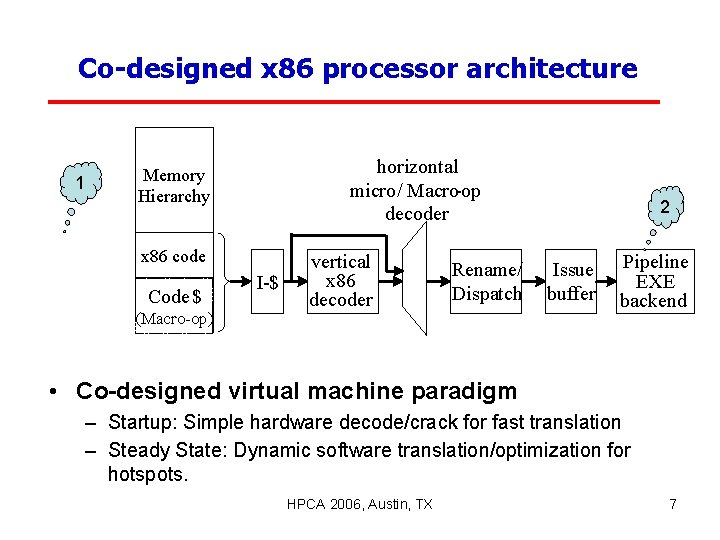

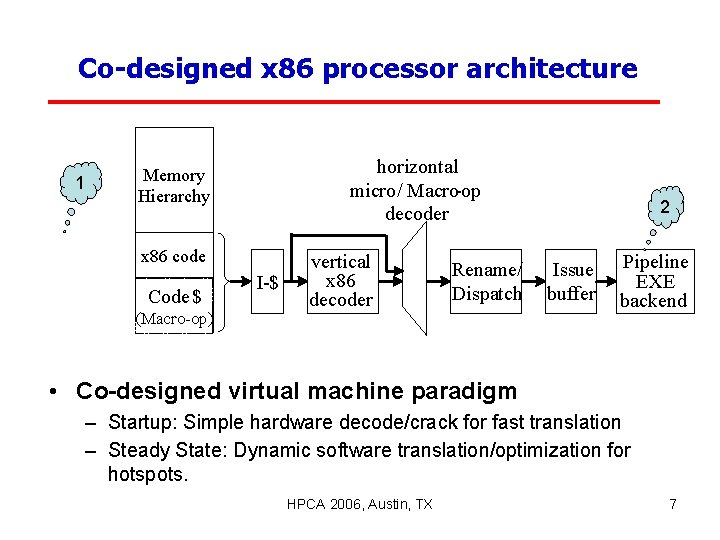

Co-designed x86 processor architecture

Figure: memory hierarchy holding x86 code and the macro-op code $, VM translation/optimization software, I-$, vertical x86 decoder, horizontal micro-/macro-op decoder, rename/dispatch, issue buffer, and pipeline EXE backend.

- Co-designed virtual machine paradigm
  - Startup: simple hardware decode/crack for fast translation.
  - Steady state: dynamic software translation/optimization for hotspots.

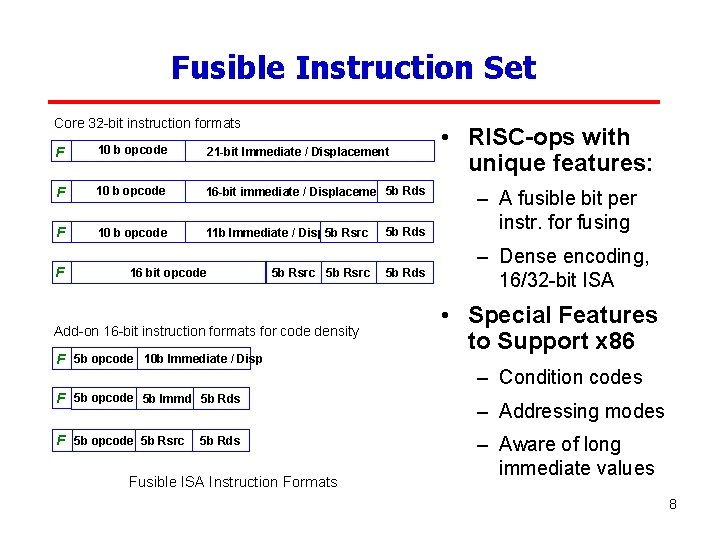

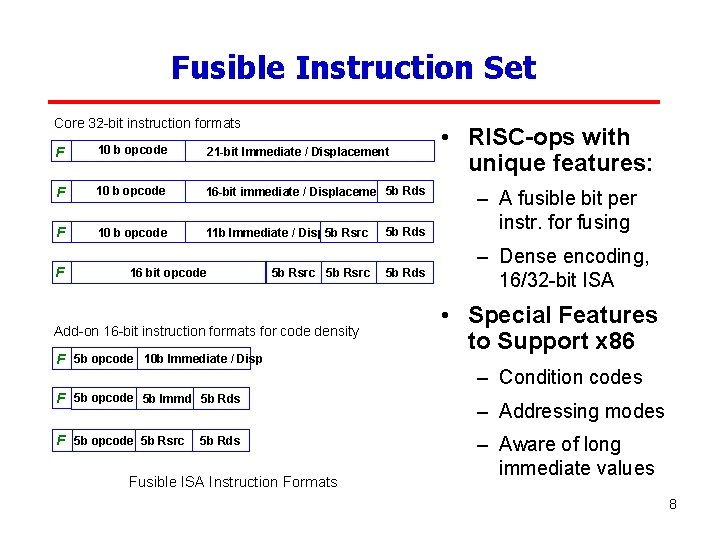

Fusible Instruction Set

Fusible ISA instruction formats (F = fusible bit).

Core 32-bit instruction formats:

- F | 10b opcode | 21-bit immediate/displacement
- F | 10b opcode | 16-bit immediate/displacement | 5b Rds
- F | 10b opcode | 11b immediate/disp | 5b Rsrc | 5b Rds
- F | 16-bit opcode | 5b Rsrc | 5b Rsrc | 5b Rds

Add-on 16-bit instruction formats for code density:

- F | 5b opcode | 10b immediate/disp
- F | 5b opcode | 5b immd | 5b Rds
- F | 5b opcode | 5b Rsrc | 5b Rds

- RISC-ops with unique features:
  - A fusible bit per instruction for fusing
  - Dense encoding, 16/32-bit ISA
- Special features to support x86:
  - Condition codes
  - Addressing modes
  - Awareness of long immediate values
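As a rough illustration of the fusible bit in the 32-bit format with a 10-bit opcode, a 16-bit immediate, and a 5-bit Rds, the following Python sketch packs and unpacks one instruction word. The field order (fusible bit in the most significant position), the field names, and the opcode value used below are assumptions made for this example; the slide gives only the field widths.

```python
# Field widths come from the slide; placing F in the top bit and the
# remaining fields in this order is an assumption for illustration.
FIELDS = (("fuse", 1), ("opcode", 10), ("imm", 16), ("rds", 5))


def encode(**values):
    """Pack the named fields into one 32-bit word, first field in the top bits."""
    word = 0
    for name, width in FIELDS:
        value = values[name]
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        word = (word << width) | value
    return word


def decode(word):
    """Unpack a 32-bit word back into its named fields."""
    fields = {}
    for name, width in reversed(FIELDS):
        fields[name] = word & ((1 << width) - 1)
        word >>= width
    return fields


# Hypothetical opcode 0x2A; fuse=1 marks the op as the head of a fused pair.
word = encode(fuse=1, opcode=0x2A, imm=0x7C, rds=3)
print(f"{word:#010x}", decode(word))
```

With this layout a decoder would only need to inspect the top bit of each instruction to learn whether it is fused with the one that follows.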

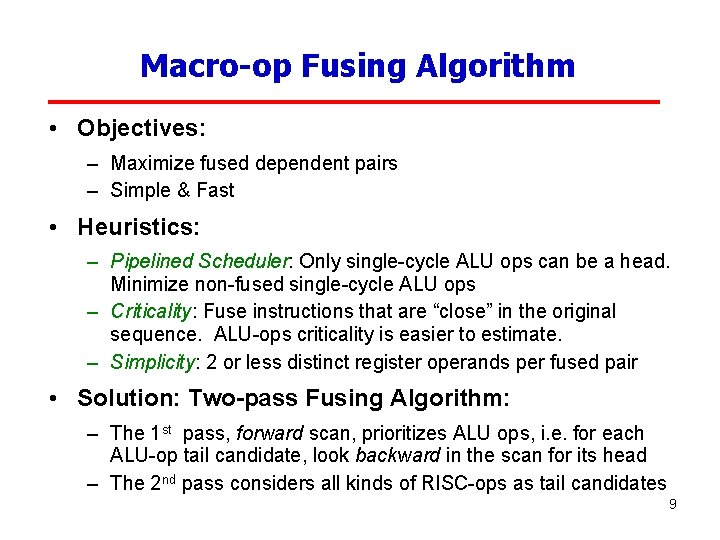

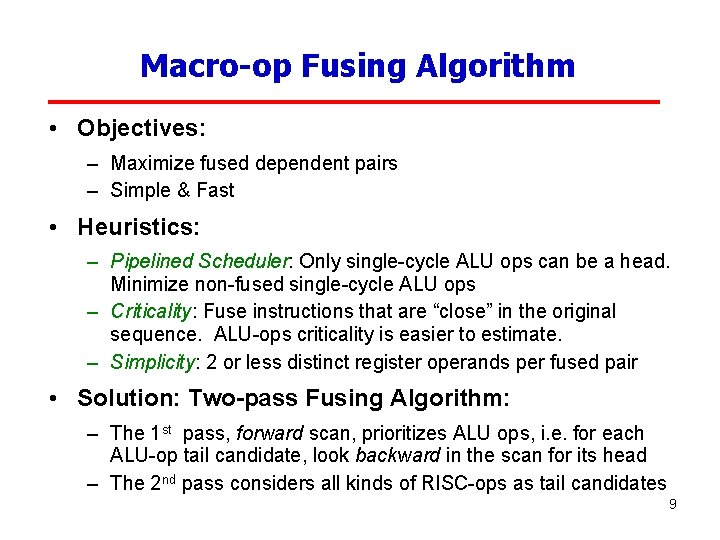

Macro-op Fusing Algorithm

- Objectives:
  - Maximize fused dependent pairs
  - Simple and fast
- Heuristics:
  - Pipelined scheduler: only single-cycle ALU ops can be a head. Minimize non-fused single-cycle ALU ops.
  - Criticality: fuse instructions that are “close” in the original sequence. ALU-op criticality is easier to estimate.
  - Simplicity: two or fewer distinct register operands per fused pair.
- Solution: two-pass fusing algorithm
  - The first pass, a forward scan, prioritizes ALU ops: for each ALU-op tail candidate, look backward in the scan for its head.
  - The second pass considers all kinds of RISC-ops as tail candidates.

![Fusing Algorithm: Example](https://slidetodoc.com/presentation_image/09af108fea42ecdf6d82d269c226d821/image-10.jpg)

Fusing Algorithm: Example

x86 asm:

1. `lea eax, DS:[edi + 01]`
2. `mov [DS:080b8658], eax`
3. `movzx ebx, SS:[ebp + ecx << 1]`
4. `and eax, 0000007f`
5. `mov edx, DS:[eax + esi << 0 + 0x7c]`

RISC-ops:

1. `ADD Reax, Redi, 1`
2. `ST Reax, mem[R22]`
3. `LD.zx Rebx, mem[Rebp + Recx << 1]`
4. `AND Reax, 0000007f`
5. `ADD R17, Reax, Resi`
6. `LD Redx, mem[R17 + 0x7c]`

After fusing (macro-ops):

1. `ADD R18, Redi, 1 :: AND Reax, R18, 007f`
2. `ST R18, mem[R22]`
3. `LD.zx Rebx, mem[Rebp + Recx << 1]`
4. `ADD R17, Reax, Resi :: LD Redx, mem[R17+0x7c]`
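The two-pass heuristic can be tried out on the six RISC-ops of this example with a short Python sketch. It is a simplified illustration under stated assumptions, not the authors' translator: the `RiscOp` record and the helper names are hypothetical, only true register dependences are tracked, and memory ordering, condition codes, and the register re-assignment visible in the slide (for example `R18`) are ignored. The fused pair is assumed to sit at the head's position, so only the tail's closest producer is considered as a head.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RiscOp:
    """Hypothetical record for one RISC-op (not the paper's IR)."""
    text: str
    kind: str            # "alu" = single-cycle ALU op; otherwise "load" or "store"
    dst: Optional[str]   # destination register, None for stores
    srcs: tuple          # source registers (immediates omitted)


def last_writer(ops, reg, before):
    """Index of the closest earlier op that writes reg, or None."""
    for i in range(before - 1, -1, -1):
        if ops[i].dst == reg:
            return i
    return None


def find_head(ops, t, fused):
    """Look backward from tail candidate t for a head it may fuse with."""
    tail = ops[t]
    producers = [last_writer(ops, reg, t) for reg in tail.srcs]
    producers = [p for p in producers if p is not None]
    if not producers:
        return None
    h = max(producers)   # closest producer: the "close in sequence" heuristic
    head = ops[h]
    if h in fused or head.kind != "alu":
        return None      # only an unfused single-cycle ALU op can be a head
    pair_srcs = set(head.srcs) | (set(tail.srcs) - {head.dst})
    if len(pair_srcs) > 2:
        return None      # at most two distinct source registers per pair
    return h


def two_pass_fuse(ops):
    """Pass 1 takes only ALU ops as tails; pass 2 takes any RISC-op."""
    fused, pairs = set(), []
    for tail_kinds in (("alu",), None):
        for t, op in enumerate(ops):
            if t in fused or (tail_kinds and op.kind not in tail_kinds):
                continue
            h = find_head(ops, t, fused)
            if h is not None:
                fused.update((h, t))
                pairs.append((h, t))
    return sorted(pairs)


EXAMPLE_OPS = [
    RiscOp("ADD Reax, Redi, 1", "alu", "eax", ("edi",)),
    RiscOp("ST Reax, mem[R22]", "store", None, ("eax", "R22")),
    RiscOp("LD.zx Rebx, mem[Rebp + Recx << 1]", "load", "ebx", ("ebp", "ecx")),
    RiscOp("AND Reax, 0000007f", "alu", "eax", ("eax",)),
    RiscOp("ADD R17, Reax, Resi", "alu", "R17", ("eax", "esi")),
    RiscOp("LD Redx, mem[R17 + 0x7c]", "load", "edx", ("R17",)),
]

for h, t in two_pass_fuse(EXAMPLE_OPS):
    print(EXAMPLE_OPS[h].text, "::", EXAMPLE_OPS[t].text)
```

On this input the sketch pairs the first `ADD` with the `AND` in pass 1 and the second `ADD` with the final `LD` in pass 2, the same two pairings shown in the slide.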

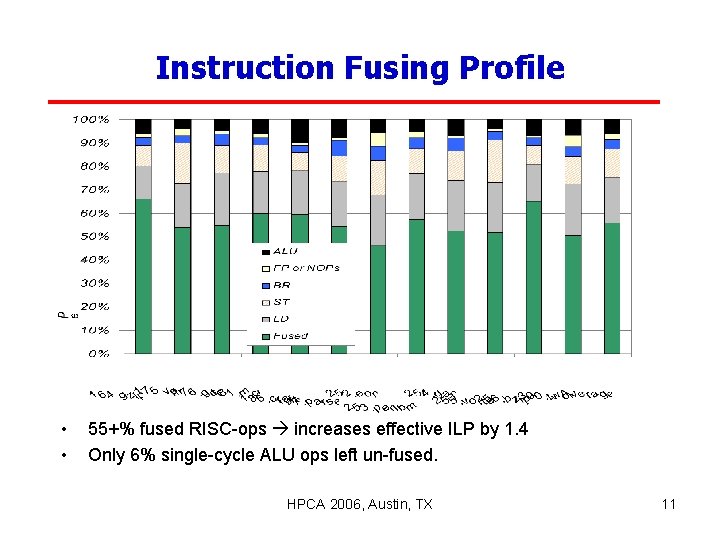

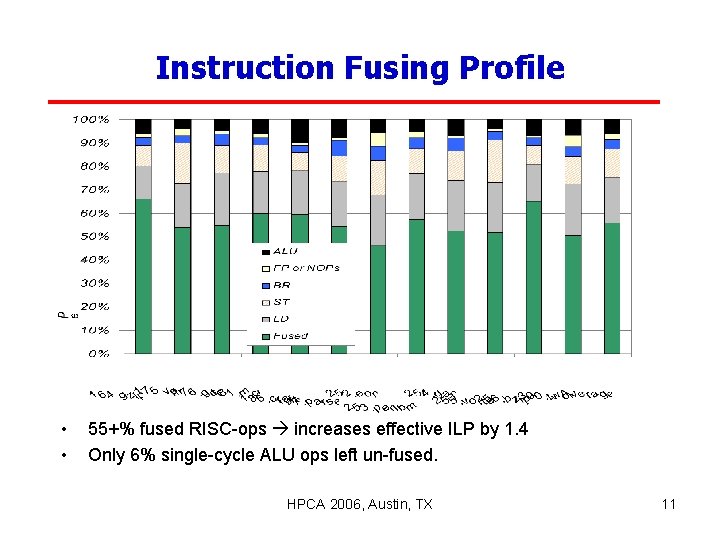

Instruction Fusing Profile

- 55+% of RISC-ops are fused, which increases effective ILP by 1.4.
- Only 6% of single-cycle ALU ops are left un-fused.
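One plausible reading of the 1.4 figure, offered here as an interpretation rather than something the slide states, is a simple count of instruction entities: each fused pair turns two RISC-ops into one macro-op, so the same work flows through the pipeline in fewer entities. The function name below is hypothetical.

```python
def entity_reduction_factor(fused_fraction):
    """Ratio of RISC-op count to entity count after pairwise fusing.

    fused_fraction is the share of RISC-ops that end up inside a fused pair.
    Unfused ops stay one entity each; fused ops contribute half an entity each.
    """
    entities_per_risc_op = (1.0 - fused_fraction) + fused_fraction / 2.0
    return 1.0 / entities_per_risc_op


# With 55% of RISC-ops fused, 100 RISC-ops become 45 + 27.5 = 72.5 entities,
# a ratio of about 1.38.
print(round(entity_reduction_factor(0.55), 2))
```

Under this reading, a machine of fixed width that handles a macro-op as one entity processes roughly 1.4 RISC-ops per slot on average.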

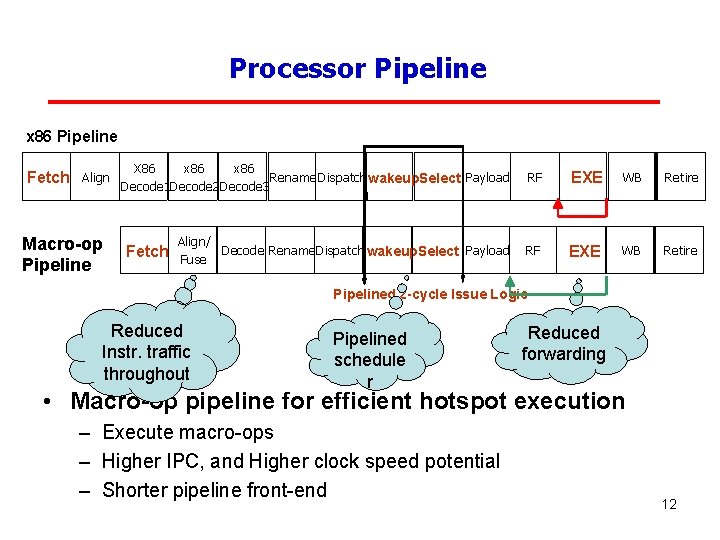

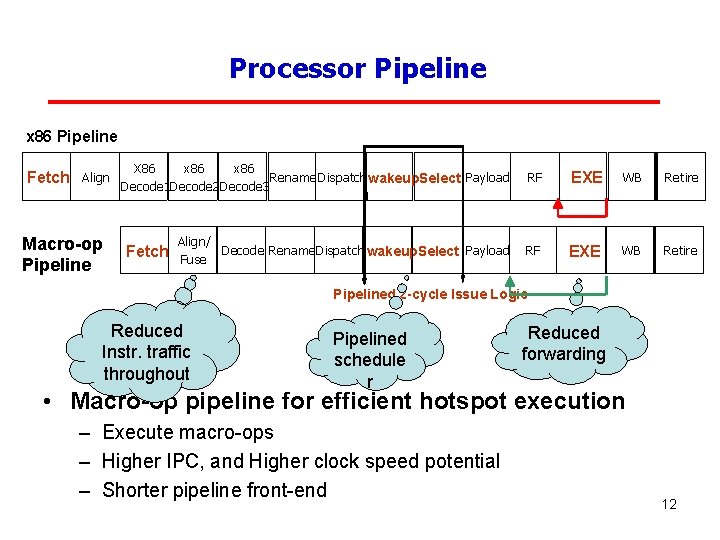

Processor Pipeline

- x86 pipeline: Fetch, Align, x86 Decode 1, x86 Decode 2, x86 Decode 3, Rename, Dispatch, Wakeup, Select, Payload, RF, EXE, WB, Retire
- Macro-op pipeline: Fetch, Align/Fuse, Decode, Rename, Dispatch, Wakeup, Select, Payload, RF, EXE, WB, Retire
- Figure annotations: pipelined 2-cycle issue logic (pipelined scheduler), reduced instruction traffic throughout, reduced forwarding

- Macro-op pipeline for efficient hotspot execution
  - Execute macro-ops
  - Higher IPC and higher clock speed potential
  - Shorter pipeline front-end

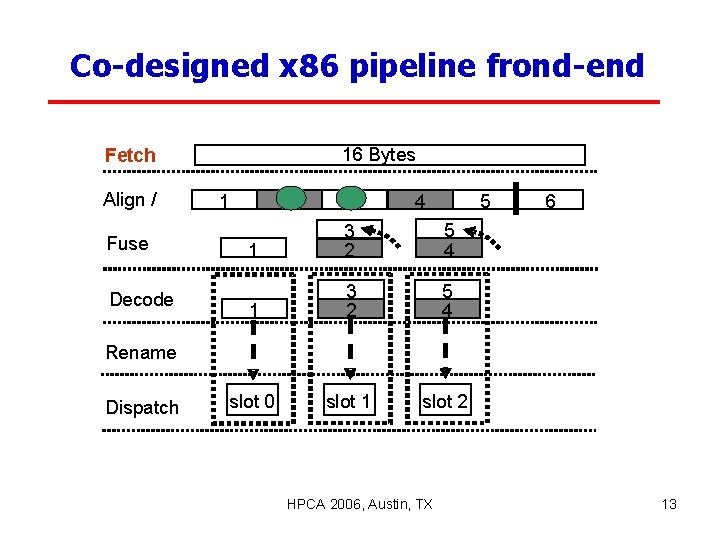

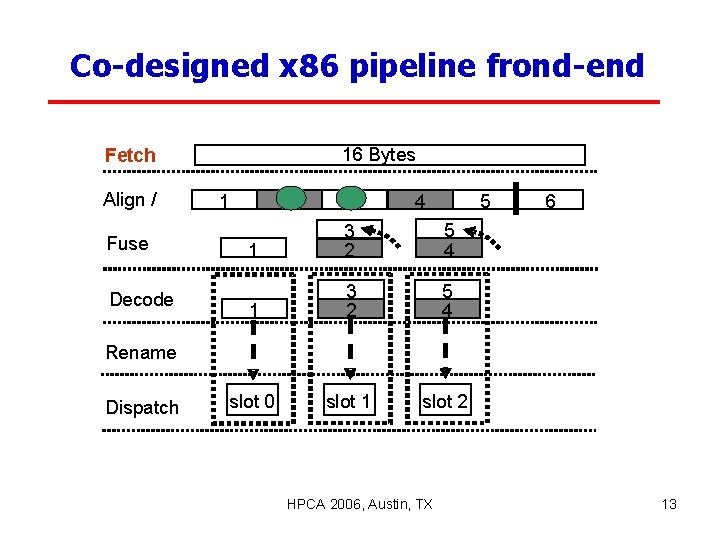

Co-designed x86 pipeline front-end

Figure: 16-byte fetch, align/fuse, decode into slots 0 to 2, then rename and dispatch.

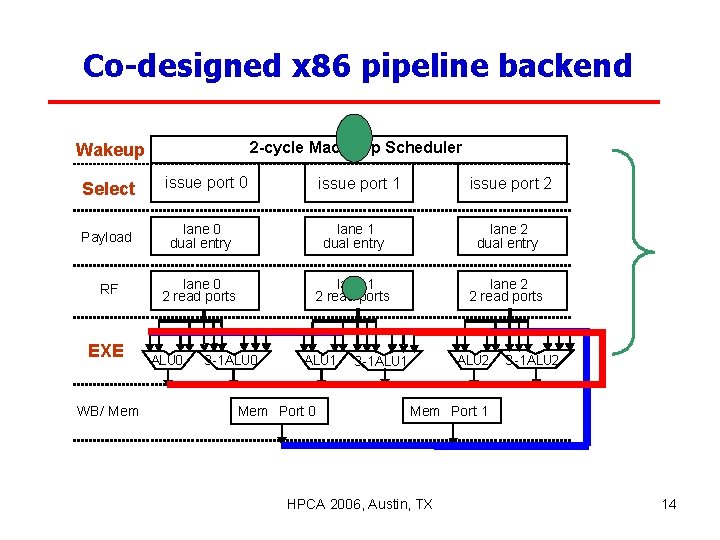

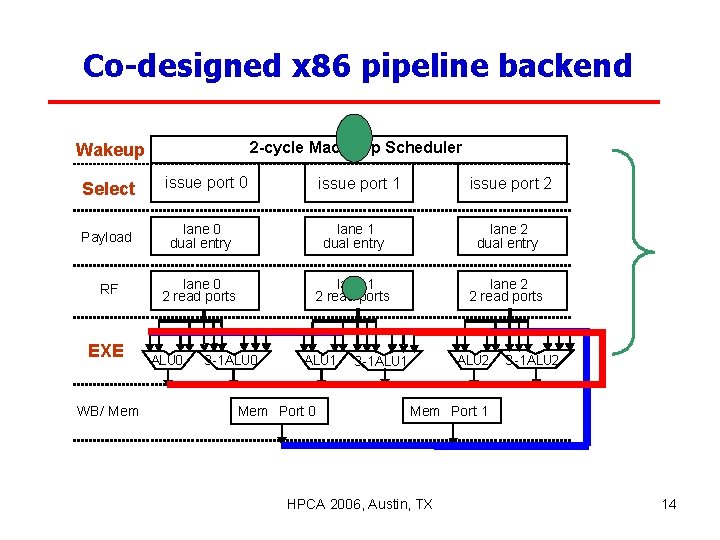

Co-designed x86 pipeline backend

Figure: 2-cycle macro-op scheduler (wakeup, select) with issue ports 0 to 2; payload lanes 0 to 2, each dual entry; RF lanes 0 to 2, each with 2 read ports; EXE with ALU 0 to ALU 2 and collapsed 3-1 ALU 0 to 3-1 ALU 2; WB/Mem with Mem Port 0 and Mem Port 1.

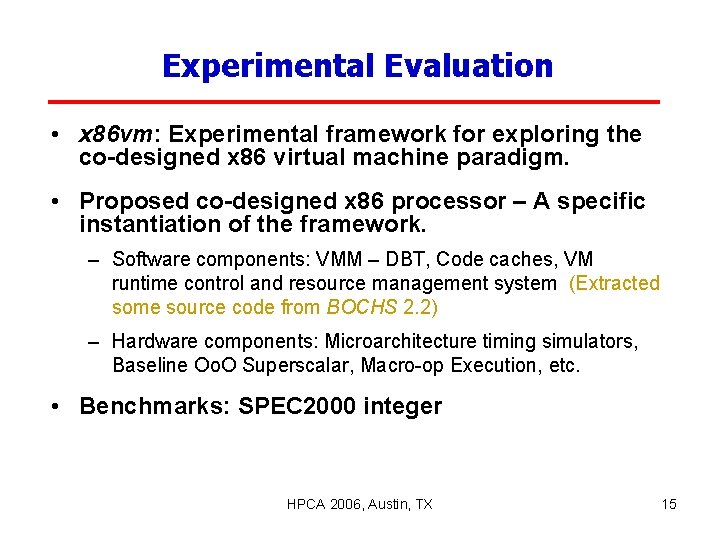

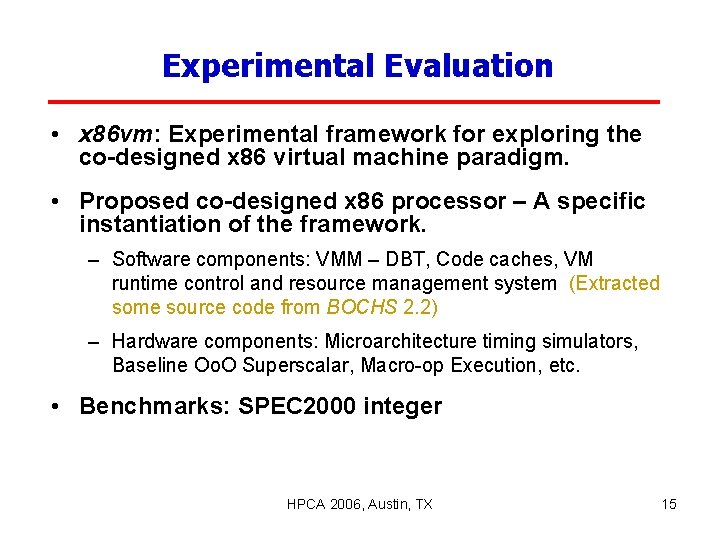

Experimental Evaluation

- x86vm: experimental framework for exploring the co-designed x86 virtual machine paradigm.
- Proposed co-designed x86 processor
  - A specific instantiation of the framework.
  - Software components: VMM (DBT, code caches, VM runtime control and resource management system). Some source code was extracted from BOCHS 2.2.
  - Hardware components: microarchitecture timing simulators (baseline OoO superscalar, macro-op execution, etc.).
- Benchmarks: SPEC 2000 integer

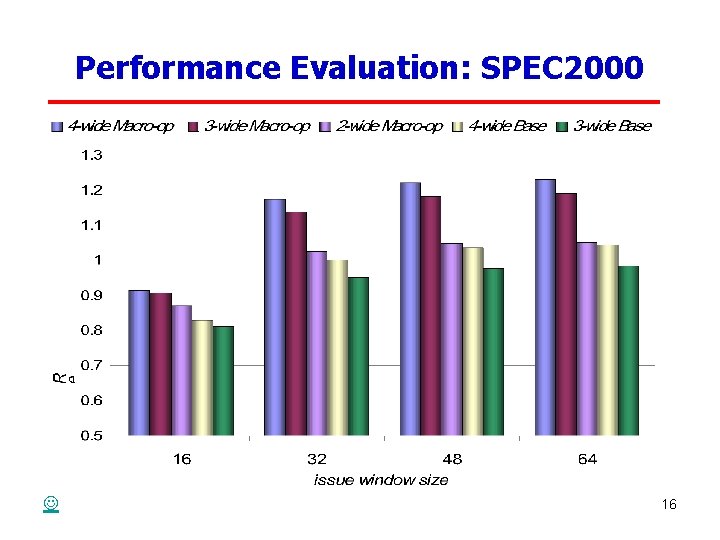

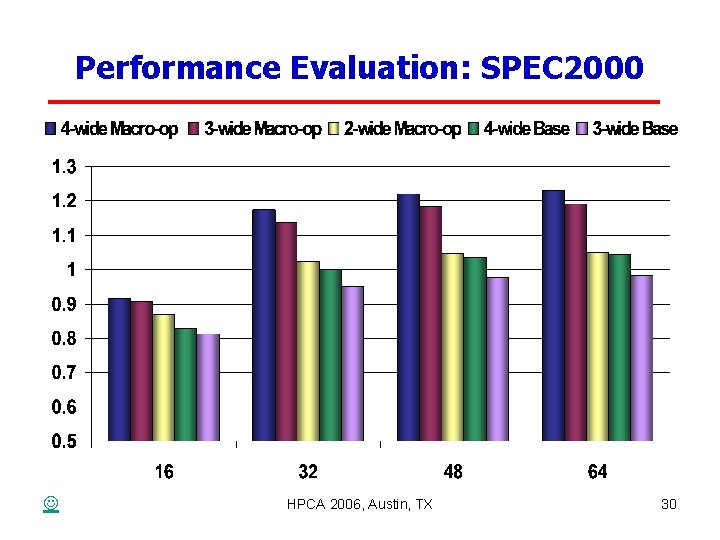

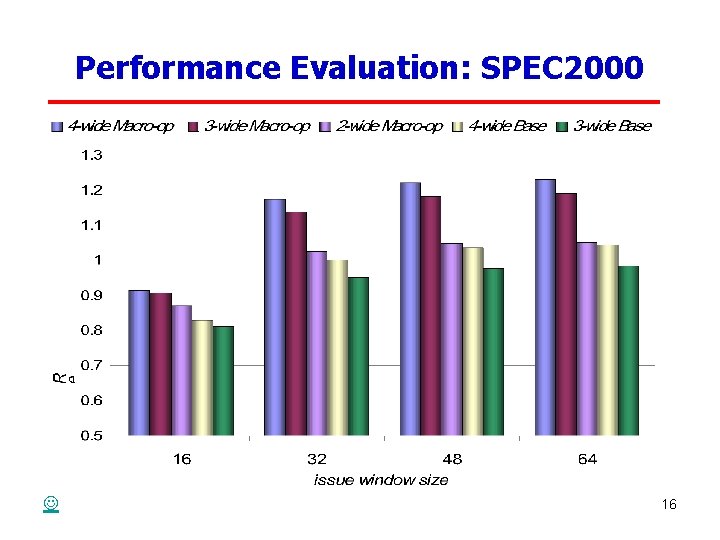

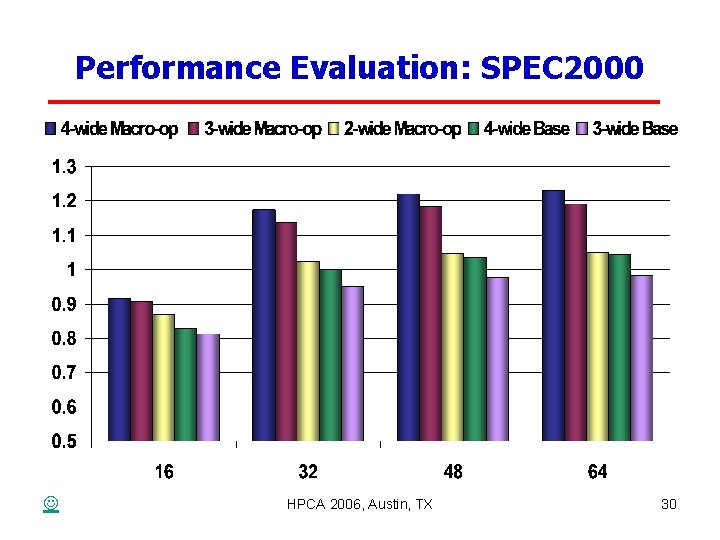

Performance Evaluation: SPEC 2000

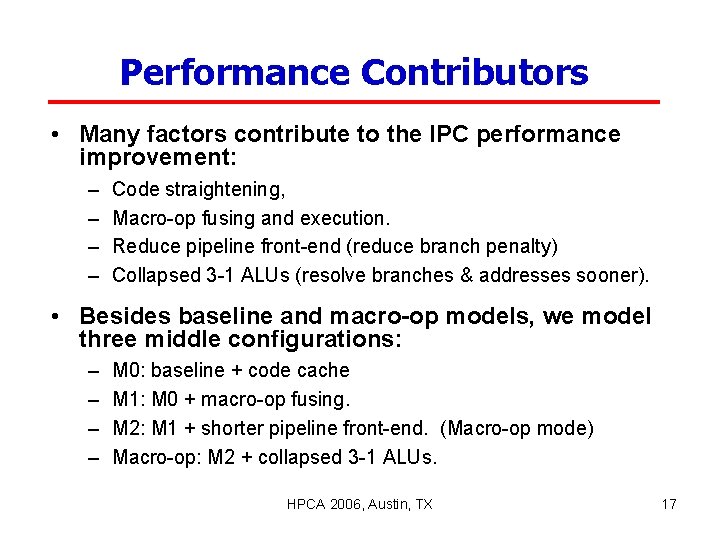

Performance Contributors

- Many factors contribute to the IPC performance improvement:
  - Code straightening
  - Macro-op fusing and execution
  - Reduced pipeline front-end (reduces branch penalty)
  - Collapsed 3-1 ALUs (resolve branches and addresses sooner)
- Besides the baseline and macro-op models, we model three middle configurations:
  - M0: baseline + code cache
  - M1: M0 + macro-op fusing
  - M2: M1 + shorter pipeline front-end (macro-op mode)
  - Macro-op: M2 + collapsed 3-1 ALUs

Performance Contributors: SPEC 2000

Conclusions

- Architecture enhancement
  - The hardware/software co-designed paradigm enables novel designs and more desirable system features.
  - Fusing dependent instruction pairs collapses the dataflow graph to increase ILP.
- Complexity effectiveness
  - Pipelined 2-cycle instruction scheduler
  - Significantly reduced ALU value forwarding network
  - DBT software reduces hardware complexity
- Power consumption implications
  - Reduced pipeline width
  - Reduced inter-instruction communication and instruction management

Finale: Questions & Answers

Suggestions and comments are welcome. Thank you!

Outline

- Motivation & Introduction
- Processor Microarchitecture Details
- Evaluation & Conclusions

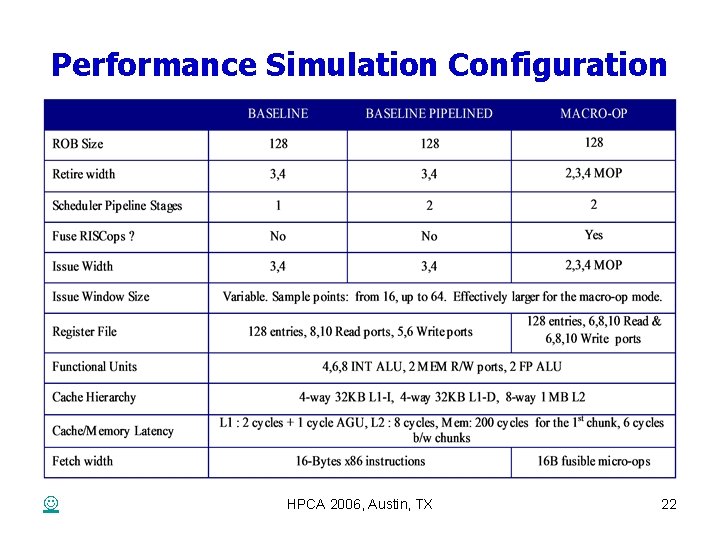

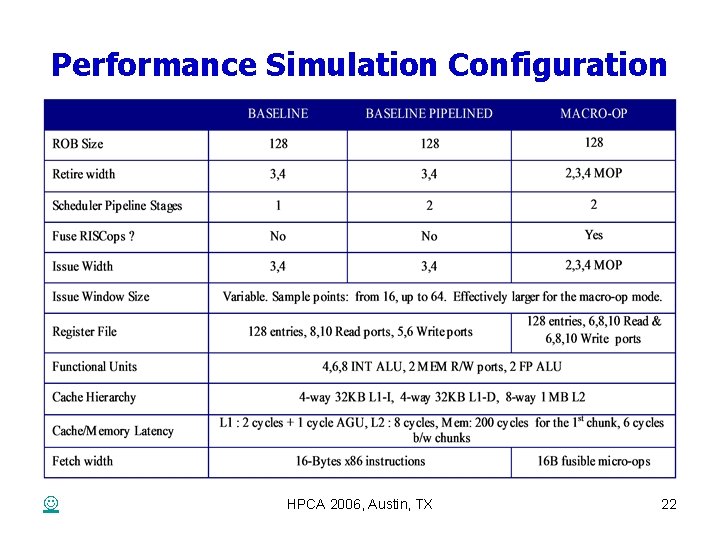

Performance Simulation Configuration

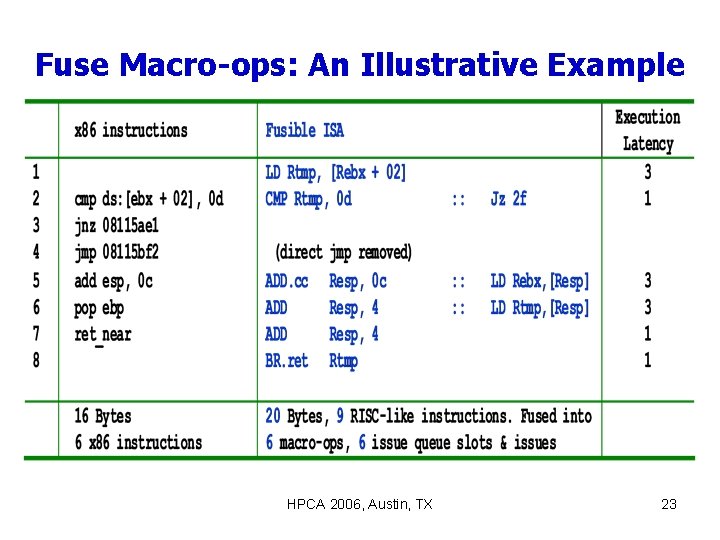

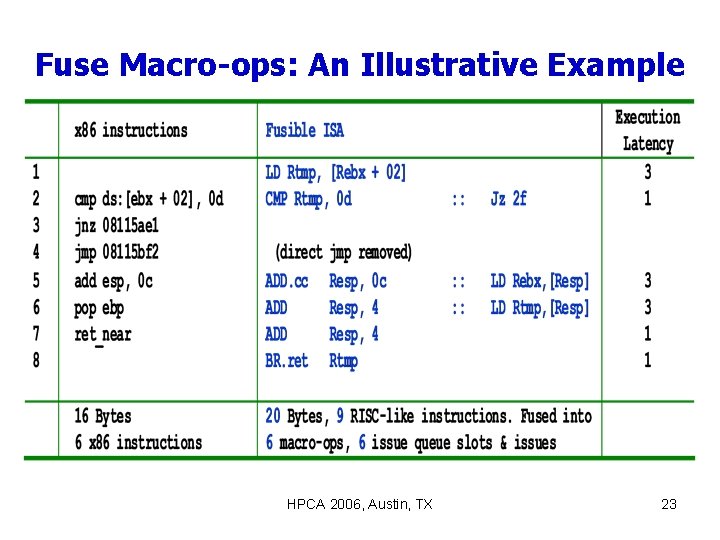

Fuse Macro-ops: An Illustrative Example

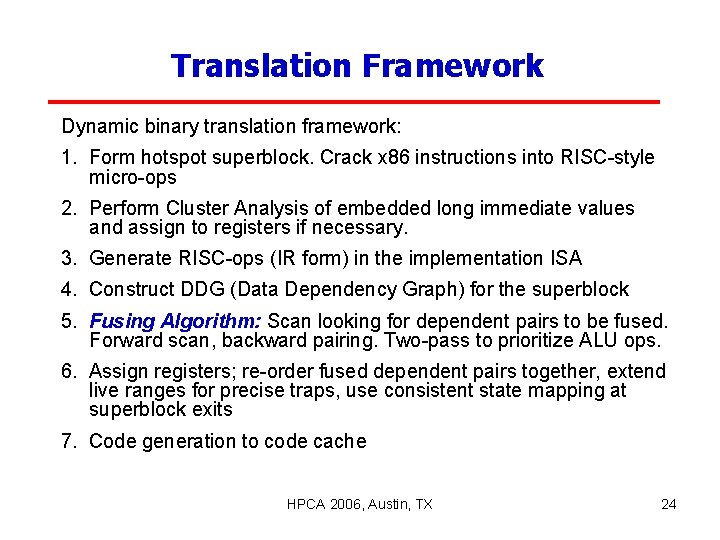

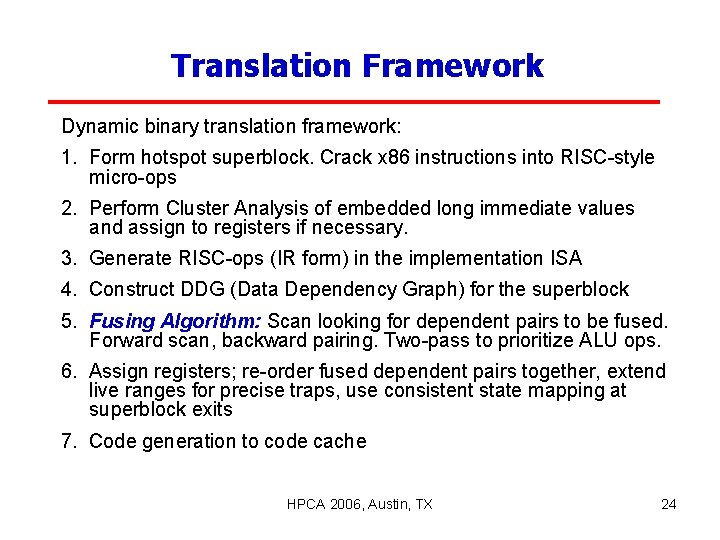

Translation Framework

Dynamic binary translation framework:

1. Form the hotspot superblock. Crack x86 instructions into RISC-style micro-ops.
2. Perform cluster analysis of embedded long immediate values and assign them to registers if necessary.
3. Generate RISC-ops (IR form) in the implementation ISA.
4. Construct the DDG (data dependency graph) for the superblock.
5. Fusing algorithm: scan for dependent pairs to be fused. Forward scan, backward pairing. Two passes to prioritize ALU ops.
6. Assign registers; re-order fused dependent pairs together, extend live ranges for precise traps, and use a consistent state mapping at superblock exits.
7. Generate code into the code cache.
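Step 4 can be illustrated with a minimal Python sketch that builds a data dependency graph over a straight-line superblock. It records only true (read-after-write) register dependences; the tuple representation of a RISC-op and the function name are assumptions for this example, and the input is the six RISC-ops from the fusing example slide.

```python
def build_ddg(ops):
    """Map each op index to the set of later ops that read its result.

    ops is a list of (dst, srcs) tuples; dst is None when the op writes
    no register (for example a store).
    """
    last_def = {}                              # register -> index of latest writer
    succ = {i: set() for i in range(len(ops))}
    for i, (dst, srcs) in enumerate(ops):
        for reg in srcs:
            if reg in last_def:
                succ[last_def[reg]].add(i)     # producer -> consumer edge
        if dst is not None:
            last_def[dst] = i
    return succ


# (dst, srcs) for the six RISC-ops of the fusing example.
SUPERBLOCK = [
    ("eax", ("edi",)),         # 0: ADD Reax, Redi, 1
    (None, ("eax", "R22")),    # 1: ST Reax, mem[R22]
    ("ebx", ("ebp", "ecx")),   # 2: LD.zx Rebx, mem[Rebp + Recx << 1]
    ("eax", ("eax",)),         # 3: AND Reax, 0000007f
    ("R17", ("eax", "esi")),   # 4: ADD R17, Reax, Resi
    ("edx", ("R17",)),         # 5: LD Redx, mem[R17 + 0x7c]
]

print(build_ddg(SUPERBLOCK))
```

The edges 0 to 3 and 4 to 5 in the result are the dependent pairs that the fusing step goes on to combine in the example.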

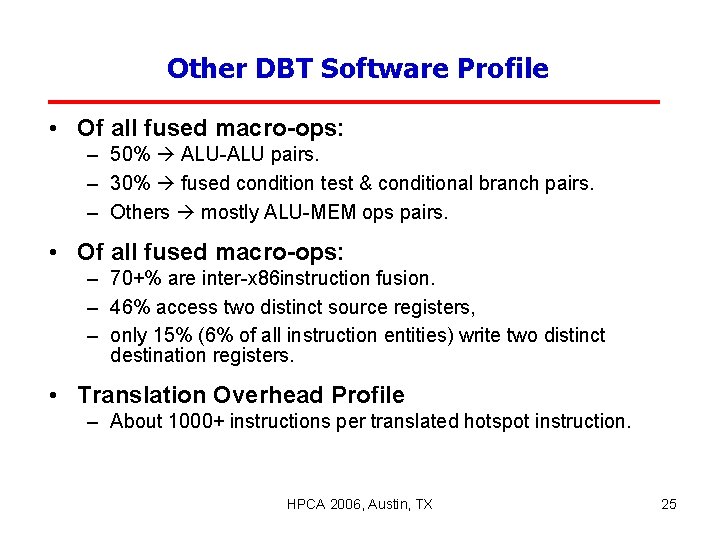

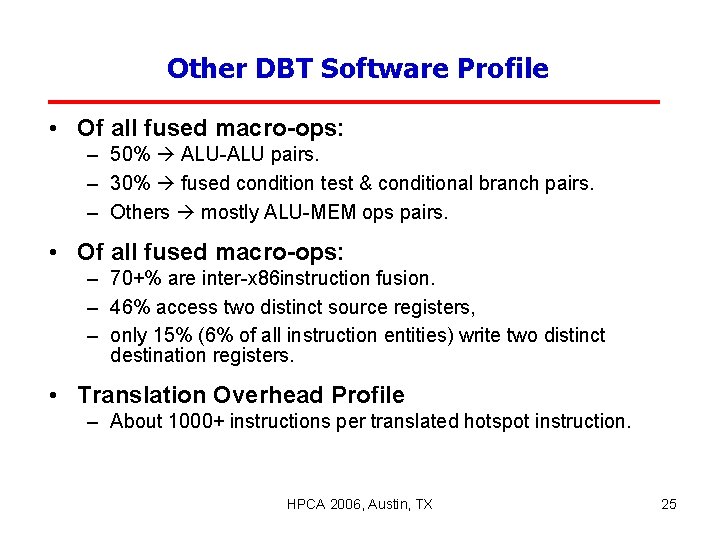

Other DBT Software Profile

- Of all fused macro-ops:
  - 50% are ALU-ALU pairs.
  - 30% are fused condition test and conditional branch pairs.
  - The others are mostly ALU-MEM op pairs.
- Of all fused macro-ops:
  - 70+% are inter-x86-instruction fusions.
  - 46% access two distinct source registers.
  - Only 15% (6% of all instruction entities) write two distinct destination registers.
- Translation overhead profile
  - About 1000+ instructions per translated hotspot instruction.

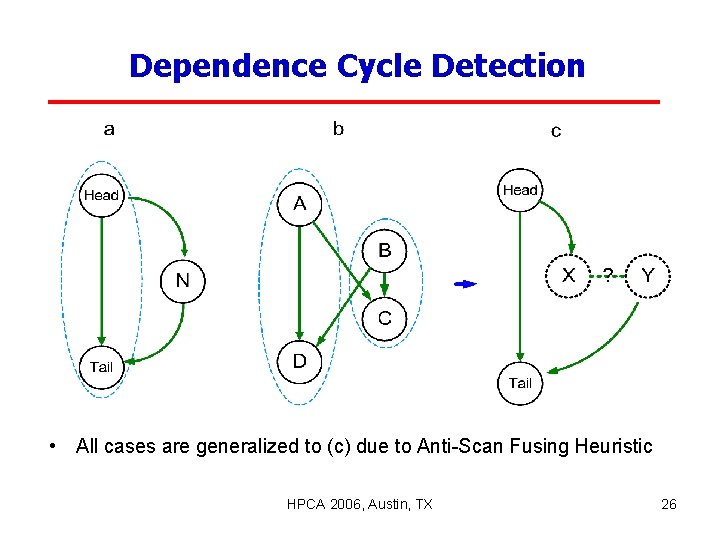

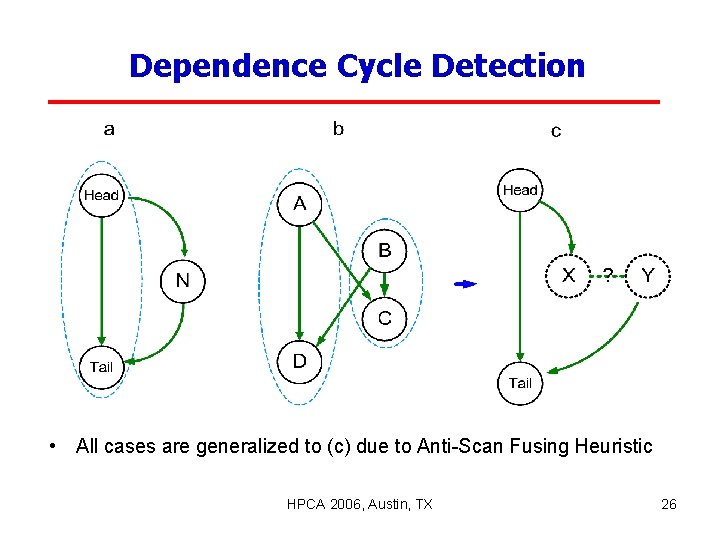

Dependence Cycle Detection

- All cases are generalized to case (c) due to the anti-scan fusing heuristic.
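The figure with the individual cases did not survive extraction, so the following Python sketch shows only the generic graph condition behind such a check, not the slide's case analysis: merging a head and a tail into one node creates a cycle exactly when the tail is also reachable from the head through some other op. The function name and the successor-set representation of the DDG are assumptions for this example.

```python
def fusing_creates_cycle(succ, head, tail):
    """Return True if tail is reachable from head via at least one other node.

    succ maps each node of an acyclic dependence graph to its successors.
    """
    stack = [n for n in succ[head] if n != tail]   # skip the direct head->tail edge
    seen = set()
    while stack:
        node = stack.pop()
        if node == tail:
            return True                            # head -> ... -> tail via another op
        if node in seen:
            continue
        seen.add(node)
        stack.extend(succ[node])
    return False


# Hypothetical three-op graph: 0 feeds 1 and 2, and 1 also feeds 2.
DDG = {0: {1, 2}, 1: {2}, 2: set()}
print(fusing_creates_cycle(DDG, 0, 2))   # op 1 would sit both after and before the pair
print(fusing_creates_cycle(DDG, 0, 1))
```

In the hypothetical graph, fusing ops 0 and 2 is rejected because op 1 depends on the head and feeds the tail, while fusing ops 0 and 1 is safe.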

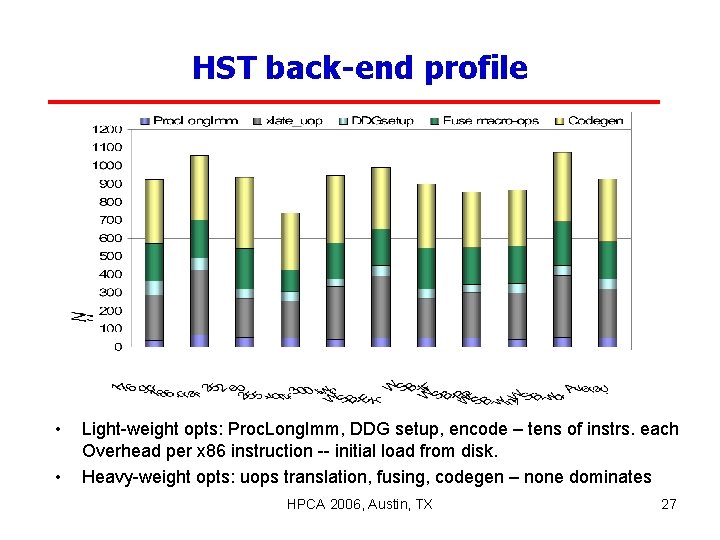

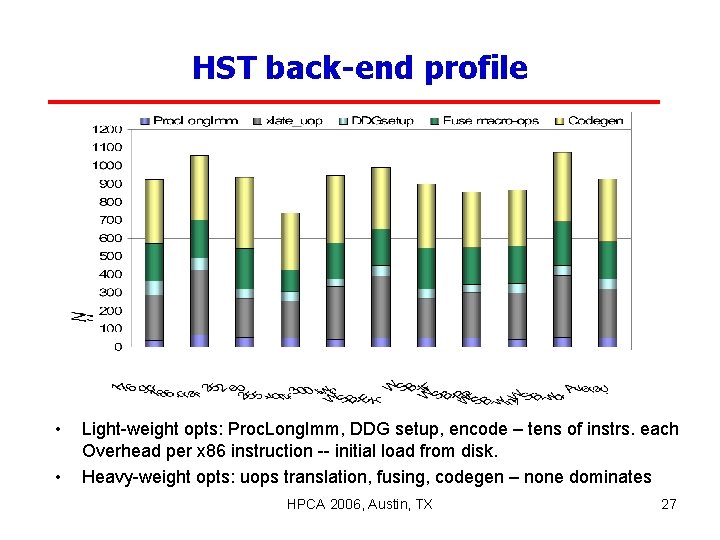

HST back-end profile

- Light-weight opts: ProcLongImm, DDG setup, encode (tens of instrs. each)
- Overhead per x86 instruction: initial load from disk.
- Heavy-weight opts: uops translation, fusing, codegen (none dominates)

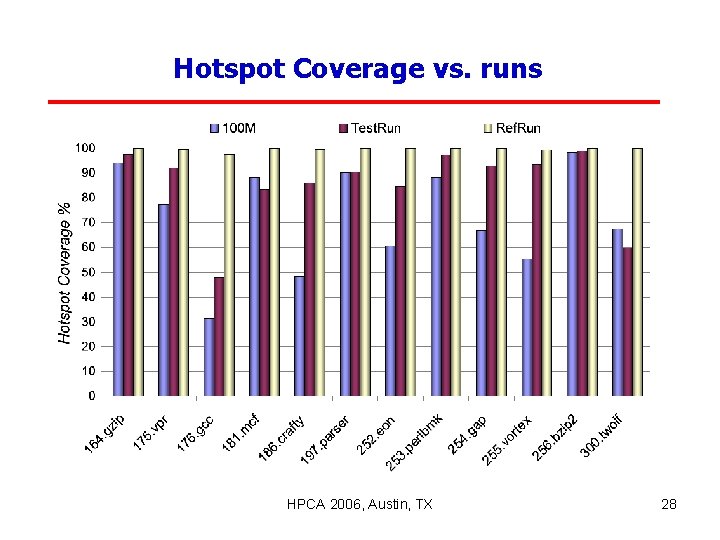

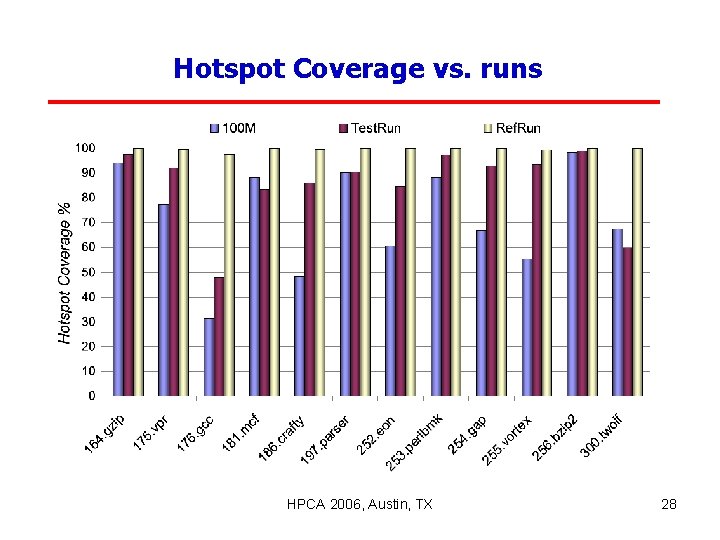

Hotspot Coverage vs. runs

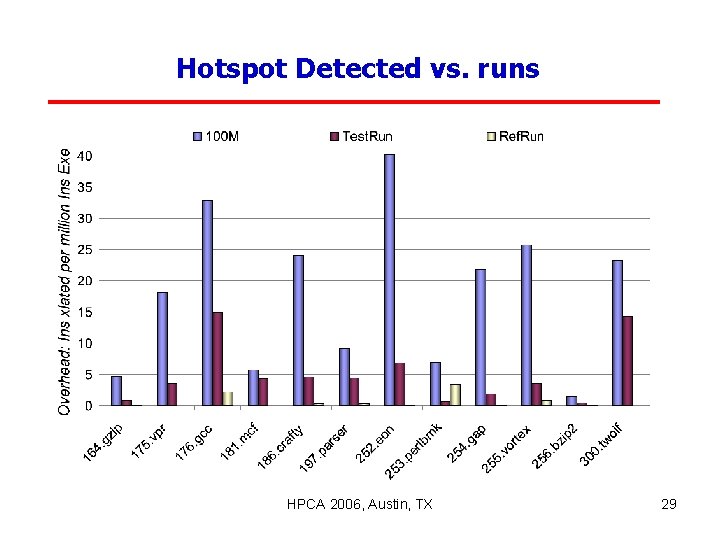

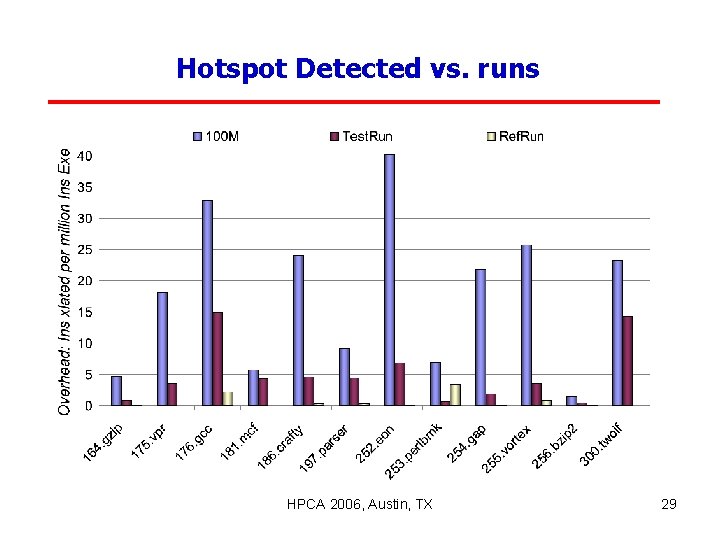

Hotspot Detected vs. runs

Performance Evaluation: SPEC 2000

Performance evaluation (WSB 2004)

Performance Contributors (WSB 2004)

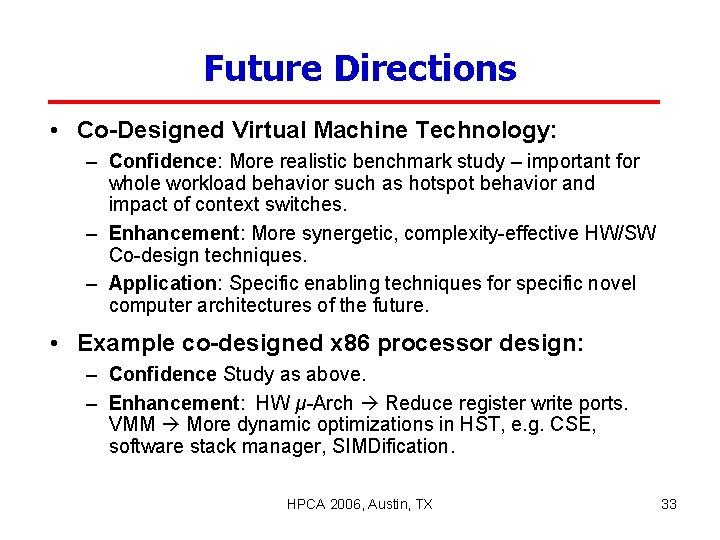

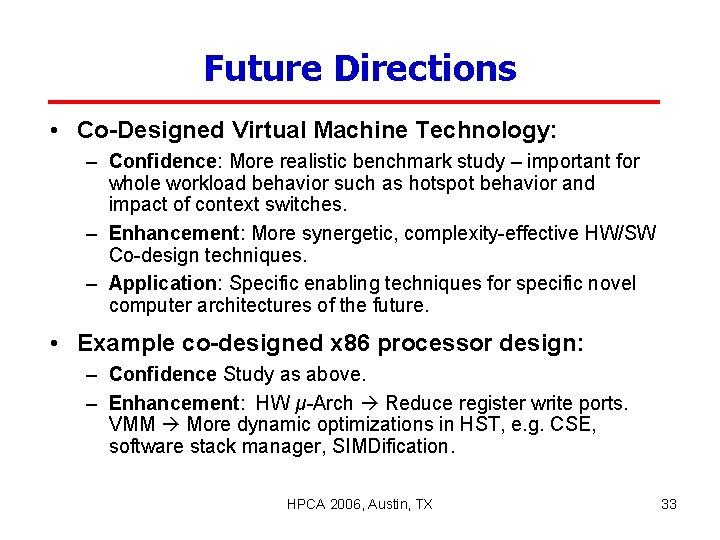

Future Directions

- Co-designed virtual machine technology:
  - Confidence: a more realistic benchmark study, important for whole-workload behavior such as hotspot behavior and the impact of context switches.
  - Enhancement: more synergetic, complexity-effective HW/SW co-design techniques.
  - Application: specific enabling techniques for specific novel computer architectures of the future.
- Example co-designed x86 processor design:
  - Confidence: study as above.
  - Enhancement, HW μ-arch: reduce register write ports.
  - Enhancement, VMM: more dynamic optimizations in HST, e.g. CSE, software stack manager, SIMDification.