Chapter One Introduction to Pipelined Processors Superscalar Processors

- Slides: 19


Superscalar Processors
• Scalar processors issue one instruction per cycle.
• Superscalar processors use multiple instruction pipelines.
• Purpose: to exploit more instruction-level parallelism in user programs.
• Only independent instructions can be executed in parallel.
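To make "independent" concrete, the following Python sketch checks whether two instructions may issue together by testing for the three classic data hazards: read-after-write (RAW), write-after-read (WAR) and write-after-write (WAW). The `Instr` format and the register names are hypothetical. The sketch treats all three hazards as blocking; hardware with register renaming can eliminate WAR and WAW conflicts.

```python
from typing import NamedTuple, Optional

class Instr(NamedTuple):
    """Hypothetical three-operand instruction: dest <- op(srcs...)."""
    op: str
    dest: Optional[str]
    srcs: tuple

def independent(a: Instr, b: Instr) -> bool:
    """True if b (later in program order) has no RAW, WAR or WAW
    dependence on a, so both may issue in the same cycle."""
    raw = a.dest is not None and a.dest in b.srcs   # b reads what a writes
    war = b.dest is not None and b.dest in a.srcs   # b writes what a reads
    waw = a.dest is not None and a.dest == b.dest   # both write the same register
    return not (raw or war or waw)

i1 = Instr("add", "r1", ("r2", "r3"))
i2 = Instr("mul", "r4", ("r5", "r6"))
i3 = Instr("sub", "r7", ("r1", "r4"))   # reads r1, written by i1

print(independent(i1, i2))  # True
print(independent(i1, i3))  # False
```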

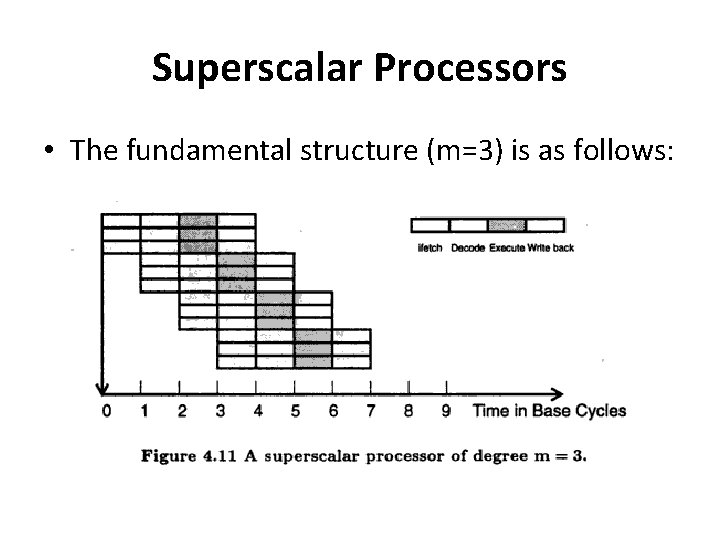

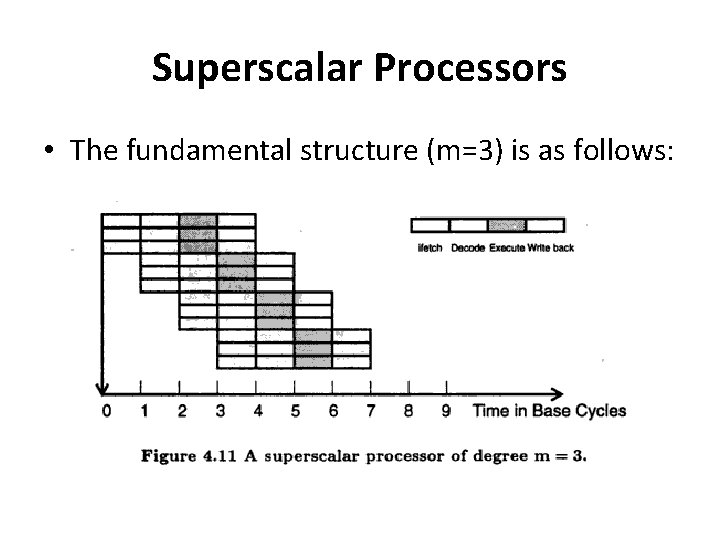

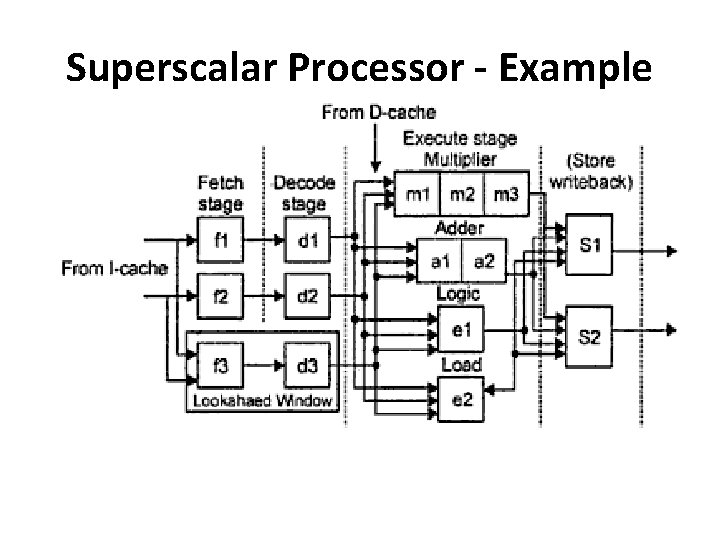

Superscalar Processors
• The fundamental structure of a superscalar processor with m = 3 (three instructions issued per cycle) is as follows:

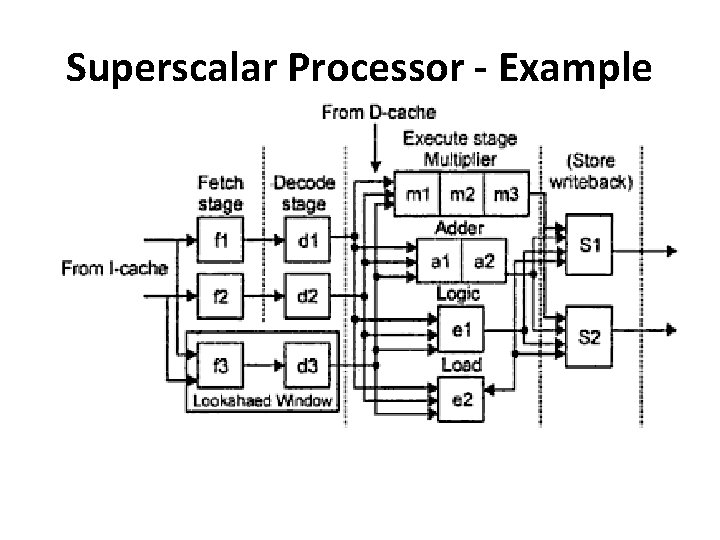

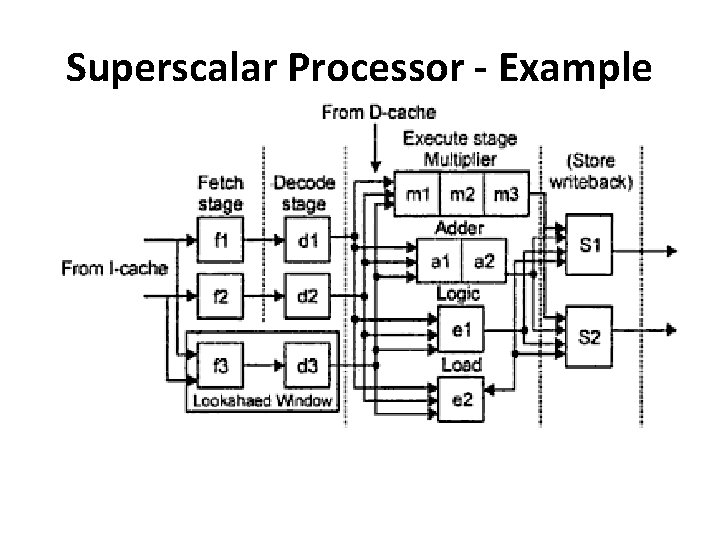

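Superscalar Processors
• In a superscalar processor, the instruction decoding and execution resources are increased, typically by replicating them, so that several instructions can be decoded and executed in the same cycle.
• Example: a dual-pipeline superscalar processor.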

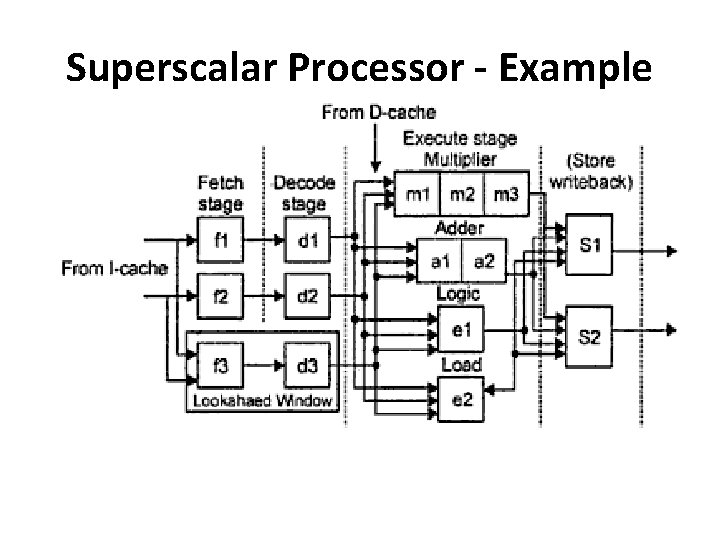

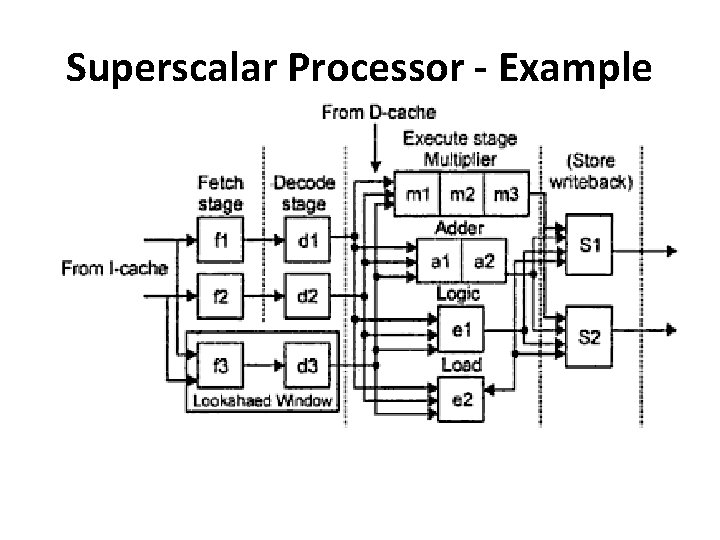

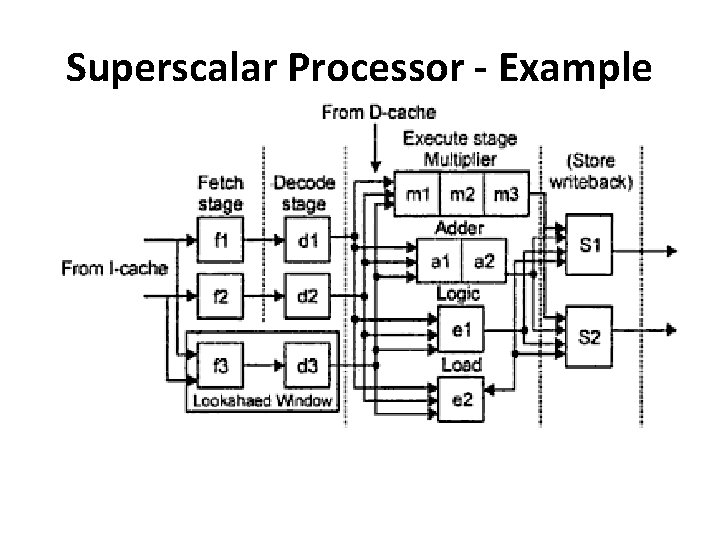

Superscalar Processor - Example

• The processor can issue two instructions per cycle.
• There are two pipelines, each with four processing stages: fetch, decode, execute, and store.
• Both instruction streams are fetched from a single I-cache (instruction cache).
• Each stage is assumed to take one cycle, except the execute stage.
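The ideal flow of instruction pairs through this dual-pipeline example can be sketched in Python. The sketch assumes no stalls and, unlike the slide, also assumes a single-cycle execute stage, so it only shows how pairs of instructions move through the four stages; the function name `schedule` is hypothetical.

```python
from typing import List

STAGES = ("fetch", "decode", "execute", "store")

def schedule(num_instrs: int, m: int = 2) -> List[List[int]]:
    """Ideal m-issue schedule with one cycle per stage and no stalls.

    Returns, for each instruction in program order, the 1-based cycle in
    which it occupies each stage.
    """
    rows = []
    for i in range(num_instrs):
        # Instructions are fetched in groups of m; group g (0-based) in cycle g + 1.
        first = i // m + 1
        rows.append([first + s for s in range(len(STAGES))])
    return rows

for i, cycles in enumerate(schedule(6), start=1):
    print(f"I{i}:", dict(zip(STAGES, cycles)))
```

With six instructions the last pair leaves the store stage in cycle 6, which agrees with the ideal m-issue time k + (N - m)/m for k = 4, N = 6 and m = 2.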

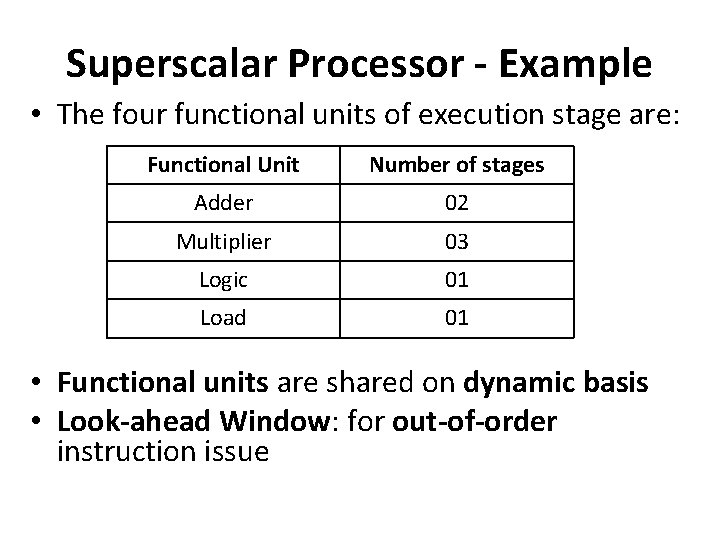

Superscalar Processor - Example

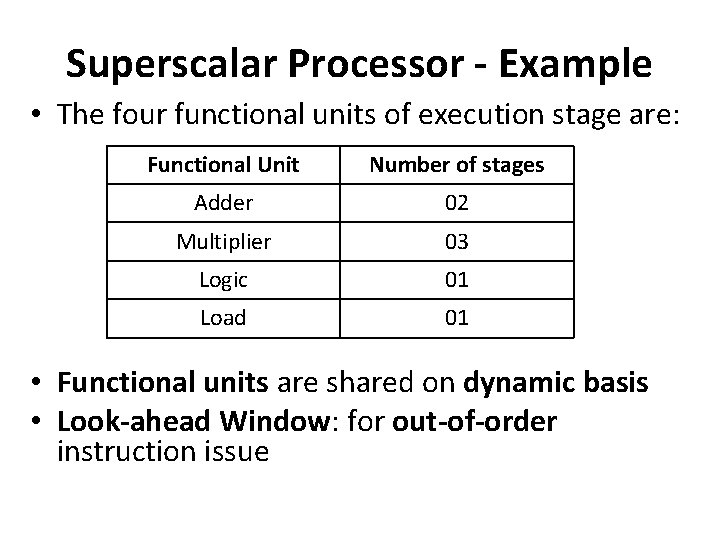

Superscalar Processor - Example
• The execution stage has four functional units:

| Functional unit | Number of stages |
|---|---|
| Adder | 2 |
| Multiplier | 3 |
| Logic | 1 |
| Load | 1 |

• The functional units are shared on a dynamic basis.
• A look-ahead window enables out-of-order instruction issue.
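Dynamic sharing of the functional units and out-of-order issue from a look-ahead window can be illustrated with a small Python simulation. It is a hypothetical model, not the slide's exact hardware: it assumes one pipelined unit of each type that accepts one new instruction per cycle, a result usable `LATENCY[unit]` cycles after issue, checking of read-after-write dependences only (each register is written at most once in the example program), and an arbitrarily chosen window of 4 instructions.

```python
# Stages per functional unit, taken from the table above.
LATENCY = {"add": 2, "mul": 3, "logic": 1, "load": 1}

def operands_ready(idx, instrs, pending, ready, cycle):
    """True if every source register of instrs[idx] is available in `cycle`."""
    _, _, srcs = instrs[idx]
    for reg in srcs:
        # An earlier instruction that has not issued yet still has to produce reg.
        if any(p < idx and instrs[p][1] == reg for p in pending):
            return False
        # The producer has issued, but its result is not ready yet.
        if ready.get(reg, 0) > cycle:
            return False
    return True

def issue_schedule(instrs, m=2, window=4):
    """Return {instruction index: issue cycle} for a hypothetical m-issue
    machine that looks at most `window` unissued instructions ahead."""
    ready = {}                    # register -> first cycle its value can be used
    issued = {}
    pending = list(range(len(instrs)))
    cycle = 1
    while pending:
        picked, busy = [], set()
        for idx in pending[:window]:
            if len(picked) == m:
                break
            unit = instrs[idx][0]
            if unit not in busy and operands_ready(idx, instrs, pending, ready, cycle):
                picked.append(idx)
                busy.add(unit)    # each unit accepts one new instruction per cycle
        for idx in picked:
            unit, dest, _ = instrs[idx]
            issued[idx] = cycle
            ready[dest] = cycle + LATENCY[unit]
            pending.remove(idx)
        cycle += 1
    return issued

program = [
    ("mul", "r1", ("r2", "r3")),
    ("add", "r4", ("r1", "r5")),    # needs r1 from the multiply
    ("logic", "r6", ("r7", "r8")),
    ("load", "r9", ("r10",)),
    ("add", "r11", ("r6", "r9")),   # independent of the multiply
]
print(issue_schedule(program))  # {0: 1, 2: 1, 3: 2, 4: 3, 1: 4}
```

In this run the second add (index 4) issues in cycle 3, ahead of the first add (index 1), which waits until cycle 4 for the three-cycle multiply.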

Superscalar Processor - Example

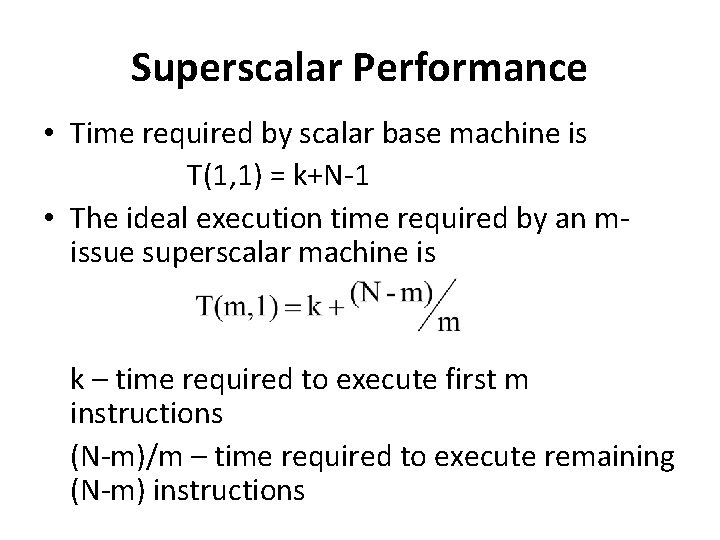

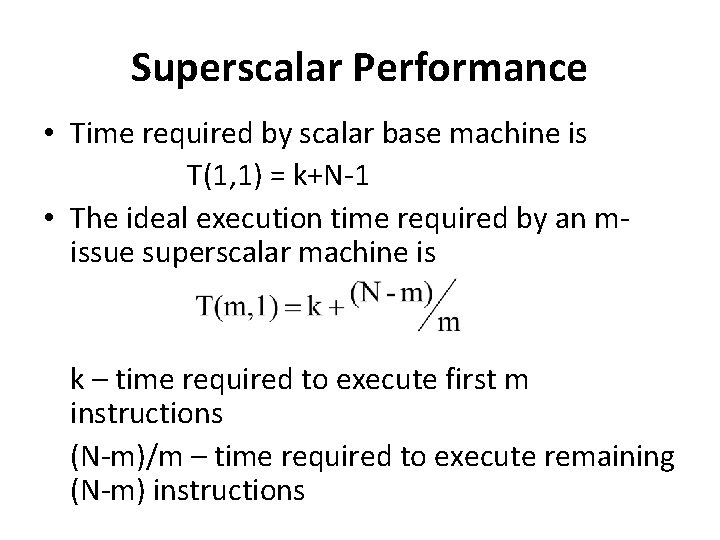

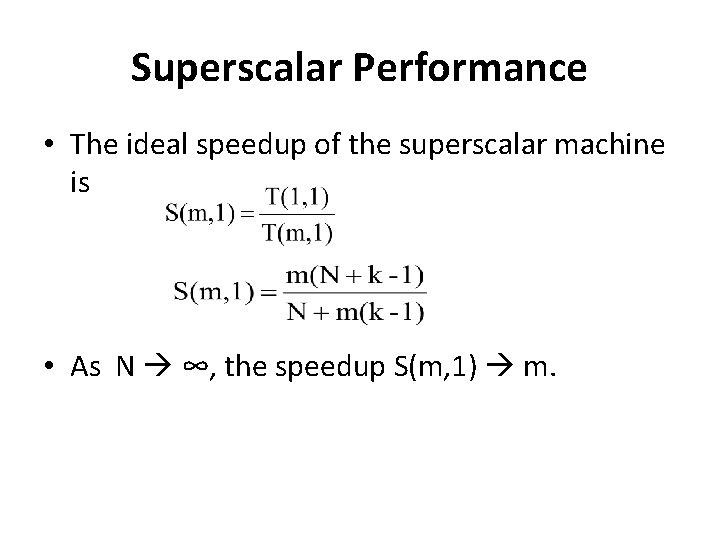

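Superscalar Performance
• For a k-stage pipeline executing N independent instructions, the time required by the scalar base machine, in base cycles, is $T(1, 1) = k + N - 1$.
• The ideal execution time of an m-issue superscalar machine is $T(m, 1) = k + \frac{N - m}{m}$, where k is the time required to execute the first m instructions and $\frac{N - m}{m}$ is the time required to execute the remaining N - m instructions.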
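The two timing formulas can be evaluated directly. This Python sketch uses `fractions.Fraction` so that the (N - m)/m term stays exact; the function names are hypothetical.

```python
from fractions import Fraction

def t_scalar(k: int, N: int) -> int:
    """T(1, 1) = k + N - 1 base cycles on a k-stage scalar pipeline."""
    return k + N - 1

def t_superscalar(k: int, N: int, m: int) -> Fraction:
    """Ideal T(m, 1) = k + (N - m)/m base cycles on an m-issue machine."""
    return k + Fraction(N - m, m)

print(t_scalar(4, 12))          # 15
print(t_superscalar(4, 12, 2))  # 9
print(t_superscalar(4, 12, 3))  # 7
```

For a 4-stage pipeline and 12 instructions, dual issue cuts the ideal time from 15 to 9 base cycles.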

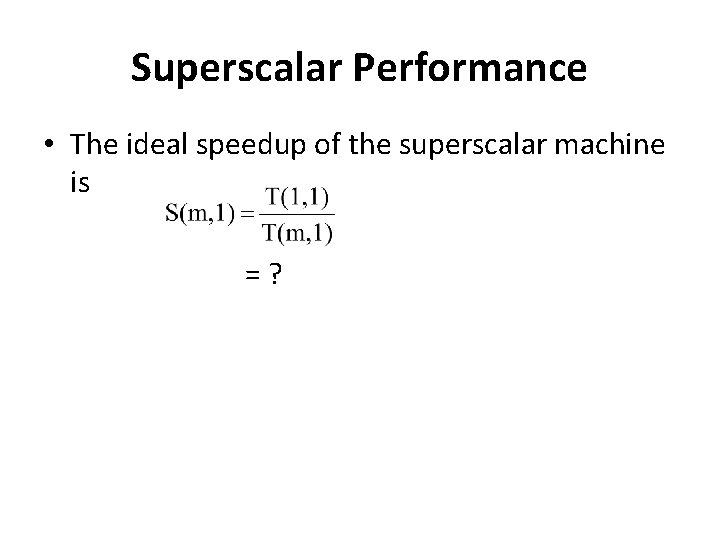

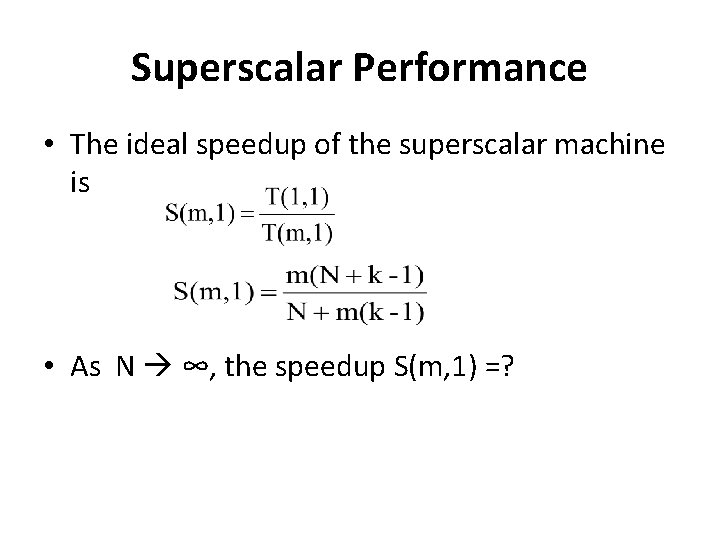

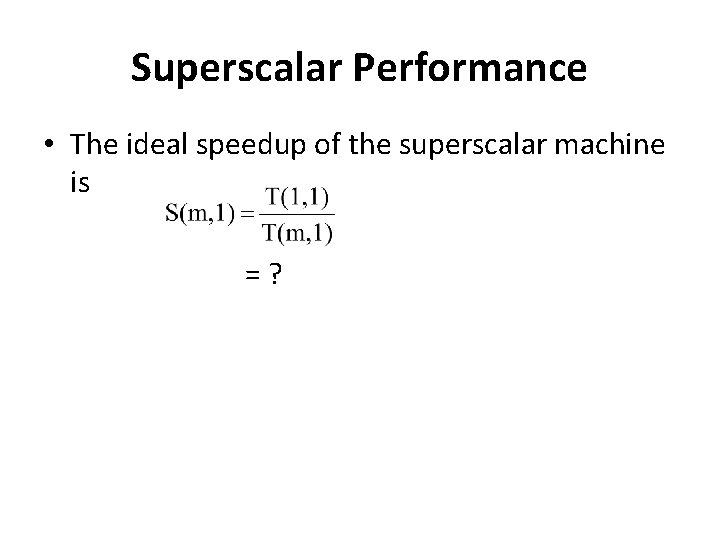


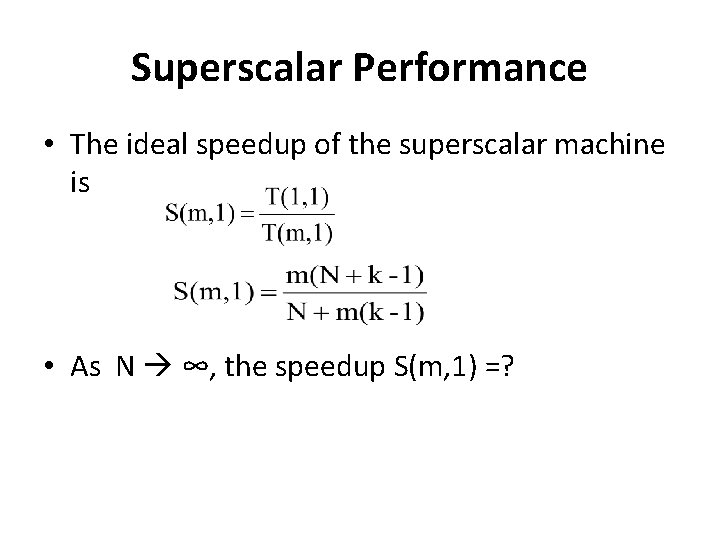


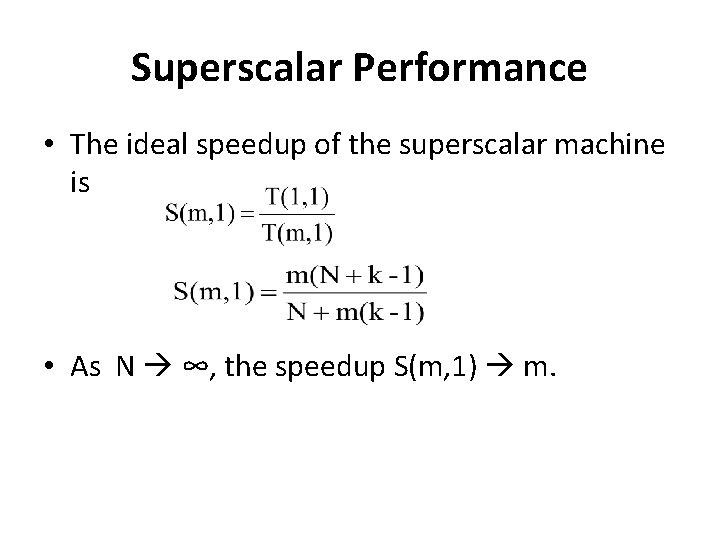

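Superscalar Performance
• The ideal speedup of the superscalar machine over the base machine is $S(m, 1) = \frac{T(1, 1)}{T(m, 1)} = \frac{k + N - 1}{k + (N - m)/m} = \frac{m(N + k - 1)}{N + m(k - 1)}$
• As $N \to \infty$, the speedup $S(m, 1) \to m$.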
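A quick numerical check shows the speedup climbing toward m as N grows. This Python sketch implements $S(m, 1) = m(N + k - 1)/(N + m(k - 1))$ with exact fractions; the function name and the values k = 4, m = 3 are illustrative assumptions.

```python
from fractions import Fraction

def speedup_superscalar(k: int, N: int, m: int) -> Fraction:
    """S(m, 1) = T(1, 1) / T(m, 1) = m(N + k - 1) / (N + m(k - 1))."""
    return Fraction(m * (N + k - 1), N + m * (k - 1))

# The speedup approaches m = 3 from below as N grows.
for N in (10, 100, 10_000):
    print(N, float(speedup_superscalar(k=4, N=N, m=3)))
```

For short instruction sequences the pipeline fill time k dominates, which is why the speedup stays well below m until N is large.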

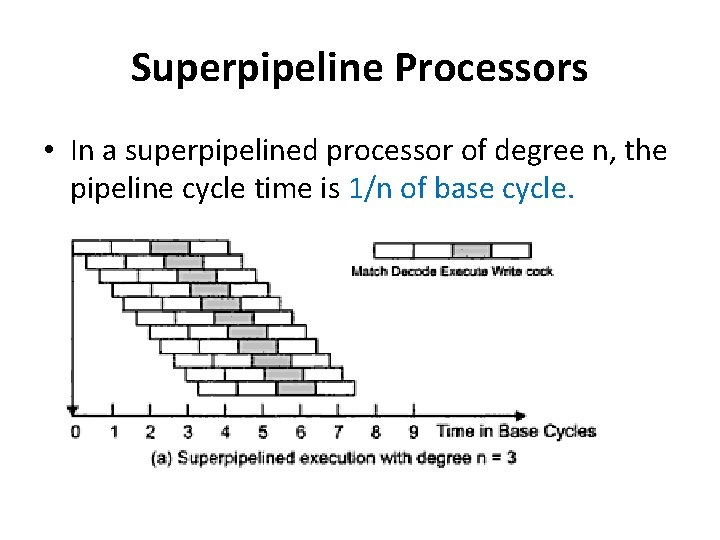

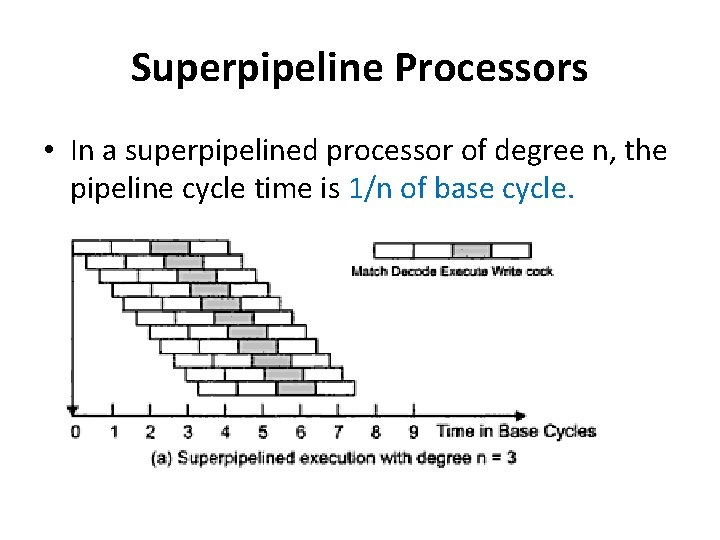

Superpipelined Processors
• In a superpipelined processor of degree n, the pipeline cycle time is 1/n of the base cycle.

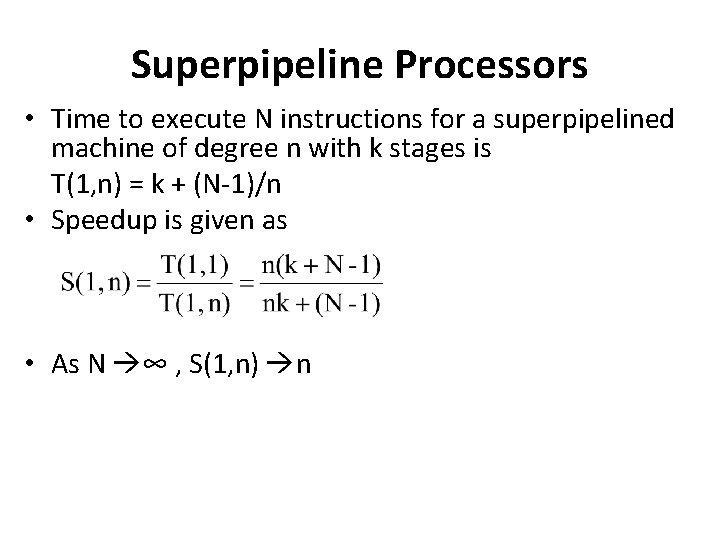

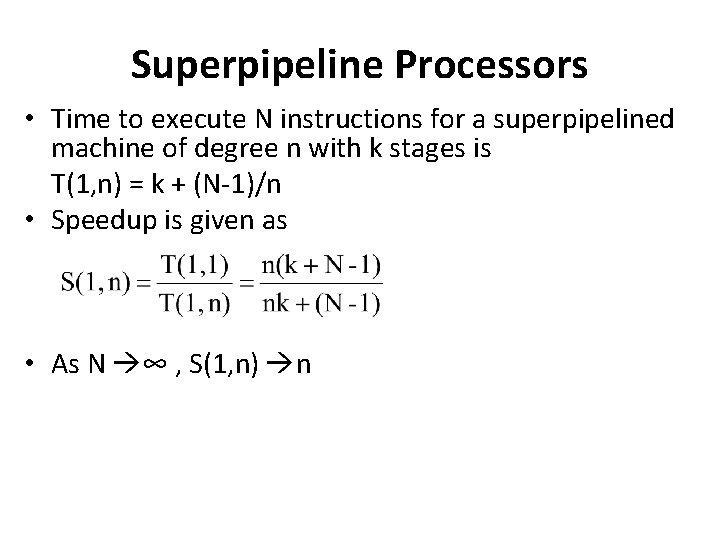

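Superpipelined Processors
• The time to execute N instructions on a superpipelined machine of degree n with k stages is $T(1, n) = k + \frac{N - 1}{n}$ base cycles.
• The speedup over the base machine is $S(1, n) = \frac{T(1, 1)}{T(1, n)} = \frac{k + N - 1}{k + (N - 1)/n} = \frac{n(k + N - 1)}{nk + N - 1}$
• As $N \to \infty$, $S(1, n) \to n$.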
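The superpipelined formulas can be evaluated with a short Python sketch. It assumes the base machine time $T(1, 1) = k + N - 1$ and uses exact fractions; the function names are hypothetical.

```python
from fractions import Fraction

def t_superpipelined(k: int, N: int, n: int) -> Fraction:
    """T(1, n) = k + (N - 1)/n base cycles (pipeline cycle = 1/n base cycle)."""
    return k + Fraction(N - 1, n)

def speedup_superpipelined(k: int, N: int, n: int) -> Fraction:
    """S(1, n) = T(1, 1) / T(1, n), with T(1, 1) = k + N - 1."""
    return Fraction(k + N - 1) / t_superpipelined(k, N, n)

print(t_superpipelined(4, 13, 3))        # 8
print(speedup_superpipelined(4, 13, 3))  # 2
```

For k = 4, N = 13 and n = 3, the machine needs 8 base cycles instead of 16, a speedup of 2, which is still below the limit n = 3 because of the pipeline fill time k.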

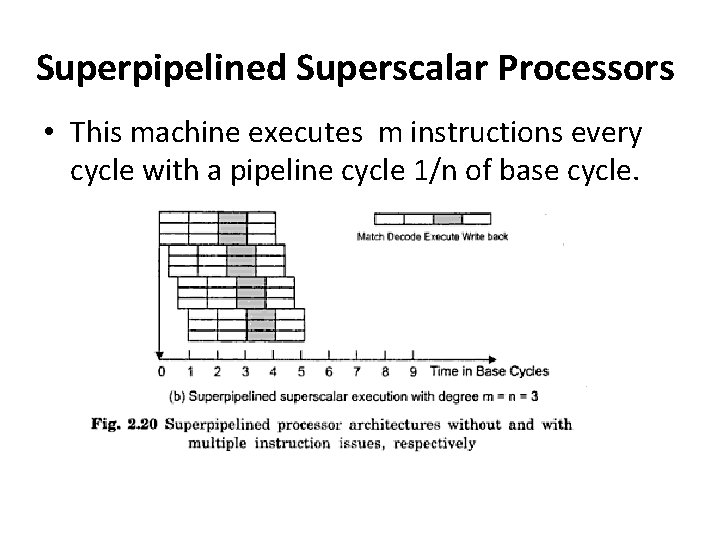

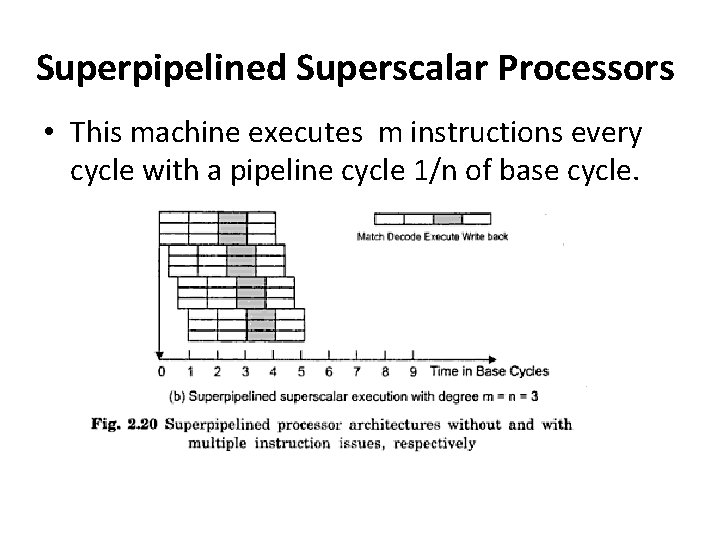

Superpipelined Superscalar Processors
• A superpipelined superscalar processor of degree (m, n) executes m instructions every cycle, with a pipeline cycle of 1/n of the base cycle.

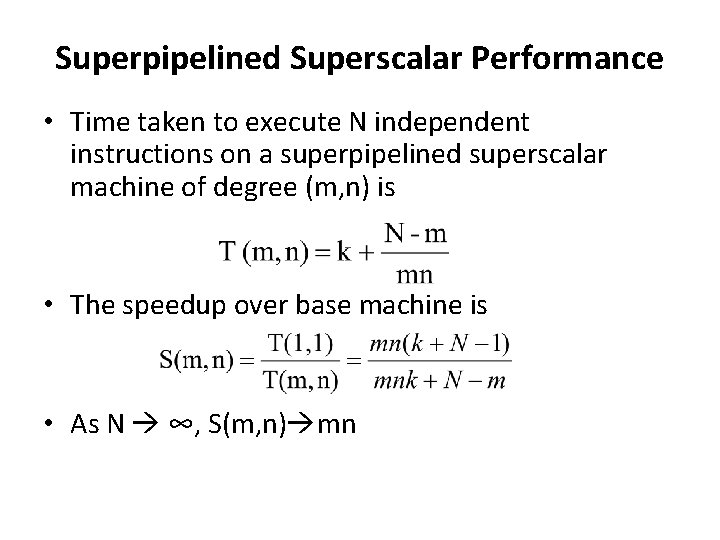

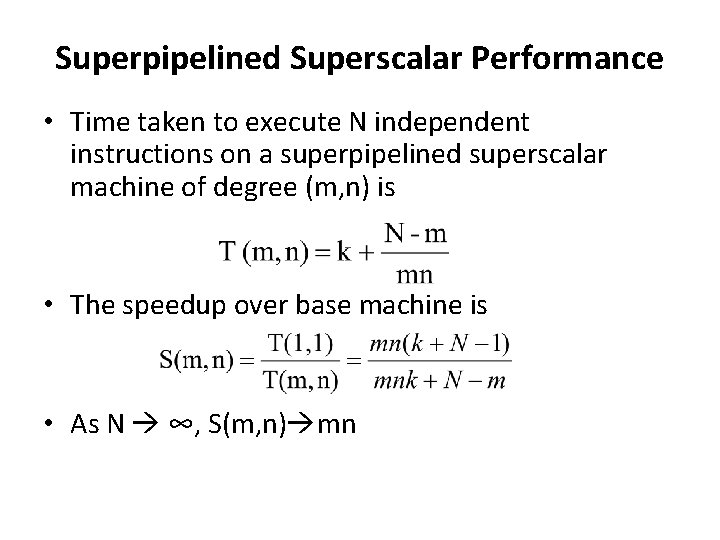

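Superpipelined Superscalar Performance
• The time taken to execute N independent instructions on a superpipelined superscalar machine of degree (m, n) is $T(m, n) = k + \frac{N - m}{mn}$ base cycles.
• The speedup over the base machine is $S(m, n) = \frac{T(1, 1)}{T(m, n)} = \frac{k + N - 1}{k + (N - m)/(mn)} = \frac{mn(k + N - 1)}{mnk + N - m}$
• As $N \to \infty$, $S(m, n) \to mn$.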
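The general (m, n) formulas contain both earlier cases: setting n = 1 gives $T(m, 1)$ and setting m = 1 gives $T(1, n)$. The Python sketch below, with hypothetical function names, checks those reductions and evaluates one speedup, assuming $T(1, 1) = k + N - 1$.

```python
from fractions import Fraction

def t_general(k: int, N: int, m: int, n: int) -> Fraction:
    """T(m, n) = k + (N - m)/(m n) base cycles."""
    return k + Fraction(N - m, m * n)

def speedup_general(k: int, N: int, m: int, n: int) -> Fraction:
    """S(m, n) = T(1, 1) / T(m, n), with T(1, 1) = k + N - 1."""
    return Fraction(k + N - 1) / t_general(k, N, m, n)

# Special cases reduce to the superscalar and superpipelined formulas.
assert t_general(4, 12, 2, 1) == 4 + Fraction(10, 2)   # T(m, 1) = k + (N - m)/m
assert t_general(4, 13, 1, 3) == 4 + Fraction(12, 3)   # T(1, n) = k + (N - 1)/n

print(speedup_general(k=4, N=14, m=2, n=3))  # 17/6
```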

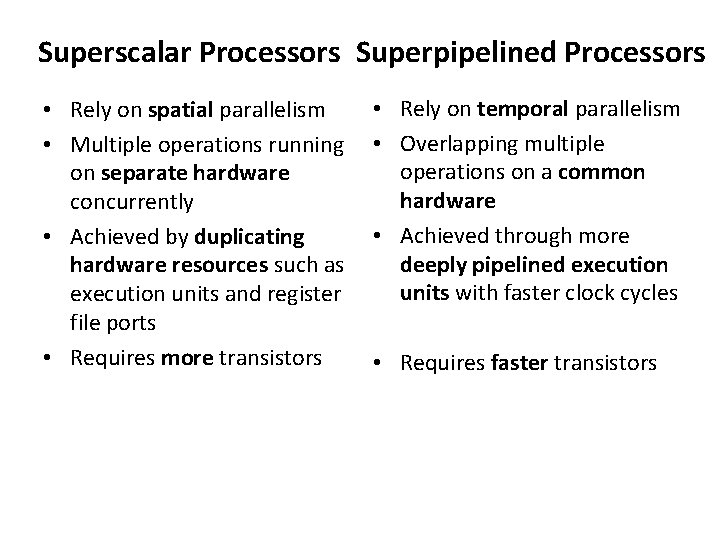

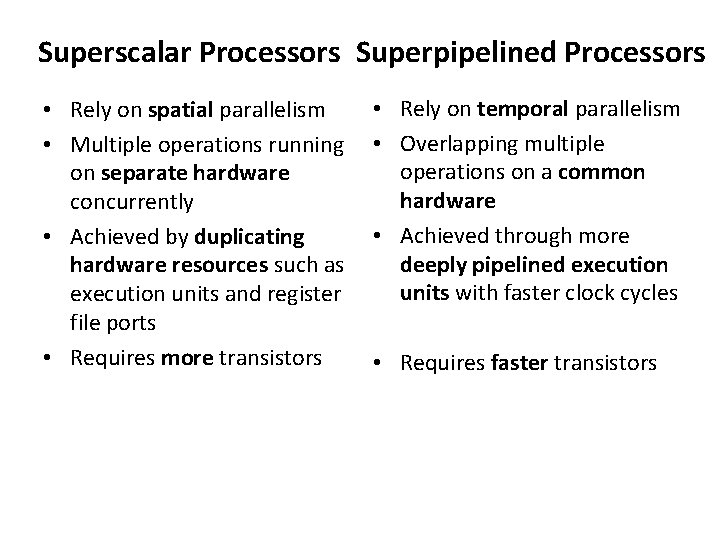

| Superscalar Processors | Superpipelined Processors |
|---|---|
| Rely on spatial parallelism | Rely on temporal parallelism |
| Multiple operations run concurrently on separate hardware | Multiple operations overlap on common hardware |
| Achieved by duplicating hardware resources such as execution units and register file ports | Achieved through more deeply pipelined execution units with faster clock cycles |
| Require more transistors | Require faster transistors |