

- Slides: 65

Virtual Memory

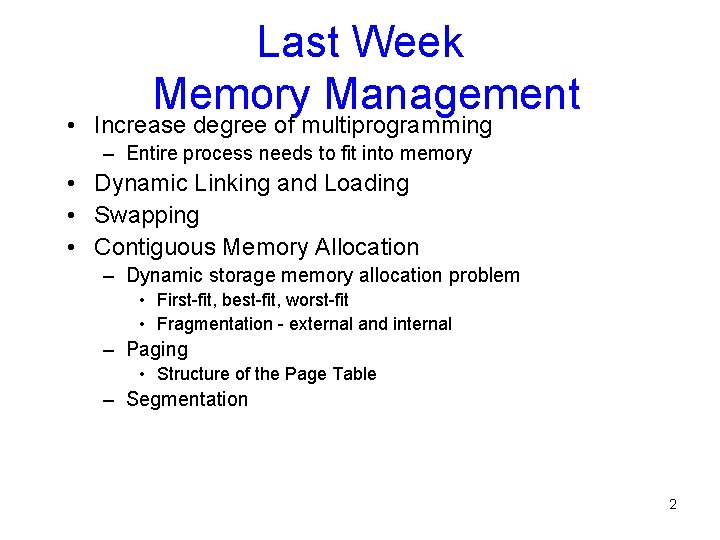

Last Week: Memory Management
- Increase degree of multiprogramming
  - Entire process needs to fit into memory
- Dynamic Linking and Loading
- Swapping
- Contiguous Memory Allocation
  - Dynamic storage memory allocation problem
    - First-fit, best-fit, worst-fit
  - Fragmentation: external and internal
- Paging
  - Structure of the Page Table
- Segmentation

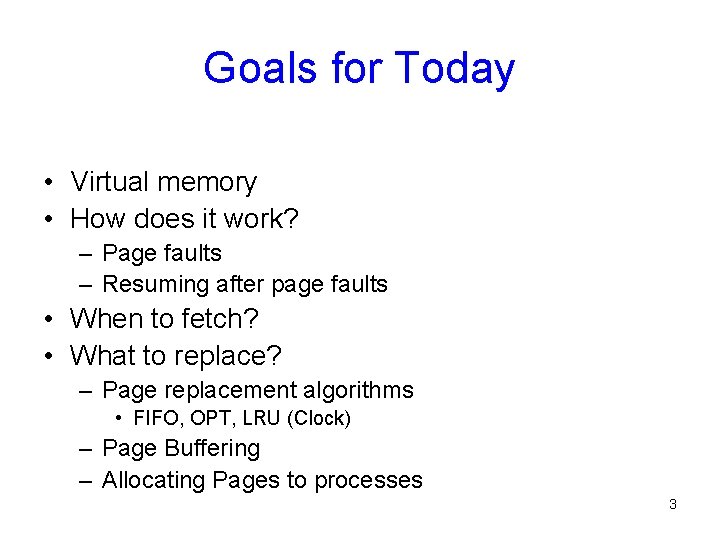

Goals for Today
- Virtual memory
- How does it work?
  - Page faults
  - Resuming after page faults
- When to fetch?
- What to replace?
  - Page replacement algorithms
    - FIFO, OPT, LRU (Clock)
  - Page Buffering
- Allocating Pages to processes

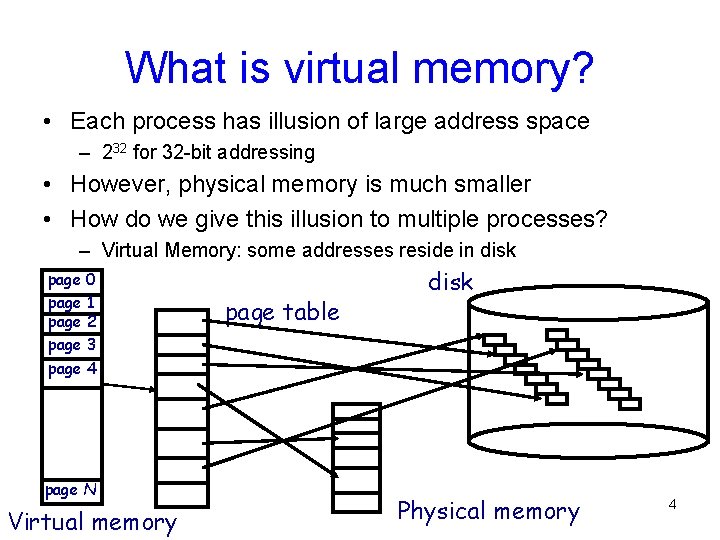

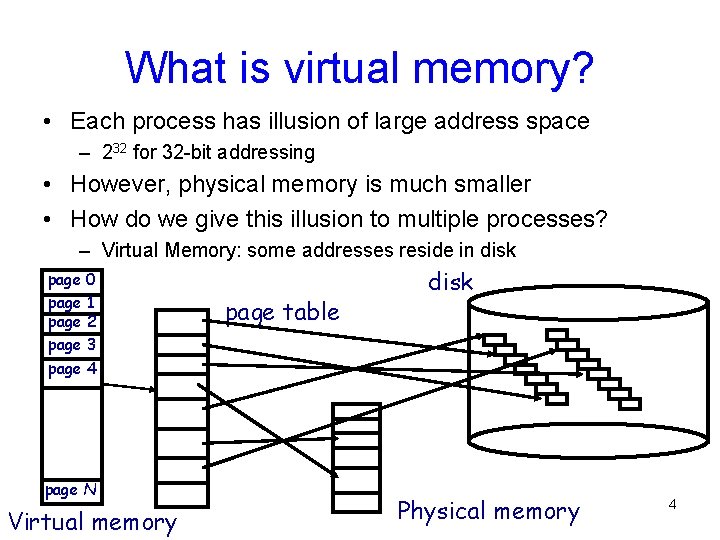

What is virtual memory?
- Each process has the illusion of a large address space
  - $2^{32}$ bytes for 32-bit addressing
- However, physical memory is much smaller
- How do we give this illusion to multiple processes?
  - Virtual Memory: some addresses reside on disk

(Figure: pages 0 to N of virtual memory, mapped by the page table either to physical memory or to disk.)

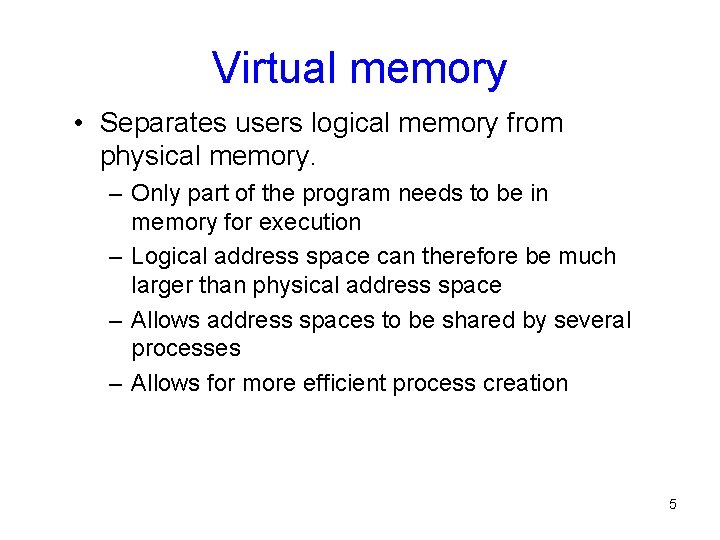

Virtual memory
- Separates the user's logical memory from physical memory
  - Only part of the program needs to be in memory for execution
  - Logical address space can therefore be much larger than physical address space
  - Allows address spaces to be shared by several processes
  - Allows for more efficient process creation

Virtual Memory
- Load entire process in memory (swapping), run it, exit
  - Is slow (for big processes)
  - Wasteful (might not require everything)
- Solutions: partial residency
  - Paging: bring in individual pages, not necessarily all pages of the process
  - Demand paging: bring in only the pages that are required
- Where to fetch pages from?
  - Have a contiguous space on disk: the swap file (pagefile.sys)

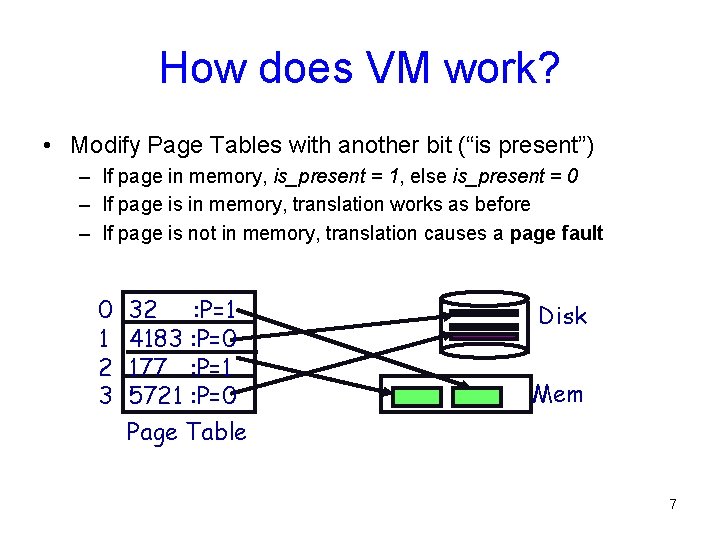

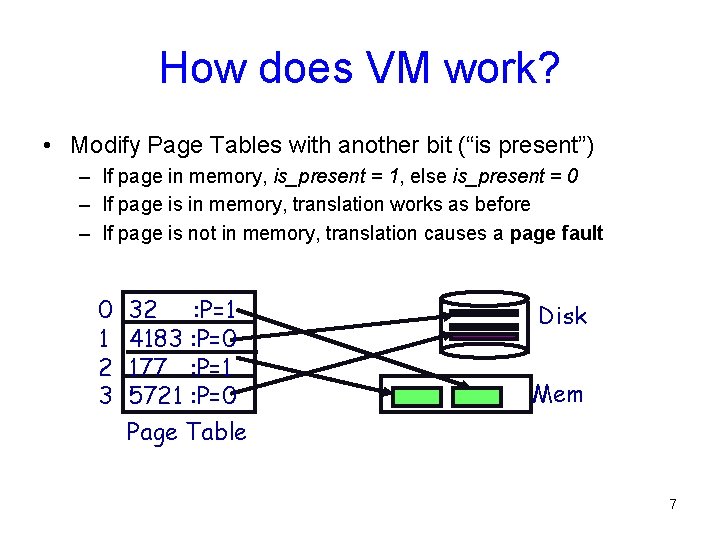

How does VM work?
- Modify page tables with another bit ("is present")
  - If page is in memory, is_present = 1, else is_present = 0
  - If page is in memory, translation works as before
  - If page is not in memory, translation causes a page fault

(Figure: page table entries 0 to 3 hold 32 (P=1), 4183 (P=0), 177 (P=1), 5721 (P=0); present entries point into memory, the others to disk.)
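The present-bit check can be sketched in C. This is a minimal illustration under stated assumptions, not how any real MMU or OS lays out its page tables: the 4 KiB page size, the four-entry table, and the names `page_entry` and `translate` are hypothetical, and the table reuses the numbers from the figure.

```c
#include <stdbool.h>
#include <stdint.h>

#define PAGE_SIZE 4096u  /* assumed page size */
#define NUM_PAGES 4u     /* four entries, as in the figure */

/* Hypothetical page-table entry. */
typedef struct {
    uint32_t number;  /* frame number if present, otherwise the page's disk location */
    bool present;     /* the "is present" bit */
} page_entry;

/* Translate a virtual address. Returns true and stores the physical address
   when the page is resident; returns false when the page is not present,
   i.e. when the access would raise a page fault. Page numbers outside the
   table are also rejected (a real OS would treat those as invalid accesses). */
bool translate(const page_entry table[], uint32_t vaddr, uint32_t *paddr) {
    uint32_t vpn = vaddr / PAGE_SIZE;
    uint32_t offset = vaddr % PAGE_SIZE;
    if (vpn >= NUM_PAGES || !table[vpn].present)
        return false;
    *paddr = table[vpn].number * PAGE_SIZE + offset;
    return true;
}
```

With the table from the figure, an address in page 2 lands in frame 177, while any access to page 1 reports a page fault that the OS would have to service.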

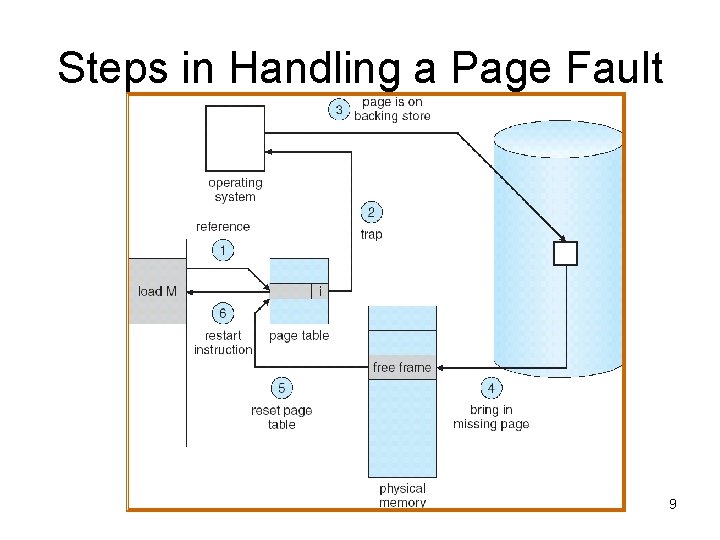

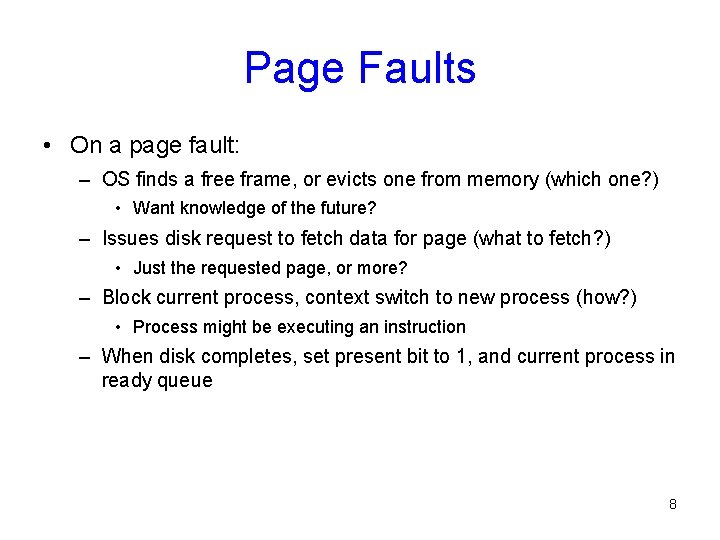

Page Faults
- On a page fault:
  - OS finds a free frame, or evicts one from memory (which one?)
    - Want knowledge of the future?
  - Issues a disk request to fetch data for the page (what to fetch?)
    - Just the requested page, or more?
  - Blocks the current process and context switches to a new process (how?)
    - Process might be in the middle of executing an instruction
  - When the disk completes, sets the present bit to 1 and puts the blocked process back in the ready queue

Steps in Handling a Page Fault

Resuming after a page fault
- Should be able to restart the instruction
- For RISC processors this is simple:
  - Instructions are idempotent until references are done
- More complicated for CISC:
  - E.g., move 256 bytes from one location to another
  - Possible solutions:
    - Ensure pages are in memory before the instruction executes

When to fetch?
- Just before the page is used!
  - Need to know the future
- Demand paging:
  - Fetch a page when it faults
- Prepaging:
  - Get the page on fault + some of its neighbors, or
  - Get all pages in use the last time the process was swapped

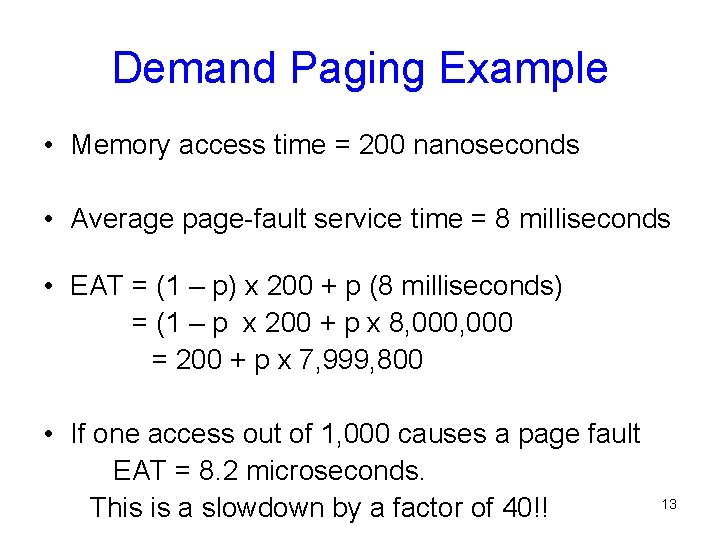

Performance of Demand Paging
- Page fault rate $0 \le p \le 1.0$
  - if $p = 0$, no page faults
  - if $p = 1$, every reference is a fault
- Effective Access Time (EAT):

$$\text{EAT} = (1 - p) \times \text{memory access} + p \times (\text{page fault overhead} + \text{swap page out} + \text{swap page in} + \text{restart overhead})$$

Demand Paging Example
- Memory access time = 200 nanoseconds
- Average page-fault service time = 8 milliseconds
- In nanoseconds: $\text{EAT} = (1 - p) \times 200 + p \times 8{,}000{,}000 = 200 + p \times 7{,}999{,}800$
- If one access out of 1,000 causes a page fault, EAT ≈ 8.2 microseconds. This is a slowdown by a factor of about 40!
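The arithmetic above can be checked with two small helpers. The names `eat_ns` and `slowdown` are hypothetical, and, like the example, the model lumps every fault-handling cost into one service time.

```c
/* Effective access time (ns) for page-fault rate p, with every fault
   costing one lumped service time fault_ns. */
double eat_ns(double p, double mem_ns, double fault_ns) {
    return (1.0 - p) * mem_ns + p * fault_ns;
}

/* How many times slower than a plain memory access. */
double slowdown(double p, double mem_ns, double fault_ns) {
    return eat_ns(p, mem_ns, fault_ns) / mem_ns;
}
```

For the example, `eat_ns(0.001, 200.0, 8e6)` gives about 8,199.8 ns and `slowdown(0.001, 200.0, 8e6)` about 41.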

What to replace?
- What happens if there is no free frame?
  - Find some page in memory that is not really in use, and swap it out
- Page Replacement
  - When a process has used up all frames it is allowed to use
  - OS must select a page to eject from memory to make room for the new page
  - The page to eject is selected using the page replacement algorithm
- Goal: select the page that minimizes future page faults

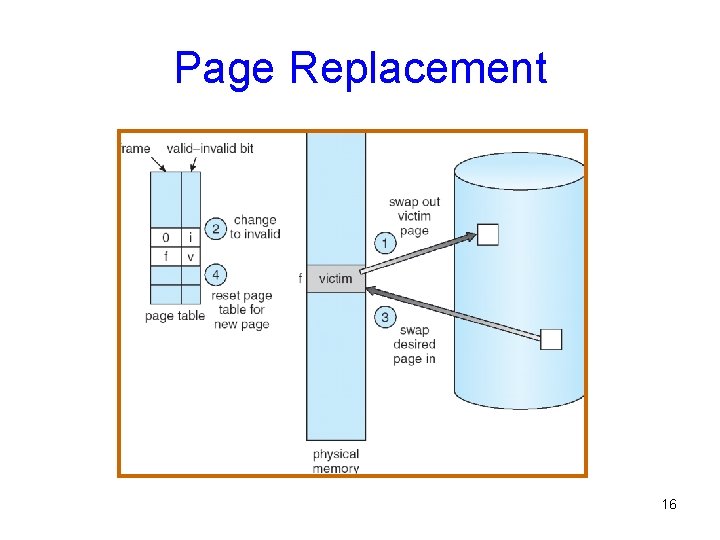

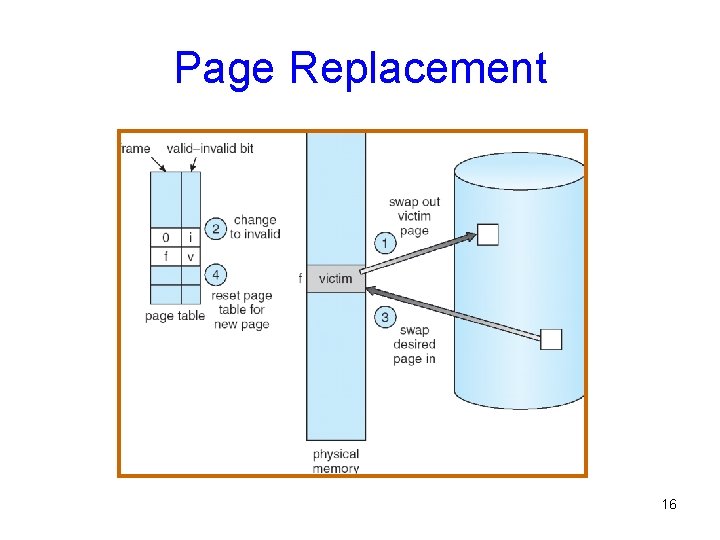

Page Replacement
- Prevent over-allocation of memory by modifying the page-fault service routine to include page replacement
- Use the modify (dirty) bit to reduce the overhead of page transfers: only modified pages are written to disk
- Page replacement completes the separation between logical memory and physical memory: a large virtual memory can be provided on a smaller physical memory

Page Replacement

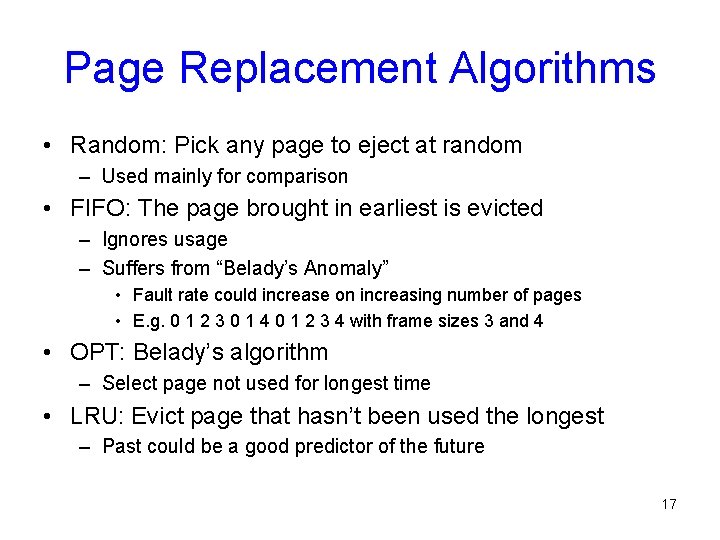

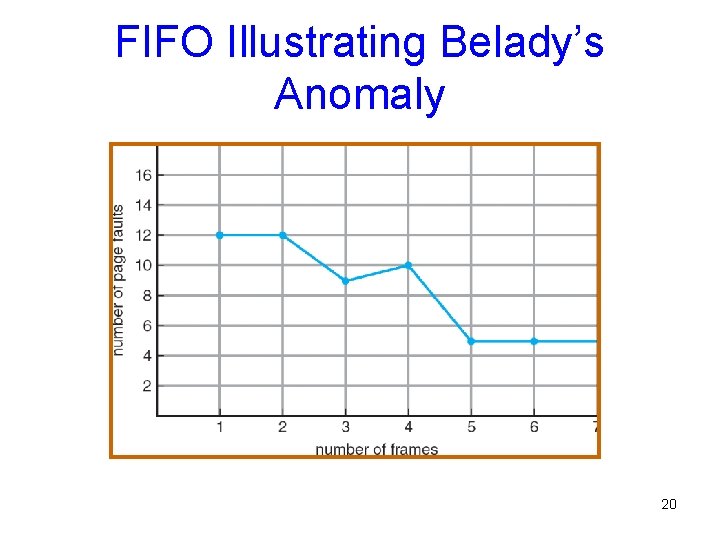

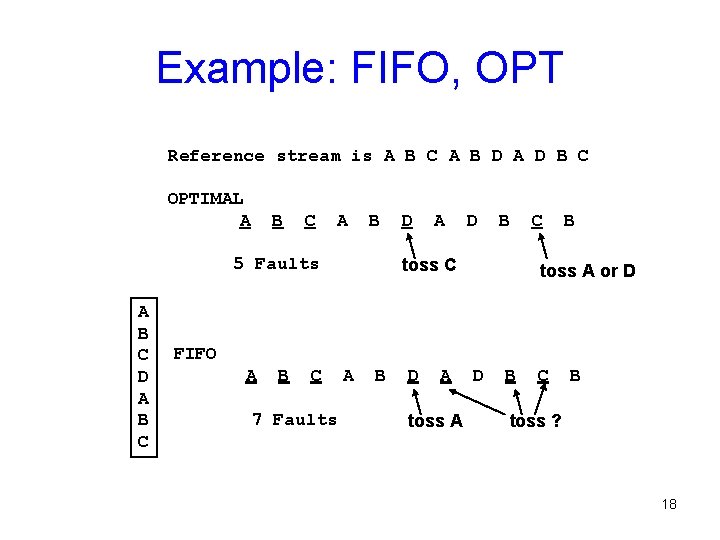

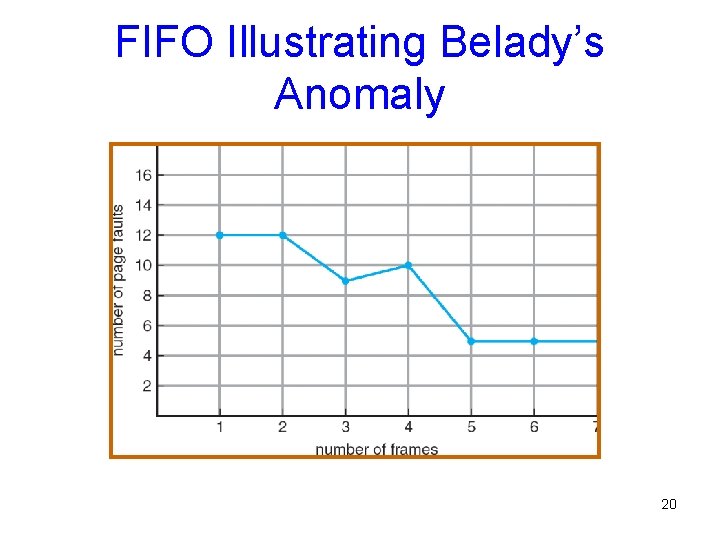

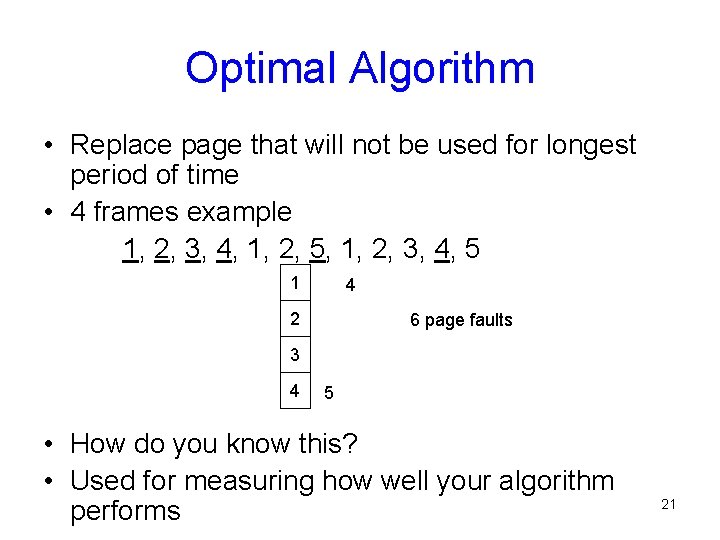

Page Replacement Algorithms
- Random: pick any page to eject at random
  - Used mainly for comparison
- FIFO: the page brought in earliest is evicted
  - Ignores usage
  - Suffers from "Belady's Anomaly"
    - Fault rate can increase when the number of frames increases
    - E.g., 0 1 2 3 0 1 4 0 1 2 3 4 with 3 and with 4 frames
- OPT: Belady's algorithm
  - Select the page that will not be used for the longest time
- LRU: evict the page that hasn't been used for the longest time
  - The past could be a good predictor of the future

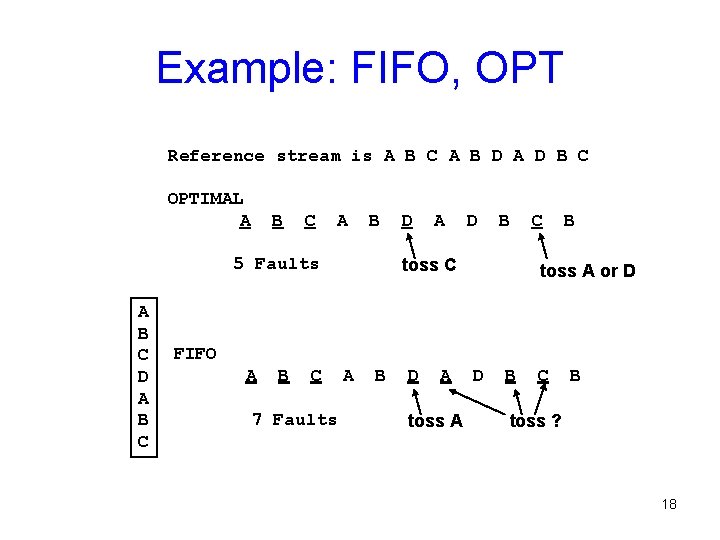

Example: FIFO, OPT

Reference stream is A B C A B D A D B C (3 frames)
- OPTIMAL: 5 faults
  - On D, toss C (its next use is the farthest away)
  - On the final C, toss A or D (neither is used again)
- FIFO: 7 faults
  - On D, toss A; then A tosses B, B tosses C, and C tosses D

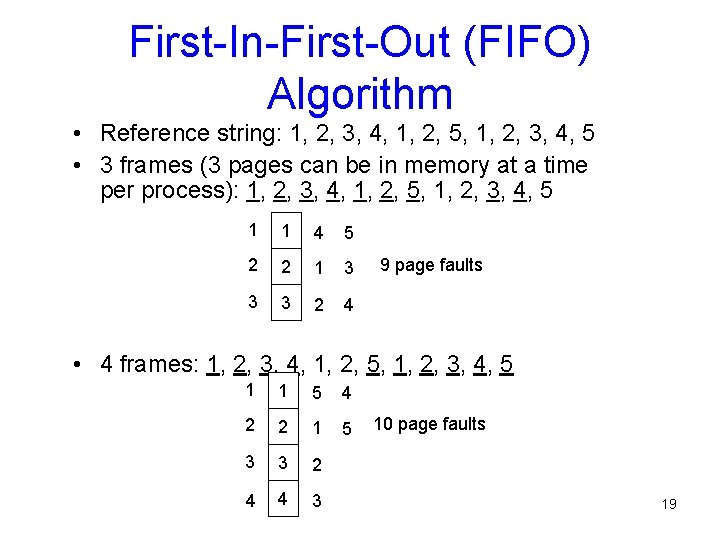

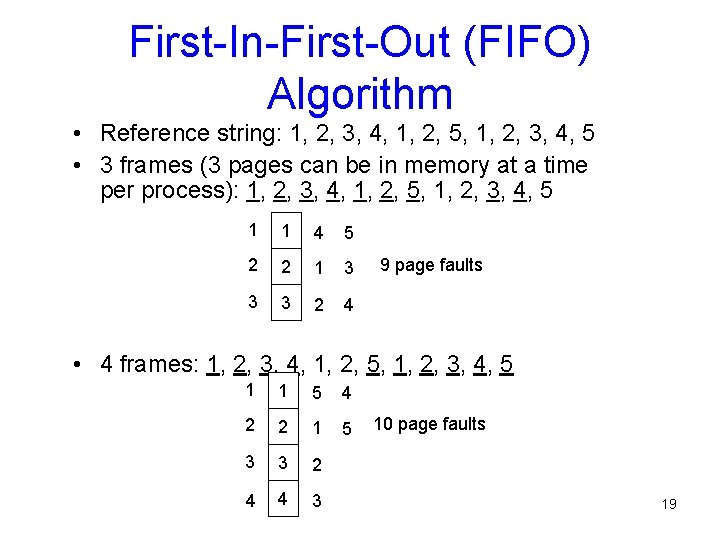

First-In-First-Out (FIFO) Algorithm
- Reference string: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5
- 3 frames (3 pages can be in memory at a time per process): 9 page faults
- 4 frames: 10 page faults

(Figure: frame contents after each page fault, for 3 frames and for 4 frames.)
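A small simulator makes Belady's anomaly easy to reproduce. This is a minimal sketch, assuming pages are plain `int` ids and capping the frame count at a hypothetical `MAX_FRAMES`; `fifo_faults` is an illustrative name, not a library function.

```c
#include <stddef.h>

#define MAX_FRAMES 64

/* Count page faults under FIFO replacement with `frames` frames. */
int fifo_faults(const int *refs, size_t n, int frames) {
    int frame[MAX_FRAMES];
    int used = 0, oldest = 0, faults = 0;
    if (frames <= 0 || frames > MAX_FRAMES)
        return -1;
    for (size_t t = 0; t < n; t++) {
        int hit = 0;
        for (int f = 0; f < used; f++)
            if (frame[f] == refs[t]) { hit = 1; break; }
        if (hit)
            continue;                    /* FIFO ignores usage: hits change nothing */
        faults++;
        if (used < frames) {
            frame[used++] = refs[t];     /* a free frame is still available */
        } else {
            frame[oldest] = refs[t];     /* replace the page loaded earliest */
            oldest = (oldest + 1) % frames;
        }
    }
    return faults;
}
```

For the reference string above, `fifo_faults(refs, 12, 3)` returns 9 and `fifo_faults(refs, 12, 4)` returns 10: one more frame, one more fault.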

FIFO Illustrating Belady’s Anomaly

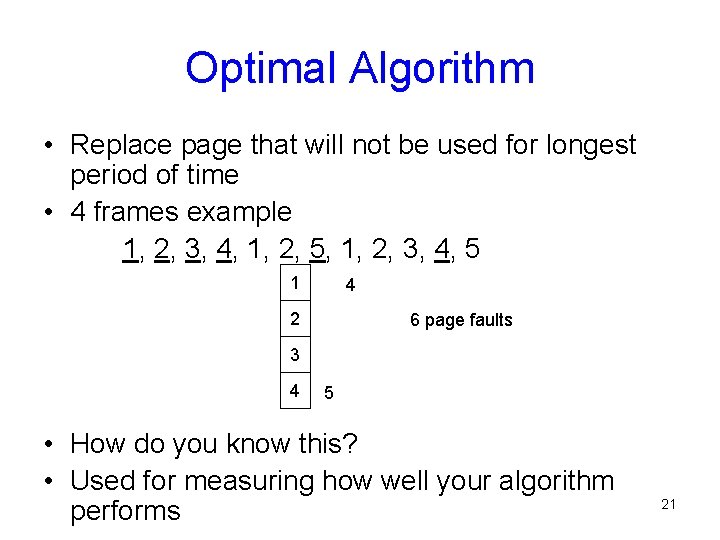

Optimal Algorithm
- Replace the page that will not be used for the longest period of time
- 4 frames example: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5 gives 6 page faults
- How do you know this?
- Used for measuring how well your algorithm performs
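OPT needs the whole future reference string, which a simulator has but an OS does not. A minimal sketch under the same assumptions as a FIFO simulator would make (int page ids, a hypothetical `MAX_FRAMES` cap); `opt_faults` is an illustrative name.

```c
#include <stddef.h>

#define MAX_FRAMES 64

/* Count page faults under OPT (Belady's algorithm): on a fault with no free
   frame, evict the resident page whose next use lies farthest in the future. */
int opt_faults(const int *refs, size_t n, int frames) {
    int frame[MAX_FRAMES];
    int used = 0, faults = 0;
    if (frames <= 0 || frames > MAX_FRAMES)
        return -1;
    for (size_t t = 0; t < n; t++) {
        int hit = 0;
        for (int f = 0; f < used; f++)
            if (frame[f] == refs[t]) { hit = 1; break; }
        if (hit)
            continue;
        faults++;
        if (used < frames) {
            frame[used++] = refs[t];
            continue;
        }
        int victim = 0;
        size_t farthest = 0;
        for (int f = 0; f < frames; f++) {
            size_t next = t + 1;
            while (next < n && refs[next] != frame[f])
                next++;                  /* next == n means "never used again" */
            if (next > farthest) { farthest = next; victim = f; }
            if (next == n)
                break;                   /* nothing beats a page that is never used again */
        }
        frame[victim] = refs[t];
    }
    return faults;
}
```

For the 4-frame example this returns 6, and for the stream A B C A B D A D B C with 3 frames it returns 5, matching the hand-worked OPT example.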

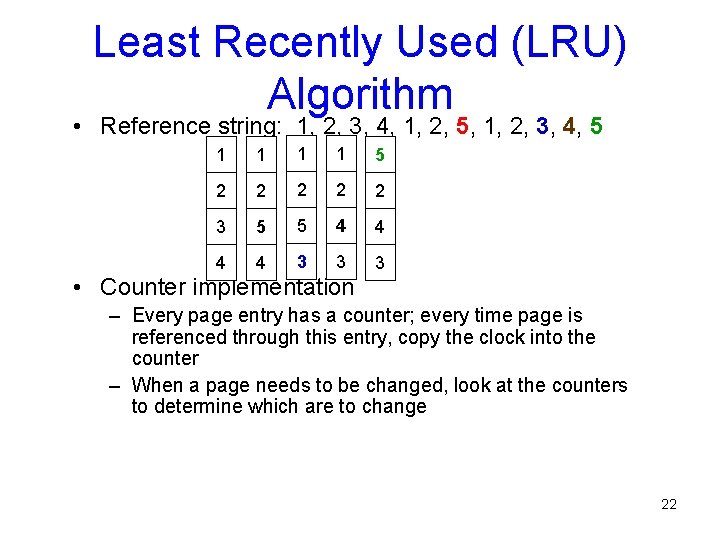

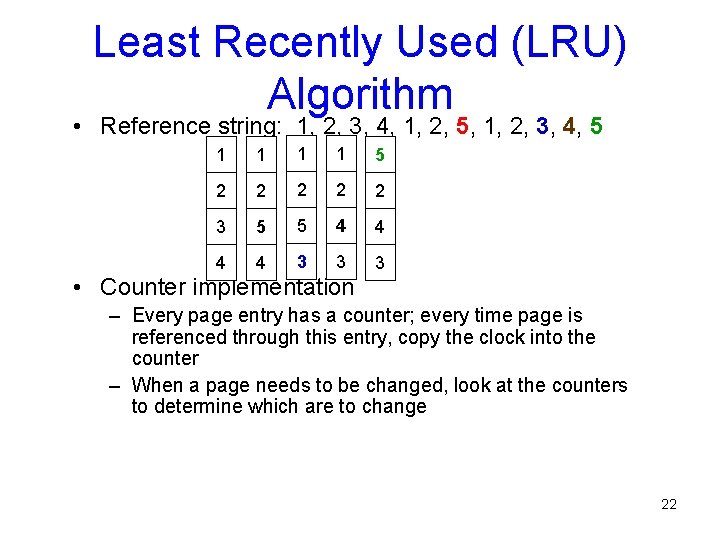

Least Recently Used (LRU) Algorithm
- Reference string: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5
- Counter implementation
  - Every page entry has a counter; every time the page is referenced through this entry, copy the clock into the counter
  - When a page needs to be replaced, look at the counters to determine which one to replace (the one with the smallest counter)

(Figure: frame contents after each reference in this string.)

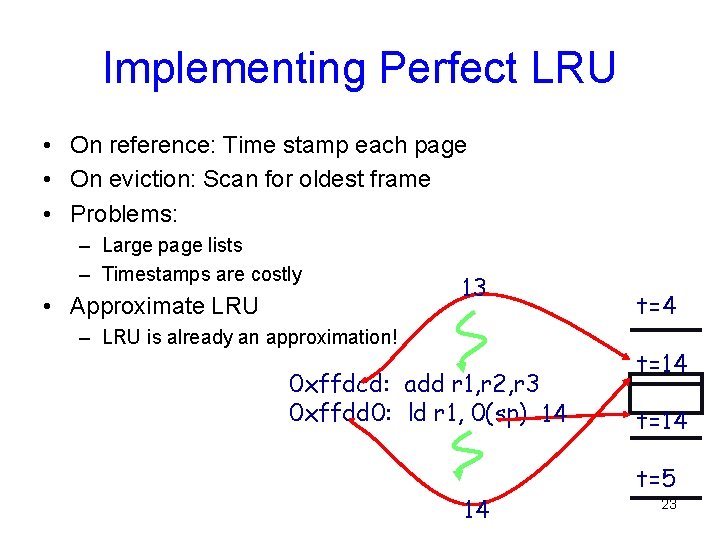

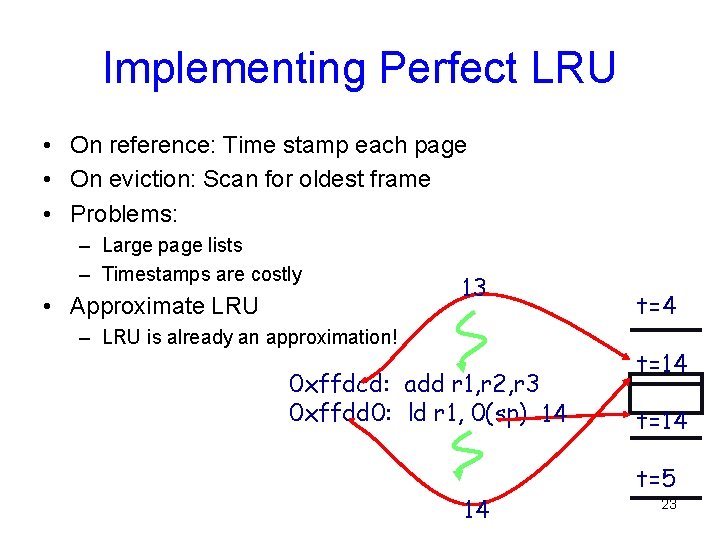

Implementing Perfect LRU
- On reference: time stamp each page
- On eviction: scan for the oldest frame
- Problems:
  - Large page lists
  - Timestamps are costly
- Approximate LRU
  - LRU is already an approximation!

(Figure: each executed instruction, e.g. `0xffdcd: add r1, r2, r3` and `0xffdd0: ld r1, 0(sp)`, time-stamps the page it touches; frames carry stamps such as t=14 and t=5.)
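The counter (timestamp) implementation can be sketched as a simulator in which the reference index plays the role of the clock. The names `lru_faults` and `MAX_FRAMES` are hypothetical; a real system would need hardware support to update a counter on every single reference, which is exactly the cost noted above.

```c
#include <stddef.h>

#define MAX_FRAMES 64

/* Count page faults under exact LRU using the counter scheme: each reference
   copies a logical clock into the frame's counter, and the victim is the
   frame with the smallest (oldest) counter. */
int lru_faults(const int *refs, size_t n, int frames) {
    int frame[MAX_FRAMES];
    size_t stamp[MAX_FRAMES];
    int used = 0, faults = 0;
    if (frames <= 0 || frames > MAX_FRAMES)
        return -1;
    for (size_t t = 0; t < n; t++) {
        int f = 0;
        while (f < used && frame[f] != refs[t])
            f++;
        if (f == used) {                 /* page fault */
            faults++;
            if (used < frames) {
                f = used++;
            } else {                     /* scan for the oldest timestamp */
                f = 0;
                for (int g = 1; g < frames; g++)
                    if (stamp[g] < stamp[f])
                        f = g;
            }
            frame[f] = refs[t];
        }
        stamp[f] = t;                    /* "copy the clock into the counter" */
    }
    return faults;
}
```

On the reference string above this gives 8 faults with 4 frames and 10 with 3 frames.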

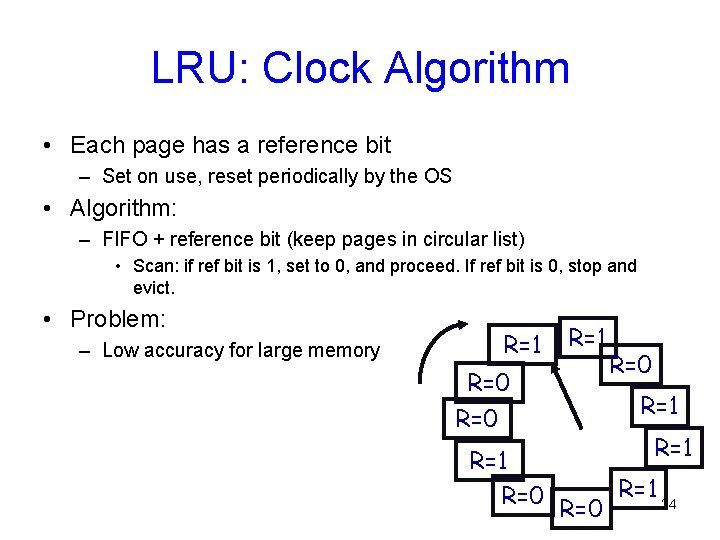

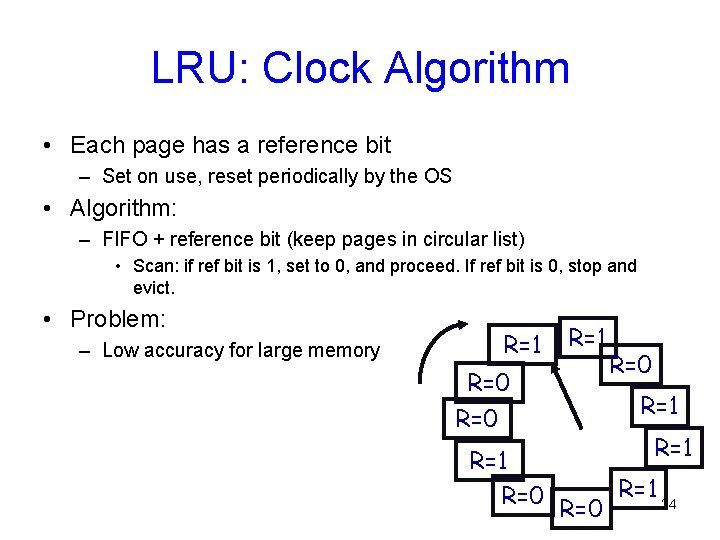

LRU: Clock Algorithm
- Each page has a reference bit
  - Set on use, reset periodically by the OS
- Algorithm:
  - FIFO + reference bit (keep pages in a circular list)
    - Scan: if ref bit is 1, set it to 0 and proceed. If ref bit is 0, stop and evict.
- Problem:
  - Low accuracy for large memory

(Figure: pages arranged in a circle, each with its reference bit R=0 or R=1, and the clock hand.)
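A sketch of the clock (second-chance) scan, written as a fault-counting simulator. Assumptions: page ids are non-negative ints, empty frames start with page -1 and a clear reference bit, a newly loaded page gets its reference bit set, and the hit path stands in for the hardware that sets the bit. `clock_faults` and `MAX_FRAMES` are illustrative names.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_FRAMES 64

/* Count page faults for the clock algorithm. Empty frames have a clear
   reference bit, so the hand fills them first. */
int clock_faults(const int *refs, size_t n, int frames) {
    int page[MAX_FRAMES];
    bool ref[MAX_FRAMES];
    int hand = 0, faults = 0;
    if (frames <= 0 || frames > MAX_FRAMES)
        return -1;
    for (int f = 0; f < frames; f++) { page[f] = -1; ref[f] = false; }

    for (size_t t = 0; t < n; t++) {
        int hit = -1;
        for (int f = 0; f < frames; f++)
            if (page[f] == refs[t]) { hit = f; break; }
        if (hit >= 0) { ref[hit] = true; continue; }  /* "set on use" */

        faults++;
        while (ref[hand]) {              /* ref bit 1: set to 0 and proceed */
            ref[hand] = false;
            hand = (hand + 1) % frames;
        }
        page[hand] = refs[t];            /* ref bit 0: evict the page under the hand */
        ref[hand] = true;
        hand = (hand + 1) % frames;
    }
    return faults;
}
```

On 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5 this sketch gives 9 faults with 3 frames and 10 with 4; with every bit set, the sweep degenerates to FIFO.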

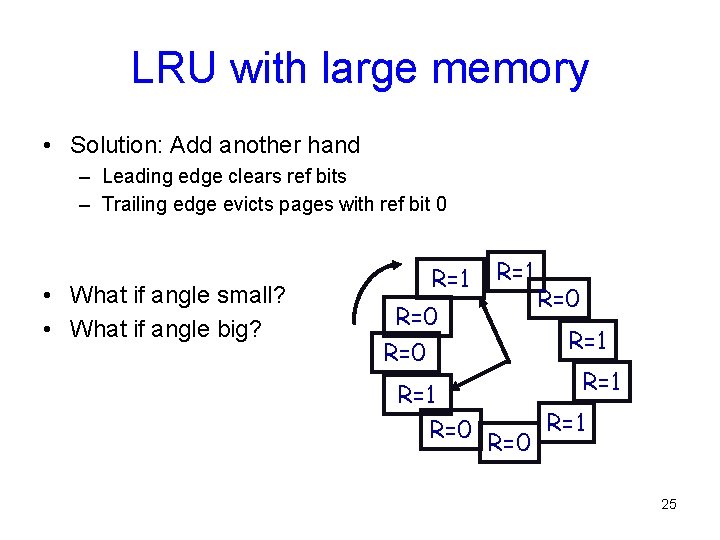

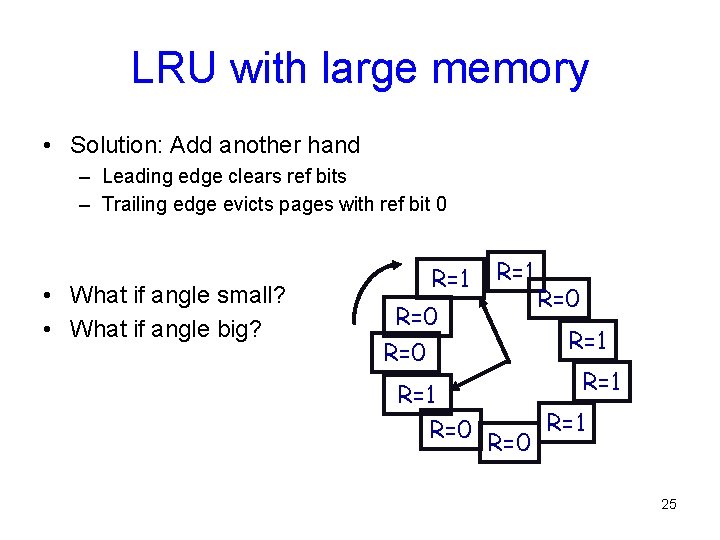

LRU with large memory
- Solution: add another hand
  - Leading edge clears ref bits
  - Trailing edge evicts pages with ref bit 0
- What if the angle is small?
- What if the angle is big?

(Figure: two clock hands sweeping a circular list of pages with reference bits.)

Clock Algorithm: Discussion
- Sensitive to sweeping interval
  - Fast: lose usage information
  - Slow: all pages look used
- Clock: add reference bits
  - Could use (ref bit, modified bit) as an ordered pair
  - Might have to scan all pages
- LFU: remove the page with the lowest count
  - Does not track when the page was referenced
  - Use multiple bits; shift right by 1 at regular intervals
- MFU: remove the most frequently used page
- LFU and MFU do not approximate OPT well
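The "multiple bits, shift right by 1" idea is often called aging. A minimal sketch, assuming an 8-bit counter per page and a timer that calls `aging_tick` at each interval; the `aging_page` type and both function names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-page state: an 8-bit aging counter and the reference bit. */
typedef struct {
    uint8_t age;
    bool referenced;  /* set by hardware on each access */
} aging_page;

/* At each timer interval: shift every counter right by 1, move the
   reference bit into the most significant bit, then clear the reference bit. */
void aging_tick(aging_page *pages, size_t n) {
    for (size_t i = 0; i < n; i++) {
        pages[i].age = (uint8_t)((pages[i].age >> 1) | (pages[i].referenced ? 0x80 : 0));
        pages[i].referenced = false;
    }
}

/* The victim is the page with the smallest counter. */
size_t aging_victim(const aging_page *pages, size_t n) {
    size_t victim = 0;
    for (size_t i = 1; i < n; i++)
        if (pages[i].age < pages[victim].age)
            victim = i;
    return victim;
}
```

A page referenced in the most recent interval always has a larger counter than one that was not, so the counter records when a page was used as well as how often, which plain LFU counts do not.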


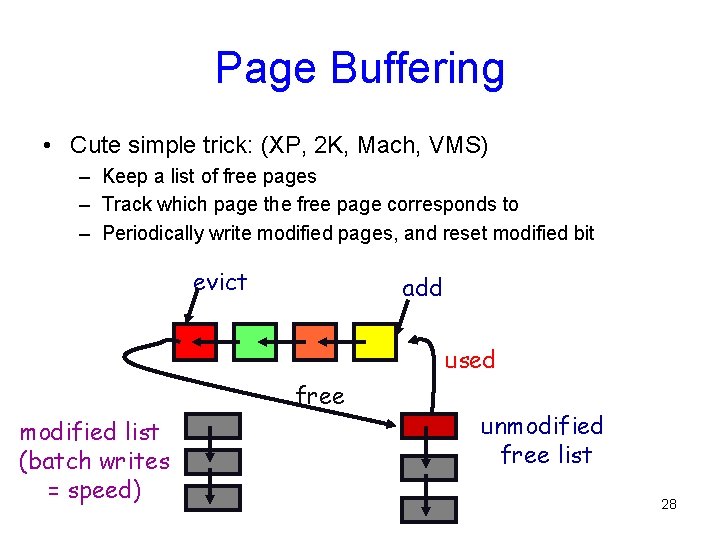

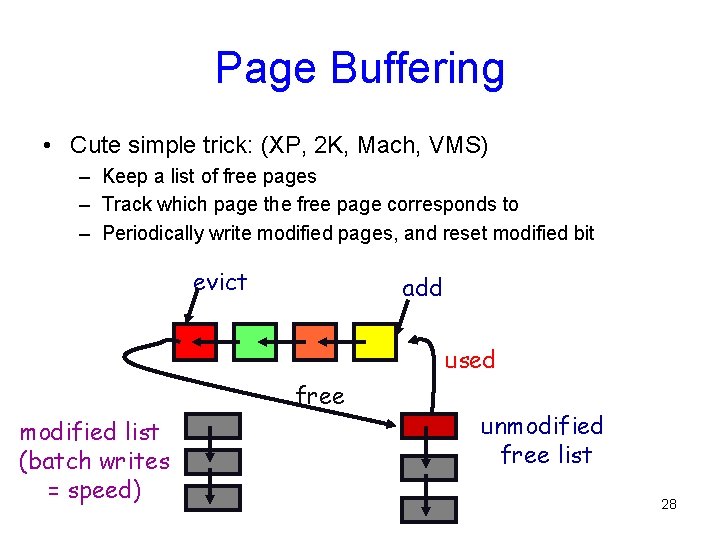

Page Buffering
- Cute simple trick (XP, 2K, Mach, VMS):
  - Keep a list of free pages
  - Track which page each free page corresponds to
  - Periodically write modified pages, and reset the modified bit

(Figure: evicted pages go either to the modified list, which is written out in batches for speed, or to the unmodified free list; pages are reused from the free list.)

Allocating Pages to Processes
- Global replacement
  - Single memory pool for the entire system
  - On a page fault, evict the oldest page in the system
  - Problem: protection
- Local (per-process) replacement
  - Have a separate pool of pages for each process
  - A page fault in one process can only replace pages from its own process
  - Problem: might have idle resources

Allocation of Frames
- Each process needs a minimum number of pages
- Example: IBM 370 needs 6 pages to handle the SS MOVE instruction:
  - The instruction is 6 bytes and might span 2 pages
  - 2 pages to handle "from"
  - 2 pages to handle "to"
- Two major allocation schemes
  - Fixed allocation
  - Priority allocation

Virtual Memory II: Thrashing, Working Set Algorithm, Dynamic Memory Management

Last Time
- We've focused on demand paging
  - Each process is allocated pages of memory
  - As a process executes, it references pages
  - On a miss, a page fault to the O/S occurs
  - The O/S pages in the missing page and pages out some target page if room is needed
- The CPU maintains a cache of PTEs: the TLB.
- The O/S must flush the TLB before looking at page reference bits, or before context switching (because this changes the page table)

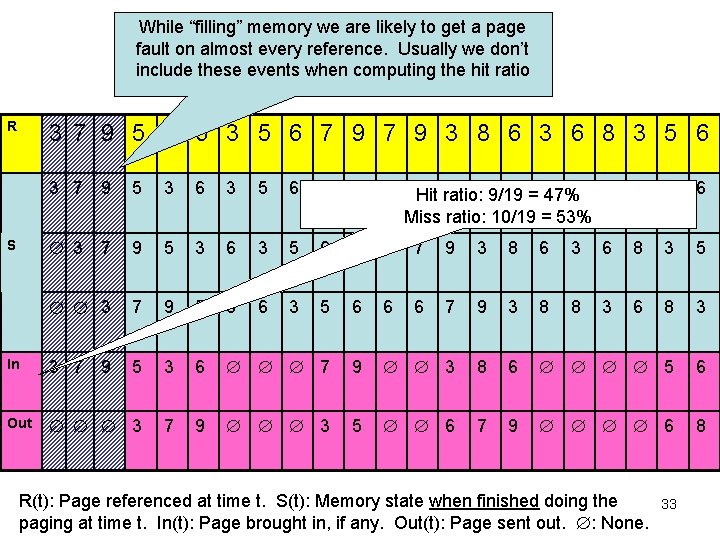

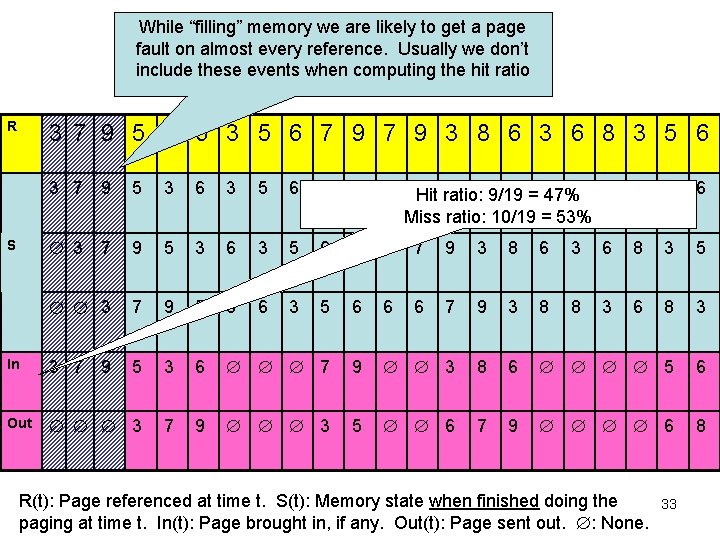

While "filling" memory we are likely to get a page fault on almost every reference. Usually we don't include these events when computing the hit ratio.

Example: LRU, Δ = 3

(Table: for each time t, the page referenced R(t), the memory state S(t), the page brought in In(t), and the page sent out Out(t).)

- Hit ratio: 9/19 ≈ 47%
- Miss ratio: 10/19 ≈ 53%

- R(t): page referenced at time t.
- S(t): memory state when finished doing the paging at time t.
- In(t): page brought in, if any.
- Out(t): page sent out.
- An empty entry means none.

Goals for today
- Review demand paging
- What is thrashing?
- Solutions to thrashing
  - Approach 1: Working Set
  - Approach 2: Page fault frequency
- Dynamic Memory Management
  - Memory allocation and deallocation and goals
  - Memory allocator: impossibility result
    - Best fit
    - Simple scheme: chunking, binning, and free
    - Buddy-block scheme
    - Other issues

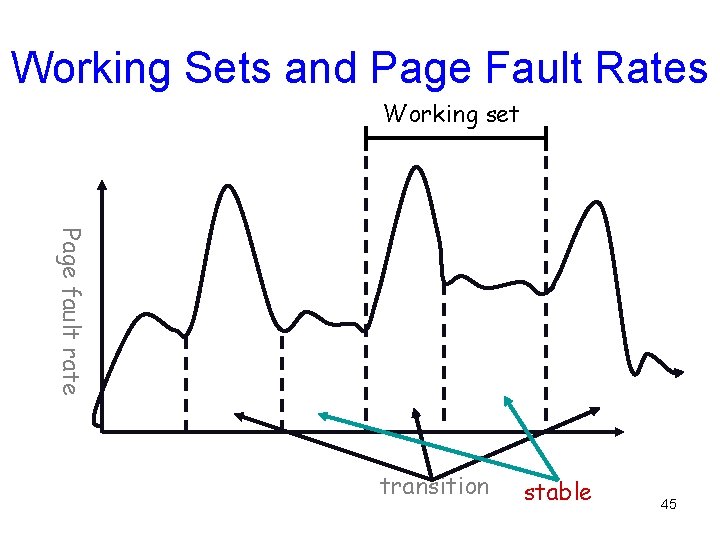

Thrashing
- Def: excessive rate of paging that occurs because processes in the system require more memory than is available
  - Keep throwing out pages that will be referenced soon
  - So, they keep accessing memory that is not there
- Why does it occur?
  - Poor locality, past != future
  - There is reuse, but the process does not fit
  - Too many processes in the system

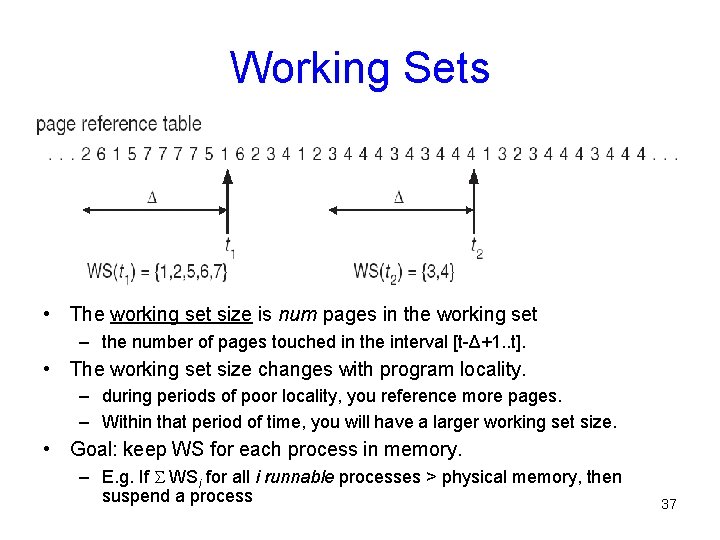

Approach 1: Working Set
- Peter Denning, 1968
  - He uses this term to denote the memory locality of a program

Def: the pages referenced by a process in the last Δ time units comprise its working set.

For our examples, we usually discuss WS in terms of Δ, a "window" in the page reference string. But while this is easier on paper, it makes less sense in practice! In real systems, the window should probably be a period of time, perhaps a second or two.

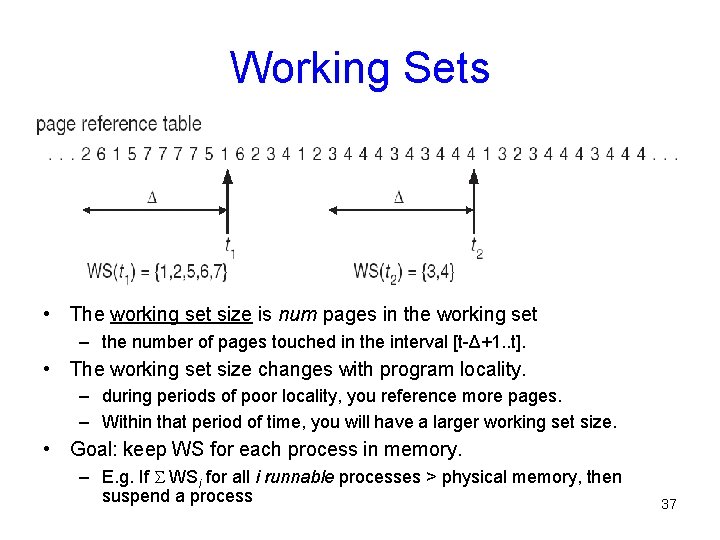

Working Sets
- The working set size is the number of pages in the working set
  - i.e., the number of pages touched in the interval [t-Δ+1..t]
- The working set size changes with program locality
  - During periods of poor locality, you reference more pages
  - Within that period of time, you will have a larger working set size
- Goal: keep the WS of each process in memory
  - E.g., if $\sum_i |WS_i|$ over all runnable processes $i$ exceeds physical memory, then suspend a process
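The definition can be computed directly from a reference string. A naive sketch, assuming 0-based times and a window that is clipped at the start of the string; `working_set_size` is a hypothetical name.

```c
#include <stdbool.h>
#include <stddef.h>

/* Number of distinct pages referenced in refs[t-delta+1 .. t] (0-based t). */
size_t working_set_size(const int *refs, size_t t, size_t delta) {
    size_t start = (t + 1 >= delta) ? t + 1 - delta : 0;  /* clip the window */
    size_t count = 0;
    for (size_t i = start; i <= t; i++) {
        bool seen = false;
        for (size_t j = start; j < i; j++)
            if (refs[j] == refs[i]) { seen = true; break; }
        if (!seen)
            count++;                     /* first occurrence inside the window */
    }
    return count;
}
```

For the string 1 2 1 3 4 4 4 4 with Δ = 4, the size is 3 at t = 3 and drops to 1 at t = 7, showing how the working set size follows locality.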

Working Set Approximation
- Approximate with an interval timer + a reference bit
- Example: Δ = 10,000
  - Timer interrupts after every 5,000 time units
  - Keep in memory 2 bits for each page
  - Whenever the timer interrupts, copy the reference bits into memory and set all reference bits to 0
  - If one of the bits in memory = 1, the page is in the working set
- Why is this not completely accurate?
  - Cannot tell where within an interval of 5,000 a reference occurred
- Improvement: 10 bits and an interrupt every 1,000 time units
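With Δ = 10,000 and a timer every 5,000 time units, the approximation keeps two history bits per page. A minimal sketch, assuming the OS can read and clear each page's reference bit at every interrupt; the `ws_page` type and the function names are hypothetical, and counting the current, not-yet-copied reference bit as membership is an extra assumption.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define HISTORY_BITS 2   /* Δ / timer interval = 10,000 / 5,000 */

typedef struct {
    bool referenced;     /* set by hardware on each access */
    uint8_t history;     /* one bit per past interval, newest in bit 0 */
} ws_page;

/* Timer interrupt: shift each reference bit into the history, then clear it. */
void ws_timer_tick(ws_page *pages, size_t n) {
    const uint8_t mask = (1u << HISTORY_BITS) - 1;
    for (size_t i = 0; i < n; i++) {
        pages[i].history = (uint8_t)(((pages[i].history << 1) | pages[i].referenced) & mask);
        pages[i].referenced = false;
    }
}

/* In the working set if any remembered bit (or the current one) is 1. */
bool ws_in_working_set(const ws_page *p) {
    return p->referenced || p->history != 0;
}
```

A page referenced once stays in the working set for two intervals and then drops out; with 10 history bits and a 1,000-unit timer the same code would just use `HISTORY_BITS 10` and a wider history field.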

Using the Working Set
- Used mainly for prepaging
  - Pages in the working set are a good approximation
- In Windows, processes have a max and min WS size
  - At least min pages of the process are in memory
  - If > max pages are in memory, on a page fault a page is replaced
  - Else if memory is available, the WS is increased on a page fault
  - The max WS can be specified by the application

Theoretical aside
- Denning defined a policy called WSOpt
  - In this, the working set is computed over the next Δ references, not the last: R(t)..R(t+Δ-1)
- He compared WS with WSOpt
  - WSOpt has knowledge of the future…
  - …yet even though WS is a practical algorithm with no ability to see the future, we can prove that the hit and miss ratios are identical for the two algorithms!

Key insight in proof
- Basic idea is to look at the paging decision made by WS at time t+Δ-1 and compare it with the decision made by WSOpt at time t
- Both look at the same references… hence they make the same decision
  - Namely, WSOpt tosses out page R(t-1) if it isn't referenced "again" in times t..t+Δ-1
  - WS running at time t+Δ-1 tosses out page R(t-1) if it wasn't referenced in times t..t+Δ-1

How do WSOpt and WS differ?
- WS maintains more pages in memory, because it needs a time "delay" to make a paging decision
  - In effect, it makes the same decisions, but it makes them after a time lag
  - Hence these pages hang around a bit longer

How do WS and LRU compare?
- Suppose we use the same value of Δ
  - WS removes pages if they aren't referenced, and hence keeps fewer pages in memory
  - When it does page things out, it is using an LRU policy!
  - LRU will keep all Δ pages in memory, referenced or not
- Thus LRU often has a lower miss rate, but needs more memory than WS

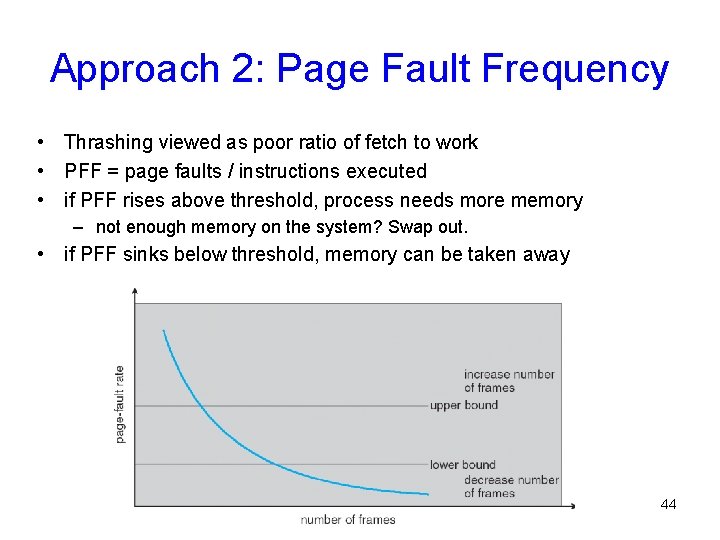

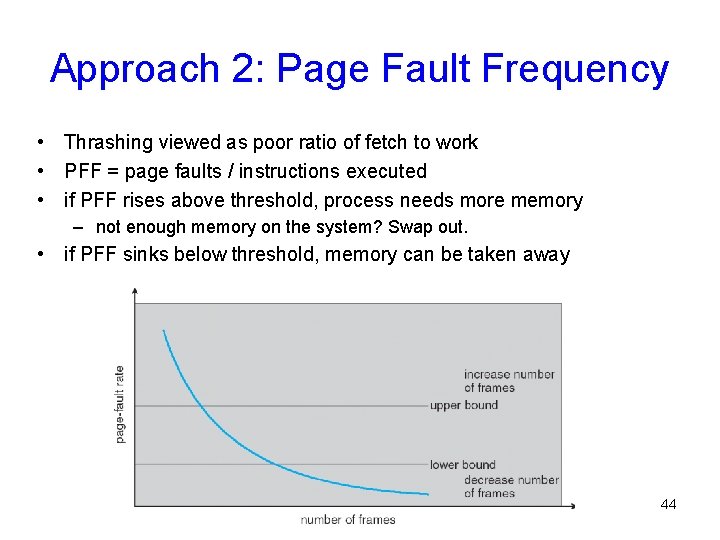

Approach 2: Page Fault Frequency
- Thrashing viewed as a poor ratio of fetch to work
- PFF = page faults / instructions executed
- If PFF rises above a threshold, the process needs more memory
  - Not enough memory on the system? Swap out a process.
- If PFF sinks below a threshold, memory can be taken away
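The PFF rule can be sketched as a small decision function. The upper and lower thresholds are hypothetical parameters (the slide gives no values), and `pff_decide` and `pff_action` are illustrative names.

```c
/* Possible outcomes of one PFF check. */
typedef enum { PFF_KEEP, PFF_GROW, PFF_SHRINK } pff_action;

/* Compare the page-fault frequency (faults per instruction executed)
   against an upper and a lower threshold. */
pff_action pff_decide(unsigned long faults, unsigned long instructions,
                      double upper, double lower) {
    if (instructions == 0)
        return PFF_KEEP;                 /* nothing measured yet */
    double pff = (double)faults / (double)instructions;
    if (pff > upper)
        return PFF_GROW;    /* needs more frames; if none are free, swap a process out */
    if (pff < lower)
        return PFF_SHRINK;  /* frames can be taken away */
    return PFF_KEEP;
}
```

With thresholds of 0.01 and 0.001 faults per instruction, 50 faults in 1,000 instructions asks for more frames, while 0 faults lets the OS reclaim some.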

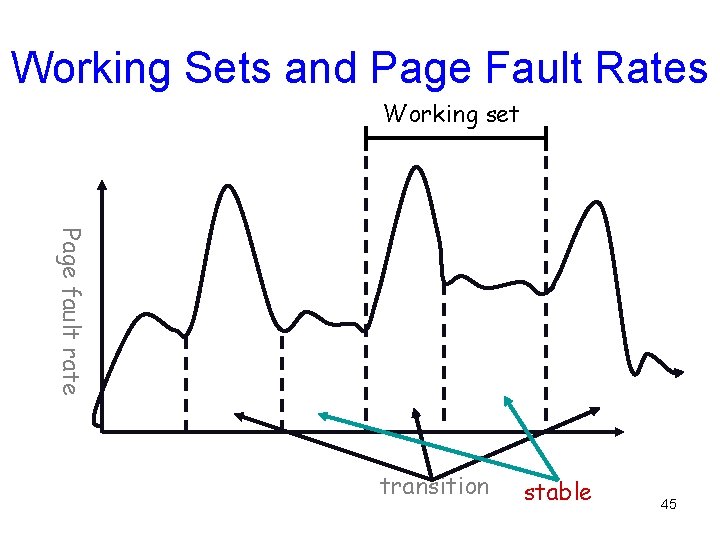

Working Sets and Page Fault Rates

(Figure: working set and page fault rate over time, alternating between transition and stable phases.)

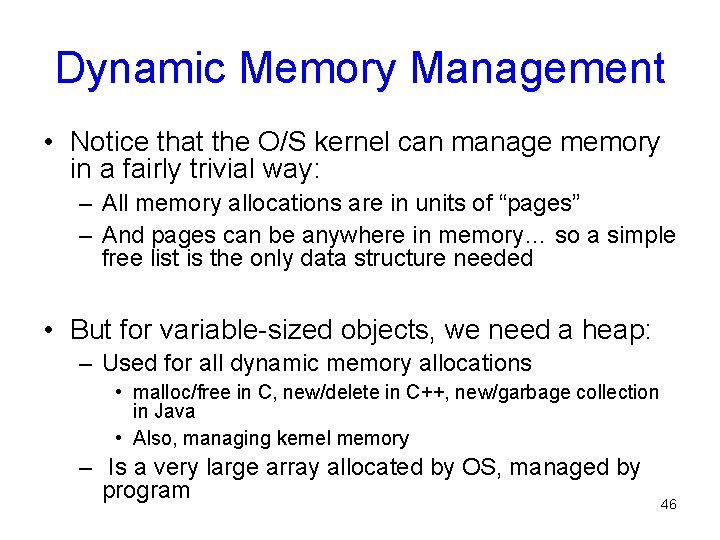

Dynamic Memory Management
- Notice that the O/S kernel can manage memory in a fairly trivial way:
  - All memory allocations are in units of "pages"
  - And pages can be anywhere in memory… so a simple free list is the only data structure needed
- But for variable-sized objects, we need a heap:
  - Used for all dynamic memory allocations
    - malloc/free in C, new/delete in C++, new/garbage collection in Java
  - Also for managing kernel memory
  - Is a very large array allocated by the OS, managed by the program

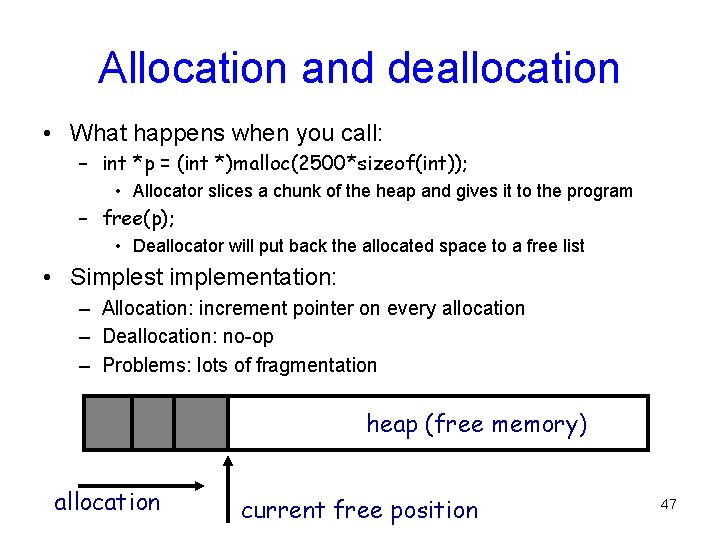

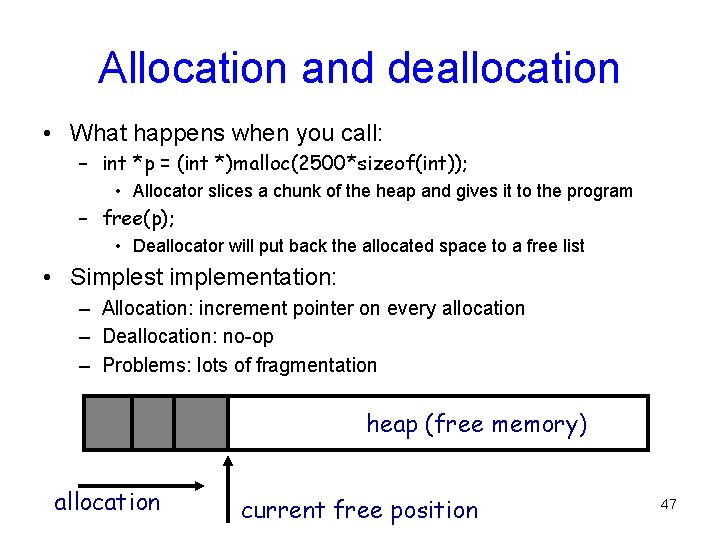

Allocation and deallocation
- What happens when you call:
  - `int *p = (int *)malloc(2500*sizeof(int));`
    - The allocator slices a chunk of the heap and gives it to the program
  - `free(p);`
    - The deallocator puts the allocated space back on a free list
- Simplest implementation:
  - Allocation: increment a pointer on every allocation
  - Deallocation: no-op
  - Problem: lots of fragmentation

(Figure: heap (free memory) with allocations packed below the current free position.)
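The "simplest implementation" is often called a bump allocator. A minimal C11 sketch over a fixed static array; the 4,096-byte size and the names `bump_alloc` and `bump_free` are assumptions, and each block is aligned to `_Alignof(max_align_t)` so it can hold any type.

```c
#include <stddef.h>

#define HEAP_SIZE 4096  /* assumed size of the region handed to the allocator */

static _Alignas(max_align_t) unsigned char heap[HEAP_SIZE];
static size_t next_free = 0;  /* the "current free position" */

/* Allocation: round the free position up to a safe alignment, then bump it. */
void *bump_alloc(size_t n) {
    const size_t align = _Alignof(max_align_t);
    size_t start = (next_free + align - 1) / align * align;
    if (start > HEAP_SIZE || n > HEAP_SIZE - start)
        return NULL;                     /* out of heap space */
    next_free = start + n;
    return &heap[start];
}

/* Deallocation: a no-op, so freed space is never reused. */
void bump_free(void *p) {
    (void)p;
}
```

Both operations are a handful of instructions, but because `bump_free` never returns space, a program that repeatedly allocates and frees eventually exhausts the array, which is why this scheme wastes so much memory.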

Memory allocation goals
- Minimize space
  - Should not waste space, minimize fragmentation
- Minimize time
  - As fast as possible, minimize system calls
- Maximize locality
  - Minimize page faults and cache misses
- And many more
- Proven: impossible to construct an "always good" memory allocator

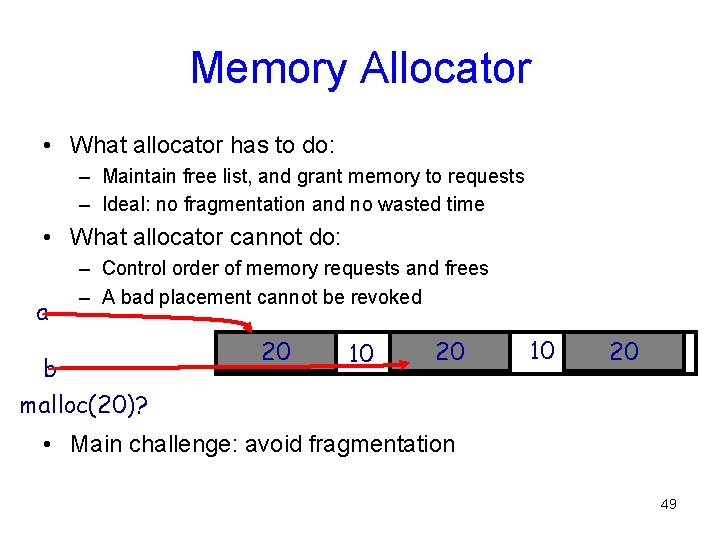

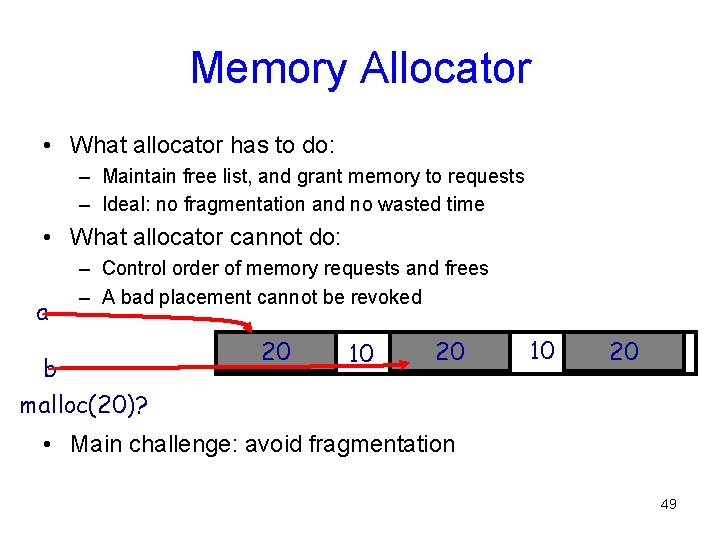

Memory Allocator
- What the allocator has to do:
  - Maintain a free list, and grant memory to requests
  - Ideal: no fragmentation and no wasted time
- What the allocator cannot do:
  - Control the order of memory requests and frees
  - A bad placement cannot be revoked
- Main challenge: avoid fragmentation

(Figure: two heap layouts, a and b, with blocks of 20, 10, and 20, and a pending malloc(20).)

Impossibility Results
- Optimal memory allocation is NP-complete for general computation
- Given any allocation algorithm, there exist streams of allocation and deallocation requests that defeat the allocator and cause extreme fragmentation

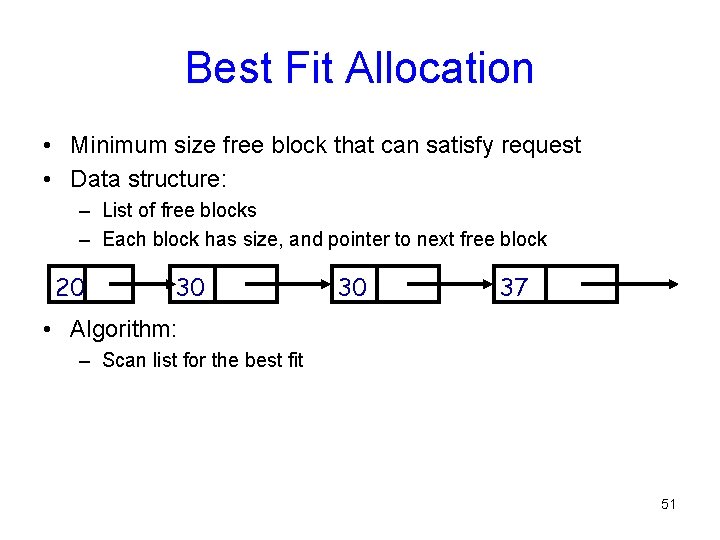

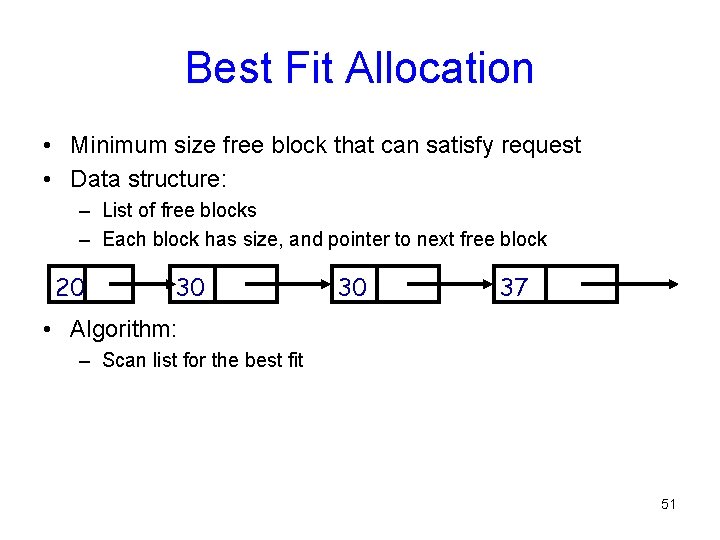

Best Fit Allocation
- Choose the minimum-size free block that can satisfy the request
- Data structure:
  - List of free blocks
  - Each block has a size, and a pointer to the next free block
- Algorithm:
  - Scan the list for the best fit

(Figure: free list with blocks of sizes 20, 30, 30, 37.)
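The scan can be sketched in C. The `free_block` layout follows the data structure described above (a size plus a pointer to the next free block); the names are hypothetical, and splitting and unlinking the chosen block are left out.

```c
#include <stddef.h>

/* Hypothetical free-list node: a block's size and the next free block. */
typedef struct free_block {
    size_t size;
    struct free_block *next;
} free_block;

/* Return the smallest free block that can satisfy the request, or NULL.
   The whole list must be scanned, since a better fit may come later. */
free_block *best_fit(free_block *head, size_t request) {
    free_block *best = NULL;
    for (free_block *b = head; b != NULL; b = b->next) {
        if (b->size >= request && (best == NULL || b->size < best->size)) {
            best = b;
            if (b->size == request)
                break;                   /* an exact fit cannot be beaten */
        }
    }
    return best;
}
```

On the list 20, 30, 30, 37, a request for 25 gets the first 30-byte block, and a request for 31 gets the 37-byte block.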

Best Fit gone wrong
- Simple bad case: allocate n, m (m < n) in alternating order, free all the m's, then try to allocate an m+1
- Example:
  - We have 100 bytes of free memory
  - Request sequence: 19, 21, 19, 21, 19
  - Free sequence: 19, 19, 19
  - A request for 20 bytes now fails: each of the three freed 19-byte holes is too small
  - Wasted space: 57!

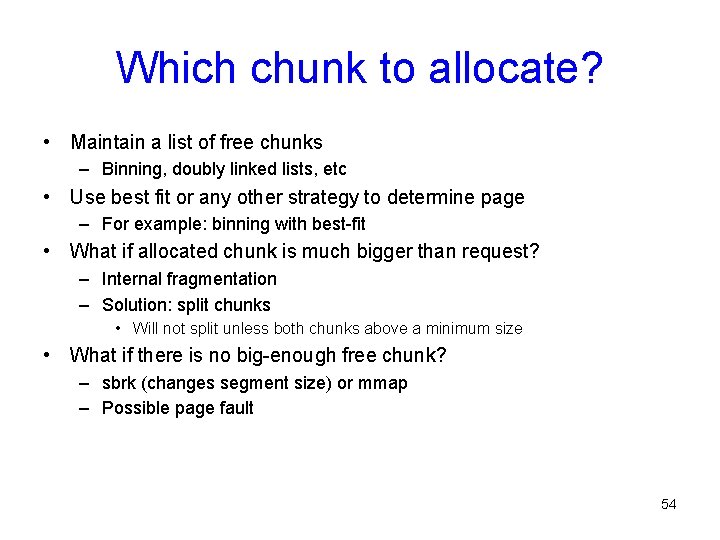

A simple scheme
- Each memory chunk has a signature before and after it
  - Signature is an int
  - +ve implies a free chunk
  - -ve implies that the chunk is currently in use
  - Magnitude of the signature is the chunk's size
- So, the smallest chunk is 3 elements:
  - One for each signature, and one for holding the data

Which chunk to allocate?
- Maintain a list of free chunks
  - Binning, doubly linked lists, etc.
- Use best fit or any other strategy to choose the chunk
  - For example: binning with best fit
- What if the allocated chunk is much bigger than the request?
  - Internal fragmentation
  - Solution: split chunks
    - Will not split unless both chunks are above a minimum size
- What if there is no big-enough free chunk?
  - sbrk (changes segment size) or mmap
  - Possible page fault

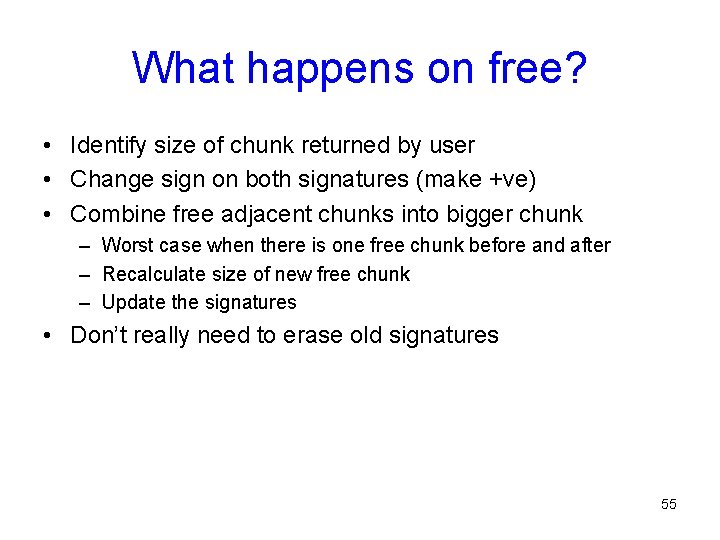

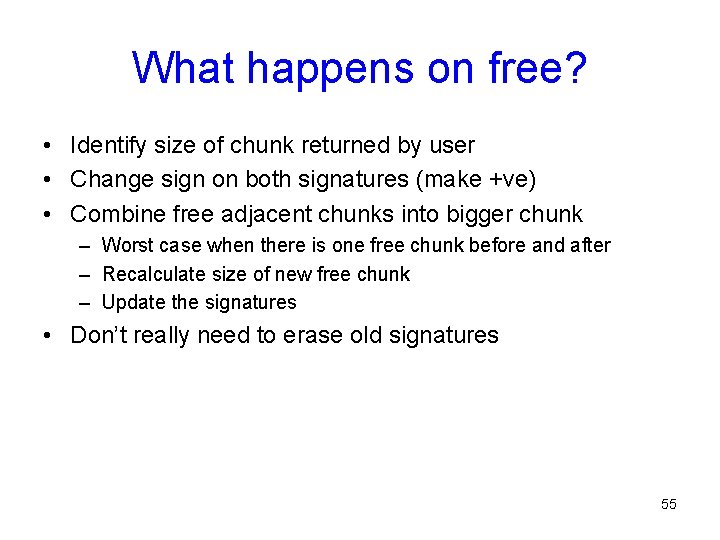

What happens on free?
- Identify the size of the chunk returned by the user
- Change the sign on both signatures (make +ve)
- Combine adjacent free chunks into a bigger chunk
  - Worst case is when there is one free chunk before and one after
  - Recalculate the size of the new free chunk
  - Update the signatures
- Don't really need to erase old signatures

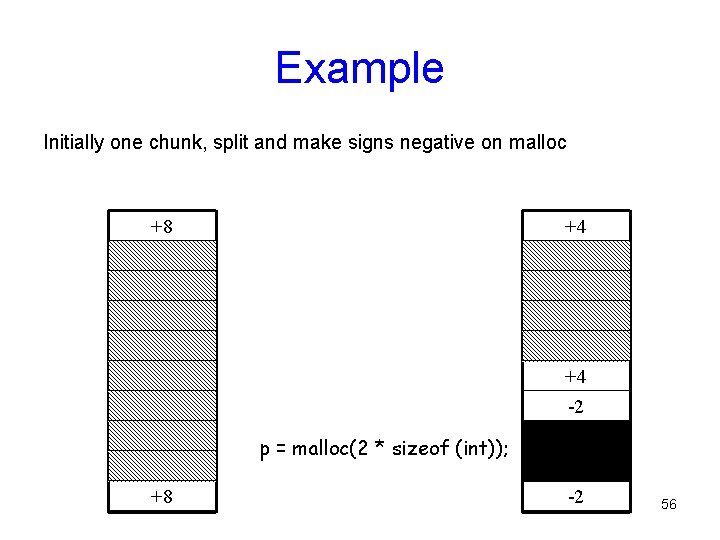

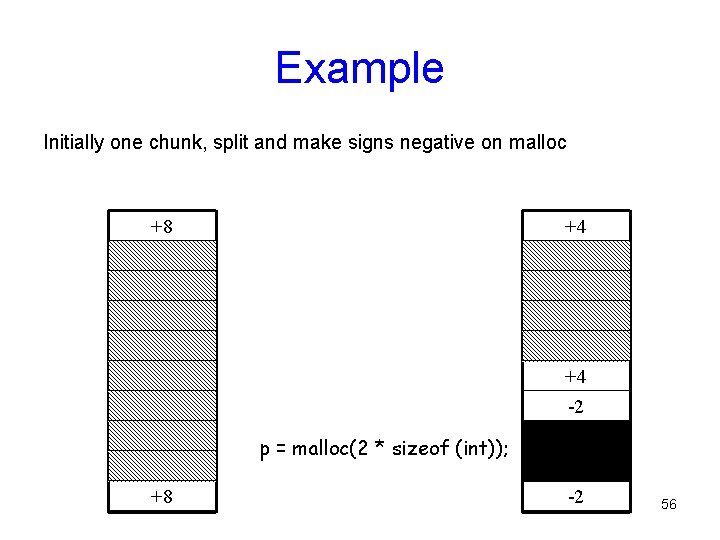

Example

Initially there is one free chunk, +8 … +8. On malloc, split it and make the signs of the allocated part negative:
- `p = malloc(2 * sizeof(int));` leaves a free chunk +4 … +4 followed by p's chunk -2 … -2

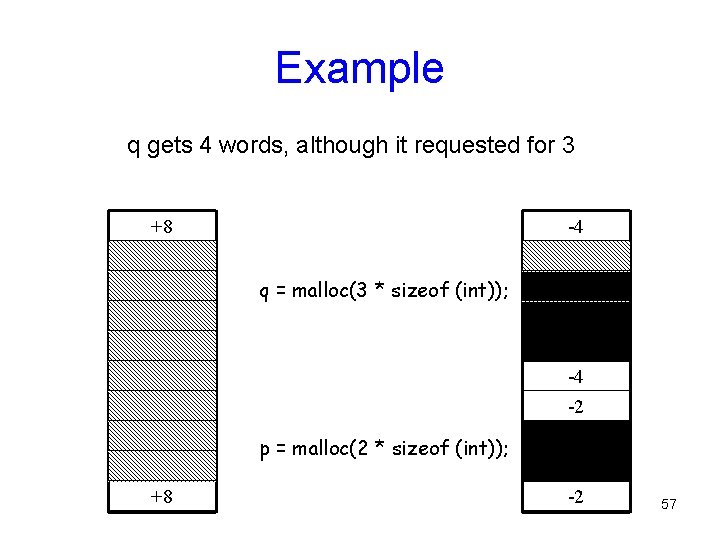

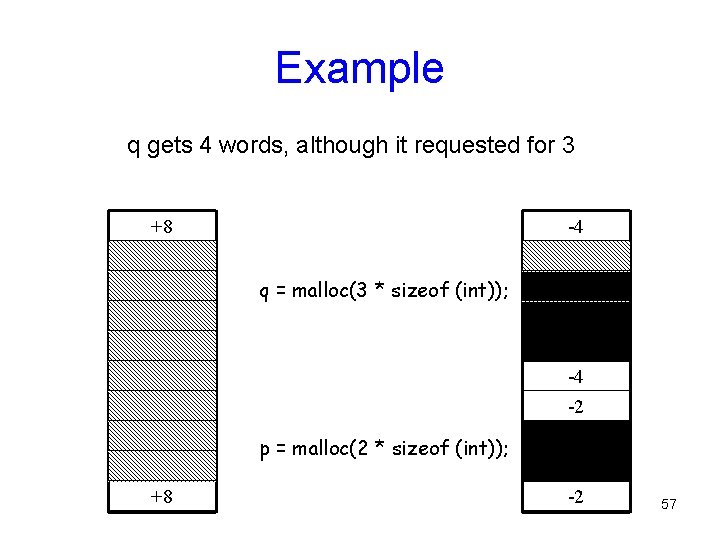

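Example

q gets 4 words, although it requested 3:
- `q = malloc(3 * sizeof(int));` takes the whole +4 chunk, which becomes -4 … -4. Splitting off 3 words would leave a single word, below the 3-element minimum chunk size.
- p's chunk (from `p = malloc(2 * sizeof(int));`) stays -2 … -2.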
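The signature scheme and the two examples can be reproduced with a tiny C sketch. Assumptions: the heap is a 10-word `int` array (8 data words plus two signatures, as in the example), chunks are found by first fit rather than best fit or binning, and a split keeps the free part in front and hands out the back part, matching the layout in the example. Sizes are in words, and `tag_init`, `tag_malloc`, and `tag_free` are hypothetical names.

```c
#include <stddef.h>

#define HEAP_WORDS 10  /* 8 data words plus 2 signatures, as in the example */

static int heap[HEAP_WORDS];

/* Write the same signature at both ends of the chunk whose first tag is at
   `start`; |sig| is the number of data words between the two tags. */
static void set_tags(int start, int sig) {
    int size = sig > 0 ? sig : -sig;
    heap[start] = sig;
    heap[start + size + 1] = sig;
}

/* Start with one free chunk covering the whole heap: +8 ... +8. */
void tag_init(void) {
    set_tags(0, HEAP_WORDS - 2);
}

/* Allocate n >= 1 data words using first fit. */
int *tag_malloc(int n) {
    for (int i = 0; i < HEAP_WORDS;) {
        int sig = heap[i];
        int size = sig > 0 ? sig : -sig;
        if (sig >= n) {                  /* a free chunk that is big enough */
            int rest = size - n - 2;     /* data words left if we split */
            if (rest >= 1) {             /* leftover is at least a 3-word chunk */
                set_tags(i, rest);       /* free part stays in front */
                int used = i + rest + 2;
                set_tags(used, -n);      /* allocated part at the back */
                return &heap[used + 1];
            }
            set_tags(i, -size);          /* too small to split: hand out all of it */
            return &heap[i + 1];
        }
        i += size + 2;                   /* skip to the next chunk */
    }
    return NULL;                         /* no big-enough chunk (sbrk would go here) */
}

/* Free a chunk: flip its sign and coalesce with free neighbours. */
void tag_free(int *p) {
    int start = (int)(p - heap) - 1;
    int size = -heap[start];
    int next = start + size + 2;
    if (next < HEAP_WORDS && heap[next] > 0)  /* following chunk is free */
        size += heap[next] + 2;
    if (start > 0 && heap[start - 1] > 0) {   /* preceding chunk is free */
        int prev = heap[start - 1];
        start -= prev + 2;
        size += prev + 2;
    }
    set_tags(start, size);               /* old inner tags need not be erased */
}
```

After `tag_init()`, `tag_malloc(2)` returns `&heap[7]` and leaves +4 … +4 in front; `tag_malloc(3)` then returns `&heap[1]` with the whole 4-word chunk. Freeing both coalesces the heap back into a single +8 chunk.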

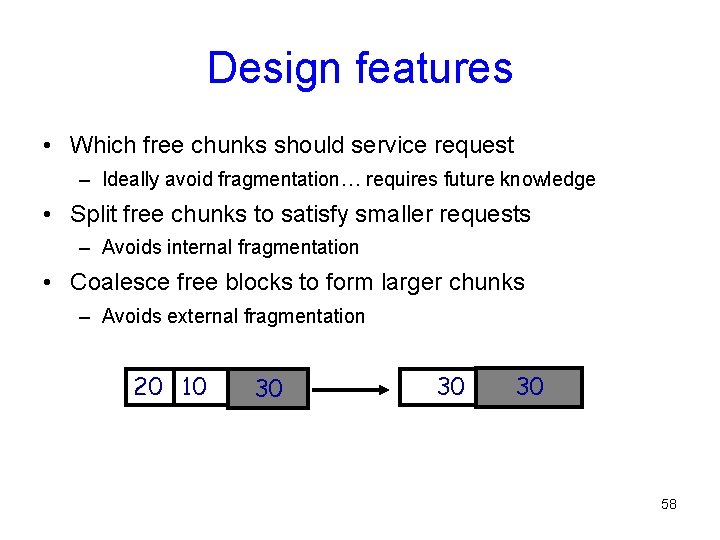

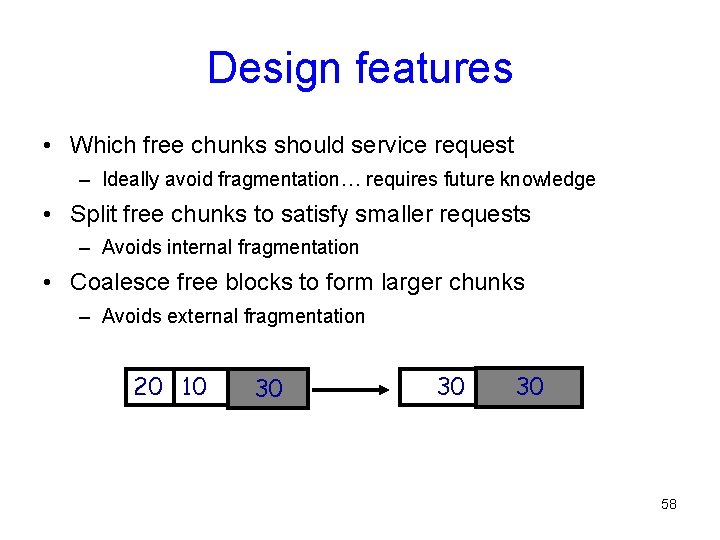

Design features
- Which free chunks should service a request?
  - Ideally avoid fragmentation… requires future knowledge
- Split free chunks to satisfy smaller requests
  - Avoids internal fragmentation
- Coalesce free blocks to form larger chunks
  - Avoids external fragmentation

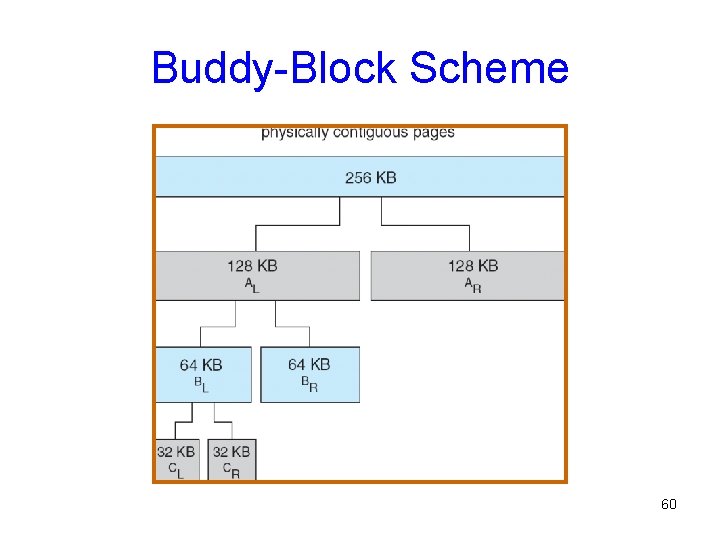

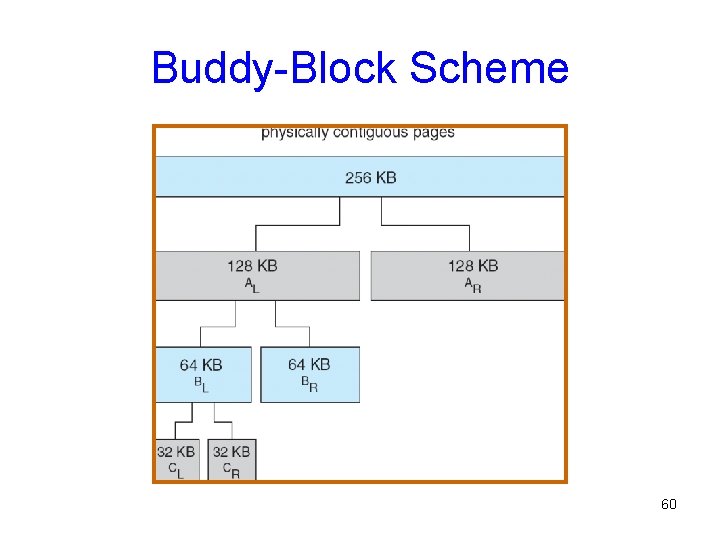

Buddy-Block Scheme
- Described by Donald Knuth (originally due to Markowitz and Knowlton); very simple
- Idea: work with memory regions that are all powers of 2 times some "smallest" size b
  - $2^k \times b$
- Round each request up to the form $b \times 2^k$

Buddy-Block Scheme

Buddy-Block Scheme
- Keep a free list for each block size (each k)
  - When freeing an object, combine it with the adjacent free region (its buddy) if this will result in a double-sized free object
- Basic actions on an allocation request:
  - If the request is a close fit to a region on the free list, allocate that region
  - If the request is less than half the size of a region on the free list, split the next larger size of region in half
  - If the request is larger than any region, double the size of the heap (this puts a new larger object on the free list)
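Two calculations sit at the heart of the scheme: rounding a request up to $b \times 2^k$, and finding a block's buddy when freeing. A minimal sketch, assuming block offsets are measured from a heap start aligned to the largest block size; the XOR trick is a common implementation technique, not something the slides specify, and `buddy_order` and `buddy_of` are hypothetical names.

```c
#include <stddef.h>

/* Smallest k such that b * 2^k >= request, where b is the smallest block size. */
unsigned buddy_order(size_t request, size_t b) {
    unsigned k = 0;
    size_t size = b;
    while (size < request) {
        size <<= 1;
        k++;
    }
    return k;
}

/* Offset of the buddy of the block of size b * 2^k starting at `offset`.
   Blocks of that size start at multiples of their size, so a block and
   its buddy differ only in the bit worth b * 2^k. */
size_t buddy_of(size_t offset, size_t b, unsigned k) {
    return offset ^ (b << k);
}
```

With b = 16, a 100-byte request is rounded up to 128 bytes (k = 3), and the 64-byte block at offset 192 has its buddy at offset 128; if that buddy is free, the two merge into a 128-byte block at offset 128.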

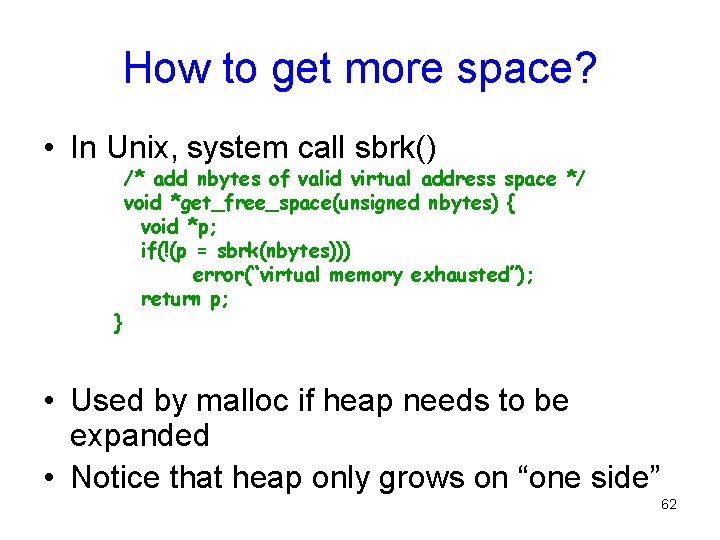

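How to get more space?
- In Unix, system call sbrk()

```c
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>

/* add nbytes of valid virtual address space */
void *get_free_space(unsigned nbytes) {
    void *p = sbrk(nbytes);
    if (p == (void *)-1) {  /* sbrk reports failure as (void *)-1, not NULL */
        fprintf(stderr, "virtual memory exhausted\n");
        exit(EXIT_FAILURE);
    }
    return p;
}
```

- Used by malloc if the heap needs to be expanded
- Notice that the heap only grows on "one side"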

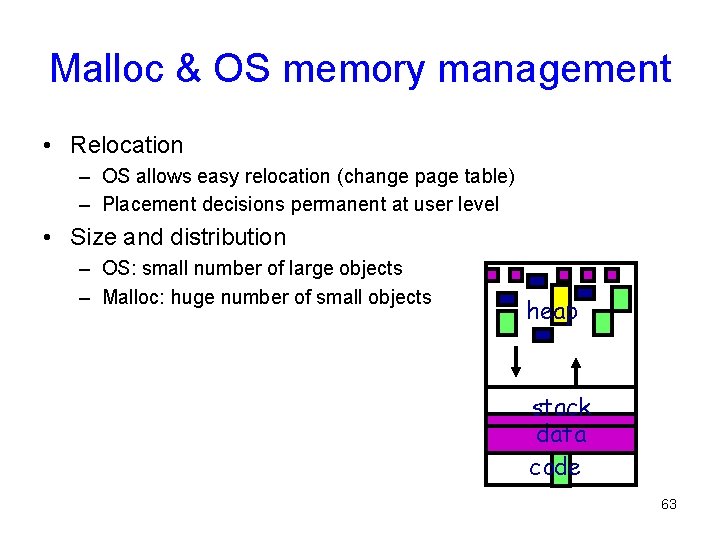

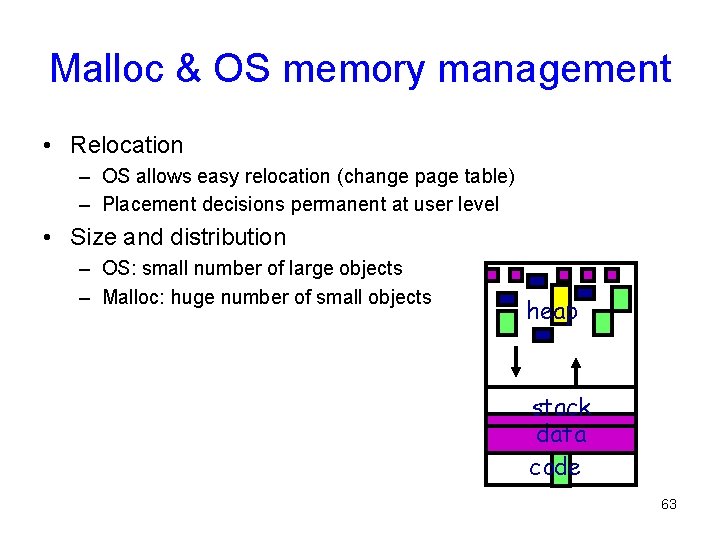

Malloc & OS memory management
- Relocation
  - OS allows easy relocation (change the page table)
  - Placement decisions are permanent at user level
- Size and distribution
  - OS: small number of large objects
  - Malloc: huge number of small objects

(Figure: process address space with code, data, heap, and stack regions.)

![Other Issues: Program Structure](https://slidetodoc.com/presentation_image_h2/a1145bfea0692da639d92091646e8a99/image-64.jpg)

Other Issues: Program Structure
- `int data[128][128];`
- Each row is stored in one page
- Program 1: `for (j = 0; j < 128; j++) for (i = 0; i < 128; i++) data[i][j] = 0;`
  - 128 × 128 = 16,384 page faults (if the process has fewer than 128 frames)
- Program 2: `for (i = 0; i < 128; i++) for (j = 0; j < 128; j++) data[i][j] = 0;`
  - 128 page faults
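A quick simulation reproduces both counts in the simplest case. It assumes each row fills exactly one page and, for simplicity, that the process has a single frame, so a fault occurs whenever the accessed row changes; `faults_one_frame` is a hypothetical name.

```c
#define N 128  /* int data[N][N]; each row assumed to fill exactly one page */

/* Count page faults for zeroing data[N][N] with only one frame available:
   a fault happens whenever the accessed row (page) differs from the resident one.
   column_major != 0 models Program 1 (i varies fastest); 0 models Program 2. */
long faults_one_frame(int column_major) {
    long faults = 0;
    int resident = -1;                   /* row currently in the single frame */
    for (int outer = 0; outer < N; outer++)
        for (int inner = 0; inner < N; inner++) {
            int row = column_major ? inner : outer;
            if (row != resident) {
                faults++;
                resident = row;
            }
        }
    return faults;
}
```

Program 1 touches a different row on every access and faults 16,384 times; Program 2 finishes each row before moving on and faults only 128 times.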

OS and Paging
- Process Creation:
  - Allocate space and initialize page table for program and data
  - Allocate and initialize swap area
  - Info about PT and swap space is recorded in process table
- Process Execution
  - Reset MMU for new process
  - Flush the TLB
  - Bring the process's pages into memory
- Page Faults
- Process Termination
  - Release pages