EECS 470 Lecture 24: Data Level Parallelism

- Slides: 31

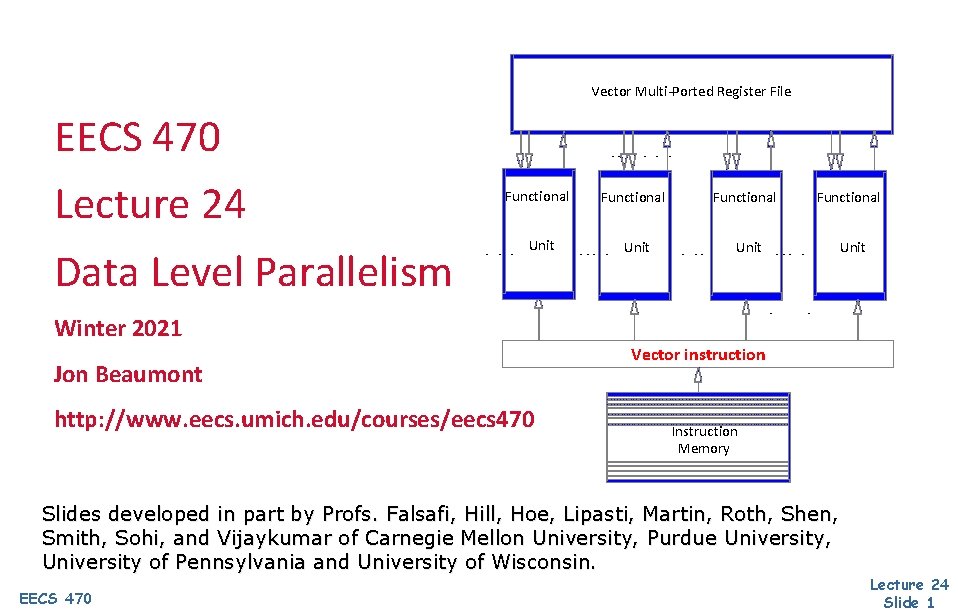

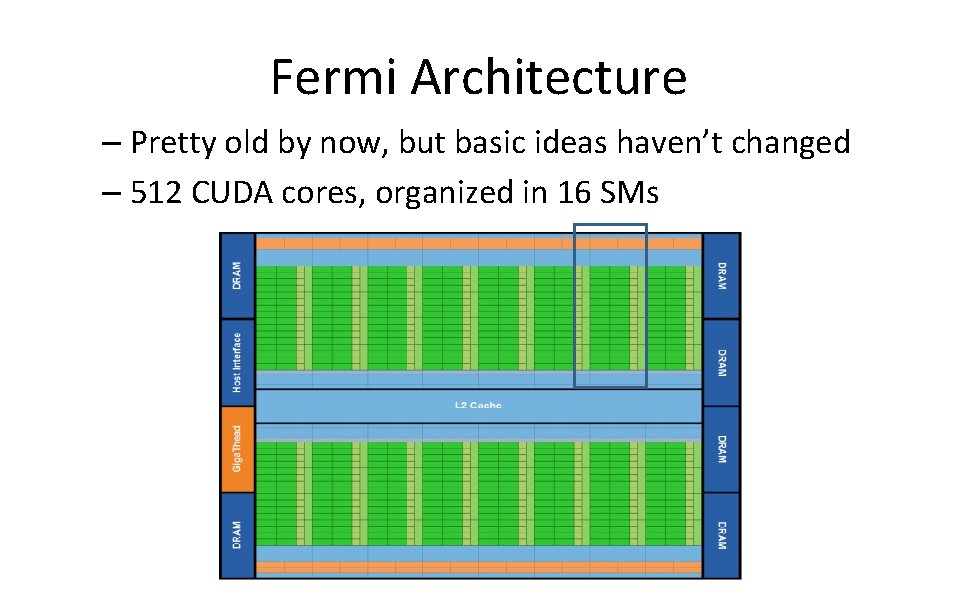

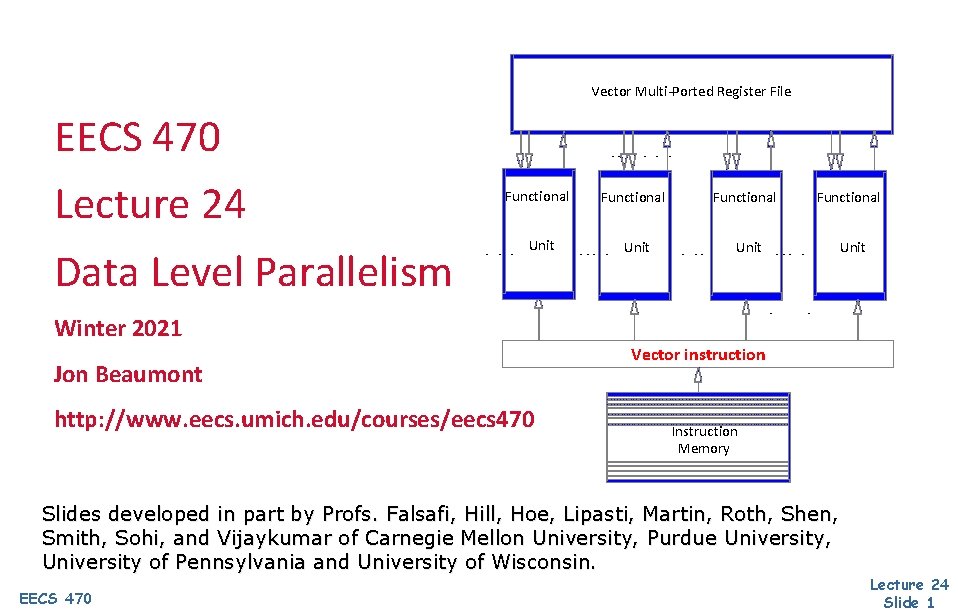

EECS 470 Lecture 24: Data Level Parallelism

Winter 2021, Jon Beaumont

http://www.eecs.umich.edu/courses/eecs470

(Title figure labels: Vector Multi-Ported Register File, Functional Unit, Vector instruction, Instruction Memory)

Slides developed in part by Profs. Falsafi, Hill, Hoe, Lipasti, Martin, Roth, Shen, Smith, Sohi, and Vijaykumar of Carnegie Mellon University, Purdue University, University of Pennsylvania and University of Wisconsin.

Announcements

Project due Friday
- Don't panic
- Make sure output is the same as P3 (but with different cycles and CPI values; the number of instructions should be the same!)
- Delete $dumpvars

Presentations
- You can either present live on Tuesday
- Or submit a prerecorded video
- ~8 minutes, meant to be pretty casual

Announcements

Written report due next Monday night / Tuesday morning
- Describe the project, what you've implemented, and how you tested your design (7%)
- In-depth analysis of your design and its performance (10%)
- See spec and Piazza post for more details
- Extra credit: extra test case (up to 2%)

Homework #5 due next Tuesday
- Dropping lowest assignment

Announcements

Final Exam: W 4/28, 8-10 am
- Administered on Gradescope, like the midterm
- Will focus on material after the midterm (memory disambiguation and onwards)
- Although anything is fair game
- Will likely be a Verilog question as well
- Sample exams posted

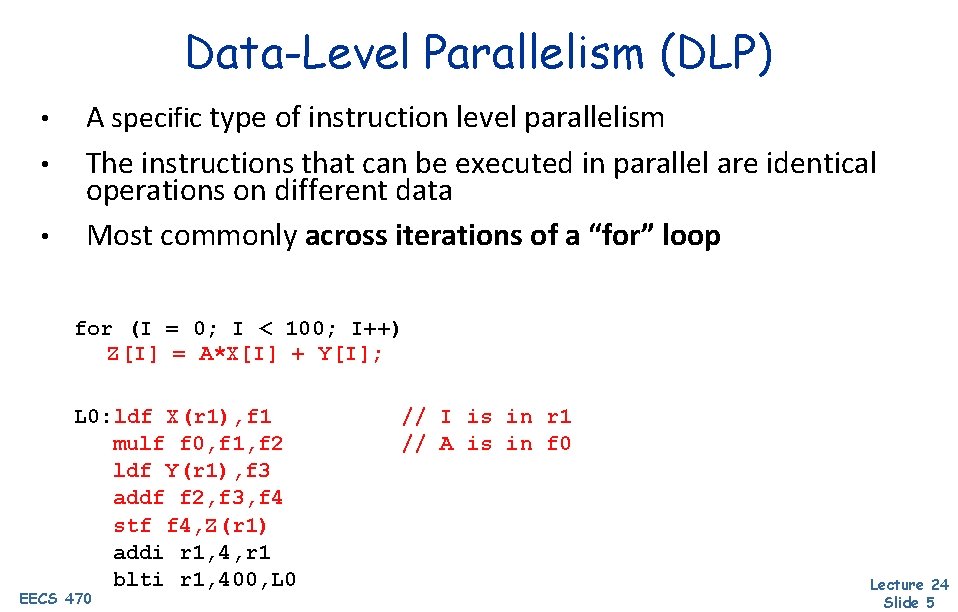

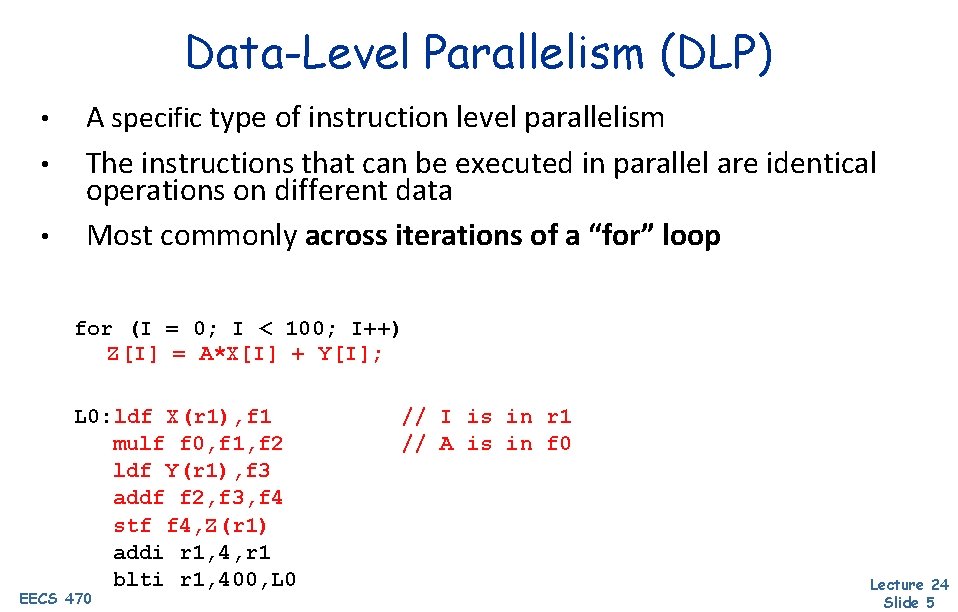

Data-Level Parallelism (DLP)

- A specific type of instruction level parallelism
- The instructions that can be executed in parallel are identical operations on different data
- Most commonly across iterations of a "for" loop

C loop:

    for (I = 0; I < 100; I++)
        Z[I] = A*X[I] + Y[I];

Scalar assembly:

    L0: ldf  X(r1), f1      // I is in r1
        mulf f0, f1, f2     // A is in f0
        ldf  Y(r1), f3
        addf f2, f3, f4
        stf  f4, Z(r1)
        addi r1, 4, r1
        blti r1, 400, L0

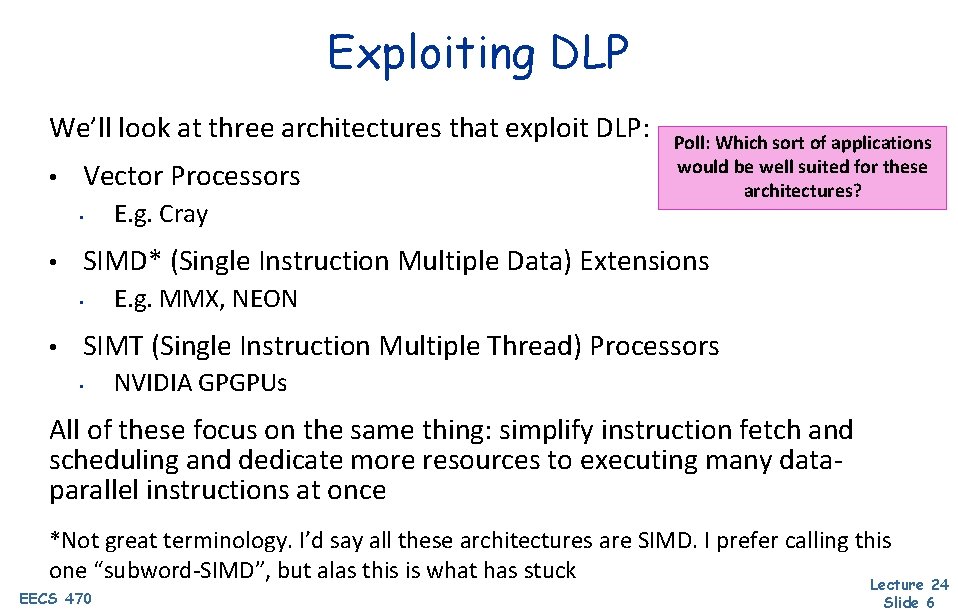

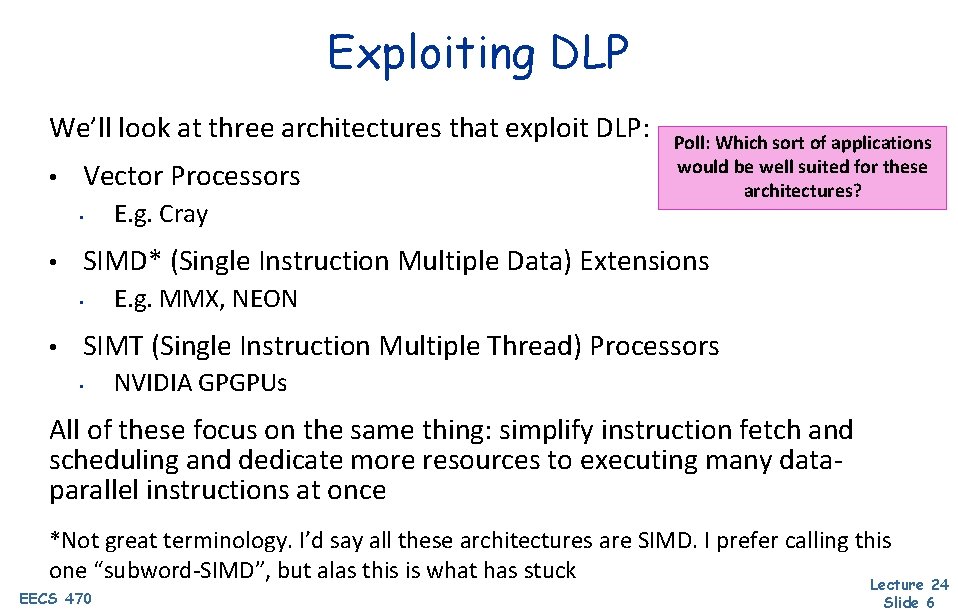

Exploiting DLP

We'll look at three architectures that exploit DLP:
- Vector Processors
  - E.g. Cray
- SIMD* (Single Instruction Multiple Data) Extensions
  - E.g. MMX, NEON
- SIMT (Single Instruction Multiple Thread) Processors
  - NVIDIA GPGPUs

Poll: Which sort of applications would be well suited for these architectures?

All of these focus on the same thing: simplify instruction fetch and scheduling, and dedicate more resources to executing many data-parallel instructions at once.

*Not great terminology. I'd say all these architectures are SIMD. I prefer calling this one "subword-SIMD", but alas this is what has stuck.

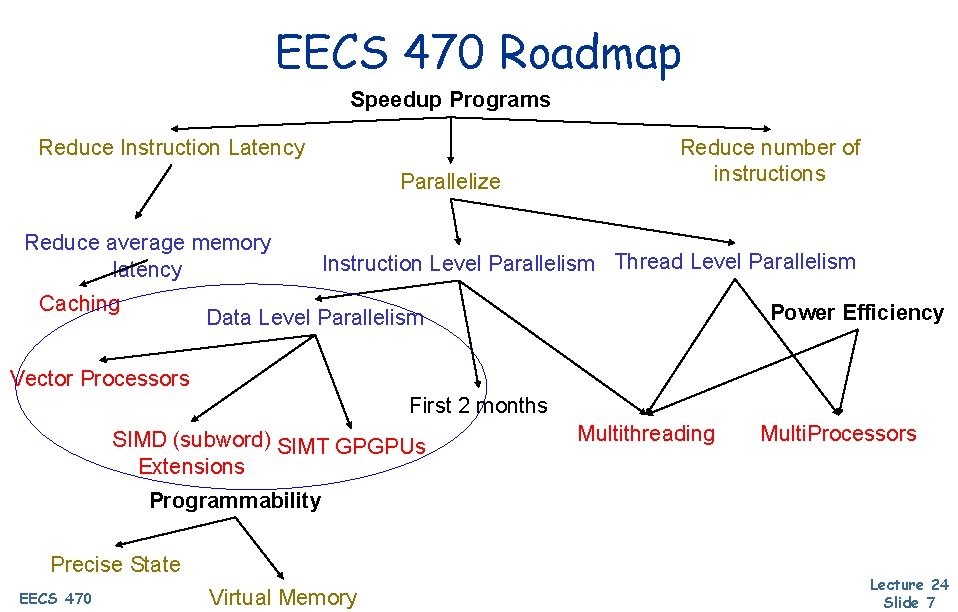

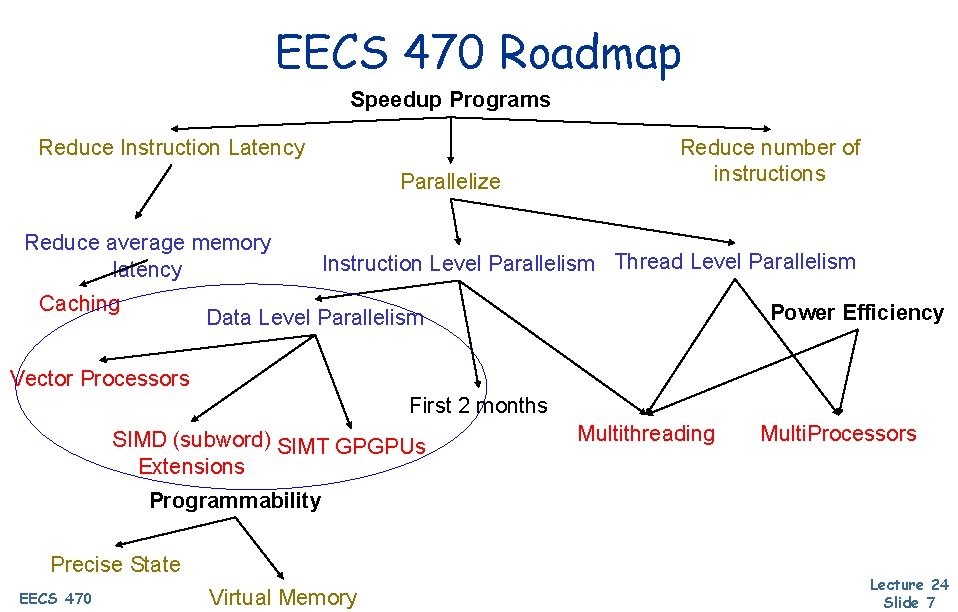

EECS 470 Roadmap (diagram)

Labels recoverable from the figure:
- Speedup Programs
  - Reduce Instruction Latency
  - Reduce average memory latency: Caching
  - Reduce number of instructions
  - Parallelize
    - Instruction Level Parallelism
    - Thread Level Parallelism: Multithreading, Multiprocessors
    - Data Level Parallelism: Vector Processors, SIMD (subword) Extensions, SIMT GPGPUs
- Power Efficiency
- Programmability: Precise State, Virtual Memory
- Annotation: "First 2 months"

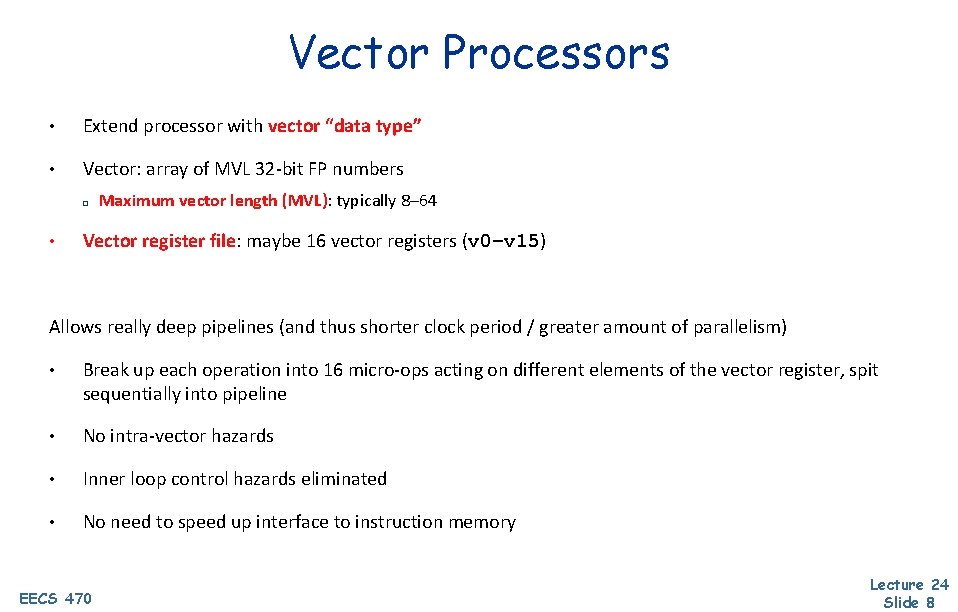

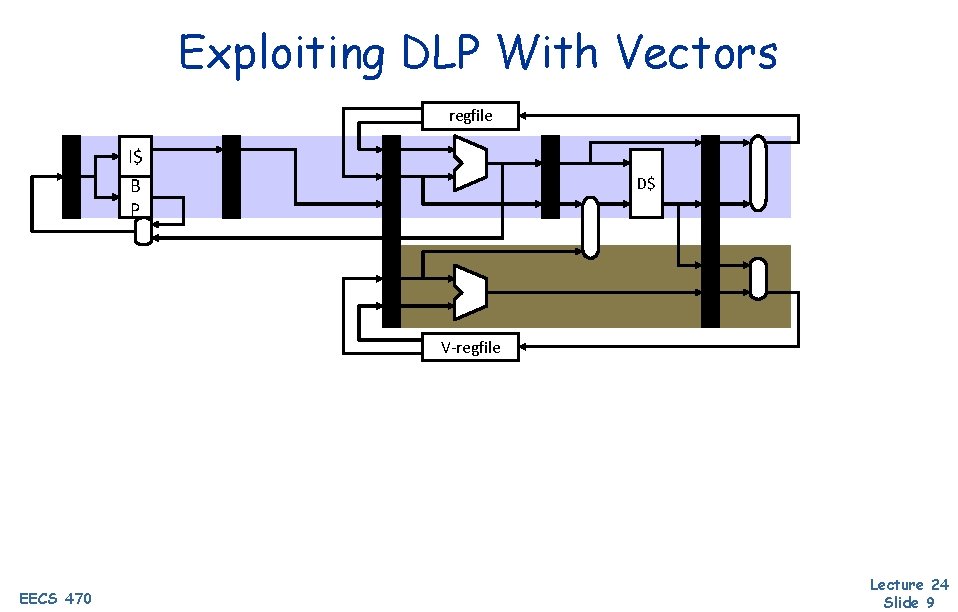

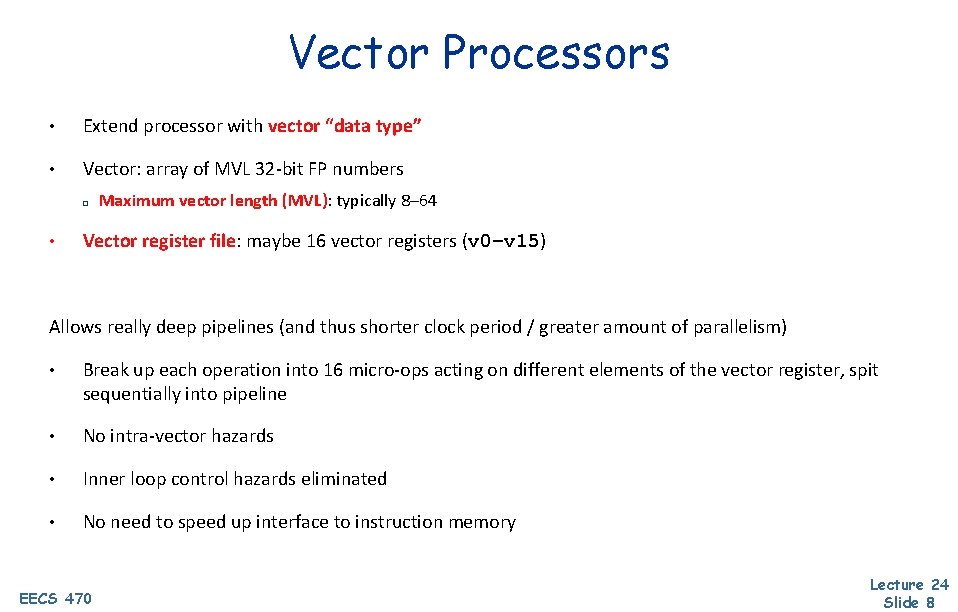

Vector Processors

- Extend processor with vector "data type"
- Vector: array of MVL 32-bit FP numbers
  - Maximum vector length (MVL): typically 8–64
- Vector register file: maybe 16 vector registers (v0–v15)
- Allows really deep pipelines (and thus shorter clock period / greater amount of parallelism)
  - Break up each operation into 16 micro-ops acting on different elements of the vector register, fed sequentially into the pipeline
  - No intra-vector hazards
  - Inner loop control hazards eliminated
  - No need to speed up interface to instruction memory

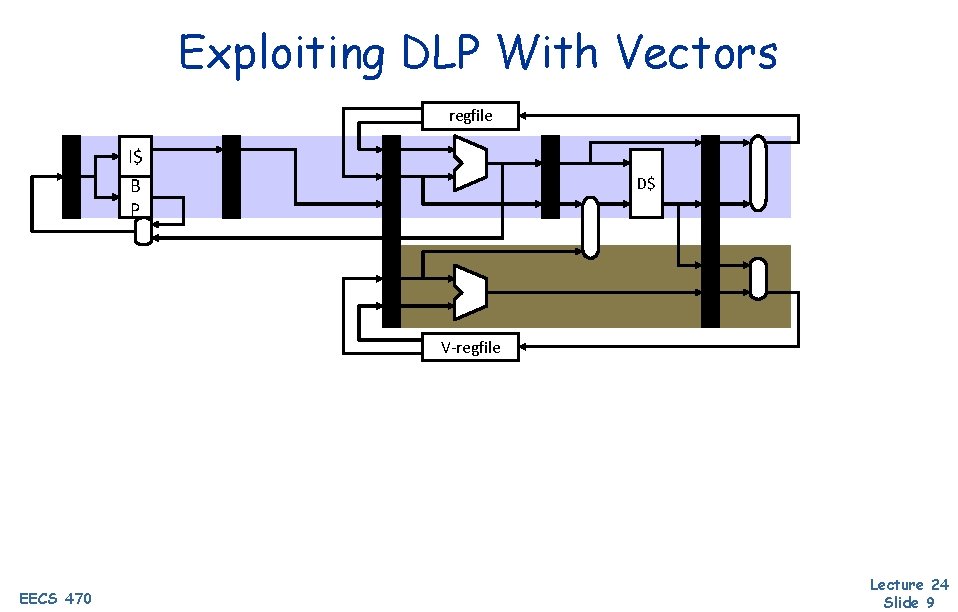

Exploiting DLP With Vectors

(Pipeline diagram. Labels: I$, BP, regfile, D$, V-regfile)

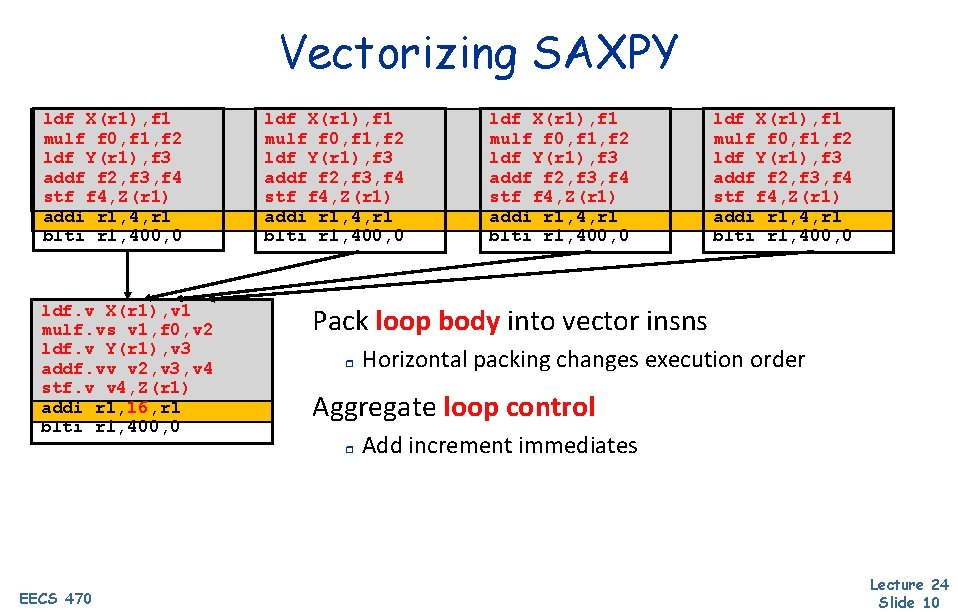

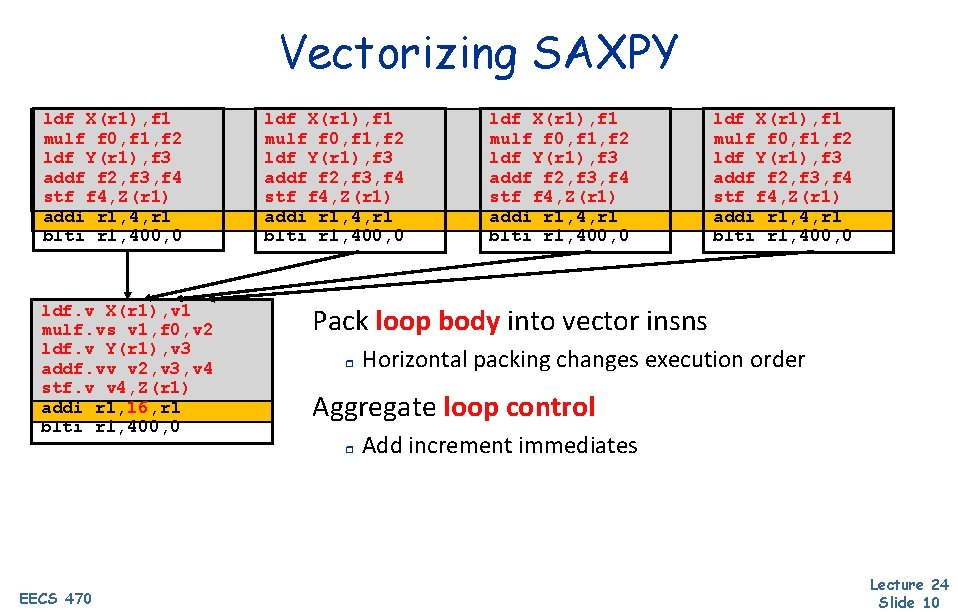

Vectorizing SAXPY

Scalar loop body (shown four times on the slide, one copy per iteration being packed):

    ldf  X(r1), f1
    mulf f0, f1, f2
    ldf  Y(r1), f3
    addf f2, f3, f4
    stf  f4, Z(r1)
    addi r1, 4, r1
    blti r1, 400, 0

Vector version:

    ldf.v   X(r1), v1
    mulf.vs v1, f0, v2
    ldf.v   Y(r1), v3
    addf.vv v2, v3, v4
    stf.v   v4, Z(r1)
    addi    r1, 16, r1
    blti    r1, 400, 0

- Pack loop body into vector insns
  - Horizontal packing changes execution order
- Aggregate loop control
  - Add increment immediates

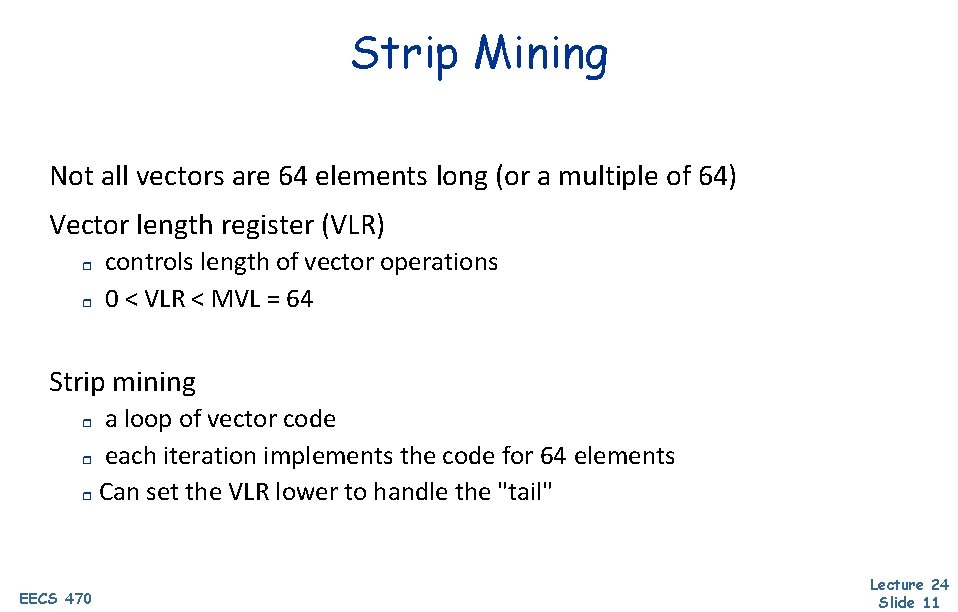

Strip Mining

Not all vectors are 64 elements long (or a multiple of 64)

Vector length register (VLR)
- Controls length of vector operations
- 0 < VLR ≤ MVL = 64

Strip mining a loop of vector code
- Each iteration implements the code for 64 elements
- Can set the VLR lower to handle the "tail"
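A minimal C sketch of strip mining, assuming MVL = 64 as on the slide. The inner function stands in for one group of vector instructions executed with a given VLR; the names `saxpy_strip` and `saxpy_strip_mined` are hypothetical, and this version handles the tail in the last strip (real code may handle it first).

```c
#include <assert.h>
#include <stddef.h>

#define MVL 64 /* maximum vector length, as on the slide */

/* Stand-in for one vector SAXPY executed with VLR = vlr. */
static void saxpy_strip(float a, const float *x, const float *y,
                        float *z, size_t vlr)
{
    assert(vlr > 0 && vlr <= MVL);
    for (size_t i = 0; i < vlr; i++)
        z[i] = a * x[i] + y[i];
}

/* Strip-mined loop over n elements; returns the number of strips run. */
size_t saxpy_strip_mined(float a, const float *x, const float *y,
                         float *z, size_t n)
{
    size_t strips = 0;
    for (size_t base = 0; base < n; base += MVL) {
        size_t remaining = n - base;
        /* Full strips use VLR = MVL; the tail uses a smaller VLR. */
        size_t vlr = remaining < MVL ? remaining : MVL;
        saxpy_strip(a, x + base, y + base, z + base, vlr);
        strips++;
    }
    return strips;
}
```

For n = 150 this runs three strips with VLR = 64, 64, and 22.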

Other Vector Operations

Use masked vector register for vectorizing "if" statements
- A mask specifies which vector elements are operated on
- Can set the mask using logical compares (sltsv v1, f0)

Scatter/gather
- Used for sparse arrays/matrices
- Using an index vector, gather elements into a vector register
- Operate on the vector
- Put the vector back
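The two ideas above can be sketched in scalar C, where each loop stands in for a single vector instruction. The function names are hypothetical, and the compare is assumed to set a mask bit where the vector element is less than the scalar.

```c
#include <assert.h>
#include <stddef.h>

/* Vector-scalar compare: mask[i] = 1 where v[i] < s, else 0. */
void set_mask_lt(const float *v, float s, unsigned char *mask, size_t vl)
{
    for (size_t i = 0; i < vl; i++)
        mask[i] = v[i] < s;
}

/* Masked add: only elements whose mask bit is set are written. */
void masked_add(const float *x, const float *y, float *z,
                const unsigned char *mask, size_t vl)
{
    for (size_t i = 0; i < vl; i++)
        if (mask[i])
            z[i] = x[i] + y[i];
}

/* Gather: vreg[i] = src[index[i]]. */
void gather(const float *src, size_t src_len, const size_t *index,
            float *vreg, size_t vl)
{
    for (size_t i = 0; i < vl; i++) {
        assert(index[i] < src_len);
        vreg[i] = src[index[i]];
    }
}

/* Scatter: dst[index[i]] = vreg[i]. */
void scatter(float *dst, size_t dst_len, const size_t *index,
             const float *vreg, size_t vl)
{
    for (size_t i = 0; i < vl; i++) {
        assert(index[i] < dst_len);
        dst[index[i]] = vreg[i];
    }
}
```

A sparse update is then gather, operate on the dense vector register, and scatter with the same index vector.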

Vector Performance

Where does it come from?
- (+) Much higher parallelism than traditional pipelines
- (+) Fewer loop control insns: addi, blt, etc.
  - Vector insns contain implicit loop control
- (+) RAW stalls taken only once, on "first iteration"
  - Vector pipelines hide stalls of "subsequent iterations"
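As a rough illustration of the instruction-count saving, here is a small C sketch that counts dynamic instructions for the SAXPY loop from the earlier slides. It assumes each vector instruction covers four 4-byte elements, which is what the 16-byte address increment in the vectorized loop suggests; the function name `dynamic_insns` is hypothetical.

```c
#include <assert.h>

/* Dynamic instruction count for a loop over n elements whose body has
 * body_insns instructions and processes elems_per_iter elements. */
unsigned long dynamic_insns(unsigned long n, unsigned long body_insns,
                            unsigned long elems_per_iter)
{
    assert(elems_per_iter > 0);
    /* Round up so a partial final iteration is still counted. */
    unsigned long iters = (n + elems_per_iter - 1) / elems_per_iter;
    return iters * body_insns;
}
```

With 100 elements and a 7-instruction body, the scalar loop executes 100 x 7 = 700 instructions, while the vector loop under this assumption executes 25 x 7 = 175.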

Moving beyond Vector Processors

- More popular back when area was at a premium and power was not
  - Only size overhead was extra pipeline registers
  - Can make clock frequency really fast
- Today, area isn't much of a concern, but power is
  - We don't like speeding up the clock too much; it consumes a lot of power
- More modern approaches to DLP focus on wider execution at a lower clock frequency

SIMD Extensions

To maximize computational throughput for DLP-heavy applications, ideally:
- Fetch/decode one instruction
- Dispatch many instances of that instruction with different data across many functional units

Many modern processors already have a very wide functional unit:
- Modern floating point functional units are 128-256 bits
- Don't necessarily need a completely new pipeline like vector processors

Let's chop it up and execute multiple 8, 16, 32 or whatever-sized elements in parallel!

MMX

Peleg & Weiser, IEEE Micro, August 1996

Goal: 2x perf. in multimedia (audio, video, etc.)

Key: Single Instruction Multiple Data (SIMD)
- Used in the past to build large-scale supercomputers
- One instruction computes multiple data simultaneously

Has since been extended by Streaming SIMD Extensions (SSE, 1999) and Advanced Vector Extensions (AVX, 2008)

MMX: Intro

- Most multimedia apps work on short integers
- Pack 8-bit or 16-bit data into 64-bit words
- Operate on packed data like short vectors
  - SIMD (around since Livermore S-1, 30 years ago!)
- Integrate into x86 FP registers
- Can improve performance by 8x (in theory)
  - In reality performance is typically better than 2x

MMX: Datatypes

Native datatypes smaller than usual
- E.g., 8-bit pixels, 16-bit audio samples

Datatypes packed or compressed into 64 bits

Many options:
- 1 x 64-bit quad-word
- 2 x 32-bit double-words
- 4 x 16-bit words
- 8 x 8-bit bytes

MMX: Enhanced Instructions

E.g., addb (for byte)

      17   87  100  ... (5 more)
    + 17   13  200  ...
    ----------------------
      34  100   44  ...

- Plain addb wraps around: (100 + 200) mod 256 = 44
- Saturating arithmetic clamps the result instead (255 for an unsigned byte)
  - Needed by multimedia algorithms
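The difference between wraparound and saturating byte adds can be sketched in portable C on a 64-bit word holding eight unsigned bytes. This is an emulation, not MMX intrinsics, and the function names are hypothetical; on real MMX the corresponding instructions are PADDB (wraparound) and PADDUSB (unsigned saturating).

```c
#include <assert.h>
#include <stdint.h>

/* Extract byte lane i (0 = least significant) from a packed word. */
uint8_t get_lane(uint64_t v, int i)
{
    assert(i >= 0 && i < 8);
    return (uint8_t)(v >> (8 * i));
}

/* Lane-wise add of eight unsigned bytes, wrapping modulo 256. */
uint64_t addb_wrap(uint64_t a, uint64_t b)
{
    uint64_t r = 0;
    for (int i = 0; i < 8; i++) {
        uint8_t s = (uint8_t)(get_lane(a, i) + get_lane(b, i));
        r |= (uint64_t)s << (8 * i);
    }
    return r;
}

/* Lane-wise add of eight unsigned bytes, clamping each lane at 255. */
uint64_t addb_sat(uint64_t a, uint64_t b)
{
    uint64_t r = 0;
    for (int i = 0; i < 8; i++) {
        unsigned s = (unsigned)get_lane(a, i) + get_lane(b, i);
        if (s > 255)
            s = 255;
        r |= (uint64_t)s << (8 * i);
    }
    return r;
}
```

Using the slide's operands, the third lane (100 + 200) comes out as 44 with `addb_wrap` and 255 with `addb_sat`. For pixel data the clamped result is usually what you want, since a bright pixel should stay bright rather than wrap to dark.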

MMX: Performance Example

16-element dot product: [a1 a2 a3 ... a16] x [b1 b2 b3 ... b16] = a1 x b1 + ... + a16 x b16

Intel + few optimizations (compiler + hardware):
- 32 loads
- 16 multiplies
- 15 adds
- 12 loop control
- Total: 75 instructions

MMX using 16-bit data: 16 instructions

Aside: SVE

- Developed by ARM recently
- Allows the hardware implementation to decide the physical vector length
- Code is written "vector-length agnostically" (VLA)
- Can run on any implementation, even with different vector lengths

Scalable Hardware Vectors

- Scalable Vector Extension (SVE)
- Extends Advanced SIMD (NEON) beyond 128-bit vectors
- NEON designed for DSP, media codecs, etc.
  - Fixed vector sizes (128 bits)
  - Simple control flow
  - Regular, contiguous data structures
- SVE extends to HPC applications
  - Longer, per-implementation vector sizes (128-2048 bits)
  - Complex control flow
  - Irregular data structures

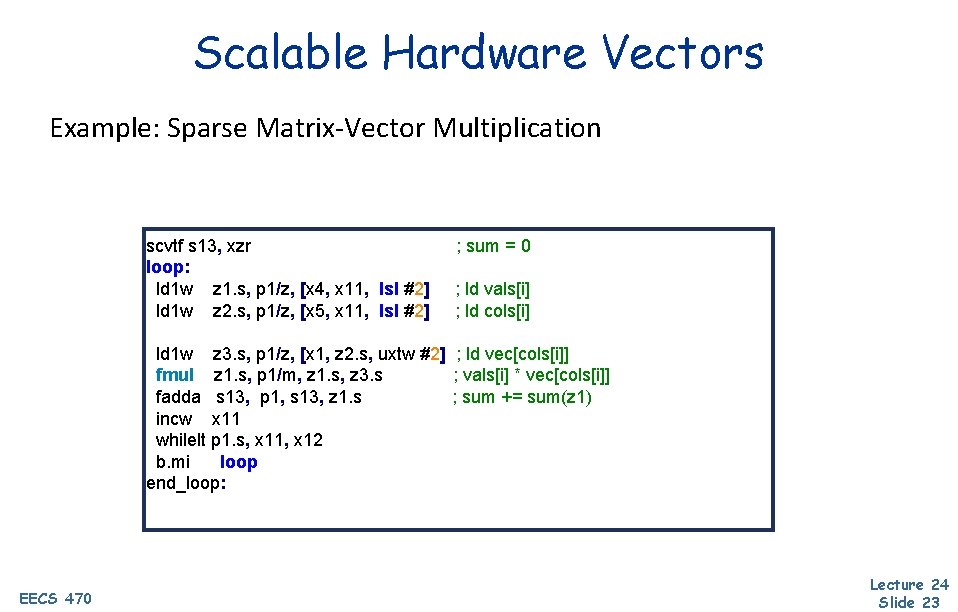

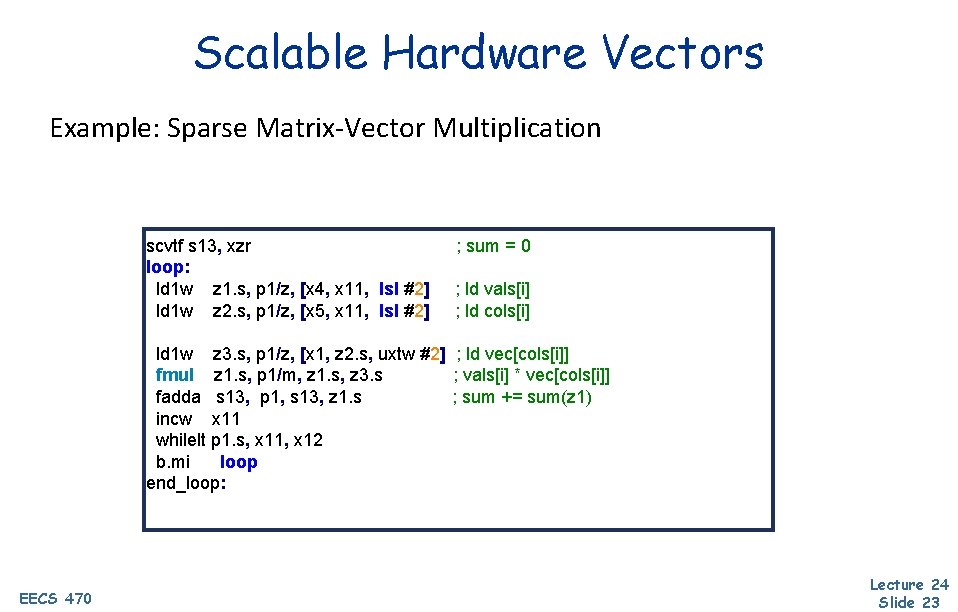

Scalable Hardware Vectors

Example: Sparse Matrix-Vector Multiplication

        scvtf   s13, xzr                          ; sum = 0
    loop:
        ld1w    z1.s, p1/z, [x4, x11, lsl #2]     ; ld vals[i]
        ld1w    z2.s, p1/z, [x5, x11, lsl #2]     ; ld cols[i]
        ld1w    z3.s, p1/z, [x1, z2.s, uxtw #2]   ; ld vec[cols[i]]
        fmul    z1.s, p1/m, z1.s, z3.s            ; vals[i] * vec[cols[i]]
        fadda   s13, p1, s13, z1.s                ; sum += sum(z1)
        incw    x11
        whilelt p1.s, x11, x12
        b.mi    loop
    end_loop:
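To make the vector-length-agnostic structure of the loop above concrete, here is a scalar C sketch of the same computation for one sparse row. The hardware vector length is passed in as a run-time parameter `vl` (an assumption standing in for whatever length the implementation provides), and the per-lane bounds check plays the role of the `whilelt` predicate. The function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Dot product of one sparse row with a dense vector:
 * sum of vals[i] * vec[cols[i]] for i in [0, n). */
float spmv_row_vla(const float *vals, const uint32_t *cols,
                   const float *vec, size_t n, size_t vl)
{
    assert(vl > 0);
    float sum = 0.0f;
    for (size_t base = 0; base < n; base += vl) {  /* like incw x11 */
        for (size_t lane = 0; lane < vl; lane++) {
            size_t i = base + lane;
            if (i < n)                             /* predicate: lane active */
                sum += vals[i] * vec[cols[i]];     /* in-order accumulate */
        }
    }
    return sum;
}
```

Because inactive lanes are simply skipped and the accumulation stays in element order, the result does not depend on `vl`, which is the point of writing the code once for any vector length.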

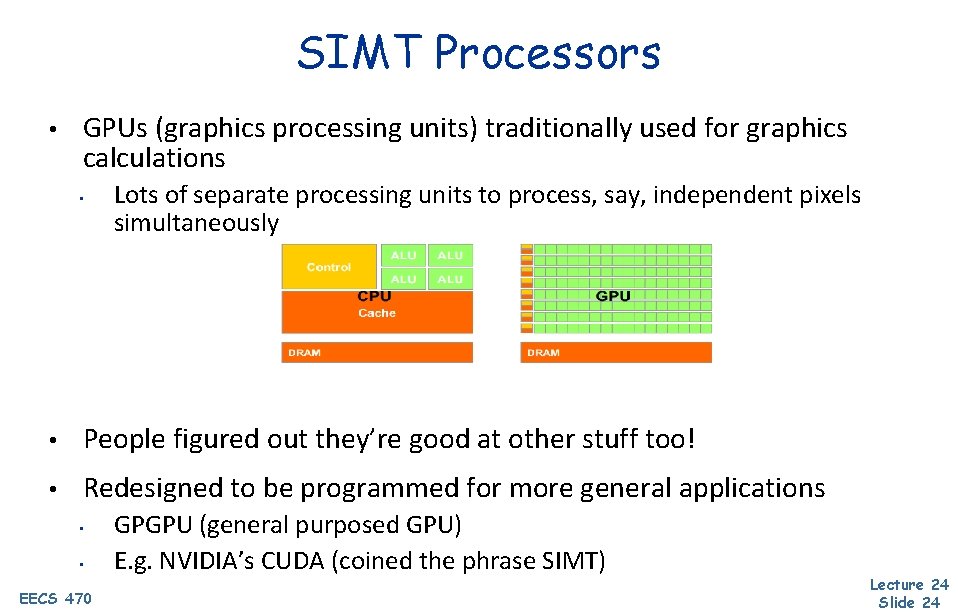

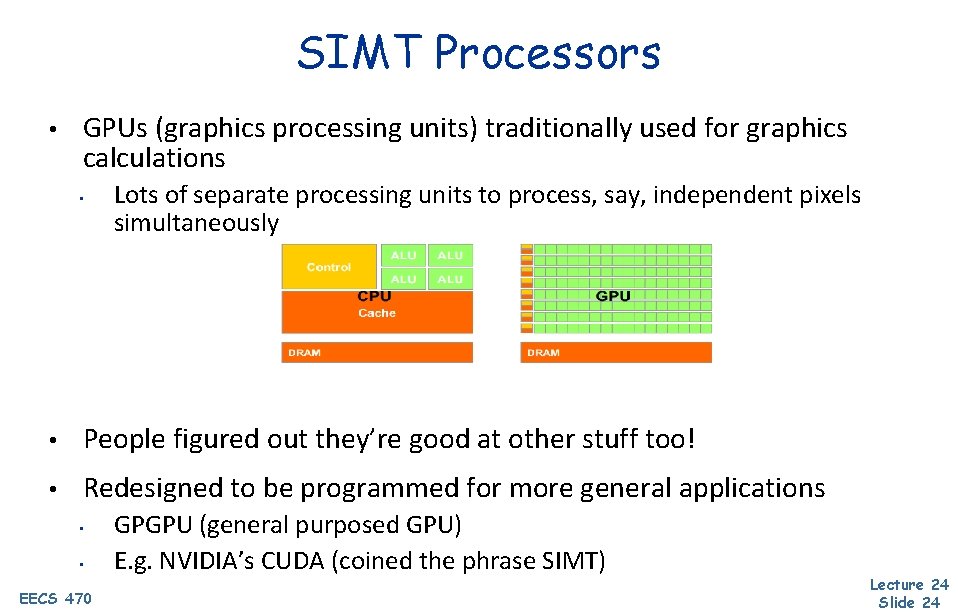

SIMT Processors

- GPUs (graphics processing units) traditionally used for graphics calculations
  - Lots of separate processing units to process, say, independent pixels simultaneously
- People figured out they're good at other stuff too!
- Redesigned to be programmed for more general applications
  - GPGPU (general-purpose GPU)
  - E.g. NVIDIA's CUDA (coined the phrase SIMT)

SIMT

Single Instruction Multiple Thread

Basically, combine:
- SIMD
- Multicore
- Multithreading

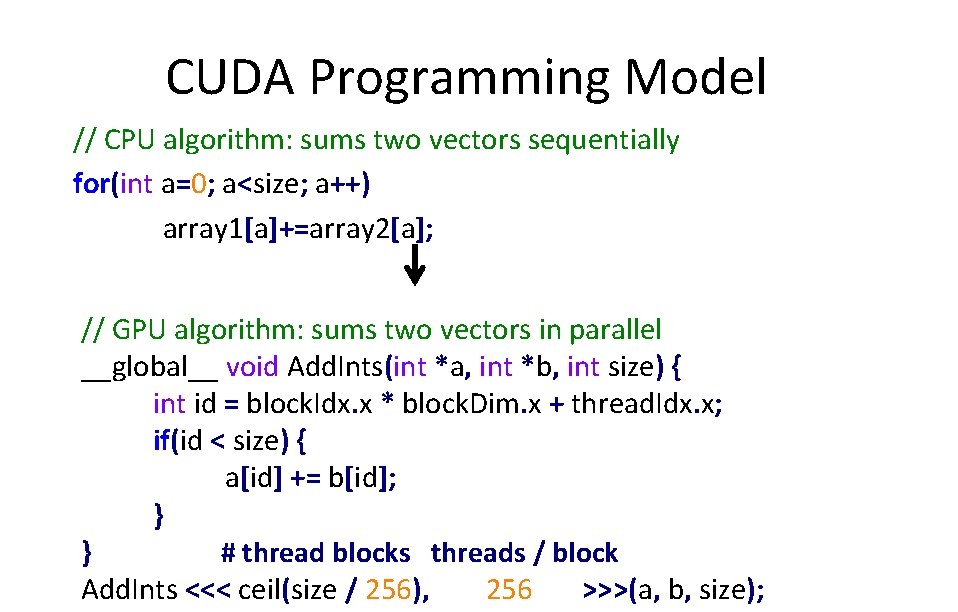

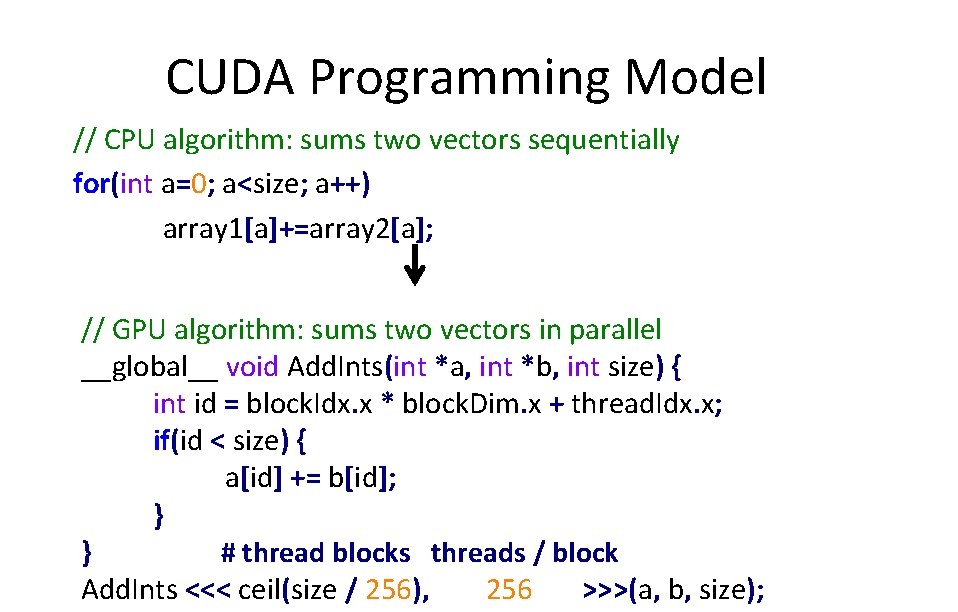

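CUDA Programming Model

    // CPU algorithm: sums two vectors sequentially
    for (int a = 0; a < size; a++)
        array1[a] += array2[a];

    // GPU algorithm: sums two vectors in parallel
    __global__ void AddInts(int *a, int *b, int size)
    {
        // Global index of this thread across all blocks
        int id = blockIdx.x * blockDim.x + threadIdx.x;
        // Threads past the end of the array (in the last block) do nothing
        if (id < size) {
            a[id] += b[id];
        }
    }

    // First launch parameter: # thread blocks, rounded up so every element is covered
    // Second launch parameter: threads / block
    AddInts<<<(size + 255) / 256, 256>>>(a, b, size);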
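The index arithmetic in the kernel can be checked on a CPU with a plain C emulation, where two nested loops stand in for the grid of blocks and the threads in each block. This is only a sketch of the mapping, not how a GPU schedules threads, and the function names are hypothetical. Note that integer division truncates, so the block count has to be rounded up explicitly.

```c
#include <assert.h>

/* Number of thread blocks needed to cover size elements (rounds up). */
int blocks_needed(int size, int threads_per_block)
{
    assert(threads_per_block > 0);
    return (size + threads_per_block - 1) / threads_per_block;
}

/* CPU emulation of the launch: one loop iteration per (block, thread). */
void add_ints_emulated(int *a, const int *b, int size, int threads_per_block)
{
    int blocks = blocks_needed(size, threads_per_block);
    for (int block = 0; block < blocks; block++) {
        for (int thread = 0; thread < threads_per_block; thread++) {
            /* Same formula as blockIdx.x * blockDim.x + threadIdx.x */
            int id = block * threads_per_block + thread;
            if (id < size)          /* guard for the partial last block */
                a[id] += b[id];
        }
    }
}
```

For size = 1000 and 256 threads per block, four blocks are needed; plain `1000 / 256` would give three and leave the last 232 elements untouched.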

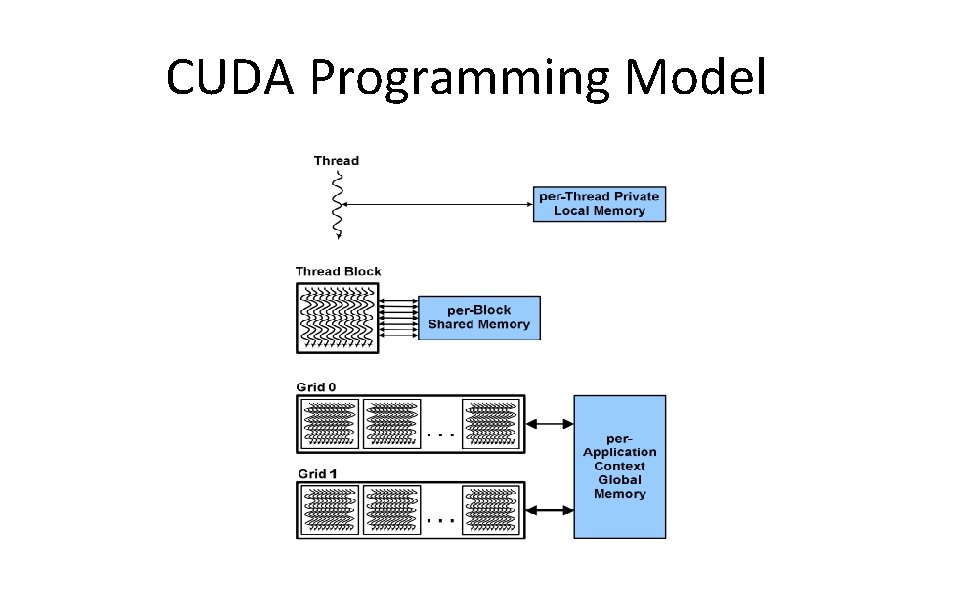

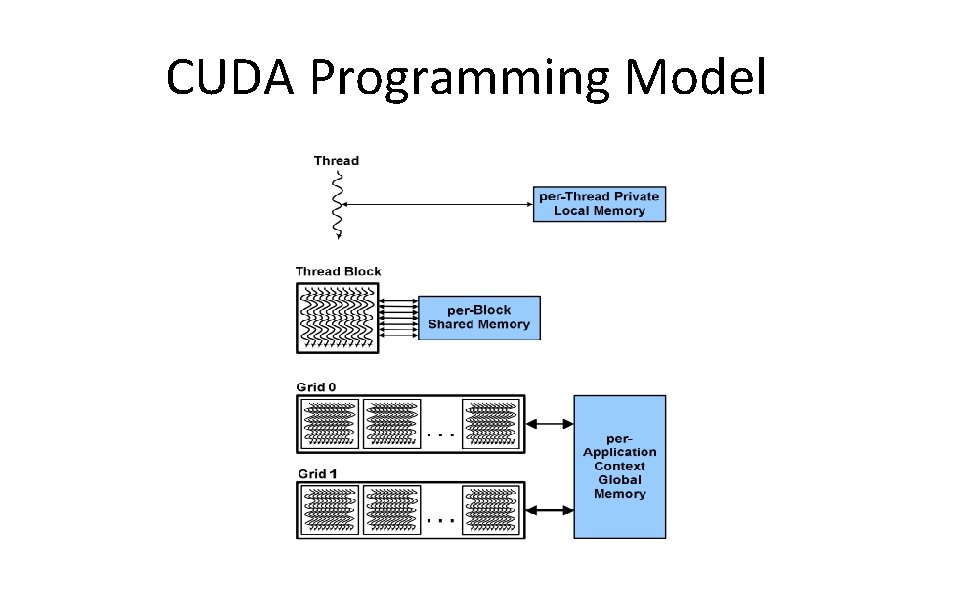

CUDA Programming Model

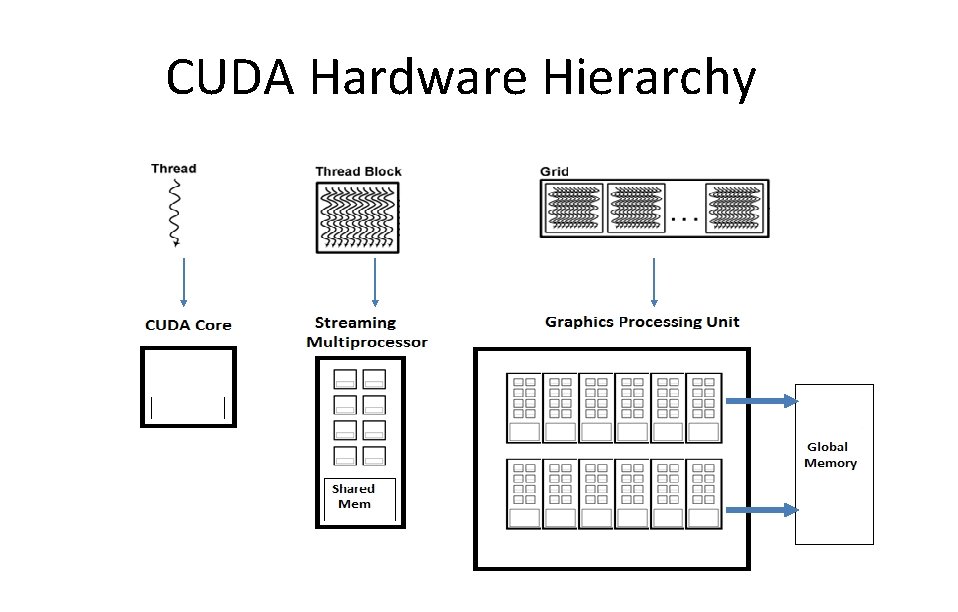

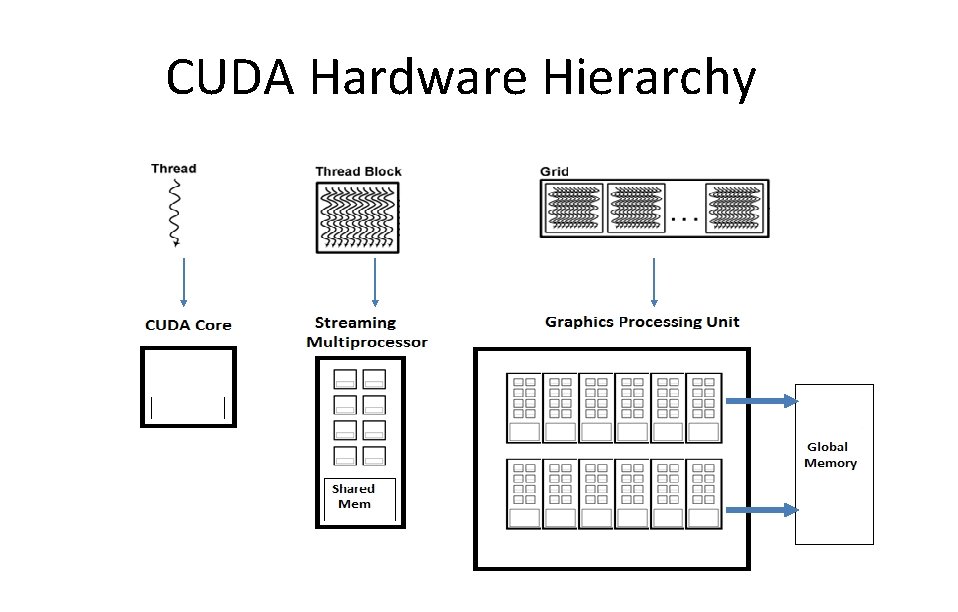

CUDA Hardware Hierarchy

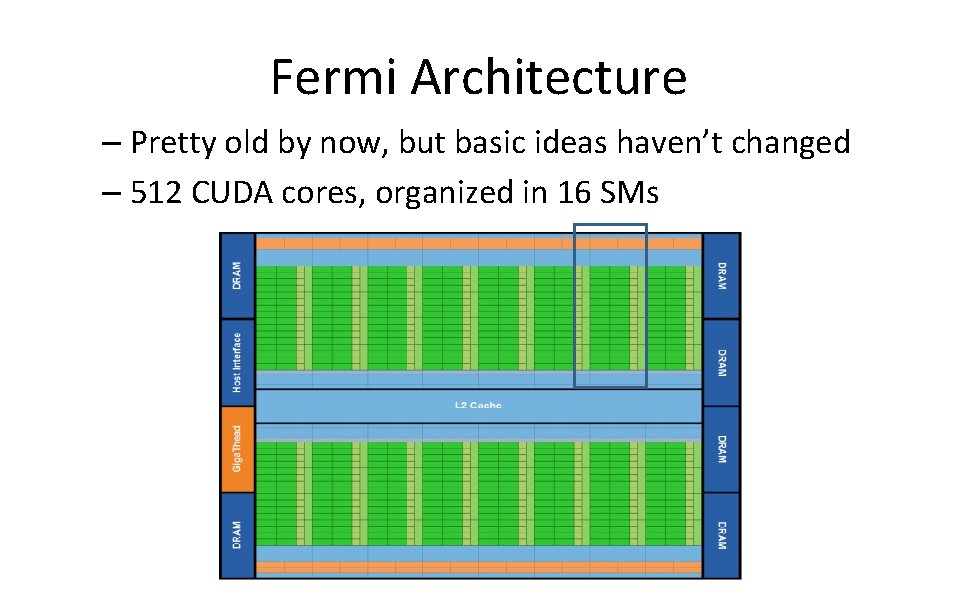

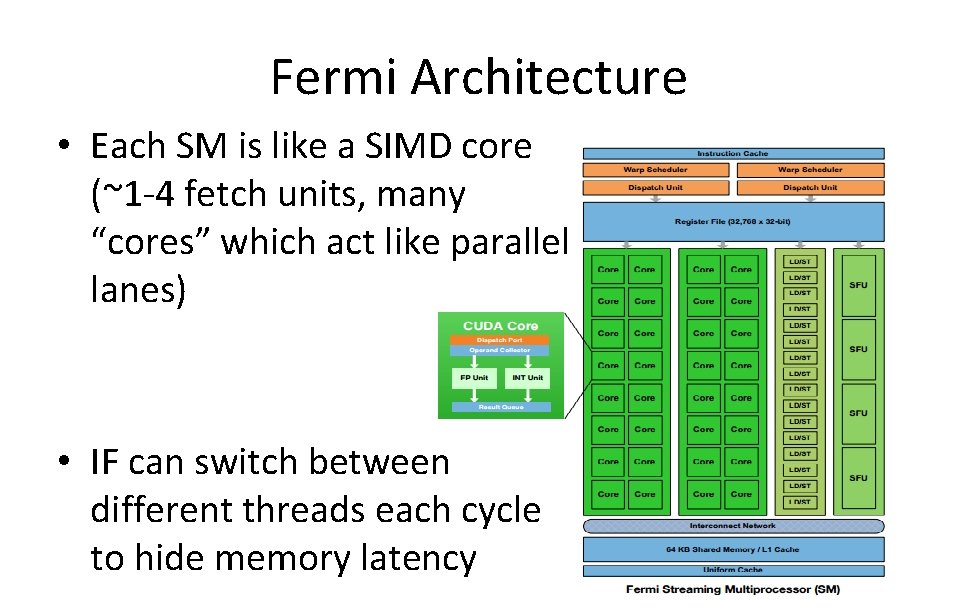

Fermi Architecture

- Pretty old by now, but basic ideas haven't changed
- 512 CUDA cores, organized in 16 SMs

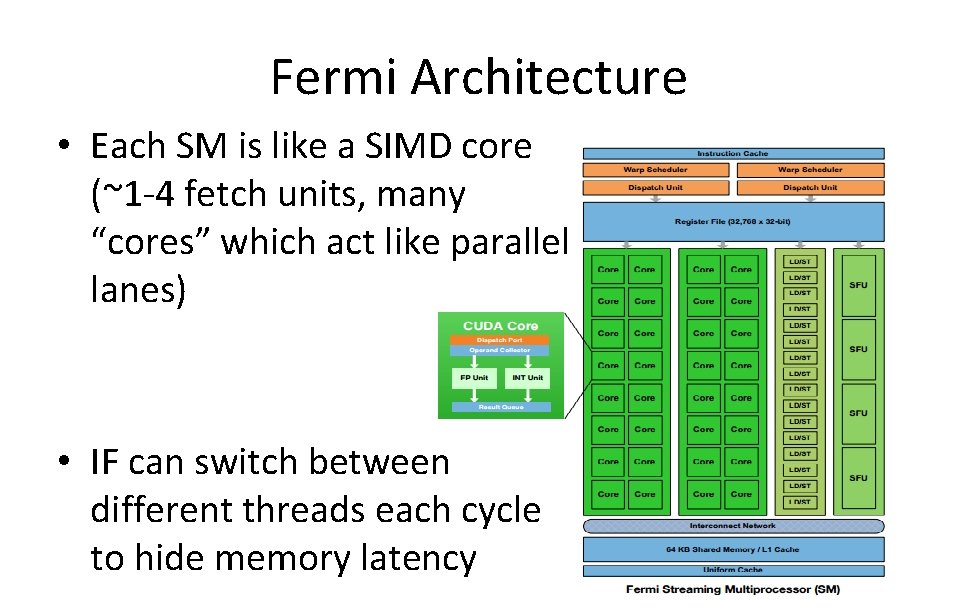

Fermi Architecture

- Each SM is like a SIMD core (~1-4 fetch units, many "cores" which act like parallel lanes)
- IF can switch between different threads each cycle to hide memory latency

That's it for 470 Material!

- Best of luck with the remainder of the project
- Hope to see you on Tuesday!