Using Quality Indicators to Identify Quality Research


Melody Tankersley, Ph.D., Kent State University
Bryan G. Cook, Ph.D., University of Hawaii at Manoa

The No Child Left Behind Act of 2002 (NCLB) stipulates that federally funded educational programs and practices must be grounded in scientifically based research “that involves the application of rigorous, systematic, and objective procedures to obtain reliable and valid knowledge relevant to education activities and programs” (NCLB, p. 126).
- This raises two questions:
  - How do we know whether a research study involves rigorous, systematic, and objective procedures?
  - How do we use the results of such research studies to identify educational practices that are effective for improving student outcomes?

How do we know whether a research study involves rigorous, systematic, and objective procedures?
- CEC Division for Research
  - Sponsored prominent researchers to author papers proposing:
    - Parameters for establishing that reported research has been conducted with high quality (quality indicators)
    - Criteria for determining whether a practice has been studied sufficiently (enough high-quality research studies conducted on its effectiveness) and shown to improve student outcomes (effects are strong enough)
- Graham, S. (2005). Criteria for evidence-based practice in special education [Special issue]. Exceptional Children, 71.

Exceptional Children (2005), volume 71(2)
- Group Experimental and Quasi-Experimental Research (Gersten, Fuchs, Compton, Coyne, Greenwood, & Innocenti)
- Single-Subject Research (Horner, Carr, Halle, McGee, Odom, & Wolery)
- Correlational Research (Thompson, Diamond, McWilliam, & Snyder)
- Qualitative Studies (Brantlinger, Jimenez, Klingner, Pugach, & Richardson)

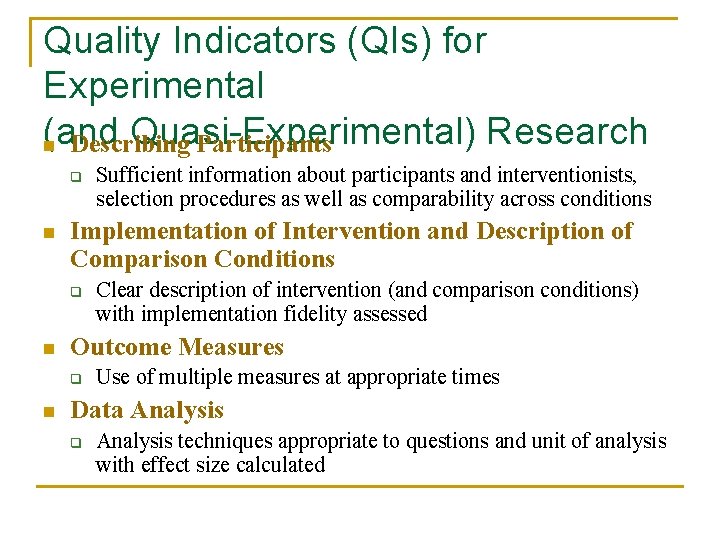

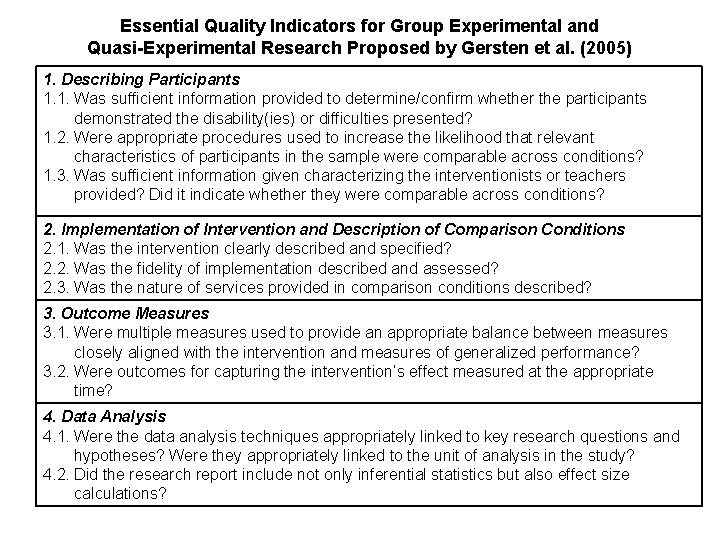

Quality Indicators (QIs) for Experimental (and Quasi-Experimental) Research
- Describing Participants
  - Sufficient information about participants and interventionists, selection procedures, and comparability across conditions
- Implementation of Intervention and Description of Comparison Conditions
  - Clear description of intervention (and comparison conditions) with implementation fidelity assessed
- Outcome Measures
  - Use of multiple measures at appropriate times
- Data Analysis
  - Analysis techniques appropriate to questions and unit of analysis, with effect size calculated

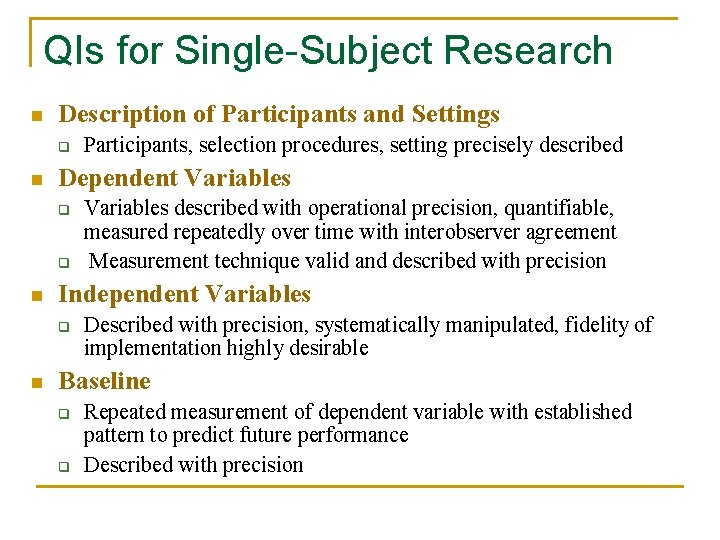

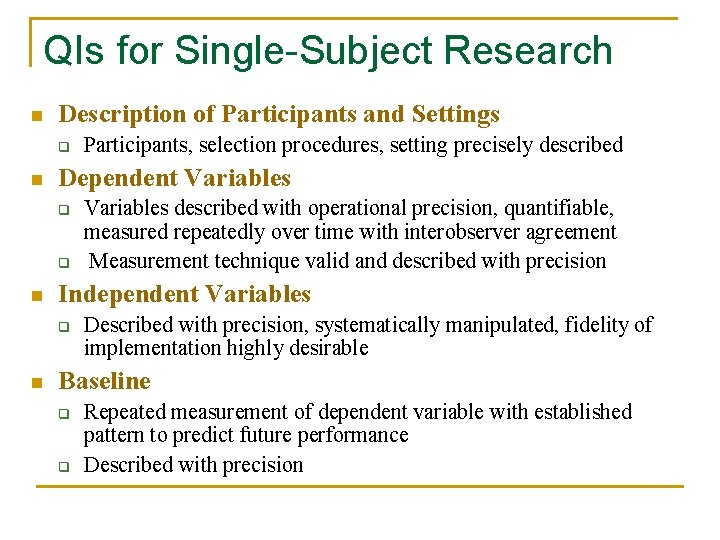

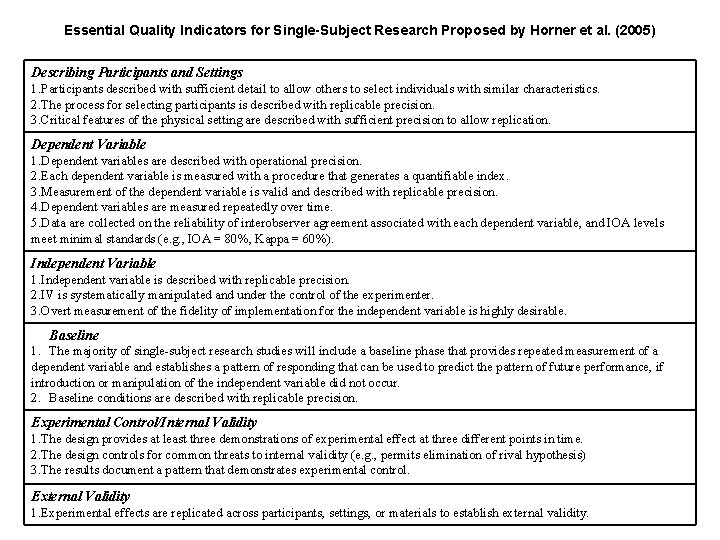

QIs for Single-Subject Research
- Description of Participants and Settings
  - Participants, selection procedures, and setting precisely described
- Dependent Variables
  - Variables described with operational precision, quantifiable, and measured repeatedly over time with interobserver agreement
  - Measurement technique valid and described with precision
- Independent Variables
  - Described with precision and systematically manipulated; fidelity of implementation highly desirable
- Baseline
  - Repeated measurement of dependent variable with an established pattern to predict future performance
  - Described with precision

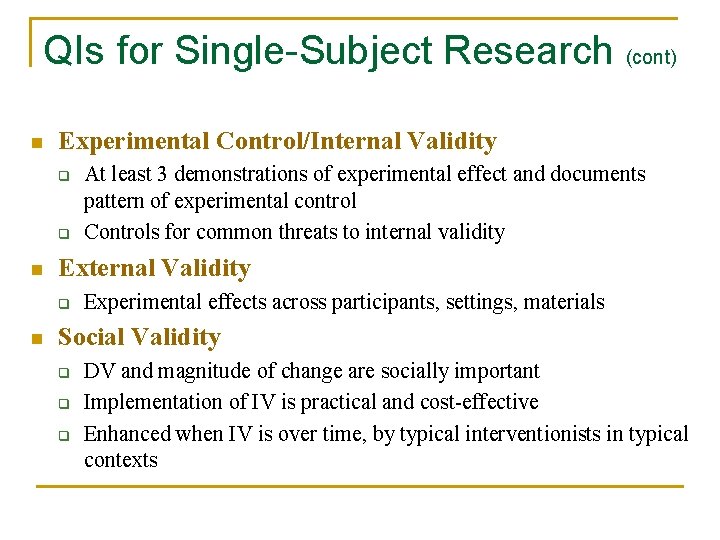

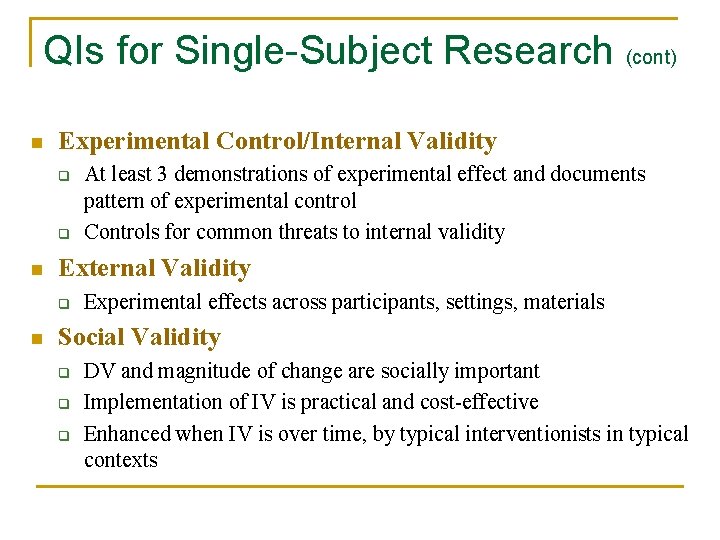

QIs for Single-Subject Research (cont.)
- Experimental Control/Internal Validity
  - At least 3 demonstrations of experimental effect, with results documenting a pattern of experimental control
  - Controls for common threats to internal validity
- External Validity
  - Experimental effects replicated across participants, settings, or materials
- Social Validity
  - DV and magnitude of change are socially important
  - Implementation of IV is practical and cost-effective
  - Enhanced when the IV is implemented over time, by typical interventionists, in typical contexts
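The “at least 3 demonstrations” criterion can be counted mechanically for the two most common designs. The sketch below assumes that in a reversal (e.g., ABAB) design each change between adjacent, different conditions is one opportunity to demonstrate an effect, and that in a multiple-baseline design each staggered introduction of the intervention (one per tier) is one. The function names are hypothetical, and a count only identifies opportunities: whether each one actually shows an effect still has to be judged from the data.

```python
from typing import Sequence


def reversal_demonstrations(phase_sequence: Sequence[str]) -> int:
    """Count potential demonstrations of effect in a reversal design.

    Each change between adjacent, different conditions (A->B or B->A)
    is counted as one opportunity to demonstrate an experimental effect.
    """
    return sum(
        1 for prev, nxt in zip(phase_sequence, phase_sequence[1:]) if prev != nxt
    )


def multiple_baseline_demonstrations(tiers: int) -> int:
    """Each staggered introduction of the intervention (one per tier) counts once."""
    if tiers < 1:
        raise ValueError("a multiple-baseline design needs at least one tier")
    return tiers


def meets_three_demonstrations(count: int) -> bool:
    """Apply the 'at least 3 demonstrations' threshold."""
    return count >= 3


if __name__ == "__main__":
    print(reversal_demonstrations("ABAB"))  # 3
    print(reversal_demonstrations("AB"))  # 1
    print(meets_three_demonstrations(multiple_baseline_demonstrations(3)))  # True
```

Under these assumptions an ABAB design or a three-tier multiple baseline reaches the threshold, while an AB or ABA design does not.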

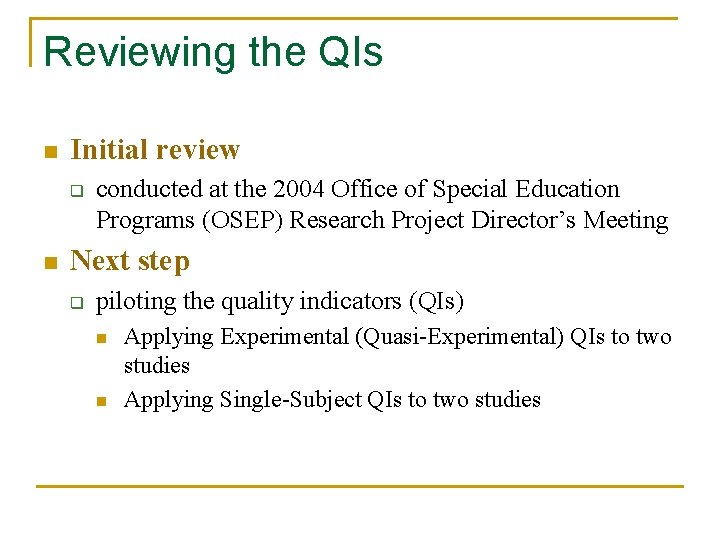

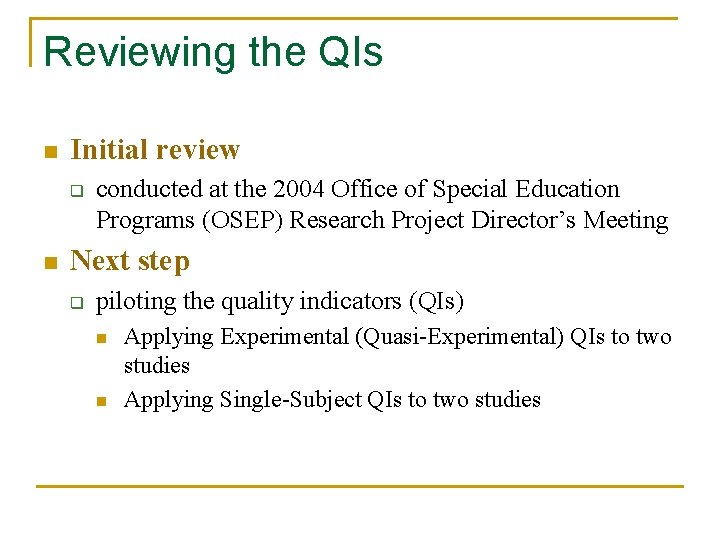

Reviewing the QIs
- Initial review
  - Conducted at the 2004 Office of Special Education Programs (OSEP) Research Project Directors’ Meeting
- Next step
  - Piloting the quality indicators (QIs)
    - Applying Experimental (Quasi-Experimental) QIs to two studies
    - Applying Single-Subject QIs to two studies

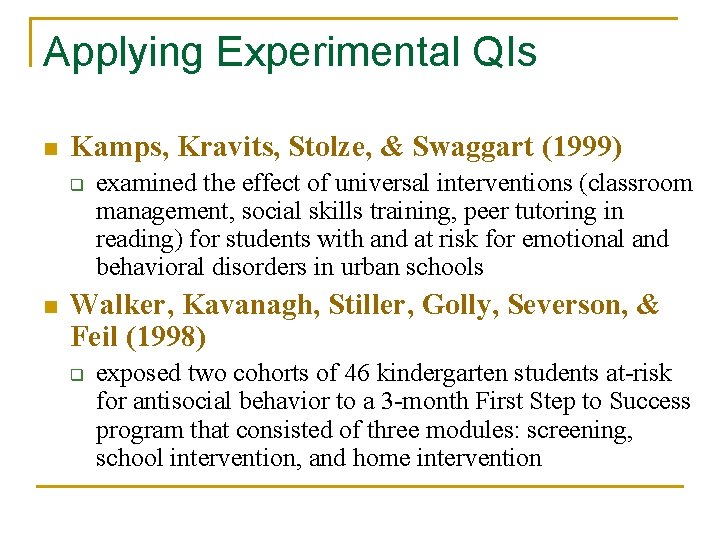

Applying Experimental QIs
- Kamps, Kravits, Stolze, & Swaggart (1999)
  - Examined the effect of universal interventions (classroom management, social skills training, peer tutoring in reading) for students with and at risk for emotional and behavioral disorders in urban schools
- Walker, Kavanagh, Stiller, Golly, Severson, & Feil (1998)
  - Exposed two cohorts of 46 kindergarten students at risk for antisocial behavior to a 3-month First Step to Success program that consisted of three modules: screening, school intervention, and home intervention

Results of Applying the Experimental QIs
- Few experimental or quasi-experimental studies to choose from in the EBD literature
- Neither study came close to meeting all 10 of the QIs (Kamps et al. = 3; Walker et al. = 5)

Issues in Applying Experimental QIs
- Rigorous requirements of some aspects of QIs
- Completeness of reporting research
- Clarity of requirements of some QIs

Issues in Applying Experimental QIs (cont.)
- Rigorous requirements of some aspects of QIs
  - “Interventions should be clearly described on a number of salient dimensions, including conceptual underpinnings; detailed instructional procedures; teacher actions and language (e.g., modeling, corrective feedback); use of instructional materials (e.g., task difficulty, example selection); and student behaviors (e.g., what students are required to do and say)” (p. 156).
    - Conceptual underpinnings: not described, or described for some but not all elements of a multifaceted IV
    - Intervention description: references for implementation provided; appendix for instructional procedures

Issues in Applying Experimental QIs (cont.)
- Completeness of reporting research
  - Rationale for data analysis techniques
  - Comparison of demographics of experimental and control groups
  - Description of comparison conditions
  - An effect size not calculated, but sufficient data reported for calculating an effect size

Issues in Applying Experimental QIs (cont.)
- Completeness of reporting research (cont.)
  - Examples
    - Rationale for data analysis technique: “A brief rationale for major analyses and for selected secondary analyses is critical” (p. 161).
      - Not typically done. If an analysis is acceptable, must it be justified?
    - Effect size: “Statistical analyses are accompanied with presentation of effect sizes” (p. 161).
      - An effect size was not calculated for one study, but sufficient data were reported for calculating an effect size.
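When a report omits an effect size but gives group means, standard deviations, and sample sizes, a reviewer can compute one from those summary statistics. The sketch below assumes the standardized mean difference is the appropriate index: Cohen’s d with a pooled standard deviation, plus the common approximate small-sample correction (Hedges’ g). The QIs call for effect sizes without this specific choice of index, the function names are hypothetical, and the numbers in the example are invented for illustration, not taken from either reviewed study.

```python
import math


def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def cohens_d(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Standardized mean difference (treatment minus comparison), pooled SD."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)


def hedges_g(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Cohen's d multiplied by the approximate small-sample bias correction."""
    d = cohens_d(m1, sd1, n1, m2, sd2, n2)
    correction = 1 - 3 / (4 * (n1 + n2) - 9)
    return d * correction


if __name__ == "__main__":
    # Hypothetical posttest summary statistics, not from any reviewed study
    d = cohens_d(m1=55.0, sd1=10.0, n1=20, m2=50.0, sd2=10.0, n2=20)
    g = hedges_g(55.0, 10.0, 20, 50.0, 10.0, 20)
    print(f"d = {d:.2f}, g = {g:.2f}")  # d = 0.50, g = 0.49
```

With 20 participants per group, the correction shrinks d by only about 2%; it matters more for the small samples common in special education research.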

Issues in Applying Experimental QIs (cont.)
- Clarity of requirements of some QIs
  - “For some studies, it may be appropriate to collect data at only pre- and posttest. In many cases, however, researchers should consider collecting data at multiple points across the course of the study, including follow-up measures” (p. 160).
    - Must pre- and posttest measures be analyzed? This is implied by the recommendation to use follow-up measures, but not stated unequivocally.
  - “At a minimum, researchers should examine comparison groups to determine what instructional events are occurring, what texts are being used, and what professional development and support is provided to teachers.” (p. 158).
    - Must all components (instructional events, texts, professional development, support) be described?

Issues in Applying Experimental QIs (cont.)
- Clarity of requirements of some QIs (cont.)
  - “The optimal method for assigning participants to study conditions is through random assignment, although in some situations, this is impossible. It is then the researchers’ responsibility to describe how participants were assigned to study conditions (convenience, similar classrooms, preschool programs in comparable districts, etc.)” (p. 155).
    - Under what conditions is non-random assignment of participants to groups justifiable? When random assignment is not feasible, what procedures for assigning participants to groups are acceptable?

Issues in Applying Experimental QIs (cont.)
- Clarity of requirements of some QIs (cont.)
  - Other questions:
    - Is there a minimum acceptable level of implementation fidelity?
    - What is meant by a broad and robust outcome measure that is not tightly aligned with the dependent variable?

Applying Single-Subject QIs
- Hall, Lund, & Jackson (1968)
  - Investigated the effects of contingent teacher attention on the study behavior of six students who were nominated for participation by their teachers for disruptive and dawdling behaviors
- Sutherland, Wehby, & Copeland (2000)
  - Investigated the effects of a teacher’s behavior-specific praise on the on-task behavior of 9 students identified with emotional and behavioral disorders

Results of Applying Single-Subject QIs
- The literature base of interventions addressing behavioral concerns using single-subject research methods is robust
- Neither Hall et al. nor Sutherland et al. met all of the QIs; each met only one of the seven

Issues in Applying Single-Subject QIs
- Rigorous requirements of some aspects of QIs
- Completeness of reporting research
- Clarity of requirements of some QIs

Issues in Applying Single-Subject QIs (cont.)
- Rigorous requirements of some aspects of QIs
  - Cost-effectiveness and practicality of IV
    - “Implementation of the independent variable is practical and cost effective” (p. 174).
  - Instruments and processes used to identify disability
    - “…operational participant descriptions of individuals with a disability would require that the specific disability…and the specific instrument and process used to determine their disability…be identified. Global descriptions such as identifying participants as having developmental disabilities would be insufficient” (p. 167).

Issues in Applying Single-Subject QIs (cont.)
- Completeness of reporting research
  - “The process for selecting participants is described with replicable precision” (p. 174).
  - QIs prescribe integrating information about the “level, trend, and variability of performance occurring during baseline and intervention conditions” (p. 171) to determine the extent to which a functional relationship between the dependent and independent variables exists.
    - Can it be estimated through visual inspection of the graphed data? Must the researchers discuss experimental control?
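Visual inspection remains the primary analysis in single-subject research, but the three properties named in the quoted QI can also be summarized numerically for each phase as a check on one’s reading of a graph. The sketch below assumes level = phase mean, trend = ordinary least-squares slope across sessions, and variability = range; these operationalizations are our assumptions rather than definitions given in the QIs, the function names are hypothetical, and the data are invented.

```python
from statistics import mean
from typing import Dict, Sequence


def slope(values: Sequence[float]) -> float:
    """Ordinary least-squares slope of values against session index 0..n-1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points to estimate a trend")
    x_bar, y_bar = (n - 1) / 2, mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(range(n), values))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


def summarize_phase(values: Sequence[float]) -> Dict[str, float]:
    """Level (mean), trend (OLS slope), and variability (range) for one phase."""
    return {
        "level": mean(values),
        "trend": slope(values),
        "variability": max(values) - min(values),
    }


if __name__ == "__main__":
    # Hypothetical percent-of-intervals-on-task data for one participant
    phases = {
        "baseline": [30, 35, 25, 30, 30],
        "intervention": [60, 70, 75, 80, 85],
    }
    for name, data in phases.items():
        print(name, summarize_phase(data))
```

Here the baseline is flat (slope -0.5, range 10) around a level of 30, while the intervention phase rises (slope 6.0) to a level of 74, the pattern a reader would look for visually.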

Issues in Applying Single-Subject QIs (cont.)
- Clarity of requirements of some QIs
  - Discrepancies between what is listed in the tables identifying QIs and the text descriptions of them
    - Measurement of intervention implementation fidelity
      - Table: “highly desirable” (p. 174)
      - Text: “documentation of adequate implementation fidelity is expected…” (p. 168)
  - Subjective terminology: sufficient detail, replicable precision, critical features, cost-effectiveness, socially important

Issues in Applying Single-Subject QIs (cont.)
- Clarity of requirements of some QIs (cont.)
  - Equivocal guidelines: 5 or more data points for the baseline condition (not specified for other conditions), “but fewer data points are acceptable in specific cases” (p. 168)
  - “Burden of proof”
    - Are researchers required to discuss each point of analysis, or can readers determine whether experimental control exists based on their own visual inspection of graphed data?
    - Must researchers document the social validity of the dependent variable?
    - If fewer than five data points exist for a certain phase, is it the responsibility of the authors to justify having fewer than five data points?
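A rater applying the five-data-point guideline has to decide which phases it covers before flagging any for justification. The sketch below makes that decision an explicit switch: by default it checks only baseline phases, as the QI text does, and optionally applies the stricter reading to every condition. The function name, the convention that baseline phases are named starting with “baseline”, and the example data are all hypothetical.

```python
from typing import Dict, List, Sequence

MIN_POINTS = 5  # the "5 or more data points" guideline stated for baseline


def phases_needing_justification(
    phases: Dict[str, Sequence[float]],
    apply_to_all_phases: bool = False,
    minimum: int = MIN_POINTS,
) -> List[str]:
    """Return names of phases with fewer than `minimum` data points.

    By default only phases whose name starts with "baseline" are checked,
    mirroring the QI text; apply_to_all_phases=True tests the stricter
    reading that every condition needs at least `minimum` points.
    """
    flagged = []
    for name, points in phases.items():
        if not apply_to_all_phases and not name.lower().startswith("baseline"):
            continue  # the guideline is not specified for non-baseline phases
        if len(points) < minimum:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    # Hypothetical study with a short first baseline
    study = {
        "baseline": [2, 3, 2],
        "intervention": [6, 7, 8, 7],
        "baseline 2": [3, 2, 3, 2, 3],
    }
    print(phases_needing_justification(study))  # ['baseline']
    print(phases_needing_justification(study, apply_to_all_phases=True))  # ['baseline', 'intervention']
```

The two readings flag different phases for the same study, which illustrates why the “burden of proof” question above matters for reliable rating.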

Considerations for Applying QIs
We agree with the spirit of all the QIs; in applying them, however, we have a few points for consideration:
- Identifying QIs vs. operationally defining QIs
  - Published QIs were intended as conceptual guideposts rather than as fully defined, ready-to-apply indicators
  - Strictly applying these QIs may have been an unfair test
- Restrictions of dissemination outlets
  - Page restrictions of journals
  - “Readability” of reports

Considerations for Applying QIs (cont.)
- Fundamental (rather than preferable) QIs for determining effectiveness of practices
  - Indicators should reflect all, but only, the most essential methodological considerations
  - Requirements for elements that may enhance the study but do not affect the trustworthiness of the research should be reduced

Considerations for Applying QIs (cont.)
- Retrofitting
  - Applying criteria determined today to previously reported studies will not allow all good studies to pass through
  - Grandfather criteria for previously reported research?
  - New research held to contemporary requirements
    - APA effect size reporting

Recommendations
- Operationally define the QIs, perhaps with exemplars and nonexemplars for illustration
- Continue to pilot-test the utility of the QIs, with revisions as needed
- Finalize QIs with reliable interobserver agreement established
- Determine fundamental QIs for previously conducted research (to eliminate retrofitting issues)
- Acquire professional support for QIs so that future research (and publishers) will use them in reports

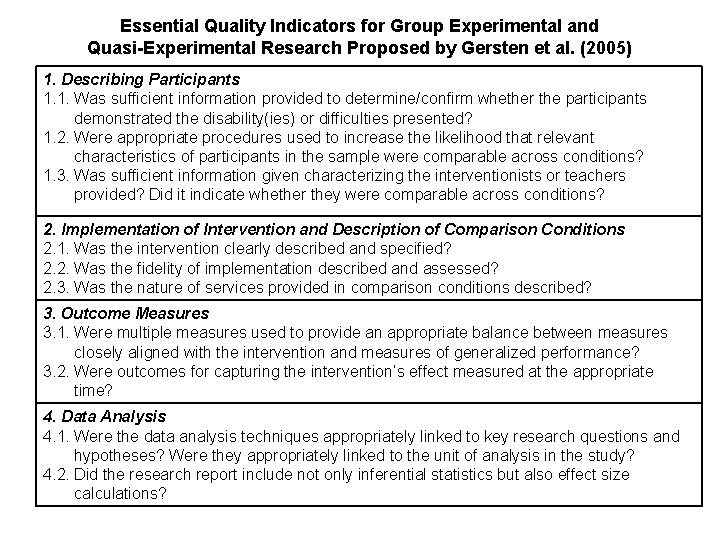

Essential Quality Indicators for Group Experimental and Quasi-Experimental Research Proposed by Gersten et al. (2005)
1. Describing Participants
   - 1.1 Was sufficient information provided to determine/confirm whether the participants demonstrated the disability(ies) or difficulties presented?
   - 1.2 Were appropriate procedures used to increase the likelihood that relevant characteristics of participants in the sample were comparable across conditions?
   - 1.3 Was sufficient information given characterizing the interventionists or teachers? Did it indicate whether they were comparable across conditions?
2. Implementation of Intervention and Description of Comparison Conditions
   - 2.1 Was the intervention clearly described and specified?
   - 2.2 Was the fidelity of implementation described and assessed?
   - 2.3 Was the nature of services provided in comparison conditions described?
3. Outcome Measures
   - 3.1 Were multiple measures used to provide an appropriate balance between measures closely aligned with the intervention and measures of generalized performance?
   - 3.2 Were outcomes for capturing the intervention’s effect measured at the appropriate time?
4. Data Analysis
   - 4.1 Were the data analysis techniques appropriately linked to key research questions and hypotheses? Were they appropriately linked to the unit of analysis in the study?
   - 4.2 Did the research report include not only inferential statistics but also effect size calculations?
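Piloting these indicators amounts to scoring each study against a ten-item checklist. A minimal sketch, assuming a simple met/not-met rating per indicator and treating an indicator with no rating as not met (mirroring the concern that incomplete reporting counts against a study). The identifiers follow the numbering in the list; the function name and the ratings in the example are hypothetical and are not the ratings reported for Kamps et al. or Walker et al.

```python
from typing import Dict, List, Union

# Identifiers of the 10 essential QIs for group designs (Gersten et al., 2005)
GROUP_DESIGN_QIS: List[str] = [
    "1.1", "1.2", "1.3", "2.1", "2.2", "2.3", "3.1", "3.2", "4.1", "4.2",
]


def score_study(ratings: Dict[str, bool]) -> Dict[str, Union[int, List[str]]]:
    """Tally met and unmet essential QIs; an unrated QI is treated as unmet."""
    unknown = set(ratings) - set(GROUP_DESIGN_QIS)
    if unknown:
        raise ValueError(f"unrecognized QI identifiers: {sorted(unknown)}")
    met = [qi for qi in GROUP_DESIGN_QIS if ratings.get(qi, False)]
    unmet = [qi for qi in GROUP_DESIGN_QIS if qi not in met]
    return {"met": len(met), "total": len(GROUP_DESIGN_QIS), "unmet": unmet}


if __name__ == "__main__":
    # Hypothetical ratings for illustration only
    hypothetical = {"1.1": True, "2.1": True, "3.2": True, "4.1": False}
    print(score_study(hypothetical))
```

Listing the unmet indicators, rather than reporting only a count, keeps the tally useful for the kind of item-by-item discussion of issues presented earlier.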

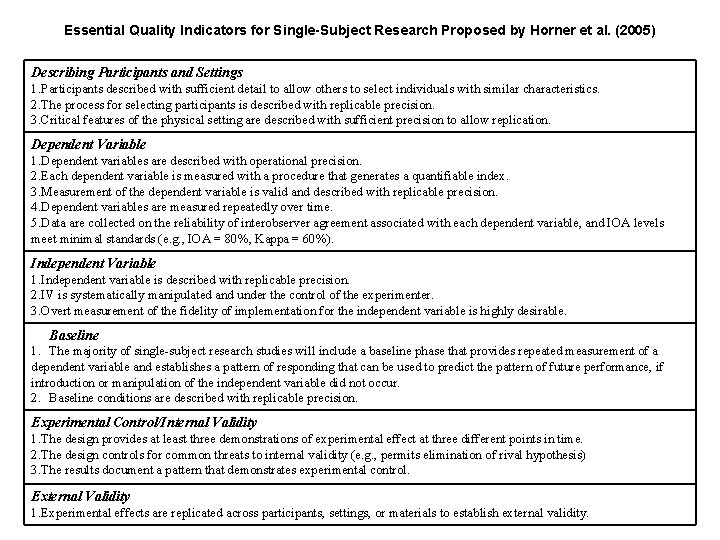

Essential Quality Indicators for Single-Subject Research Proposed by Horner et al. (2005)
- Describing Participants and Settings
  1. Participants are described with sufficient detail to allow others to select individuals with similar characteristics.
  2. The process for selecting participants is described with replicable precision.
  3. Critical features of the physical setting are described with sufficient precision to allow replication.
- Dependent Variable
  1. Dependent variables are described with operational precision.
  2. Each dependent variable is measured with a procedure that generates a quantifiable index.
  3. Measurement of the dependent variable is valid and described with replicable precision.
  4. Dependent variables are measured repeatedly over time.
  5. Data are collected on the reliability of interobserver agreement associated with each dependent variable, and IOA levels meet minimal standards (e.g., IOA = 80%, Kappa = 60%).
- Independent Variable
  1. Independent variable is described with replicable precision.
  2. IV is systematically manipulated and under the control of the experimenter.
  3. Overt measurement of the fidelity of implementation for the independent variable is highly desirable.
- Baseline
  1. The majority of single-subject research studies will include a baseline phase that provides repeated measurement of a dependent variable and establishes a pattern of responding that can be used to predict the pattern of future performance, if introduction or manipulation of the independent variable did not occur.
  2. Baseline conditions are described with replicable precision.
- Experimental Control/Internal Validity
  1. The design provides at least three demonstrations of experimental effect at three different points in time.
  2. The design controls for common threats to internal validity (e.g., permits elimination of rival hypotheses).
  3. The results document a pattern that demonstrates experimental control.
- External Validity
  1. Experimental effects are replicated across participants, settings, or materials to establish external validity.
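The IOA standard in the list names two indices. A minimal sketch of both, assuming interval-by-interval recording in which each observer scores every interval as occurrence (1) or nonoccurrence (0): point-by-point percent agreement, and Cohen’s kappa, which discounts the agreement expected by chance from each observer’s base rate. The function names are hypothetical, the thresholds are the example values from the list (80% and .60), and the observer records are invented.

```python
from typing import Sequence


def percent_agreement(obs1: Sequence[int], obs2: Sequence[int]) -> float:
    """Point-by-point agreement: agreeing intervals / total intervals * 100."""
    if len(obs1) != len(obs2) or len(obs1) == 0:
        raise ValueError("records must be non-empty and the same length")
    agreements = sum(a == b for a, b in zip(obs1, obs2))
    return 100.0 * agreements / len(obs1)


def cohens_kappa(obs1: Sequence[int], obs2: Sequence[int]) -> float:
    """Cohen's kappa for two observers' binary (0/1) interval records."""
    n = len(obs1)
    p_observed = percent_agreement(obs1, obs2) / 100.0
    p1_yes, p2_yes = sum(obs1) / n, sum(obs2) / n
    # Chance agreement: both score occurrence or both score nonoccurrence
    p_chance = p1_yes * p2_yes + (1 - p1_yes) * (1 - p2_yes)
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_chance) / (1 - p_chance)


def meets_ioa_minimums(obs1, obs2, ioa_min=80.0, kappa_min=0.60) -> bool:
    """Check both example thresholds from the QI list."""
    return (percent_agreement(obs1, obs2) >= ioa_min
            and cohens_kappa(obs1, obs2) >= kappa_min)


if __name__ == "__main__":
    # Hypothetical records from a primary and a secondary observer
    primary = [1, 1, 0, 1, 0, 0, 1, 1, 0, 1]
    secondary = [1, 1, 0, 1, 0, 1, 1, 1, 0, 1]
    print(f"IOA = {percent_agreement(primary, secondary):.1f}%")  # IOA = 90.0%
    print(f"kappa = {cohens_kappa(primary, secondary):.2f}")  # kappa = 0.78
    print(meets_ioa_minimums(primary, secondary))  # True
```

Kappa is lower than raw agreement here because, with both observers scoring occurrence in most intervals, roughly half of the agreement (.54) would be expected by chance alone.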