User Modeling and Recommender Systems evaluation and interfaces

- Slides: 19

User Modeling and Recommender Systems: evaluation and interfaces Adolfo Ruiz Calleja 18/10/2014

Index
- Some administrative stuff
- Evaluation of recommender systems
- Interfaces for recommender systems

Index
- Some administrative stuff
  - Administrative DLs
  - Final project DLs
- Evaluation of recommender systems
- Interfaces for recommender systems

Administrative DLs
- Lecturers, in agreement with the study group, must set two exam times. I open those times in the Study Information System (ÕIS). Students must register for one of these times.
- The responsible member of the teaching staff has 10 working days to enter the results of exams/assessments into the Study Information System.
- A student who receives a negative result has the right to take the re-examination once, until the end of the Spring semester's intermediate week (March 22, 2015). The lecturer has the right to choose when to conduct the re-exam. If the student doesn't pass the course by the end of the next semester's intermediate week, he/she must retake the whole course.
- How to organize an exam/assessment/re-examination?
  - The lecturer sets the exam/assessment/re-examination times and informs me
  - I open those times
  - Students register (registration is possible until 24 h before the exam)
  - ~24 h before the exam, the lecturer contacts me and asks for the list of participants
  - The exam/assessment/re-examination takes place. NB! Lecturers must not admit students who are not registered to the exam/assessment/re-examination!
  - I enter the results. NB! The system doesn't allow me to enter results for students who haven't registered for the exam/assessment/re-examination!

Administrative DLs
- Official evaluation dates: Dec. 20th and 21st
- Projects should be reviewed by the 1st of January
- Re-examination: Feb. 15th
  - Those who failed or did not present send the project
  - Those who want a better grade send a new version of the project and a response to my review

Final project DLs
- October 18th: Projects are proposed by the students.
- October 19th: Students start working on their projects.
- October 23rd: I can still be asked about the project topics.
- December 1st: First complete draft of the projects.
- December 8th: Each student should have peer-reviewed at least two of his/her colleagues' projects.
- December 13th and 14th: Projects are presented.
- December 21st: Projects are finished.
- January 1st: Projects are reviewed.

Index
- Some administrative stuff
- Evaluation of recommender systems
  - General comment
  - Common metrics
  - Data gathering techniques
- Interfaces for recommender systems

General comment
- What do you want to evaluate?
- What characteristics are relevant for this evaluation?
- What metrics are relevant?
- Which techniques can be employed to gather data?

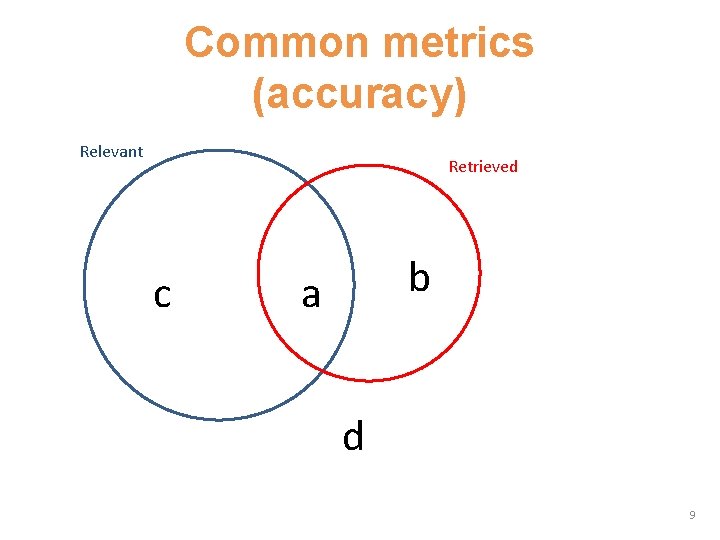

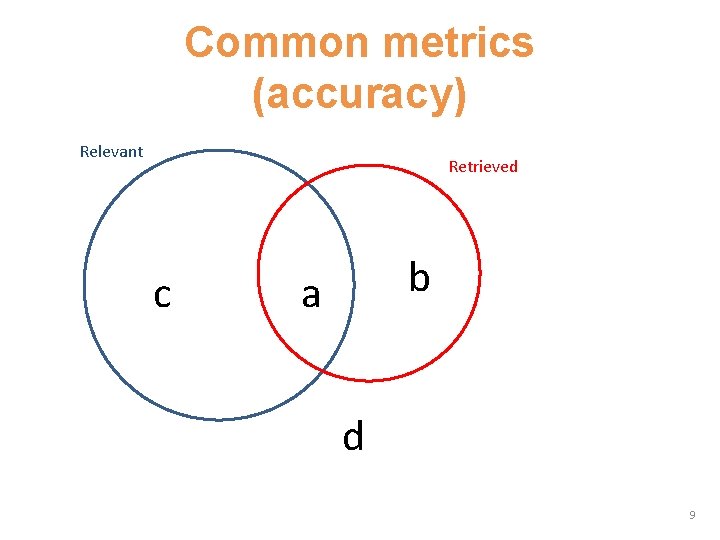

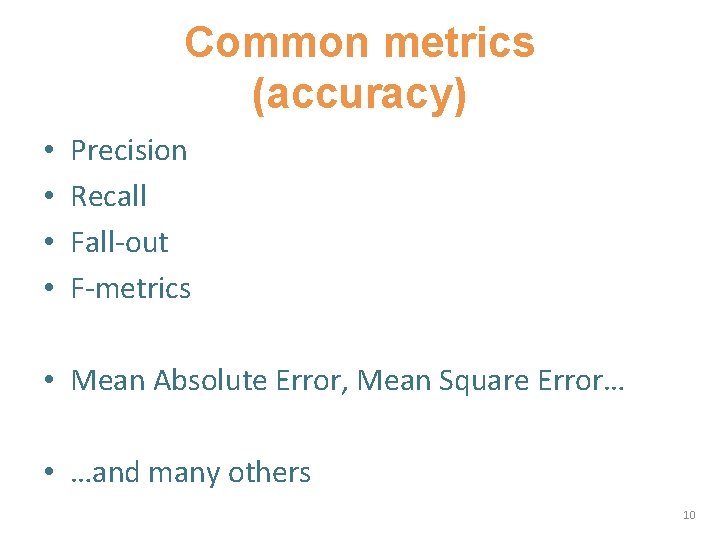

Common metrics (accuracy)
[Figure: diagram of the Relevant and Retrieved item sets, with regions labeled a, b, c and d]

Common metrics (accuracy)
- Precision
- Recall
- Fall-out
- F-metrics
- Mean Absolute Error, Mean Square Error…
- …and many others
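As an illustration of the accuracy metrics listed above, here is a minimal Python sketch using their usual textbook definitions. The function names and the `catalog` argument (the full set of items, needed for fall-out) are assumptions made for this example, not part of the slides.

```python
def accuracy_metrics(relevant, retrieved, catalog):
    """Set-based accuracy metrics for one recommendation list.

    relevant:  items the user actually considers relevant
    retrieved: items the system recommended
    catalog:   all items the system could have recommended (assumed known)
    """
    relevant, retrieved, catalog = set(relevant), set(retrieved), set(catalog)
    tp = len(relevant & retrieved)             # relevant and retrieved
    fp = len(retrieved - relevant)             # retrieved but not relevant
    fn = len(relevant - retrieved)             # relevant but not retrieved
    tn = len(catalog - relevant - retrieved)   # neither relevant nor retrieved

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    fall_out = fp / (fp + tn) if fp + tn else 0.0
    # F1 is the harmonic mean of precision and recall (one member of the F family).
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall,
            "fall_out": fall_out, "f1": f1}


def mean_absolute_error(predicted, actual):
    """Average absolute difference between predicted and actual ratings."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def mean_squared_error(predicted, actual):
    """Average squared difference between predicted and actual ratings."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


if __name__ == "__main__":
    # Toy data, invented for the example.
    print(accuracy_metrics(relevant={0, 1, 2, 3},
                           retrieved={2, 3, 4, 5},
                           catalog=range(10)))
    print(mean_absolute_error([4, 3, 5], [5, 3, 3]))
```

The set-based metrics suit "is this item good or not" recommendations, while MAE and MSE apply when the system predicts a numeric rating.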

Common metrics (beyond accuracy)
- Coverage
- Learning Rate
- Serendipity
- Confidence
- User reactions
  - Explicit (ask) vs. Implicit (log)
  - Outcome vs. Process
  - Short-term vs. Long-term
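Of the metrics above, coverage is the simplest to compute. The sketch below assumes one common definition, catalog coverage: the fraction of catalog items that appear in at least one user's recommendation list. The function name and data layout are hypothetical, and other definitions of coverage exist.

```python
def catalog_coverage(recommendations_per_user, catalog):
    """Fraction of the catalog recommended to at least one user.

    recommendations_per_user: dict mapping a user id to a list of item ids
    catalog: iterable with every item the system could recommend
    """
    catalog = set(catalog)
    recommended = set()
    for items in recommendations_per_user.values():
        recommended.update(items)
    # Ignore recommended items that are not part of the catalog.
    return len(recommended & catalog) / len(catalog) if catalog else 0.0


if __name__ == "__main__":
    # Toy data, invented for the example.
    recs = {"u1": ["a", "b"], "u2": ["b", "c"]}
    print(catalog_coverage(recs, catalog=["a", "b", "c", "d", "e"]))
```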

Data gathering techniques
- Feature analysis
- Test the system with synthetic data
- Test the system with developers or experts
- Test the system with users
  - Laboratory vs. Field studies
  - Formal experiment vs. Analysis of user behavior

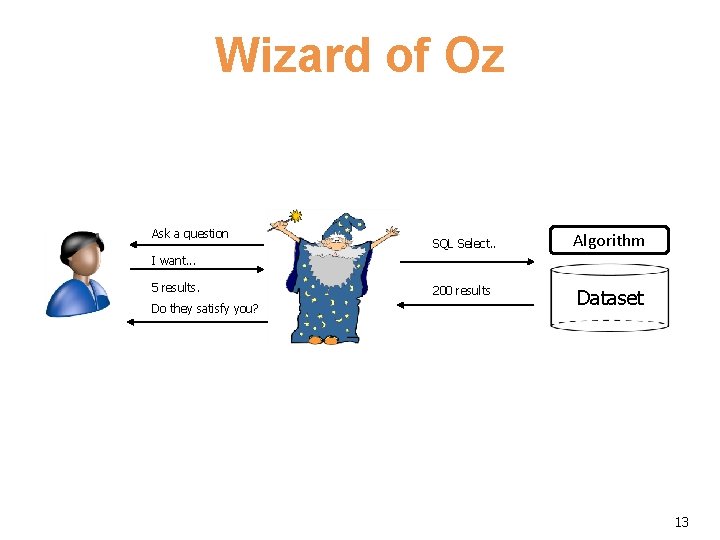

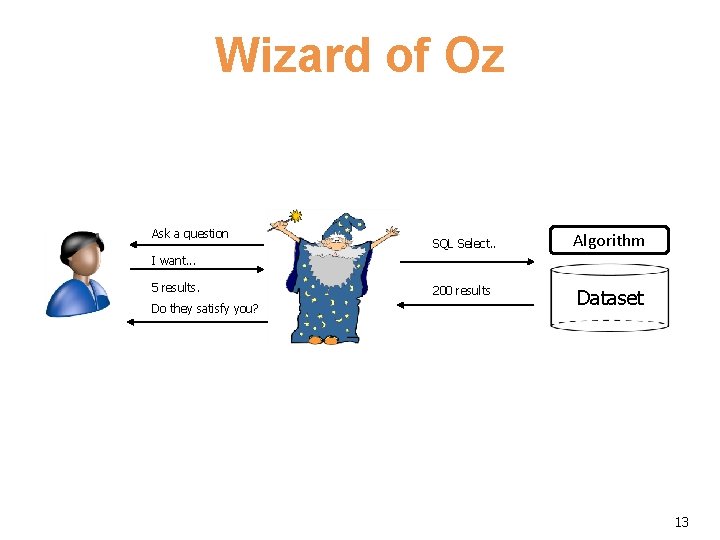

Wizard of Oz
[Figure: Wizard of Oz setup. Labels: "Ask a question", "I want...", "SQL Select...", "Algorithm", "Dataset", "200 results", "5 results. Do they satisfy you?"]

Index
- Some administrative stuff
- Evaluation of recommender systems
- Interfaces for recommender systems
  - Recommendations
  - Explanations
  - Interactions

Recommendations
- Top Item
- Top N-Items
- Predicted ratings for each item
- Structured overview (divided into categories)
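The four presentation forms above can all be derived from the same predicted ratings. The following Python sketch shows one way to do it; the `predicted` and `categories` dictionaries and the function names are assumptions made for the example.

```python
from collections import defaultdict


def top_n(predicted, n=1):
    """Return the n items with the highest predicted rating (n=1 is 'Top Item')."""
    return sorted(predicted, key=predicted.get, reverse=True)[:n]


def structured_overview(predicted, categories, n=2):
    """Group items by category and keep the top n items of each category."""
    by_category = defaultdict(dict)
    for item, score in predicted.items():
        by_category[categories[item]][item] = score
    return {cat: top_n(scores, n) for cat, scores in by_category.items()}


if __name__ == "__main__":
    # Toy predicted ratings and categories, invented for the example.
    predicted = {"A": 4.5, "B": 3.0, "C": 4.8, "D": 2.1}
    categories = {"A": "film", "B": "film", "C": "book", "D": "book"}

    print(top_n(predicted, 1))                         # Top Item
    print(top_n(predicted, 3))                         # Top N-Items
    print(predicted)                                   # Predicted rating for each item
    print(structured_overview(predicted, categories))  # Structured overview
```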

Explanations: Benefits
- Transparency: explain how the system works
- Scrutability: allow users to tell the system that it is wrong
- Trust: increase users' confidence in the system
- Effectiveness: help users make decisions
- Persuasiveness: convince users to take an item
- Efficiency: help users make decisions faster
- Satisfaction: increase the ease of use or the user's enjoyment

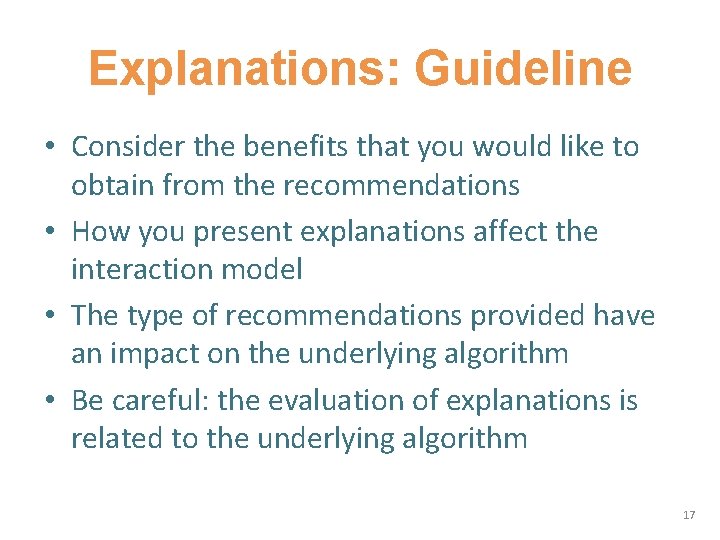

Explanations: Guideline
- Consider the benefits that you would like to obtain from the recommendations
- How you present explanations affects the interaction model
- The type of recommendations provided has an impact on the underlying algorithm
- Be careful: the evaluation of explanations is related to the underlying algorithm

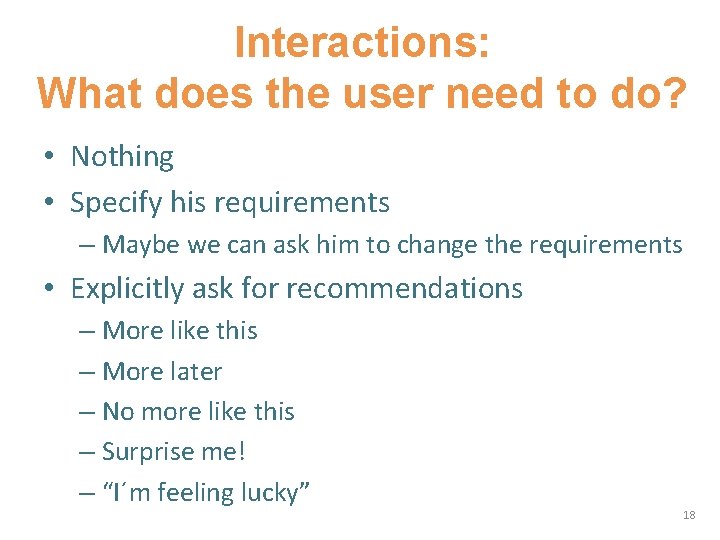

Interactions: What does the user need to do?
- Nothing
- Specify his requirements
  - Maybe we can ask him to change the requirements
- Explicitly ask for recommendations
  - More like this
  - More later
  - No more like this
  - Surprise me!
  - “I'm feeling lucky”
