The Andrew File System at CERN and (less about) its possible interactions with the Grid

- Slides: 25

The Andrew File System at CERN and (less about) its possible interactions with the Grid

Kindly prepared by the CERN AFS team in the FIO 'Linux and AFS' section: Bernard Antoine (most of these slides), Rainer Tobbicke, Arne Wiebalck and Peter Kelemen.

AFS started at (Andrew) Carnegie (Andrew) Mellon University, Pittsburgh, in the early 1980s. It was commercialised by Transarc, which was taken over by IBM, who turned it into the OpenAFS that we use today.

20 June 2008, GD group talk: AFS at CERN

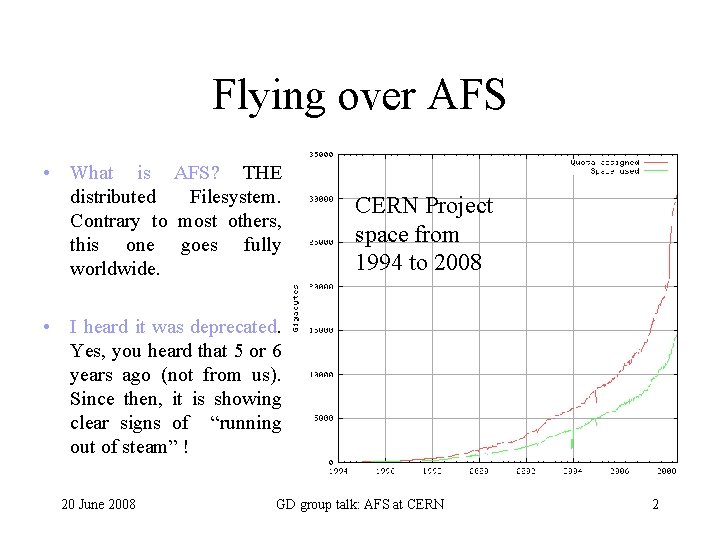

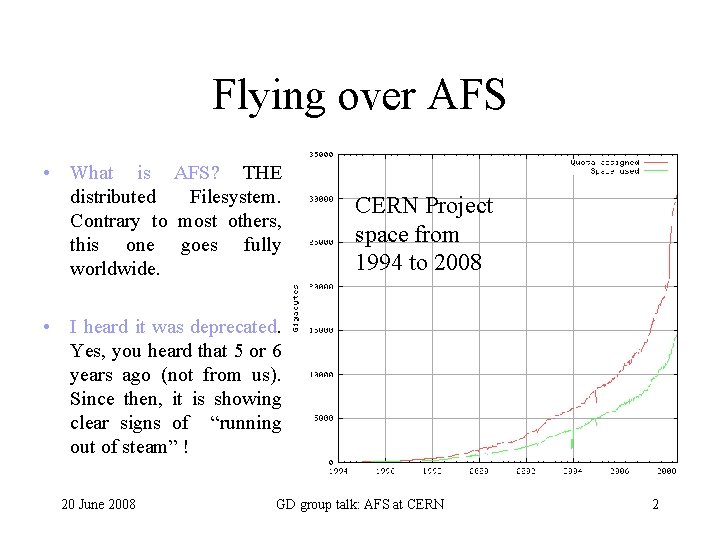

Flying over AFS

- What is AFS? THE distributed filesystem. Contrary to most others, this one goes fully worldwide.

CERN project space from 1994 to 2008

- I heard it was deprecated. Yes, you heard that 5 or 6 years ago (not from us). Since then, it is showing clear signs of "running out of steam"!

How does that work?

- Data is held on AFS FileServers,
- in containers called "volumes".
- Each "client" maintains a cache (files & directories).
- For each modifiable object, the relevant server maintains a "callback", i.e. the promise to tell the client if the object is indeed modified.
- When told so, the client invalidates that part of its cache and will, when necessary, refresh the data.
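The callback idea above can be illustrated with a toy model. This is only a sketch under simplifying assumptions: the class and method names (`FileServer`, `Client`, `fetch`, `store`, `invalidate`) are invented for illustration and are not the OpenAFS protocol or API.

```python
class FileServer:
    """Toy server: stores objects and remembers who was promised a callback."""

    def __init__(self):
        self.data = {}
        self.callbacks = {}  # path -> set of clients caching that object

    def fetch(self, client, path):
        # Handing out data comes with a promise to notify this client on change.
        self.callbacks.setdefault(path, set()).add(client)
        return self.data[path]

    def store(self, writer, path, content):
        self.data[path] = content
        # Tell every other client holding a callback that its copy is stale.
        for client in self.callbacks.get(path, set()) - {writer}:
            client.invalidate(path)
        # Only the writer still holds an up-to-date copy.
        self.callbacks[path] = {writer}


class Client:
    """Toy client: reads go to the cache first, then to the server."""

    def __init__(self, server):
        self.server = server
        self.cache = {}
        self.fetches = 0  # number of times we really contacted the server

    def read(self, path):
        if path not in self.cache:
            self.cache[path] = self.server.fetch(self, path)
            self.fetches += 1
        return self.cache[path]

    def write(self, path, content):
        self.cache[path] = content
        self.server.store(self, path, content)

    def invalidate(self, path):
        self.cache.pop(path, None)
```

In this model a client that reads the same object repeatedly contacts the server only once, until some other client writes to it.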

How does that work? (cont)

- A volume contains part of the directory tree.
- At any subdirectory level, one can insert a pointer to another volume. This is called a mountpoint.
  - e.g. /afs/cern.ch/user/n/nopc3 (aka ~nopc3), ~nopc3/public & ~nopc3/private share the same volume user.nopc3
  - but ~nopc3/w0 is in another volume, u.afs.nopc3.0
  - NB: mount points point to volumes, but NOT the other way round
- This way, AFS manipulates data in (hopefully) small amounts at a time,
- which allows us to move volumes around for load balancing or other purposes,
- and brings us scalability.
- This new volume can even be anywhere outside CERN (no cell on the moon yet)!

How does that work? (cont)

- When following a path, the cache manager inside the client machine resolves mountpoints with the help of a Volume Location DataBase (this is replicated 5 times and across two buildings), then contacts the relevant FileServer.
- Of course, as much as possible stays in the cache,
- until an invalidation message comes back from a FileServer,
- or until the cache entry expires (after ~1 hour).
- Access to the databases is limited. Most of the dialogue is with the fileservers.

What about security?

- To access data on AFS, one should normally hold a "token".
- Data is protected by directory ACLs.
- All files in a directory share the same ACLs.
- An ACL is a list of pairs of
  - who can do something (single user or group of users)
  - access bits:
    - r: allow read of a file
    - l: allow read through a directory
    - i: allow insertion in the directory
    - d: allow removal from a directory (=~ unlink)
    - w: allow write in a file
    - k: allow file locking
    - a: gives administrative rights

And the Unix bits, then?

The source of so many misconceptions! What does the manual say?

AFS uses the UNIX mode bits in the following way:

- It uses the initial bit to distinguish files and directories. This is the bit that appears first in the output from the ls -l command and shows the hyphen (-) for a file or the letter d for a directory.
- It does not use any of the mode bits on a directory. The AFS ACL alone controls directory access. (Indeed true: my ~/public is d-----!)
- For a file, the owner (first) set of bits interacts with the ACL entries that apply to the file in the following way. AFS does not use the group or world (second and third sets) of mode bits at all.
  - If the first r mode bit is not set, no one (including the owner) can read the file, no matter what permissions they have on the ACL. If the bit is set, users also need the r and l permissions on the ACL of the file's directory to read the file.
  - If the first w mode bit is not set, no one (including the owner) can modify the file. If the w bit is set, users also need the w and l permissions on the ACL of the file's directory to modify the file.
  - There is no ACL permission directly corresponding to the x mode bit, but to execute a file stored in AFS, the user must also have the r and l permissions on the ACL of the file's directory. (Translation: you need x, and r+l.)
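For those who prefer code to recitation, the rules quoted above can be sketched as a small function. This is a simplified model of the text on this page only; the function name `afs_file_access` and its arguments are hypothetical and not part of any AFS tool.

```python
def afs_file_access(owner_mode, dir_acl_rights):
    """Return the operations allowed on a file, per the rules quoted above.

    owner_mode     -- the owner triplet of the Unix mode bits, e.g. "rw-"
                      (group and world bits are ignored by AFS)
    dir_acl_rights -- the rights the user holds on the ACL of the file's
                      directory, e.g. "rl" or "rlidwka"
    """
    rights = set(dir_acl_rights)
    can_look_and_read = {"r", "l"} <= rights
    can_look_and_write = {"w", "l"} <= rights

    allowed = set()
    if "r" in owner_mode and can_look_and_read:
        allowed.add("read")
    if "w" in owner_mode and can_look_and_write:
        allowed.add("write")
    # No ACL bit corresponds to x: you need the x mode bit plus r and l.
    if "x" in owner_mode and can_look_and_read:
        allowed.add("execute")
    return allowed
```

For example, `afs_file_access("rw-", "rl")` allows reading only: the w mode bit is set, but the ACL does not grant w.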

And the Unix bits, then? (cont)

- Did you carefully read the previous page?
- No request will be answered from a user who cannot recite the previous page by heart!
- A corollary is that AFS does not care about the group a file appears to be in,
- but it may be there for some ill-conceived applications which think they can outsmart the system authorizations,
- or just for aesthetic reasons (prettier alignment in the output of ls -l).

More on ACLs

The "who can do something" 3 pages ago can be:

- a userid (e.g. nopc3),
- or some other special AFS account (e.g. rcmd.lxplus010),
- a "pts group", e.g. nopc3:friends, system:anyuser, cern:nodes, which matches on any of its members. The pts (protection subgroup) mechanism allows grouping AFS accounts that share the same ACL.
- the IP address of some machine (e.g. 137.138.12.34), which matches on any process on that machine. We use this (dangerous) possibility in some special 'tokenless' service cases.
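As a rough illustration of how the different kinds of "who" combine, here is a toy lookup. It assumes that the rights of all matching entries simply add up, and it ignores negative ACL entries; `effective_rights` and its parameters are invented for this sketch and are not a real AFS interface.

```python
def effective_rights(acl, userid, groups=(), host_ip=None):
    """Combine the rights of every ACL entry that matches the caller.

    acl     -- dict mapping a "who" (userid, pts group or IP address)
               to a rights string such as "rl"
    userid  -- the AFS account making the request
    groups  -- pts groups that account is a member of
    host_ip -- IP address of the client machine, if it should be considered
    """
    identities = {userid, *groups}
    if host_ip is not None:
        identities.add(host_ip)

    rights = set()
    for who, bits in acl.items():
        if who in identities:
            rights |= set(bits)
    # Report the bits in the conventional order.
    return "".join(bit for bit in "rlidwka" if bit in rights)
```

With an ACL of `{"nopc3": "rlidwka", "nopc3:friends": "rl"}`, a member of nopc3:friends gets "rl", while an account matching no entry gets nothing.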

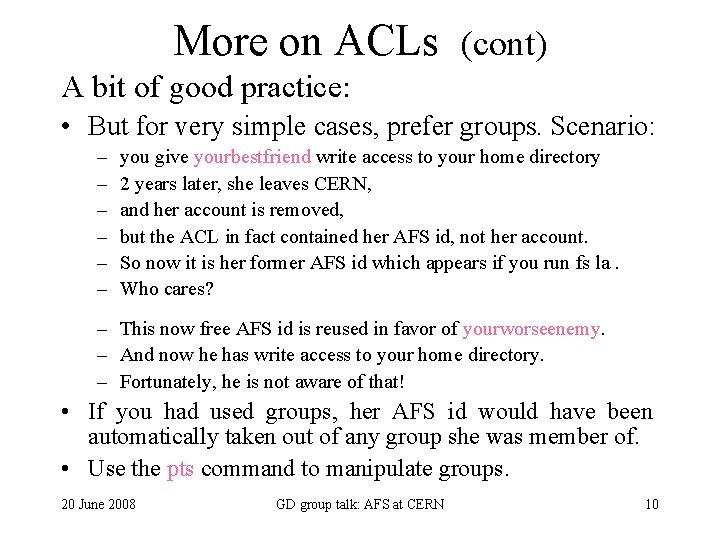

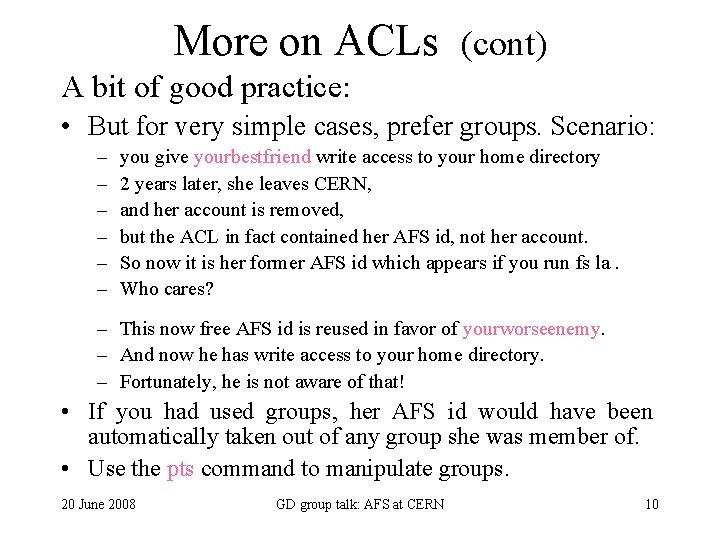

More on ACLs (cont)

A bit of good practice:

- Except for very simple cases, prefer groups. Scenario:
  - You give yourbestfriend write access to your home directory.
  - 2 years later, she leaves CERN, and her account is removed,
  - but the ACL in fact contained her AFS id, not her account. So now it is her former AFS id which appears if you run fs la. Who cares?
  - This now free AFS id is reused in favor of yourworstenemy.
  - And now he has write access to your home directory.
  - Fortunately, he is not aware of that!
- If you had used groups, her AFS id would have been automatically taken out of any group she was a member of.
- Use the pts command to manipulate groups.

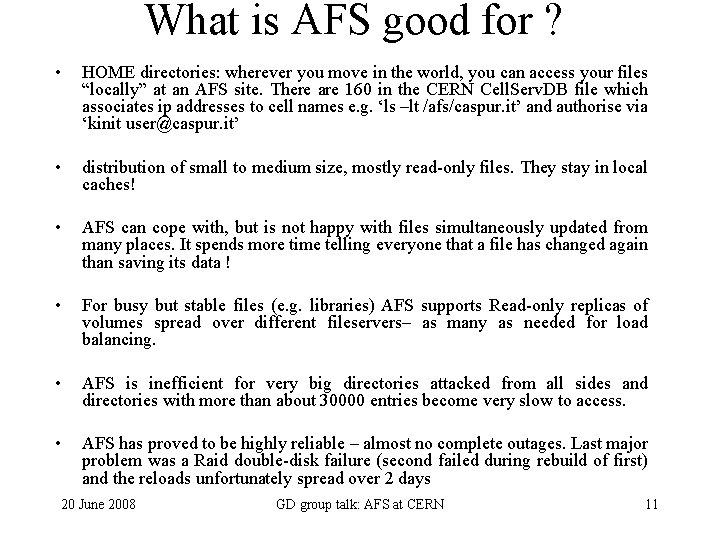

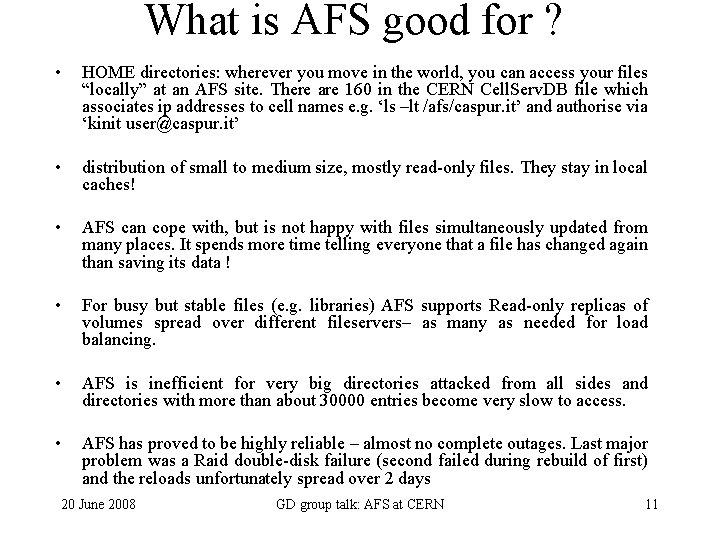

What is AFS good for?

- HOME directories: wherever you move in the world, you can access your files "locally" at an AFS site. There are 160 cells in the CERN CellServDB file, which associates IP addresses with cell names. E.g. run 'ls -lt /afs/caspur.it' and authorise via 'kinit user@caspur.it'.
- Distribution of small to medium size, mostly read-only files. They stay in local caches!
- AFS can cope with, but is not happy with, files simultaneously updated from many places. It spends more time telling everyone that a file has changed again than saving its data!
- For busy but stable files (e.g. libraries), AFS supports read-only replicas of volumes spread over different fileservers, as many as needed for load balancing.
- AFS is inefficient for very big directories attacked from all sides, and directories with more than about 30000 entries become very slow to access.
- AFS has proved to be highly reliable, with almost no complete outages. The last major problem was a RAID double-disk failure (the second disk failed during the rebuild of the first), and the reloads unfortunately spread over 2 days.

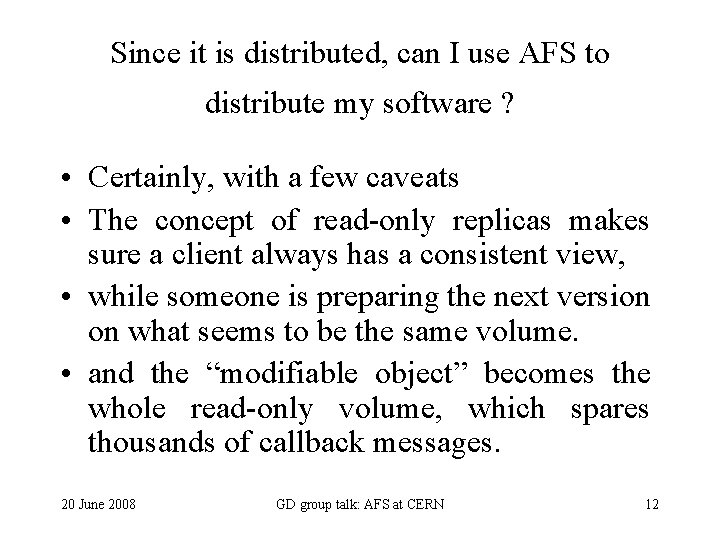

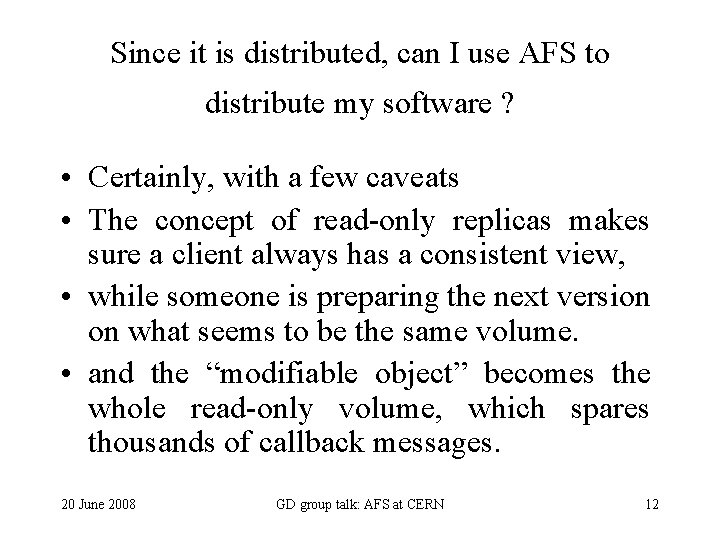

Since it is distributed, can I use AFS to distribute my software?

- Certainly, with a few caveats.
- The concept of read-only replicas makes sure a client always has a consistent view,
- while someone is preparing the next version on what seems to be the same volume,
- and the "modifiable object" becomes the whole read-only volume, which spares thousands of callback messages.

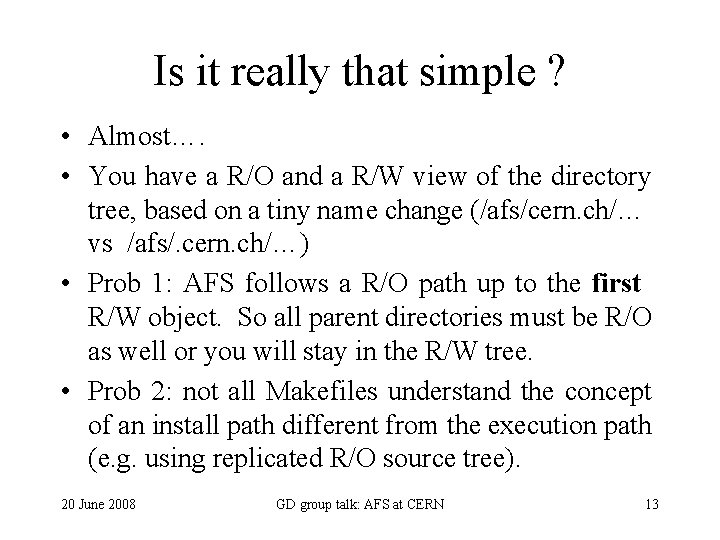

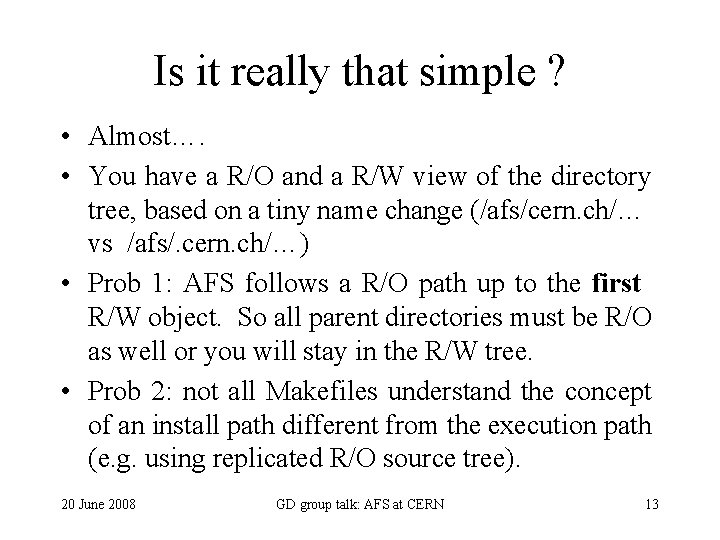

Is it really that simple?

- Almost…
- You have a R/O and a R/W view of the directory tree, based on a tiny name change (/afs/cern.ch/… vs /afs/.cern.ch/…).
- Problem 1: AFS follows a R/O path up to the first R/W object. So all parent directories must be R/O as well, or you will stay in the R/W tree.
- Problem 2: not all Makefiles understand the concept of an install path different from the execution path (e.g. when using a replicated R/O source tree).
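The "tiny name change" between the two views can be expressed as a simple path rewrite. The helper below is a hypothetical sketch (the name `to_rw_path` is invented here); it only manipulates strings and does not check whether the volumes involved are actually replicated.

```python
def to_rw_path(path, cell="cern.ch"):
    """Map a path in the R/O view (/afs/cell/...) to the R/W view (/afs/.cell/...)."""
    ro_prefix = f"/afs/{cell}/"
    rw_prefix = f"/afs/.{cell}/"
    if path.startswith(rw_prefix):
        return path  # already in the R/W tree
    if path.startswith(ro_prefix):
        return rw_prefix + path[len(ro_prefix):]
    raise ValueError(f"not a path in AFS cell {cell}: {path}")
```

An install script would write through the path returned by `to_rw_path`, while users keep executing from the original R/O path.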

But surely you made changes at CERN

- Of course!
- Mostly on the administrative side:
  - 12000+ users -> as many HOME directories
  - 45000 volumes
  - Delegate!
- For historical reasons, 2 independent mechanisms:
  - CERN Unix groups (2-letter codes) for HOME directories
  - Project space for physics data (a CERN internal mechanism built on the AFS APIs)
- each with its own set of administrators (often the same person) and different interfaces:
  - The CRA web interface for home directories
  - The lxplus afs_admin command for project space

User administration

- All done through CRA,
- mostly by group administrators.
- Within some sanity limits, AFS just obeys CRA (creation/removal of users, quota handling, passwords, …).
- We created AFS protection groups defined to follow Unix groups, but not all groups use them:
  - E.g. cern:zp contains all users in Unix group ZP (ATLAS). Group codes matter for the physics experiments: space and ACLs.
- Talk to your group administrator for home directory space.
- GD group members are mostly in CERN group code cg, where F. Baud-Lavigne is the CRA administrator. You can see your group with the 'phone userid -A' command and can ask to change it if needed, but you will have to change any ACLs yourself (e.g. to follow subdirectories: 'find . -type d -exec fs sa -dir {} -acl cern:cg rl \;').

Project space

- Mostly for experiments (in terms of space).
- Many smaller groups for software distribution, created in an ad-hoc manner.
- 250+ projects today.
- Power is delegated to project administrators.
- Users can borrow additional HOME space from a project:
  - Backed up: workspace: ~/w0, ~/w1 …
  - Not backed up: scratch area: ~/scratch0 …
  - But remember: HOME directories are handled by group admins, workspace & scratch areas by project admins!
  - /afs/cern.ch/project/gd has 450 GB of backed up project space and 150 GB of scratch project space, with many project administrators (see 'afs_admin list_project gd').

CERN Volume Naming Conventions

You can see the volume name and quota of a directory with

- fs lq /afs/cern.ch/……….

And you can see volume location information and basic statistics with

- vos exa volume-name

Under group space (through the CRA web pages):

- /afs/cern.ch/user/m/mylogin is volume name user.mylogin

while user workspaces come out of project space:

- /afs/cern.ch/user/m/mylogin/w0 is u.myproject.mylogin.0

but both are backed up.

- /afs/cern.ch/user/m/mylogin/scratch0 is s.myproject.mylogin.0 and is not backed up

And under project space (through the afs_admin command):

- /afs/cern.ch/project/myproject/precious is p.myproject.precious and is backed up
- /afs/cern.ch/project/myproject/scratch is q.myproject.scratch and is not backed up

Volume names beginning with user, u and p are backed up. Volume names beginning with s and q are not backed up.
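The backup rule at the end of this page depends only on the first component of the volume name, so it is easy to check mechanically. A minimal sketch, assuming the CERN conventions listed above; the function name `is_backed_up` is made up for illustration.

```python
BACKED_UP_PREFIXES = {"user", "u", "p"}
NOT_BACKED_UP_PREFIXES = {"s", "q"}


def is_backed_up(volume_name):
    """Tell from a CERN volume name whether the volume is backed up."""
    prefix = volume_name.split(".", 1)[0]
    if prefix in BACKED_UP_PREFIXES:
        return True
    if prefix in NOT_BACKED_UP_PREFIXES:
        return False
    # Anything else is outside the conventions described on this page.
    raise ValueError(f"unknown volume prefix: {prefix}")
```

The volume name itself would come from 'fs lq' on the directory in question.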

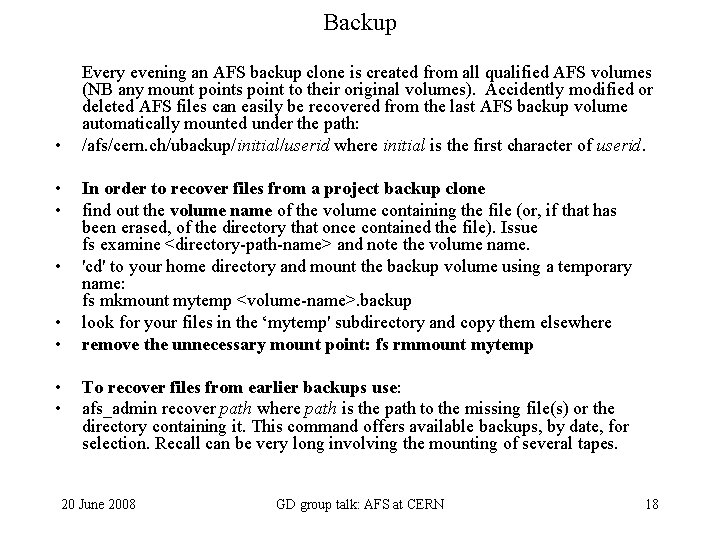

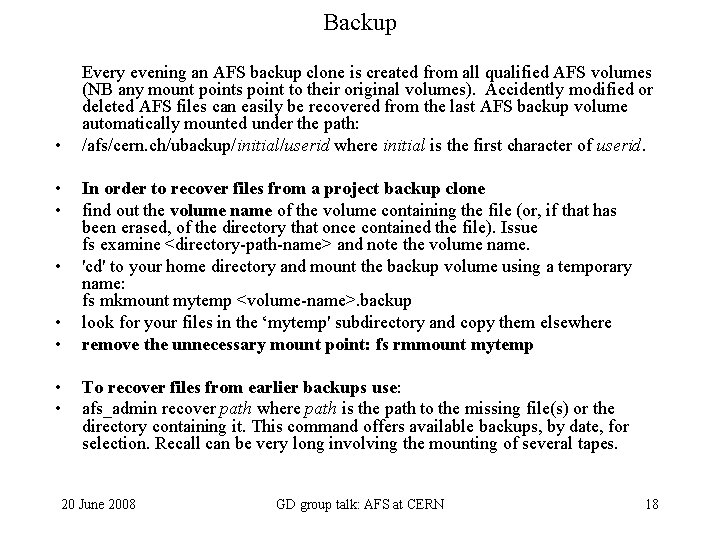

Backup

Every evening an AFS backup clone is created from all qualified AFS volumes (NB: any mount points point to their original volumes). Accidentally modified or deleted AFS files can easily be recovered from the last AFS backup volume, automatically mounted under the path:

- /afs/cern.ch/ubackup/initial/userid, where initial is the first character of userid.

In order to recover files from a project backup clone:

- Find out the volume name of the volume containing the file (or, if that has been erased, of the directory that once contained the file): issue fs examine <directory-path-name> and note the volume name.
- 'cd' to your home directory and mount the backup volume using a temporary name: fs mkmount mytemp <volume-name>.backup
- Look for your files in the 'mytemp' subdirectory and copy them elsewhere.
- Remove the unnecessary mount point: fs rmmount mytemp

To recover files from earlier backups use: afs_admin recover path, where path is the path to the missing file(s) or the directory containing them. This command offers available backups, by date, for selection. Recall can take very long, as it may involve mounting several tapes.
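The two recovery routes above can be spelled out in a short sketch. Both helper names (`user_backup_path`, `project_recovery_commands`) are hypothetical; the second one only builds the command strings quoted above and does not run them.

```python
def user_backup_path(userid, cell="cern.ch"):
    """Path under which last night's backup of a home directory is mounted."""
    if not userid:
        raise ValueError("userid must not be empty")
    initial = userid[0]
    return f"/afs/{cell}/ubackup/{initial}/{userid}"


def project_recovery_commands(volume_name, mount_name="mytemp"):
    """Commands to mount and later unmount the backup clone of a project volume.

    volume_name is the name noted from 'fs examine'; between the two
    commands you copy the files you need out of the temporary mount point.
    """
    return [
        f"fs mkmount {mount_name} {volume_name}.backup",
        f"fs rmmount {mount_name}",
    ]
```

For userid nopc3 the first helper gives /afs/cern.ch/ubackup/n/nopc3.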

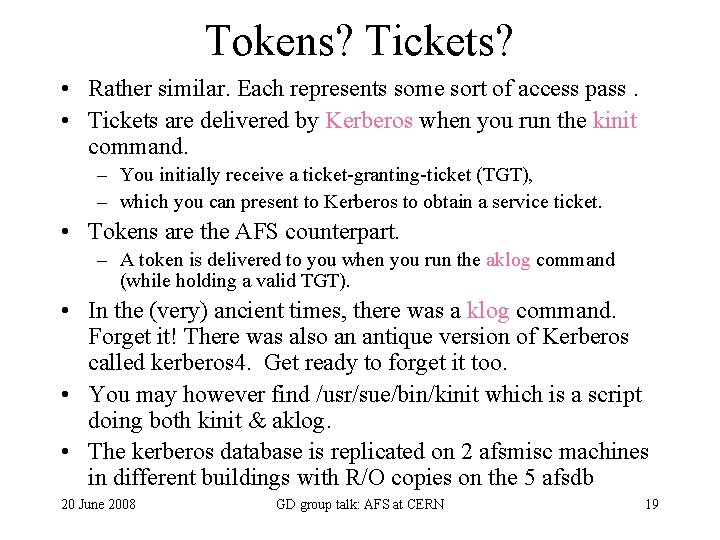

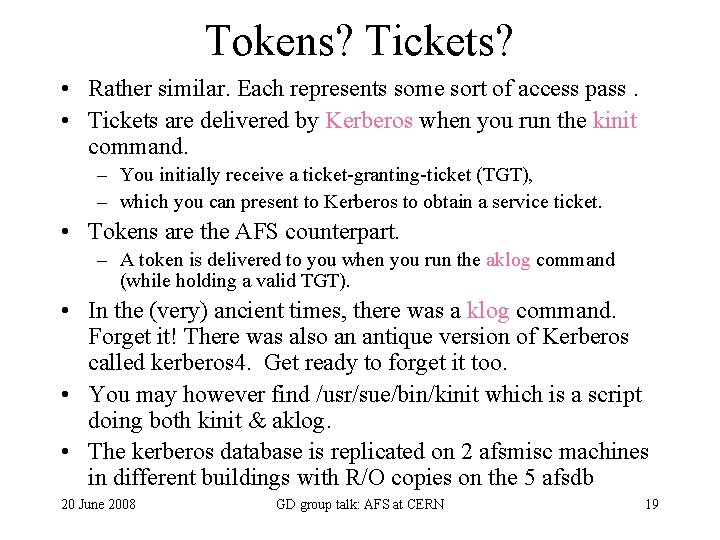

Tokens? Tickets?

- Rather similar. Each represents some sort of access pass.
- Tickets are delivered by Kerberos when you run the kinit command.
  - You initially receive a ticket-granting ticket (TGT),
  - which you can present to Kerberos to obtain a service ticket.
- Tokens are the AFS counterpart.
  - A token is delivered to you when you run the aklog command (while holding a valid TGT).
- In the (very) ancient times, there was a klog command. Forget it! There was also an antique version of Kerberos called Kerberos 4. Get ready to forget it too.
- You may however find /usr/sue/bin/kinit, which is a script doing both kinit & aklog.
- The Kerberos database is replicated on 2 afsmisc machines in different buildings, with R/O copies on the 5 afsdb machines.

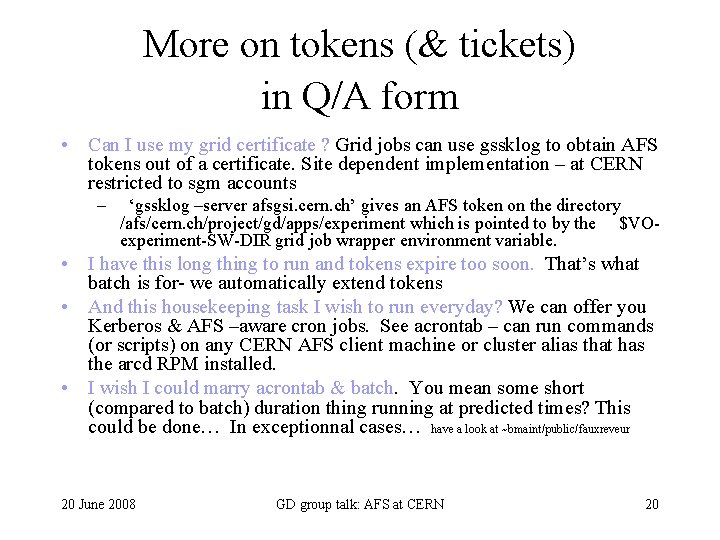

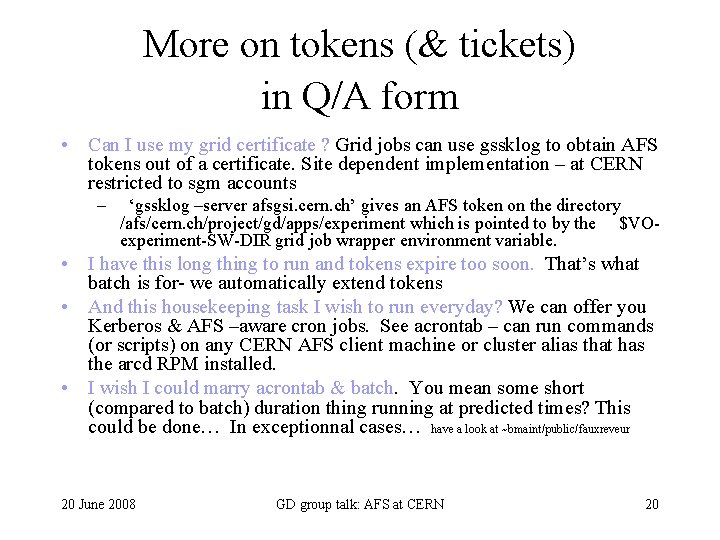

More on tokens (& tickets) in Q/A form

- Can I use my grid certificate? Grid jobs can use gssklog to obtain AFS tokens out of a certificate. The implementation is site dependent; at CERN it is restricted to sgm accounts. 'gssklog -server afsgsi.cern.ch' gives an AFS token on the directory /afs/cern.ch/project/gd/apps/experiment, which is pointed to by the $VOexperiment-SW-DIR grid job wrapper environment variable.
- I have this long thing to run and tokens expire too soon. That's what batch is for: we automatically extend tokens.
- And this housekeeping task I wish to run every day? We can offer you Kerberos & AFS-aware cron jobs. See acrontab: it can run commands (or scripts) on any CERN AFS client machine or cluster alias that has the arcd RPM installed.
- I wish I could marry acrontab & batch. You mean some short (compared to batch) duration thing running at predicted times? This could be done… in exceptional cases… Have a look at ~bmaint/public/fauxreveur

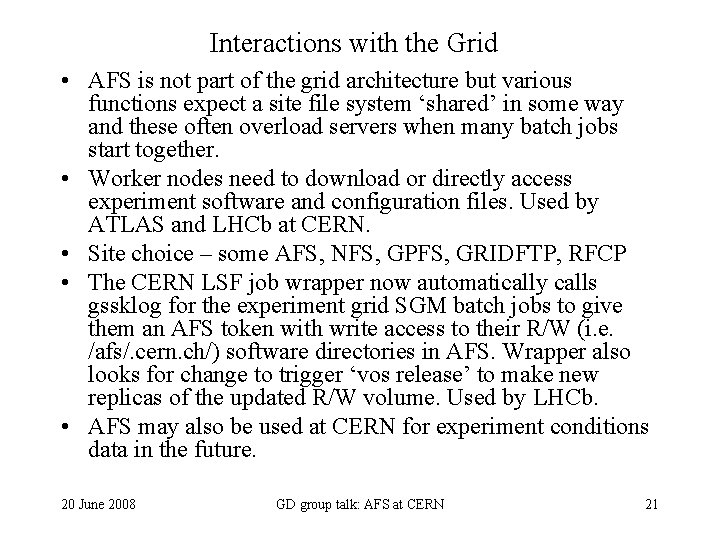

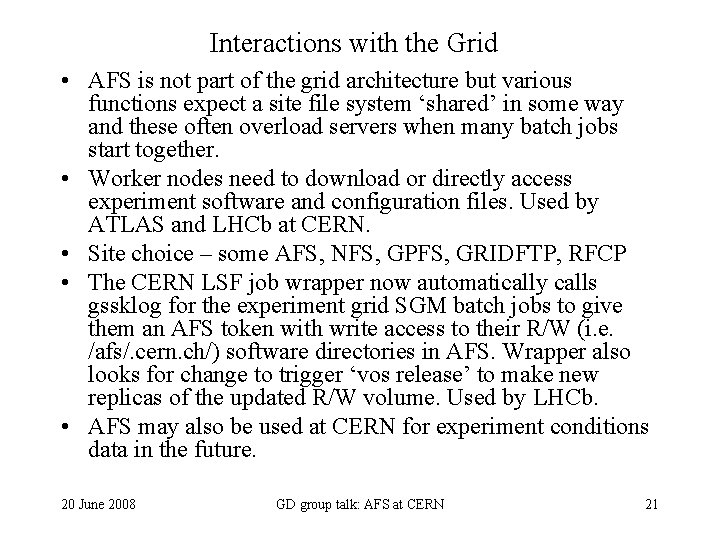

Interactions with the Grid

- AFS is not part of the grid architecture, but various functions expect a site file system 'shared' in some way, and these often overload servers when many batch jobs start together.
- Worker nodes need to download or directly access experiment software and configuration files. Used by ATLAS and LHCb at CERN.
- Site choice: some AFS, NFS, GPFS, GRIDFTP, RFCP.
- The CERN LSF job wrapper now automatically calls gssklog for the experiment grid SGM batch jobs, to give them an AFS token with write access to their R/W (i.e. /afs/.cern.ch/) software directories in AFS. The wrapper also looks for changes, in order to trigger 'vos release' and make new replicas of the updated R/W volume. Used by LHCb.
- AFS may also be used at CERN for experiment conditions data in the future.

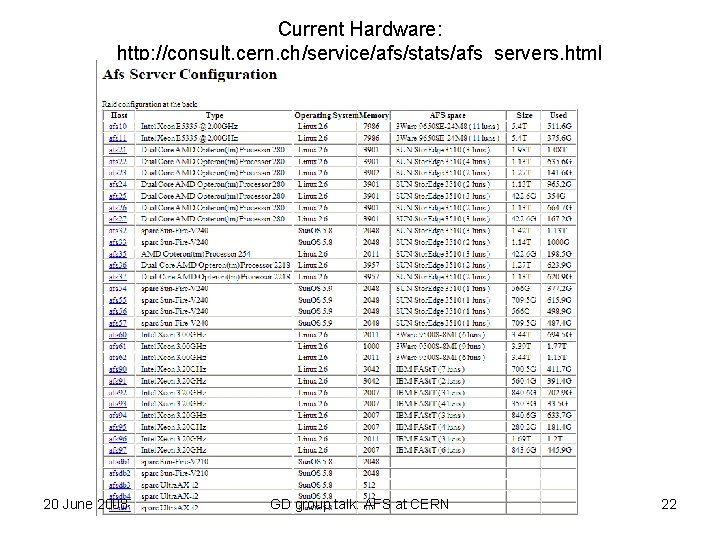

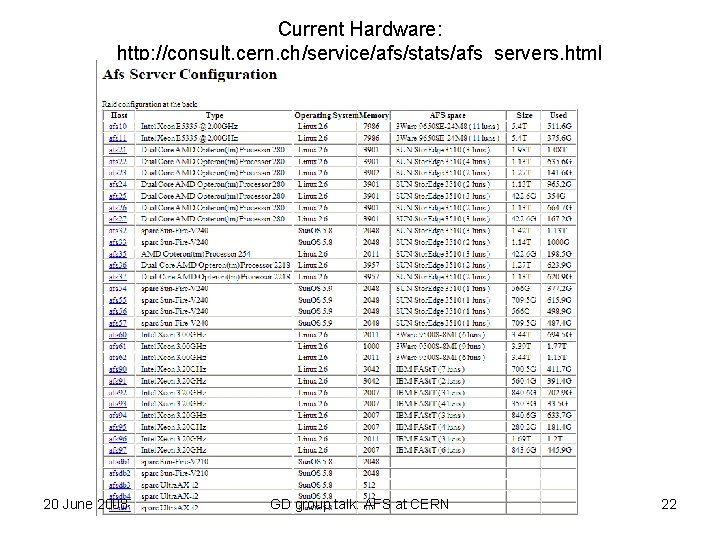

Current Hardware: http://consult.cern.ch/service/afs/stats/afs_servers.html

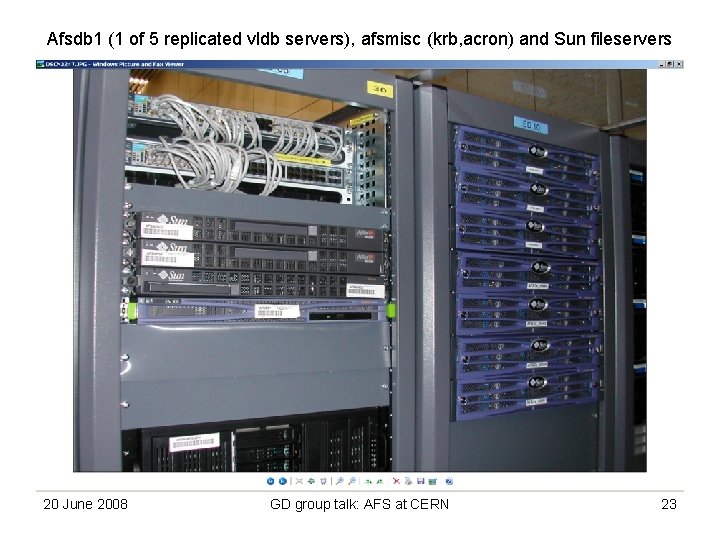

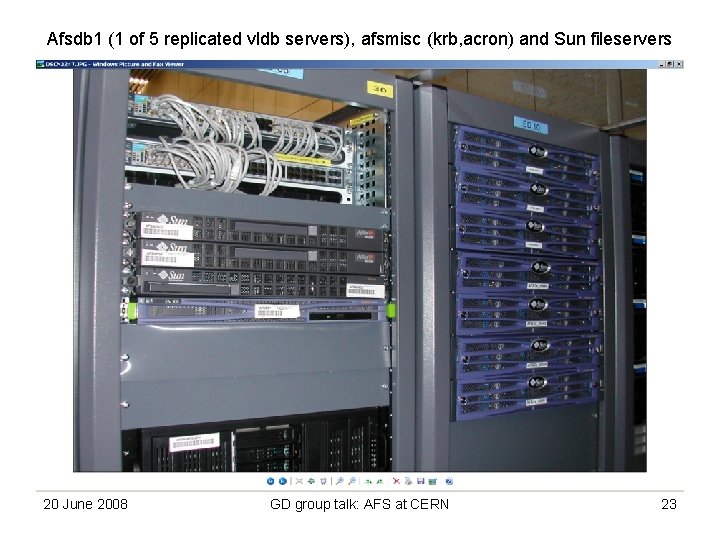

Afsdb1 (1 of 5 replicated VLDB servers), afsmisc (krb, acron) and Sun fileservers

Some recent Sun and Dell fileservers. Generic/3ware servers are only used for scratch volumes.

Any documentation available?

- If you mean up-to-date documentation, you are probably dreaming.
- http://services.web.cern.ch/services/afs/ is not that obsolete, after all.
- And of course you have the official info on the OpenAFS site: http://www.openafs.org/main.html