Summary of MDPs until Now Finitehorizon MDPs Nonstationary

![Real Time Dynamic Programming [Barto, Bradtke, Singh’ 95] • Trial: simulate greedy policy starting Real Time Dynamic Programming [Barto, Bradtke, Singh’ 95] • Trial: simulate greedy policy starting](https://slidetodoc.com/presentation_image_h2/9e7e6078782bed09bef421262a49b06e/image-4.jpg)

![Labeled RTDP [Bonet&Geffner’ 03] Converged means bellman residual is less than e • Initialise Labeled RTDP [Bonet&Geffner’ 03] Converged means bellman residual is less than e • Initialise](https://slidetodoc.com/presentation_image_h2/9e7e6078782bed09bef421262a49b06e/image-9.jpg)

- Slides: 48

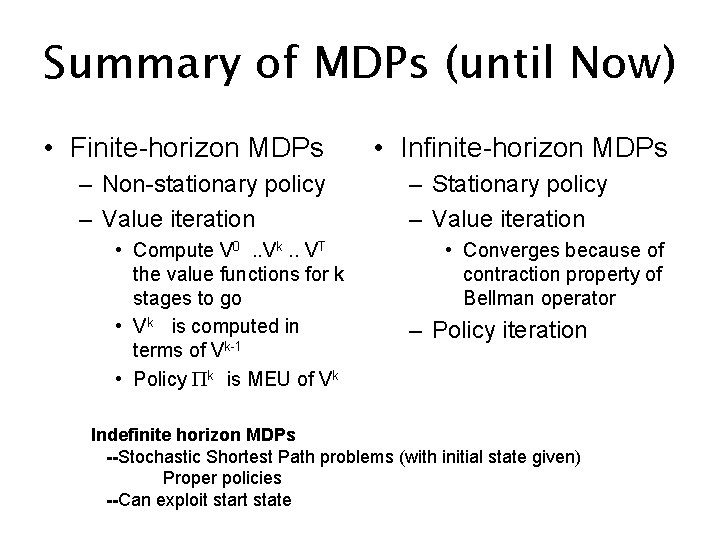

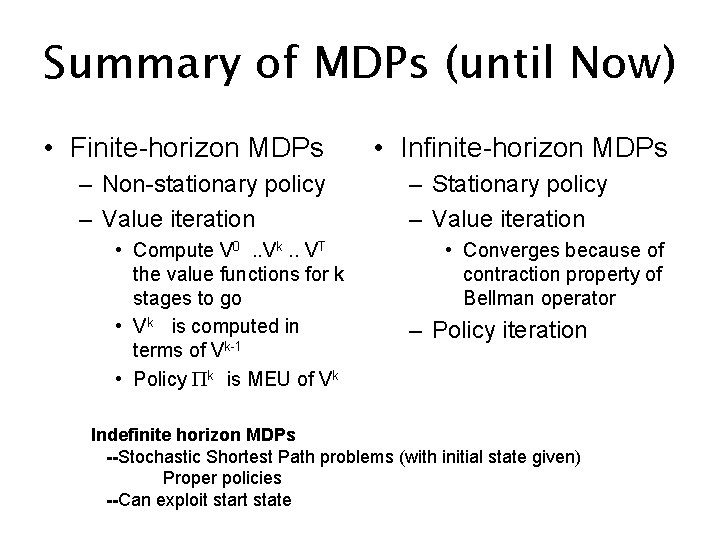

Summary of MDPs (until Now) • Finite-horizon MDPs – Non-stationary policy – Value iteration • Compute V 0. . Vk. . VT the value functions for k stages to go • Vk is computed in terms of Vk-1 • Policy Pk is MEU of Vk • Infinite-horizon MDPs – Stationary policy – Value iteration • Converges because of contraction property of Bellman operator – Policy iteration Indefinite horizon MDPs --Stochastic Shortest Path problems (with initial state given) Proper policies --Can exploit start state

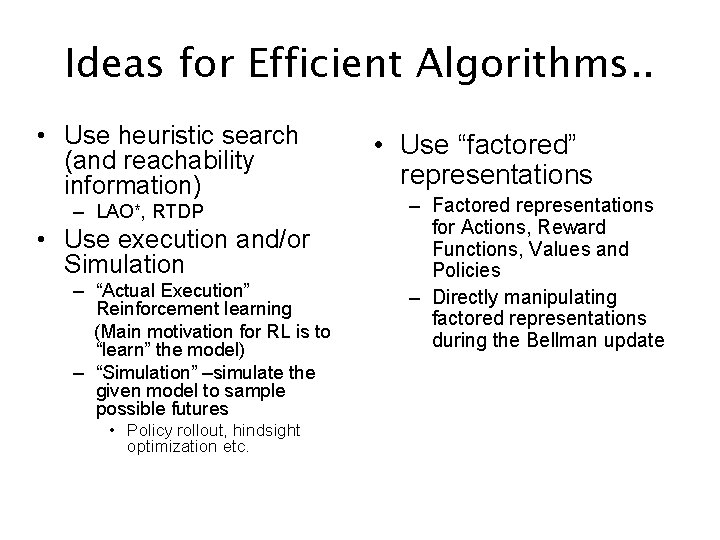

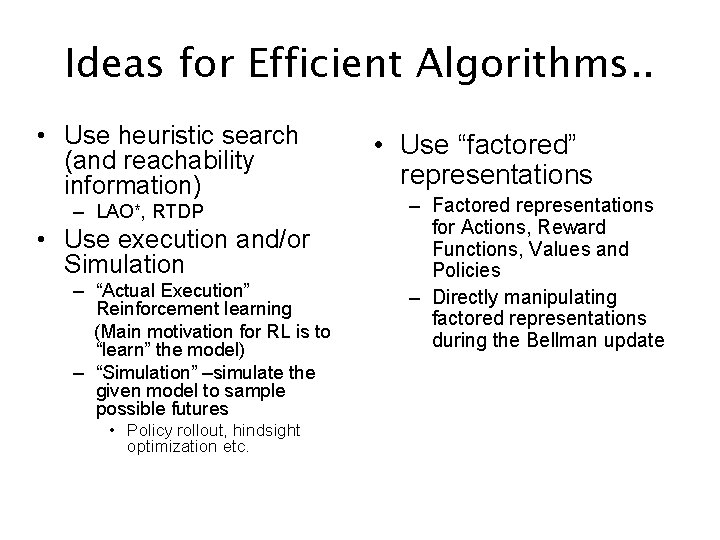

Ideas for Efficient Algorithms. . • Use heuristic search (and reachability information) – LAO*, RTDP • Use execution and/or Simulation – “Actual Execution” Reinforcement learning (Main motivation for RL is to “learn” the model) – “Simulation” –simulate the given model to sample possible futures • Policy rollout, hindsight optimization etc. • Use “factored” representations – Factored representations for Actions, Reward Functions, Values and Policies – Directly manipulating factored representations during the Bellman update

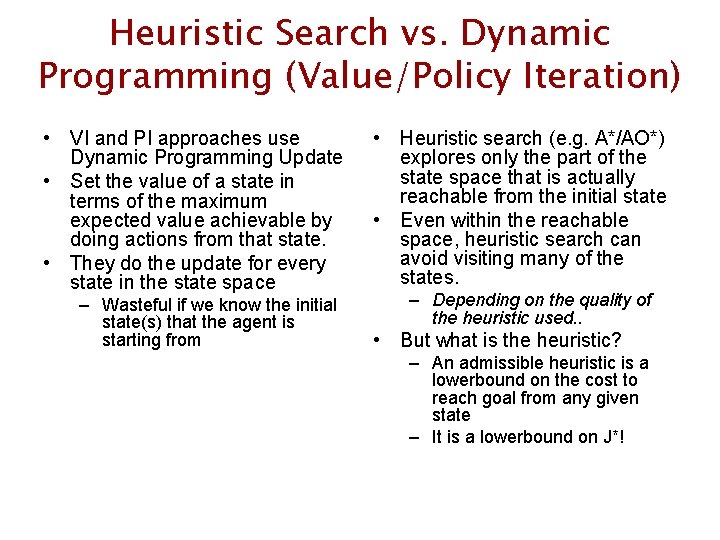

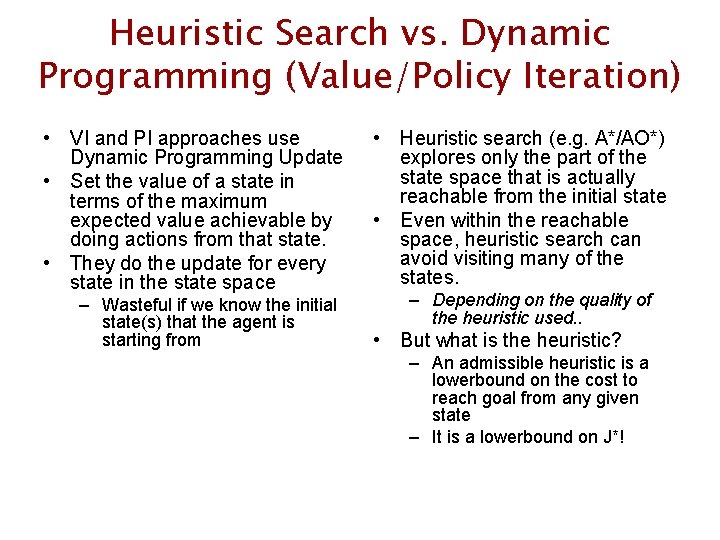

Heuristic Search vs. Dynamic Programming (Value/Policy Iteration) • VI and PI approaches use Dynamic Programming Update • Set the value of a state in terms of the maximum expected value achievable by doing actions from that state. • They do the update for every state in the state space – Wasteful if we know the initial state(s) that the agent is starting from • Heuristic search (e. g. A*/AO*) explores only the part of the state space that is actually reachable from the initial state • Even within the reachable space, heuristic search can avoid visiting many of the states. – Depending on the quality of the heuristic used. . • But what is the heuristic? – An admissible heuristic is a lowerbound on the cost to reach goal from any given state – It is a lowerbound on J*!

![Real Time Dynamic Programming Barto Bradtke Singh 95 Trial simulate greedy policy starting Real Time Dynamic Programming [Barto, Bradtke, Singh’ 95] • Trial: simulate greedy policy starting](https://slidetodoc.com/presentation_image_h2/9e7e6078782bed09bef421262a49b06e/image-4.jpg)

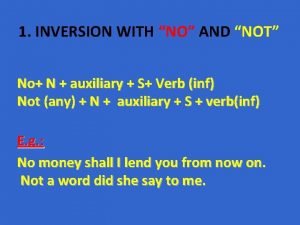

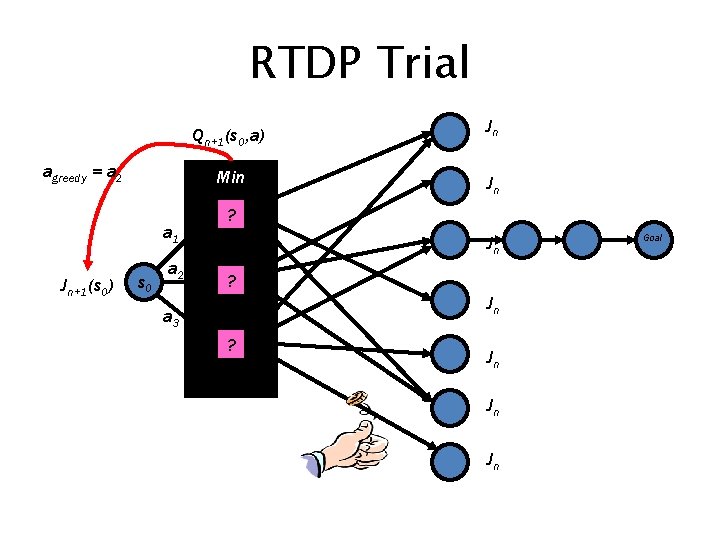

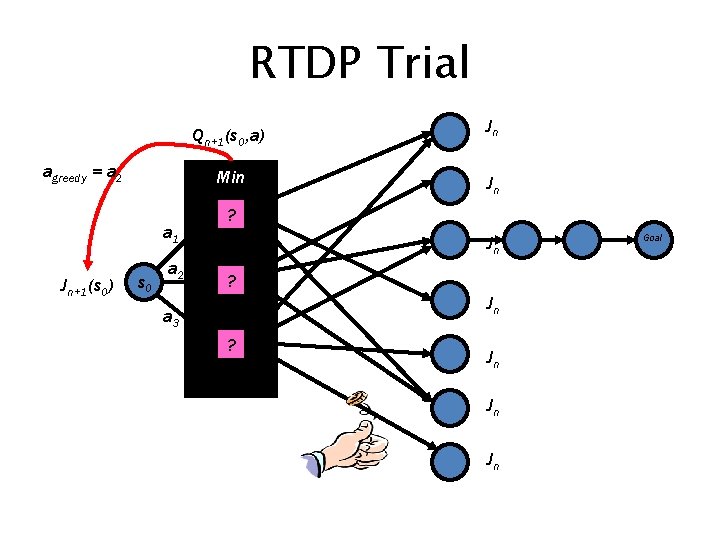

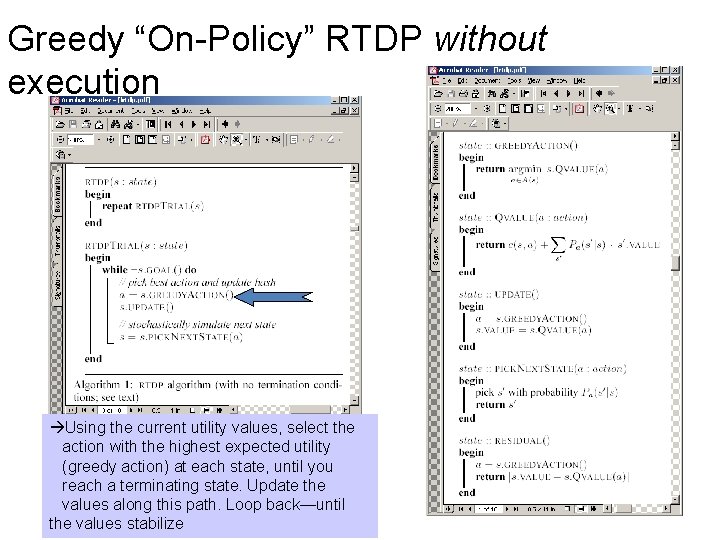

Real Time Dynamic Programming [Barto, Bradtke, Singh’ 95] • Trial: simulate greedy policy starting from start state; perform Bellman backup on visited states • RTDP: repeat Trials until cost function converges RTDP was originally introduced for Reinforcement Learning For RL, instead of “simulate” you “execute” You also have to do “exploration” in addition to “exploitation” with probability p, follow the greedy policy with 1 -p pick a random action

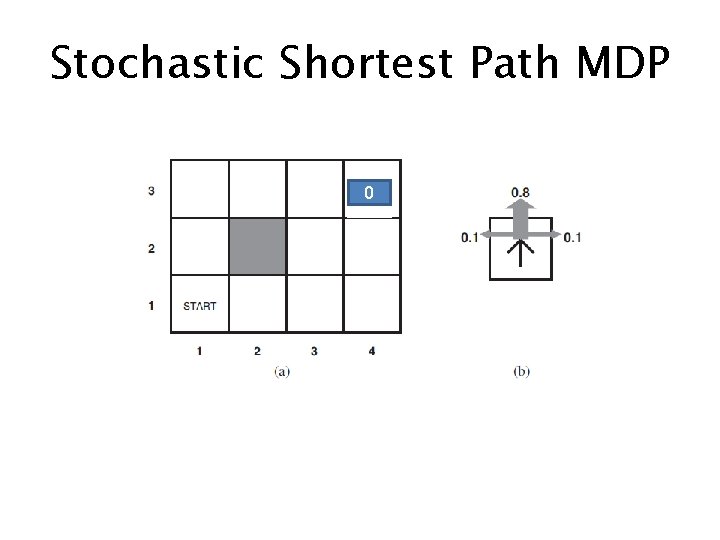

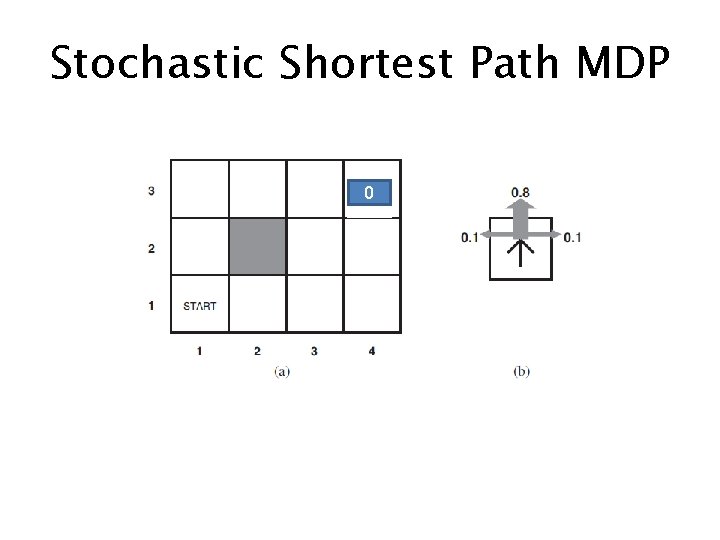

Stochastic Shortest Path MDP 0

RTDP Trial Qn+1(s 0, a) agreedy = a 2 Min a 1 Jn+1(s 0) s 0 a 2 Jn Jn ? Jn a 3 ? Jn Jn Jn Goal

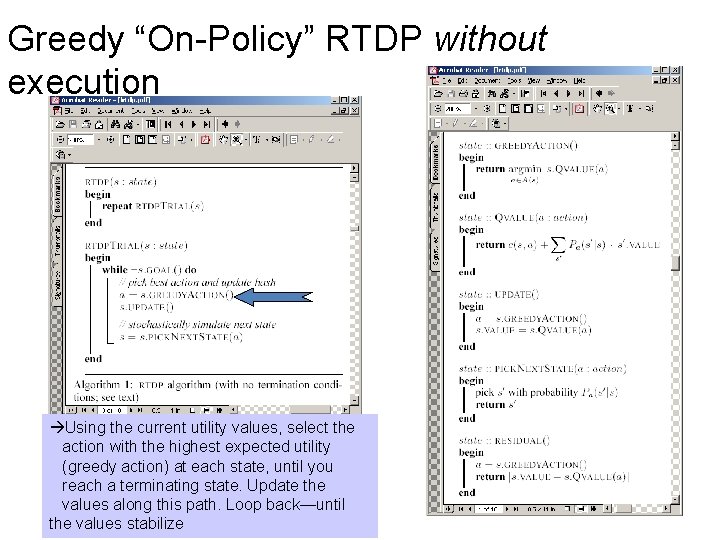

Greedy “On-Policy” RTDP without execution Using the current utility values, select the action with the highest expected utility (greedy action) at each state, until you reach a terminating state. Update the values along this path. Loop back—until the values stabilize

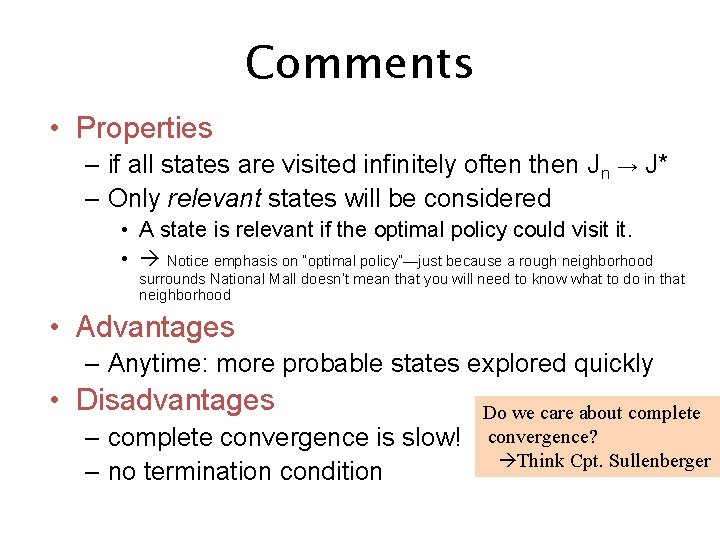

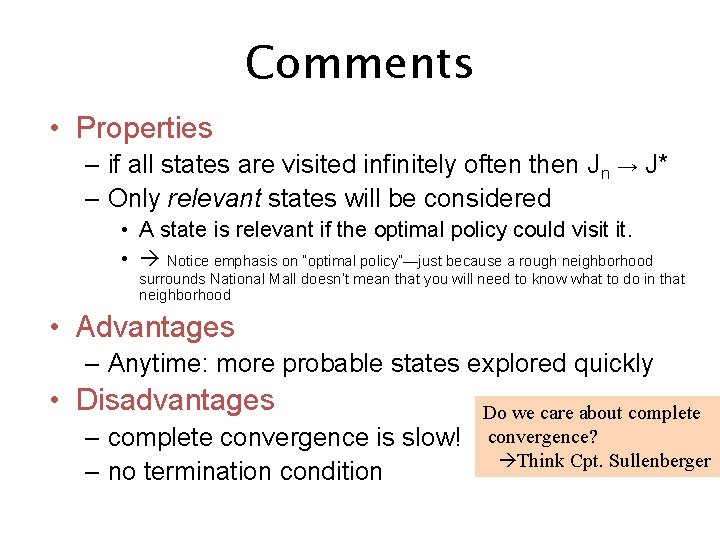

Comments • Properties – if all states are visited infinitely often then Jn → J* – Only relevant states will be considered • A state is relevant if the optimal policy could visit it. • Notice emphasis on “optimal policy”—just because a rough neighborhood surrounds National Mall doesn’t mean that you will need to know what to do in that neighborhood • Advantages – Anytime: more probable states explored quickly • Disadvantages – complete convergence is slow! – no termination condition Do we care about complete convergence? Think Cpt. Sullenberger

![Labeled RTDP BonetGeffner 03 Converged means bellman residual is less than e Initialise Labeled RTDP [Bonet&Geffner’ 03] Converged means bellman residual is less than e • Initialise](https://slidetodoc.com/presentation_image_h2/9e7e6078782bed09bef421262a49b06e/image-9.jpg)

Labeled RTDP [Bonet&Geffner’ 03] Converged means bellman residual is less than e • Initialise J 0 with an admissible heuristic ts – ⇒ Jn monotonically increases s best action hi s co st Q gh hi s gh – if the Jn for that state has converged Q co s • Label a state as solved G ) J(s) won’t change! • Backpropagate ‘solved’ labeling • Stop trials when they reach any solved state • Terminate when s 0 is solved ? G t both s and t get solved together

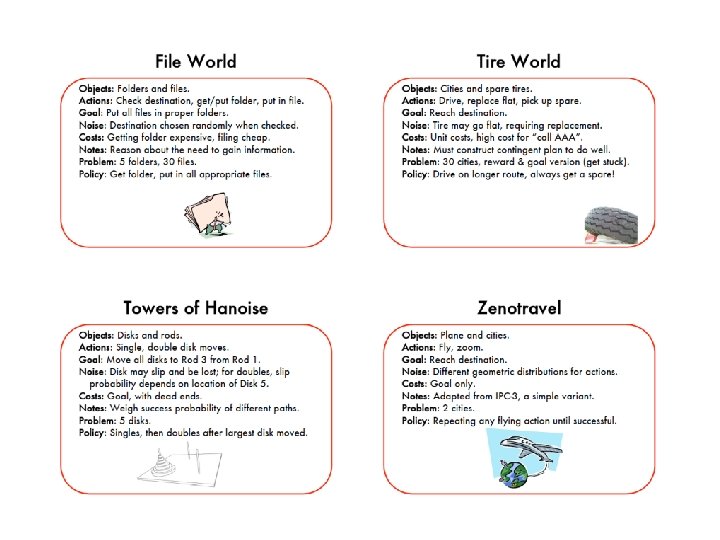

Probabilistic Planning --The competition (IPPC) --The Action language. . (PPDDL)

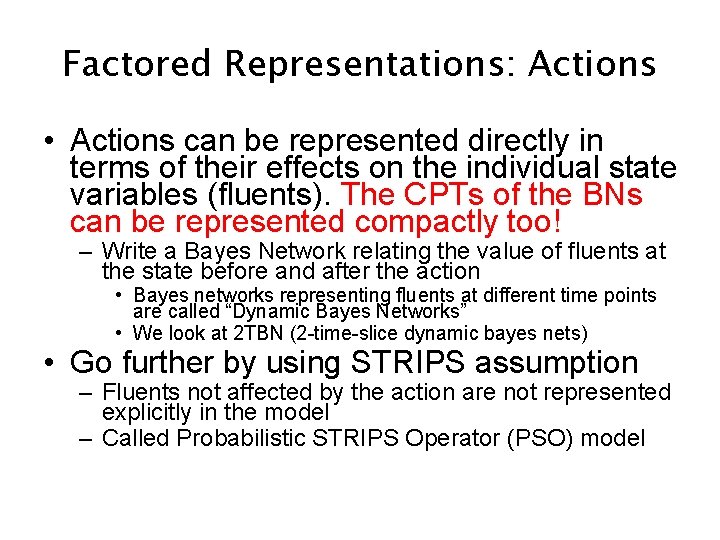

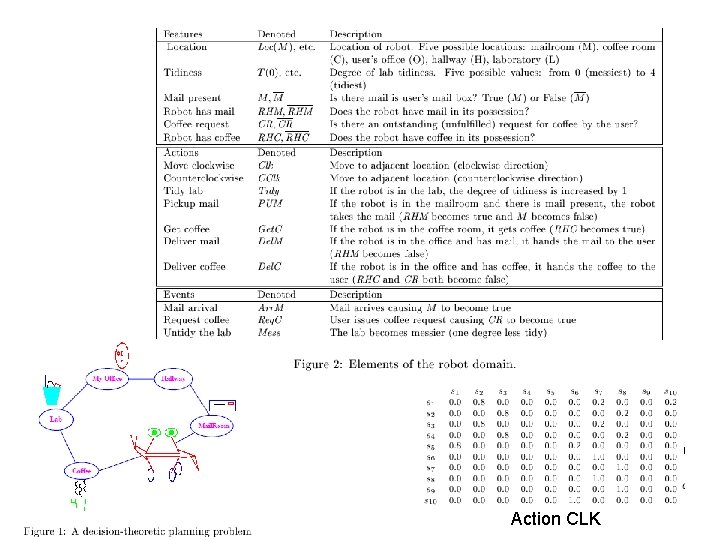

Factored Representations: Actions • Actions can be represented directly in terms of their effects on the individual state variables (fluents). The CPTs of the BNs can be represented compactly too! – Write a Bayes Network relating the value of fluents at the state before and after the action • Bayes networks representing fluents at different time points are called “Dynamic Bayes Networks” • We look at 2 TBN (2 -time-slice dynamic bayes nets) • Go further by using STRIPS assumption – Fluents not affected by the action are not represented explicitly in the model – Called Probabilistic STRIPS Operator (PSO) model

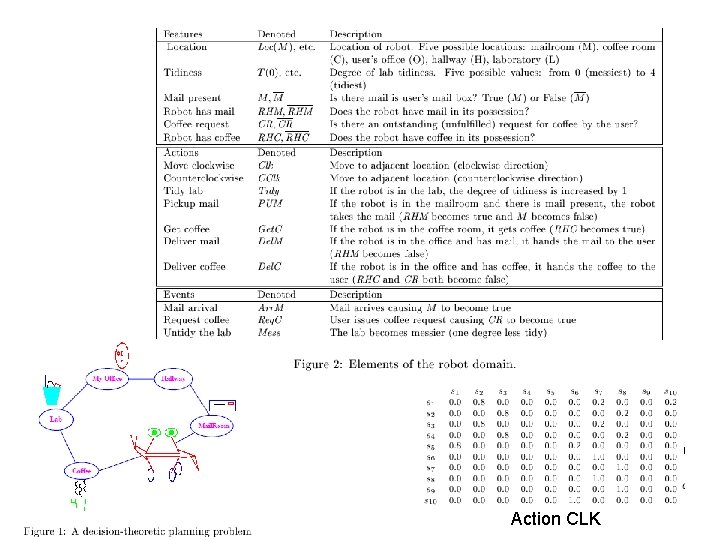

Action CLK

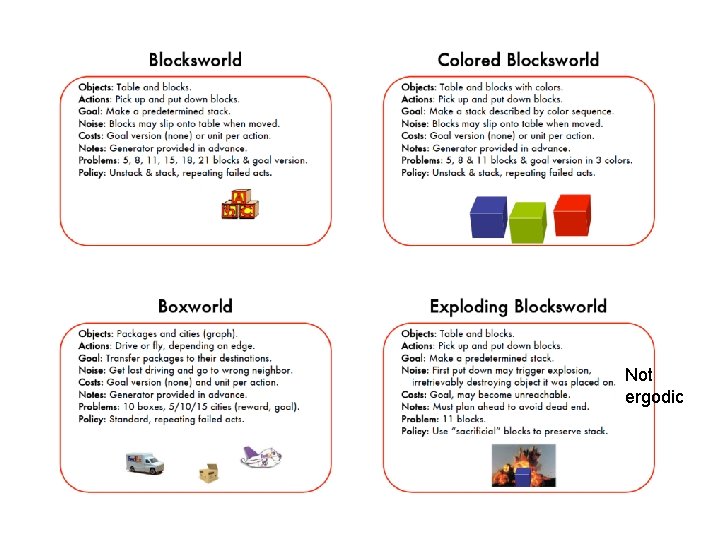

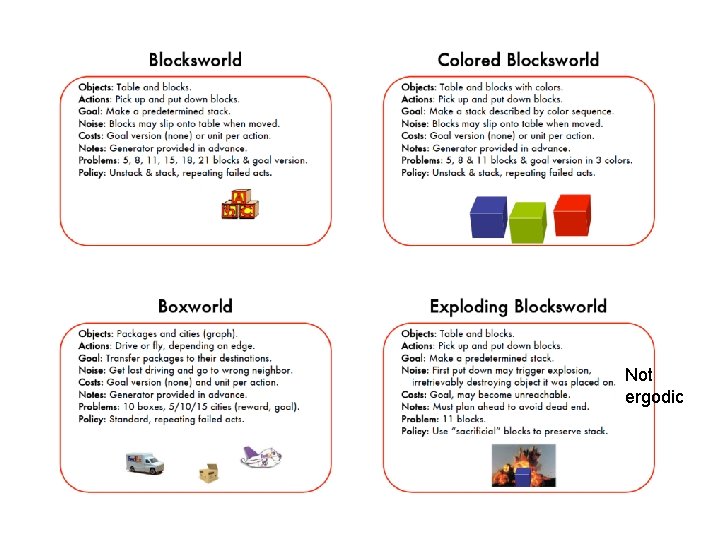

Not ergodic

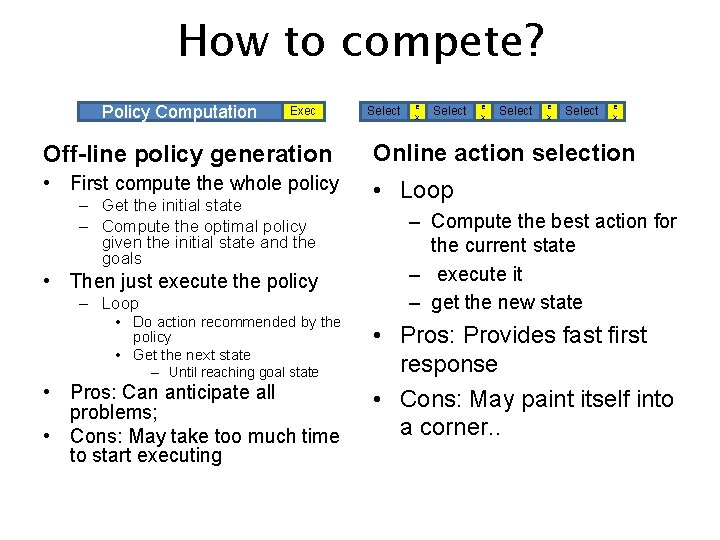

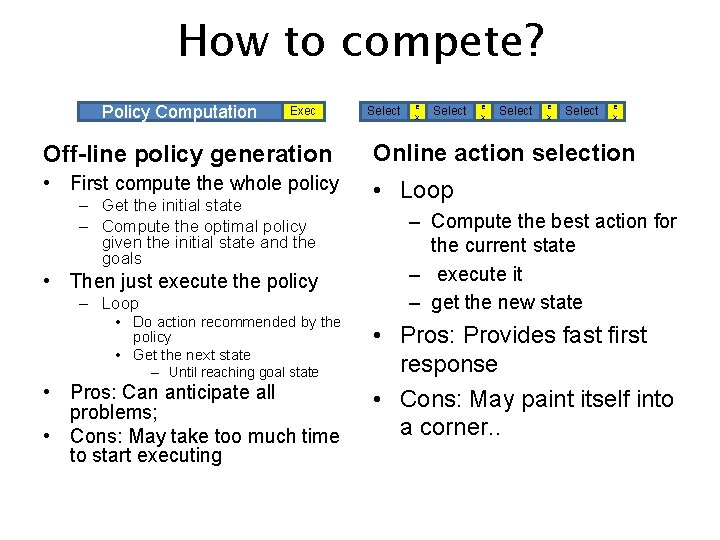

How to compete? Policy Computation Exec Select e x Off-line policy generation Online action selection • First compute the whole policy • Loop – Get the initial state – Compute the optimal policy given the initial state and the goals • Then just execute the policy – Loop • Do action recommended by the policy • Get the next state – Until reaching goal state • Pros: Can anticipate all problems; • Cons: May take too much time to start executing – Compute the best action for the current state – execute it – get the new state • Pros: Provides fast first response • Cons: May paint itself into a corner. .

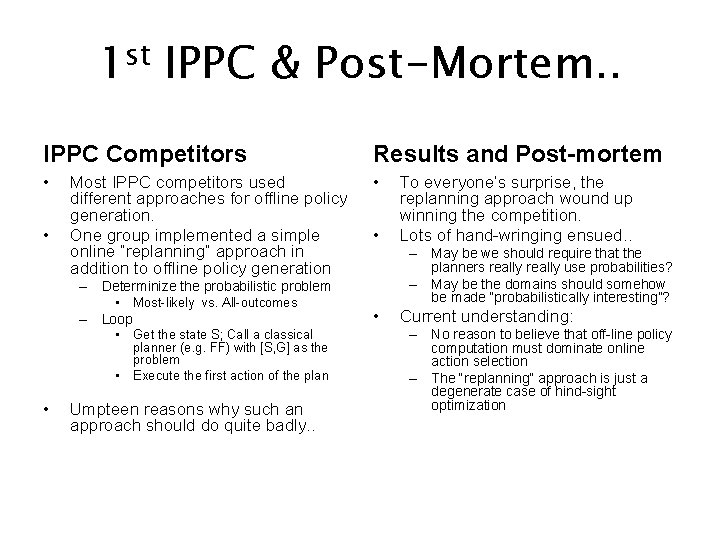

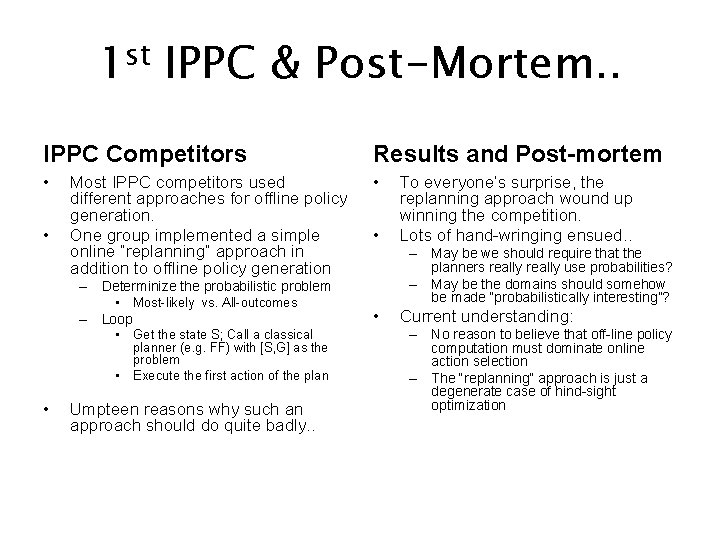

1 st IPPC & Post-Mortem. . IPPC Competitors Results and Post-mortem • • • Most IPPC competitors used different approaches for offline policy generation. One group implemented a simple online “replanning” approach in addition to offline policy generation • – May be we should require that the planners really use probabilities? – May be the domains should somehow be made “probabilistically interesting”? – Determinize the probabilistic problem – • • Most-likely vs. All-outcomes Loop • Get the state S; Call a classical planner (e. g. FF) with [S, G] as the problem • Execute the first action of the plan Umpteen reasons why such an approach should do quite badly. . To everyone’s surprise, the replanning approach wound up winning the competition. Lots of hand-wringing ensued. . • Current understanding: – No reason to believe that off-line policy computation must dominate online action selection – The “replanning” approach is just a degenerate case of hind-sight optimization

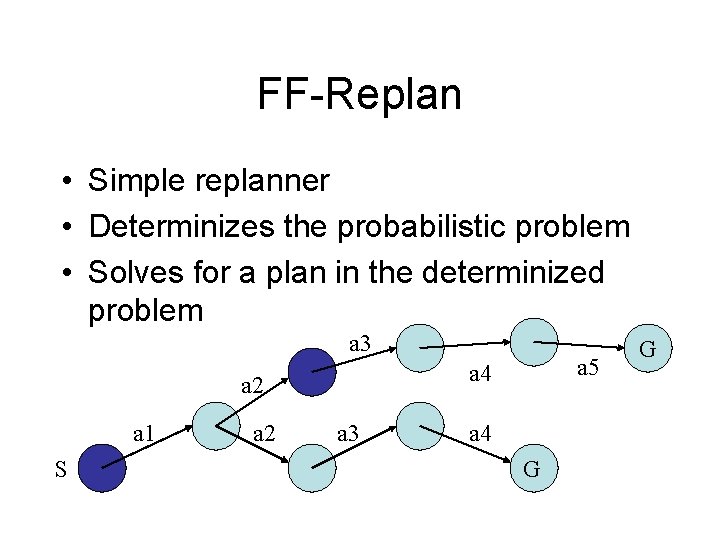

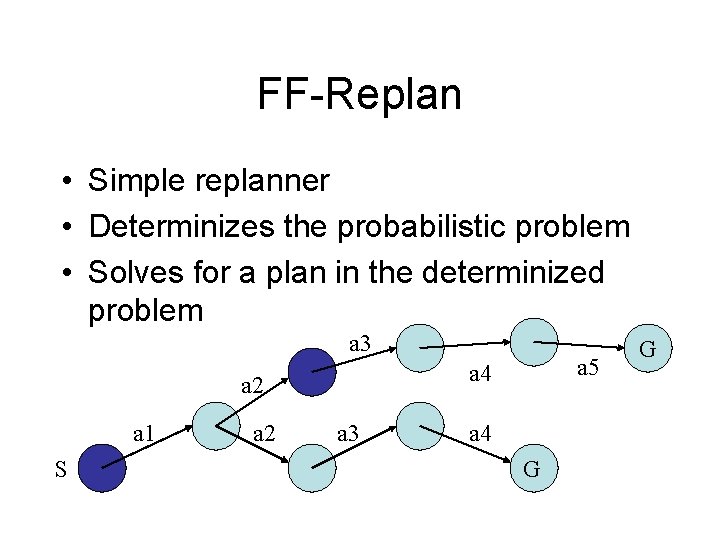

FF-Replan • Simple replanner • Determinizes the probabilistic problem • Solves for a plan in the determinized problem a 3 a 2 a 1 S a 2 a 5 a 4 a 3 a 4 G G

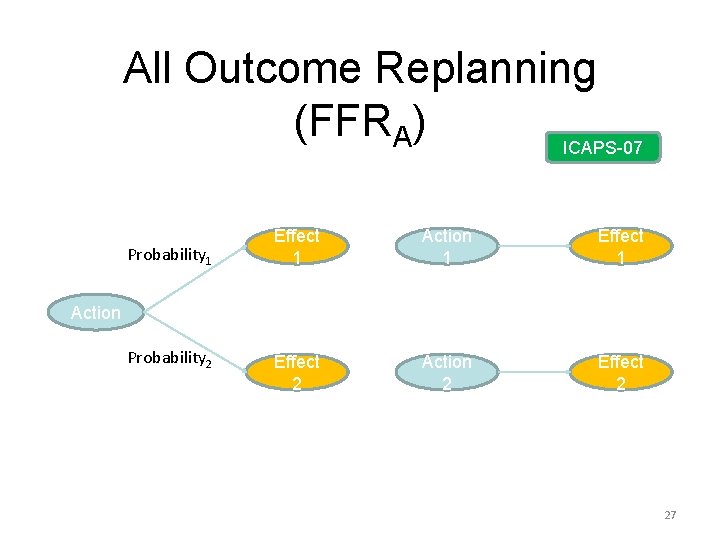

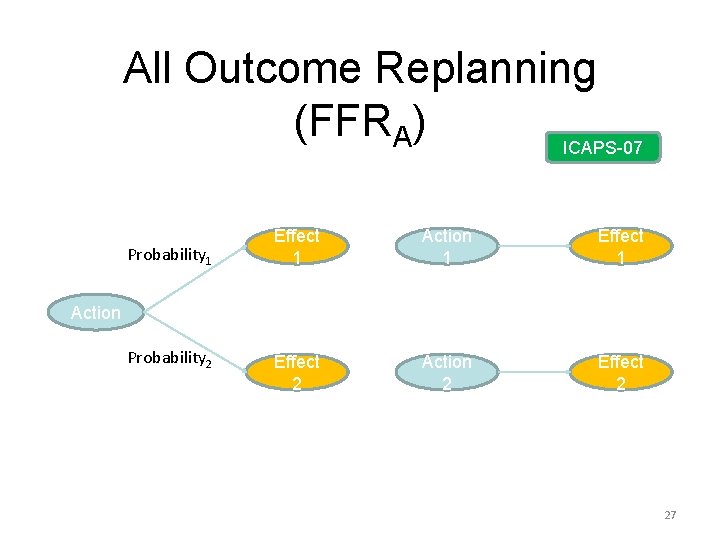

All Outcome Replanning (FFRA) ICAPS-07 Probability 1 Effect 1 Action 1 Effect 2 Action 2 Effect 2 Action Probability 2 27

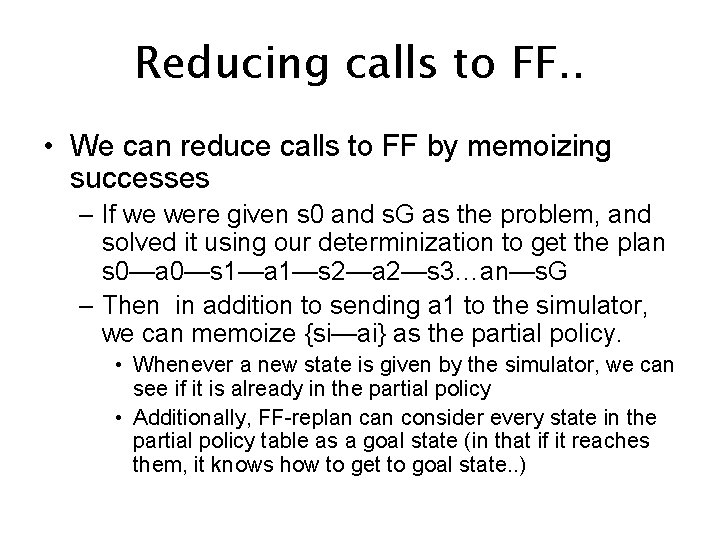

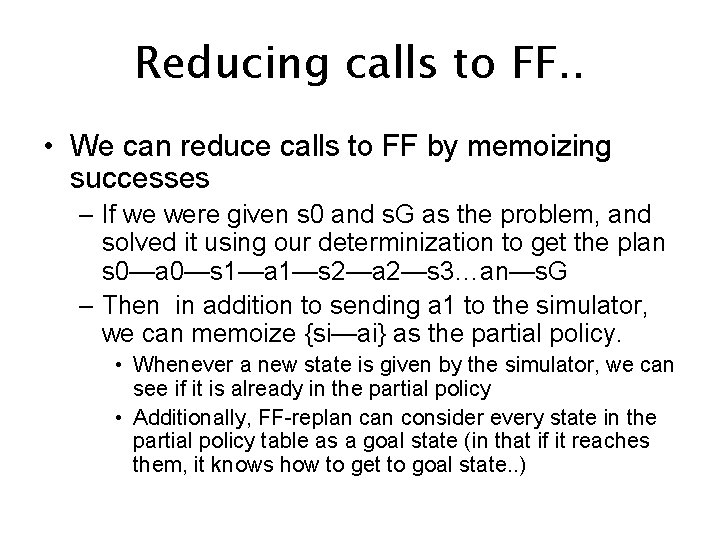

Reducing calls to FF. . • We can reduce calls to FF by memoizing successes – If we were given s 0 and s. G as the problem, and solved it using our determinization to get the plan s 0—a 0—s 1—a 1—s 2—a 2—s 3…an—s. G – Then in addition to sending a 1 to the simulator, we can memoize {si—ai} as the partial policy. • Whenever a new state is given by the simulator, we can see if it is already in the partial policy • Additionally, FF-replan consider every state in the partial policy table as a goal state (in that if it reaches them, it knows how to get to goal state. . )

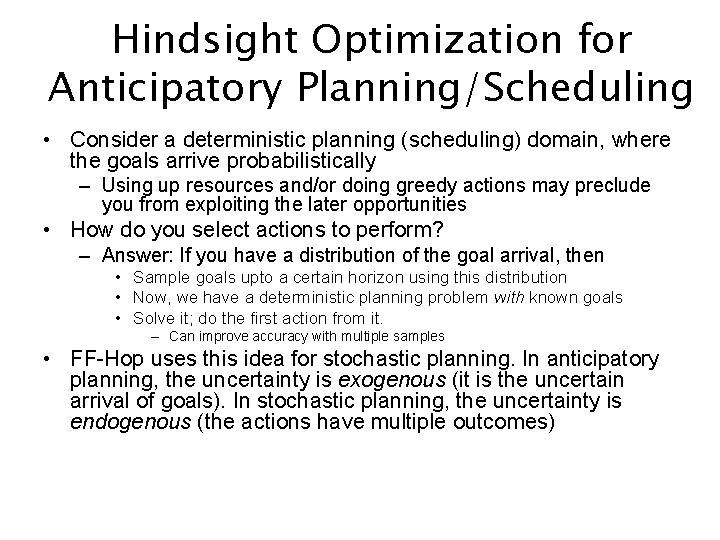

Hindsight Optimization for Anticipatory Planning/Scheduling • Consider a deterministic planning (scheduling) domain, where the goals arrive probabilistically – Using up resources and/or doing greedy actions may preclude you from exploiting the later opportunities • How do you select actions to perform? – Answer: If you have a distribution of the goal arrival, then • Sample goals upto a certain horizon using this distribution • Now, we have a deterministic planning problem with known goals • Solve it; do the first action from it. – Can improve accuracy with multiple samples • FF-Hop uses this idea for stochastic planning. In anticipatory planning, the uncertainty is exogenous (it is the uncertain arrival of goals). In stochastic planning, the uncertainty is endogenous (the actions have multiple outcomes)

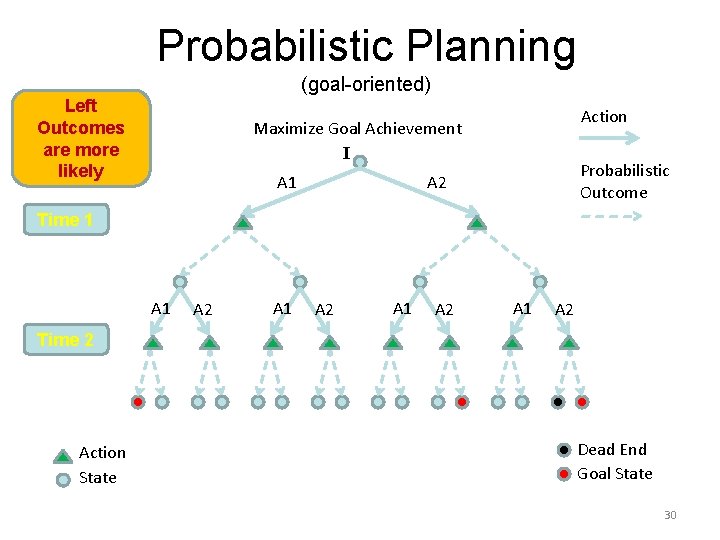

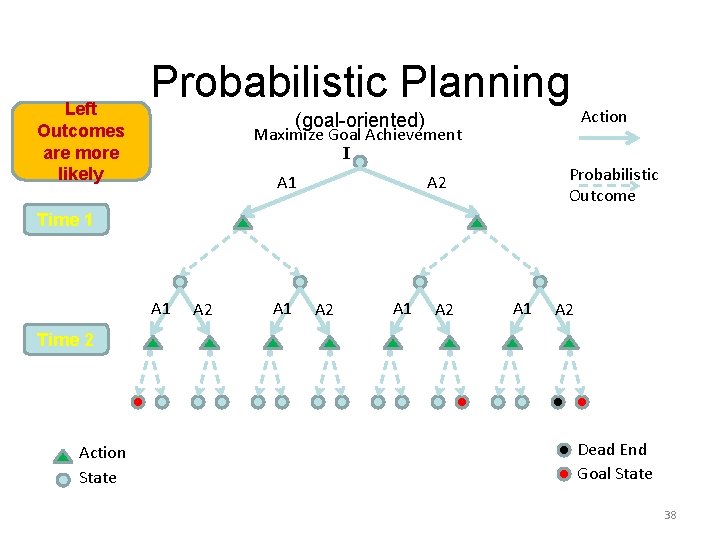

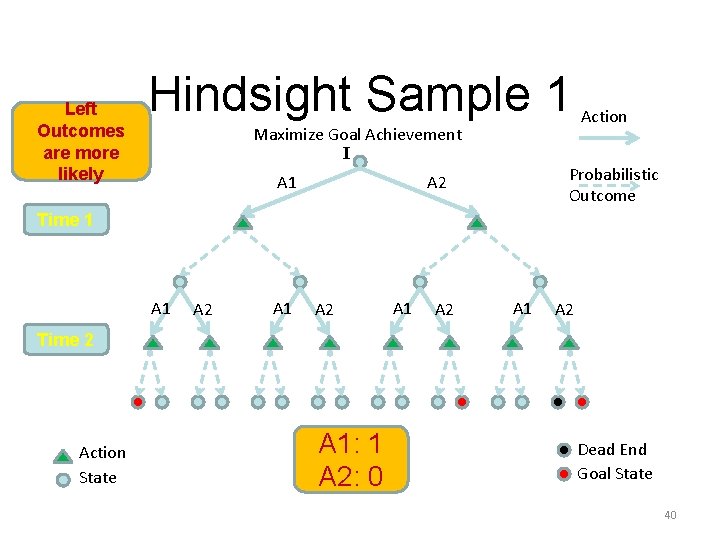

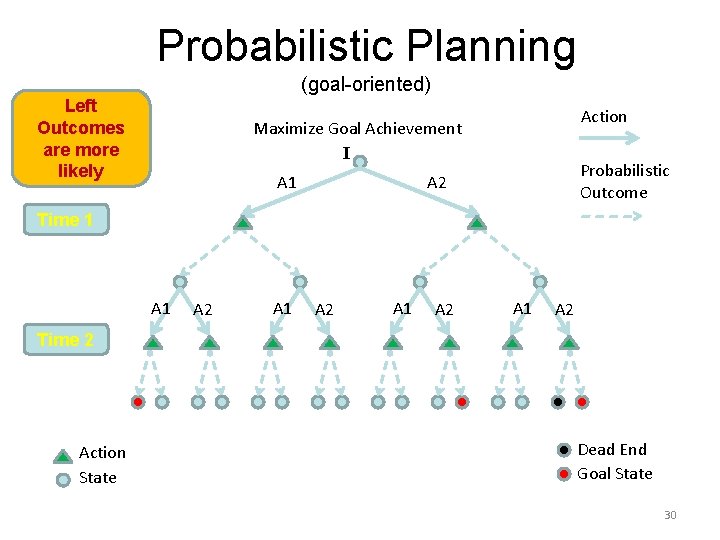

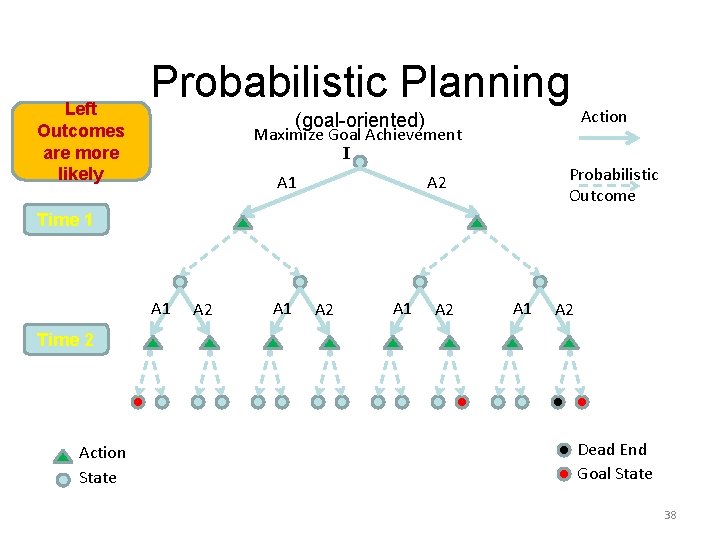

Probabilistic Planning (goal-oriented) Left Outcomes are more likely Action Maximize Goal Achievement I A 1 Probabilistic Outcome A 2 Time 1 A 2 A 1 A 2 Time 2 Action State Dead End Goal State 30

Problems of FF-Replan and better alternative sampling FF-Replan’s Static Determinizations don’t respect probabilities. We need “Probabilistic and Dynamic Determinization” Sample Future Outcomes and Determinization in Hindsight Each Future Sample Becomes a Known-Future Deterministic Problem 33

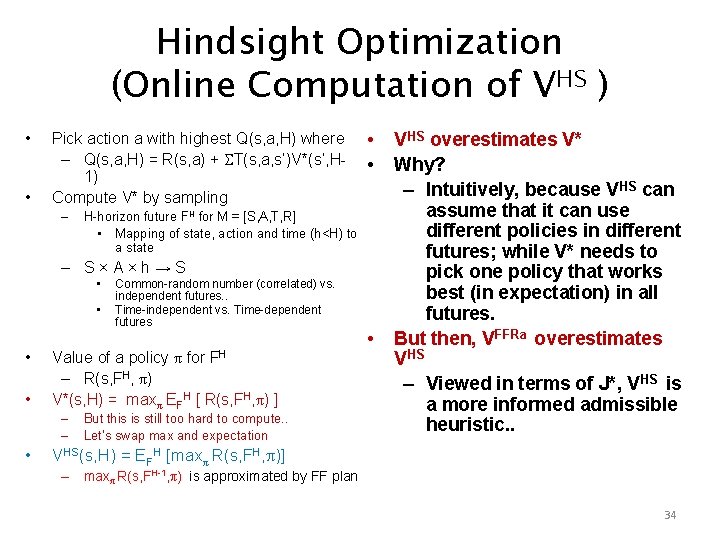

Hindsight Optimization (Online Computation of VHS ) • • Pick action a with highest Q(s, a, H) where – Q(s, a, H) = R(s, a) + ST(s, a, s’)V*(s’, H 1) Compute V* by sampling – • • H-horizon future FH for M = [S, A, T, R] • Mapping of state, action and time (h<H) to a state – S×A×h→S • • FH Value of a policy π for – R(s, FH, π) V*(s, H) = maxπ EFH [ R(s, FH, π) ] – – • Common-random number (correlated) vs. independent futures. . Time-independent vs. Time-dependent futures But this is still too hard to compute. . Let’s swap max and expectation • VHS overestimates V* Why? – Intuitively, because VHS can assume that it can use different policies in different futures; while V* needs to pick one policy that works best (in expectation) in all futures. But then, VFFRa overestimates VHS – Viewed in terms of J*, VHS is a more informed admissible heuristic. . VHS(s, H) = EFH [maxπ R(s, FH, π)] – maxπ R(s, FH-1, π) is approximated by FF plan 34

Implementation FF-Hindsight Constructs a set of futures • Solves the planning problem using the H-horizon futures using FF • Sums the rewards of each of the plans • Chooses action with largest Qhs value

Left Outcomes are more likely Probabilistic Planning (goal-oriented) Maximize Goal Achievement I A 1 Action Probabilistic Outcome A 2 Time 1 A 2 A 1 A 2 Time 2 Action State Dead End Goal State 38

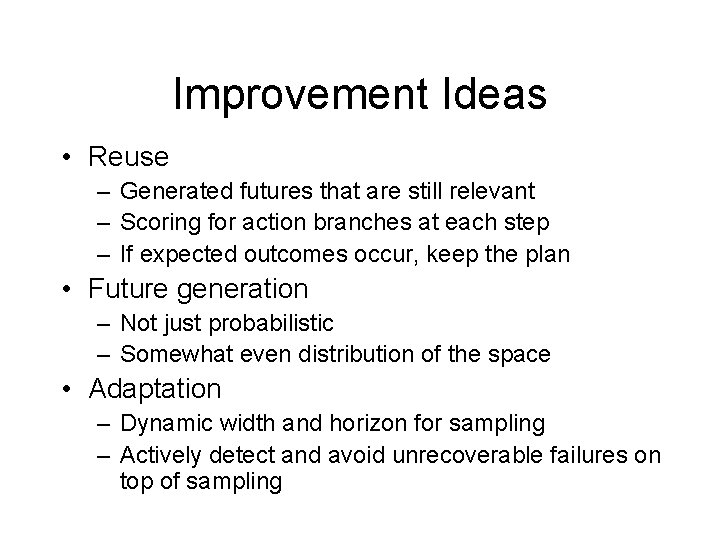

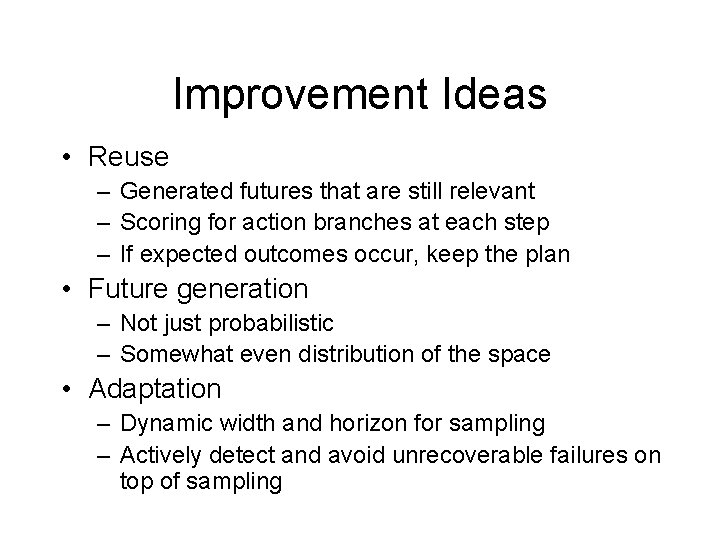

Improvement Ideas • Reuse – Generated futures that are still relevant – Scoring for action branches at each step – If expected outcomes occur, keep the plan • Future generation – Not just probabilistic – Somewhat even distribution of the space • Adaptation – Dynamic width and horizon for sampling – Actively detect and avoid unrecoverable failures on top of sampling

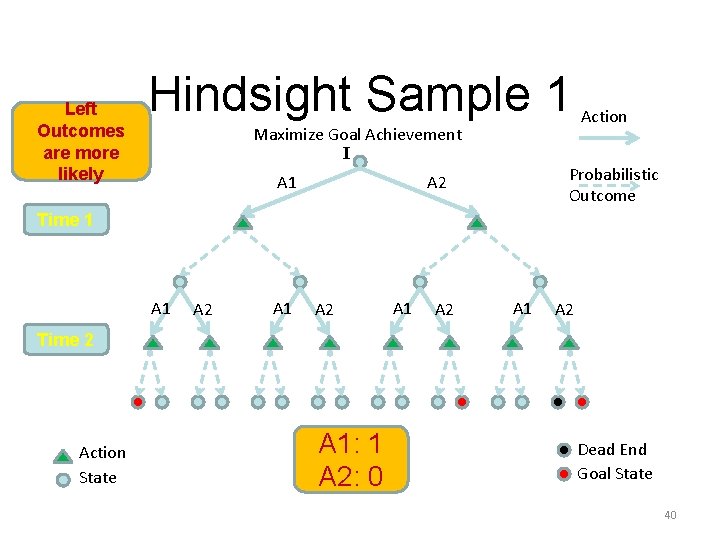

Left Outcomes are more likely Hindsight Sample 1 Maximize Goal Achievement I A 1 Action Probabilistic Outcome A 2 Time 1 A 2 A 1 A 2 Time 2 Action State A 1: 1 A 2: 0 Dead End Goal State 40

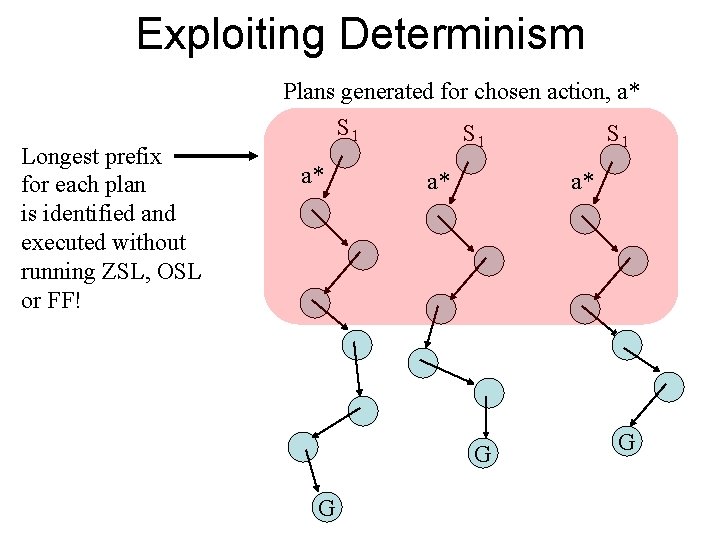

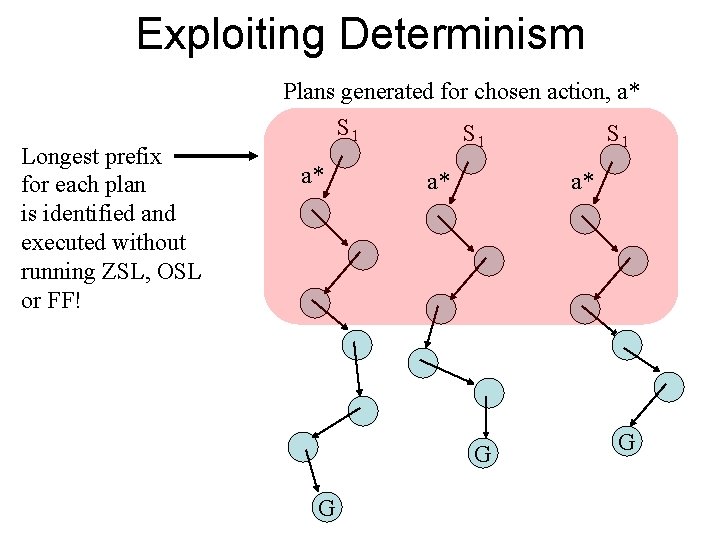

Exploiting Determinism Longest prefix for each plan is identified and executed without running ZSL, OSL or FF! Plans generated for chosen action, a* S 1 S 1 a* a* a* G G G

Handling unlikely outcomes: All-outcome Determinization • Assign each possible outcome an action • Solve for a plan • Combine the plan with the plans from the HOP solutions

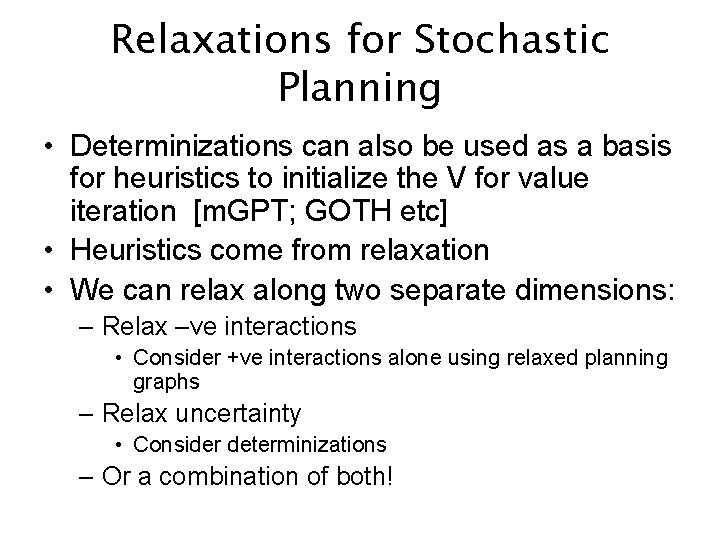

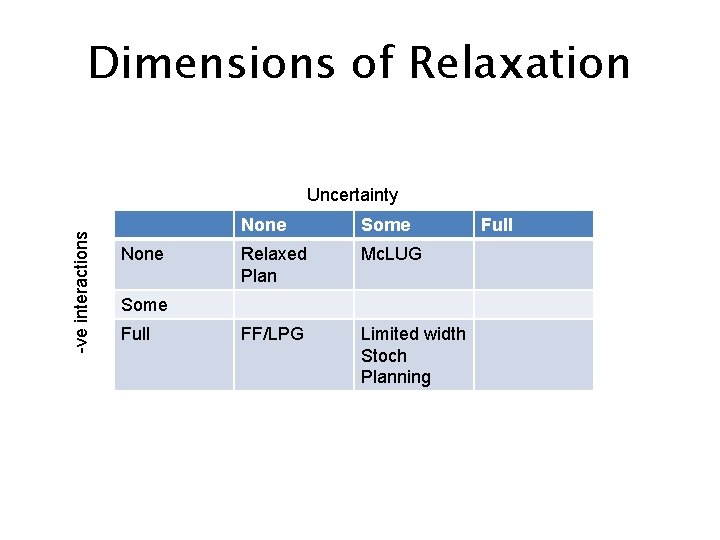

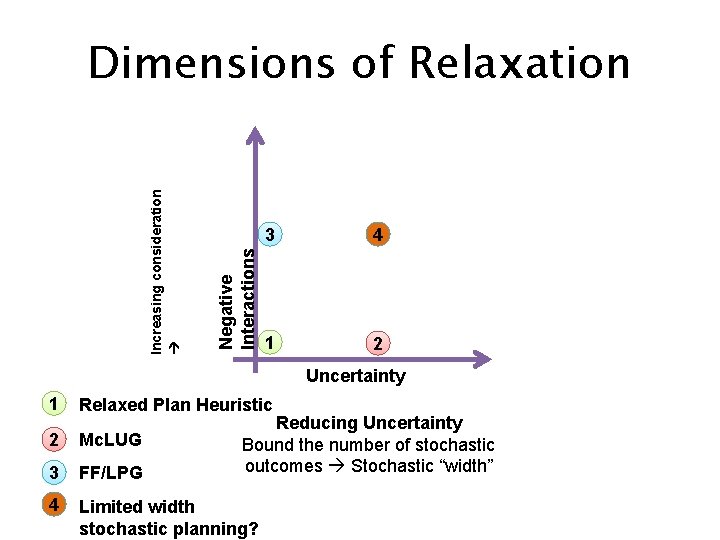

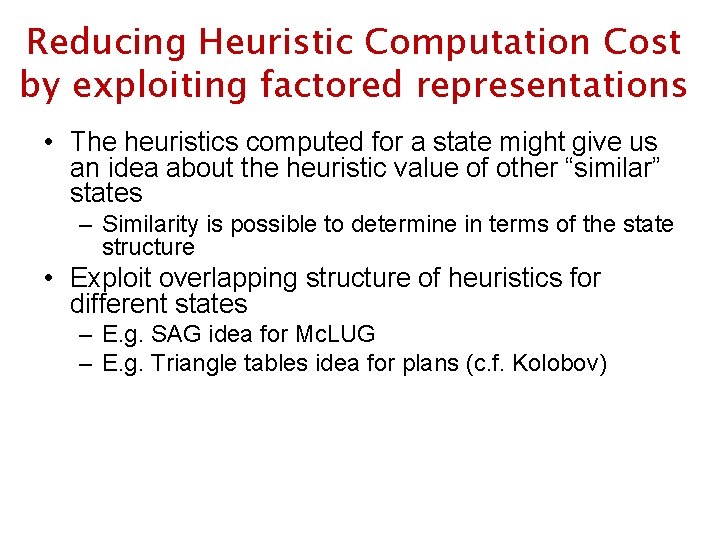

Relaxations for Stochastic Planning • Determinizations can also be used as a basis for heuristics to initialize the V for value iteration [m. GPT; GOTH etc] • Heuristics come from relaxation • We can relax along two separate dimensions: – Relax –ve interactions • Consider +ve interactions alone using relaxed planning graphs – Relax uncertainty • Consider determinizations – Or a combination of both!

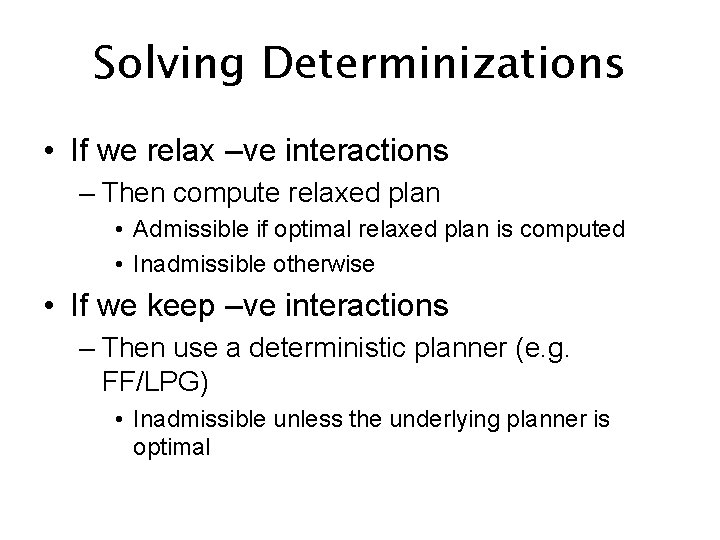

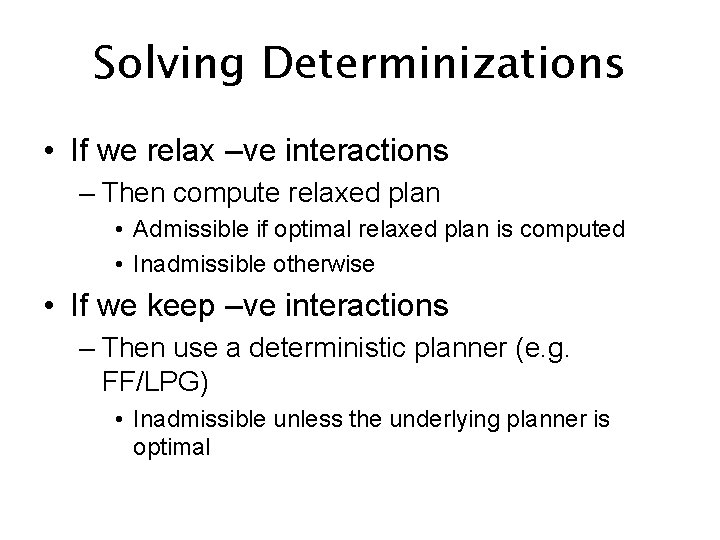

Solving Determinizations • If we relax –ve interactions – Then compute relaxed plan • Admissible if optimal relaxed plan is computed • Inadmissible otherwise • If we keep –ve interactions – Then use a deterministic planner (e. g. FF/LPG) • Inadmissible unless the underlying planner is optimal

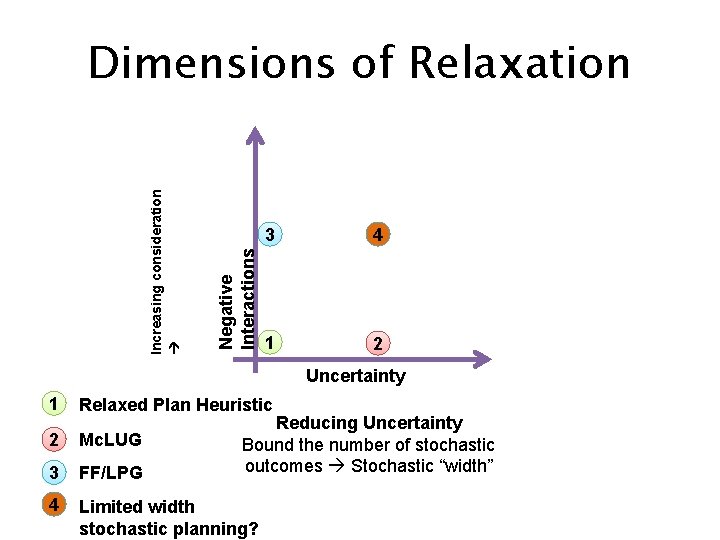

Negative Interactions Increasing consideration Dimensions of Relaxation 3 4 1 2 Uncertainty 1 Relaxed Plan Heuristic 2 Mc. LUG 3 FF/LPG 4 Limited width stochastic planning? Reducing Uncertainty Bound the number of stochastic outcomes Stochastic “width”

Dimensions of Relaxation -ve interactions Uncertainty None Some Relaxed Plan Mc. LUG FF/LPG Limited width Stoch Planning Some Full

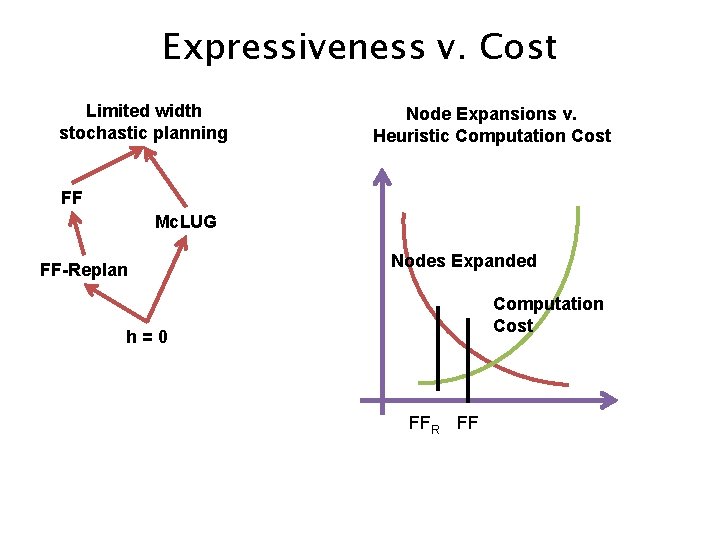

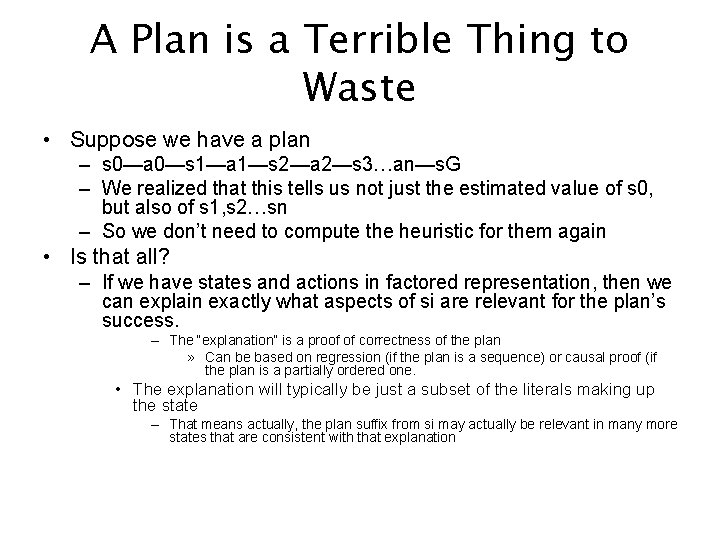

Expressiveness v. Cost Limited width stochastic planning Node Expansions v. Heuristic Computation Cost FF Mc. LUG FF-Replan Nodes Expanded Computation Cost h=0 FFR FF

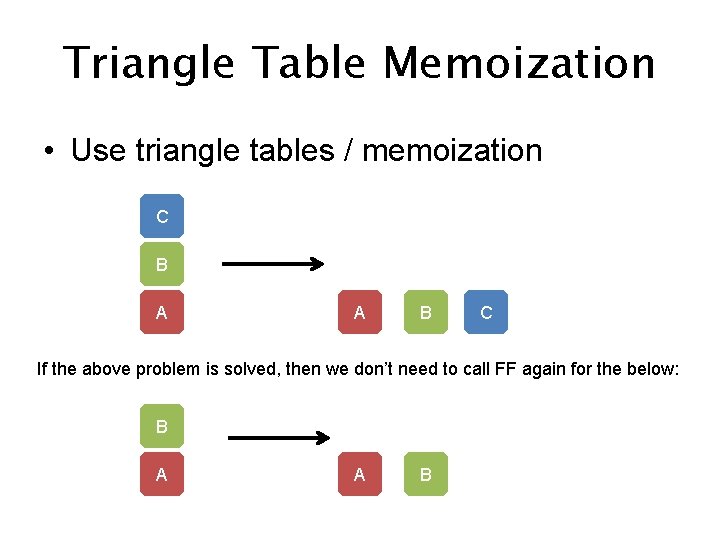

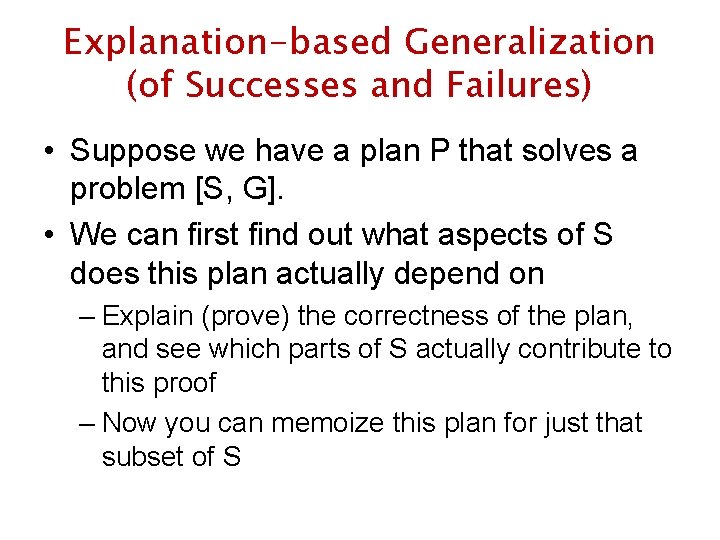

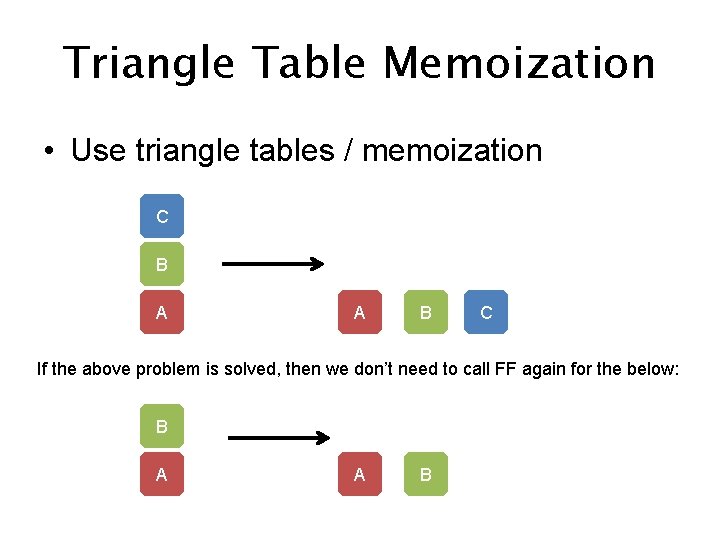

Reducing Heuristic Computation Cost by exploiting factored representations • The heuristics computed for a state might give us an idea about the heuristic value of other “similar” states – Similarity is possible to determine in terms of the state structure • Exploit overlapping structure of heuristics for different states – E. g. SAG idea for Mc. LUG – E. g. Triangle tables idea for plans (c. f. Kolobov)

A Plan is a Terrible Thing to Waste • Suppose we have a plan – s 0—a 0—s 1—a 1—s 2—a 2—s 3…an—s. G – We realized that this tells us not just the estimated value of s 0, but also of s 1, s 2…sn – So we don’t need to compute the heuristic for them again • Is that all? – If we have states and actions in factored representation, then we can explain exactly what aspects of si are relevant for the plan’s success. – The “explanation” is a proof of correctness of the plan » Can be based on regression (if the plan is a sequence) or causal proof (if the plan is a partially ordered one. • The explanation will typically be just a subset of the literals making up the state – That means actually, the plan suffix from si may actually be relevant in many more states that are consistent with that explanation

Triangle Table Memoization • Use triangle tables / memoization C B A A B C If the above problem is solved, then we don’t need to call FF again for the below: B A A B

Explanation-based Generalization (of Successes and Failures) • Suppose we have a plan P that solves a problem [S, G]. • We can first find out what aspects of S does this plan actually depend on – Explain (prove) the correctness of the plan, and see which parts of S actually contribute to this proof – Now you can memoize this plan for just that subset of S

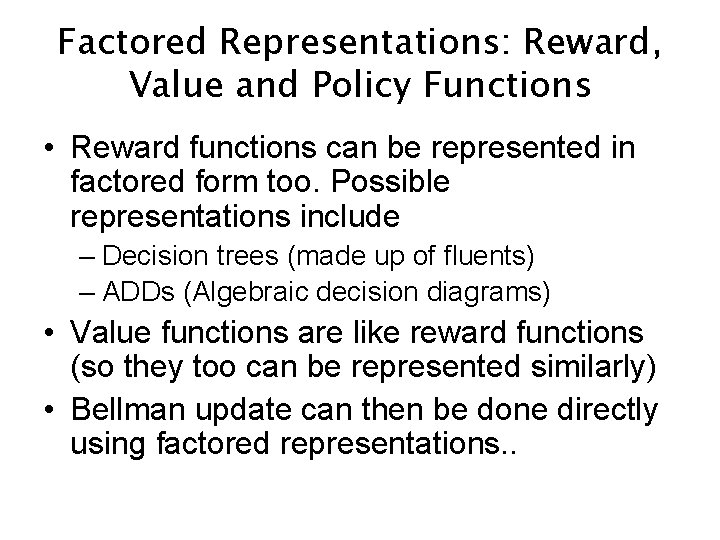

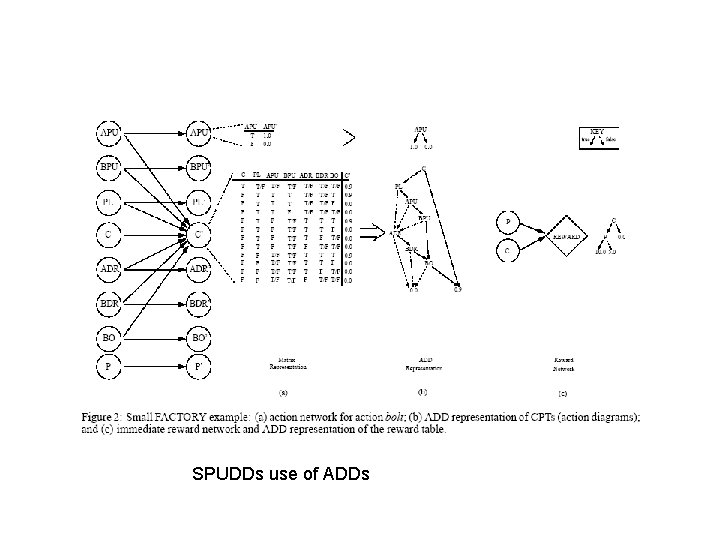

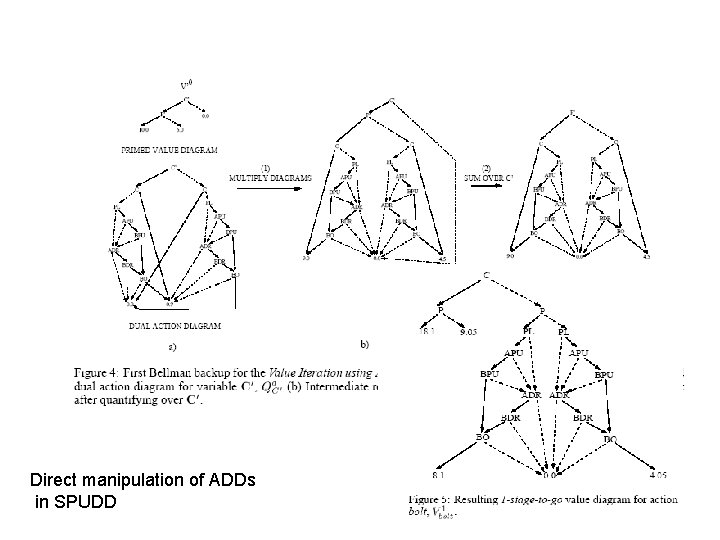

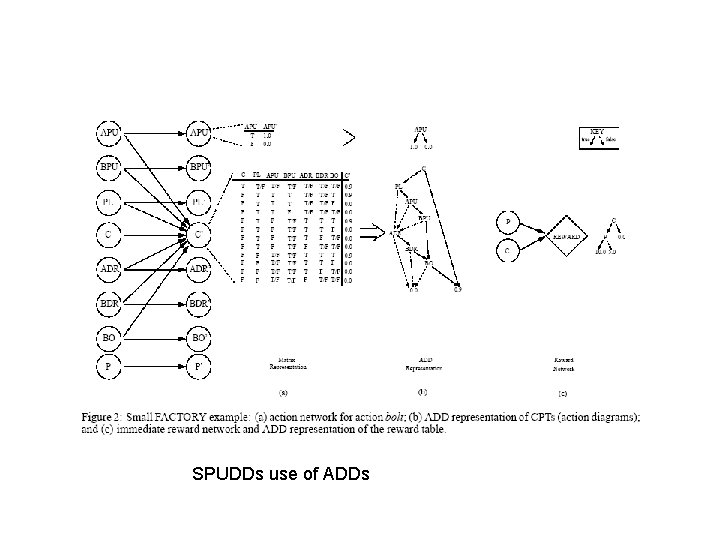

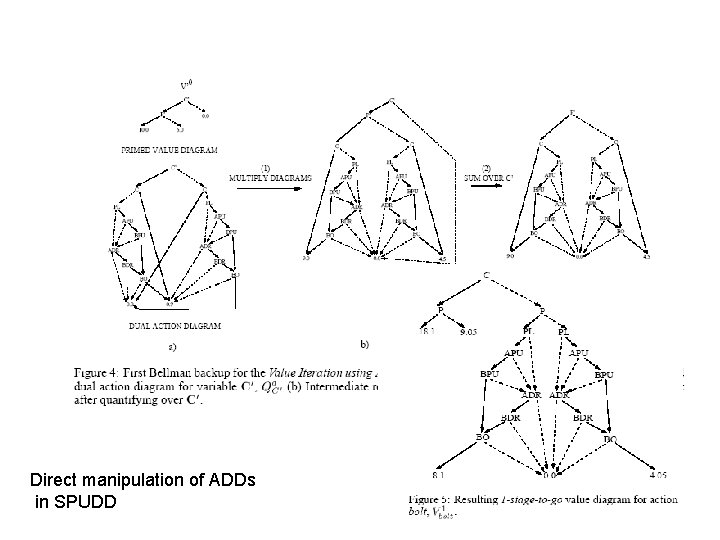

Factored Representations: Reward, Value and Policy Functions • Reward functions can be represented in factored form too. Possible representations include – Decision trees (made up of fluents) – ADDs (Algebraic decision diagrams) • Value functions are like reward functions (so they too can be represented similarly) • Bellman update can then be done directly using factored representations. .

SPUDDs use of ADDs

Direct manipulation of ADDs in SPUDD

Now i see it now you don't

Now i see it now you don't That was then this is now summary

That was then this is now summary Summary of story of an hour

Summary of story of an hour Do you finish your homework

Do you finish your homework Environmentalist are earnestly trying to determine

Environmentalist are earnestly trying to determine Until lambs become lions

Until lambs become lions Jelaskan tujuan perulangan 'repeat' digunakan. *

Jelaskan tujuan perulangan 'repeat' digunakan. * Rise and rise again until lambs become lions

Rise and rise again until lambs become lions Flowchart repeat loop

Flowchart repeat loop Algoritma repeat until

Algoritma repeat until Statement repeat dan until merupakan pengganti dari

Statement repeat dan until merupakan pengganti dari Practice until perfect

Practice until perfect Till we meet till we meet at jesus feet

Till we meet till we meet at jesus feet Wordless book song

Wordless book song Robbie sinclair security

Robbie sinclair security What does a strike slip fault cause

What does a strike slip fault cause Forms and shores must not be removed until

Forms and shores must not be removed until Until the nineteenth century only plant or animal fibers

Until the nineteenth century only plant or animal fibers Matlab loop until condition met

Matlab loop until condition met Dog bite pictures

Dog bite pictures Little lambs academy

Little lambs academy Until 1996 the sears tower

Until 1996 the sears tower A brick sits on a table until you push on it.

A brick sits on a table until you push on it. A brick sits on a table until you push it

A brick sits on a table until you push it Entrepreneurs work until the job is done

Entrepreneurs work until the job is done We've waited all through the year

We've waited all through the year Second star to the right and straight on until morning

Second star to the right and straight on until morning Until lions have their historians

Until lions have their historians The poet robert herrick ask the daffodils to stay until the

The poet robert herrick ask the daffodils to stay until the You can't have any chocolate it's

You can't have any chocolate it's Pascal while loop

Pascal while loop Parvenau

Parvenau To cut food into small, uneven pieces.

To cut food into small, uneven pieces. Up until this point

Up until this point Up until the 1960s

Up until the 1960s Quia conjunctions rags to riches

Quia conjunctions rags to riches Not until inversion

Not until inversion Praise until the spirit of worship comes

Praise until the spirit of worship comes All the way until the end

All the way until the end Is which a subordinating conjunction

Is which a subordinating conjunction Promotion valid until

Promotion valid until Stress that squeezes rock until it folds or breaks

Stress that squeezes rock until it folds or breaks Until this moment

Until this moment Do until vba

Do until vba Perform until cobol example

Perform until cobol example Where were sneakers manufactured until the 1960s

Where were sneakers manufactured until the 1960s Rise and rise again until lambs become lions origin

Rise and rise again until lambs become lions origin Lasted until 1914

Lasted until 1914 Silva service now

Silva service now