Stochastic Gradient Descent (Presenter: Khishigsuren, Ph.D. Candidate)

- Slides: 19

Stochastic Gradient Descent

Presenter: Khishigsuren (류수리), Ph.D. candidate, Database / Bioinformatics Laboratory

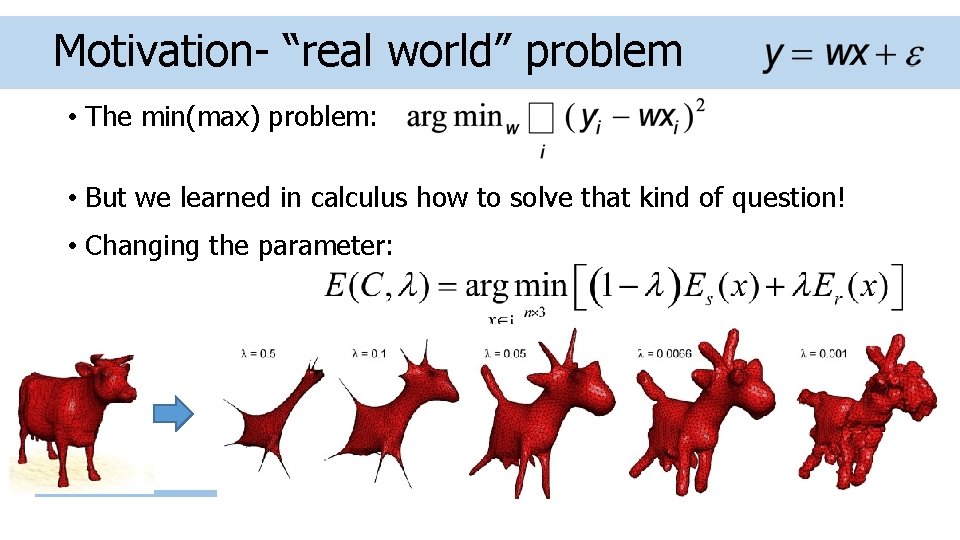

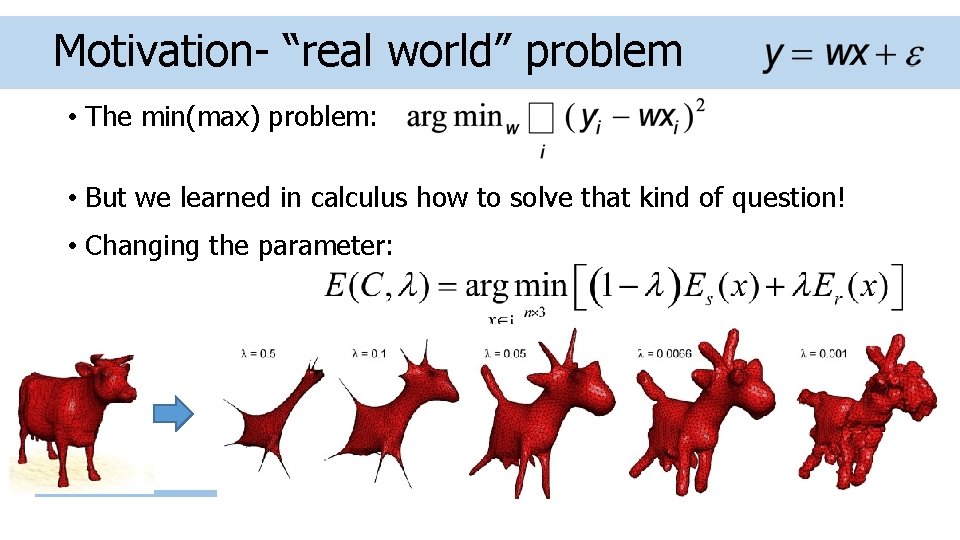

Motivation: a "real world" problem

- The min (max) problem:
- But we learned in calculus how to solve that kind of question!
- Changing the parameter:

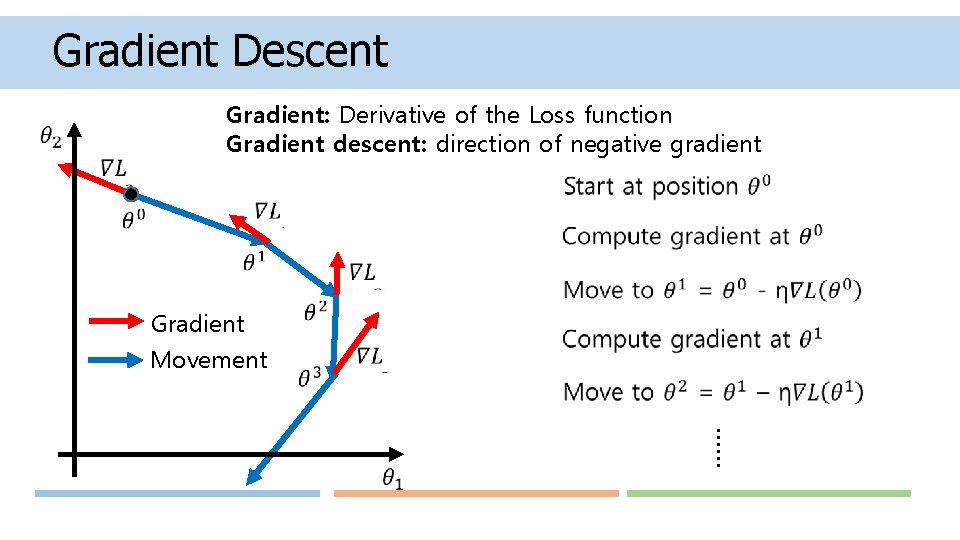

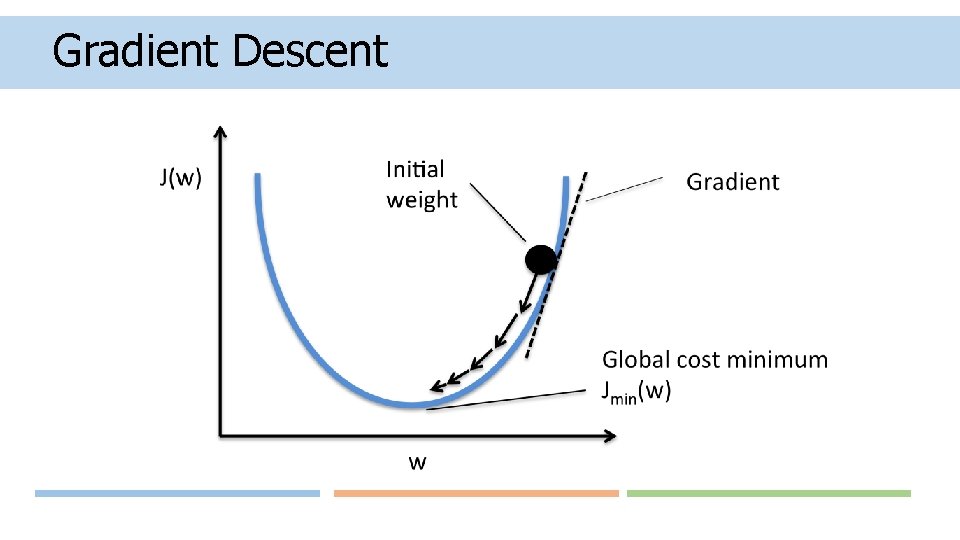

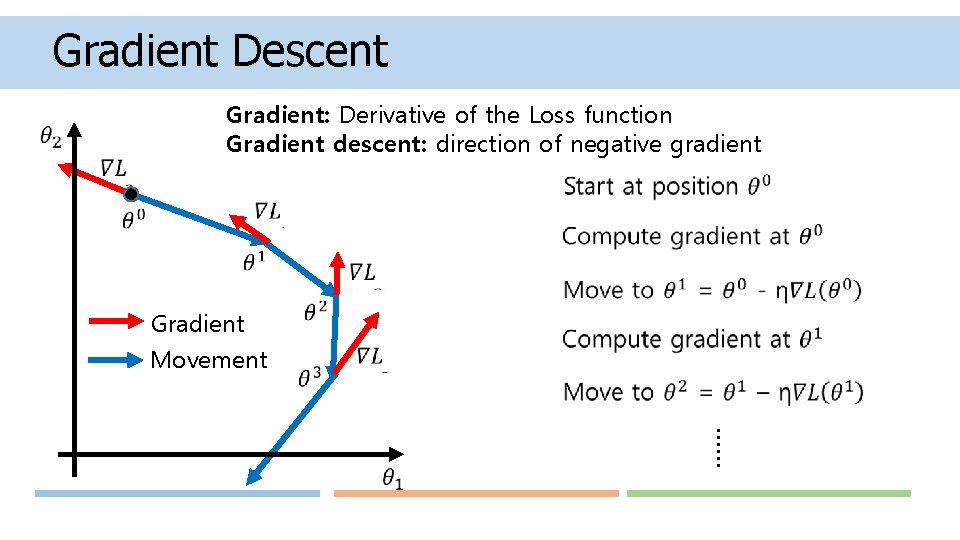

Gradient Descent

- Gradient: the derivative of the loss function.
- Gradient descent: move in the direction of the negative gradient.
- Figure labels: gradient, movement.

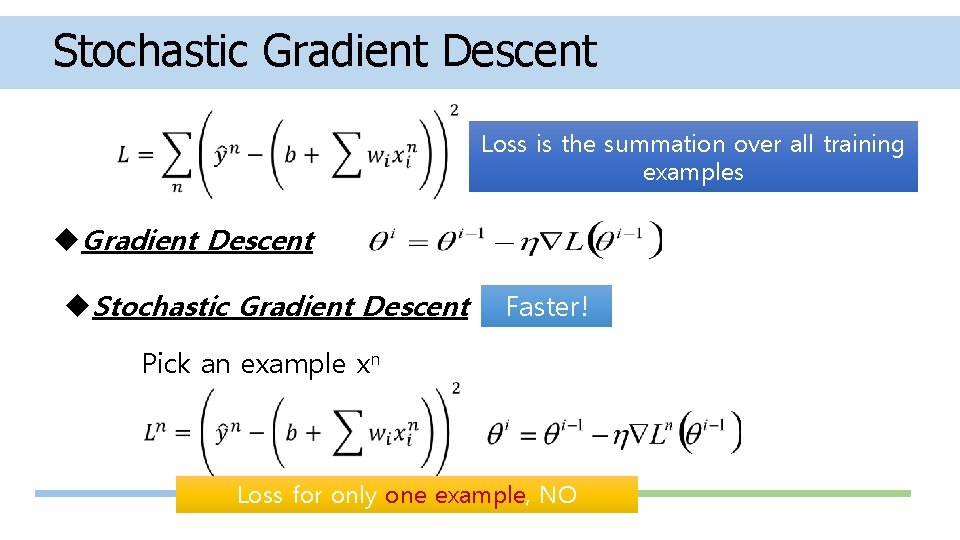

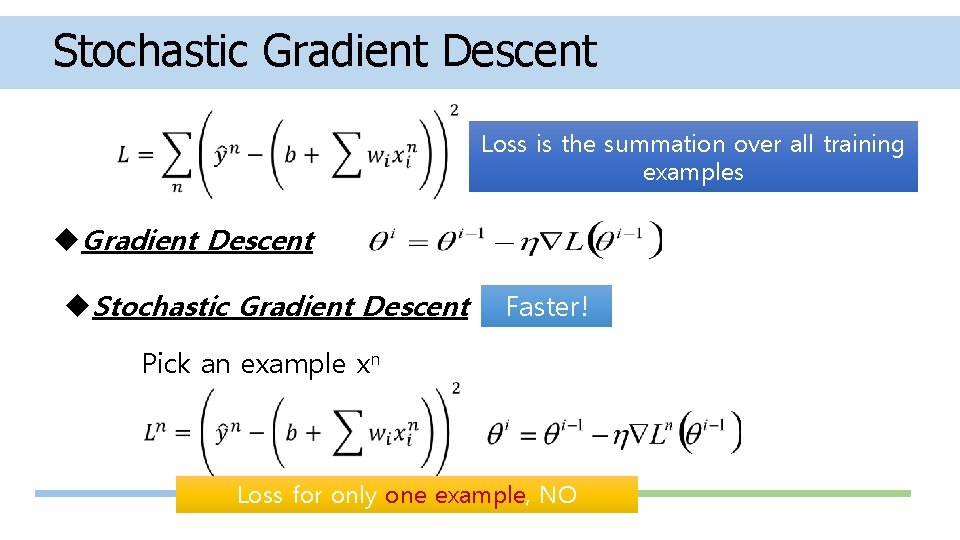
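
The idea of stepping in the direction of the negative gradient can be sketched in a few lines. This is only an illustration under assumptions not in the slides: the loss `L(w) = (w - 3)^2`, the starting point, the learning rate, and the step count are all hypothetical.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from w0."""
    w = w0
    for _ in range(steps):
        # Move in the direction of the negative gradient.
        w = w - learning_rate * grad(w)
    return w


# Hypothetical loss L(w) = (w - 3)^2, whose derivative is 2 * (w - 3).
w_min = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
```

With this loss each step shrinks the distance to the minimizer `w = 3` by a constant factor, so `w_min` ends up very close to 3.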

Stochastic Gradient Descent

- Gradient descent: the loss is the summation over all training examples.
- Stochastic gradient descent: pick an example xn and use the loss for only that one example, with NO summing. Faster!

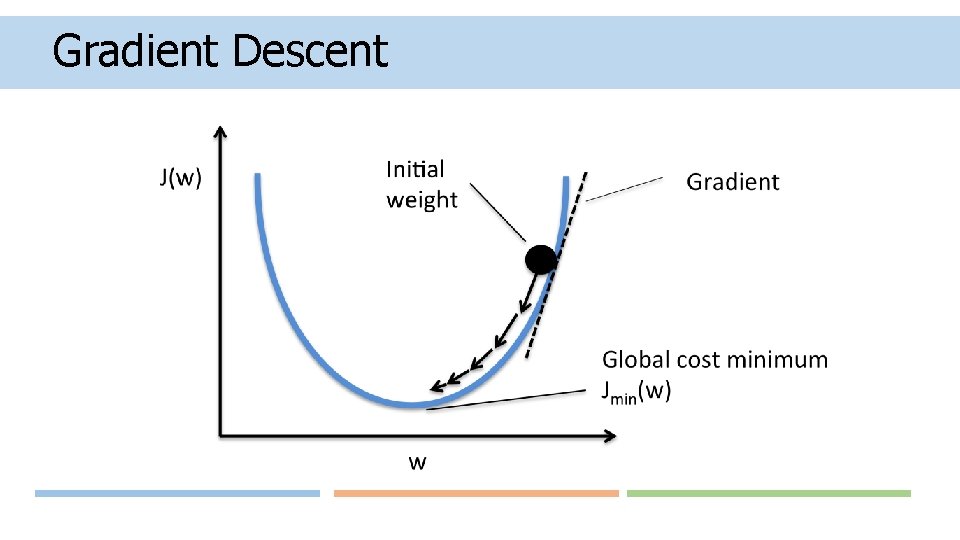

Gradient Descent

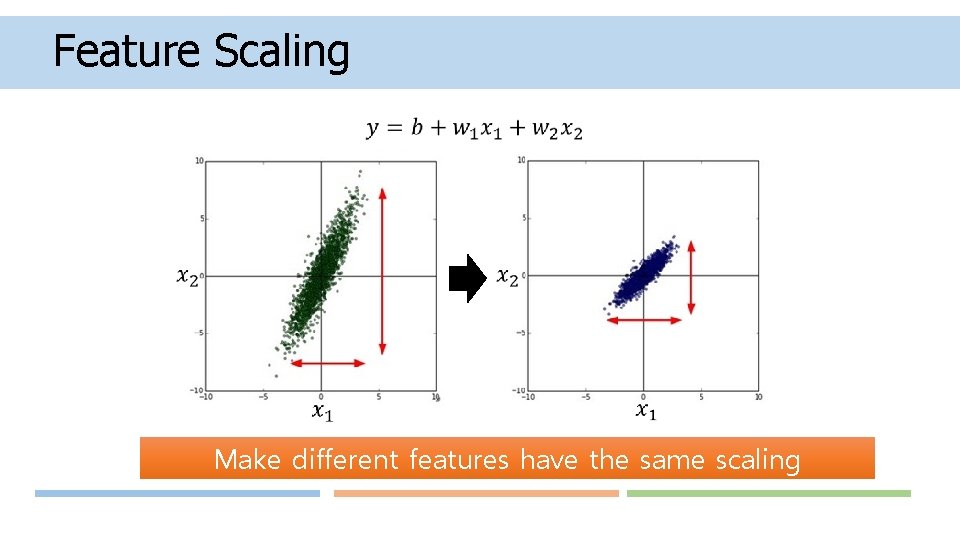

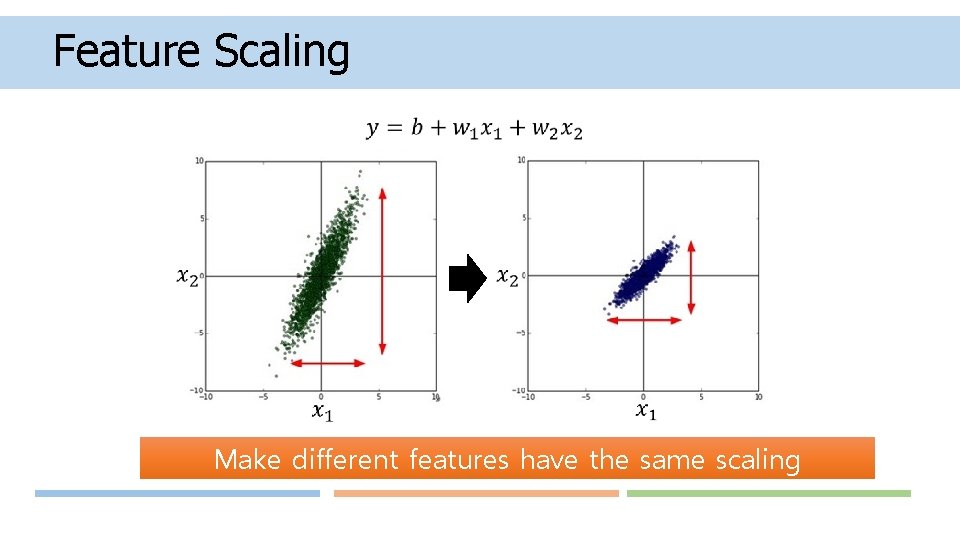

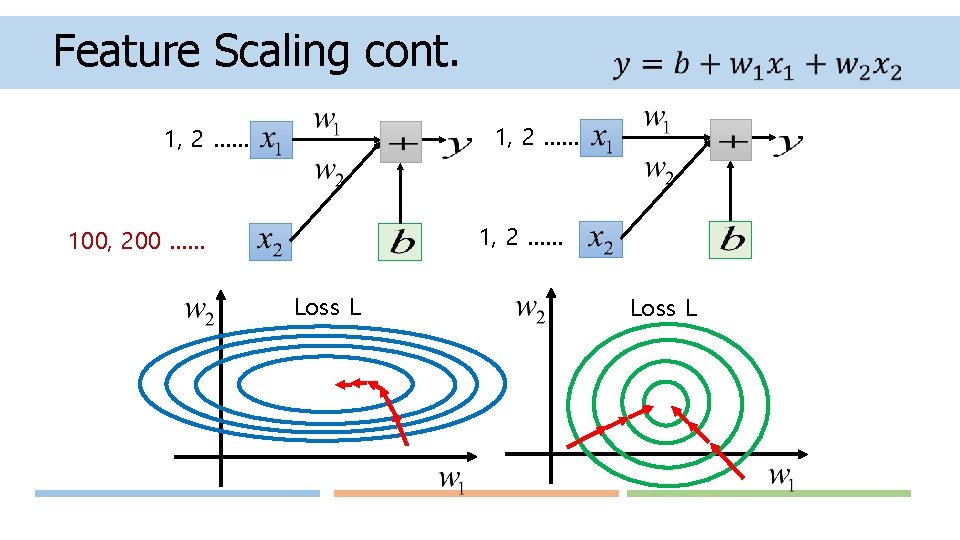

Feature Scaling

Make different features have the same scaling.

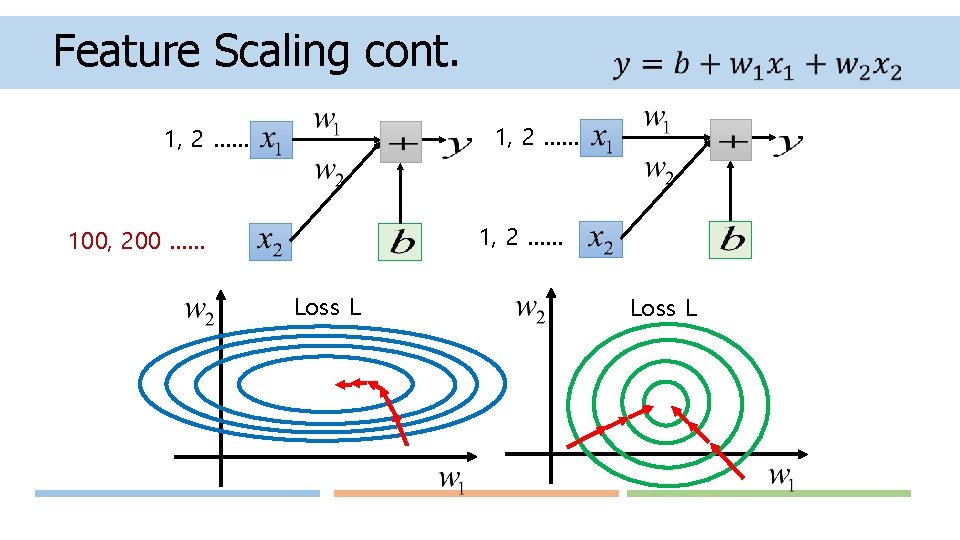

Feature Scaling cont.

Figure: inputs on different scales (1, 2, …… and 100, 200, ……) and the loss L.

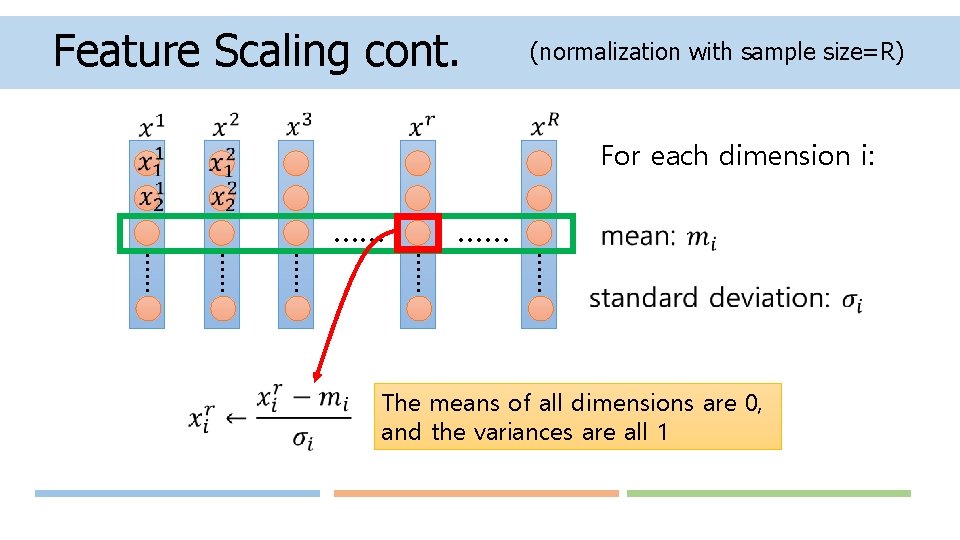

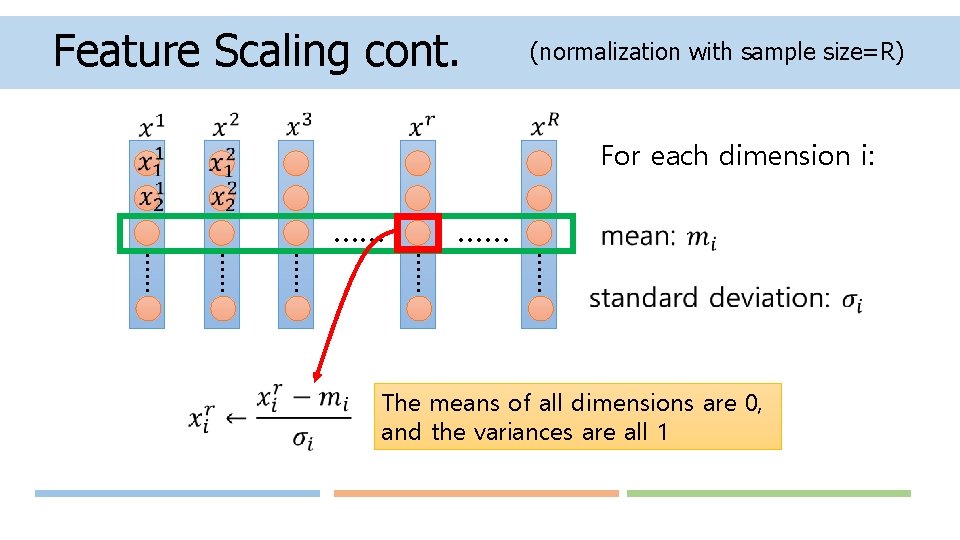

Feature Scaling cont. (normalization with sample size = R)

- For each dimension i: ……
- The means of all dimensions are 0, and the variances are all 1.

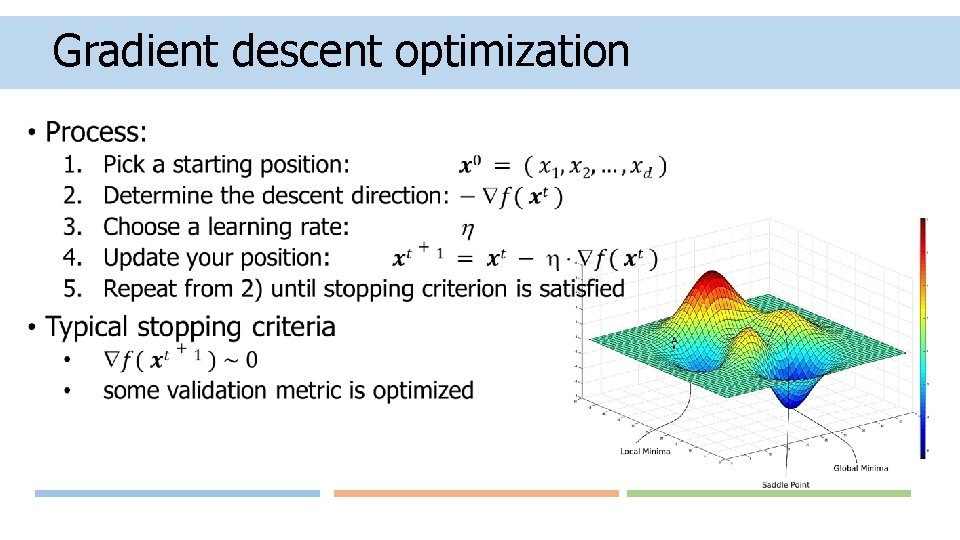

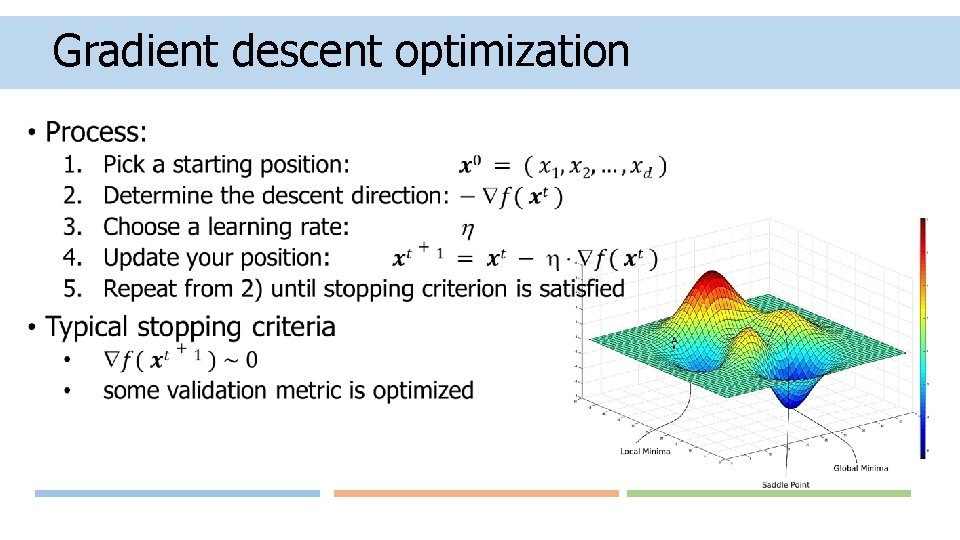
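
One common way to obtain mean 0 and variance 1 in every dimension is standardization: subtract the per-dimension mean and divide by the per-dimension standard deviation. The sketch below assumes that this is the normalization meant on the slide; the function name `standardize` and the sample data are hypothetical.

```python
import math


def standardize(samples):
    """Scale each dimension to mean 0 and variance 1 across the R samples.

    Assumes every dimension has nonzero variance (otherwise this divides by zero).
    """
    R = len(samples)
    dims = len(samples[0])
    means = [sum(x[i] for x in samples) / R for i in range(dims)]
    stds = [
        math.sqrt(sum((x[i] - means[i]) ** 2 for x in samples) / R)
        for i in range(dims)
    ]
    return [[(x[i] - means[i]) / stds[i] for i in range(dims)] for x in samples]


# Hypothetical data: the second feature is 100 times larger than the first.
data = [[1.0, 100.0], [2.0, 200.0], [3.0, 300.0]]
scaled = standardize(data)
```

After scaling, both columns of `scaled` contain the same values, so neither feature dominates the shape of the loss surface.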

Gradient descent optimization

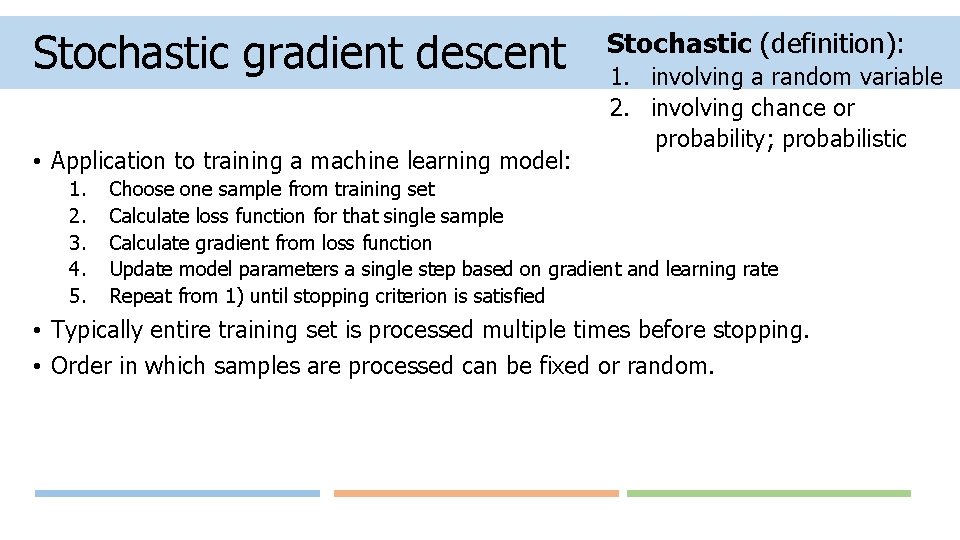

Stochastic gradient descent

Stochastic (definition):

1. involving a random variable
2. involving chance or probability; probabilistic

Application to training a machine learning model:

1. Choose one sample from the training set.
2. Calculate the loss function for that single sample.
3. Calculate the gradient from the loss function.
4. Update the model parameters a single step based on the gradient and learning rate.
5. Repeat from 1) until the stopping criterion is satisfied.

- Typically the entire training set is processed multiple times before stopping.
- The order in which samples are processed can be fixed or random.

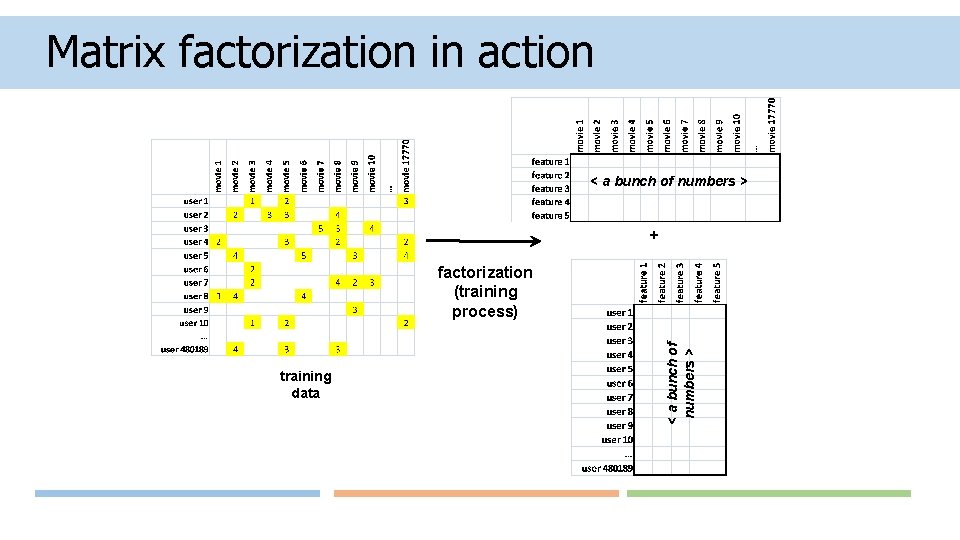

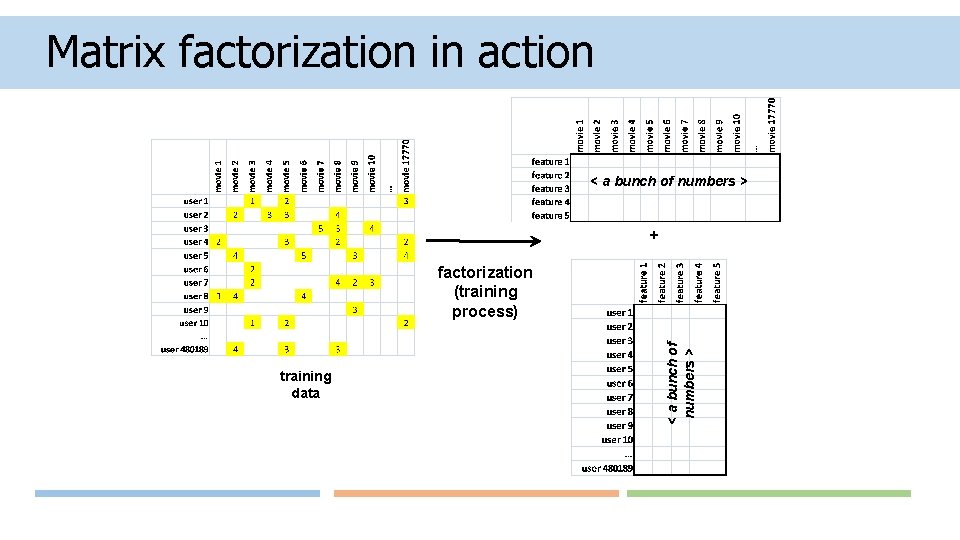
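
The five steps above can be sketched for a very small model. Everything specific here is an assumption for illustration: a one-feature linear model `y = w * x + b`, squared loss, a fixed number of passes as the stopping criterion, and noiseless synthetic data.

```python
import random


def sgd_linear(data, learning_rate=0.02, epochs=500, seed=0):
    """Fit y = w * x + b with SGD on squared loss, one sample per update."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(epochs):  # the training set is processed multiple times
        order = list(range(len(data)))
        rng.shuffle(order)  # random processing order (a fixed order also works)
        for n in order:
            x, y = data[n]               # 1. choose one sample
            err = (w * x + b) - y        # 2. loss for that sample is err ** 2
            grad_w = 2.0 * err * x       # 3. gradient of the loss
            grad_b = 2.0 * err
            w -= learning_rate * grad_w  # 4. single update step
            b -= learning_rate * grad_b
    return w, b                          # 5. stop after a fixed number of passes


# Hypothetical noiseless data generated from y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
w, b = sgd_linear(data)
```

Because the data are noiseless, the estimates approach the generating values `w = 2` and `b = 1`; with noisy data SGD would instead hover around the least-squares solution.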

Matrix factorization in action

Diagram labels: training data < a bunch of numbers >; factorization (training process); < a bunch of numbers > +

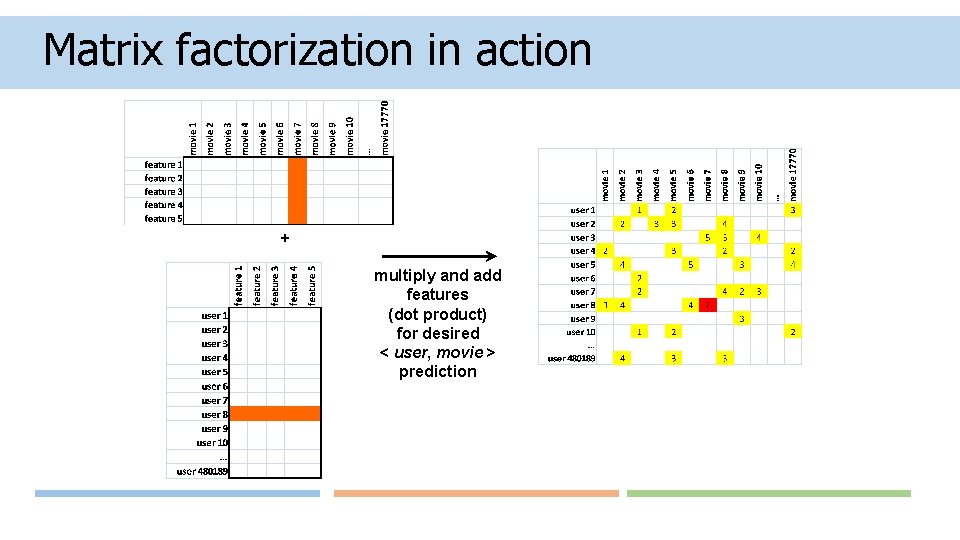

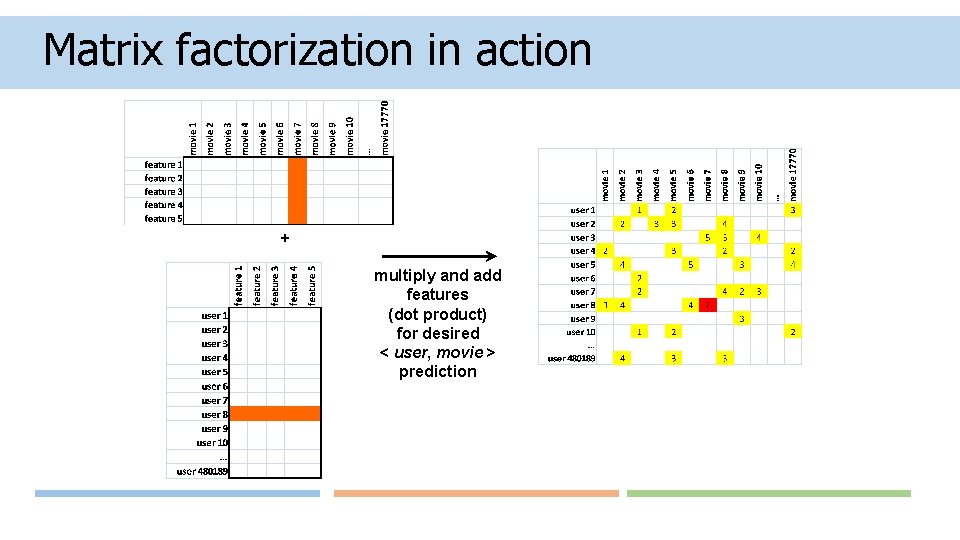

Matrix factorization in action

Multiply and add features (dot product) for the desired < user, movie > prediction.

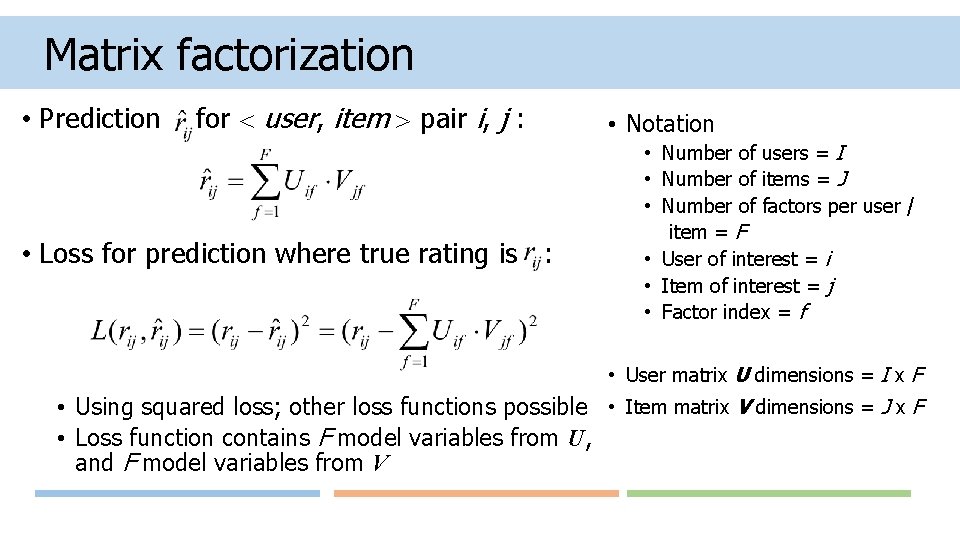

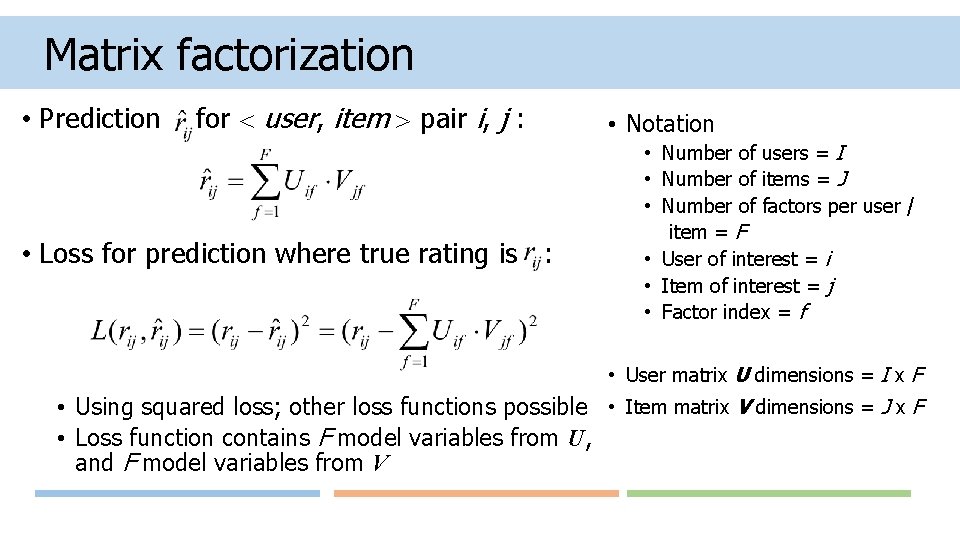

Matrix factorization

- Prediction for user, item pair i, j:
- Loss for prediction where true rating is :
- Using squared loss; other loss functions possible
- Loss function contains F model variables from U, and F model variables from V
- Notation
  - Number of users = I
  - Number of items = J
  - Number of factors per user / item = F
  - User of interest = i
  - Item of interest = j
  - Factor index = f
  - User matrix U dimensions = I x F
  - Item matrix V dimensions = J x F

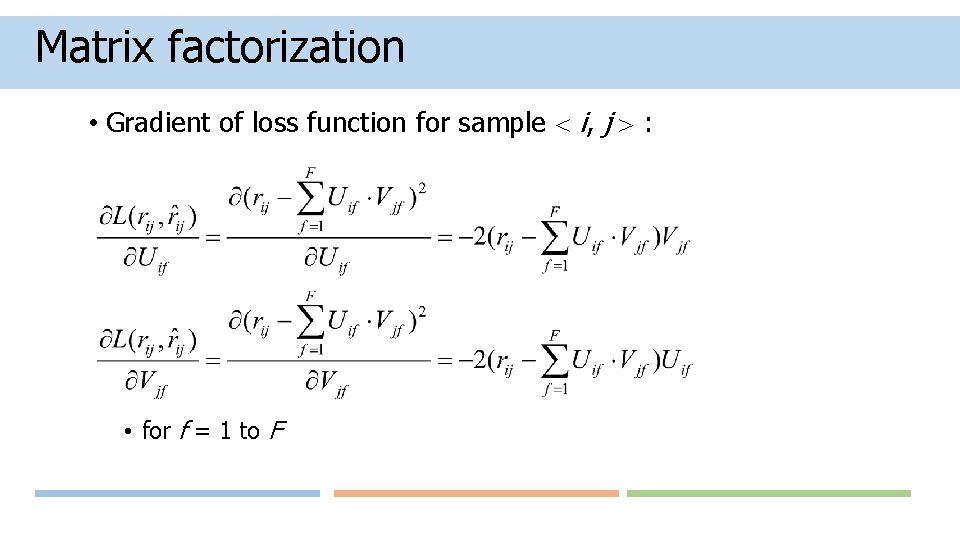

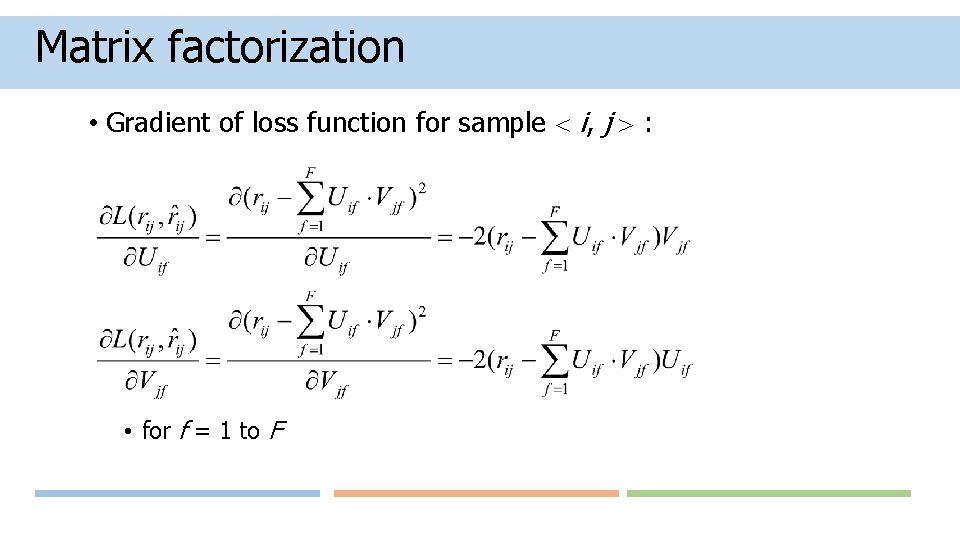

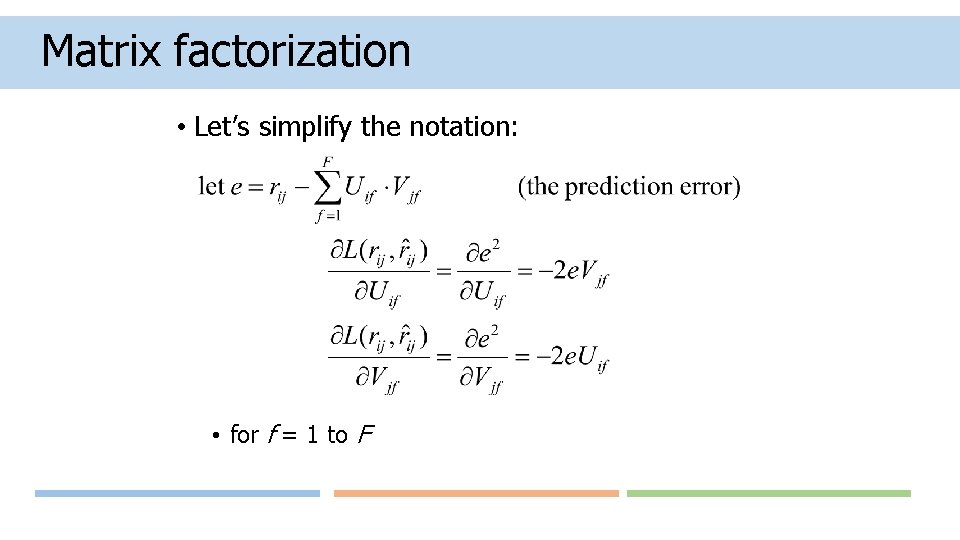
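
As a rough sketch of the prediction and loss on this slide, the code below assumes that the prediction is the dot product of row i of U with row j of V, and that the loss is the squared difference from the true rating. The function names and the small matrices are hypothetical.

```python
def predict(U, V, i, j):
    """Dot product of user i's and item j's factor vectors (F terms)."""
    return sum(u * v for u, v in zip(U[i], V[j]))


def squared_loss(U, V, i, j, rating):
    """Squared error between the true rating and the prediction."""
    return (rating - predict(U, V, i, j)) ** 2


# Hypothetical factor matrices: I = 2 users, J = 3 items, F = 2 factors.
U = [[1.0, 0.5], [0.2, 0.8]]               # I x F
V = [[2.0, 1.0], [0.5, 1.5], [1.0, 1.0]]   # J x F

p = predict(U, V, 0, 1)                    # 1.0 * 0.5 + 0.5 * 1.5
loss = squared_loss(U, V, 0, 1, rating=2.0)
```

Only the F entries of `U[0]` and the F entries of `V[1]` enter this loss, which is why a single sample touches 2F model variables.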

Matrix factorization

- Gradient of loss function for sample i, j:
  - for f = 1 to F

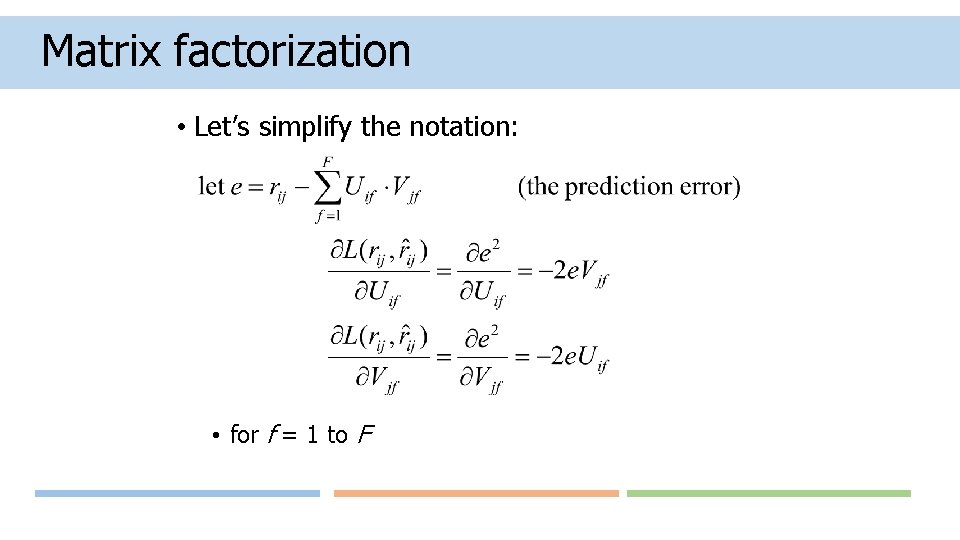
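
For reference, the gradient can be written out under the assumption of a dot-product prediction and squared loss. The symbols $p_{ij}$ (prediction) and $r_{ij}$ (true rating) are notation assumed here and may differ from the slide's own symbols.

```latex
\begin{align}
p_{ij} &= \sum_{f=1}^{F} U_{if} V_{jf}, &
L_{ij} &= (r_{ij} - p_{ij})^2 \\
\frac{\partial L_{ij}}{\partial U_{if}} &= -2\,(r_{ij} - p_{ij})\,V_{jf}, &
\frac{\partial L_{ij}}{\partial V_{jf}} &= -2\,(r_{ij} - p_{ij})\,U_{if},
\qquad f = 1, \dots, F
\end{align}
```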

Matrix factorization

- Let's simplify the notation:
  - for f = 1 to F

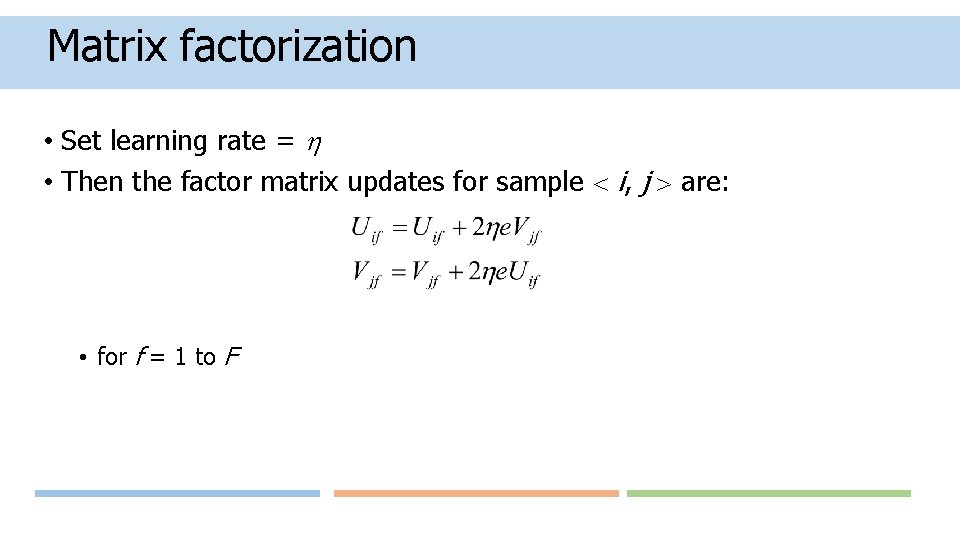

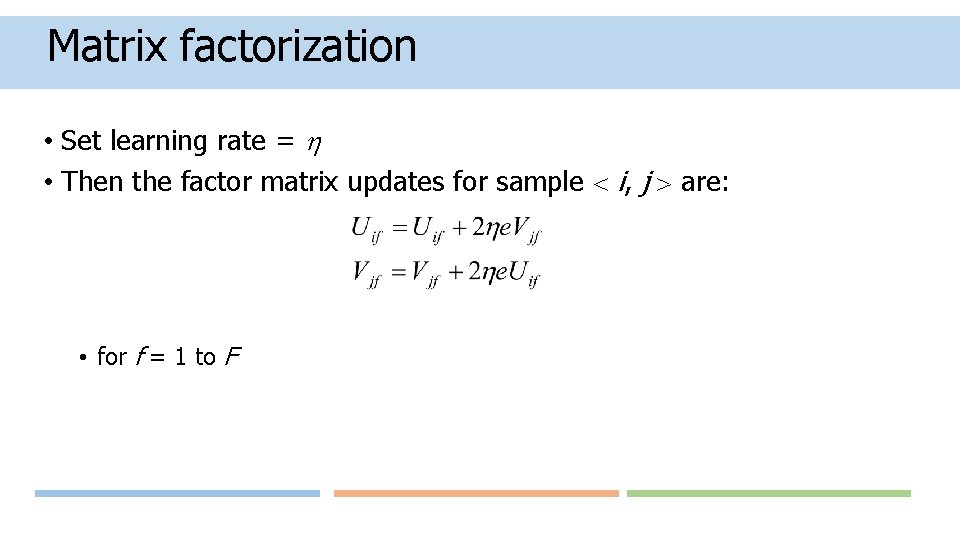

Matrix factorization

- Set learning rate =
- Then the factor matrix updates for sample i, j are:
  - for f = 1 to F

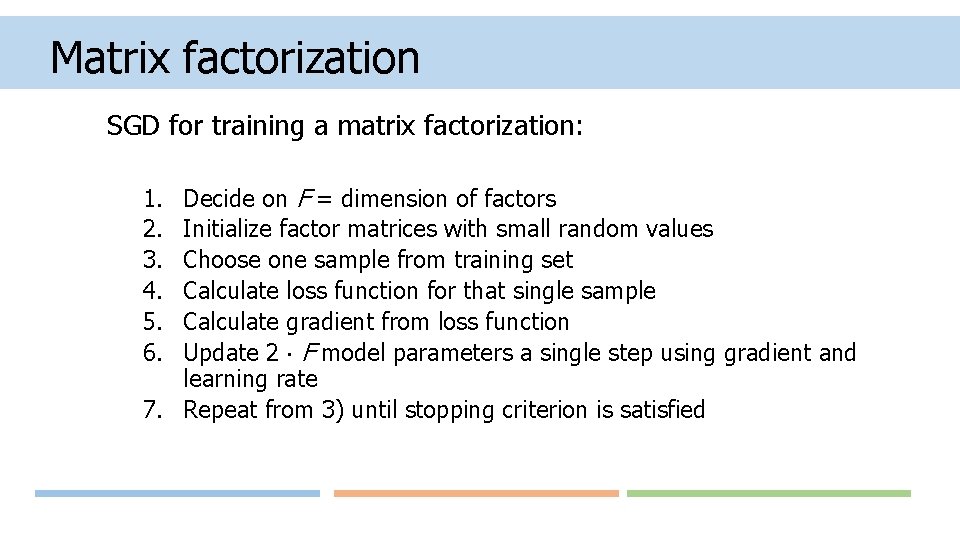

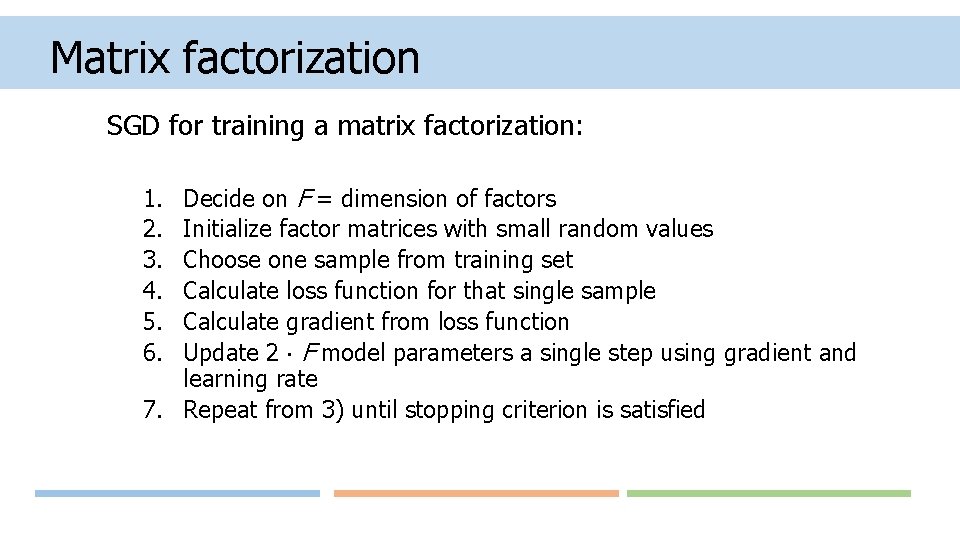

Matrix factorization

SGD for training a matrix factorization:

1. Decide on F = dimension of factors.
2. Initialize factor matrices with small random values.
3. Choose one sample from the training set.
4. Calculate the loss function for that single sample.
5. Calculate the gradient from the loss function.
6. Update 2F model parameters a single step using the gradient and learning rate.
7. Repeat from 3) until the stopping criterion is satisfied.

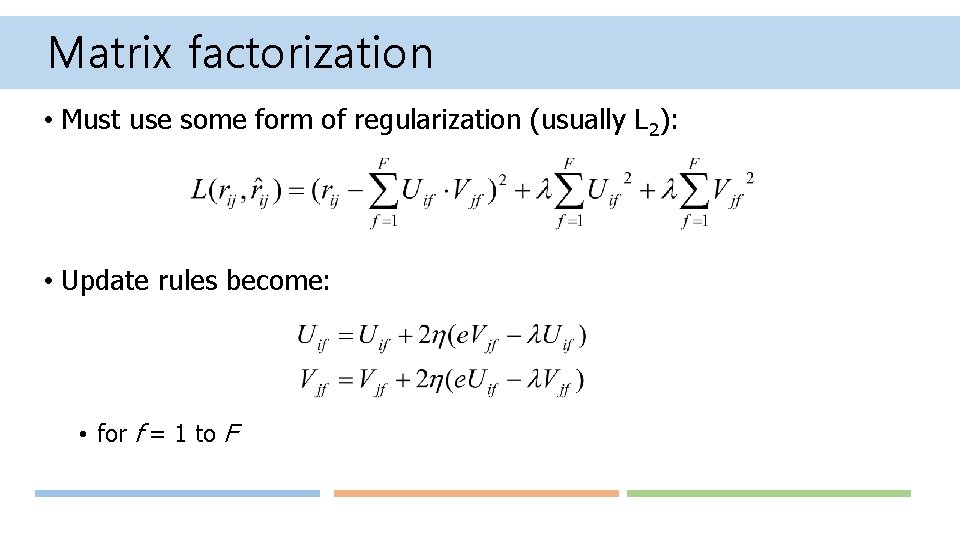

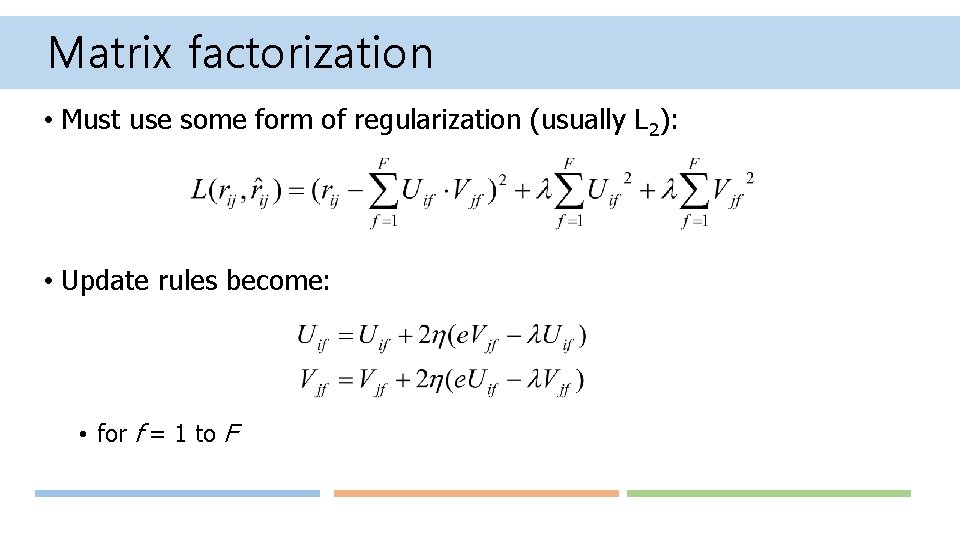
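
The seven steps above can be sketched as follows. This is a minimal illustration, not the presenter's implementation: it assumes a dot-product prediction, squared loss without regularization, a fixed number of passes as the stopping criterion, and a tiny hypothetical rating set. The names `train_mf` and `total_loss` are made up for this example.

```python
import random


def total_loss(U, V, ratings):
    """Sum of squared errors over all observed (user, item, rating) triples."""
    return sum(
        (r - sum(u * v for u, v in zip(U[i], V[j]))) ** 2 for i, j, r in ratings
    )


def train_mf(ratings, num_users, num_items, F=2, learning_rate=0.01,
             epochs=500, seed=0):
    rng = random.Random(seed)
    # Steps 1-2: choose F and initialize both factor matrices with small values.
    U = [[rng.uniform(-0.1, 0.1) for _ in range(F)] for _ in range(num_users)]
    V = [[rng.uniform(-0.1, 0.1) for _ in range(F)] for _ in range(num_items)]
    for _ in range(epochs):                  # step 7: repeat (fixed pass count)
        order = list(range(len(ratings)))
        rng.shuffle(order)
        for n in order:
            i, j, r = ratings[n]             # step 3: one training sample
            # Steps 4-5: error of the prediction; the loss is e ** 2.
            e = r - sum(u * v for u, v in zip(U[i], V[j]))
            for f in range(F):               # step 6: update 2F parameters
                u_old, v_old = U[i][f], V[j][f]
                U[i][f] = u_old + learning_rate * 2.0 * e * v_old
                V[j][f] = v_old + learning_rate * 2.0 * e * u_old
    return U, V


# Hypothetical ratings as (user, item, rating) triples.
ratings = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0),
           (1, 2, 1.0), (2, 1, 2.0), (2, 2, 5.0)]
U0, V0 = train_mf(ratings, 3, 3, epochs=0)   # initial factors only
U, V = train_mf(ratings, 3, 3)               # trained factors
```

Comparing `total_loss(U0, V0, ratings)` with `total_loss(U, V, ratings)` shows how much the training passes reduce the error on the observed ratings.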

Matrix factorization

- Must use some form of regularization (usually L2):
- Update rules become:
  - for f = 1 to F
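
A single regularized update might look like the sketch below. It assumes the per-sample loss is the squared error plus `reg` times the sum of squares of the 2F factors involved; the exact form and constants on the slide may differ, and `sgd_step_l2` and `reg` are hypothetical names.

```python
def sgd_step_l2(U, V, i, j, rating, learning_rate=0.01, reg=0.1):
    """One SGD step on e**2 + reg * (|U[i]|^2 + |V[j]|^2), updated in place."""
    e = rating - sum(u * v for u, v in zip(U[i], V[j]))
    for f in range(len(U[i])):
        u_old, v_old = U[i][f], V[j][f]
        # The L2 term pulls each factor toward zero in addition to the error term.
        U[i][f] = u_old + learning_rate * (2.0 * e * v_old - 2.0 * reg * u_old)
        V[j][f] = v_old + learning_rate * (2.0 * e * u_old - 2.0 * reg * v_old)
    return e


# Hypothetical case where the prediction is already exact (e = 0),
# so only the regularization term changes the factors.
U = [[1.0, 0.0]]
V = [[1.0, 0.0]]
e = sgd_step_l2(U, V, 0, 0, rating=1.0)
```

In this example the error is zero, so the update only shrinks the nonzero factors slightly, which shows the effect of the penalty in isolation.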