Machine Learning Project: PM2.5 Prediction

- Slides: 25

Disclaimer: This PPT is adapted from the course materials of Dr. Hung-yi Lee: http://speech.ee.ntu.edu.tw/~tlkagk/courses_ML17.html

Outline
❖ Project introduction
❖ Train/test data
❖ Objective for this project

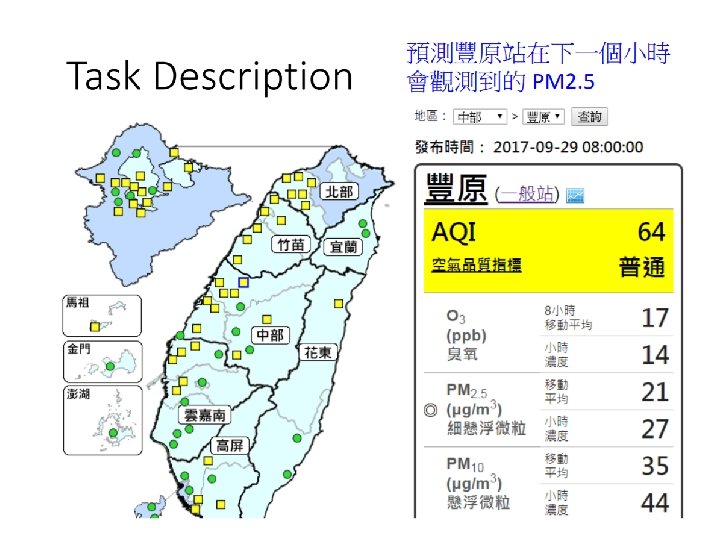

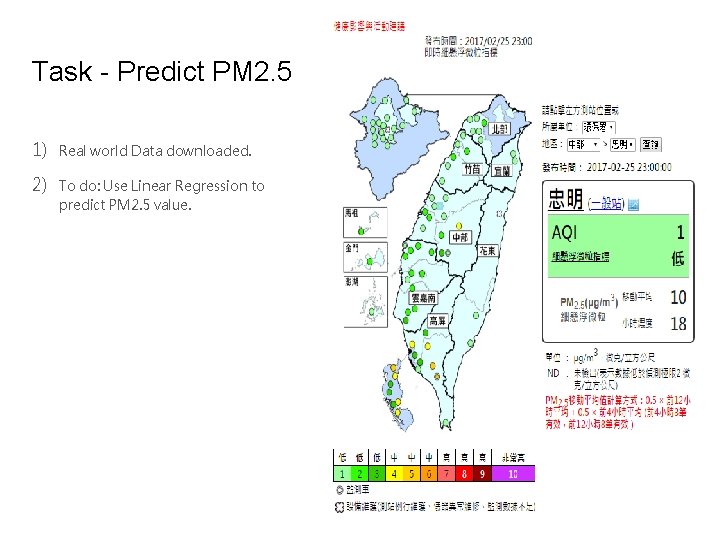

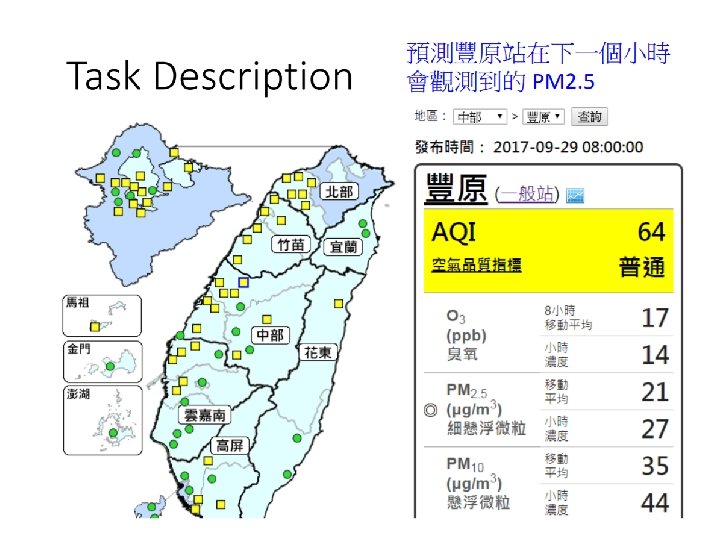

Task: Predict PM2.5
1) Real-world data has been downloaded.
2) To do: use linear regression to predict the PM2.5 value.

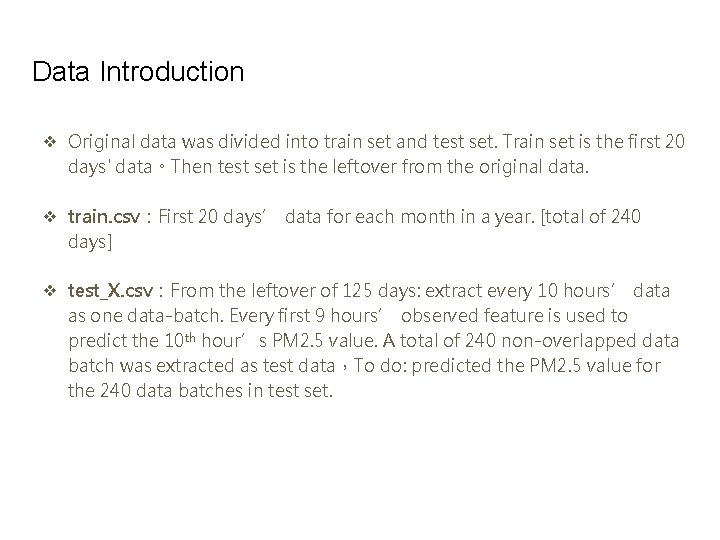

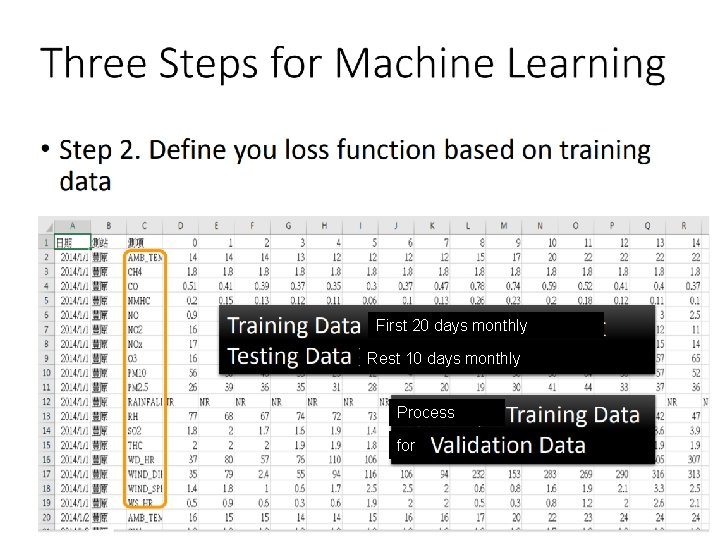

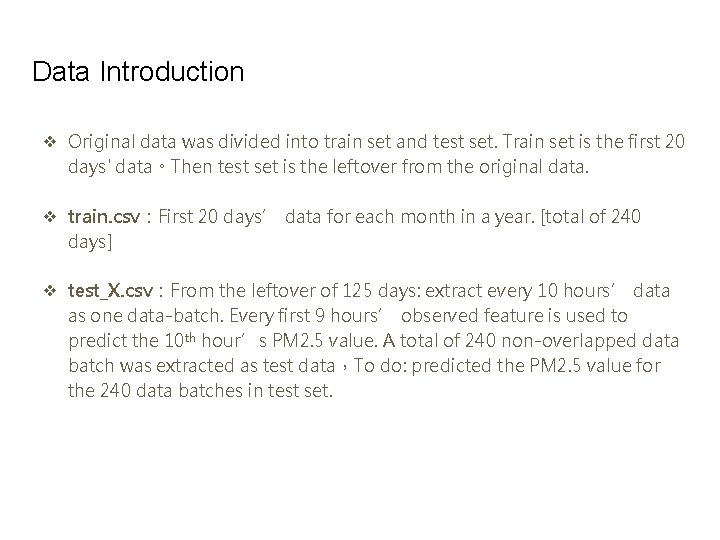

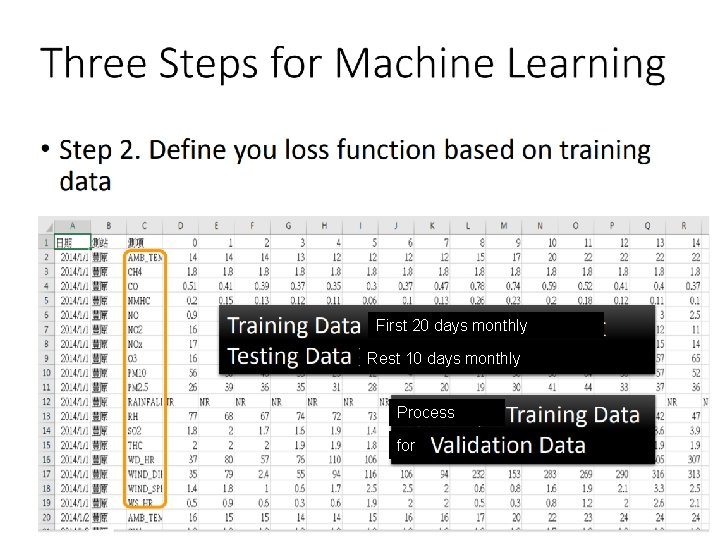

Data Introduction
❖ The original data was divided into a train set and a test set. The train set is the first 20 days of each month; the test set is what is left of the original data.
❖ train.csv: the first 20 days' data for each month of the year (240 days in total).
❖ test_X.csv: from the remaining 125 days, every 10 hours of data are taken as one data batch. The observed features of the first 9 hours are used to predict the PM2.5 value of the 10th hour. A total of 240 non-overlapping data batches were extracted as test data.
To do: predict the PM2.5 value for the 240 data batches in the test set.

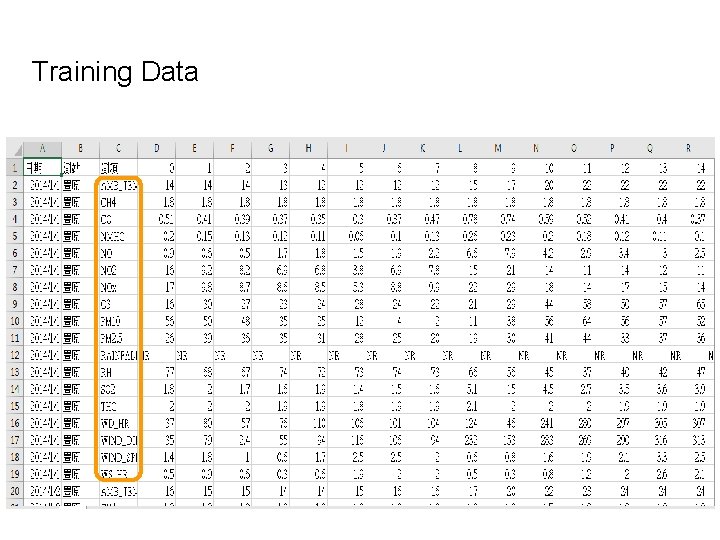

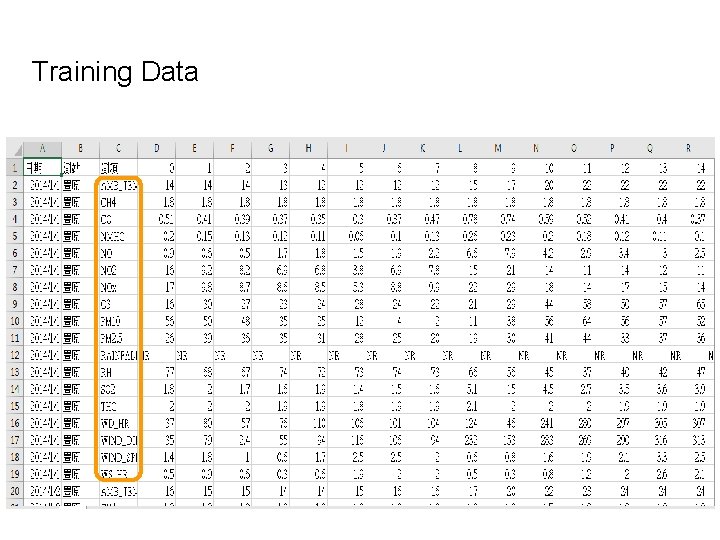

Training Data

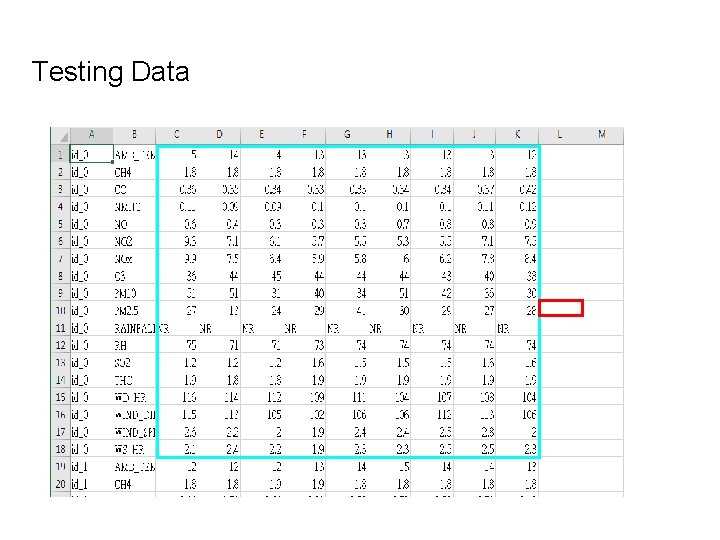

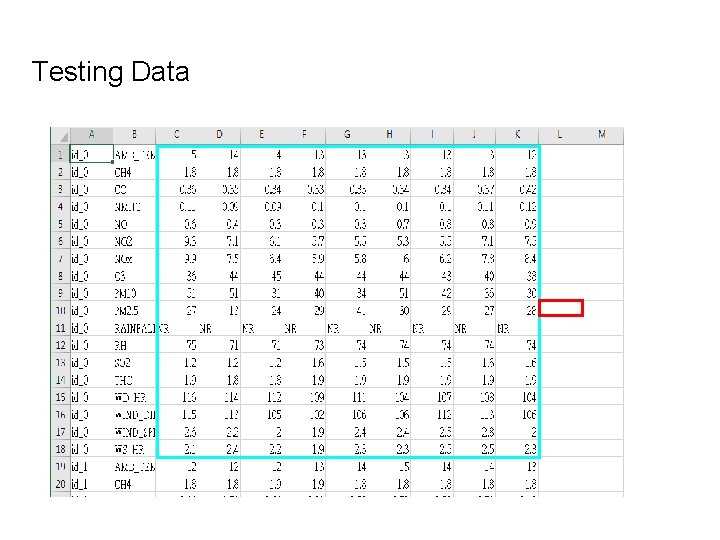

Testing Data

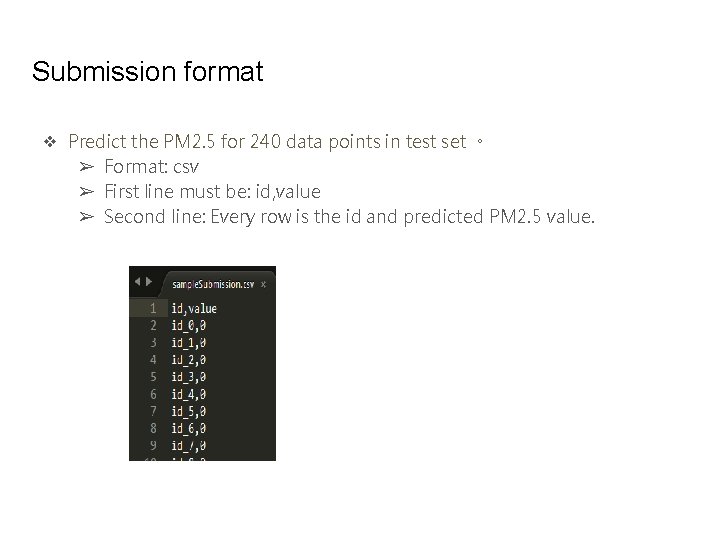

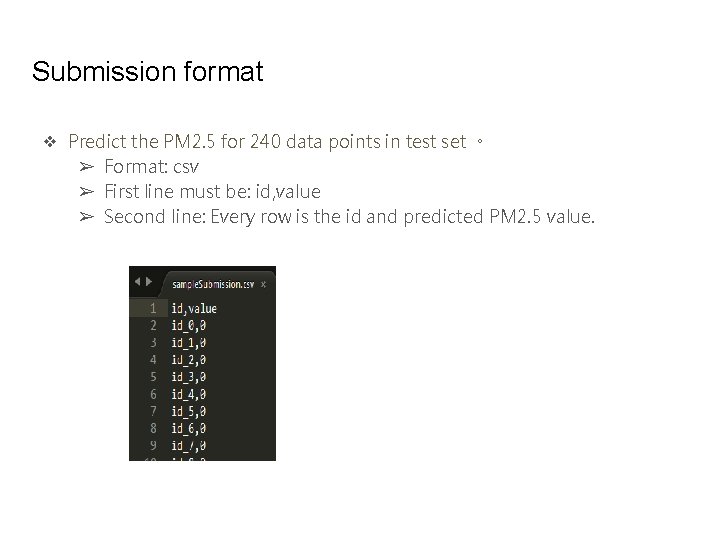

Submission format
❖ Predict PM2.5 for the 240 data points in the test set.
➢ Format: csv
➢ The first line must be: id,value
➢ From the second line on, each row holds an id and its predicted PM2.5 value.
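A minimal sketch of writing the submission file with Python's standard csv module. It assumes the ids are read from test_X.csv in file order and that predictions are in the same order; the ids and values in the demo are hypothetical, chosen only to show the layout.

```python
import csv
import io

def write_submission(ids, predictions, out):
    """Write the header 'id,value', then one 'id,prediction' row per test batch."""
    if len(ids) != len(predictions):
        raise ValueError("ids and predictions must have the same length")
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["id", "value"])
    for batch_id, value in zip(ids, predictions):
        writer.writerow([batch_id, float(value)])

if __name__ == "__main__":
    # Hypothetical ids and values, only to show the output layout.
    buf = io.StringIO()
    write_submission(["id_0", "id_1"], [12.5, 30.0], buf)
    print(buf.getvalue(), end="")
```

For a real file, open it with `open("submission.csv", "w", newline="")` and pass the file object as `out`; the file name here is an assumption.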

Instructions
❖ Python
❖ Implement linear regression with Adagrad gradient descent.
❖ You can NOT use the linear regression functions from Python packages, but you may use numpy, scipy, and pandas (the standard library is also allowed). (numpy.linalg.lstsq is not allowed!!!)

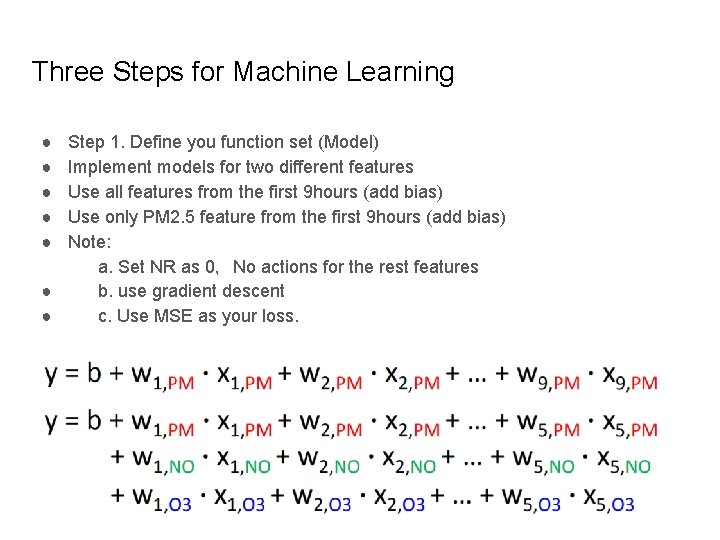

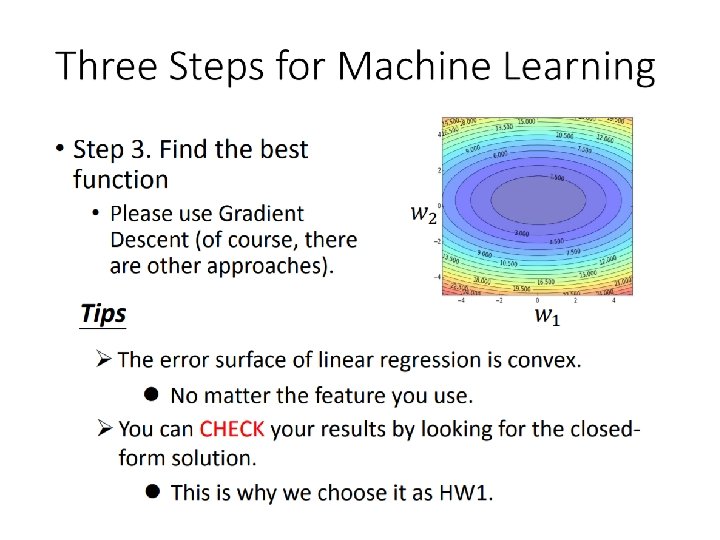

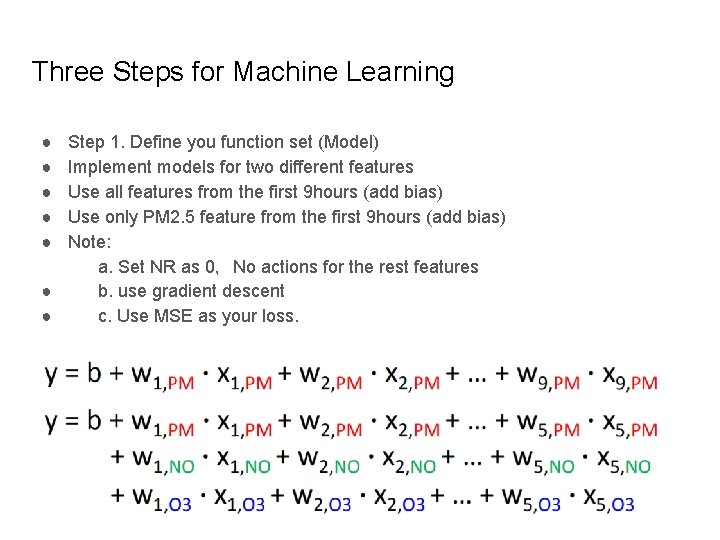

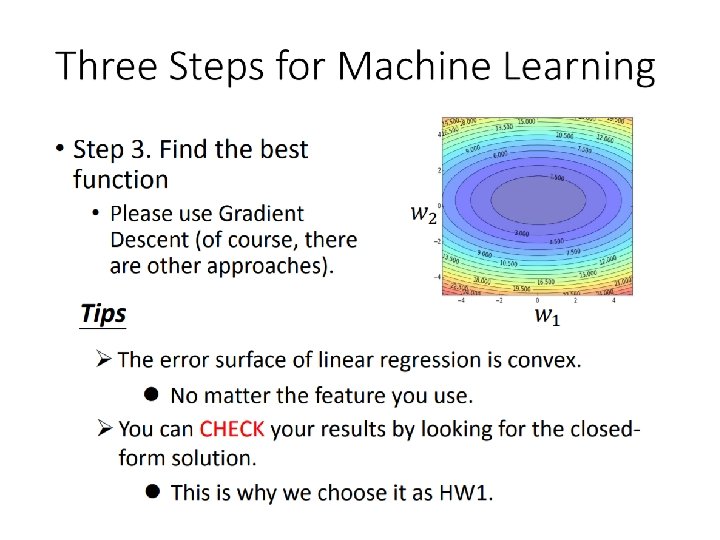

Three Steps for Machine Learning
● Step 1. Define your function set (model). Implement models for two different feature sets:
● Use all features from the first 9 hours (add bias).
● Use only the PM2.5 feature from the first 9 hours (add bias).
Notes:
a. Set NR to 0; no other preprocessing is needed for the remaining features.
b. Use gradient descent.
c. Use MSE as your loss.
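In symbols, the model and loss above can be sketched as follows, assuming $N$ training batches and that each input vector $x^n$ already carries the bias entry $x^n_0 = 1$ (the "add bias" step). The weight count $p$ follows from 18 features × 9 hours, or from the PM2.5 row alone.

$$
\begin{aligned}
\hat{y}^n &= w \cdot x^n = \sum_{i=0}^{p-1} w_i\, x^n_i, \qquad x^n_0 = 1 \ \text{(bias)} \\
p &= 18 \times 9 + 1 = 163 \ \text{(all features)}, \qquad p = 9 + 1 = 10 \ \text{(PM2.5 only)} \\
L(w) &= \frac{1}{N} \sum_{n=1}^{N} \left(\hat{y}^n - y^n\right)^2 = \frac{1}{N} \lVert Xw - y \rVert^2 \\
\nabla_w L &= \frac{2}{N} X^{\top} (Xw - y)
\end{aligned}
$$

The gradient-descent pseudo code uses $2X^{\top}(Xw - y)$, the gradient of the summed squared error; it differs from the MSE gradient only by the constant $1/N$, which the learning rate absorbs.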

[Figure: each month split into its first 20 days (train) and the remaining ~10 days (test)]

Project Implementation Instructions

Outline: simple linear regression using gradient descent (with Adagrad)
1. How to extract features
2. Implement linear regression
3. Apply the model from Step 2 to predict PM2.5

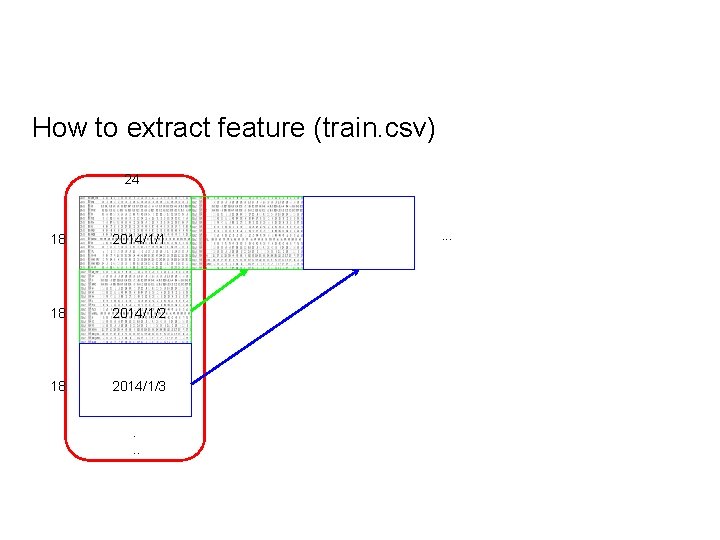

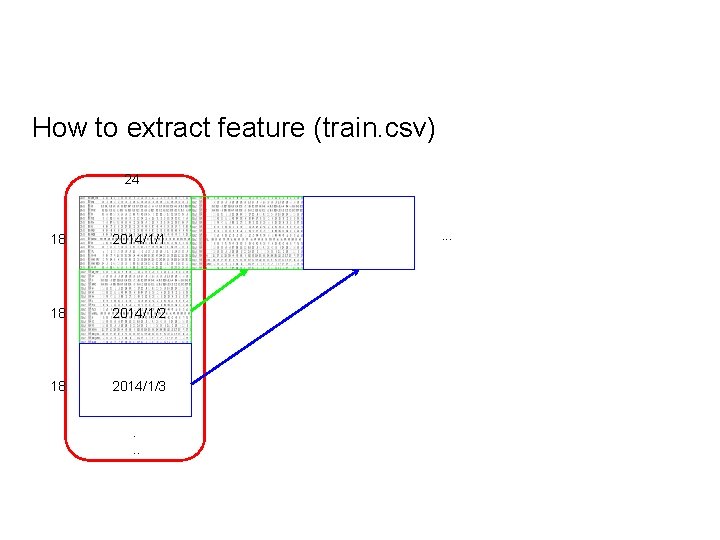

How to extract features (train.csv)
[Figure: in train.csv each day (2014/1/1, 2014/1/2, 2014/1/3, ...) occupies 18 rows (one per feature) × 24 columns (one per hour)]

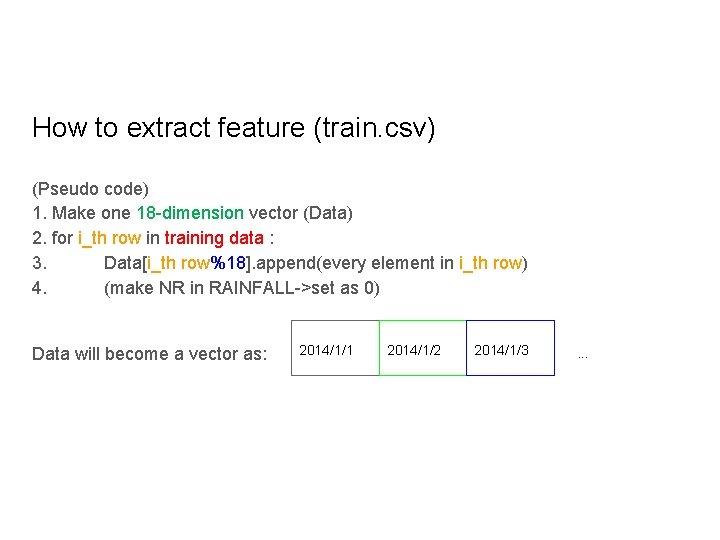

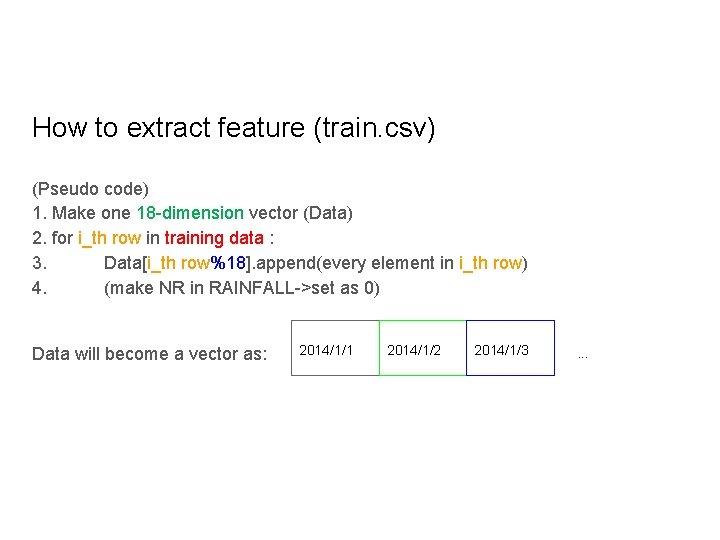

How to extract features (train.csv) (pseudo code)
1. Make Data, a list of 18 empty lists (one per feature).
2. For the i-th row in the training data:
3. Data[i % 18].append(every element in the i-th row)
4. (Set NR in RAINFALL to 0.)
Data then holds, for each of the 18 features, the hourly values of 2014/1/1, 2014/1/2, 2014/1/3, ... concatenated in order.
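A minimal Python sketch of this step. It assumes the rows have already been read (for example with `csv.reader`) and trimmed to their 24 hourly values; which leading columns (date, station, item name) must be dropped depends on the actual file and is an assumption here.

```python
import numpy as np

NUM_FEATURES = 18  # observed items per day, per the slides

def build_feature_rows(hourly_rows):
    """Stack train.csv rows into an 18 x (24 * num_days) array.

    hourly_rows: rows in file order (18 rows per day), each holding only
    the 24 hourly values as strings.
    """
    data = [[] for _ in range(NUM_FEATURES)]  # one list per feature
    for i, row in enumerate(hourly_rows):
        for value in row:
            value = value.strip()
            # Rainfall uses "NR" (no rain); the slides say to treat it as 0.
            data[i % NUM_FEATURES].append(0.0 if value == "NR" else float(value))
    return np.array(data)
```

Row i of the file goes to feature i % 18, so day boundaries never need to be tracked explicitly.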

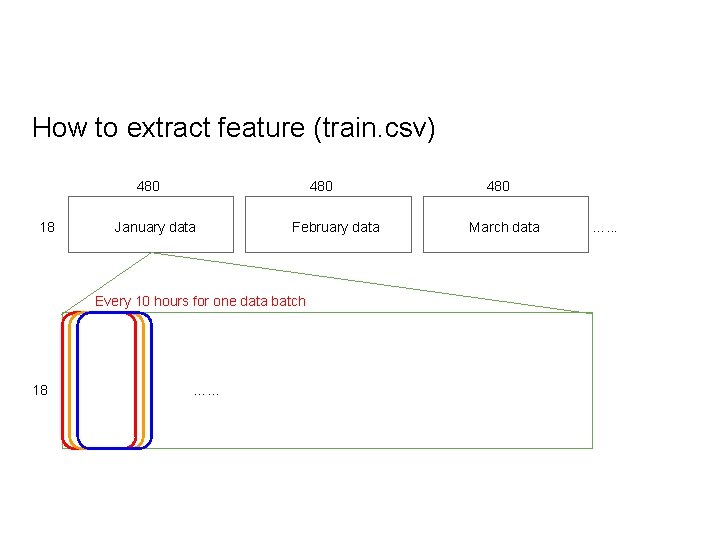

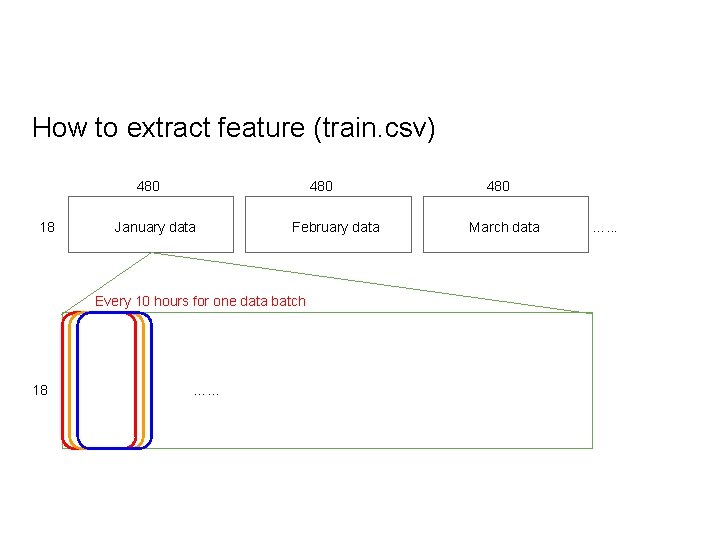

How to extract features (train.csv)
[Figure: an 18 × 480 block of hourly values for each month (January data, February data, March data, ...); every 10 consecutive hours form one data batch]

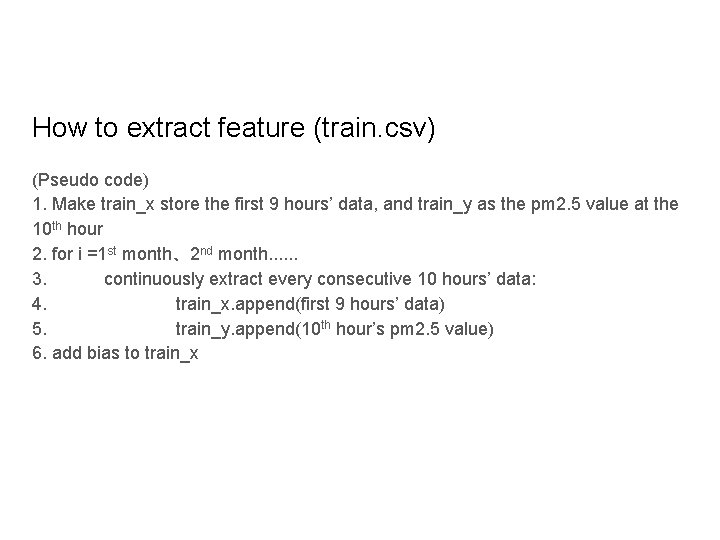

How to extract features (train.csv) (pseudo code)
1. Make train_x to store the first 9 hours' data, and train_y to store the PM2.5 value at the 10th hour.
2. For each month (1st month, 2nd month, ...):
3. extract every run of 10 consecutive hours:
4. train_x.append(first 9 hours' data)
5. train_y.append(10th hour's PM2.5 value)
6. Add a bias term to train_x.
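A sketch of these steps in numpy, under a few stated assumptions: each month contributes 480 hours (20 days × 24 hours); "continuously extract" is read as a window that slides one hour at a time within a month; PM2.5 is assumed to be row 9 of the 18 features (verify against the item order in the file); and the bias is placed in the first column.

```python
import numpy as np

HOURS_PER_MONTH = 20 * 24  # 480 training hours per month (first 20 days)
WINDOW = 10                # 9 input hours + 1 target hour
PM25_ROW = 9               # assumed row index of PM2.5 among the 18 features

def make_training_batches(data, pm25_only=False):
    """data: 18 x (480 * months) array from the previous step.
    Returns (train_x with a leading bias column, train_y)."""
    train_x, train_y = [], []
    num_months = data.shape[1] // HOURS_PER_MONTH
    for month in range(num_months):
        block = data[:, month * HOURS_PER_MONTH:(month + 1) * HOURS_PER_MONTH]
        # Slide the window one hour at a time, staying inside the month so
        # that no batch spans the gap between day 20 and the next month.
        for start in range(HOURS_PER_MONTH - WINDOW + 1):
            window = block[:, start:start + 9]          # 18 x 9 input hours
            feats = window[PM25_ROW] if pm25_only else window.flatten()
            train_x.append(feats)
            train_y.append(block[PM25_ROW, start + 9])  # 10th hour's PM2.5
    train_x = np.array(train_x)
    bias = np.ones((train_x.shape[0], 1))
    return np.hstack([bias, train_x]), np.array(train_y)
```

Each month yields 480 - 10 + 1 = 471 batches; `flatten()` keeps the features in feature-major order, and the test batches must be flattened the same way.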

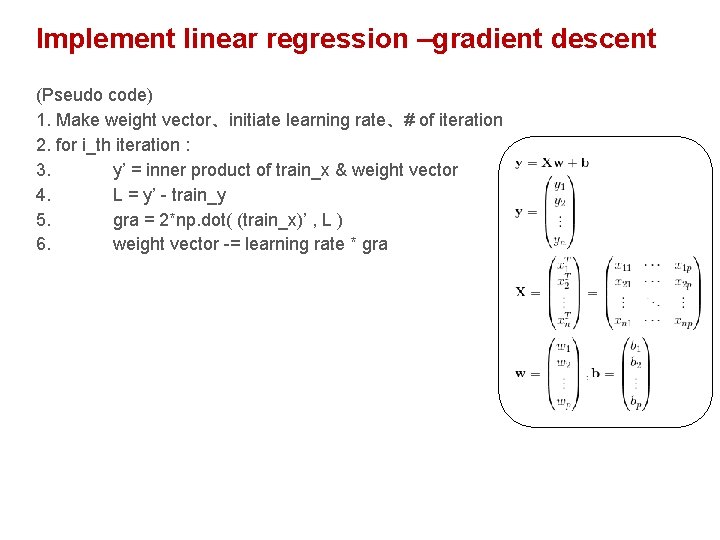

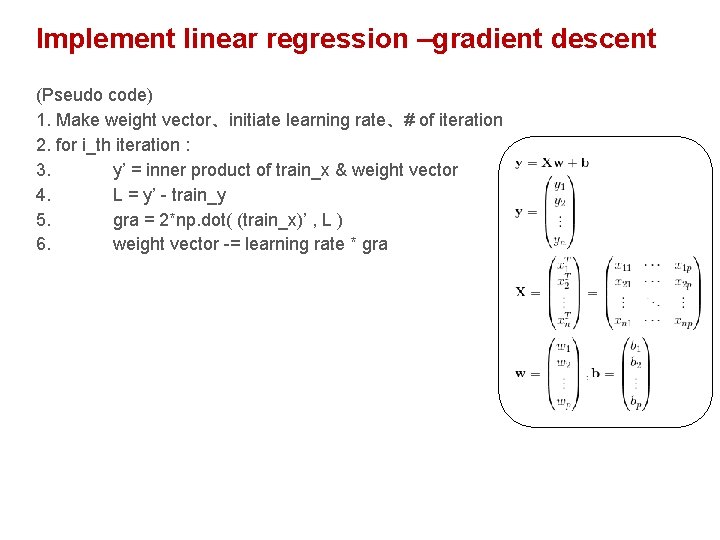

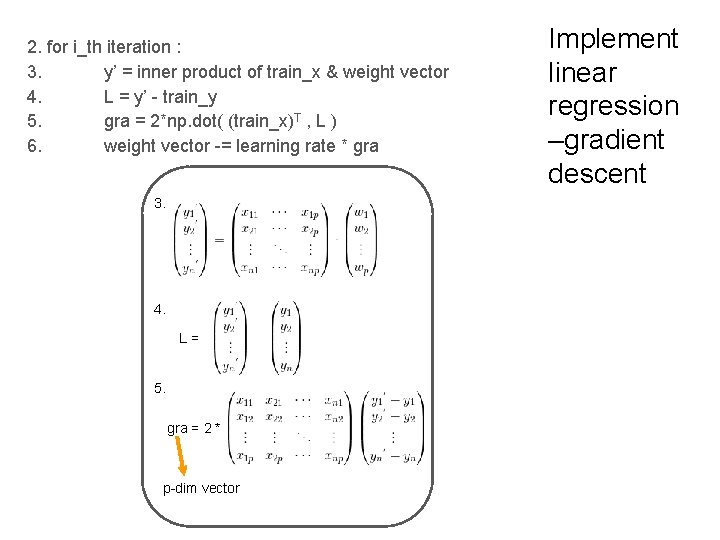

Implement linear regression – gradient descent (pseudo code)
1. Initialize the weight vector, the learning rate, and the number of iterations.
2. For the i-th iteration:
3. y' = inner product of each row of train_x with the weight vector
4. L = y' - train_y
5. gra = 2 * np.dot(train_x.T, L)
6. weight vector -= learning rate * gra
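The six steps map directly onto numpy. A minimal sketch, assuming train_x already has its bias column; the learning rate and iteration count are illustrative defaults, not tuned values.

```python
import numpy as np

def gradient_descent(train_x, train_y, lr=1e-6, iterations=10000):
    """Plain gradient descent for linear regression (steps 1-6 above)."""
    w = np.zeros(train_x.shape[1])            # step 1: weight vector
    for _ in range(iterations):               # step 2
        y_pred = train_x.dot(w)               # step 3: one inner product per batch
        residual = y_pred - train_y           # step 4: L = y' - train_y
        grad = 2 * train_x.T.dot(residual)    # step 5: gradient of the summed squared error
        w -= lr * grad                        # step 6
    return w
```

With a single shared learning rate, a value small enough for the largest-scale features can make the others converge very slowly, which is one motivation for the per-parameter step sizes of Adagrad.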

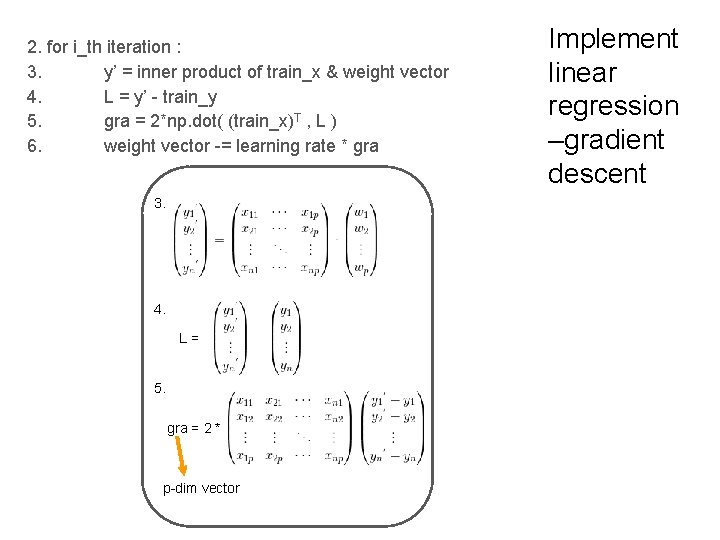

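Implement linear regression – gradient descent (matrix view, with $N$ training batches and $p$ weights including the bias)
3. $y' = Xw$: $X$ (train_x) is $N \times p$ and $w$ is $p \times 1$, so $y'$ is $N \times 1$
4. $L = y' - y$: an $N \times 1$ vector
5. $\text{gra} = 2X^{\top}L$: a $p$-dimensional vector
6. $w \leftarrow w - \eta\,\text{gra}$, where $\eta$ is the learning rate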

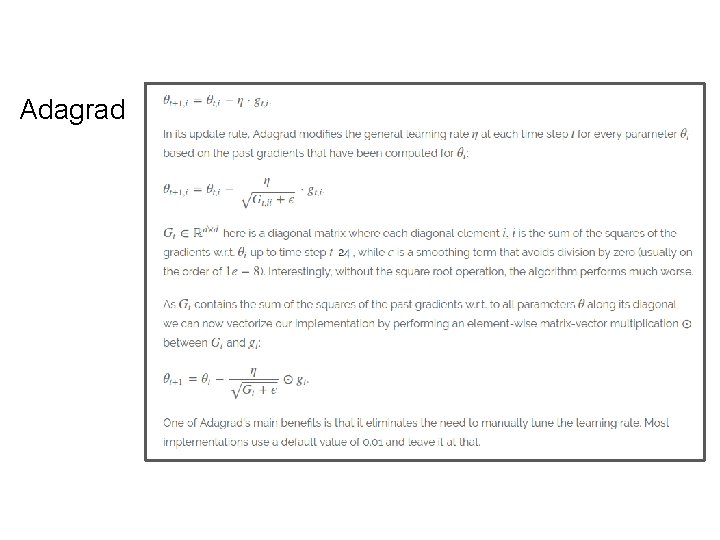

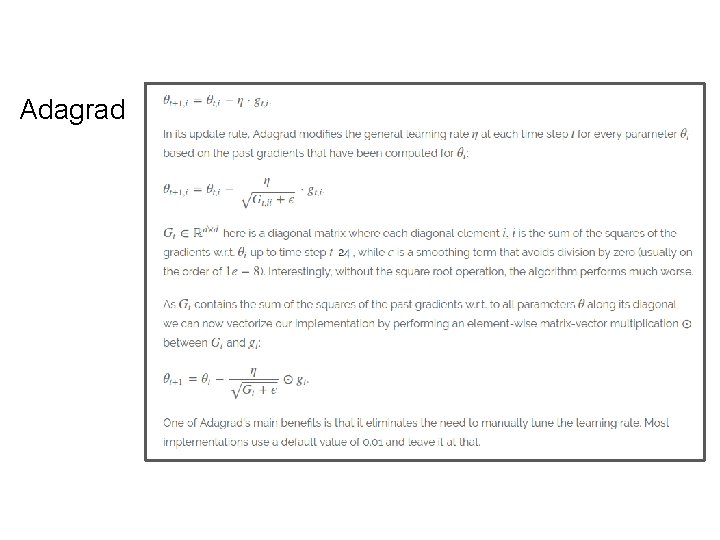

Adagrad

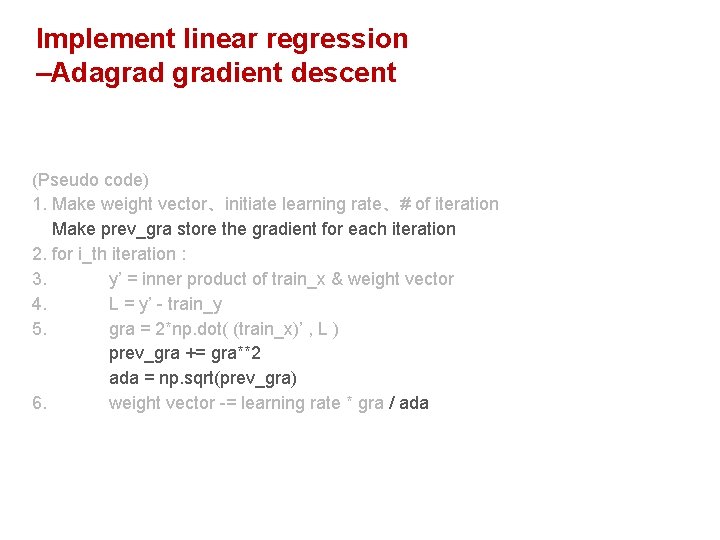

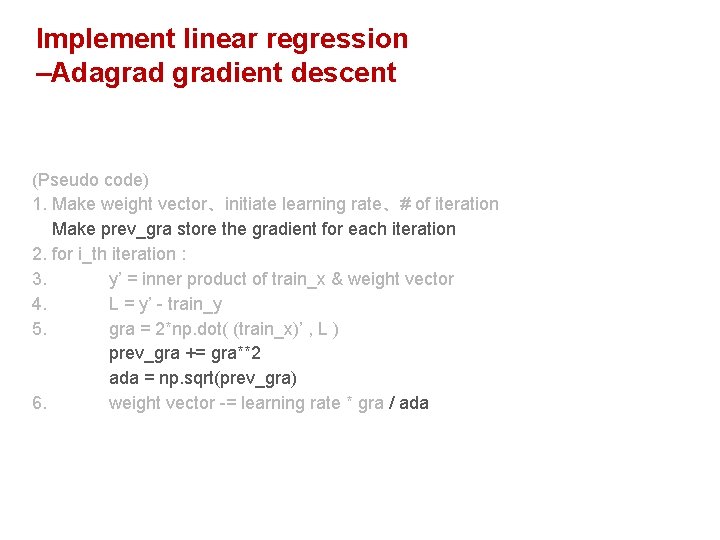

Implement linear regression – Adagrad gradient descent (pseudo code)
1. Initialize the weight vector, the learning rate, and the number of iterations; make prev_gra to accumulate the squared gradients across iterations.
2. For the i-th iteration:
3. y' = inner product of each row of train_x with the weight vector
4. L = y' - train_y
5. gra = 2 * np.dot(train_x.T, L); prev_gra += gra**2; ada = np.sqrt(prev_gra)
6. weight vector -= learning rate * gra / ada
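A minimal numpy sketch of the Adagrad steps, where each weight gets its own step size $\eta / \sqrt{\sum_t g_t^2}$. The small `eps` term is an addition not in the slides, included only to avoid 0/0 when a gradient component is exactly zero on the first iteration; `lr` and `iterations` are illustrative defaults.

```python
import numpy as np

def adagrad(train_x, train_y, lr=10.0, iterations=10000, eps=1e-8):
    """Linear regression trained with Adagrad (steps 1-6 above)."""
    w = np.zeros(train_x.shape[1])
    prev_gra = np.zeros(train_x.shape[1])  # running sum of squared gradients
    for _ in range(iterations):
        residual = train_x.dot(w) - train_y     # steps 3-4: L = y' - train_y
        gra = 2 * train_x.T.dot(residual)       # step 5: gradient
        prev_gra += gra ** 2
        ada = np.sqrt(prev_gra) + eps           # eps is an added safeguard
        w -= lr * gra / ada                     # step 6: per-weight step size
    return w
```

Because the step size is divided per component, Adagrad is far less sensitive to the differing scales of the 18 features than plain gradient descent with one learning rate.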

Predict PM2.5 (pseudo code)
1. Read test_X.csv.
2. For every 18 rows (one test batch):
3. start a new row of test_x with the bias term 1
4. append the 9 hours of data for those 18 features to that row
5. test_y = np.dot(test_x, weight vector)
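A sketch of the prediction step. It assumes the test rows were already trimmed to their 9 hourly values (the leading id and item-name columns are an assumption about the file layout), that PM2.5 is row 9 as assumed for training, and that the feature order matches the training batches. Note the argument order in `np.dot`: test_x is (batches × p) and the weight vector is (p,).

```python
import numpy as np

NUM_FEATURES = 18
PM25_ROW = 9  # assumed index of PM2.5, same assumption as for training

def build_test_x(test_rows, pm25_only=False):
    """test_rows: rows of test_X.csv in file order, 18 rows per batch, each
    holding only the 9 hourly values as strings. Returns one bias-prefixed
    feature row per batch."""
    test_x = []
    for start in range(0, len(test_rows), NUM_FEATURES):
        block = np.array([[0.0 if v.strip() == "NR" else float(v) for v in row]
                          for row in test_rows[start:start + NUM_FEATURES]])
        feats = block[PM25_ROW] if pm25_only else block.flatten()
        test_x.append(np.concatenate([[1.0], feats]))  # bias first, as in training
    return np.array(test_x)

def predict(test_x, w):
    # (batches x p) times (p,) gives one prediction per test batch
    return np.dot(test_x, w)
```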

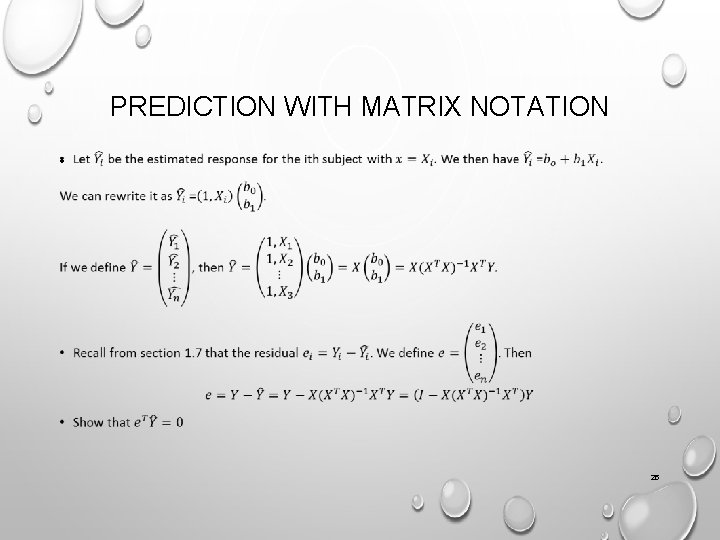

STT 512 FALL 2017 CHAPTER 2 DR. YISHI WANG

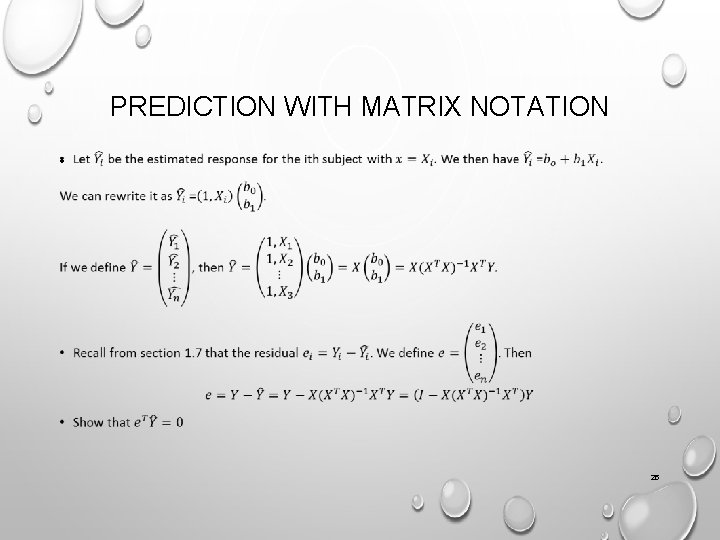

PREDICTION WITH MATRIX NOTATION
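For the 240 test batches, prediction in matrix notation can be sketched as follows, assuming $X_{\text{test}}$ stacks one bias-prefixed feature row per batch (in the same column order used for training) and $w$ is the learned weight vector with $p$ entries:

$$
\hat{y} = X_{\text{test}}\, w, \qquad
X_{\text{test}} =
\begin{bmatrix}
1 & x^{1}_{1} & \cdots & x^{1}_{p-1} \\
\vdots & \vdots & & \vdots \\
1 & x^{240}_{1} & \cdots & x^{240}_{p-1}
\end{bmatrix} \in \mathbb{R}^{240 \times p}, \qquad
\hat{y} \in \mathbb{R}^{240}
$$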