Sensor Data Management and XML Data Management Zachary

![XML in a Database § Use a legacy RDBMS § § § Shredding [Shanmugasundaram+99] XML in a Database § Use a legacy RDBMS § § § Shredding [Shanmugasundaram+99]](https://slidetodoc.com/presentation_image_h/13ab9bc6292d3e8b85cd48a2316ab1a0/image-22.jpg)

- Slides: 33

Sensor Data Management and XML Data Management Zachary G. Ives University of Pennsylvania CIS 650 – Implementing Data Management Systems November 19, 2008

Administrivia § By next Tuesday, please email me with a status report on your project § … We are well under a month from the deadline! § For next time: § Please read & review the Turbo. XPath paper 2

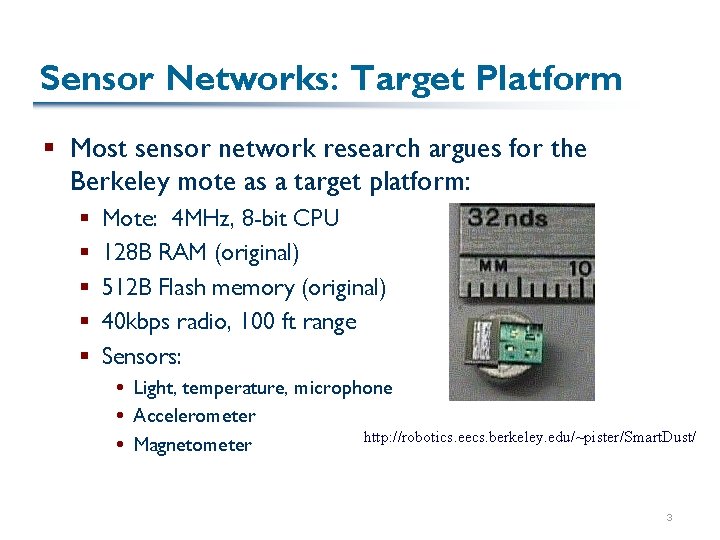

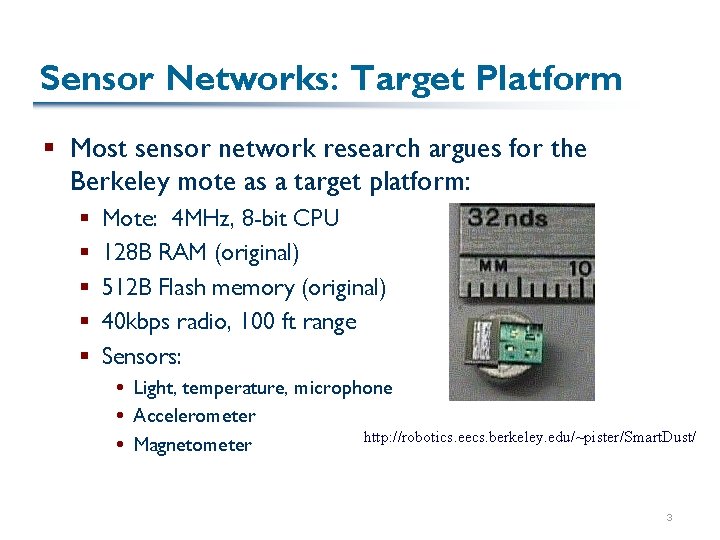

Sensor Networks: Target Platform § Most sensor network research argues for the Berkeley mote as a target platform: § § § Mote: 4 MHz, 8 -bit CPU 128 B RAM (original) 512 B Flash memory (original) 40 kbps radio, 100 ft range Sensors: Light, temperature, microphone Accelerometer http: //robotics. eecs. berkeley. edu/~pister/Smart. Dust/ Magnetometer 3

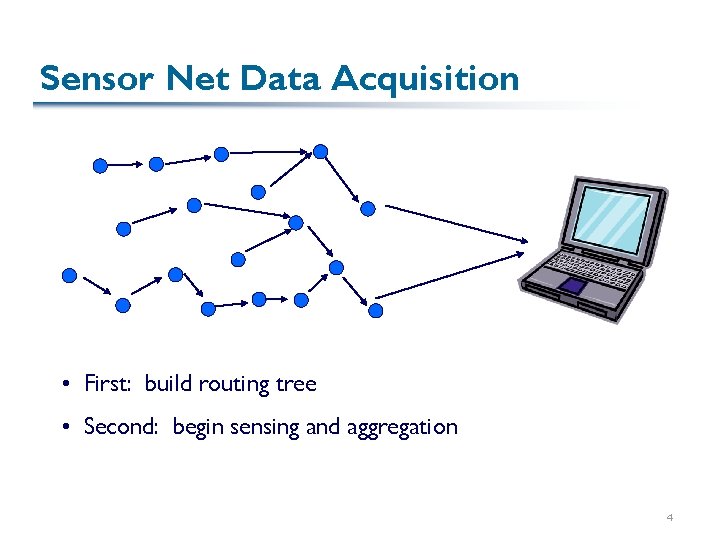

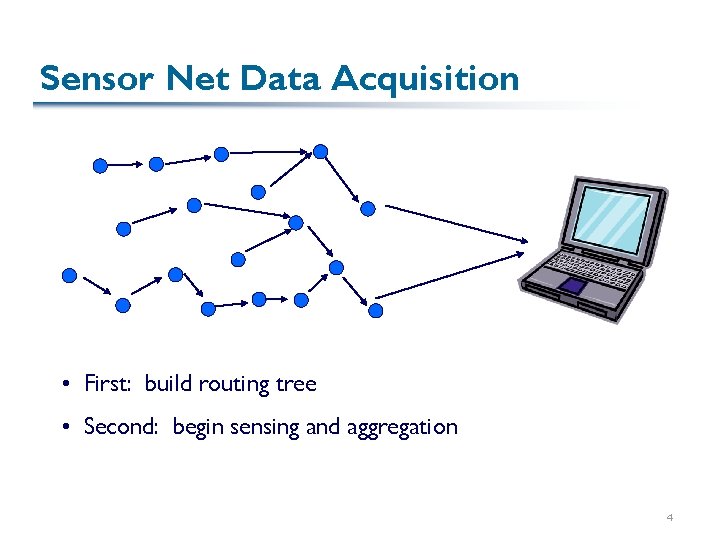

Sensor Net Data Acquisition • First: build routing tree • Second: begin sensing and aggregation 4

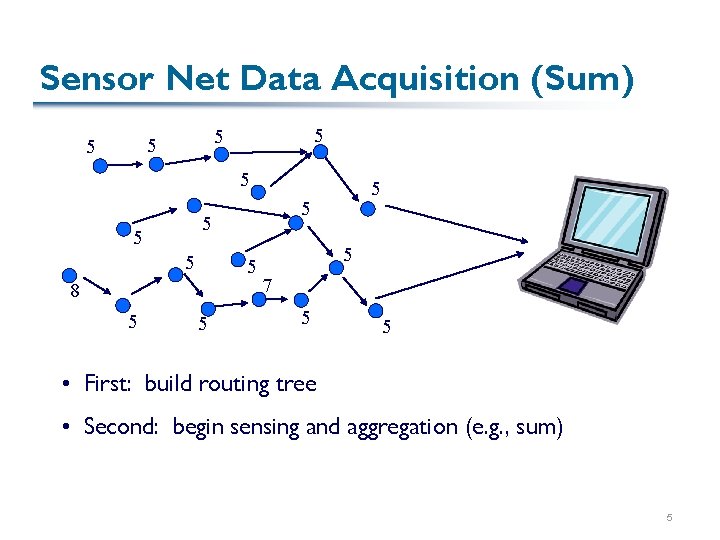

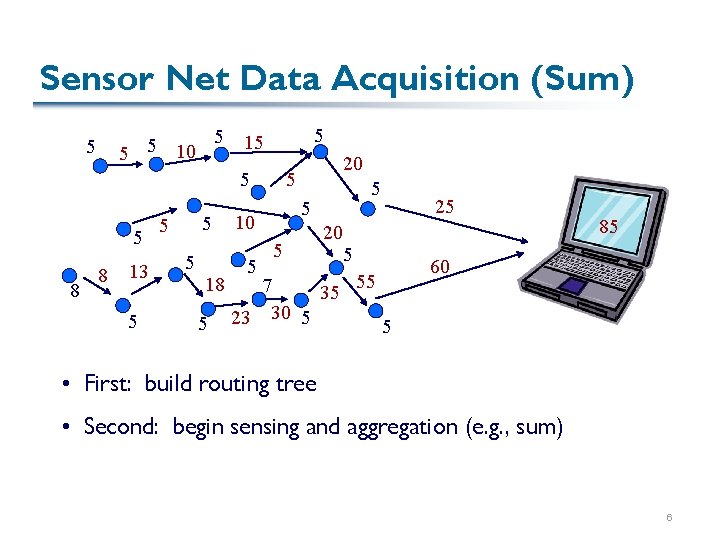

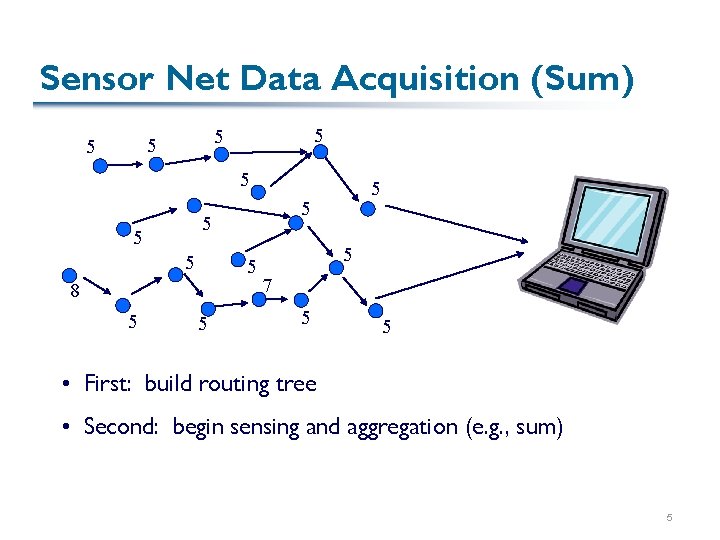

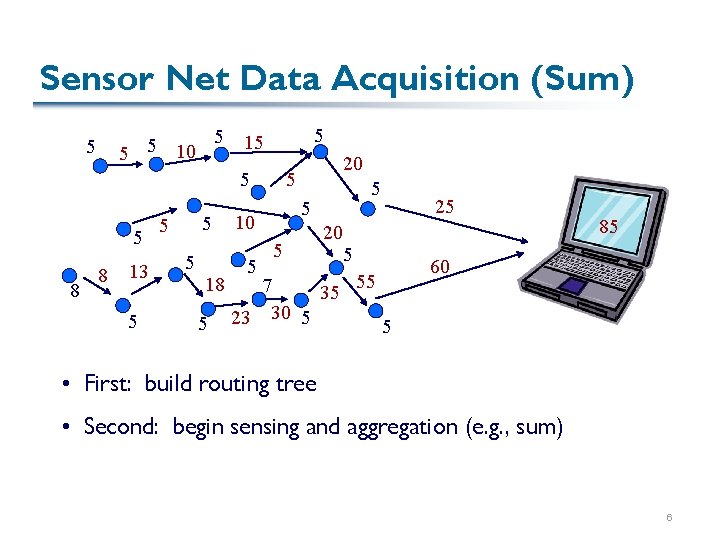

Sensor Net Data Acquisition (Sum) 5 5 5 5 8 5 5 5 5 7 5 5 • First: build routing tree • Second: begin sensing and aggregation (e. g. , sum) 5

Sensor Net Data Acquisition (Sum) 5 8 5 5 10 5 5 5 8 13 5 5 18 5 5 10 5 20 7 35 23 30 5 25 5 85 60 55 5 • First: build routing tree • Second: begin sensing and aggregation (e. g. , sum) 6

Sensor Network Research § Routing: need to aggregate and consolidate data in a power-efficient way § Ad hoc routing – generate routing tree to base station § Generally need to merge computation with routing § Robustness: need to combine info from many sensors to account for individual errors § What aggregation functions make sense? § Languages: how do we express what we want to do with sensor networks? § Many proposals here 7

A First Try: Tiny OS and nes. C § Tiny. OS: a custom OS for sensor nets, written in nes. C § Assumes low-power CPU Very limited concurrency support: events (signaled asynchronously) and tasks (cooperatively scheduled) § Applications built from “components” Basically, small objects without any local state § Various features in libraries that may or may not be included § interface Timer { command result_t start(char type, uint 32_t interval); command result_t stop(); event result_t fired(); } 8

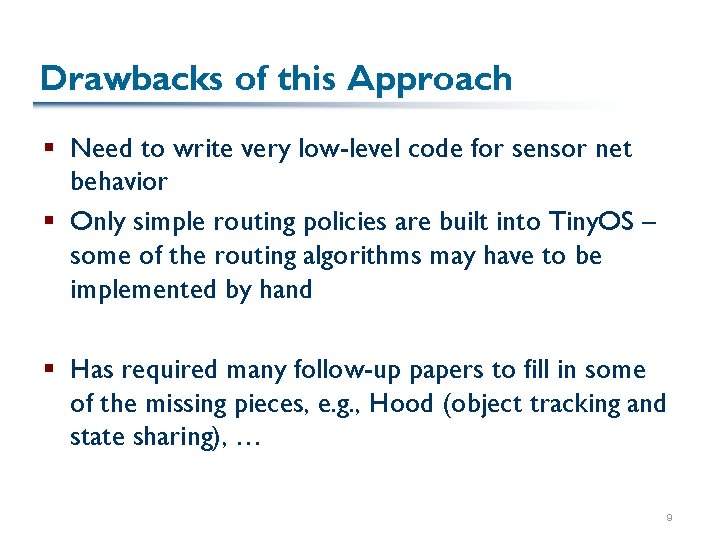

Drawbacks of this Approach § Need to write very low-level code for sensor net behavior § Only simple routing policies are built into Tiny. OS – some of the routing algorithms may have to be implemented by hand § Has required many follow-up papers to fill in some of the missing pieces, e. g. , Hood (object tracking and state sharing), … 9

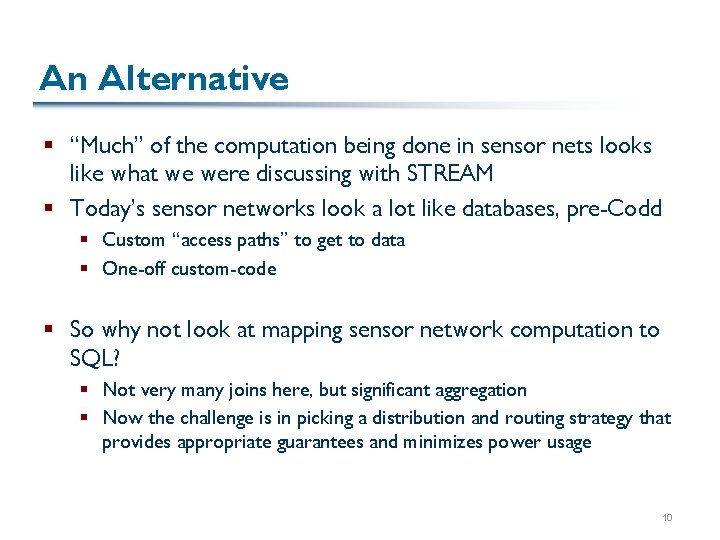

An Alternative § “Much” of the computation being done in sensor nets looks like what we were discussing with STREAM § Today’s sensor networks look a lot like databases, pre-Codd § Custom “access paths” to get to data § One-off custom-code § So why not look at mapping sensor network computation to SQL? § Not very many joins here, but significant aggregation § Now the challenge is in picking a distribution and routing strategy that provides appropriate guarantees and minimizes power usage 10

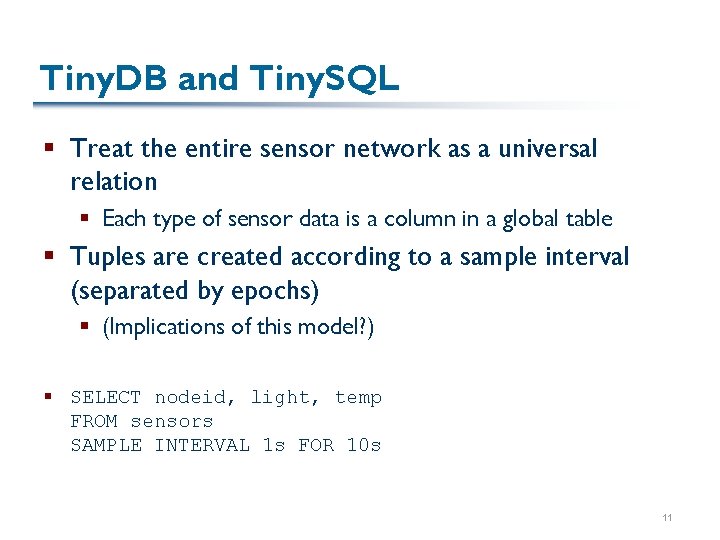

Tiny. DB and Tiny. SQL § Treat the entire sensor network as a universal relation § Each type of sensor data is a column in a global table § Tuples are created according to a sample interval (separated by epochs) § (Implications of this model? ) § SELECT nodeid, light, temp FROM sensors SAMPLE INTERVAL 1 s FOR 10 s 11

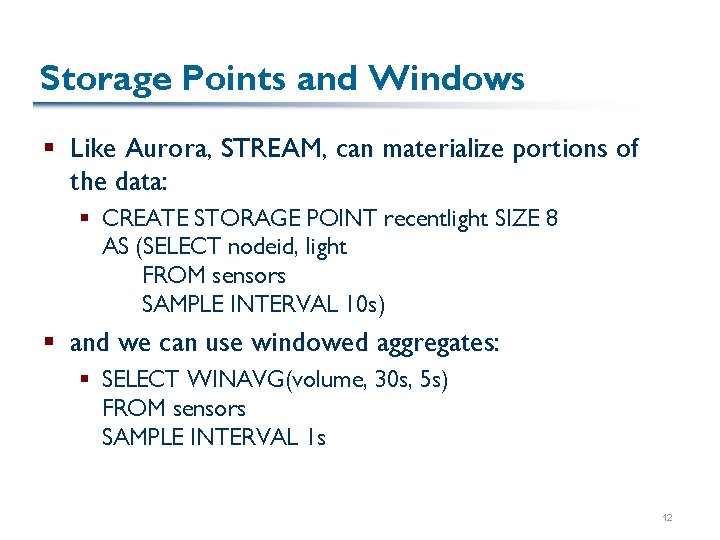

Storage Points and Windows § Like Aurora, STREAM, can materialize portions of the data: § CREATE STORAGE POINT recentlight SIZE 8 AS (SELECT nodeid, light FROM sensors SAMPLE INTERVAL 10 s) § and we can use windowed aggregates: § SELECT WINAVG(volume, 30 s, 5 s) FROM sensors SAMPLE INTERVAL 1 s 12

Events § ON EVENT bird-detect(loc): SELECT AVG(light), AVG(temp), event. loc FROM sensors AS s WHERE dist(s. loc, event. loc) < 10 m SAMPLE INTERVAL 2 s FOR 30 s 13

Power and Tiny. DB § Cost-based optimizer tries to find a query plan to yield lowest overall power consumption § Different sensors have different power usage Try to order sampling according to selectivity (sounds familiar? ) Assumption of uniform distribution of values over range § Batching of queries (multi-query optimization) Convert a series of events into a stream join with a table § Also need to consider where the query is processed… 14

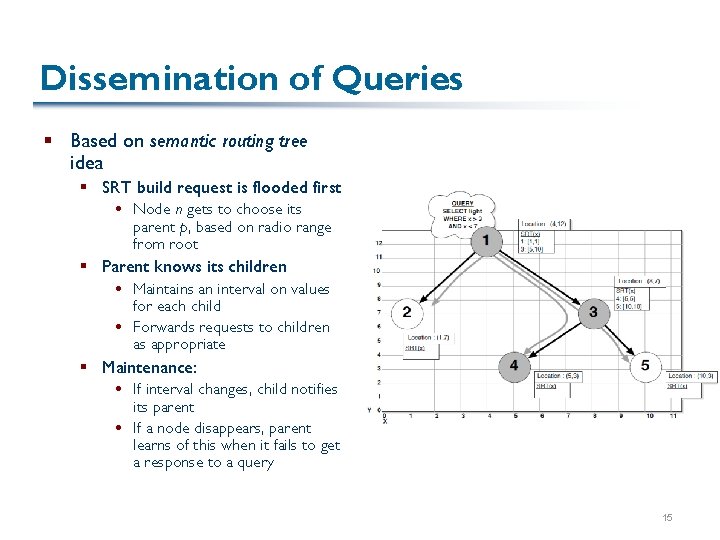

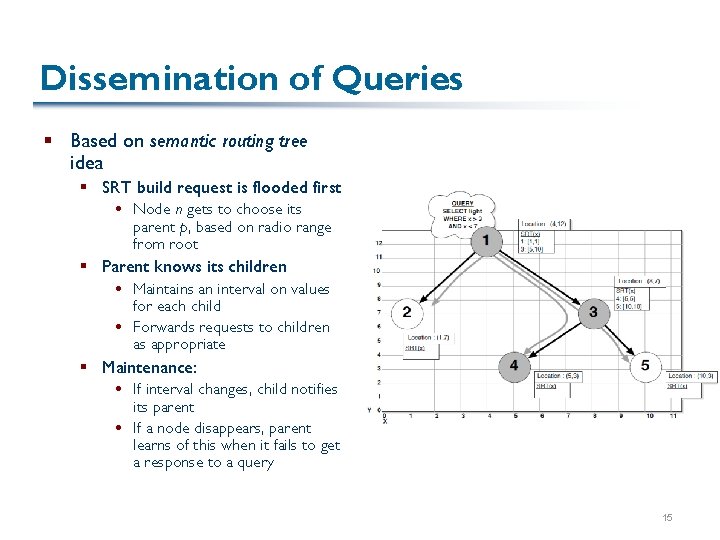

Dissemination of Queries § Based on semantic routing tree idea § SRT build request is flooded first Node n gets to choose its parent p, based on radio range from root § Parent knows its children Maintains an interval on values for each child Forwards requests to children as appropriate § Maintenance: If interval changes, child notifies its parent If a node disappears, parent learns of this when it fails to get a response to a query 15

Query Processing § Mostly consists of sleeping! § Wake briefly, sample, and compute operators, then route onwards § Nodes are time synchronized § Awake time is proportional to the neighborhood size (why? ) § Computation is based on partial state records § Basically, each operation is a partial aggregate value, plus the reading from the sensor 16

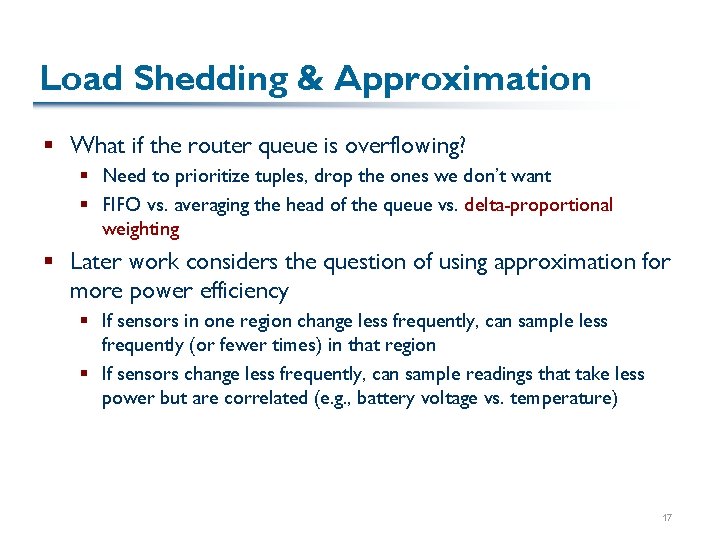

Load Shedding & Approximation § What if the router queue is overflowing? § Need to prioritize tuples, drop the ones we don’t want § FIFO vs. averaging the head of the queue vs. delta-proportional weighting § Later work considers the question of using approximation for more power efficiency § If sensors in one region change less frequently, can sample less frequently (or fewer times) in that region § If sensors change less frequently, can sample readings that take less power but are correlated (e. g. , battery voltage vs. temperature) 17

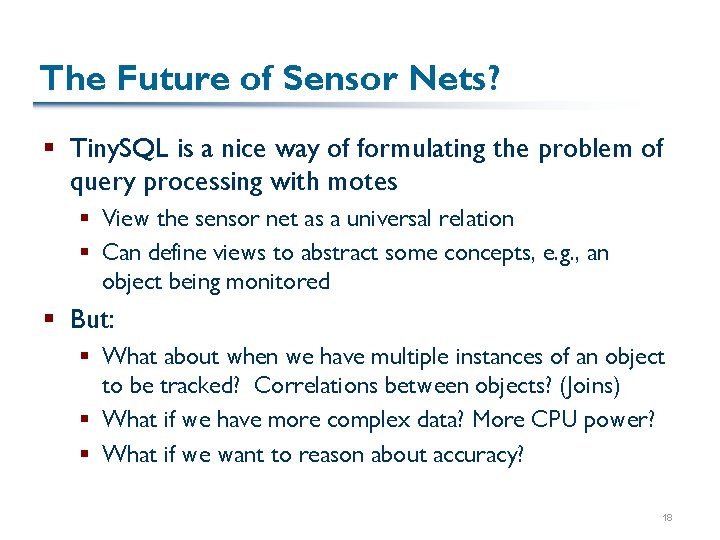

The Future of Sensor Nets? § Tiny. SQL is a nice way of formulating the problem of query processing with motes § View the sensor net as a universal relation § Can define views to abstract some concepts, e. g. , an object being monitored § But: § What about when we have multiple instances of an object to be tracked? Correlations between objects? (Joins) § What if we have more complex data? More CPU power? § What if we want to reason about accuracy? 18

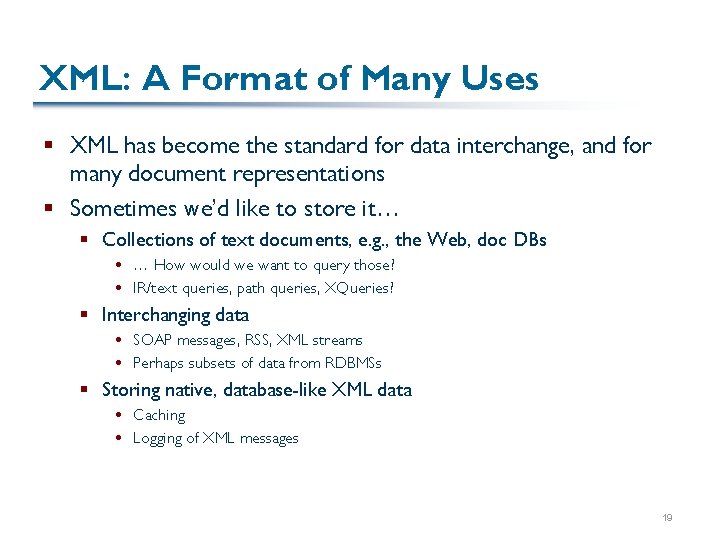

XML: A Format of Many Uses § XML has become the standard for data interchange, and for many document representations § Sometimes we’d like to store it… § Collections of text documents, e. g. , the Web, doc DBs … How would we want to query those? IR/text queries, path queries, XQueries? § Interchanging data SOAP messages, RSS, XML streams Perhaps subsets of data from RDBMSs § Storing native, database-like XML data Caching Logging of XML messages 19

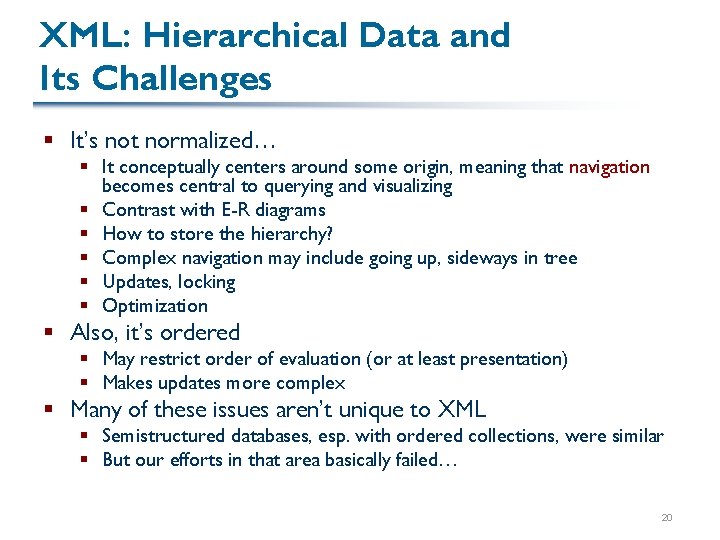

XML: Hierarchical Data and Its Challenges § It’s not normalized… § It conceptually centers around some origin, meaning that navigation becomes central to querying and visualizing § Contrast with E-R diagrams § How to store the hierarchy? § Complex navigation may include going up, sideways in tree § Updates, locking § Optimization § Also, it’s ordered § May restrict order of evaluation (or at least presentation) § Makes updates more complex § Many of these issues aren’t unique to XML § Semistructured databases, esp. with ordered collections, were similar § But our efforts in that area basically failed… 20

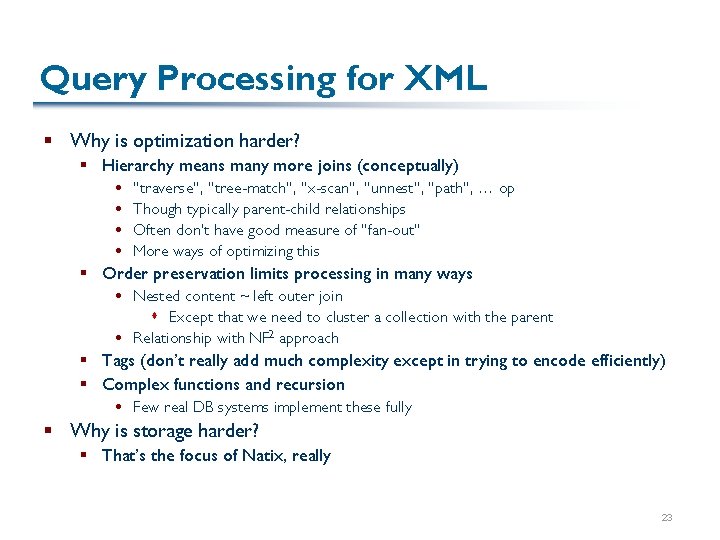

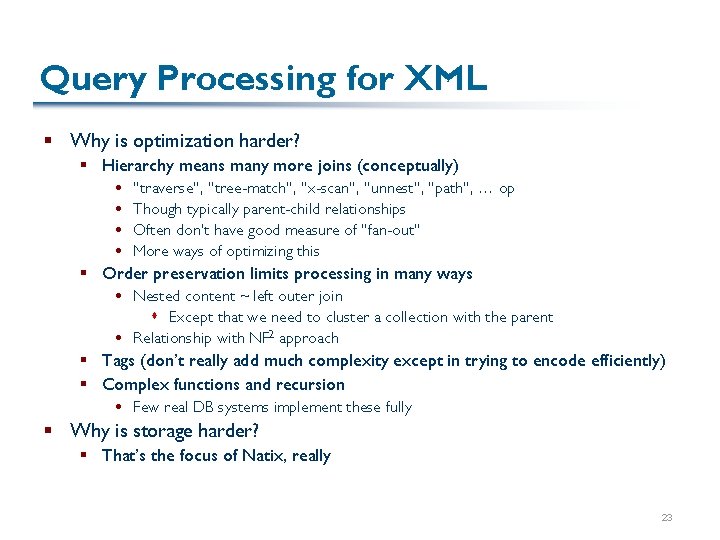

Two Ways of Thinking of XML Processing § XML databases (today) § Hierarchical storage + locking (Natix, TIMBER, Berkeley. DB, Tamino, …) § Query optimization § “Streaming XML” (next time) § RDBMS XML export § Partitioning of computation between source and mediator § “Streaming XPath” engines § The difference is in storage (or lack thereof) 21

![XML in a Database Use a legacy RDBMS Shredding Shanmugasundaram99 XML in a Database § Use a legacy RDBMS § § § Shredding [Shanmugasundaram+99]](https://slidetodoc.com/presentation_image_h/13ab9bc6292d3e8b85cd48a2316ab1a0/image-22.jpg)

XML in a Database § Use a legacy RDBMS § § § Shredding [Shanmugasundaram+99] and many others Path-based encodings [Cooper+01] Region-based encodings [Bruno+02][Chen+04] Order preservation in updates [Tatarinov+02], … What’s novel here? How does this relate to materialized views and warehousing? § Native XML databases § Hierarchical storage (Natix, TIMBER, Berkeley. DB, Tamino, …) § Updates and locking § Query optimization (e. g. , that on Galax) 22

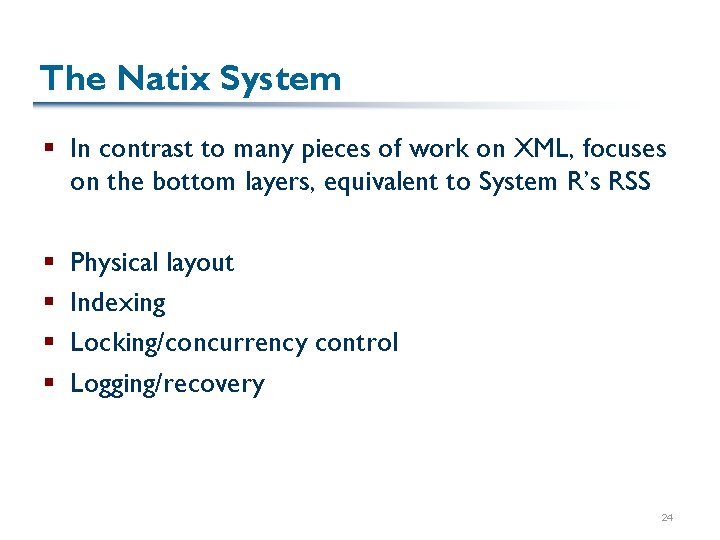

Query Processing for XML § Why is optimization harder? § Hierarchy means many more joins (conceptually) “traverse”, “tree-match”, “x-scan”, “unnest”, “path”, … op Though typically parent-child relationships Often don’t have good measure of “fan-out” More ways of optimizing this § Order preservation limits processing in many ways Nested content ~ left outer join s Except that we need to cluster a collection with the parent Relationship with NF 2 approach § Tags (don’t really add much complexity except in trying to encode efficiently) § Complex functions and recursion Few real DB systems implement these fully § Why is storage harder? § That’s the focus of Natix, really 23

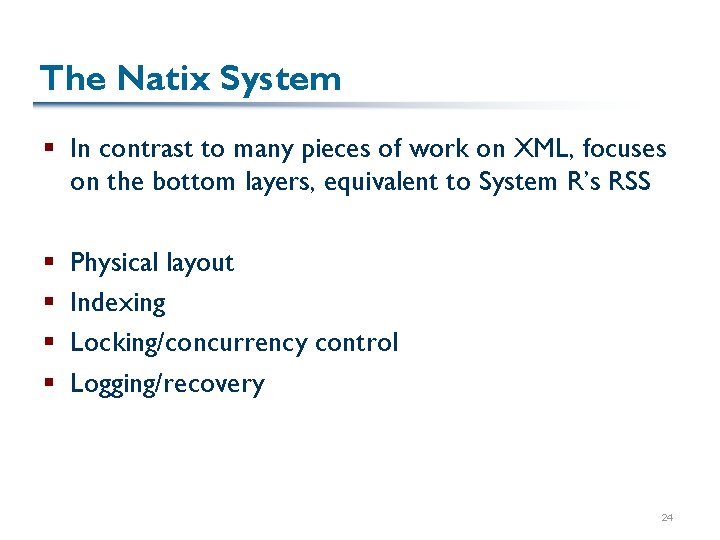

The Natix System § In contrast to many pieces of work on XML, focuses on the bottom layers, equivalent to System R’s RSS § § Physical layout Indexing Locking/concurrency control Logging/recovery 24

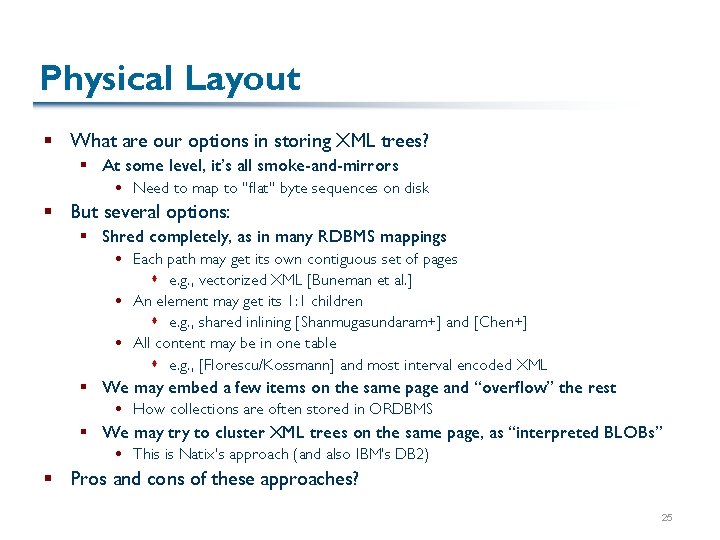

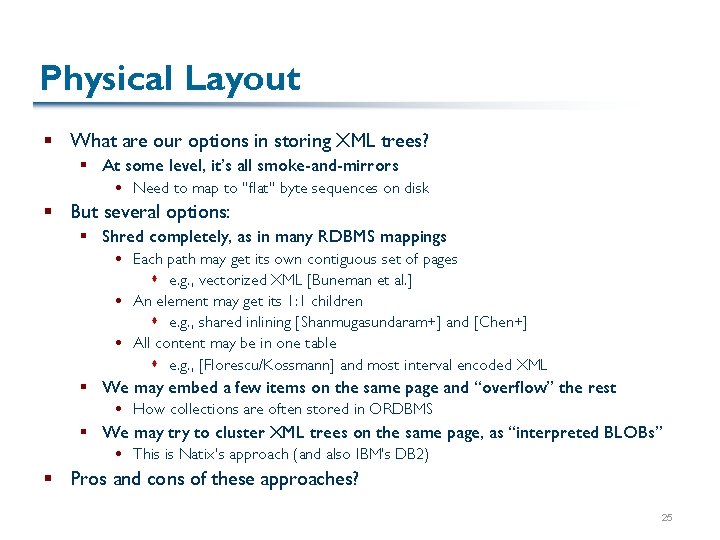

Physical Layout § What are our options in storing XML trees? § At some level, it’s all smoke-and-mirrors Need to map to “flat” byte sequences on disk § But several options: § Shred completely, as in many RDBMS mappings Each path may get its own contiguous set of pages s e. g. , vectorized XML [Buneman et al. ] An element may get its 1: 1 children s e. g. , shared inlining [Shanmugasundaram+] and [Chen+] All content may be in one table s e. g. , [Florescu/Kossmann] and most interval encoded XML § We may embed a few items on the same page and “overflow” the rest How collections are often stored in ORDBMS § We may try to cluster XML trees on the same page, as “interpreted BLOBs” This is Natix’s approach (and also IBM’s DB 2) § Pros and cons of these approaches? 25

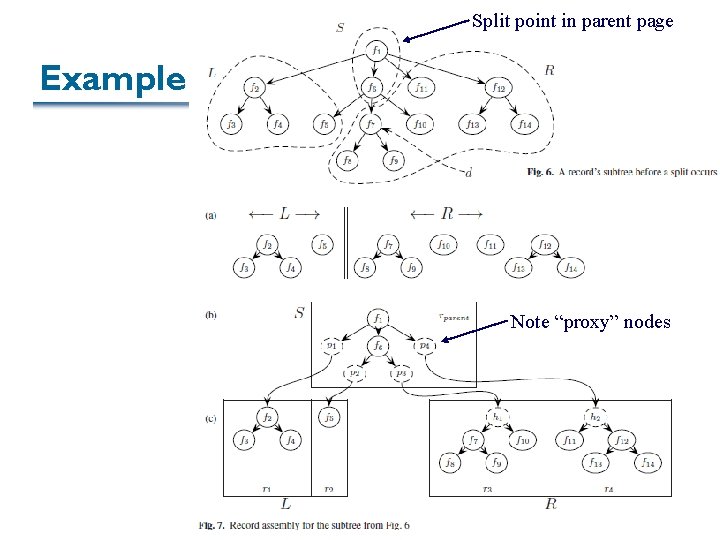

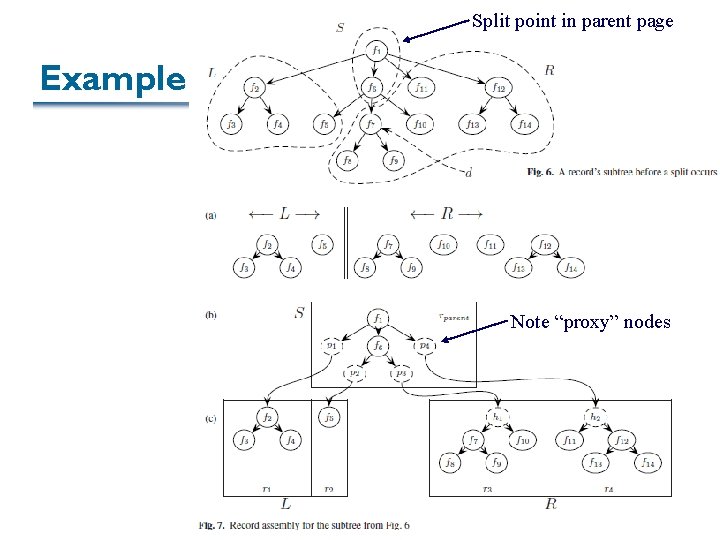

Challenges of the Page-per-Tree Approach § How big of a tree? § What happens if the XML overflows the tree? § Natix claims an adaptive approach to choosing the tree’s granularity § Primarily based on balancing the tree, constraints on children that must appear with a parent § What other possibilities make sense? § Natix uses a B+ Tree-like scheme for achieving balance and splitting a tree across pages 26

Split point in parent page Example Note “proxy” nodes 27

That Was Simple – But What about Updates? § Clearly, insertions and deletions can affect things § Deletion may ultimately require us to rebalance § Ditto with insertion § But insertion also may make us run out of space – what to do? § Their approach: add another page; ultimately may need to split at multiple levels, as in B+ Tree § Others have studied this problem and used integer encoding schemes (plus B+ Trees) for the order 28

Does this Help? § According to general lore, yes § The Natix experiments in this paper were limited in their query and adaptivity loads § But the IBM people say their approach, which is similar, works significantly better than Oracle’s shredded approach 29

There’s More to Updates than the Pages § What about concurrency control and recovery? § We already have a notion of hierarchical locks, but they claim: § If we want to support IDREF traversal, and indexing directly to nodes, we need more § What’s the idea behind SPP locking? 30

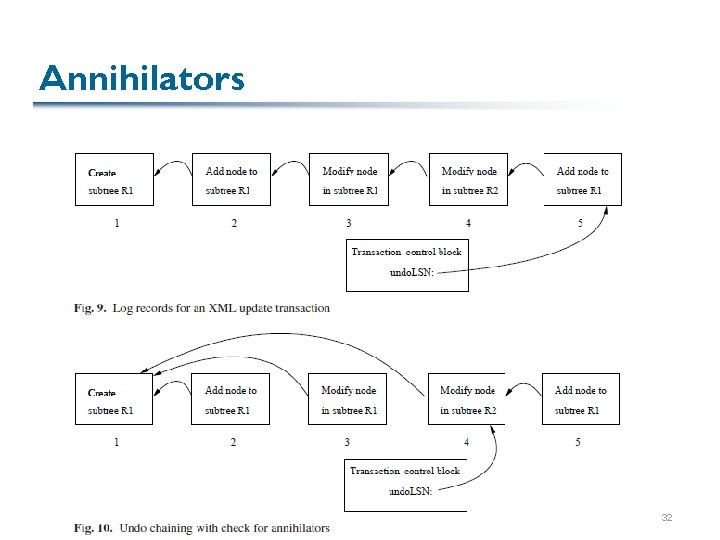

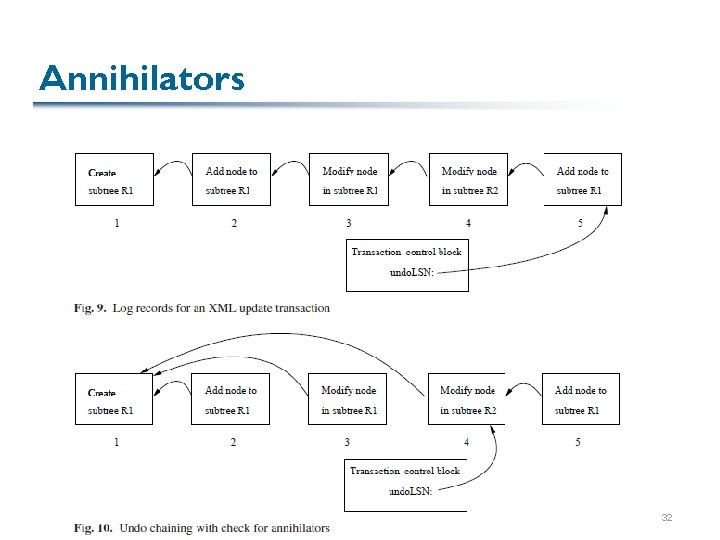

Logging § They claim ARIES needs some modifications – why? § Their changes: § Need to make subtree updates more efficient – don’t want to write a log entry for each subtree insertion § Use (a copy of) the page itself as a means of tracking what was inserted, then batch-apply to WAL § “Annihilators”: if we undo a tree creation, then we probably don’t need to worry about undoing later changes to that tree § A few minor tweaks to minimize undo/redo when only one transaction touches a page 31

Annihilators 32

Assessment § Native XML storage isn’t really all that different from other means of storage § There are probably some good reasons to make a few tweaks in locking § Optimization remains harder § A real solution to materialized view creation would probably make RDBMSs come close to delivering the same performance, modulo locking 33