
- Slides: 23

Sense clusters versus sense relations Irina Chugur, Julio Gonzalo UNED (Spain)

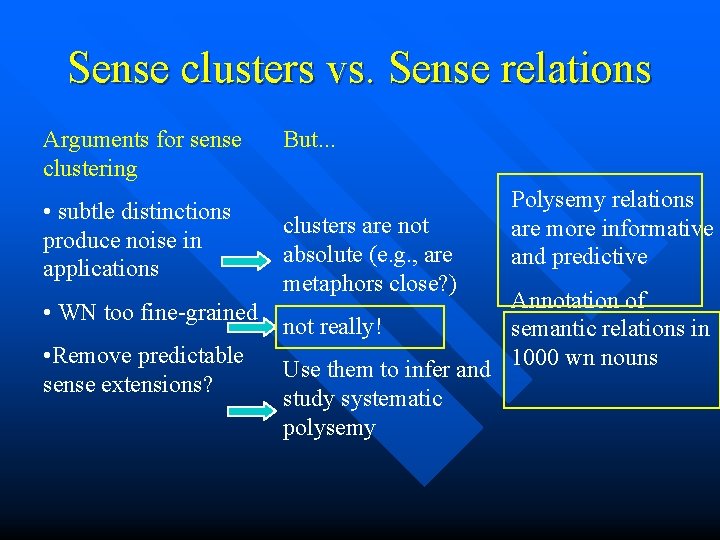

Sense clusters vs. Sense relations

Arguments for sense clustering:

- Subtle distinctions produce noise in applications
- WN too fine-grained
- Remove predictable sense extensions?

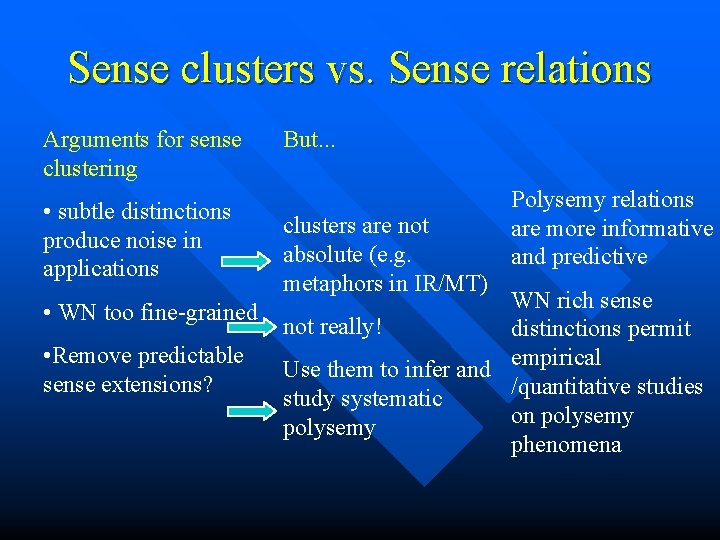

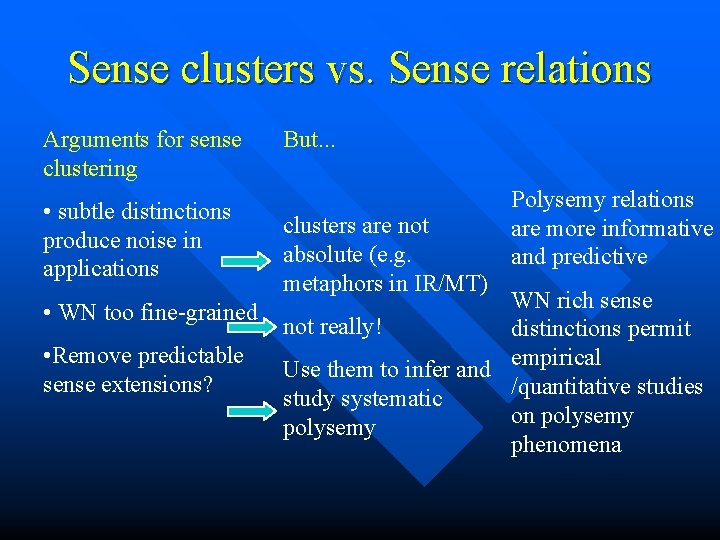

Sense clusters vs. Sense relations

Arguments for sense clustering:

- Subtle distinctions produce noise in applications
- WN too fine-grained
- Remove predictable sense extensions?

But...

- Clusters are not absolute (e.g. metaphors in IR/MT)
- Polysemy relations are more informative and predictive
- WN rich sense distinctions permit empirical/quantitative studies on polysemy phenomena
- Not really! Use them to infer and study systematic polysemy

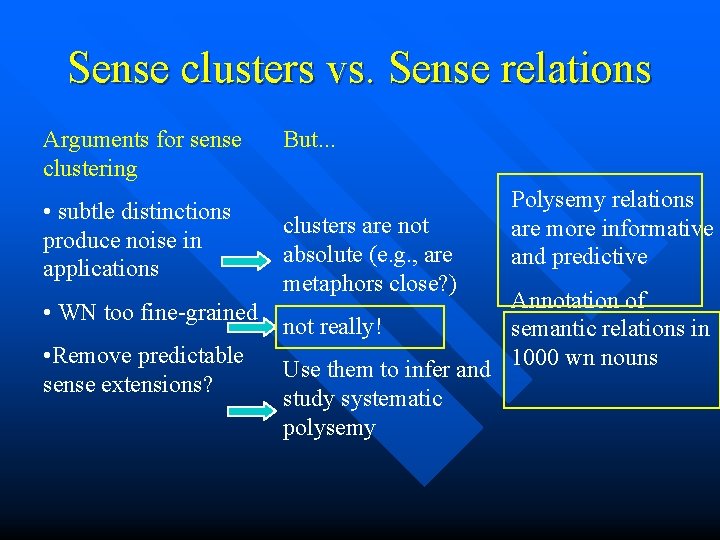

Sense clusters vs. Sense relations

Arguments for sense clustering:

- Subtle distinctions produce noise in applications
- WN too fine-grained
- Remove predictable sense extensions?

But...

- Clusters are not absolute (e.g., are metaphors close?)
- Polysemy relations are more informative and predictive
- Annotation of semantic relations in 1000 WN nouns
- Not really! Use them to infer and study systematic polysemy

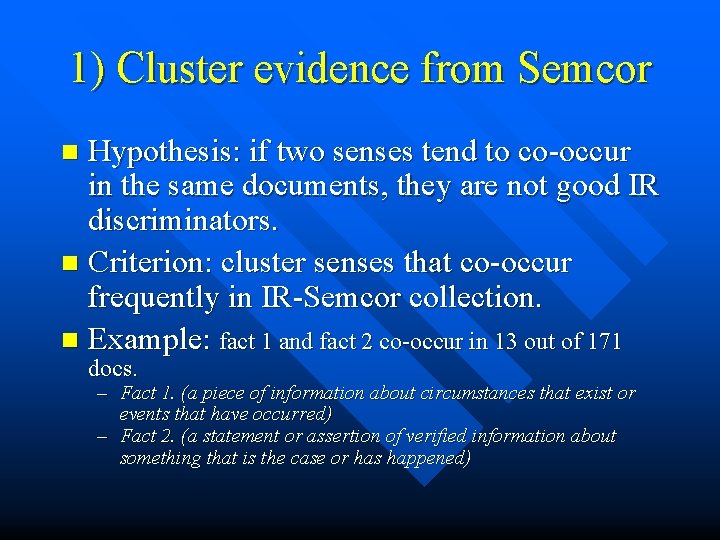

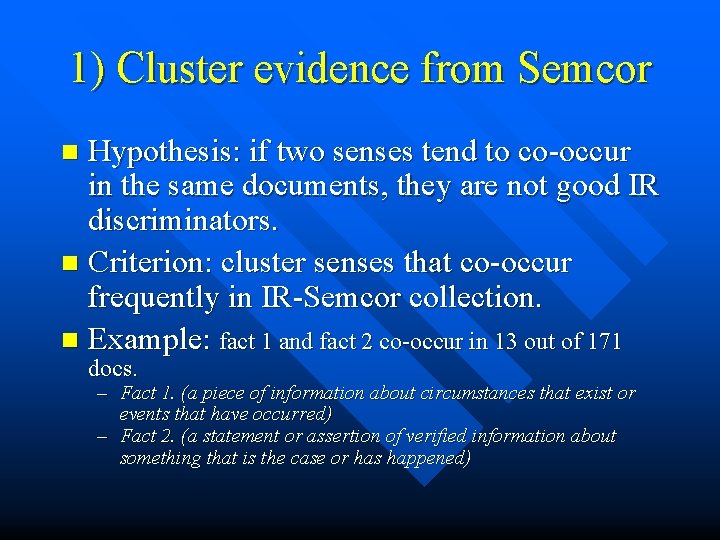

1) Cluster evidence from Semcor

- Hypothesis: if two senses tend to co-occur in the same documents, they are not good IR discriminators.
- Criterion: cluster senses that co-occur frequently in the IR-Semcor collection.
- Example: fact 1 and fact 2 co-occur in 13 out of 171 docs.
  - Fact 1: (a piece of information about circumstances that exist or events that have occurred)
  - Fact 2: (a statement or assertion of verified information about something that is the case or has happened)
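The co-occurrence criterion can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `cooccurrence_counts` and the toy documents are hypothetical, and the slide does not say what count or ratio is treated as "frequent".

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(docs):
    """Count the documents in which two senses of the same word both occur.

    `docs` is a list of sets of (word, sense_number) tags, one set per document.
    """
    counts = Counter()
    for tags in docs:
        # Sorting makes every pair come out in a canonical (lower, higher) order.
        for a, b in combinations(sorted(tags), 2):
            if a[0] == b[0]:  # same word, two different senses
                counts[(a, b)] += 1
    return counts


# Toy sense-tagged collection (hypothetical data, not IR-Semcor).
docs = [
    {("fact", 1), ("fact", 2), ("band", 1)},
    {("fact", 1), ("fact", 2)},
    {("fact", 1), ("band", 2)},
]

counts = cooccurrence_counts(docs)
pair = (("fact", 1), ("fact", 2))
print(pair, counts[pair])
```

Sense pairs whose count exceeds some chosen threshold would then be candidates for clustering; the threshold itself is left open here because the slide does not give one.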

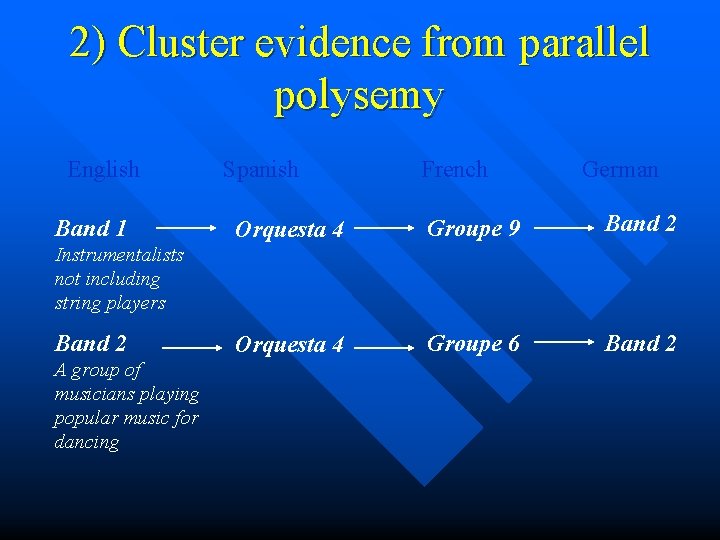

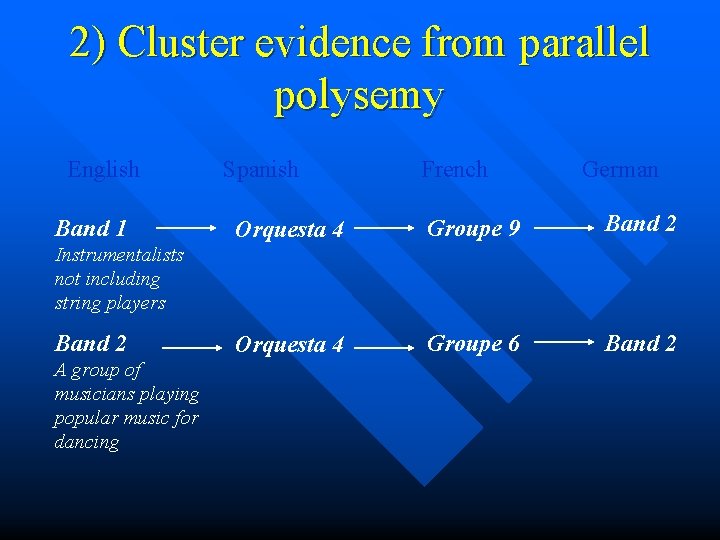

2) Cluster evidence from parallel polysemy

| English | Spanish | French | German |
| --- | --- | --- | --- |
| Band 1 | Orquesta 4 | Groupe 9 | Band 2 |
| Band 2 | Orquesta 4 | Groupe 6 | Band 2 |

The two English senses of band:

- Instrumentalists not including string players
- A group of musicians playing popular music for dancing

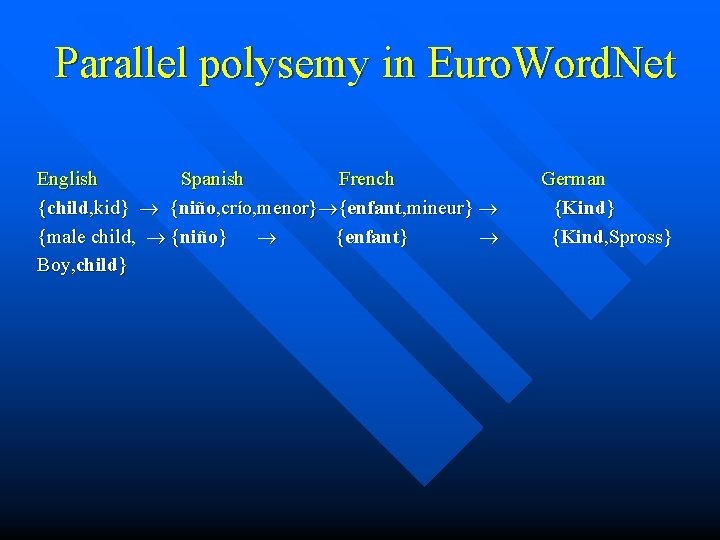

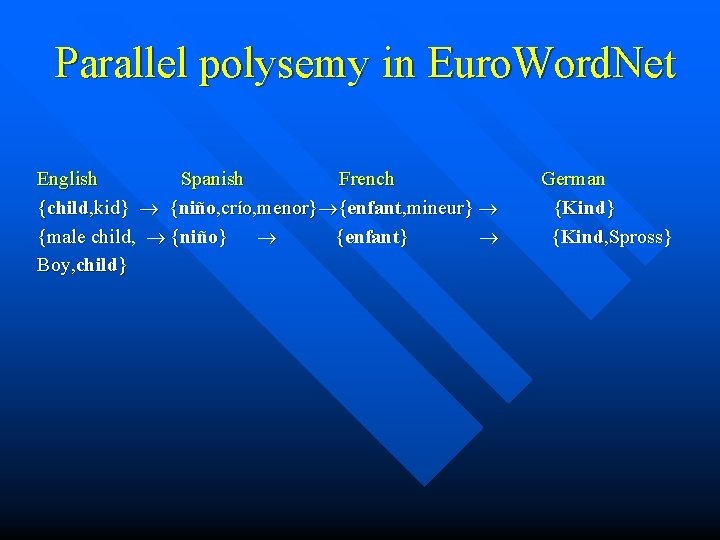

Parallel polysemy in EuroWordNet

| English | Spanish | French | German |
| --- | --- | --- | --- |
| {child, kid} | {niño, crío, menor} | {enfant, mineur} | {Kind} |
| {male child, boy, child} | {niño} | {enfant} | {Kind, Spross} |
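The parallel polysemy test on the child example can be sketched as below. The function name, the language codes, and the `MIN_LANGUAGES` threshold are assumptions made for illustration; the slide only shows that both senses of child share a word in Spanish, French, and German.

```python
def parallel_polysemy_languages(sense_a, sense_b):
    """Return the languages in which the two senses share at least one word.

    Each sense maps a language code to the set of words in its linked synset.
    """
    shared = []
    for lang in sorted(set(sense_a) & set(sense_b)):
        if sense_a[lang] & sense_b[lang]:  # non-empty intersection of synsets
            shared.append(lang)
    return shared


# Synsets taken from the slide; language codes are our own labels.
child_1 = {"es": {"niño", "crío", "menor"}, "fr": {"enfant", "mineur"}, "de": {"Kind"}}
child_2 = {"es": {"niño"}, "fr": {"enfant"}, "de": {"Kind", "Spross"}}

MIN_LANGUAGES = 2  # assumed threshold, not stated in the slides

langs = parallel_polysemy_languages(child_1, child_2)
print(langs, len(langs) >= MIN_LANGUAGES)
```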

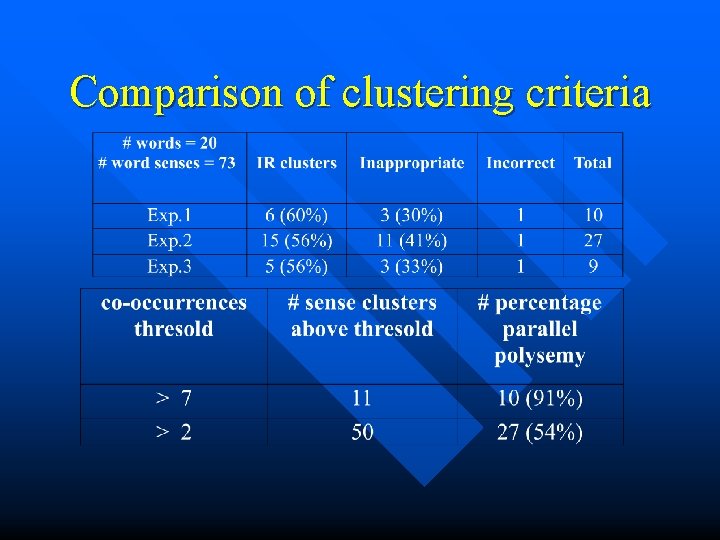

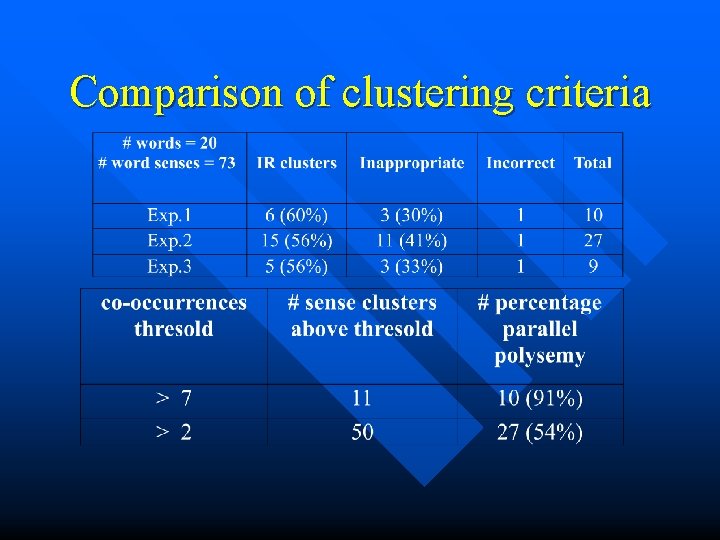

Comparison of clustering criteria

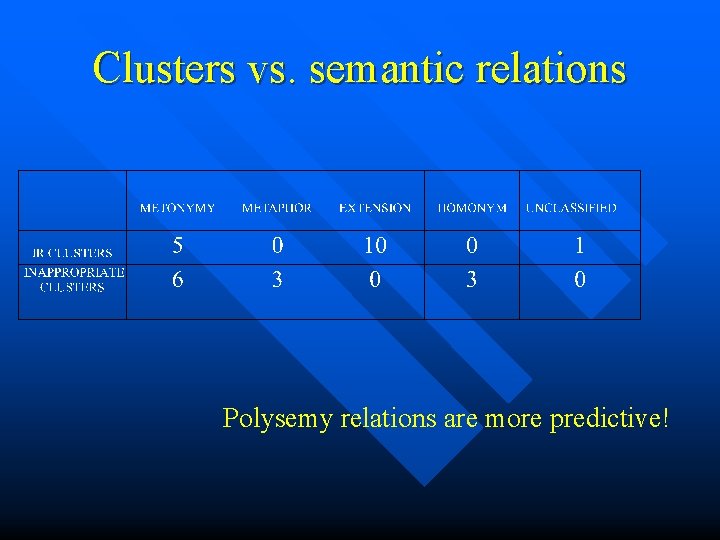

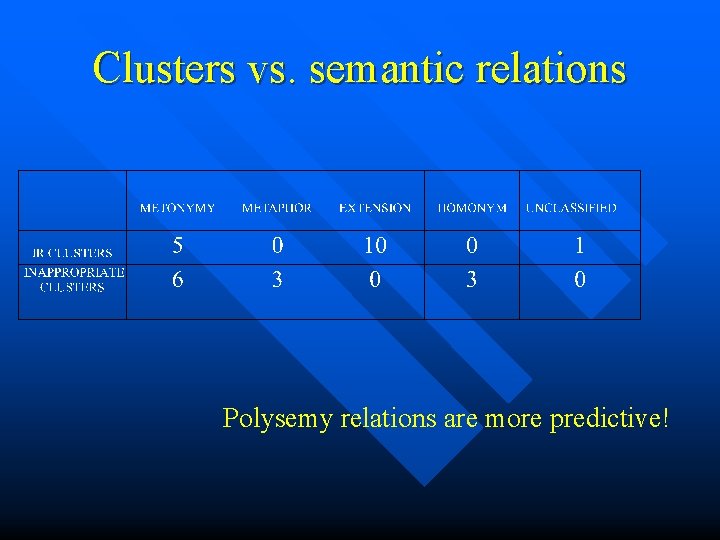

Clusters vs. semantic relations

Polysemy relations are more predictive!

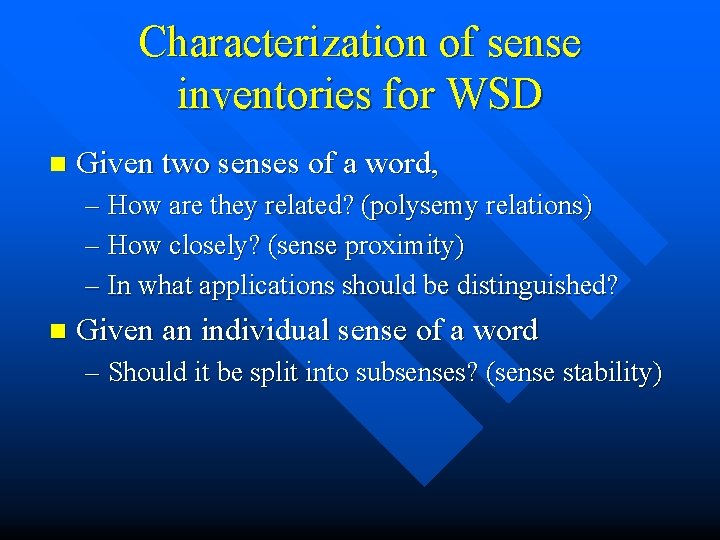

Characterization of sense inventories for WSD

- Given two senses of a word:
  - How are they related? (polysemy relations)
  - How closely? (sense proximity)
  - In which applications should they be distinguished?
- Given an individual sense of a word:
  - Should it be split into subsenses? (sense stability)

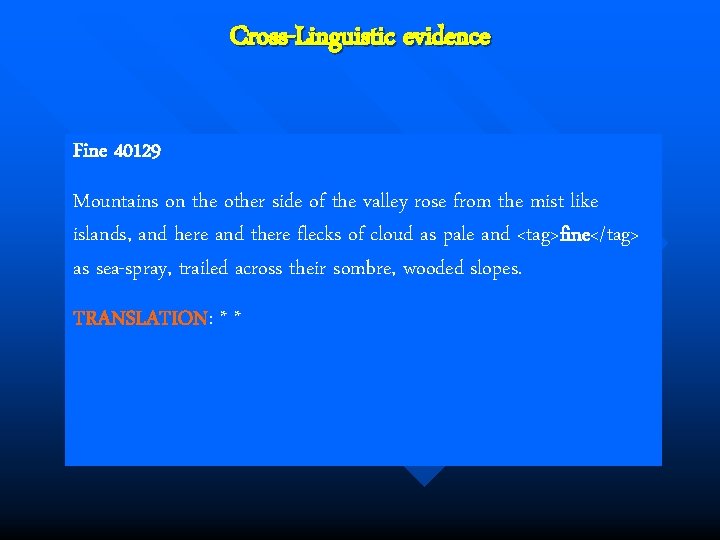

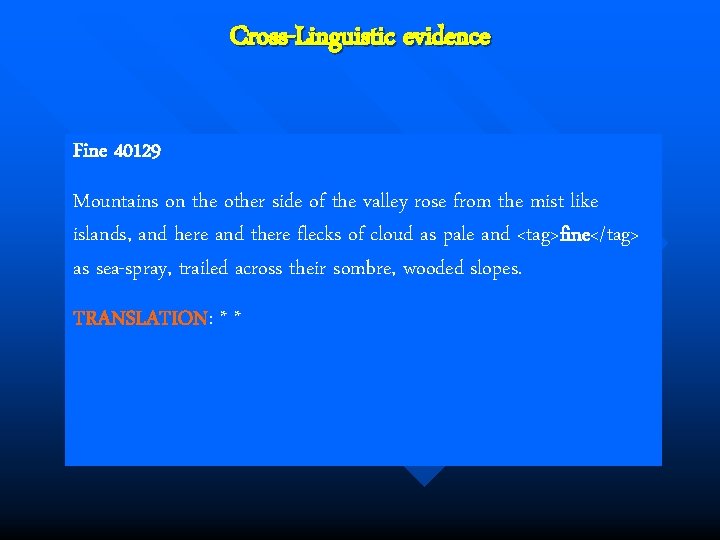

Cross-Linguistic evidence

Fine 40129: Mountains on the other side of the valley rose from the mist like islands, and here and there flecks of cloud as pale and <tag>fine</tag> as sea-spray, trailed across their sombre, wooded slopes.

TRANSLATION: * *

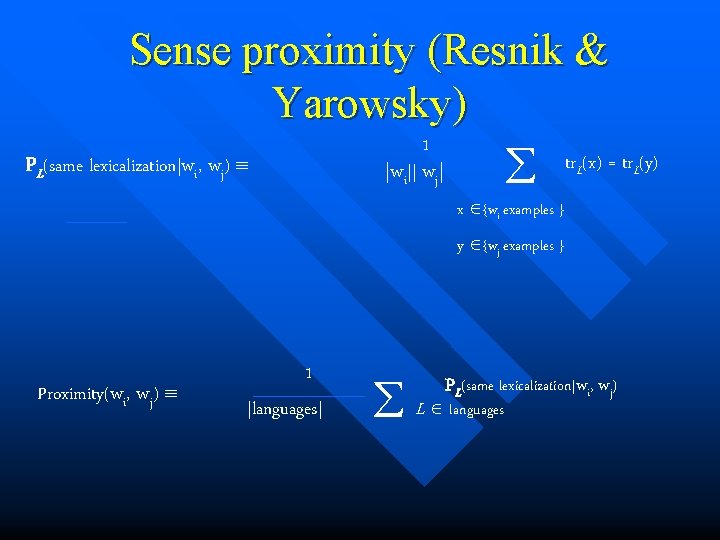

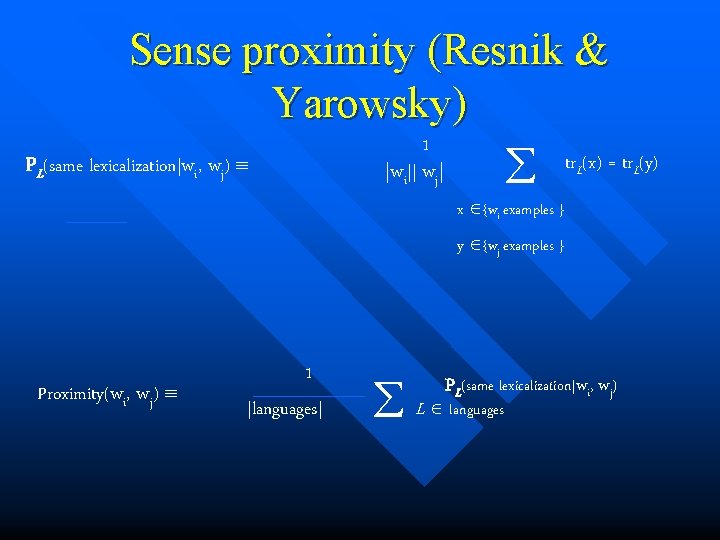

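Sense proximity (Resnik & Yarowsky)

$$
P_L(\text{same lexicalization} \mid w_i, w_j) = \frac{1}{|w_i|\,|w_j|} \sum_{x \in \{w_i\ \text{examples}\}} \; \sum_{y \in \{w_j\ \text{examples}\}} \big[\, tr_L(x) = tr_L(y) \,\big]
$$

$$
\text{Proximity}(w_i, w_j) = \frac{1}{|\text{languages}|} \sum_{L \in \text{languages}} P_L(\text{same lexicalization} \mid w_i, w_j)
$$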
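The proximity measure on this slide can be sketched in a few lines. This is an illustrative reading of the formula, not the authors' code: the function names, the language labels, and the translation tokens (`t1`, `t2`, ...) are all made up.

```python
def same_lexicalization_prob(trans_i, trans_j):
    """P_L: fraction of example pairs (x, y) translated with the same word.

    `trans_i` and `trans_j` list the translations, in one language, of the
    examples of senses w_i and w_j respectively.
    """
    pairs = [(x, y) for x in trans_i for y in trans_j]
    return sum(x == y for x, y in pairs) / len(pairs)


def proximity(examples_i, examples_j):
    """Average P_L over the languages available for both senses."""
    langs = sorted(set(examples_i) & set(examples_j))
    total = sum(same_lexicalization_prob(examples_i[lang], examples_j[lang])
                for lang in langs)
    return total / len(langs)


# Hypothetical translations of two examples per sense in two languages.
sense_i = {"lang_a": ["t1", "t1"], "lang_b": ["t3", "t3"]}
sense_j = {"lang_a": ["t1", "t2"], "lang_b": ["t4", "t4"]}

score = proximity(sense_i, sense_j)
print(score)
```

In this toy input half of the example pairs coincide in `lang_a` and none in `lang_b`, so the score is the average of 0.5 and 0.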

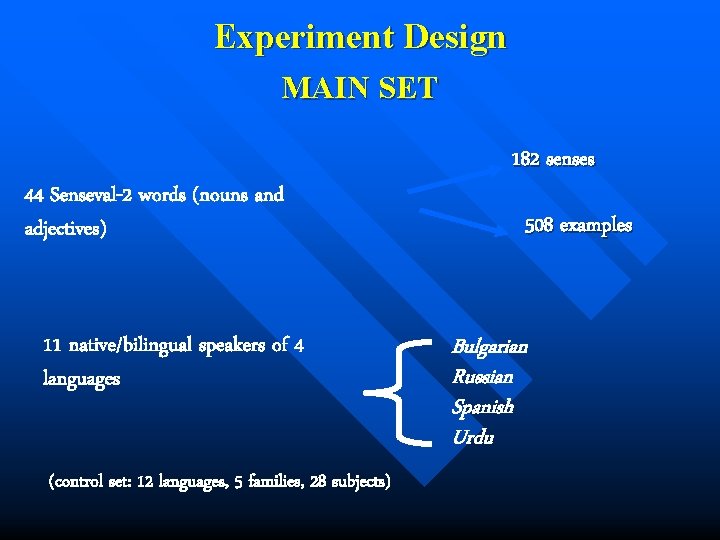

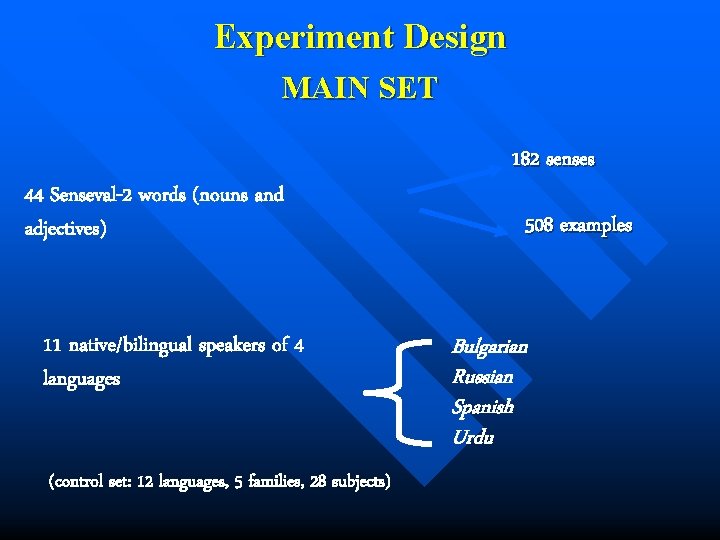

Experiment Design

Main set:

- 44 Senseval-2 words (nouns and adjectives)
- 182 senses
- 508 examples
- 11 native/bilingual speakers of 4 languages: Bulgarian, Russian, Spanish, Urdu (control set: 12 languages, 5 families, 28 subjects)

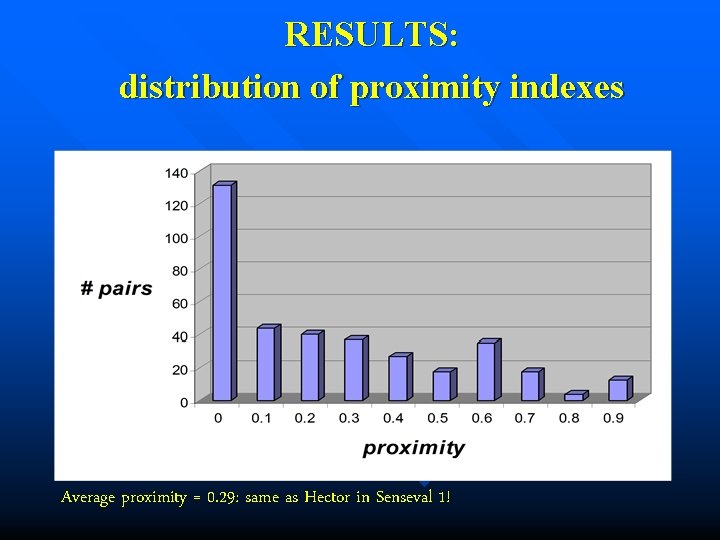

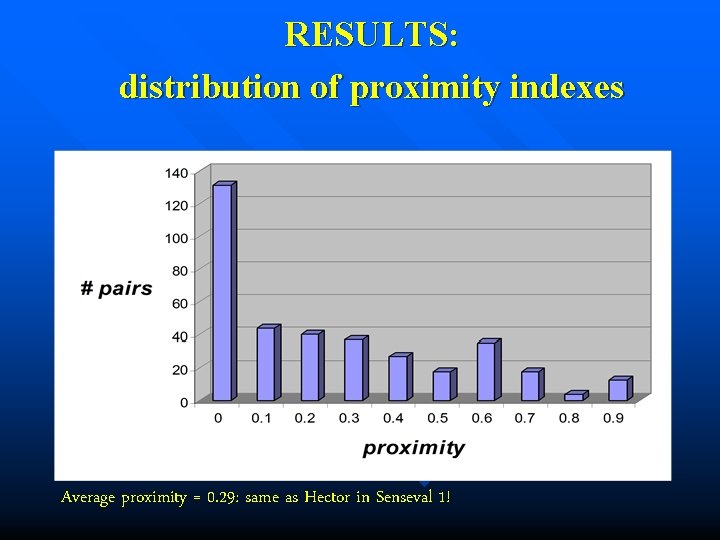

RESULTS: distribution of proximity indexes

Average proximity = 0.29: same as Hector in Senseval 1!

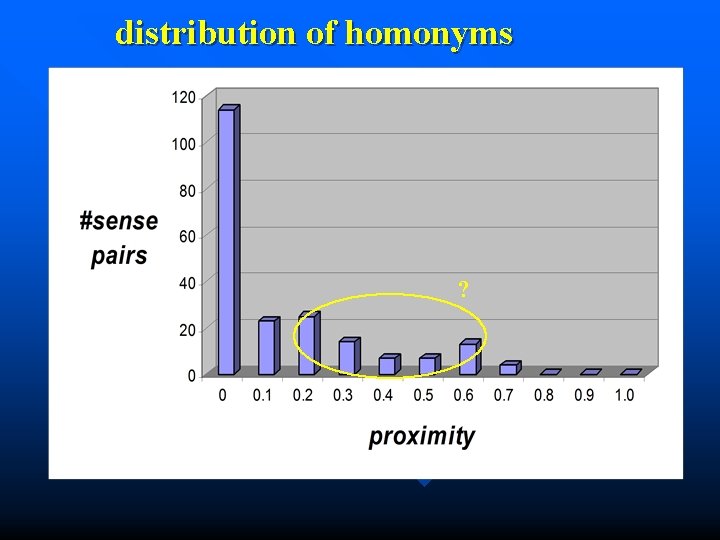

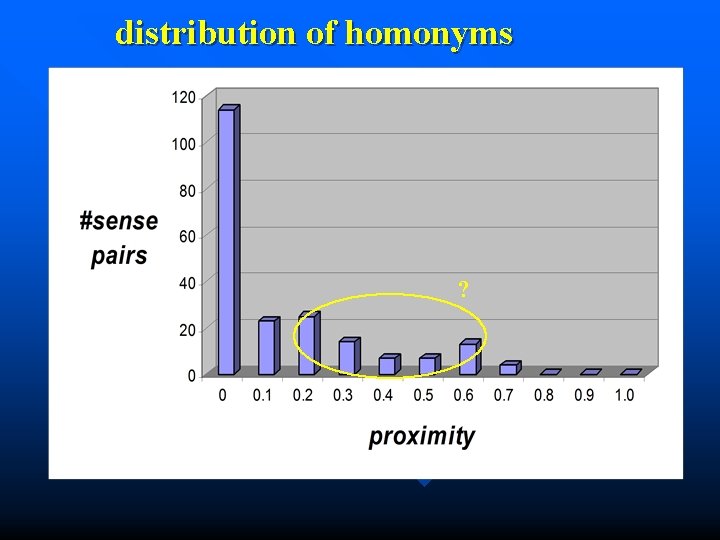

distribution of homonyms ?

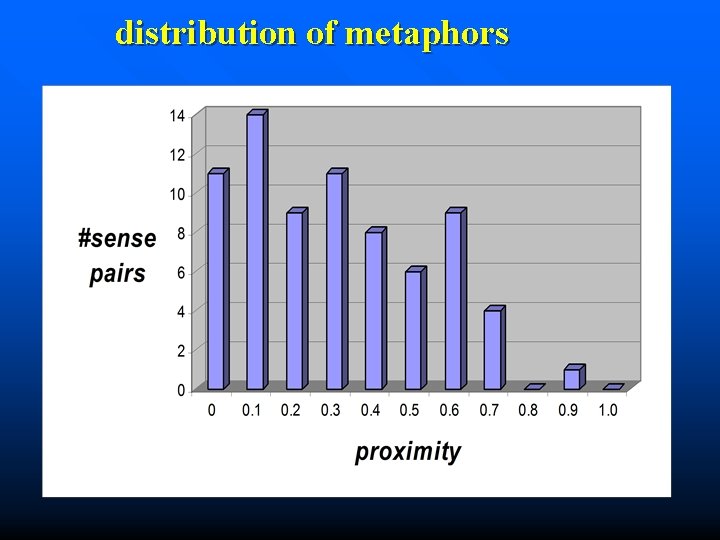

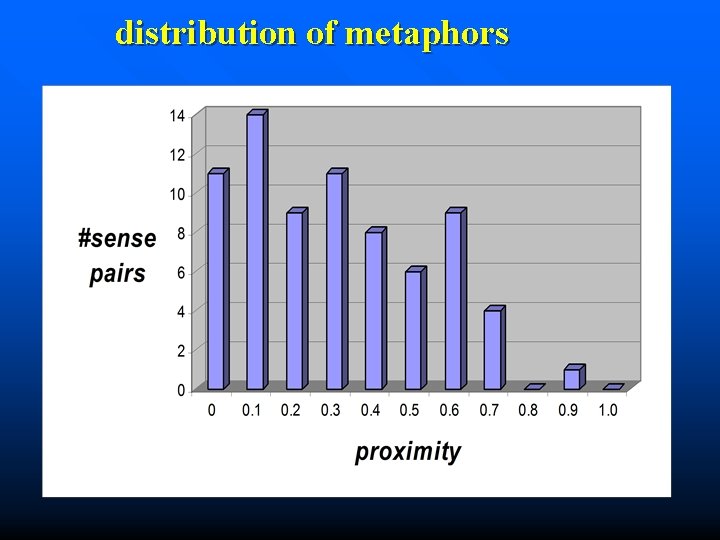

distribution of metaphors

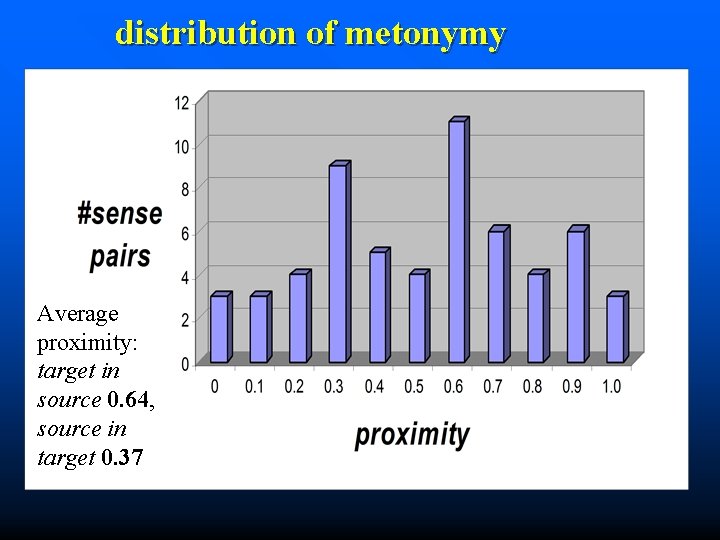

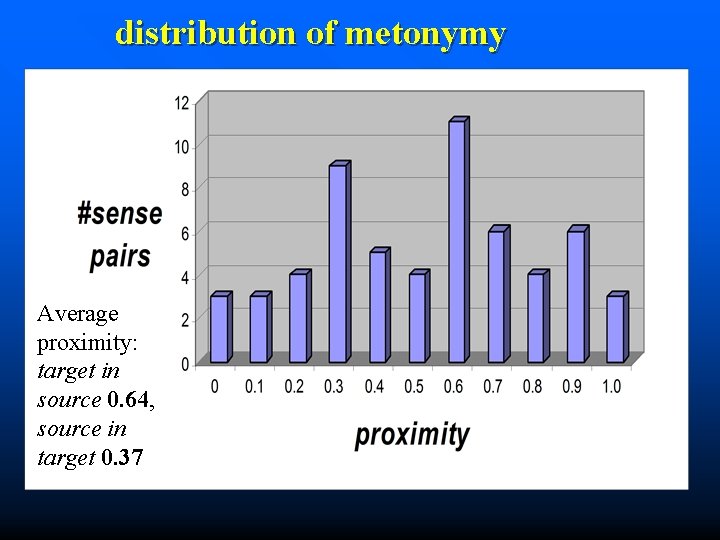

distribution of metonymy

Average proximity: target in source 0.64, source in target 0.37

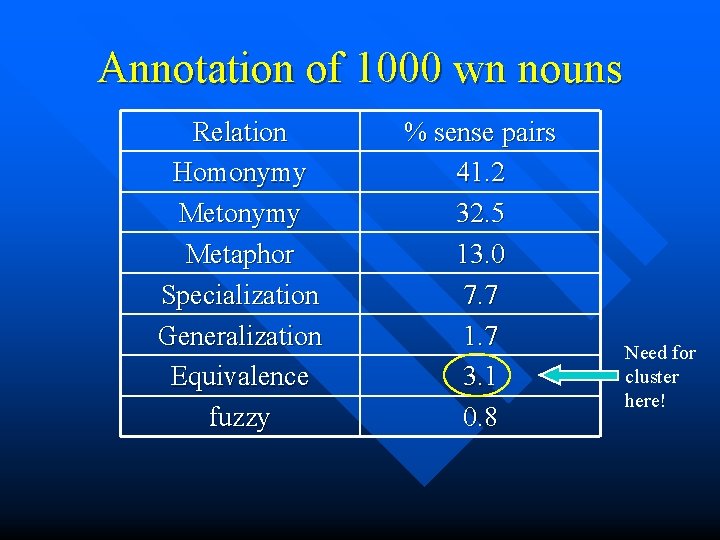

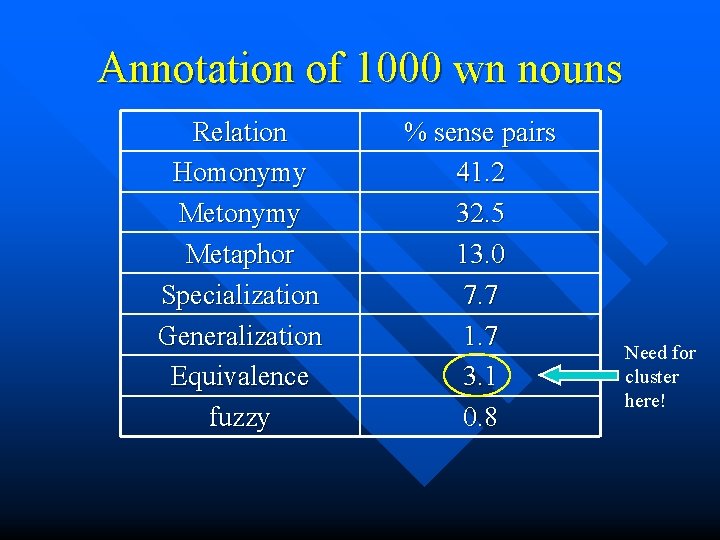

Annotation of 1000 WN nouns

| Relation | % sense pairs |
| --- | --- |
| Homonymy | 41.2 |
| Metonymy | 32.5 |
| Metaphor | 13.0 |
| Specialization | 7.7 |
| Generalization | 1.7 |
| Equivalence | 3.1 |
| fuzzy | 0.8 |

Need for cluster here!

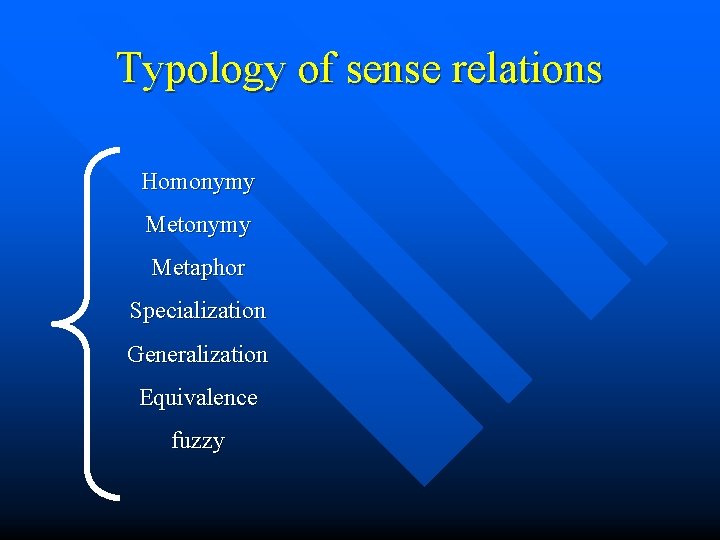

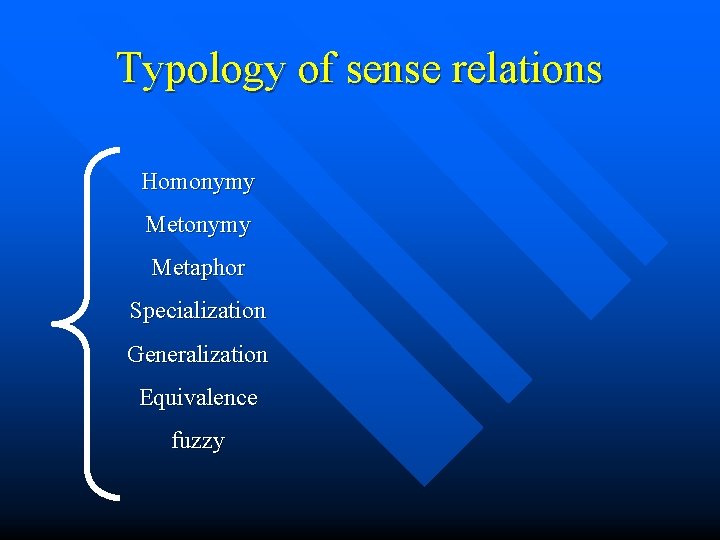

Typology of sense relations

- Homonymy
- Metonymy
- Metaphor
- Specialization
- Generalization
- Equivalence
- fuzzy

Typology of sense relations: metonymy

Relations: Homonymy, Metonymy, Metaphor, Specialization, Generalization, Equivalence, fuzzy

Metonymy subtypes:

- Target in source: Animal-meat, Animal-fur, Object-color, Plant-fruit, People-language, Action-duration, Tree-wood, Recipient-quantity...
- Source in target: Action-object, Action-result, Shape-object, Plant-food/beverage, Material-product...
- Co-metonymy: Substance-agent

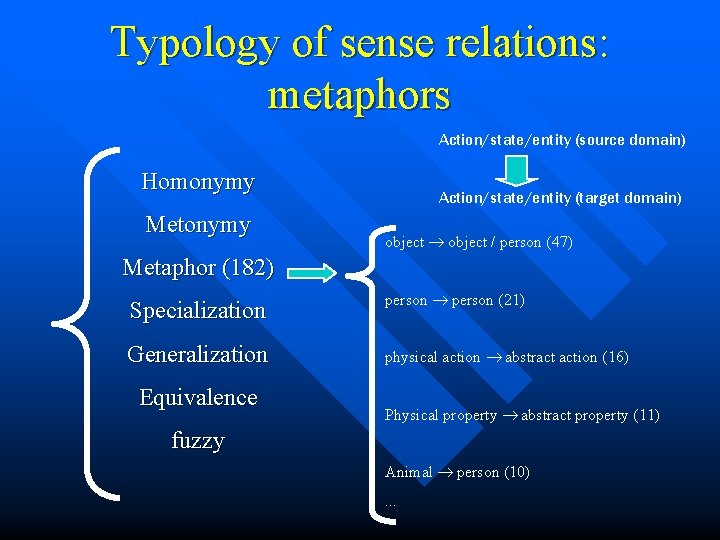

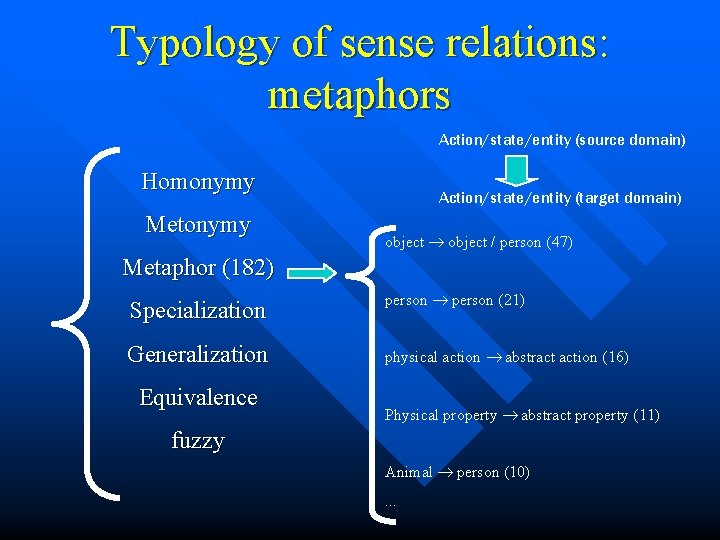

Typology of sense relations: metaphors

Relations: Homonymy, Metonymy, Metaphor (182), Specialization, Generalization, Equivalence, fuzzy

Metaphor: action/state/entity (source domain) → action/state/entity (target domain)

- object → person (47)
- person → person (21)
- physical action → abstract action (16)
- physical property → abstract property (11)
- animal → person (10)...

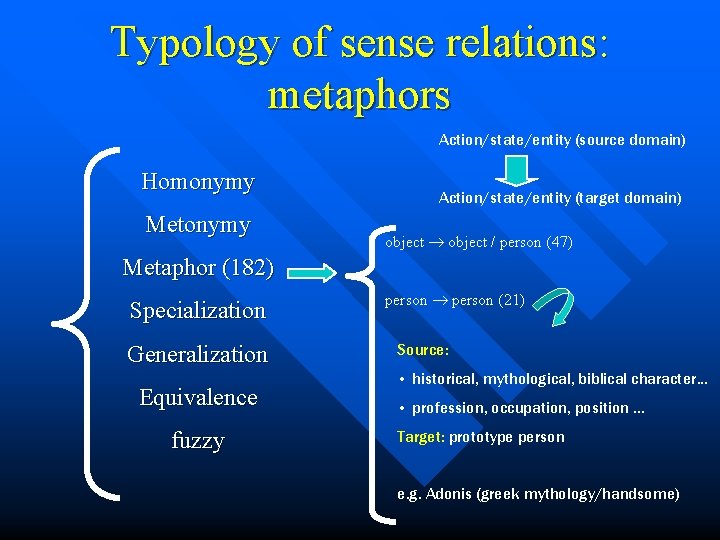

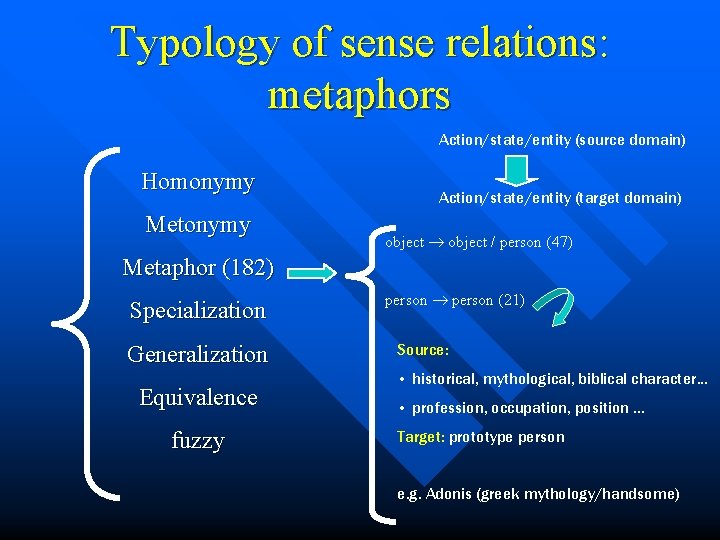

Typology of sense relations: metaphors

Relations: Homonymy, Metonymy, Metaphor (182), Specialization, Generalization, Equivalence, fuzzy

Metaphor: action/state/entity (source domain) → action/state/entity (target domain)

- object → person (47)
- person → person (21)
  - Source: historical, mythological, biblical character...; profession, occupation, position...
  - Target: prototype person, e.g. Adonis (Greek mythology / handsome)

Conclusions

Let’s annotate semantic relations between WN word senses!
