SDS Testing / Verification @ the CERES Delta Design Review

- Slides: 19

SDS Testing / Verification @ the CERES Delta Design Review

Victor Buczkowski

Agenda

- Progress & Schedule
- Testing Process
- Progress Accounting & Reporting
- Requirements Verification Progress
- Score Card

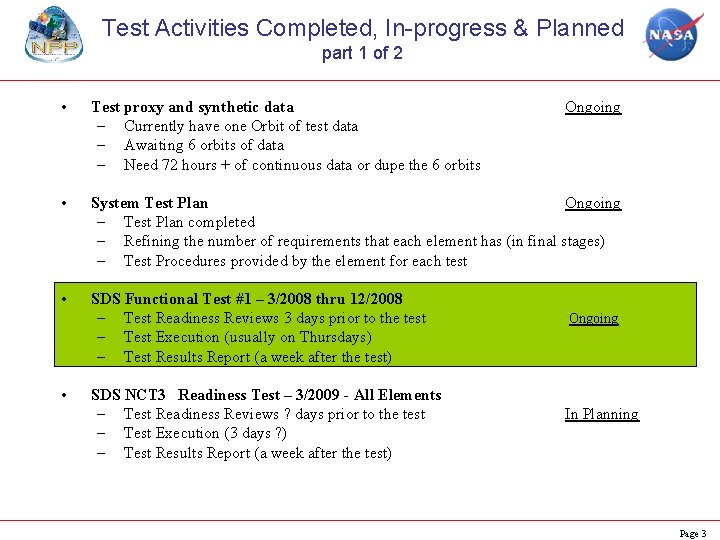

Test Activities Completed, In-progress & Planned (part 1 of 2)

- Test proxy and synthetic data
  - Currently have one orbit of test data
  - Awaiting 6 orbits of data
  - Need 72+ hours of continuous data, or duplicate the 6 orbits
- System Test Plan (Ongoing)
  - Test Plan completed
  - Refining the number of requirements allocated to each element (in final stages)
  - Test procedures provided by the element for each test
- SDS Functional Test #1: 3/2008 through 12/2008 (Ongoing)
  - Test Readiness Reviews 3 days prior to the test
  - Test Execution (usually on Thursdays)
  - Test Results Report (a week after the test)
- SDS NCT 3 Readiness Test: 3/2009, all elements (In Planning)
  - Test Readiness Reviews ? days prior to the test
  - Test Execution (3 days?)
  - Test Results Report (a week after the test)
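You can size the "duplicate the 6 orbits" option by counting how many back-to-back copies of the 6-orbit set it takes to reach 72 hours. The sketch below is a minimal calculation. It assumes a nominal NPP orbital period of about 101 minutes, which this review does not state. The function names `copies_needed` and `coverage_hours` are hypothetical.

```python
import math

# Assumed nominal NPP orbital period in minutes (not a figure from this review).
ORBIT_PERIOD_MIN = 101.0


def copies_needed(target_hours: float, orbits_per_set: int,
                  period_min: float = ORBIT_PERIOD_MIN) -> int:
    """Number of back-to-back copies of an orbit set needed to span target_hours."""
    set_minutes = orbits_per_set * period_min
    return math.ceil(target_hours * 60.0 / set_minutes)


def coverage_hours(copies: int, orbits_per_set: int,
                   period_min: float = ORBIT_PERIOD_MIN) -> float:
    """Hours of continuous coverage provided by the duplicated orbit set."""
    return copies * orbits_per_set * period_min / 60.0


if __name__ == "__main__":
    n = copies_needed(72, 6)
    print(n, round(coverage_hours(n, 6), 1))  # prints: 8 80.8
```

Under that assumed period, 8 copies of the 6-orbit set (48 orbits, about 80.8 hours) cover the 72-hour need. Starting from the single orbit in hand, `copies_needed(72, 1)` gives 43.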

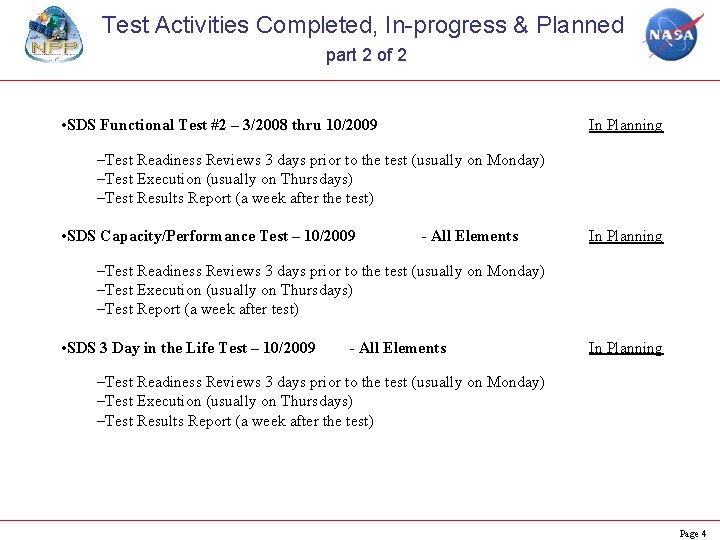

Test Activities Completed, In-progress & Planned (part 2 of 2)

- SDS Functional Test #2: 3/2008 through 10/2009 (In Planning)
  - Test Readiness Reviews 3 days prior to the test (usually on Monday)
  - Test Execution (usually on Thursdays)
  - Test Results Report (a week after the test)
- SDS Capacity/Performance Test: 10/2009, all elements (In Planning)
  - Test Readiness Reviews 3 days prior to the test (usually on Monday)
  - Test Execution (usually on Thursdays)
  - Test Report (a week after the test)
- SDS 3-Day-in-the-Life Test: 10/2009, all elements (In Planning)
  - Test Readiness Reviews 3 days prior to the test (usually on Monday)
  - Test Execution (usually on Thursdays)
  - Test Results Report (a week after the test)

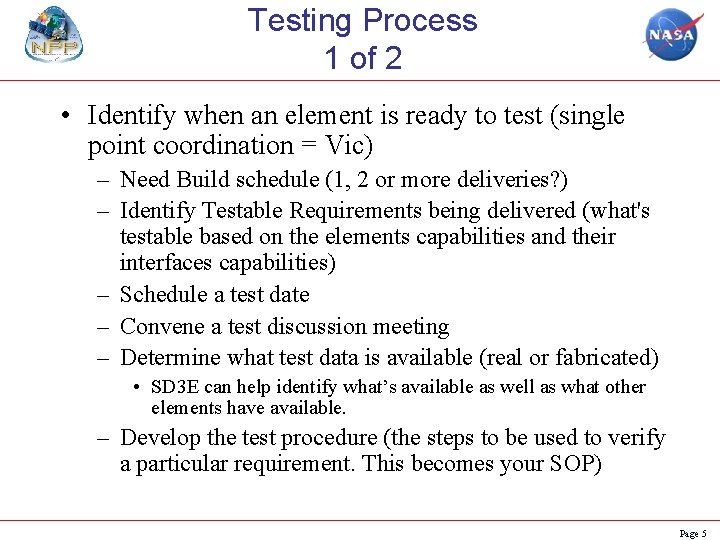

Testing Process (1 of 2)

- Identify when an element is ready to test (single point of coordination: Vic)
  - Need the build schedule (1, 2 or more deliveries?)
  - Identify the testable requirements being delivered (what is testable given the element's capabilities and those of its interfaces)
  - Schedule a test date
  - Convene a test discussion meeting
  - Determine what test data is available (real or fabricated)
    - SD3E can help identify what is available, as well as what other elements have available.
  - Develop the test procedure (the steps used to verify a particular requirement; this becomes your SOP)

Testing Process (2 of 2)

- Conduct the Test Readiness Review (TRR), usually three days prior to the test, to ensure everything is ready
- Execute the test
- Provide the test results/report
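The two Testing Process slides describe an ordered sequence, from confirming readiness through reporting results. The sketch below encodes that order so a step cannot be skipped. It is a minimal illustration only: the step names paraphrase the slides, and the `ElementTest` class is hypothetical, not an SDS tool.

```python
from enum import IntEnum
from typing import List, Optional


class Step(IntEnum):
    """SDS testing-process steps, numbered in the order the slides list them."""
    CONFIRM_READINESS = 1      # build schedule and testable requirements known
    SCHEDULE_TEST_DATE = 2
    TEST_DISCUSSION_MEETING = 3
    IDENTIFY_TEST_DATA = 4     # real or fabricated data
    DEVELOP_PROCEDURE = 5      # the procedure becomes the SOP
    TEST_READINESS_REVIEW = 6  # usually three days before the test
    EXECUTE_TEST = 7
    REPORT_RESULTS = 8


class ElementTest:
    """Tracks one element's test through the steps and rejects skipped steps."""

    def __init__(self, element: str) -> None:
        self.element = element
        self.completed: List[Step] = []

    def next_step(self) -> Optional[Step]:
        """Next step to perform, or None once the results have been reported."""
        done = len(self.completed)
        return Step(done + 1) if done < len(Step) else None

    def complete(self, step: Step) -> None:
        """Mark a step done; raise ValueError if it is not the next one."""
        expected = self.next_step()
        if step != expected:
            raise ValueError(f"{self.element}: expected {expected!r}, got {step!r}")
        self.completed.append(step)
```

Because the check is a strict sequence, attempting `EXECUTE_TEST` before `TEST_READINESS_REVIEW` raises an error rather than being silently accepted.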

SDS Functional Test #1 Schedule & Progress (1 of 2)

SDS Functional Test #1 Schedule & Progress (2 of 2)

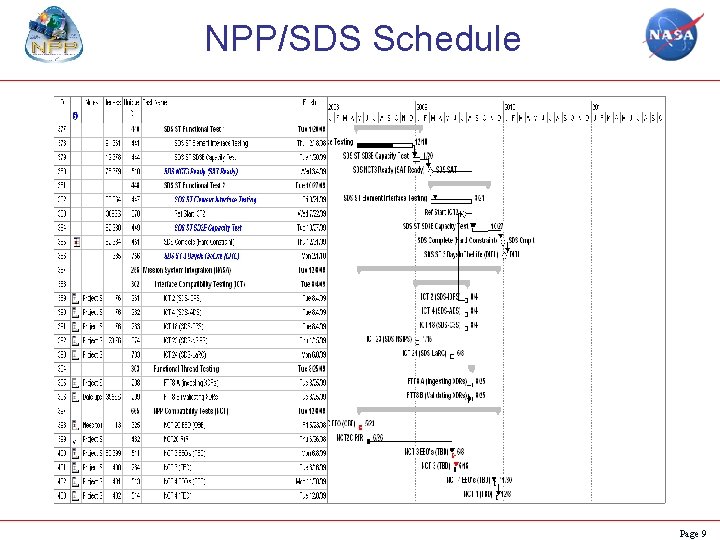

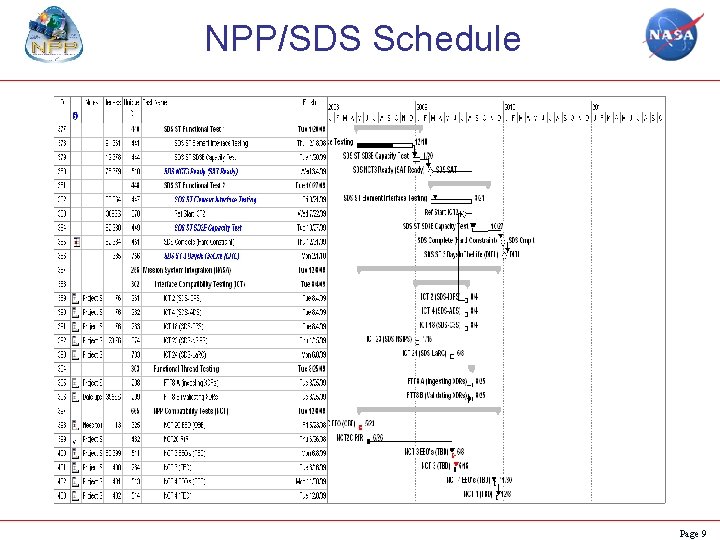

NPP/SDS Schedule

Accounting Process (1 of 2): Test Accounting

- Conduct Test Readiness Reviews (TRR)
  - Identify what is being tested
- Schedule, coordinate and execute the tests
- Collect, review and maintain the test report(s)
  - Which requirements were verified, and how
  - Pass, Fail or Partial status assessment
  - Issues and discrepancies must be identified, tracked and retested as necessary
  - These materials are maintained by the SDS Test Coordinator
- The test verification matrix and a score card will be kept up to date
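The accounting bullets call for a record of which requirements were verified, how, and with what result, plus retesting where needed. A test verification matrix can hold all of this as a per-requirement test history. This is a minimal sketch under stated assumptions: the class names and requirement IDs are hypothetical, and only the Pass / Fail / Partial results come from the slide.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class RunRecord:
    """One run of one requirement in one test."""
    test_name: str  # e.g. "SDS Functional Test #1"
    method: str     # how the requirement was verified
    result: str     # "Pass", "Fail" or "Partial"


class VerificationMatrix:
    """Requirement ID -> history of test runs, newest last."""

    RESULTS = {"Pass", "Fail", "Partial"}

    def __init__(self) -> None:
        self.history: Dict[str, List[RunRecord]] = defaultdict(list)

    def record(self, req_id: str, run: RunRecord) -> None:
        """Append a run, rejecting result strings outside Pass/Fail/Partial."""
        if run.result not in self.RESULTS:
            raise ValueError(f"{req_id}: unknown result {run.result!r}")
        self.history[req_id].append(run)

    def latest(self, req_id: str) -> Optional[RunRecord]:
        """Most recent run for a requirement, or None if it was never tested."""
        runs = self.history.get(req_id)
        return runs[-1] if runs else None

    def needs_retest(self) -> List[str]:
        """Requirements whose most recent run did not pass."""
        return sorted(req for req, runs in self.history.items()
                      if runs[-1].result != "Pass")
```

Keeping the full history, not just the latest result, preserves how each requirement was eventually verified, including any failed attempts before the retest.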

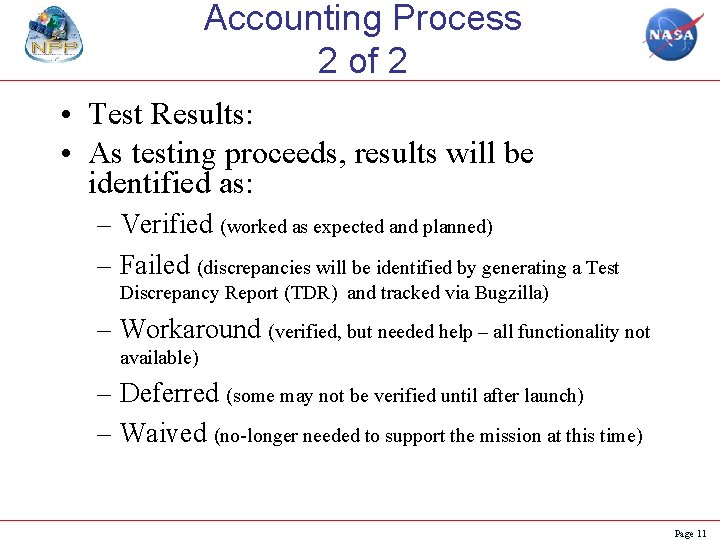

Accounting Process (2 of 2)

- Test Results: as testing proceeds, results will be identified as:
  - Verified (worked as expected and planned)
  - Failed (discrepancies are documented in a Test Discrepancy Report (TDR) and tracked via Bugzilla)
  - Workaround (verified, but needed help; not all functionality available)
  - Deferred (some may not be verified until after launch)
  - Waived (no longer needed to support the mission at this time)
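The five result categories, together with the rule that a failure produces a TDR, can be captured in a small enumeration with one validation check. This is a sketch only, and the names `Verification` and `record_result` are hypothetical. The TDR reference is a plain string standing in for the Bugzilla entry; the code does not interact with Bugzilla.

```python
from enum import Enum
from typing import Dict, Optional


class Verification(Enum):
    """Result categories used as SDS testing proceeds."""
    VERIFIED = "Verified"      # worked as expected and planned
    FAILED = "Failed"          # discrepancy documented in a TDR
    WORKAROUND = "Workaround"  # verified, but not all functionality available
    DEFERRED = "Deferred"      # may not be verified until after launch
    WAIVED = "Waived"          # no longer needed to support the mission


def record_result(req_id: str, status: Verification,
                  tdr_id: Optional[str] = None) -> Dict[str, Optional[str]]:
    """Build one verification-matrix entry; a Failed result must cite a TDR."""
    if status is Verification.FAILED and not tdr_id:
        raise ValueError(f"{req_id}: a Failed result needs a TDR reference")
    return {"requirement": req_id, "status": status.value, "tdr": tdr_id}
```

Rejecting a Failed entry without a TDR reference keeps every failure traceable to a tracked discrepancy.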

Reporting (page 1 of 2): Sample Procedure

Reporting (page 2 of 2): Sample Procedure

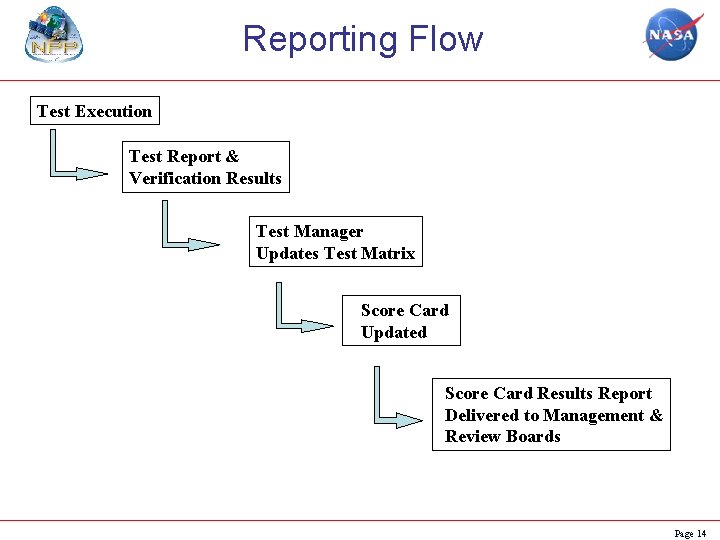

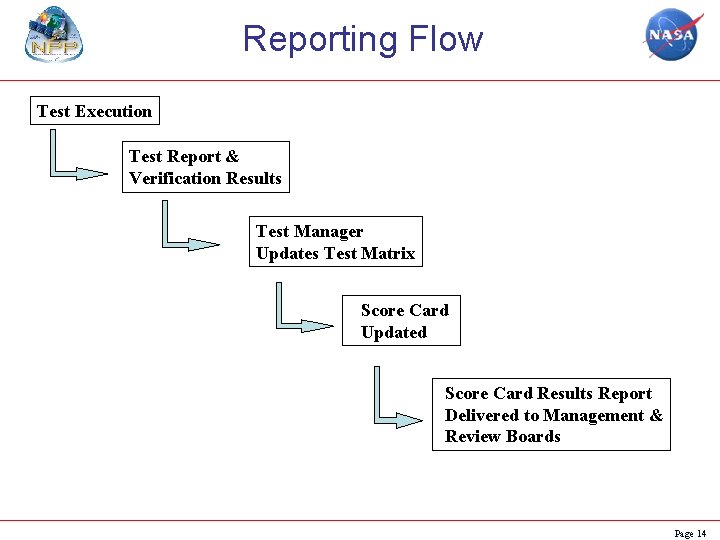

Reporting Flow

Test Execution → Test Report & Verification Results → Test Manager Updates Test Matrix → Score Card Updated → Score Card Results Report Delivered to Management & Review Boards
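At its core, the reporting flow rolls each requirement's latest result into the score-card columns. This is a minimal sketch. It assumes the test matrix is a plain mapping from requirement ID to result string, with None for requirements not yet tested; that layout is hypothetical and not the SDS Test Coordinator's actual tooling. The column letters follow the score card, which reports Deferred and Workaround together under (D).

```python
from collections import Counter
from typing import Dict, Optional

# Score-card column for each result; Deferred and Workaround share column (D).
COLUMN = {
    "Verified": "V",
    "Failed": "F",
    "Deferred": "D",
    "Workaround": "D",
    "Waived": "W",
}


def score_card(matrix: Dict[str, Optional[str]]) -> Dict[str, float]:
    """Roll {requirement ID: latest result or None} up into score-card counts.

    Untested requirements (None) count toward the total (T) only.
    An unrecognised result string raises KeyError.
    """
    counts = Counter(COLUMN[s] for s in matrix.values() if s is not None)
    card: Dict[str, float] = {c: counts[c] for c in ("F", "V", "D", "W")}
    card["T"] = len(matrix)
    card["%"] = round(100.0 * card["V"] / card["T"], 1) if card["T"] else 0.0
    return card
```

Untested requirements stay in the mapping, so (T) is the full requirement count rather than the number tested so far. The percent verified therefore reflects overall progress.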

Verification Progress as of 8/12/2008 (1 of 3)

Green = Passed

Verification Progress as of 8/12/2008 (2 of 3)

Green = Passed

Verification Progress as of 8/12/2008 (3 of 3)

- The previous two slides show:
  - The total number of requirements for each element
  - The Level 3 requirement ID numbers
  - The requirements verified to date, highlighted in green
  - Other colors, not yet used: Red = Failed, Blue = Deferred, Peach = Waived

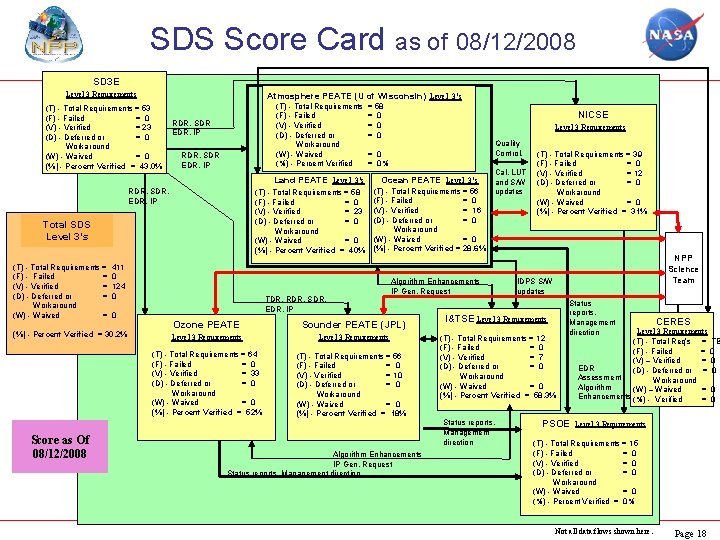

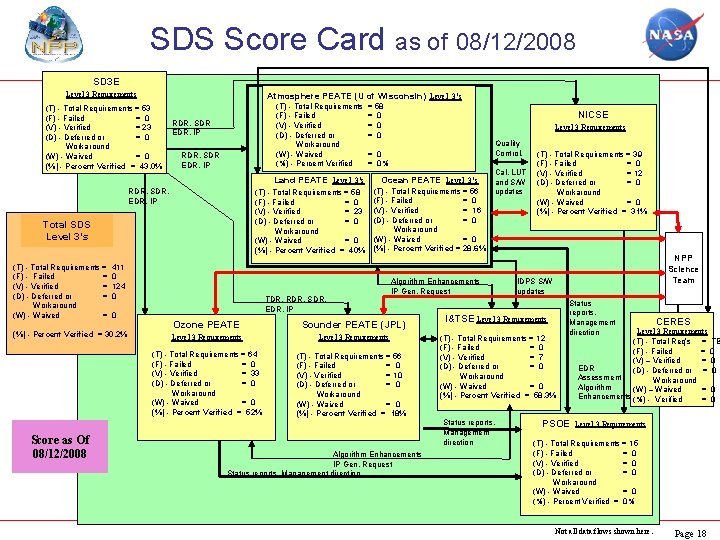

SDS Score Card as of 08/12/2008 (Level 3 requirements by element)

Figure: SDS data-flow diagram with one score-card box per element (SD3E, Atmosphere PEATE (U of Wisconsin), Land PEATE, Ocean PEATE, Ozone PEATE, Sounder PEATE (JPL), NICSE, I&TSE, PSOE, CERES) and the NPP Science Team. The flows shown include RDR, SDR, EDR, IP, TDR, Cal. LUT and S/W updates, IDPS S/W updates, Algorithm Enhancements, IP Gen. Request, EDR Assessment, Quality Control, and Status reports / Management direction. Not all data flows are shown.

| Element | (T) Total | (F) Failed | (V) Verified | (D) Deferred or Workaround | (W) Waived | (%) Percent Verified |
|---|---|---|---|---|---|---|
| Atmosphere PEATE (U of Wisconsin) | 53 | 0 | 23 | 0 | 0 | 43.4% |
| Land PEATE | 58 | 0 | 23 | 0 | 0 | 40% |
| NICSE | 58 | 0 | 0 | 0 | 0 | 0% |
| Ocean PEATE | 56 | 0 | 16 | 0 | 0 | 28.6% |
| I&TSE | 12 | 0 | 7 | 0 | 0 | 58.3% |
| Total SDS | 411 | 0 | 124 | 0 | 0 | 30.2% |

The SD3E, Ozone PEATE, Sounder PEATE (JPL) and PSOE boxes carry the remaining four score cards, each with F = 0, D = 0 and W = 0:

- T = 64, V = 33 (52%)
- T = 56, V = 10 (18%)
- T = 39, V = 12 (31%)
- T = 15, V = 0 (0%)

The CERES box has no values yet.
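Every element reports its counts in the same columns. The Total SDS line can therefore be cross-checked by summing the element boxes and recomputing (%) = 100 × V / T. The sketch below does this with the figures from the score card. CERES is omitted because its box has no values, and the four unlabeled boxes are listed without element names. The helper names are hypothetical.

```python
from typing import List, Optional, Tuple

# (element, T, V) from the 08/12/2008 score card; F, D and W are 0 throughout.
# The four unnamed rows are the SD3E, Ozone PEATE, Sounder PEATE (JPL) and
# PSOE boxes, whose figures are not tied to individual element names here.
ROWS: List[Tuple[Optional[str], int, int]] = [
    ("Atmosphere PEATE", 53, 23),
    ("Land PEATE", 58, 23),
    ("NICSE", 58, 0),
    ("Ocean PEATE", 56, 16),
    ("I&TSE", 12, 7),
    (None, 64, 33),
    (None, 56, 10),
    (None, 39, 12),
    (None, 15, 0),
]


def percent_verified(total: int, verified: int) -> float:
    """(%) column: verified requirements as a percentage of the total."""
    return 100.0 * verified / total if total else 0.0


def sds_totals(rows) -> Tuple[int, int, float]:
    """Sum element rows into Total SDS figures (T, V, % to one decimal)."""
    total = sum(t for _, t, _ in rows)
    verified = sum(v for _, _, v in rows)
    return total, verified, round(percent_verified(total, verified), 1)


if __name__ == "__main__":
    print(sds_totals(ROWS))  # prints: (411, 124, 30.2)
    for name, t, v in ROWS:
        print(name or "(unnamed box)", f"{percent_verified(t, v):.1f}%")
```

Summing the nine populated boxes reproduces the published total of 411 requirements, 124 of them verified (30.2%). For Atmosphere PEATE, 23 of 53 works out to 43.4%.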

SDS Score Card (page 2 of 2)

- The previous slide reports Level 3 progress by element and for the SDS as a whole.
- Requirement status is scored as:
  - Verified (worked as expected and planned)
  - Failed (discrepancies will be identified and tracked using Bugzilla)
  - Workaround (verified, but needed help; not all functionality available)
  - Deferred (some may not be verified until after launch)
  - Waived (no longer needed to support the mission at this time)