
Research in Edge Computing
PI: Dinesh Manocha; Co-PI: Ming C. Lin (UNC Chapel Hill)
PM: Larry Skelly (DTO)
The UNIVERSITY of NORTH CAROLINA at CHAPEL HILL

DTO Contract Period: October 1, 2006 – September 30, 2007. Administered through RDECOM.

Goals
- Comparison of Cell vs. GPU features for Edge Computing
- Proceedings of the IEEE: special issue on Edge Computing
- GPU-based algorithms for numerical computations and data mining


Cell Processor
- Cell-based products are increasingly visible:
  - PlayStation 3
  - Dual-Cell blades for IBM BladeCenter systems
  - Accelerator cards
  - Cell clusters for supercomputing, e.g., LANL Roadrunner
- Very high-speed computation is possible when the Cell is programmed carefully

Cell Processor: Overview
- Cell Architecture
  - PowerPC Element
  - Synergistic Processing Elements
  - Interconnect and Memory Access
- Cell Programming Techniques
  - SIMD operations
  - Data access and storage use
  - Application Partitioning
  - Compilers and Tools

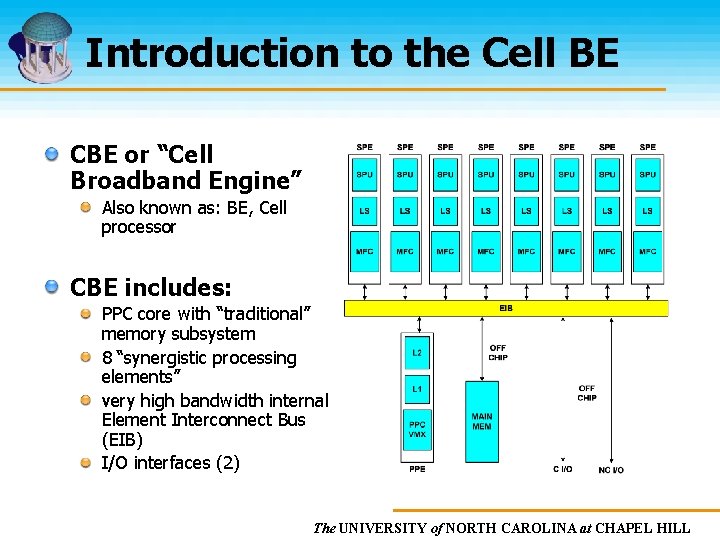

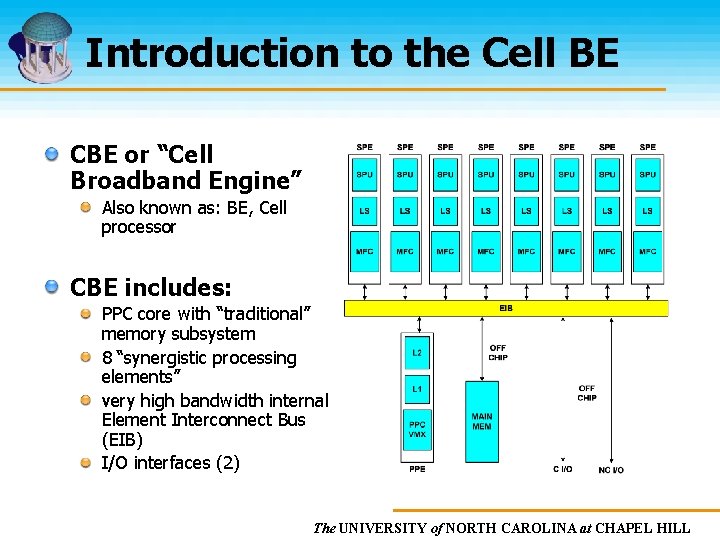

Introduction to the Cell BE
- CBE, or “Cell Broadband Engine”; also known as the BE or the Cell processor
- The CBE includes:
  - a PPC core with a “traditional” memory subsystem
  - 8 “synergistic processing elements” (SPEs)
  - a very high-bandwidth internal Element Interconnect Bus (EIB)
  - two I/O interfaces

The PowerPC Element
- “PPE”: 64-bit PowerPC core with VMX
  - standard 64-bit PowerPC architecture
- Nominally intended for:
  - control functions
  - the OS and management of system resources (including SPE “threads”)
  - running legacy software with good performance on control code
- Standard hierarchical memory: L1 and L2 on chip
- Simple microarchitecture: dual-thread, in-order, dual-issue
- VMX (AltiVec): 128-bit SIMD vector registers and operations

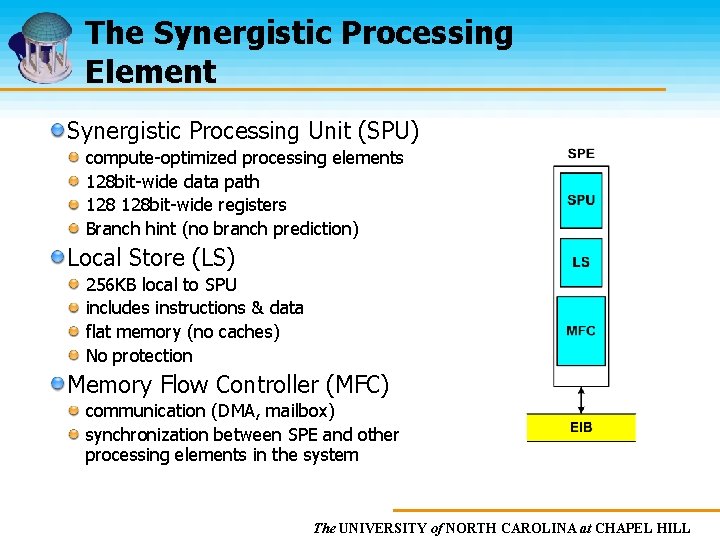

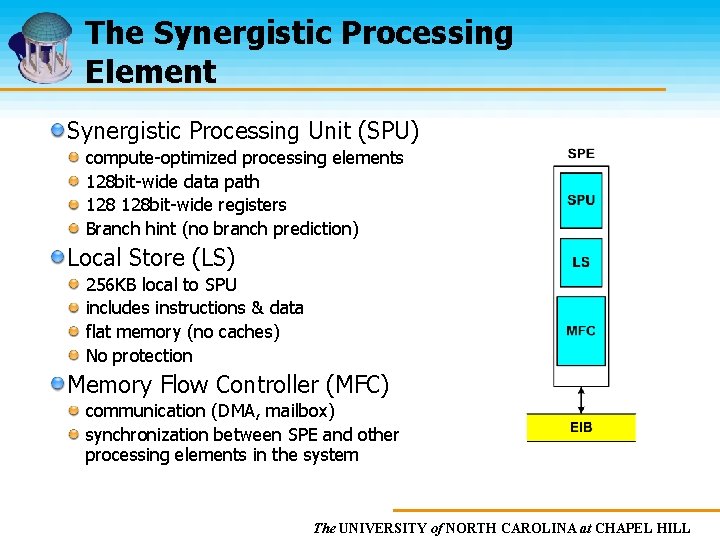

The Synergistic Processing Element
- Synergistic Processing Unit (SPU)
  - compute-optimized processing element
  - 128-bit-wide data path
  - 128-bit-wide registers
  - branch hints (no branch prediction)
- Local Store (LS)
  - 256 KB, local to the SPU
  - holds both instructions and data
  - flat memory (no caches)
  - no memory protection
- Memory Flow Controller (MFC)
  - communication (DMA, mailboxes)
  - synchronization between the SPE and other processing elements in the system

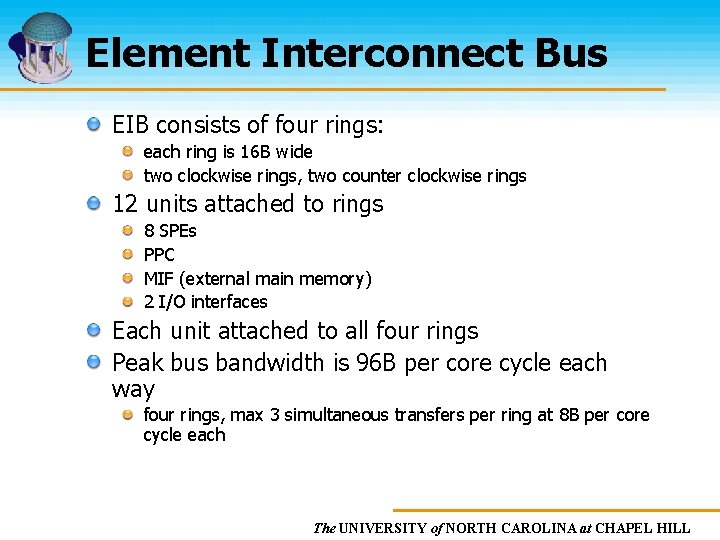

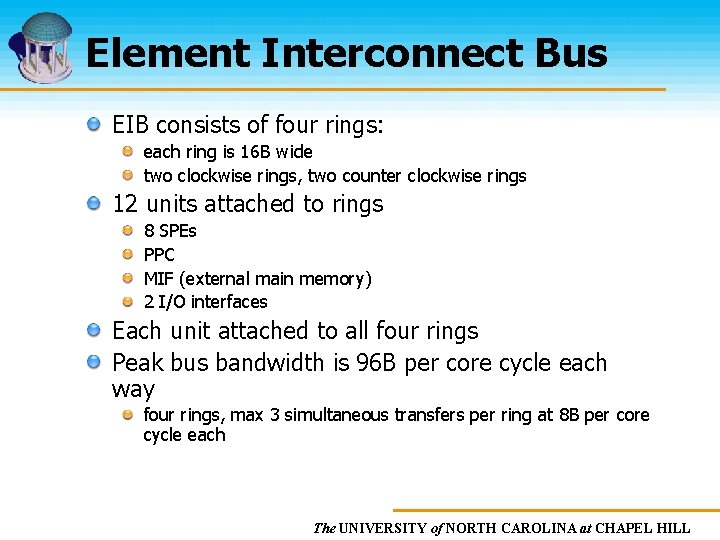

Element Interconnect Bus
- The EIB consists of four rings:
  - each ring is 16 B wide
  - two clockwise rings and two counterclockwise rings
- 12 units are attached to the rings:
  - 8 SPEs
  - the PPC
  - the MIF (external main memory)
  - 2 I/O interfaces
- Each unit is attached to all four rings
- Peak bus bandwidth is 96 B per core cycle each way
  - four rings, at most 3 simultaneous transfers per ring, at 8 B per core cycle each

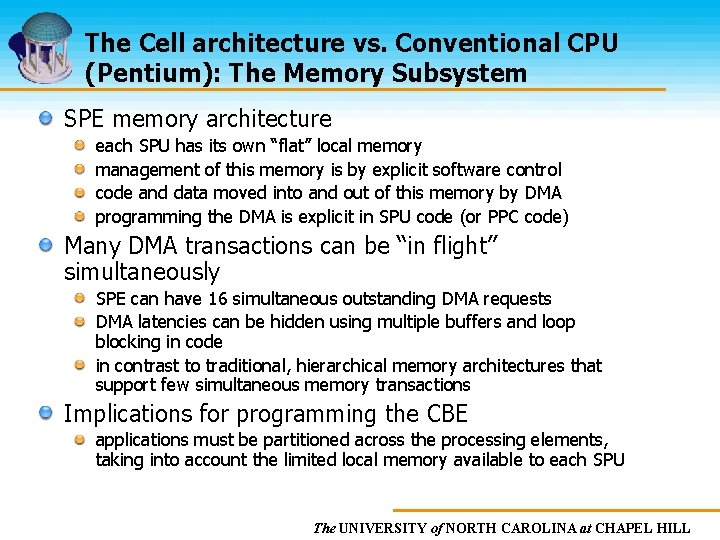

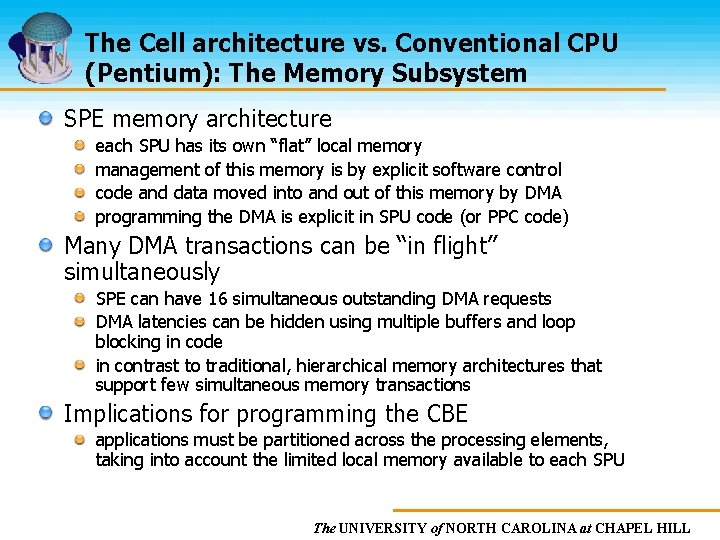

The Cell Architecture vs. a Conventional CPU (Pentium): The Memory Subsystem
- SPE memory architecture
  - each SPU has its own “flat” local memory
  - this memory is managed explicitly by software
  - code and data are moved into and out of this memory by DMA
  - DMA is programmed explicitly in SPU code (or PPC code)
- Many DMA transactions can be “in flight” simultaneously
  - an SPE can have 16 simultaneous outstanding DMA requests
  - DMA latencies can be hidden using multiple buffers and loop blocking in code
  - this contrasts with traditional, hierarchical memory architectures, which support few simultaneous memory transactions
- Implications for programming the CBE
  - applications must be partitioned across the processing elements, taking into account the limited local memory available to each SPU

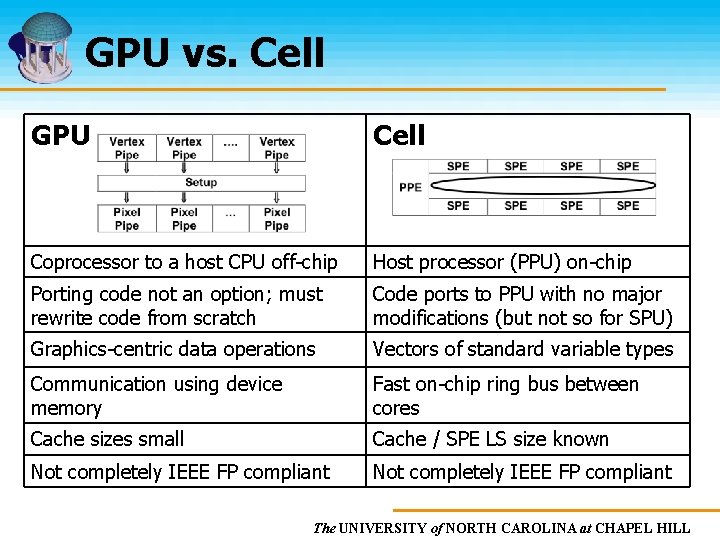

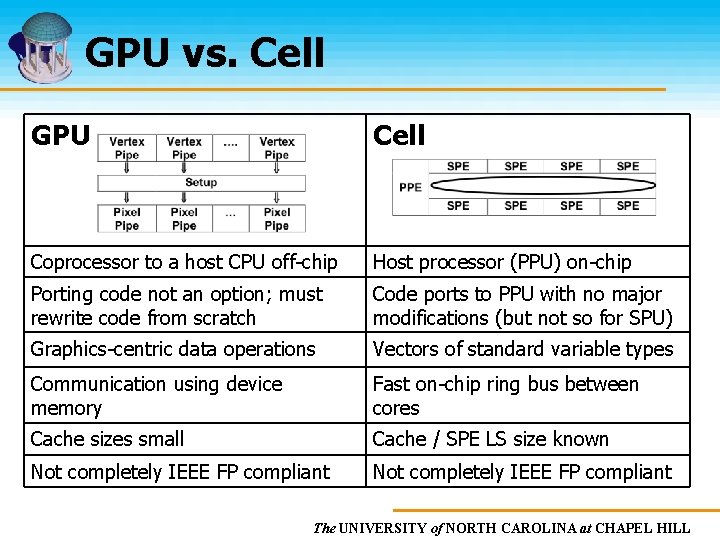

GPU vs. Cell

| GPU | Cell |
|---|---|
| Coprocessor to a host CPU, off-chip | Host processor (PPU) on-chip |
| Porting code is not an option; code must be rewritten from scratch | Code ports to the PPU with no major modifications (but not to the SPU) |
| Graphics-centric data operations | Vectors of standard variable types |
| Communication through device memory | Fast on-chip ring bus between cores |
| Small cache sizes | Cache and SPE LS sizes known |

Not completely IEEE FP compliant (applies to both)

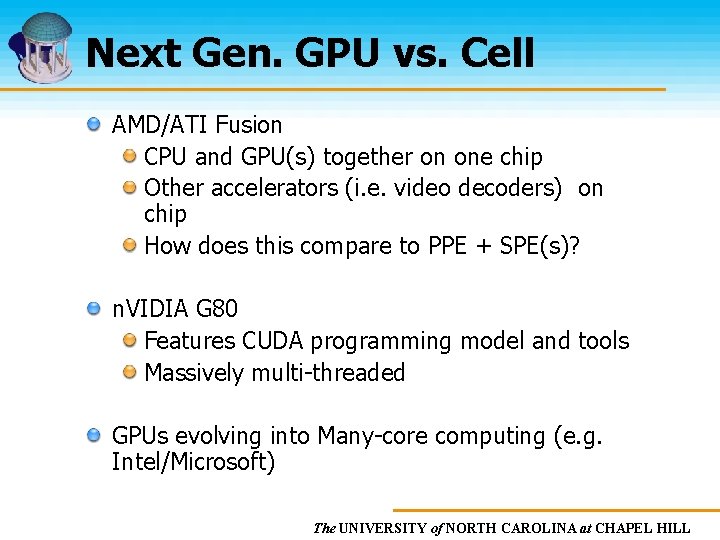

Next-Generation GPU vs. Cell
- AMD/ATI Fusion
  - CPU and GPU(s) together on one chip
  - other accelerators (e.g., video decoders) on chip
  - How does this compare to PPE + SPE(s)?
- NVIDIA G80
  - features the CUDA programming model and tools
  - massively multithreaded
- GPUs are evolving into many-core computing (e.g., Intel/Microsoft)

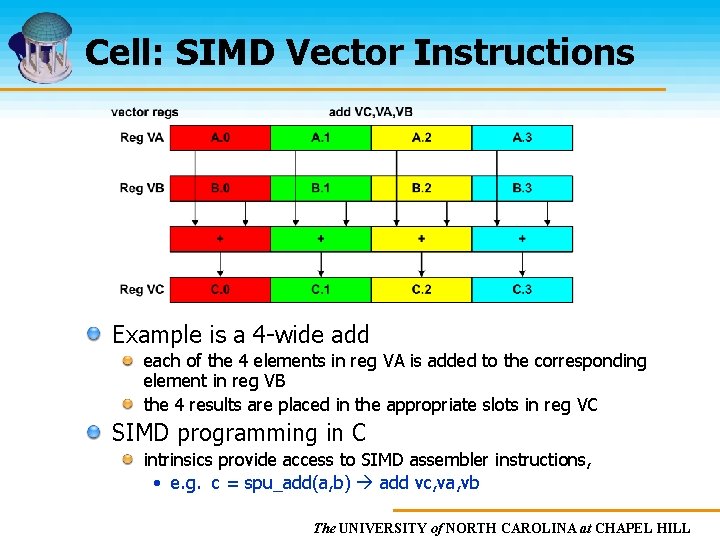

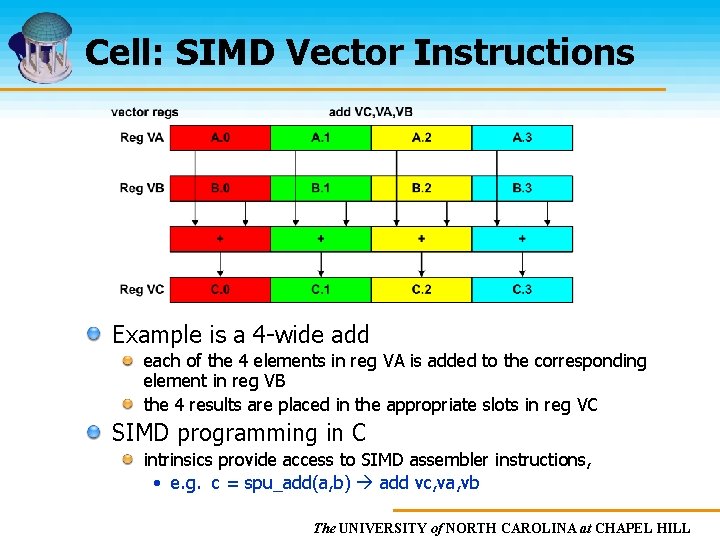

Cell: SIMD Vector Instructions
- Example: a 4-wide add
  - each of the 4 elements in register VA is added to the corresponding element in register VB
  - the 4 results are placed in the corresponding slots of register VC
- SIMD programming in C
  - intrinsics provide access to SIMD assembler instructions, e.g., `c = spu_add(a, b)` corresponds to `add vc, va, vb`

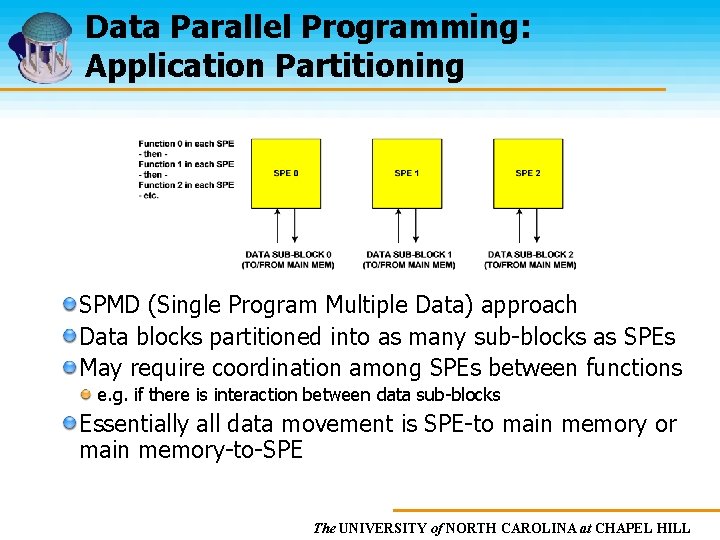

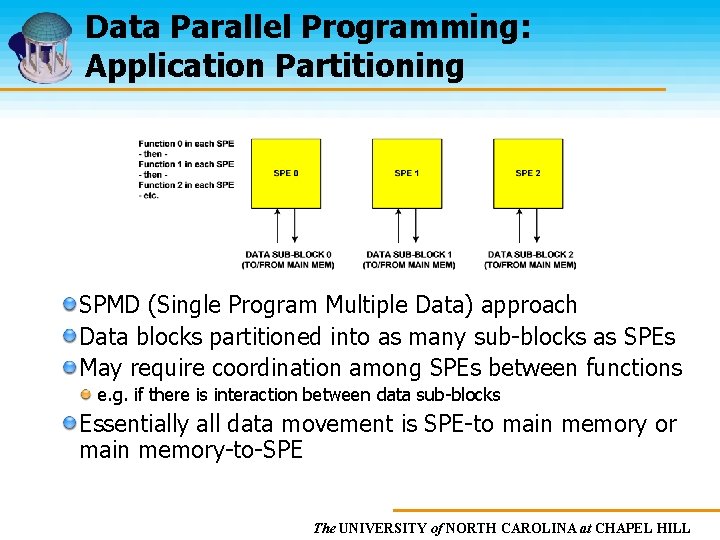

Data Parallel Programming: Application Partitioning
- SPMD (Single Program, Multiple Data) approach
  - data blocks are partitioned into as many sub-blocks as there are SPEs
  - may require coordination among SPEs between functions, e.g., if there is interaction between data sub-blocks
- Essentially all data movement is SPE-to-main-memory or main-memory-to-SPE

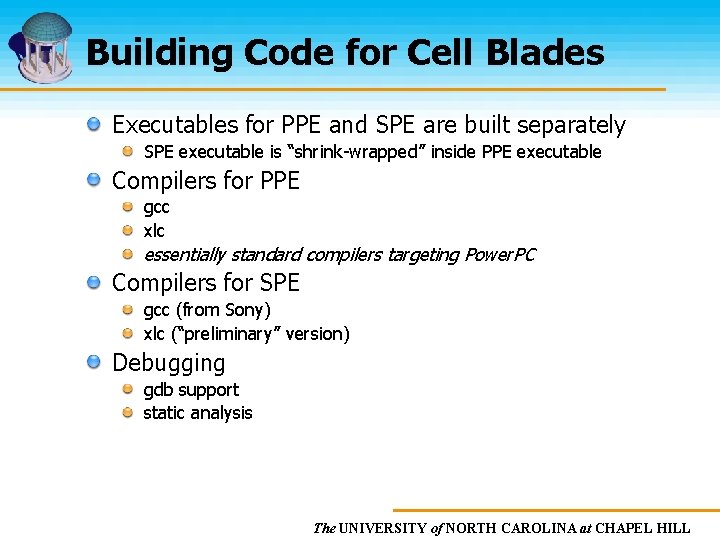

Building Code for Cell Blades
- Executables for the PPE and SPE are built separately
  - the SPE executable is “shrink-wrapped” inside the PPE executable
- Compilers for the PPE
  - gcc, xlc
  - essentially standard compilers targeting PowerPC
- Compilers for the SPE
  - gcc (from Sony)
  - xlc (“preliminary” version)
- Debugging
  - gdb support
  - static analysis

Acknowledgements
- Jeff Derby, STSM, IBM STG (source of some diagrams, used with permission)
- Ken Vu, STSM, IBM STG
- Dave Luebke, Steve Molnar, NVIDIA
- Jan Prins, Stephen Olivier, UNC-CH Computer Science

Cell Resources
Useful websites:
- developerWorks: http://www-128.ibm.com/developerworks/power/cell/
- SDK: http://www.alphaworks.ibm.com/tech/cellsw
- Simulator: http://www.alphaworks.ibm.com/tech/cellsystemsim
- XLC/C++: http://www.alphaworks.ibm.com/tech/cellcompiler
- BSC Linux for Cell: http://www.bsc.es/projects/deepcomputing/linuxoncell/
- Techlib: http://www-306.ibm.com/chips/techlib.nsf/products/Cell_Broadband_Engine
- Support forum: http://www-128.ibm.com/developerworks/forums/dw_forum.jsp?forum=739&cat=28

Cell Processor for Edge Computing
- Pros:
  - Cell has very attractive architectural features
  - some limited support from IBM and third-party vendors (e.g., Mercury)
  - potential as an accelerator for high-performance computing
- Cons:
  - programming support for Cell is very limited
  - the only killer app for Cell is the PS3
  - not much is known about future generations of the Cell processor
  - the recent trend toward GPGPU and many-core processing is more promising


Proceedings of the IEEE
- Based on the Edge Computing Workshop held at UNC Chapel Hill in May 2006
- Co-editors: Ming C. Lin, Michael Macedonia, Dinesh Manocha

Proceedings of the IEEE: Schedule
- Proposal submitted to IEEE: Aug 2006
- Proposal approved: Sep 2006
- Author invitations (Oct. ’06 – Jan. ’07): invited 22 authors to submit papers
  - academic researchers in computer architecture, high-performance computing, compilers, GPUs, etc.
  - industrial researchers: Intel, Microsoft, NVIDIA, ATI/AMD, Ericsson

Proceedings of the IEEE: Current Status
- 13 invitations accepted
- 6 article submissions so far (under review)
- Remaining articles expected during May–June 2007
- Article details available at: http://gamma.cs.unc.edu/PROC_IEEE/

Proceedings of the IEEE: Next Steps
- Article review process
- Work closely with the authors on revisions
- Have final versions ready (by Sep–Oct ’07)
- Co-editors write an overview article
- Send to IEEE for final publication (expected timeframe: Dec ’07)
- Risks: delays on the part of the authors; the IEEE publication schedule


Numerical Algorithms Using GPUs
- Dense matrix computations
  - LU decomposition
  - SVD computation
  - QR decomposition
- Sorting
- FFT computations
- Thousands of downloads from our WWW sites

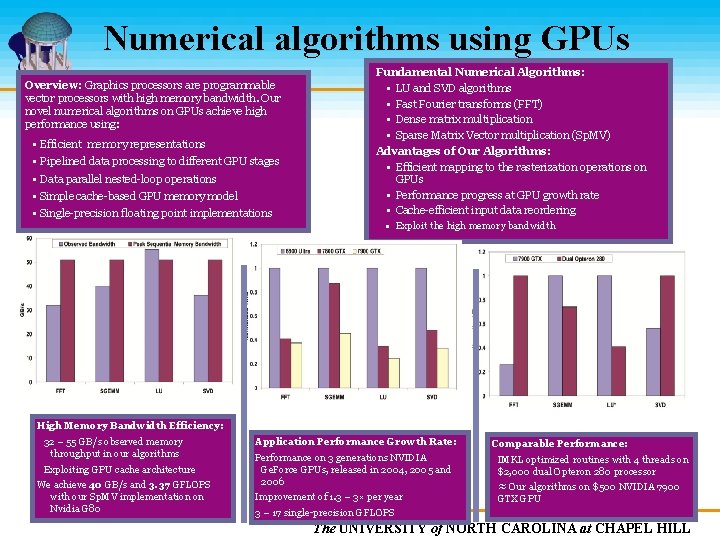

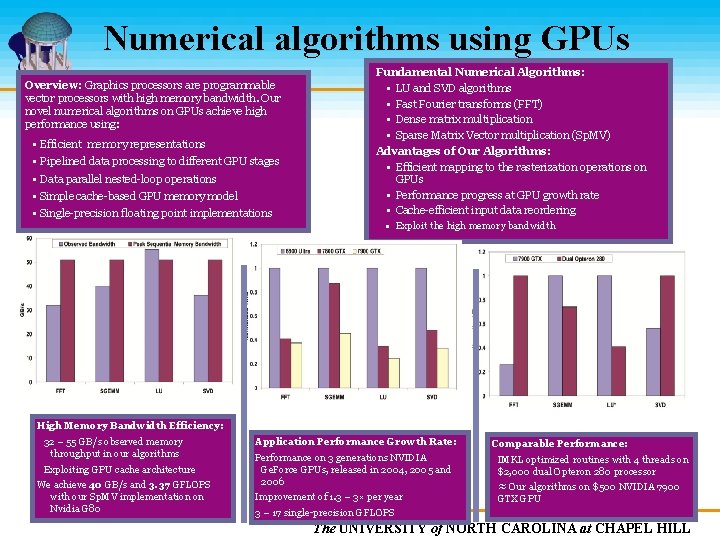

Numerical Algorithms Using GPUs
Overview: Graphics processors are programmable vector processors with high memory bandwidth. Our novel numerical algorithms on GPUs achieve high performance using:
- Efficient memory representations
- Pipelined data processing across the GPU stages
- Data-parallel nested-loop operations
- A simple cache-based GPU memory model
- Single-precision floating-point implementations

Fundamental numerical algorithms:
- LU and SVD algorithms
- Fast Fourier transforms (FFT)
- Dense matrix multiplication
- Sparse matrix-vector multiplication (SpMV)

Advantages of our algorithms:
- Efficient mapping to the rasterization operations on GPUs
- Performance improves at the GPU growth rate
- Cache-efficient input data reordering
- Exploitation of the high memory bandwidth

High memory bandwidth efficiency:
- 32–55 GB/s observed memory throughput in our algorithms
- Exploiting the GPU cache architecture, our SpMV implementation achieves 40 GB/s and 3.37 GFLOPS on the NVIDIA G80

Application performance growth rate:
- Performance measured on 3 generations of NVIDIA GeForce GPUs, released in 2004, 2005 and 2006
- Improvement of 1.3–3× per year

Comparable performance:
- Intel MKL (IMKL) optimized routines with 4 threads on a $2,000 dual Opteron 280 system ≈ our algorithms on a $500 NVIDIA 7900 GTX GPU
- 3–17 single-precision GFLOPS

Current Focus: Sparse Matrix Computations
- The number of nonzero elements in the matrix is much smaller than its total size
- Data mining computations
  - WWW search
  - data streaming: multi-dimensional scaling
- Scientific computation
  - differential equation solvers
  - VLSI CAD simulation

Motivation
- The latest GPUs have very high computing power and memory bandwidth
  - NVIDIA GeForce 8800 GTX (G80): peak performance 330 GFLOPS; peak memory bandwidth 86 GB/s
- The latest GPUs are extremely flexible and programmable
  - CUDA on NVIDIA GPUs
  - CTM on ATI GPUs
- Sparse matrix operations can be parallelized and implemented on the GPU

Applications
- Numerical methods
  - linear systems
  - least-squares problems
  - eigenvalue problems
- Scientific computing and engineering
  - fluid simulation
- Data mining

Sparse Matrix: Challenges
- Poor temporal and spatial locality
- Indirect and irregular memory accesses
- Distributing the computation and optimizing for the memory hierarchy are challenging
- To get very high performance from GPUs, we need very high arithmetic intensity relative to the memory accesses
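A rough, roofline-style estimate shows why arithmetic intensity is the bottleneck for SpMV. Assuming (these are our assumptions, not figures from the slides) single-precision values, 32-bit column indices, and no cache reuse of the dense vector, each nonzero costs 2 flops (one multiply, one add) and about 12 bytes of traffic (value, column index, vector element); row pointers and output writes are ignored. Combined with the G80 peak numbers from the Motivation slide:

$$
I_{\text{SpMV}} \approx \frac{2\ \text{flop}}{(4 + 4 + 4)\ \text{B}} \approx 0.17\ \text{flop/B},
\qquad
\frac{330\ \text{GFLOPS}}{86\ \text{GB/s}} \approx 3.8\ \text{flop/B}
$$

$$
P_{\text{SpMV}} \lesssim I_{\text{SpMV}} \times 86\ \text{GB/s} \approx 14\ \text{GFLOPS} \ll 330\ \text{GFLOPS}
$$

Under these assumptions SpMV is memory-bound on the G80 and can reach only a few percent of peak arithmetic throughput unless data reuse (e.g., blocking) raises its intensity.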

Sparse Matrix Representations
- CSR (Compressed Sparse Row) representation
  - array of nonzero elements and their corresponding column indices
  - row pointers
- Block CSR (BCSR) representation
  - divide the matrix into blocks
  - each block is treated like a dense matrix

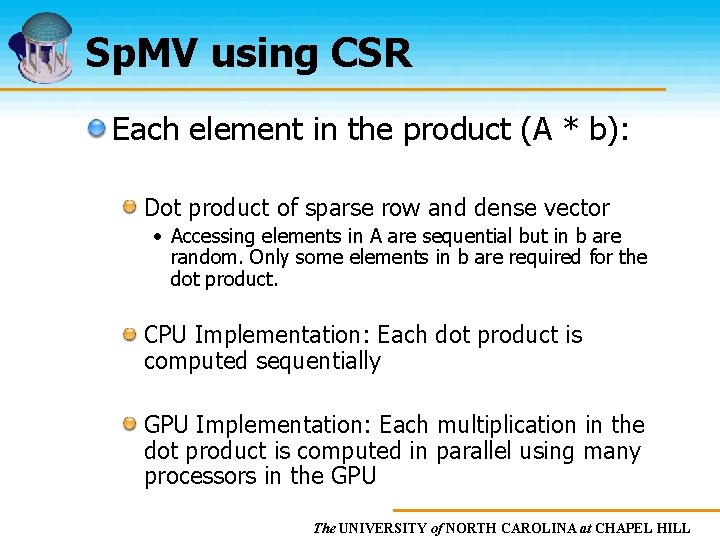

SpMV Using CSR
- Each element of the product A * b is the dot product of a sparse row of A and the dense vector b
  - elements of A are accessed sequentially, but elements of b are accessed irregularly; only some elements of b are needed for each dot product
- CPU implementation: each dot product is computed sequentially
- GPU implementation: each multiplication in the dot product is computed in parallel using the GPU's many processors

Approach
- CSR representation for sparse matrix-vector multiplication
- Computation is distributed evenly among many threads

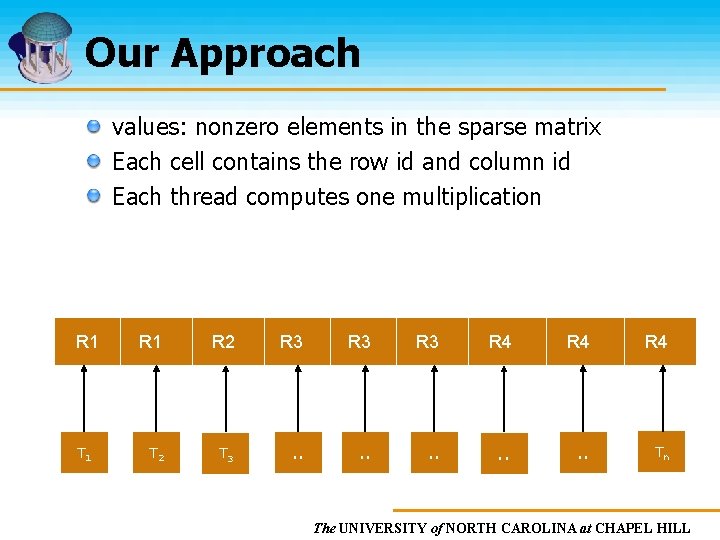

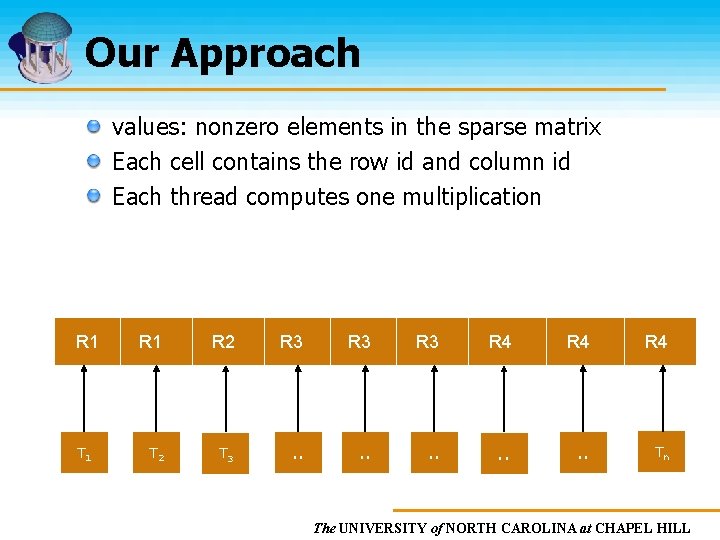

Our Approach
- values: the nonzero elements of the sparse matrix
- each cell contains the row id and column id
- each thread computes one multiplication

[Figure: the values array, each entry tagged with its row id (R1, R2, R3, R3, R4, R4, ...), with one thread (T1, T2, T3, ..., Tn) per entry]

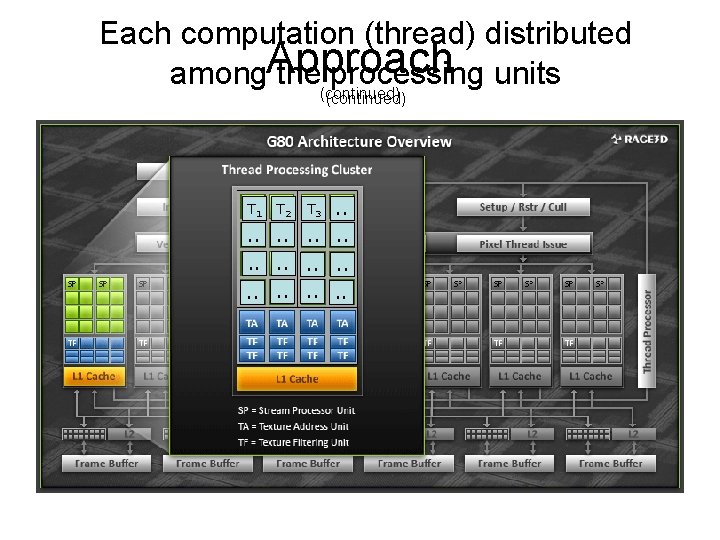

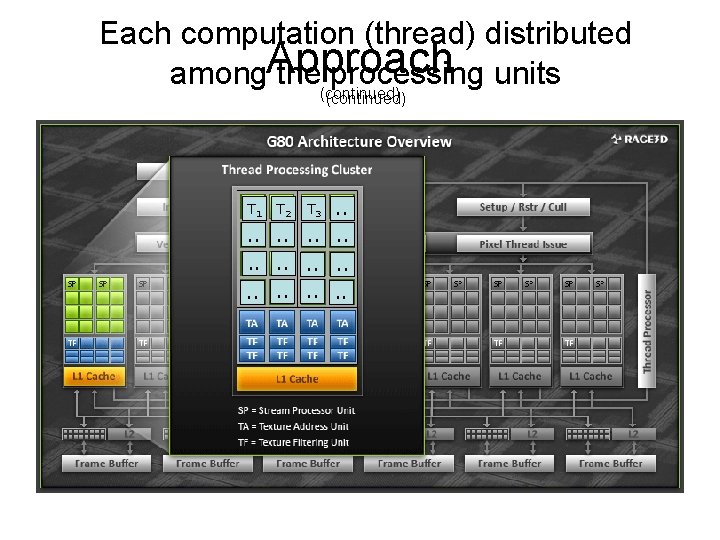

Approach (continued)
- Each computation (thread) is distributed among the processing units

[Figure: threads T1, T2, T3, ... distributed among the GPU processing units]

Current Results
- Performance numbers
  - memory bandwidth: 40.32 GB/s
  - floating-point throughput: 3.37 GFLOPS
- Our GPU implementation is 30 times faster than our CPU implementation (preliminary analysis)

Ongoing Work
- Register and cache blocking
  - reorganization of data structures and computation to improve reuse of values
- Sparse matrix singular value decomposition (SVD) on the GPU
- Extensive performance analysis
- Application: conjugate gradient

Ongoing Work
- Extensive performance analysis on different kinds of sparse matrices (benchmarking)
  - NAS Parallel Benchmarks
    - developed for the performance evaluation of highly parallel supercomputers
    - mimic the characteristics of large-scale computational fluid dynamics applications
  - Matrix Market (NIST): a visual repository of test data
  - SPARSITY (UCB/LLNL)
  - OSKI (Optimized Sparse Kernel Interface, UCB)