Random Sampling from a Search Engine's Index


- Slides: 34

Random Sampling from a Search Engine's Index (slide 1)

Ziv Bar-Yossef, Maxim Gurevich
Department of Electrical Engineering, Technion

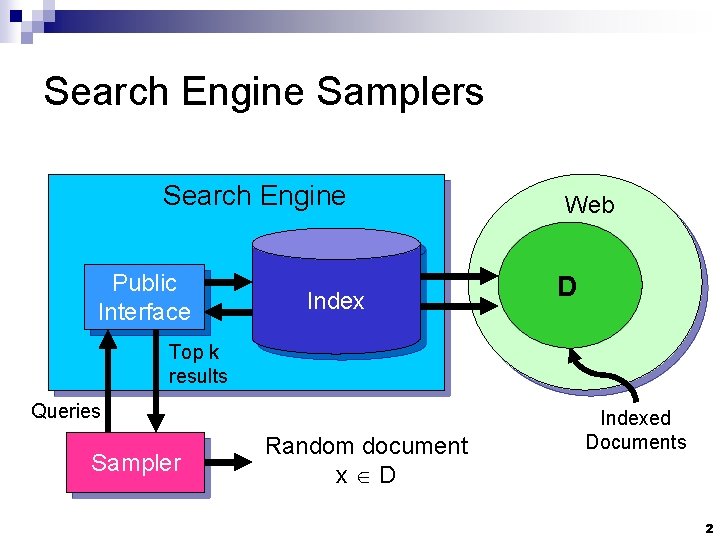

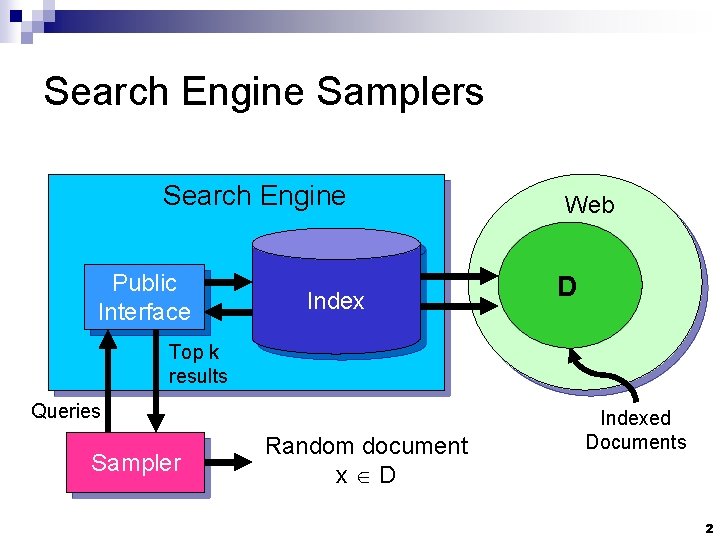

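Search Engine Samplers (slide 2)

- The search engine indexes a set D of documents from the web and exposes the index only through its public interface.
- The sampler sends queries to the public interface and receives the top k results for each query.
- Output: a random document x ∈ D, where D is the set of indexed documents.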

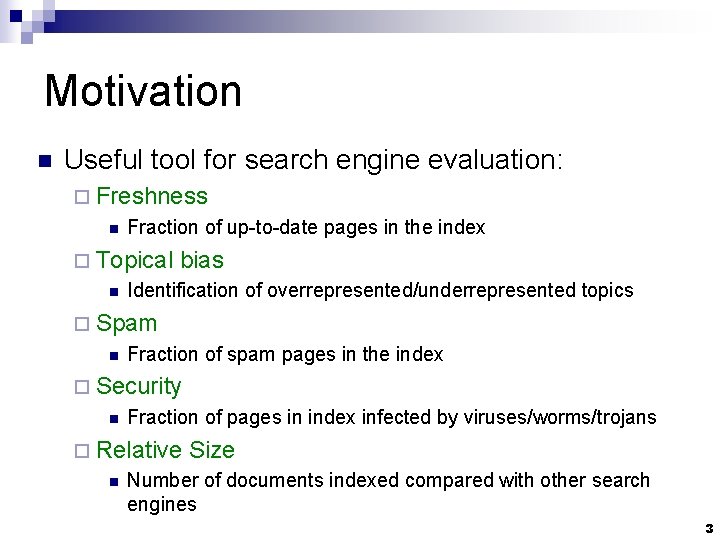

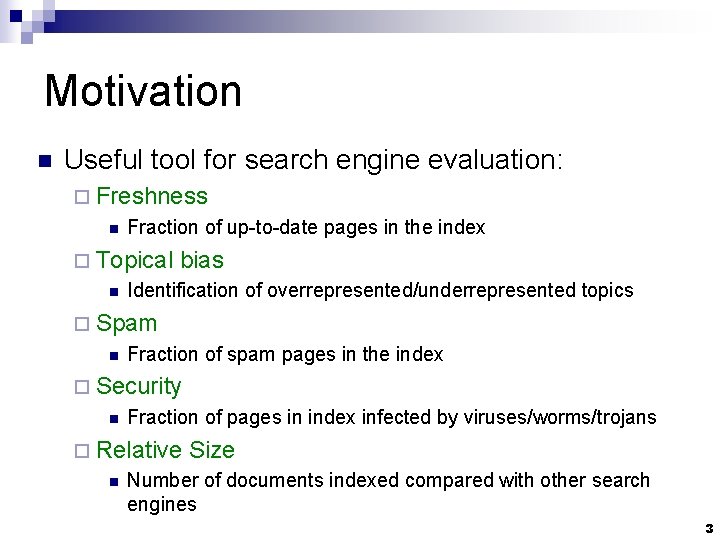

Motivation (slide 3)

- Useful tool for search engine evaluation:
  - Freshness: fraction of up-to-date pages in the index
  - Topical bias: identification of overrepresented/underrepresented topics
  - Spam: fraction of spam pages in the index
  - Security: fraction of pages in the index infected by viruses/worms/trojans
  - Relative size: number of documents indexed compared with other search engines

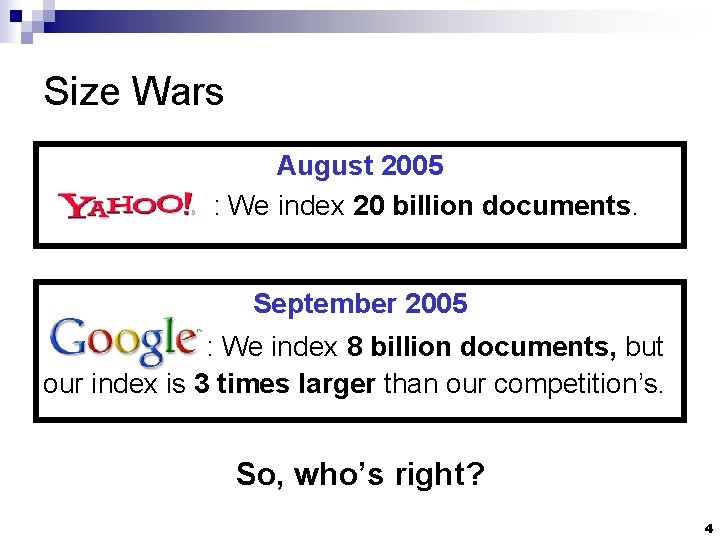

Size Wars (slide 4)

- August 2005: "We index 20 billion documents."
- September 2005: "We index 8 billion documents, but our index is 3 times larger than our competition's."

So, who's right?

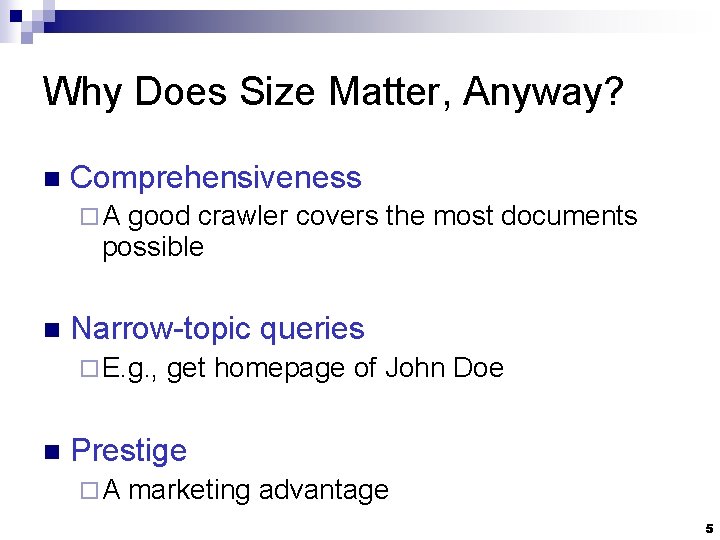

Why Does Size Matter, Anyway? (slide 5)

- Comprehensiveness: a good crawler covers the most documents possible
- Narrow-topic queries: e.g., get the homepage of John Doe
- Prestige: a marketing advantage

![Measuring size using random samples](https://slidetodoc.com/presentation_image_h2/b9a374b14af14fc346e4c0bf22c89b17/image-6.jpg)

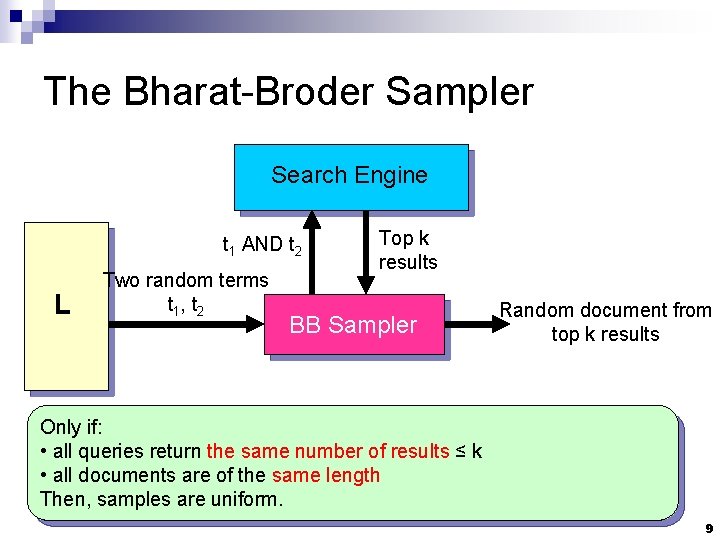

Measuring size using random samples [Bharat, Broder 98; Cheney, Perry 05; Gulli, Signorini 05] (slide 6)

- Sample pages uniformly at random from the search engine's index
- Two alternatives:
  - Absolute size estimation
    - Sample until collision
    - Collision expected after $k \sim N^{1/2}$ random samples (birthday paradox)
    - Return $k^2$
  - Relative size estimation
    - Check how many samples from search engine A are present in search engine B and vice versa
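The two estimators on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the index is modelled as the integers 0..N-1, the function names are hypothetical, and the membership tests `in_a` / `in_b` stand in for whatever lookup a real experiment would use to check whether a document is indexed.

```python
import random


def samples_until_collision(index_size, rng):
    """Draw uniform samples from {0, ..., index_size - 1} until one repeats.

    Returns k, the number of draws including the colliding one.
    """
    seen = set()
    while True:
        doc = rng.randrange(index_size)
        if doc in seen:
            return len(seen) + 1
        seen.add(doc)


def relative_size(samples_a, samples_b, in_a, in_b):
    """Estimate |A| / |B| from uniform samples of index A and index B.

    The fraction of B's samples found in A estimates |A and B| / |B|;
    the fraction of A's samples found in B estimates |A and B| / |A|.
    Their ratio is |A| / |B|.
    """
    frac_b_in_a = sum(1 for x in samples_b if in_a(x)) / len(samples_b)
    frac_a_in_b = sum(1 for x in samples_a if in_b(x)) / len(samples_a)
    return frac_b_in_a / frac_a_in_b


rng = random.Random(0)
k = samples_until_collision(10_000, rng)
print("draws until first collision:", k, "crude size estimate k^2:", k * k)
```

A single collision gives only an order-of-magnitude estimate; averaging over many runs would be needed for anything tighter. Both estimators assume the samples really are uniform, which is the problem the rest of the talk addresses.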

![Other Approaches](https://slidetodoc.com/presentation_image_h2/b9a374b14af14fc346e4c0bf22c89b17/image-7.jpg)

Other Approaches (slide 7)

- Anecdotal queries [SearchEngineWatch; Google; Bradlow, Schmittlein 00]
- Queries from user query logs [Lawrence, Giles 98; Dobra, Fienberg 04]
- Random sampling from the whole web [Henzinger et al 00, Bar-Yossef et al 00, Rusmevichientong et al 01]

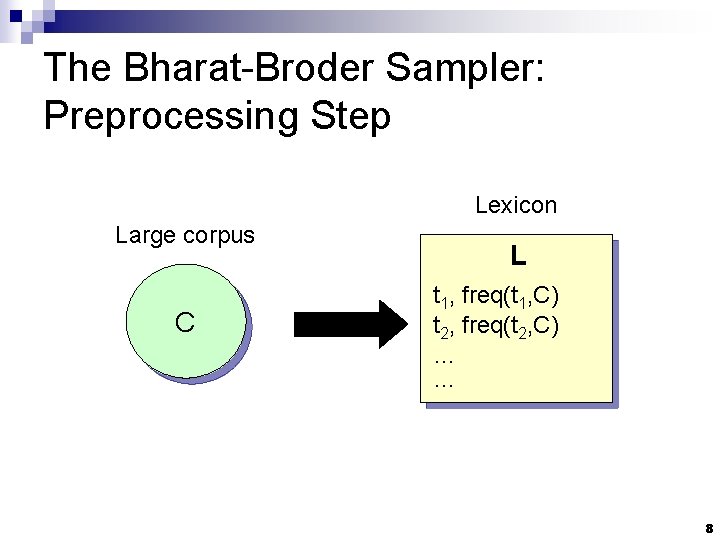

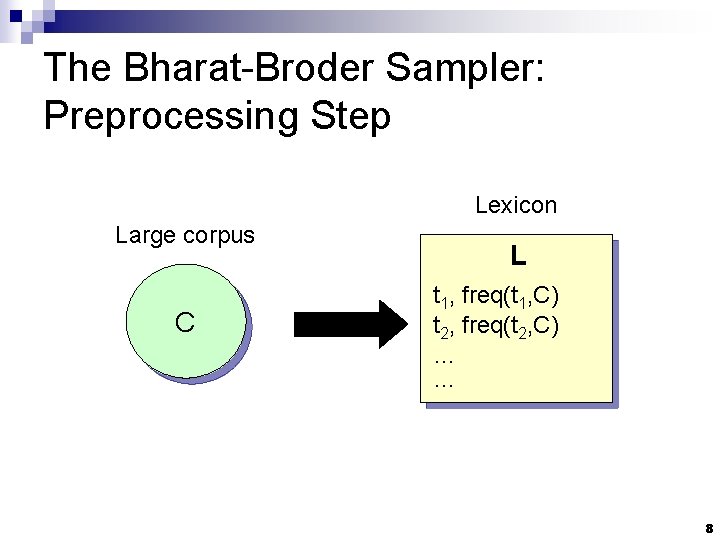

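The Bharat-Broder Sampler: Preprocessing Step (slide 8)

- Input: a large corpus C
- Output: a lexicon L that lists each term together with its frequency in C: $(t_1, \mathrm{freq}(t_1, C)), (t_2, \mathrm{freq}(t_2, C)), \ldots$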

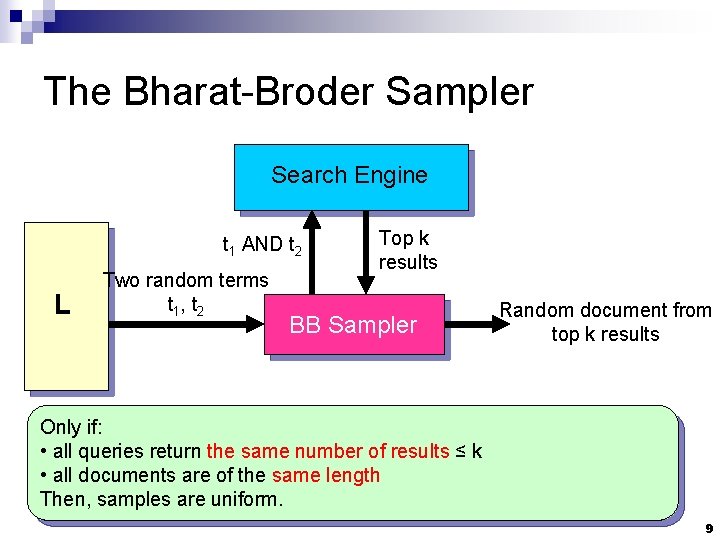

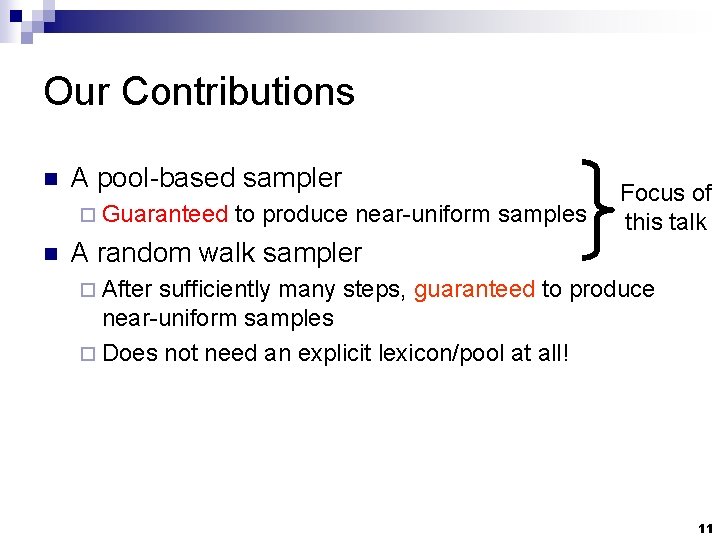

The Bharat-Broder Sampler (slide 9)

- The BB sampler picks two random terms $t_1$, $t_2$ from the lexicon L
- It submits the query "$t_1$ AND $t_2$" to the search engine and receives the top k results
- It returns a random document from the top k results

Samples are uniform only if:

- all queries return the same number of results ≤ k
- all documents are of the same length

The Bharat-Broder Sampler: Drawbacks (slide 10)

- Documents have varying lengths
  - Bias towards long documents
- Some queries have more than k matches
  - Bias towards documents with high static rank

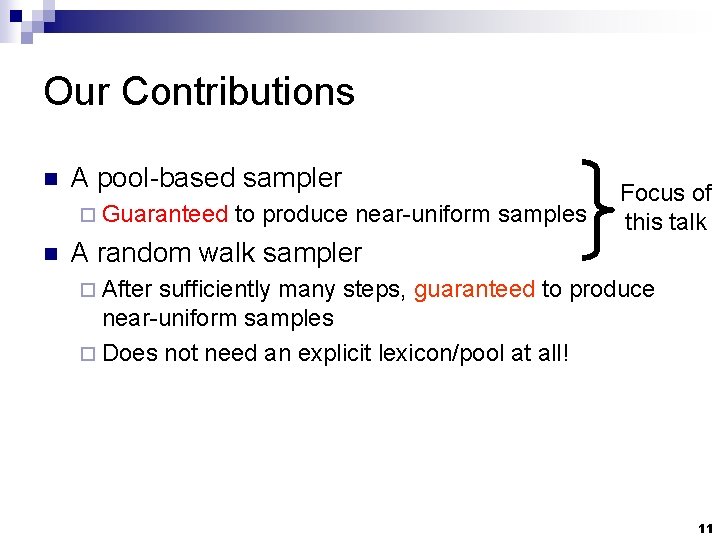

Our Contributions (slide 11)

- A pool-based sampler (focus of this talk)
  - Guaranteed to produce near-uniform samples
- A random walk sampler
  - After sufficiently many steps, guaranteed to produce near-uniform samples
  - Does not need an explicit lexicon/pool at all!

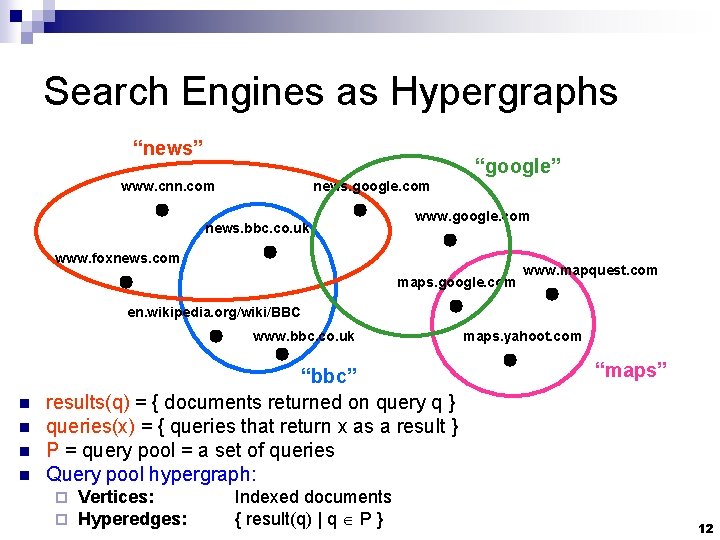

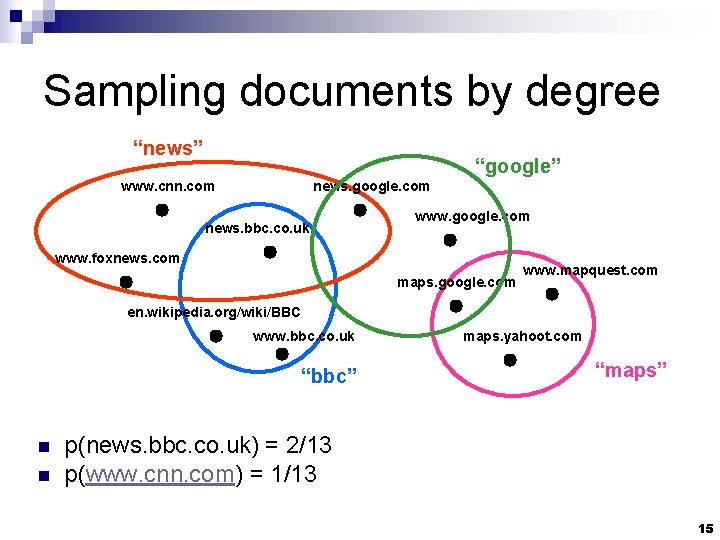

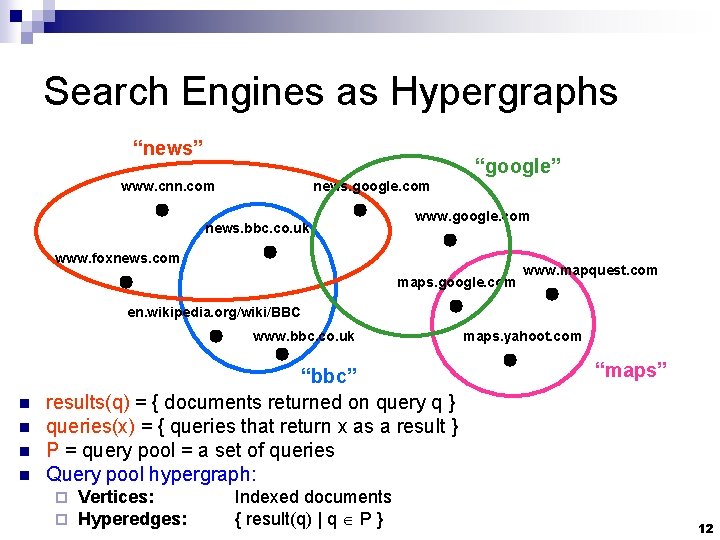

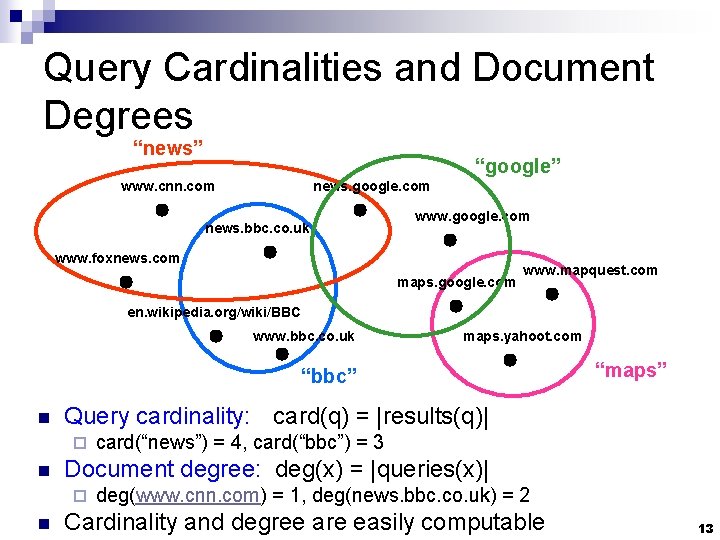

Search Engines as Hypergraphs (slide 12)

- results(q) = { documents returned on query q }
- queries(x) = { queries that return x as a result }
- P = query pool = a set of queries
- Query pool hypergraph:
  - Vertices: indexed documents
  - Hyperedges: { results(q) | q ∈ P }

Figure: example hypergraph with four hyperedges ("news", "google", "bbc", "maps") over ten documents: www.cnn.com, news.google.com, news.bbc.co.uk, www.google.com, www.foxnews.com, maps.google.com, www.mapquest.com, en.wikipedia.org/wiki/BBC, www.bbc.co.uk, maps.yahoot.com.

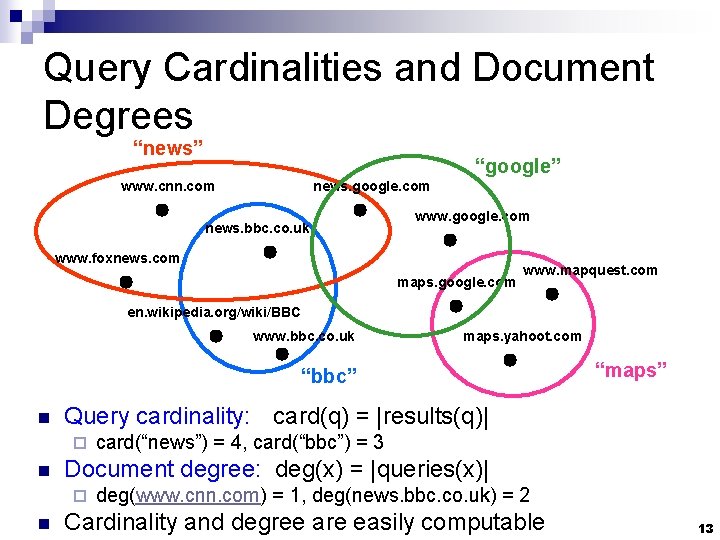

Query Cardinalities and Document Degrees (slide 13)

- Query cardinality: card(q) = |results(q)|
  - card("news") = 4, card("bbc") = 3
- Document degree: deg(x) = |queries(x)|
  - deg(www.cnn.com) = 1, deg(news.bbc.co.uk) = 2
- Cardinality and degree are easily computable

Figure: the example hypergraph from slide 12 (queries "news", "google", "bbc", "maps").
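The definitions of card(q) and deg(x) can be checked on the example hypergraph. The transcript does not say which documents belong to which query, so the `RESULTS` mapping below is an assumption, chosen so that it reproduces the numbers quoted on the slides (card("news") = 4, card("bbc") = 3, and a total degree of 13).

```python
# Hypothetical assignment of documents to queries; only the resulting
# cardinalities and degrees are taken from the slides.
RESULTS = {
    "news": {"www.cnn.com", "news.google.com", "news.bbc.co.uk", "www.foxnews.com"},
    "google": {"news.google.com", "www.google.com", "maps.google.com"},
    "bbc": {"news.bbc.co.uk", "en.wikipedia.org/wiki/BBC", "www.bbc.co.uk"},
    "maps": {"maps.google.com", "www.mapquest.com", "maps.yahoot.com"},
}


def card(q):
    """Query cardinality: the number of documents returned on query q."""
    return len(RESULTS[q])


def queries(x):
    """The set of pool queries that return document x."""
    return {q for q, docs in RESULTS.items() if x in docs}


def deg(x):
    """Document degree: the number of pool queries that return x."""
    return len(queries(x))


print(card("news"), card("bbc"), deg("www.cnn.com"), deg("news.bbc.co.uk"))
```

In a real setting card(q) would come from submitting q to the search engine, and deg(x) from checking which pool queries the content of x matches.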

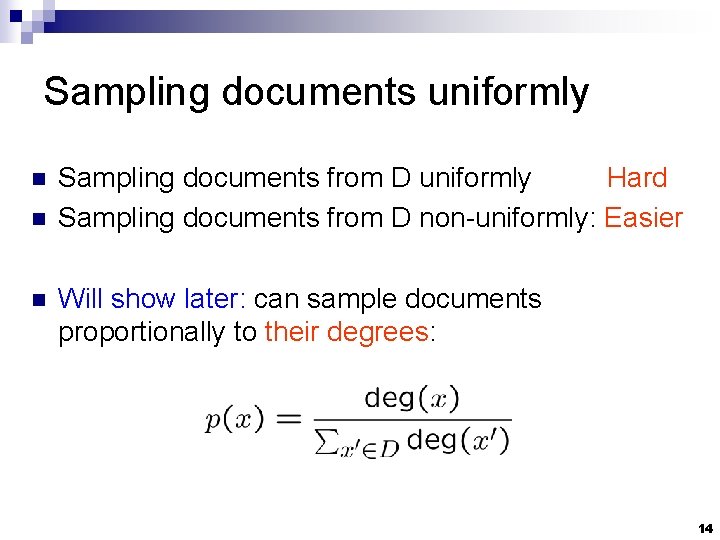

Sampling documents uniformly (slide 14)

- Sampling documents from D uniformly: hard
- Sampling documents from D non-uniformly: easier
- Will show later: can sample documents proportionally to their degrees: $p(x) = \deg(x) / \sum_{x' \in D} \deg(x')$

Sampling documents by degree (slide 15)

- p(news.bbc.co.uk) = 2/13
- p(www.cnn.com) = 1/13

Figure: the example hypergraph from slide 12 (queries "news", "google", "bbc", "maps").
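The two probabilities above follow from dividing each document's degree by the total degree. A small sketch with exact fractions, again assuming a hypothetical document-to-query assignment that is consistent with the slide's numbers:

```python
from fractions import Fraction

# Hypothetical assignment of documents to queries (not given in the slides).
RESULTS = {
    "news": {"www.cnn.com", "news.google.com", "news.bbc.co.uk", "www.foxnews.com"},
    "google": {"news.google.com", "www.google.com", "maps.google.com"},
    "bbc": {"news.bbc.co.uk", "en.wikipedia.org/wiki/BBC", "www.bbc.co.uk"},
    "maps": {"maps.google.com", "www.mapquest.com", "maps.yahoot.com"},
}


def degree_distribution(results):
    """Return p(x) = deg(x) / sum of all degrees, as exact fractions."""
    degrees = {}
    for docs in results.values():
        for x in docs:
            degrees[x] = degrees.get(x, 0) + 1
    total = sum(degrees.values())
    return {x: Fraction(d, total) for x, d in degrees.items()}


p = degree_distribution(RESULTS)
print(p["news.bbc.co.uk"], p["www.cnn.com"])
```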

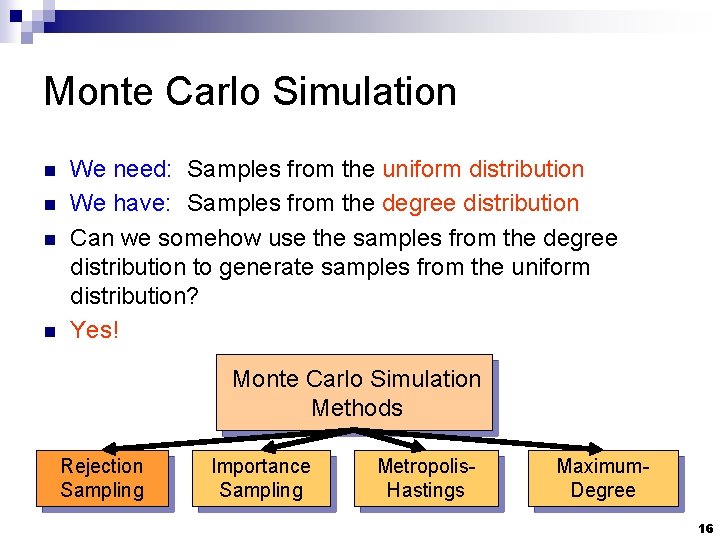

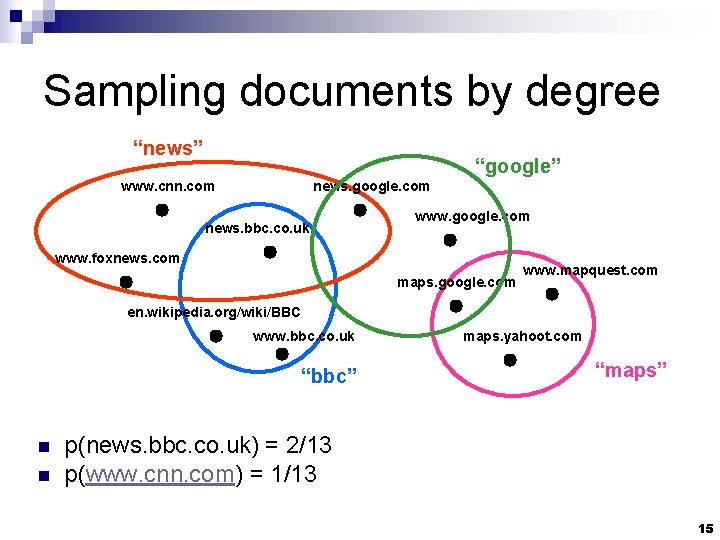

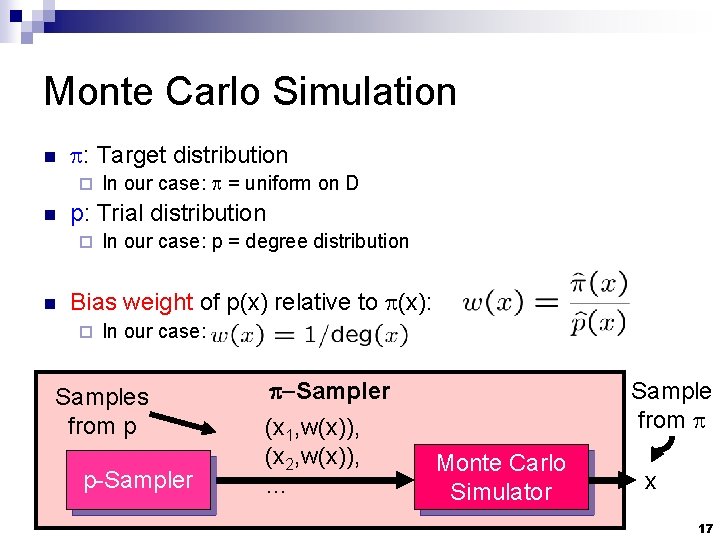

Monte Carlo Simulation (slide 16)

- We need: samples from the uniform distribution
- We have: samples from the degree distribution
- Can we somehow use the samples from the degree distribution to generate samples from the uniform distribution? Yes!
- Monte Carlo simulation methods:
  - Rejection Sampling
  - Importance Sampling
  - Metropolis-Hastings
  - Maximum-Degree

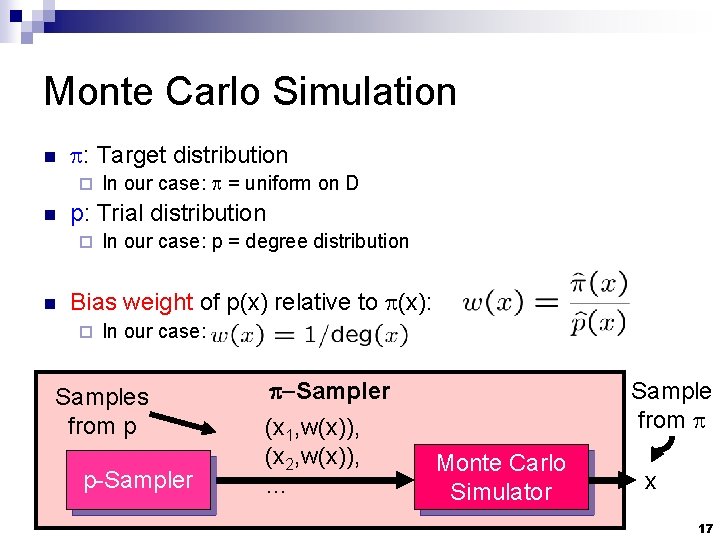

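Monte Carlo Simulation (slide 17)

- $\pi$: target distribution
  - In our case: $\pi$ = uniform on D
- p: trial distribution
  - In our case: p = degree distribution
- Bias weight of p(x) relative to $\pi(x)$: $w(x) \propto \pi(x) / p(x)$
  - In our case: $w(x) \propto 1/\deg(x)$
- Pipeline: the p-sampler emits weighted samples $(x_1, w(x_1)), (x_2, w(x_2)), \ldots$ from p; the Monte Carlo simulator turns them into a sample x from $\pi$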

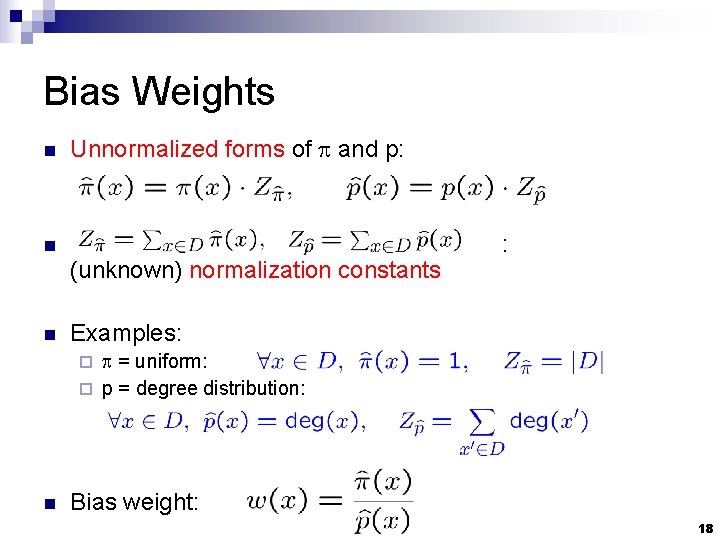

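Bias Weights (slide 18)

- Unnormalized forms of $\pi$ and p: $\hat{\pi}(x) = Z_\pi \, \pi(x)$ and $\hat{p}(x) = Z_p \, p(x)$
- $Z_\pi$, $Z_p$: (unknown) normalization constants
- Examples:
  - $\pi$ = uniform: $\hat{\pi}(x) = 1$
  - p = degree distribution: $\hat{p}(x) = \deg(x)$
- Bias weight: $w(x) = \hat{\pi}(x) / \hat{p}(x)$, which here gives $w(x) = 1/\deg(x)$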

![Rejection Sampling](https://slidetodoc.com/presentation_image_h2/b9a374b14af14fc346e4c0bf22c89b17/image-19.jpg)

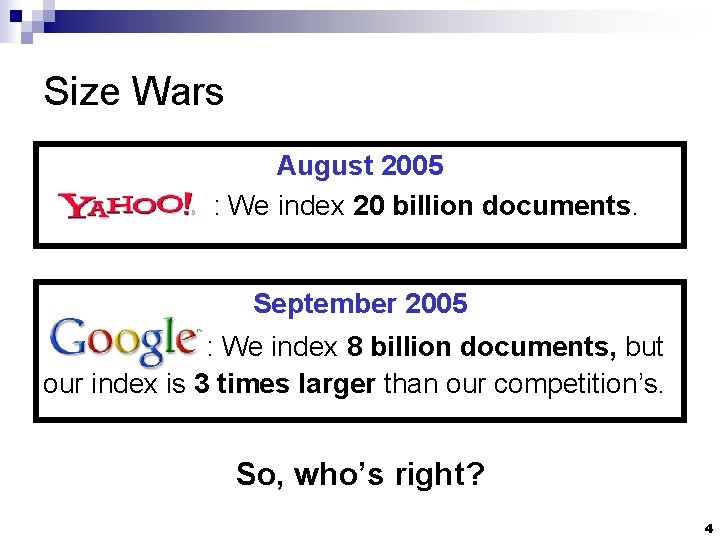

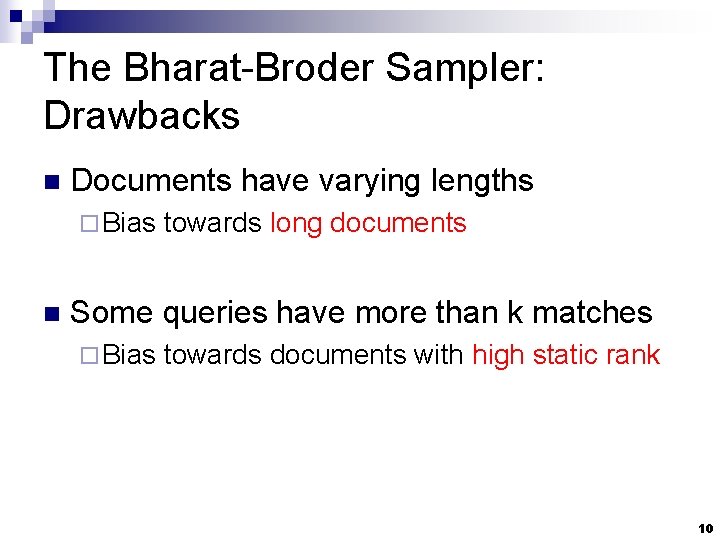

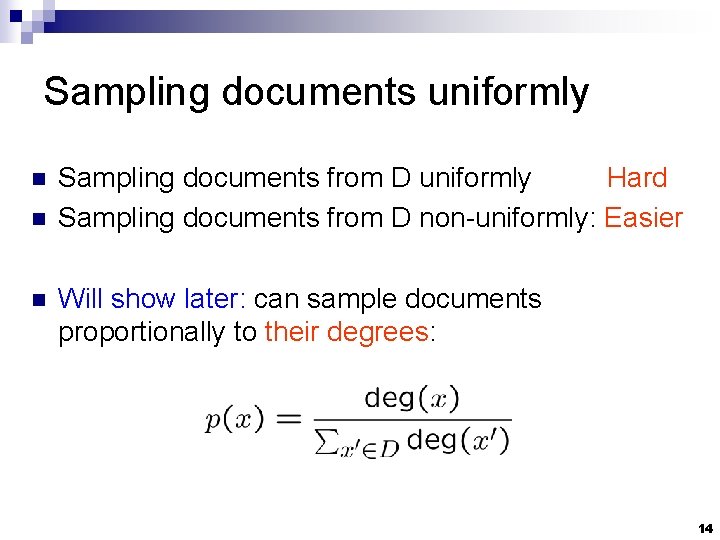

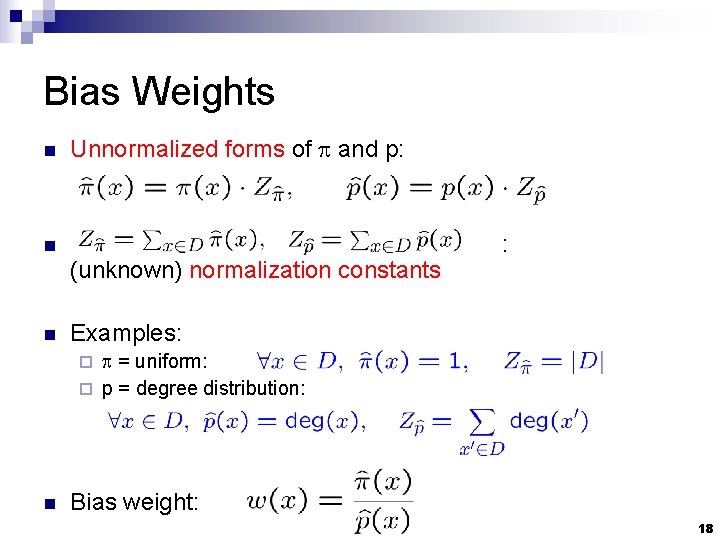

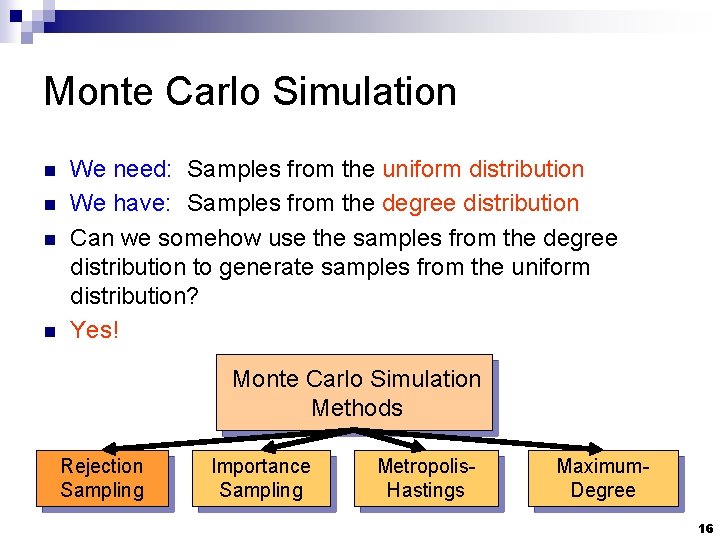

Rejection Sampling [von Neumann] (slide 19)

- C: envelope constant
  - C ≥ w(x) for all x
- The algorithm:
  - accept := false
  - while (not accept)
    - generate a sample x from p
    - toss a coin whose heads probability is w(x)/C
    - if coin comes up heads, accept := true
  - return x
- In our case: C = 1 and acceptance prob = 1/deg(x)
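The loop on this slide translates almost line for line into Python. The sketch below is generic: `draw_from_p`, `weight`, and `envelope` are hypothetical parameter names, and the three-document `DEGREES` table is made up purely to exercise the function with the talk's choice of w(x) = 1/deg(x) and C = 1.

```python
import random
from collections import Counter


def rejection_sample(draw_from_p, weight, envelope, rng):
    """Return one sample from the target distribution.

    draw_from_p(rng) yields a sample from the trial distribution p,
    weight(x) is the bias weight w(x), and envelope is a constant C
    with C >= w(x) for every x.
    """
    while True:
        x = draw_from_p(rng)
        # Coin toss with heads probability w(x) / C.
        if rng.random() < weight(x) / envelope:
            return x


# Toy trial distribution: documents drawn proportionally to a made-up degree.
DEGREES = {"a": 1, "b": 2, "c": 3}


def draw_by_degree(rng):
    docs = list(DEGREES)
    return rng.choices(docs, weights=[DEGREES[d] for d in docs], k=1)[0]


def empirical_counts(n, rng):
    """Run the sampler n times with w(x) = 1/deg(x) and C = 1."""
    return Counter(
        rejection_sample(draw_by_degree, lambda x: 1 / DEGREES[x], 1.0, rng)
        for _ in range(n)
    )


print(empirical_counts(3000, random.Random(0)))
```

With these weights, high-degree documents are proposed more often but accepted less often, so the accepted samples should come out roughly uniform. The cost is wasted proposals: here about half of the draws from p are rejected.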

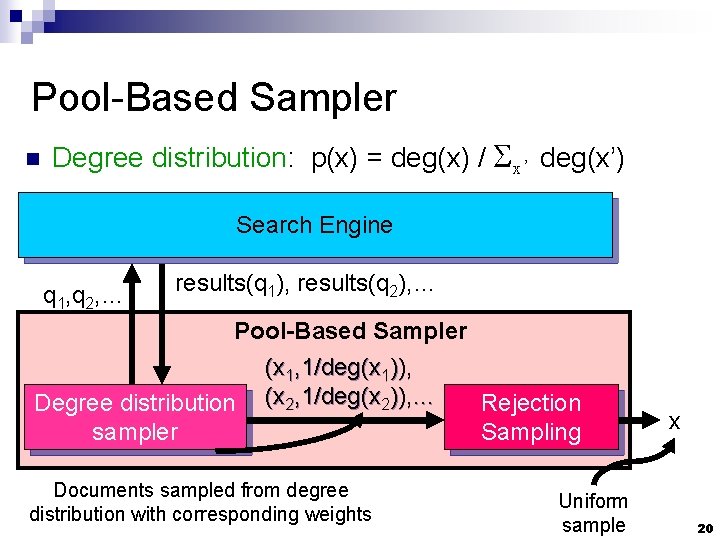

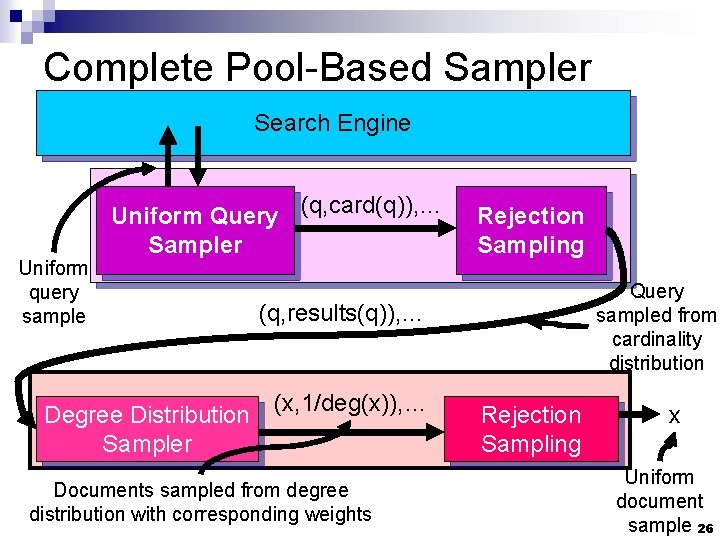

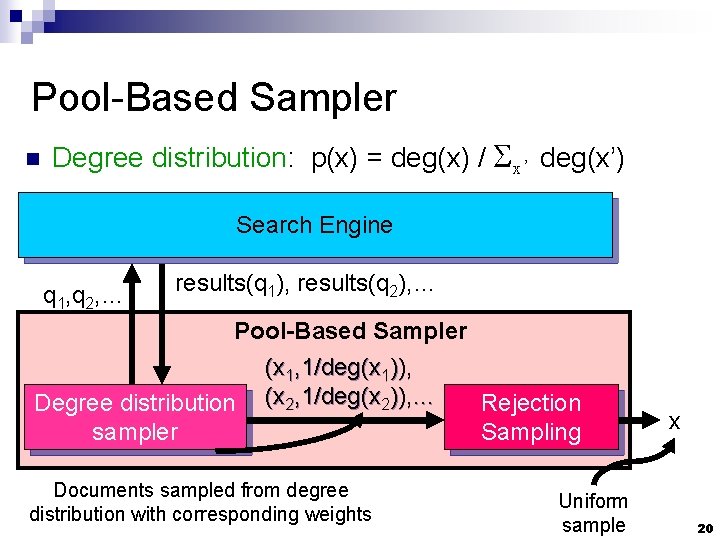

Pool-Based Sampler (slide 20)

- Degree distribution: $p(x) = \deg(x) / \sum_{x'} \deg(x')$
- The degree distribution sampler sends queries $q_1, q_2, \ldots$ to the search engine and receives results($q_1$), results($q_2$), …
- It emits documents sampled from the degree distribution with corresponding weights: $(x_1, 1/\deg(x_1)), (x_2, 1/\deg(x_2)), \ldots$
- Rejection sampling turns these weighted samples into a uniform sample x

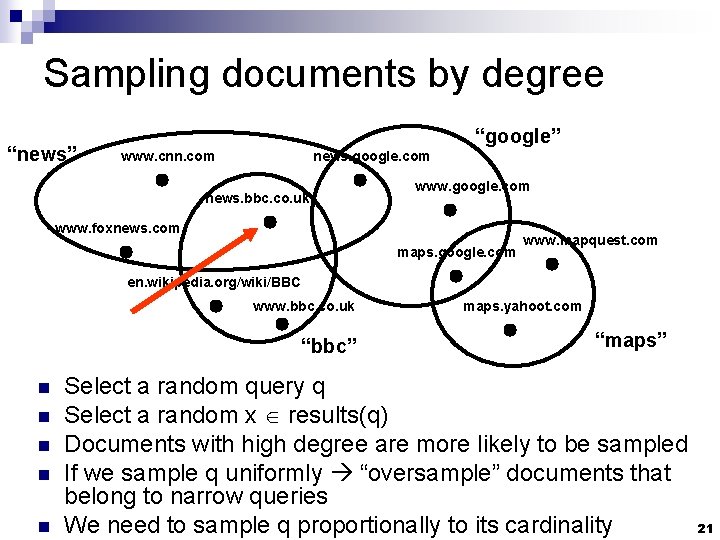

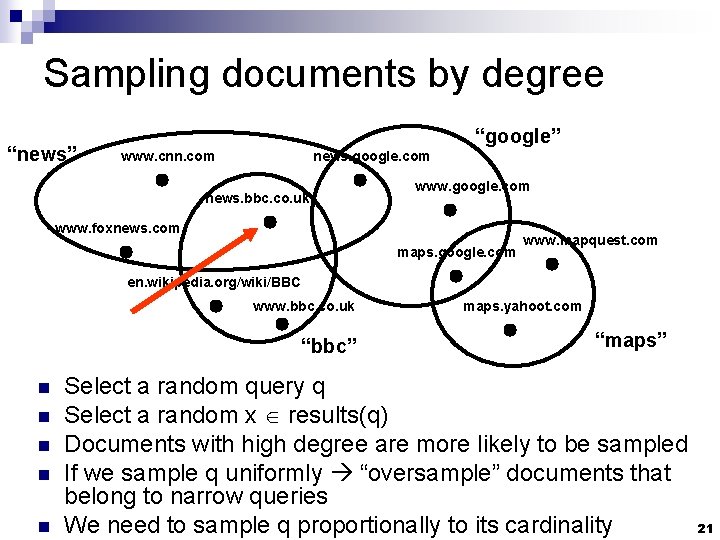

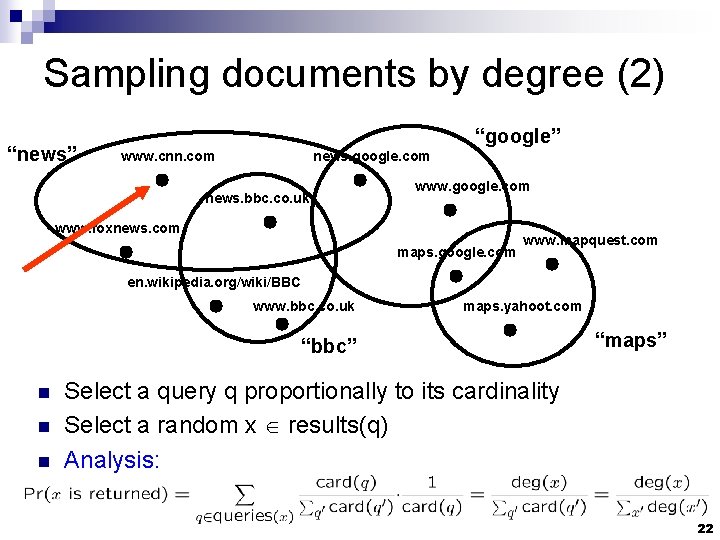

Sampling documents by degree (slide 21)

- Select a random query q
- Select a random x ∈ results(q)
- Documents with high degree are more likely to be sampled
- If we sample q uniformly, we "oversample" documents that belong to narrow queries
- We need to sample q proportionally to its cardinality

Figure: the example hypergraph from slide 12 (queries "news", "google", "bbc", "maps").

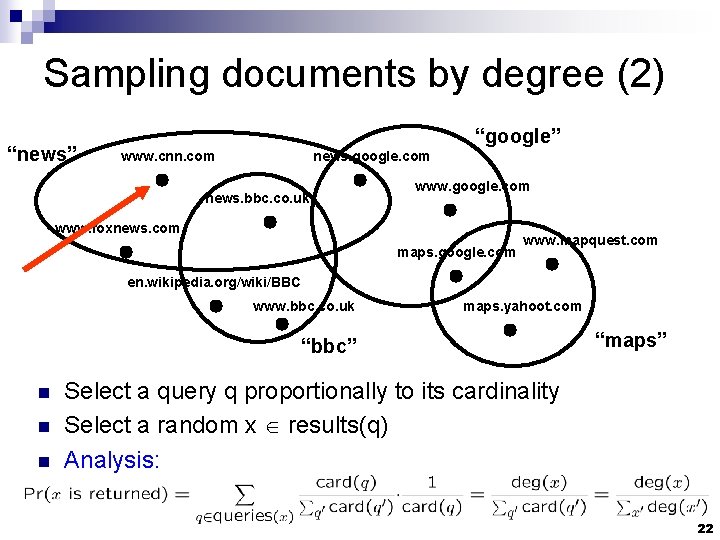

Sampling documents by degree (2) (slide 22)

- Select a query q proportionally to its cardinality
- Select a random x ∈ results(q)
- Analysis: $\Pr[x] = \sum_{q \in \mathrm{queries}(x)} \frac{\mathrm{card}(q)}{\sum_{q' \in P} \mathrm{card}(q')} \cdot \frac{1}{\mathrm{card}(q)} = \frac{\deg(x)}{\sum_{q' \in P} \mathrm{card}(q')} = \frac{\deg(x)}{\sum_{x'} \deg(x')}$

Figure: the example hypergraph from slide 12 (queries "news", "google", "bbc", "maps").
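The effect of the query distribution can be verified exactly on the example hypergraph. The sketch below computes the distribution of x when q is drawn from a given distribution and x is then drawn uniformly from results(q). The document-to-query assignment in `RESULTS` is an assumption (the slides only give the resulting counts), and the function name is hypothetical.

```python
from fractions import Fraction

# Hypothetical assignment of documents to queries (not given in the slides).
RESULTS = {
    "news": {"www.cnn.com", "news.google.com", "news.bbc.co.uk", "www.foxnews.com"},
    "google": {"news.google.com", "www.google.com", "maps.google.com"},
    "bbc": {"news.bbc.co.uk", "en.wikipedia.org/wiki/BBC", "www.bbc.co.uk"},
    "maps": {"maps.google.com", "www.mapquest.com", "maps.yahoot.com"},
}


def two_step_distribution(results, query_prob):
    """Exact Pr[x] when q ~ query_prob and x is uniform on results(q)."""
    dist = {}
    for q, docs in results.items():
        for x in docs:
            dist[x] = dist.get(x, Fraction(0)) + query_prob[q] * Fraction(1, len(docs))
    return dist


total_card = sum(len(docs) for docs in RESULTS.values())
by_cardinality = {q: Fraction(len(docs), total_card) for q, docs in RESULTS.items()}
uniform_queries = {q: Fraction(1, len(RESULTS)) for q in RESULTS}

p_card = two_step_distribution(RESULTS, by_cardinality)
p_unif = two_step_distribution(RESULTS, uniform_queries)

# Both documents have degree 1, but www.mapquest.com sits in a narrower query.
print(p_card["www.cnn.com"], p_card["www.mapquest.com"])
print(p_unif["www.cnn.com"], p_unif["www.mapquest.com"])
```

Under cardinality-proportional query sampling the two degree-1 documents get the same probability. Under uniform query sampling the one in the 3-result query is favoured over the one in the 4-result query, which is the oversampling of narrow queries mentioned on the previous slide.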

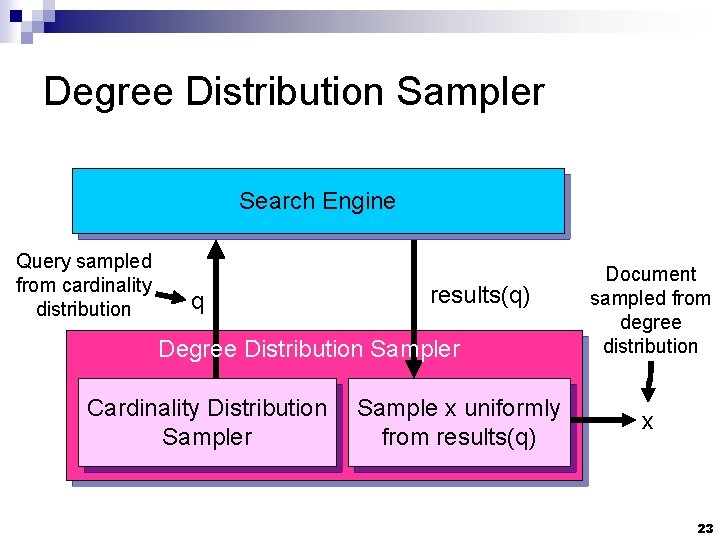

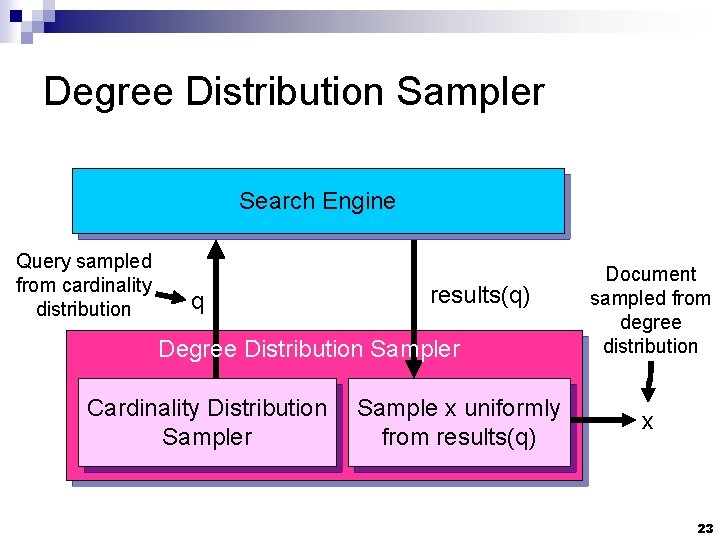

Degree Distribution Sampler (slide 23)

- The cardinality distribution sampler produces a query q sampled from the cardinality distribution
- q is submitted to the search engine, which returns results(q)
- Sample x uniformly from results(q)
- Output: a document x sampled from the degree distribution

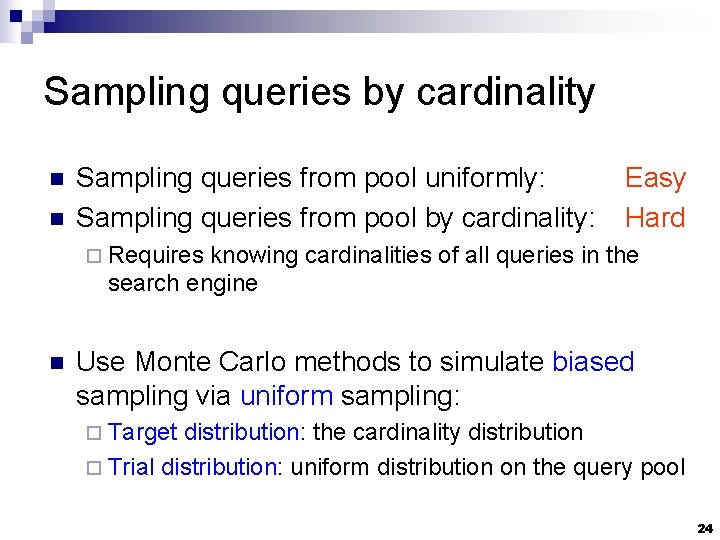

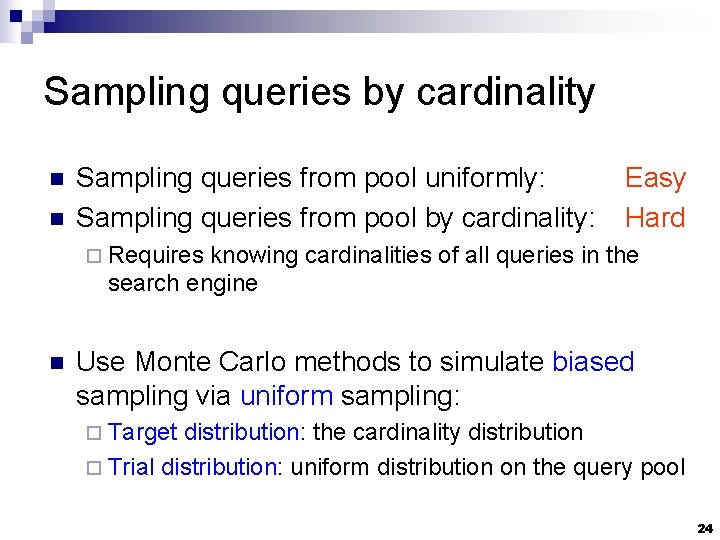

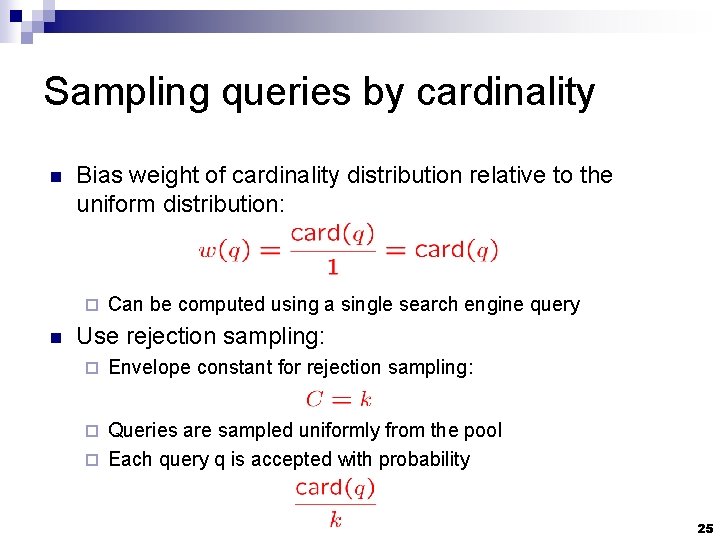

Sampling queries by cardinality (slide 24)

- Sampling queries from pool uniformly: easy
- Sampling queries from pool by cardinality: hard
  - Requires knowing cardinalities of all queries in the search engine
- Use Monte Carlo methods to simulate biased sampling via uniform sampling:
  - Target distribution: the cardinality distribution
  - Trial distribution: uniform distribution on the query pool

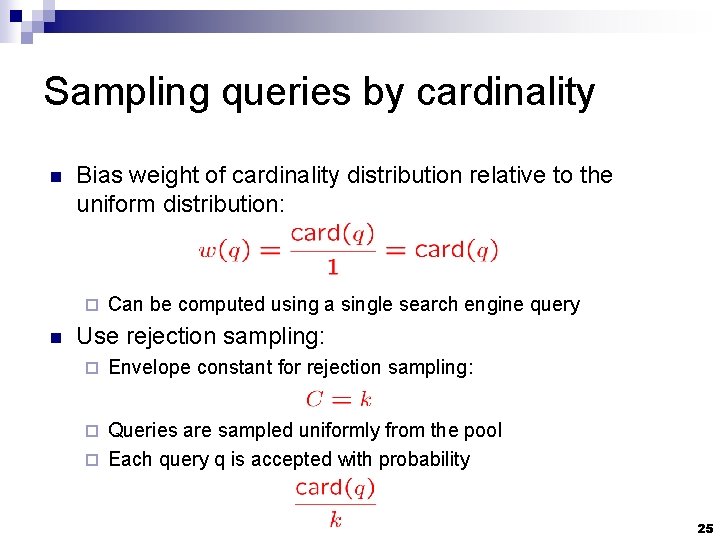

Sampling queries by cardinality (slide 25)

- Bias weight of cardinality distribution relative to the uniform distribution: $w(q) = \mathrm{card}(q)$
  - Can be computed using a single search engine query
- Use rejection sampling:
  - Envelope constant for rejection sampling: $C = k$
  - Queries are sampled uniformly from the pool
  - Each query q is accepted with probability $\mathrm{card}(q) / k$
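A minimal sketch of this step follows. The formulas on this slide did not survive extraction, so the sketch assumes the natural reading: bias weight card(q), envelope constant k (the result limit), and acceptance probability card(q)/k. Overflowing queries are simply skipped, which is one of the options listed on a later slide. The function and parameter names are hypothetical.

```python
import random


def sample_query_by_cardinality(pool, card, k, rng):
    """Sample a query with probability proportional to its cardinality.

    pool is a list of queries, card(q) returns the number of results for q
    (one search engine request in practice), and k is the result limit,
    used here as the envelope constant (an assumption of this sketch).
    """
    while True:
        q = rng.choice(pool)   # trial distribution: uniform on the pool
        c = card(q)
        if c > k:
            continue           # overflowing query: skip it
        if rng.random() < c / k:
            return q           # accepted with probability card(q) / k


# Toy pool with made-up cardinalities.
CARDS = {"a": 1, "b": 3}
rng = random.Random(0)
draws = [sample_query_by_cardinality(list(CARDS), CARDS.get, 4, rng) for _ in range(1000)]
print(draws.count("a"), draws.count("b"))
```

Only the cardinality of the proposed query is needed at each step, so the sampler never has to know the cardinalities of all queries in the pool up front.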

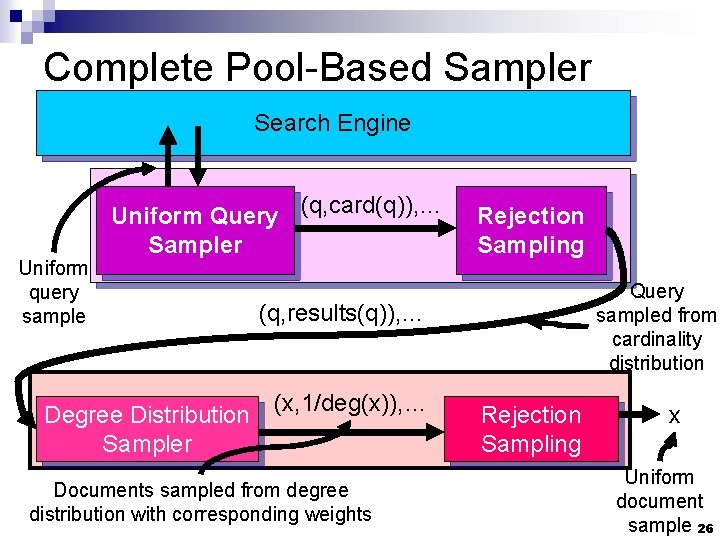

Complete Pool-Based Sampler (slide 26)

- The uniform query sampler draws a uniform query sample from the pool and obtains (q, card(q)), … via the search engine
- Rejection sampling turns these into a query sampled from the cardinality distribution
- The degree distribution sampler uses (q, results(q)), … to emit documents sampled from the degree distribution with corresponding weights: (x, 1/deg(x)), …
- A second round of rejection sampling outputs x, a uniform document sample
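The whole pipeline can be simulated end to end on the toy index. This is a sketch under several assumptions: the `RESULTS` assignment is hypothetical, `search` stands in for the search engine's public interface, the result limit `K` is set to 4, and deg(x) is computed by brute force over the pool rather than from the document's content.

```python
import random
from collections import Counter

# Hypothetical toy index: which documents each pool query returns.
RESULTS = {
    "news": {"www.cnn.com", "news.google.com", "news.bbc.co.uk", "www.foxnews.com"},
    "google": {"news.google.com", "www.google.com", "maps.google.com"},
    "bbc": {"news.bbc.co.uk", "en.wikipedia.org/wiki/BBC", "www.bbc.co.uk"},
    "maps": {"maps.google.com", "www.mapquest.com", "maps.yahoot.com"},
}
POOL = sorted(RESULTS)
K = 4  # assumed result limit of the toy search engine


def search(q):
    """Stand-in for the search engine's public interface."""
    return sorted(RESULTS.get(q, ()))


def deg(x):
    """Number of pool queries that return x (brute force over the pool)."""
    return sum(1 for q in POOL if x in RESULTS[q])


def pool_based_sample(rng):
    """Return one (near-)uniform document from the toy index."""
    while True:
        q = rng.choice(POOL)                 # uniform query sample
        docs = search(q)
        if not docs or len(docs) > K:        # skip empty or overflowing queries
            continue
        if rng.random() >= len(docs) / K:    # rejection 1: q ~ cardinality
            continue
        x = rng.choice(docs)                 # x ~ degree distribution
        if rng.random() < 1 / deg(x):        # rejection 2: weight 1/deg(x)
            return x


rng = random.Random(0)
print(Counter(pool_based_sample(rng) for _ in range(2000)).most_common())
```

Restarting from a fresh query after either rejection keeps each attempt independent, which is what rejection sampling requires. In this toy setting every document, including the three with degree 2, should be returned about equally often.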

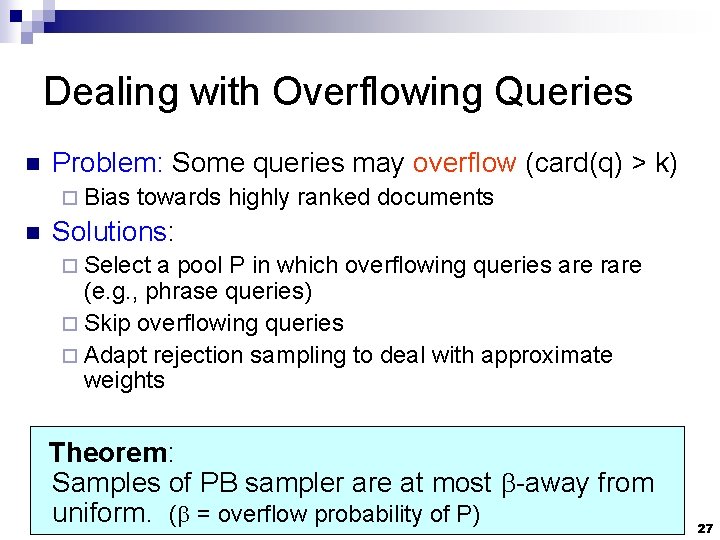

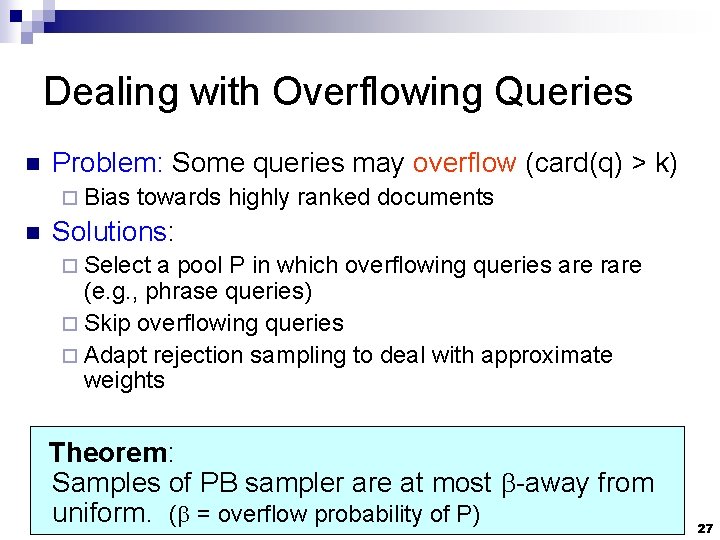

Dealing with Overflowing Queries (slide 27)

- Problem: some queries may overflow (card(q) > k)
  - Bias towards highly ranked documents
- Solutions:
  - Select a pool P in which overflowing queries are rare (e.g., phrase queries)
  - Skip overflowing queries
  - Adapt rejection sampling to deal with approximate weights

Theorem: Samples of the PB sampler are at most $\epsilon$-away from uniform ($\epsilon$ = overflow probability of P).

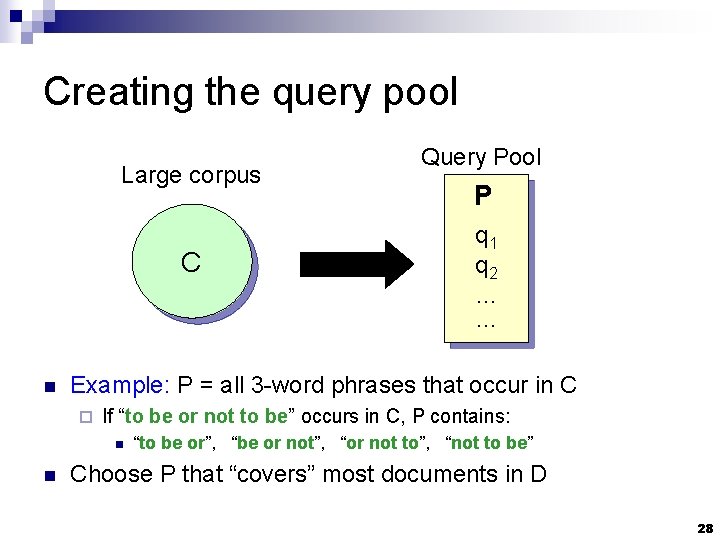

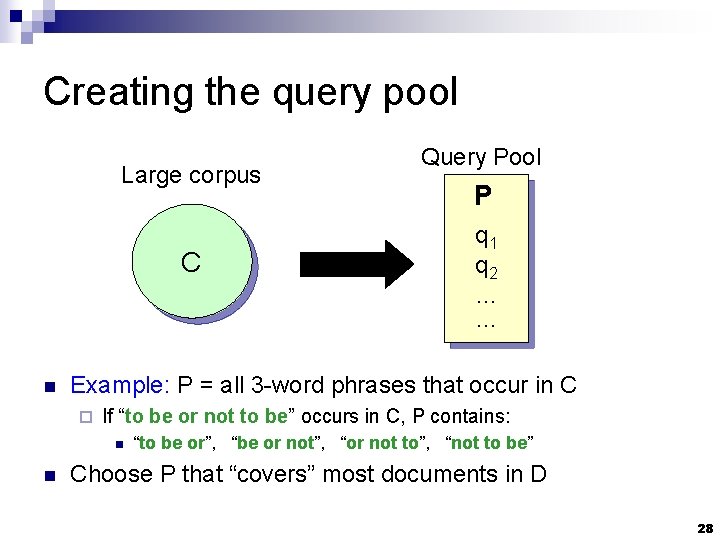

Creating the query pool (slide 28)

- A large corpus C is processed into the query pool P = { $q_1$, $q_2$, … }
- Example: P = all 3-word phrases that occur in C
  - If "to be or not to be" occurs in C, P contains: "to be or", "be or not", "or not to", "not to be"
- Choose P that "covers" most documents in D
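The 3-word phrase example can be reproduced with a sliding window over each document. This is a minimal sketch: the function name is hypothetical and tokenization is plain whitespace splitting, which a real corpus would likely need to refine.

```python
def phrase_pool(corpus, n=3):
    """Collect every n-word phrase that occurs in any document of the corpus."""
    pool = set()
    for document in corpus:
        words = document.split()
        for i in range(len(words) - n + 1):
            pool.add(" ".join(words[i:i + n]))
    return pool


print(sorted(phrase_pool(["to be or not to be"])))
```

Documents shorter than n words contribute nothing to the pool, so they can only be reached if some other pool query happens to return them.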

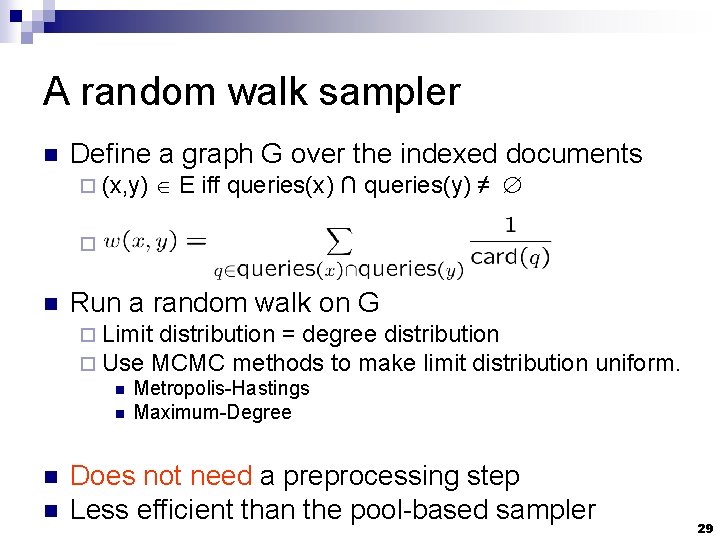

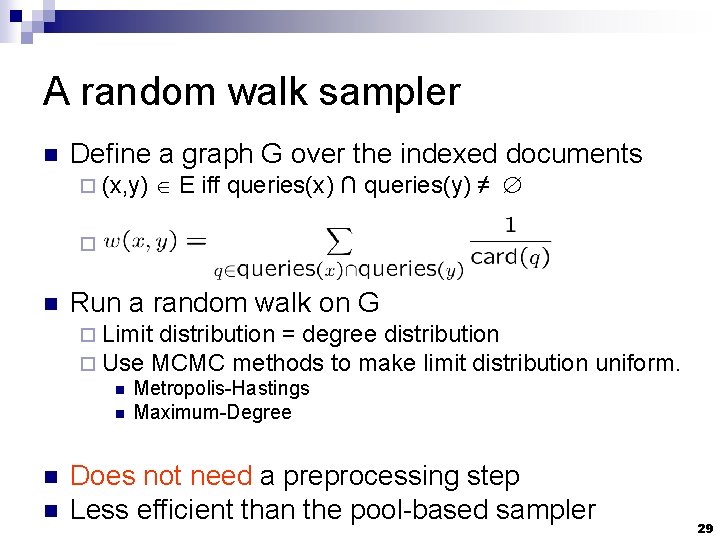

A random walk sampler (slide 29)

- Define a graph G over the indexed documents
  - (x, y) ∈ E iff queries(x) ∩ queries(y) ≠ ∅
- Run a random walk on G
  - Limit distribution = degree distribution
  - Use MCMC methods to make the limit distribution uniform:
    - Metropolis-Hastings
    - Maximum-Degree
- Does not need a preprocessing step
- Less efficient than the pool-based sampler
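A Metropolis-Hastings correction of the walk can be sketched on the toy index. The assumptions are substantial: G is built explicitly from a hypothetical `RESULTS` table (a real sampler cannot enumerate the index like this), "degree" here means the number of neighbors in G, and the acceptance rule is the textbook Metropolis-Hastings rule for a simple random walk, not necessarily the exact variant used in the talk.

```python
import random
from fractions import Fraction

# Hypothetical toy index: which documents each query returns.
RESULTS = {
    "news": {"www.cnn.com", "news.google.com", "news.bbc.co.uk", "www.foxnews.com"},
    "google": {"news.google.com", "www.google.com", "maps.google.com"},
    "bbc": {"news.bbc.co.uk", "en.wikipedia.org/wiki/BBC", "www.bbc.co.uk"},
    "maps": {"maps.google.com", "www.mapquest.com", "maps.yahoot.com"},
}


def build_graph(results):
    """Connect x and y whenever some query returns both of them."""
    neighbors = {}
    for docs in results.values():
        for x in docs:
            neighbors.setdefault(x, set()).update(docs - {x})
    return neighbors


def mh_step(x, neighbors, rng):
    """One Metropolis-Hastings step: propose a uniform neighbor y and
    accept it with probability min(1, |N(x)| / |N(y)|).
    Assumes every document has at least one neighbor."""
    y = rng.choice(sorted(neighbors[x]))
    accept = min(1.0, len(neighbors[x]) / len(neighbors[y]))
    return y if rng.random() < accept else x


def transition_probability(x, y, neighbors):
    """Exact one-step probability of moving from x to y (for y != x)."""
    if y not in neighbors[x]:
        return Fraction(0)
    nx, ny = len(neighbors[x]), len(neighbors[y])
    return Fraction(1, nx) * min(Fraction(1), Fraction(nx, ny))


G = build_graph(RESULTS)
rng = random.Random(0)
x = "www.cnn.com"
for _ in range(50):
    x = mh_step(x, G, rng)
print("document after 50 steps:", x)
```

The transition probabilities work out to 1 / max(|N(x)|, |N(y)|), which is symmetric in x and y, so the uniform distribution is stationary for this chain. How many steps are needed before the walk is close to uniform is a separate question that this sketch does not address.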

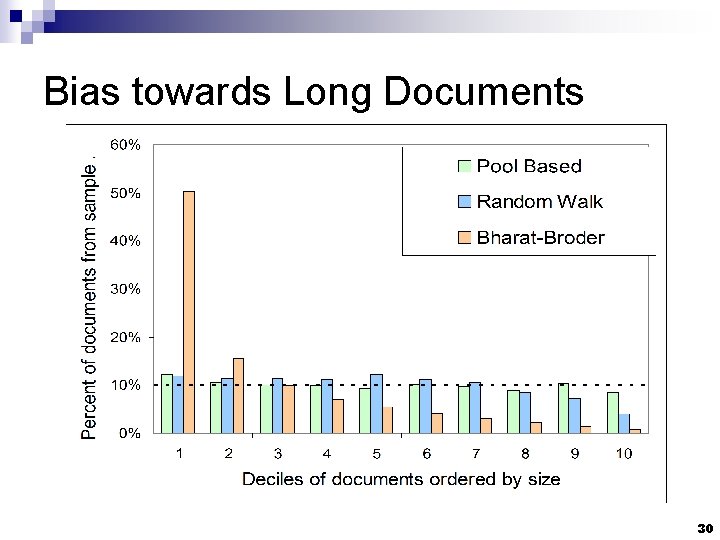

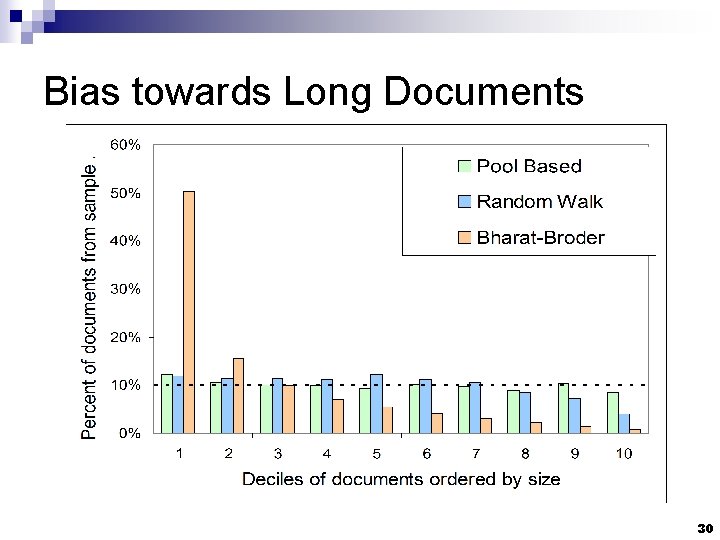

Bias towards Long Documents (slide 30)

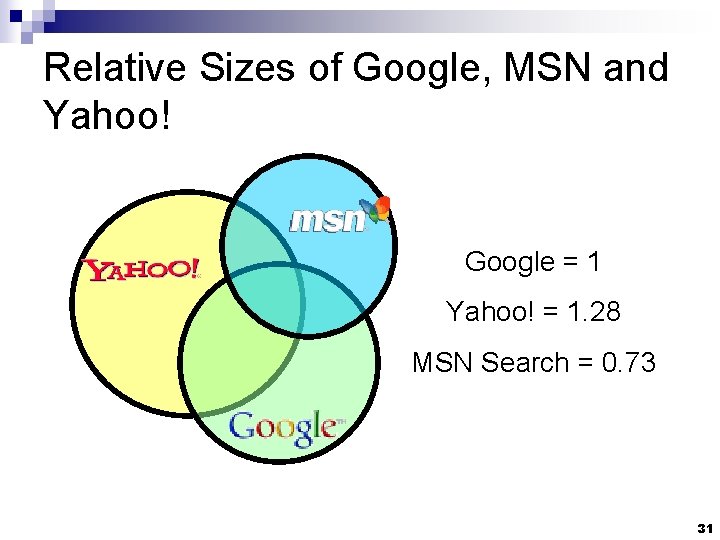

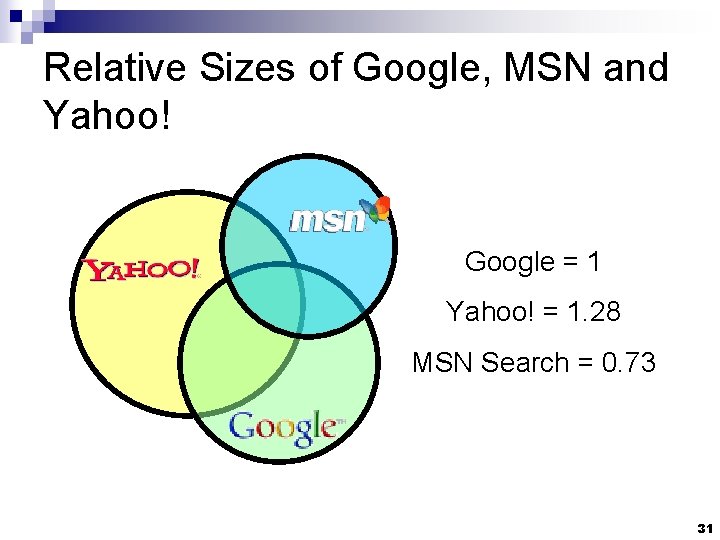

Relative Sizes of Google, MSN and Yahoo! (slide 31)

- Google = 1
- Yahoo! = 1.28
- MSN Search = 0.73

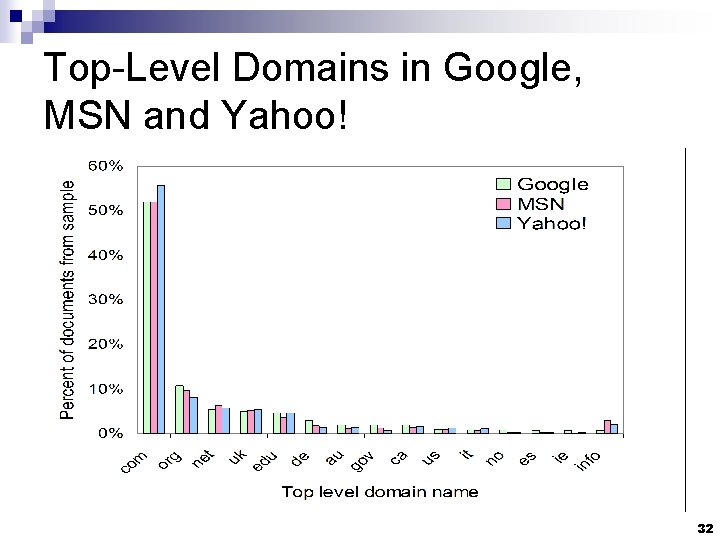

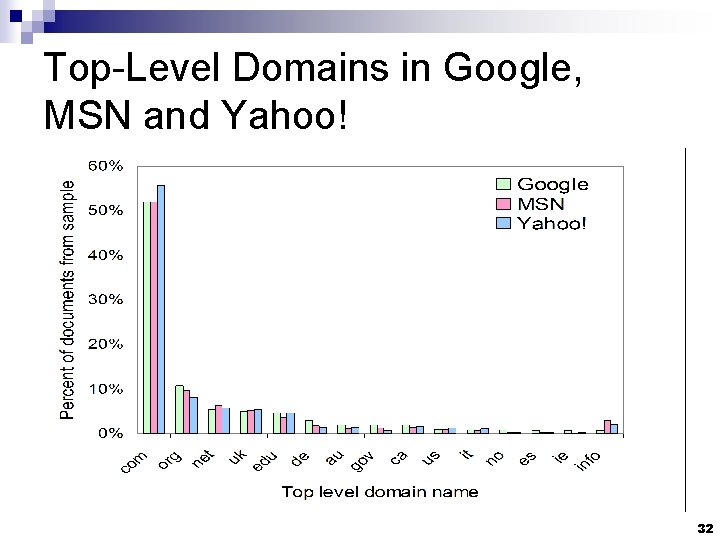

Top-Level Domains in Google, MSN and Yahoo! (slide 32)

Conclusions (slide 33)

- Two new search engine samplers
  - Pool-based sampler
  - Random walk sampler
- Samplers are guaranteed to produce near-uniform samples, under plausible assumptions.
- Samplers show no or little bias in experiments.

Thank You (slide 34)