
Lecture 5: Search Engines

Outline

- Search engines: key tools for ecommerce
  - Buyers and sellers must find each other
- How do they work?
- How much do they index?
- Are they reliable?
- How are hits ordered?
- Can the order be changed?

Search Engines

- Tools for finding information on the Web
  - Problem: "hidden" databases (content crawlers cannot reach), e.g. New York Times
- Directory
  - A hand-constructed hierarchy of topics (e.g. Yahoo)
- Search engine
  - A machine-constructed index (usually by keyword)
- There are so many search engines that we now need search engines to find them: searchenginecolossus.com

Indexing

- Arrangement of data (a data structure) to permit fast searching
- Which list is easier to search?
  - sow fox pig eel yak hen ant cat dog hog
  - ant cat dog eel fox hen hog pig sow yak
- Sorting helps. Why?
  - Permits binary search: about $\log_2 n$ probes into the list
    - $\log_2(1\ \text{billion}) \approx 30$
  - Permits interpolation search: about $\log_2(\log_2 n)$ probes (on average, when the keys are roughly uniformly distributed)
    - $\log_2(\log_2(1\ \text{billion})) \approx 5$
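To make the probe counts concrete, here is a minimal sketch that counts probes for binary search and for interpolation search. It is illustrative only: the function names are hypothetical, and interpolation search assumes numeric keys that are roughly evenly spread, which is the case where its $\log_2(\log_2 n)$ behavior holds.

```python
import math


def binary_search(keys, target):
    """Search a sorted list. Returns (index or -1, number of probes)."""
    lo, hi = 0, len(keys) - 1
    probes = 0
    while lo <= hi:
        mid = (lo + hi) // 2
        probes += 1
        if keys[mid] == target:
            return mid, probes
        if keys[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1, probes


def interpolation_search(keys, target):
    """Search sorted numeric keys. Returns (index or -1, number of probes)."""
    lo, hi = 0, len(keys) - 1
    probes = 0
    while lo <= hi and keys[lo] <= target <= keys[hi]:
        if keys[hi] == keys[lo]:  # all remaining keys equal: one final probe
            probes += 1
            return (lo, probes) if keys[lo] == target else (-1, probes)
        # Guess where the target should be by interpolating between the end keys.
        pos = lo + (target - keys[lo]) * (hi - lo) // (keys[hi] - keys[lo])
        probes += 1
        if keys[pos] == target:
            return pos, probes
        if keys[pos] < target:
            lo = pos + 1
        else:
            hi = pos - 1
    return -1, probes


if __name__ == "__main__":
    words = sorted("sow fox pig eel yak hen ant cat dog hog".split())
    print(binary_search(words, "pig"))
    evens = list(range(0, 2_000_000, 2))  # one million evenly spaced keys
    print(binary_search(evens, 123456), interpolation_search(evens, 123456))
    print(math.log2(10**9), math.log2(math.log2(10**9)))
```

On perfectly even keys like `evens`, the first interpolated guess lands on the target, while binary search needs up to 20 probes for a million keys; real data is less regular, so the log-log figure is an average, not a guarantee.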

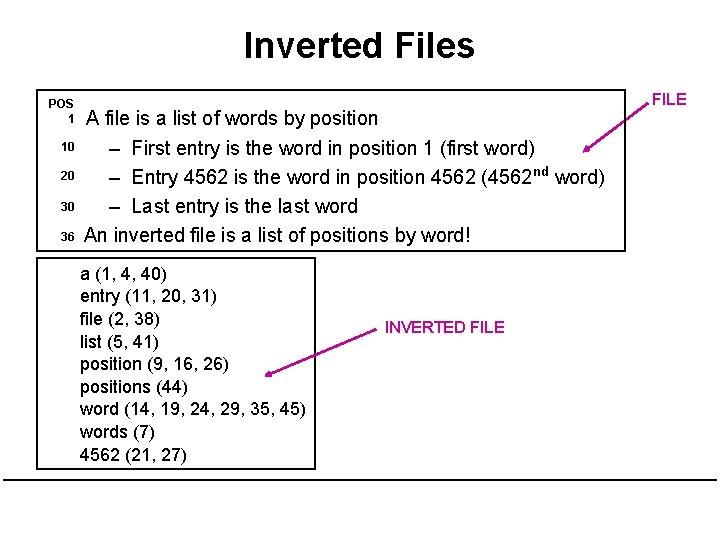

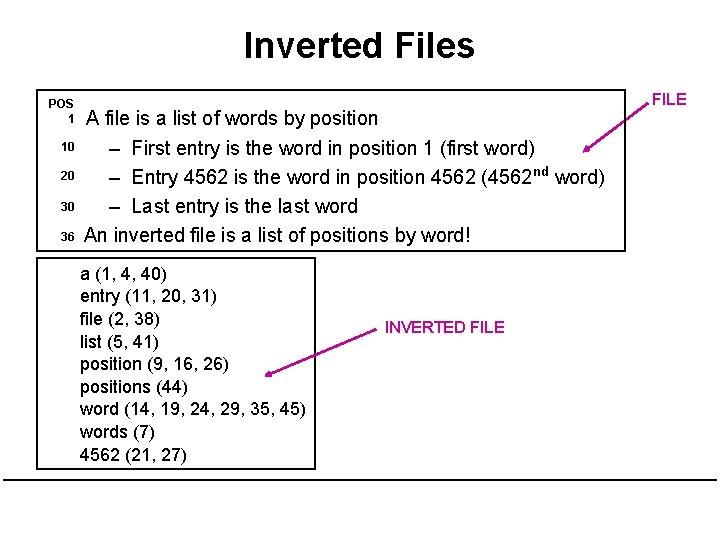

Inverted Files

- A file is a list of words by position
  - First entry is the word in position 1 (first word)
  - Entry 4562 is the word in position 4562 (4562nd word)
  - Last entry is the last word
- An inverted file is a list of positions by word!

Figure: the inverted file for the slide's own text

| Word | Positions |
|---|---|
| a | 1, 4, 40 |
| entry | 11, 20, 31 |
| file | 2, 38 |
| list | 5, 41 |
| position | 9, 16, 26 |
| positions | 44 |
| word | 14, 19, 24, 29, 35, 45 |
| words | 7 |
| 4562 | 21, 27 |
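Building an inverted file amounts to walking the file once and recording each word's position. The sketch below assumes a simple tokenizer (lowercased runs of letters and digits); that choice, and the name `invert`, are illustrative assumptions rather than anything the slide specifies.

```python
import re
from collections import defaultdict


def invert(text):
    """Map each word to the list of 1-based positions where it occurs."""
    index = defaultdict(list)
    words = re.findall(r"\w+", text.lower())  # assumed tokenizer
    for pos, word in enumerate(words, start=1):
        index[word].append(pos)
    return dict(index)


if __name__ == "__main__":
    inv = invert("A file is a list of words by position")
    print(inv["a"], inv["list"], inv["position"])
```

The positions it reports for the opening words (a at 1 and 4, list at 5, words at 7, position at 9) agree with the table above.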

Inverted Files for Multiple Documents

Figure: a word index (lexicon) whose entries point to postings of the form DOCID, OCCUR, POS 1, POS 2, ... For example, "jezebel" occurs 6 times in document 34, 3 times in document 44, 4 times in document 56, ...
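The lexicon-plus-postings layout can be sketched as a dictionary from each word to a list of `(docid, occurrences, positions)` entries sorted by document ID. This is an in-memory illustration under an assumed tokenizer; a real engine stores postings on disk in compressed form.

```python
import re
from collections import defaultdict


def build_index(docs):
    """docs: dict docid -> text.
    Returns word -> [(docid, occurrence count, [positions]), ...] sorted by docid."""
    postings = defaultdict(dict)  # word -> {docid: [positions]}
    for docid, text in docs.items():
        for pos, word in enumerate(re.findall(r"\w+", text.lower()), start=1):
            postings[word].setdefault(docid, []).append(pos)
    return {
        word: [(d, len(p), p) for d, p in sorted(by_doc.items())]
        for word, by_doc in postings.items()
    }


if __name__ == "__main__":
    index = build_index({34: "Jezebel jezebel x", 44: "y jezebel"})
    print(index["jezebel"])
```

Keeping the occurrence count next to the positions lets a ranker use term frequency without scanning the position list.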

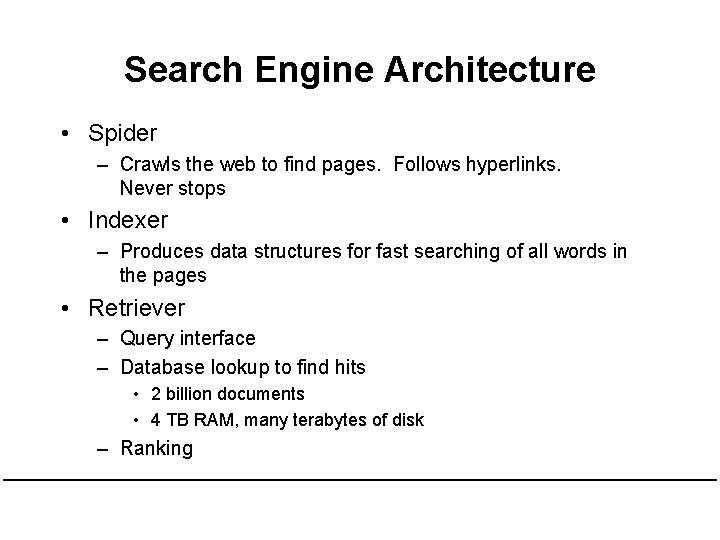

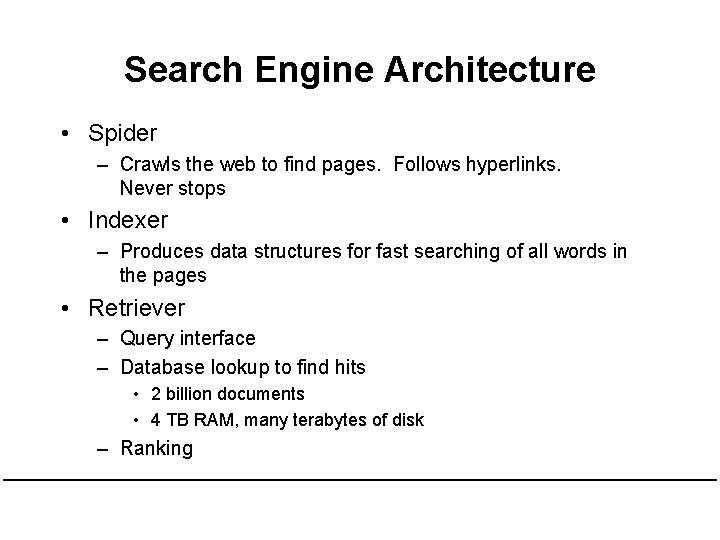

Search Engine Architecture

- Spider
  - Crawls the Web to find pages. Follows hyperlinks. Never stops
- Indexer
  - Produces data structures for fast searching of all words in the pages
- Retriever
  - Query interface
  - Database lookup to find hits
    - 2 billion documents
    - 4 TB RAM, many terabytes of disk
  - Ranking

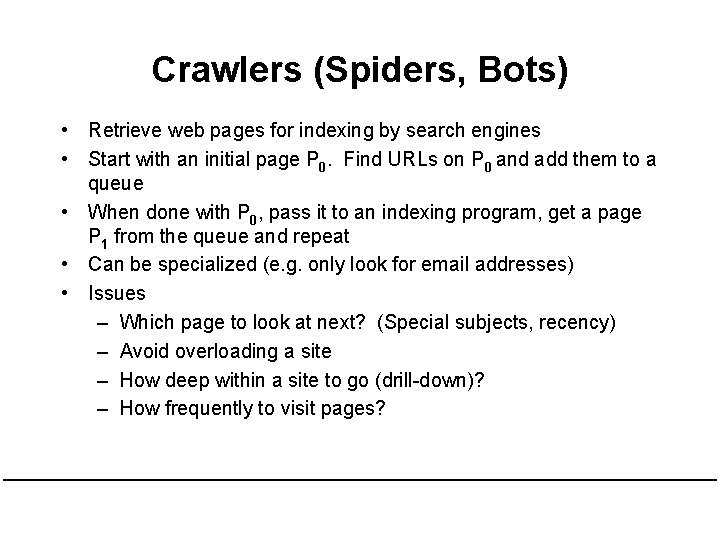

Crawlers (Spiders, Bots)

- Retrieve web pages for indexing by search engines
- Start with an initial page P0. Find URLs on P0 and add them to a queue
- When done with P0, pass it to an indexing program, get a page P1 from the queue and repeat
- Can be specialized (e.g. only look for email addresses)
- Issues
  - Which page to look at next? (Special subjects, recency)
  - Avoid overloading a site
  - How deep within a site to go (drill-down)?
  - How frequently to visit pages?
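The queue-driven loop above can be sketched as a breadth-first crawl. To keep it self-contained, `get_links` is a hypothetical stand-in for "fetch the page and extract its URLs", and two of the listed issues are simplified: depth is a link-hop limit (`max_depth`), and "avoid overloading a site" is approximated by a per-host page cap (`max_per_site`) rather than the request delays a real crawler would use.

```python
from collections import deque
from urllib.parse import urlparse


def crawl(start, get_links, max_depth=2, max_per_site=100):
    """Breadth-first crawl from `start`; returns URLs in the order visited."""
    queue = deque([(start, 0)])  # (url, link distance from start)
    seen = {start}
    per_site = {}
    visited = []
    while queue:
        url, depth = queue.popleft()
        host = urlparse(url).netloc
        if per_site.get(host, 0) >= max_per_site:
            continue  # simplified politeness: stop taking pages from this host
        per_site[host] = per_site.get(host, 0) + 1
        visited.append(url)  # a real crawler would hand the page to the indexer here
        if depth == max_depth:
            continue  # drill-down limit reached; don't follow this page's links
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return visited


if __name__ == "__main__":
    web = {  # a tiny hypothetical link graph
        "http://a.com/": ["http://a.com/1", "http://b.com/"],
        "http://a.com/1": ["http://a.com/2"],
        "http://b.com/": ["http://a.com/"],
        "http://a.com/2": [],
    }
    print(crawl("http://a.com/", lambda u: web.get(u, []), max_depth=2))
```

Using a FIFO queue gives breadth-first order; swapping in a priority queue is one way to address "which page to look at next?"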

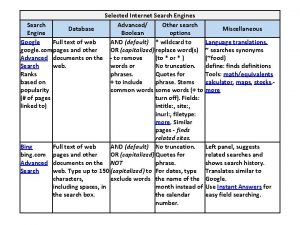

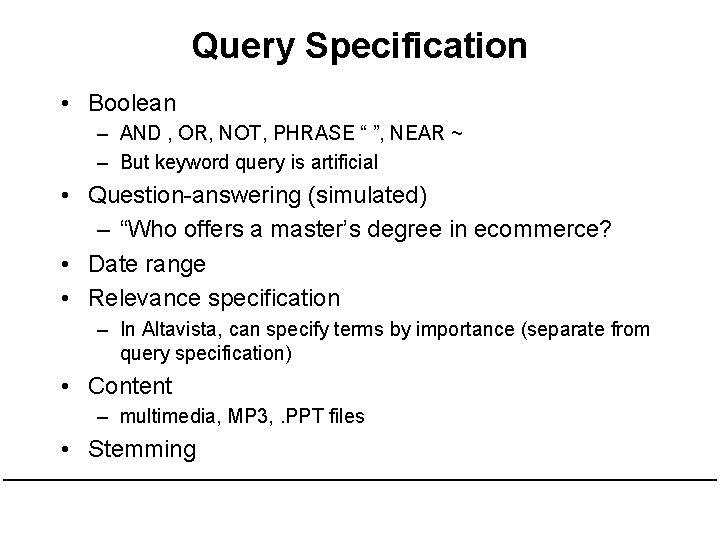

Query Specification

- Boolean
  - AND, OR, NOT, PHRASE “ ”, NEAR ~
  - But keyword queries are artificial
- Question-answering (simulated)
  - “Who offers a master’s degree in ecommerce?”
- Date range
- Relevance specification
  - In Altavista, you can specify terms by importance (separate from the query specification)
- Content
  - multimedia, MP3, .PPT files
- Stemming
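Boolean, phrase and NEAR operators can all be answered from a positional inverted index: AND, OR and NOT are set operations on document IDs, while PHRASE and NEAR also compare word positions. This is a minimal sketch; the function names are hypothetical, query words are assumed to be lowercase, and the NEAR window `k=10` is an assumed default, not a documented value for any engine.

```python
import re
from collections import defaultdict


def positional_index(docs):
    """docs: dict docid -> text. Returns word -> {docid: [positions]}."""
    index = defaultdict(lambda: defaultdict(list))
    for docid, text in docs.items():
        for pos, word in enumerate(re.findall(r"\w+", text.lower()), start=1):
            index[word][docid].append(pos)
    return index


def docs_with(index, word):
    return set(index.get(word, {}))


def query_and(index, a, b):
    return docs_with(index, a) & docs_with(index, b)


def query_or(index, a, b):
    return docs_with(index, a) | docs_with(index, b)


def query_and_not(index, a, b):
    return docs_with(index, a) - docs_with(index, b)


def phrase(index, w1, w2):
    """Documents where w2 immediately follows w1."""
    hits = set()
    for d in query_and(index, w1, w2):
        after = set(index[w2][d])
        if any(p + 1 in after for p in index[w1][d]):
            hits.add(d)
    return hits


def near(index, w1, w2, k=10):
    """Documents where w1 and w2 occur within k words of each other."""
    hits = set()
    for d in query_and(index, w1, w2):
        if any(abs(p1 - p2) <= k for p1 in index[w1][d] for p2 in index[w2][d]):
            hits.add(d)
    return hits


if __name__ == "__main__":
    idx = positional_index({
        1: "master's degree in ecommerce",
        2: "ecommerce degree programs",
        3: "degree in music",
    })
    print(query_and(idx, "degree", "ecommerce"), phrase(idx, "degree", "in"))
```

Phrase and NEAR queries are why the index stores positions and not just document IDs.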

“Advanced” Query Specification

- Multimedia, e.g. Google
- Date range
- Relevance specification
  - In Altavista, you can specify terms by importance (separate from the query specification)
- Content
  - multimedia, MP3, .PPT files
- Stemming
- Language
- Search depth (from the site’s front page)

Ranking (Scoring) Hits

- Hits must be presented in some order
- What order?
  - Relevance, recency, popularity, reliability?
- Some ranking methods
  - Presence of keywords in title of document
  - Closeness of keywords to start of document
  - Frequency of keyword in document
  - Link popularity (how many pages point to this one)
- Can the user control it? Can the page owner control it?
- Can you find out what order is used?
- Spamdexing: influencing retrieval ranking by altering a web page (it puts “spam” in the index)
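The first three ranking signals can be combined into a single score. The sketch below is hypothetical: engines kept their formulas secret, so the weights (`w_title`, `w_early`, `w_freq`) and the exact form of each term are assumptions chosen only to show how title presence, closeness to the start, and frequency might add up.

```python
import re


def score(query_terms, title, body, w_title=3.0, w_early=2.0, w_freq=1.0):
    """Toy relevance score; all weights are illustrative assumptions."""
    title_words = set(re.findall(r"\w+", title.lower()))
    body_words = re.findall(r"\w+", body.lower())
    n = len(body_words) or 1  # avoid dividing by zero on an empty body
    total = 0.0
    for term in (t.lower() for t in query_terms):
        if term in title_words:
            total += w_title  # keyword appears in the title
        if term in body_words:
            first = body_words.index(term)  # 0-based position of first occurrence
            total += w_early * (1 - first / n)  # earlier occurrence earns more
            total += w_freq * body_words.count(term) / n  # length-normalized frequency
    return total


if __name__ == "__main__":
    a = score(["ecommerce"], "Ecommerce Technology", "ecommerce course notes")
    b = score(["ecommerce"], "Course notes", "notes about the ecommerce course")
    print(a, b)
```

A scheme this transparent is easy to spamdex: repeating a keyword in the title and at the top of a page raises its score, which is one reason engines moved toward link-based signals.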

Link Popularity

- How many pages link to this page?
  - on the whole Web
  - in our database?
- www.linkpopularity.com
- Link popularity is used for ranking
  - Many measures
  - Number of links in
  - Weighted number of links in (by weight of referring page)
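Two of the measures listed above are sketched here on a hypothetical link graph. `in_link_counts` is the plain number of links in. For the weighted version, the sketch uses a PageRank-style power iteration, one well-known way to weight each in-link by the weight of the referring page; the slide does not say which weighting any engine used, and the damping factor 0.85 is an assumed, commonly cited value.

```python
def in_link_counts(links):
    """links: dict page -> list of pages it links to. Counts distinct in-links."""
    counts = {p: 0 for p in links}
    for src, targets in links.items():
        for t in set(targets):
            counts[t] = counts.get(t, 0) + 1
    return counts


def weighted_popularity(links, damping=0.85, iterations=50):
    """PageRank-style scores: each page passes its weight to the pages it links to."""
    pages = set(links) | {t for ts in links.values() for t in ts}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new = {p: (1 - damping) / n for p in pages}
        for src in pages:
            targets = set(links.get(src, []))
            if targets:
                share = damping * rank[src] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # Page with no out-links: spread its weight evenly over all pages.
                for p in pages:
                    new[p] += damping * rank[src] / n
        rank = new
    return rank


if __name__ == "__main__":
    graph = {"A": ["C"], "B": ["C"], "C": ["A"], "D": ["C"]}
    print(in_link_counts(graph))
    print(weighted_popularity(graph))
```

In this graph A has only one in-link, yet it scores far above B and D because that link comes from C, the most popular page.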

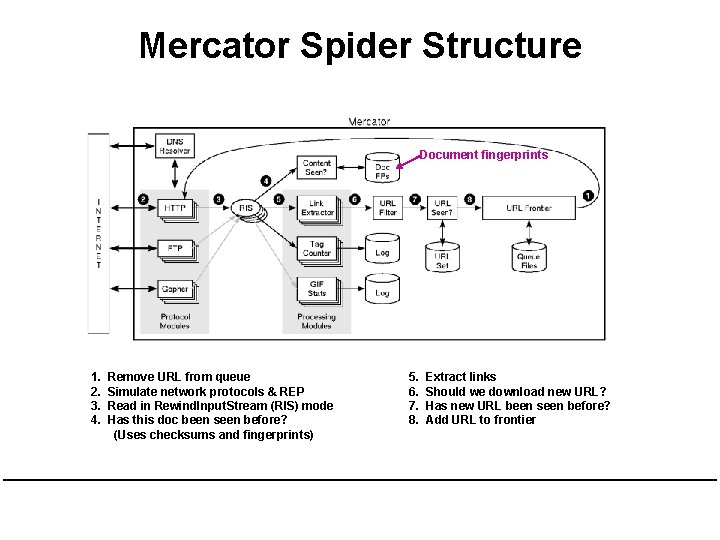

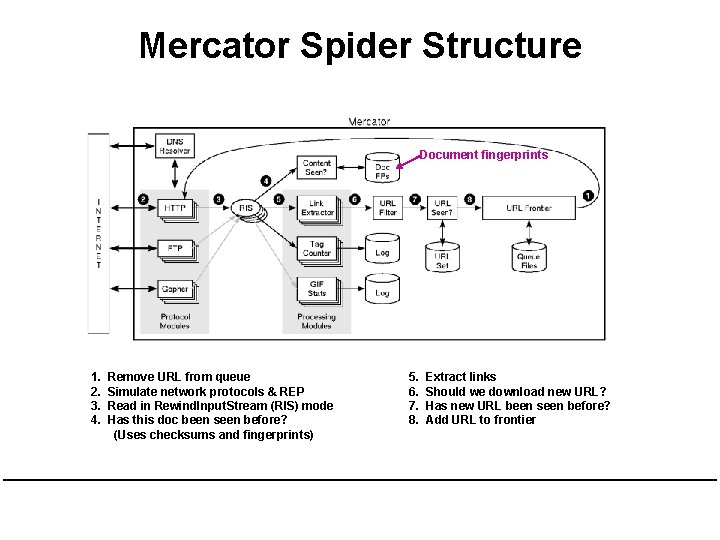

Mercator Spider Structure

Figure: Mercator's processing steps, with a document-fingerprint store used in step 4

1. Remove URL from queue
2. Simulate network protocols & REP (Robots Exclusion Protocol)
3. Read document into a RewindInputStream (RIS)
4. Has this document been seen before? (Uses checksums and fingerprints)
5. Extract links
6. Should we download the new URL?
7. Has the new URL been seen before?
8. Add URL to frontier
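The eight steps map onto a loop with two "seen" tests: one on document content (step 4) and one on URLs (step 7). This is a simplified sketch, not Mercator's code. `fetch`, `extract_links` and `allowed` are hypothetical callables, and the 64-bit fingerprint is taken from SHA-256 as a stand-in for Mercator's own fingerprint function.

```python
import hashlib
from collections import deque


def fingerprint(data: bytes) -> int:
    """64-bit fingerprint (first 8 bytes of SHA-256, a stand-in choice)."""
    return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")


def mercator_loop(seeds, fetch, extract_links, allowed=lambda url: True):
    frontier = deque(seeds)
    url_seen = {fingerprint(u.encode()) for u in seeds}
    content_seen = set()
    processed = []
    while frontier:
        url = frontier.popleft()  # 1. remove URL from queue
        if not allowed(url):  # 2. protocol/REP check (stubbed as a predicate)
            continue
        doc = fetch(url)  # 3. read the whole document (bytes play the RIS role)
        fp = fingerprint(doc)
        if fp in content_seen:  # 4. same content already seen under another URL?
            continue
        content_seen.add(fp)
        processed.append(url)
        for link in extract_links(doc):  # 5. extract links
            if not allowed(link):  # 6. should we download it? (URL filter)
                continue
            ufp = fingerprint(link.encode())
            if ufp in url_seen:  # 7. URL already seen?
                continue
            url_seen.add(ufp)
            frontier.append(link)  # 8. add URL to frontier
    return processed


if __name__ == "__main__":
    pages = {"u1": b"links: u2 u3", "u2": b"same body", "u3": b"same body"}
    links = lambda doc: doc.decode().split()[1:] if doc.startswith(b"links:") else []
    print(mercator_loop(["u1"], pages.__getitem__, links))
```

Here u3 is fetched but not processed further, because its content fingerprint matches u2's; storing fixed-size fingerprints instead of whole documents or URLs keeps both "seen" sets compact.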

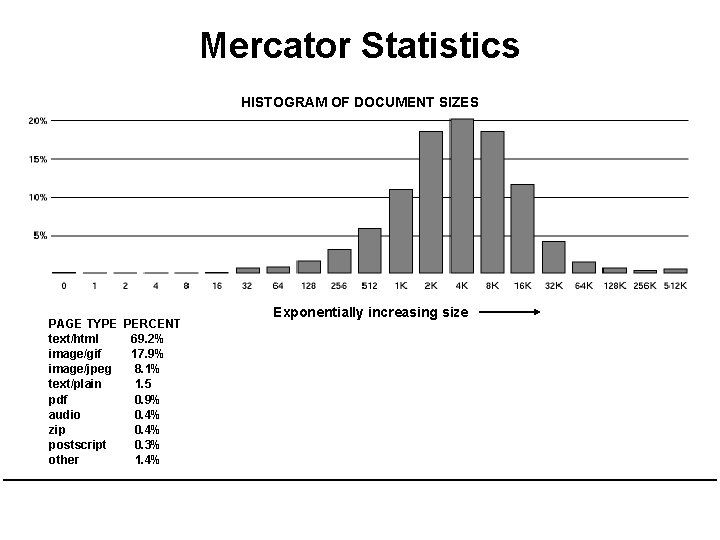

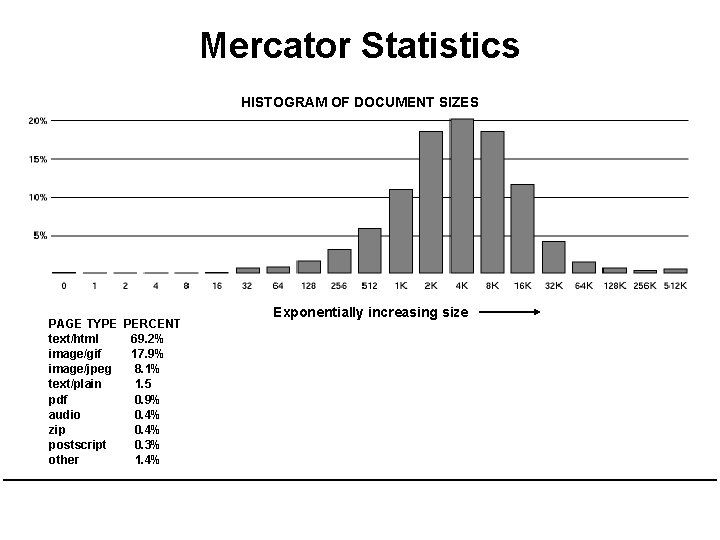

Mercator Statistics

Figure: histogram of document sizes (x-axis: exponentially increasing size)

| Page type | Percent |
|---|---|
| text/html | 69.2% |
| image/gif | 17.9% |
| image/jpeg | 8.1% |
| text/plain | 1.5% |
| pdf | 0.9% |
| audio | 0.4% |
| zip | 0.4% |
| postscript | 0.3% |
| other | 1.4% |

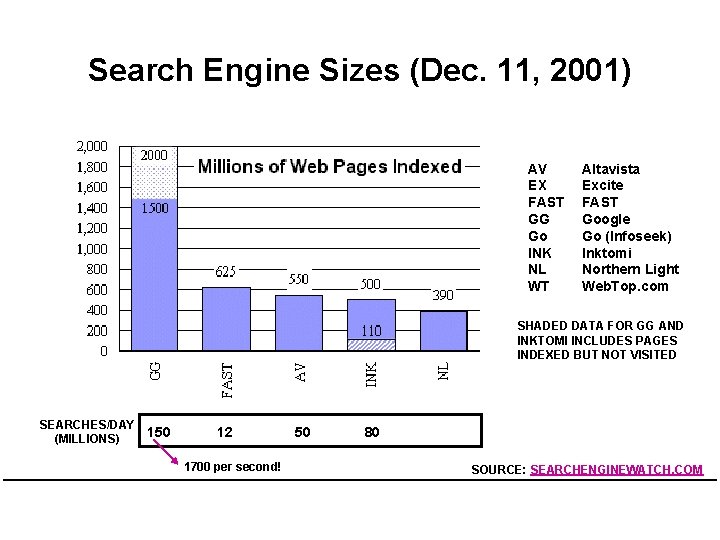

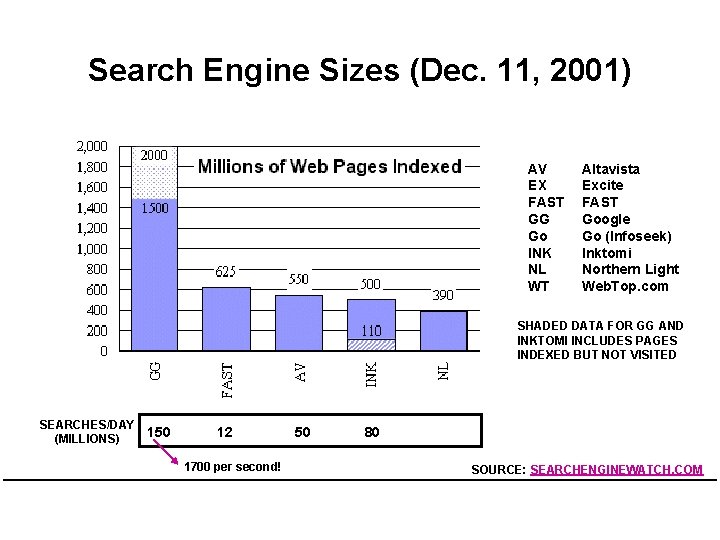

Search Engine Sizes (Dec. 11, 2001)

Figure: index sizes of Altavista (AV), Excite (EX), FAST, Google (GG), Go (Infoseek), Inktomi (INK), Northern Light (NL) and WebTop.com (WT). Shaded data for GG and Inktomi include pages indexed but not visited. Searches per day (millions) noted on the chart: 150 (1,700 per second!), 12, 50, 80. Source: SearchEngineWatch.com
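As a quick check of the chart's "1700 per second!" note, divide 150 million searches per day by the 86,400 seconds in a day:

$$
\frac{150 \times 10^{6}\ \text{searches/day}}{86\,400\ \text{s/day}} \approx 1\,736\ \text{searches/s}
$$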

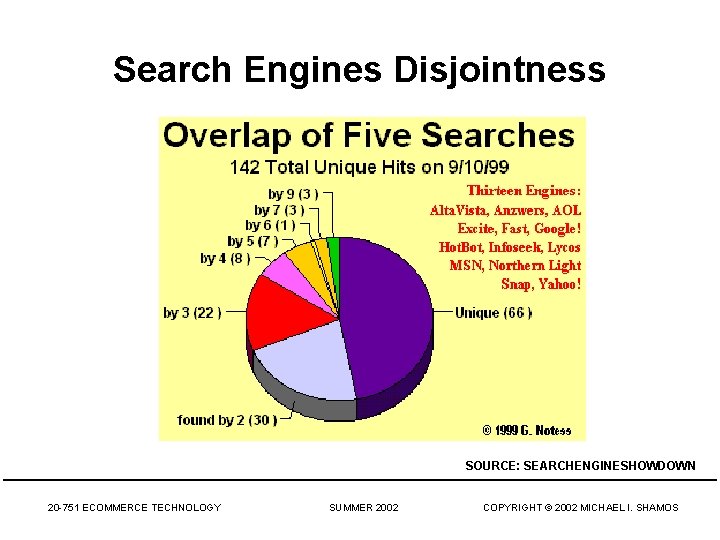

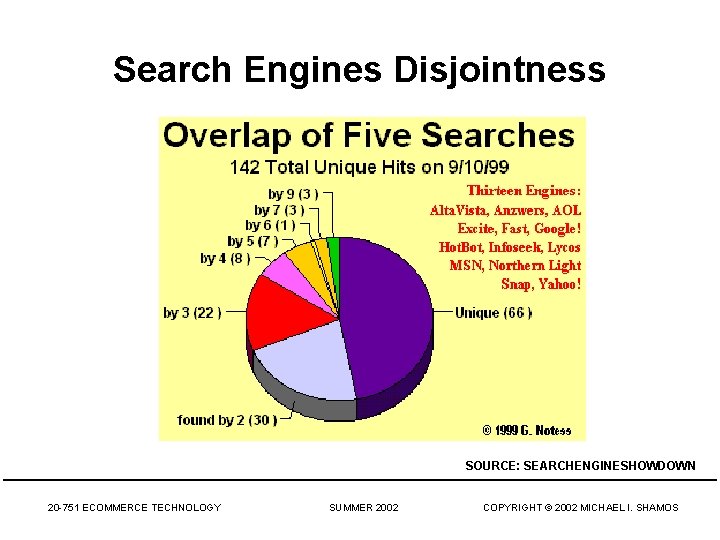

Search Engine Disjointness (source: SearchEngineShowdown)

20-751 Ecommerce Technology, Summer 2002. Copyright © 2002 Michael I. Shamos

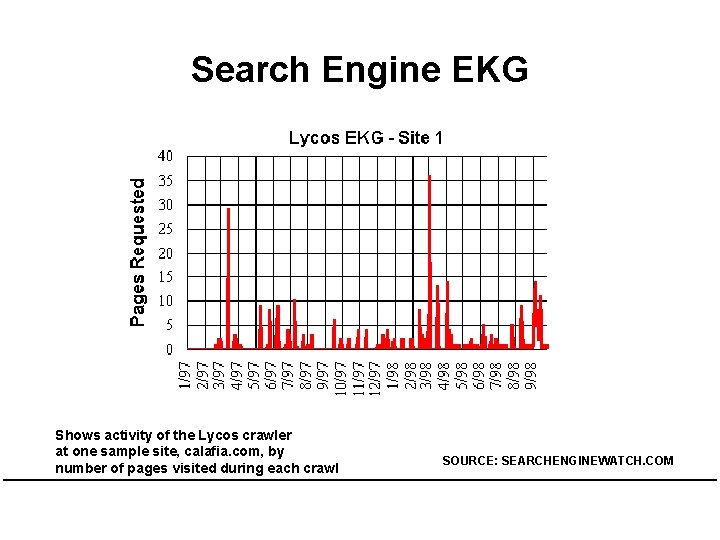

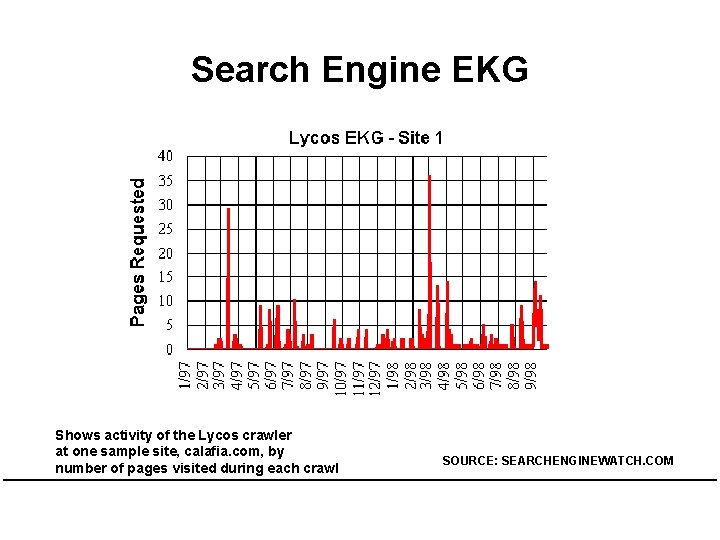

Search Engine EKG: shows activity of the Lycos crawler at one sample site, calafia.com, by number of pages visited during each crawl. Source: SearchEngineWatch.com

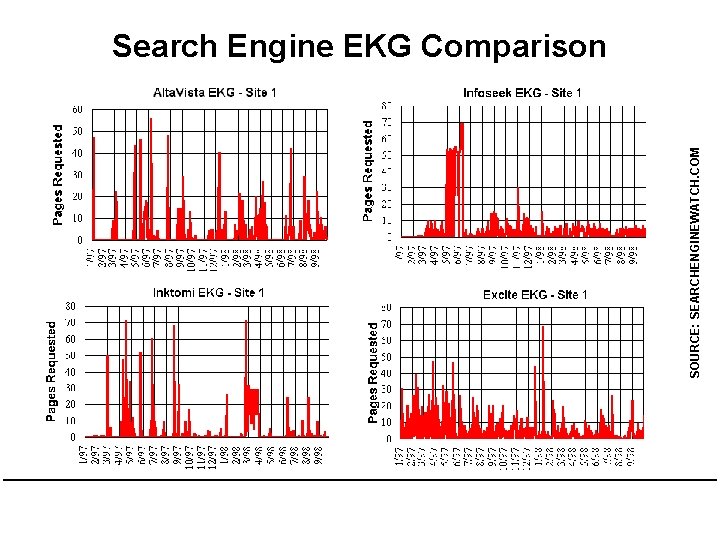

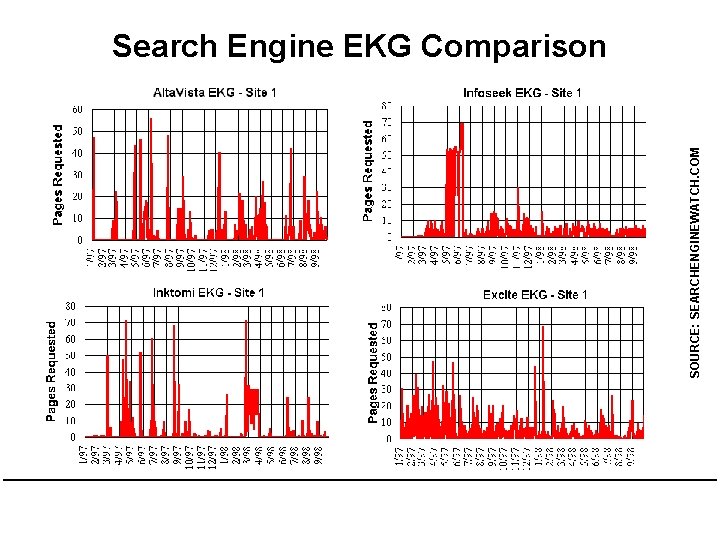

Search Engine EKG Comparison (source: SearchEngineWatch.com)

Search Engine Differences

- Coverage (number of documents)
- Spidering algorithms (visit SpiderCatcher)
  - Frequency, depth of visits
- Indexing policies
- Search interfaces
- Ranking

One solution: use a metasearcher (search agent)

Metasearchers

- All the engines operate differently. They have different
  - sizes
  - query languages
  - crawling algorithms
  - storage policies (stop words, punctuation, fonts)
  - freshness
  - ranking
- Submit the same query to many engines and collect the results
- Metacrawler
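"Submit the same query to many engines and collect the results" leaves open how to merge rankings that were computed differently. The sketch below uses Borda-style fusion, one simple merging scheme; it is not Metacrawler's documented method. Each engine is a hypothetical callable from query to a ranked list of URLs, and `depth` is an assumed cutoff.

```python
from collections import defaultdict


def merge_results(result_lists, depth=10):
    """Borda-style fusion: a URL at 0-based rank r in one list earns depth - r points."""
    scores = defaultdict(float)
    for results in result_lists:
        seen = set()
        for rank, url in enumerate(results[:depth]):
            if url in seen:
                continue  # count each URL at most once per engine
            seen.add(url)
            scores[url] += depth - rank
    # Highest total first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda u: (-scores[u], u))


def metasearch(query, engines, depth=10):
    """Send one query to every engine and merge the ranked lists."""
    return merge_results([engine(query) for engine in engines], depth)


if __name__ == "__main__":
    engine1 = lambda q: ["a", "b", "c"]  # hypothetical engines
    engine2 = lambda q: ["b", "d"]
    print(metasearch("ecommerce", [engine1, engine2], depth=3))
```

A URL returned by several engines accumulates points from each, so agreement between engines pushes it up, which is one of the main reasons to use a metasearcher.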

Search Spying

- Peeking at queries as they are being submitted
- Metaspy: spies on Metacrawler
- Ask Jeeves
- TheSearchSite
- Epicurious (recipes)
- StockCharts.com
- Yahoo Buzz Index
- Kanoodle

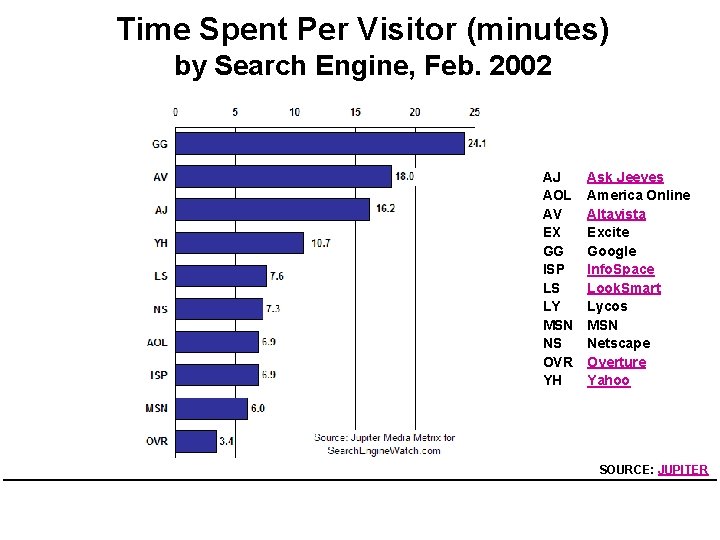

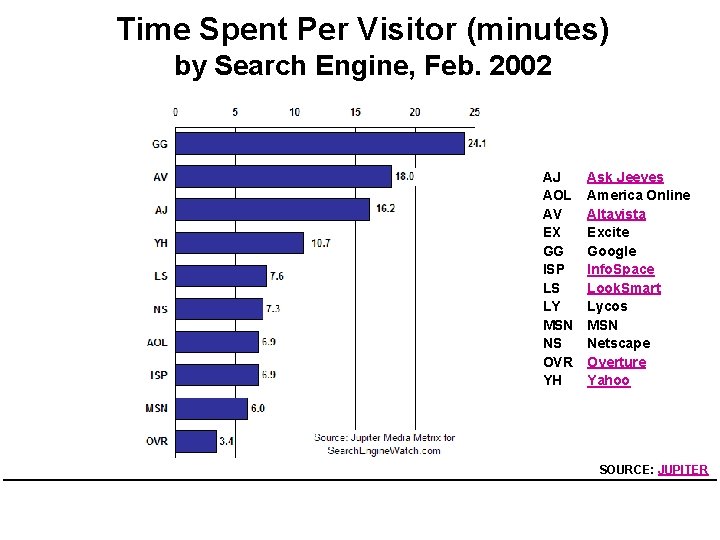

Time Spent per Visitor (minutes) by Search Engine, Feb. 2002

Figure legend: AJ = Ask Jeeves, AOL = America Online, AV = Altavista, EX = Excite, GG = Google, ISP = InfoSpace, LS = LookSmart, LY = Lycos, MSN = MSN, NS = Netscape, OVR = Overture, YH = Yahoo. Source: Jupiter

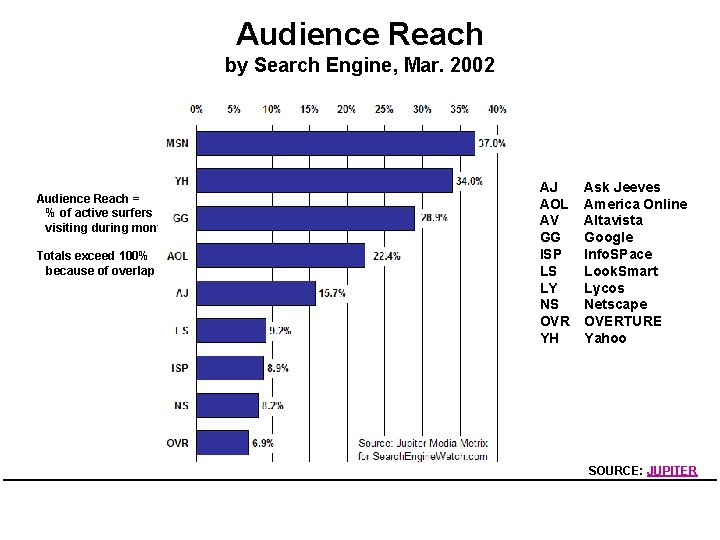

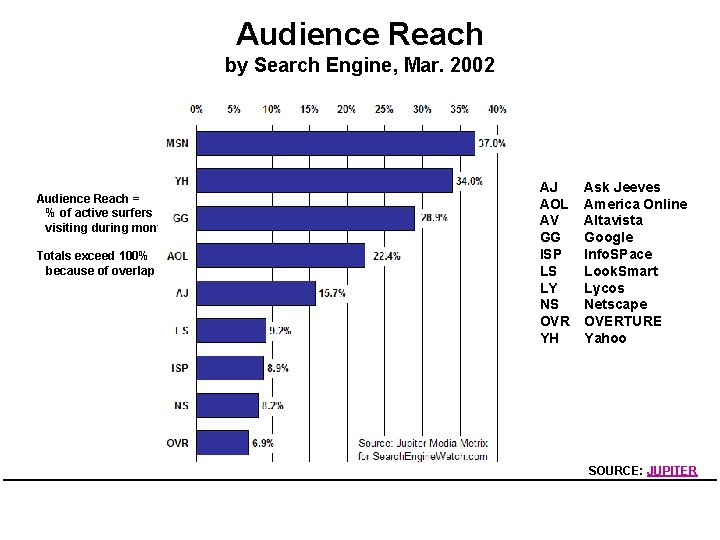

Audience Reach by Search Engine, Mar. 2002

Audience reach = % of active surfers visiting during the month. Totals exceed 100% because of overlap.

Figure legend: AJ = Ask Jeeves, AOL = America Online, AV = Altavista, GG = Google, ISP = InfoSpace, LS = LookSmart, LY = Lycos, NS = Netscape, OVR = Overture, YH = Yahoo. Source: Jupiter

Key Takeaways

- Engines are a critical Web resource
- Very sophisticated, high technology
- But their workings are secret
- They don't cover the Web completely
- Spamdexing is a problem
- New paradigms are needed as the Web grows
  - Keywords are not enough. Try hyperbolic trees
- What about images, music, video?
  - www.corbis.com

Q&A

Robot Exclusion

- You may not want certain pages indexed but still want them viewable by browsers, so you can't simply protect the directory.
- Some crawlers conform to the Robot Exclusion Protocol. Compliance is voluntary. One way to enforce it: a firewall
- Crawlers look for the file robots.txt at the highest directory level in the domain. If the domain is www.ecom.cmu.edu, robots.txt goes in www.ecom.cmu.edu/robots.txt
- A specific document can be shielded from a crawler by adding the line: `<META NAME="ROBOTS" CONTENT="NOINDEX">`
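A compliant crawler has to look for the ROBOTS meta tag in each page it fetches. The sketch below uses Python's standard `html.parser` module (which lowercases tag and attribute names, so `META NAME` and `meta name` are treated alike); the class and function names are hypothetical, and it only checks the `noindex` directive.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = {k: (v or "") for k, v in attrs}  # attribute names arrive lowercased
        if attrs.get("name", "").lower() == "robots":
            for directive in attrs.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def may_index(html: str) -> bool:
    """False if the page asks robots not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" not in parser.directives


if __name__ == "__main__":
    print(may_index('<html><head><META NAME="ROBOTS" CONTENT="NOINDEX"></head></html>'))
```

Like robots.txt, the tag is only a request: nothing stops a non-compliant crawler from indexing the page anyway.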

Robots Exclusion Protocol

- Format of robots.txt
  - Two fields: User-agent, to specify a robot
  - Disallow, to tell the agent what to ignore
- To exclude all robots from a server:
  - `User-agent: *`
  - `Disallow: /`
- To exclude one robot from two directories:
  - `User-agent: WebCrawler`
  - `Disallow: /news/`
  - `Disallow: /tmp/`
- View the robots.txt specification.
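Python's standard library includes a robots.txt parser, `urllib.robotparser.RobotFileParser`, which can check the two example files above. This sketch parses them from strings instead of fetching them; `robots_url` and `make_parser` are hypothetical helper names, and `robots_url` simply applies the "highest directory level in the domain" rule.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

EXCLUDE_ALL = """\
User-agent: *
Disallow: /
"""

EXCLUDE_ONE = """\
User-agent: WebCrawler
Disallow: /news/
Disallow: /tmp/
"""


def robots_url(page_url):
    """robots.txt always lives at the top level of the page's host."""
    parts = urlparse(page_url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


def make_parser(robots_txt):
    """Build a parser from robots.txt text (normally fetched from robots_url)."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


if __name__ == "__main__":
    rp = make_parser(EXCLUDE_ONE)
    print(robots_url("http://www.ecom.cmu.edu/news/today.html"))
    print(rp.can_fetch("WebCrawler", "http://www.ecom.cmu.edu/news/today.html"))
    print(rp.can_fetch("OtherBot", "http://www.ecom.cmu.edu/news/today.html"))
```

Robots not named in the file, like `OtherBot` here, fall through to "allowed", which matches the protocol's default.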

Advantages and disadvantages of meta search engines

Advantages and disadvantages of meta search engines Search engines

Search engines Meta search engine definition

Meta search engine definition Open source search engines

Open source search engines Search engine architecture

Search engine architecture Other search engines

Other search engines Information retrieval slides

Information retrieval slides Search engine architecture

Search engine architecture Search engines information retrieval in practice

Search engines information retrieval in practice Www.sbu

Www.sbu Search engines information retrieval in practice

Search engines information retrieval in practice Search engines information retrieval in practice

Search engines information retrieval in practice 01:640:244 lecture notes - lecture 15: plat, idah, farad

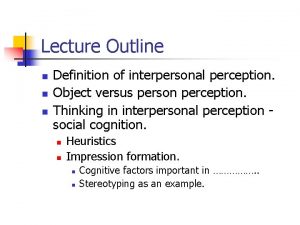

01:640:244 lecture notes - lecture 15: plat, idah, farad Lecture outline example

Lecture outline example Lecture outline example

Lecture outline example Lecture outline example

Lecture outline example Lecture outline meaning

Lecture outline meaning What is sentence outline

What is sentence outline Key partners adalah

Key partners adalah Key partners

Key partners Troubleshooting small engines

Troubleshooting small engines Medieval war machines

Medieval war machines Light vehicle diesel engines

Light vehicle diesel engines Propane injection for gas engines

Propane injection for gas engines Engine types

Engine types Whats engine displacement

Whats engine displacement Edelman engines has 17 billion

Edelman engines has 17 billion Principle of carburetion

Principle of carburetion