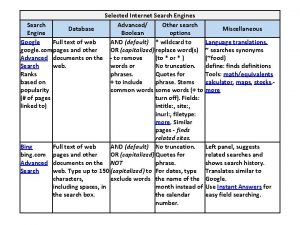

Search Engines

- Slides: 60

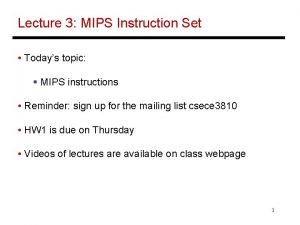

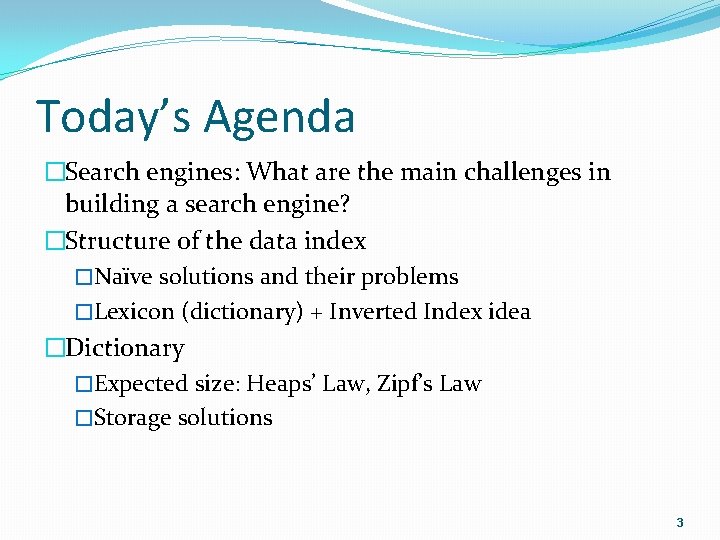

Today’s Agenda

- Search engines: what are the main challenges in building a search engine?
- Structure of the data index
  - Naïve solutions and their problems
  - Lexicon (dictionary) + inverted index idea
- Dictionary
  - Expected size: Heaps’ law, Zipf’s law
  - Storage solutions

Building a Search Engine: Challenges

What do you think?

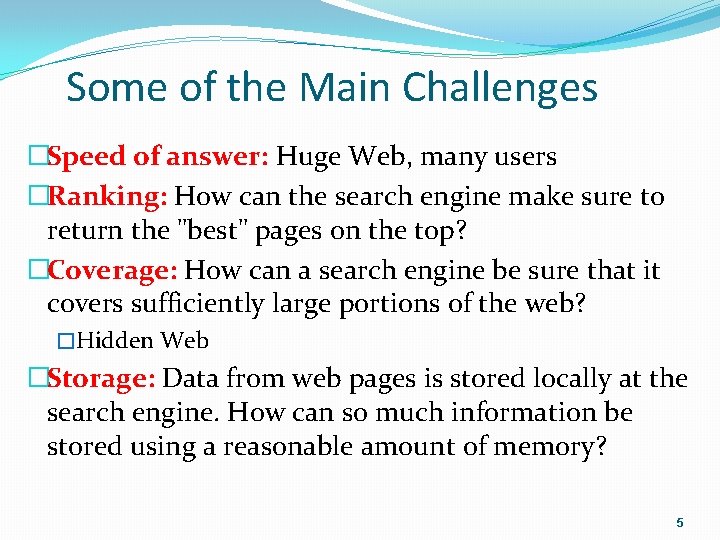

Some of the Main Challenges

- Speed of answer: the Web is huge and there are many users.
- Ranking: how can the search engine make sure to return the "best" pages at the top?
- Coverage: how can a search engine be sure that it covers a sufficiently large portion of the Web?
  - Hidden Web
- Storage: data from web pages is stored locally at the search engine. How can so much information be stored using a reasonable amount of memory?

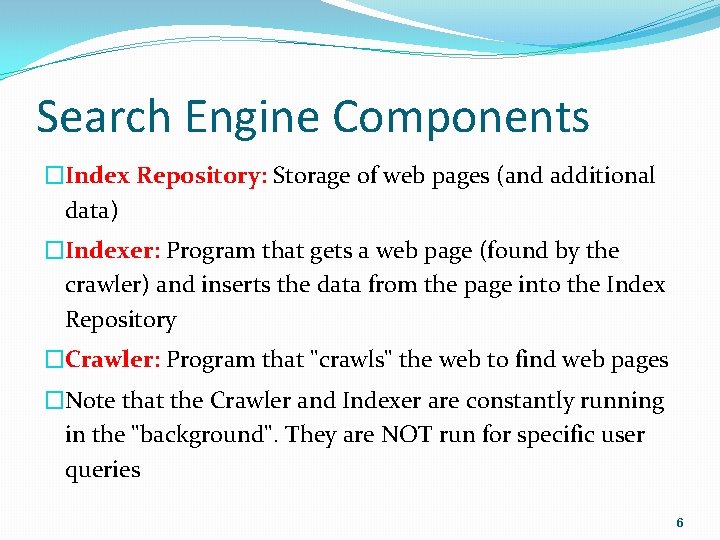

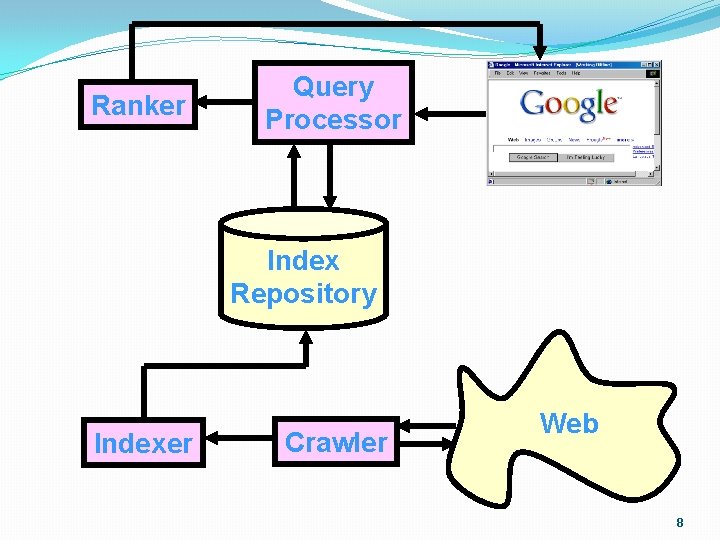

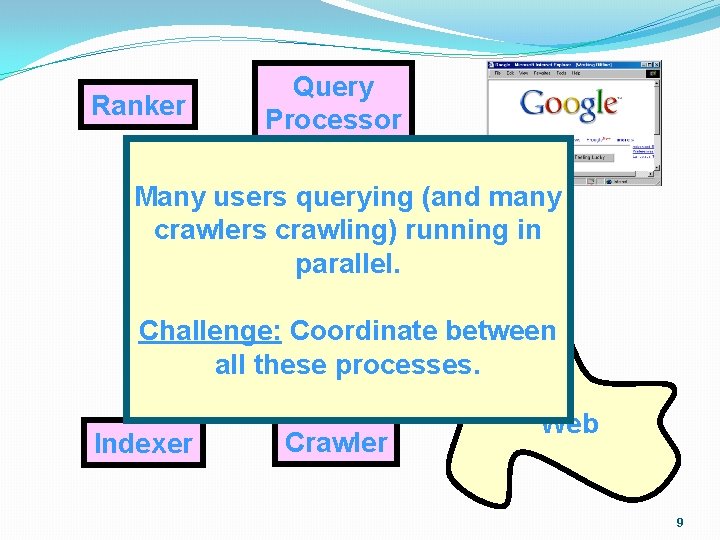

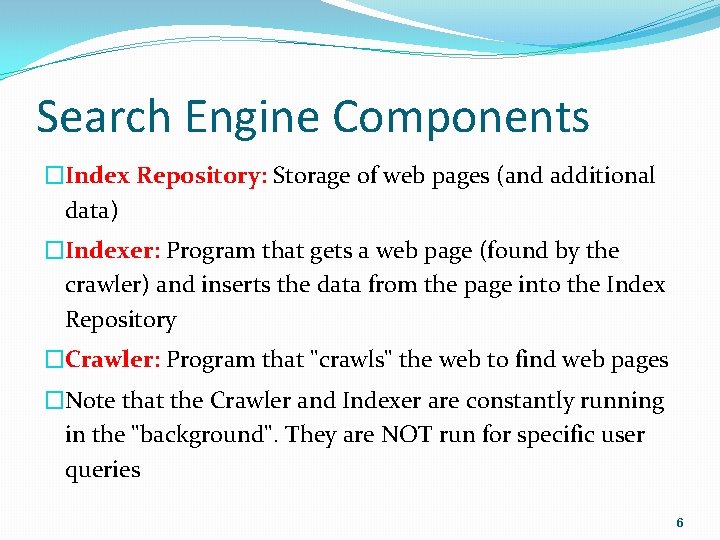

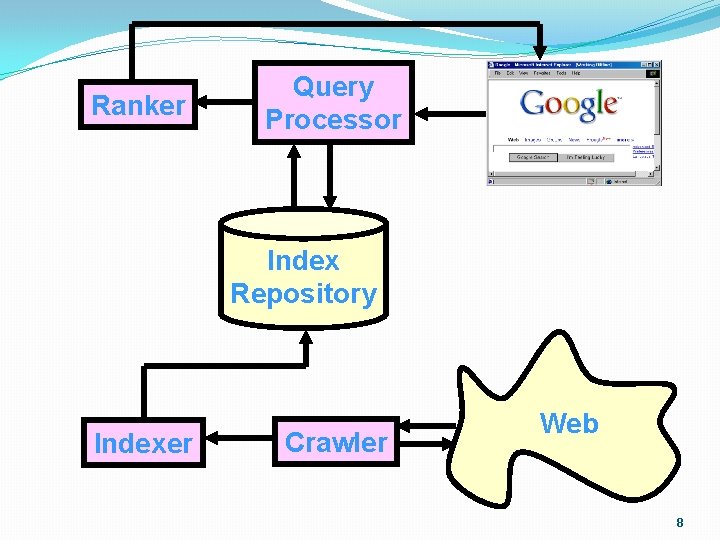

Search Engine Components

- Index Repository: storage of web pages (and additional data)
- Indexer: program that gets a web page (found by the crawler) and inserts the data from the page into the Index Repository
- Crawler: program that "crawls" the Web to find web pages
- Note that the Crawler and Indexer run constantly in the background. They are NOT run for specific user queries.

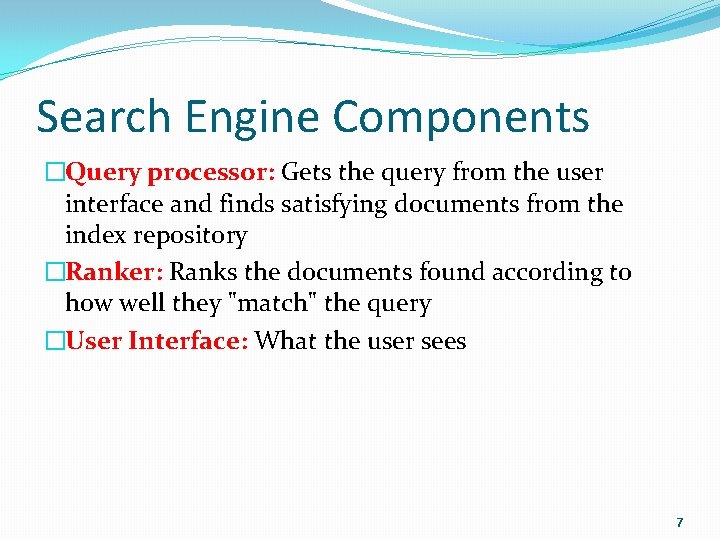

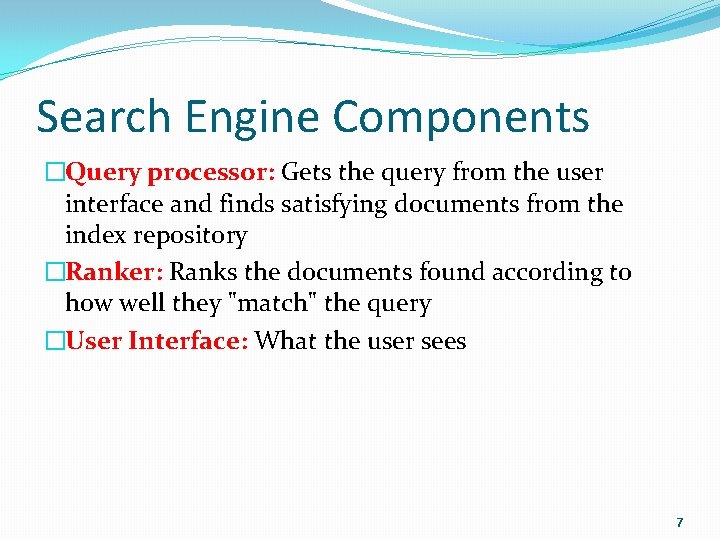

Search Engine Components

- Query Processor: gets the query from the user interface and finds satisfying documents in the Index Repository
- Ranker: ranks the documents found according to how well they "match" the query
- User Interface: what the user sees

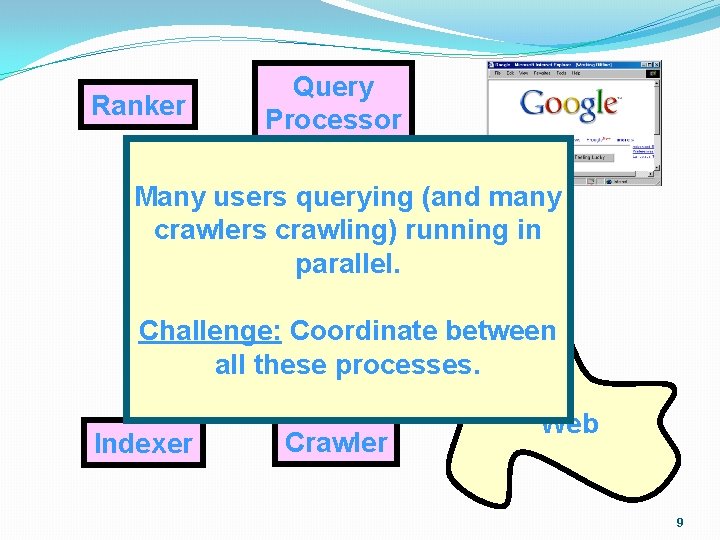

Figure: search engine architecture, with the components Ranker, Query Processor, Index Repository, Indexer, Crawler, and Web.

Figure: the same architecture (Ranker, Query Processor, Index Repository, Indexer, Crawler, Web).

Many users are querying (and many crawlers are crawling) in parallel. Challenge: coordinate between all these processes.

Information Retrieval

- Information Retrieval (IR) is finding material (usually documents) of an unstructured nature (usually text) that satisfies an information need from within large collections (usually stored on computers).

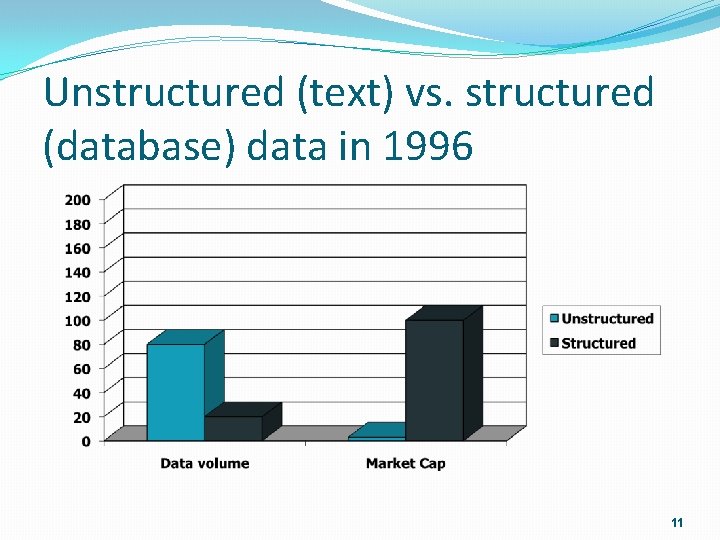

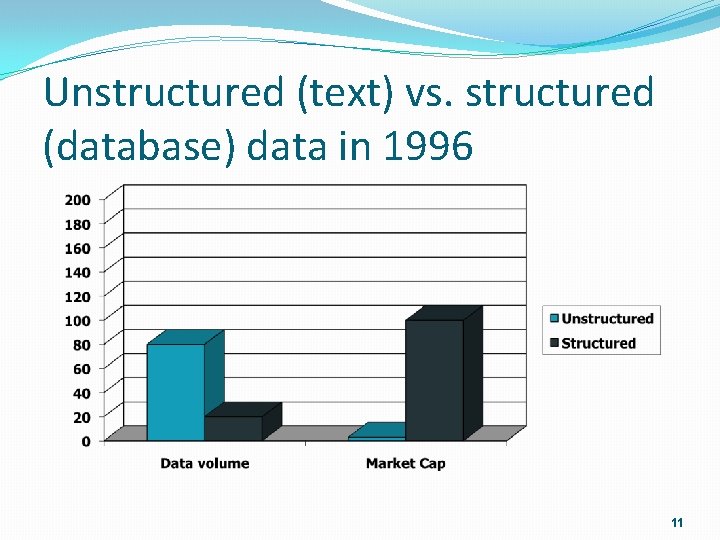

Unstructured (text) vs. structured (database) data in 1996

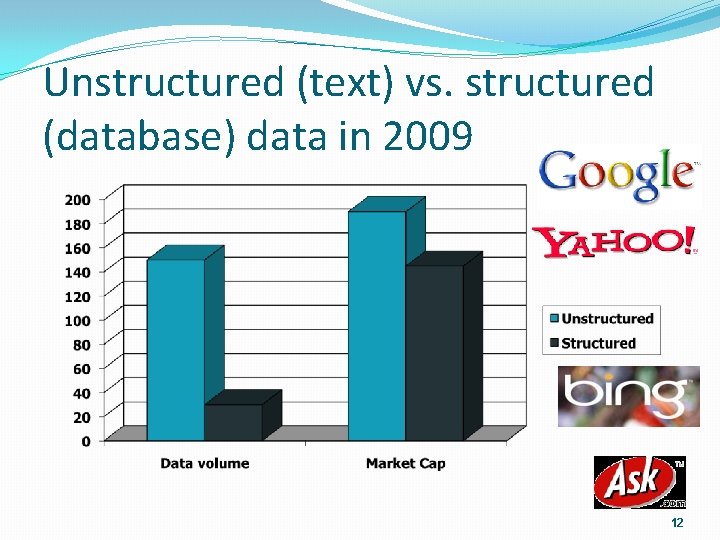

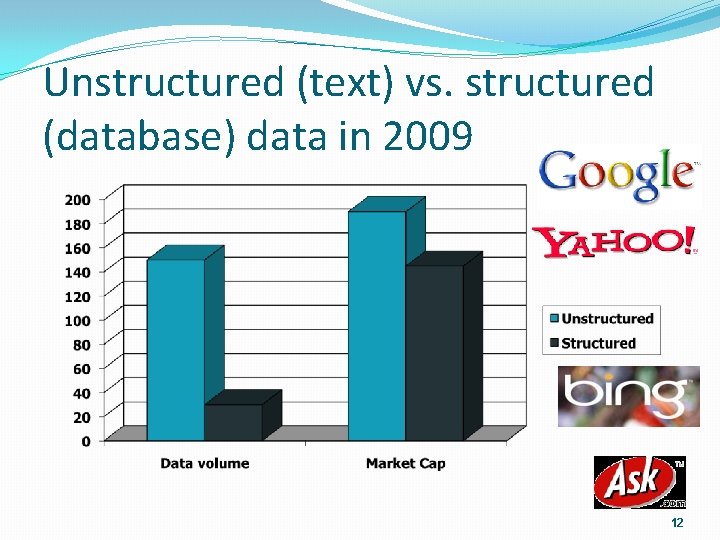

Unstructured (text) vs. structured (database) data in 2009

Document Statistics

How big will the dictionary be?

Vocabulary vs. collection size

- How big is the term vocabulary?
  - That is, how many distinct words are there?
- Can we assume an upper bound?
- In practice, the vocabulary will keep growing with the collection size.

Vocabulary vs. collection size

- Heaps’ law: $M = kT^b$
- $M$ is the size of the vocabulary, $T$ is the number of tokens in the collection
- Typical values: $30 \le k \le 100$ and $b \approx 0.5$
- In a log-log plot of vocabulary size $M$ vs. $T$, Heaps’ law predicts a line with slope about ½
- An empirical finding (“empirical law”)

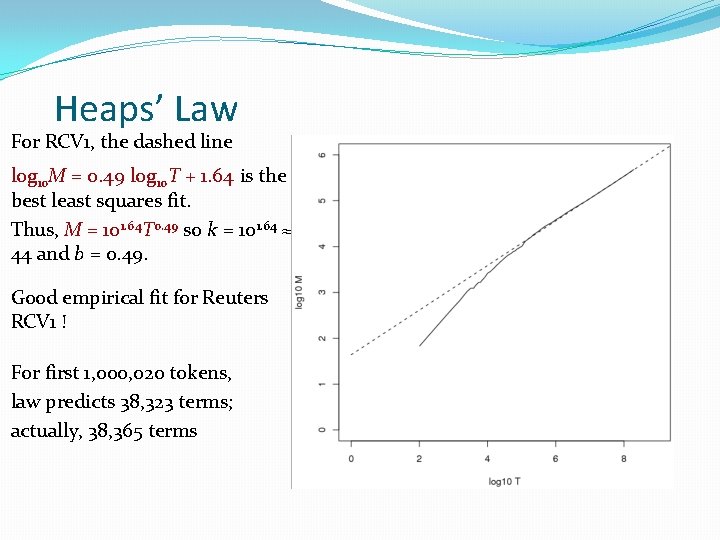

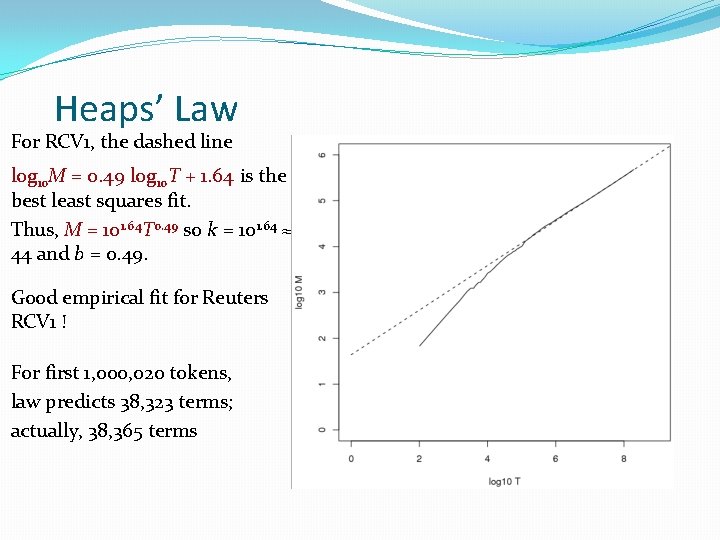

Heaps’ Law

For RCV1, the dashed line $\log_{10} M = 0.49 \log_{10} T + 1.64$ is the best least-squares fit. Thus $M = 10^{1.64} T^{0.49}$, so $k = 10^{1.64} \approx 44$ and $b = 0.49$. This is a good empirical fit for Reuters RCV1: for the first 1,000,020 tokens, the law predicts 38,323 terms; the actual number is 38,365 terms.
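The RCV1 prediction can be checked with a few lines of Python. This is only a sketch: the function name `heaps_vocabulary` is made up for illustration, and it uses the rounded parameters quoted on the slide (k = 44, b = 0.49).

```python
def heaps_vocabulary(tokens: int, k: float, b: float) -> float:
    """Predicted vocabulary size M = k * T**b (Heaps' law)."""
    return k * tokens ** b


# Rounded RCV1 fit from the slide: k = 44, b = 0.49.
predicted = heaps_vocabulary(1_000_020, k=44, b=0.49)
print(f"predicted vocabulary: {predicted:.0f} terms")
```

The result lands within a term or so of the 38,323 quoted above; the small difference from the observed 38,365 terms is the fit error.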

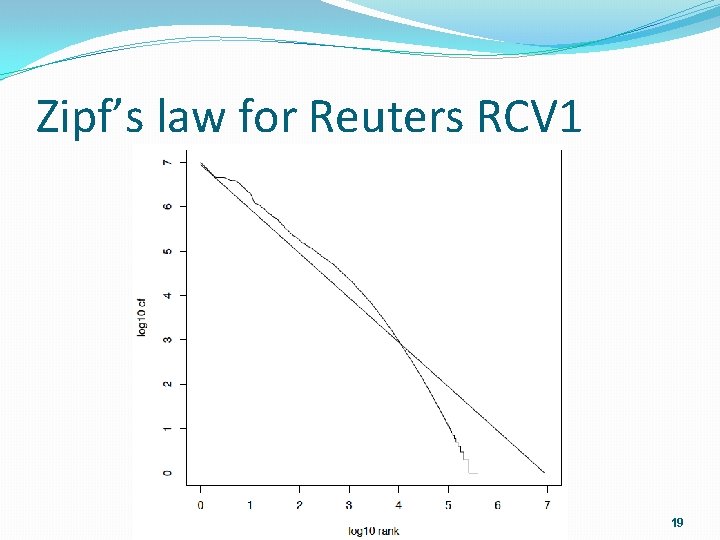

Zipf’s law

- Heaps’ law gives the vocabulary size in collections.
- We also study the relative frequencies of terms.
- In natural language, there are a few very frequent terms and very many very rare terms.
- Zipf’s law: the $i$th most frequent term has frequency proportional to $1/i$.
- $cf_i = K/i$, where $K$ is a normalizing constant
- $cf_i$ is the collection frequency: the number of occurrences of the term $t_i$ in the collection.

Zipf consequences

- If the most frequent term (the) occurs $cf_1$ times,
  - then the second most frequent term (of) occurs $cf_1/2$ times,
  - the third most frequent term (and) occurs $cf_1/3$ times, …
- Equivalent: $cf_i = K/i$, where $K$ is a normalizing factor, so
  - $\log cf_i = \log K - \log i$
  - Linear relationship between $\log cf_i$ and $\log i$
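A small numeric sketch of the log-log relationship: under an idealized Zipf distribution, $\log cf_i + \log i$ equals $\log K$ for every rank. The top frequency of 1,000,000 and the helper name `zipf_cf` are hypothetical values chosen only for illustration.

```python
import math


def zipf_cf(rank: int, cf_top: float) -> float:
    """Collection frequency of the term at the given rank, cf_i = K / i with K = cf_1."""
    return cf_top / rank


cf_top = 1_000_000  # hypothetical frequency of the most common term
for rank in (1, 2, 3, 10, 100):
    cf = zipf_cf(rank, cf_top)
    # log cf_i + log i stays constant at log K, i.e. a straight line in log-log space.
    print(rank, cf, round(math.log10(cf) + math.log10(rank), 6))
```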

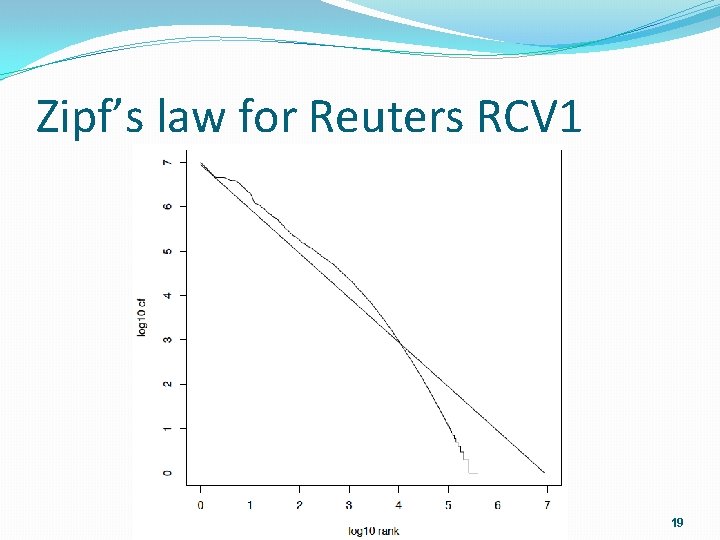

Zipf’s law for Reuters RCV1

Efficiently Storing Huge Amounts of Textual Data

Brainstorming: Index Repository

The Problem

- We want to store (information about) a lot of pages
- Given a list of words, we want to find documents containing all words
  - Note this simplification: assume that the user task is exactly reflected in the query!
  - Ignore ranking for now
- Dimension tradeoffs:
  - Speed
  - Memory size
  - Types of queries to be supported

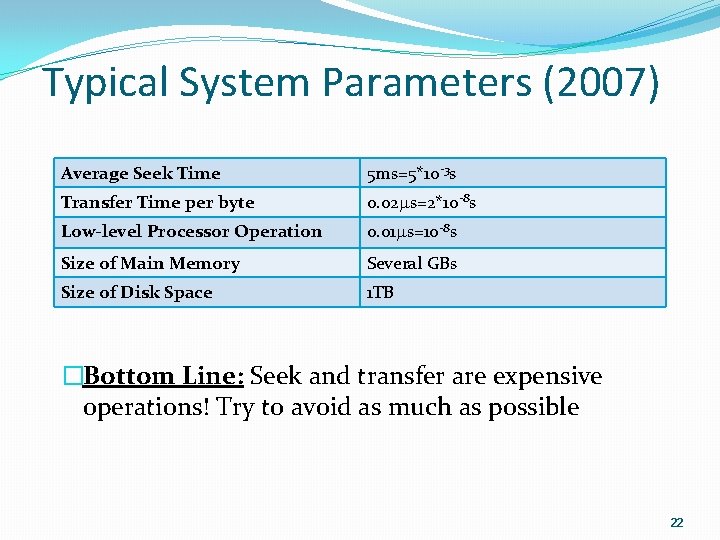

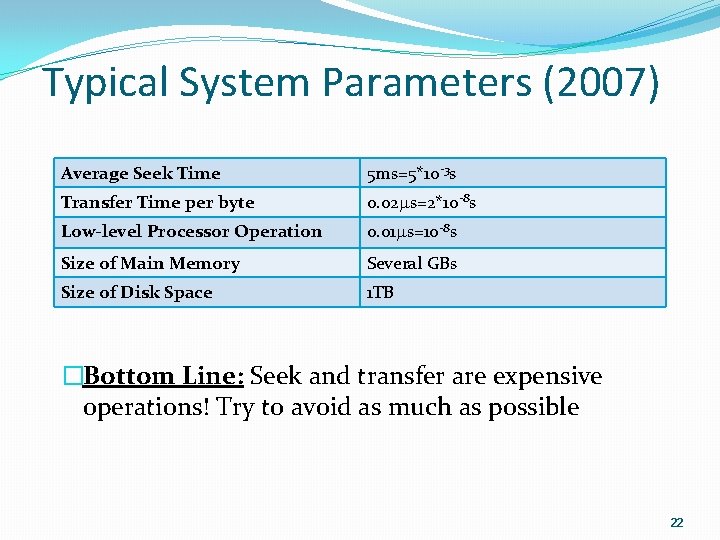

Typical System Parameters (2007)

| Parameter | Value |
| --- | --- |
| Average seek time | 5 ms = 5 × 10⁻³ s |
| Transfer time per byte | 0.02 μs = 2 × 10⁻⁸ s |
| Low-level processor operation | 0.01 μs = 10⁻⁸ s |
| Size of main memory | Several GBs |
| Size of disk space | 1 TB |

- Bottom line: seek and transfer are expensive operations! Try to avoid them as much as possible.

Ideas?

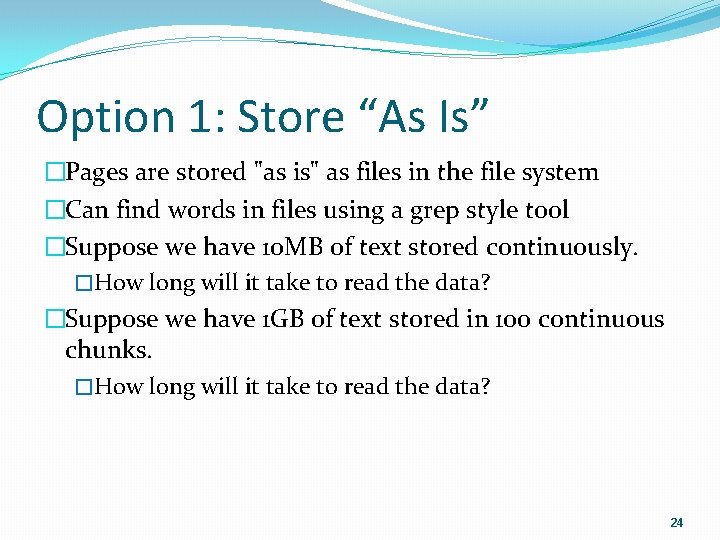

Option 1: Store “As Is”

- Pages are stored "as is" as files in the file system
- Can find words in files using a grep-style tool
- Suppose we have 10 MB of text stored contiguously.
  - How long will it take to read the data?
- Suppose we have 1 GB of text stored in 100 contiguous chunks.
  - How long will it take to read the data?
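A back-of-the-envelope sketch for the two questions, using the 2007 parameters given earlier (5 ms per seek, 2 × 10⁻⁸ s per byte transferred). It assumes one seek per contiguous chunk and decimal units (1 MB = 10⁶ bytes); the function name `read_time` is illustrative.

```python
SEEK_SECONDS = 5e-3               # average seek time
TRANSFER_SECONDS_PER_BYTE = 2e-8  # transfer time per byte


def read_time(total_bytes: int, chunks: int) -> float:
    """Seconds to read total_bytes split into `chunks` contiguous pieces (one seek each)."""
    return chunks * SEEK_SECONDS + total_bytes * TRANSFER_SECONDS_PER_BYTE


print(f"10 MB, 1 chunk:   {read_time(10 * 10**6, 1):.3f} s")
print(f"1 GB, 100 chunks: {read_time(10**9, 100):.3f} s")
```

Under these assumptions the first read takes about 0.2 s and the second about 20.5 s, so a grep-style scan over a web-scale collection is dominated by transfer time.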

What do you think?

- Are queries processed quickly?
- Is this space efficient?

Option 2: Relational Database

Model A:

| DocID | Doc |
| --- | --- |
| 1 | Rain, rain, go away... |
| 2 | The rain in Spain falls mainly in the plain |

- How would we find documents containing rain? Rain and Spain? Rain and not Spain?
- Is this better or worse than using the file system with grep?

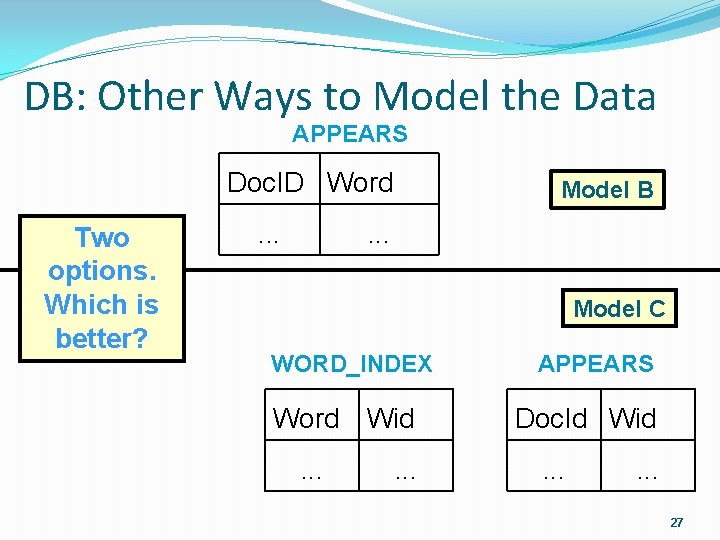

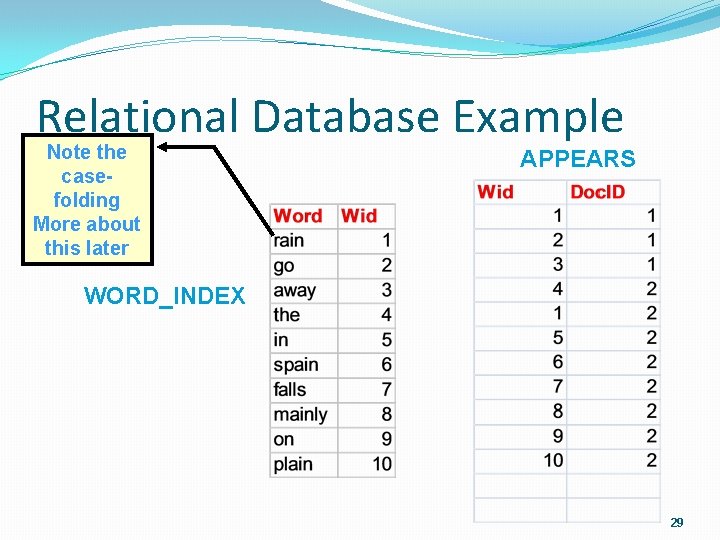

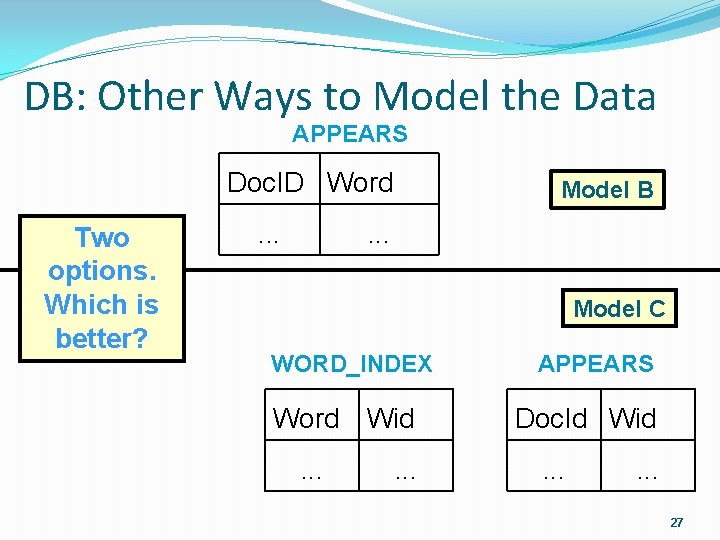

DB: Other Ways to Model the Data

Two options. Which is better?

- Model B: a single table APPEARS(DocID, Word)
- Model C: two tables, WORD_INDEX(Word, Wid) and APPEARS(DocId, Wid)

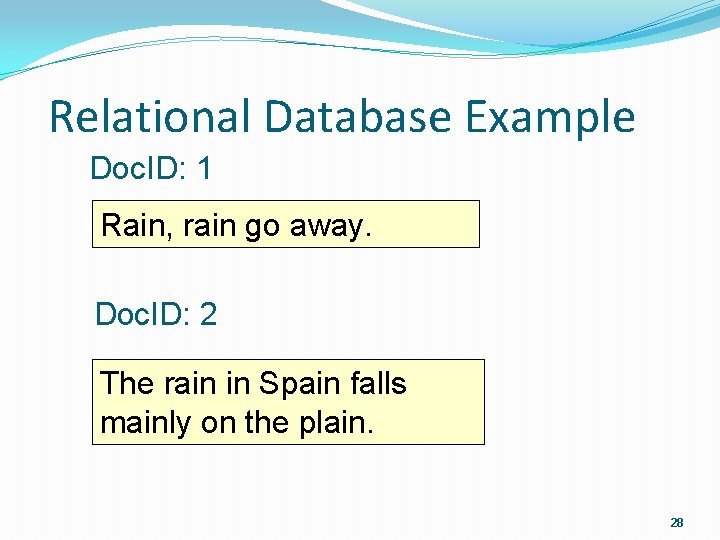

Relational Database Example

- DocID 1: Rain, rain go away.
- DocID 2: The rain in Spain falls mainly on the plain.

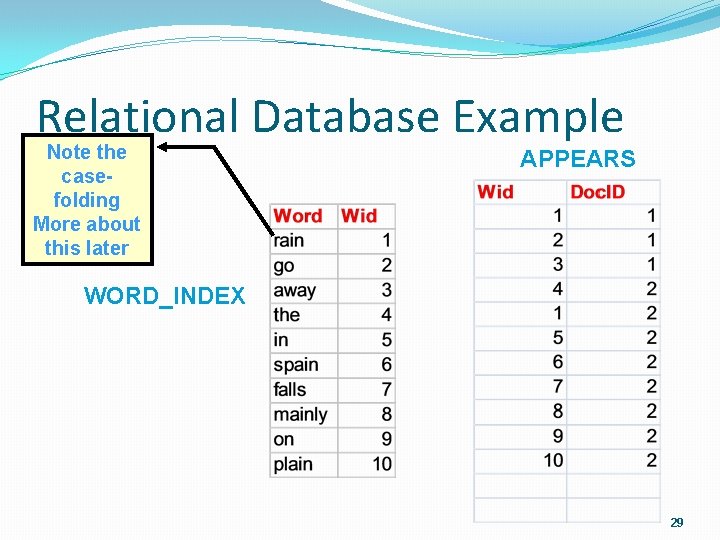

Relational Database Example

- Tables shown: APPEARS and WORD_INDEX
- Note the case folding (more about this later)

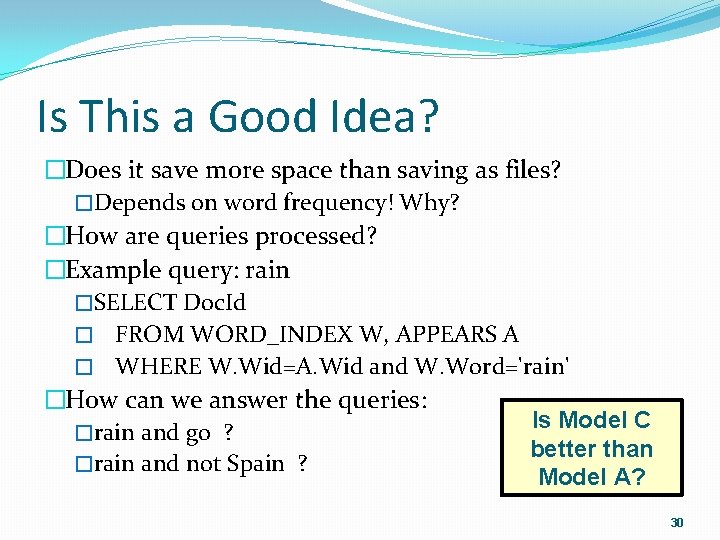

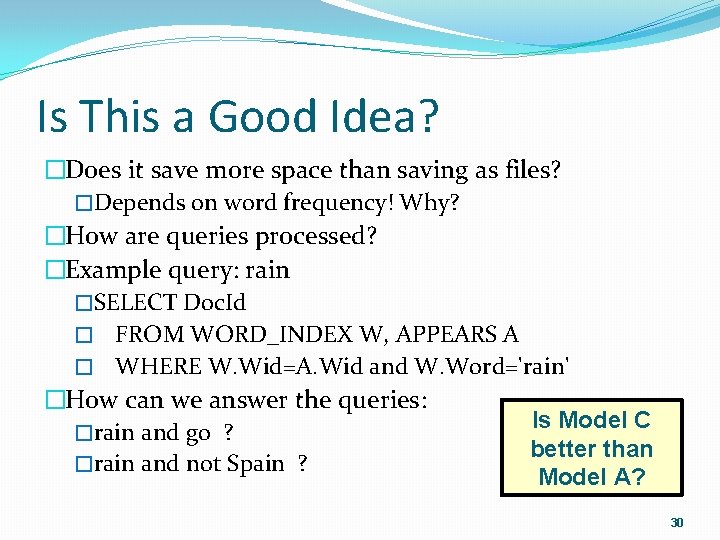

Is This a Good Idea?

- Does it save more space than saving as files?
  - Depends on word frequency! Why?
- How are queries processed?
  - Example query: rain
  - `SELECT DocId FROM WORD_INDEX W, APPEARS A WHERE W.Wid = A.Wid AND W.Word = 'rain'`
- How can we answer the queries:
  - rain and go?
  - rain and not Spain?
- Is Model C better than Model A?
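One possible way to try Model C is with Python's built-in `sqlite3` module. The sketch below loads the two example documents, case-folds the words, and answers the multi-word queries with `INTERSECT` and `EXCEPT`. The column types, the tokenizing regular expression, and the helper `query` are assumptions for illustration, not part of the slides.

```python
import re
import sqlite3

docs = {1: "Rain, rain go away.", 2: "The rain in Spain falls mainly on the plain."}

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE WORD_INDEX (Wid INTEGER PRIMARY KEY, Word TEXT UNIQUE);
    CREATE TABLE APPEARS (DocId INTEGER, Wid INTEGER, PRIMARY KEY (DocId, Wid));
""")
for doc_id, text in docs.items():
    # Case folding: every word is stored in lowercase.
    for word in set(re.findall(r"[a-z]+", text.lower())):
        con.execute("INSERT OR IGNORE INTO WORD_INDEX (Word) VALUES (?)", (word,))
        (wid,) = con.execute("SELECT Wid FROM WORD_INDEX WHERE Word = ?", (word,)).fetchone()
        con.execute("INSERT INTO APPEARS (DocId, Wid) VALUES (?, ?)", (doc_id, wid))

DOCS_WITH = "SELECT A.DocId FROM WORD_INDEX W JOIN APPEARS A ON W.Wid = A.Wid WHERE W.Word = ?"


def query(sql, *params):
    """Run a query and return the matching document ids in sorted order."""
    return sorted(row[0] for row in con.execute(sql, params))


rain = query(DOCS_WITH, "rain")
rain_and_go = query(DOCS_WITH + " INTERSECT " + DOCS_WITH, "rain", "go")
rain_not_spain = query(DOCS_WITH + " EXCEPT " + DOCS_WITH, "rain", "spain")
print(rain, rain_and_go, rain_not_spain)
```

Each extra query word adds another join and set operation, which hints at why plain relational tables become awkward for multi-word queries.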

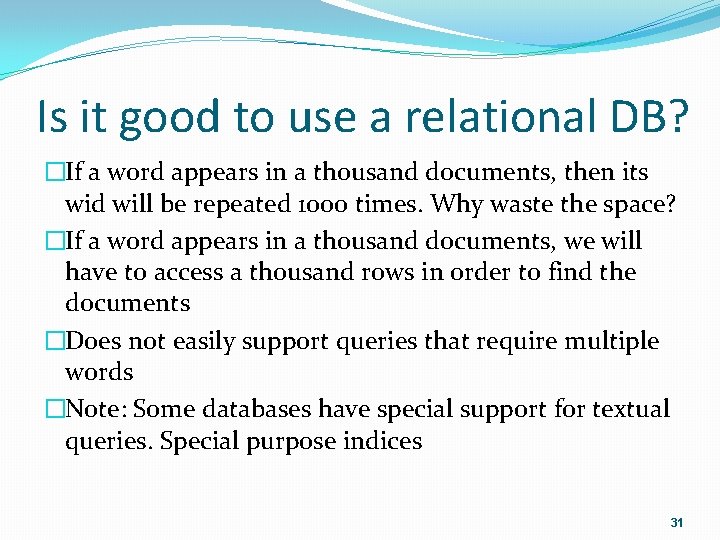

Is it good to use a relational DB?

- If a word appears in a thousand documents, then its wid will be repeated 1000 times. Why waste the space?
- If a word appears in a thousand documents, we will have to access a thousand rows in order to find the documents
- Does not easily support queries that require multiple words
- Note: some databases have special support for textual queries (special-purpose indices)

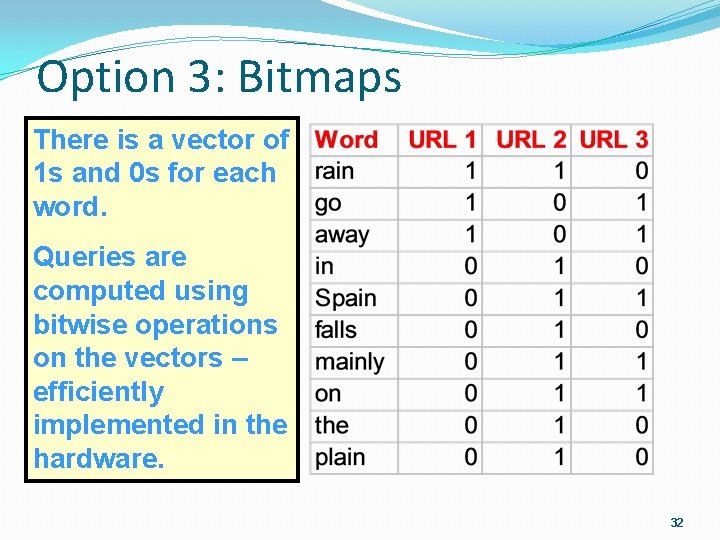

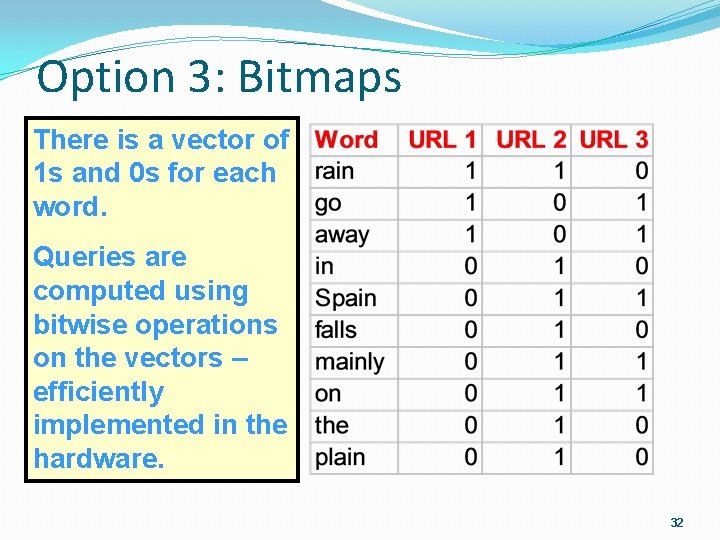

Option 3: Bitmaps

There is a vector of 1s and 0s for each word. Queries are computed using bitwise operations on the vectors, which are efficiently implemented in hardware.

Option 3: Bitmaps

How would you compute:

- Q1 = rain
- Q2 = rain and Spain
- Q3 = rain or not Spain
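A minimal sketch of the three queries using Python integers as bit vectors, where bit i stands for document i + 1. It assumes the two example documents from the relational example; `build_bitmaps` and `matching_docs` are illustrative helper names.

```python
import re

docs = ["Rain, rain go away.", "The rain in Spain falls mainly on the plain."]


def build_bitmaps(documents):
    """Map each (case-folded) word to an integer whose bit i is set if document i contains it."""
    bitmaps = {}
    for doc_number, text in enumerate(documents):
        for word in set(re.findall(r"[a-z]+", text.lower())):
            bitmaps[word] = bitmaps.get(word, 0) | (1 << doc_number)
    return bitmaps


def matching_docs(bits, num_docs):
    """Translate a bit vector back into 1-based document numbers."""
    return [i + 1 for i in range(num_docs) if (bits >> i) & 1]


bitmaps = build_bitmaps(docs)
all_docs = (1 << len(docs)) - 1  # mask so that NOT stays within the collection

q1 = bitmaps["rain"]
q2 = bitmaps["rain"] & bitmaps["spain"]
q3 = bitmaps["rain"] | (~bitmaps["spain"] & all_docs)

for name, bits in (("Q1", q1), ("Q2", q2), ("Q3", q3)):
    print(name, matching_docs(bits, len(docs)))
```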

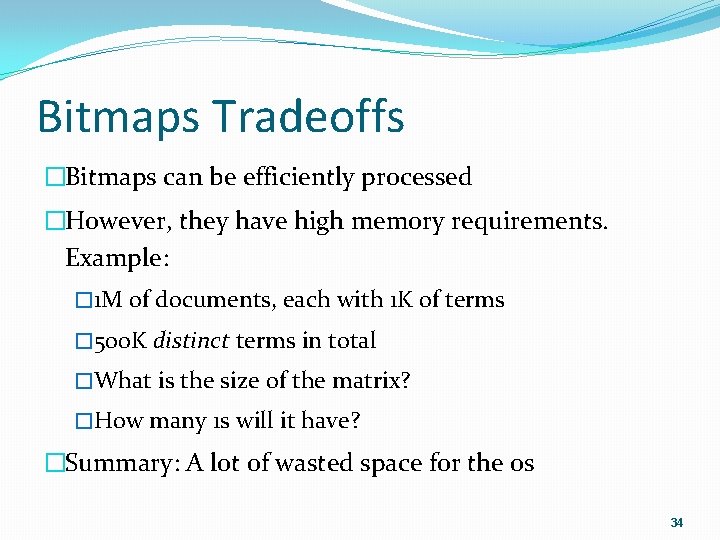

Bitmaps Tradeoffs

- Bitmaps can be efficiently processed
- However, they have high memory requirements. Example:
  - 1M documents, each with 1K terms
  - 500K distinct terms in total
  - What is the size of the matrix?
  - How many 1s will it have?
- Summary: a lot of wasted space for the 0s
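The arithmetic for this example can be sketched as follows. It assumes one bit per (term, document) cell and, as an upper bound, that all 1K terms in a document are distinct.

```python
num_docs = 1_000_000
terms_per_doc = 1_000
distinct_terms = 500_000

matrix_bits = num_docs * distinct_terms    # one bit per (term, document) cell
matrix_gigabytes = matrix_bits / 8 / 10**9
max_ones = num_docs * terms_per_doc        # upper bound: every term in a document is distinct
fraction_ones = max_ones / matrix_bits

print(f"matrix size: {matrix_bits:.1e} bits = {matrix_gigabytes} GB")
print(f"at most {max_ones:.1e} ones, i.e. {fraction_ones:.1%} of the cells")
```

So the matrix has 5 × 10¹¹ cells, of which at most 0.2% are 1s.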

The Index Repository

A Good Solution

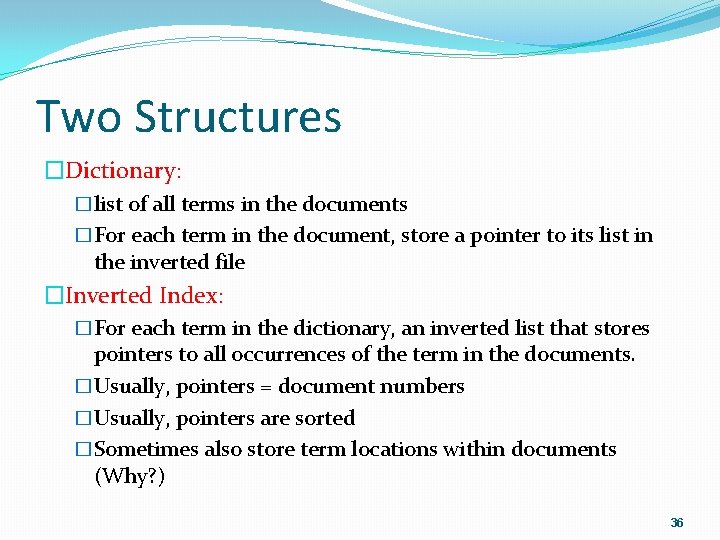

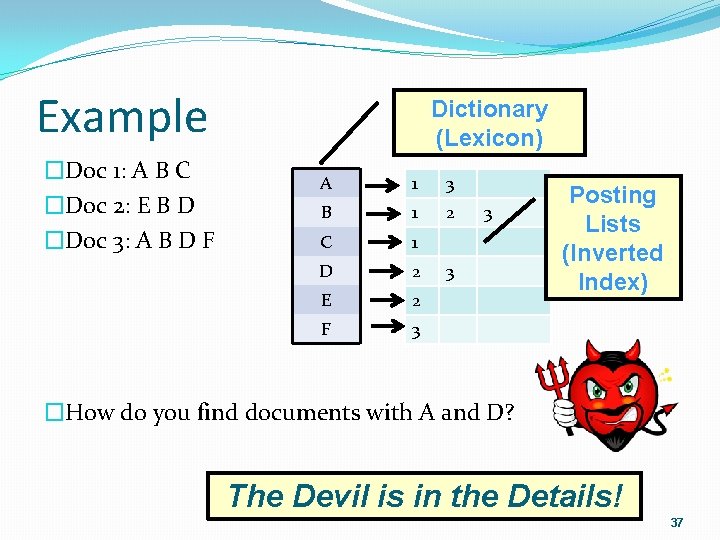

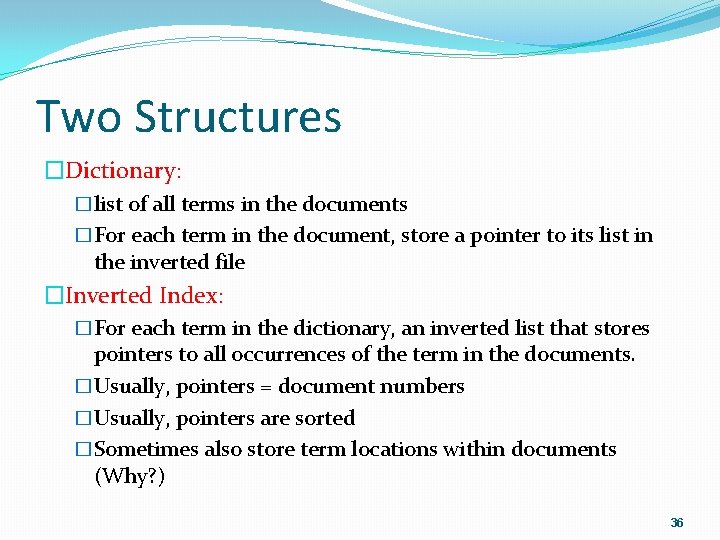

Two Structures

- Dictionary:
  - List of all terms in the documents
  - For each term, store a pointer to its list in the inverted file
- Inverted Index:
  - For each term in the dictionary, an inverted list that stores pointers to all occurrences of the term in the documents
  - Usually, pointers = document numbers
  - Usually, pointers are sorted
  - Sometimes also store term locations within documents (Why?)

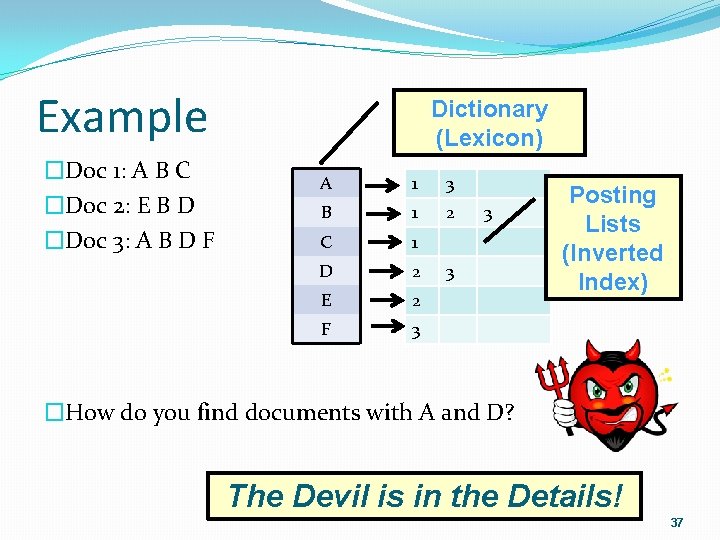

Example

- Doc 1: A B C
- Doc 2: E B D
- Doc 3: A B D F

| Dictionary (Lexicon) | Posting List (Inverted Index) |
| --- | --- |
| A | 1, 3 |
| B | 1, 2, 3 |
| C | 1 |
| D | 2, 3 |
| E | 2 |
| F | 3 |

- How do you find documents with A and D?

The Devil is in the Details!
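One common way to answer "A and D" is to merge the two sorted posting lists. The sketch below builds the index for the three example documents and intersects the lists with a linear merge; `build_index` and `intersect` are illustrative names, and a plain Python dict stands in for the dictionary structure.

```python
from collections import defaultdict

docs = {1: "A B C", 2: "E B D", 3: "A B D F"}


def build_index(documents):
    """Return {term: sorted list of document numbers containing the term}."""
    index = defaultdict(list)
    for doc_id in sorted(documents):
        for term in set(documents[doc_id].split()):
            index[term].append(doc_id)
    return dict(index)


def intersect(postings_a, postings_b):
    """Merge two sorted posting lists, keeping document numbers present in both."""
    i = j = 0
    result = []
    while i < len(postings_a) and j < len(postings_b):
        if postings_a[i] == postings_b[j]:
            result.append(postings_a[i])
            i += 1
            j += 1
        elif postings_a[i] < postings_b[j]:
            i += 1
        else:
            j += 1
    return result


index = build_index(docs)
print(intersect(index["A"], index["D"]))
```

Because both lists are sorted, the merge touches each posting at most once, which is one reason posting lists are usually kept in document-number order.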

Compression

- Use less disk space
  - Saves a little money
- Keep more stuff in memory
  - Increases speed
- Increase speed of data transfer from disk to memory
  - [read compressed data | decompress] is faster than [read uncompressed data]
  - Premise: decompression algorithms are fast

Why compression for Index Repository?

- Dictionary
  - Make it small enough to keep in main memory
  - Make it so small that you can keep some postings lists in main memory too
- Postings file(s)
  - Reduce disk space needed
  - Decrease time needed to read postings lists from disk
  - Large search engines keep a significant part of the postings in memory
    - Compression lets you keep more in memory

The Dictionary

Data Structures

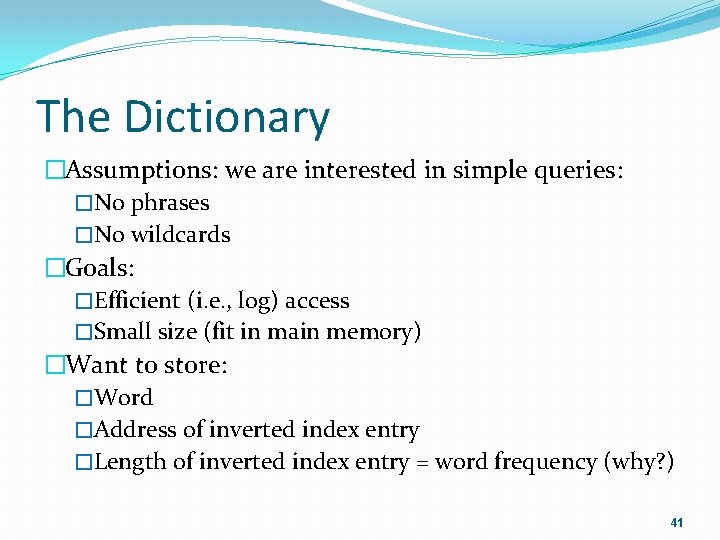

The Dictionary

- Assumptions: we are interested in simple queries:
  - No phrases
  - No wildcards
- Goals:
  - Efficient (i.e., logarithmic) access
  - Small size (fits in main memory)
- Want to store:
  - Word
  - Address of inverted index entry
  - Length of inverted index entry = word frequency (why?)

Why compress the dictionary?

- Search begins with the dictionary
- We want to keep it in memory
- Memory footprint competition with other applications
- Embedded/mobile devices may have very little memory
- Even if the dictionary isn’t in memory, we want it to be small for a fast search startup time
- So, compressing the dictionary is important

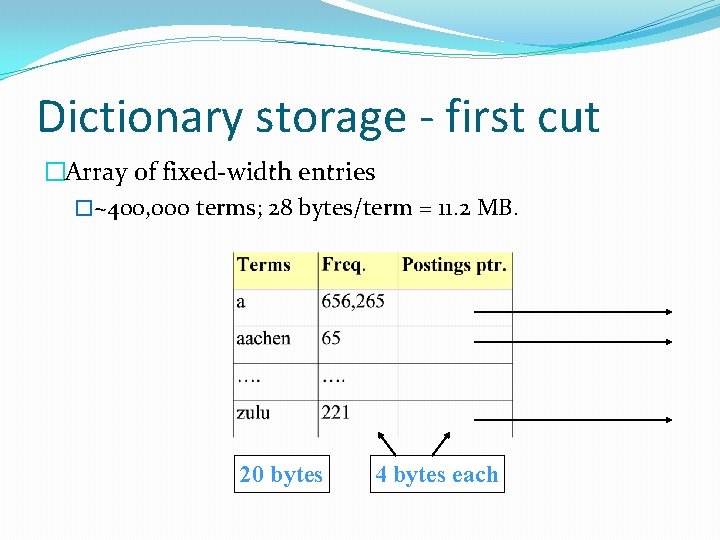

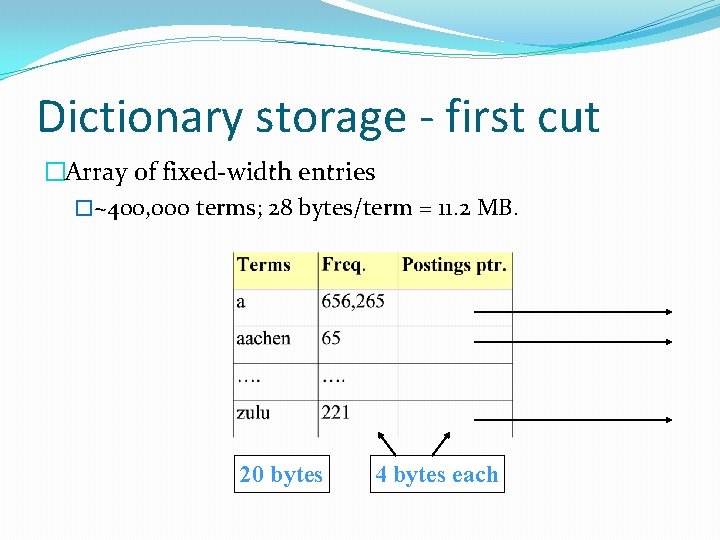

Dictionary storage - first cut

- Array of fixed-width entries
  - ~400,000 terms; 28 bytes/term = 11.2 MB
  - Term: 20 bytes; Freq. and Postings pointer: 4 bytes each

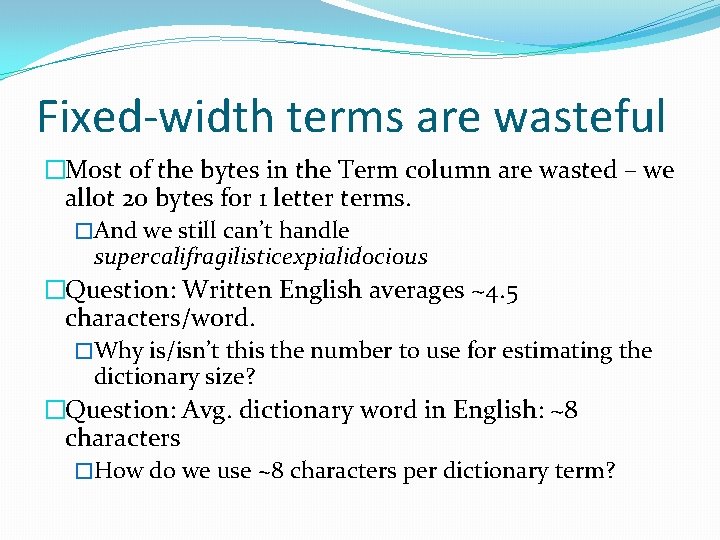

Fixed-width terms are wasteful

- Most of the bytes in the Term column are wasted: we allot 20 bytes for 1-letter terms.
  - And we still can’t handle supercalifragilisticexpialidocious.
- Question: Written English averages ~4.5 characters/word.
  - Why is/isn’t this the number to use for estimating the dictionary size?
- Question: The average dictionary word in English is ~8 characters.
  - How do we use ~8 characters per dictionary term?

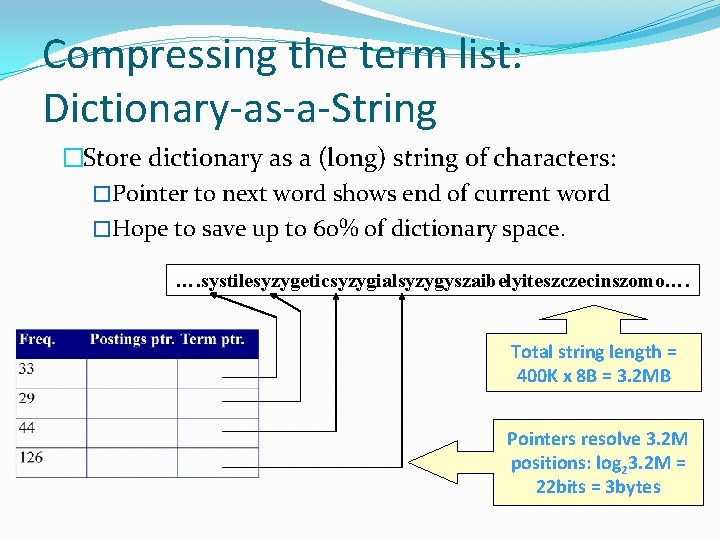

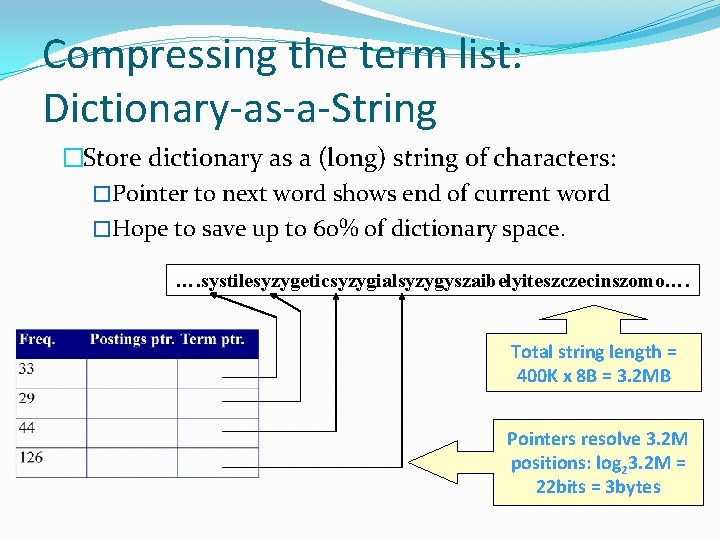

Compressing the term list: Dictionary-as-a-String

- Store the dictionary as a (long) string of characters:
  - The pointer to the next word shows the end of the current word
  - Hope to save up to 60% of dictionary space
- Example string: ….systilesyzygeticsyzygialsyzygyszaibelyiteszczecinszomo….
- Total string length = 400K × 8 B = 3.2 MB
- Pointers resolve 3.2M positions: $\log_2 3.2\text{M} \approx 22$ bits, i.e. 3 bytes

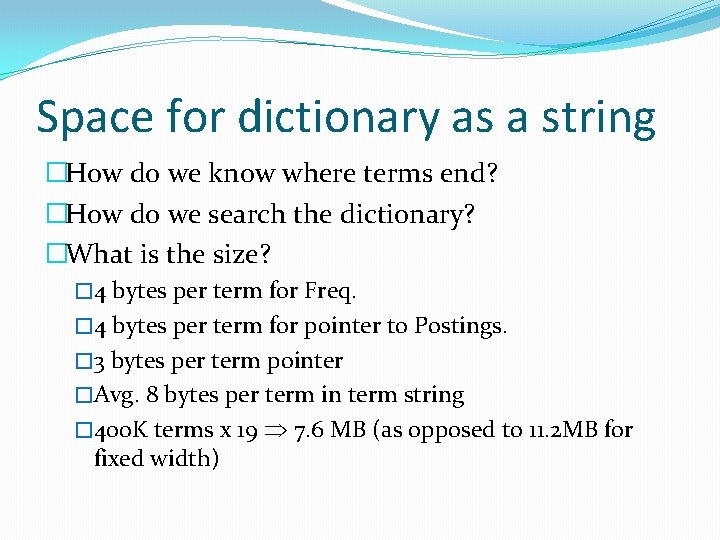

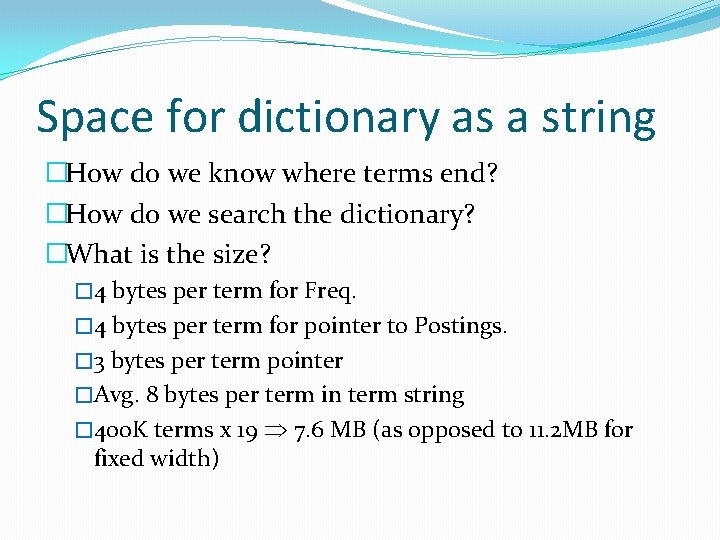

Space for dictionary as a string

- How do we know where terms end?
- How do we search the dictionary?
- What is the size?
  - 4 bytes per term for Freq.
  - 4 bytes per term for pointer to Postings
  - 3 bytes per term pointer
  - Avg. 8 bytes per term in term string
  - 400K terms × 19 bytes ⇒ 7.6 MB (as opposed to 11.2 MB for fixed width)
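The following sketch illustrates the idea under simplifying assumptions: terms are concatenated into one string, a list of start offsets plays the role of the term pointers (the next offset marks where the current term ends), and lookup is a binary search over those offsets. The class name `StringDictionary` is hypothetical, and Python lists are used instead of packed 3-byte pointers. The last lines redo the size arithmetic from the slides.

```python
import math


class StringDictionary:
    """Sorted terms stored as one long string plus one start offset per term."""

    def __init__(self, sorted_terms):
        self.text = "".join(sorted_terms)
        self.offsets = []
        position = 0
        for term in sorted_terms:
            self.offsets.append(position)
            position += len(term)

    def term_at(self, i):
        # The next term's offset marks the end of the current term.
        start = self.offsets[i]
        end = self.offsets[i + 1] if i + 1 < len(self.offsets) else len(self.text)
        return self.text[start:end]

    def find(self, term):
        """Binary search; returns the term's index or -1 if it is absent."""
        lo, hi = 0, len(self.offsets) - 1
        while lo <= hi:
            mid = (lo + hi) // 2
            candidate = self.term_at(mid)
            if candidate == term:
                return mid
            if candidate < term:
                lo = mid + 1
            else:
                hi = mid - 1
        return -1


terms = ["systile", "syzygetic", "syzygial", "syzygy", "szaibelyite", "szczecin"]
dictionary = StringDictionary(terms)
print(dictionary.text, dictionary.find("syzygy"), dictionary.find("syzygies"))

# Size estimates from the slides (400K terms, 8 characters per term on average).
pointer_bits = math.ceil(math.log2(400_000 * 8))  # bits needed to address the string
fixed_width_bytes = 400_000 * (20 + 4 + 4)
string_bytes = 400_000 * (4 + 4 + 3 + 8)
print(pointer_bits, fixed_width_bytes, string_bytes)
```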

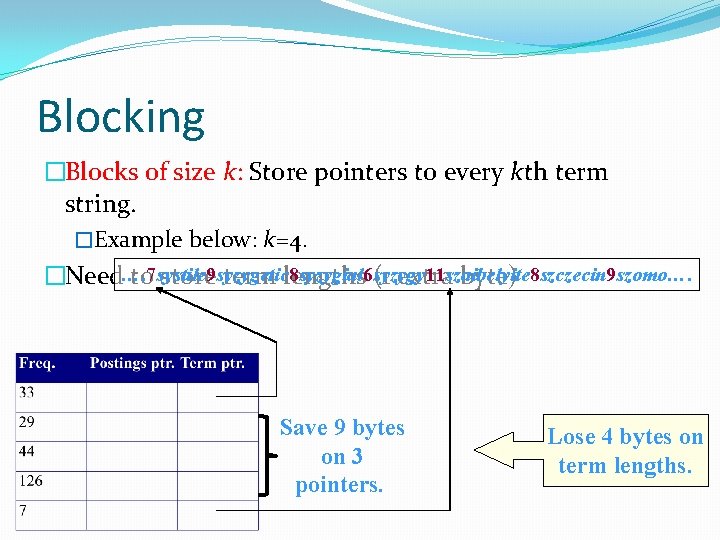

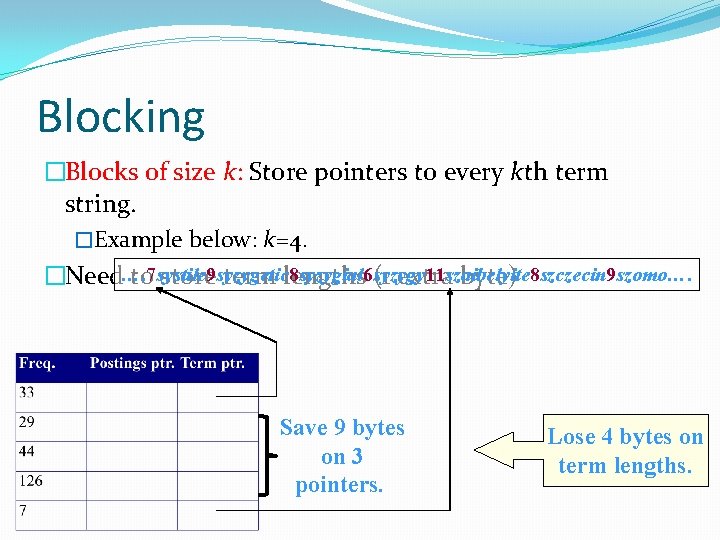

Blocking

- Blocks of size k: store a pointer to every kth term string.
  - Example below: k = 4.
- Need to store term lengths (1 extra byte per term).
- Example string: ….7systile9syzygetic8syzygial6syzygy11szaibelyite8szczecin9szomo….
- Save 9 bytes on 3 pointers. Lose 4 bytes on term lengths.

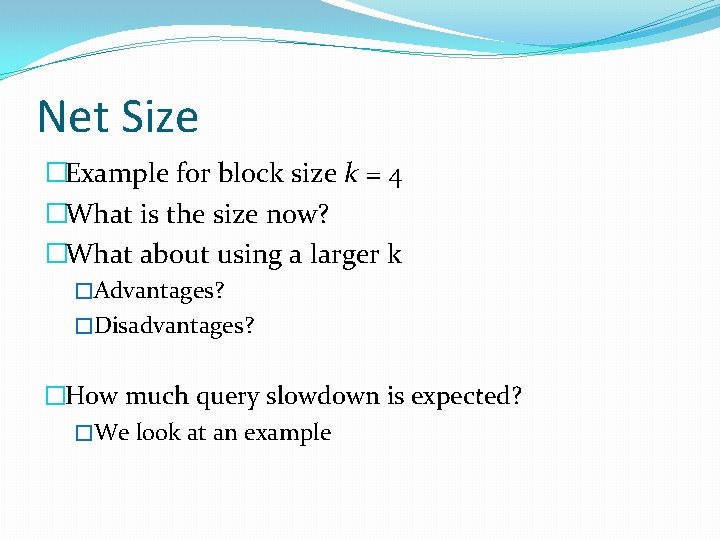

Net Size

- Example for block size k = 4
  - What is the size now?
- What about using a larger k?
  - Advantages?
  - Disadvantages?
- How much query slowdown is expected?
  - We look at an example
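A rough size model for blocked storage, assuming the per-term costs used so far (4 bytes Freq., 4 bytes postings pointer, 8 bytes of term text on average, 1 length byte) plus one 3-byte term pointer per block. The function name and default parameters are illustrative.

```python
def blocked_dictionary_bytes(num_terms, k, avg_term_len=8, freq=4,
                             postings_ptr=4, term_ptr=3, length_byte=1):
    """Estimated dictionary size when only every k-th term keeps a term pointer."""
    per_term = freq + postings_ptr + avg_term_len + length_byte
    blocks = -(-num_terms // k)  # ceiling division
    return num_terms * per_term + blocks * term_ptr


for k in (4, 8, 16):
    print(k, blocked_dictionary_bytes(400_000, k) / 10**6, "MB")
```

For k = 4 this gives 7.1 MB, i.e. 0.5 MB less than the 7.6 MB of the unblocked string. Larger k saves a little more space, at the cost of a longer linear scan inside each block.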

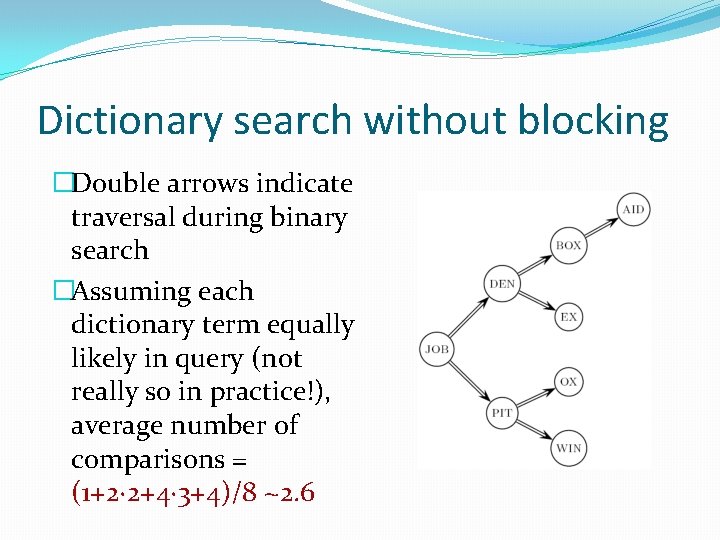

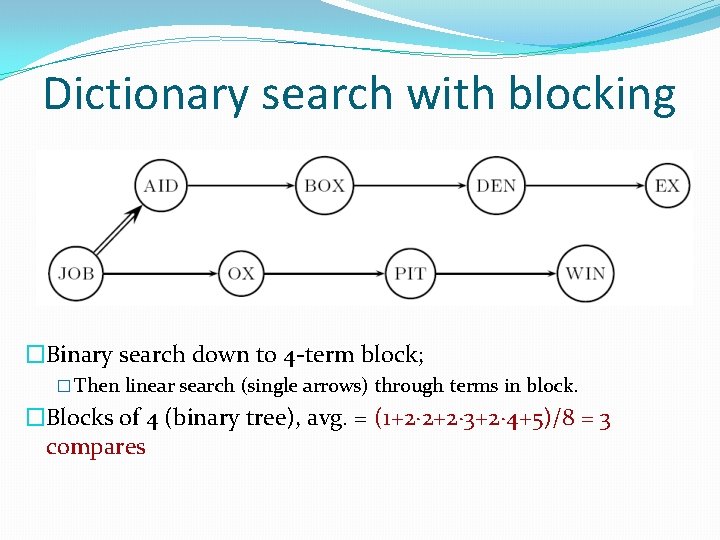

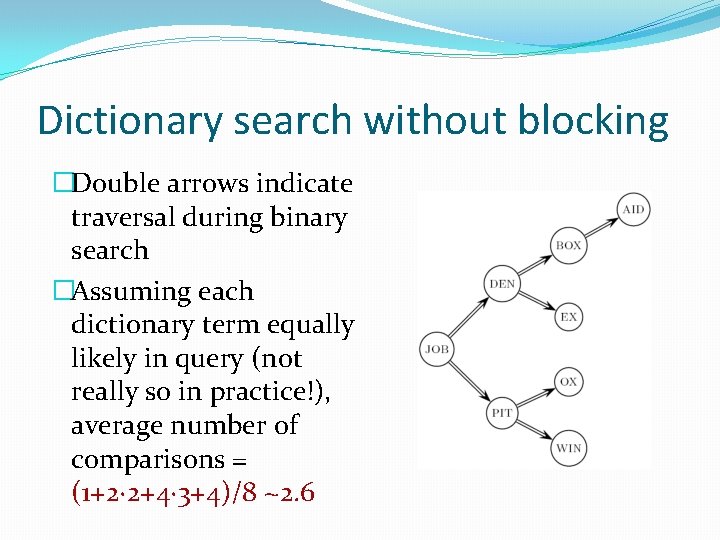

Dictionary search without blocking

- Double arrows indicate traversal during binary search
- Assuming each dictionary term is equally likely in a query (not really so in practice!), the average number of comparisons = (1 + 2∙2 + 4∙3 + 4)/8 ≈ 2.6

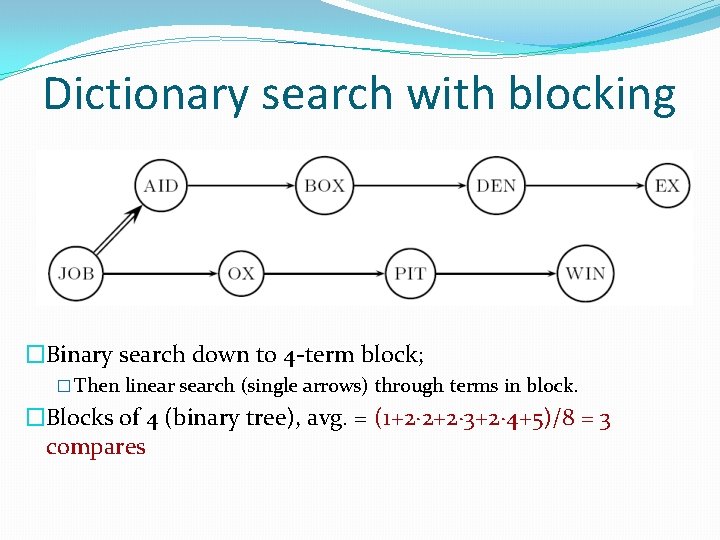

Dictionary search with blocking

- Binary search down to the 4-term block;
  - then linear search (single arrows) through the terms in the block.
- Blocks of 4 (binary tree), avg. = (1 + 2∙2 + 2∙3 + 2∙4 + 5)/8 = 3 compares
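The lookup described above can be sketched like this: terms are stored length-prefixed in one byte string, only the first term of each block has an offset, and a search does a binary search over block heads followed by a linear scan inside the block. The names `build_blocked`, `read_block`, and `lookup` are hypothetical, and the sketch assumes ASCII terms shorter than 256 bytes so that one length byte suffices.

```python
def build_blocked(sorted_terms, k=4):
    """Return (data, block_offsets): length-prefixed terms and one offset per block."""
    data = bytearray()
    block_offsets = []
    for i, term in enumerate(sorted_terms):
        if i % k == 0:
            block_offsets.append(len(data))
        encoded = term.encode("ascii")
        data.append(len(encoded))  # the 1 extra byte storing the term length
        data += encoded
    return bytes(data), block_offsets


def read_block(data, start, end):
    """Decode the length-prefixed terms stored in data[start:end]."""
    terms, pos = [], start
    while pos < end:
        length = data[pos]
        terms.append(data[pos + 1:pos + 1 + length].decode("ascii"))
        pos += 1 + length
    return terms


def lookup(data, block_offsets, term, k=4):
    """Binary search over block heads, then linear scan; returns term index or -1."""
    lo, hi, block = 0, len(block_offsets) - 1, -1
    while lo <= hi:
        mid = (lo + hi) // 2
        head_len = data[block_offsets[mid]]
        head = data[block_offsets[mid] + 1:block_offsets[mid] + 1 + head_len].decode("ascii")
        if head <= term:
            block, lo = mid, mid + 1
        else:
            hi = mid - 1
    if block < 0:
        return -1
    end = block_offsets[block + 1] if block + 1 < len(block_offsets) else len(data)
    for j, candidate in enumerate(read_block(data, block_offsets[block], end)):
        if candidate == term:
            return block * k + j
    return -1


terms = ["systile", "syzygetic", "syzygial", "syzygy", "szaibelyite", "szczecin"]
data, block_offsets = build_blocked(terms, k=4)
print(block_offsets, lookup(data, block_offsets, "szczecin"))
```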

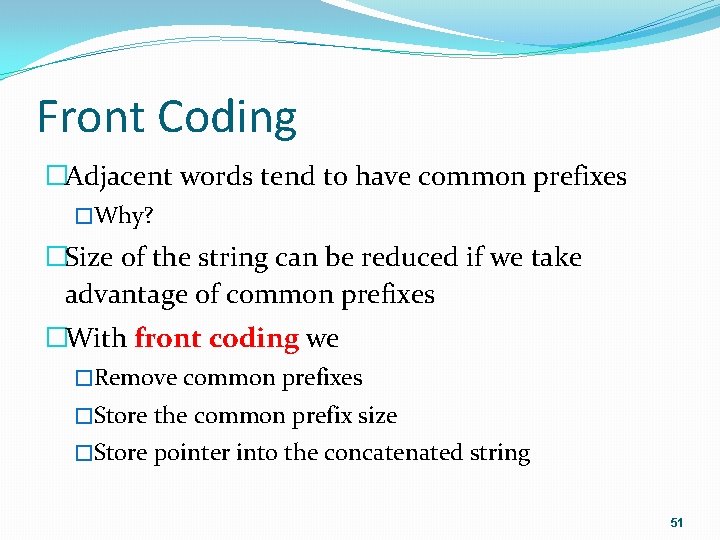

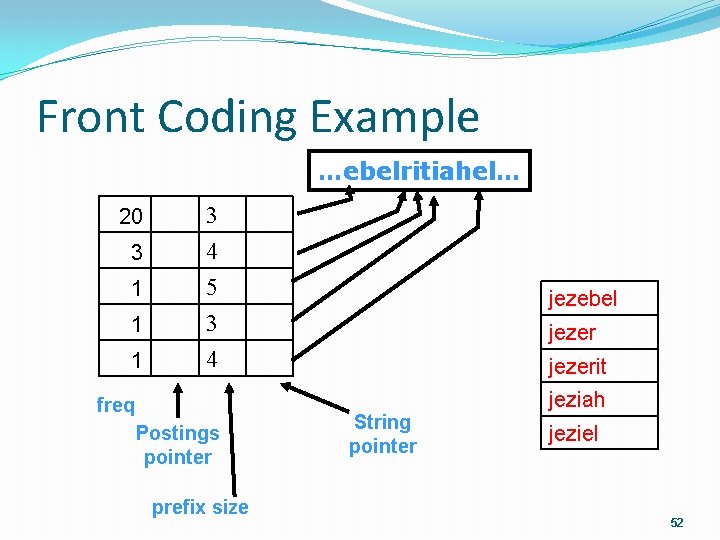

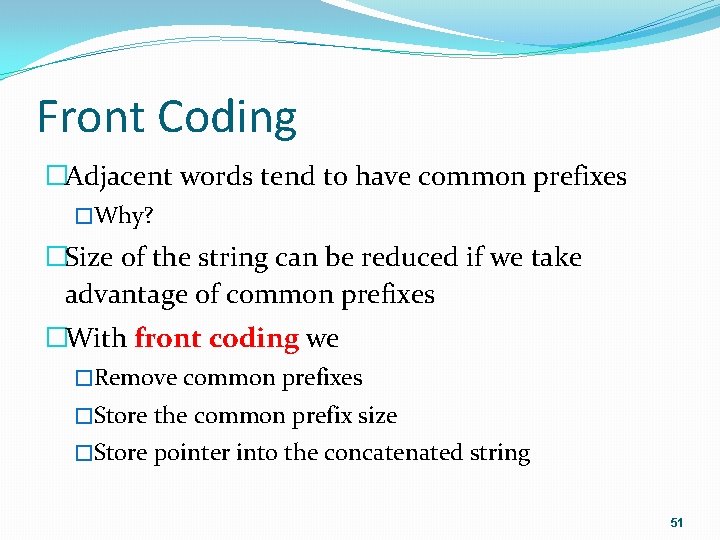

Front Coding

- Adjacent words tend to have common prefixes
  - Why?
- The size of the string can be reduced if we take advantage of common prefixes
- With front coding we
  - Remove common prefixes
  - Store the common prefix size
  - Store a pointer into the concatenated string

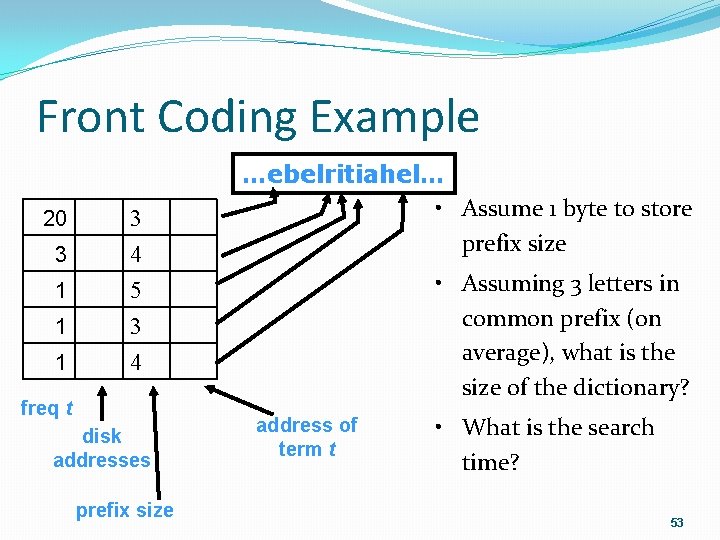

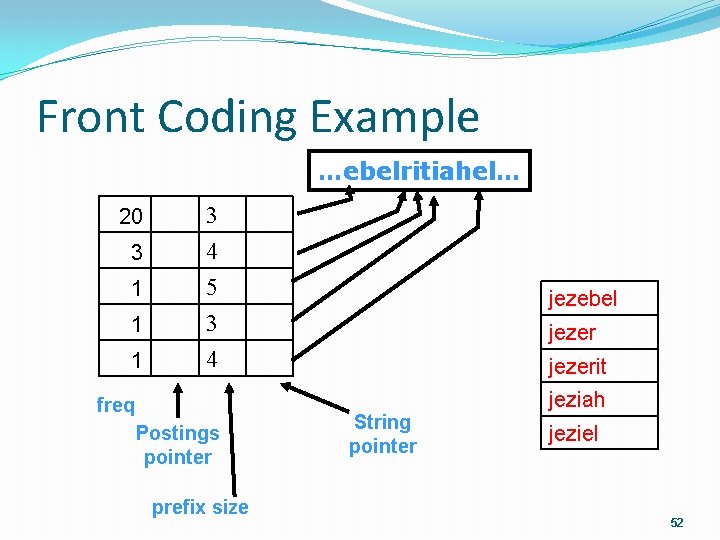

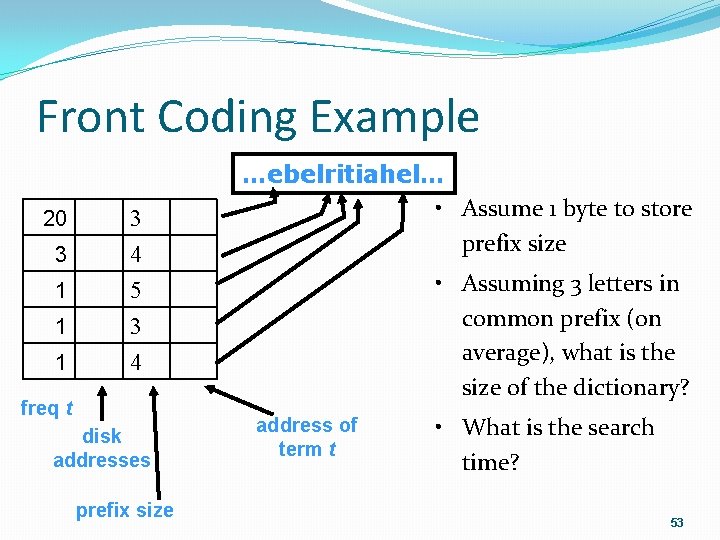

Front Coding Example

Figure: front-coded dictionary entries for the terms jezebel, jezerit, jeziah, jeziel. Each entry stores freq, a postings pointer, a prefix size, and a string pointer into the concatenated string …ebelritiahel…

Front Coding Example

Figure: the same entries (freq, disk address of term t, prefix size) over the concatenated string …ebelritiahel…

- Assume 1 byte to store the prefix size
- Assuming 3 letters in the common prefix (on average), what is the size of the dictionary?
- What is the search time?
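A minimal sketch of front coding on the four example terms. Each term is stored as (prefix size shared with the previous term, remaining suffix). The function names are illustrative, and the first term is encoded with prefix size 0 here because its predecessor is not shown on the slide, where jezebel appears to share "jez" with an earlier term.

```python
def common_prefix_len(a, b):
    """Number of leading characters shared by a and b."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def front_encode(sorted_terms):
    """Encode each term as (prefix size shared with the previous term, suffix)."""
    encoded, previous = [], ""
    for term in sorted_terms:
        p = common_prefix_len(previous, term)
        encoded.append((p, term[p:]))
        previous = term
    return encoded


def front_decode(encoded):
    """Rebuild the terms; each one needs the previously decoded term."""
    terms, previous = [], ""
    for p, suffix in encoded:
        previous = previous[:p] + suffix
        terms.append(previous)
    return terms


terms = ["jezebel", "jezerit", "jeziah", "jeziel"]
encoded = front_encode(terms)
print(encoded)
print(front_decode(encoded))
```

Decoding a term requires the term before it, which is why a plain binary search over a fully front-coded string does not work.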

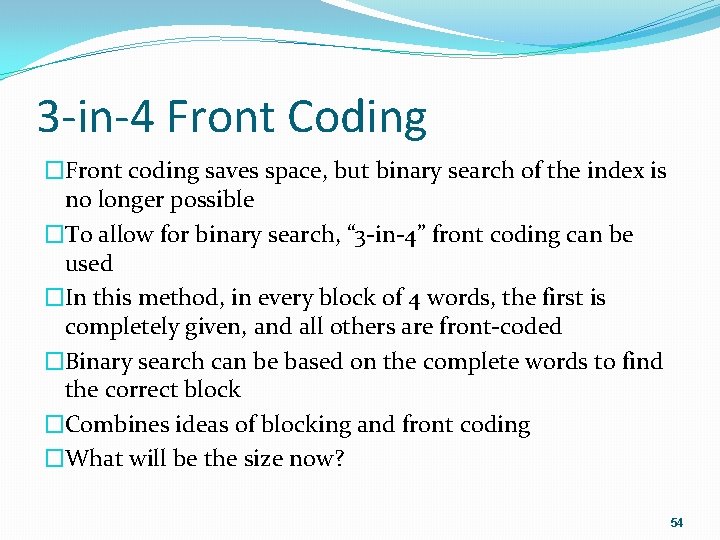

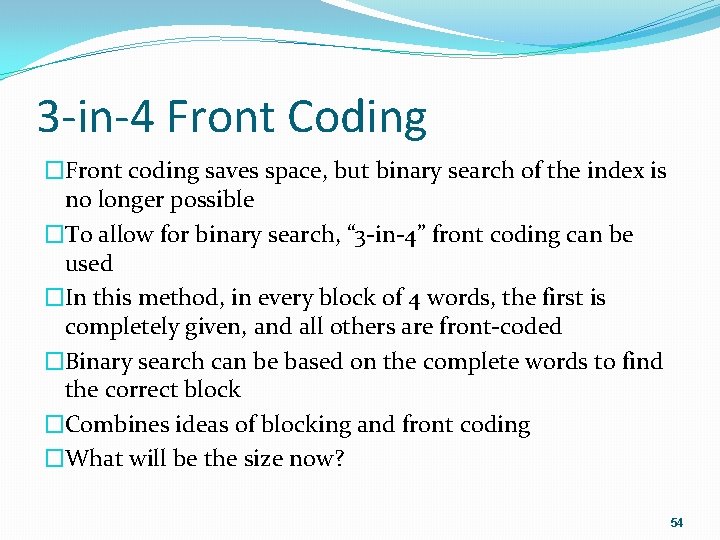

3-in-4 Front Coding

- Front coding saves space, but binary search of the index is no longer possible
- To allow for binary search, “3-in-4” front coding can be used
- In this method, in every block of 4 words, the first is given completely, and all others are front-coded
- Binary search can be based on the complete words to find the correct block
- Combines the ideas of blocking and front coding
- What will be the size now?
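The scheme can be sketched as follows, assuming blocks of 4 in which the first term is stored in full and the other three are front-coded against their predecessor. The lookup binary-searches the full head terms with `bisect_right` and then decodes within one block. The function names are illustrative, and the term list simply combines the examples from earlier slides.

```python
from bisect import bisect_right


def common_prefix_len(a, b):
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def build_3_in_4(sorted_terms, block_size=4):
    """Return (heads, blocks): full first terms and front-coded remainders per block."""
    heads, blocks = [], []
    for start in range(0, len(sorted_terms), block_size):
        block_terms = sorted_terms[start:start + block_size]
        heads.append(block_terms[0])
        coded, previous = [], block_terms[0]
        for term in block_terms[1:]:
            p = common_prefix_len(previous, term)
            coded.append((p, term[p:]))
            previous = term
        blocks.append(coded)
    return heads, blocks


def lookup(heads, blocks, term, block_size=4):
    """Binary search on the full head terms, then decode linearly inside the block."""
    b = bisect_right(heads, term) - 1
    if b < 0:
        return -1
    current = heads[b]
    if current == term:
        return b * block_size
    for j, (p, suffix) in enumerate(blocks[b], start=1):
        current = current[:p] + suffix
        if current == term:
            return b * block_size + j
    return -1


terms = ["jezebel", "jezerit", "jeziah", "jeziel",
         "systile", "syzygetic", "syzygial", "syzygy"]
heads, blocks = build_3_in_4(terms)
print(heads, blocks)
print(lookup(heads, blocks, "jeziel"), lookup(heads, blocks, "syzygy"))
```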

Tirgul (Recitation) Questions

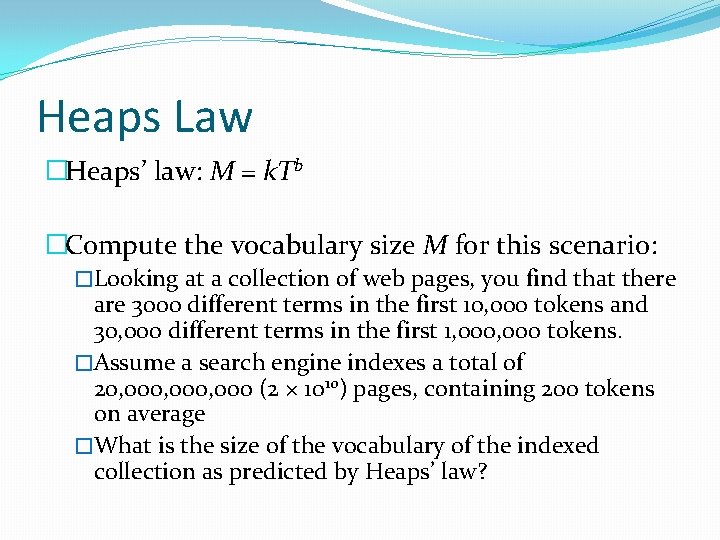

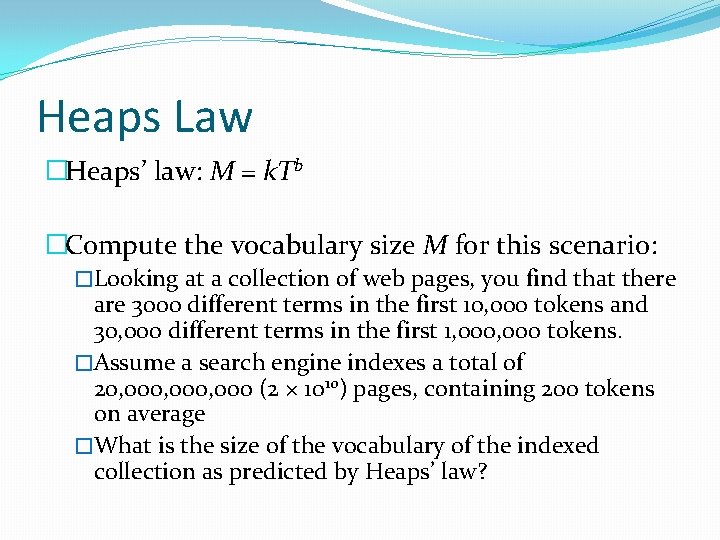

Heaps’ Law

- Heaps’ law: $M = kT^b$
- Compute the vocabulary size $M$ for this scenario:
  - Looking at a collection of web pages, you find that there are 3,000 different terms in the first 10,000 tokens and 30,000 different terms in the first 1,000,000 tokens.
  - Assume a search engine indexes a total of 20,000,000,000 ($2 \times 10^{10}$) pages, containing 200 tokens on average.
  - What is the size of the vocabulary of the indexed collection as predicted by Heaps’ law?
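One way to work this exercise is to fit k and b from the two samples and then extrapolate. The sketch reads the second sample as 30,000 terms in the first 1,000,000 tokens and the collection as 2 × 10¹⁰ pages (the figures that are consistent with Heaps’ law and with the "2 × 10¹⁰" in the exercise); `fit_heaps` is an illustrative helper name.

```python
import math


def fit_heaps(t1, m1, t2, m2):
    """Solve M = k * T**b for (k, b) from two (tokens, vocabulary) samples."""
    b = math.log(m2 / m1) / math.log(t2 / t1)
    k = m1 / t1 ** b
    return k, b


k, b = fit_heaps(10_000, 3_000, 1_000_000, 30_000)
total_tokens = 20_000_000_000 * 200  # pages times average tokens per page
vocabulary = k * total_tokens ** b
print(f"k = {k:.1f}, b = {b:.2f}, predicted vocabulary = {vocabulary:.2e}")
```

Under that reading, b = 0.5 and k = 30, so the predicted vocabulary is about 6 × 10⁷ terms.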

Zipf’s Law

- Remember: if the most frequent term (the) occurs $cf_1$ times,
  - then the second most frequent term (of) occurs $cf_1/2$ times,
  - the third most frequent term (and) occurs $cf_1/3$ times, …
- Suppose that $t_2$, the second most common word in the text, appears 10,000 times
  - How many times will $t_{10}$ appear?
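A short worked solution, assuming the idealized form $cf_i = cf_1/i$ holds exactly:

$$cf_1 = 2 \cdot cf_2 = 2 \cdot 10{,}000 = 20{,}000, \qquad cf_{10} = \frac{cf_1}{10} = \frac{20{,}000}{10} = 2{,}000.$$

So $t_{10}$ would be expected to appear about 2,000 times.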

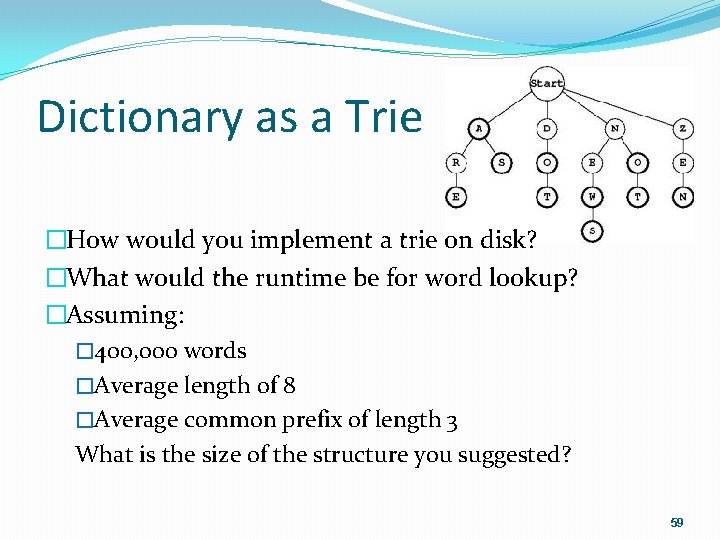

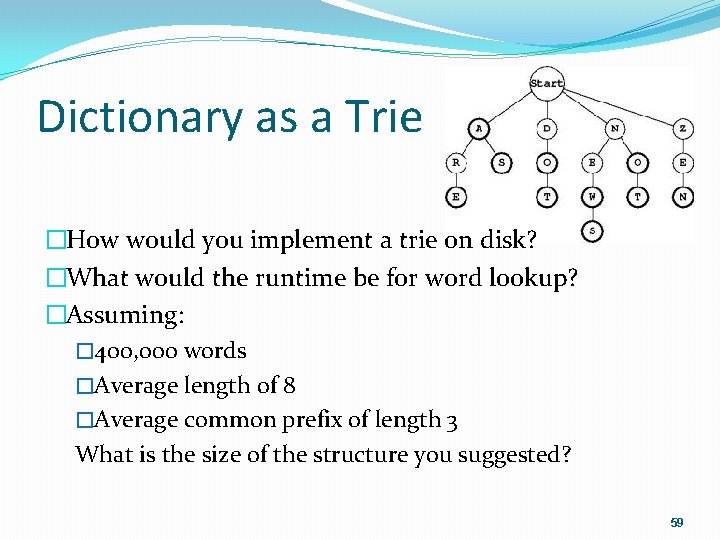

Dictionary as a Trie

- This tree is called a trie
- Each node can have a child for each letter of the alphabet
- Circled nodes indicate the end of words
- The following words are stored in this trie:
  - a, as, are, dot, news, not, zen
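A minimal in-memory sketch of such a trie, using a Python dict for each node's children and an `is_word` flag in place of the circled nodes. The class and method names are illustrative, and this says nothing about how the structure would be laid out on disk.

```python
class TrieNode:
    def __init__(self):
        self.children = {}    # letter -> child node
        self.is_word = False  # corresponds to a circled node in the figure


class Trie:
    def __init__(self, words=()):
        self.root = TrieNode()
        for word in words:
            self.insert(word)

    def insert(self, word):
        node = self.root
        for letter in word:
            node = node.children.setdefault(letter, TrieNode())
        node.is_word = True

    def contains(self, word):
        """Follow one child per letter; lookup cost is proportional to the word length."""
        node = self.root
        for letter in word:
            node = node.children.get(letter)
            if node is None:
                return False
        return node.is_word


trie = Trie(["a", "as", "are", "dot", "news", "not", "zen"])
print(trie.contains("as"), trie.contains("ar"), trie.contains("news"))
```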

Dictionary as a Trie

- How would you implement a trie on disk?
- What would the runtime be for word lookup?
- Assuming:
  - 400,000 words
  - Average length of 8
  - Average common prefix of length 3
- What is the size of the structure you suggested?

Todays agenda

Todays agenda Search engines information retrieval in practice

Search engines information retrieval in practice Search engines

Search engines Other search engines

Other search engines Www.sbu

Www.sbu Meta search engines compared

Meta search engines compared Information retrieval slides

Information retrieval slides Search engines information retrieval in practice

Search engines information retrieval in practice Open source search engines

Open source search engines Search engine architecture

Search engine architecture Meta search engines

Meta search engines Search engines information retrieval in practice

Search engines information retrieval in practice Explain search engine architecture

Explain search engine architecture Agenda sistemica y agenda institucional

Agenda sistemica y agenda institucional Todays worldld

Todays worldld Todays price of asda shares

Todays price of asda shares Todays software

Todays software Todays objective

Todays objective Todays vision

Todays vision No thats not it

No thats not it Todays concept

Todays concept Todays lab

Todays lab Chapter 13 marketing in todays world

Chapter 13 marketing in todays world Todays goal

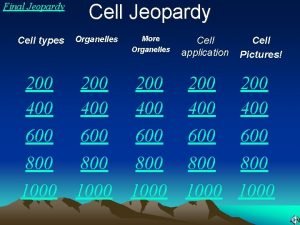

Todays goal Todays jeopardy

Todays jeopardy Todays objective

Todays objective Todays final jeopardy

Todays final jeopardy Midlands 2 west (north)

Midlands 2 west (north) Whats thermal energy

Whats thermal energy Todays class com

Todays class com Todays science

Todays science Wat is todays date

Wat is todays date Todays objective

Todays objective Todays objective

Todays objective Cell organelle jeopardy

Cell organelle jeopardy Todays plan

Todays plan Todays class

Todays class Todaysclass

Todaysclass Teacher:good morning class

Teacher:good morning class Todays objective

Todays objective Todays generations

Todays generations Handcuff nomenclature quiz

Handcuff nomenclature quiz Todays health

Todays health Whats todays temperature

Whats todays temperature Todays globl

Todays globl Judith kuster

Judith kuster Todays objective

Todays objective Todays objective

Todays objective Today planets position

Today planets position Todays sabbath lesson

Todays sabbath lesson Objective of cyberbullying

Objective of cyberbullying Multiple choice comma quiz

Multiple choice comma quiz Todays public relations departments

Todays public relations departments Adam smith jeopardy

Adam smith jeopardy Resume objectives examples

Resume objectives examples Todays weather hull

Todays weather hull Todays whether

Todays whether How to identify simile

How to identify simile Todays jeopardy

Todays jeopardy Todays wordlw

Todays wordlw Geographic regions final jeopardy

Geographic regions final jeopardy