Parallel Processing CS 676 Lecture 7 Message Passing


Parallel Processing (CS 676), Lecture 7: Message Passing using MPI*, Jeremy R. Johnson

*Parts of this lecture were derived from chapters 3-5 and 11 of Pacheco.

Introduction

- Objective: to introduce distributed-memory parallel programming using message passing, and the MPI standard for message passing.
- Topics
  - Introduction to MPI
    - hello.c
    - hello.f
  - Example problem (numeric integration)
  - Collective communication
  - Performance model

MPI

- Message Passing Interface
- Distributed memory model
  - Single Program Multiple Data (SPMD)
  - Communication using message passing
    - Send/Recv
  - Collective communication
    - Broadcast
    - Reduce (Allreduce)
    - Gather (Allgather)
    - Scatter
    - Alltoall (every process scatters a block to every other process)

Benefits/Disadvantages

- No new language is required
- Portable
- Good performance
- Explicitly forces the programmer to deal with local/global access
- Harder to program than shared memory: requires larger changes to programs and algorithms

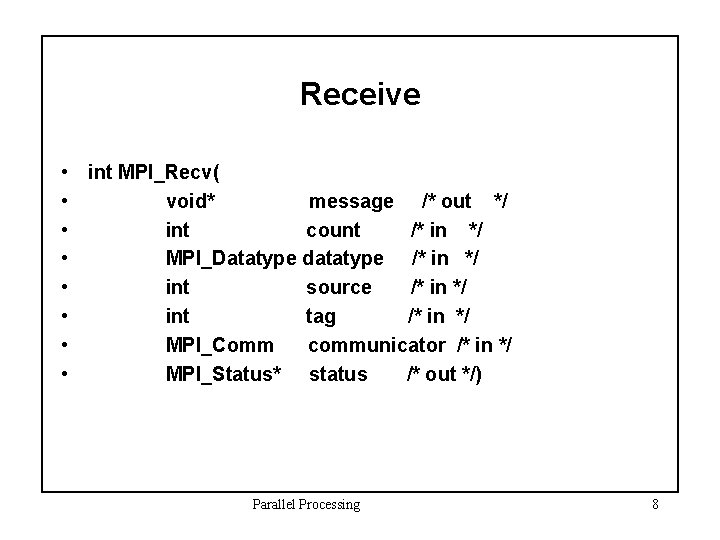

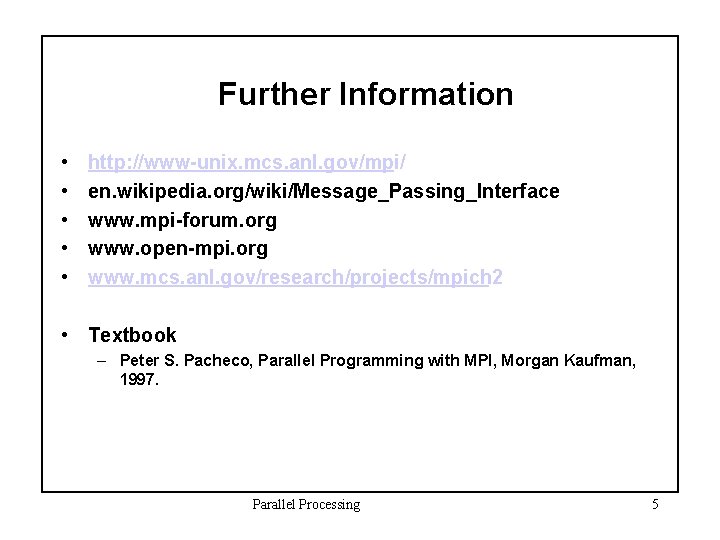

Further Information

- http://www-unix.mcs.anl.gov/mpi/
- en.wikipedia.org/wiki/Message_Passing_Interface
- www.mpi-forum.org
- www.open-mpi.org
- www.mcs.anl.gov/research/projects/mpich2
- Textbook: Peter S. Pacheco, Parallel Programming with MPI, Morgan Kaufmann, 1997.

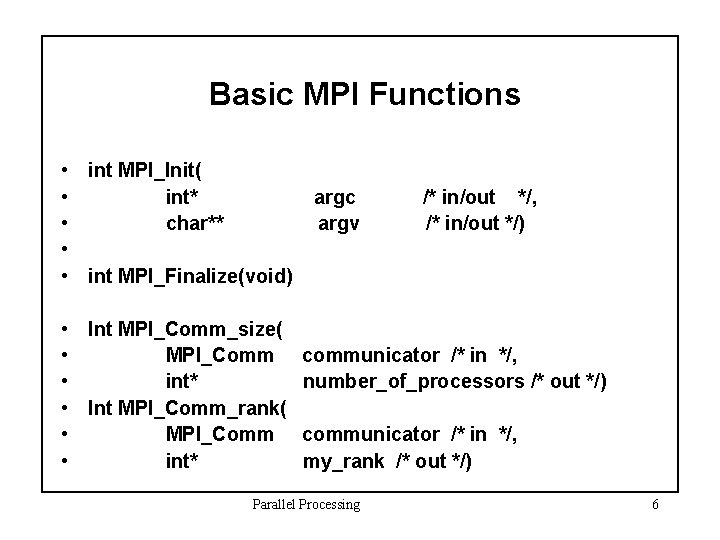

Basic MPI Functions

    int MPI_Init(
        int*    argc  /* in/out */,
        char*** argv  /* in/out */)

    int MPI_Finalize(void)

    int MPI_Comm_size(
        MPI_Comm communicator          /* in  */,
        int*     number_of_processors  /* out */)

    int MPI_Comm_rank(
        MPI_Comm communicator  /* in  */,
        int*     my_rank       /* out */)

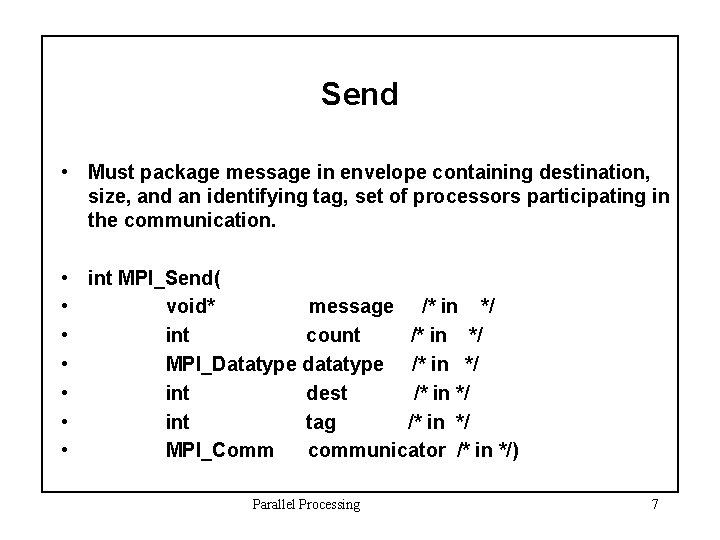

Send

The message must be packaged in an envelope that contains the destination, the size, an identifying tag, and the set of processes (the communicator) participating in the communication.

    int MPI_Send(
        void*        message       /* in */,
        int          count         /* in */,
        MPI_Datatype datatype      /* in */,
        int          dest          /* in */,
        int          tag           /* in */,
        MPI_Comm     communicator  /* in */)

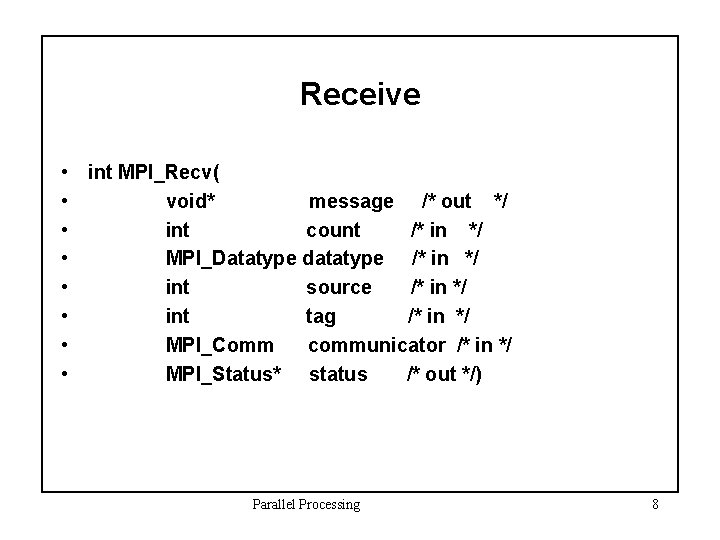

Receive

    int MPI_Recv(
        void*        message       /* out */,
        int          count         /* in  */,
        MPI_Datatype datatype      /* in  */,
        int          source        /* in  */,
        int          tag           /* in  */,
        MPI_Comm     communicator  /* in  */,
        MPI_Status*  status        /* out */)

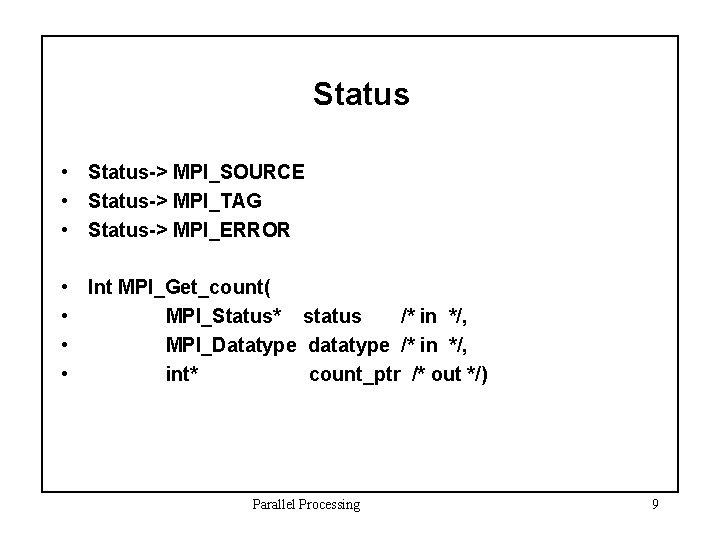

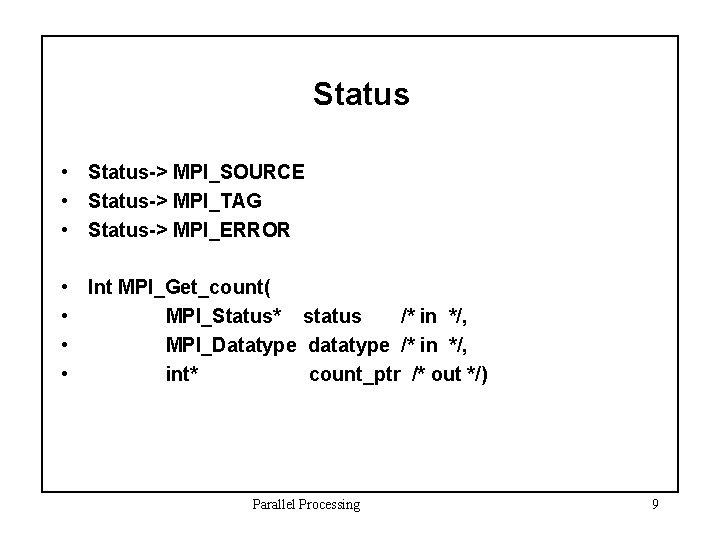

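Status

The status filled in by MPI_Recv records the envelope of the message that actually arrived:

- status->MPI_SOURCE: rank of the sender
- status->MPI_TAG: tag of the message
- status->MPI_ERROR: error code

The number of elements actually received is obtained with:

    int MPI_Get_count(
        MPI_Status*  status     /* in  */,
        MPI_Datatype datatype   /* in  */,
        int*         count_ptr  /* out */)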

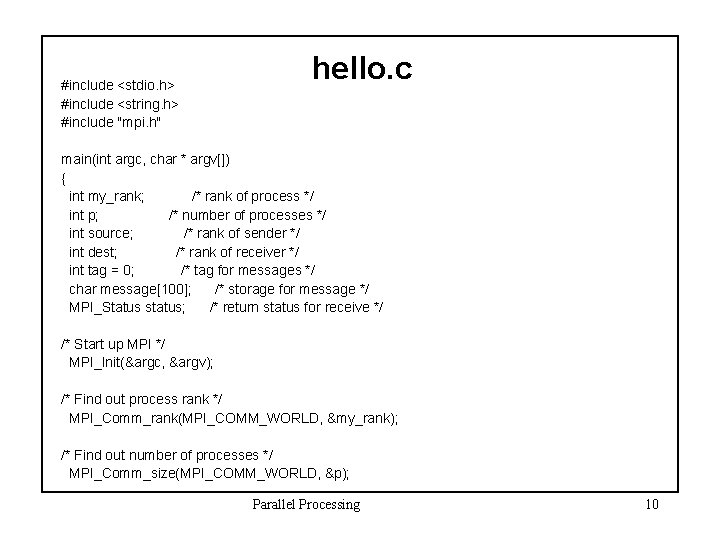

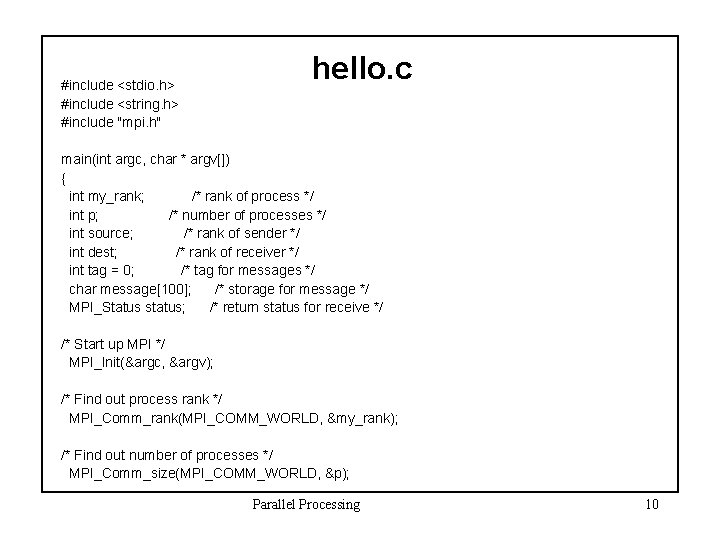

hello.c (part 1)

    #include <stdio.h>
    #include <string.h>
    #include "mpi.h"

    int main(int argc, char* argv[]) {
        int         my_rank;       /* rank of process            */
        int         p;             /* number of processes        */
        int         source;        /* rank of sender             */
        int         dest;          /* rank of receiver           */
        int         tag = 0;       /* tag for messages           */
        char        message[100];  /* storage for message        */
        MPI_Status  status;        /* return status for receive  */

        /* Start up MPI */
        MPI_Init(&argc, &argv);

        /* Find out process rank */
        MPI_Comm_rank(MPI_COMM_WORLD, &my_rank);

        /* Find out number of processes */
        MPI_Comm_size(MPI_COMM_WORLD, &p);

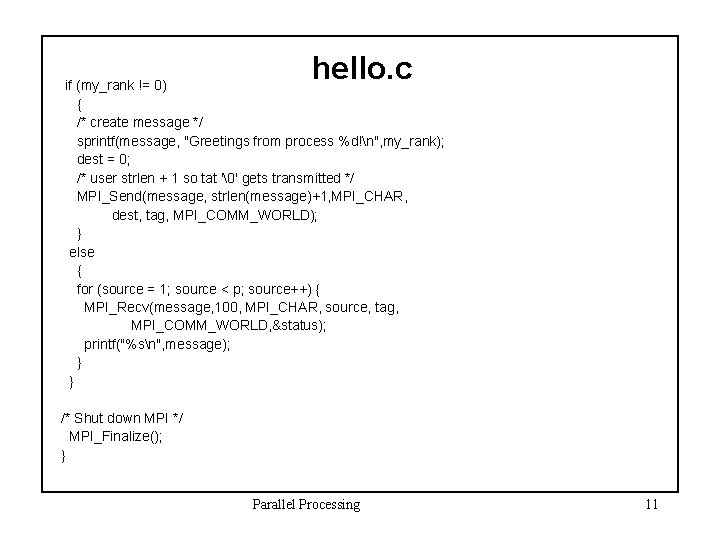

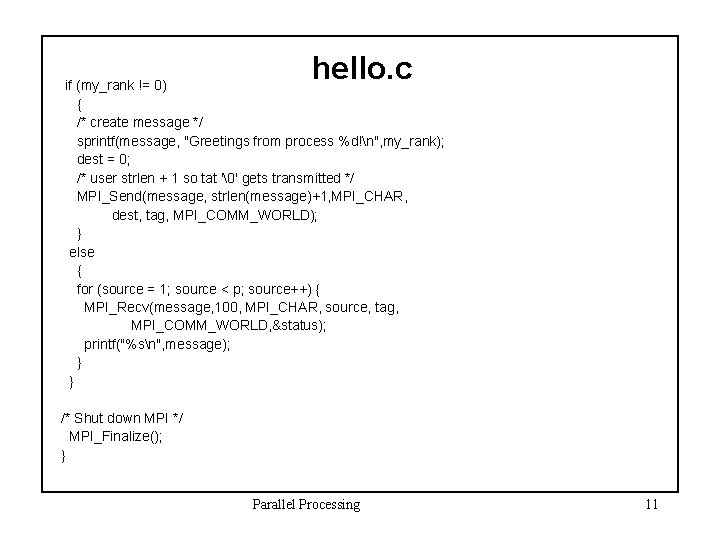

hello.c (part 2)

        if (my_rank != 0) {
            /* Create message */
            sprintf(message, "Greetings from process %d!", my_rank);
            dest = 0;
            /* Use strlen + 1 so that '\0' gets transmitted */
            MPI_Send(message, strlen(message)+1, MPI_CHAR,
                dest, tag, MPI_COMM_WORLD);
        } else { /* my_rank == 0 */
            for (source = 1; source < p; source++) {
                MPI_Recv(message, 100, MPI_CHAR, source, tag,
                    MPI_COMM_WORLD, &status);
                printf("%s\n", message);
            }
        }

        /* Shut down MPI */
        MPI_Finalize();
        return 0;
    }

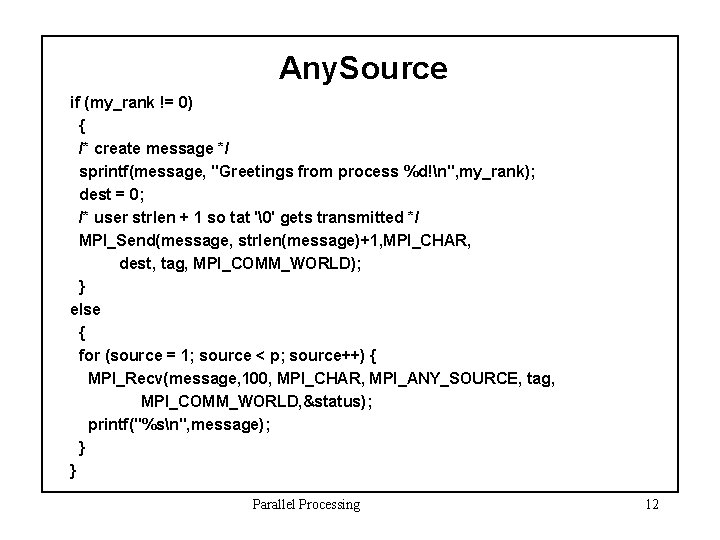

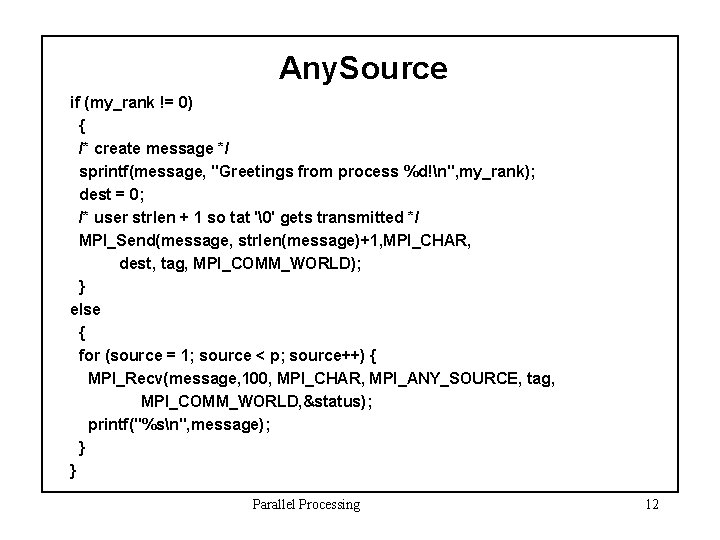

Any Source

Rank 0 can accept the greetings in the order they arrive, rather than in rank order, by passing MPI_ANY_SOURCE as the source:

    if (my_rank != 0) {
        /* Create message */
        sprintf(message, "Greetings from process %d!", my_rank);
        dest = 0;
        /* Use strlen + 1 so that '\0' gets transmitted */
        MPI_Send(message, strlen(message)+1, MPI_CHAR,
            dest, tag, MPI_COMM_WORLD);
    } else { /* my_rank == 0 */
        for (source = 1; source < p; source++) {
            /* Accept the next greeting, whichever process sent it */
            MPI_Recv(message, 100, MPI_CHAR, MPI_ANY_SOURCE, tag,
                MPI_COMM_WORLD, &status);
            printf("%s\n", message);
        }
    }

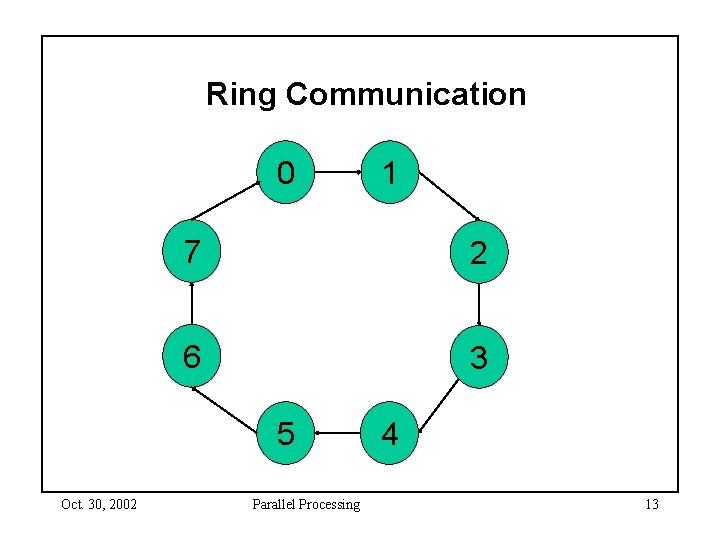

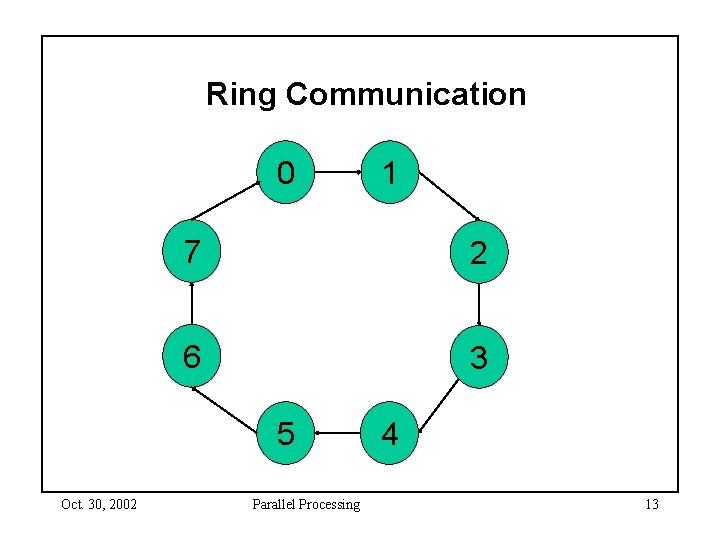

Ring Communication (Oct. 30, 2002)

[Figure: processes 0 through 7 arranged in a ring]

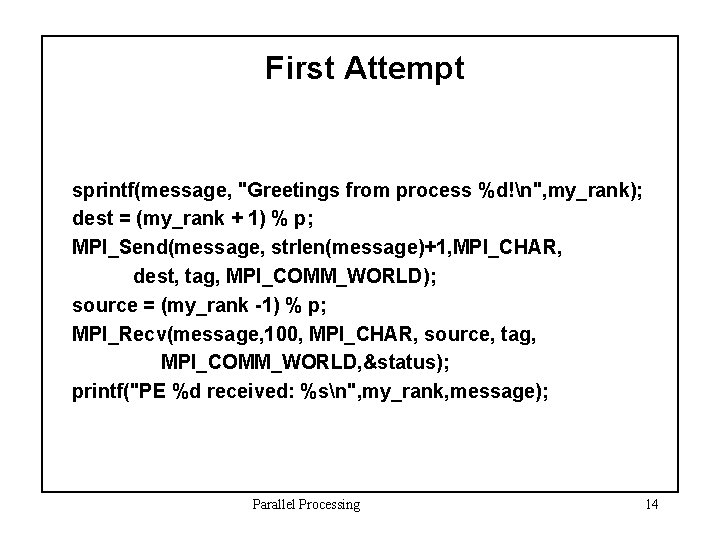

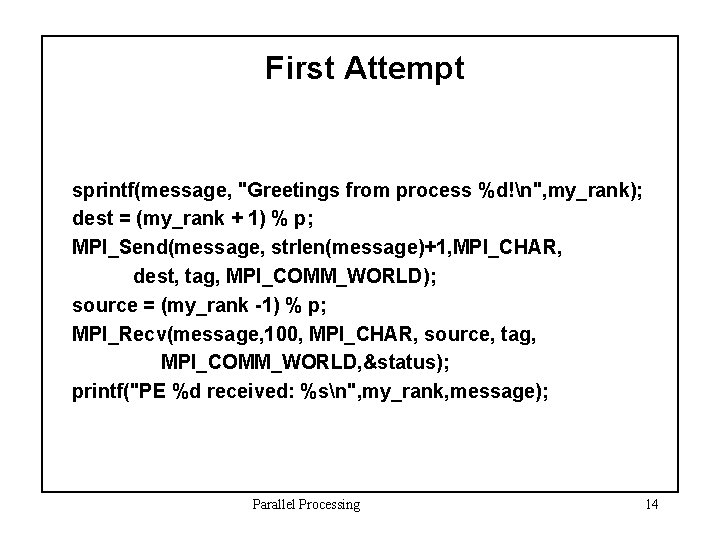

First Attempt

    sprintf(message, "Greetings from process %d!", my_rank);
    dest = (my_rank + 1) % p;
    MPI_Send(message, strlen(message)+1, MPI_CHAR, dest, tag, MPI_COMM_WORLD);
    /* Add p so that process 0's source is p - 1, not -1 */
    source = (my_rank - 1 + p) % p;
    MPI_Recv(message, 100, MPI_CHAR, source, tag, MPI_COMM_WORLD, &status);
    printf("PE %d received: %s\n", my_rank, message);
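In C, the % operator keeps the sign of its left operand, so (my_rank - 1) % p evaluates to -1 on process 0, which is not a valid rank. Adding p before taking the remainder keeps the result in 0..p-1. A small sketch; `ring_dest` and `ring_source` are hypothetical helper names.

```c
/* Successor of `rank` in a ring of p processes. */
int ring_dest(int rank, int p) {
    return (rank + 1) % p;
}

/* Predecessor of `rank`. Adding p keeps the left operand of %
   non-negative, so process 0's predecessor is p - 1 rather than -1. */
int ring_source(int rank, int p) {
    return (rank - 1 + p) % p;
}
```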

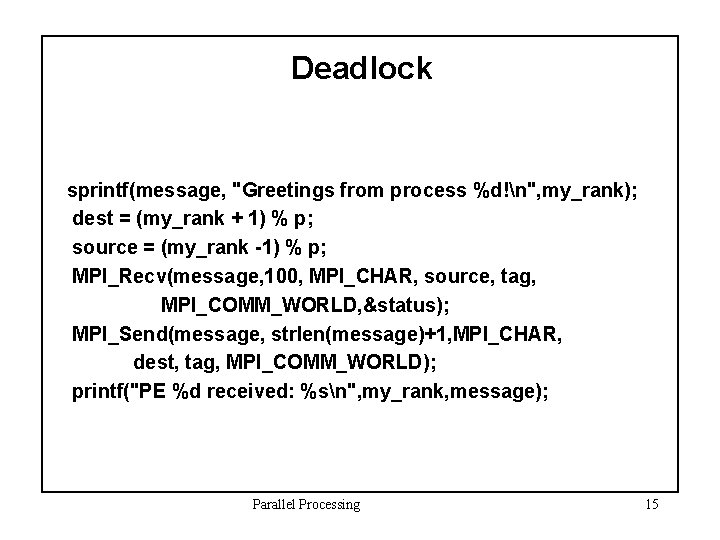

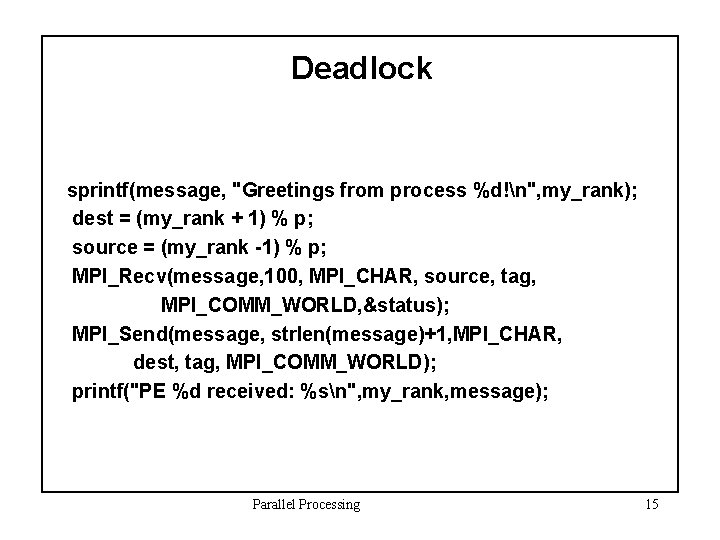

Deadlock

If every process calls MPI_Recv first, no process ever reaches MPI_Send, so all of them block forever:

    sprintf(message, "Greetings from process %d!", my_rank);
    dest = (my_rank + 1) % p;
    source = (my_rank - 1 + p) % p;
    /* Every process waits here for its predecessor: deadlock */
    MPI_Recv(message, 100, MPI_CHAR, source, tag, MPI_COMM_WORLD, &status);
    MPI_Send(message, strlen(message)+1, MPI_CHAR, dest, tag, MPI_COMM_WORLD);
    printf("PE %d received: %s\n", my_rank, message);

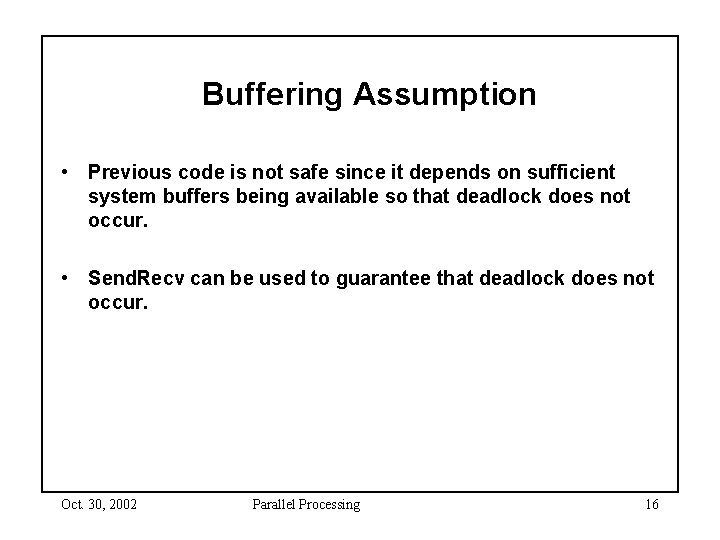

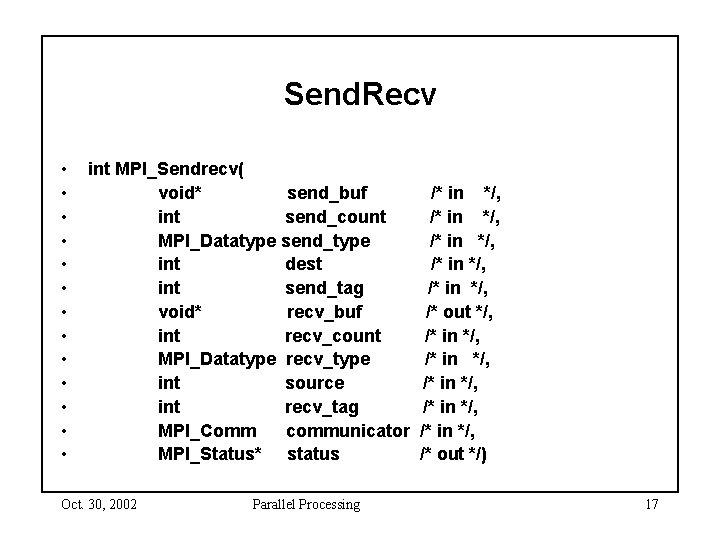

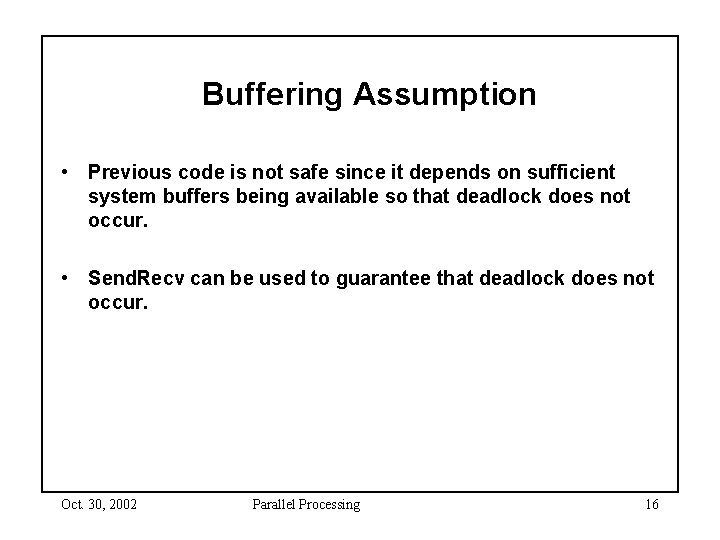

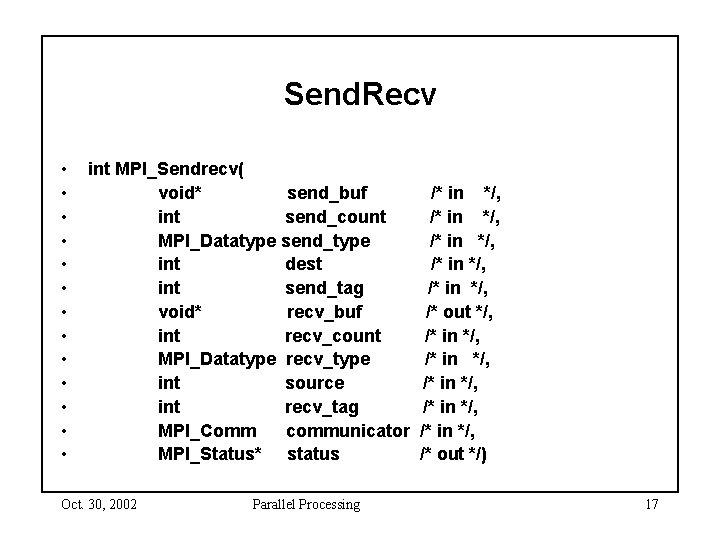

Buffering Assumption

- The first attempt is not safe: it avoids deadlock only if the system provides enough buffering for each MPI_Send to complete before the matching MPI_Recv is posted. Without that buffering, every process blocks in MPI_Send.
- MPI_Sendrecv can be used to guarantee that deadlock does not occur.

MPI_Sendrecv

    int MPI_Sendrecv(
        void*        send_buf      /* in  */,
        int          send_count    /* in  */,
        MPI_Datatype send_type     /* in  */,
        int          dest          /* in  */,
        int          send_tag      /* in  */,
        void*        recv_buf      /* out */,
        int          recv_count    /* in  */,
        MPI_Datatype recv_type     /* in  */,
        int          source        /* in  */,
        int          recv_tag      /* in  */,
        MPI_Comm     communicator  /* in  */,
        MPI_Status*  status        /* out */)

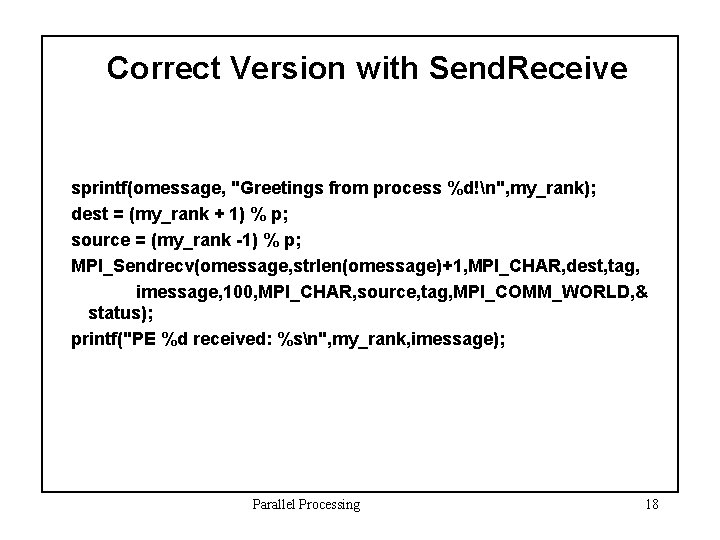

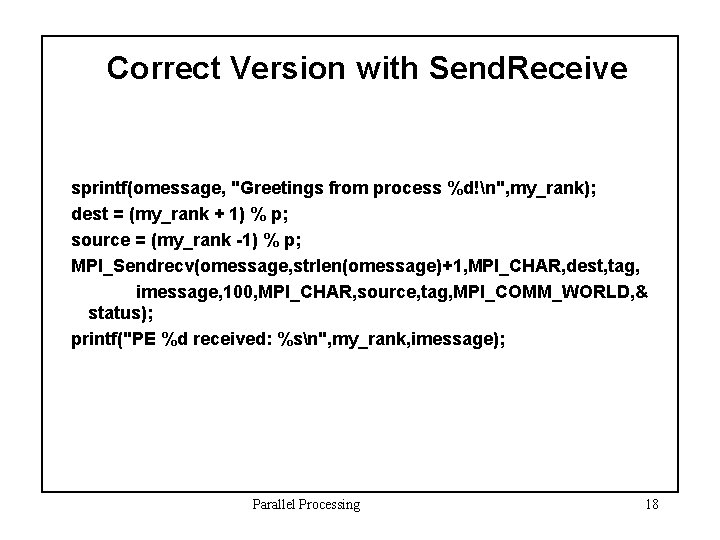

Correct Version with MPI_Sendrecv

    sprintf(omessage, "Greetings from process %d!", my_rank);
    dest = (my_rank + 1) % p;
    source = (my_rank - 1 + p) % p;
    /* Separate buffers: the send and receive buffers must not overlap */
    MPI_Sendrecv(omessage, strlen(omessage)+1, MPI_CHAR, dest, tag,
                 imessage, 100, MPI_CHAR, source, tag,
                 MPI_COMM_WORLD, &status);
    printf("PE %d received: %s\n", my_rank, imessage);

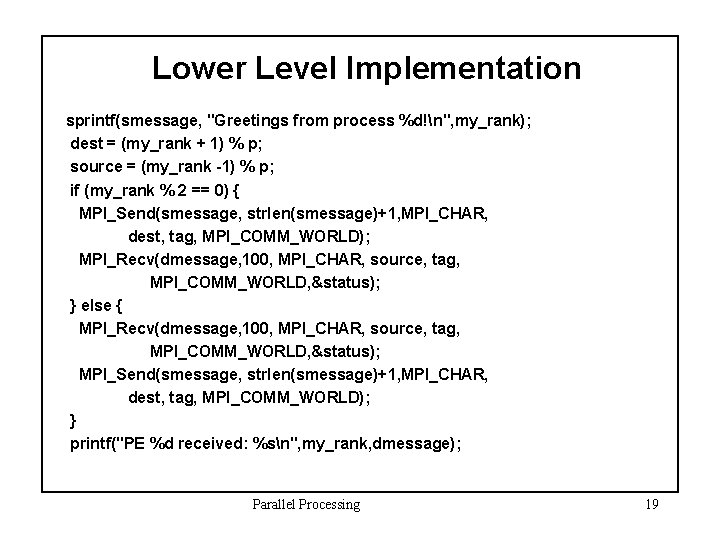

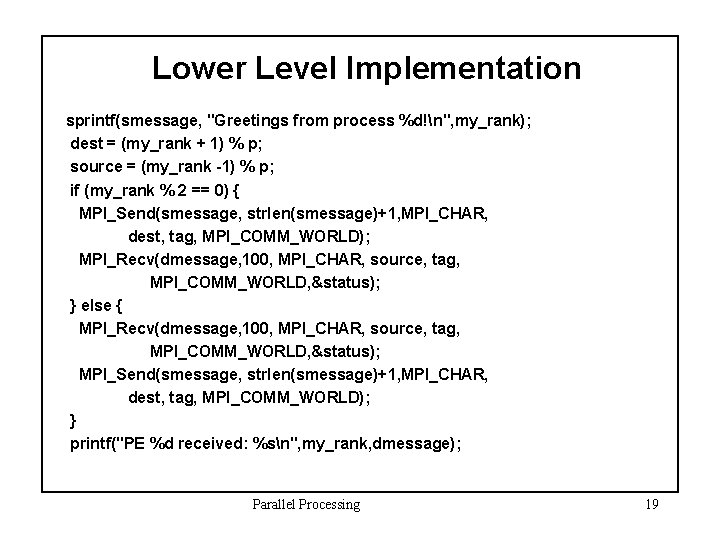

Lower-Level Implementation

Even-ranked processes send first and odd-ranked processes receive first, so (for p > 1) the ring completes even if MPI_Send does not buffer:

    sprintf(smessage, "Greetings from process %d!", my_rank);
    dest = (my_rank + 1) % p;
    source = (my_rank - 1 + p) % p;
    if (my_rank % 2 == 0) {
        MPI_Send(smessage, strlen(smessage)+1, MPI_CHAR, dest, tag, MPI_COMM_WORLD);
        MPI_Recv(dmessage, 100, MPI_CHAR, source, tag, MPI_COMM_WORLD, &status);
    } else {
        MPI_Recv(dmessage, 100, MPI_CHAR, source, tag, MPI_COMM_WORLD, &status);
        MPI_Send(smessage, strlen(smessage)+1, MPI_CHAR, dest, tag, MPI_COMM_WORLD);
    }
    printf("PE %d received: %s\n", my_rank, dmessage);

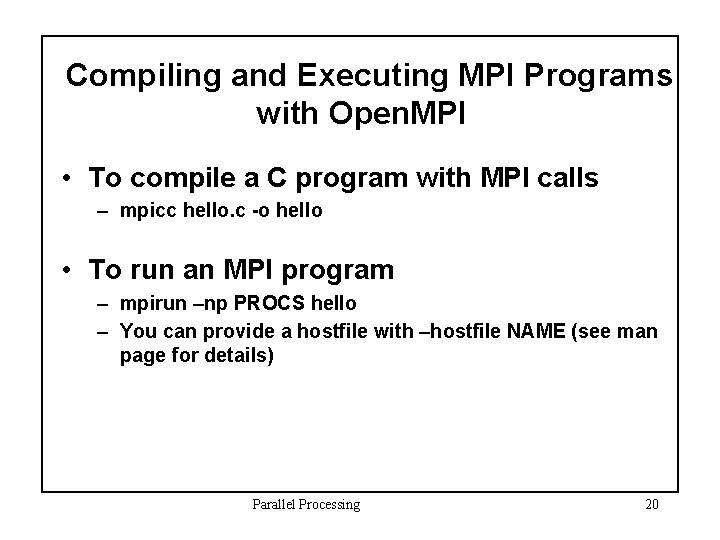

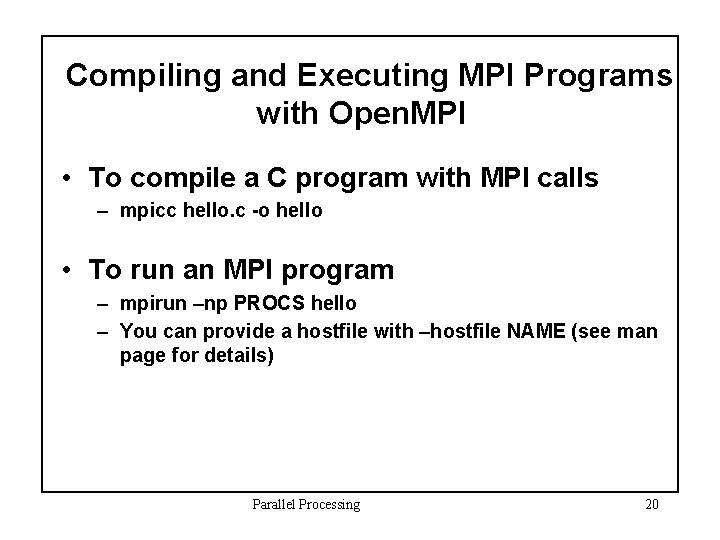

Compiling and Executing MPI Programs with Open MPI

- To compile a C program with MPI calls:
  - mpicc hello.c -o hello
- To run an MPI program:
  - mpirun -np PROCS ./hello
  - You can provide a hostfile with --hostfile NAME (see the man page for details).

![dot.c](https://slidetodoc.com/presentation_image_h/e91c0c6fabf634206bf145fdb5005a62/image-21.jpg)

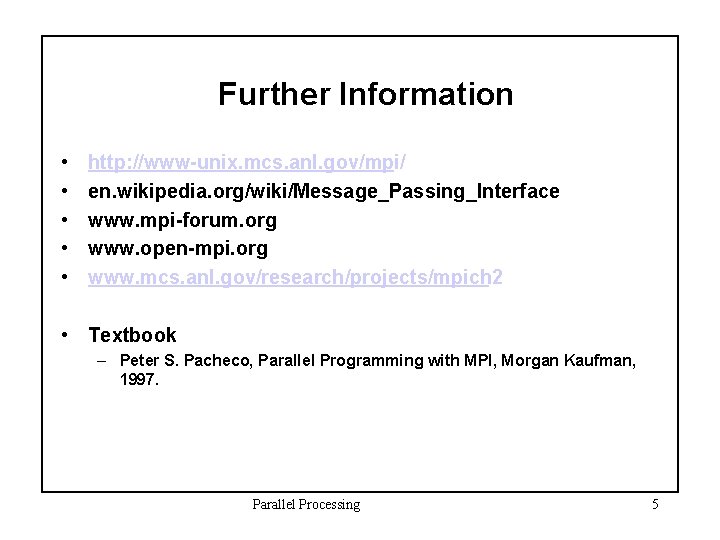

dot.c

    #include <stdio.h>

    float Serial_dot(
        float x[]  /* in */,
        float y[]  /* in */,
        int   n    /* in */) {
        int   i;
        float sum = 0.0;

        for (i = 0; i < n; i++)
            sum = sum + x[i]*y[i];
        return sum;
    }

![Parallel Dot](https://slidetodoc.com/presentation_image_h/e91c0c6fabf634206bf145fdb5005a62/image-22.jpg)

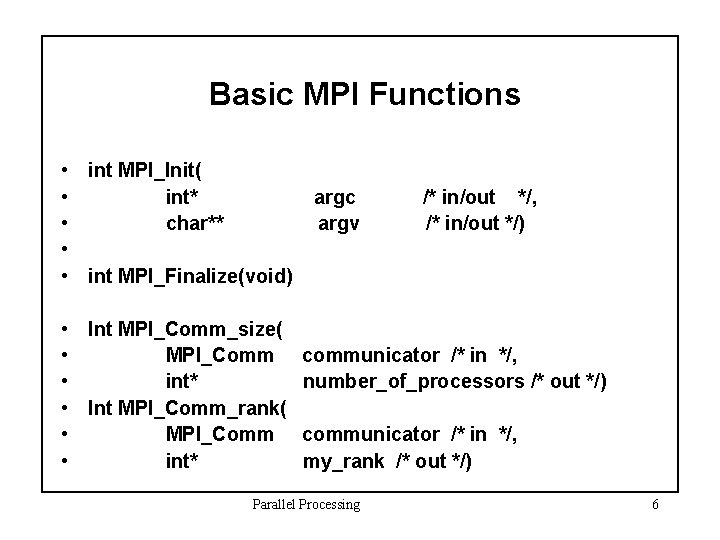

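Parallel Dot

Each process computes the dot product of its own blocks of n_bar elements; MPI_Reduce sums the partial results on process 0, so only process 0 returns the full dot product.

    float Parallel_dot(
        float local_x[]  /* in */,
        float local_y[]  /* in */,
        int   n_bar      /* in */) {
        float local_dot;
        float dot = 0.0;  /* overwritten only on process 0 */

        local_dot = Serial_dot(local_x, local_y, n_bar);
        MPI_Reduce(&local_dot, &dot, 1, MPI_FLOAT, MPI_SUM, 0, MPI_COMM_WORLD);
        return dot;
    }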

![Parallel All Dot](https://slidetodoc.com/presentation_image_h/e91c0c6fabf634206bf145fdb5005a62/image-23.jpg)

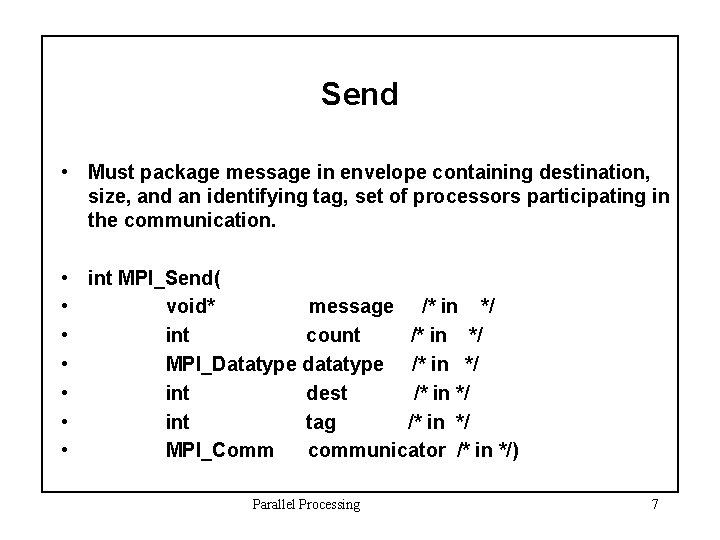

Parallel All Dot

With MPI_Allreduce every process receives the sum, so the call takes no root argument:

    float Parallel_dot(
        float local_x[]  /* in */,
        float local_y[]  /* in */,
        int   n_bar      /* in */) {
        float local_dot;
        float dot;

        local_dot = Serial_dot(local_x, local_y, n_bar);
        MPI_Allreduce(&local_dot, &dot, 1, MPI_FLOAT, MPI_SUM, MPI_COMM_WORLD);
        return dot;
    }

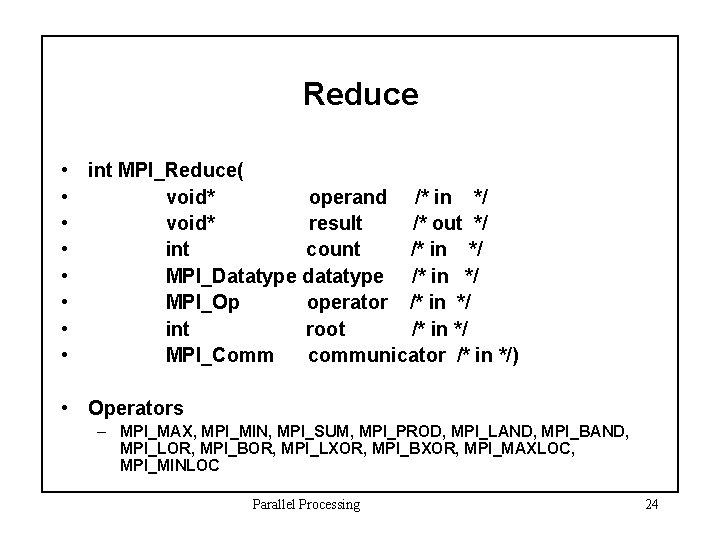

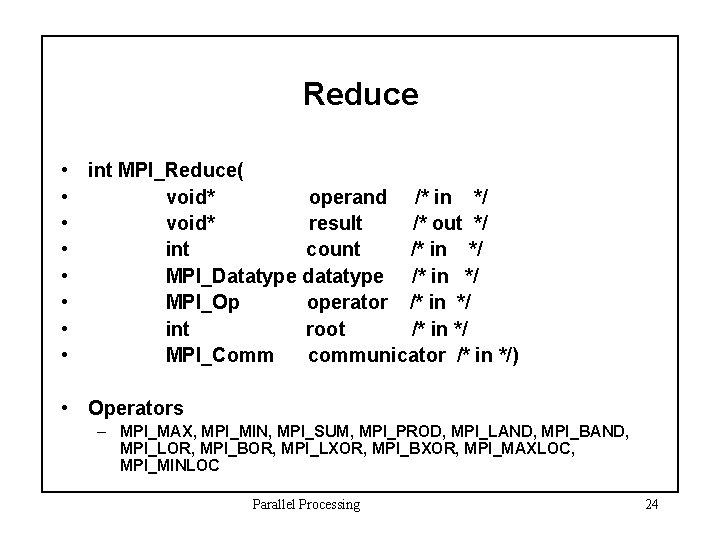

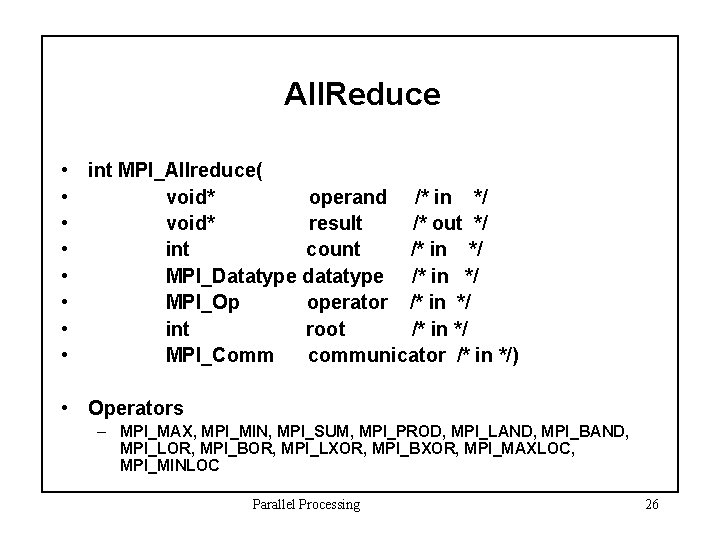

Reduce

    int MPI_Reduce(
        void*        operand       /* in  */,
        void*        result        /* out, significant only on root */,
        int          count         /* in  */,
        MPI_Datatype datatype      /* in  */,
        MPI_Op       operator      /* in  */,
        int          root          /* in  */,
        MPI_Comm     communicator  /* in  */)

Operators: MPI_MAX, MPI_MIN, MPI_SUM, MPI_PROD, MPI_LAND, MPI_BAND, MPI_LOR, MPI_BOR, MPI_LXOR, MPI_BXOR, MPI_MAXLOC, MPI_MINLOC

Reduce (tree-structured sum over 8 processes)

- Initially, process i holds xi, for i = 0, ..., 7.
- Step 1: processes 0, 1, 2, 3 hold x0+x4, x1+x5, x2+x6, x3+x7.
- Step 2: processes 0 and 1 hold x0+x4+x2+x6 and x1+x5+x3+x7.
- Step 3: process 0 holds x0+x1+x2+x3+x4+x5+x6+x7.
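The tree on this slide can be checked with a serial simulation in which an array entry stands in for each process's partial sum. This is a sketch of the schedule shown in the diagram, assuming p is a power of 2; real MPI implementations choose their own reduction algorithm, and `simulate_tree_sum` is a hypothetical name.

```c
/* Serial simulation of the tree-structured sum: at each step the upper
   half of the still-active processes "sends" its partial sum to the
   partner `half` ranks below it. Assumes p is a power of 2. */
void simulate_tree_sum(double partial[], int p) {
    for (int half = p / 2; half >= 1; half /= 2) {
        /* process i + half sends to process i, which adds it in */
        for (int i = 0; i < half; i++)
            partial[i] += partial[i + half];
    }
    /* partial[0] now holds the total, after log2(p) steps */
}
```

With p = 8 the three passes of the outer loop reproduce Steps 1, 2, and 3 above.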

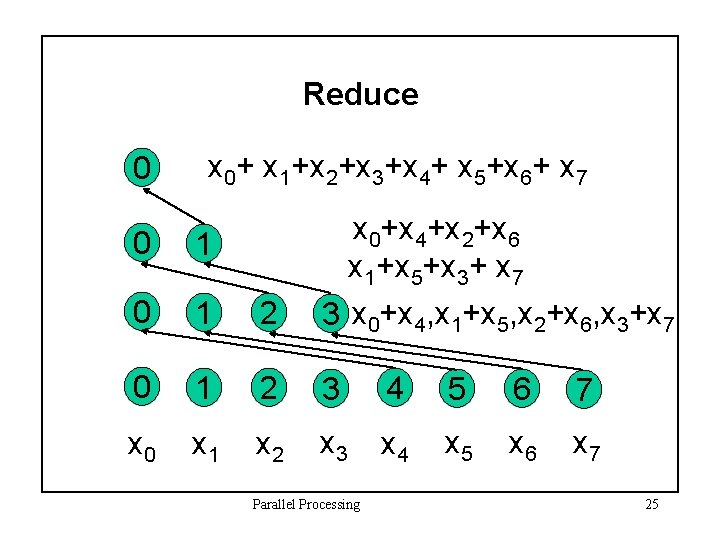

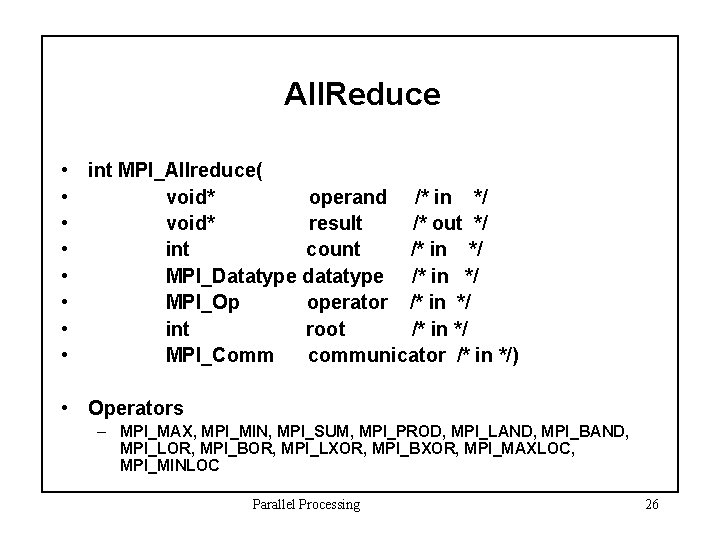

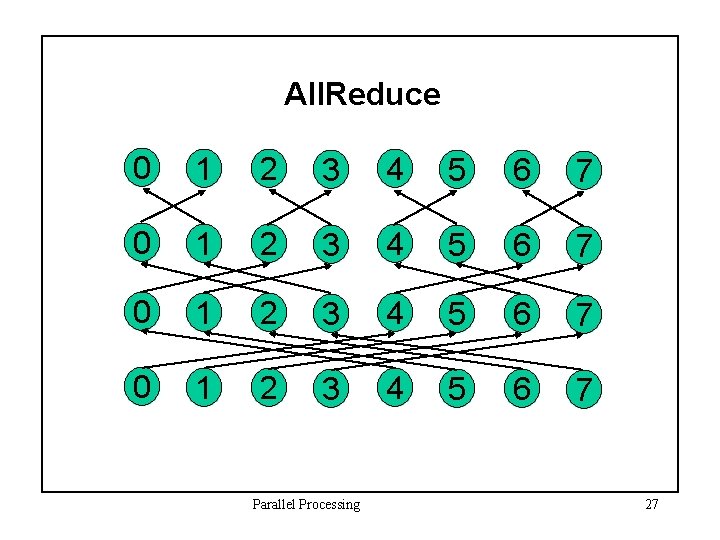

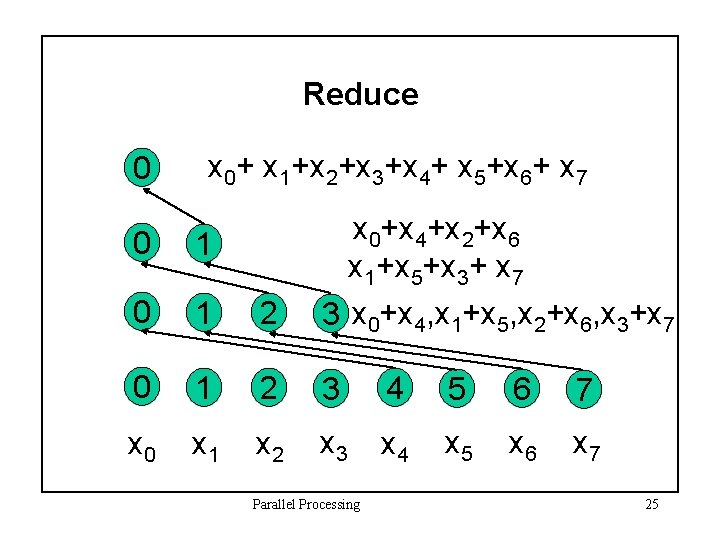

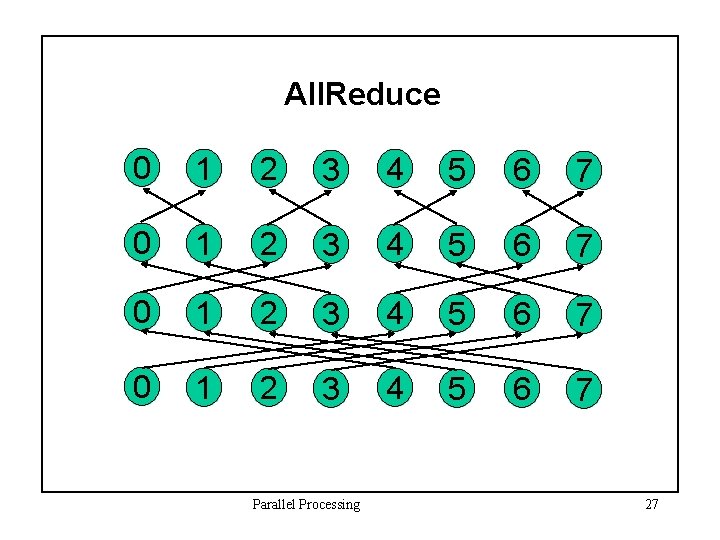

Allreduce

    int MPI_Allreduce(
        void*        operand       /* in  */,
        void*        result        /* out */,
        int          count         /* in  */,
        MPI_Datatype datatype      /* in  */,
        MPI_Op       operator      /* in  */,
        MPI_Comm     communicator  /* in  */)

Unlike MPI_Reduce there is no root: every process receives the result.

Operators: MPI_MAX, MPI_MIN, MPI_SUM, MPI_PROD, MPI_LAND, MPI_BAND, MPI_LOR, MPI_BOR, MPI_LXOR, MPI_BXOR, MPI_MAXLOC, MPI_MINLOC

Allreduce

[Figure: Allreduce among processes 0 through 7]

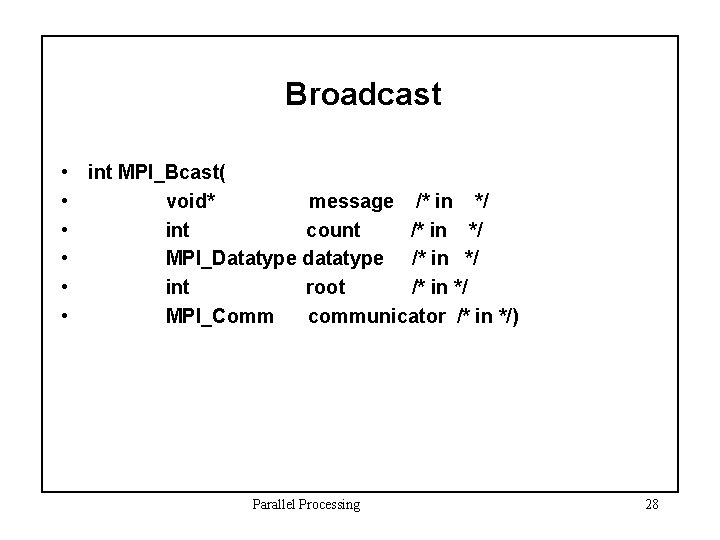

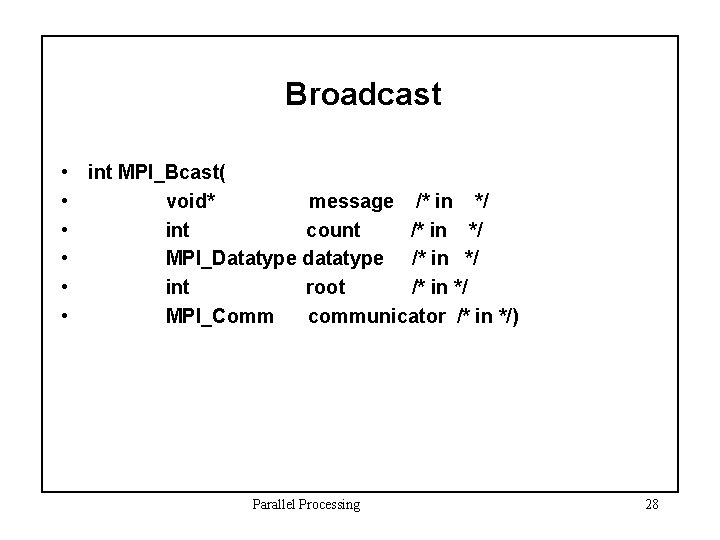

Broadcast

    int MPI_Bcast(
        void*        message       /* in on root, out on the others */,
        int          count         /* in */,
        MPI_Datatype datatype      /* in */,
        int          root          /* in */,
        MPI_Comm     communicator  /* in */)

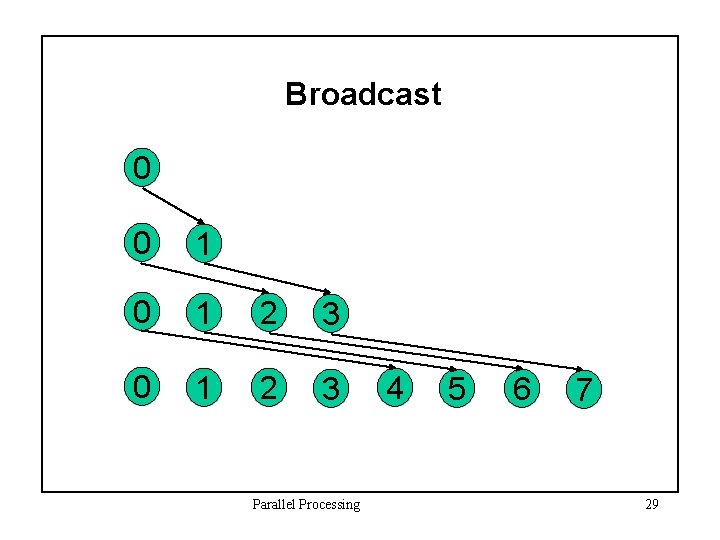

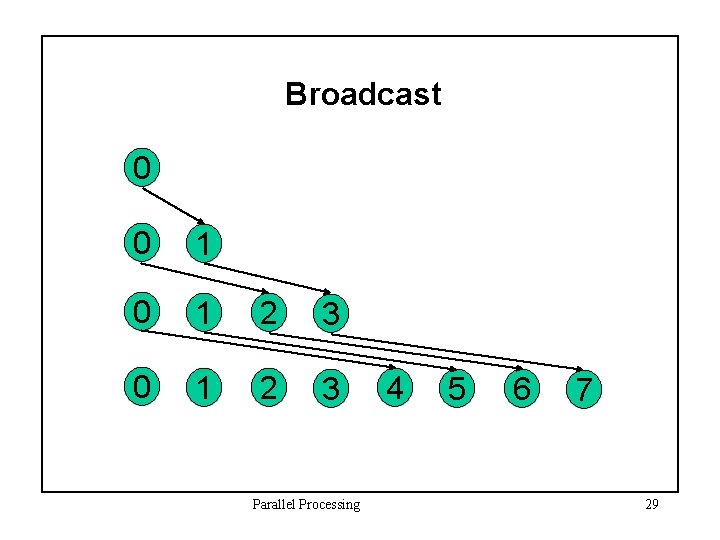

Broadcast

[Figure: broadcast from process 0 to processes 0 through 7]
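One common way to broadcast is the reduction tree run in reverse: at each step every process that already has the data sends it to one that does not, doubling the number of holders. The serial simulation below assumes this scheme and that p is a power of 2; MPI implementations pick their own broadcast algorithm, and `simulate_tree_bcast` is a hypothetical name.

```c
/* Serial simulation of a tree broadcast from process 0. Before each step,
   processes 0..half-1 hold the data; each sends it to process i + half.
   Assumes p is a power of 2. Returns the number of steps taken. */
int simulate_tree_bcast(int value[], int p) {
    int steps = 0;
    for (int half = 1; half < p; half *= 2) {
        for (int i = 0; i < half; i++)
            value[i + half] = value[i];
        steps++;
    }
    return steps;  /* log2(p) communication steps */
}
```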

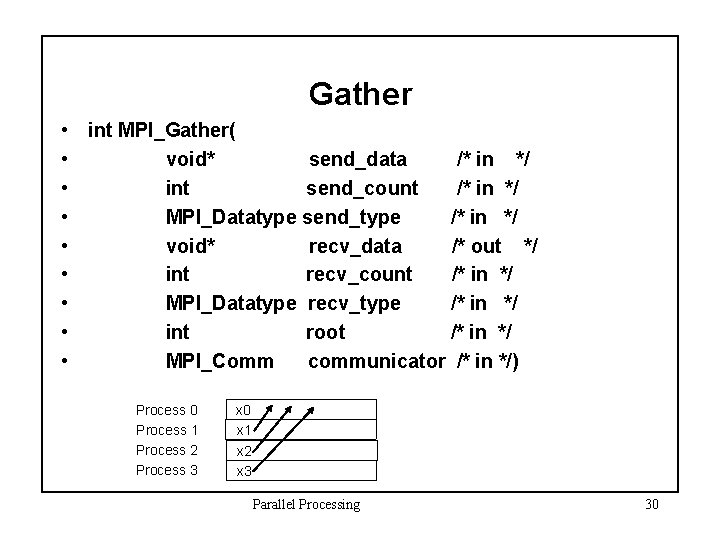

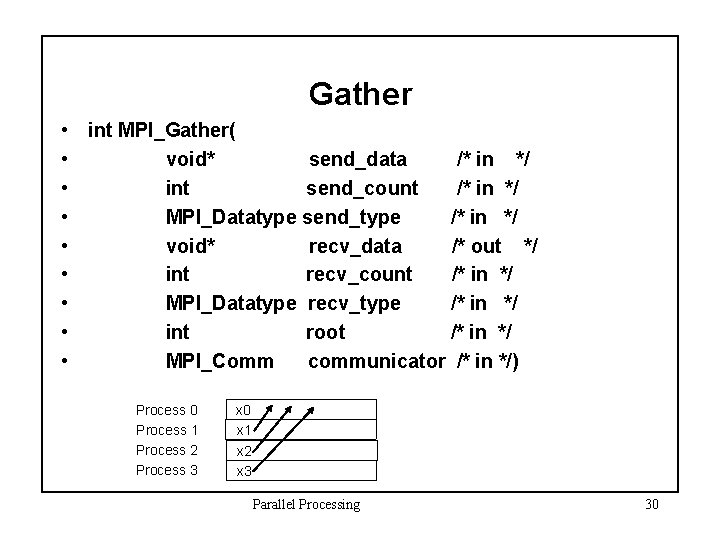

Gather

    int MPI_Gather(
        void*        send_data     /* in  */,
        int          send_count    /* in  */,
        MPI_Datatype send_type     /* in  */,
        void*        recv_data     /* out, significant only on root */,
        int          recv_count    /* in, count received from each process */,
        MPI_Datatype recv_type     /* in  */,
        int          root          /* in  */,
        MPI_Comm     communicator  /* in  */)

[Figure: x0, x1, x2, x3 held by processes 0 through 3 are collected, in rank order, on the root]

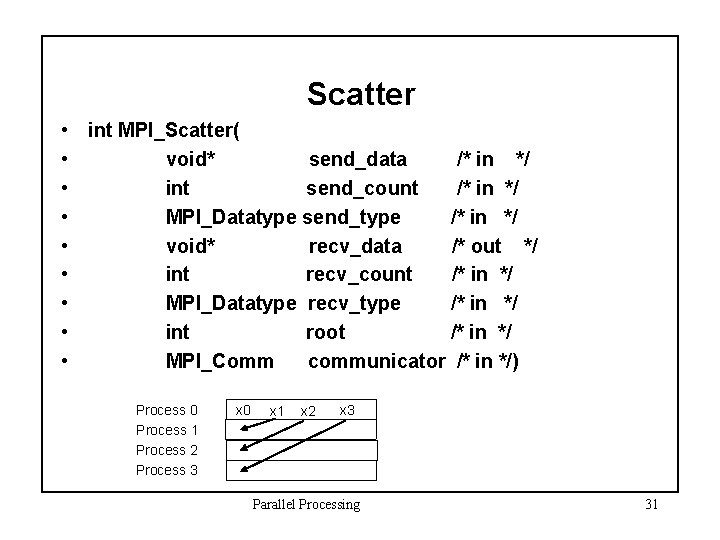

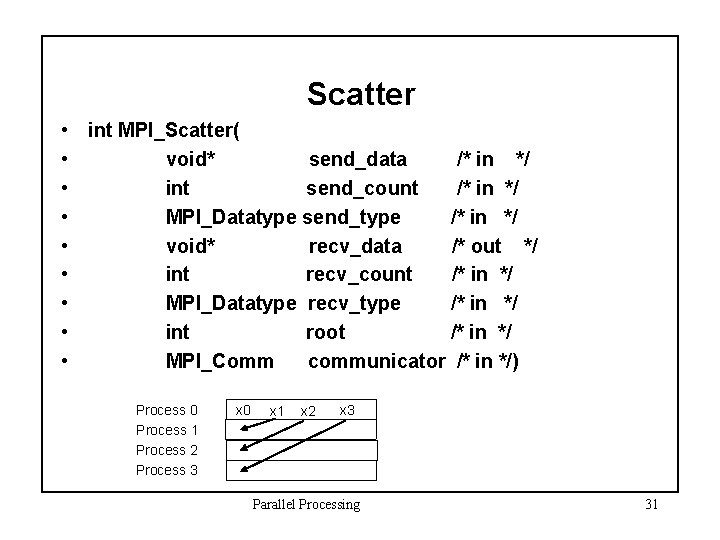

Scatter

    int MPI_Scatter(
        void*        send_data     /* in, significant only on root */,
        int          send_count    /* in, count sent to each process */,
        MPI_Datatype send_type     /* in  */,
        void*        recv_data     /* out */,
        int          recv_count    /* in  */,
        MPI_Datatype recv_type     /* in  */,
        int          root          /* in  */,
        MPI_Comm     communicator  /* in  */)

[Figure: the root's array x0, x1, x2, x3 is split so that process i receives xi]

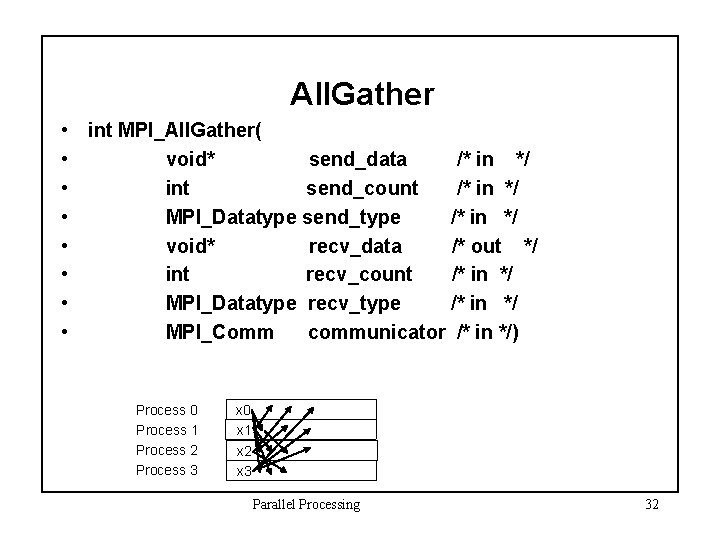

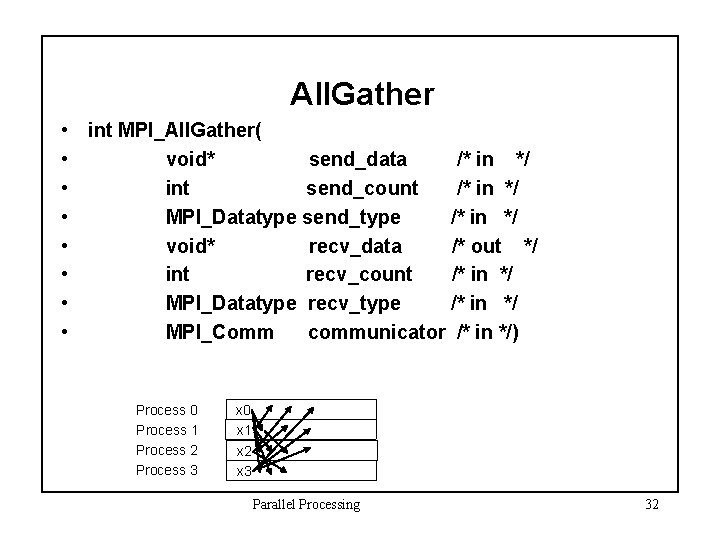

Allgather

    int MPI_Allgather(
        void*        send_data     /* in  */,
        int          send_count    /* in  */,
        MPI_Datatype send_type     /* in  */,
        void*        recv_data     /* out */,
        int          recv_count    /* in, count received from each process */,
        MPI_Datatype recv_type     /* in  */,
        MPI_Comm     communicator  /* in  */)

[Figure: x0, x1, x2, x3 from processes 0 through 3 are collected on every process]

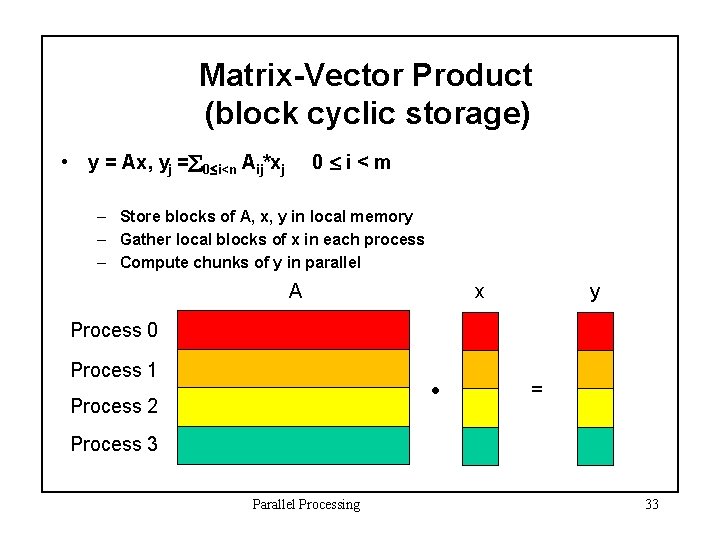

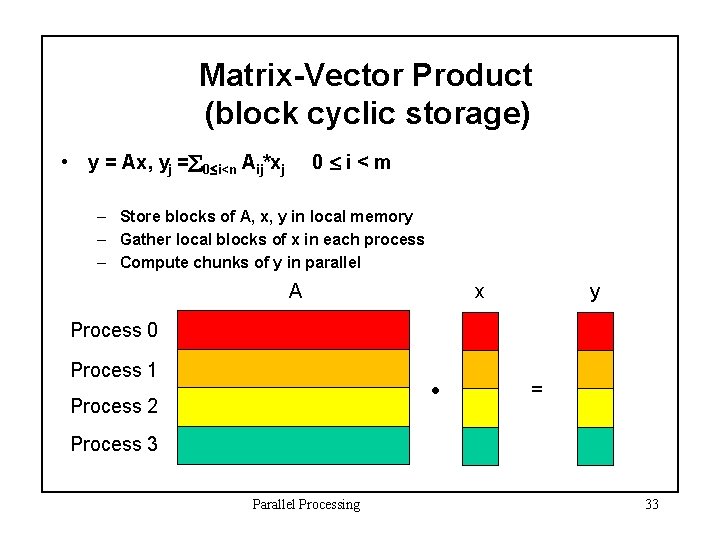

Matrix-Vector Product (block-row distribution)

- $y = Ax$, where $y_i = \sum_{j=0}^{n-1} A_{ij} x_j$ for $0 \le i < m$
  - Store blocks of A, x, and y in local memory
  - Gather the local blocks of x so that every process has all of x
  - Compute the blocks of y in parallel

[Figure: A, x, and y divided into blocks of rows among processes 0 through 3, with y = Ax]
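A serial version of the formula above is handy as a reference when testing the parallel code on small inputs. This sketch stores A row-major in a flat array (an assumption; the parallel slide uses a 2-D LOCAL_MATRIX_T), and `serial_mat_vect` and `first_row` are hypothetical names.

```c
/* Serial reference for y = Ax, with A stored row-major as m x n:
   y[i] = sum over j of A[i][j] * x[j], for 0 <= i < m. */
void serial_mat_vect(const float A[], const float x[], float y[],
                     int m, int n) {
    for (int i = 0; i < m; i++) {
        y[i] = 0.0f;
        for (int j = 0; j < n; j++)
            y[i] += A[i * n + j] * x[j];
    }
}

/* Under a block-row distribution with local_m = m / p, process `rank`
   owns global rows first_row .. first_row + local_m - 1. */
int first_row(int rank, int m, int p) {
    return rank * (m / p);
}
```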

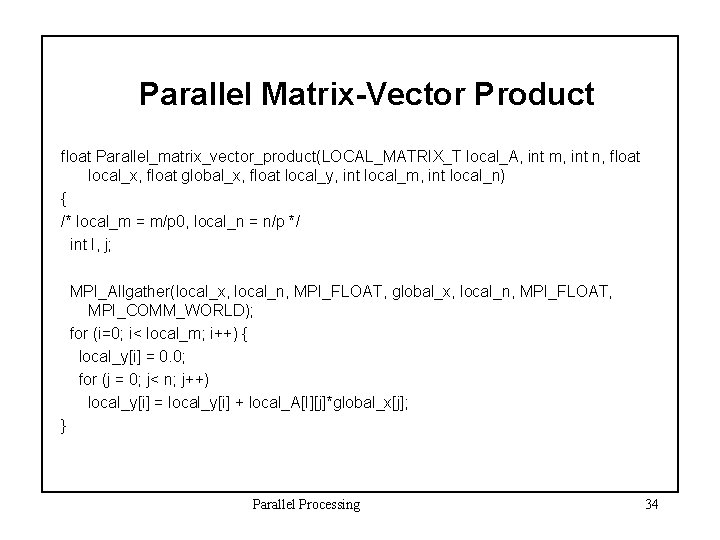

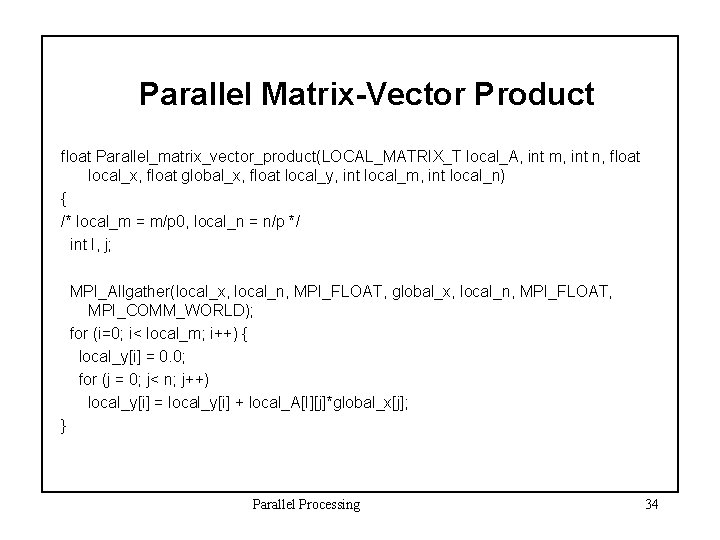

Parallel Matrix-Vector Product

    /* Assumed (as in Pacheco): typedef float LOCAL_MATRIX_T[MAX_ORDER][MAX_ORDER]; */
    void Parallel_matrix_vector_product(
        LOCAL_MATRIX_T local_A     /* in  */,
        int            m           /* in  */,
        int            n           /* in  */,
        float          local_x[]   /* in  */,
        float          global_x[]  /* workspace for all of x */,
        float          local_y[]   /* out */,
        int            local_m     /* in  */,
        int            local_n     /* in  */) {
        /* local_m = m/p, local_n = n/p */
        int i, j;

        /* Collect every process's block of x on every process */
        MPI_Allgather(local_x, local_n, MPI_FLOAT,
                      global_x, local_n, MPI_FLOAT, MPI_COMM_WORLD);

        for (i = 0; i < local_m; i++) {
            local_y[i] = 0.0;
            for (j = 0; j < n; j++)
                local_y[i] = local_y[i] + local_A[i][j]*global_x[j];
        }
    }

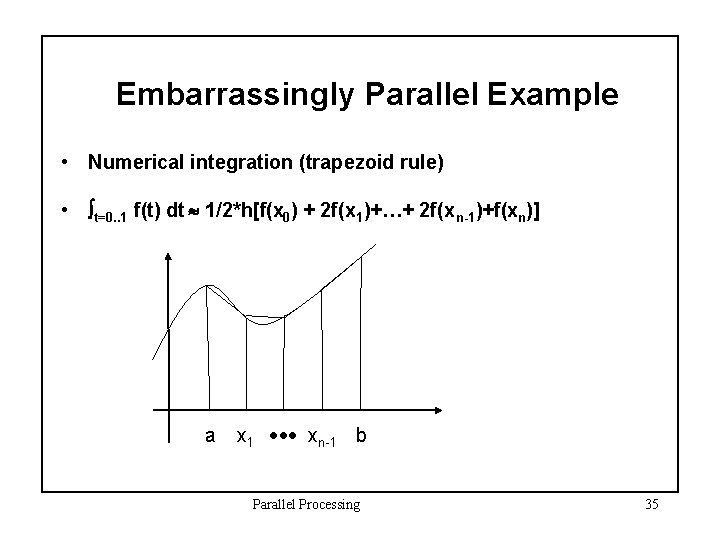

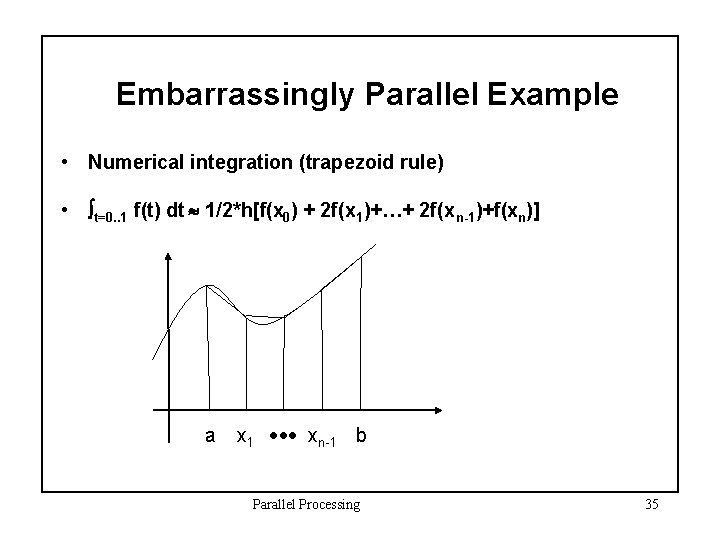

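Embarrassingly Parallel Example

- Numerical integration (trapezoid rule)
- $\int_a^b f(t)\,dt \approx \frac{h}{2}\left[f(x_0) + 2f(x_1) + \cdots + 2f(x_{n-1}) + f(x_n)\right]$, where $h = (b-a)/n$ and $x_i = a + ih$, so that $x_0 = a$ and $x_n = b$

[Figure: trapezoids under the curve f between a and b, with interior points x1, ..., xn-1]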