
Parallel Computing Explained
Parallel Performance Analysis

Slides prepared from the CI-Tutor courses at NCSA, http://ci-tutor.ncsa.uiuc.edu/

By S. Masoud Sadjadi
School of Computing and Information Sciences
Florida International University
March 2009

Agenda

- 1 Parallel Computing Overview
- 2 How to Parallelize a Code
- 3 Porting Issues
- 4 Scalar Tuning
- 5 Parallel Code Tuning
- 6 Timing and Profiling
- 7 Cache Tuning
- 8 Parallel Performance Analysis
  - 8.1 Speedup
  - 8.2 Speedup Extremes
  - 8.3 Efficiency
  - 8.4 Amdahl's Law
  - 8.5 Speedup Limitations
  - 8.6 Benchmarks
  - 8.7 Summary
- 9 About the IBM Regatta P690

Parallel Performance Analysis

Now that you have parallelized your code and run it on a parallel computer using multiple processors, you may want to know the performance gain that parallelization has achieved. This chapter describes how to compute parallel code performance. Often the performance gain is not perfect, so this chapter also explains some of the reasons for limitations on parallel performance. Finally, it covers the kinds of information you should provide in a benchmark and gives some sample benchmarks.

Speedup

The speedup of your code tells you how much performance gain is achieved by running your program in parallel on multiple processors. A simple definition is that it is the time it takes a program to run on a single processor divided by the time it takes to run on multiple processors ($S_p = T_1 / T_p$). Speedup generally ranges between 0 and p, where p is the number of processors.

Scalability

When you compute with multiple processors in a parallel environment, you will also want to know how your code scales. The scalability of a parallel code is its ability to achieve performance proportional to the number of processors used. As you run your code with more and more processors, you want to see its performance continue to improve. Computing speedup is a good way to measure how a program scales as more processors are used.
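As a minimal sketch, speedup can be computed from two wall-clock measurements. The function name `speedup` and the timings below are hypothetical, chosen only to illustrate the arithmetic; they are not results from any real run.

```python
def speedup(t1: float, tp: float) -> float:
    """Return S_p = t1 / tp: single-processor time over p-processor time."""
    if t1 <= 0 or tp <= 0:
        raise ValueError("run times must be positive")
    return t1 / tp


# Hypothetical wall-clock times in seconds, keyed by processor count.
timings = {1: 120.0, 2: 62.0, 4: 33.0, 8: 19.0}

for p, tp in sorted(timings.items()):
    # A code that scales well shows S_p still growing as p grows.
    print(f"p = {p}: S_p = {speedup(timings[1], tp):.2f}")
```

Checking how S_p grows across the table, rather than at a single processor count, is what tells you how the code scales.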

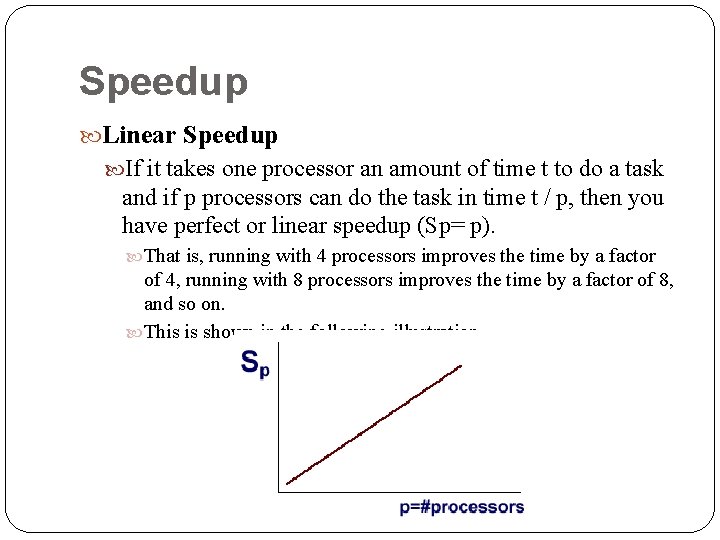

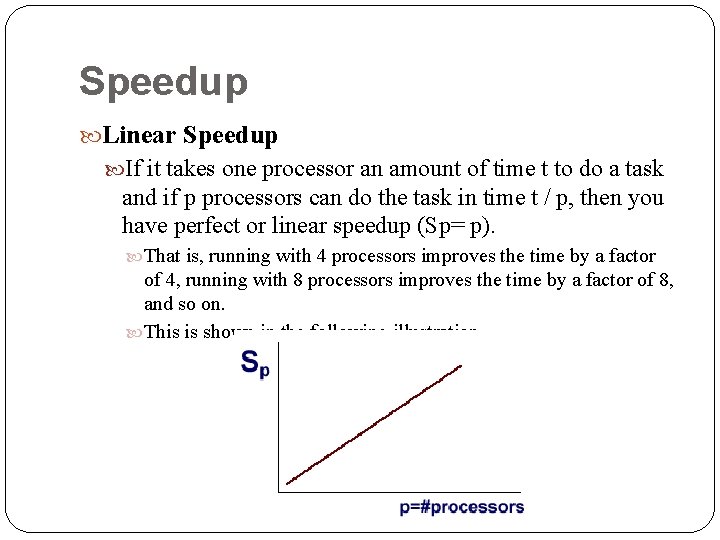

Speedup

Linear Speedup

If it takes one processor an amount of time t to do a task and p processors can do the task in time t / p, then you have perfect or linear speedup ($S_p = p$). That is, running with 4 processors improves the time by a factor of 4, running with 8 processors improves it by a factor of 8, and so on. This is shown in the following illustration.
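The definition of linear speedup can be checked numerically. This sketch assumes a hypothetical single-processor time of 80 s; `ideal_time` is an illustrative helper, not part of any library.

```python
def ideal_time(t1: float, p: int) -> float:
    """Run time under linear speedup: the serial time t1 divided evenly by p."""
    return t1 / p


t1 = 80.0  # hypothetical single-processor time in seconds
for p in (1, 2, 4, 8):
    tp = ideal_time(t1, p)
    # Under linear speedup, S_p = t1 / tp is exactly p.
    print(f"p = {p}: T_p = {tp:.1f} s, S_p = {t1 / tp:.1f}")
```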

Speedup Extremes

The extremes of speedup occur when speedup is greater than p, called super-linear speedup, or less than 1.

Super-Linear Speedup

You might wonder how super-linear speedup can occur. How can speedup be greater than the number of processors used? The answer usually lies in the program's memory use. When using multiple processors, each processor gets only part of the problem, compared to the single-processor case. The smaller problem can make better use of the memory hierarchy, that is, the cache and the registers. For example, each processor's share of the problem may fit in cache when the entire problem would not. When super-linear speedup is achieved, it often indicates that the sequential code, run on one processor, had serious cache-miss problems. The most common programs that achieve super-linear speedup are those that solve dense linear algebra problems.

Speedup Extremes

Parallel Code Slower than Sequential Code

When speedup is less than 1, the parallel code runs slower than the sequential code. This happens when there isn't enough computation for each processor to do. The overhead of creating and controlling the parallel threads outweighs the benefit of parallel computation, and the code runs slower. To eliminate this problem, you can try increasing the problem size or running with fewer processors.
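The ranges described in the two Speedup Extremes slides can be summarized as a small classifier. This is a sketch: `classify_speedup` and its `tol` parameter (which absorbs floating-point noise when comparing against p) are hypothetical, and the sample measurements are made up.

```python
def classify_speedup(s: float, p: int, tol: float = 1e-9) -> str:
    """Label a measured speedup s obtained with p processors."""
    if s < 1.0:
        return "slower than sequential"  # overhead outweighs the parallel work
    if s > p + tol:
        return "super-linear"  # often a sign of better cache use per processor
    if abs(s - p) <= tol:
        return "linear"
    return "sub-linear"


# Hypothetical (speedup, processors) measurements.
for s, p in [(0.8, 4), (3.1, 4), (4.0, 4), (5.2, 4)]:
    print(f"S_{p} = {s}: {classify_speedup(s, p)}")
```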

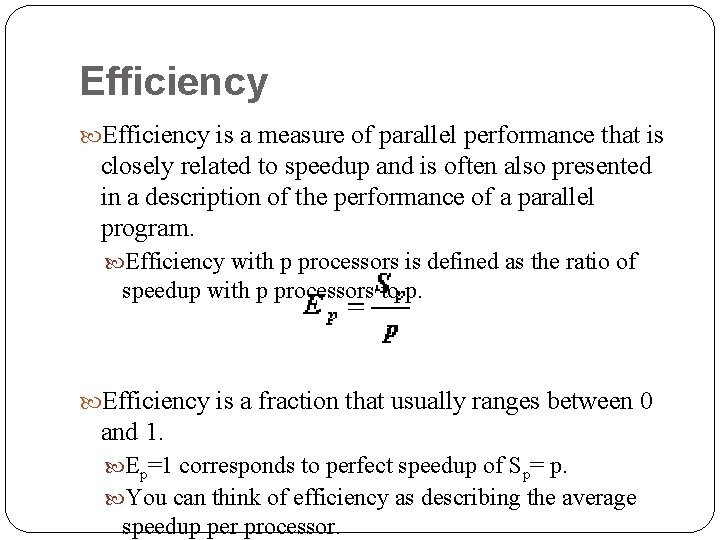

Efficiency

Efficiency is a measure of parallel performance that is closely related to speedup and is often presented alongside it when describing the performance of a parallel program. Efficiency with p processors is defined as the ratio of the speedup with p processors to p ($E_p = S_p / p$). Efficiency is a fraction that usually ranges between 0 and 1; $E_p = 1$ corresponds to perfect speedup, $S_p = p$. You can think of efficiency as the average speedup per processor.
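A minimal sketch of the efficiency calculation, assuming hypothetical timings of 100 s on one processor and 15 s on eight; `efficiency` is an illustrative helper name.

```python
def efficiency(t1: float, tp: float, p: int) -> float:
    """Return E_p = S_p / p, with S_p = t1 / tp."""
    return (t1 / tp) / p


# Hypothetical run: 100 s on 1 processor, 15 s on 8 processors.
e8 = efficiency(100.0, 15.0, 8)
print(f"E_8 = {e8:.2f}")  # average speedup per processor, here below 1
```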

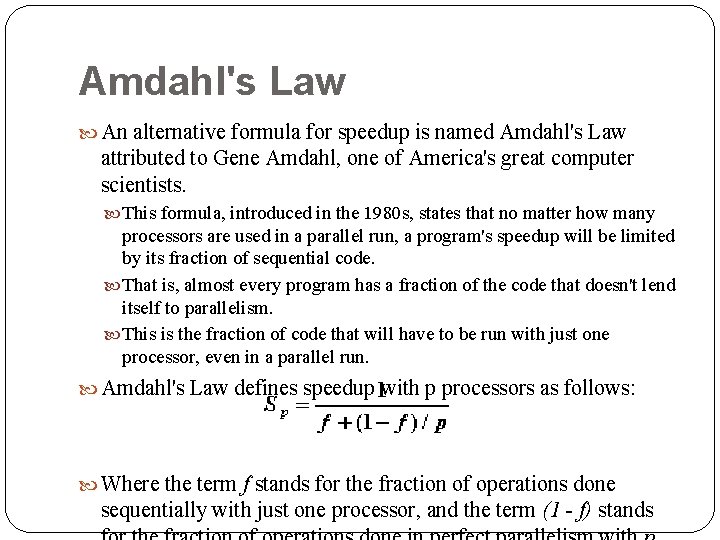

Amdahl's Law

An alternative formula for speedup is Amdahl's Law, attributed to Gene Amdahl, one of America's great computer scientists. This formula, introduced in 1967, states that no matter how many processors are used in a parallel run, a program's speedup is limited by its fraction of sequential code. That is, almost every program has a fraction of code that doesn't lend itself to parallelism. This fraction has to be run on just one processor, even in a parallel run. Amdahl's Law defines speedup with p processors as follows:

$$S_p = \frac{1}{f + \dfrac{1 - f}{p}}$$

where the term f stands for the fraction of operations done sequentially with just one processor, and the term (1 - f) stands for the fraction of operations done in perfect parallelism with p processors.

Amdahl's Law

The sequential fraction of code, f, is a unitless measure ranging between 0 and 1. When f is 0, meaning there is no sequential code, speedup is p, or perfect parallelism. This can be seen by substituting f = 0 in Amdahl's formula, which gives $S_p = p$. When f is 1, meaning there is no parallel code, speedup is 1, or there is no benefit from parallelism. This can be seen by substituting f = 1 in the formula, which gives $S_p = 1$. Thus Amdahl's speedup ranges between 1 and p, where p is the number of processors used in a parallel processing run.
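The two endpoint cases can be checked directly with the standard form of Amdahl's Law, $S_p = 1 / (f + (1 - f)/p)$. The helper name `amdahl_speedup` and the choice p = 16 are illustrative assumptions.

```python
def amdahl_speedup(f: float, p: int) -> float:
    """Amdahl's Law: S_p = 1 / (f + (1 - f) / p), f = sequential fraction."""
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must lie in [0, 1]")
    if p < 1:
        raise ValueError("p must be at least 1")
    return 1.0 / (f + (1.0 - f) / p)


p = 16
print(amdahl_speedup(0.0, p))  # f = 0: no sequential code, so S_p = p
print(amdahl_speedup(1.0, p))  # f = 1: no parallel code, so S_p = 1
print(amdahl_speedup(0.1, p))  # in between: 1 < S_p < p
```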

Amdahl's Law

The interpretation of Amdahl's Law is that speedup is limited by the fact that not all parts of a code can be run in parallel. Letting the number of processors go to infinity in the formula, your code's speedup is still limited by 1 / f. For example, a code that is 5% sequential (f = 0.05) can never run more than 20 times faster, however many processors are used. Amdahl's Law shows that the sequential fraction of code has a strong effect on speedup. This helps explain the need for large problem sizes when using parallel computers. It is well known in the parallel computing community that you cannot take a small application and expect it to perform well on a parallel computer. To get good performance, you need to run large applications, with large data arrays and lots of computation. The reason is that as the problem size increases, the opportunity for parallelism grows and the sequential fraction shrinks, and so does its importance for speedup.
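To see the 1 / f ceiling emerge, this sketch evaluates Amdahl's Law for a hypothetical sequential fraction of 5% at increasing processor counts. The helper name is illustrative; the formula is the standard form of Amdahl's Law.

```python
def amdahl_speedup(f: float, p: int) -> float:
    """Amdahl's Law: S_p = 1 / (f + (1 - f) / p), where f is the sequential fraction."""
    return 1.0 / (f + (1.0 - f) / p)


f = 0.05          # hypothetical: 5% of the operations are sequential
limit = 1.0 / f   # bound on S_p as p grows without limit

for p in (1, 10, 100, 1000, 10000):
    s = amdahl_speedup(f, p)
    print(f"p = {p:>5}: S_p = {s:6.2f} ({s / limit:.0%} of the 1/f limit)")
```

Going from 1,000 to 10,000 processors barely moves the speedup, because the sequential 5% already dominates the run time.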

Agenda

- 8 Parallel Performance Analysis
  - 8.1 Speedup
  - 8.2 Speedup Extremes
  - 8.3 Efficiency
  - 8.4 Amdahl's Law
  - 8.5 Speedup Limitations
    - 8.5.1 Memory Contention Limitation
    - 8.5.2 Problem Size Limitation
  - 8.6 Benchmarks
  - 8.7 Summary

Speedup Limitations

This section covers some of the reasons why a program doesn't get perfect speedup. Some of the reasons for limitations on speedup are:

- Too much I/O: Speedup is limited when the code is I/O bound, that is, when there is too much input or output compared to the amount of computation.
- Wrong algorithm: Speedup is limited when the numerical algorithm is not suitable for a parallel computer. You need to replace it with a parallel algorithm.
- Too much memory contention: Speedup is limited when there is too much memory contention. You need to redesign the code with attention to data locality. Cache reuse techniques will help here.

Speedup Limitations

- Wrong problem size: Speedup is limited when the problem size is too small to take best advantage of a parallel computer. Speedup is also limited when the problem size is fixed, that is, when the problem size doesn't grow as you compute with more processors.
- Too much sequential code: Speedup is limited when there is too much sequential code. This is shown by Amdahl's Law.
- Too much parallel overhead: Speedup is limited when there is too much parallel overhead compared to the amount of computation. This overhead is the additional CPU cycles spent creating parallel regions, creating threads, synchronizing threads, spin-waiting or blocking threads, and ending parallel regions.
- Load imbalance: Speedup is limited when the processors have different workloads. The processors that finish early sit idle while they wait for the others to catch up.
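The load-imbalance effect can be quantified in isolation. This sketch assumes that imbalance is the only loss and that each processor's work time is known; the helper names and the per-processor times are hypothetical.

```python
def imbalance_efficiency(work: list[float]) -> float:
    """Efficiency when load imbalance is the only loss.

    The parallel step ends when the busiest processor finishes, so the
    elapsed time is max(work), while perfect balance would take sum(work) / p.
    """
    p = len(work)
    return sum(work) / (p * max(work))


def idle_times(work: list[float]) -> list[float]:
    """Time each processor spends waiting for the slowest one."""
    slowest = max(work)
    return [slowest - w for w in work]


# Hypothetical per-processor work, in seconds, for the same total of 40 s.
balanced = [10.0, 10.0, 10.0, 10.0]
skewed = [4.0, 8.0, 12.0, 16.0]
for name, work in (("balanced", balanced), ("skewed", skewed)):
    print(name, imbalance_efficiency(work), idle_times(work))
```

Both cases do the same total work, but the skewed split takes as long as its busiest processor (16 s instead of 10 s).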

Memory Contention Limitation

Gene Golub, a professor of Computer Science at Stanford University, writes in his book on parallel computing that the best way to define memory contention is with the word delay. When different processors all want to read from or write to main memory, there is a delay until the memory is free. On the SGI Origin 2000, you can determine whether your code has memory contention problems by using SGI's perfex utility. The perfex utility is covered in the Cache Tuning lecture in this course; you can also refer to SGI's manual page, man perfex, for more details. On the Linux clusters, you can use the hardware performance counter tools to get information on memory performance. On the IA-32 platform, use perfex, vprof, hmpcount, or psrun/perfsuite. On the IA-64 platform, use vprof, pfmon, or psrun/perfsuite.

Memory Contention Limitation

Many of these tools can be used with the PAPI performance counter interface. Be sure to refer to the man pages and web pages on the NCSA website for more information. If the output of the utility shows that memory contention is a problem, you will want to use programming techniques that reduce memory contention. A good way to reduce memory contention is to access elements from the processor's cache instead of main memory. Some programming techniques for doing this are:

- Access arrays with unit stride.
- Order nested do loops (in Fortran) so that the innermost loop index is the leftmost index of the arrays in the loop. In C, the order is the opposite: the innermost loop index should be the rightmost array index.
- Avoid array sizes that are the same as the size of the data cache, or that are exact fractions or exact multiples of it.
- Pad common blocks.

These techniques are called cache tuning optimizations. The details of these code modifications are covered in the section on Cache Optimization of this lecture.
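The loop-ordering rule can be illustrated by listing the memory offsets a nested loop touches under each layout. This is a sketch of the address pattern only: pure Python does not reproduce the cache effects themselves, and `visit_offsets` and `is_unit_stride` are hypothetical helpers. The 3 x 4 array size is arbitrary.

```python
def visit_offsets(nrows: int, ncols: int, inner: str, row_major: bool) -> list[int]:
    """Linear memory offsets touched, in order, by a doubly nested loop.

    inner="j": the innermost loop runs over the second (column) index j.
    inner="i": the innermost loop runs over the first (row) index i.
    row_major=True models C array layout; False models Fortran (column-major).
    """
    offsets = []
    outer_n, inner_n = (nrows, ncols) if inner == "j" else (ncols, nrows)
    for a in range(outer_n):
        for b in range(inner_n):
            i, j = (a, b) if inner == "j" else (b, a)
            offsets.append(i * ncols + j if row_major else i + j * nrows)
    return offsets


def is_unit_stride(offsets: list[int]) -> bool:
    """True if each access is to the element right after the previous one."""
    return all(b - a == 1 for a, b in zip(offsets, offsets[1:]))


for lang, row_major in (("C", True), ("Fortran", False)):
    for inner in ("i", "j"):
        ok = is_unit_stride(visit_offsets(3, 4, inner, row_major))
        print(f"{lang}, innermost index {inner}: unit stride = {ok}")
```

Only the rightmost index in C (j) and the leftmost index in Fortran (i) give unit stride when used as the innermost loop, which matches the rule above.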

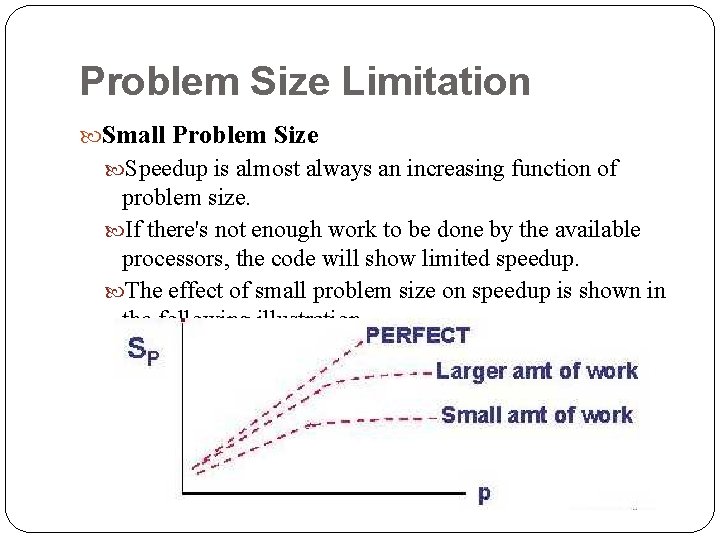

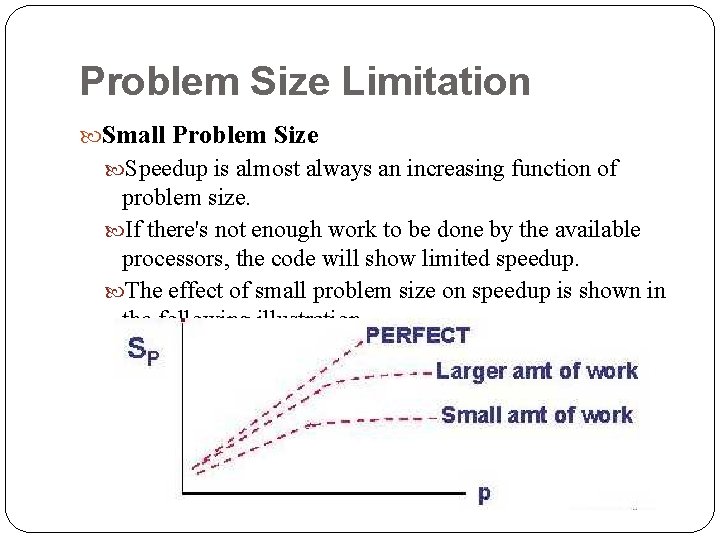

Problem Size Limitation

Small Problem Size

Speedup is almost always an increasing function of problem size. If there's not enough work to be done by the available processors, the code will show limited speedup. The effect of small problem size on speedup is shown in the following illustration.
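The trend can be reproduced with a toy cost model. The model is an assumption made for illustration, not a measured law: serial time is proportional to a problem size n, and the parallel run splits that work over p processors plus a fixed overhead. `modeled_speedup`, `t_unit`, and `overhead` are hypothetical names and values.

```python
def modeled_speedup(n: float, p: int, t_unit: float = 1e-6, overhead: float = 0.01) -> float:
    """Speedup under a toy cost model (an assumption, not a measured law).

    Serial time is n * t_unit seconds; the parallel run splits that work
    evenly over p processors and adds a fixed overhead in seconds.
    """
    t1 = n * t_unit
    tp = t1 / p + overhead
    return t1 / tp


for n in (1e3, 1e5, 1e7, 1e9):
    print(f"n = {n:.0e}: S_8 = {modeled_speedup(n, 8):.2f}")
```

In this model the smallest problem even runs slower in parallel, while larger problems approach the ideal speedup of 8 without reaching it.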

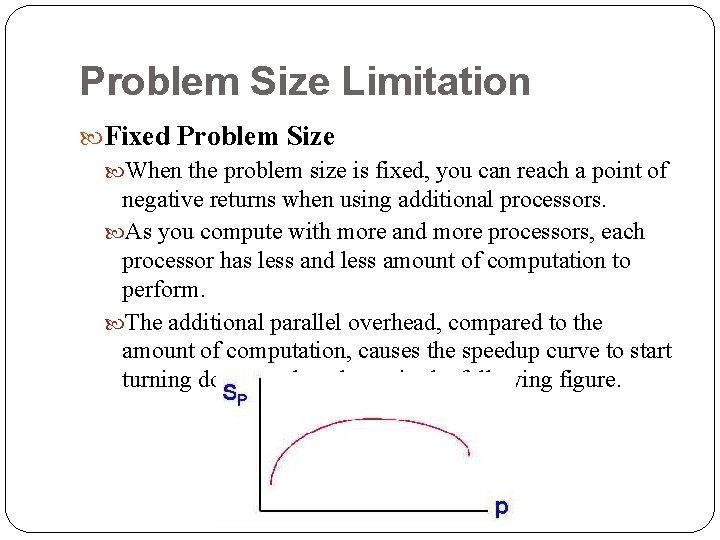

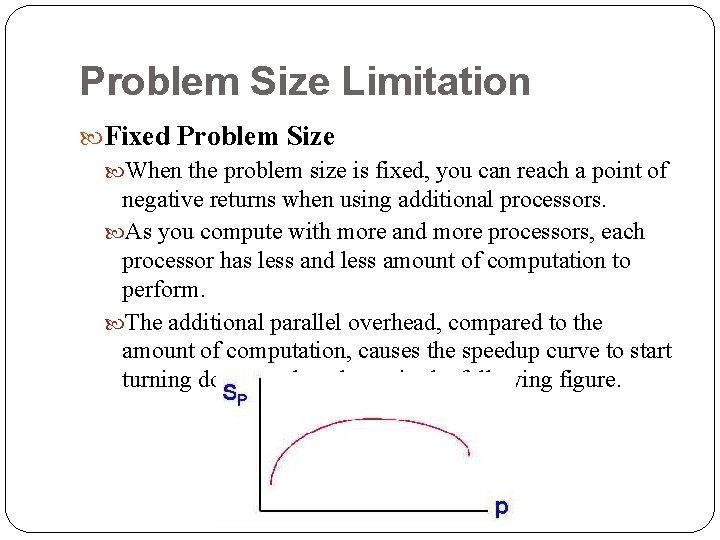

Problem Size Limitation

Fixed Problem Size

When the problem size is fixed, you can reach a point of negative returns when using additional processors. As you compute with more and more processors, each processor has less and less computation to perform. The additional parallel overhead, relative to the amount of computation, causes the speedup curve to start turning downward, as shown in the following figure.
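A toy model reproduces the downward turn. It assumes, purely for illustration, that a fixed 10 s of work is split over p processors and that overhead grows by a constant c seconds for each processor beyond the first. The function name and both constants are hypothetical.

```python
def fixed_size_speedup(p: int, t1: float = 10.0, c: float = 0.01) -> float:
    """Speedup for a fixed problem under a toy model (an assumption).

    The t1 seconds of work are split over p processors, and parallel
    overhead grows by c seconds for every processor beyond the first.
    """
    tp = t1 / p + c * (p - 1)
    return t1 / tp


procs = [1, 2, 4, 8, 16, 32, 64, 128, 256]
for p in procs:
    print(f"p = {p:>3}: S_p = {fixed_size_speedup(p):5.2f}")

best = max(procs, key=fixed_size_speedup)
print(f"speedup peaks near p = {best}, then turns downward")
```

The shrinking per-processor work (t1 / p) eventually costs less than the growing overhead, so adding processors past the peak makes the run slower.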

Benchmarks

Eventually, it will be time to report the parallel performance of your application code. You will want to show a speedup graph with the number of processors on the x axis and speedup on the y axis. Some other things you should report and record are:

- the date you obtained the results
- the problem size
- the computer model
- the compiler and its version number
- any special compiler options you used
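One way to keep these details together with the timings is a small record type. This is a sketch: the class `BenchmarkRecord`, its field names, and every value in the example are hypothetical placeholders, not a real machine, compiler, or measurement.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class BenchmarkRecord:
    """What to record with a speedup graph (fields follow the list above)."""
    run_date: date
    problem_size: str
    computer_model: str
    compiler: str
    compiler_version: str
    compiler_options: list[str] = field(default_factory=list)
    timings: dict[int, float] = field(default_factory=dict)  # processors -> seconds

    def speedup_points(self) -> list[tuple[int, float]]:
        """(p, S_p) pairs for the x and y axes; requires a 1-processor timing."""
        t1 = self.timings[1]
        return [(p, t1 / tp) for p, tp in sorted(self.timings.items())]


# Every value below is a placeholder, not a real measurement or system.
record = BenchmarkRecord(
    run_date=date(2009, 3, 1),
    problem_size="<grid size>",
    computer_model="<machine model>",
    compiler="<compiler name>",
    compiler_version="<version>",
    compiler_options=["<options>"],
    timings={1: 200.0, 2: 104.0, 4: 55.0},
)
for p, s in record.speedup_points():
    print(p, round(s, 2))
```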

Benchmarks

When doing computational science, it is often helpful to find out what kind of performance your colleagues are obtaining. NCSA has a compilation of parallel performance benchmarks online at http://www.ncsa.uiuc.edu/UserInfo/Perf/NCSAbench/. You might look at these benchmarks to see how other people report their parallel performance. In particular, the NAMD benchmark reports the performance of NAMD, a program that performs molecular dynamics simulations.

Summary

There are many good texts on parallel computing that treat the subject of parallel performance analysis. Here are two useful references:

- Scientific Computing: An Introduction with Parallel Computing, Gene Golub and James Ortega, Academic Press, Inc.
- Parallel Computing: Theory and Practice, Michael J. Quinn, McGraw-Hill, Inc.