Parallel Computing Explained: How to Parallelize a Code

- Slides: 10

Slides prepared from the CI-Tutor courses at NCSA (http://ci-tutor.ncsa.uiuc.edu/) by S. Masoud Sadjadi, School of Computing and Information Sciences, Florida International University, March 2009

Agenda

- 1 Parallel Computing Overview
- 2 How to Parallelize a Code
  - 2.1 Automatic Compiler Parallelism
  - 2.2 Data Parallelism by Hand
  - 2.3 Mixing Automatic and Hand Parallelism
  - 2.4 Task Parallelism
  - 2.5 Parallelism Issues
- 3 Porting Issues
- 4 Scalar Tuning
- 5 Parallel Code Tuning
- 6 Timing and Profiling
- 7 Cache Tuning
- 8 Parallel Performance Analysis
- 9 About the IBM Regatta P690

How to Parallelize a Code

This chapter describes how to turn a single-processor program into a parallel one, focusing on shared-memory machines. Both automatic compiler parallelization and parallelization by hand are covered. The details for accomplishing both data parallelism and task parallelism are presented.

Automatic Compiler Parallelism

Automatic compiler parallelism lets you specify a single compiler option and leave the work to the compiler. Its advantage is that it is easy to use. The disadvantages are:

- The compiler does only loop-level parallelism, not task parallelism.
- The compiler tries to parallelize every do loop in your code. If you have hundreds of do loops, this creates too much parallel overhead.

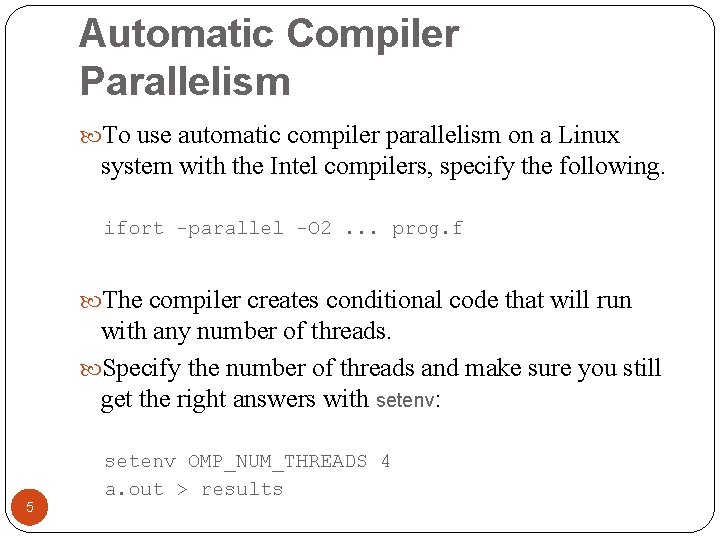

Automatic Compiler Parallelism (continued)

To use automatic compiler parallelism on a Linux system with the Intel compilers, specify the following:

    ifort -parallel -O2 ... prog.f

The compiler creates conditional code that will run with any number of threads. Specify the number of threads with setenv, then run the program and make sure you still get the right answers:

    setenv OMP_NUM_THREADS 4
    a.out > results
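As a rough illustration of what loop-level auto-parallelization can and cannot handle, the sketch below contrasts a loop with independent iterations against one with a loop-carried dependence. The program name, array names, and sizes are invented for illustration, and the listing assumes free-form Fortran source. Whether a given compiler actually parallelizes the first loop depends on its own analysis and heuristics.

```fortran
program autopar_demo
  implicit none
  integer, parameter :: n = 1000000
  double precision, allocatable :: a(:), b(:), c(:)
  integer :: i

  allocate(a(n), b(n), c(n))
  b = 1.0d0
  c = 2.0d0

  ! Independent iterations: each a(i) depends only on b(i) and c(i),
  ! so an auto-parallelizer is free to split this loop across threads.
  do i = 1, n
     a(i) = b(i) + 2.0d0 * c(i)
  end do

  ! Loop-carried dependence: a(i) needs a(i-1) from the previous
  ! iteration, so this loop is expected to remain serial.
  do i = 2, n
     a(i) = a(i-1) + b(i)
  end do

  print *, 'a(n) =', a(n)
end program autopar_demo
```

Running the program with one thread and then with several, and comparing the printed value, is one simple way to check that the answers stay the same.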

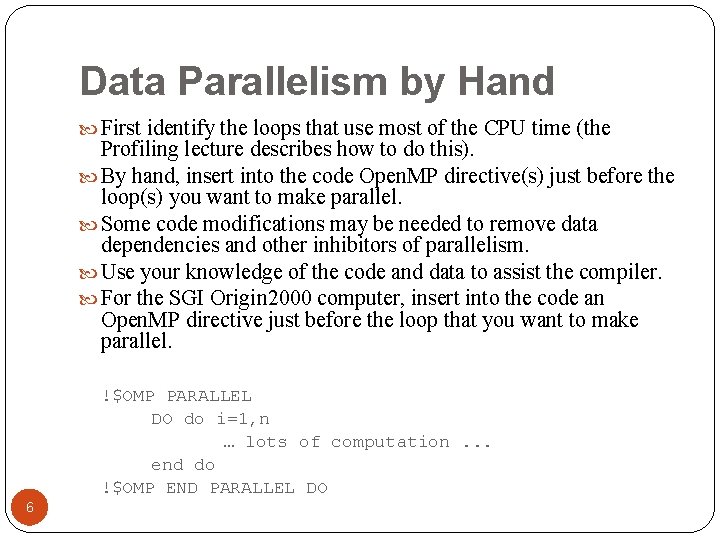

Data Parallelism by Hand

- First identify the loops that use most of the CPU time (the Profiling lecture describes how to do this).
- By hand, insert OpenMP directive(s) into the code just before the loop(s) you want to make parallel.
- Some code modifications may be needed to remove data dependencies and other inhibitors of parallelism.
- Use your knowledge of the code and data to assist the compiler.

For the SGI Origin 2000 computer, insert an OpenMP directive just before the loop that you want to make parallel:

    !$OMP PARALLEL DO
    do i=1, n
       … lots of computation ...
    end do
    !$OMP END PARALLEL DO
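One common data dependency is a running sum that every iteration updates. A minimal sketch of handling it with the OpenMP REDUCTION clause follows; the program name, the arrays x and y, and the problem size are assumptions made for illustration, and the listing is written as free-form Fortran.

```fortran
program dot_by_hand
  implicit none
  integer, parameter :: n = 1000000
  double precision, allocatable :: x(:), y(:)
  double precision :: total
  integer :: i

  allocate(x(n), y(n))
  x = 0.5d0
  y = 2.0d0
  total = 0.0d0

  ! Every iteration updates total, which would be a data dependency
  ! between threads. REDUCTION(+:total) gives each thread a private
  ! partial sum and combines the partial sums at the end of the loop.
  !$OMP PARALLEL DO REDUCTION(+:total)
  do i = 1, n
     total = total + x(i) * y(i)
  end do
  !$OMP END PARALLEL DO

  print *, 'dot product =', total
end program dot_by_hand
```

Without the REDUCTION clause, all threads would update the shared variable total at once and the result could be wrong, which is the kind of inhibitor the slide asks you to remove.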

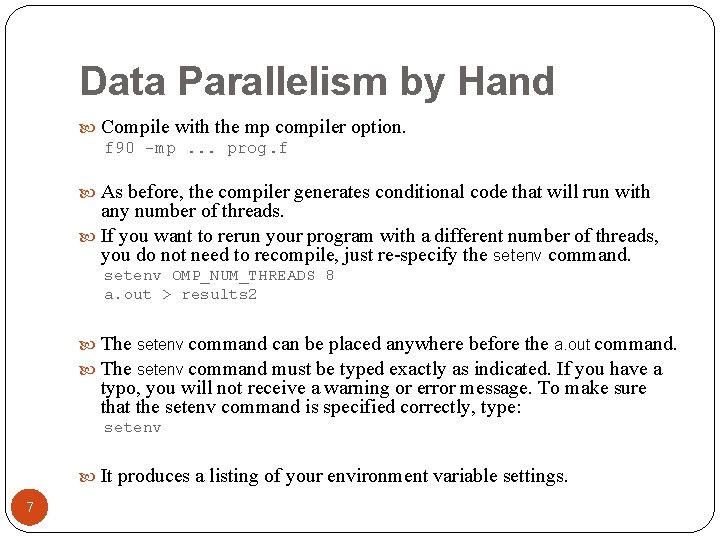

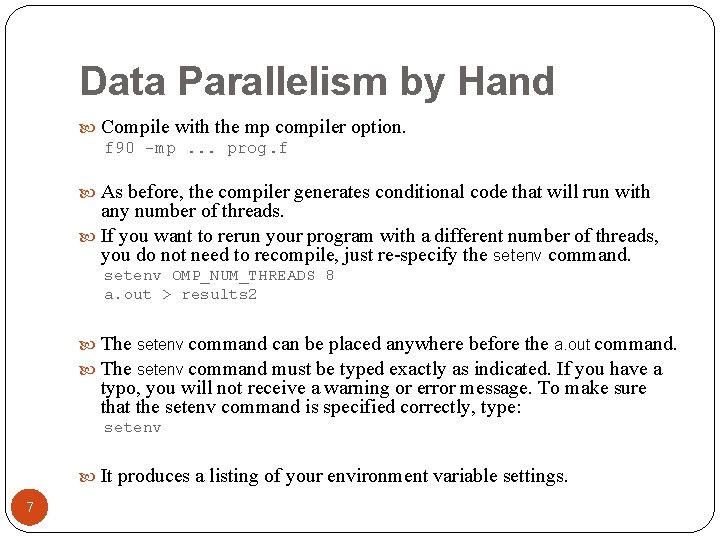

Data Parallelism by Hand (continued)

Compile with the mp compiler option:

    f90 -mp ... prog.f

As before, the compiler generates conditional code that will run with any number of threads. If you want to rerun your program with a different number of threads, you do not need to recompile; just re-specify the setenv command:

    setenv OMP_NUM_THREADS 8
    a.out > results2

The setenv command can be placed anywhere before the a.out command. It must be typed exactly as indicated: if you make a typo, you will not receive a warning or error message. To make sure that the setenv command was specified correctly, type:

    setenv

It produces a listing of your environment variable settings.
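Because a mistyped variable name is silently ignored, it can also help to have the program itself report how many threads it will use. The sketch below does this with the standard OpenMP routine omp_get_max_threads; the program name is hypothetical and free-form Fortran source is assumed. Lines starting with the `!$` sentinel are compiled only when OpenMP is enabled, so the same file still builds as a serial program.

```fortran
program show_threads
  !$ use omp_lib
  implicit none
  integer :: nthreads

  nthreads = 1   ! value reported when compiled without OpenMP
  !$ nthreads = omp_get_max_threads()
  print *, 'threads available to parallel regions:', nthreads
end program show_threads
```

If the printed count does not match the value you meant to set with OMP_NUM_THREADS, the setenv line is the first thing to recheck.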

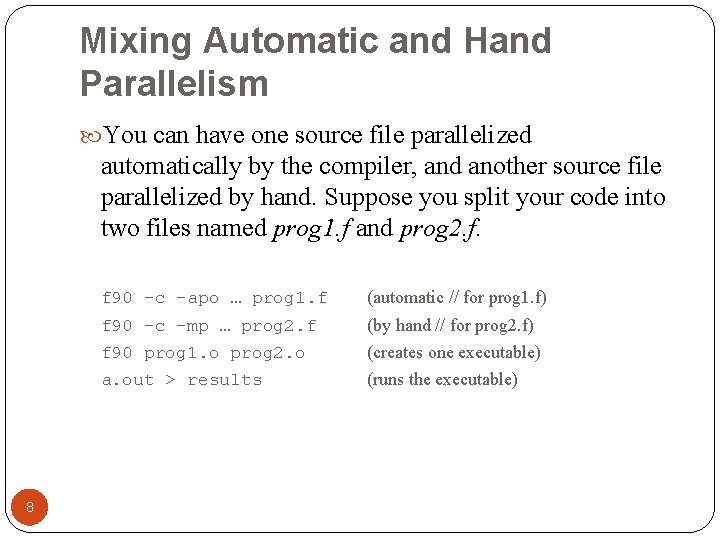

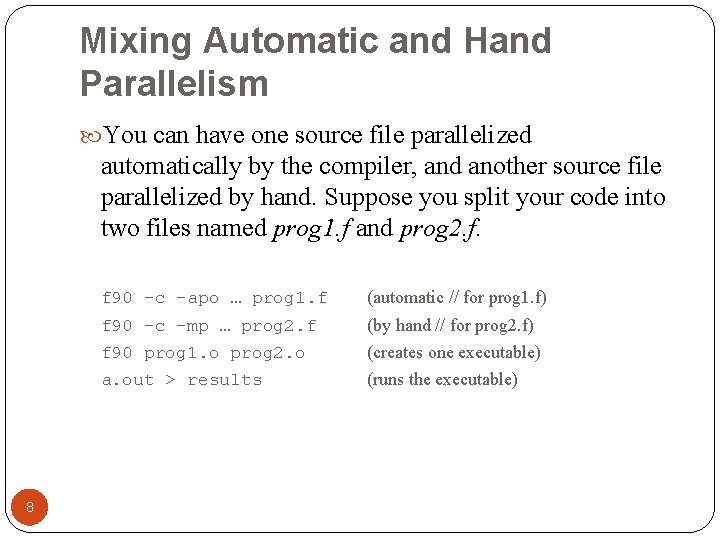

Mixing Automatic and Hand Parallelism

You can have one source file parallelized automatically by the compiler and another source file parallelized by hand. Suppose you split your code into two files named prog1.f and prog2.f:

    f90 -c -apo … prog1.f    (automatic parallelism for prog1.f)
    f90 -c -mp … prog2.f     (parallelism by hand for prog2.f)
    f90 prog1.o prog2.o      (creates one executable)
    a.out > results          (runs the executable)
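A sketch of how such a split might look is given below as a single free-form listing, with comments marking which part would go into which file. The routine name scale_by_hand, the arrays, and the sizes are all invented for illustration; the main program carries no directives and is left to the automatic parallelizer, while the subroutine carries a hand-inserted directive.

```fortran
! ---- would go in prog1.f: left to the automatic parallelizer ----
program mixed_demo
  implicit none
  integer, parameter :: n = 10000
  double precision :: a(n), b(n)
  integer :: i

  ! Simple independent loop with no directive: a candidate for
  ! automatic parallelization.
  do i = 1, n
     a(i) = dble(i)
  end do

  call scale_by_hand(a, b, n)
  print *, 'b(n) =', b(n)
end program mixed_demo

! ---- would go in prog2.f: parallelized by hand ----
subroutine scale_by_hand(a, b, n)
  implicit none
  integer, intent(in) :: n
  double precision, intent(in) :: a(n)
  double precision, intent(out) :: b(n)
  integer :: i

  !$OMP PARALLEL DO
  do i = 1, n
     b(i) = 2.0d0 * a(i)
  end do
  !$OMP END PARALLEL DO
end subroutine scale_by_hand
```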

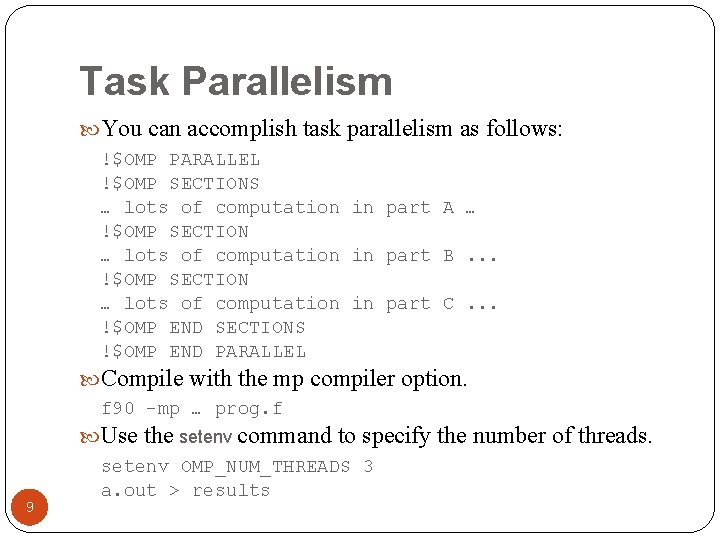

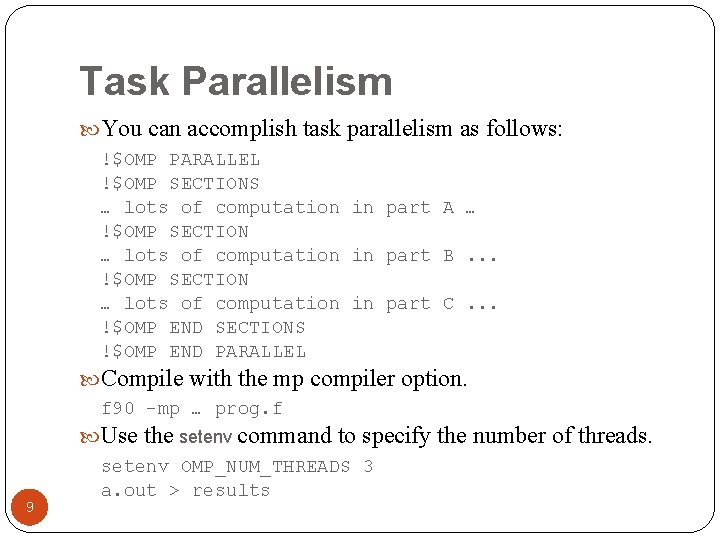

Task Parallelism

You can accomplish task parallelism as follows:

    !$OMP PARALLEL
    !$OMP SECTIONS
       … lots of computation in part A …
    !$OMP SECTION
       … lots of computation in part B ...
    !$OMP SECTION
       … lots of computation in part C ...
    !$OMP END SECTIONS
    !$OMP END PARALLEL

Compile with the mp compiler option:

    f90 -mp … prog.f

Use the setenv command to specify the number of threads:

    setenv OMP_NUM_THREADS 3
    a.out > results
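To make the SECTIONS pattern concrete, here is a minimal complete program in which parts A, B, and C are three independent loops, each filling its own array. The program name, the arrays, and the computations are placeholders chosen for illustration, and free-form Fortran source is assumed. The loop index is declared PRIVATE so that the sections do not interfere with one another.

```fortran
program sections_demo
  implicit none
  integer, parameter :: n = 1000
  double precision :: a(n), b(n), c(n)
  integer :: i

  !$OMP PARALLEL PRIVATE(i)
  !$OMP SECTIONS
  !$OMP SECTION
  ! Part A: may run on one thread ...
  do i = 1, n
     a(i) = dble(i)
  end do
  !$OMP SECTION
  ! Part B: ... while another thread runs this ...
  do i = 1, n
     b(i) = dble(i)**2
  end do
  !$OMP SECTION
  ! Part C: ... and a third thread runs this.
  do i = 1, n
     c(i) = sqrt(dble(i))
  end do
  !$OMP END SECTIONS
  !$OMP END PARALLEL

  print *, 'sums:', sum(a), sum(b), sum(c)
end program sections_demo
```

With three sections, at most three threads have work to do, which is why the slide sets OMP_NUM_THREADS to 3.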

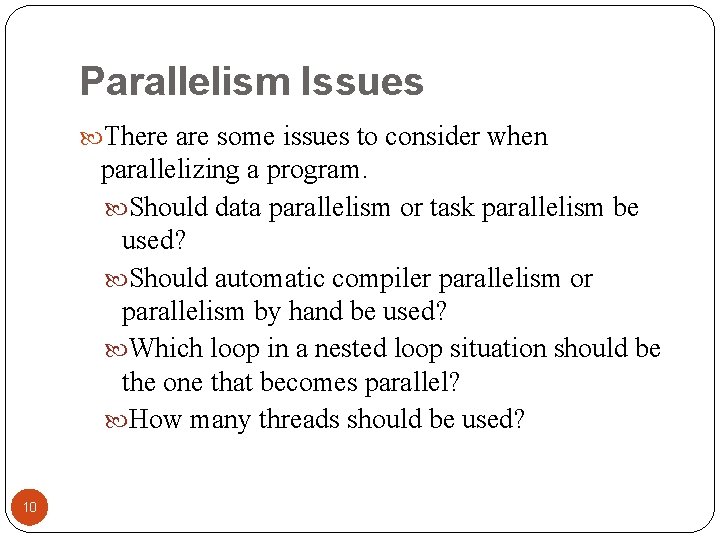

Parallelism Issues

There are some issues to consider when parallelizing a program:

- Should data parallelism or task parallelism be used?
- Should automatic compiler parallelism or parallelism by hand be used?
- Which loop in a nested loop situation should be the one that becomes parallel?
- How many threads should be used?
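For the nested-loop question, one frequently used choice (not the only one, and not stated in these slides) is to parallelize the outermost loop whose iterations are independent, so that the parallel region is entered once rather than once per outer iteration. The sketch below shows that choice; the program name, the array, and its size are assumptions for illustration, in free-form Fortran.

```fortran
program nested_demo
  implicit none
  integer, parameter :: n = 500
  double precision, allocatable :: a(:,:)
  integer :: i, j

  allocate(a(n, n))

  ! Parallelize the outer j loop: the parallel region is entered once,
  ! and each thread works through whole columns. The inner index i is
  ! listed as PRIVATE so every thread has its own copy.
  !$OMP PARALLEL DO PRIVATE(i)
  do j = 1, n
     ! The inner loop runs over the first index, which is the
     ! contiguous one in Fortran's column-major storage.
     do i = 1, n
        a(i, j) = dble(i + j)
     end do
  end do
  !$OMP END PARALLEL DO

  print *, 'a(n,n) =', a(n, n)
end program nested_demo
```

Placing the directive on the inner loop instead would also be valid here, but the threads would then be handed work n times, which typically adds overhead.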