Outline: Network/Communication Middleware (CA, KTL, DDS, ICE)

- Slides: 47

Outline
- Network/Communication Middleware
  - CA
  - KTL
  - DDS
  - ICE
  - TINE
- Platforms
  - VxWorks
  - RTEMS
  - Other?
- Frameworks
  - EPICS
  - ATSTCS
  - TANGO

DDS - Data Distribution Service
- Data (information) centric approach
- Publish-Subscribe standard
- Data Distribution Service
  - OMG Standard for Data Centric Publish Subscribe (DCPS)
  - Architected for data-centric communications in embedded, real-time systems
  - Adopted in 2003
  - Two aspects: API and Wire Protocol
- DDS is more than a Pub/Sub middleware
- DDS is a data broker and a data manager
  - The integration of data distribution, data management, and data location functions

What is DDS
- Networking middleware that simplifies complex network programming.
- A publish/subscribe model for sending and receiving data, events, and commands among the nodes.
- Publishers create "topics" (e.g., temperature, location, pressure) and publish "samples." DDS handles all the transfer chores: message addressing, marshalling, delivery, flow control, retries, etc.
- Any node can be a publisher, subscriber, or both simultaneously.
- Very simple programming model. Applications that use DDS for their communications are entirely decoupled.
- DDS automatically handles all aspects of message delivery and allows the user to specify Quality of Service (QoS) parameters:
  - A way to configure automatic-discovery mechanisms and specify the behavior used when sending and receiving messages.
  - The mechanisms are configured up-front and require no further effort on the user's part.
- DDS also automatically handles hot-swapping redundant publishers if the primary fails.
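The topic/sample model described on this slide can be illustrated with a toy, in-process sketch. This is not a DDS API: `ToyDataSpace`, `subscribe`, and `publish` are hypothetical names chosen for the illustration, and discovery, marshalling, QoS, and networking are all left out. It only shows that publishers and subscribers know a topic name and never each other.

```python
from collections import defaultdict


class ToyDataSpace:
    """In-process stand-in for a shared data space (hypothetical, not a DDS API)."""

    def __init__(self):
        # topic name -> list of subscriber callbacks
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        """Register interest in a topic; the subscriber never names a publisher."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, sample):
        """Deliver one sample to every current subscriber of the topic.

        Returns the number of subscribers that received the sample.
        """
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(sample)
        return len(callbacks)


space = ToyDataSpace()
received = []

# Two independent subscribers to the same topic.
space.subscribe("temperature", received.append)
space.subscribe("temperature", lambda sample: received.append(sample * 2))

delivered = space.publish("temperature", 21.5)  # reaches both subscribers
unheard = space.publish("pressure", 101.3)      # nobody subscribed to this topic
```

Because the publisher only names a topic, subscribers can be added or removed without touching publisher code, which is the decoupling the slide refers to.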

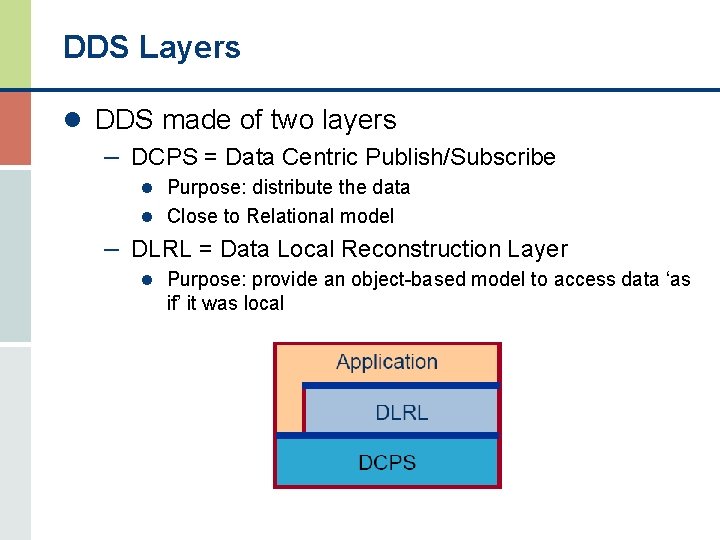

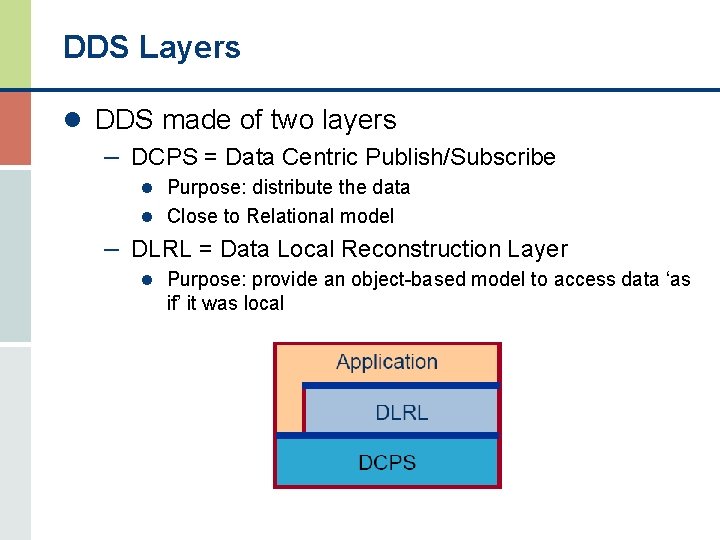

DDS Layers
- DDS is made of two layers
  - DCPS = Data Centric Publish/Subscribe
    - Purpose: distribute the data
    - Close to Relational model
  - DLRL = Data Local Reconstruction Layer
    - Purpose: provide an object-based model to access data ‘as if’ it was local

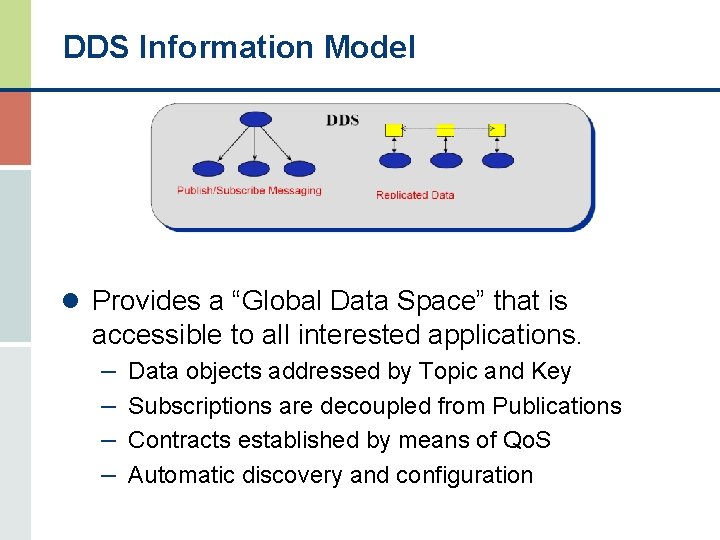

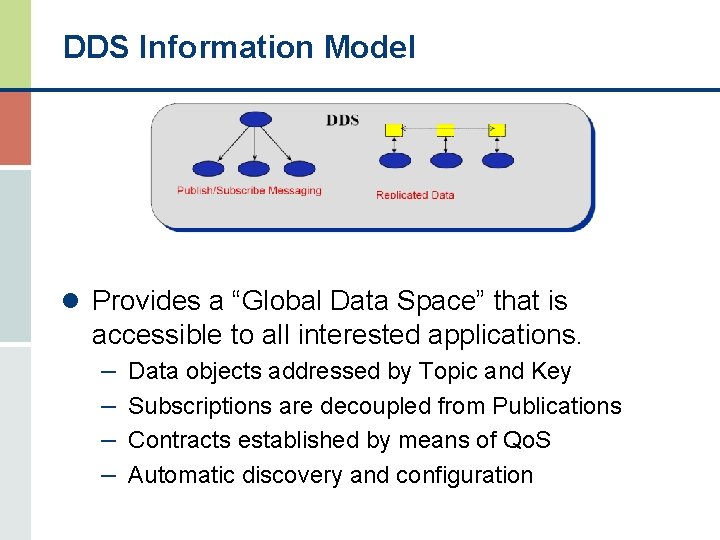

DDS Information Model
- Provides a “Global Data Space” that is accessible to all interested applications.
  - Data objects addressed by Topic and Key
  - Subscriptions are decoupled from Publications
  - Contracts established by means of QoS
  - Automatic discovery and configuration

DDS Concepts
- Handle, manage and dispatch complex data models.
- Portability, language and platform transparency
- Platform independent data-model
- Strongly typed interfaces
- Real-time QoS management
- Location transparency
- Data integrity
- Control and filtering
- Domain management
- Data history
- Fault tolerance

DDS Summary
- OMG Standard
- Information/Data centric
  - TOPIC based, defined with IDL
- DDS targets applications that need to distribute data in a real-time environment
- DDS is highly configurable by QoS settings
- DDS provides a shared “global data space”
  - Any application can publish data it has
  - Any application can subscribe to data it needs
  - Automatic discovery
  - Facilities for fault tolerance
  - Heterogeneous systems easily accommodated

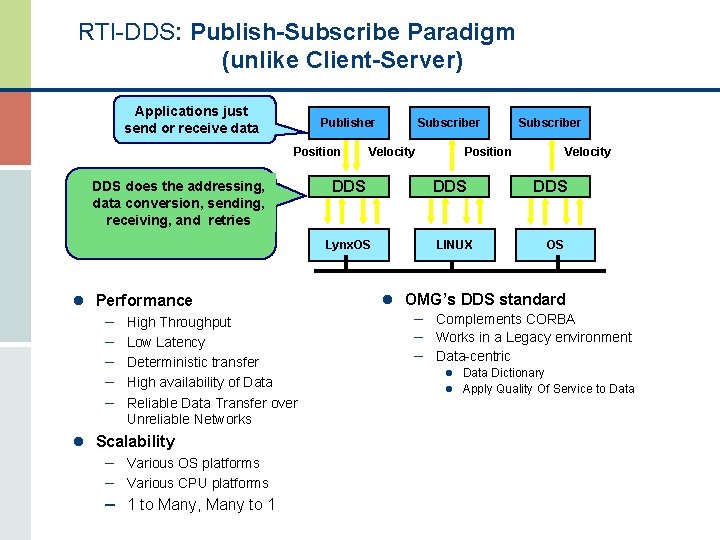

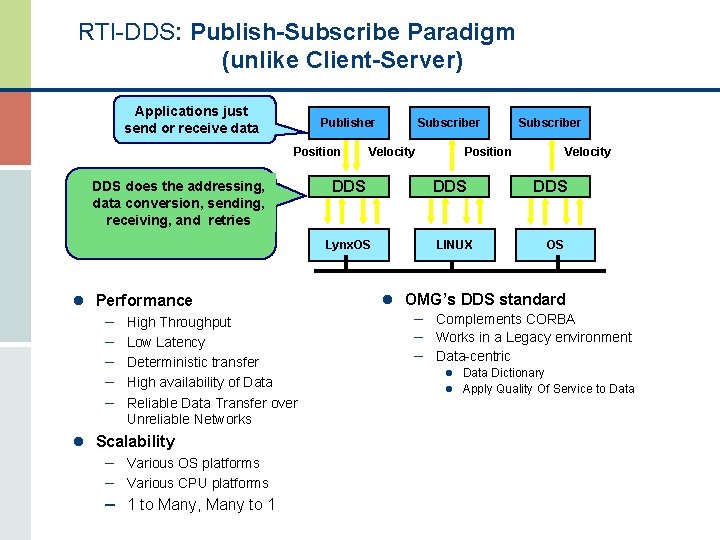

RTI-DDS: Publish-Subscribe Paradigm (unlike Client-Server)
- Applications just send or receive data
- DDS does the addressing, data conversion, sending, receiving, and retries
- Performance
  - High throughput
  - Low latency
  - Deterministic transfer
  - High availability of data
  - Reliable data transfer over unreliable networks
- Scalability
  - Various OS platforms
  - Various CPU platforms
  - 1 to many, many to 1
- OMG’s DDS standard
  - Complements CORBA
  - Works in a legacy environment
  - Data-centric
- Data Dictionary
- Apply Quality of Service to data

[Diagram: a Publisher (Position, Velocity) on DDS over LynxOS; a Subscriber (Position, Velocity) on DDS over Linux]

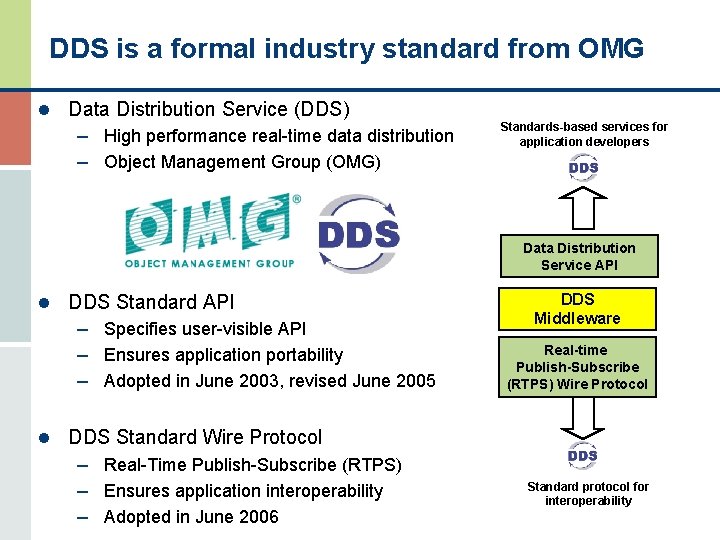

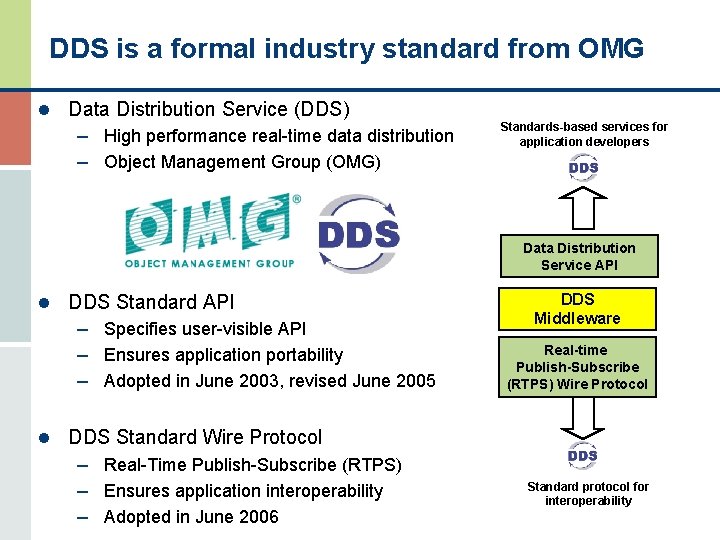

DDS is a formal industry standard from OMG
- Data Distribution Service (DDS)
  - High performance real-time data distribution
  - Object Management Group (OMG)
  - Standards-based services for application developers
- DDS Standard API
  - Specifies user-visible API
  - Ensures application portability
  - Adopted in June 2003, revised June 2005
- DDS Standard Wire Protocol
  - Real-Time Publish-Subscribe (RTPS)
  - Ensures application interoperability
  - Adopted in June 2006

[Diagram: layer stack of Data Distribution Service API, DDS Middleware, and Real-Time Publish-Subscribe (RTPS) Wire Protocol, the standard protocol for interoperability]

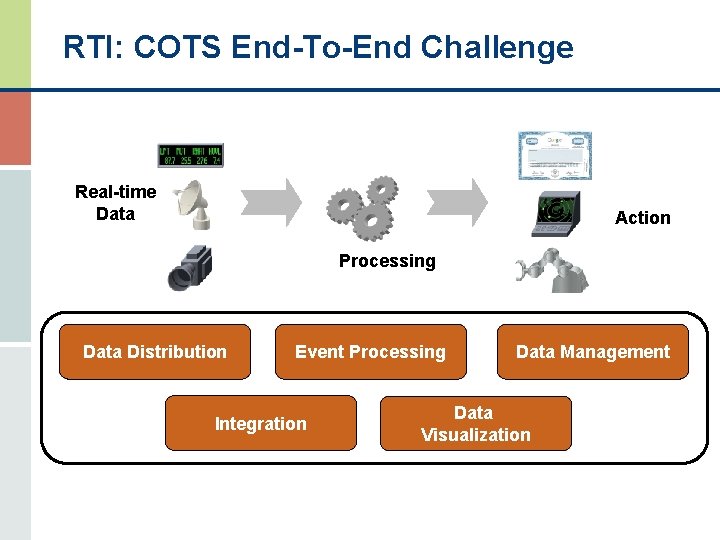

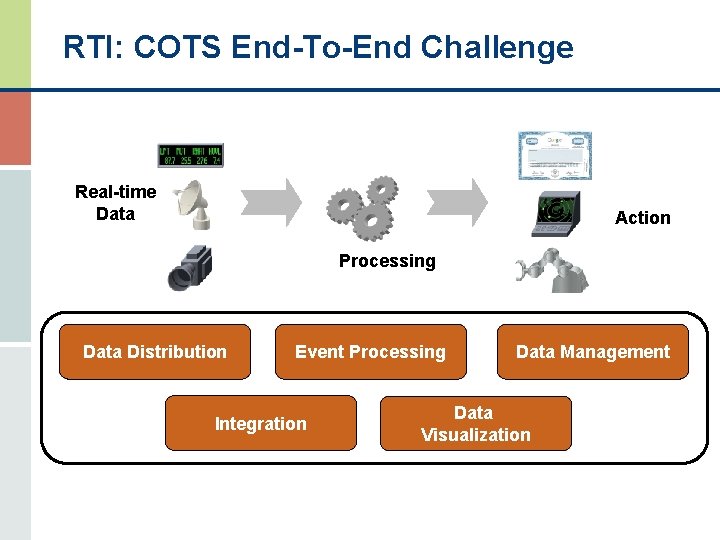

RTI: COTS End-To-End Challenge Real-time Data Action Processing Data Distribution Event Processing Integration Data Management Data Visualization

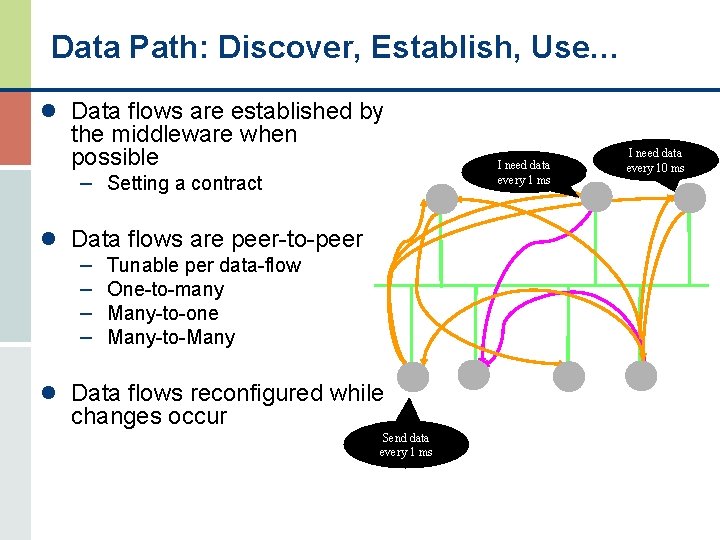

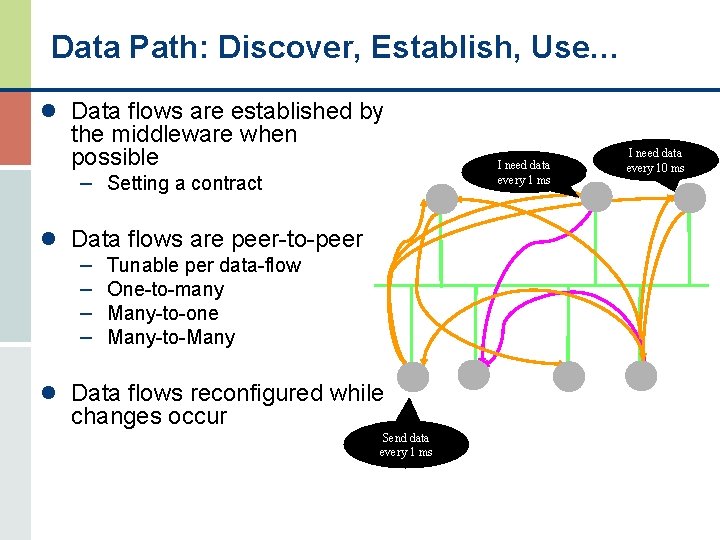

Data Path: Discover, Establish, Use…
- Data flows are established by the middleware when possible
  - Setting a contract
- Data flows are peer-to-peer
  - Tunable per data-flow
  - One-to-many
  - Many-to-one
  - Many-to-many
- Data flows are reconfigured while changes occur

[Diagram: a publisher states "Send data every 1 ms"; one subscriber states "I need data every 1 ms" and another "I need data every 10 ms"]
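The contract idea on this slide (a sender offering data every 1 ms, receivers asking for it every 1 ms or every 10 ms) can be sketched as a small model. The sketch assumes the usual request-versus-offer rule, where a flow is compatible when the offered period is no longer than the requested one, and a per-subscriber time filter that thins the stream. The function names are hypothetical; real DDS expresses these ideas through QoS policies such as DEADLINE and TIME_BASED_FILTER, whose exact semantics are richer than this.

```python
def contract_compatible(offered_period_ms, requested_period_ms):
    """True when the publisher updates at least as often as the subscriber needs."""
    return offered_period_ms <= requested_period_ms


def time_filter(samples, min_separation_ms):
    """Keep only samples that are at least min_separation_ms apart.

    samples is an iterable of (timestamp_ms, value) pairs in time order.
    """
    kept = []
    last_kept_ms = None
    for timestamp_ms, value in samples:
        if last_kept_ms is None or timestamp_ms - last_kept_ms >= min_separation_ms:
            kept.append((timestamp_ms, value))
            last_kept_ms = timestamp_ms
    return kept


# Publisher sends one sample per millisecond for 30 ms.
samples = [(t, t * 0.1) for t in range(30)]

fast_ok = contract_compatible(offered_period_ms=1, requested_period_ms=1)
slow_ok = contract_compatible(offered_period_ms=1, requested_period_ms=10)
too_slow = contract_compatible(offered_period_ms=10, requested_period_ms=1)

fast_view = time_filter(samples, min_separation_ms=1)   # every sample
slow_view = time_filter(samples, min_separation_ms=10)  # samples at 0, 10, 20 ms
```

Both subscribers are served by the same 1 ms publisher; only the 10 ms subscriber's view is thinned, which is what "tunable per data-flow" means in this model.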

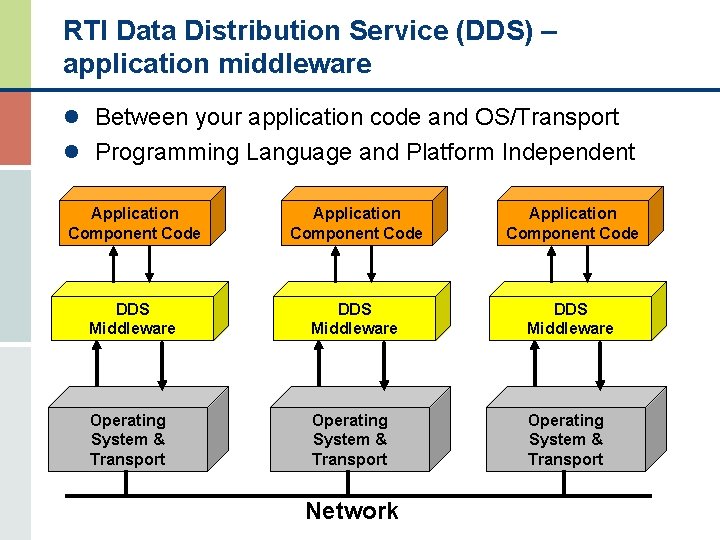

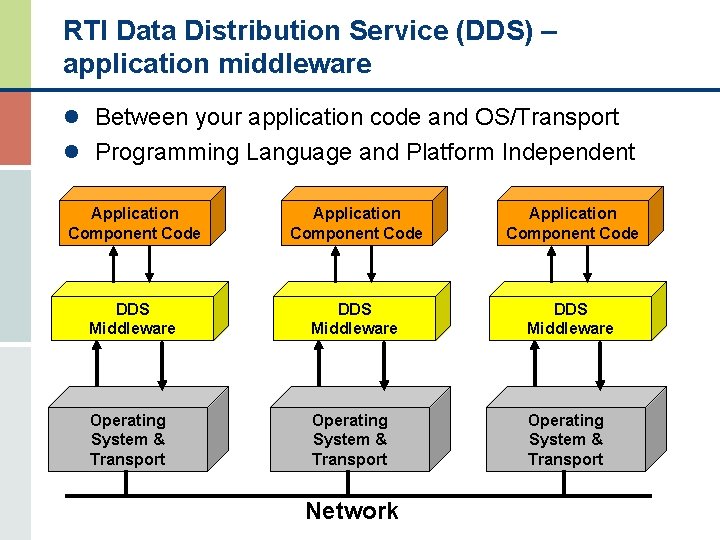

RTI Data Distribution Service (DDS) – application middleware
- Between your application code and OS/Transport
- Programming language and platform independent

[Diagram: layer stack of Application Component Code, DDS Middleware, Operating System & Transport, Network]

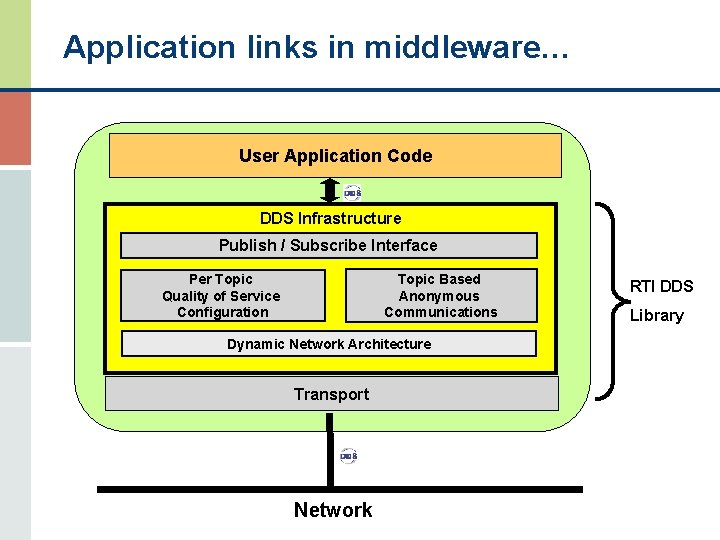

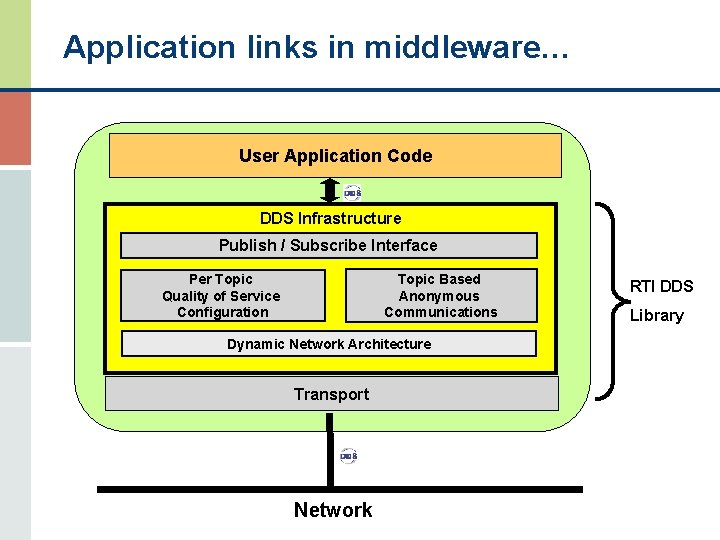

Application links in middleware…

[Diagram: User Application Code links against the RTI DDS Library, which sits on Transport and Network. The DDS Infrastructure inside the library provides a Publish/Subscribe Interface, Topic Based Anonymous Communications, Per Topic Quality of Service Configuration, and a Dynamic Network Architecture]

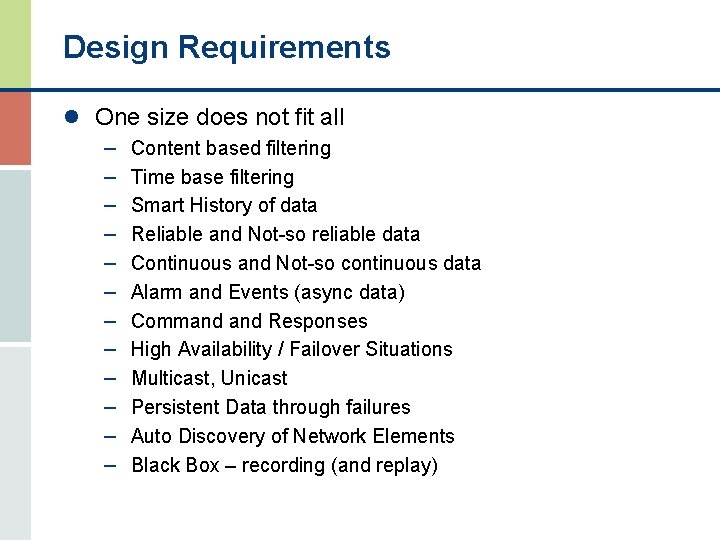

Design Requirements
- One size does not fit all
  - Content-based filtering
  - Time-based filtering
  - Smart history of data
  - Reliable and not-so-reliable data
  - Continuous and not-so-continuous data
  - Alarms and events (async data)
  - Command responses
  - High availability / failover situations
  - Multicast, unicast
  - Persistent data through failures
  - Auto discovery of network elements
  - Black box: recording (and replay)

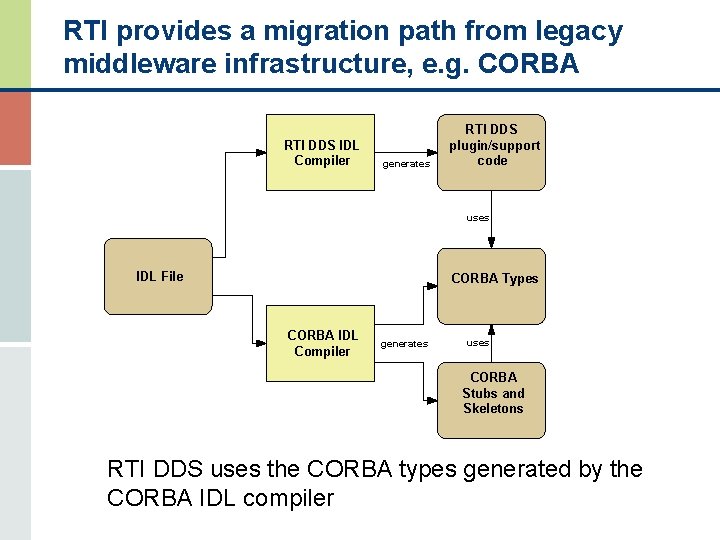

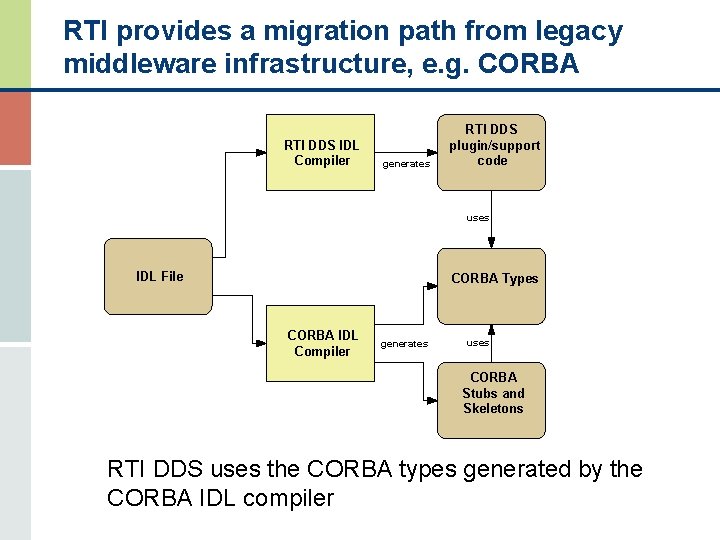

RTI provides a migration path from legacy middleware infrastructure, e.g. CORBA
- The RTI DDS IDL compiler uses the IDL file and generates RTI DDS plugin/support code
- The CORBA IDL compiler uses the IDL file and generates the CORBA types and the CORBA stubs and skeletons
- RTI DDS uses the CORBA types generated by the CORBA IDL compiler

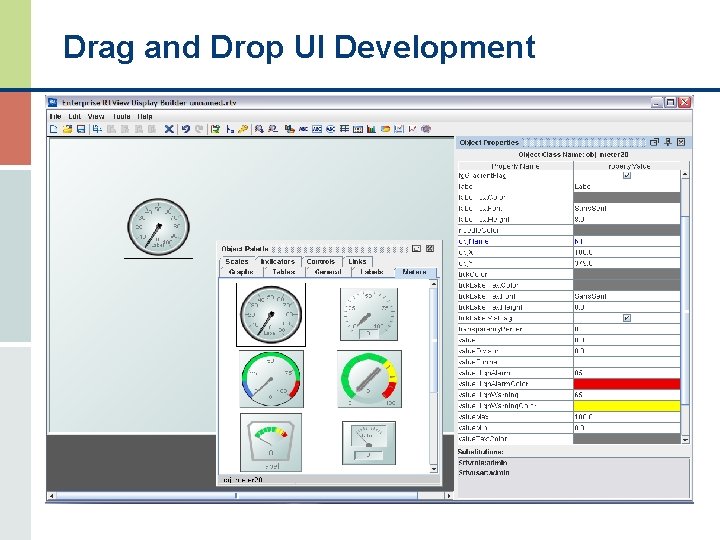

Drag and Drop UI Development

DDS (RTI) Pricing
- 3-user Named User Development Package = $X
  - 3-user development license
  - 2-day on-site quickstart training
  - 6 run-time licenses
  - 1 year maintenance and support

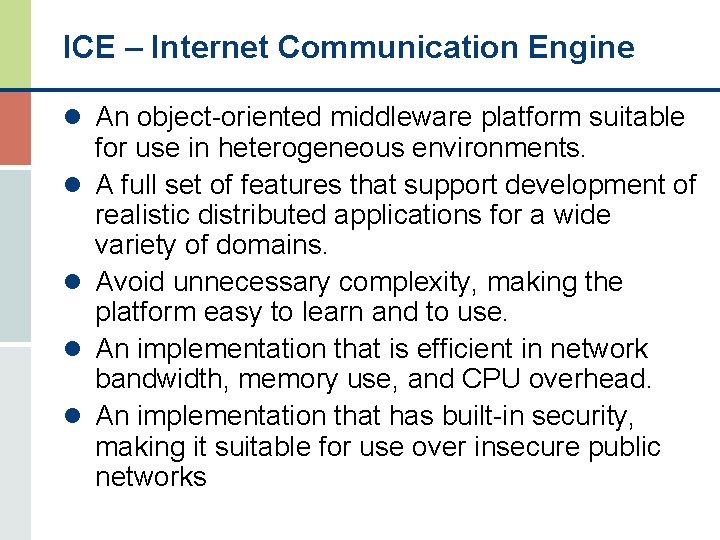

ICE – Internet Communication Engine
- An object-oriented middleware platform suitable for use in heterogeneous environments.
- A full set of features that support development of realistic distributed applications for a wide variety of domains.
- Avoids unnecessary complexity, making the platform easy to learn and to use.
- An implementation that is efficient in network bandwidth, memory use, and CPU overhead.
- An implementation that has built-in security, making it suitable for use over insecure public networks.

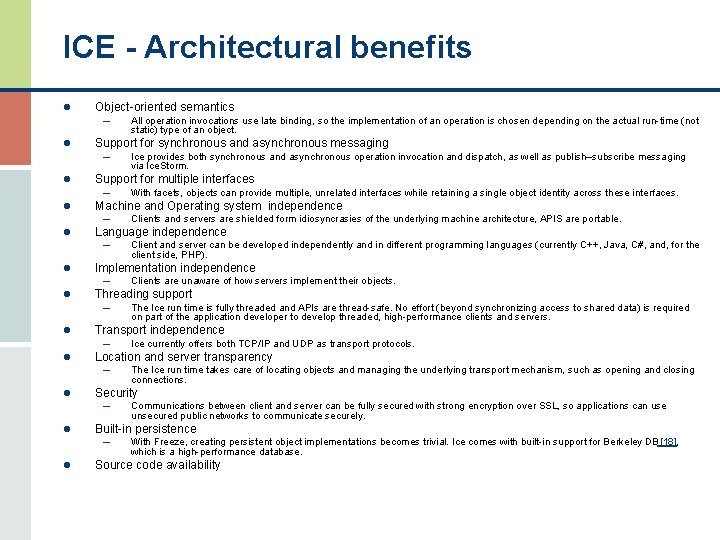

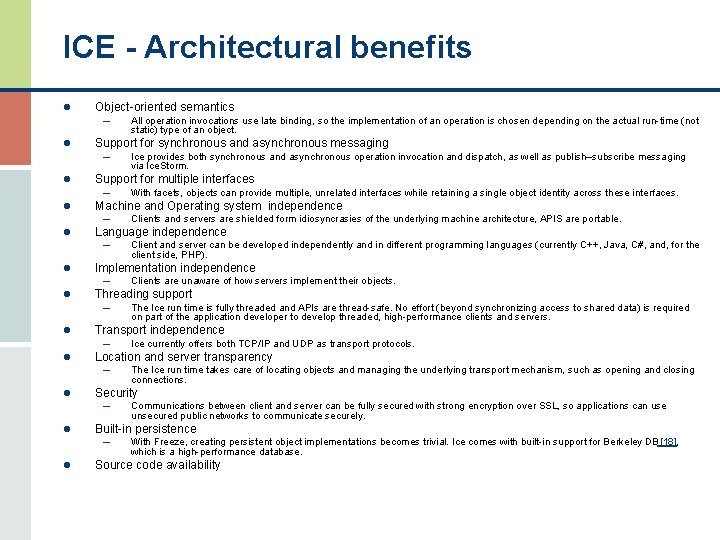

ICE - Architectural benefits
- Object-oriented semantics
  - All operation invocations use late binding, so the implementation of an operation is chosen depending on the actual run-time (not static) type of an object.
- Support for synchronous and asynchronous messaging
  - Ice provides both synchronous and asynchronous operation invocation and dispatch, as well as publish-subscribe messaging via IceStorm.
- Support for multiple interfaces
  - With facets, objects can provide multiple, unrelated interfaces while retaining a single object identity across these interfaces.
- Machine and operating system independence
  - Clients and servers are shielded from idiosyncrasies of the underlying machine architecture; APIs are portable.
- Language independence
  - Client and server can be developed independently and in different programming languages (currently C++, Java, C#, and, for the client side, PHP).
- Implementation independence
  - Clients are unaware of how servers implement their objects.
- Threading support
  - The Ice run time is fully threaded and APIs are thread-safe. No effort (beyond synchronizing access to shared data) is required on the part of the application developer to develop threaded, high-performance clients and servers.
- Transport independence
  - Ice currently offers both TCP/IP and UDP as transport protocols.
- Location and server transparency
  - The Ice run time takes care of locating objects and managing the underlying transport mechanism, such as opening and closing connections.
- Security
  - Communications between client and server can be fully secured with strong encryption over SSL, so applications can use unsecured public networks to communicate securely.
- Built-in persistence
  - With Freeze, creating persistent object implementations becomes trivial. Ice comes with built-in support for Berkeley DB [18], which is a high-performance database.
- Source code availability

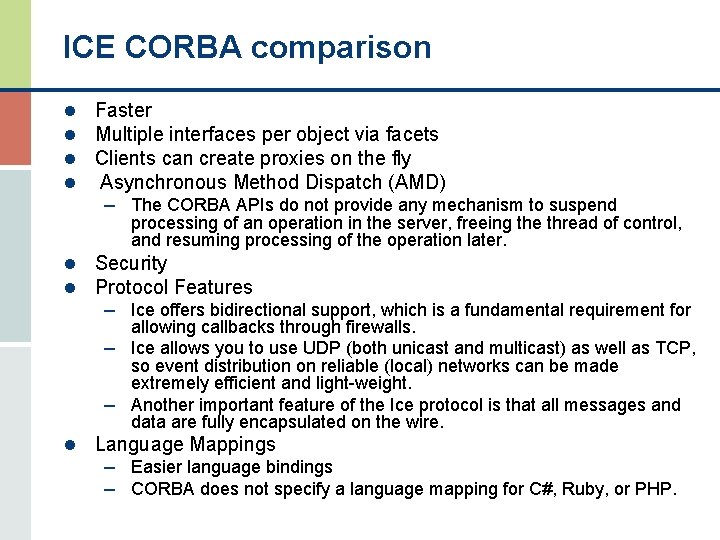

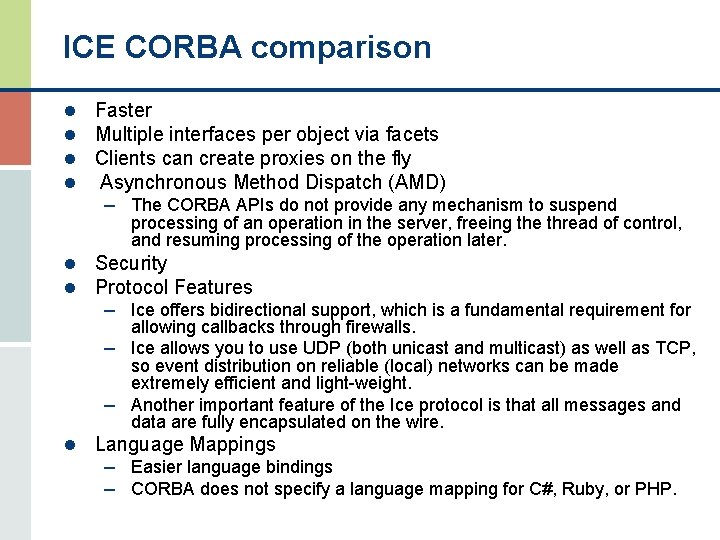

ICE CORBA comparison
- Faster
- Multiple interfaces per object via facets
- Clients can create proxies on the fly
- Asynchronous Method Dispatch (AMD)
  - The CORBA APIs do not provide any mechanism to suspend processing of an operation in the server, freeing the thread of control, and resuming processing of the operation later.
- Security
- Protocol Features
  - Ice offers bidirectional support, which is a fundamental requirement for allowing callbacks through firewalls.
  - Ice allows you to use UDP (both unicast and multicast) as well as TCP, so event distribution on reliable (local) networks can be made extremely efficient and light-weight.
  - Another important feature of the Ice protocol is that all messages and data are fully encapsulated on the wire.
- Language Mappings
  - Easier language bindings
  - CORBA does not specify a language mapping for C#, Ruby, or PHP.

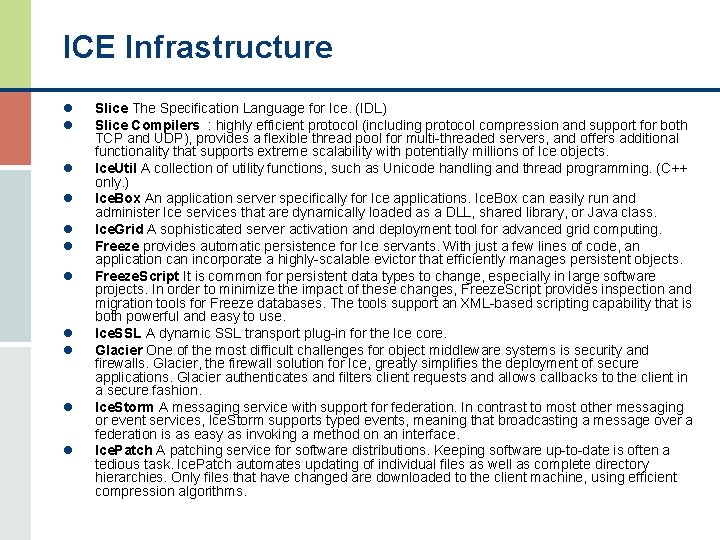

ICE Infrastructure
- Slice: the Specification Language for Ice (IDL).
- Slice compilers
- Ice core: highly efficient protocol (including protocol compression and support for both TCP and UDP), provides a flexible thread pool for multi-threaded servers, and offers additional functionality that supports extreme scalability with potentially millions of Ice objects.
- IceUtil: a collection of utility functions, such as Unicode handling and thread programming (C++ only).
- IceBox: an application server specifically for Ice applications. IceBox can easily run and administer Ice services that are dynamically loaded as a DLL, shared library, or Java class.
- IceGrid: a sophisticated server activation and deployment tool for advanced grid computing.
- Freeze: provides automatic persistence for Ice servants. With just a few lines of code, an application can incorporate a highly-scalable evictor that efficiently manages persistent objects.
- FreezeScript: it is common for persistent data types to change, especially in large software projects. To minimize the impact of these changes, FreezeScript provides inspection and migration tools for Freeze databases. The tools support an XML-based scripting capability that is both powerful and easy to use.
- IceSSL: a dynamic SSL transport plug-in for the Ice core.
- Glacier: one of the most difficult challenges for object middleware systems is security and firewalls. Glacier, the firewall solution for Ice, greatly simplifies the deployment of secure applications. Glacier authenticates and filters client requests and allows callbacks to the client in a secure fashion.
- IceStorm: a messaging service with support for federation. In contrast to most other messaging or event services, IceStorm supports typed events, meaning that broadcasting a message over a federation is as easy as invoking a method on an interface.
- IcePatch: a patching service for software distributions. Keeping software up-to-date is often a tedious task. IcePatch automates updating of individual files as well as complete directory hierarchies. Only files that have changed are downloaded to the client machine, using efficient compression algorithms.

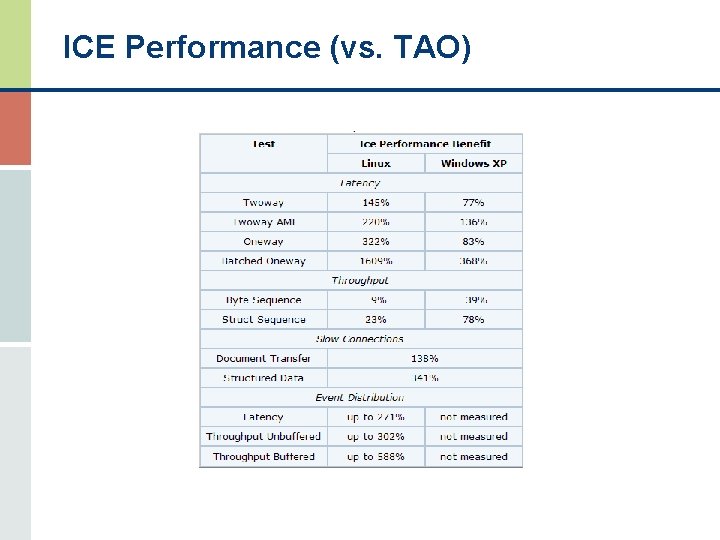

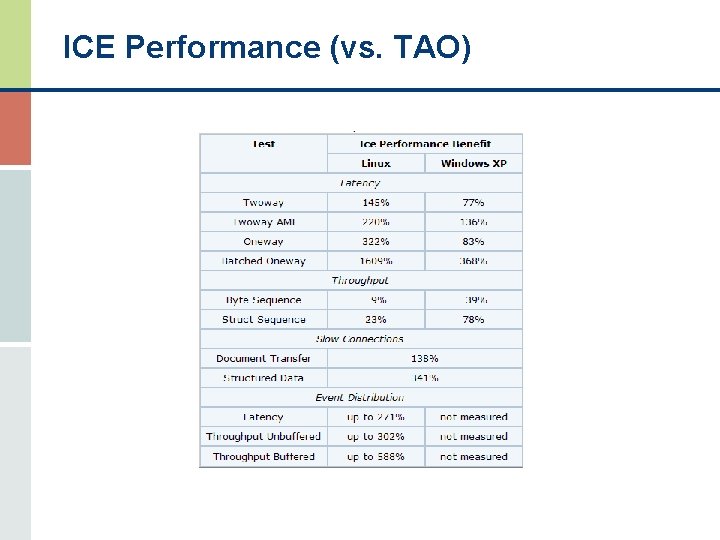

ICE Performance (vs. TAO)

TINE Protocol
- The TINE protocol allows data transfer according to one of four different paradigms:
  - “client-server” (i.e. synchronous transaction) and
  - “publisher-subscriber” (i.e. asynchronous event notification).
    - Both of these mechanisms inherently imply unicast (peer-to-peer) data transfer.
  - “producer-consumer” mode of transfer, where control system data deemed of interest to most control system elements (e.g. beam energy) are multicast from a single source, regardless of the number of interested consumers.
  - “producer-subscriber” mode, whereby subscribers inform a server of their wishes, and the server (producer) multicasts the results to its multicast group. Any number of clients can then make the identical request with no extra load on either the server or the network.
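The load argument behind the four transfer modes can be made concrete with a deliberately simple counting model. It assumes one packet per recipient for unicast modes and one packet per update for multicast modes, ignoring acknowledgements, retries, and fragmentation. The function `packets_per_update` is a hypothetical helper, not part of TINE.

```python
UNICAST_MODES = {"client-server", "publisher-subscriber"}
MULTICAST_MODES = {"producer-consumer", "producer-subscriber"}


def packets_per_update(mode, n_clients):
    """Packets the server sends for one data update under a simplified model."""
    if n_clients < 0:
        raise ValueError("n_clients must be non-negative")
    if mode in UNICAST_MODES:
        # One copy per interested client.
        return n_clients
    if mode == "producer-consumer":
        # Multicast from a single source regardless of the number of consumers.
        return 1
    if mode == "producer-subscriber":
        # Multicast starts once at least one subscriber has asked for the data.
        return 1 if n_clients > 0 else 0
    raise ValueError(f"unknown mode: {mode}")


unicast_load = packets_per_update("publisher-subscriber", 50)
multicast_load = packets_per_update("producer-subscriber", 50)
idle_load = packets_per_update("producer-subscriber", 0)
```

In this model the unicast load grows linearly with the number of clients while the multicast load stays constant, which is the "no extra load" property claimed for the producer-subscriber mode.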

EPICS and TINE
- The Epics2tine translator can either run embedded on practically any EPICS IOC, or run in parallel on a soft IOC.
- Essentially, it bypasses the Channel Access protocol completely and runs EPICS over the TINE protocol.

RTEMS (Real-Time Executive for Multiprocessor Systems)
- Free deterministic real-time operating system targeted towards deeply embedded systems
- Cross development toolset is based upon the free GNU tools
- Supports EPICS
- Possible VxWorks alternative?
  - It has been shown that RTEMS provides real-time behavior that is comparable to VxWorks

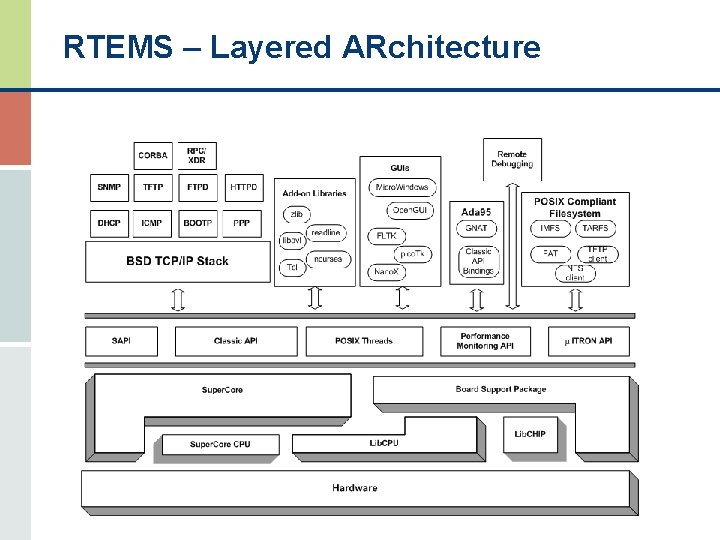

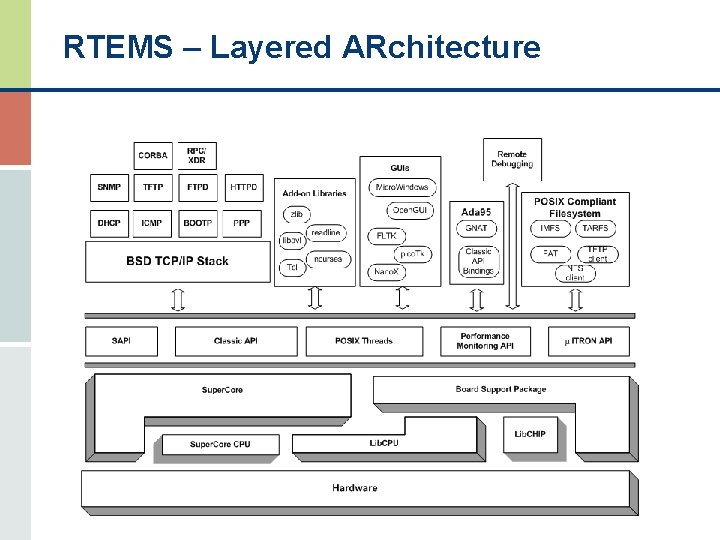

RTEMS – Layered Architecture

RTEMS – Standards Compliance
- POSIX 1003.1b API including threads
- VMEbus Industry Trade Association RTEID/ORKID based Classic API (similar to pSOS+)
- TCP/IP including BSD Sockets
- uITRON 3.0 API
- GNU Toolset supports multiple language standards
  - ISO/ANSI C++ including Standard Template Library
  - Ada with GNAT/RTEMS

RTEMS Kernel Features
- Multitasking capabilities
- Homogeneous and heterogeneous multiprocessor systems
- Event-driven, priority-based pre-emptive scheduling
- Optional rate-monotonic scheduling
- Inter-task communication and synchronization
- Priority inheritance
- Responsive interrupt management
- Dynamic memory allocation
- High level of user configurability
- Portable to many target environments

RTEMS - Networking
- High performance port of FreeBSD TCP/IP stack
  - UDP, TCP
  - ICMP, DHCP, RARP, BOOTP, PPPD
- Client Services
  - Domain Name Service (DNS) client
  - Trivial FTP (TFTP) client
  - Network File System (NFS) client
- Servers
  - FTP server (FTPD)
  - Web server (HTTPD)
  - Telnet server (Telnetd)
- Sun Remote Procedure Call (RPC)
- Sun eXternal Data Representation (XDR)
- CORBA

ATSTCS
- Advanced Technology Solar Telescope (ATST) Common Services
- Common framework for all observatory software
  - ALMA ACS is an excellent example (but too BIG)
  - ATSTCS draws from the general ACS model
- Much more than just a set of libraries
- Separates technical and functional architectures
- Provides bulk of technical architecture through Container/Component model
- Allows application developers to focus on functionality
- Provides consistency of use
- System becomes easier to maintain and manage

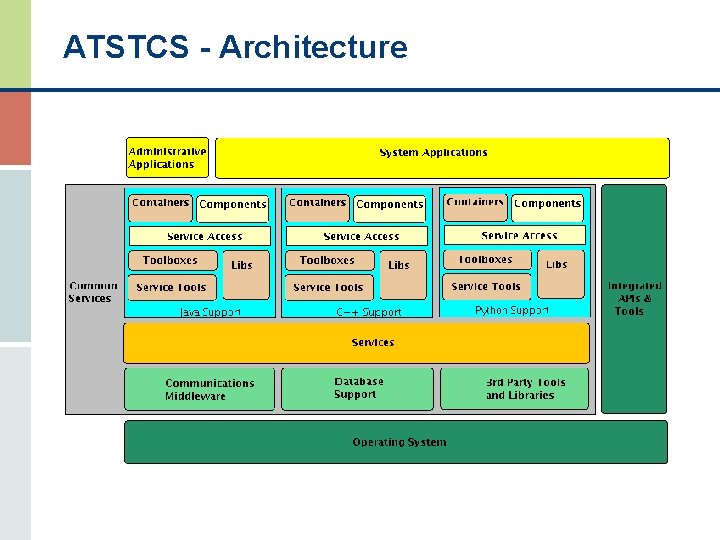

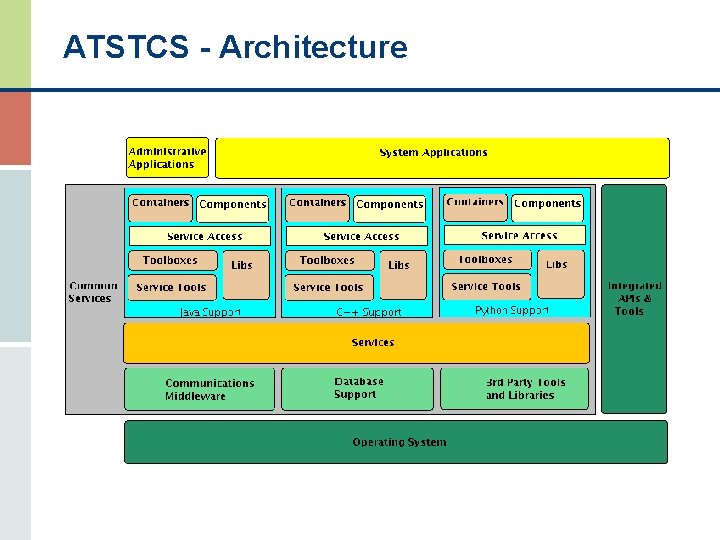

ATSTCS - Architecture

ATSTCS - Benefits
- Avoids need to write communication infrastructure in-house
  - Less effort
  - Less in-house expertise required
  - Access to outside expertise
  - Benefit from wide-spread use
- Often provides rich set of features
- Supports actions required to run in a distributed environment
- Lots to choose from (both commercial and community)

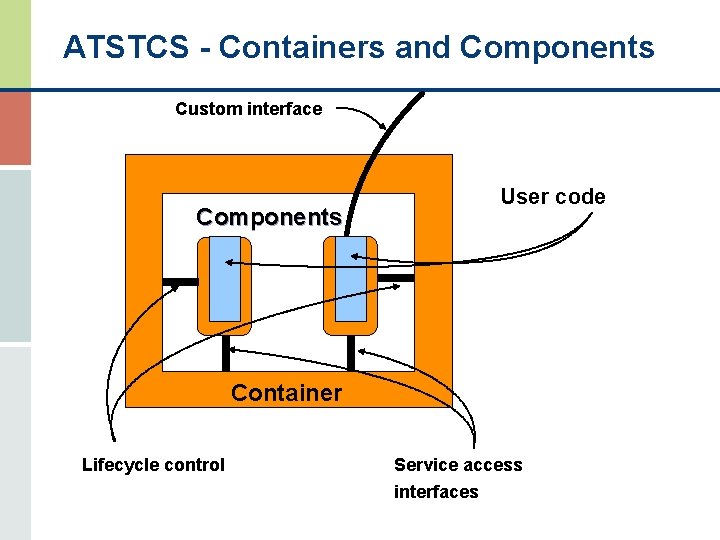

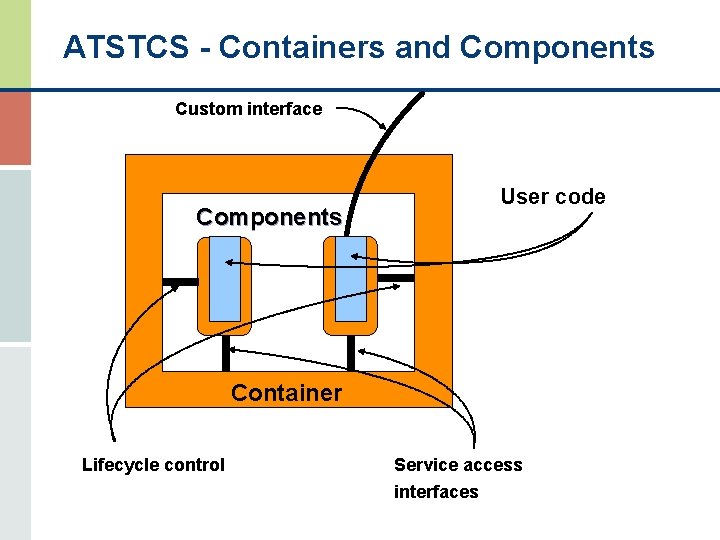

ATSTCS - Containers and Components Custom interface Components User code Container Lifecycle control Service access interfaces

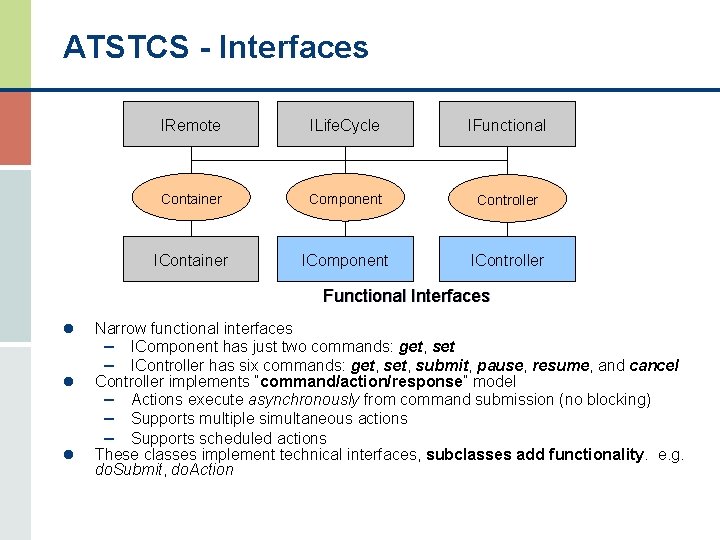

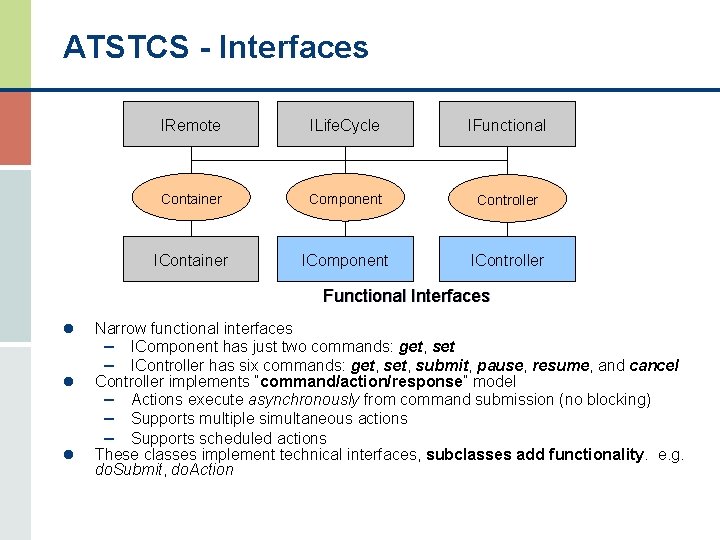

ATSTCS - Interfaces
- Narrow functional interfaces
  - IComponent has just two commands: get, set
  - IController has six commands: get, set, submit, pause, resume, and cancel
- Controller implements “command/action/response” model
  - Actions execute asynchronously from command submission (no blocking)
  - Supports multiple simultaneous actions
  - Supports scheduled actions
- These classes implement technical interfaces; subclasses add functionality, e.g. doSubmit, doAction

[Diagram: interfaces IRemote, ILifeCycle, IFunctional; classes Container, Component, Controller; IContainer, IComponent, IController; functional interfaces]
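The "command/action/response" model on this slide, where submitting a command returns at once and the action runs asynchronously, can be sketched with a thread pool. This is an illustration only, not ATSTCS code: `ToyController` and its methods are hypothetical, and pause, resume, cancel, and scheduled actions are not modeled.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class ToyController:
    """Hypothetical controller: submit() queues an action and returns immediately."""

    def __init__(self, max_actions=4):
        self._pool = ThreadPoolExecutor(max_workers=max_actions)
        self._attributes = {}
        self._lock = threading.Lock()

    def set(self, name, value):
        with self._lock:
            self._attributes[name] = value

    def get(self, name):
        with self._lock:
            return self._attributes.get(name)

    def submit(self, config):
        """Accept a command; the returned future carries the eventual response."""
        return self._pool.submit(self._do_action, dict(config))

    def _do_action(self, config):
        # Stand-in for real work such as moving a mechanism.
        time.sleep(config.pop("duration_s", 0.0))
        for name, value in config.items():
            self.set(name, value)
        return "done"

    def shutdown(self):
        self._pool.shutdown(wait=True)


controller = ToyController()
start = time.monotonic()
move = controller.submit({"position": 10, "duration_s": 0.2})
select = controller.submit({"filter": "red", "duration_s": 0.2})
submit_elapsed = time.monotonic() - start  # both submits return before any action ends

responses = [move.result(), select.result()]  # block here only to collect responses
controller.shutdown()
```

The two actions overlap in time because each runs on its own worker thread, which corresponds to "supports multiple simultaneous actions" in this simplified picture.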

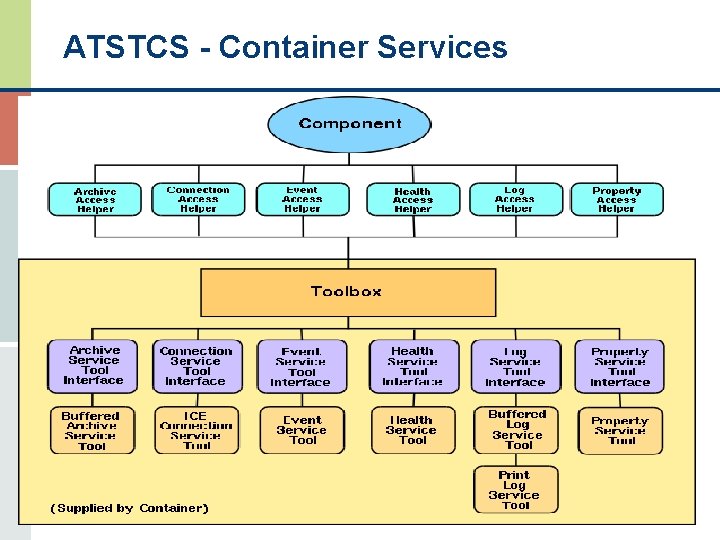

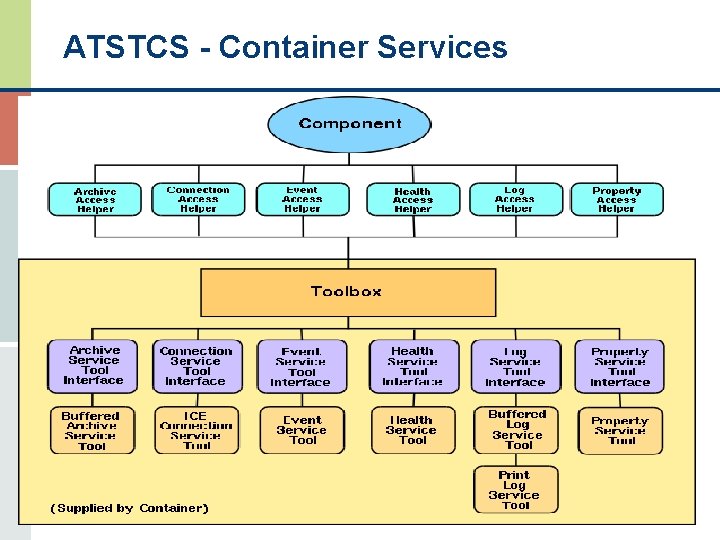

ATSTCS - Container Services

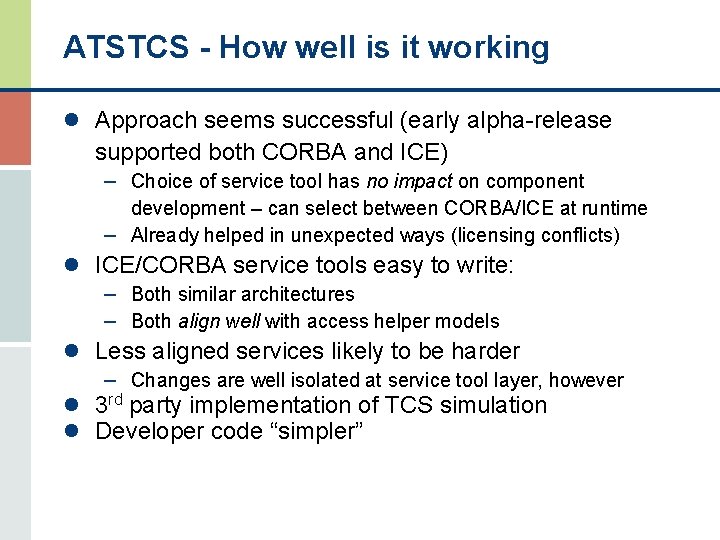

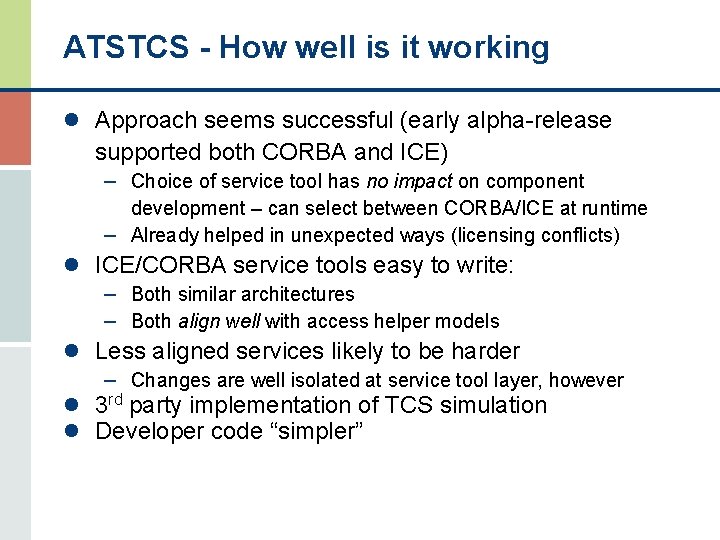

ATSTCS - How well is it working
- Approach seems successful (early alpha-release supported both CORBA and ICE)
  - Choice of service tool has no impact on component development: can select between CORBA/ICE at runtime
  - Already helped in unexpected ways (licensing conflicts)
- ICE/CORBA service tools easy to write:
  - Both similar architectures
  - Both align well with access helper models
- Less aligned services likely to be harder
  - Changes are well isolated at service tool layer, however
- 3rd-party implementation of TCS simulation
- Developer code “simpler”

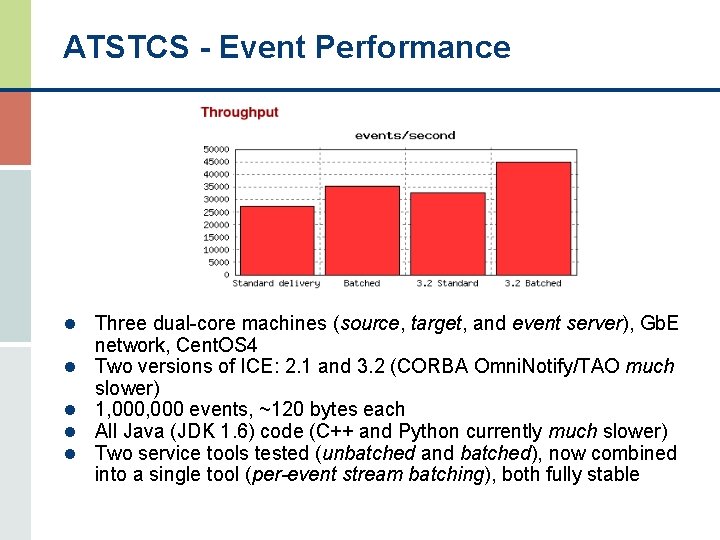

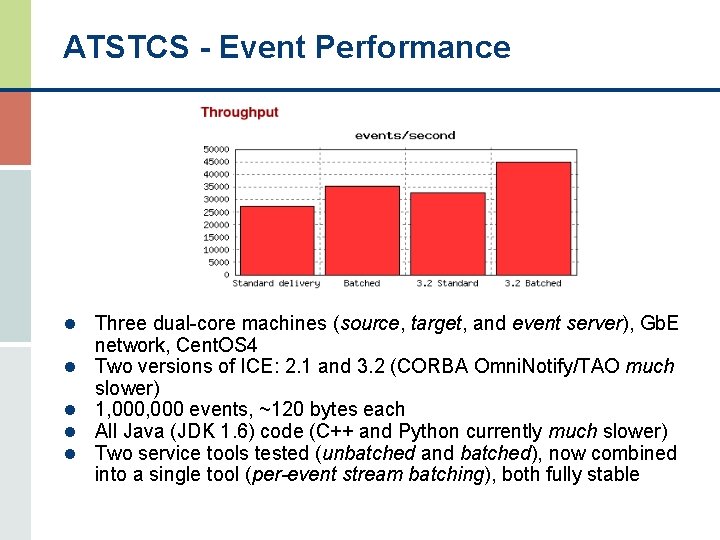

ATSTCS - Event Performance
- Three dual-core machines (source, target, and event server), GbE network, CentOS 4
- Two versions of ICE: 2.1 and 3.2 (CORBA OmniNotify/TAO much slower)
- 1,000 events, ~120 bytes each
- All Java (JDK 1.6) code (C++ and Python currently much slower)
- Two service tools tested (unbatched and batched), now combined into a single tool (per-event stream batching), both fully stable

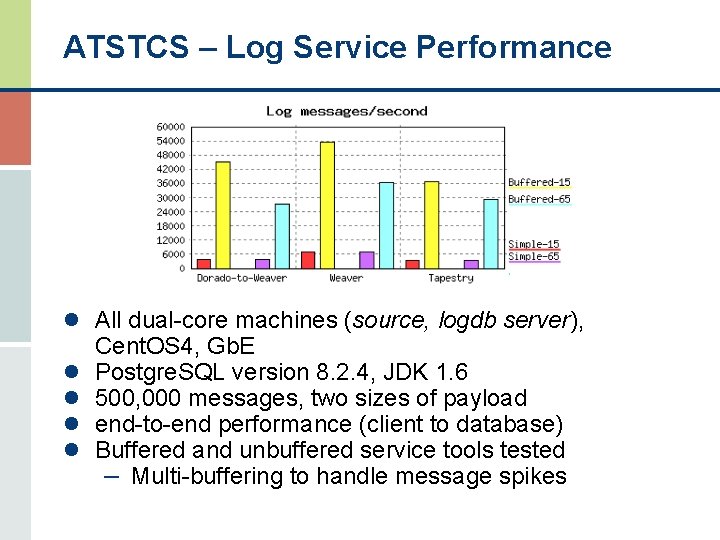

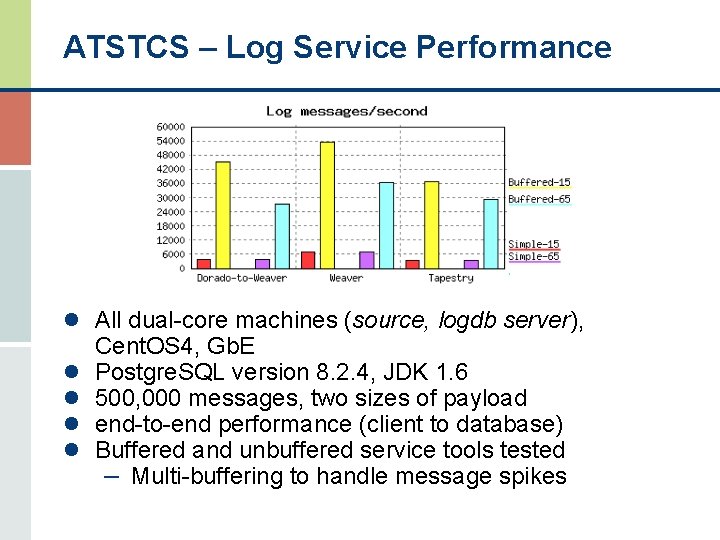

ATSTCS – Log Service Performance
- All dual-core machines (source, logdb server), CentOS 4, GbE
- PostgreSQL version 8.2.4, JDK 1.6
- 500,000 messages, two sizes of payload
- End-to-end performance (client to database)
- Buffered and unbuffered service tools tested
  - Multi-buffering to handle message spikes

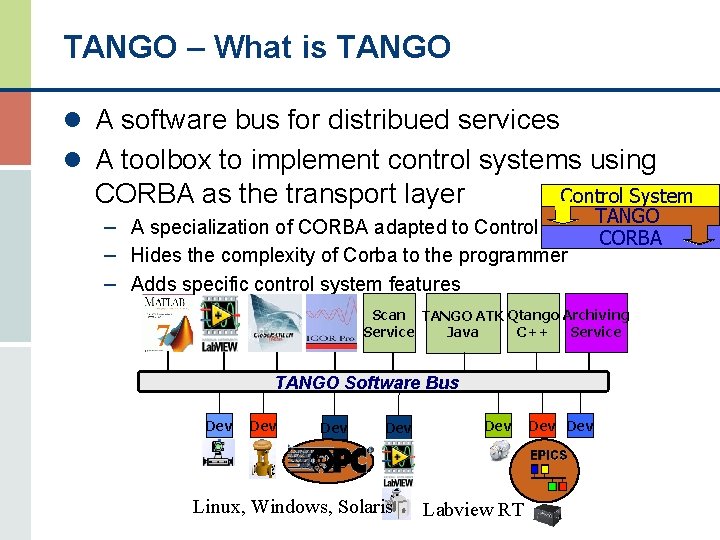

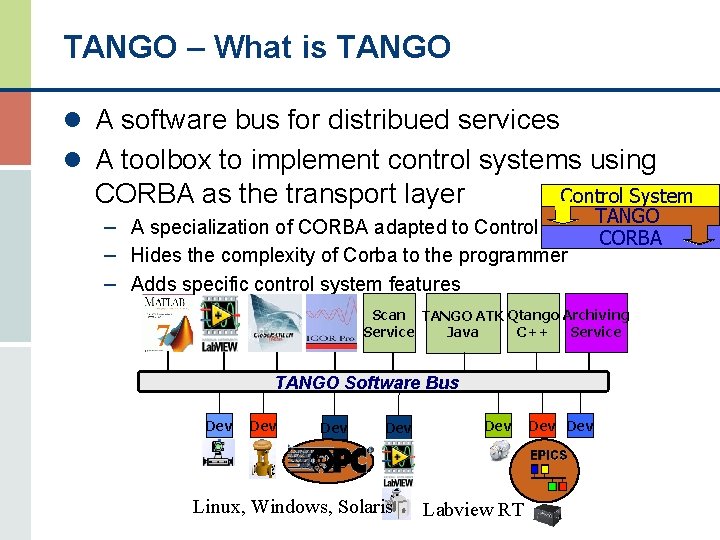

TANGO – What is TANGO
- A software bus for distributed services
- A toolbox to implement control systems using CORBA as the transport layer
  - A specialization of CORBA adapted to control
  - Hides the complexity of CORBA from the programmer
  - Adds specific control system features

[Diagram: layer stack of Control System, TANGO, CORBA. Clients and services (Scan, TANGO ATK, Qtango, Archiving Service, C++ Service, Java) and devices (Dev, OPC, Labview RT) attach to the TANGO Software Bus on Linux, Windows, Solaris]

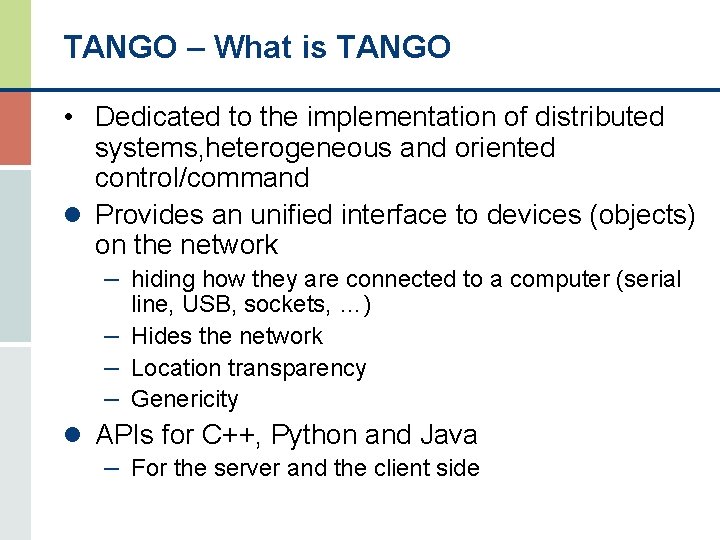

TANGO – What is TANGO
- Dedicated to the implementation of distributed, heterogeneous, control/command oriented systems
- Provides a unified interface to devices (objects) on the network
  - Hides how they are connected to a computer (serial line, USB, sockets, …)
  - Hides the network
  - Location transparency
  - Genericity
- APIs for C++, Python and Java
  - For the server and the client side

TANGO – What is TANGO
- More than only a software bus
  - Database for persistency
  - Code generator to implement networked devices
  - Central services (Archive, snapshots, logging, alarms, scans, security, …)
  - Application Toolkits (Java, C++ and Python)
  - Commercial bindings (LabVIEW, Matlab, Igor Pro)
  - Control system administration (Starter, Astor)
  - Hundreds of available classes
  - Web interfaces (PHP, Java)

TANGO and CORBA
- Tango encapsulates the CORBA communication protocol
  - Allows the use of other communication protocols
- Tango uses a narrow CORBA interface
  - All objects on the network have the same interface
  - Allows the use of generic applications
  - Avoids recompilation when new objects are added

The TANGO Device
- The fundamental brick of Tango is the device!
- Everything which needs to be controlled is a “device”, from very simple equipment to very sophisticated equipment
- Every device has a three-field name “domain/family/member”
  - sr/v-ip/c18-1, sr/v-ip/c18-2
  - sr/d-ct/1
  - id10/motor/10
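The three-field naming convention can be checked mechanically. The sketch below is plain string handling, not the TANGO API; `DeviceName` and `parse_device_name` are hypothetical names, and the only rule assumed is the one stated on the slide: exactly three non-empty fields separated by slashes.

```python
from typing import NamedTuple


class DeviceName(NamedTuple):
    """The three fields of a device name, in order."""
    domain: str
    family: str
    member: str


def parse_device_name(name):
    """Split "domain/family/member" into its fields, rejecting malformed names."""
    parts = name.strip().split("/")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected domain/family/member, got {name!r}")
    return DeviceName(*parts)


vacuum_pump = parse_device_name("sr/v-ip/c18-1")
motor = parse_device_name("id10/motor/10")
```

For example, `vacuum_pump.family` is `"v-ip"` and `motor.domain` is `"id10"`, while a two-field string such as `"sr/v-ip"` raises `ValueError`.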

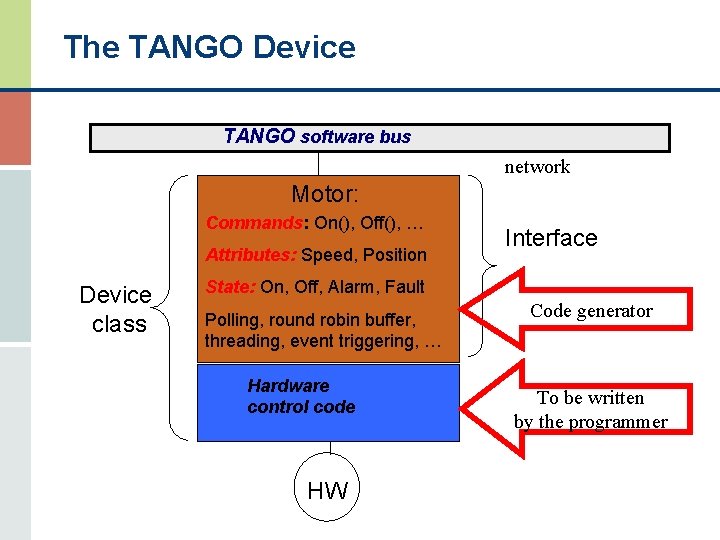

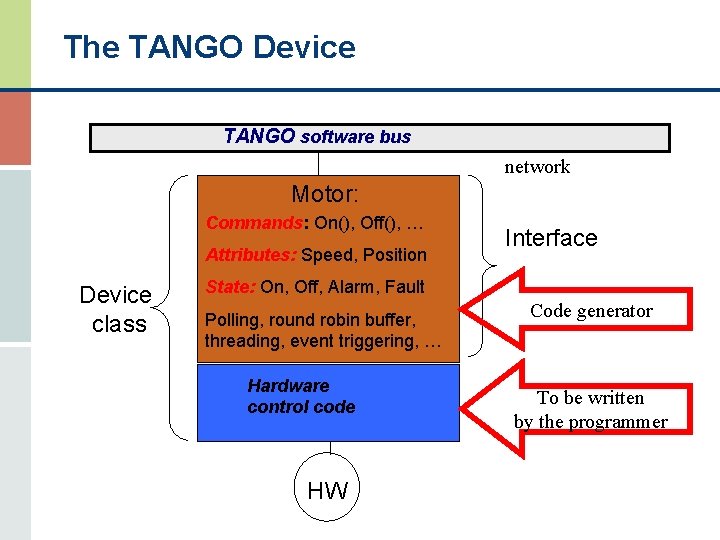

The TANGO Device

[Diagram: a device attached to the TANGO software bus (network), in three layers:
- Interface, with Motor as the example: Commands: On(), Off(), …; Attributes: Speed, Position; State: On, Off, Alarm, Fault
- Device class: polling, round robin buffer, threading, event triggering, … (code generator)
- Hardware control code (HW): to be written by the programmer]

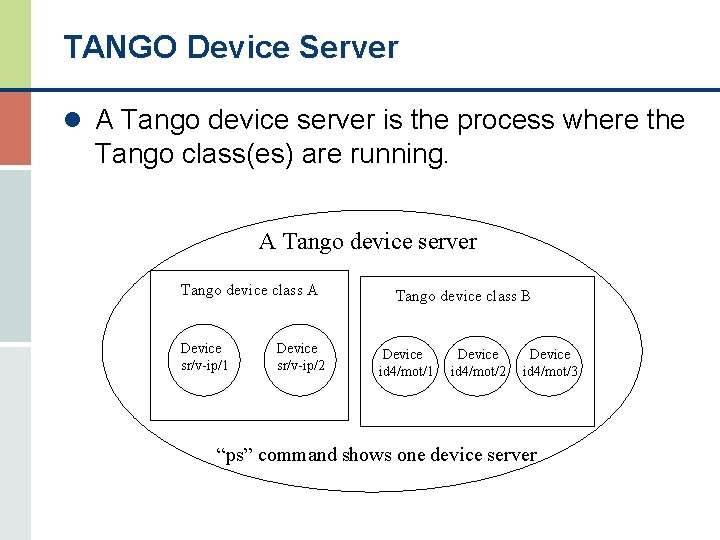

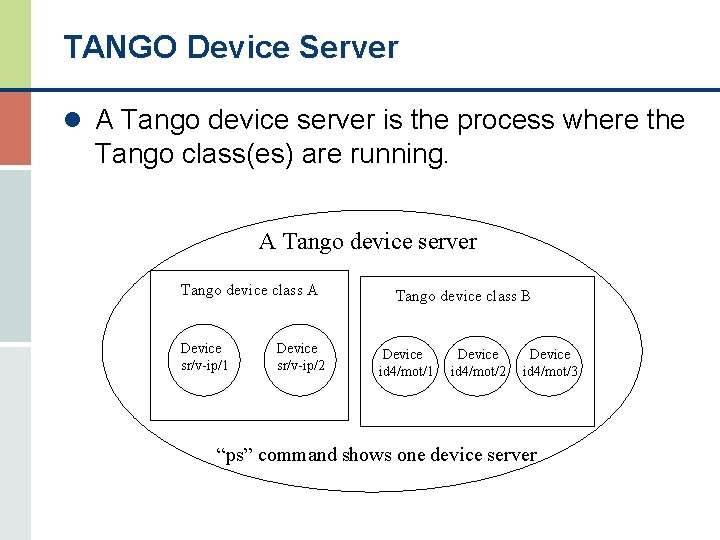

TANGO Device Server
- A Tango device server is the process where the Tango class(es) are running.
- The “ps” command shows one device server

[Diagram: a Tango device server containing Tango device class A (devices sr/v-ip/1, sr/v-ip/2) and Tango device class B (devices id4/mot/1, id4/mot/2, id4/mot/3)]

TANGO Device Server
- Tango uses a database to configure a device server process
- The number and names of the devices for a Tango class are defined in the database, not in the code.
- Which Tango class(es) are part of a device server process is defined in the database and also in the code

TANGO Support
- APIs/Programming Languages
  - C++ (performance)
  - Java (portability)
  - Python (scripts)
  - Others (Matlab, Igor Pro, LabVIEW)
- Platforms
  - Linux
  - Windows NT/2000/XP
  - Sun Solaris
  - FPGA