DSS for Integrated Water Resources Management (IWRM DSS)

- Slides: 49

DSS for Integrated Water Resources Management (IWRM): DSS methods and tools

DDr. Kurt Fedra, kurt@ess.co.at

ESS GmbH, Austria, http://www.ess.co.at

Environmental Software & Services, A-2352 Gumpoldskirchen

© K. Fedra 2007

Workshop objectives
- Show the potential of DSS for IWRM;
- Create awareness of the possibilities;
- Facilitate understanding by describing:
  - basic concepts, theory and approaches, components, methods, the tools, the language;
  - scope and benefits of possible applications, prototypical application examples;
  - limitations, uncertainty, data requirements, infrastructure and institutional requirements.
- Get the participants interested in active participation, including the exploratory application of the online tools to any specific problem or project.

Main topics:
- DSS software tools and methods: overview and comparison of basic methods;
- Classical decision problems: decision support and optimization, scenario development.

DSS tools and methods

DSS: 254,000 hits in Google. The term DSS is frequently used for (software) systems that are only marginally related to decision support:
- a SPREADSHEET alone is not a DSS;
- a DATA BASE alone is not a DSS;
- a MODEL alone is not a DSS;
- a GIS alone is not a DSS.

What is a DSS?

A Decision Support System (DSS) is a computer-based problem-solving system (HW, SW, data, people) that can assist a non-trivial choice between alternatives in complex and controversial domains. A DSS must manage, together:
- a set of alternatives (design);
- a preference structure (selection).

DSS tools and methods

A DSS should at least explicitly address:
- Alternatives (manage, design)
- Preference structure (criteria, objectives, constraints, ranking and selection rules)

Design of alternatives
- Predefined set (externally defined)
- Expert assessment, ad hoc
- Expert assessment, checklists (EIA, SIA)
- Expert systems (rule-based)
- Simulation modelling, scenario analysis
- Mathematical programming (optimization)

Design of alternatives
- Predefined set (externally defined)
- Expert assessment, ad hoc

Advantages: simple, cost-efficient.

Limitations: arbitrary, subjective, possibly unstructured; neither consistency nor optimality is guaranteed.

Design of alternatives
- Expert assessment, checklists (EIA, SIA): better structured than ad hoc methods, commonly used for EIA

Advantages: simple, efficient, cheap.

Limitations: subjective; no guarantee of completeness or consistency; no convergence to optimality.

Design of alternatives
- Expert assessment, checklists (EIA, SIA)
- Expert systems (rule-based)

Advantages (expert systems): very flexible, can cover qualitative and quantitative concepts, easy to use.

Limitations: a subjective element; effort in preparing a domain-specific knowledge base.

Expert system

Uses first-order logic to describe and assess alternatives:
- `IF [condition: variable|operator|value] AND/OR [condition]` (test)
- `THEN [conclusion: variable|operator|value]` (assignment)

Intelligent checklists: alternatives are generated by systematically varying the input/control conditions (antecedents).
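The IF/THEN scheme and the "intelligent checklist" idea can be sketched as a tiny forward-chaining rule engine. This is a minimal illustration, not the rule syntax of any particular DSS: the variables (`withdrawal_pct`, `water_stress`, `irrigation`, `assessment`), thresholds and rules are hypothetical, and only AND-ed conditions are supported (an OR can be expressed as two rules with the same conclusion).

```python
import itertools
import operator

# Comparison operators allowed in a condition (variable|operator|value).
OPS = {"<": operator.lt, "<=": operator.le, ">": operator.gt,
       ">=": operator.ge, "==": operator.eq}

# Hypothetical knowledge base: each rule is
# ([AND-ed conditions (variable, operator, value)], (variable, value) to assign).
RULES = [
    ([("withdrawal_pct", ">", 40)], ("water_stress", "high")),
    ([("withdrawal_pct", "<=", 40)], ("water_stress", "low")),
    ([("water_stress", "==", "high"), ("irrigation", "==", "flood")],
     ("assessment", "unacceptable")),
    ([("water_stress", "==", "high"), ("irrigation", "==", "drip")],
     ("assessment", "acceptable")),
    ([("water_stress", "==", "low")], ("assessment", "acceptable")),
]


def infer(facts):
    """Forward chaining: fire rules until no new variable gets assigned."""
    facts = dict(facts)
    changed = True
    while changed:
        changed = False
        for conditions, (var, value) in RULES:
            if var in facts:
                continue  # first assignment wins; never overwrite a fact
            if all(v in facts and OPS[op](facts[v], val)
                   for v, op, val in conditions):
                facts[var] = value
                changed = True
    return facts


def intelligent_checklist(options):
    """Generate alternatives by systematically varying the antecedents."""
    names = list(options)
    for combo in itertools.product(*(options[n] for n in names)):
        inputs = dict(zip(names, combo))
        yield inputs, infer(inputs).get("assessment")


for inputs, verdict in intelligent_checklist(
        {"withdrawal_pct": [30, 60], "irrigation": ["flood", "drip"]}):
    print(inputs, "->", verdict)
```

With these hypothetical rules, only the combination of high withdrawal and flood irrigation is flagged as unacceptable.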

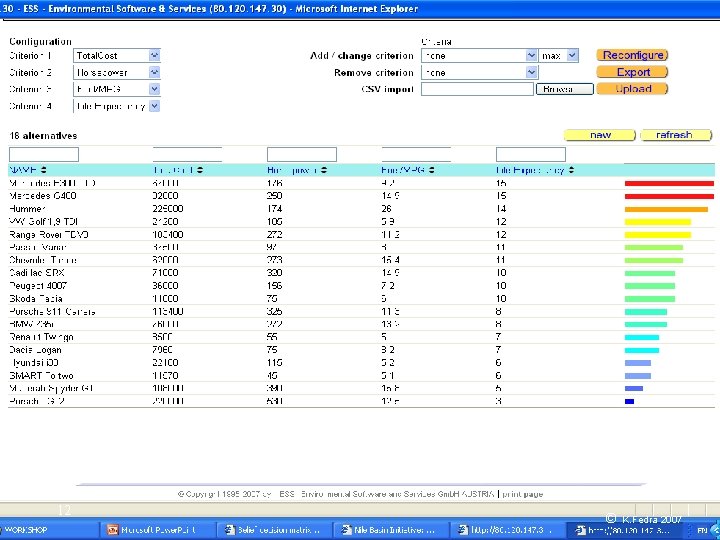

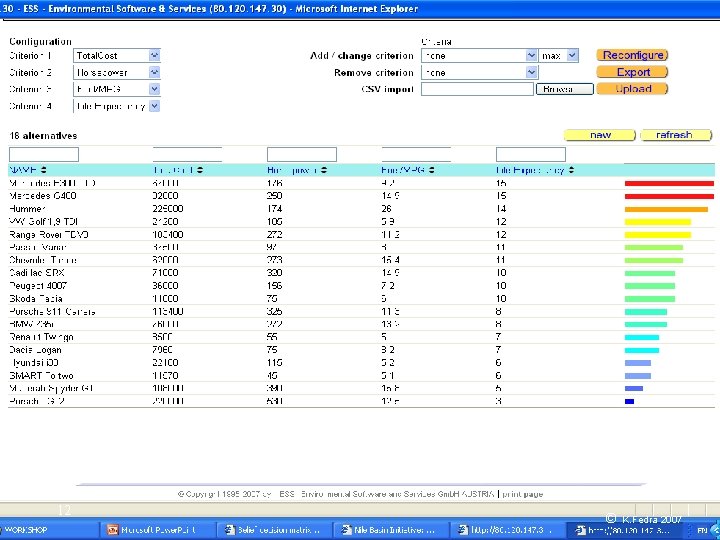

ranking

Design of alternatives
- Simulation modelling, scenario analysis

Advantages: most powerful, flexible and versatile; high level of detail, arbitrary resolution and coverage (dynamic, distributed); a large body of experience and available tools.

Limitations: effort and costs, data requirements (GIGO: garbage in, garbage out).

Design of alternatives
- Mathematical programming (optimization)

Advantages: the most powerful paradigm, and the only one that truly designs and generates alternatives that directly address the objectives (goal-oriented).

Limitations: the same as for modelling (effort and data), plus simplifying assumptions in many methods (e.g., linearity in LP).

Mathematical programming

$$\max_{x \in X} f(x) \quad \text{subject to} \quad g(x) \le 0, \; h(x) = 0$$

where X ⊆ ℝ^n is a subset of the domain of the real-valued functions f, g and h. The relations g(x) ≤ 0 and h(x) = 0 are called constraints; f is called the objective function.
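For the linear special case (LP) with two variables and inequality constraints only, the feasible set X is a polygon, and the optimum of a feasible, bounded problem lies at one of its vertices. The sketch below enumerates those vertices with exact arithmetic; the allocation problem is a hypothetical toy, not taken from the slides. An equality constraint h(x) = 0 could be written as the two inequalities h(x) ≤ 0 and −h(x) ≤ 0; real applications would use an LP solver instead.

```python
from fractions import Fraction
from itertools import combinations


def solve_lp_2d(c, A, b):
    """Maximize c[0]*x + c[1]*y subject to A[k][0]*x + A[k][1]*y <= b[k].

    Vertex enumeration: the optimum of a feasible, bounded LP lies at the
    intersection of two constraint boundaries. Practical only for tiny
    two-variable problems; Fraction keeps the arithmetic exact.
    """
    best = None
    for (a1, b1), (a2, b2) in combinations(zip(A, b), 2):
        det = a1[0] * a2[1] - a1[1] * a2[0]
        if det == 0:
            continue  # parallel boundary lines: no unique intersection
        # Cramer's rule for the intersection point of the two boundaries.
        x = Fraction(b1 * a2[1] - a1[1] * b2, det)
        y = Fraction(a1[0] * b2 - b1 * a2[0], det)
        if all(ak[0] * x + ak[1] * y <= bk for ak, bk in zip(A, b)):
            value = c[0] * x + c[1] * y
            if best is None or value > best[0]:
                best = (value, (x, y))
    return best  # None if no feasible vertex exists


# Hypothetical allocation: x = irrigation release, y = hydropower release.
A = [(1, 1),    # x + y <= 4    (available water)
     (1, 3),    # x + 3y <= 6   (hypothetical capacity limit)
     (-1, 0),   # x >= 0
     (0, -1)]   # y >= 0
b = [4, 6, 0, 0]
print(solve_lp_2d((2, 3), A, b))
```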


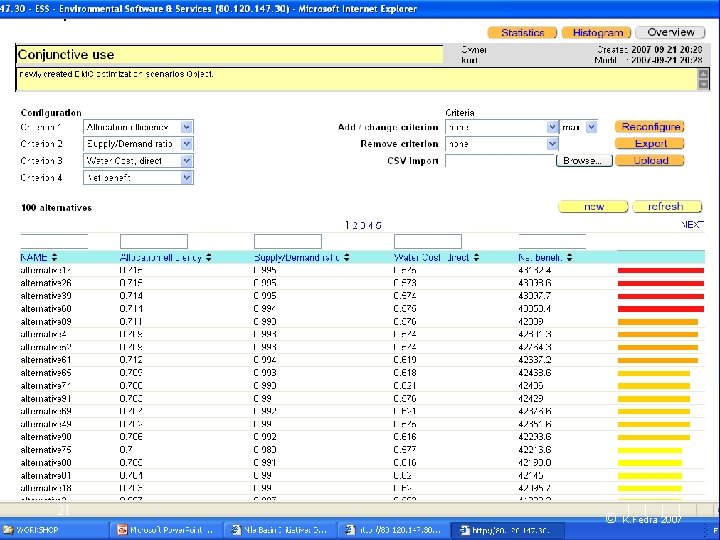

DSS tools and methods

For a GIVEN set of alternatives, the primary approach to selection is RANKING (sorting):
- simple with a single or integrated criterion (everything monetized);
- difficult with multiple criteria.

Cost-benefit analysis

Simple, single decision criterion: does the project (alternative) create a NET BENEFIT?
- Evaluate and sum all costs.
- Evaluate and sum all benefits.
- Is Benefits − Costs > 0?

Cost-benefit analysis

Establish the net present value of net benefits (PVNB):

Cost-benefit analysis, where:
- B: incremental benefit in sector i
- C: capital and operating costs
- D: "dis-benefits", external and opportunity costs
- i: sectoral index
- t: time; r: interest rate
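The PVNB formula itself is not reproduced in the text. A common discounting form consistent with the symbols defined above, assumed here rather than taken from the slide, is PVNB = Σ_t [Σ_i B_{i,t} − C_t − D_t] / (1 + r)^t. The sketch computes it for a hypothetical two-sector project and applies the net-benefit test (PVNB > 0).

```python
def pvnb(benefits, costs, disbenefits, r):
    """Present value of net benefits (assumed standard discounting form).

    benefits[t][i]: incremental benefit B in sector i in year t
    costs[t]:       capital and operating costs C in year t
    disbenefits[t]: dis-benefits D (external, opportunity costs) in year t
    r:              interest (discount) rate, e.g. 0.05
    """
    total = 0.0
    for t, (b_t, c_t, d_t) in enumerate(zip(benefits, costs, disbenefits)):
        total += (sum(b_t) - c_t - d_t) / (1 + r) ** t
    return total


# Hypothetical project: investment in year 0, two sectors benefit in years 1 and 2.
value = pvnb(benefits=[[0, 0], [40, 30], [40, 30]],
             costs=[100, 5, 5],
             disbenefits=[0, 5, 5],
             r=0.05)
print(round(value, 2), "net benefit" if value > 0 else "no net benefit")
```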


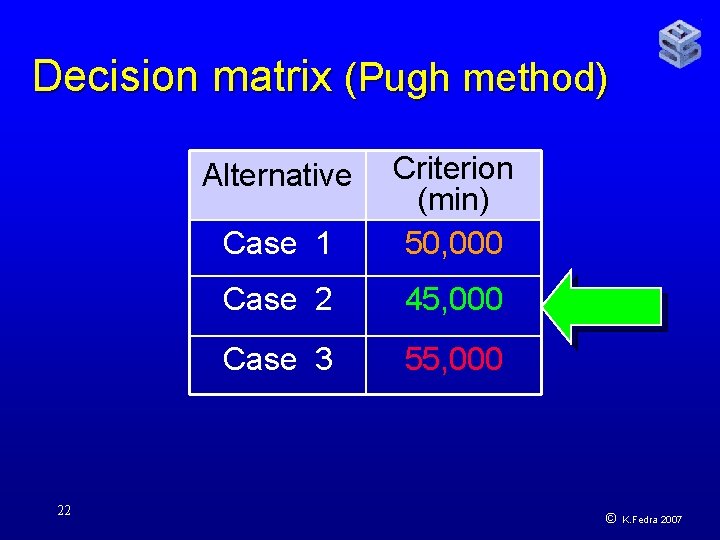

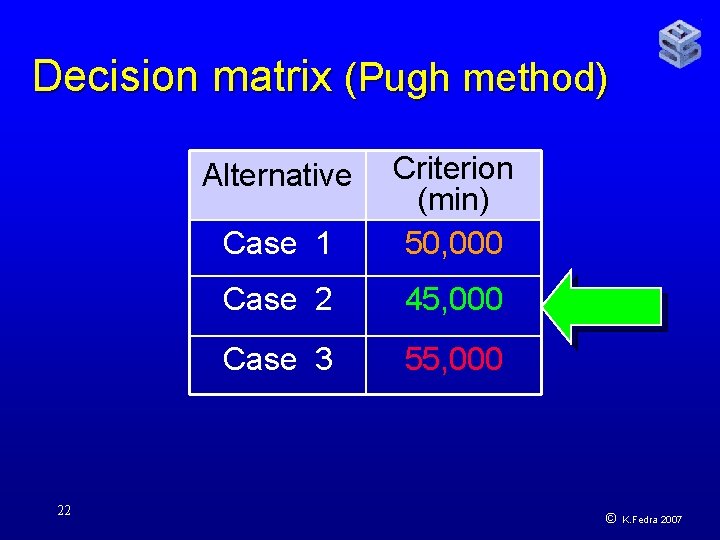

Decision matrix (Pugh method)

| Alternative | Criterion (min) |
|-------------|-----------------|
| Case 1 | 50,000 |
| Case 2 | 45,000 |
| Case 3 | 55,000 |

Decision matrix (Pugh method)

| Alternatives | Criterion 1 (min) | Criterion 2 (max) |
|--------------|-------------------|-------------------|
| Case 1 | 50,000 | 10 |
| Case 2 | 45,000 | 12 |
| Case 3 | 55,000 | 15 |

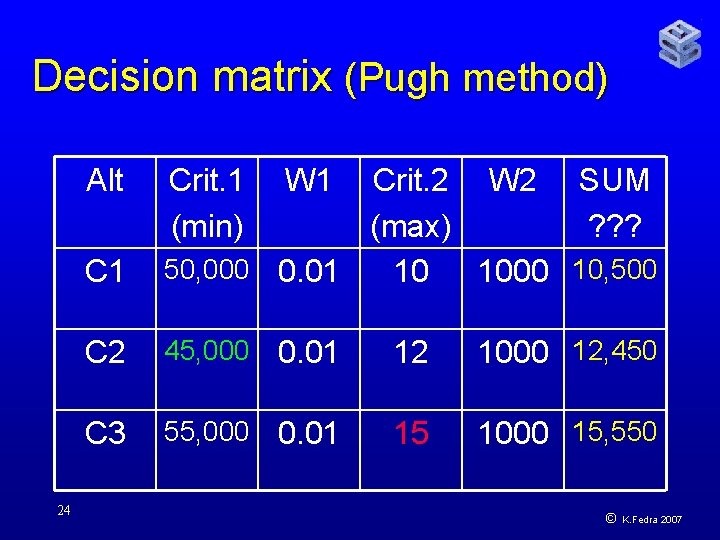

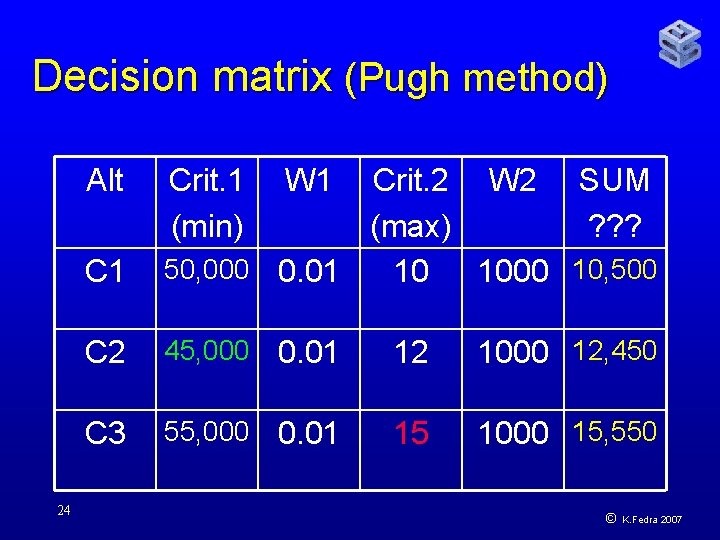

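Decision matrix (Pugh method)

SUM = W 1 × Crit. 1 + W 2 × Crit. 2

| Alt | Crit. 1 (min) | W 1 | Crit. 2 | W 2 | SUM (max) |
|-----|---------------|-----|---------|-----|-----------|
| C 1 | 50,000 | 0.01 | 10 | 1000 | 10,500 |
| C 2 | 45,000 | 0.01 | 12 | 1000 | 12,450 |
| C 3 | 55,000 | 0.01 | 15 | 1000 | 15,550 |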

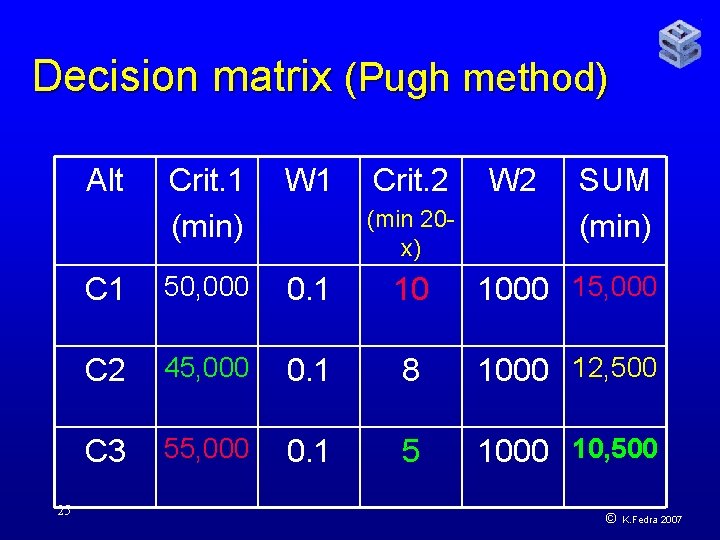

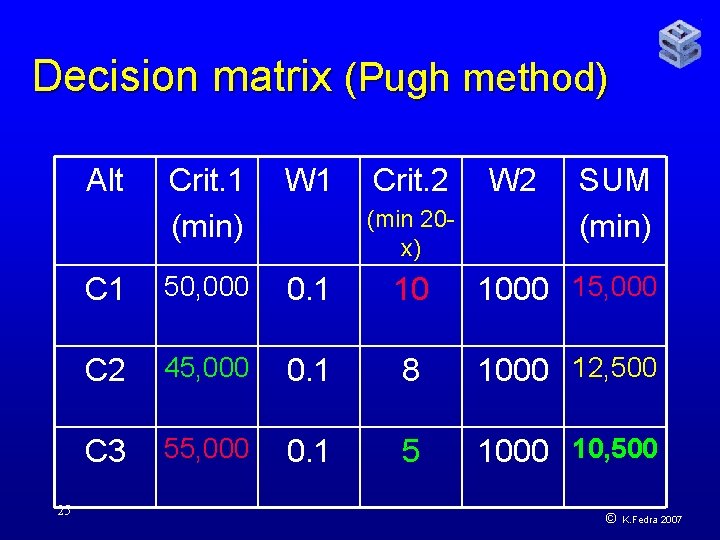

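Decision matrix (Pugh method)

Criterion 2 is recast for minimization as 20 − x (10, 12, 15 become 10, 8, 5); SUM = W 1 × Crit. 1 + W 2 × Crit. 2.

| Alt | Crit. 1 (min) | W 1 | Crit. 2 (min, 20 − x) | W 2 | SUM (min) |
|-----|---------------|-----|-----------------------|-----|-----------|
| C 1 | 50,000 | 0.1 | 10 | 1000 | 15,000 |
| C 2 | 45,000 | 0.1 | 8 | 1000 | 12,500 |
| C 3 | 55,000 | 0.1 | 5 | 1000 | 10,500 |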
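Both weighted-sum decision matrices can be reproduced in a few lines. The numbers are those of the tables; the function and variable names are only illustrative.

```python
def weighted_sums(matrix, weights):
    """Weighted-sum score per alternative: SUM = sum_j W_j * Crit_j."""
    return {alt: sum(w * x for w, x in zip(weights, values))
            for alt, values in matrix.items()}


# Criterion 1 (min) and Criterion 2 (max), as in the decision matrix tables.
data = {"C 1": (50_000, 10), "C 2": (45_000, 12), "C 3": (55_000, 15)}

# Variant 1: weights 0.01 and 1000, best alternative = largest SUM.
sums_max = weighted_sums(data, (0.01, 1000))
best_max = max(sums_max, key=sums_max.get)

# Variant 2: Criterion 2 recast for minimization as 20 - x,
# weights 0.1 and 1000, best alternative = smallest SUM.
data_min = {alt: (c1, 20 - c2) for alt, (c1, c2) in data.items()}
sums_min = weighted_sums(data_min, (0.1, 1000))
best_min = min(sums_min, key=sums_min.get)

print(sums_max, best_max)
print(sums_min, best_min)
```

In the first variant the cost criterion, which is to be minimized, still enters the maximized sum with a positive weight; the second variant makes both criteria point in the same direction before summing.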

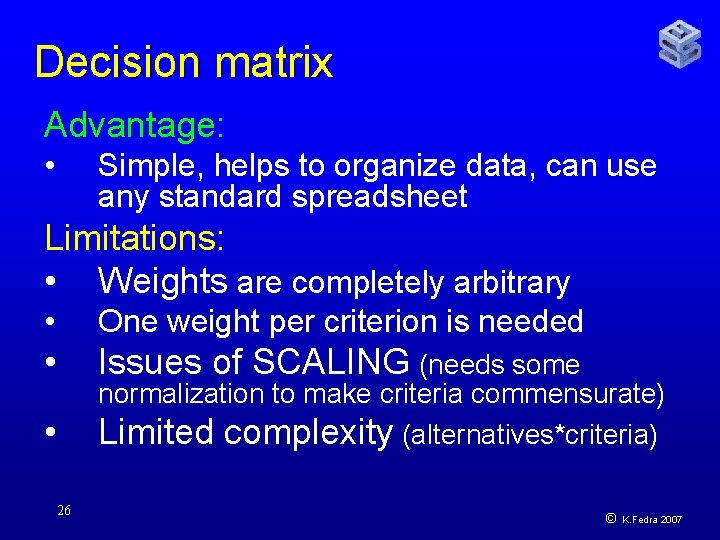

Decision matrix

Advantages:
- Simple, helps to organize data, can use any standard spreadsheet

Limitations:
- Weights are completely arbitrary
- One weight per criterion is needed
- Issues of SCALING (needs some normalization to make criteria commensurate)
- Limited complexity (alternatives × criteria)
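One common way to make criteria commensurate is min-max normalization to [0, 1], flipping criteria that are to be minimized so that 1 is always best. The sketch applies it to the decision matrix data; the equal weights are a hypothetical choice, which is exactly the arbitrariness listed above.

```python
def normalize(values, direction):
    """Min-max normalization to [0, 1]; 1 = best value on this criterion."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)  # criterion does not discriminate
    if direction == "max":
        return [(v - lo) / (hi - lo) for v in values]
    return [(hi - v) / (hi - lo) for v in values]


alts = ["C 1", "C 2", "C 3"]
cost = [50_000, 45_000, 55_000]   # Criterion 1 (min)
perf = [10, 12, 15]               # Criterion 2 (max)

n1 = normalize(cost, "min")       # dimensionless, commensurate scores
n2 = normalize(perf, "max")
weights = (0.5, 0.5)              # hypothetical equal weights
scores = {a: weights[0] * s1 + weights[1] * s2
          for a, s1, s2 in zip(alts, n1, n2)}
print(scores, max(scores, key=scores.get))
```

With normalized criteria and these equal weights, C 2 scores highest, whereas the raw weighted sums favour C 3: the ranking depends on both the scaling and the weights.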

DSS tools: preference structures
- Analytic Hierarchy Process (AHP)
- Multi-Attribute Global Inference of Quality (MAGIQ)
- Goal Programming
- ELECTRE (Outranking)
- PROMETHÉE (Outranking)
- The Evidential Reasoning Approach

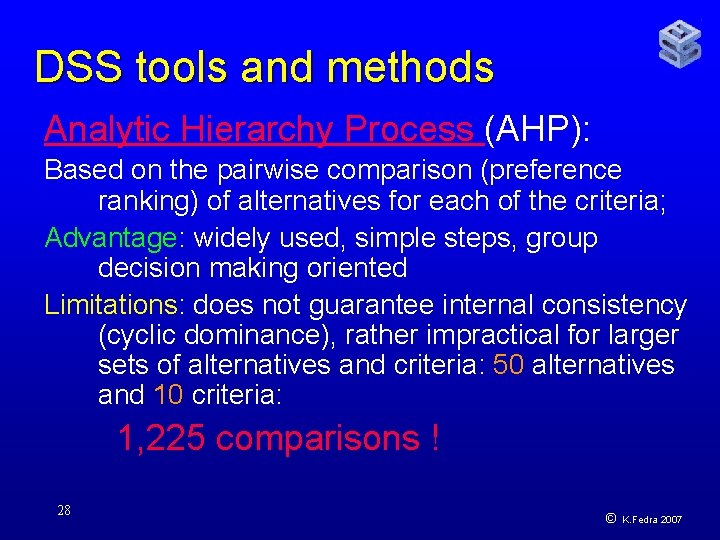

DSS tools and methods

Analytic Hierarchy Process (AHP): based on the pairwise comparison (preference ranking) of alternatives for each of the criteria.

Advantages: widely used, simple steps, oriented towards group decision making.

Limitations: does not guarantee internal consistency (cyclic dominance); rather impractical for larger sets of alternatives and criteria: 50 alternatives require 50 × 49 / 2 = 1,225 comparisons per criterion, i.e. 12,250 for 10 criteria!
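A minimal AHP sketch: the priority vector is taken as the principal eigenvector of the reciprocal comparison matrix (computed here by power iteration), and Saaty's consistency index CI = (λmax − n)/(n − 1) and ratio CR = CI/RI expose cyclic judgements. The two matrices are hypothetical examples; RI values are Saaty's random indices for n = 3 to 5, and a CR above about 0.1 is conventionally treated as too inconsistent.

```python
# Saaty's random consistency index for matrix sizes 3..5.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12}


def pairwise_comparisons(n):
    """Number of judgements needed for n items: n(n-1)/2."""
    return n * (n - 1) // 2


def ahp_priorities(matrix, iterations=100):
    """Priority vector (principal eigenvector, power iteration), CI and CR."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        v = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)
    cr = ci / RANDOM_INDEX[n]
    return w, ci, cr


# Hypothetical judgements: a[i][j] = how strongly item i is preferred to j.
consistent = [[1, 2, 6], [1 / 2, 1, 3], [1 / 6, 1 / 3, 1]]
cyclic = [[1, 3, 1 / 3], [1 / 3, 1, 3], [3, 1 / 3, 1]]  # A > B > C > A

print(pairwise_comparisons(50), 10 * pairwise_comparisons(50))
print(ahp_priorities(consistent))
print(ahp_priorities(cyclic))
```

The consistent matrix yields weights of about 0.6, 0.3 and 0.1 with CI close to zero; the cyclic one yields equal weights and a CR far above 0.1.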

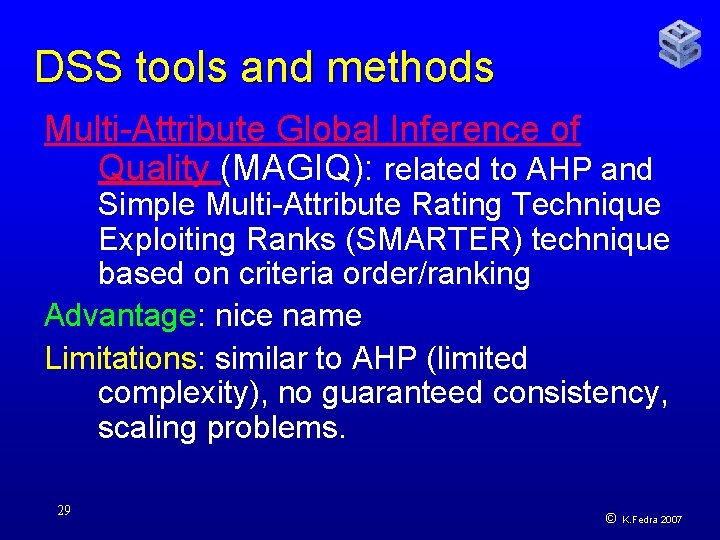

DSS tools and methods

Multi-Attribute Global Inference of Quality (MAGIQ): related to AHP and to the Simple Multi-Attribute Rating Technique Exploiting Ranks (SMARTER), a technique based on the order (ranking) of the criteria.

Advantages: nice name.

Limitations: similar to AHP (limited complexity), no guaranteed consistency, scaling problems.
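Rank-based methods turn a simple ordering of the criteria into weights. A scheme commonly associated with SMARTER, and assumed here to be the one MAGIQ uses as well, is rank-order centroid (ROC) weighting: w_k = (1/n) Σ_{i=k..n} 1/i for the criterion ranked k-th. The criterion names in the example are hypothetical.

```python
def roc_weights(n):
    """Rank-order centroid weights for n criteria ranked 1 (most important) .. n.

    w_k = (1/n) * sum_{i=k..n} 1/i; the weights sum to 1.
    """
    return [sum(1.0 / i for i in range(k, n + 1)) / n for k in range(1, n + 1)]


# Hypothetical ranking of three criteria, most important first.
ranked = ["water availability", "cost", "ecological flow"]
weights = dict(zip(ranked, roc_weights(len(ranked))))
print(weights)
```

For three criteria this gives 11/18, 5/18 and 2/18: the ranking alone fixes the weights, which avoids eliciting numbers but cannot express how much more important one criterion is than the next.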

DSS tools and methods

Goal Programming: a variation of linear programming that handles multiple, normally conflicting objective measures by minimizing the deviation from a set of targets (goals).

Advantages: based on mathematical programming; related to satisficing.

Limitations: based on mathematical programming (assuming gradients); linearity assumptions; scaling of criteria or goals.
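Goal programming proper solves a linear program with deviation variables d− (under-achievement) and d+ (over-achievement). As a simplified sketch, the function below only scores a given, discrete set of alternatives with the same weighted-deviation objective; the targets and weights are hypothetical, and the cost weight doubles as a scaling factor, which is the scaling issue noted above.

```python
def goal_deviation(values, goals):
    """Weighted sum of unwanted deviations from the targets (goals).

    goals: {criterion: (target, kind, weight)} where kind is
      "at_least" -> penalize under-achievement d- = max(0, target - value)
      "at_most"  -> penalize over-achievement  d+ = max(0, value - target)
      "exact"    -> penalize both d- and d+
    """
    total = 0.0
    for crit, (target, kind, weight) in goals.items():
        d_minus = max(0.0, target - values[crit])
        d_plus = max(0.0, values[crit] - target)
        if kind == "at_least":
            total += weight * d_minus
        elif kind == "at_most":
            total += weight * d_plus
        else:
            total += weight * (d_minus + d_plus)
    return total


alternatives = {"C 1": {"cost": 50_000, "perf": 10},
                "C 2": {"cost": 45_000, "perf": 12},
                "C 3": {"cost": 55_000, "perf": 15}}
# Hypothetical goals; the cost weight also rescales cost to units of 1,000.
goals = {"cost": (50_000, "at_most", 0.001), "perf": (14, "at_least", 1.0)}
scores = {a: goal_deviation(v, goals) for a, v in alternatives.items()}
print(scores, min(scores, key=scores.get))
```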

DSS tools and methods

ELECTRE (Outranking): based on the pairwise comparison and "outranking" of alternatives. A 1 outranks A 2 if:
- A 1 is at least as good as A 2 with respect to a major subset (majority) of the criteria, and
- A 1 is not too bad relative to A 2 with respect to the remaining criteria.
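The two conditions of the outranking relation map onto a concordance test (weighted share of criteria where A 1 is at least as good) and a discordance test (largest normalized amount by which A 2 beats A 1 on the remaining criteria). This ELECTRE-I-style sketch uses the decision matrix data; the equal weights and the thresholds c_min = 0.6 and d_max = 0.5 are hypothetical.

```python
def outranks(a, b, weights, directions, ranges, c_min=0.6, d_max=0.5):
    """ELECTRE-I-style test: does alternative a outrank alternative b?

    Concordance: share of total weight on criteria where a is at least as good.
    Discordance: largest normalized margin by which b beats a on a criterion.
    """
    concordance = 0.0
    discordance = 0.0
    for j, w in enumerate(weights):
        sign = 1 if directions[j] == "max" else -1  # make "more" always better
        diff = sign * (a[j] - b[j])                 # > 0: a better, < 0: b better
        if diff >= 0:
            concordance += w
        else:
            discordance = max(discordance, -diff / ranges[j])
    concordance /= sum(weights)
    return concordance >= c_min and discordance <= d_max


alts = {"C 1": (50_000, 10), "C 2": (45_000, 12), "C 3": (55_000, 15)}
weights, dirs, ranges = (0.5, 0.5), ("min", "max"), (10_000, 5)
for x in alts:
    for y in alts:
        if x != y and outranks(alts[x], alts[y], weights, dirs, ranges):
            print(x, "outranks", y)
```

Here only "C 2 outranks C 1" holds; C 2 and C 3 remain incomparable (neither outranks the other), which is typical of outranking methods: they yield a partial rather than a complete order.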

DSS tools and methods

PROMETHÉE (Outranking): Preference Ranking Organisation METHod for Enrichment Evaluations. Same old pairwise comparison...

Advantages: a nice PC-based tool (Decision Lab 2000).

Limitations: as above.
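PROMETHÉE II turns the pairwise comparisons into a complete ranking via net outranking flows φ = φ+ − φ−. This sketch uses the simplest ("usual") preference function, P = 1 if an alternative is strictly better on a criterion and 0 otherwise; the equal weights are hypothetical, and real applications usually choose other preference functions with indifference and preference thresholds.

```python
def promethee_net_flows(matrix, weights, directions):
    """PROMETHEE II net flows with the 'usual' preference function.

    weights are assumed to sum to 1.
    """
    alts = list(matrix)
    n = len(alts)

    def pi(a, b):
        # Aggregated preference index of a over b.
        total = 0.0
        for j, w in enumerate(weights):
            d = matrix[a][j] - matrix[b][j]
            if directions[j] == "min":
                d = -d
            if d > 0:
                total += w
        return total

    flows = {}
    for a in alts:
        others = [x for x in alts if x != a]
        phi_plus = sum(pi(a, x) for x in others) / (n - 1)   # leaving flow
        phi_minus = sum(pi(x, a) for x in others) / (n - 1)  # entering flow
        flows[a] = phi_plus - phi_minus
    return flows


data = {"C 1": (50_000, 10), "C 2": (45_000, 12), "C 3": (55_000, 15)}
flows = promethee_net_flows(data, (0.5, 0.5), ("min", "max"))
ranking = sorted(flows, key=flows.get, reverse=True)
print(flows, ranking)
```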

DSS tools and methods

The Evidential Reasoning Approach: a decision matrix application; combines quantitative and qualitative criteria, with an emphasis on perceptions and beliefs (belief decision matrix).

Advantages: very flexible.

Limitations: very subjective.

DSS tools and methods

Reference point: normalizes each criterion between NADIR (worst) and UTOPIA (best); values are expressed as % achievement along that distance. Weights are implicit in the choice of reference point (default: UTOPIA); the efficient solution is the one closest to the reference point (REF).

Advantages: conceptually clean, minimal assumptions, very efficient for large data sets.

Limitations: assumes independent criteria (test this!); non-intuitive for high dimensionality.
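A minimal sketch of the reference point idea, under two stated assumptions: NADIR and UTOPIA are taken as the worst and best observed values of each criterion among the alternatives, and "closest" is measured with the Euclidean distance (other metrics are possible). Achievement is kept as a fraction (0 = nadir, 1 = utopia) rather than a percentage.

```python
import math


def reference_point(matrix, directions, reference=None):
    """Rank alternatives by distance to a reference point in normalized space.

    Each criterion is rescaled so that NADIR -> 0 and UTOPIA -> 1 (share of
    the nadir-utopia distance achieved). Default reference: UTOPIA (all 1s).
    """
    alts = list(matrix)
    k = len(directions)
    achievement = {a: [] for a in alts}
    for j in range(k):
        column = [matrix[a][j] for a in alts]
        utopia = max(column) if directions[j] == "max" else min(column)
        nadir = min(column) if directions[j] == "max" else max(column)
        span = utopia - nadir
        for a in alts:
            achievement[a].append(
                1.0 if span == 0 else (matrix[a][j] - nadir) / span)
    ref = reference if reference is not None else [1.0] * k
    distance = {a: math.dist(achievement[a], ref) for a in alts}
    return achievement, distance


data = {"C 1": (50_000, 10), "C 2": (45_000, 12), "C 3": (55_000, 15)}
achievement, distance = reference_point(data, ("min", "max"))
print(achievement)
print(distance, min(distance, key=distance.get))
```

Moving the reference point acts as an implicit weighting: with `reference=[0.5, 1.0]`, which asks less of criterion 1, C 3 instead of C 2 becomes the closest alternative.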

DSS tools and methods

Occam's razor: entia non sunt multiplicanda praeter necessitatem ("entities must not be multiplied beyond necessity"; lex parsimoniae, William of Ockham, 14th-century philosopher monk).
- One should not increase, beyond what is necessary, the number of entities (assumptions, parameters) required to explain anything.
- All things being equal, the simplest solution tends to be the right one.
- KISS (keep it simple, stupid)!

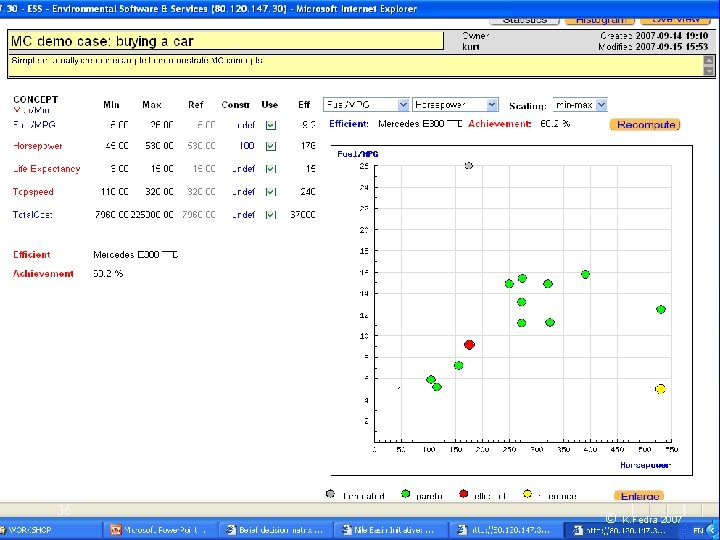

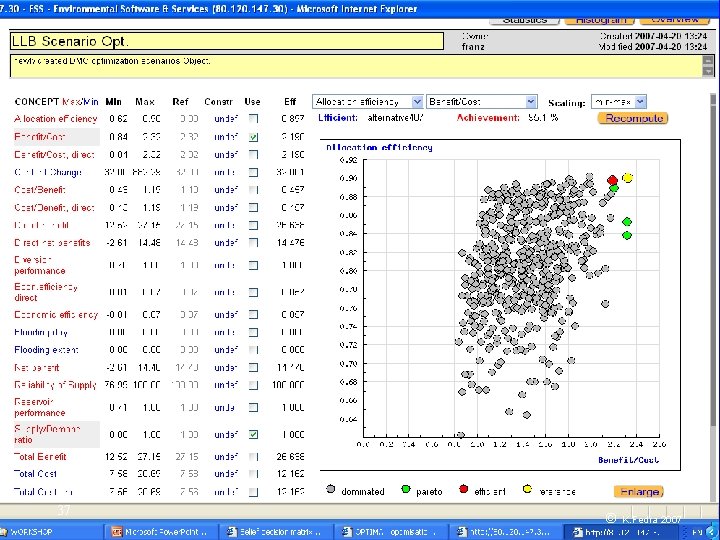

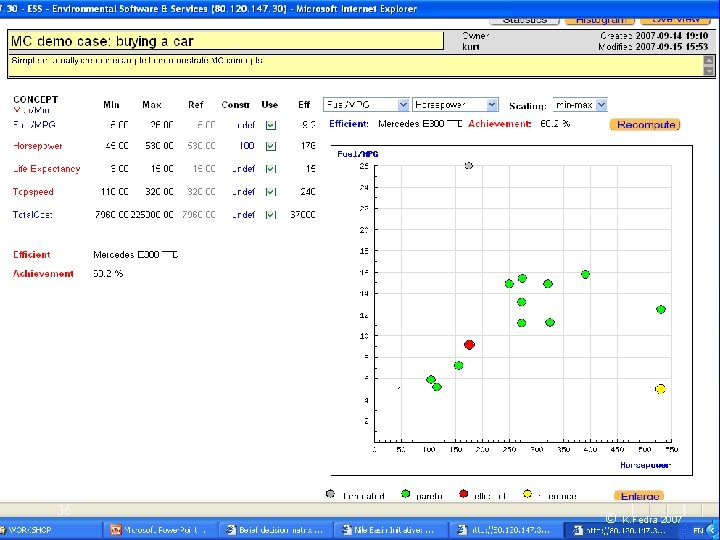

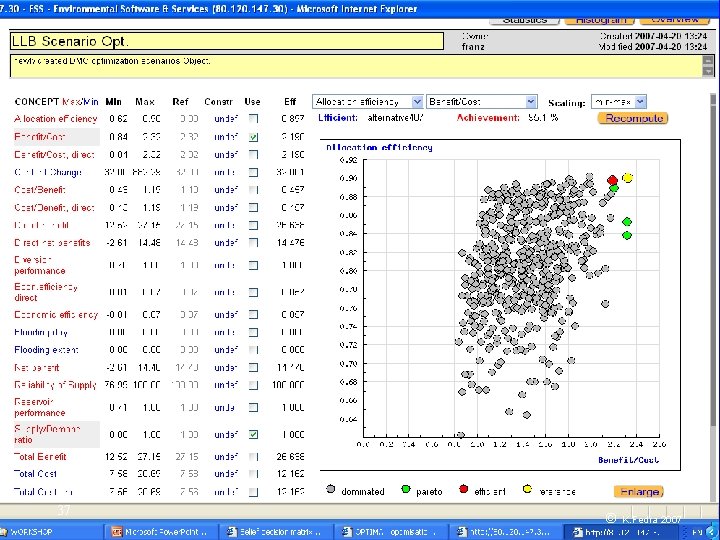

DSS tools and methods

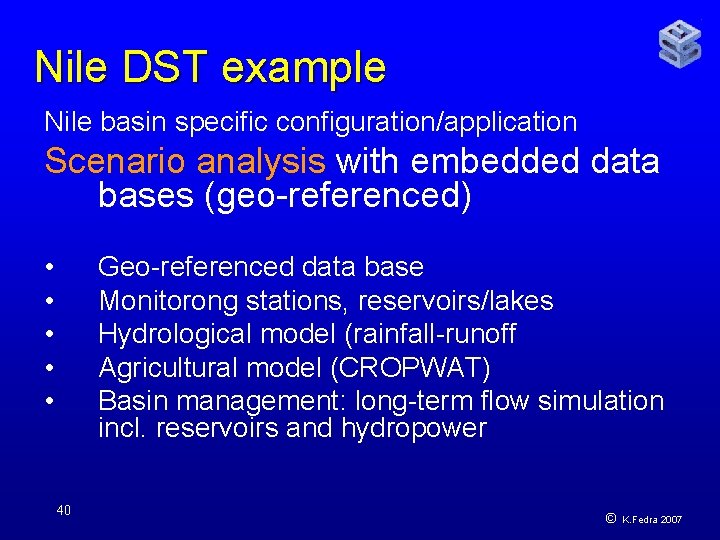

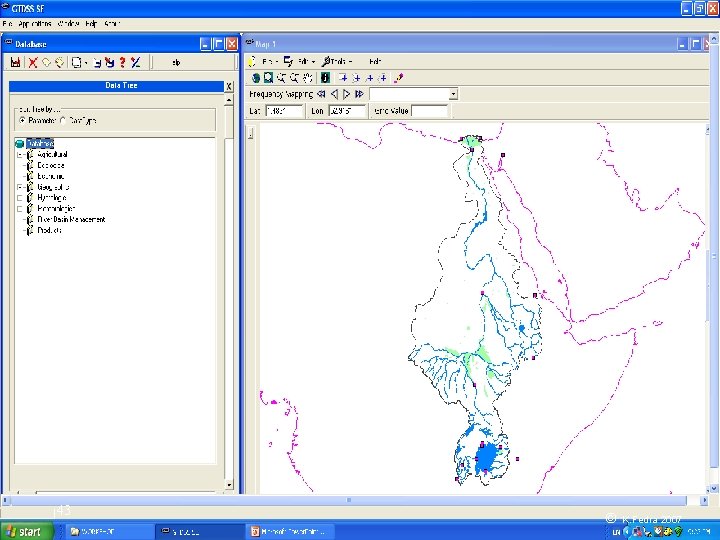

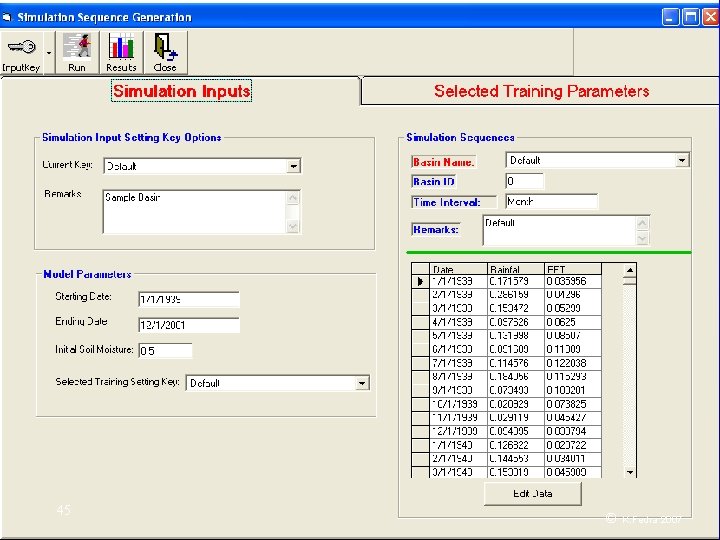

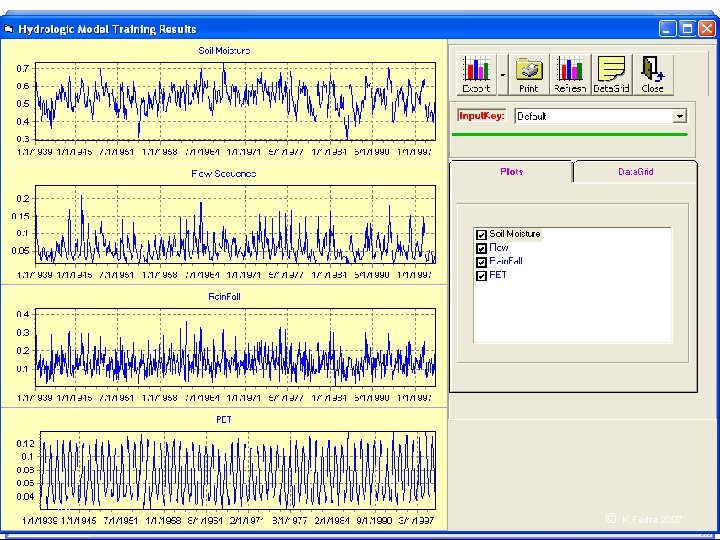

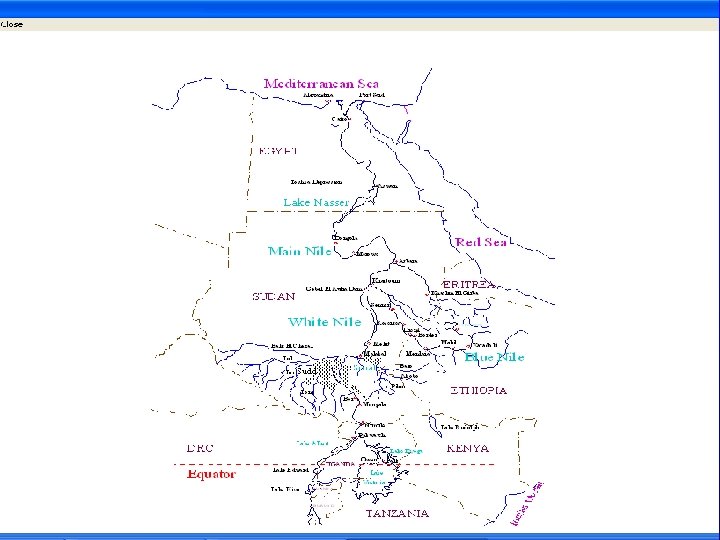

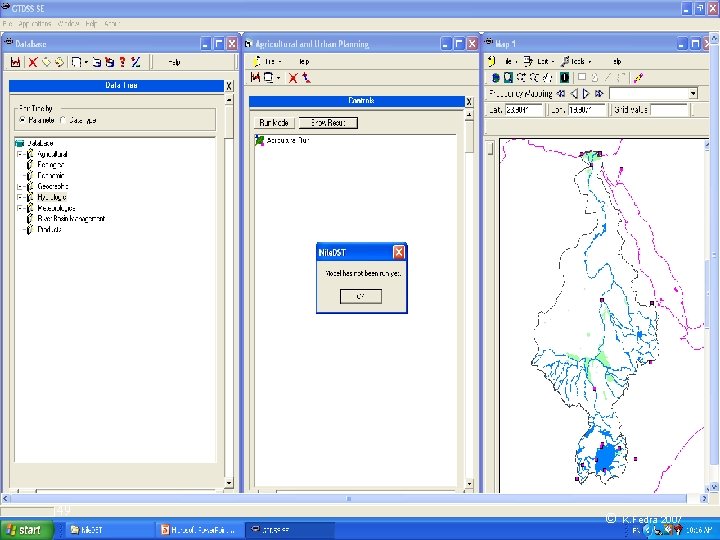

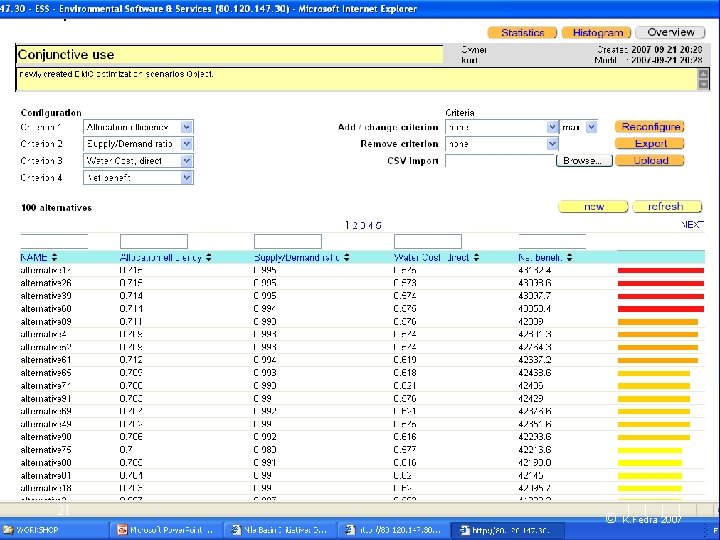

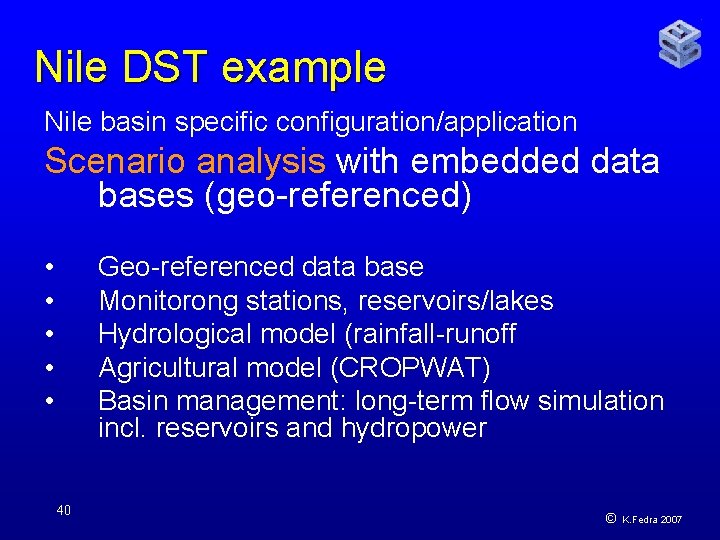

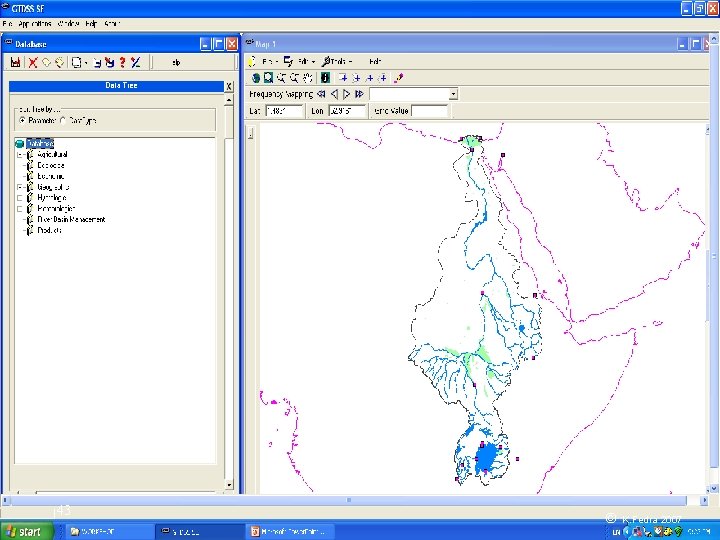

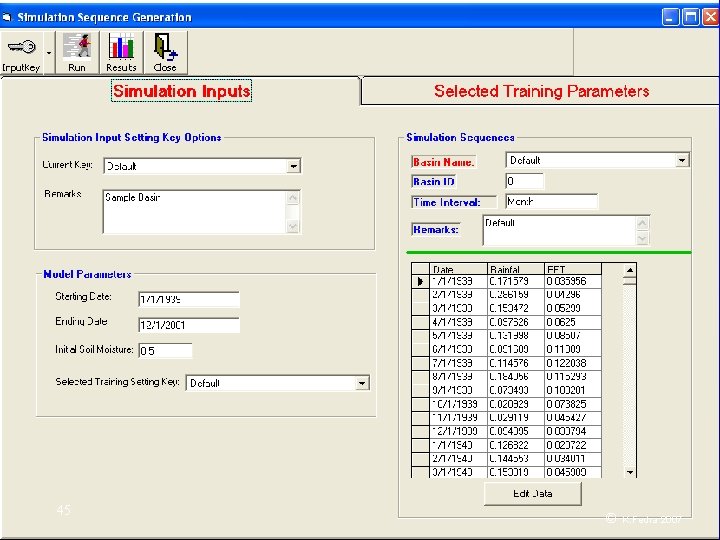

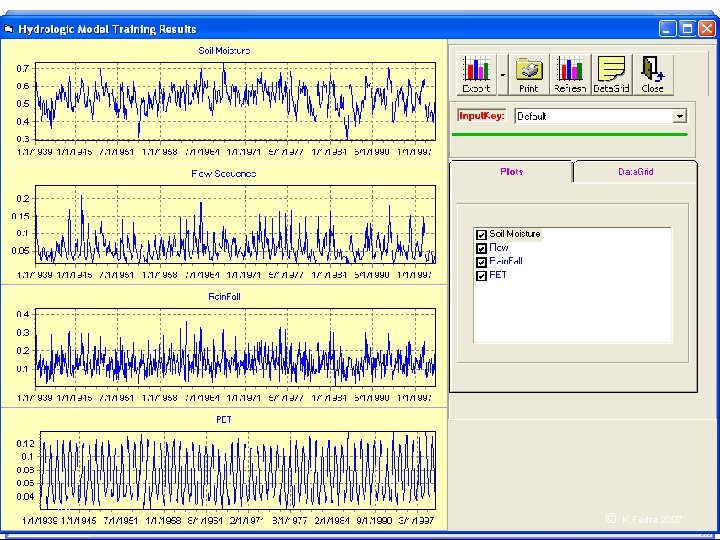

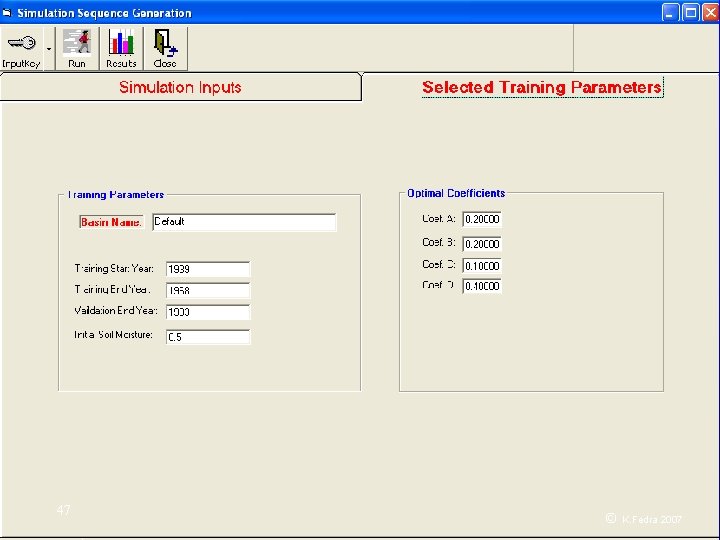

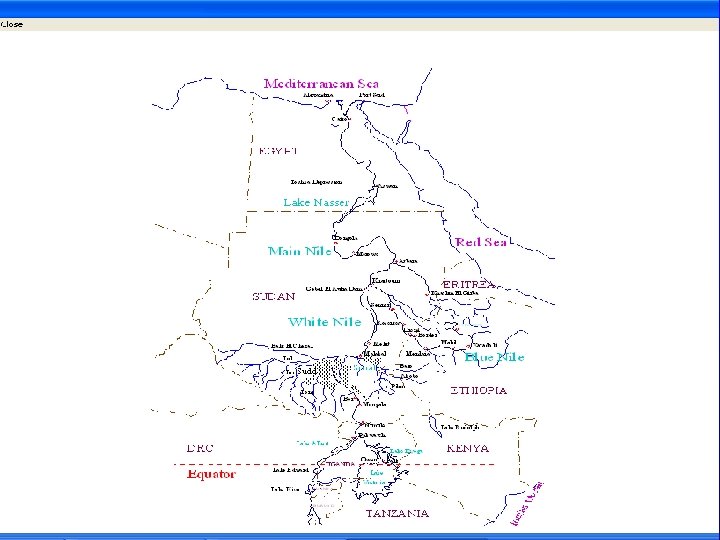

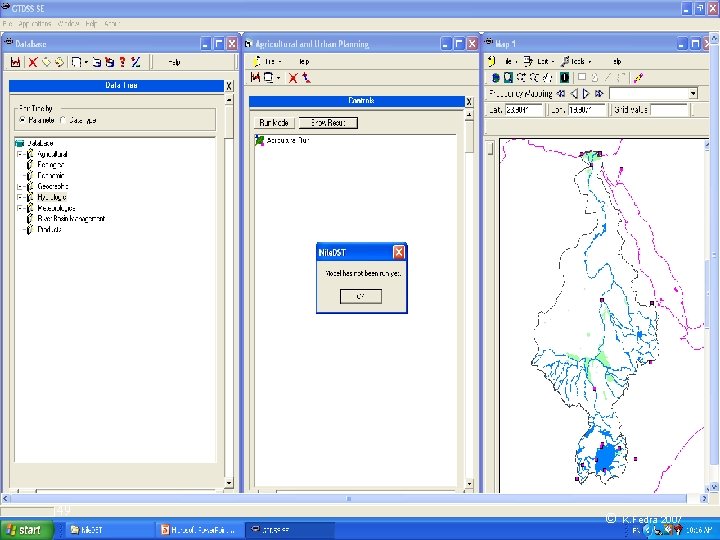

Nile DST example

Nile basin specific configuration/application: scenario analysis with embedded (geo-referenced) databases.
- Geo-referenced database: monitoring stations, reservoirs/lakes
- Hydrological model (rainfall-runoff)
- Agricultural model (CROPWAT)
- Basin management: long-term flow simulation including reservoirs and hydropower

Nile DST example

Advantages:
- Nile basin specific application and configuration
- Extensive geo-referenced database
- PC-based application

Limitations:
- NOT a DSS
- Difficult to configure (network, scenarios)


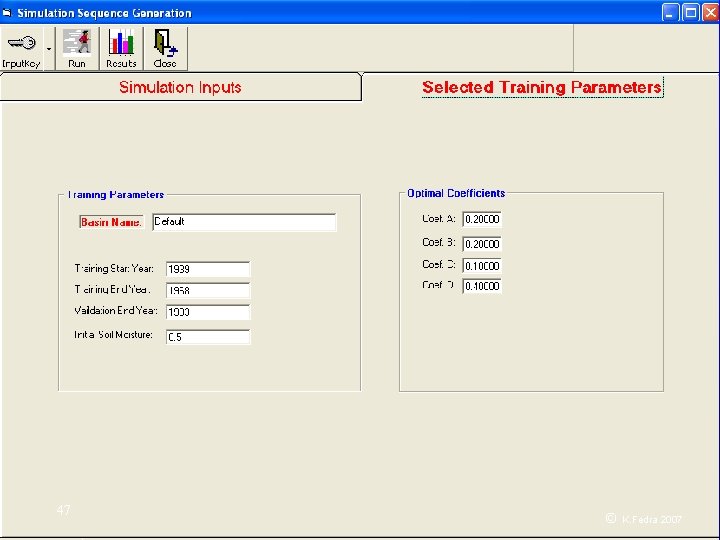
