NEW Oracle Real Application Clusters RAC and Oracle

- Slides: 49

NEW: Oracle Real Application Clusters (RAC) and Oracle Clusterware 11g Release 2

Markus Michalewicz, Product Manager, Oracle Clusterware

The preceding is intended to outline our general product direction. It is intended for information purposes only, and may not be incorporated into any contract. It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions. The development, release, and timing of any features or functionality described for Oracle’s products remains at the sole discretion of Oracle.

Agenda

- Overview
- Easier Installation
  - SSH Setup, prerequisite checks, and Fixup scripts
  - Automatic cluster time synchronization configuration
  - OCR & Voting Files can be stored in Oracle ASM
- Easier Management
  - Policy-based and Role-separated Cluster Management
  - Oracle EM-based Resource and Cluster Management
  - Grid Plug and Play (GPnP) and Grid Naming Service
  - Single Client Access Name (SCAN)
- Summary

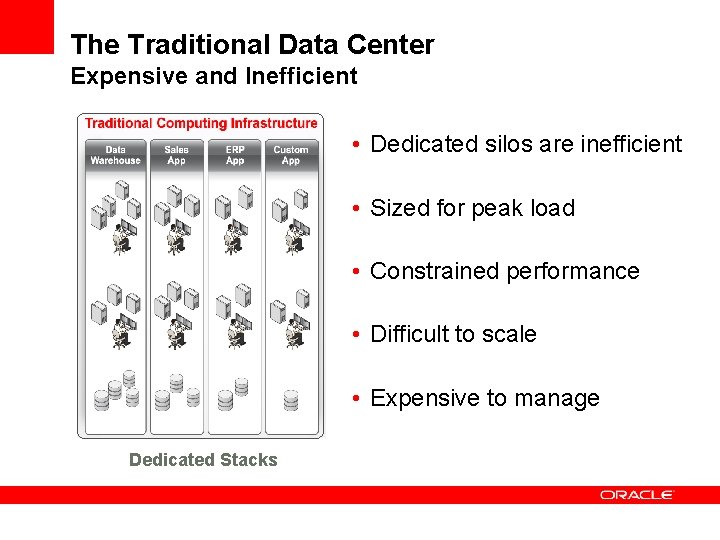

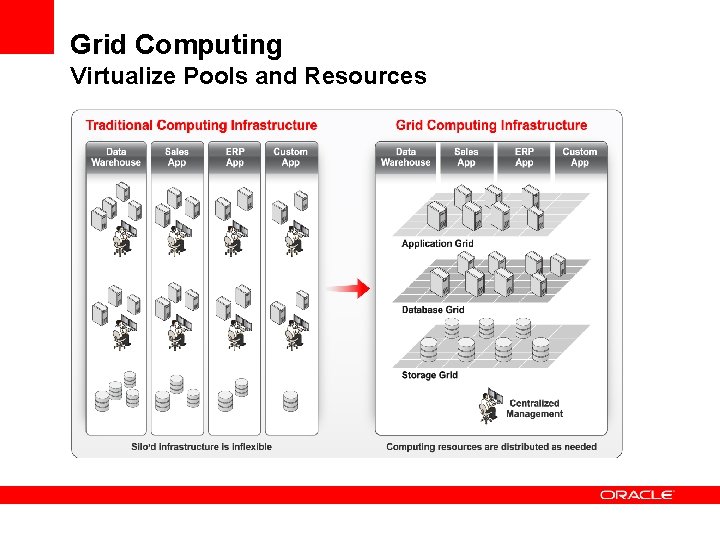

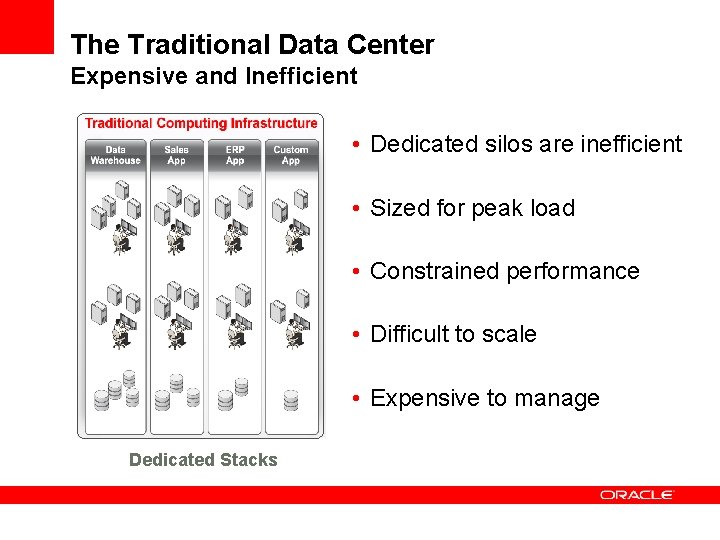

The Traditional Data Center: Expensive and Inefficient

- Dedicated silos are inefficient
- Sized for peak load
- Constrained performance
- Difficult to scale
- Expensive to manage

Diagram label: Dedicated Stacks

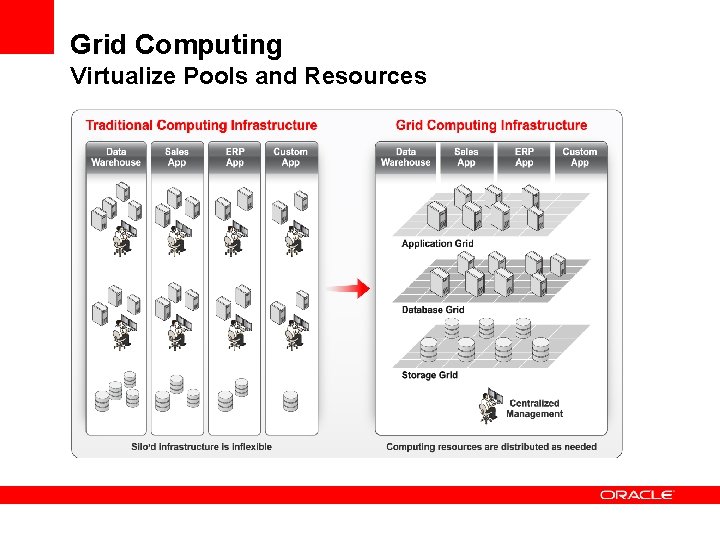

Grid Computing: Virtualize Pools and Resources

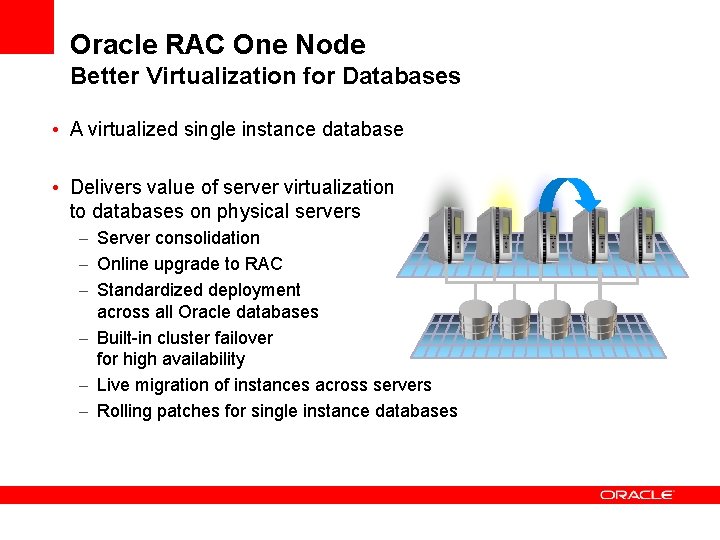

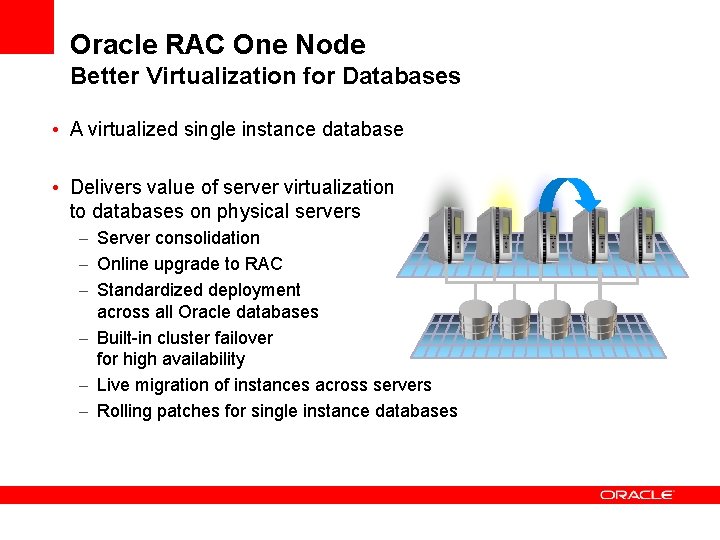

Oracle RAC One Node: Better Virtualization for Databases

- A virtualized single instance database
- Delivers value of server virtualization to databases on physical servers
  - Server consolidation
  - Online upgrade to RAC
  - Standardized deployment across all Oracle databases
  - Built-in cluster failover for high availability
  - Live migration of instances across servers
  - Rolling patches for single instance databases

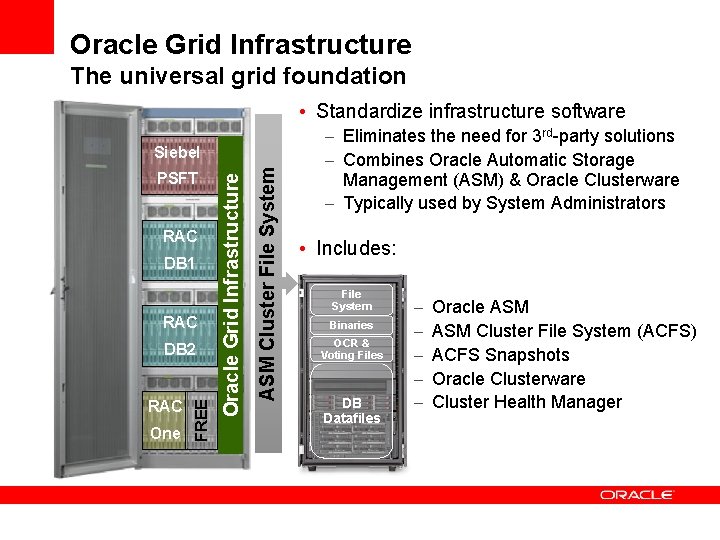

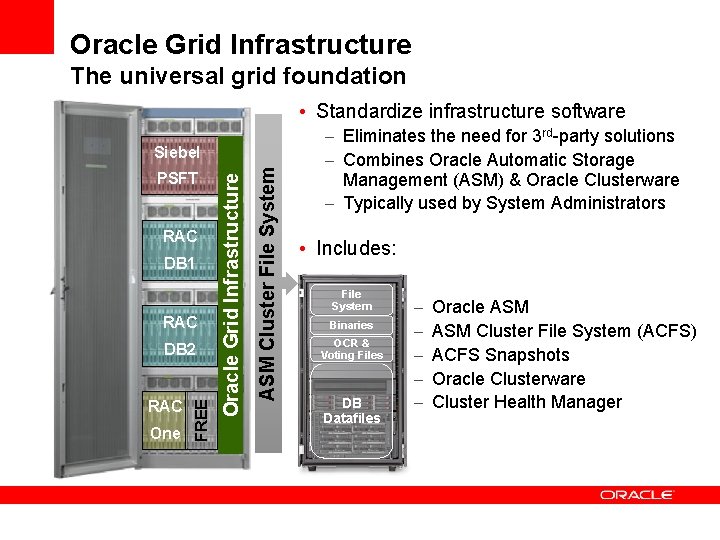

Oracle Grid Infrastructure: The universal grid foundation

- Standardize infrastructure software
  - Eliminates the need for 3rd-party solutions
  - Combines Oracle Automatic Storage Management (ASM) & Oracle Clusterware
  - Typically used by System Administrators
- Includes:
  - Oracle ASM Cluster File System (ACFS)
  - ACFS Snapshots
  - Oracle Clusterware Cluster Health Manager

Diagram labels: PSFT, RAC DB 1, RAC One, DB 2, Siebel, and a FREE server on Oracle Grid Infrastructure; ASM Cluster File System holding File System, Binaries, OCR & Voting Files, and DB Datafiles.

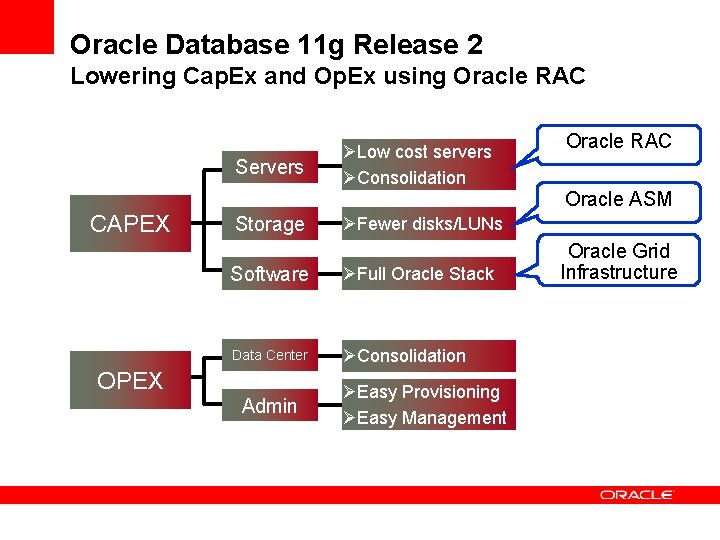

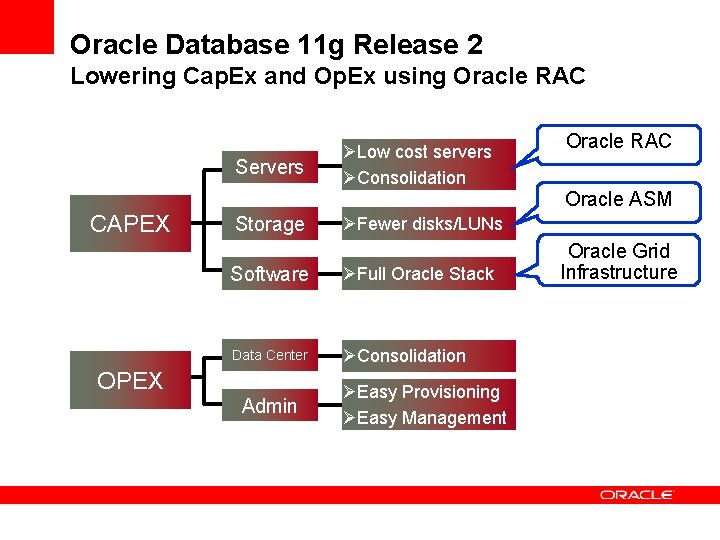

Oracle Database 11g Release 2: Lowering CapEx and OpEx using Oracle RAC

- CAPEX
  - Servers: low-cost servers, consolidation
  - Storage: fewer disks/LUNs (Oracle ASM)
  - Software: full Oracle stack
- OPEX
  - Data Center: consolidation
  - Admin: easy provisioning, easy management

Diagram labels: Oracle ASM, Oracle RAC, Oracle Grid Infrastructure

Easier Installation

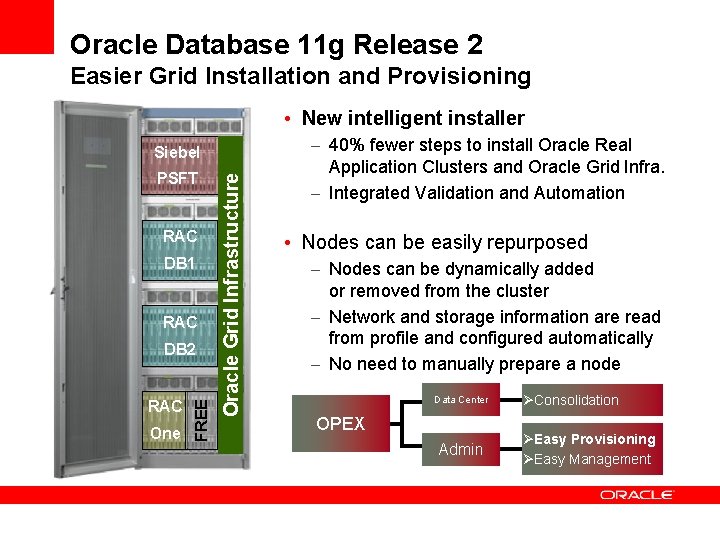

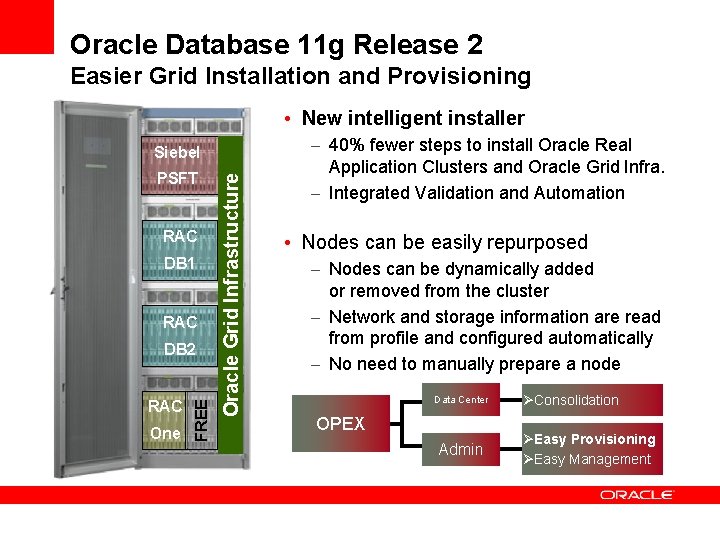

Oracle Database 11g Release 2: Easier Grid Installation and Provisioning

- New intelligent installer
  - 40% fewer steps to install Oracle Real Application Clusters and Oracle Grid Infrastructure
  - Integrated Validation and Automation
- Nodes can be easily repurposed
  - Nodes can be dynamically added or removed from the cluster
  - Network and storage information is read from a profile and configured automatically
  - No need to manually prepare a node

Diagram labels: PSFT, RAC DB 1, RAC One, DB 2, Siebel, and a FREE server on Oracle Grid Infrastructure. OPEX: Data Center (consolidation), Admin (easy provisioning, easy management).

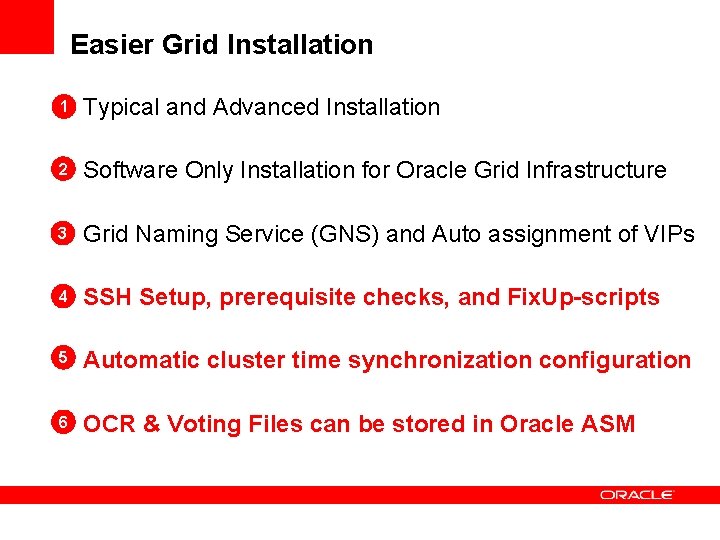

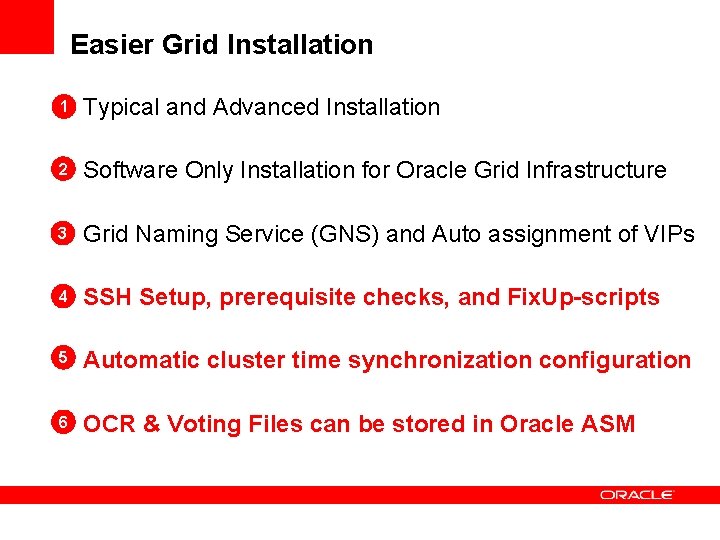

Easier Grid Installation

1. Typical and Advanced Installation
2. Software Only Installation for Oracle Grid Infrastructure
3. Grid Naming Service (GNS) and Auto assignment of VIPs
4. SSH Setup, prerequisite checks, and Fixup scripts
5. Automatic cluster time synchronization configuration
6. OCR & Voting Files can be stored in Oracle ASM

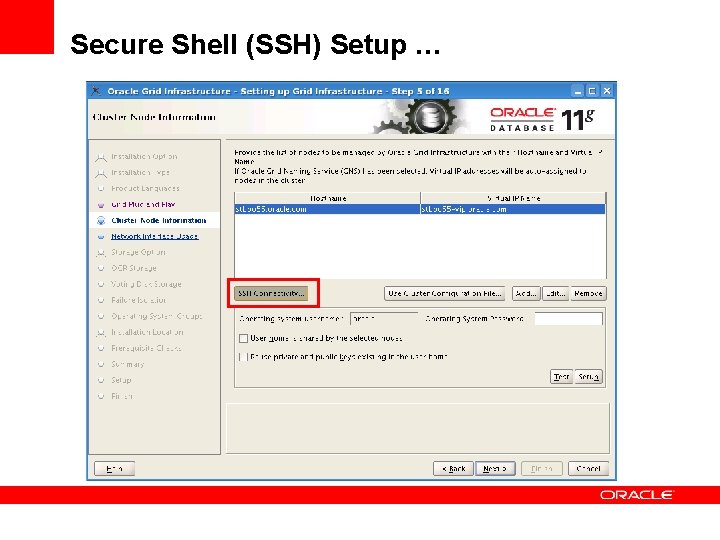

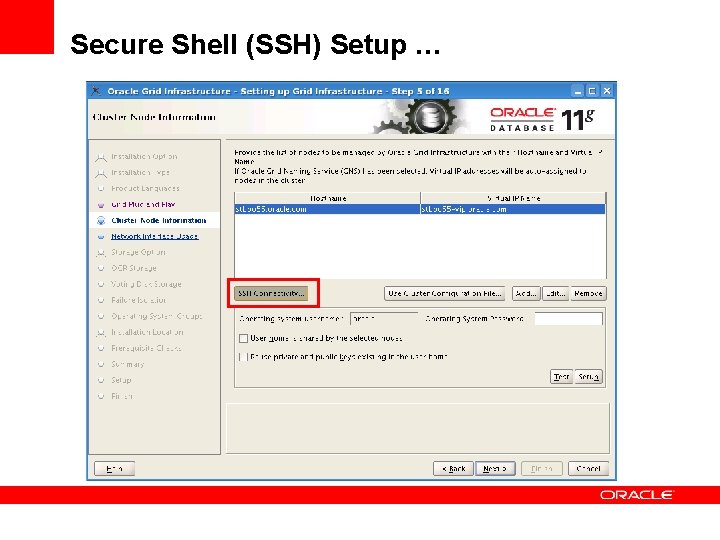

Secure Shell (SSH) Setup …

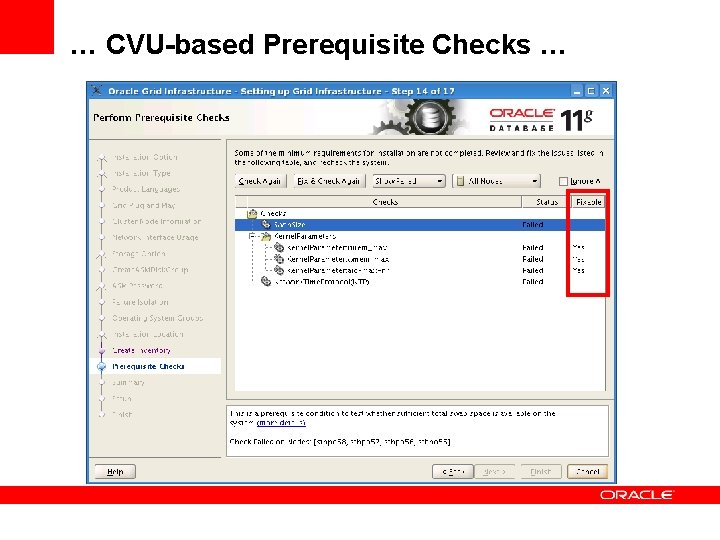

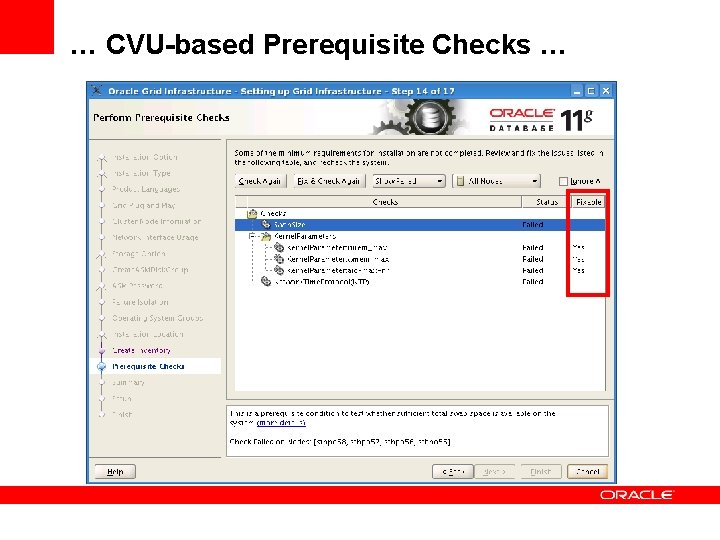

… CVU-based Prerequisite Checks …

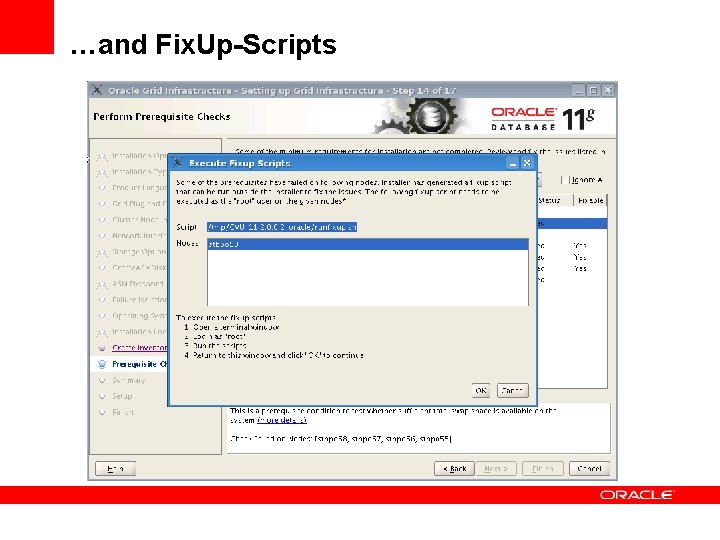

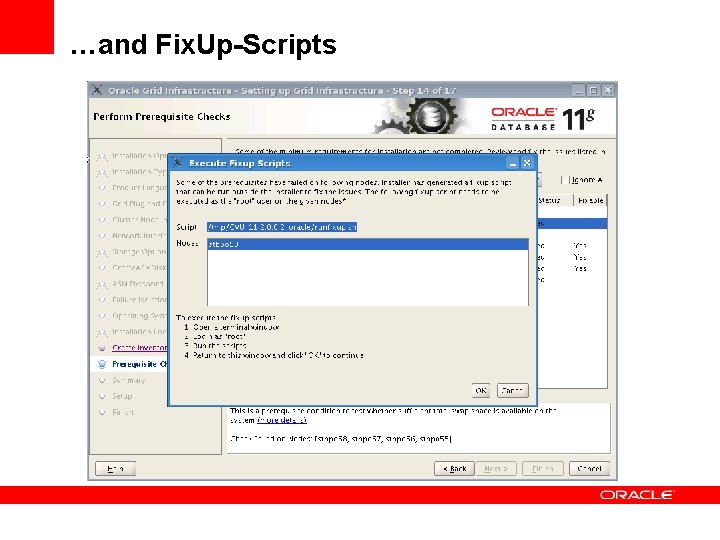

… and Fixup Scripts

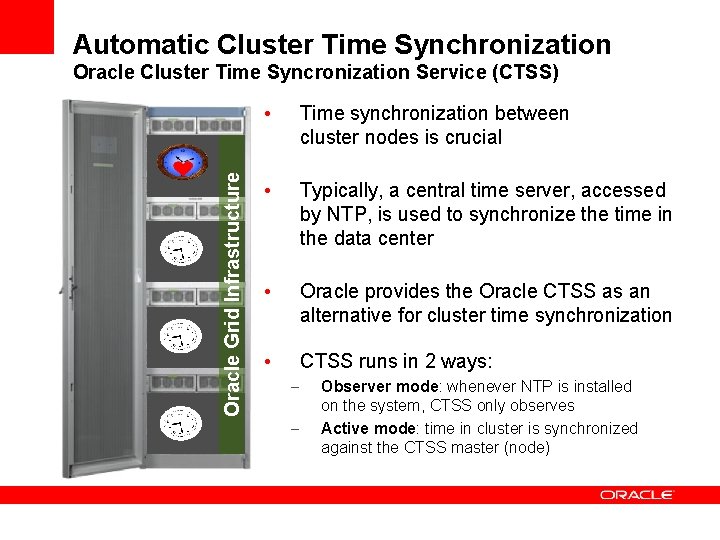

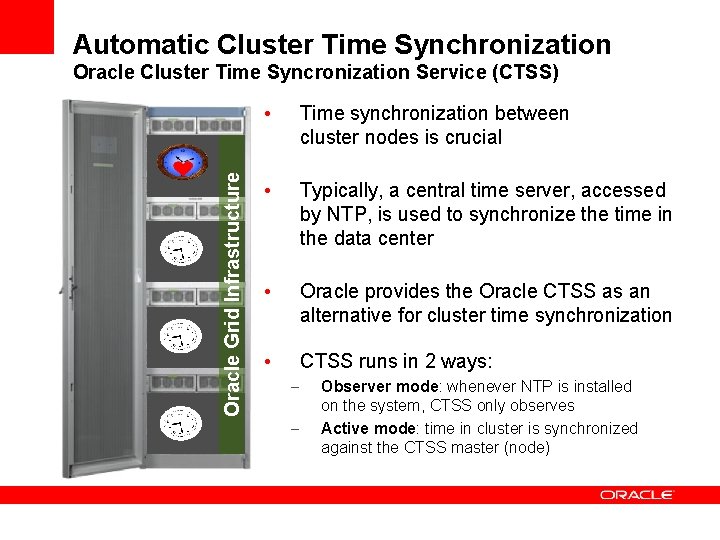

Automatic Cluster Time Synchronization: Oracle Cluster Time Synchronization Service (CTSS)

- Time synchronization between cluster nodes is crucial
- Typically, a central time server, accessed by NTP, is used to synchronize the time in the data center
- Oracle provides the Oracle CTSS as an alternative for cluster time synchronization
- CTSS runs in 2 modes:
  - Observer mode: whenever NTP is installed on the system, CTSS only observes
  - Active mode: time in the cluster is synchronized against the CTSS master (node)
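The two CTSS modes can be pictured with a small toy model. This is only a sketch of the decision described on the slide, not how CTSS is implemented; the names `CtssMode`, `ctss_mode`, and `offsets_to_master` are hypothetical, and clock values are plain floats in seconds.

```python
from __future__ import annotations

from enum import Enum


class CtssMode(Enum):
    OBSERVER = "observer"  # NTP is present, CTSS only observes
    ACTIVE = "active"      # no NTP, CTSS synchronizes against its master


def ctss_mode(ntp_installed: bool) -> CtssMode:
    """Pick the mode the slide describes for a given node setup."""
    return CtssMode.OBSERVER if ntp_installed else CtssMode.ACTIVE


def offsets_to_master(node_clocks: dict[str, float], master: str) -> dict[str, float]:
    """Seconds each non-master node would have to shift to match the master."""
    reference = node_clocks[master]
    return {
        node: reference - clock
        for node, clock in node_clocks.items()
        if node != master
    }


if __name__ == "__main__":
    clocks = {"node1": 100.0, "node2": 100.5, "node3": 99.0}  # illustrative values
    if ctss_mode(ntp_installed=False) is CtssMode.ACTIVE:
        print(offsets_to_master(clocks, master="node1"))
```

In observer mode the model would compute nothing, which mirrors "CTSS only observes".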

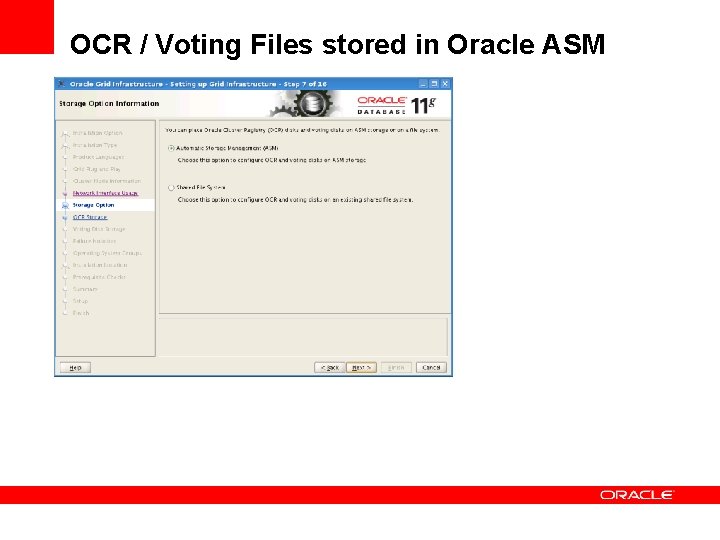

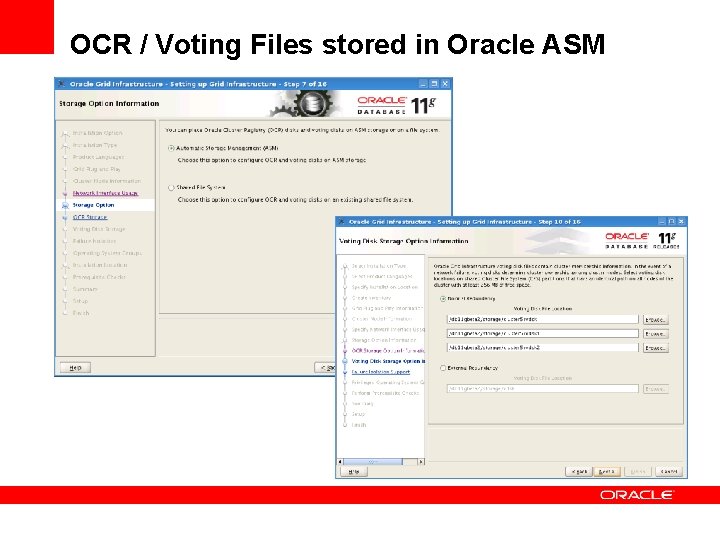

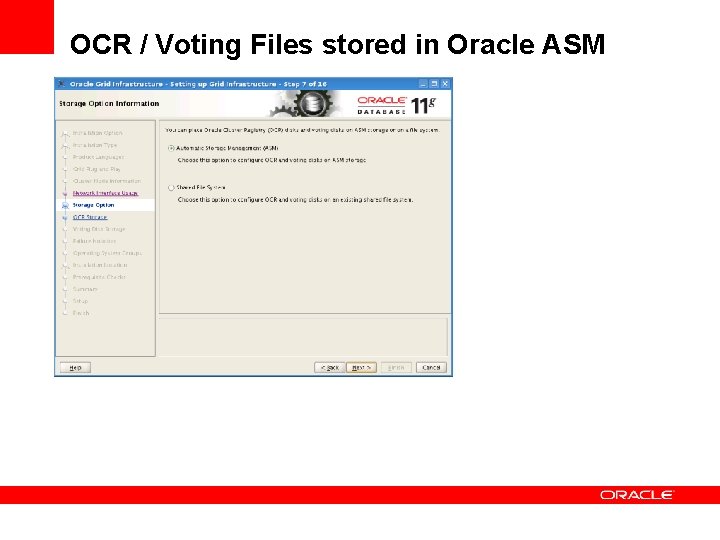

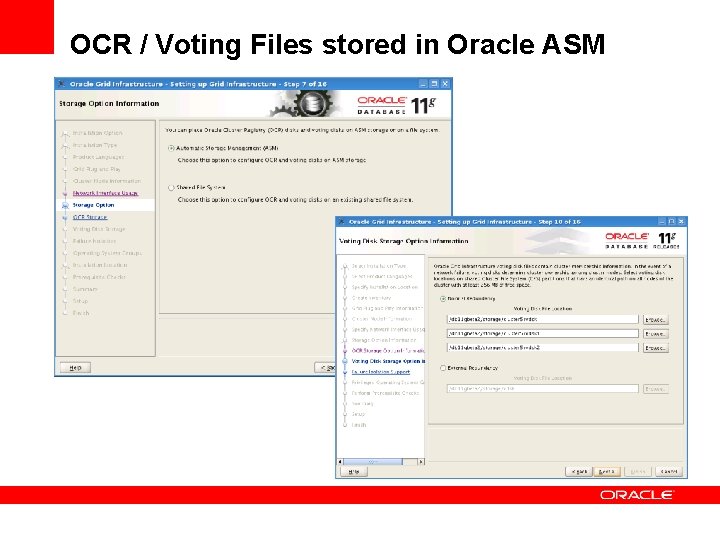

OCR / Voting Files stored in Oracle ASM

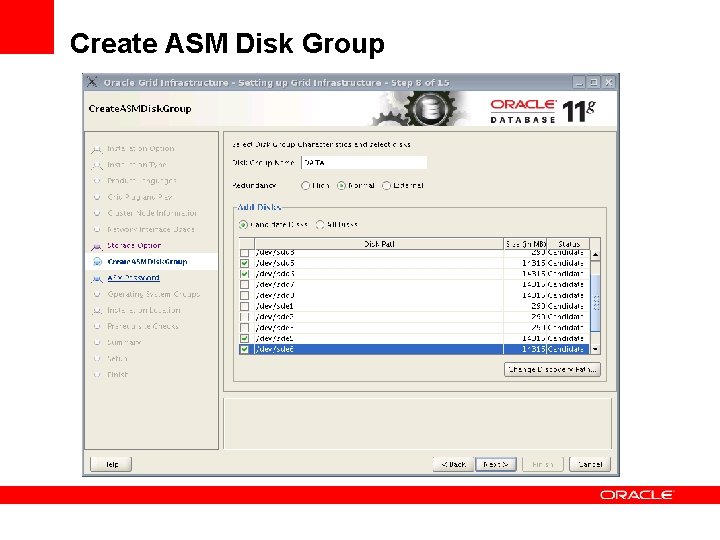

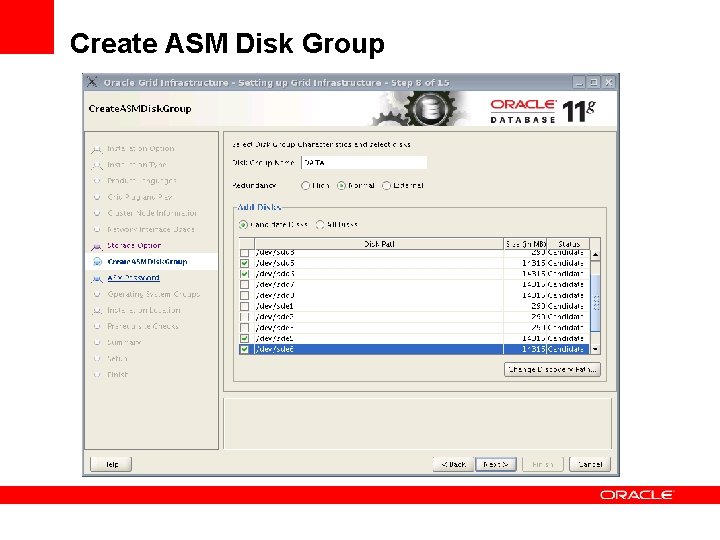

Create ASM Disk Group

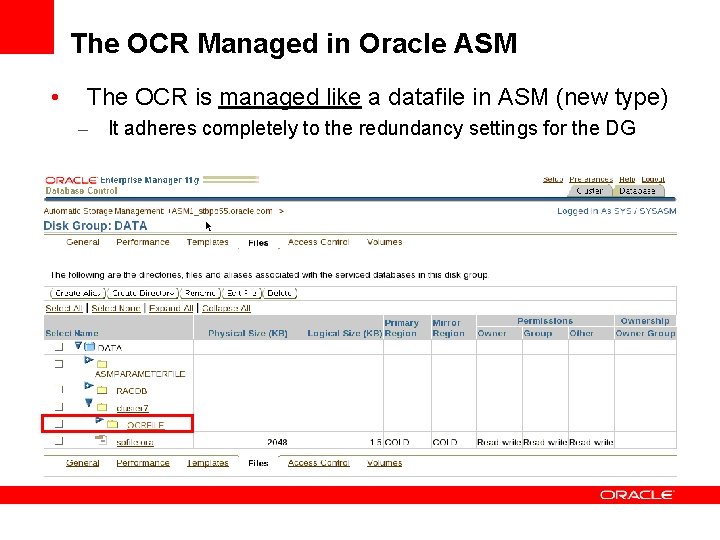

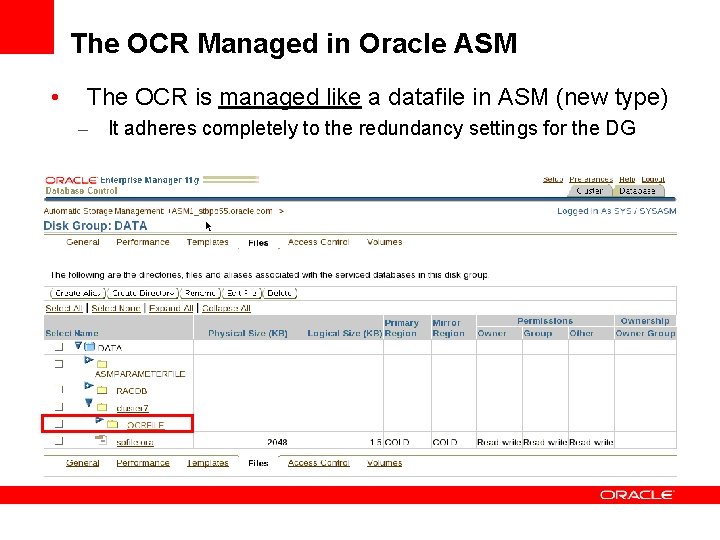

The OCR Managed in Oracle ASM

- The OCR is managed like a datafile in ASM (new type)
  - It adheres completely to the redundancy settings for the disk group (DG)

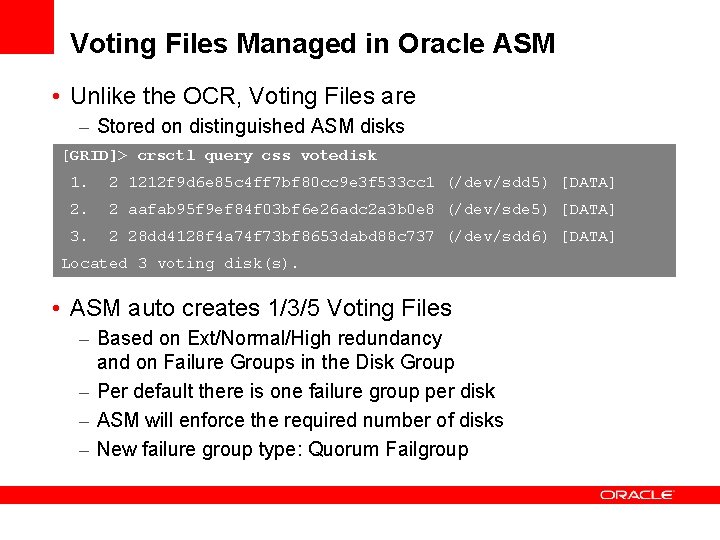

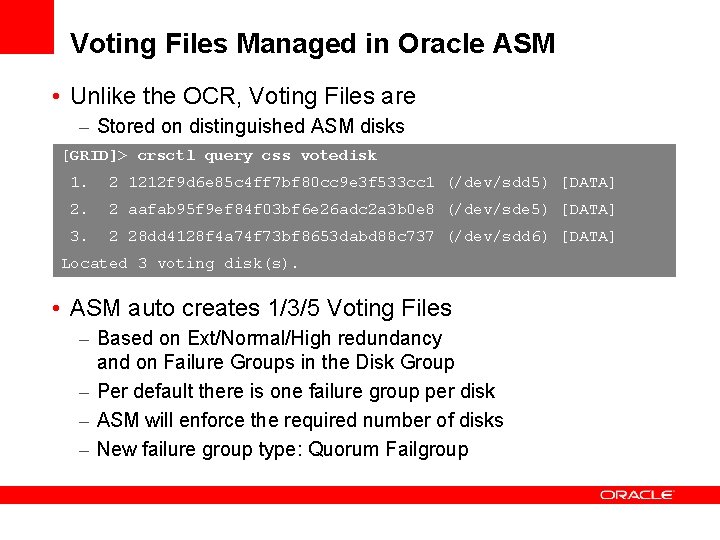

Voting Files Managed in Oracle ASM

- Unlike the OCR, Voting Files are
  - Stored on distinguished ASM disks

        [GRID]> crsctl query css votedisk
        1. 2 1212f9d6e85c4ff7bf80cc9e3f533cc1 (/dev/sdd5) [DATA]
        2. 2 aafab95f9ef84f03bf6e26adc2a3b0e8 (/dev/sde5) [DATA]
        3. 2 28dd4128f4a74f73bf8653dabd88c737 (/dev/sdd6) [DATA]
        Located 3 voting disk(s).

- ASM auto creates 1/3/5 Voting Files
  - Based on External/Normal/High redundancy and on Failure Groups in the Disk Group
  - By default there is one failure group per disk
  - ASM will enforce the required number of disks
  - New failure group type: Quorum Failgroup
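The 1/3/5 rule can be written down as a small lookup. The sketch below is illustrative only: the function names are hypothetical, and the check that a disk group needs at least as many failure groups as voting files is an assumption drawn from the "ASM will enforce the required number of disks" bullet.

```python
from __future__ import annotations

# Voting files ASM creates per disk group redundancy level (from the slide).
VOTING_FILES_BY_REDUNDANCY = {"external": 1, "normal": 3, "high": 5}


def voting_file_count(redundancy: str) -> int:
    """Number of voting files for a given disk group redundancy."""
    return VOTING_FILES_BY_REDUNDANCY[redundancy.lower()]


def has_enough_failure_groups(redundancy: str, failure_groups: list[str]) -> bool:
    """Assumed rule: one distinct failure group is needed per voting file."""
    return len(set(failure_groups)) >= voting_file_count(redundancy)


if __name__ == "__main__":
    # Hypothetical failure group names; by default each disk is its own group.
    print(voting_file_count("normal"))
    print(has_enough_failure_groups("normal", ["fg_sdd5", "fg_sde5", "fg_sdd6"]))
```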

Easier Management

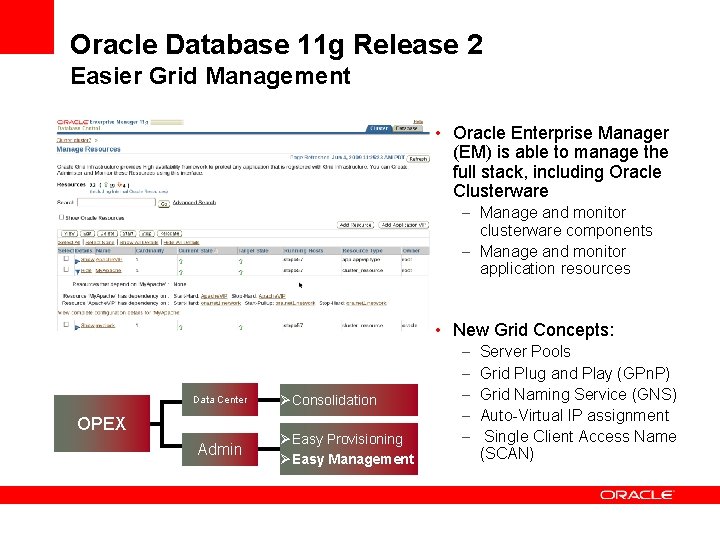

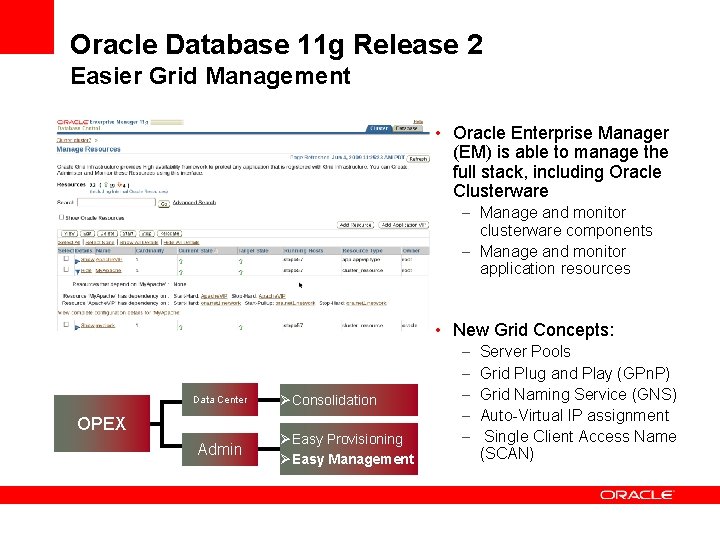

Oracle Database 11g Release 2: Easier Grid Management

- Oracle Enterprise Manager (EM) is able to manage the full stack, including Oracle Clusterware
  - Manage and monitor clusterware components
  - Manage and monitor application resources
- New Grid Concepts:
  - Server Pools
  - Grid Plug and Play (GPnP)
  - Grid Naming Service (GNS)
  - Auto-Virtual IP assignment
  - Single Client Access Name (SCAN)

Diagram labels: OPEX: Data Center (consolidation), Admin (easy provisioning, easy management).

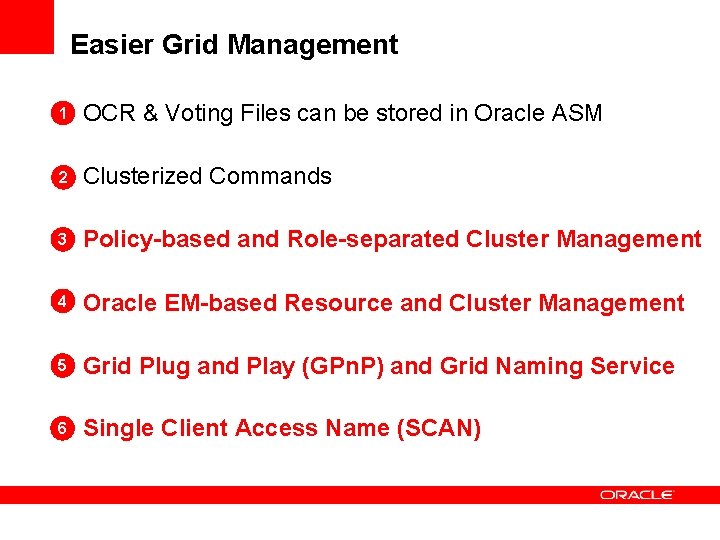

Easier Grid Management

1. OCR & Voting Files can be stored in Oracle ASM
2. Clusterized Commands
3. Policy-based and Role-separated Cluster Management
4. Oracle EM-based Resource and Cluster Management
5. Grid Plug and Play (GPnP) and Grid Naming Service
6. Single Client Access Name (SCAN)

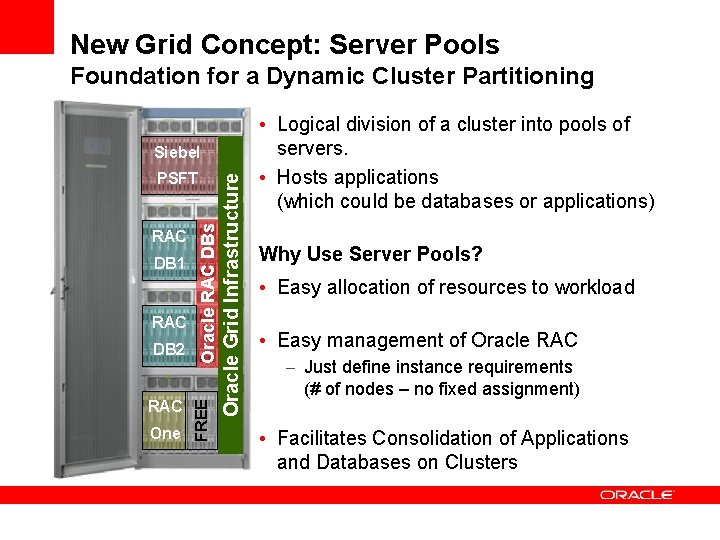

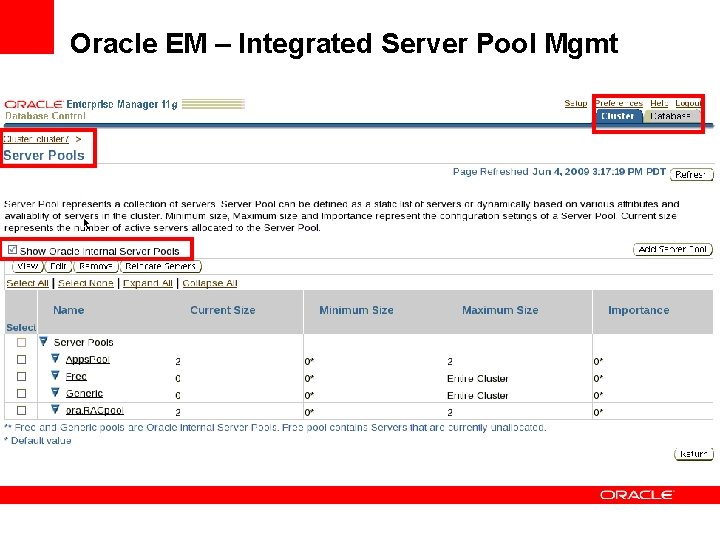

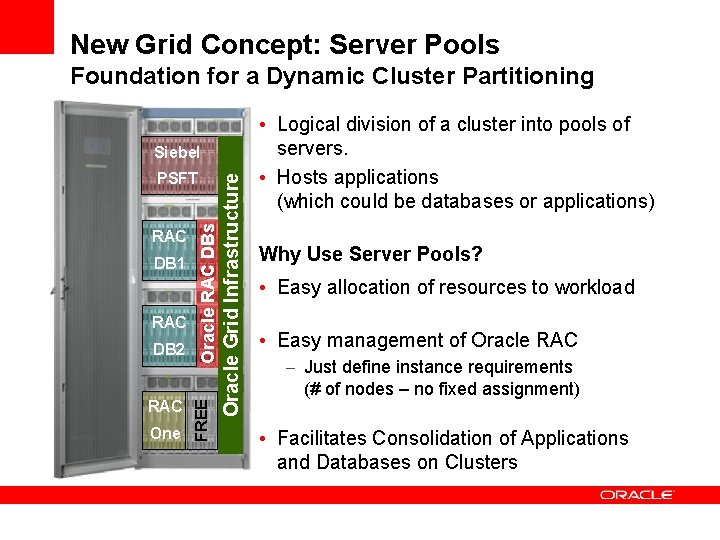

New Grid Concept: Server Pools
Foundation for a Dynamic Cluster Partitioning

- Logical division of a cluster into pools of servers
- Hosts applications (which could be databases or applications)

Why Use Server Pools?

- Easy allocation of resources to workload
- Easy management of Oracle RAC
  - Just define instance requirements (# of nodes, no fixed assignment)
- Facilitates consolidation of applications and databases on clusters

Diagram labels: PSFT, RAC DB 1, RAC DB 2, RAC One, Siebel, and a FREE server; Oracle RAC DBs on Oracle Grid Infrastructure.

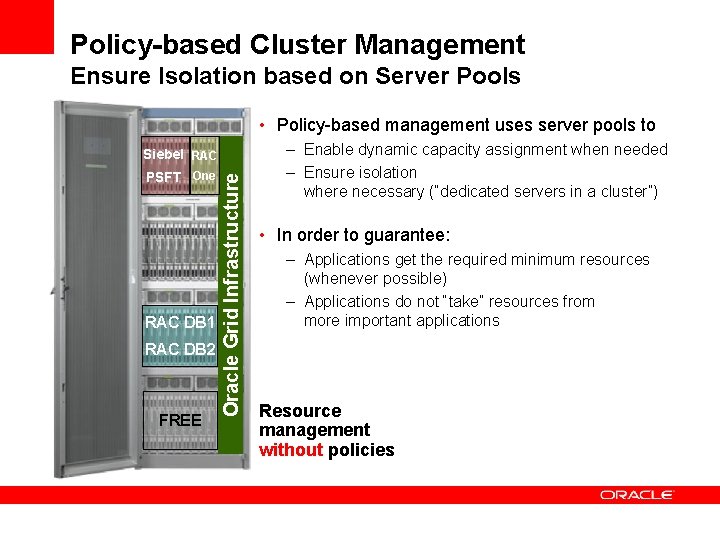

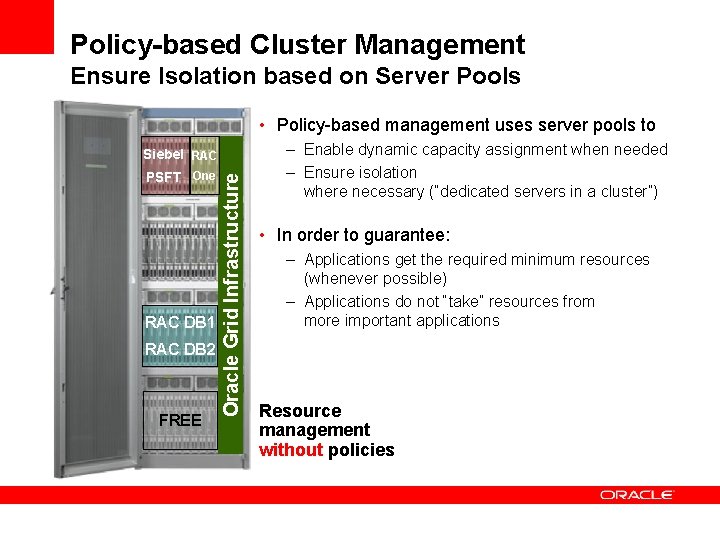

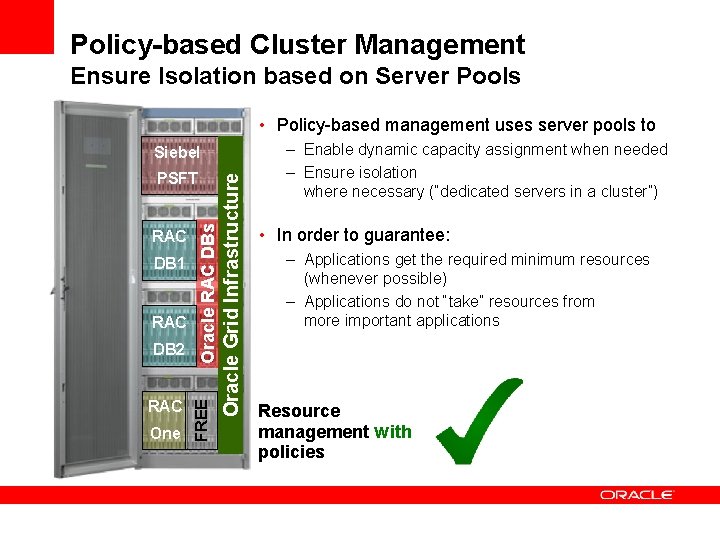

Policy-based Cluster Management: Ensure Isolation based on Server Pools

- Policy-based management uses server pools to
  - Enable dynamic capacity assignment when needed
  - Ensure isolation where necessary (“dedicated servers in a cluster”)
- In order to guarantee:
  - Applications get the required minimum resources (whenever possible)
  - Applications do not “take” resources from more important applications

Diagram caption: Resource management without policies

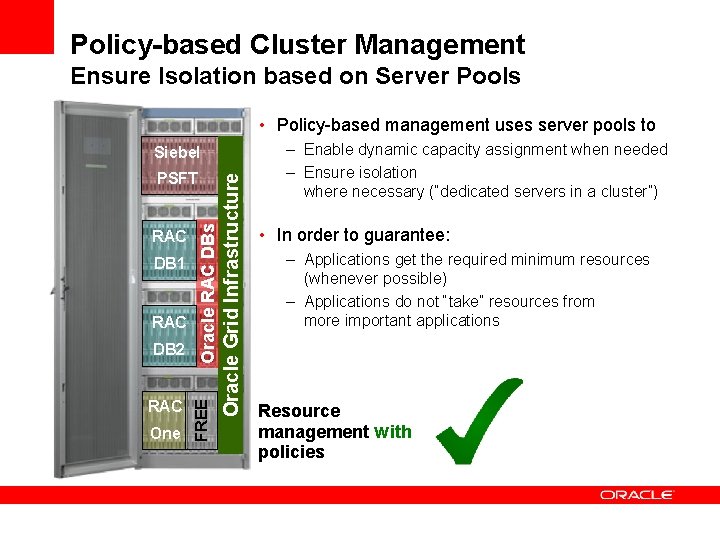

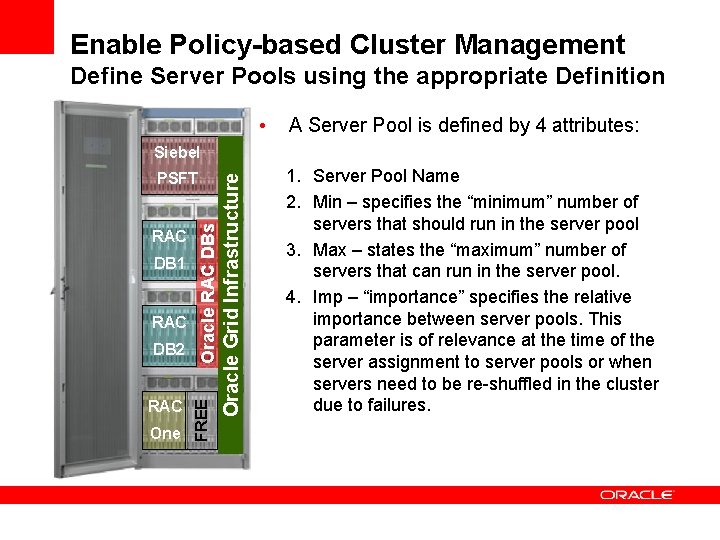

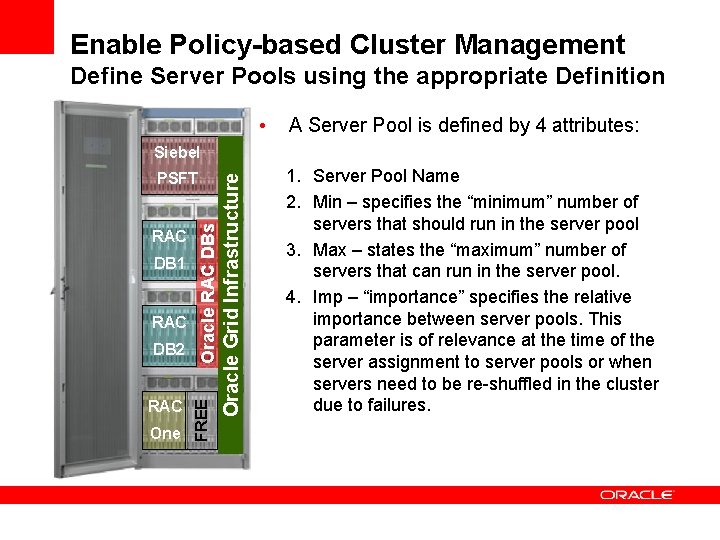

Enable Policy-based Cluster Management: Define Server Pools using the appropriate Definition

- A Server Pool is defined by 4 attributes:
  1. Server Pool Name
  2. Min: specifies the “minimum” number of servers that should run in the server pool
  3. Max: states the “maximum” number of servers that can run in the server pool
  4. Imp: “importance” specifies the relative importance between server pools. This parameter is relevant when servers are assigned to server pools or when servers need to be re-shuffled in the cluster due to failures.

Diagram labels: PSFT, RAC DB 1, RAC DB 2, RAC One, Siebel, and a FREE server; Oracle RAC DBs on Oracle Grid Infrastructure.
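To make the interplay of Min, Max, and Imp concrete, here is a simplified model of server assignment. It is a sketch under stated assumptions, not Oracle Clusterware's actual placement logic: the two-pass order (satisfy every pool's minimum in descending importance, then fill up to the maximum) and all names (`ServerPool`, `assign_servers`, the pool and node names) are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ServerPool:
    name: str
    min_size: int      # "Min" attribute
    max_size: int      # "Max" attribute
    importance: int    # "Imp" attribute, higher means more important
    servers: list[str] = field(default_factory=list)


def assign_servers(pools: list[ServerPool], servers: list[str]) -> list[str]:
    """Distribute servers over pools; return the servers left unassigned."""
    free = list(servers)
    ranked = sorted(pools, key=lambda p: p.importance, reverse=True)

    # Pass 1 (assumed): meet each pool's minimum, most important pool first.
    for pool in ranked:
        while free and len(pool.servers) < pool.min_size:
            pool.servers.append(free.pop(0))

    # Pass 2 (assumed): hand out remaining servers up to each pool's maximum.
    for pool in ranked:
        while free and len(pool.servers) < pool.max_size:
            pool.servers.append(free.pop(0))

    return free


if __name__ == "__main__":
    pools = [
        ServerPool("backoffice", min_size=1, max_size=2, importance=5),
        ServerPool("frontoffice", min_size=2, max_size=3, importance=10),
    ]
    leftover = assign_servers(pools, ["n1", "n2", "n3", "n4"])
    for pool in pools:
        print(pool.name, pool.servers)
    print("free:", leftover)
```

With four servers, the more important pool reaches its maximum before the less important one grows past its minimum, which is the kind of guarantee the importance attribute is meant to express.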

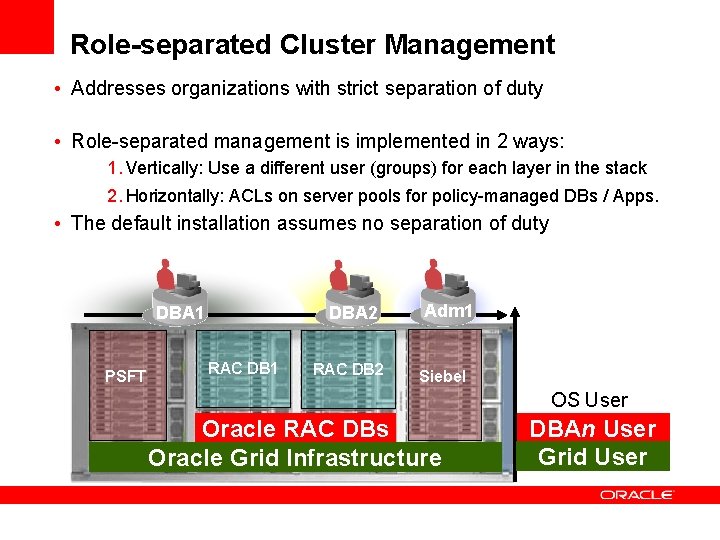

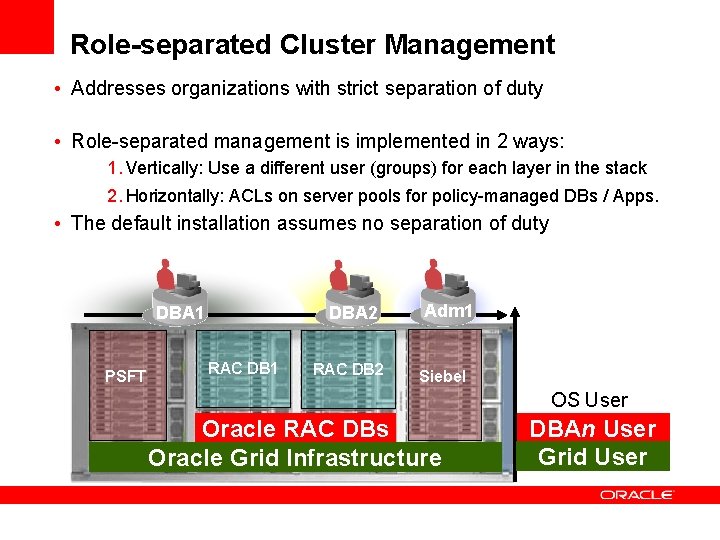

Role-separated Cluster Management

- Addresses organizations with strict separation of duty
- Role-separated management is implemented in 2 ways:
  1. Vertically: Use a different user (groups) for each layer in the stack
  2. Horizontally: ACLs on server pools for policy-managed DBs / Apps
- The default installation assumes no separation of duty

Diagram labels: DBA 1, DBA 2, Adm 1, OS User, DBAn User, Grid User; PSFT, RAC DB 1, RAC DB 2, Siebel; Oracle RAC DBs, Oracle Grid Infrastructure.

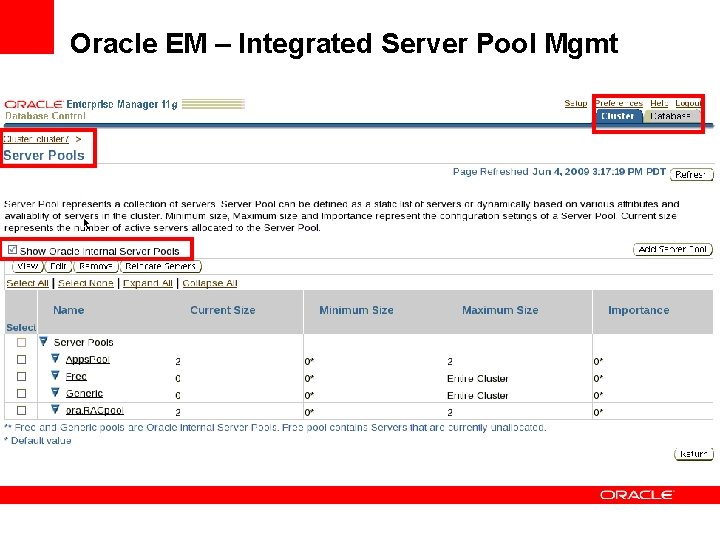

Oracle EM – Integrated Server Pool Mgmt

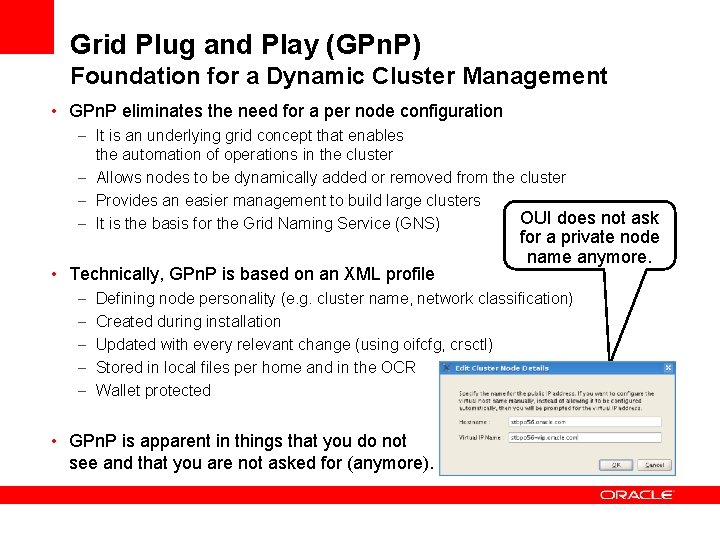

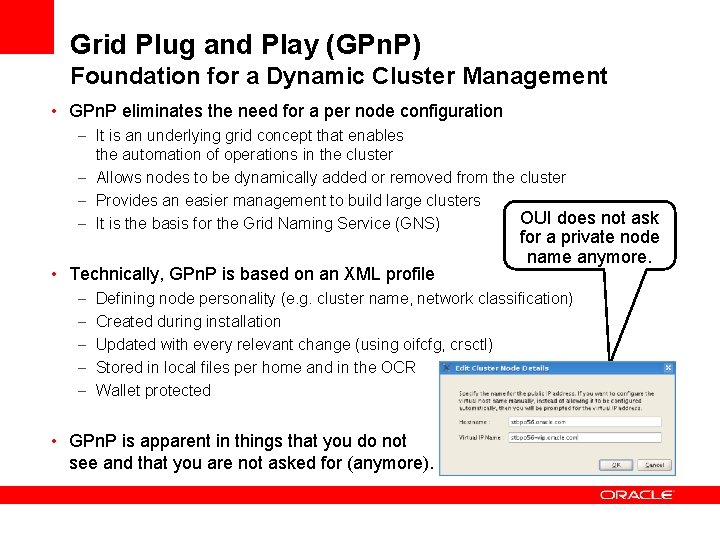

Grid Plug and Play (GPnP): Foundation for a Dynamic Cluster Management

- GPnP eliminates the need for a per-node configuration
  - It is an underlying grid concept that enables the automation of operations in the cluster
  - Allows nodes to be dynamically added or removed from the cluster
  - Provides easier management for building large clusters
  - It is the basis for the Grid Naming Service (GNS)
- Technically, GPnP is based on an XML profile
  - Defining node personality (e.g. cluster name, network classification)
  - Created during installation
  - Updated with every relevant change (using oifcfg, crsctl)
  - Stored in local files per home and in the OCR
  - Wallet protected
- GPnP is apparent in things that you do not see and that you are not asked for (anymore).

Callout: OUI does not ask for a private node name anymore.

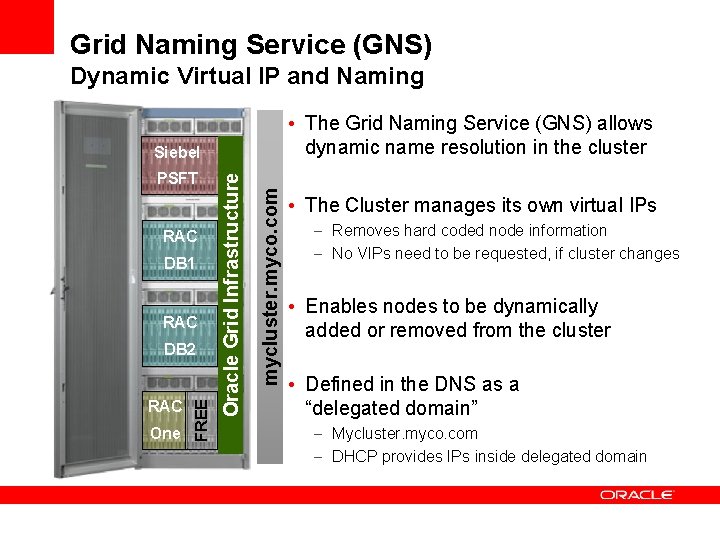

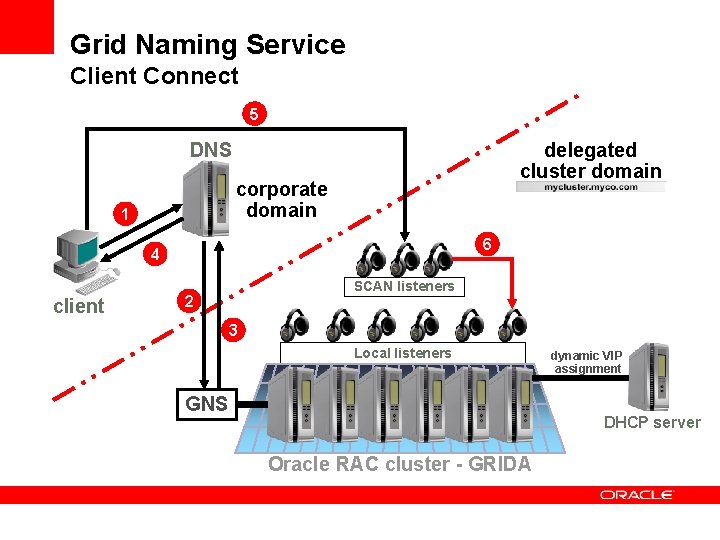

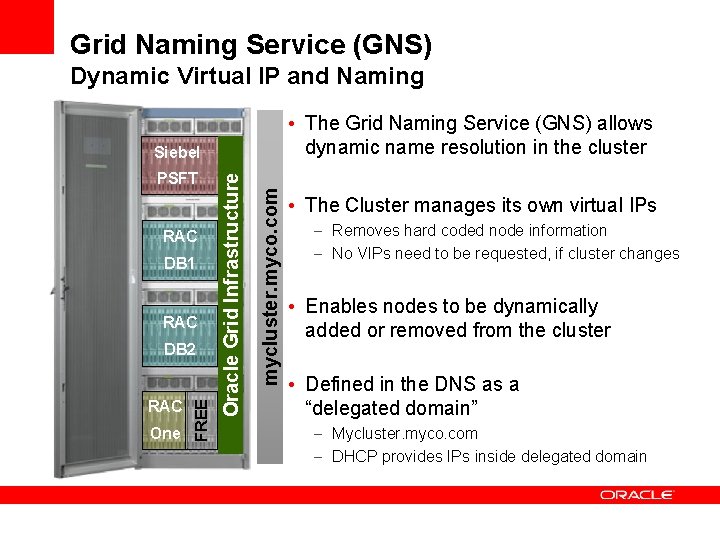

Grid Naming Service (GNS): Dynamic Virtual IP and Naming

- The Grid Naming Service (GNS) allows dynamic name resolution in the cluster
- The cluster manages its own virtual IPs
  - Removes hard-coded node information
  - No VIPs need to be requested if the cluster changes
- Enables nodes to be dynamically added or removed from the cluster
- Defined in the DNS as a “delegated domain”
  - mycluster.myco.com
  - DHCP provides IPs inside the delegated domain

Diagram labels: mycluster.myco.com; PSFT, RAC DB 1, RAC One, DB 2, Siebel, and a FREE server on Oracle Grid Infrastructure.

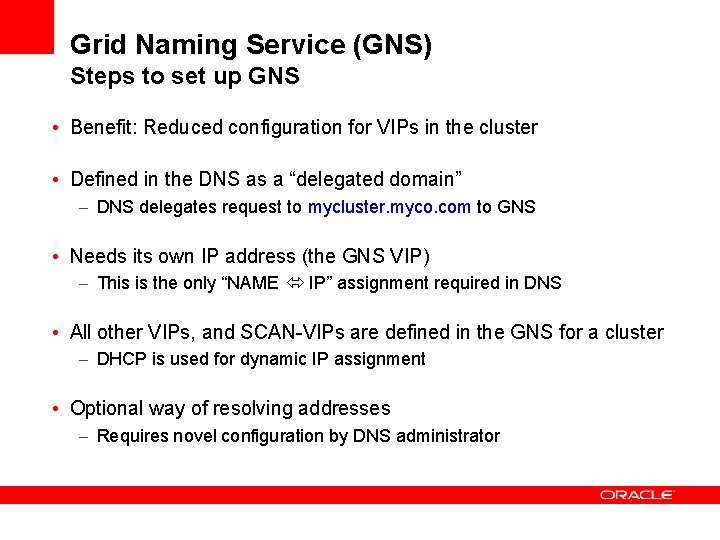

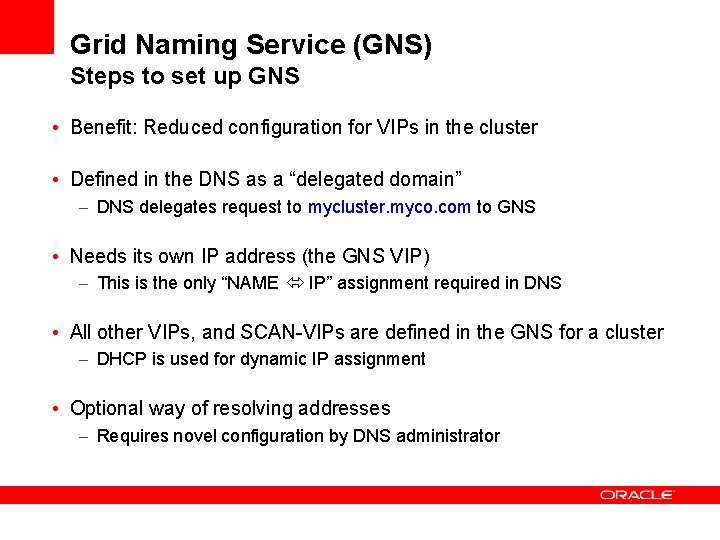

Grid Naming Service (GNS): Steps to set up GNS

- Benefit: Reduced configuration for VIPs in the cluster
- Defined in the DNS as a “delegated domain”
  - DNS delegates requests for mycluster.myco.com to GNS
- Needs its own IP address (the GNS VIP)
  - This is the only “NAME IP” assignment required in DNS
- All other VIPs and SCAN VIPs are defined in the GNS for a cluster
  - DHCP is used for dynamic IP assignment
- Optional way of resolving addresses
  - Requires novel configuration by DNS administrator
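The delegation rule can be illustrated with a few lines of Python. This is a conceptual sketch only, not a DNS implementation: `resolver_for` is a hypothetical helper, and the IP addresses are placeholders from the documentation range 192.0.2.0/24 rather than values from the slides.

```python
from __future__ import annotations


def resolver_for(hostname: str, delegated_domain: str,
                 gns_vip: str, corporate_dns: str) -> str:
    """Return the server that answers for a name.

    Names inside the delegated cluster domain are handed to the GNS VIP;
    everything else stays with the corporate DNS.
    """
    name = hostname.rstrip(".").lower()
    domain = delegated_domain.rstrip(".").lower()
    if name == domain or name.endswith("." + domain):
        return gns_vip
    return corporate_dns


if __name__ == "__main__":
    GNS_VIP = "192.0.2.10"        # placeholder: the single address DNS must know
    CORPORATE_DNS = "192.0.2.1"   # placeholder
    for host in ("node1-vip.mycluster.myco.com", "www.myco.com"):
        print(host, "->", resolver_for(host, "mycluster.myco.com",
                                       GNS_VIP, CORPORATE_DNS))
```

The suffix check includes the leading dot so that a name such as `notmycluster.myco.com` is not mistaken for a member of the delegated domain.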

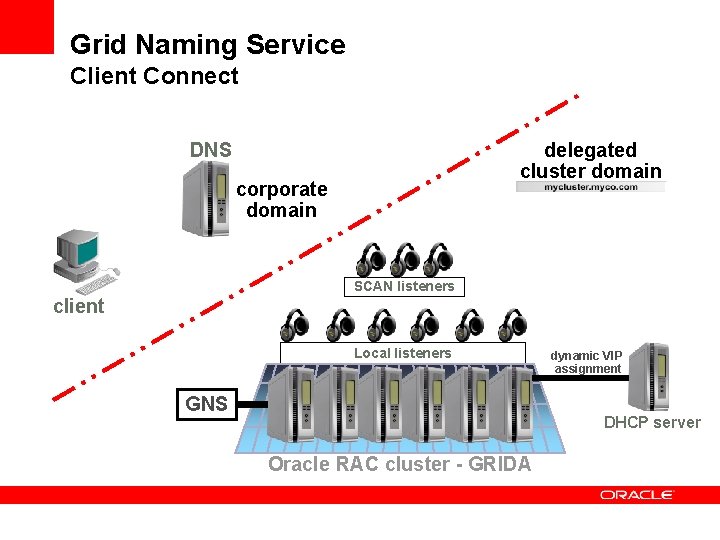

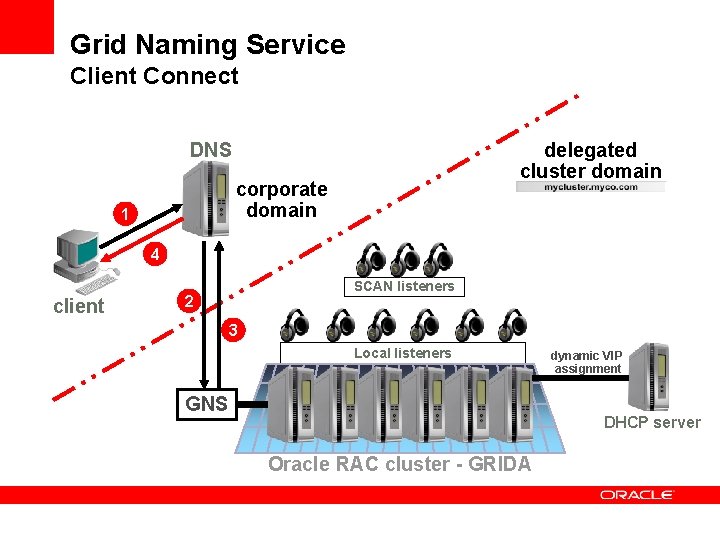

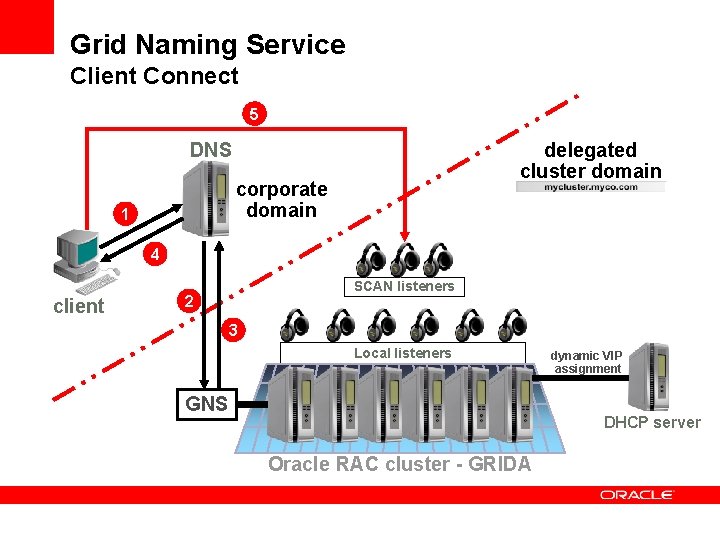

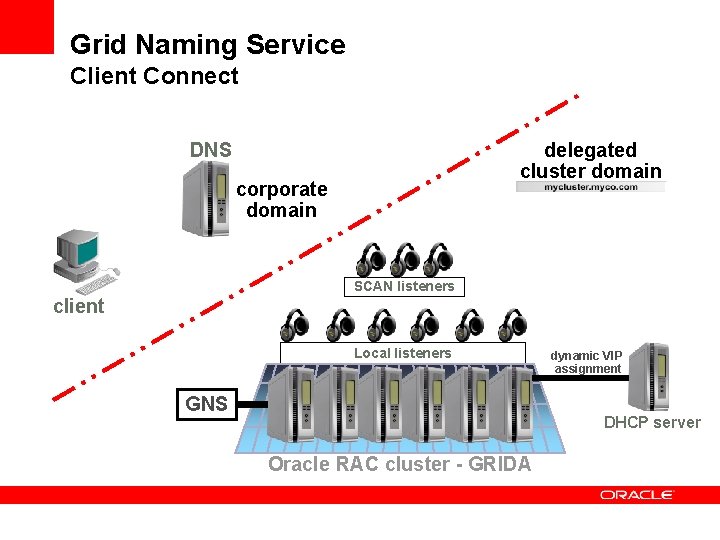

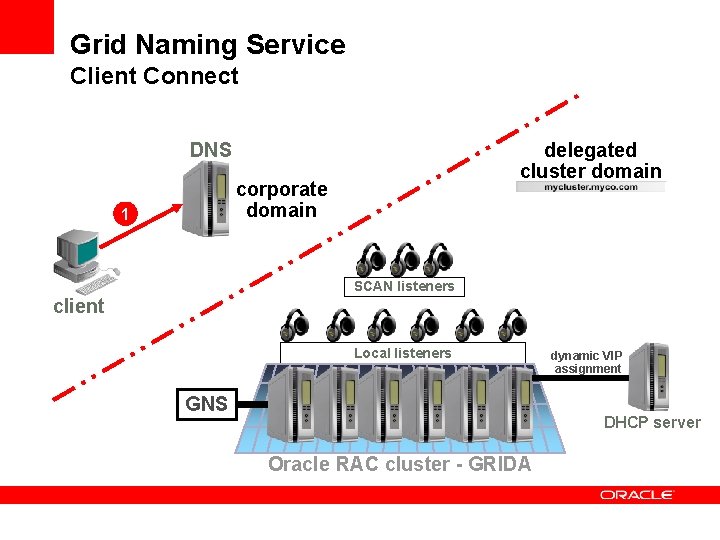

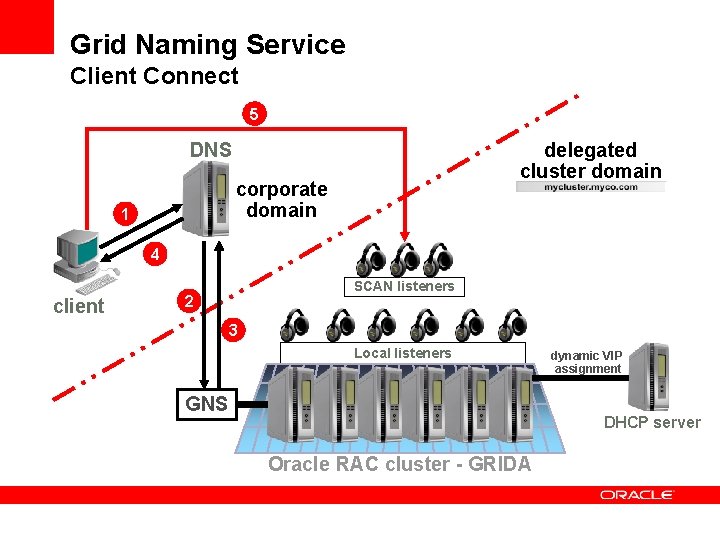

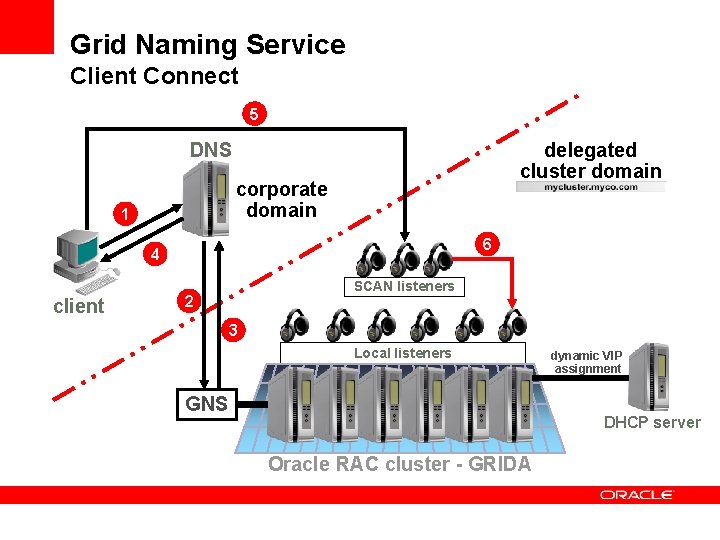

Grid Naming Service: Client Connect

Diagram labels: client, DNS (corporate domain), delegated cluster domain, GNS (dynamic VIP assignment), DHCP server, SCAN listeners, local listeners, Oracle RAC cluster GRIDA.

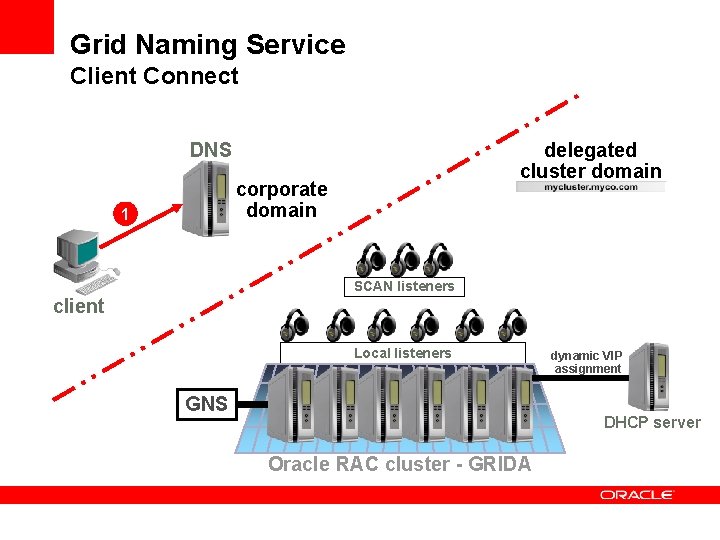

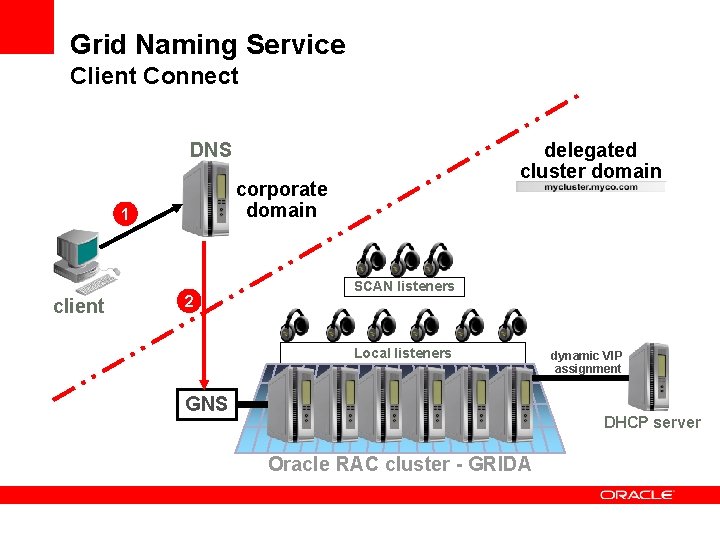

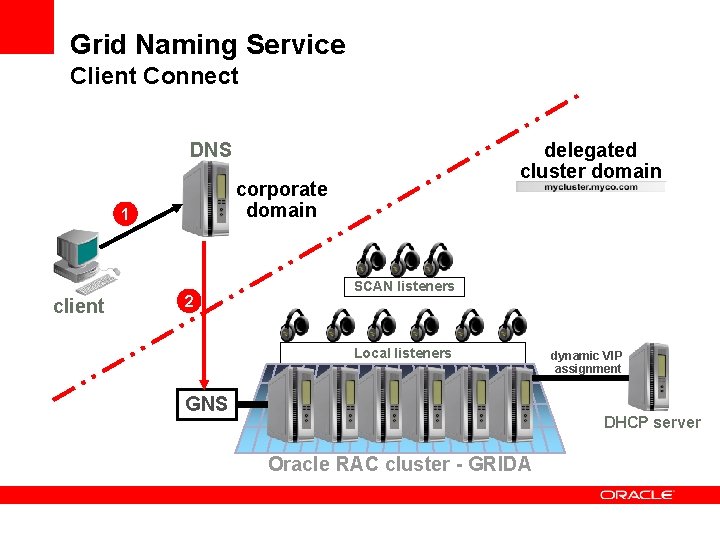

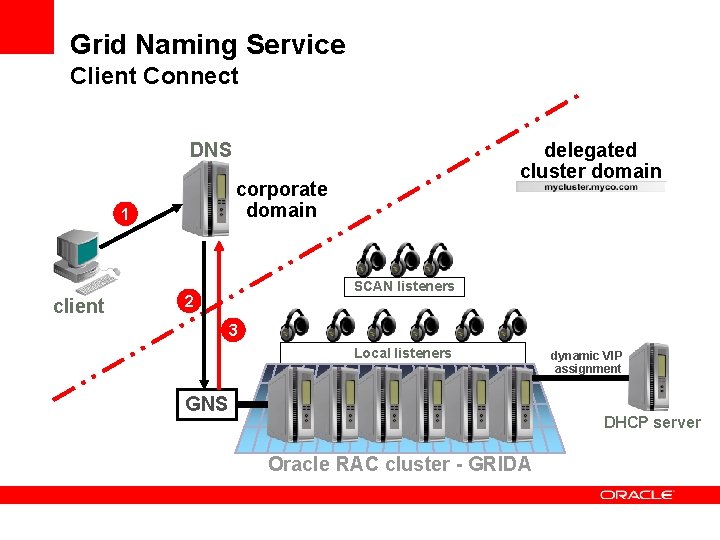

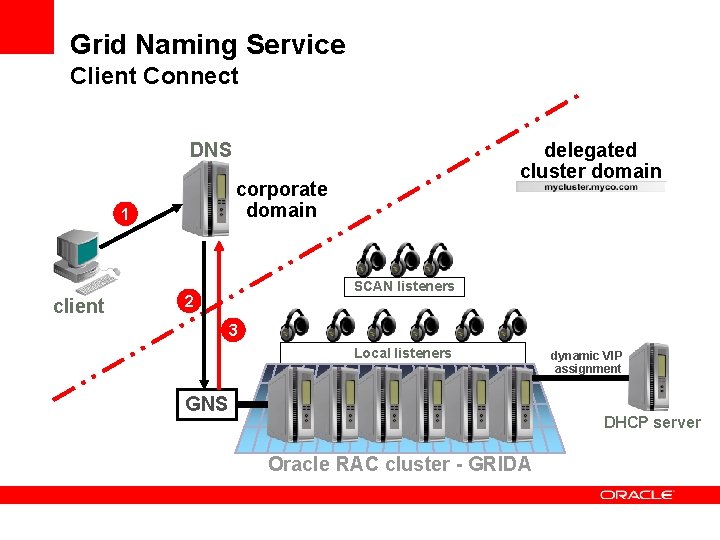

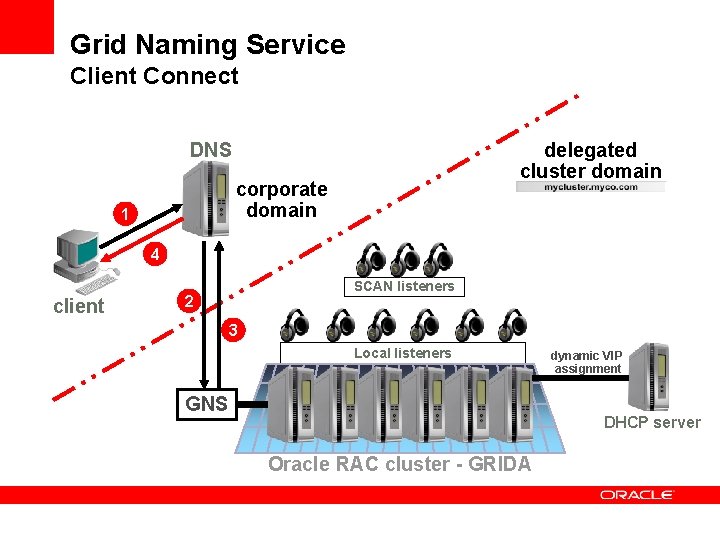

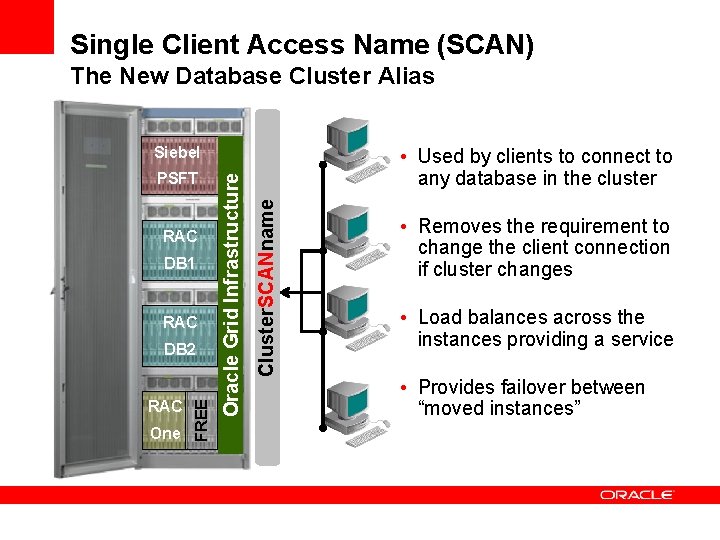

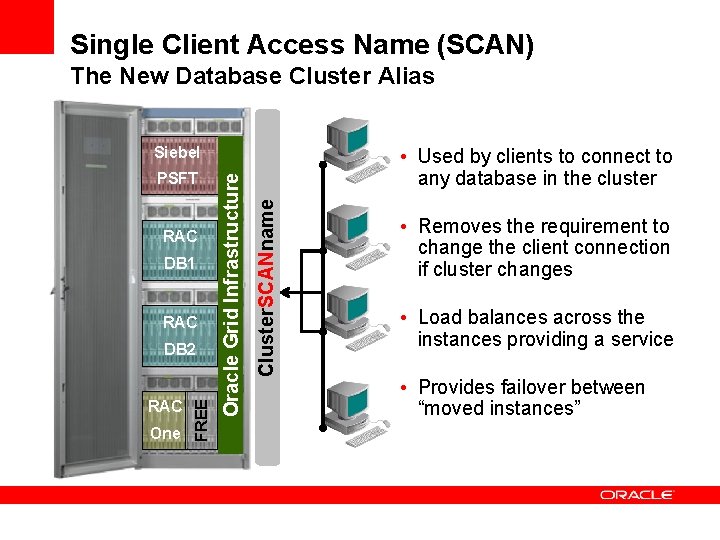

Single Client Access Name (SCAN): The New Database Cluster Alias

- Used by clients to connect to any database in the cluster
- Removes the requirement to change the client connection if the cluster changes
- Load balances across the instances providing a service
- Provides failover between “moved instances”

Diagram labels: ClusterSCANname; PSFT, RAC DB 1, RAC One, DB 2, Siebel, and a FREE server on Oracle Grid Infrastructure.

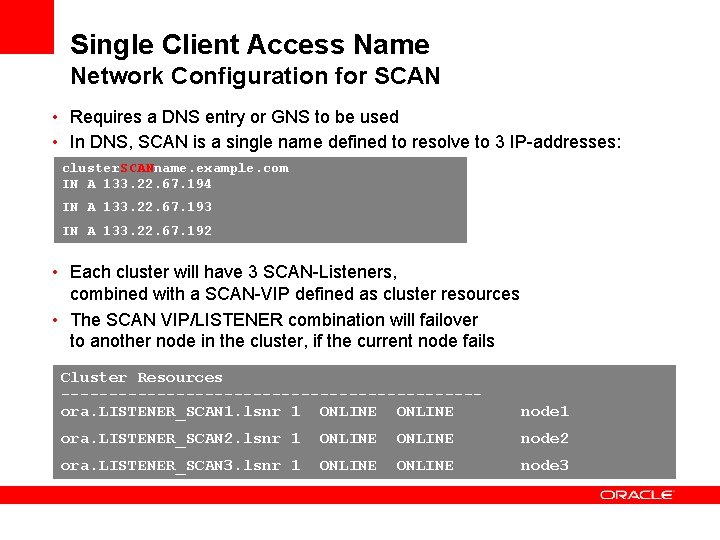

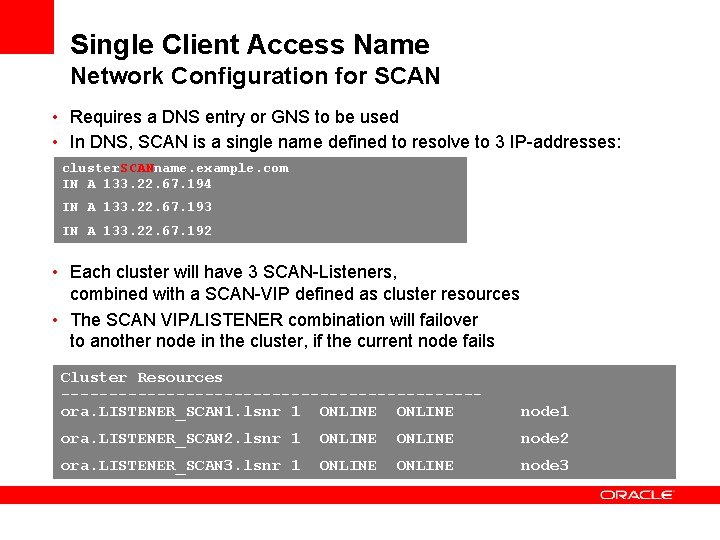

Single Client Access Name: Network Configuration for SCAN

- Requires a DNS entry or GNS to be used
- In DNS, SCAN is a single name defined to resolve to 3 IP addresses:

      clusterSCANname.example.com  IN A 133.22.67.194
                                   IN A 133.22.67.193
                                   IN A 133.22.67.192

- Each cluster will have 3 SCAN listeners, each combined with a SCAN VIP, defined as cluster resources
- The SCAN VIP/LISTENER combination will fail over to another node in the cluster if the current node fails

      Cluster Resources
      -----------------------
      ora.LISTENER_SCAN1.lsnr  1  ONLINE  node1
      ora.LISTENER_SCAN2.lsnr  1  ONLINE  node2
      ora.LISTENER_SCAN3.lsnr  1  ONLINE  node3
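The failover behaviour of the SCAN VIP/listener pairs can be sketched as a relocation of resources away from a failed node. This is a toy model: the function `fail_over_scan` is hypothetical, and the placement rule (move to the surviving node that currently hosts the fewest SCAN listeners) is an assumption, not documented Clusterware behaviour.

```python
from __future__ import annotations


def fail_over_scan(placement: dict[str, str], failed_node: str,
                   nodes: list[str]) -> dict[str, str]:
    """Return a new listener-to-node placement after one node fails."""
    survivors = [n for n in nodes if n != failed_node]
    if not survivors:
        raise RuntimeError("no surviving node can host the SCAN listeners")

    new_placement = dict(placement)  # leave the caller's mapping untouched
    for listener, node in placement.items():
        if node == failed_node:
            # Assumed rule: pick the least-loaded surviving node.
            target = min(
                survivors,
                key=lambda n: list(new_placement.values()).count(n),
            )
            new_placement[listener] = target
    return new_placement


if __name__ == "__main__":
    before = {
        "ora.LISTENER_SCAN1.lsnr": "node1",
        "ora.LISTENER_SCAN2.lsnr": "node2",
        "ora.LISTENER_SCAN3.lsnr": "node3",
    }
    after = fail_over_scan(before, "node1", ["node1", "node2", "node3"])
    for listener, node in after.items():
        print(listener, "ONLINE", node)
```

Because clients only know the SCAN name, such a relocation would not require any change on the client side.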

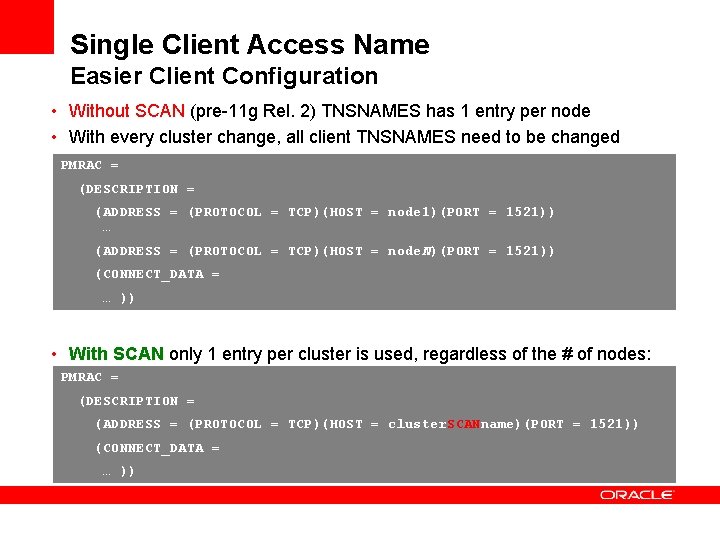

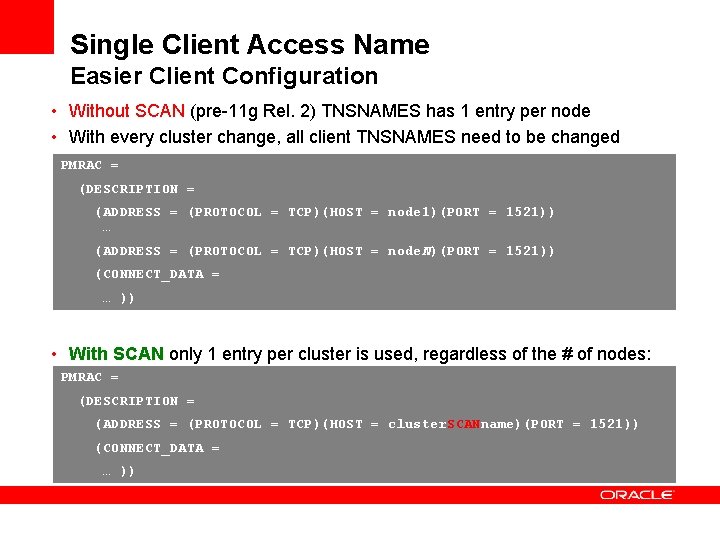

Single Client Access Name: Easier Client Configuration

- Without SCAN (pre-11g Rel. 2), TNSNAMES has 1 entry per node
- With every cluster change, all client TNSNAMES need to be changed

      PMRAC =
        (DESCRIPTION =
          (ADDRESS = (PROTOCOL = TCP)(HOST = node1)(PORT = 1521))
          …
          (ADDRESS = (PROTOCOL = TCP)(HOST = nodeN)(PORT = 1521))
          (CONNECT_DATA = … ))

- With SCAN only 1 entry per cluster is used, regardless of the # of nodes:

      PMRAC =
        (DESCRIPTION =
          (ADDRESS = (PROTOCOL = TCP)(HOST = clusterSCANname)(PORT = 1521))
          (CONNECT_DATA = … ))
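The difference between the two client configurations can be shown by generating both entries. The helper `tns_entry` below is hypothetical and only formats text; the `CONNECT_DATA` content is left elided exactly as on the slide, and the node names are illustrative.

```python
from __future__ import annotations


def tns_entry(alias: str, hosts: list[str], port: int = 1521,
              connect_data: str = "…") -> str:
    """Format a TNSNAMES-style entry with one ADDRESS line per host."""
    addresses = "\n".join(
        f"    (ADDRESS = (PROTOCOL = TCP)(HOST = {host})(PORT = {port}))"
        for host in hosts
    )
    return (
        f"{alias} =\n"
        f"  (DESCRIPTION =\n"
        f"{addresses}\n"
        f"    (CONNECT_DATA = {connect_data}))"
    )


if __name__ == "__main__":
    # Without SCAN: the entry grows (and must be redistributed) per node.
    print(tns_entry("PMRAC", ["node1", "node2", "node3"]))
    print()
    # With SCAN: one address, independent of the number of nodes.
    print(tns_entry("PMRAC", ["clusterSCANname"]))
```

Adding a fourth node changes the first entry but leaves the SCAN-based entry untouched, which is the point the slide makes.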

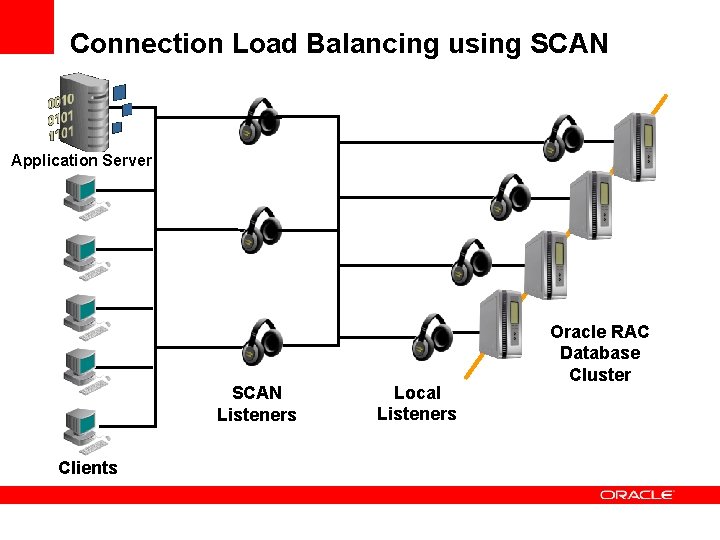

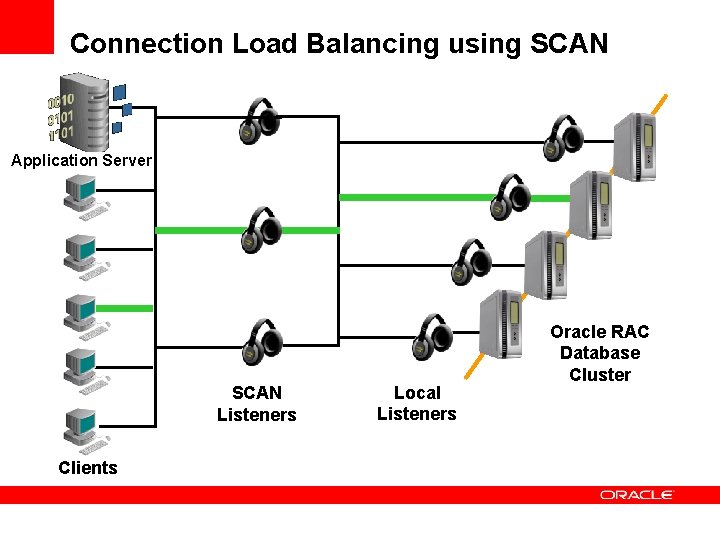

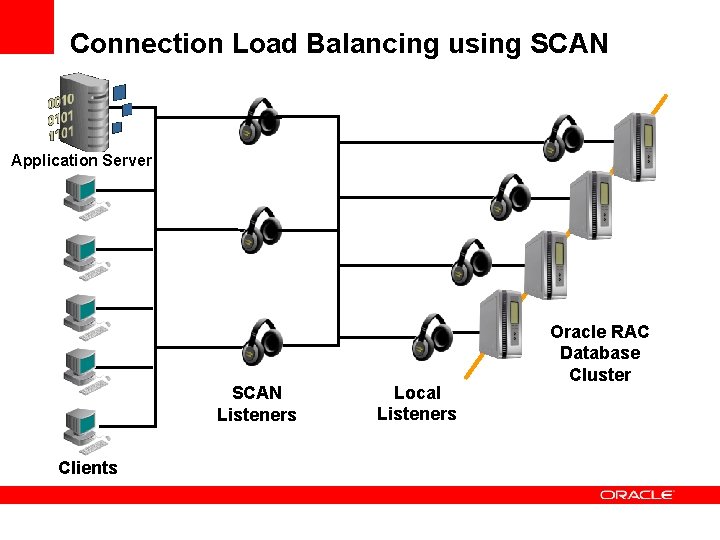

Connection Load Balancing using SCAN

Diagram labels: Application Server, Clients, SCAN Listeners, Local Listeners, Oracle RAC Database Cluster.

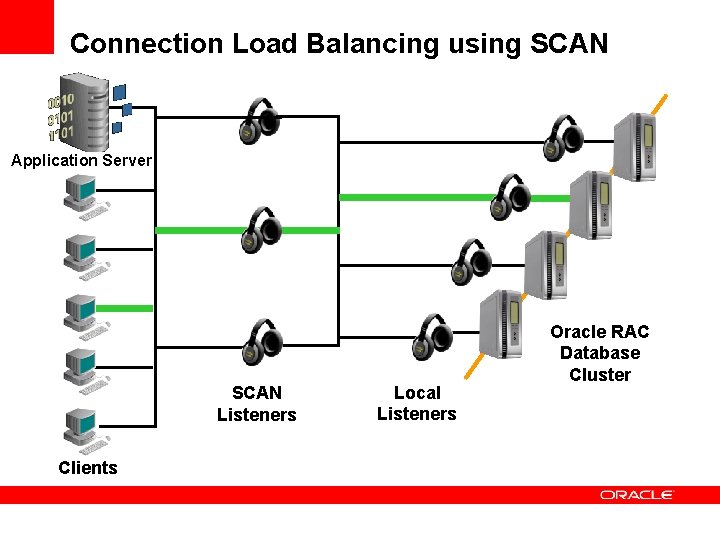

Summary

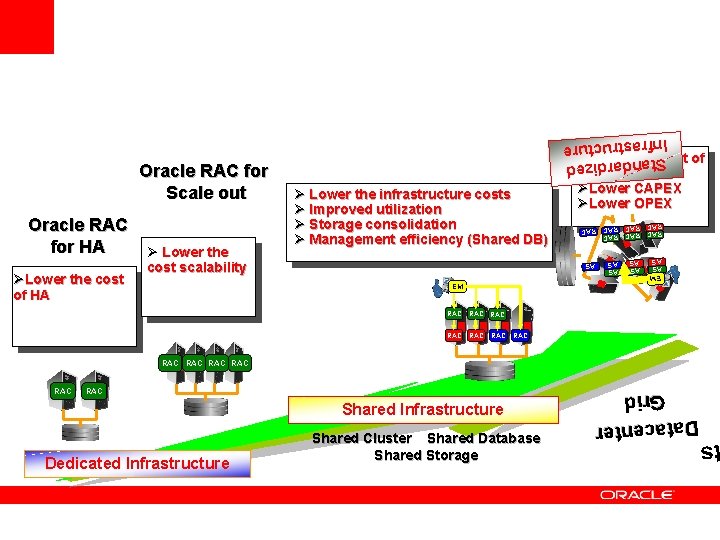

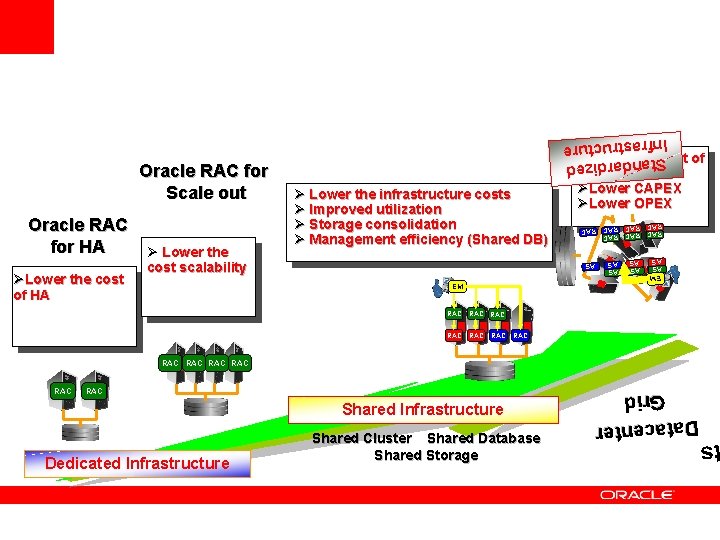

Diagram: the path from dedicated infrastructure to shared infrastructure (shared storage, shared cluster, shared database), ending in a data center grid. Labels recoverable from the figure:

- Oracle RAC for HA: lower the cost of HA
- Oracle RAC for scale-out: lower the cost of scalability
- Standardized infrastructure: lower the cost of deployments
- Shared infrastructure: lower the infrastructure costs, improved utilization, storage consolidation, management efficiency (shared DB)
- Lower CAPEX, lower OPEX

Questions and Answers