Modern Information Retrieval, Chapter 3: Retrieval Evaluation



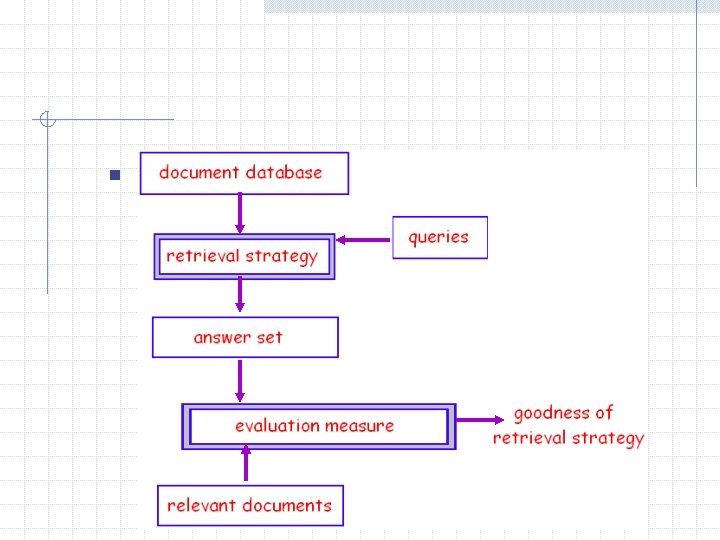

- The most common measures of system performance are time and space
  - an inherent tradeoff
- Data retrieval
  - time and space
  - indexing
- Information retrieval
  - precision of the answer set is also important

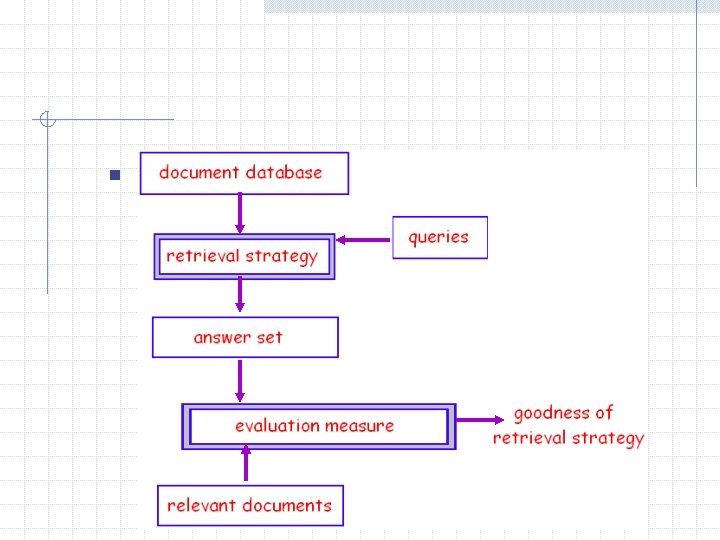


- evaluation considerations
  - query with/without feedback
  - query interface design
  - real data/synthetic data
  - real life/laboratory environment
- repeatability and scalability

- recall and precision
  - recall: the fraction of the relevant documents which have been retrieved
  - precision: the fraction of the retrieved documents which are relevant

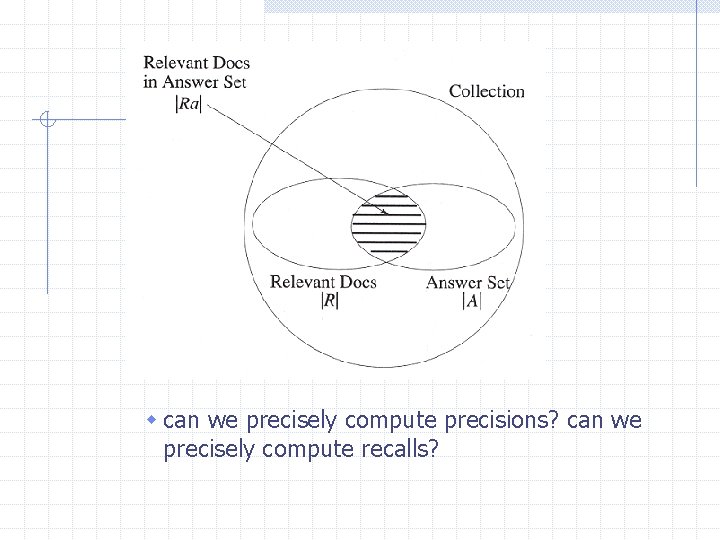

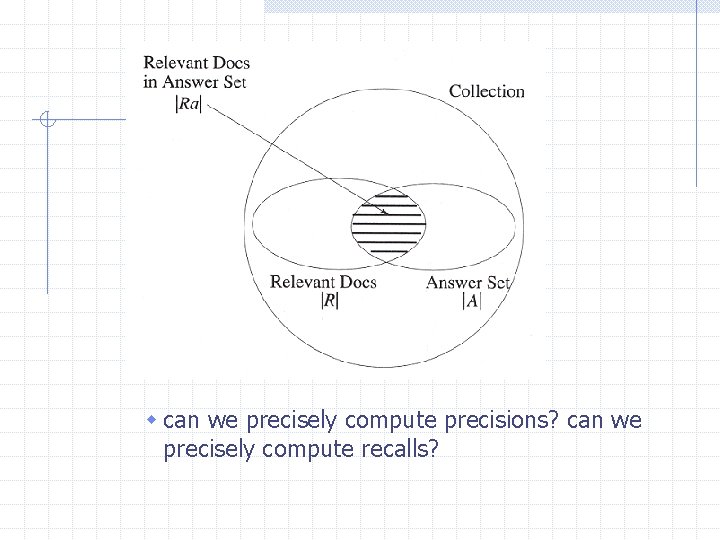

  - Can precision be computed exactly? Can recall be computed exactly?

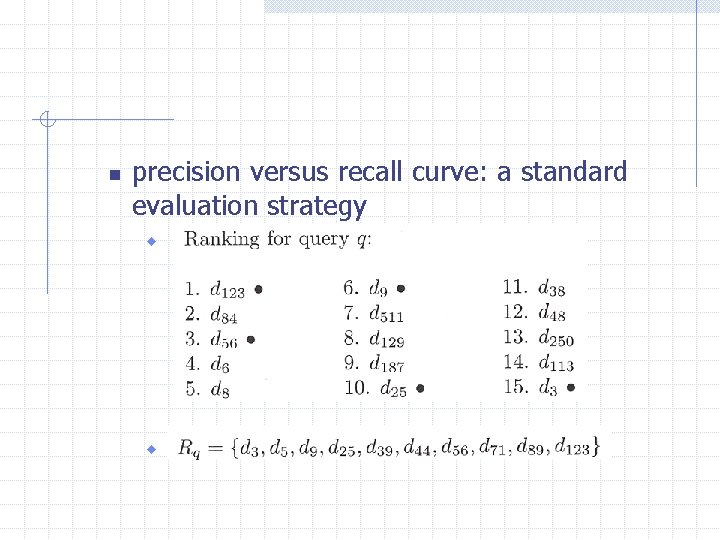

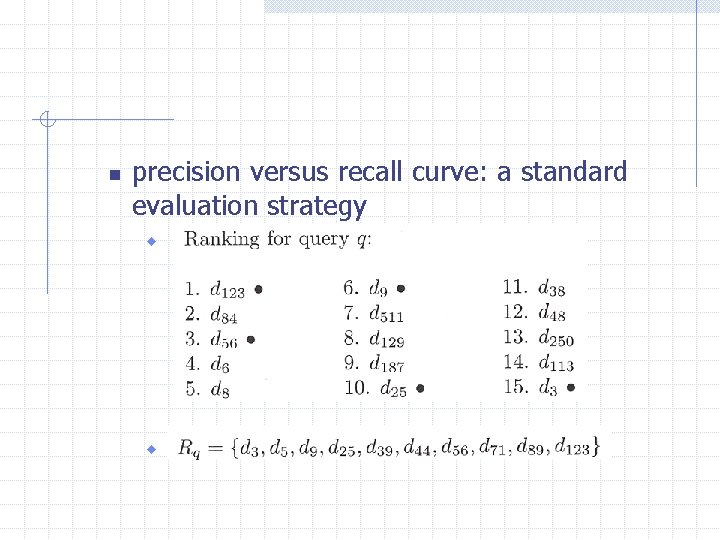
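The recall and precision definitions above can be sketched as set operations. This is a minimal illustration, not a reference implementation: the function name `precision_recall` and the document ids are hypothetical, and returning 0.0 for an empty set is an assumed convention.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for a single query.

    retrieved: iterable of document ids in the answer set.
    relevant:  iterable of document ids judged relevant to the query.
    """
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)  # relevant documents that were retrieved
    # Assumed convention: an empty denominator yields 0.0.
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical answer set and relevance judgments.
p, r = precision_recall(["d1", "d2", "d3", "d4"], ["d2", "d4", "d7"])
```

Here two of the four retrieved documents are relevant (precision 0.5), and two of the three relevant documents were retrieved (recall 2/3). Computing recall requires knowing every relevant document in the collection, which is the difficulty the question above points at.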

- precision versus recall curve: a standard evaluation strategy

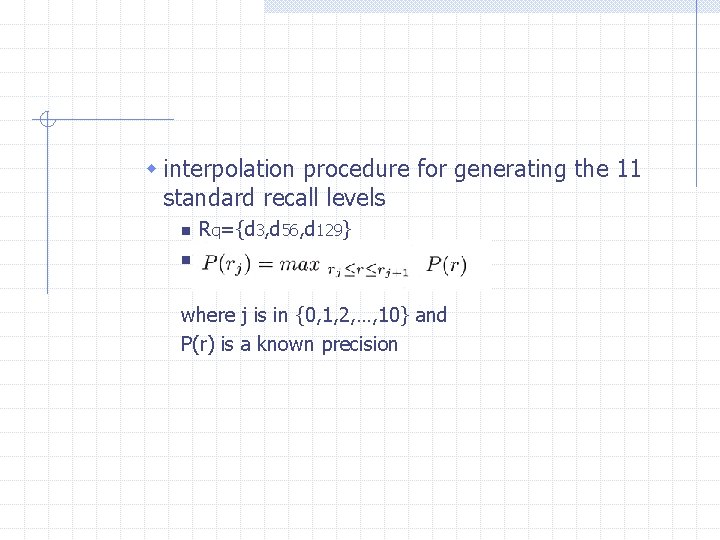

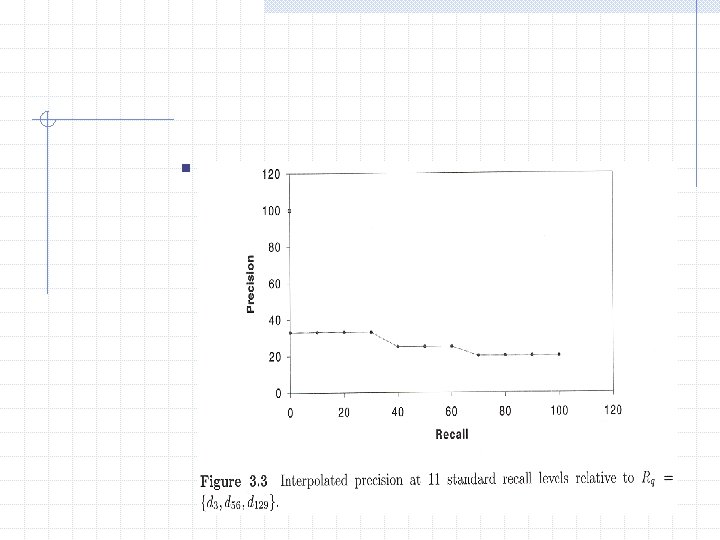

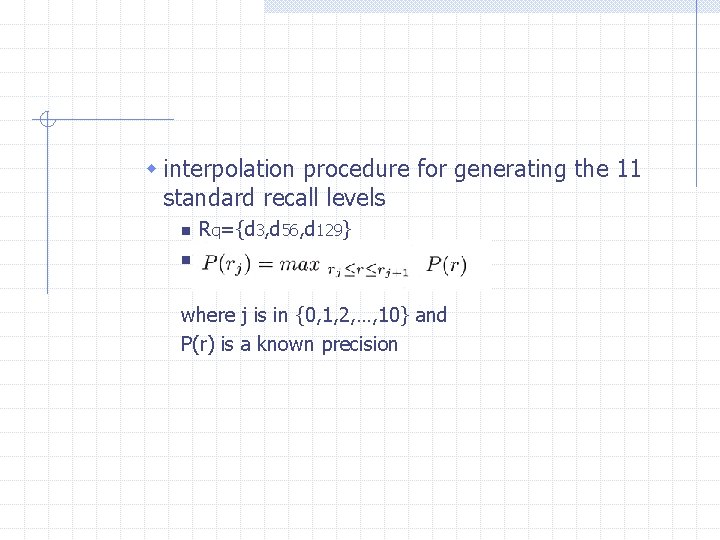

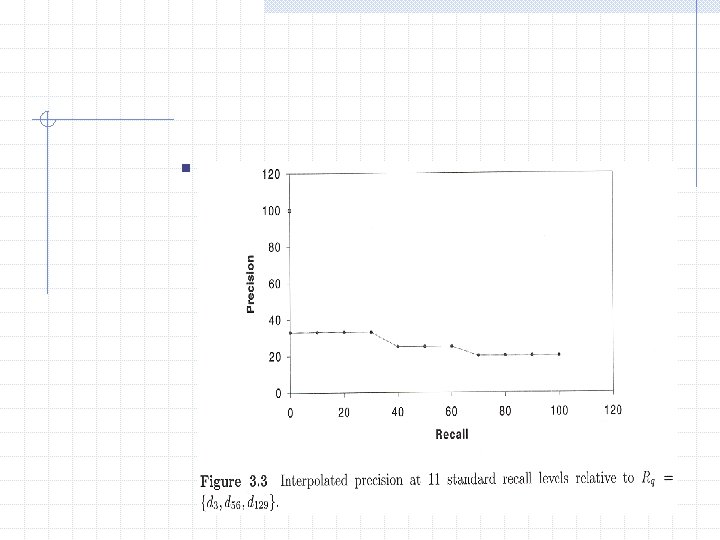

  - interpolation procedure for generating the 11 standard recall levels
    - $R_q = \{d_3, d_{56}, d_{129}\}$
    - where $j \in \{0, 1, 2, \ldots, 10\}$ and $P(r)$ is a known precision


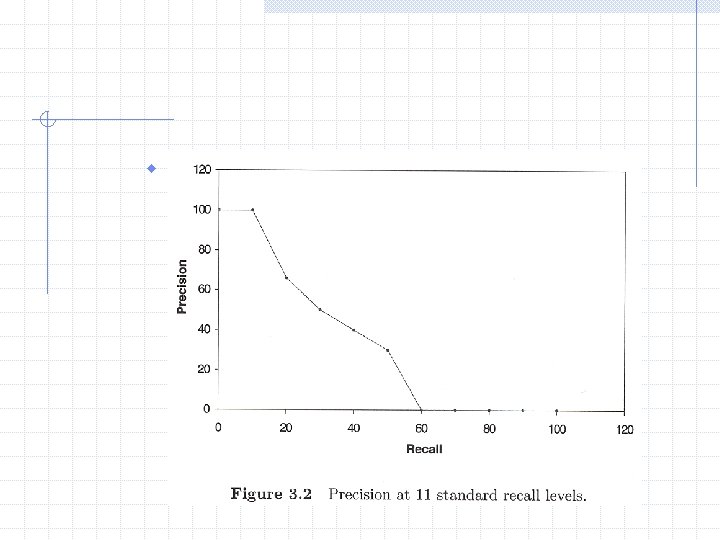

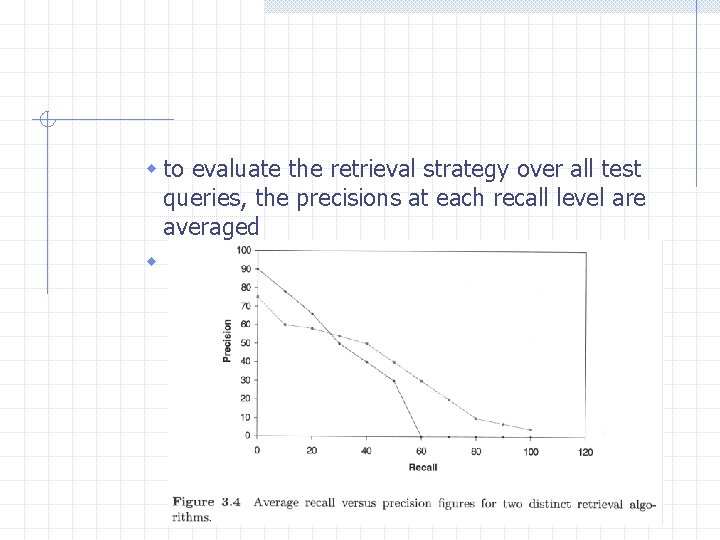

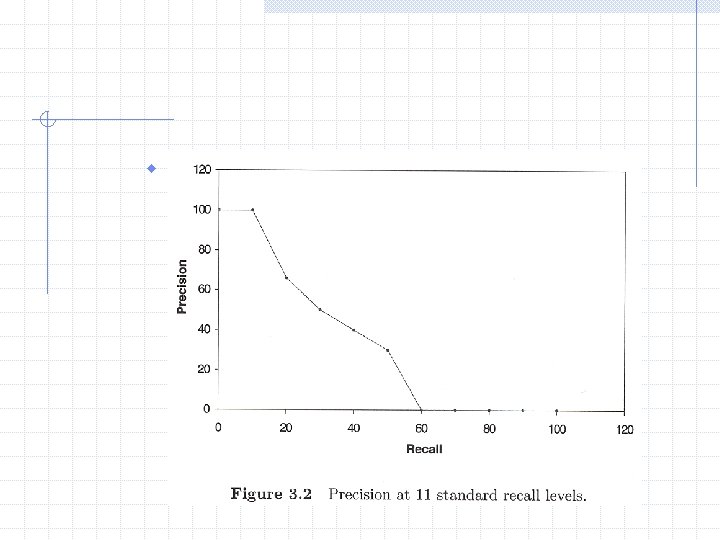

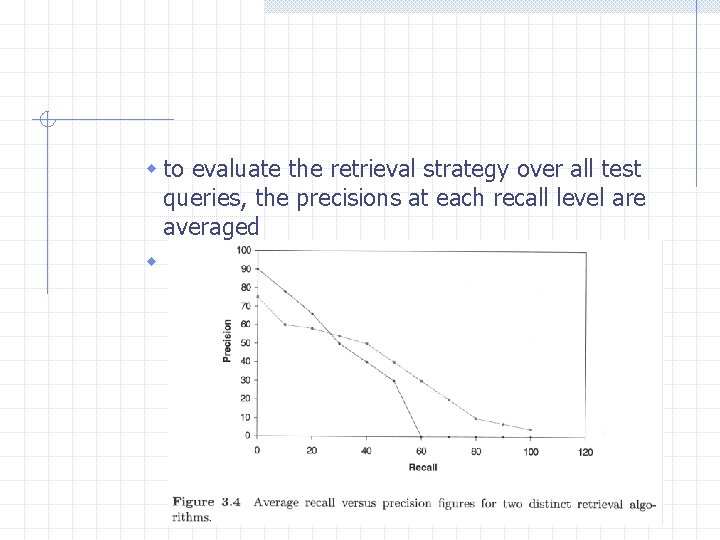
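The interpolation to the 11 standard recall levels can be sketched as follows. The relevant set `relevant_q` is the $R_q$ given above; the ranking is a hypothetical one chosen for illustration. The interpolation rule used here (the highest known precision at any recall greater than or equal to the level) is an assumption, one commonly used convention rather than necessarily the exact rule on the slide.

```python
def recall_precision_points(ranking, relevant):
    """(recall, precision) observed at each relevant document in the ranking."""
    relevant = set(relevant)
    points, hits = [], 0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            points.append((hits / len(relevant), hits / rank))
    return points


def interpolate_11pt(points):
    """Interpolated precision at recall levels 0.0, 0.1, ..., 1.0.

    Assumed rule: take the highest known precision at any recall >= level,
    or 0.0 if that recall level is never reached.
    """
    levels = [j / 10 for j in range(11)]
    return [max((p for r, p in points if r >= level), default=0.0)
            for level in levels]


relevant_q = ["d3", "d56", "d129"]  # R_q from the slide
# Hypothetical ranking produced by some retrieval algorithm.
ranking = ["d123", "d84", "d56", "d6", "d8", "d9", "d511", "d129",
           "d187", "d25", "d38", "d48", "d250", "d113", "d3"]

points = recall_precision_points(ranking, relevant_q)
curve = interpolate_11pt(points)
```

With this ranking the relevant documents appear at ranks 3, 8 and 15, so only three recall values are observed directly; interpolation fills in the remaining standard levels.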

  - to evaluate the retrieval strategy over all test queries, the precisions at each recall level are averaged

  - another approach: compute average precision at given relevant document cutoff values
    - advantages?
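Both averaging strategies above can be sketched in a few lines. The first averages per-query precision at each recall level, i.e. $\bar{P}(r) = \frac{1}{N_q} \sum_{i=1}^{N_q} P_i(r)$ with $N_q$ queries. The second reads "relevant document cutoff" as the point at which k relevant documents have been seen; that reading, the function names, the sample data, and counting an unreached cutoff as 0.0 are all assumptions.

```python
def average_precision_at_levels(per_query_curves):
    """Average P_i(r) over all queries at each recall level.

    per_query_curves: one list per query, each holding the precision at the
    same sequence of recall levels.
    """
    n_queries = len(per_query_curves)
    return [sum(values) / n_queries for values in zip(*per_query_curves)]


def precision_when_k_relevant_seen(ranking, relevant, k):
    """Precision at the rank where the k-th relevant document appears.

    Assumed convention: 0.0 if the ranking holds fewer than k relevant documents.
    """
    relevant = set(relevant)
    hits = 0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            if hits == k:
                return k / rank
    return 0.0


def average_precision_at_cutoffs(queries, cutoffs):
    """Average the cutoff precision over queries; queries is a list of
    (ranking, relevant) pairs."""
    return {k: sum(precision_when_k_relevant_seen(rk, rel, k)
                   for rk, rel in queries) / len(queries)
            for k in cutoffs}


# Hypothetical data: two queries, two recall levels, cutoffs at 1 and 2.
avg_curve = average_precision_at_levels([[1.0, 0.5], [0.5, 0.0]])
queries = [(["d1", "d2", "d3", "d4"], {"d1", "d3"}),
           (["d5", "d6", "d7", "d8"], {"d6", "d8"})]
avg_cutoffs = average_precision_at_cutoffs(queries, cutoffs=(1, 2))
```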

- single value summary for each query
  - average precision at seen relevant documents
    - example in Figure 3.2
    - favors systems which retrieve relevant documents quickly
    - can have a poor overall recall performance
  - R-precision
    - R: total number of relevant documents
    - examples in Figures 3.2 and 3.3

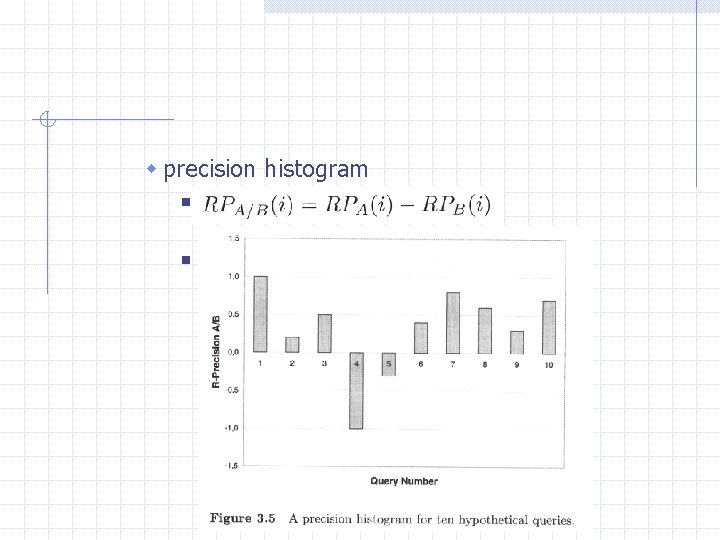

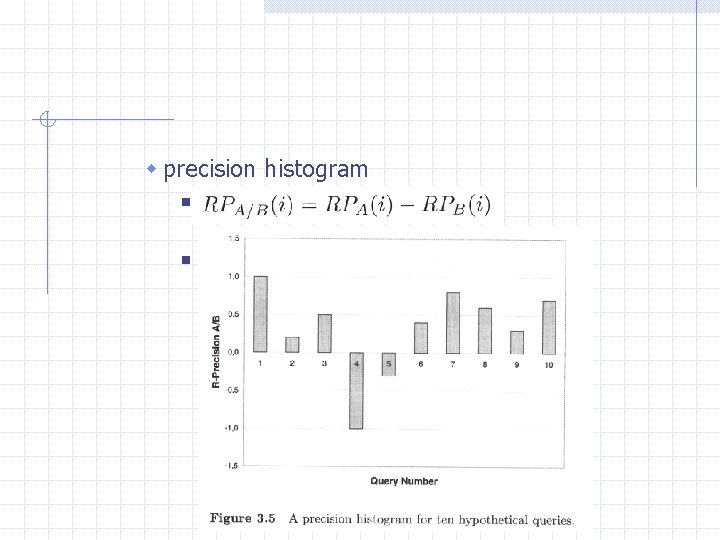
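The two single value summaries above can be sketched as follows. This is an illustration under stated assumptions: R-precision is taken to be the precision over the top R ranked documents, the average is taken only over the relevant documents actually seen in the ranking (not over all relevant documents), and the ranking and relevant set are hypothetical.

```python
def average_precision_at_seen_relevant(ranking, relevant):
    """Mean of the precision values observed at each relevant document seen.

    Relevant documents missing from the ranking do not enter the average,
    which is why this measure can hide poor overall recall.
    """
    relevant = set(relevant)
    hits, precisions = 0, []
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def r_precision(ranking, relevant):
    """Precision over the top R documents, R = total number of relevant documents."""
    relevant = set(relevant)
    r = len(relevant)
    if r == 0:
        return 0.0
    return sum(doc in relevant for doc in ranking[:r]) / r


# Hypothetical ranking; d9 is relevant but never retrieved.
ranking = ["d1", "d2", "d3", "d4", "d5", "d6"]
relevant = {"d1", "d3", "d6", "d9"}

avg_seen = average_precision_at_seen_relevant(ranking, relevant)
rp = r_precision(ranking, relevant)
```

In this example the seen relevant documents sit at ranks 1, 3 and 6, giving precisions 1, 2/3 and 1/2; the missing `d9` lowers R-precision but not the average over seen documents.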

  - precision histogram
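A precision histogram is commonly built by comparing two retrieval algorithms query by query. The sketch below assumes that reading: for each query i it plots the difference $RP_A(i) - RP_B(i)$ of the two R-precision values. The function name and the R-precision figures are hypothetical.

```python
def precision_histogram(rp_a, rp_b):
    """Per-query difference RP_A(i) - RP_B(i).

    A positive bar means algorithm A did better on that query, a negative
    bar means algorithm B did better, and zero means a tie.
    """
    return [a - b for a, b in zip(rp_a, rp_b)]


# Hypothetical R-precision values for three queries.
bars = precision_histogram([0.5, 0.25, 1.0], [0.25, 0.75, 1.0])
```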

- combining recall and precision
  - the harmonic mean
    - it assumes a high value only when both recall and precision are high

  - the E measure
    - b = 1: complement of the harmonic mean
    - b > 1: the user is more interested in precision
    - b < 1: the user is more interested in recall

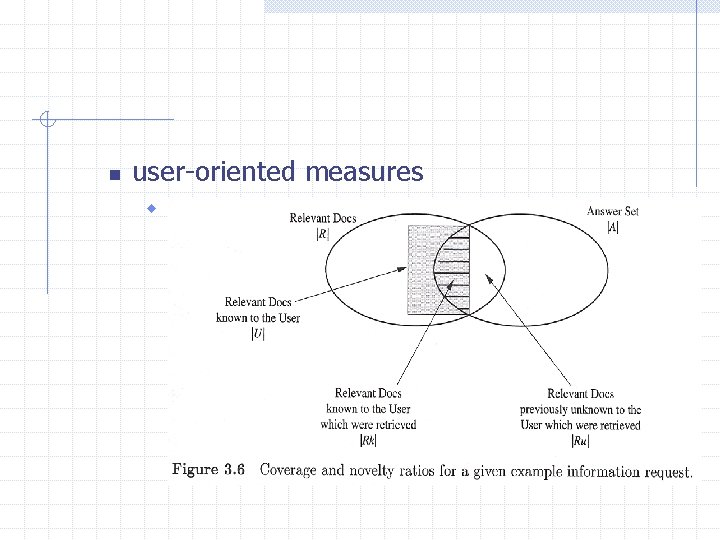

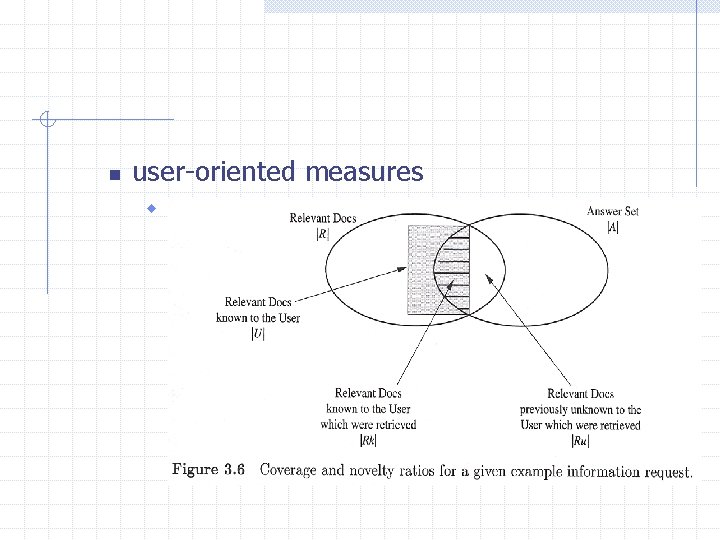
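The two combined measures can be sketched as below. The harmonic mean is $F = 2 / (1/r + 1/P)$. The exact form used for E, $E = 1 - (1 + b^2) / (b^2/r + 1/P)$, is an assumption (one common parameterization); which of recall or precision a given b emphasizes depends on where $b^2$ sits in the formula, so the direction should be checked against the formula actually in use. Returning 0.0 (or 1.0 for E) when either input is zero is also an assumed convention.

```python
def harmonic_mean(recall, precision):
    """Harmonic mean of recall and precision; high only when both are high."""
    if recall == 0 or precision == 0:
        return 0.0  # assumed convention for the degenerate case
    return 2 / (1 / recall + 1 / precision)


def e_measure(recall, precision, b=1.0):
    """E = 1 - (1 + b^2) / (b^2 / recall + 1 / precision)  (assumed form)."""
    if recall == 0 or precision == 0:
        return 1.0  # assumed convention for the degenerate case
    return 1 - (1 + b * b) / (b * b / recall + 1 / precision)


# Hypothetical recall and precision values.
f = harmonic_mean(0.4, 0.8)
e = e_measure(0.4, 0.8, b=1.0)
```

With b = 1 the E measure is the complement of the harmonic mean, so `e` equals `1 - f` here.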

- user-oriented measures

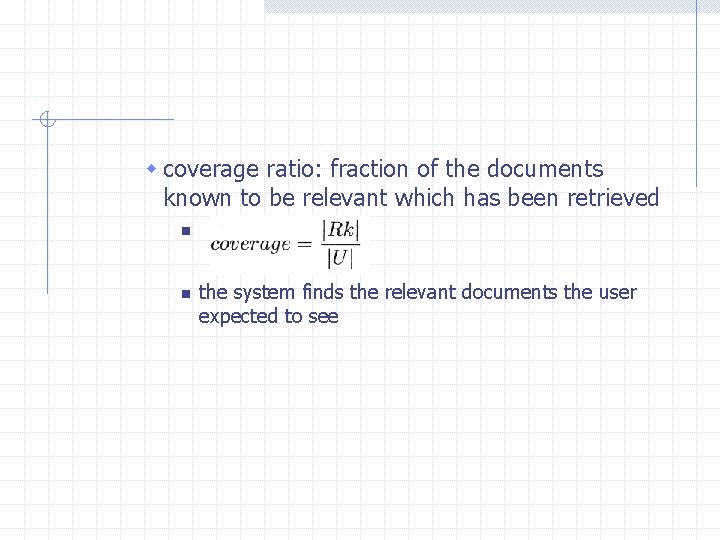

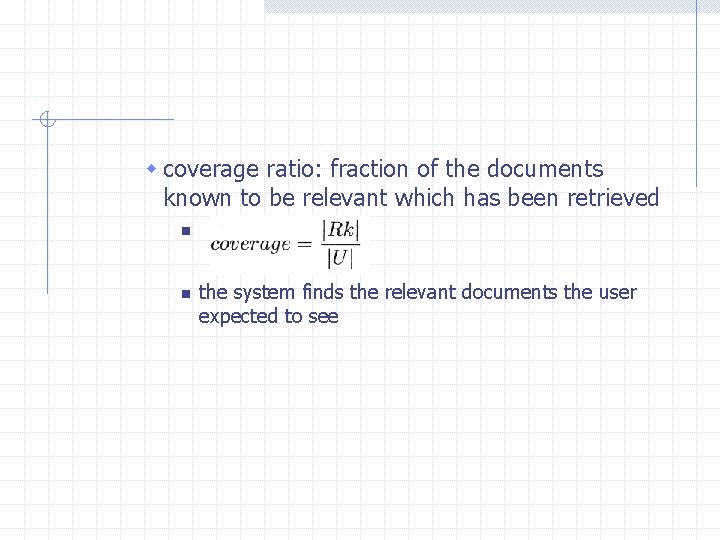

  - coverage ratio: the fraction of the documents known to the user to be relevant which have been retrieved
    - a high coverage ratio means the system finds most of the relevant documents the user expected to see

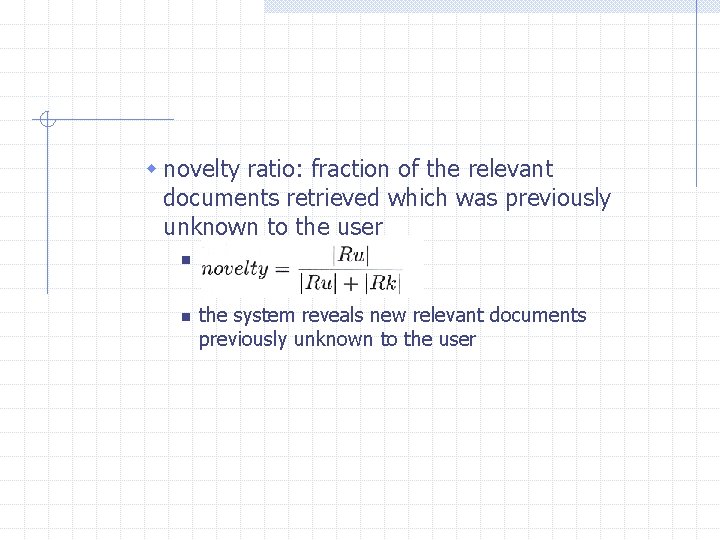

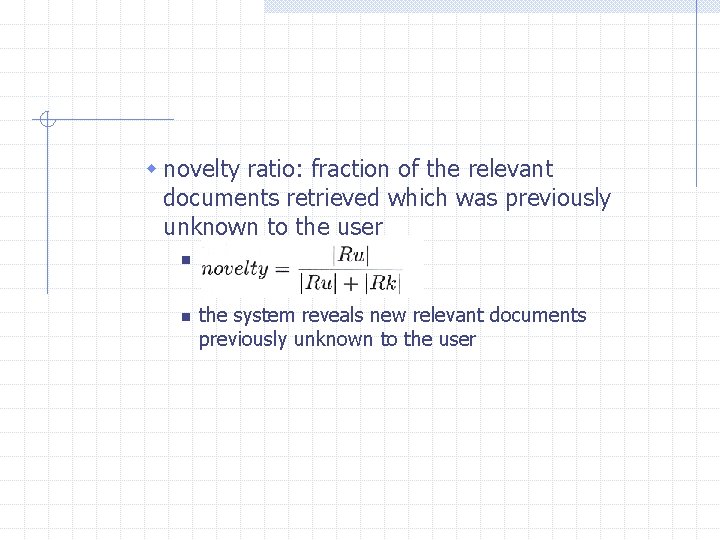

  - novelty ratio: the fraction of the relevant documents retrieved which were previously unknown to the user
    - a high novelty ratio means the system reveals many new relevant documents previously unknown to the user

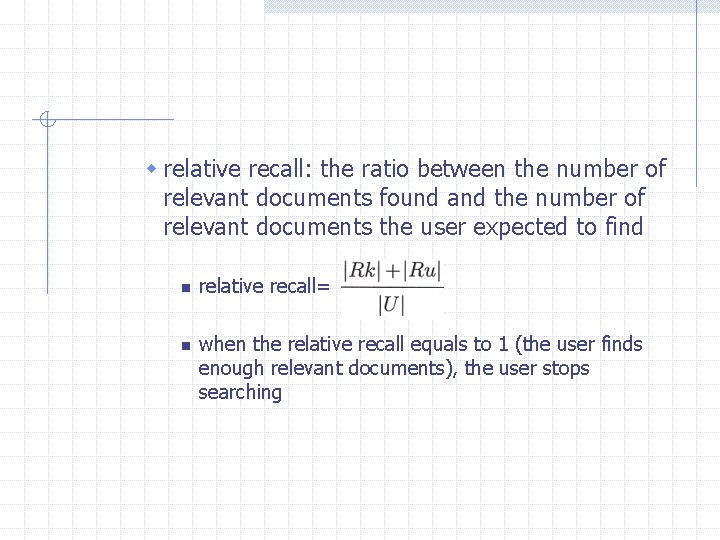

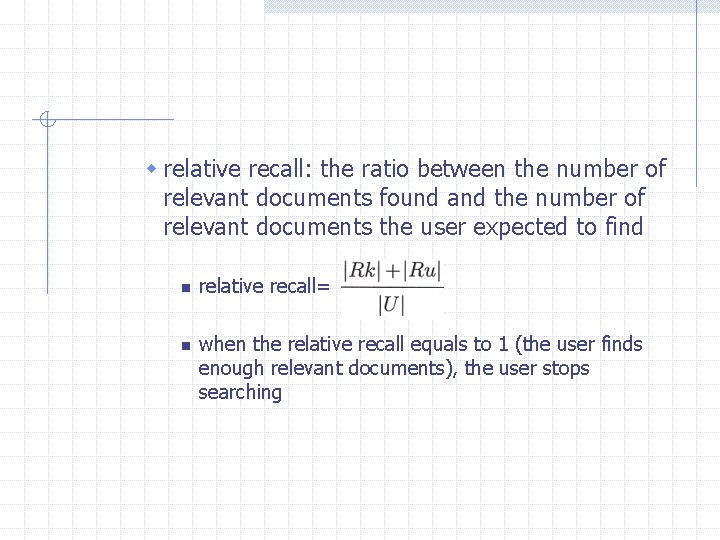
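The coverage and novelty ratios can be sketched with sets. The names below are hypothetical: `known_to_user` stands for the documents the user already knew about before the search, and 0.0 is an assumed value when a denominator is empty.

```python
def coverage_and_novelty(retrieved, relevant, known_to_user):
    """Return (coverage ratio, novelty ratio) for one query and one user."""
    retrieved, relevant, known = set(retrieved), set(relevant), set(known_to_user)
    known_relevant = relevant & known          # relevant documents the user knows
    rk = retrieved & known_relevant            # retrieved, relevant, already known
    ru = (retrieved & relevant) - known        # retrieved, relevant, new to the user
    coverage = len(rk) / len(known_relevant) if known_relevant else 0.0
    found = len(rk) + len(ru)                  # all relevant documents retrieved
    novelty = len(ru) / found if found else 0.0
    return coverage, novelty


# Hypothetical data for a single query.
coverage, novelty = coverage_and_novelty(
    retrieved=["d1", "d2", "d3", "d4", "d5"],
    relevant=["d1", "d2", "d3", "d8", "d9"],
    known_to_user=["d1", "d2", "d8"],
)
```

Here the user knew three relevant documents and the system retrieved two of them (coverage 2/3); of the three relevant documents retrieved, one was new to the user (novelty 1/3).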

  - relative recall: the ratio between the number of relevant documents found and the number of relevant documents the user expected to find
    - $\text{relative recall} = \dfrac{\text{number of relevant documents found}}{\text{number of relevant documents the user expected to find}}$
    - when the relative recall equals 1 (the user has found enough relevant documents), the user stops searching

  - recall effort: the ratio between the number of relevant documents the user expected to find and the number of documents examined in an attempt to find the expected relevant documents

- research in IR
  - lacks a solid formal framework
  - lacks robust and consistent testbeds and benchmarks
- Text REtrieval Conference (TREC)

  - retrieval techniques
    - methods using automatic thesauri
    - sophisticated term weighting
    - natural language techniques
    - relevance feedback
    - advanced pattern matching
  - document collection
    - over 1 million documents
    - newspapers, patents, etc.
  - topics
    - in natural language
    - conversion into queries done by the system

  - relevant documents
    - the pooling method: for each topic, collect the top k documents generated by each participating system and have human assessors decide their relevance
  - the benchmark tasks
    - ad hoc task
    - filtering task
    - Chinese
    - cross languages
    - spoken document retrieval
    - high precision
    - very large collection

  - evaluation measures
    - summary table statistics: number of documents retrieved, number of relevant documents not retrieved, etc.
    - recall-precision averages
    - document level averages: average precision at seen relevant documents
    - average precision histogram
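The pooling method described above can be sketched as follows. This is a toy illustration rather than the actual TREC tooling: the system names, rankings, and the `assess` callback (standing in for a human assessor's judgment) are all hypothetical.

```python
def build_pool(runs, k):
    """Union of the top-k documents from each participating system for one topic.

    runs: dict mapping a system name to its ranked list of document ids.
    """
    pool = set()
    for ranking in runs.values():
        pool.update(ranking[:k])
    return pool


def judge_pool(pool, assess):
    """Keep the pooled documents that the assessor marks as relevant.

    assess: callable taking a document id and returning True or False;
    it stands in for a human relevance judgment.
    """
    return {doc for doc in pool if assess(doc)}


# Hypothetical runs from two systems for a single topic.
runs = {
    "sysA": ["d1", "d2", "d3"],
    "sysB": ["d2", "d4", "d5"],
}
pool = build_pool(runs, k=2)
relevant_in_pool = judge_pool(pool, assess=lambda doc: doc in {"d2", "d4", "d9"})
```

Documents outside the pool (such as `d9` in this example) are never judged, so a relevant document that no system ranks in its top k goes uncounted.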