Modern Information Retrieval
Chapter 3. Evaluation
Saif Rababah

Why System Evaluation?
• There are many retrieval models, algorithms, and systems. Which one is the best?
• What is the best component for:
  – Ranking function (dot-product, cosine, …)
  – Term selection (stopword removal, stemming, …)
  – Term weighting (TF, TF-IDF, …)
• How far down the ranked list will a user need to look to find some or all of the relevant documents?

Difficulties in Evaluating IR Systems
• Effectiveness is related to the relevancy of retrieved items.
• Relevancy is typically not binary but continuous.
• Even if relevancy is binary, it can be a difficult judgment to make.
• Relevancy, from a human standpoint, is:
  – Subjective: Depends upon a specific user’s judgment.
  – Situational: Relates to the user’s current needs.
  – Cognitive: Depends on human perception and behavior.
  – Dynamic: Changes over time.

Human Labeled Corpora (Gold Standard)
• Start with a corpus of documents.
• Collect a set of queries for this corpus.
• Have one or more human experts exhaustively label the relevant documents for each query.
• Typically assumes binary relevance judgments.
• Requires considerable human effort for large document/query corpora.

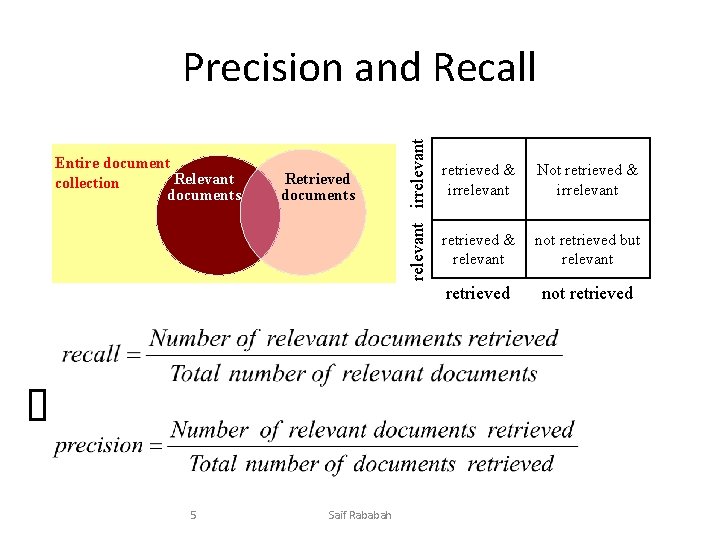

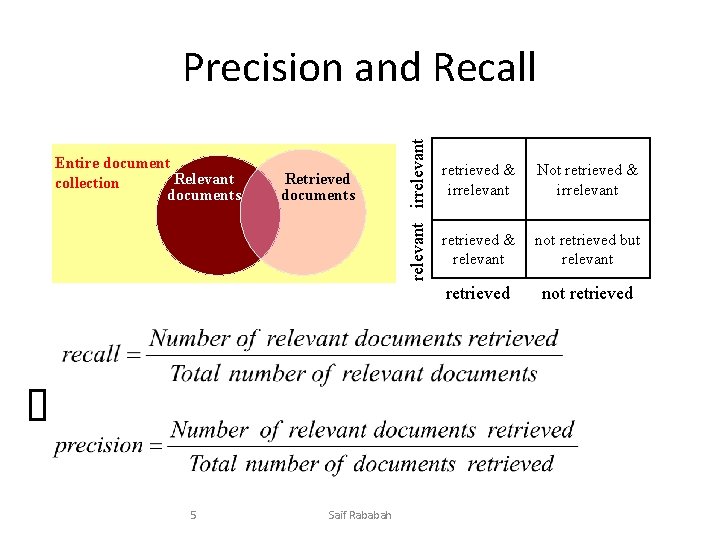

Precision and Recall
[Figure: the entire document collection, containing the set of relevant documents and the set of retrieved documents. Crossing relevant/irrelevant with retrieved/not retrieved gives four cells:]
• retrieved & relevant
• retrieved & irrelevant
• not retrieved but relevant
• not retrieved & irrelevant

Precision and Recall
• Precision: The ability to retrieve top-ranked documents that are mostly relevant.
• Recall: The ability of the search to find all of the relevant items in the corpus.
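In set terms, the two measures are usually written as below. This is the standard textbook form rather than a formula taken from the slide text; the symbols $\mathit{Rel}$ (relevant documents in the collection) and $\mathit{Ret}$ (retrieved documents) are introduced here only for illustration.

$$
\text{precision} = \frac{|\mathit{Rel} \cap \mathit{Ret}|}{|\mathit{Ret}|},
\qquad
\text{recall} = \frac{|\mathit{Rel} \cap \mathit{Ret}|}{|\mathit{Rel}|}
$$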

Determining Recall is Difficult
• The total number of relevant items is sometimes not available. Two ways to estimate it:
  – Sample across the database and perform relevance judgments on the sampled items.
  – Apply different retrieval algorithms to the same database for the same query, and take the aggregate of the relevant items found as the total relevant set.

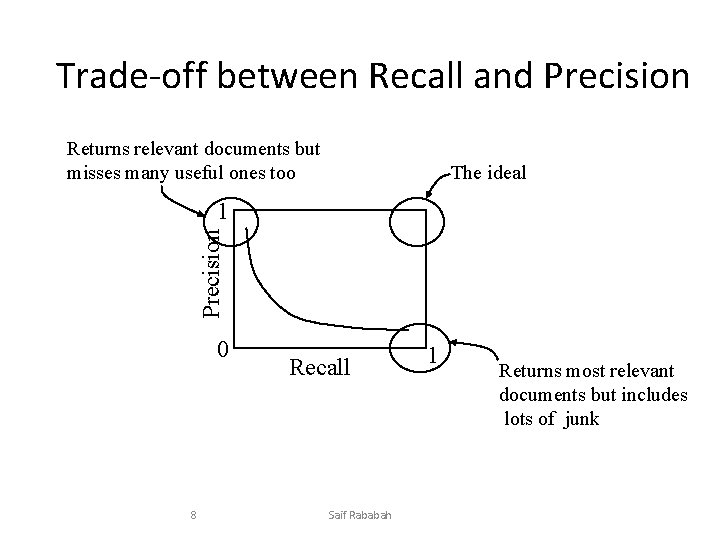

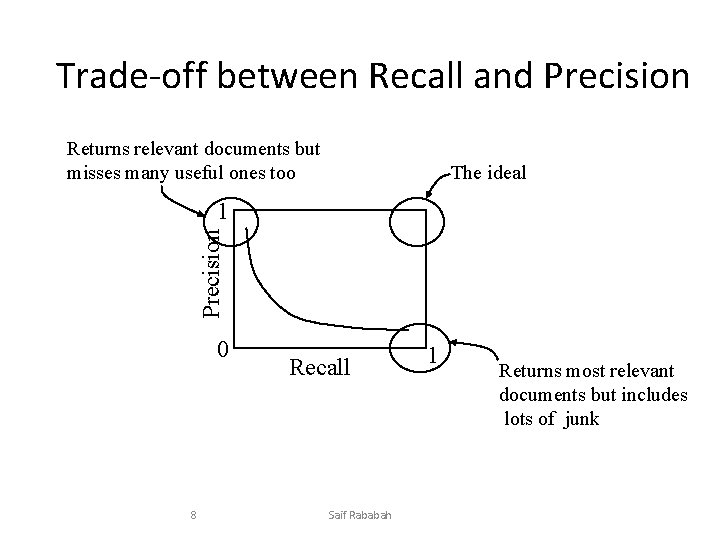

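Trade-off between Recall and Precision
[Figure: precision (y-axis, 0 to 1) plotted against recall (x-axis, 0 to 1).]
• The ideal: precision and recall both equal to 1.
• High precision, low recall: returns relevant documents but misses many useful ones too.
• High recall, low precision: returns most relevant documents but includes lots of junk.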

Computing Recall/Precision Points
• For a given query, produce the ranked list of retrievals.
• Adjusting a threshold on this ranked list produces different sets of retrieved documents, and therefore different recall/precision measures.
• Mark each document in the ranked list that is relevant according to the gold standard.
• Compute a recall/precision pair for each position in the ranked list that contains a relevant document.

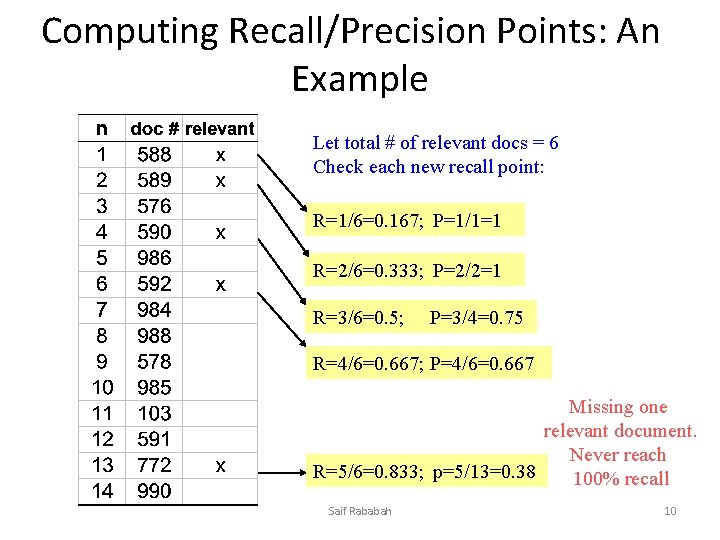

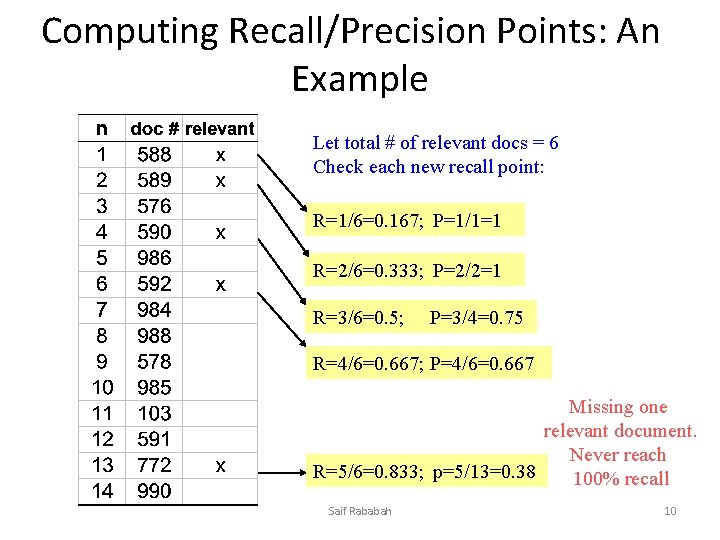

Computing Recall/Precision Points: An Example
Let total # of relevant docs = 6. Check each new recall point:
• R = 1/6 = 0.167; P = 1/1 = 1
• R = 2/6 = 0.333; P = 2/2 = 1
• R = 3/6 = 0.5; P = 3/4 = 0.75
• R = 4/6 = 0.667; P = 4/6 = 0.667
• R = 5/6 = 0.833; P = 5/13 = 0.38
One relevant document is missing from the ranked list, so the ranking never reaches 100% recall.
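The procedure can be sketched in a few lines of Python. The relevant ranks 1, 2, 4, 6, and 13 are read off the denominators of the precision values in the example; the list length of 14 and the function name `recall_precision_points` are assumptions made only for this illustration.

```python
def recall_precision_points(ranked_relevance, total_relevant):
    """Return one (recall, precision) pair per relevant document in the ranking.

    ranked_relevance: list of booleans, True where the document at that
    rank is relevant according to the gold standard.
    total_relevant: number of relevant documents in the whole collection.
    """
    points = []
    hits = 0
    for rank, is_relevant in enumerate(ranked_relevance, start=1):
        if is_relevant:
            hits += 1
            points.append((hits / total_relevant, hits / rank))
    return points


# Assumed ranking: 14 results, relevant documents at ranks 1, 2, 4, 6, 13.
relevant_ranks = {1, 2, 4, 6, 13}
ranking = [rank in relevant_ranks for rank in range(1, 15)]
points = recall_precision_points(ranking, total_relevant=6)

for recall, precision in points:
    print(f"R={recall:.3f}; P={precision:.3f}")
```

Because only five of the six relevant documents appear in the ranking, the function returns five points and the last recall value stays below 1.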

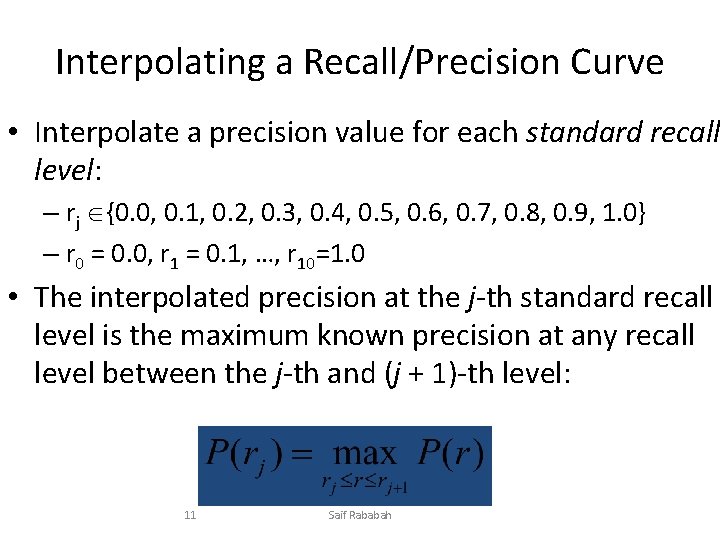

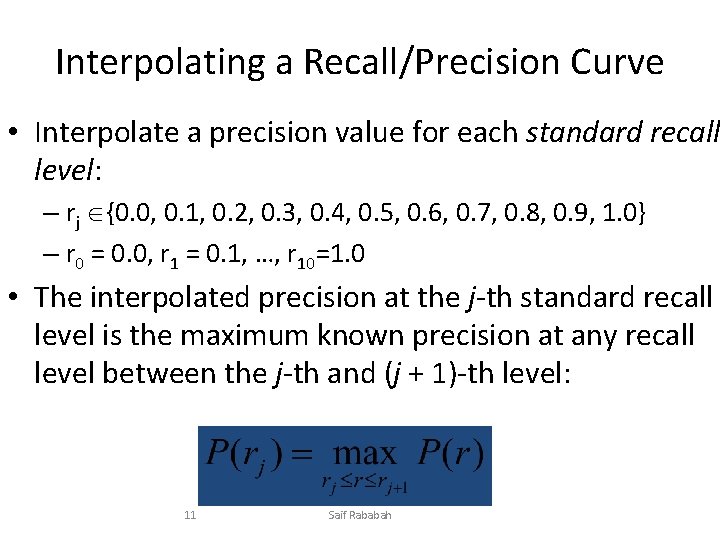

Interpolating a Recall/Precision Curve
• Interpolate a precision value for each standard recall level:
  – $r_j \in \{0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0\}$
  – $r_0 = 0.0,\ r_1 = 0.1,\ \ldots,\ r_{10} = 1.0$
• The interpolated precision at the j-th standard recall level is the maximum known precision at any recall level between the j-th and (j + 1)-th level:
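Written out as a formula, the rule stated in words reads as follows. This is a reconstruction from that sentence; the notation $P(r)$ for the known precision at recall $r$ is an assumption.

$$
P(r_j) = \max_{r_j \le r \le r_{j+1}} P(r)
$$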

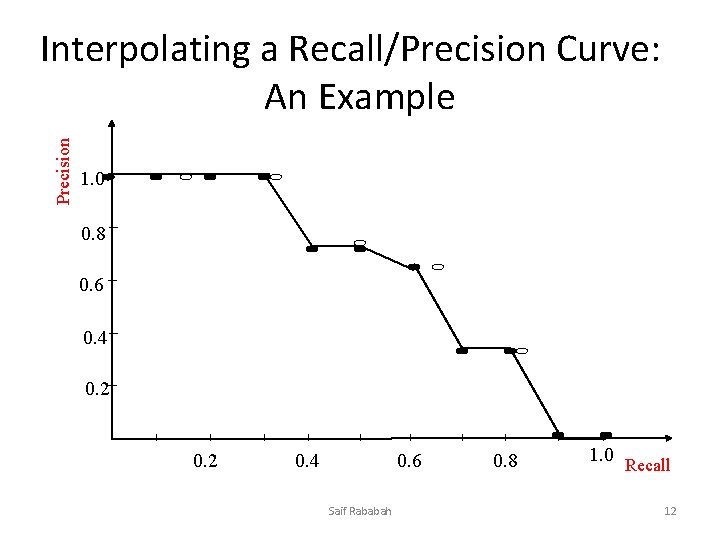

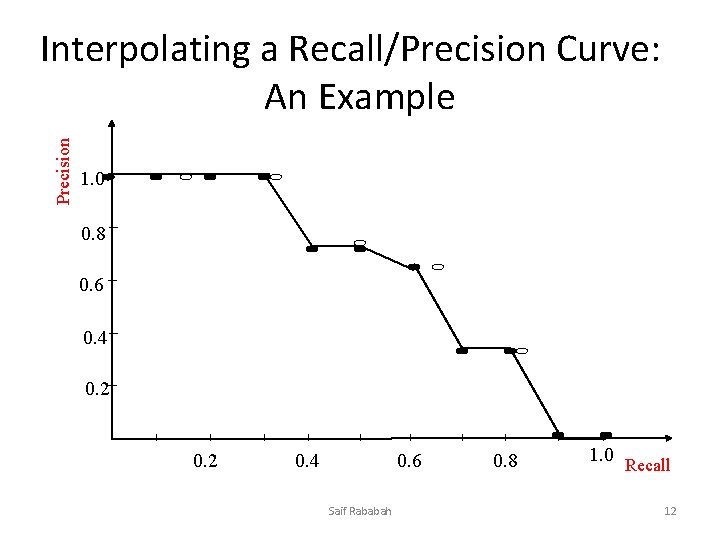

Interpolating a Recall/Precision Curve: An Example
[Figure: interpolated precision (y-axis, 0 to 1.0) plotted against recall (x-axis, 0 to 1.0).]
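A minimal sketch of 11-point interpolation, applied to the five recall/precision points from the earlier worked example. Note the swapped-in rule: instead of bounding the maximum by the next recall level, this sketch uses the commonly seen variant that takes the maximum precision at any recall greater than or equal to the level, which avoids gaps when no measured point falls inside an interval. The function name and the choice of 0.0 for levels the ranking never reaches are assumptions.

```python
def interpolate_11_point(points):
    """Interpolate precision at recall levels 0.0, 0.1, ..., 1.0.

    points: list of (recall, precision) pairs measured on a ranked list.
    For each level, take the maximum precision over all measured points
    whose recall is at least that level (assumed variant, see lead-in).
    """
    levels = [j / 10 for j in range(11)]
    interpolated = []
    for level in levels:
        candidates = [p for r, p in points if r >= level]
        # Assumption: precision is 0.0 at levels the ranking never reaches.
        interpolated.append(max(candidates, default=0.0))
    return interpolated


# Points from the worked example with 6 relevant documents in total.
points = [(1 / 6, 1 / 1), (2 / 6, 2 / 2), (3 / 6, 3 / 4), (4 / 6, 4 / 6), (5 / 6, 5 / 13)]
curve = interpolate_11_point(points)

for j, precision in enumerate(curve):
    print(f"r_{j} = {j / 10:.1f}: P = {precision:.3f}")
```

The result is a non-increasing step curve, which is the usual shape of an interpolated recall/precision plot.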

Average Recall/Precision Curve
• Typically average performance over a large set of queries.
• Compute average precision at each standard recall level across all queries.
• Plot average precision/recall curves to evaluate overall system performance on a document/query corpus.
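The averaging step can be sketched as follows, assuming each query has already been reduced to a list of 11 interpolated precision values, one per standard recall level. The function name `average_curves` and the two query curves are made up for illustration.

```python
def average_curves(per_query_curves):
    """Average interpolated precision across queries at each recall level.

    per_query_curves: list of equal-length lists, one list per query,
    where entry j is that query's interpolated precision at level r_j.
    """
    n_queries = len(per_query_curves)
    n_levels = len(per_query_curves[0])
    return [
        sum(curve[j] for curve in per_query_curves) / n_queries
        for j in range(n_levels)
    ]


# Two hypothetical queries, 11 standard recall levels each.
query_a = [1.0] * 4 + [0.5] * 7
query_b = [0.5] * 4 + [0.0] * 7
average = average_curves([query_a, query_b])
print(average)
```

Each query contributes equally to the average regardless of how many relevant documents it has.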

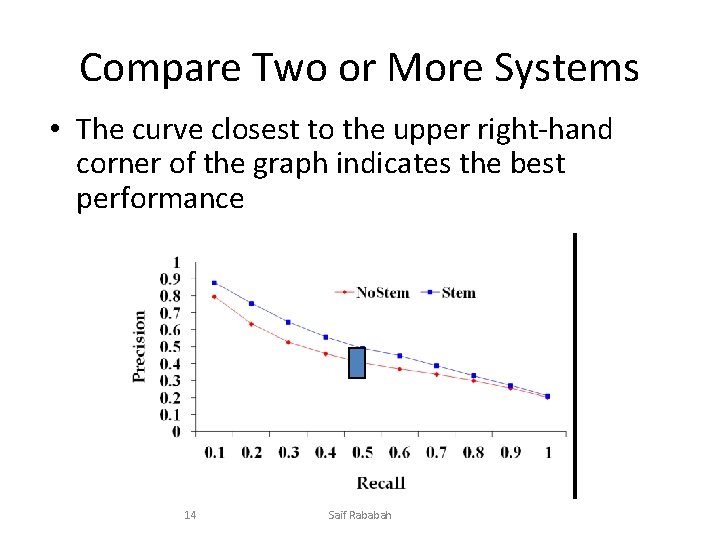

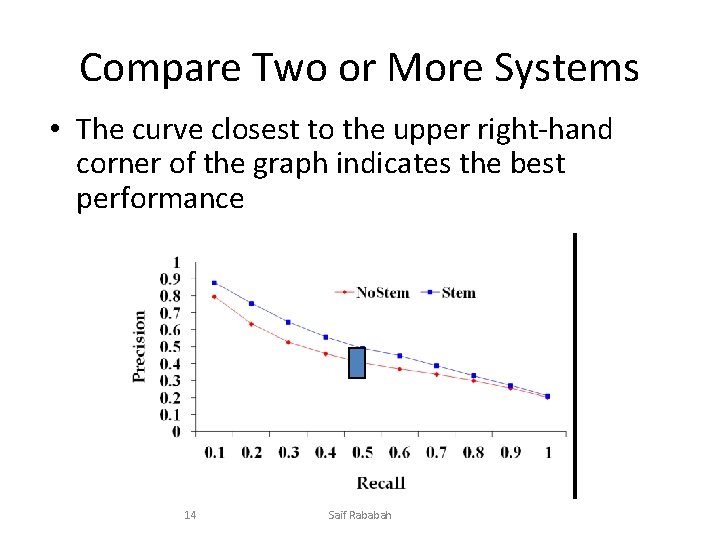

Compare Two or More Systems
• The curve closest to the upper right-hand corner of the graph indicates the best performance.

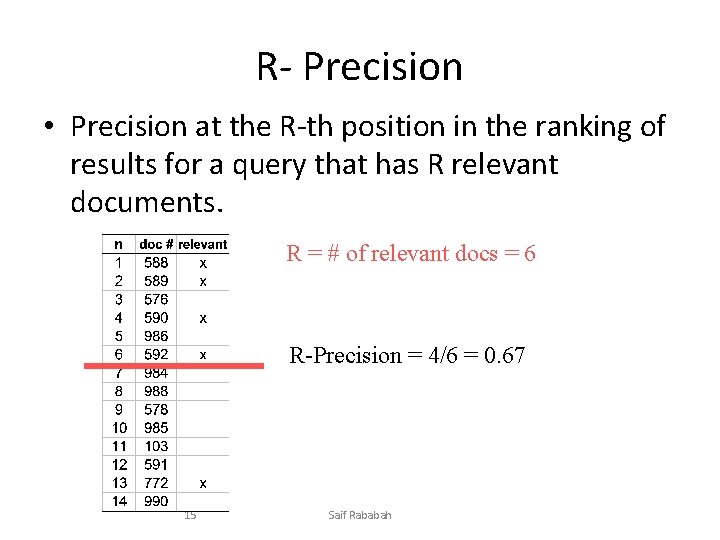

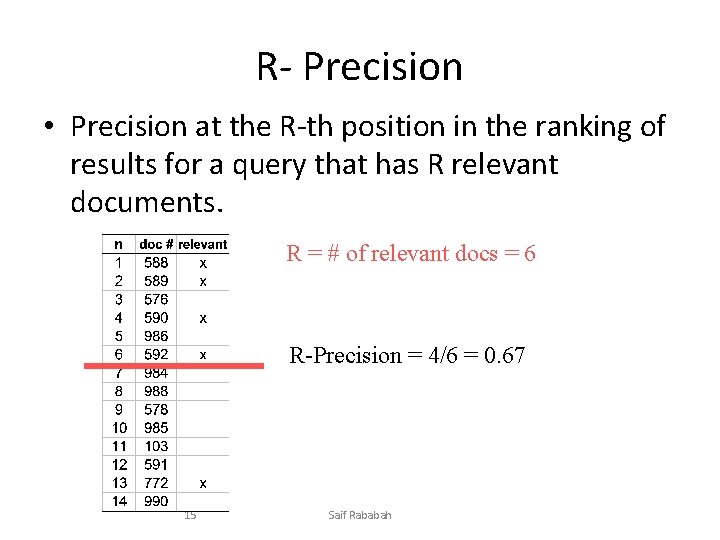

R-Precision
• Precision at the R-th position in the ranking of results for a query that has R relevant documents.
• Example: R = # of relevant docs = 6, so R-Precision = 4/6 = 0.67.
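A short sketch of the computation. The ranking is an assumption: relevant documents at ranks 1, 2, 4, 6, and 13 of 14 results, chosen so that four of the top six are relevant, as in the 4/6 example. The function name `r_precision` is hypothetical.

```python
def r_precision(ranked_relevance, total_relevant):
    """Precision over the top R results, where R = total_relevant."""
    top_r = ranked_relevance[:total_relevant]
    return sum(top_r) / total_relevant


# Assumed ranking: 14 results, relevant documents at ranks 1, 2, 4, 6, 13.
relevant_ranks = {1, 2, 4, 6, 13}
ranking = [rank in relevant_ranks for rank in range(1, 15)]
score = r_precision(ranking, total_relevant=6)
print(f"R-Precision = {score:.2f}")
```

Dividing by R rather than by the number of results actually returned means a ranking shorter than R is penalized for the missing positions.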

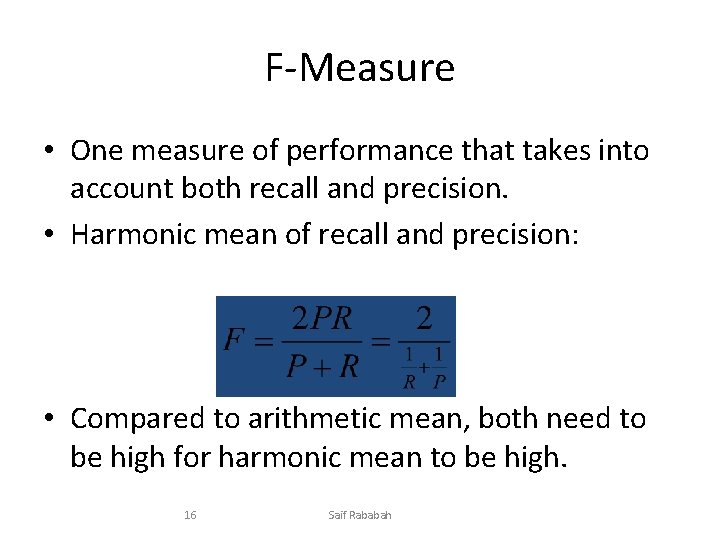

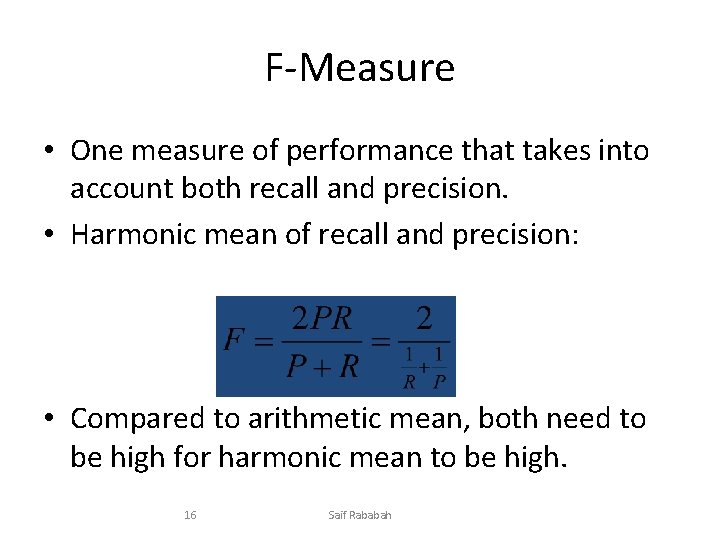

F-Measure
• One measure of performance that takes into account both recall and precision.
• Harmonic mean of recall and precision:
• Compared to the arithmetic mean, both recall and precision need to be high for the harmonic mean to be high.
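The harmonic mean of precision $P$ and recall $R$ has the standard closed form below, followed by a small made-up numeric case showing how it differs from the arithmetic mean.

$$
F = \frac{2PR}{P + R} = \frac{2}{\frac{1}{R} + \frac{1}{P}}
$$

$$
P = 1.0,\ R = 0.1:\qquad \frac{P + R}{2} = 0.55, \qquad F = \frac{2 \cdot 1.0 \cdot 0.1}{1.0 + 0.1} \approx 0.18
$$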

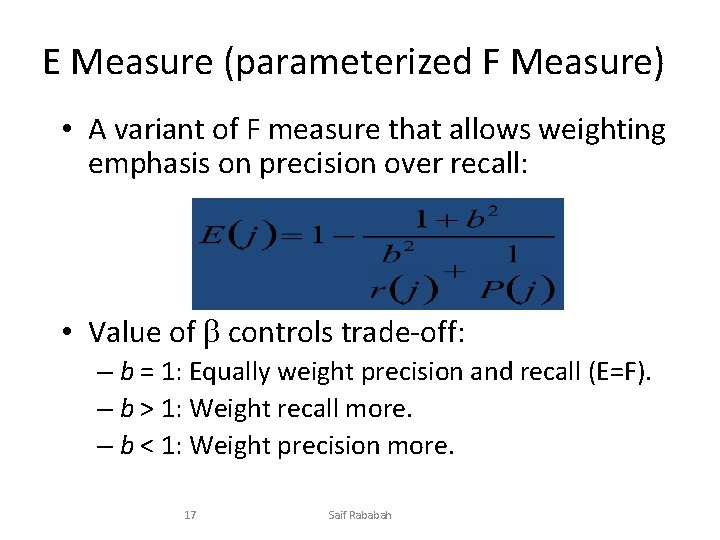

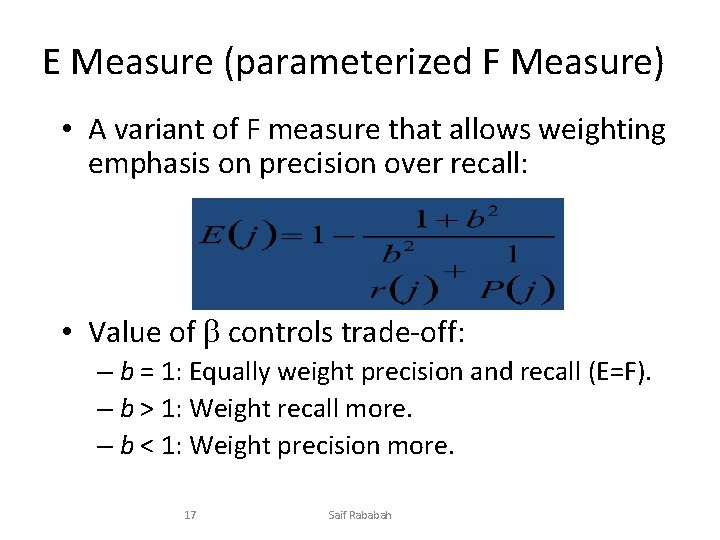

E Measure (parameterized F Measure)
• A variant of the F measure that allows the relative emphasis on precision versus recall to be weighted:
• The value of β controls the trade-off:
  – β = 1: Equally weight precision and recall (E = F).
  – β > 1: Weight recall more.
  – β < 1: Weight precision more.
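A minimal sketch of the parameterized measure, assuming the form (1 + β²)PR / (β²P + R). That form is chosen because it reduces to the harmonic mean at β = 1, matching the statement that E = F in that case; some texts instead define E as one minus this quantity. The function name and the zero-denominator convention are assumptions.

```python
def e_measure(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall (assumed form).

    beta > 1 weights recall more, beta < 1 weights precision more,
    and beta == 1 gives the ordinary F-measure.
    """
    denominator = beta ** 2 * precision + recall
    if denominator == 0:
        return 0.0  # Assumption: score is 0 when both inputs are 0.
    return (1 + beta ** 2) * precision * recall / denominator


# Hypothetical system with perfect precision but only 50% recall.
print(e_measure(1.0, 0.5, beta=1.0))
print(e_measure(1.0, 0.5, beta=2.0))
print(e_measure(1.0, 0.5, beta=0.5))
```

With recall as the weak side, raising β pulls the score down toward the recall value, while lowering β pulls it up toward the precision value.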

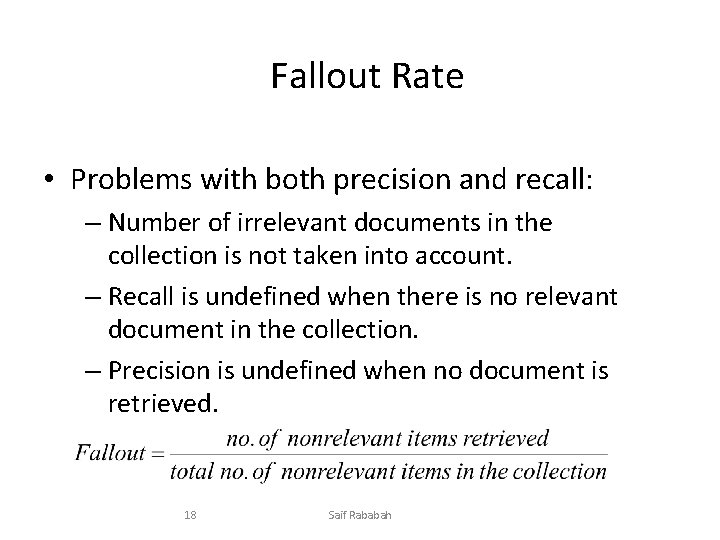

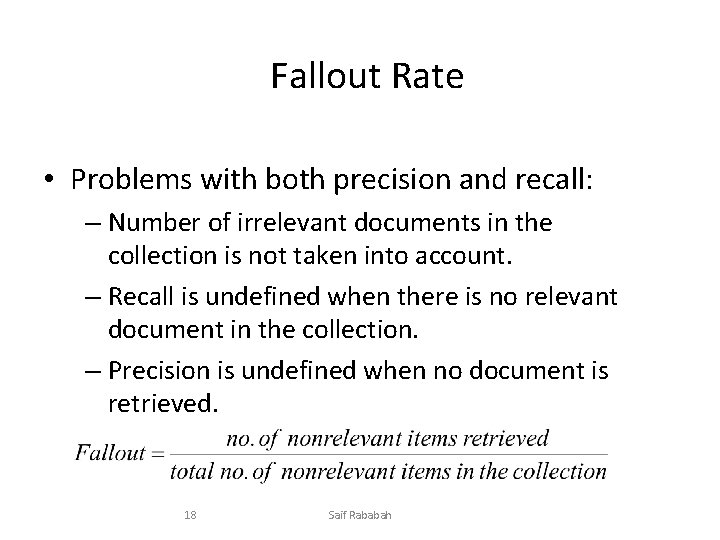

Fallout Rate
• Problems with both precision and recall:
  – Number of irrelevant documents in the collection is not taken into account.
  – Recall is undefined when there is no relevant document in the collection.
  – Precision is undefined when no document is retrieved.
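The sketch below computes all three measures from sets of document IDs and makes the undefined cases explicit by returning `None`. Fallout is taken in its usual sense, the fraction of the collection's irrelevant documents that were retrieved; the function name and the toy collection are assumptions.

```python
def precision_recall_fallout(retrieved, relevant, collection):
    """Return (precision, recall, fallout); None marks an undefined value.

    Assumes retrieved and relevant are subsets of collection.
    """
    retrieved, relevant, collection = set(retrieved), set(relevant), set(collection)
    hits = retrieved & relevant
    irrelevant = collection - relevant

    precision = len(hits) / len(retrieved) if retrieved else None
    recall = len(hits) / len(relevant) if relevant else None
    fallout = len(retrieved - relevant) / len(irrelevant) if irrelevant else None
    return precision, recall, fallout


# Toy collection of 10 documents, 3 of them relevant.
collection = range(1, 11)
relevant = {1, 2, 3}
print(precision_recall_fallout({1, 2, 4, 5}, relevant, collection))
print(precision_recall_fallout(set(), relevant, collection))
```

Unlike precision and recall, fallout depends on the size of the irrelevant part of the collection, which is the quantity the first bullet says the other two measures ignore.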

Subjective Relevance Measure
• Novelty Ratio: The proportion of items retrieved and judged relevant by the user and of which they were previously unaware.
  – Ability to find new information on a topic.
• Coverage Ratio: The proportion of relevant items retrieved out of the total relevant documents known to a user prior to the search.
  – Relevant when the user wants to locate documents which they have seen before (e.g., the budget report for Year 2000).
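Both ratios can be sketched with set operations. The function name, the document IDs, and the choice to return `None` when a denominator is empty are assumptions made for this illustration.

```python
def novelty_and_coverage(retrieved, relevant, known_to_user):
    """Return (novelty_ratio, coverage_ratio); None marks an undefined value.

    relevant: documents the user judges relevant.
    known_to_user: documents the user already knew before the search.
    """
    retrieved, relevant, known = set(retrieved), set(relevant), set(known_to_user)
    relevant_retrieved = retrieved & relevant
    known_relevant = relevant & known

    # Share of the relevant retrieved items that were new to the user.
    novelty = (
        len(relevant_retrieved - known) / len(relevant_retrieved)
        if relevant_retrieved else None
    )
    # Share of the previously known relevant items that the search found.
    coverage = (
        len(relevant_retrieved & known) / len(known_relevant)
        if known_relevant else None
    )
    return novelty, coverage


novelty, coverage = novelty_and_coverage(
    retrieved={"a", "b", "d", "x"},
    relevant={"a", "b", "c", "d", "e"},
    known_to_user={"a", "b", "c"},
)
print(novelty, coverage)
```

Here the search finds two of the three relevant documents the user already knew and one relevant document that was new to them.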

Other Factors to Consider
• User effort: Work required from the user in formulating queries, conducting the search, and screening the output.
• Response time: Time interval between receipt of a user query and the presentation of system responses.
• Form of presentation: Influence of search output format on the user’s ability to utilize the retrieved materials.