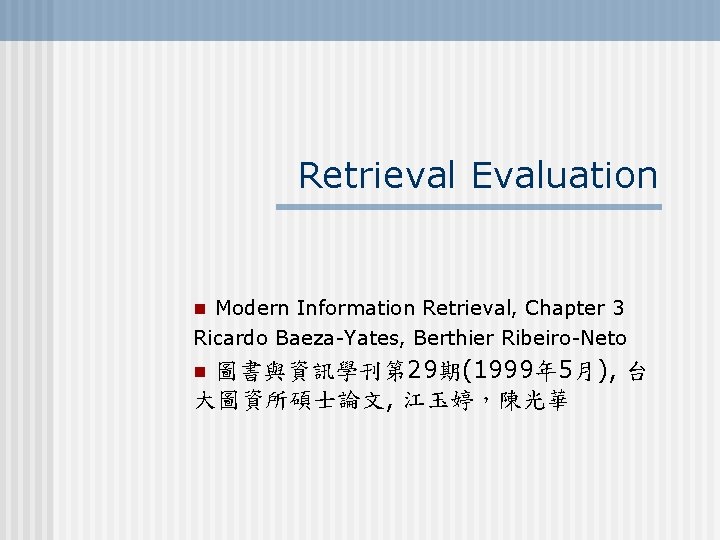

Retrieval Evaluation: Modern Information Retrieval, Chapter 3

- Slides: 40

Retrieval Evaluation

- Modern Information Retrieval, Chapter 3, Ricardo Baeza-Yates, Berthier Ribeiro-Neto
- 圖書與資訊學刊, No. 29 (May 1999); master's thesis, Graduate Institute of Library and Information Science, National Taiwan University; 江玉婷, 陳光華

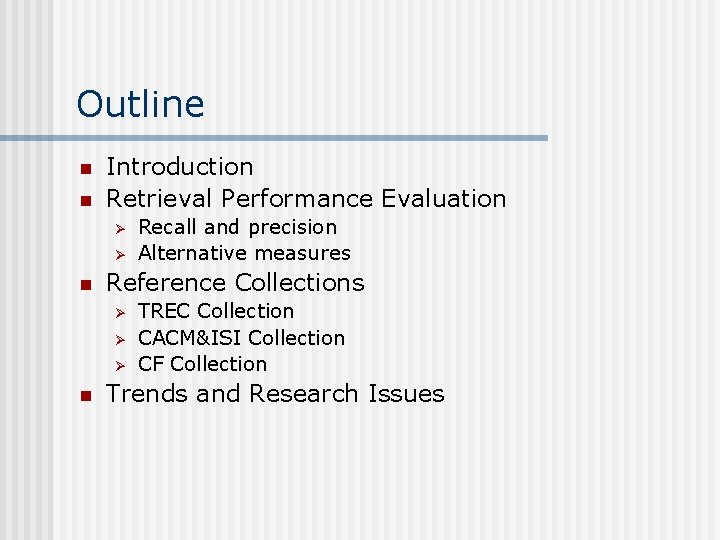

Outline

- Introduction
- Retrieval Performance Evaluation
  - Recall and precision
  - Alternative measures
- Reference Collections
  - TREC Collection
  - CACM & ISI Collection
  - CF Collection
- Trends and Research Issues

Introduction

- Types of evaluation
  - Functional analysis phase, and error analysis phase
  - Performance evaluation
- Performance evaluation
  - Response time/space required
- Retrieval performance evaluation
  - The evaluation of how precise the answer set is

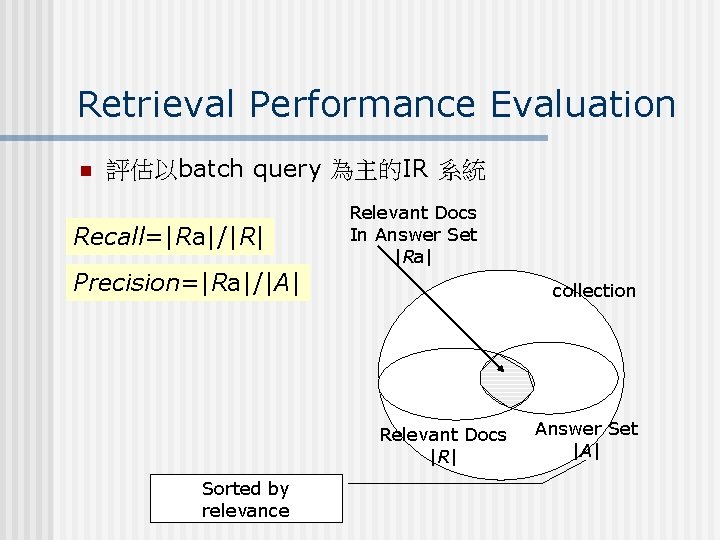

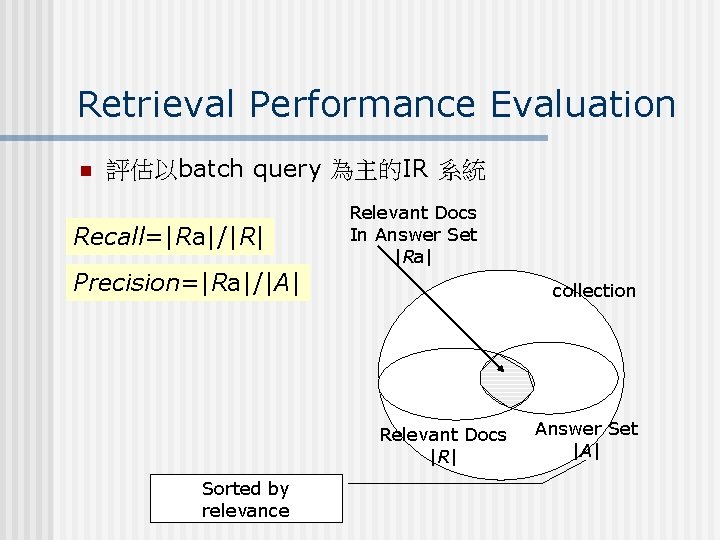

Retrieval Performance Evaluation

- Evaluates IR systems that mainly process batch queries
- $\text{Recall} = |R_a| / |R|$
- $\text{Precision} = |R_a| / |A|$
- Figure labels: collection; relevant docs $|R|$; answer set $|A|$ (sorted by relevance); relevant docs in answer set $|R_a|$

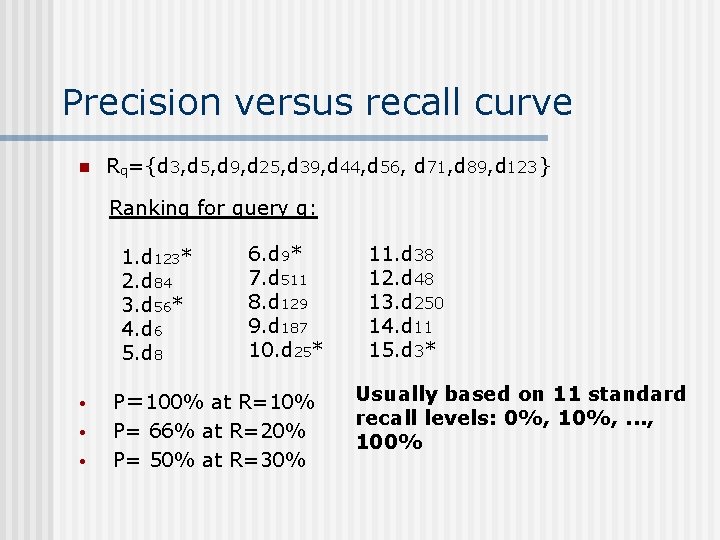

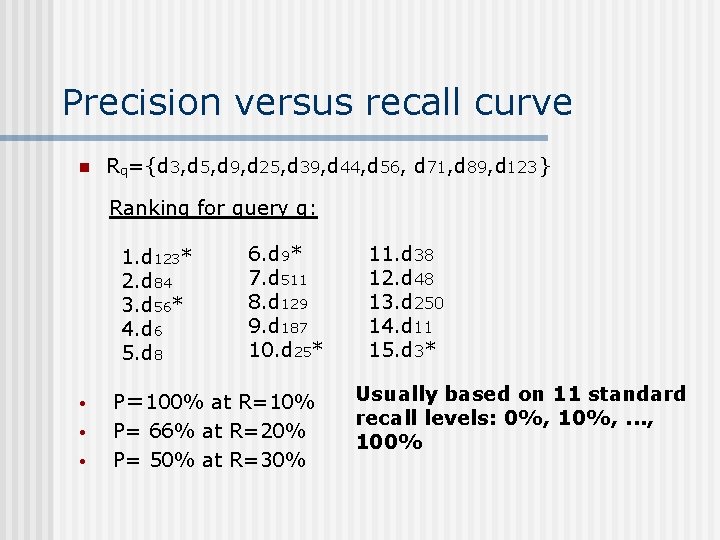

Precision versus recall curve

- Rq = {d3, d5, d9, d25, d39, d44, d56, d71, d89, d123}
- P = 100% at R = 10%; P = 66% at R = 20%; P = 50% at R = 30%
- Usually based on 11 standard recall levels: 0%, 10%, ..., 100%

Ranking for query q (* marks a relevant document):

1. d123*
2. d84
3. d56*
4. d6
5. d8
6. d9*
7. d511
8. d129
9. d187
10. d25*
11. d38
12. d48
13. d250
14. d11
15. d3*
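The precision and recall figures on this slide can be reproduced with a short script. This is a minimal sketch, not code from the slides; the function name `precision_recall_points` and the variable names are illustrative assumptions.

```python
def precision_recall_points(ranking, relevant):
    """Return (recall, precision) measured right after each relevant document."""
    points = []
    hits = 0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            # recall: fraction of all relevant docs seen so far
            # precision: fraction of the docs seen so far that are relevant
            points.append((hits / len(relevant), hits / rank))
    return points


# Relevant set Rq and ranking for query q, copied from the slide.
relevant_q = {"d3", "d5", "d9", "d25", "d39", "d44", "d56", "d71", "d89", "d123"}
ranking_q = ["d123", "d84", "d56", "d6", "d8", "d9", "d511", "d129",
             "d187", "d25", "d38", "d48", "d250", "d11", "d3"]

points = precision_recall_points(ranking_q, relevant_q)
for recall, precision in points:
    print(f"P = {precision:.2f} at R = {recall:.2f}")
```

Only five of the ten relevant documents appear in the ranking, so recall never exceeds 50% for this query.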

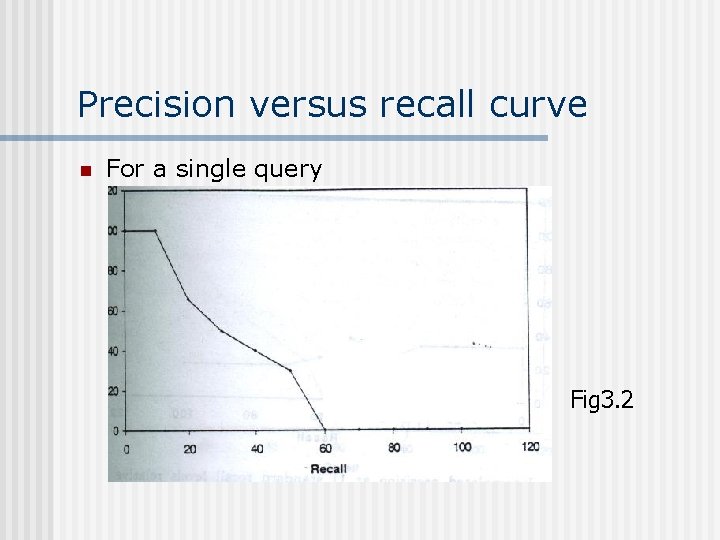

Precision versus recall curve for a single query (Fig. 3.2)

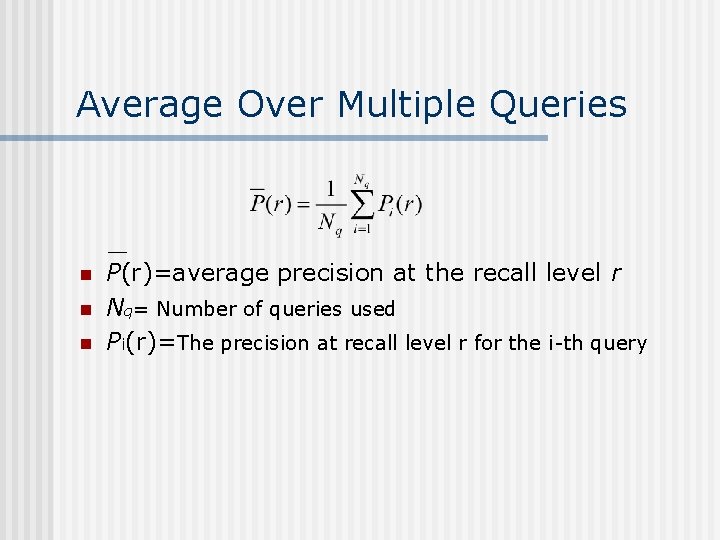

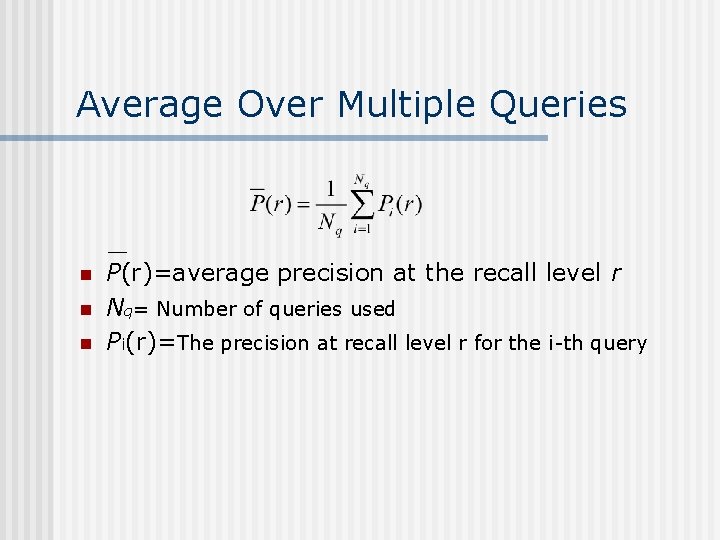

Average Over Multiple Queries

- $\bar{P}(r)$ = average precision at the recall level $r$
- $N_q$ = number of queries used
- $P_i(r)$ = the precision at recall level $r$ for the i-th query
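The slide's formula survives only as an image. Assuming the usual definition that matches the three symbols listed here, the average at each recall level would be:

```latex
\bar{P}(r) = \sum_{i=1}^{N_q} \frac{P_i(r)}{N_q}
```

That is, the precision values of the $N_q$ individual queries at recall level $r$ are summed and divided by the number of queries.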

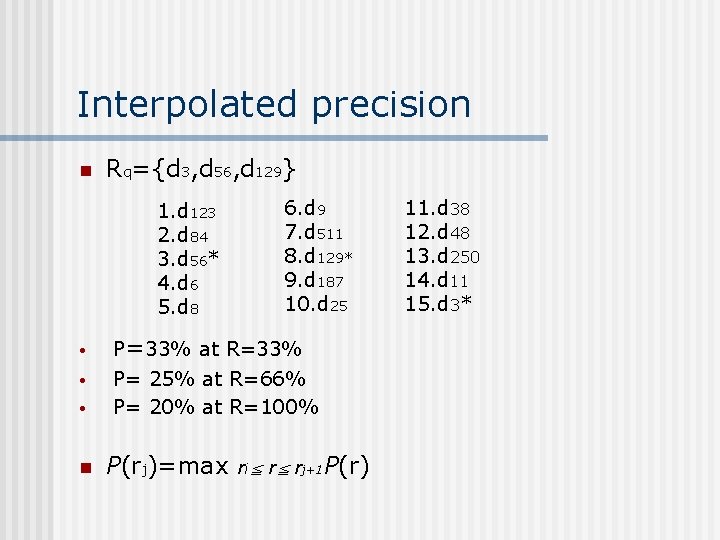

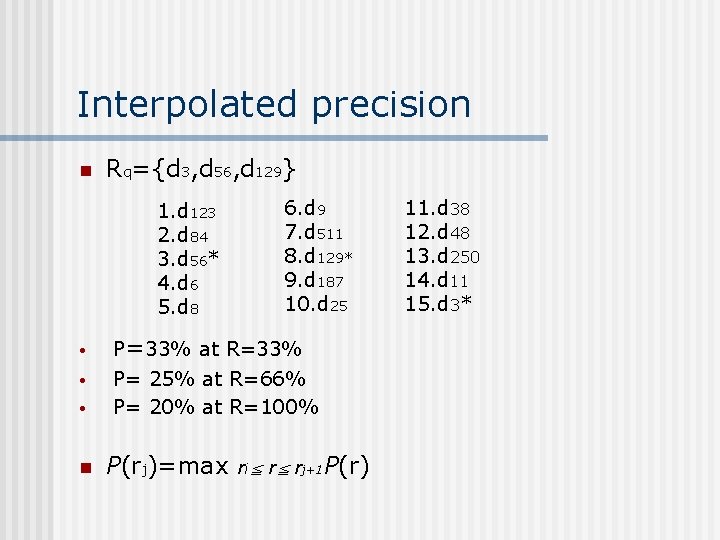

Interpolated precision

- Rq = {d3, d56, d129}
- P = 33% at R = 33%; P = 25% at R = 66%; P = 20% at R = 100%
- $P(r_j) = \max_{r_j \le r \le r_{j+1}} P(r)$

Ranking for query q (* marks a relevant document):

1. d123
2. d84
3. d56*
4. d6
5. d8
6. d9
7. d511
8. d129*
9. d187
10. d25
11. d38
12. d48
13. d250
14. d11
15. d3*

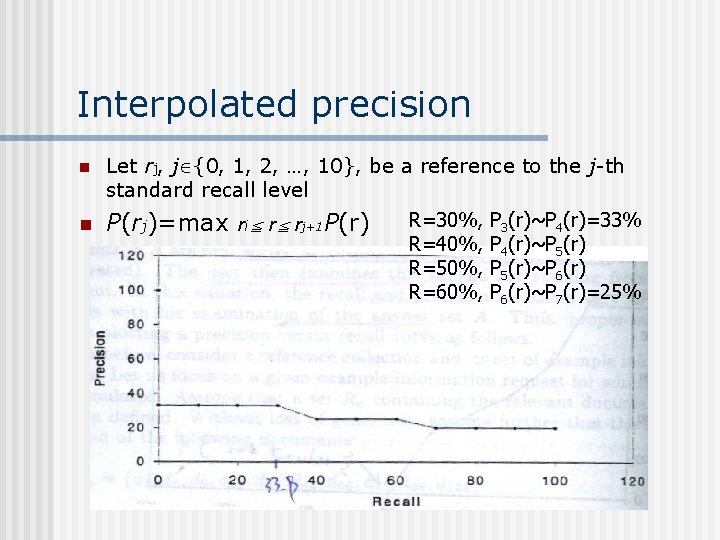

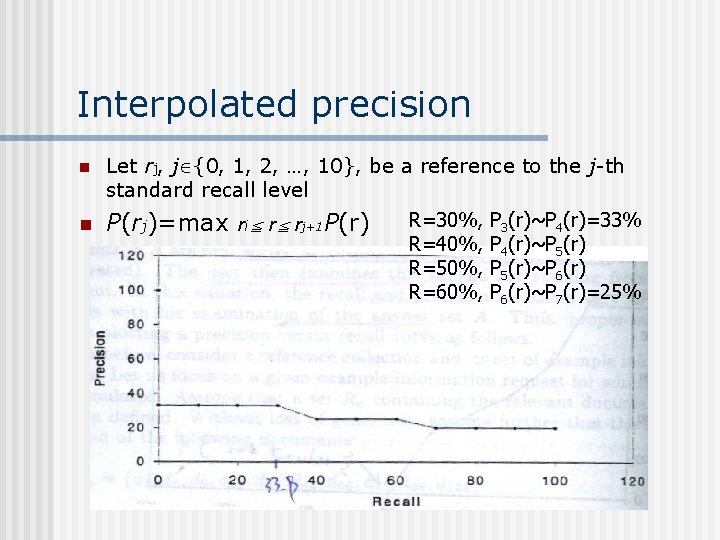

Interpolated precision

- Let $r_j$, $j \in \{0, 1, 2, \ldots, 10\}$, be a reference to the j-th standard recall level
- $P(r_j) = \max_{r_j \le r \le r_{j+1}} P(r)$
- R = 30%: $P_3(r) \sim P_4(r)$ = 33%
- R = 40%, 50%, 60%: $P_4(r) \sim P_5(r) \sim P_6(r) \sim P_7(r)$ = 25%
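The interpolation rule can be sketched in code for the three-document example (Rq = {d3, d56, d129}). One assumption is made loudly here: the value at level $r_j$ is taken as the highest precision observed at any recall greater than or equal to $r_j$. This is a common way to apply the rule when no measurement falls inside a given interval, and it reproduces the 33% and 25% values on the slide. The function name is illustrative.

```python
def interpolated_precision(ranking, relevant, levels=11):
    """Interpolated precision at the standard recall levels 0%, 10%, ..., 100%.

    Assumption: the value at level r_j is the highest precision observed
    at any recall >= r_j (0.0 if no such observation exists).
    """
    total = len(relevant)
    observed = []  # (relevant docs seen so far, precision at that rank)
    hits = 0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            observed.append((hits, hits / rank))

    result = []
    for j in range(levels):
        # recall >= j / (levels - 1), written with integers to avoid float error
        candidates = [p for h, p in observed if h * (levels - 1) >= j * total]
        result.append(max(candidates, default=0.0))
    return result


relevant_q = {"d3", "d56", "d129"}
ranking_q = ["d123", "d84", "d56", "d6", "d8", "d9", "d511", "d129",
             "d187", "d25", "d38", "d48", "d250", "d11", "d3"]

levels_q = interpolated_precision(ranking_q, relevant_q)
for j, value in enumerate(levels_q):
    print(f"R = {j * 10:3d}%  interpolated P = {value:.2f}")
```

Under this reading, levels 0% to 30% take the value 1/3, levels 40% to 60% take 0.25, and levels 70% to 100% take 0.20.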

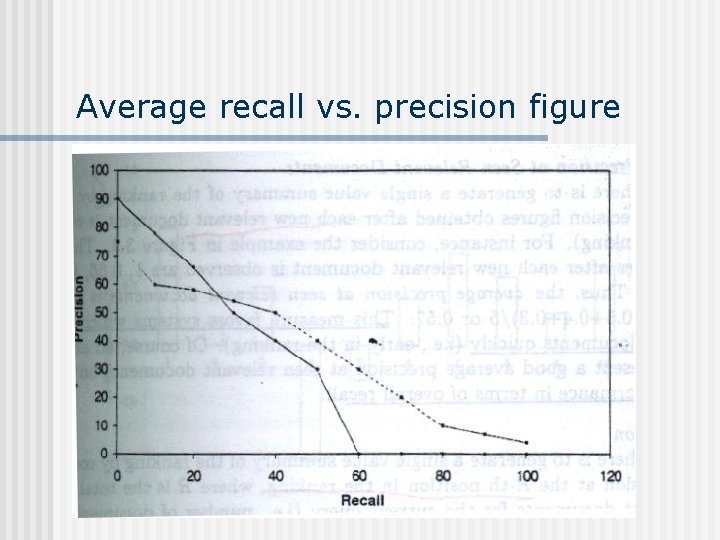

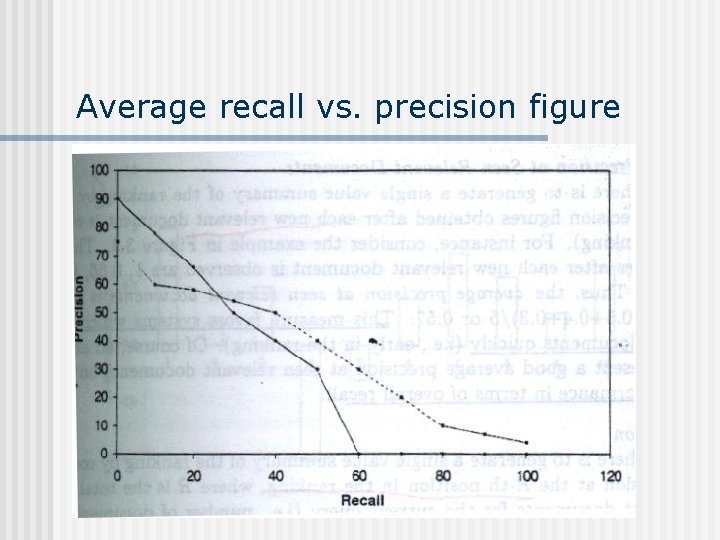

Average recall vs. precision figure

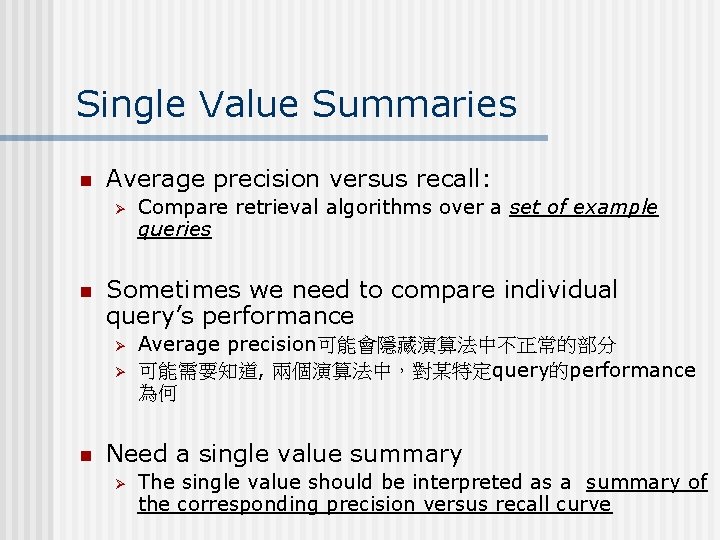

Single Value Summaries

- Average precision versus recall
  - Compare retrieval algorithms over a set of example queries
- Sometimes we need to compare an individual query's performance
  - Averaging precision over many queries may hide anomalous behavior in an algorithm
  - We may need to know how each of two algorithms performs on a specific query
- Need a single value summary
  - The single value should be interpreted as a summary of the corresponding precision versus recall curve

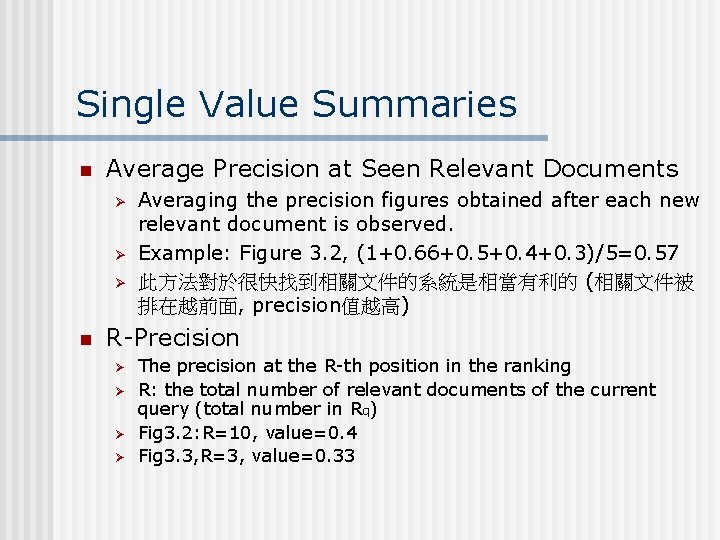

Single Value Summaries

- Average Precision at Seen Relevant Documents
  - Averaging the precision figures obtained after each new relevant document is observed
  - Example: Figure 3.2, (1 + 0.66 + 0.5 + 0.4 + 0.3) / 5 = 0.57
  - This measure favors systems that retrieve relevant documents quickly (the earlier the relevant documents are ranked, the higher the precision)
- R-Precision
  - The precision at the R-th position in the ranking
  - R: the total number of relevant documents of the current query (total number in Rq)
  - Fig. 3.2: R = 10, value = 0.4
  - Fig. 3.3: R = 3, value = 0.33
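Both single value summaries can be checked against the two example rankings. This is a sketch with illustrative function names, not code from the slides. With unrounded fractions the Fig. 3.2 average comes to 2.9 / 5 = 0.58; the 0.57 on the slide results from truncating each term before summing.

```python
def average_precision_at_seen_relevant(ranking, relevant):
    """Mean of the precision values taken after each relevant document seen."""
    precisions = []
    hits = 0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def r_precision(ranking, relevant):
    """Precision at position R, where R is the number of relevant documents."""
    r = len(relevant)
    return sum(1 for doc in ranking[:r] if doc in relevant) / r


ranking_q = ["d123", "d84", "d56", "d6", "d8", "d9", "d511", "d129",
             "d187", "d25", "d38", "d48", "d250", "d11", "d3"]
relevant_fig32 = {"d3", "d5", "d9", "d25", "d39", "d44", "d56", "d71", "d89", "d123"}
relevant_fig33 = {"d3", "d56", "d129"}

ap_fig32 = average_precision_at_seen_relevant(ranking_q, relevant_fig32)
rp_fig32 = r_precision(ranking_q, relevant_fig32)
rp_fig33 = r_precision(ranking_q, relevant_fig33)
print(f"{ap_fig32:.2f} {rp_fig32:.2f} {rp_fig33:.2f}")
```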

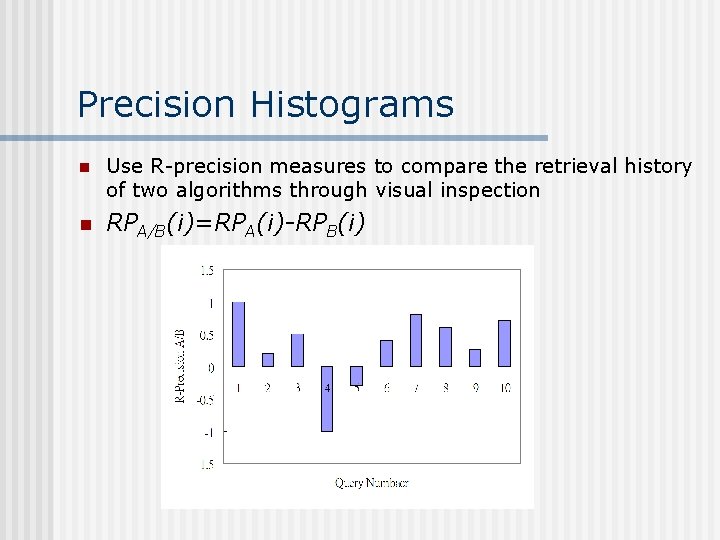

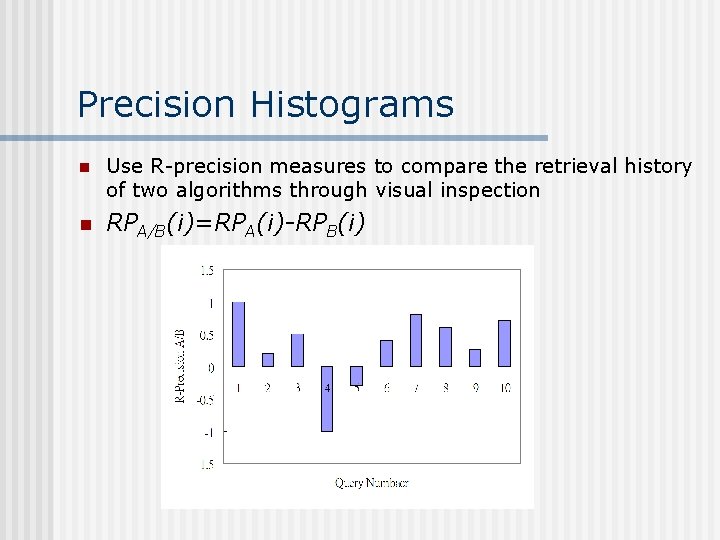

Precision Histograms

- Use R-precision measures to compare the retrieval history of two algorithms through visual inspection
- $RP_{A/B}(i) = RP_A(i) - RP_B(i)$
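The histogram values are simply per-query differences of R-precision. In the sketch below the R-precision figures for algorithms A and B are made-up placeholders for illustration, not results from any experiment, and the function name is an assumption.

```python
def precision_histogram(rp_a, rp_b):
    """Per-query difference RP_A(i) - RP_B(i); positive means A did better on query i."""
    if len(rp_a) != len(rp_b):
        raise ValueError("both algorithms must be evaluated on the same queries")
    return [a - b for a, b in zip(rp_a, rp_b)]


# Hypothetical R-precision values for three queries (illustrative only).
rp_a = [0.4, 0.33, 0.8]
rp_b = [0.5, 0.33, 0.2]

diffs = precision_histogram(rp_a, rp_b)
for i, diff in enumerate(diffs, start=1):
    winner = "A" if diff > 0 else "B" if diff < 0 else "tie"
    print(f"query {i}: {diff:+.2f} ({winner})")
```

Plotting these differences as bars, one per query, gives the visual comparison the slide describes.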

Summary Table Statistics

- Collect the single value summaries for all queries in a table
  - the number of queries
  - total number of documents retrieved by all queries
  - total number of relevant documents that were effectively retrieved when all queries are considered
  - total number of relevant documents that could have been retrieved by all queries, …

Applicability of Precision and Recall

- Estimating maximum recall requires background knowledge of the relevance of every document in the collection
- Recall and precision are related measures and are best used in combination
- Measures which quantify the informativeness of the retrieval process might now be more appropriate
- Recall and precision are easy to define when a linear ordering of the retrieved documents is enforced

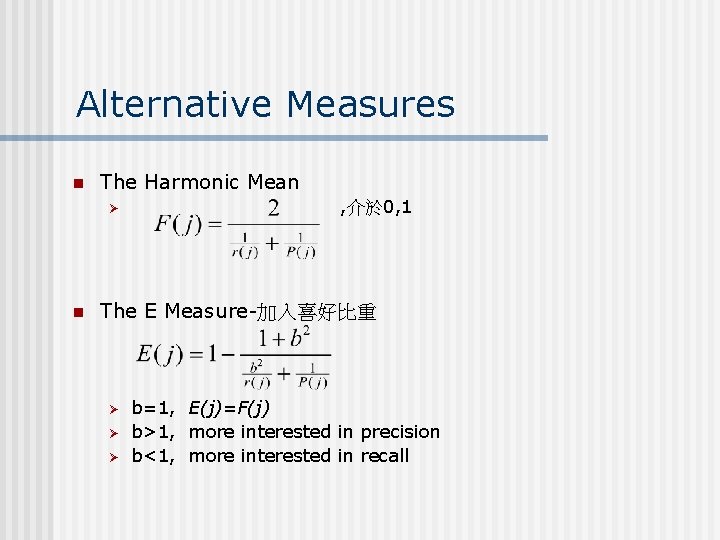

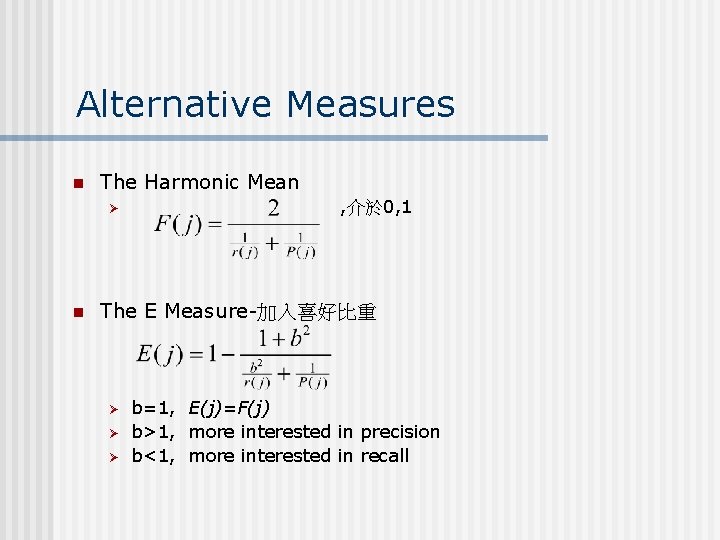

Alternative Measures

- The Harmonic Mean $F(j)$
  - Takes values between 0 and 1
- The E Measure: adds a user preference weight $b$
  - $b = 1$: $E(j) = 1 - F(j)$
  - $b > 1$: more weight on recall
  - $b < 1$: more weight on precision
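The formulas themselves appear only in the slide images. The sketch below assumes the usual definitions, $F(j) = 2 / (1/r(j) + 1/P(j))$ and $E(j) = 1 - (1 + b^2) / (b^2/r(j) + 1/P(j))$, where $r(j)$ and $P(j)$ are recall and precision at the j-th ranked document. Under these assumed formulas, $b = 1$ gives $E = 1 - F$, a very large $b$ drives $E$ toward $1 - r$ (recall dominates), and a very small $b$ drives $E$ toward $1 - P$ (precision dominates).

```python
def harmonic_mean_f(recall, precision):
    """Harmonic mean of recall and precision; 0.0 if either is zero."""
    if recall == 0 or precision == 0:
        return 0.0
    return 2 / (1 / recall + 1 / precision)


def e_measure(recall, precision, b=1.0):
    """E measure under the assumed formula 1 - (1 + b^2) / (b^2/r + 1/P)."""
    if recall == 0 or precision == 0:
        return 1.0
    return 1 - (1 + b ** 2) / (b ** 2 / recall + 1 / precision)


r, p = 0.2, 0.8  # illustrative values: low recall, high precision
print(harmonic_mean_f(r, p))    # F
print(e_measure(r, p, b=1.0))   # complement of F
print(e_measure(r, p, b=100))   # close to 1 - r
print(e_measure(r, p, b=0.01))  # close to 1 - p
```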

Reference Collection

- Reference test collections used to evaluate IR systems
  - TIPSTER/TREC: large, used for experimentation
  - CACM, ISI: of historical significance
  - Cystic Fibrosis: small collection; relevant documents were determined by experts after discussion

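Criticisms of IR Systems

- Lacks a solid formal framework as a basic foundation
  - No solution! Whether a document is relevant to a query is highly subjective
- Lacks robust and consistent testbeds and benchmarks
  - Early work developed small-scale, experimental test data
  - After 1990, TREC was established; it gathered tens of thousands of documents and made them available to research groups for evaluating IR systems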

TREC (Text REtrieval Conference)

- Initiated under the National Institute of Standards and Technology (NIST)
- Goals:
  - Providing a large test collection
  - Uniform scoring procedures
  - Forum
- 7th TREC conference in 1998:
  - Document collection: test collections, example information requests (topics), relevant docs
  - The benchmark tasks

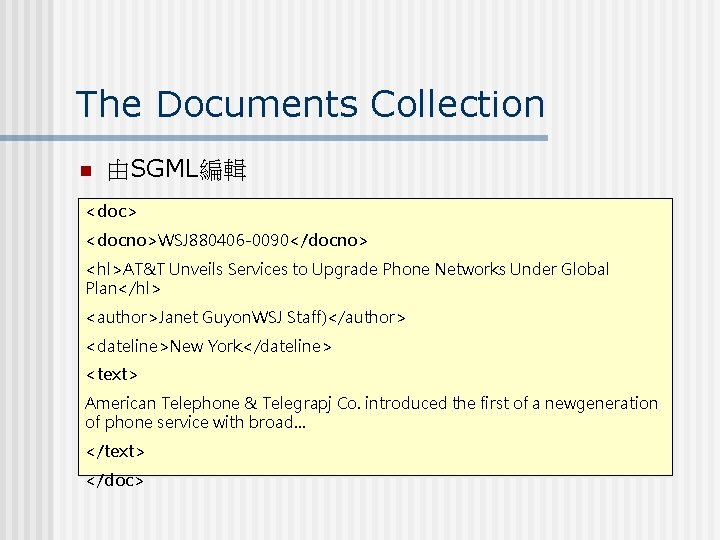

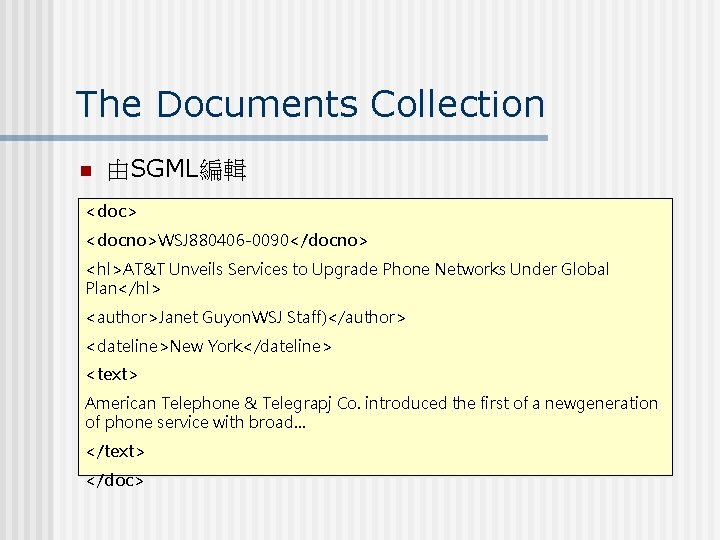

The Documents Collection

Documents are marked up in SGML, for example:

    <doc>
    <docno>WSJ880406-0090</docno>
    <hl>AT&T Unveils Services to Upgrade Phone Networks Under Global Plan</hl>
    <author>Janet Guyon (WSJ Staff)</author>
    <dateline>New York</dateline>
    <text>
    American Telephone & Telegraph Co. introduced the first of a new generation of phone service with broad…
    </text>
    </doc>
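A record with this layout can be pulled apart with a few regular expressions. The sketch below is illustrative only: the helper `extract_fields` is hypothetical, it assumes each tag appears at most once and is explicitly closed, and it is not a general SGML parser. The sample record is abbreviated from the slide.

```python
import re

# Abbreviated copy of the slide's sample record (text is elided in the source).
sample_doc = """<doc>
<docno>WSJ880406-0090</docno>
<hl>AT&T Unveils Services to Upgrade Phone Networks Under Global Plan</hl>
<author>Janet Guyon (WSJ Staff)</author>
<dateline>New York</dateline>
<text>
American Telephone & Telegraph Co. introduced the first of a new generation ...
</text>
</doc>"""


def extract_fields(doc_text, tags=("docno", "hl", "author", "dateline", "text")):
    """Return {tag: content} for each listed tag found in the record."""
    fields = {}
    for tag in tags:
        match = re.search(rf"<{tag}>(.*?)</{tag}>", doc_text,
                          flags=re.DOTALL | re.IGNORECASE)
        if match:
            fields[tag] = match.group(1).strip()
    return fields


fields = extract_fields(sample_doc)
print(fields["docno"], "|", fields["dateline"])
```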

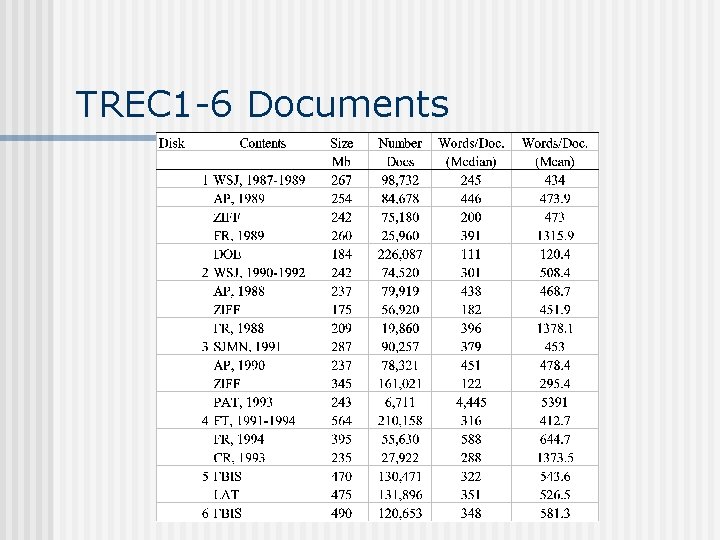

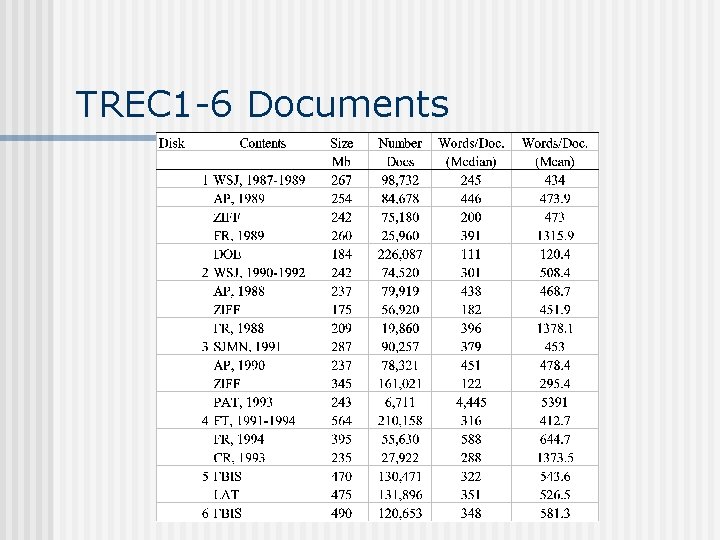

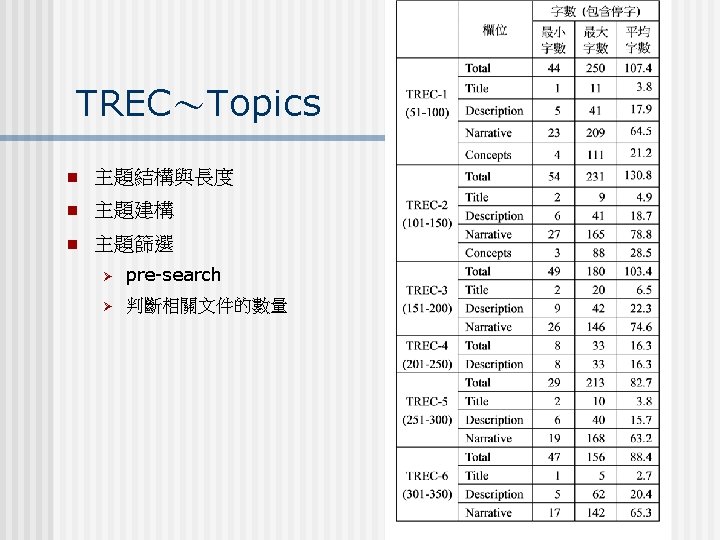

TREC 1-6 Documents

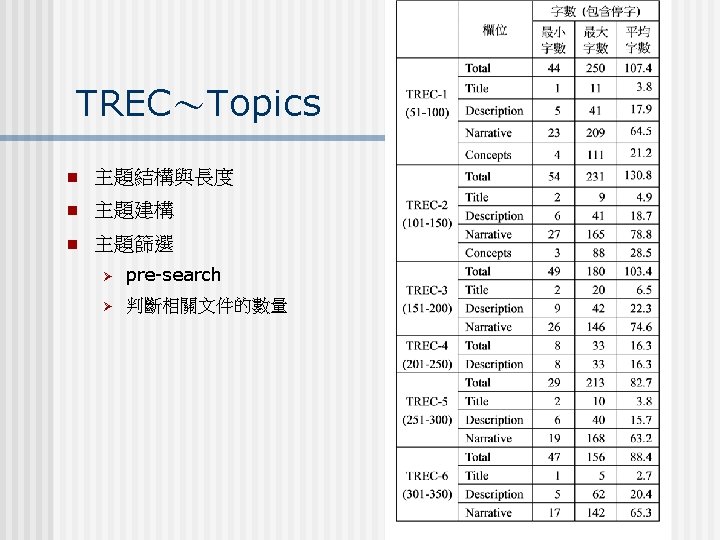

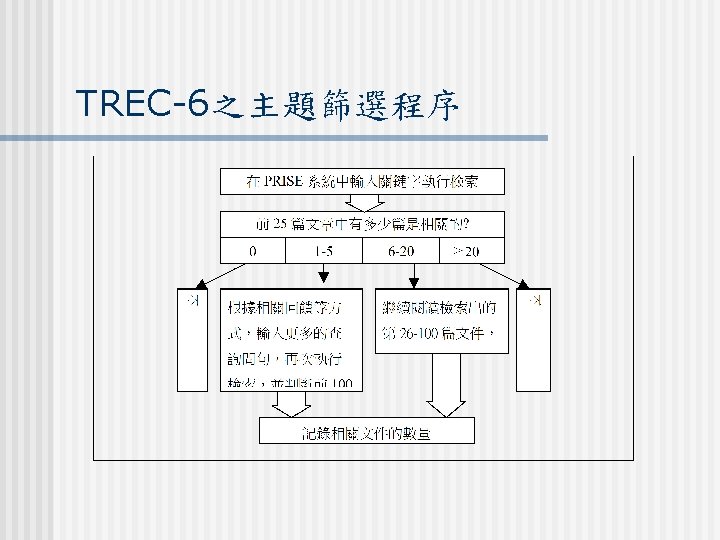

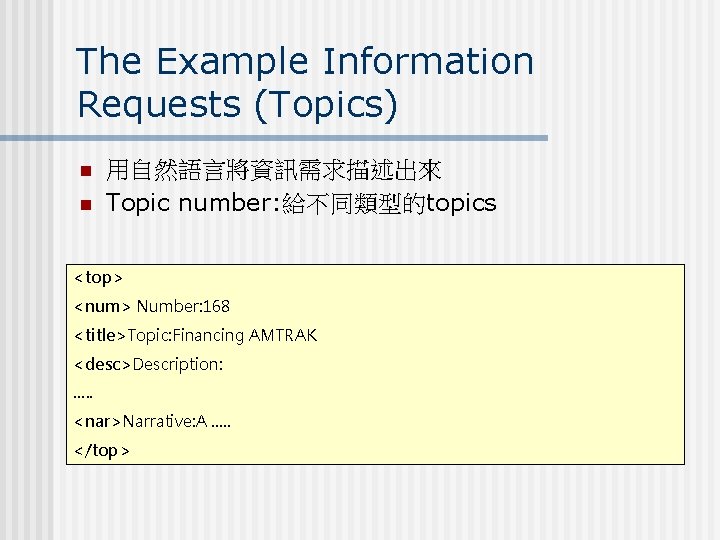

The Example Information Requests (Topics)

- Information needs are described in natural language
- Topic number: assigned to different types of topics

Example topic:

    <top>
    <num> Number: 168
    <title> Topic: Financing AMTRAK
    <desc> Description: …
    <nar> Narrative: A …
    </top>

The (Benchmark) Tasks at the TREC Conferences

- Ad hoc task
  - Receive new requests and execute them on a pre-specified document collection
- Routing task
  - Receive test information requests and two document collections
  - First collection: training and tuning the retrieval algorithm
  - Second collection: testing the tuned retrieval algorithm

Other tasks:

- *Chinese
- Filtering
- Interactive
- *NLP (natural language processing)
- Cross languages
- High precision
- Spoken document retrieval
- Query Task (TREC-7)

New TREC Tracks

- Interactive Track
- Cross language Track
- Filtering Track
- Genome Track
- HARD Track
- Novelty Track
- Question Answering Track
- Robust Retrieval Track
- Video Track
- Web Track

http://trec.nist.gov/tracks.html

Evaluation Measures at the TREC Conferences

- Summary table statistics
- Recall-precision
- Document level averages*
- Average precision histogram

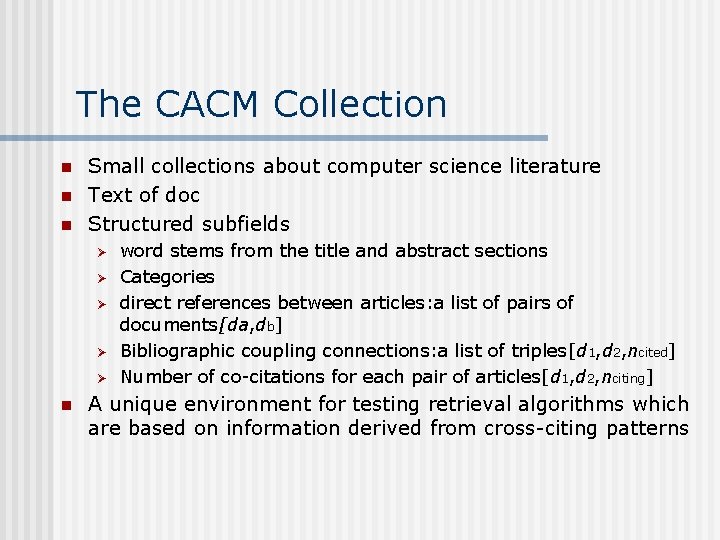

The CACM Collection

- Small collection of computer science literature
- Text of the documents
- Structured subfields
  - Word stems from the title and abstract sections
  - Categories
  - Direct references between articles: a list of pairs of documents [da, db]
  - Bibliographic coupling connections: a list of triples [d1, d2, ncited]
  - Number of co-citations for each pair of articles: [d1, d2, nciting]
- A unique environment for testing retrieval algorithms which are based on information derived from cross-citing patterns

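The ISI Collection

- The ISI test collection was assembled from an earlier collection built by Small at ISI (Institute of Scientific Information)
- Most of the documents were selected from the cross-citation study in Small's original project
- It supports research on similarities based on terms and on cross-citation patterns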

The Cystic Fibrosis Collection

- Documents about cystic fibrosis
- Topics and relevant documents were produced by experts with clinical or research experience in the field
- Relevance scores
  - 0: non-relevance
  - 1: marginal relevance
  - 2: high relevance

Characteristics of CF collection

- Relevance scores are all assigned by experts
- Good number of information requests (relative to the collection size)
  - The respective query vectors present overlap among themselves
  - Previous queries can be used to improve retrieval efficiency

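Trends and Research Issues

- Interactive user interface
  - It is generally believed that retrieval with feedback improves effectiveness
  - How should evaluation measures be chosen in this setting?
- Research on evaluation measures other than precision and recall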