Mega Software Engineering Katsuro Inoue Pankaj K Garg

- Slides: 30

Mega Software Engineering

Katsuro Inoue*, Pankaj K. Garg**, Hajimu Iida+, Kenichi Matsumoto+, Koji Torii+

*Osaka University, **Zee Source, +Nara Institute of Science and Technology

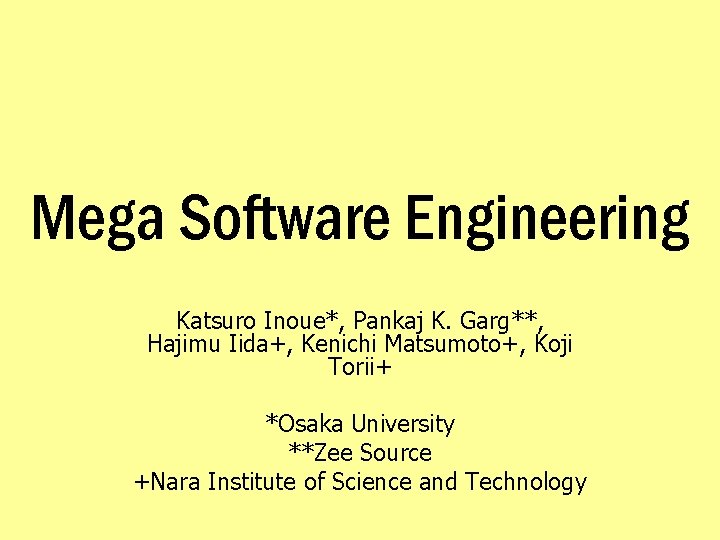

Background and Motivation

- History of software engineering technologies
  - Almost 40 years
  - Various kinds of technologies
- Mostly targeted at limited-scale optimization based on limited-scale knowledge
  - Due to limitations in information collection
- Rapid performance growth of hardware
  - Faster CPU and memory
  - Vast disk capacity
  - Ubiquitous networking

Profes 2005, Oulu, Finland, June 13-15, 2005

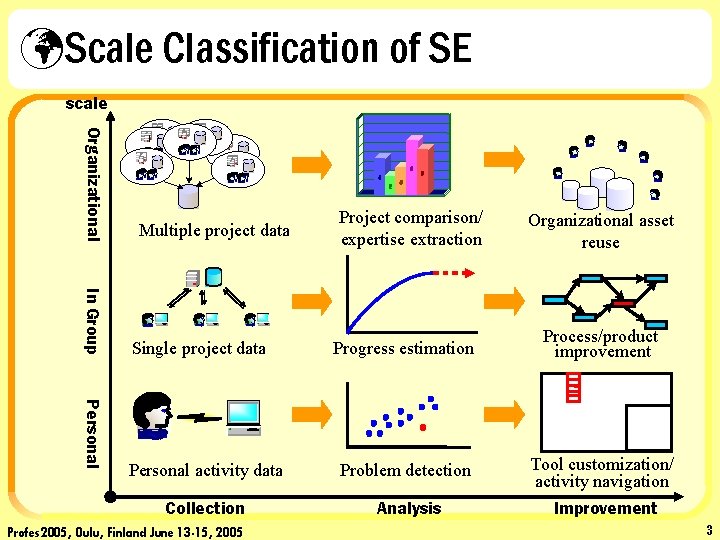

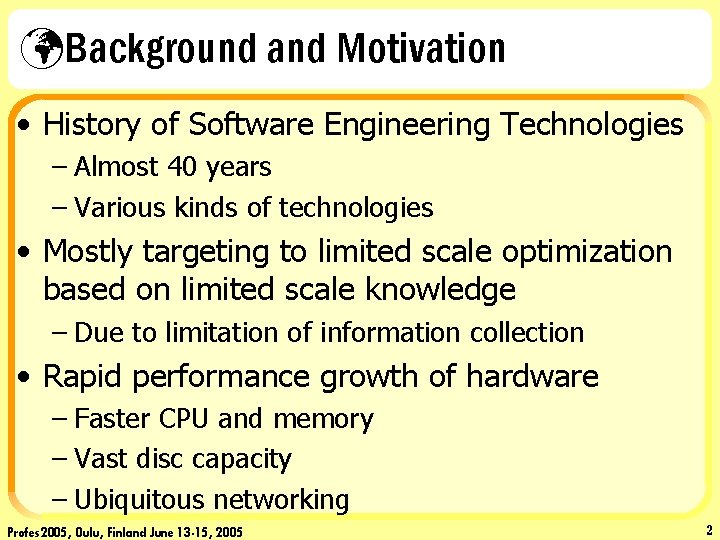

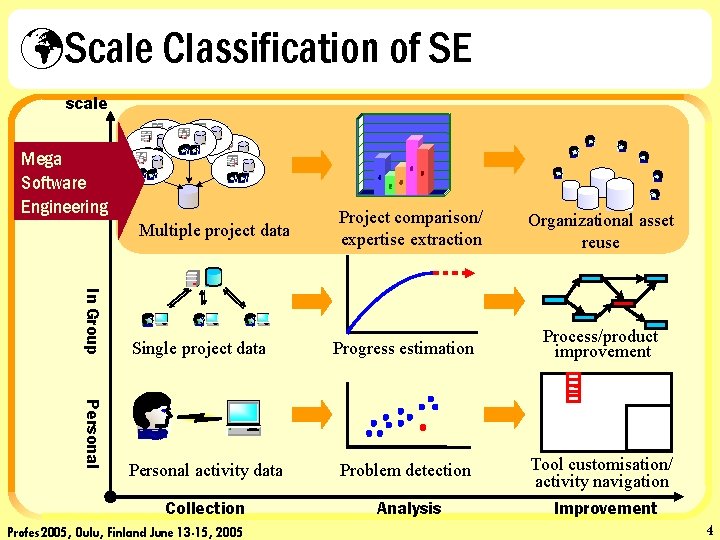

Scale Classification of SE

| Scale | Collection | Analysis | Improvement |
|---|---|---|---|
| Organizational | Multiple project data | Project comparison/expertise extraction | Organizational asset reuse |
| In group | Single project data | Progress estimation | Process/product improvement |
| Personal | Personal activity data | Problem detection | Tool customization/activity navigation |

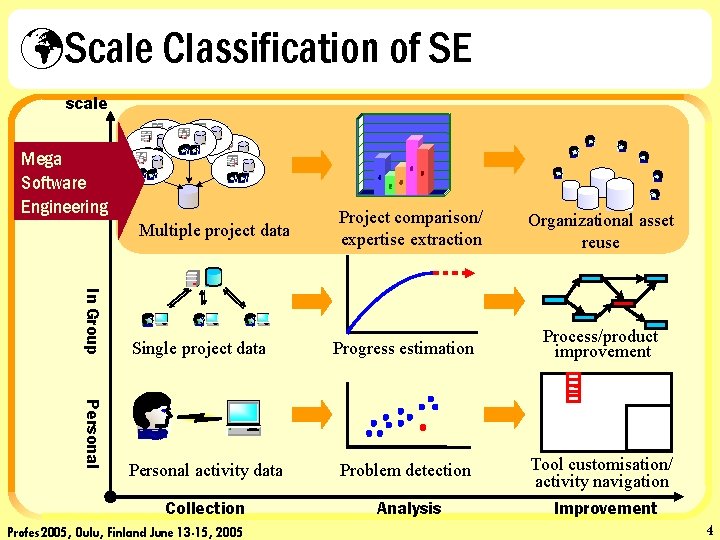

Scale Classification of SE (revisited): the organizational scale (multiple project data, project comparison/expertise extraction, organizational asset reuse) is labeled Mega Software Engineering.

Mega Software Engineering (MSE)

- Targets many projects
- A new concept, not a new technology in itself
- A collection of key technologies, both existing and emerging
  - Distributed environments and data sharing
  - Analysis and data mining
  - Project monitoring and control
  - Scalable computing, ...
- Uses advances in hardware performance, e.g., network bandwidth, CPU clock, memory space, disk capacity, ...
  - Software engineering technology should share in the advances of hardware, which are currently exploited mainly for multimedia, grids, simulators, ...

Characteristics of MSE

- Experience and knowledge of individual developers or projects are collected, refined into assets, and reused in the community
- A single-level, flat, static community for information sharing
- Automatic process: little burden on each developer or manager
- Organizational view: benefits may be obtained directly at the organization level (not from an individual developer's or a project's view)
- Open source development is a simple case of MSE (MSE focuses on analysis and feedback)

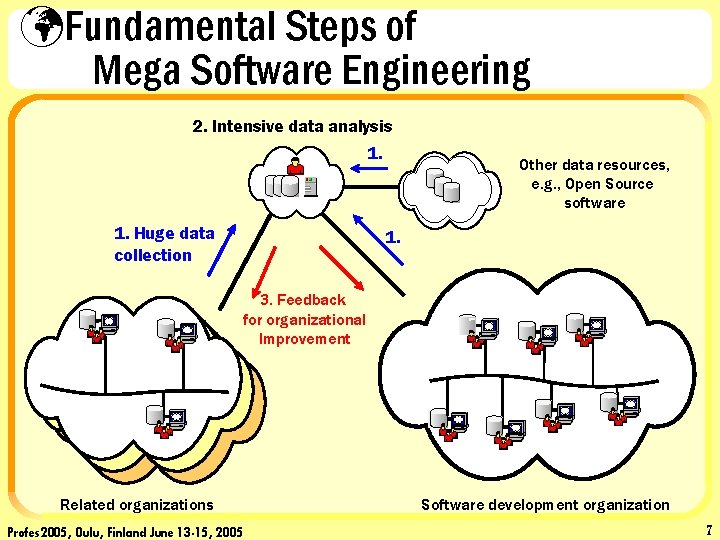

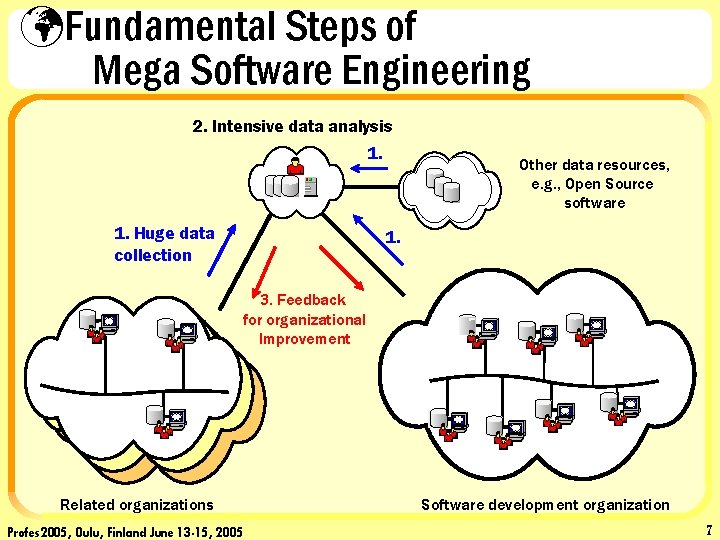

Fundamental Steps of Mega Software Engineering

1. Huge data collection from the software development organization, related organizations, and other data resources (e.g., open source software)
2. Intensive data analysis
3. Feedback for organizational improvement

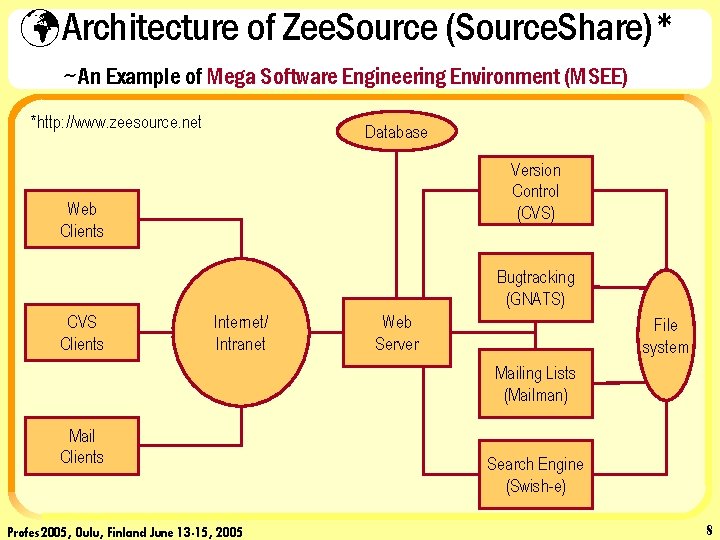

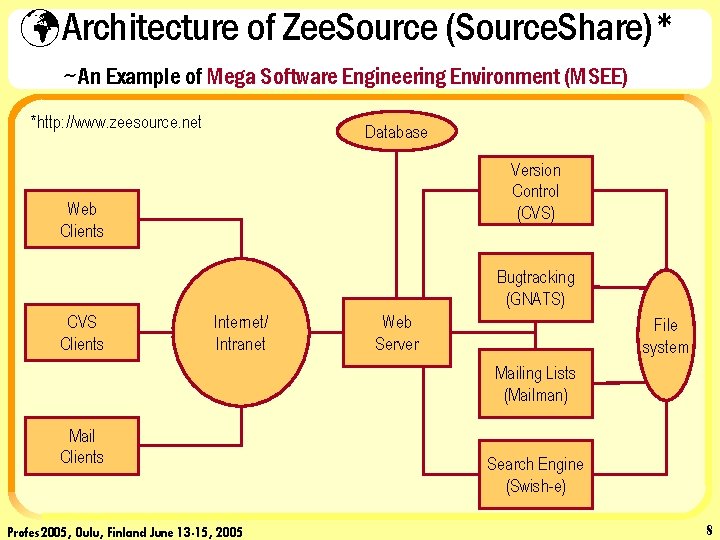

Architecture of ZeeSource (SourceShare)*: An Example of a Mega Software Engineering Environment (MSEE)

- Server components: Web server, database, file system, version control (CVS), bug tracking (GNATS), mailing lists (Mailman), search engine (Swish-e)
- Clients: Web clients, CVS clients, and mail clients, connected over the Internet/intranet

*http://www.zeesource.net

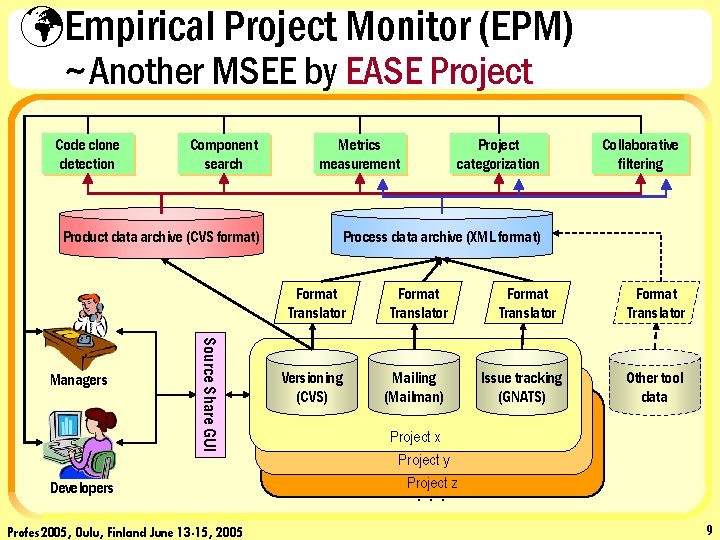

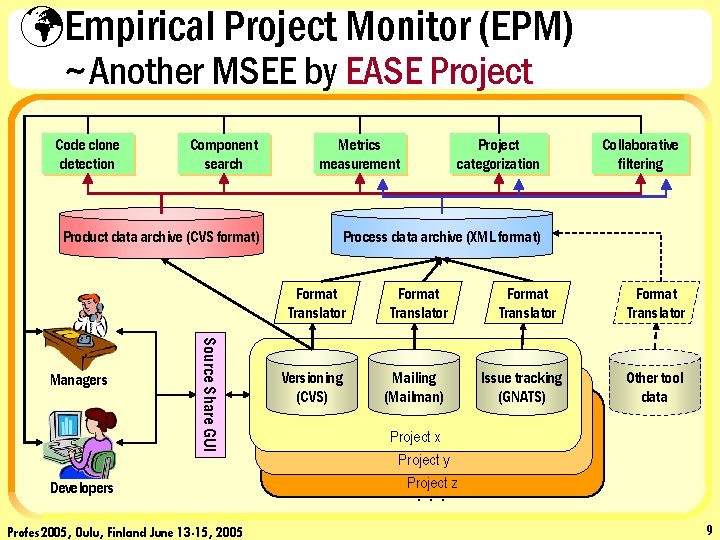

Empirical Project Monitor (EPM): Another MSEE by the EASE Project

- Data sources: projects x, y, z, ... using versioning (CVS), mailing (Mailman), issue tracking (GNATS), and other tools
- Format translators convert each tool's data into the process data archive (XML format) and the product data archive (CVS format)
- Analysis: code clone detection, component search, metrics measurement, project categorization, collaborative filtering
- Presentation: SourceShare and the GUI, used by developers and managers
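The format translators in this architecture normalize each tool's native output into a shared archive. A minimal sketch of that idea for check-in data only, assuming a hypothetical pipe-separated log and hypothetical XML element names (`process-data`, `checkin`); it is not EPM's actual translator or schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical raw check-in log, one record per line: date|author|file|added|deleted.
# This is NOT CVS's real log format; it stands in for one tool's native output.
RAW_LOG = """\
2004-03-01|alice|src/net.c|120|0
2004-03-15|bob|src/net.c|30|12
2004-04-02|alice|src/ui.c|80|5
"""


def translate_checkins(raw: str) -> ET.Element:
    """Convert raw check-in records into a common XML process-data tree.

    Element and attribute names are illustrative assumptions,
    not EPM's actual schema.
    """
    root = ET.Element("process-data", source="versioning")
    for line in raw.strip().splitlines():
        date, author, path, added, deleted = line.split("|")
        ET.SubElement(root, "checkin", date=date, author=author,
                      file=path, added=added, deleted=deleted)
    return root


tree = translate_checkins(RAW_LOG)
print(ET.tostring(tree, encoding="unicode"))
```

One translator per tool keeps the analysis side independent of CVS, Mailman, or GNATS specifics: analysis tools read only the common archive.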

EASE Project (http://www.empirical.jp)

- Empirical Approach to Software Engineering
- Uses the concept of MSE as its basis
- Funded by MEXT (the Japanese Ministry of Education, Culture, Sports, Science and Technology)
- Five-year project starting in 2003

EASE Project Activities

1. Development of EPM
2. Application of EPM to real projects
3. Collection of data and expertise on empirical SE
4. Organizational benefits from applying EPM

EPM Analysis Features

- The core EPM provides massive, exact data for automatic analysis by co-existing analysis tools and plug-ins
- Analysis features:
  - Simple data browsing in many kinds of charts and tables
  - Latent Semantic Analysis (automatic categorization)
  - Code-clone detection
  - Software component searching and ranking
  - Many other traditional software metrics

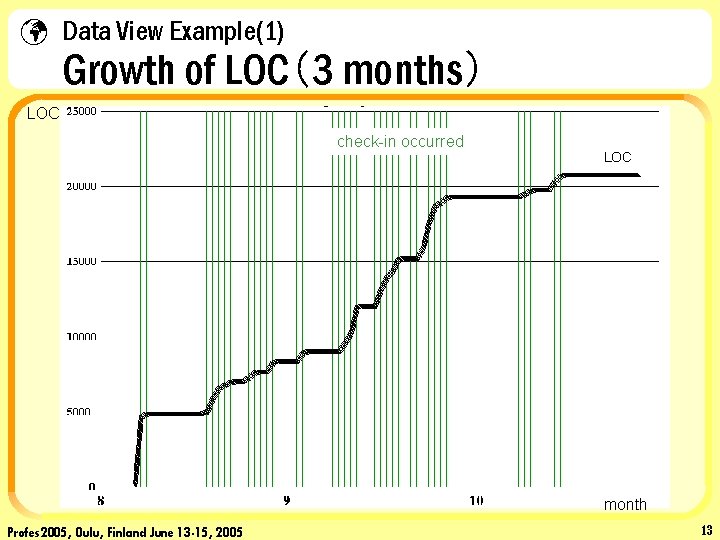

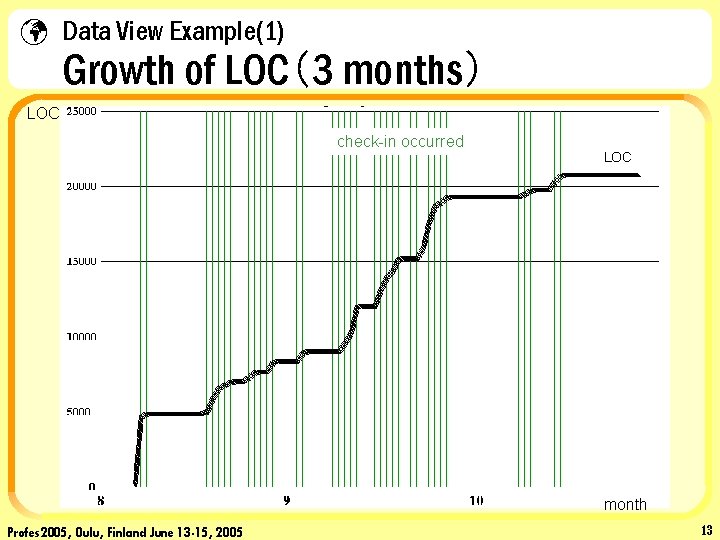

Data View Example (1): Growth of LOC over 3 months (chart of LOC by month, with check-in occurrences marked)
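A chart like this can be derived directly from check-in records. A minimal sketch, assuming hypothetical records of (date, lines added, lines deleted) per check-in: it accumulates net LOC month by month, and months with no check-ins are simply absent from the result.

```python
# Hypothetical check-in records: (ISO date, lines added, lines deleted).
CHECKINS = [
    ("2004-03-01", 120, 0),
    ("2004-03-15", 30, 12),
    ("2004-04-02", 80, 5),
    ("2004-05-20", 40, 60),
]


def loc_growth(checkins):
    """Return {"YYYY-MM": cumulative LOC at the end of that month}."""
    net_by_month = {}
    for day, added, deleted in checkins:
        month = day[:7]  # "YYYY-MM"
        net_by_month[month] = net_by_month.get(month, 0) + added - deleted
    growth, total = {}, 0
    for month in sorted(net_by_month):  # ISO strings sort chronologically
        total += net_by_month[month]
        growth[month] = total
    return growth


print(loc_growth(CHECKINS))
```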

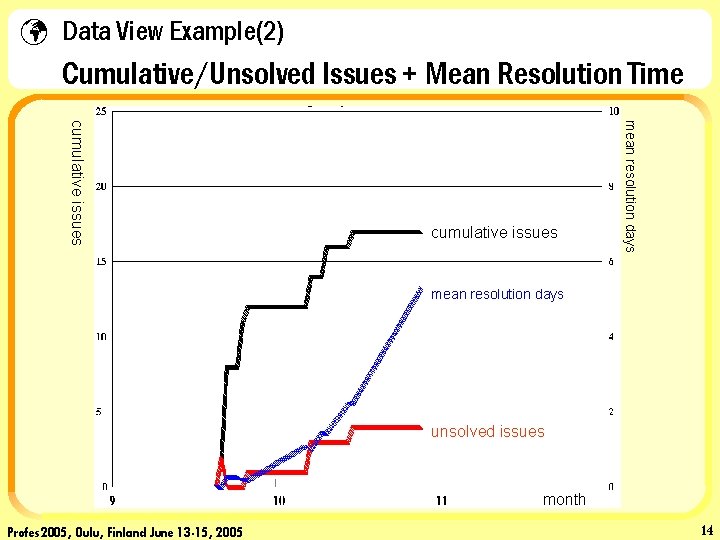

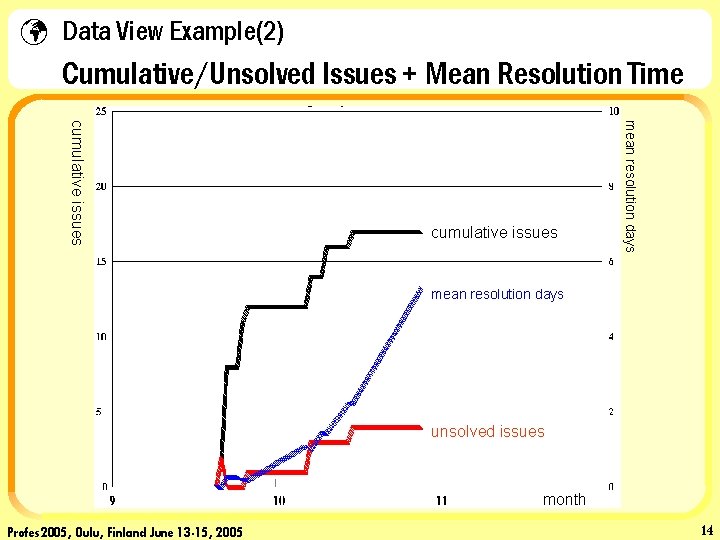

Data View Example (2): Cumulative/Unsolved Issues and Mean Resolution Time (chart by month of cumulative issues, unsolved issues, and mean resolution days)
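All three series can be computed from issue-tracker records alone. In this sketch the input format is hypothetical, and "mean resolution time" is assumed to be the average open-to-resolved duration of issues resolved within that month; the slide does not give the exact definition.

```python
from datetime import date, timedelta

# Hypothetical issue records: (opened, resolved or None if still open).
ISSUES = [
    (date(2004, 3, 2), date(2004, 3, 12)),
    (date(2004, 3, 20), date(2004, 4, 9)),
    (date(2004, 4, 5), None),
    (date(2004, 4, 25), date(2004, 5, 1)),
]


def month_end(year, month):
    """Last calendar day of the given month."""
    first_of_next = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
    return first_of_next - timedelta(days=1)


def issue_stats(issues, months):
    """Per (year, month): (cumulative issues, unsolved at month end, mean resolution days)."""
    stats = {}
    for y, m in months:
        end = month_end(y, m)
        opened = [i for i in issues if i[0] <= end]
        unsolved = [i for i in opened if i[1] is None or i[1] > end]
        resolved = [(o, c) for o, c in issues
                    if c is not None and (c.year, c.month) == (y, m)]
        mean_days = (sum((c - o).days for o, c in resolved) / len(resolved)
                     if resolved else None)
        stats[(y, m)] = (len(opened), len(unsolved), mean_days)
    return stats


stats = issue_stats(ISSUES, [(2004, 3), (2004, 4), (2004, 5)])
print(stats)
```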

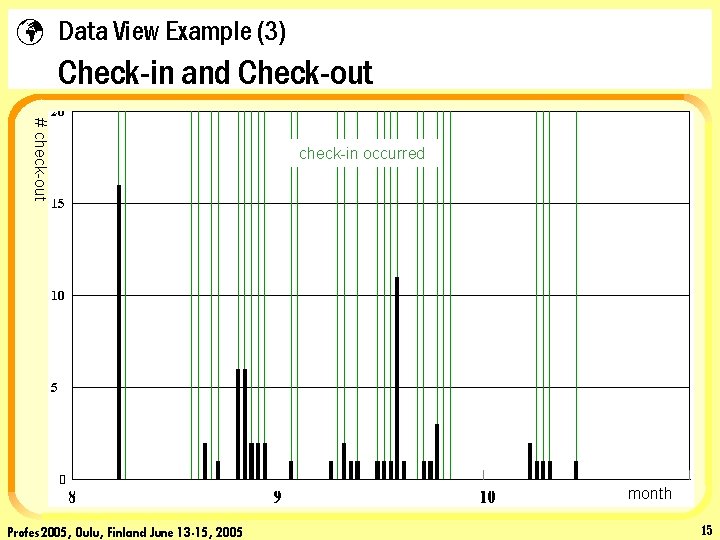

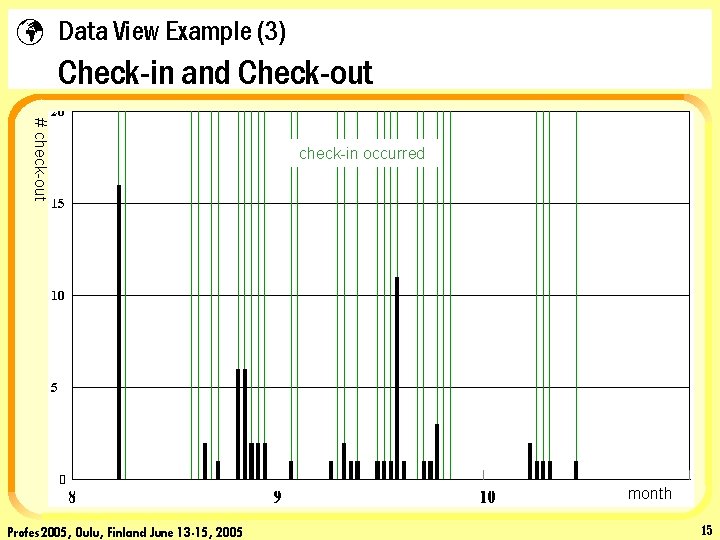

Data View Example (3): Check-in and Check-out (chart by month of the number of check-outs, with check-in occurrences marked)
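The chart needs monthly counts of repository events. A minimal sketch, assuming a hypothetical list of (date, kind) events already extracted from the version-control system; it counts both check-outs and check-ins per month, even though the slide plots check-ins only as markers.

```python
from collections import Counter

# Hypothetical repository events: (ISO date, kind), kind is "checkout" or "checkin".
EVENTS = [
    ("2004-03-01", "checkout"), ("2004-03-02", "checkout"),
    ("2004-03-02", "checkin"), ("2004-04-10", "checkout"),
    ("2004-04-11", "checkin"), ("2004-04-12", "checkin"),
]


def monthly_counts(events):
    """Count events per (month, kind), e.g. ("2004-03", "checkout") -> 2."""
    return Counter((day[:7], kind) for day, kind in events)


counts = monthly_counts(EVENTS)
for month in sorted({m for m, _ in counts}):
    # Counter returns 0 for (month, kind) pairs that never occurred.
    print(month, "check-outs:", counts[(month, "checkout")],
          "check-ins:", counts[(month, "checkin")])
```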

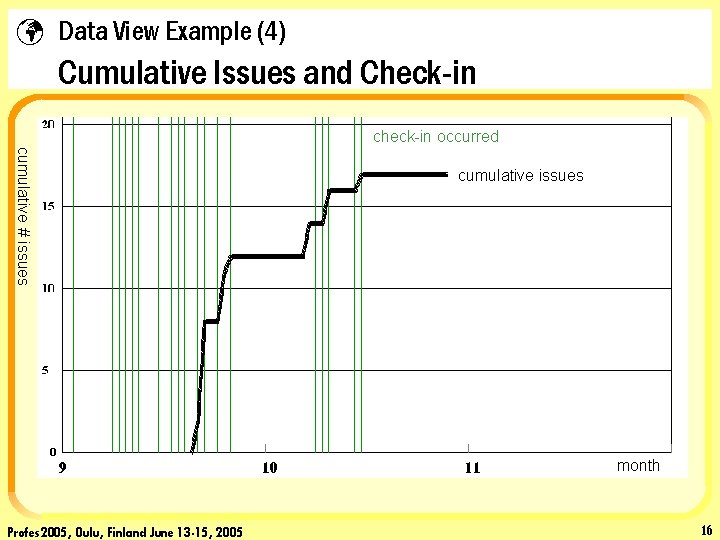

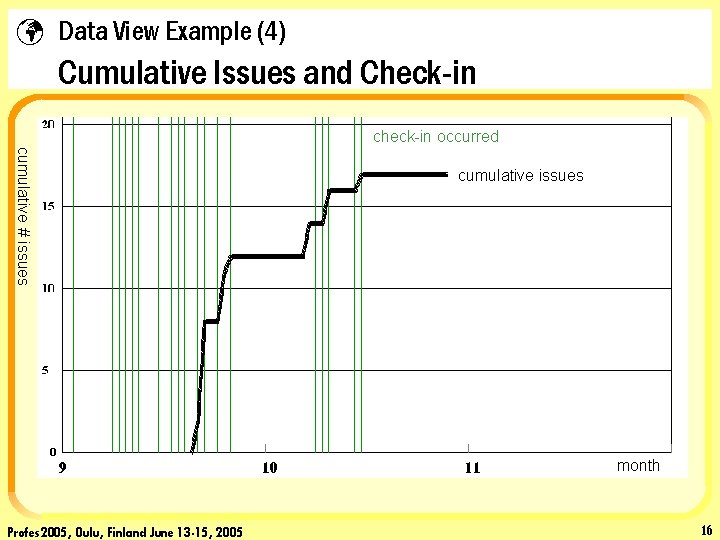

Data View Example (4): Cumulative Issues and Check-in (chart by month of the cumulative number of issues, with check-in occurrences marked)

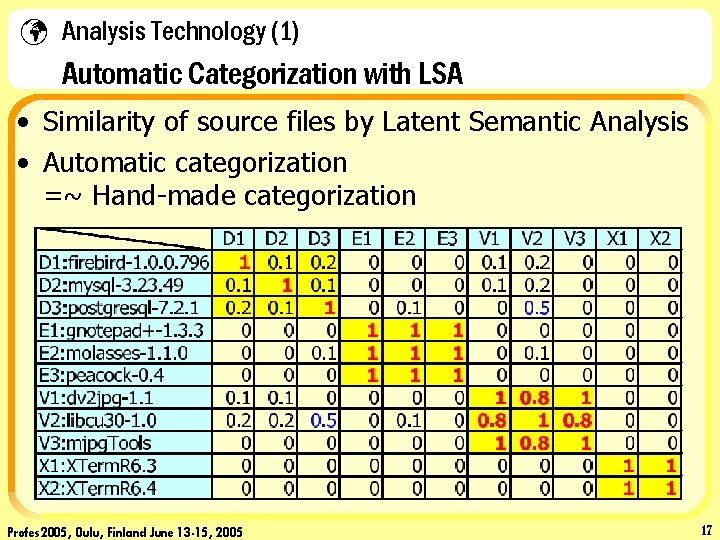

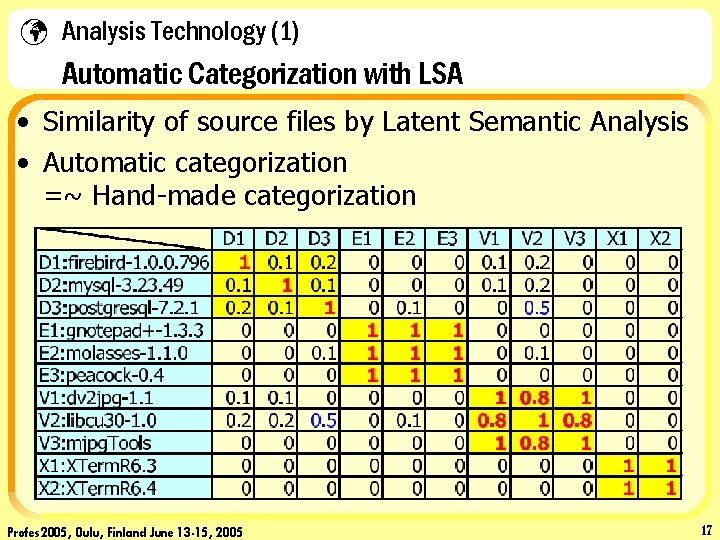

Analysis Technology (1): Automatic Categorization with LSA

- Similarity of source files computed by Latent Semantic Analysis
- Automatic categorization approximately matches hand-made categorization
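The core of LSA is a rank-k approximation of the term-document matrix, in which documents that use related vocabulary end up close together. A minimal pure-Python sketch, assuming raw term counts (real systems usually apply a weighting such as tf-idf) and hypothetical identifier "documents"; it obtains the rank-k document vectors from the eigenpairs of the document Gram matrix rather than a library SVD, and is not EPM's implementation.

```python
import math
from collections import Counter


def term_doc_matrix(docs):
    """Rows are terms, columns are documents, entries are raw term counts."""
    vocab = sorted({t for d in docs for t in d.split()})
    counts = [Counter(d.split()) for d in docs]
    return [[c[t] for c in counts] for t in vocab]


def top_eigenpairs(g, k, iters=500):
    """Top-k eigenpairs of a symmetric PSD matrix (power iteration + deflation)."""
    n = len(g)
    g = [row[:] for row in g]
    pairs = []
    for _ in range(k):
        v = [1.0 + i for i in range(n)]  # fixed start vector, deterministic
        for _ in range(iters):
            w = [sum(g[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w)) or 1.0
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(g[i][j] * v[j] for j in range(n)) for i in range(n))
        pairs.append((lam, v))
        # Remove the found component so the next pass finds the next eigenpair.
        g = [[g[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return pairs


def lsa_doc_vectors(docs, k=2):
    """Map documents to rows of V_k * Sigma_k of the term-document matrix A.

    Since A^T A = V Sigma^2 V^T, eigenvectors of the document Gram matrix are
    A's right singular vectors and the eigenvalues are squared singular values.
    """
    a = term_doc_matrix(docs)
    n = len(docs)
    gram = [[sum(row[i] * row[j] for row in a) for j in range(n)] for i in range(n)]
    pairs = top_eigenpairs(gram, k)
    return [[math.sqrt(max(lam, 0.0)) * v[d] for lam, v in pairs] for d in range(n)]


def cosine(x, y):
    dot = sum(p * q for p, q in zip(x, y))
    nx = math.sqrt(sum(p * p for p in x))
    ny = math.sqrt(sum(q * q for q in y))
    return dot / (nx * ny) if nx and ny else 0.0


# Hypothetical "documents": identifier tokens extracted from four source files.
DOCS = [
    "socket send recv socket",
    "socket recv buffer",
    "font glyph render",
    "font glyph glyph kerning render",
]
vecs = lsa_doc_vectors(DOCS, k=2)
print(round(cosine(vecs[0], vecs[1]), 3), round(cosine(vecs[0], vecs[2]), 3))
```

Files whose latent vectors have high cosine similarity would fall into the same category; clustering those vectors is one way to turn similarity into categories.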

Analysis Technology (2): Code-Clone Detection

- Code-clone analysis between Qt and GTK
- Area "a" has high clone density (because font-handler code is shared)
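Clone density between two code bases can be approximated by normalizing tokens and looking for shared token sequences. A minimal sketch of a simple token-window approach, assuming C-like input, a small hypothetical keyword list, and a window length of 8 tokens; it is not the detector used for the Qt/GTK analysis.

```python
import re

# Small, illustrative keyword list for C-like code (an assumption, not exhaustive).
KEYWORDS = {"if", "else", "for", "while", "return", "int", "void", "char"}
TOKEN_RE = re.compile(r"[A-Za-z_]\w*|\d+|\S")


def normalize(source):
    """Tokenize and replace identifiers/numbers with placeholders, so code that
    differs only in names or constants yields the same token sequence."""
    tokens = []
    for tok in TOKEN_RE.findall(source):
        if tok in KEYWORDS:
            tokens.append(tok)
        elif tok[0].isalpha() or tok[0] == "_":
            tokens.append("$id")
        elif tok[0].isdigit():
            tokens.append("$num")
        else:
            tokens.append(tok)
    return tokens


def windows(tokens, k):
    """All distinct runs of k consecutive tokens."""
    return {tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def clone_density(src_a, src_b, k=8):
    """Fraction of src_a's k-token windows that also occur in src_b."""
    wa = windows(normalize(src_a), k)
    wb = windows(normalize(src_b), k)
    return len(wa & wb) / len(wa) if wa else 0.0


# Hypothetical snippets (not taken from Qt or GTK).
SNIPPET_A = "int glyph_width(int c) { if (c > 127) return 0; return widths[c]; }"
SNIPPET_B = "int char_width(int ch) { if (ch > 255) return 0; return table[ch]; }"
SNIPPET_C = "void log_msg(char *m) { while (*m) putchar(*m++); }"
print(clone_density(SNIPPET_A, SNIPPET_B), clone_density(SNIPPET_A, SNIPPET_C))
```

Computing this density per file pair and plotting it as a matrix gives a picture in which shared areas stand out.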

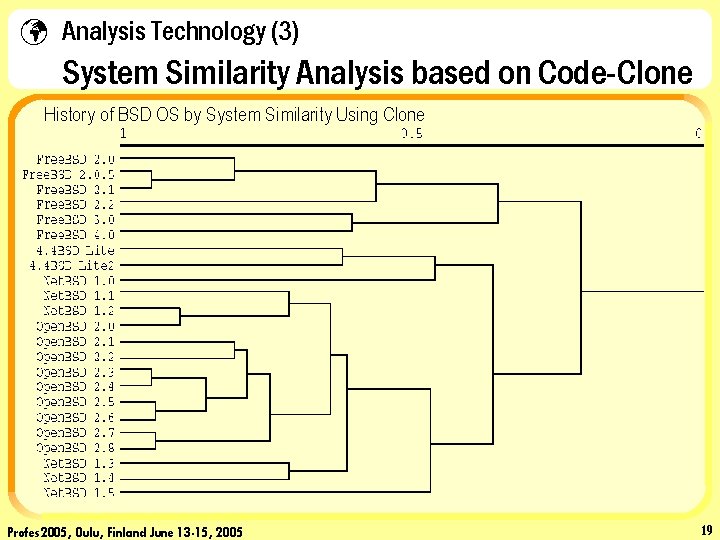

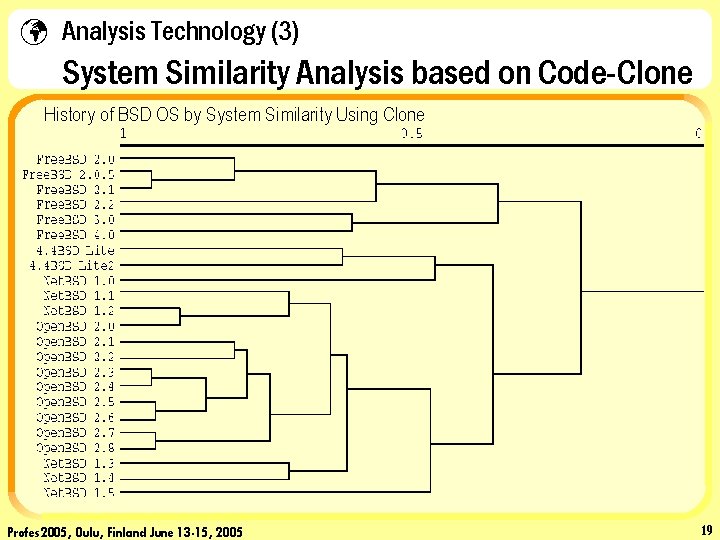

Analysis Technology (3): System Similarity Analysis Based on Code Clones

- History of BSD operating systems, traced by clone-based system similarity
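One way to turn clone information into a single similarity score is to measure how much of each system is covered by code that corresponds to the other. A minimal sketch, assuming the definition (covered lines of A + covered lines of B) / (lines of A + lines of B) and using exact whitespace-normalized line matching as a stand-in for real clone detection; the slide does not state the exact formula.

```python
def normalize_line(line):
    """Collapse whitespace so layout differences don't hide shared lines."""
    return " ".join(line.split())


def system_similarity(sys_a, sys_b):
    """(covered lines of A + covered lines of B) / (lines of A + lines of B).

    A line counts as covered when an identical (whitespace-normalized) line
    exists in the other system; this exact-match rule is a stand-in for real
    clone-based correspondence.
    """
    a = [normalize_line(line) for line in sys_a if line.strip()]
    b = [normalize_line(line) for line in sys_b if line.strip()]
    set_a, set_b = set(a), set(b)
    covered = sum(line in set_b for line in a) + sum(line in set_a for line in b)
    total = len(a) + len(b)
    return covered / total if total else 0.0


# Two hypothetical "releases" of a tiny system.
RELEASE_1 = ["int x;", "x = 1;", "return x;"]
RELEASE_2 = ["int x;", "x  = 1;", "return x + 1;", "log(x);"]
print(system_similarity(RELEASE_1, RELEASE_2))
```

Computing this score for every pair of releases yields a similarity matrix from which a family tree of systems can be drawn.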

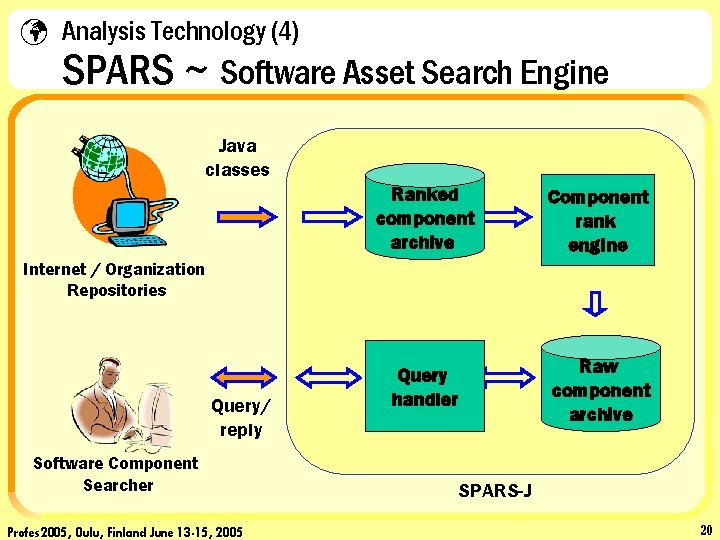

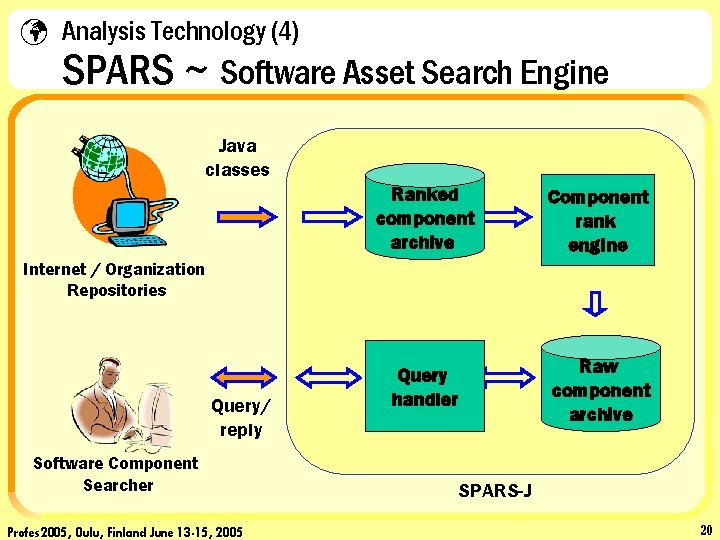

Analysis Technology (4): SPARS, a Software Asset Search Engine (SPARS-J)

- Java classes are collected from Internet and organization repositories into a raw component archive
- The component rank engine produces a ranked component archive
- The query handler serves queries and replies through the software component searcher
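A component rank engine orders components so that heavily reused ones come first. A minimal sketch, assuming a PageRank-style formulation over a hypothetical "uses" graph between classes, with the conventional damping factor of 0.85; it is not SPARS-J's exact algorithm.

```python
def component_rank(uses, damping=0.85, iters=100):
    """PageRank-style scores over a 'uses' graph: a class used by many
    (highly ranked) classes gets a higher score.

    uses: {class_name: [classes it uses]}. Classes that use nothing spread
    their weight uniformly, so the scores keep summing to 1.
    """
    nodes = sorted(set(uses) | {t for ts in uses.values() for t in ts})
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iters):
        nxt = {v: (1.0 - damping) / n for v in nodes}
        for v in nodes:
            targets = uses.get(v, [])
            if targets:
                share = damping * rank[v] / len(targets)
                for t in targets:
                    nxt[t] += share
            else:
                for t in nodes:
                    nxt[t] += damping * rank[v] / n
        rank = nxt
    return sorted(rank.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical use relations among four Java classes.
USES = {
    "App": ["StringUtil", "Logger"],
    "Server": ["StringUtil", "Logger"],
    "Logger": ["StringUtil"],
    "StringUtil": [],
}
ranking = component_rank(USES)
print(ranking)
```

A search engine would combine such a score with keyword relevance when ordering the reply to a query.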

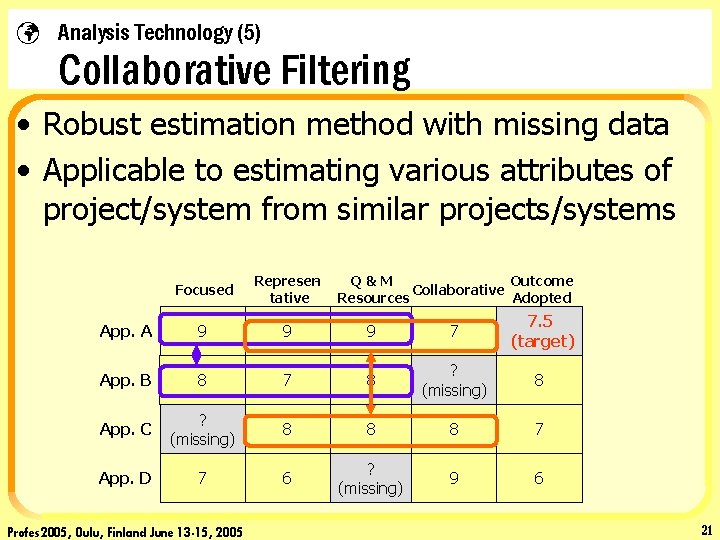

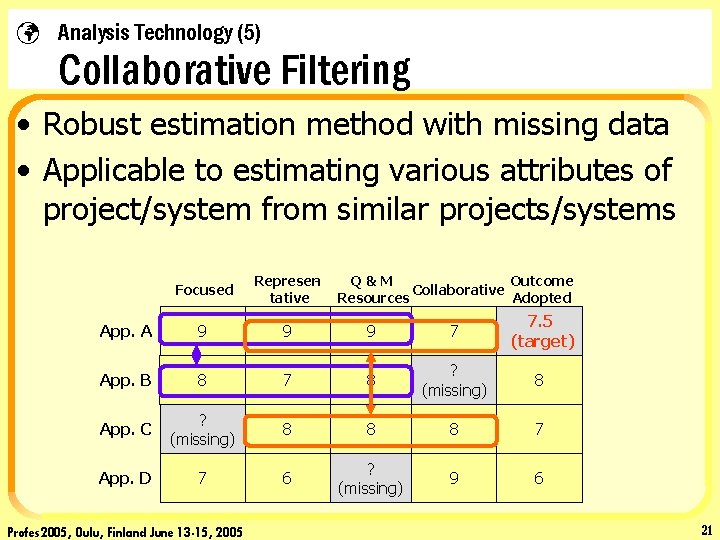

Analysis Technology (5): Collaborative Filtering

- A robust estimation method that tolerates missing data
- Applicable to estimating various attributes of a project/system from similar projects/systems

Example:

- App. A (focused): 9, 9, 9, 7, 7.5 (target)
- App. B: 8, 7, 8, ? (missing), 8
- App. C: ? (missing), 8, 8, 8, 7
- App. D: 7, 6, ? (missing), 9, 6

Attribute labels in the figure: Representative, Outcome, Q&M, Collaborative, Adopted, Resources.
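Neighbor-based collaborative filtering estimates a project's unknown attribute from projects that resemble it on the attributes both have recorded. A minimal sketch using the example's values with App. A's last attribute treated as unknown, assuming similarity = 1 / (1 + mean absolute difference) over co-observed attributes and a similarity-weighted average; this simple scheme gives about 7.1 rather than the 7.5 shown, so it should not be read as the method behind the slide.

```python
def similarity(a, b, skip):
    """1 / (1 + mean absolute difference) over attributes known in both rows."""
    diffs = [abs(x - y) for i, (x, y) in enumerate(zip(a, b))
             if i != skip and x is not None and y is not None]
    if not diffs:
        return 0.0
    return 1.0 / (1.0 + sum(diffs) / len(diffs))


def estimate(target, others, col):
    """Similarity-weighted average of column `col` over projects where it is known."""
    weighted, total = 0.0, 0.0
    for row in others:
        if row[col] is None:  # missing values are skipped, not imputed
            continue
        w = similarity(target, row, skip=col)
        weighted += w * row[col]
        total += w
    return weighted / total if total else None


# Values from the example; None marks missing data.
APP_A = [9, 9, 9, 7, None]
OTHERS = [
    [8, 7, 8, None, 8],   # App. B
    [None, 8, 8, 8, 7],   # App. C
    [7, 6, None, 9, 6],   # App. D
]
est = estimate(APP_A, OTHERS, col=4)
print(round(est, 3))
```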

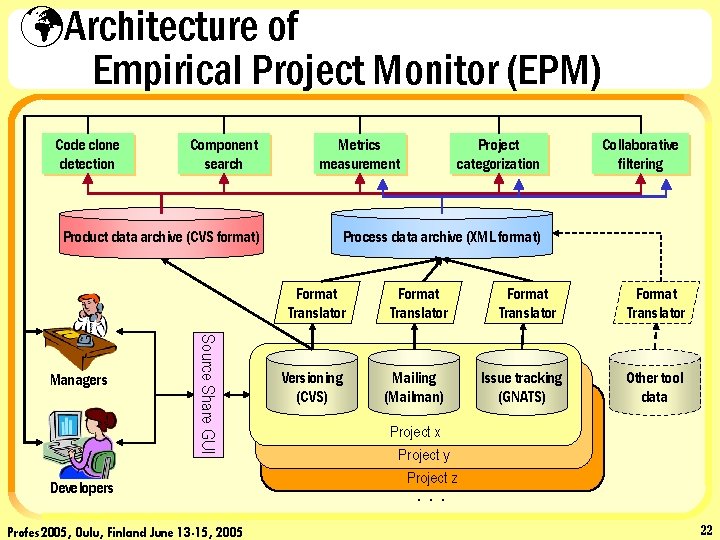

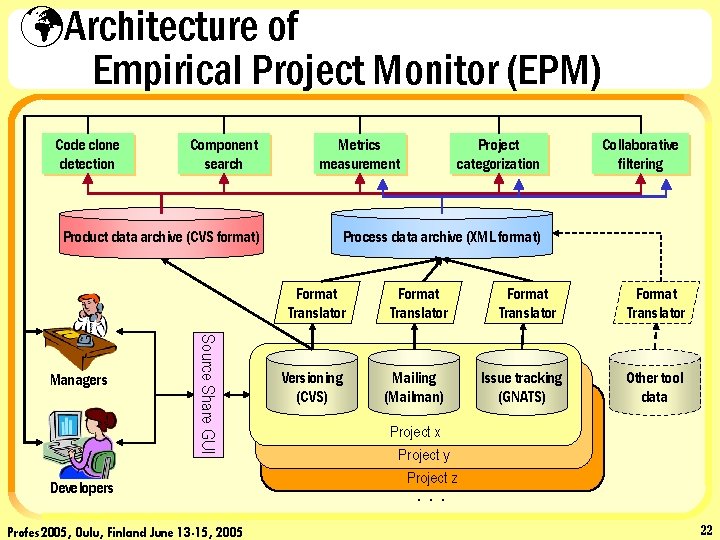

Architecture of Empirical Project Monitor (EPM): format translators convert data from versioning (CVS), mailing (Mailman), issue tracking (GNATS), and other tools used by projects x, y, z, ... into the process data archive (XML format) and the product data archive (CVS format); code clone detection, component search, metrics measurement, project categorization, and collaborative filtering analyze the archives; results reach developers and managers through SourceShare and the GUI.

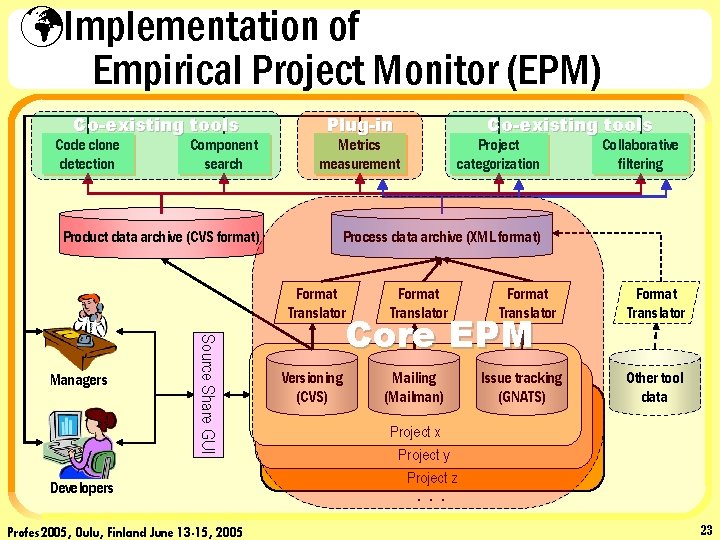

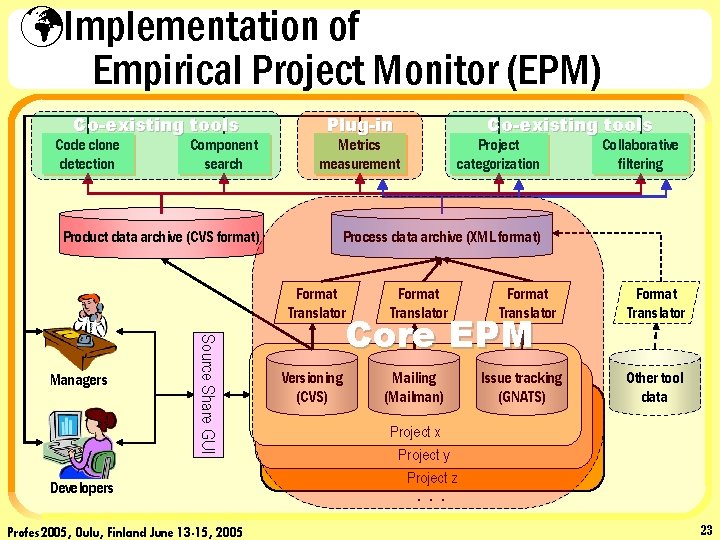

Implementation of Empirical Project Monitor (EPM)

- Core EPM: format translators for versioning (CVS), mailing (Mailman), issue tracking (GNATS), and other tool data from projects x, y, z, ..., feeding the process data archive (XML format) and the product data archive (CVS format)
- Plug-ins and co-existing tools: code clone detection, component search, metrics measurement, project categorization, collaborative filtering
- SourceShare and the GUI for developers and managers

Applying EPM in Industry

- EPM is being applied to several real projects
  - Business systems
  - Personal mobile applications
  - Automobile information systems, ...
- Very low data-collection burden on developers
- Collected data is currently under analysis
  - Many findings, such as
    - Module refactoring candidates
    - Detection of internal trouble using only the collected data

Current Status and Schedule

- Current: demo version of EPM
- First quarter of 2004: alpha release of core EPM
- First quarter of 2005: application of core EPM in industry
- Second quarter of 2005: beta release of core EPM (http://www.empirical.jp)
- End of 2005: inclusion of analysis tools
- User group, consortium, interest group, ...

Conclusions

- Proposed the concept of Mega Software Engineering
- Showed examples of MSE
- The concept needs further exploration of various applications and their benefits

Related Work

- Global software development
  - Supports knowledge sharing across different locations
  - Expertise browser
- Knowledge sharing
- Open source projects
  - MSE may be closed, internal to an organization
- Measurement-based improvement frameworks
  - GQM, CMM, SPICE
  - MSE assumes organization-wide data collection and improvement, as well as deeper analyses
- Experience factory

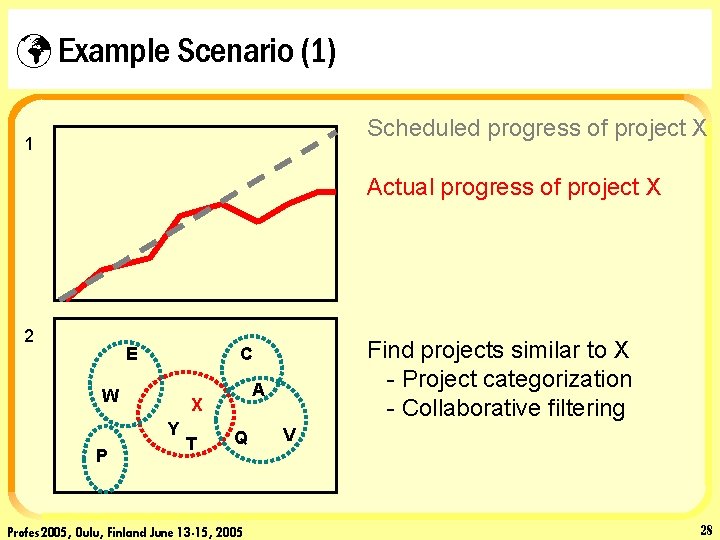

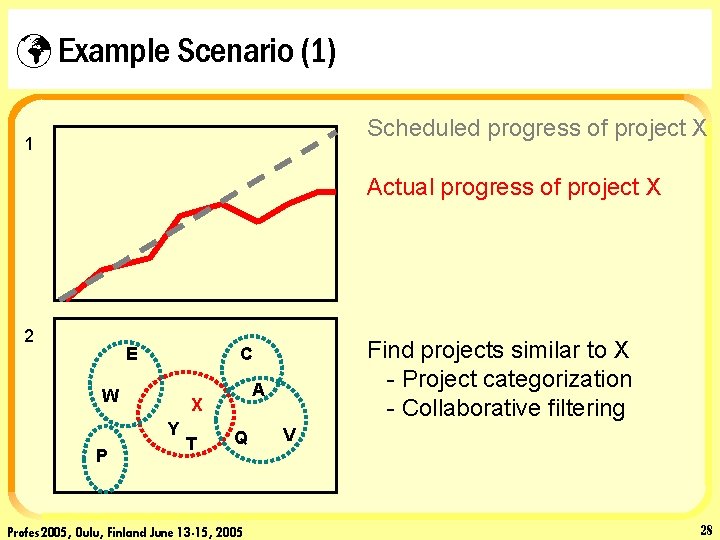

Example Scenario (1)

1. Compare the scheduled progress of project X with its actual progress
2. Find projects similar to X among the other projects (project categorization, collaborative filtering)
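The first step, noticing that project X is falling behind, could be automated with a simple check on progress data. A minimal sketch, assuming hypothetical weekly percent-complete figures and a hypothetical 10% tolerance for the relative shortfall.

```python
def first_lag(scheduled, actual, tolerance=0.1):
    """Index of the first period where actual progress trails the schedule
    by more than `tolerance` (as a fraction of scheduled progress), else None."""
    for i, (s, a) in enumerate(zip(scheduled, actual)):
        if s > 0 and (s - a) / s > tolerance:
            return i
    return None


# Hypothetical cumulative percent complete per week for project X.
SCHEDULED = [10, 25, 45, 70, 100]
ACTUAL = [10, 24, 36, 50]
print(first_lag(SCHEDULED, ACTUAL))
```

A flagged lag would then trigger the search for similar projects to see how they behaved at the same stage.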

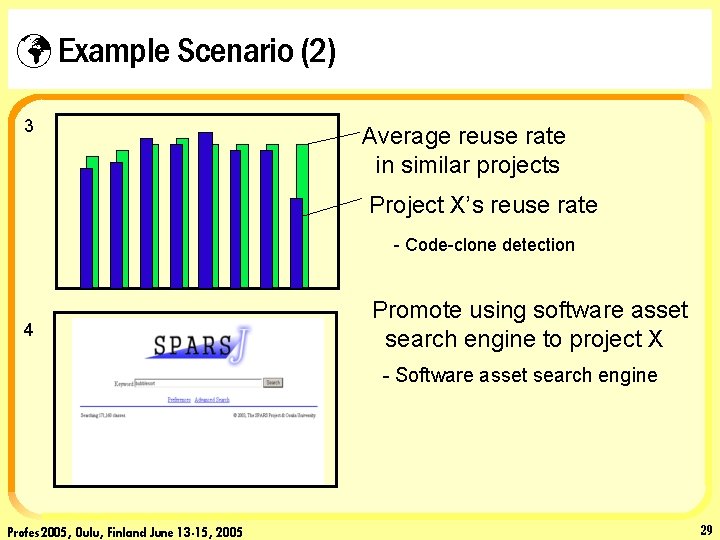

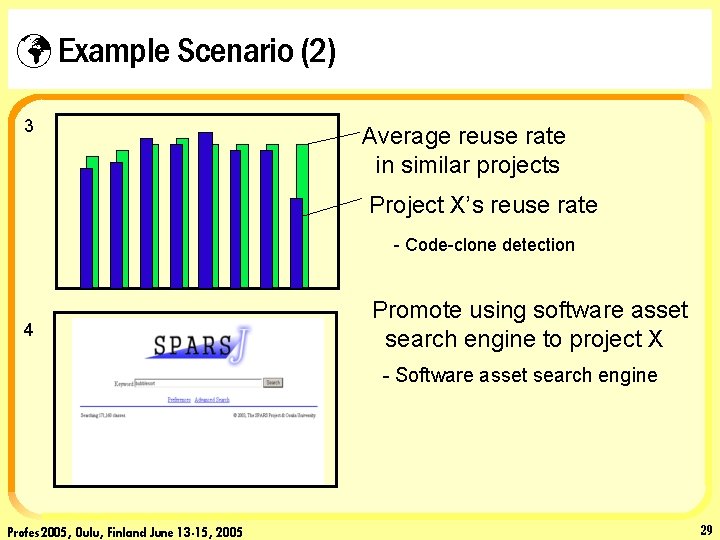

Example Scenario (2)

3. Compare project X's reuse rate with the average reuse rate in similar projects (code-clone detection)
4. Promote use of the software asset search engine in project X
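Steps 3 and 4 amount to a comparison against a peer average. A minimal sketch, assuming reuse rate is the fraction of a project's lines covered by clones of organizational assets (as a clone detector might report) and using hypothetical line counts and peer rates.

```python
def reuse_rate(cloned_lines, total_lines):
    """Fraction of a project's lines found to be clones of existing assets."""
    return cloned_lines / total_lines if total_lines else 0.0


def should_promote_search(project_rate, similar_rates, margin=0.0):
    """True if the project reuses less than the average of similar projects."""
    if not similar_rates:
        return False
    avg = sum(similar_rates) / len(similar_rates)
    return project_rate < avg - margin


# Hypothetical figures: project X vs. three similar projects.
x_rate = reuse_rate(1200, 20000)
print(x_rate, should_promote_search(x_rate, [0.18, 0.22, 0.15]))
```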

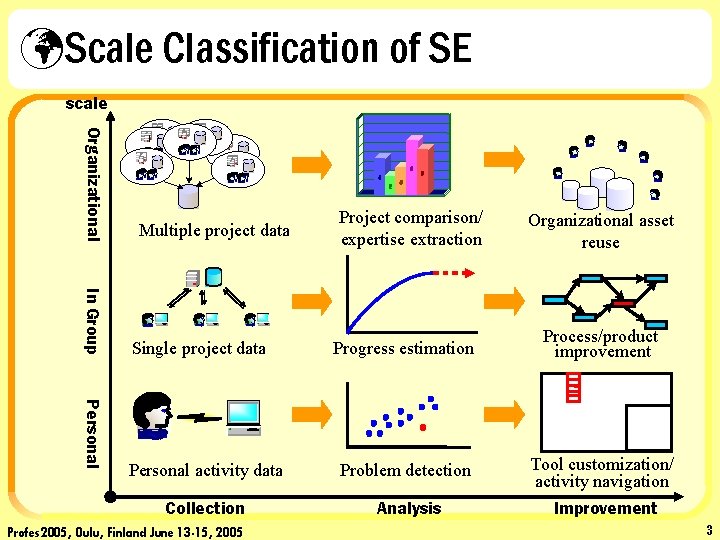

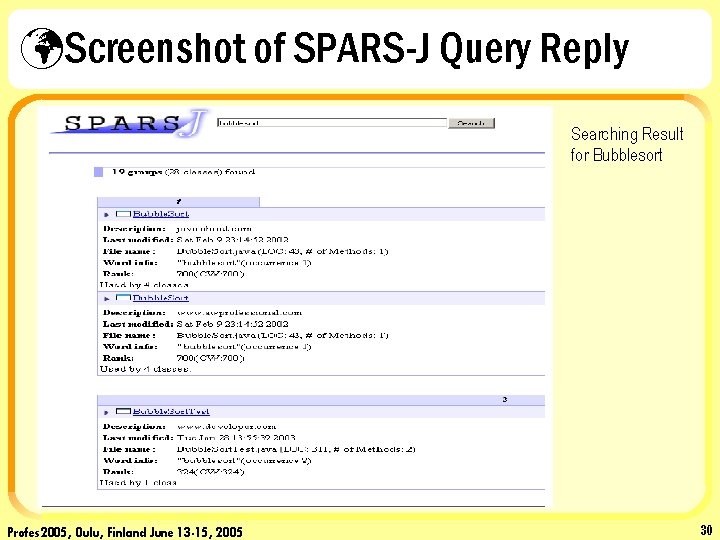

Screenshot of SPARS-J: query, reply, and search results for "Bubblesort"