MapReduce: Simplified Data Processing on Large Clusters

- Slides: 29

MapReduce: Simplified Data Processing on Large Clusters

Jeffrey Dean and Sanjay Ghemawat, in OSDI '04

Kim Daeyeon, Jin Wenjing

2017.12.05

*Slides based on the MapReduce paper and other online sources.

Architecture and Code Optimization (ARC) Laboratory @ SNU

Contents

- Introduction
- Programming Model
- Implementation
- Refinements
- Performance
- Experience and Conclusion

Introduction

- Input data is usually large.
- Computations have to be distributed across hundreds or thousands of machines in order to finish in a reasonable amount of time.
- The issues of how to parallelize the computation, distribute the data, and handle failures conspire to obscure the original simple computation with large amounts of complex code.
- The map and reduce primitives of Lisp inspired an abstraction that lets users express the simple computation while the library handles the distributed-system details.

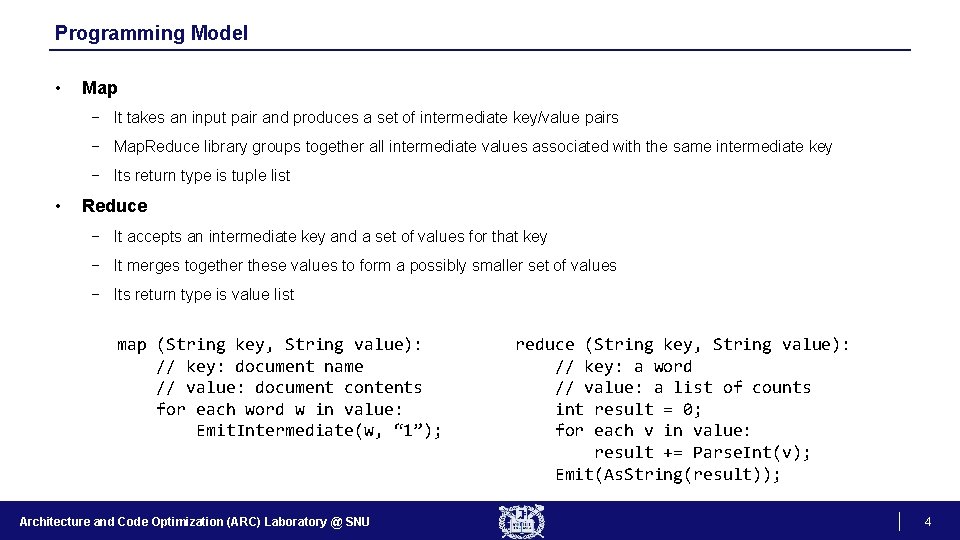

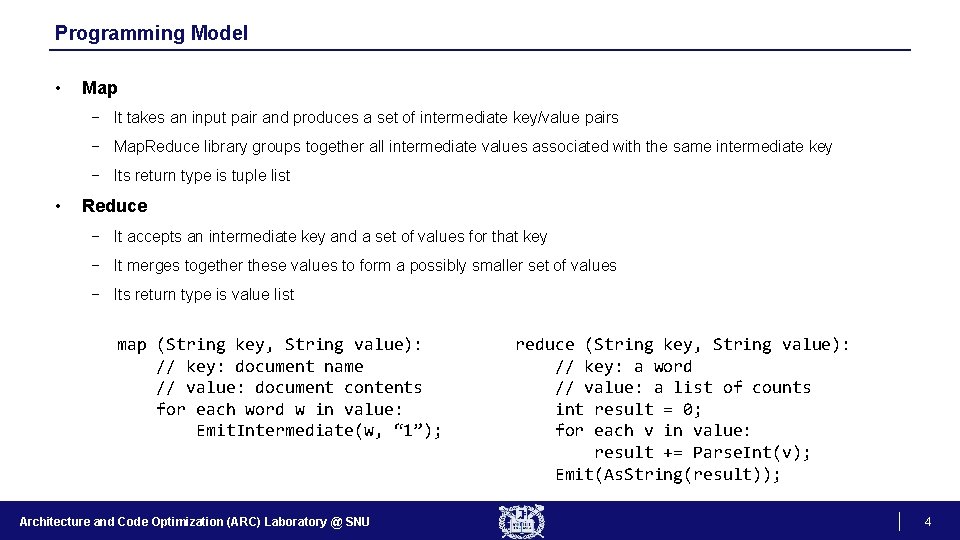

Programming Model

- Map
  - Takes an input pair and produces a set of intermediate key/value pairs
  - The MapReduce library groups together all intermediate values associated with the same intermediate key
  - Its return type is a list of tuples
- Reduce
  - Accepts an intermediate key and a set of values for that key
  - Merges these values together to form a possibly smaller set of values
  - Its return type is a list of values

Word-count pseudocode:

    map(String key, String value):
      // key: document name
      // value: document contents
      for each word w in value:
        EmitIntermediate(w, "1");

    reduce(String key, Iterator values):
      // key: a word
      // values: a list of counts
      int result = 0;
      for each v in values:
        result += ParseInt(v);
      Emit(AsString(result));
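
The programming model above can be tried on a single machine. The following is a minimal Python sketch, not the library's actual API: `run_mapreduce` is a hypothetical in-memory driver, and `map_fn` / `reduce_fn` mirror the word-count pseudocode, including its string-encoded counts.

```python
from collections import defaultdict

def map_fn(key, value):
    # key: document name, value: document contents
    for word in value.split():
        yield word, "1"

def reduce_fn(key, values):
    # key: a word, values: every count emitted for that word
    yield str(sum(int(v) for v in values))

def run_mapreduce(inputs, map_fn, reduce_fn):
    # Hypothetical single-process driver: map, group by key, then reduce.
    intermediate = defaultdict(list)
    for key, value in inputs:
        for ikey, ivalue in map_fn(key, value):
            intermediate[ikey].append(ivalue)
    # Visit keys in sorted order, one reduce call per intermediate key.
    return {k: list(reduce_fn(k, intermediate[k])) for k in sorted(intermediate)}

docs = [("doc1", "apple orange apple"), ("doc2", "orange lemon")]
counts = run_mapreduce(docs, map_fn, reduce_fn)
print(counts)  # {'apple': ['2'], 'lemon': ['1'], 'orange': ['2']}
```

The grouping step in the middle stands in for the shuffle that the real library performs across machines.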

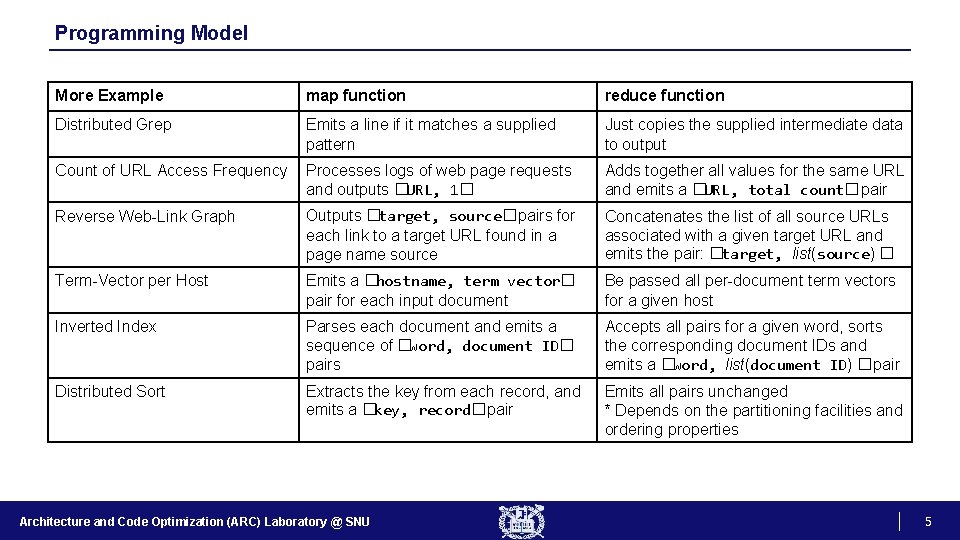

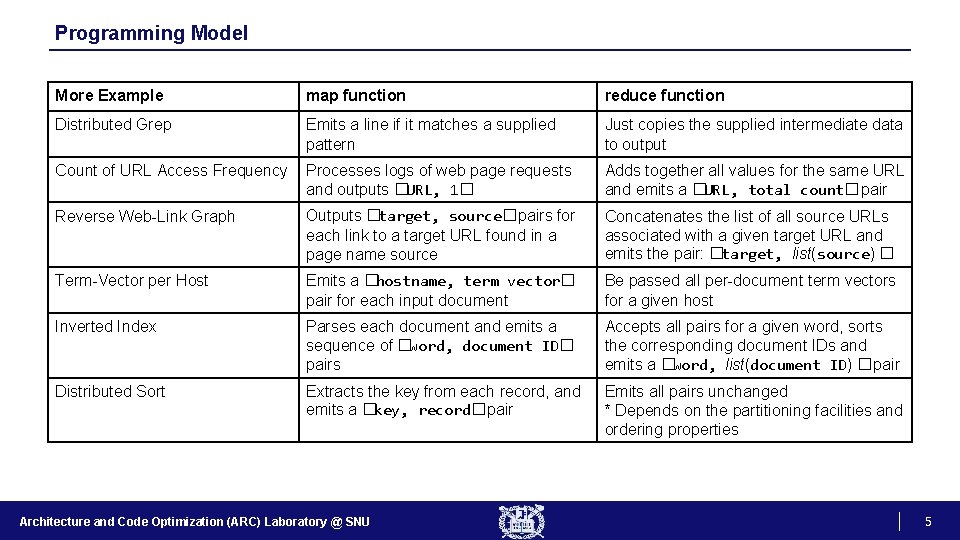

Programming Model: More Examples

| Example | map function | reduce function |
| --- | --- | --- |
| Distributed Grep | Emits a line if it matches a supplied pattern | Just copies the supplied intermediate data to the output |
| Count of URL Access Frequency | Processes logs of web page requests and outputs ⟨URL, 1⟩ | Adds together all values for the same URL and emits a ⟨URL, total count⟩ pair |
| Reverse Web-Link Graph | Outputs ⟨target, source⟩ pairs for each link to a target URL found in a page named source | Concatenates the list of all source URLs associated with a given target URL and emits the pair ⟨target, list(source)⟩ |
| Term-Vector per Host | Emits a ⟨hostname, term vector⟩ pair for each input document | Is passed all per-document term vectors for a given host |
| Inverted Index | Parses each document and emits a sequence of ⟨word, document ID⟩ pairs | Accepts all pairs for a given word, sorts the corresponding document IDs, and emits a ⟨word, list(document ID)⟩ pair |
| Distributed Sort \* | Extracts the key from each record and emits a ⟨key, record⟩ pair | Emits all pairs unchanged |

\* Depends on the partitioning facilities and ordering properties
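
As one worked instance of these examples, here is a small Python sketch of the inverted index. The function names and the in-memory grouping in `build_index` are illustrative assumptions. Deduplicating words within a document is a choice made here, not something the slide specifies.

```python
from collections import defaultdict

def map_inverted_index(doc_id, contents):
    # Emit one (word, document ID) pair per distinct word in the document.
    for word in set(contents.split()):
        yield word, doc_id

def reduce_inverted_index(word, doc_ids):
    # Sort the document IDs and emit a (word, list(document ID)) pair.
    return word, sorted(doc_ids)

def build_index(docs):
    # Hypothetical local stand-in for the shuffle: group pairs by word.
    grouped = defaultdict(list)
    for doc_id, contents in docs.items():
        for word, emitted_id in map_inverted_index(doc_id, contents):
            grouped[word].append(emitted_id)
    return dict(reduce_inverted_index(w, ids) for w, ids in sorted(grouped.items()))

index = build_index({2: "map reduce", 1: "map worker"})
print(index)  # {'map': [1, 2], 'reduce': [2], 'worker': [1]}
```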

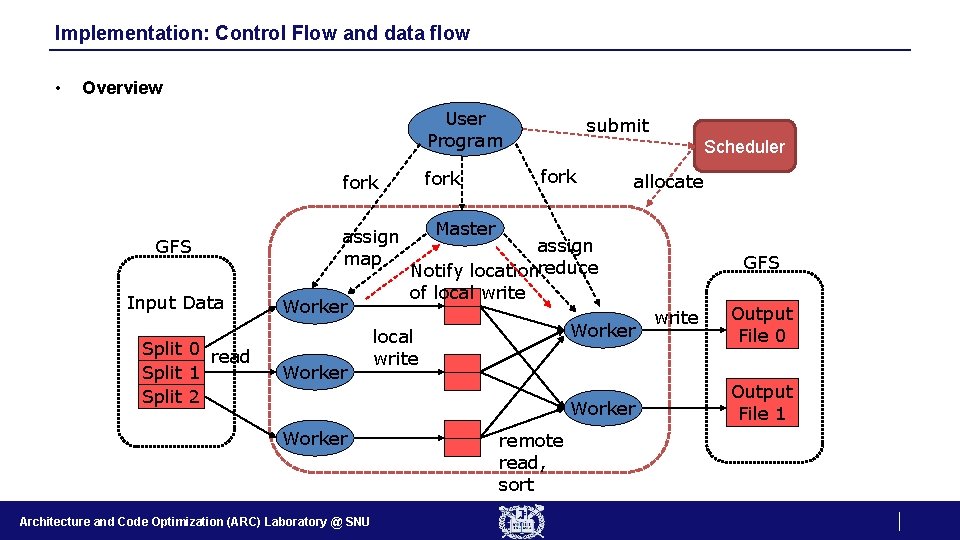

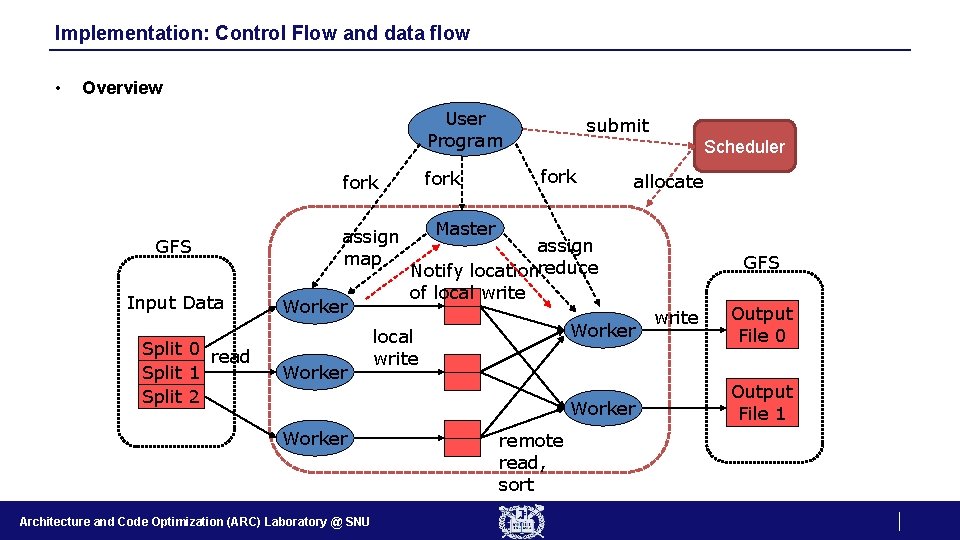

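Implementation: Control Flow and Data Flow

- Overview (figure)
  - The user program submits the job to the scheduler, which allocates machines, and forks the master and the workers
  - The master assigns map and reduce tasks to workers
  - Map workers read the input splits (Split 0, Split 1, Split 2) from GFS and write intermediate data to local disk
  - The master notifies reduce workers of the locations of the local writes
  - Reduce workers remote-read and sort the intermediate data, then write the output files (Output File 0, Output File 1) to GFS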

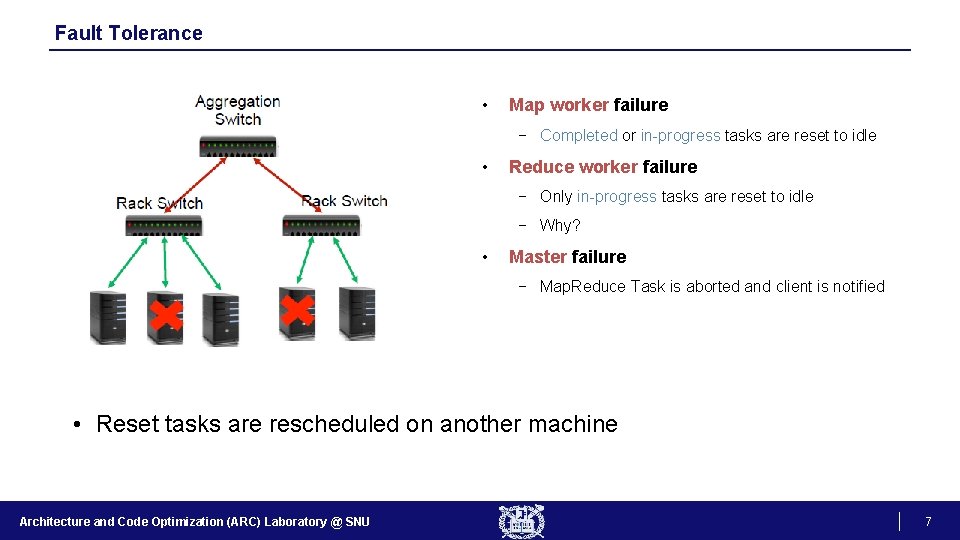

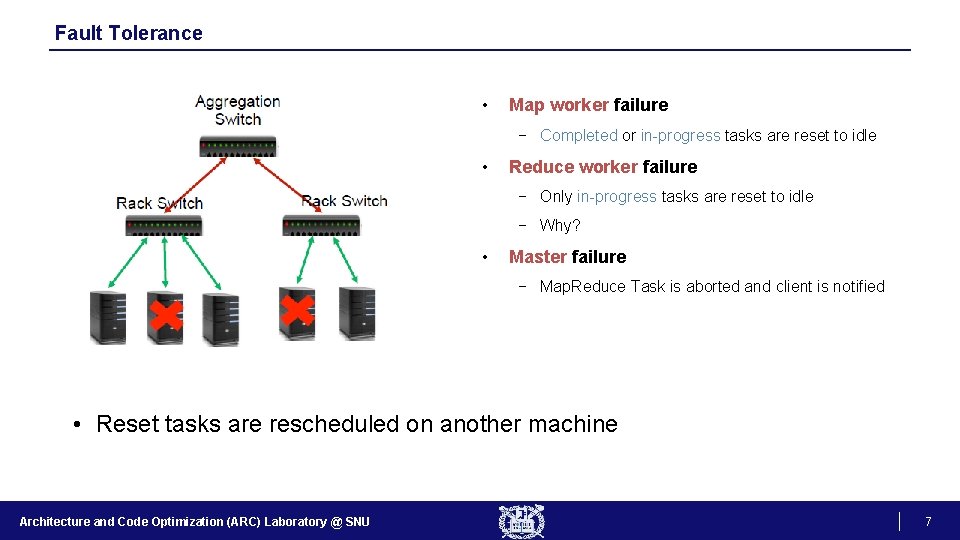

Fault Tolerance

- Map worker failure
  - Completed and in-progress tasks are reset to idle
- Reduce worker failure
  - Only in-progress tasks are reset to idle
  - Why? Completed map output is stored on the failed machine's local disk and becomes inaccessible, while completed reduce output is already stored in the global file system
- Master failure
  - The MapReduce computation is aborted and the client is notified
- Reset tasks are rescheduled on another machine
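
The reset rules can be written as a small state update. This is a sketch under assumptions: the `Task` record and `handle_worker_failure` are hypothetical names, not the master's real data structures. It relies on the paper's reasoning that completed map output sits on the failed worker's local disk, whereas completed reduce output is already in the global file system.

```python
from dataclasses import dataclass
from typing import Optional

IDLE, IN_PROGRESS, COMPLETED = "idle", "in-progress", "completed"

@dataclass
class Task:
    kind: str                     # "map" or "reduce"
    state: str = IDLE
    worker: Optional[str] = None  # machine the task ran or is running on

def handle_worker_failure(tasks, failed_worker):
    """Reset every task that has to be re-executed and return those tasks."""
    reset = []
    for task in tasks:
        if task.worker != failed_worker:
            continue
        # Completed map output lived on the failed machine, so it is lost.
        lost_map_output = task.kind == "map" and task.state == COMPLETED
        if task.state == IN_PROGRESS or lost_map_output:
            task.state, task.worker = IDLE, None
            reset.append(task)
    return reset

tasks = [
    Task("map", COMPLETED, "w1"),
    Task("map", IN_PROGRESS, "w1"),
    Task("reduce", COMPLETED, "w1"),
    Task("reduce", IN_PROGRESS, "w1"),
    Task("map", COMPLETED, "w2"),
]
reset = handle_worker_failure(tasks, "w1")
print([t.state for t in tasks])
# ['idle', 'idle', 'completed', 'idle', 'completed']
```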

Disk Locality

- Map tasks are scheduled close to their data
  - On nodes that hold the input data
  - If not, on nodes that are nearer to the input data
  - E.g., on the same network switch
- Conserves network bandwidth
- Leverages the Google File System
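
The scheduling preference can be expressed as a three-step lookup. This is only an illustrative sketch: `pick_worker`, the `switch_of` mapping, and the fallback to any idle worker are assumptions, not the actual scheduler.

```python
def pick_worker(replica_hosts, idle_workers, switch_of):
    """Choose an idle worker for a map task, preferring data locality."""
    # 1. A worker that already stores a replica of the input split.
    for worker in idle_workers:
        if worker in replica_hosts:
            return worker
    # 2. A worker on the same network switch as some replica.
    replica_switches = {switch_of[host] for host in replica_hosts}
    for worker in idle_workers:
        if switch_of[worker] in replica_switches:
            return worker
    # 3. Otherwise any idle worker (assumed fallback), or None if none is idle.
    return idle_workers[0] if idle_workers else None

switch_of = {"a": "s1", "b": "s1", "c": "s2", "d": "s2"}
print(pick_worker({"a"}, ["d", "a"], switch_of))  # a  (holds the data)
print(pick_worker({"a"}, ["c", "b"], switch_of))  # b  (same switch as a)
print(pick_worker({"a"}, ["c", "d"], switch_of))  # c  (no local option)
```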

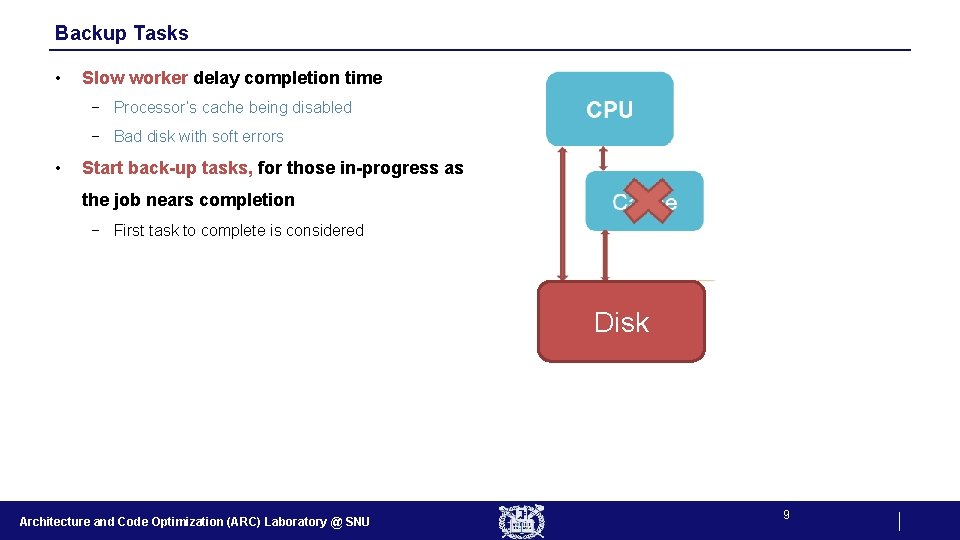

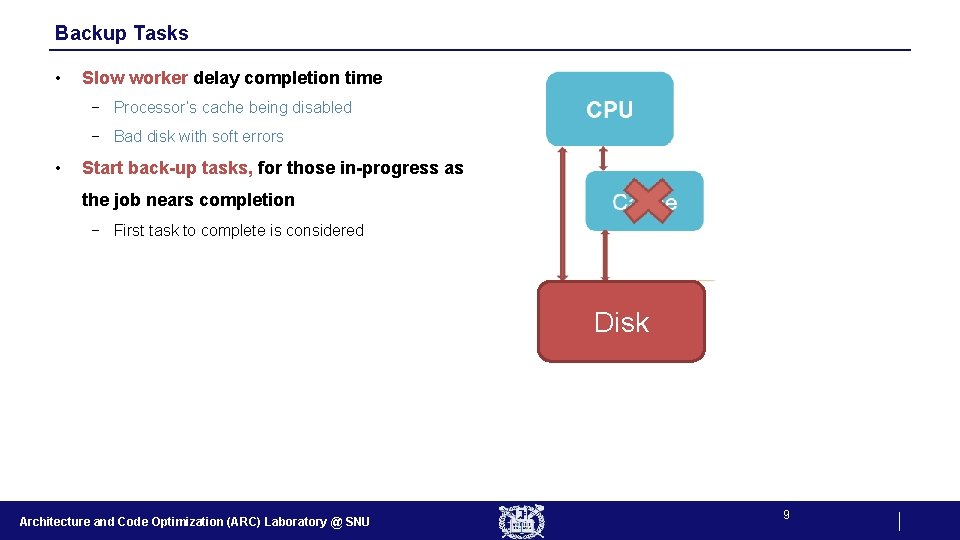

Backup Tasks

- Slow workers (stragglers) delay completion time
  - Processor's cache being disabled
  - Bad disk with soft errors
- Start backup copies of the tasks still in progress as the job nears completion
  - Whichever copy completes first counts as the completed task
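
A toy timing model shows why backup copies help. The model is an assumption made for illustration: every task starts at time 0 on its own worker, and any task still running at `backup_start` gets one backup copy that needs `backup_duration`. The durations are made-up numbers, not measurements.

```python
def job_completion_time(task_durations, backup_start=None, backup_duration=None):
    """Return when the slowest task finishes, optionally with backup copies."""
    finish_times = []
    for duration in task_durations:
        finish = duration
        if backup_start is not None and duration > backup_start:
            # The task completes when either the primary or the backup finishes.
            finish = min(duration, backup_start + backup_duration)
        finish_times.append(finish)
    return max(finish_times)

durations = [10, 11, 9, 60]  # the last task runs on a straggler
print(job_completion_time(durations))                                      # 60
print(job_completion_time(durations, backup_start=12, backup_duration=10)) # 22
```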

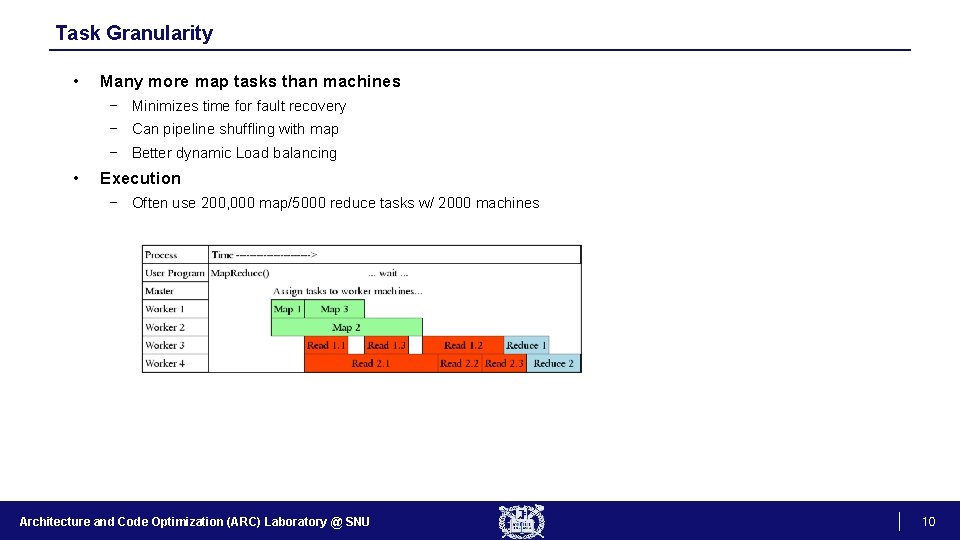

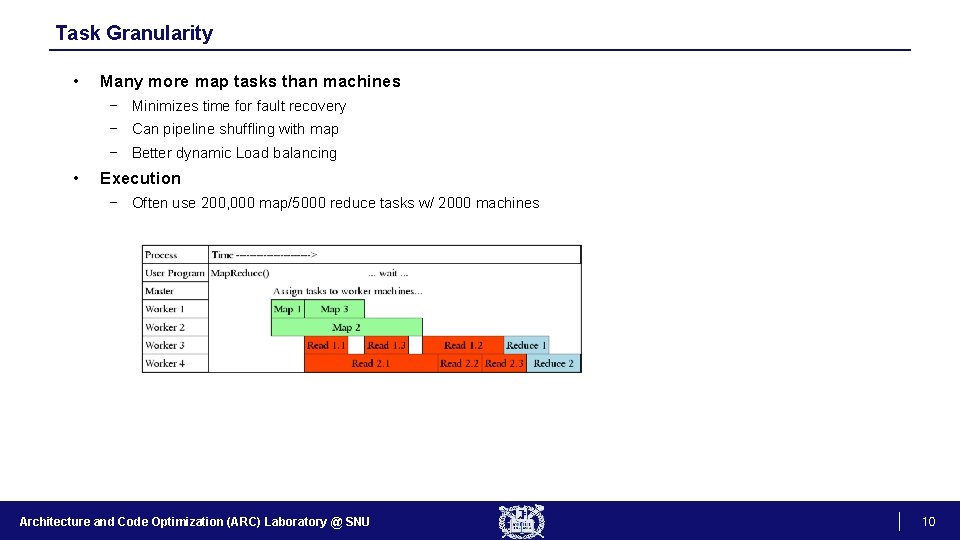

Task Granularity

- Many more map tasks than machines
  - Minimizes time for fault recovery
  - Can pipeline shuffling with map
  - Better dynamic load balancing
- Execution
  - Often 200,000 map tasks and 5,000 reduce tasks on 2,000 machines
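
A quick calculation with the figures above makes the granularity concrete. The failure scenario in the second half is a hypothetical illustration that assumes tasks are spread evenly across machines.

```python
import math

M, R, machines = 200_000, 5_000, 2_000  # figures quoted on the slide

map_tasks_per_machine = M / machines      # 100.0
reduce_tasks_per_machine = R / machines   # 2.5

# Hypothetical failure: one machine dies and its share of map tasks is
# spread evenly over the remaining machines.
survivors = machines - 1
extra_tasks_per_survivor = math.ceil(map_tasks_per_machine / survivors)

print(map_tasks_per_machine, reduce_tasks_per_machine, extra_tasks_per_survivor)
# 100.0 2.5 1
```

With many small tasks, the work lost on one machine can be redone in parallel as at most one extra task per surviving machine in this model.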

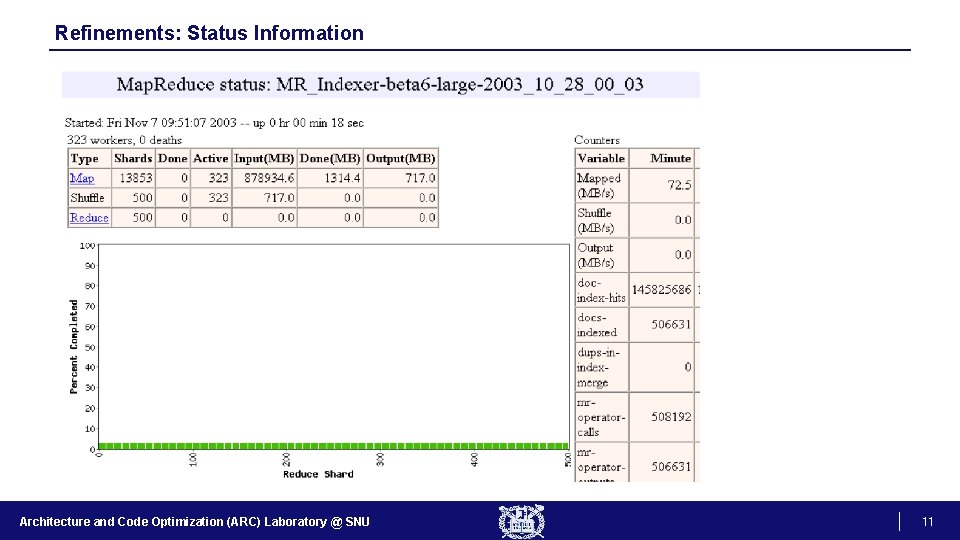

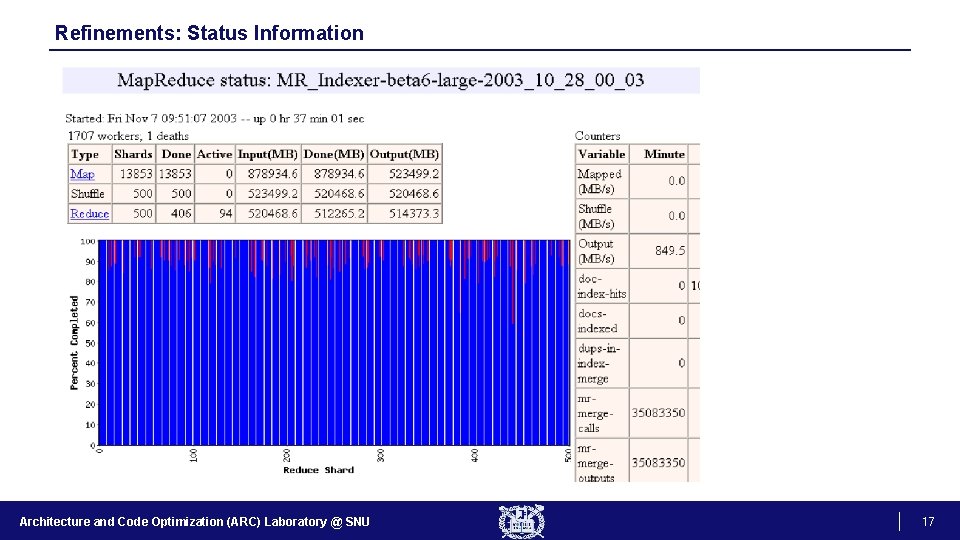

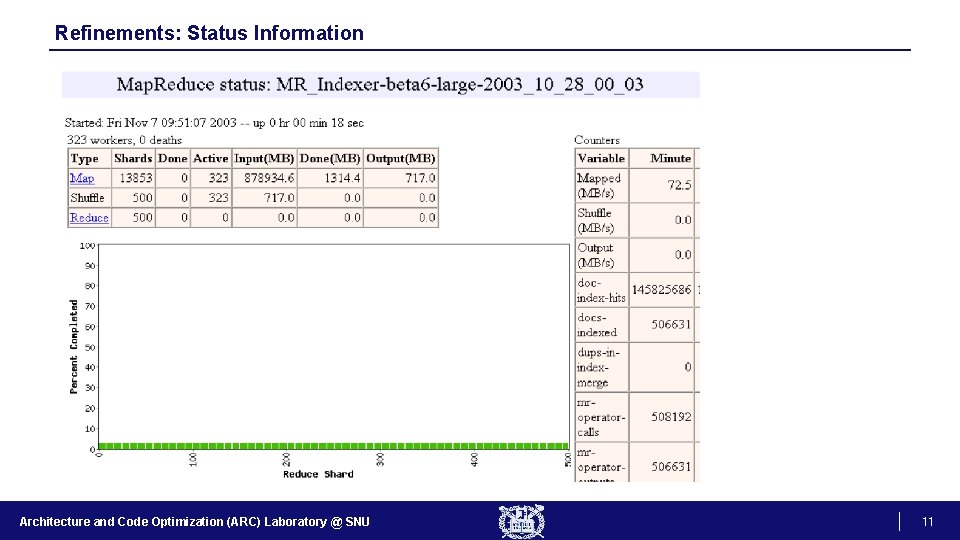

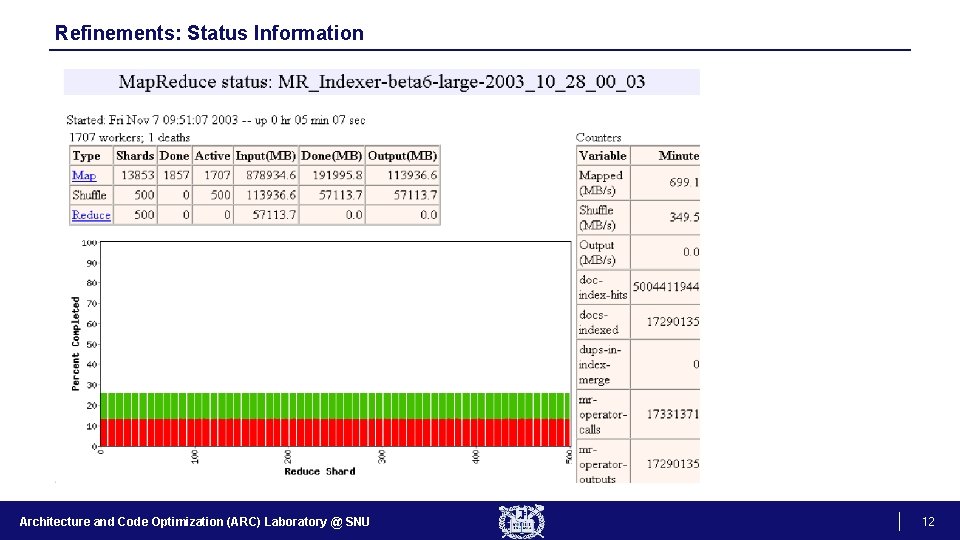

Refinements: Status Information

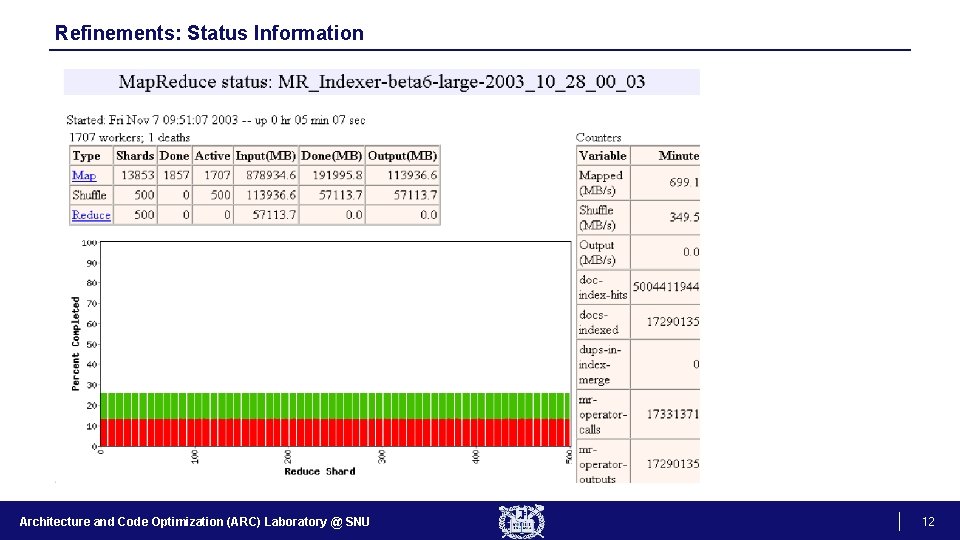


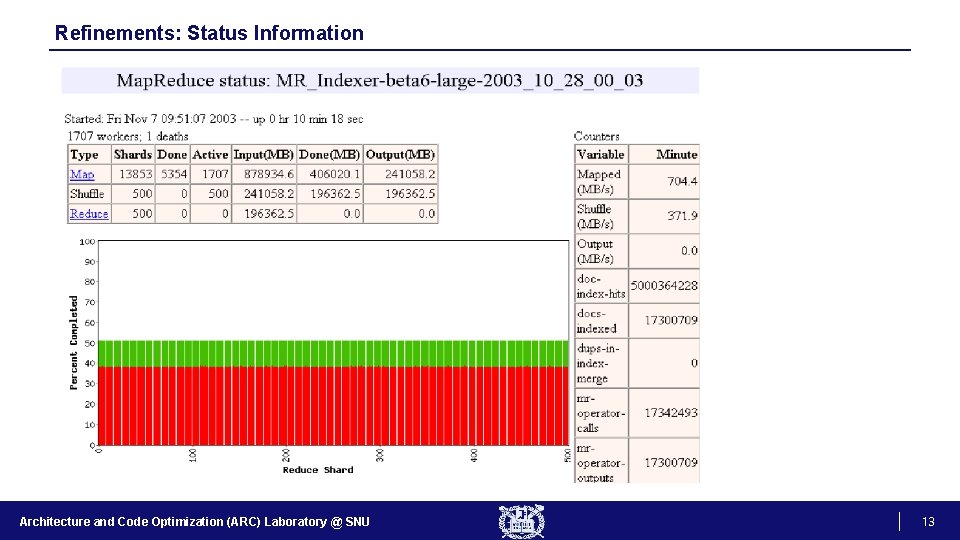

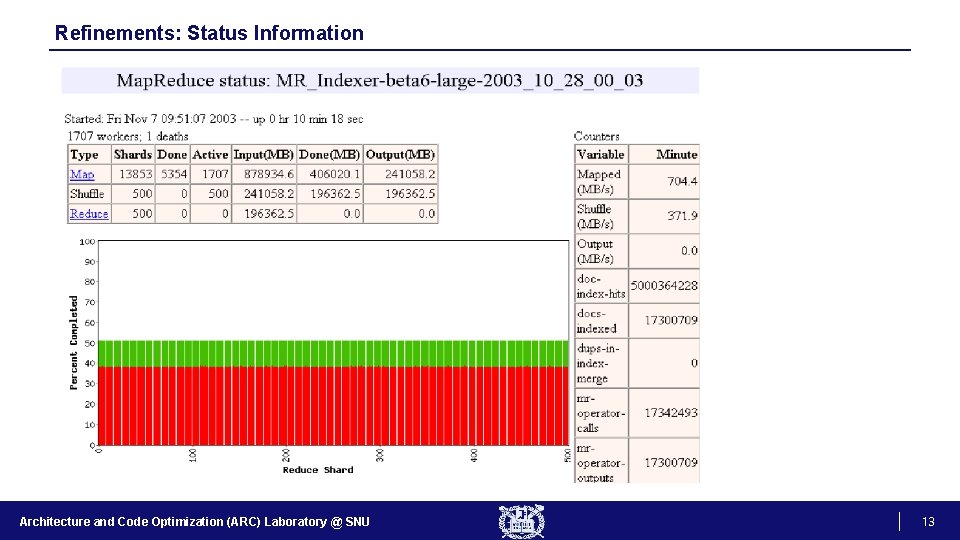


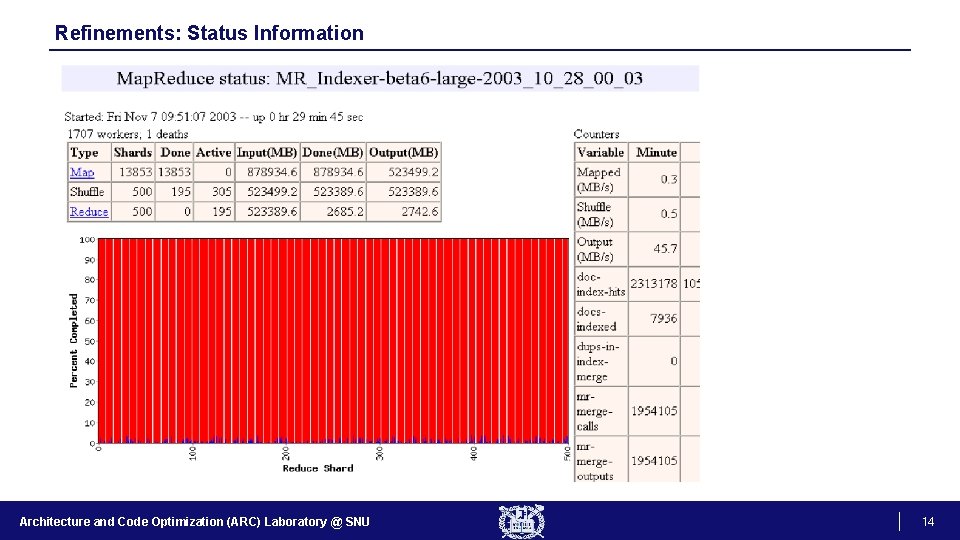

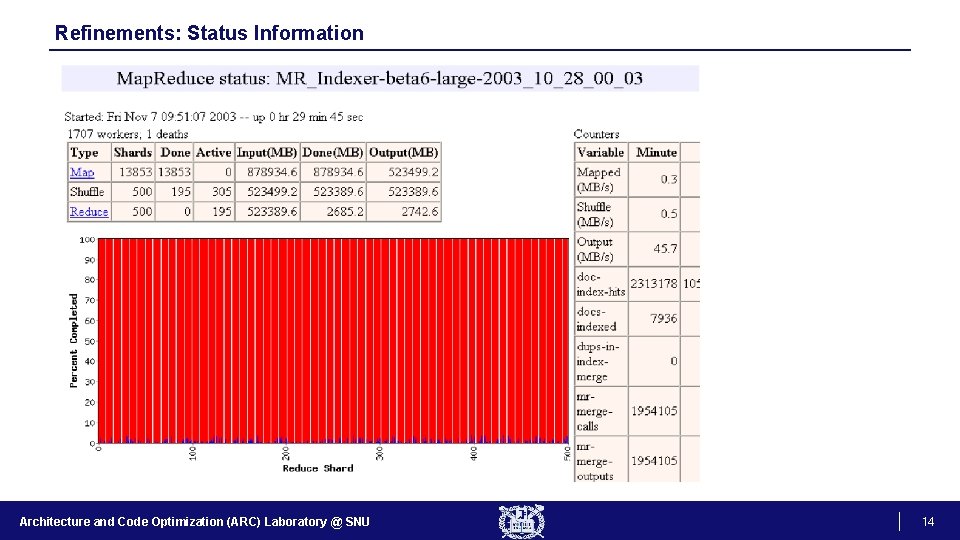


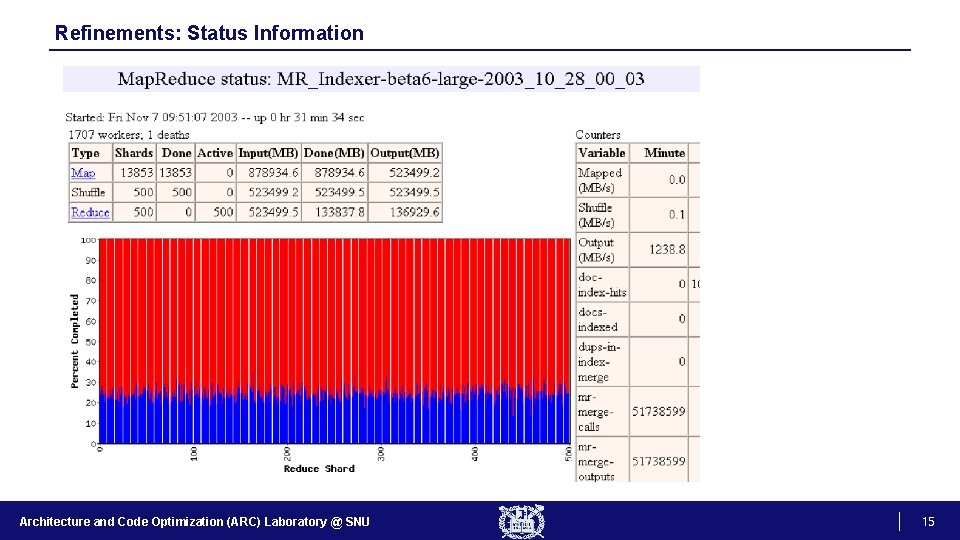

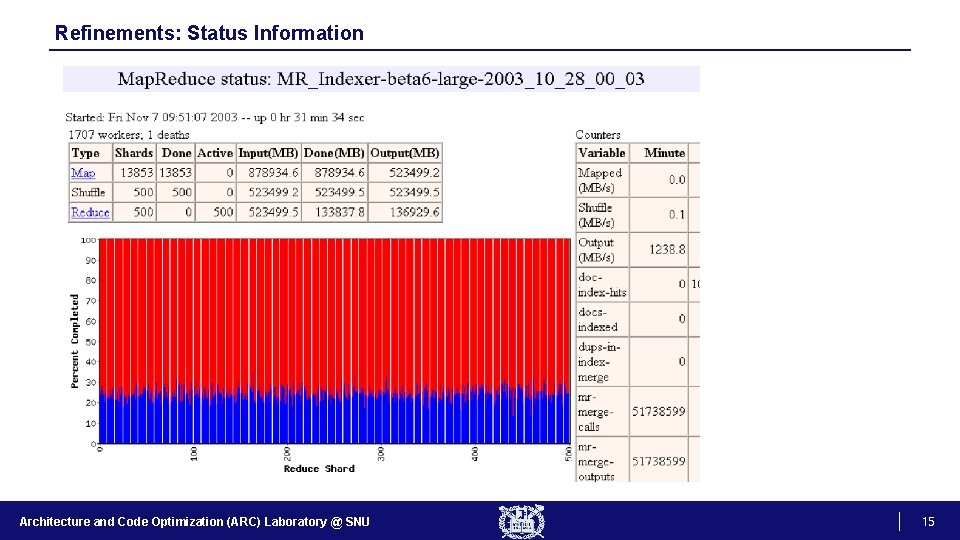


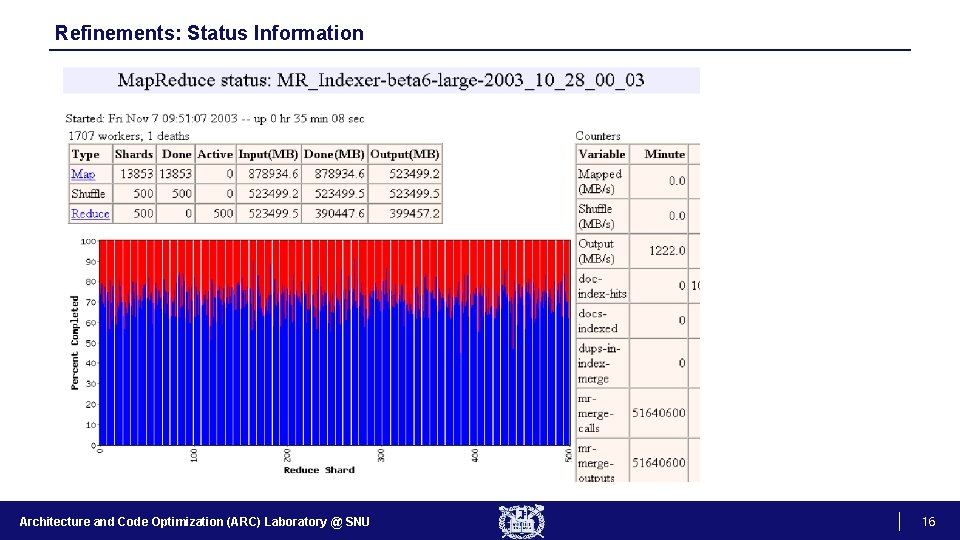

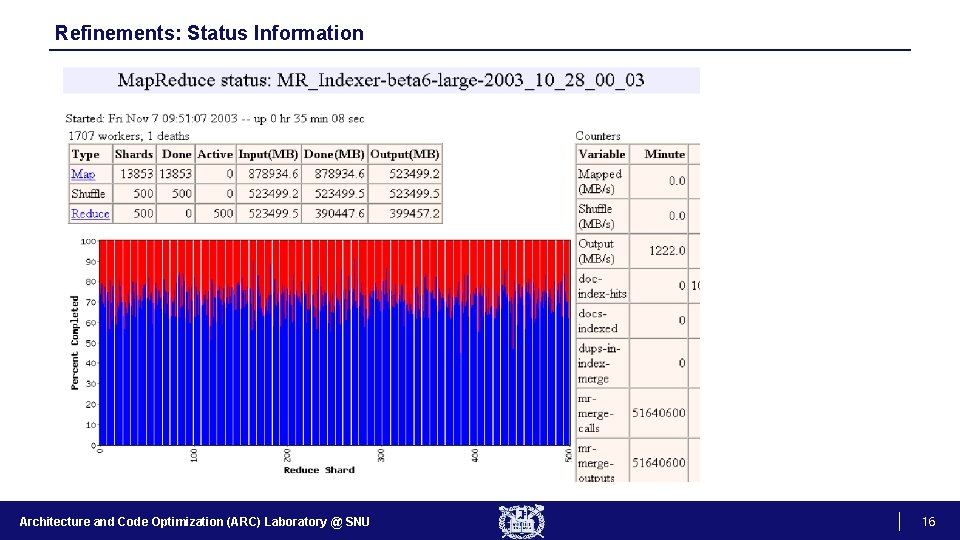


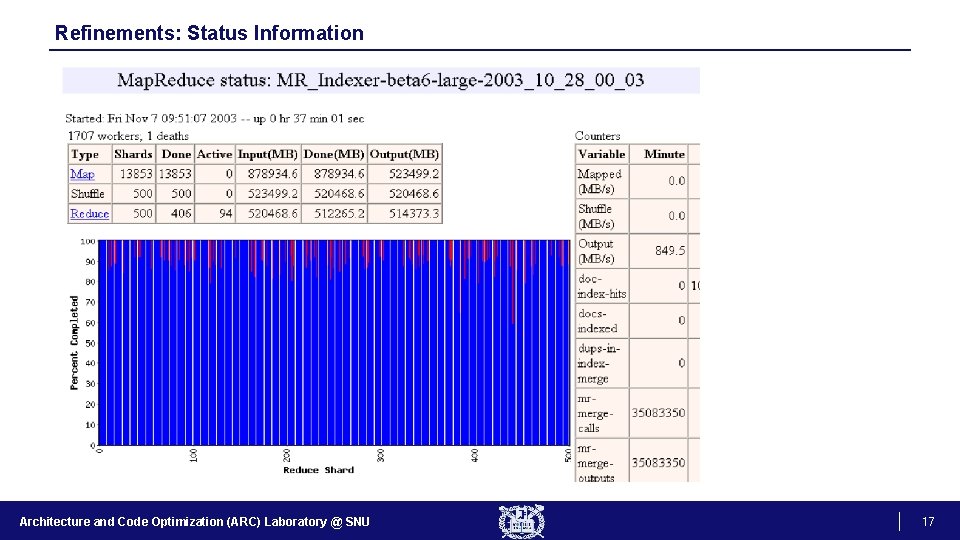


Refinements: Partitioning Function

- Records with the same intermediate key end up at the same reducer
- Default partition function, e.g., hash(key) mod R
- Sometimes useful to override
  - E.g., hash(hostname(URL)) mod R ensures URLs from the same host end up in the same output file
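
Both partition functions are easy to sketch in Python. The function names are illustrative, and `zlib.crc32` is used only as a convenient deterministic hash. The slide does not say which hash function the library uses.

```python
import zlib
from urllib.parse import urlparse

def default_partition(key: str, R: int) -> int:
    # hash(key) mod R
    return zlib.crc32(key.encode("utf-8")) % R

def host_partition(url: str, R: int) -> int:
    # hash(hostname(URL)) mod R: all URLs of one host share a partition.
    host = urlparse(url).hostname or ""
    return zlib.crc32(host.encode("utf-8")) % R

R = 8
urls = ["http://example.com/a", "http://example.com/b/c"]
print(host_partition(urls[0], R) == host_partition(urls[1], R))  # True
```

With `default_partition`, the two URLs are hashed as whole strings and may land in different partitions.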

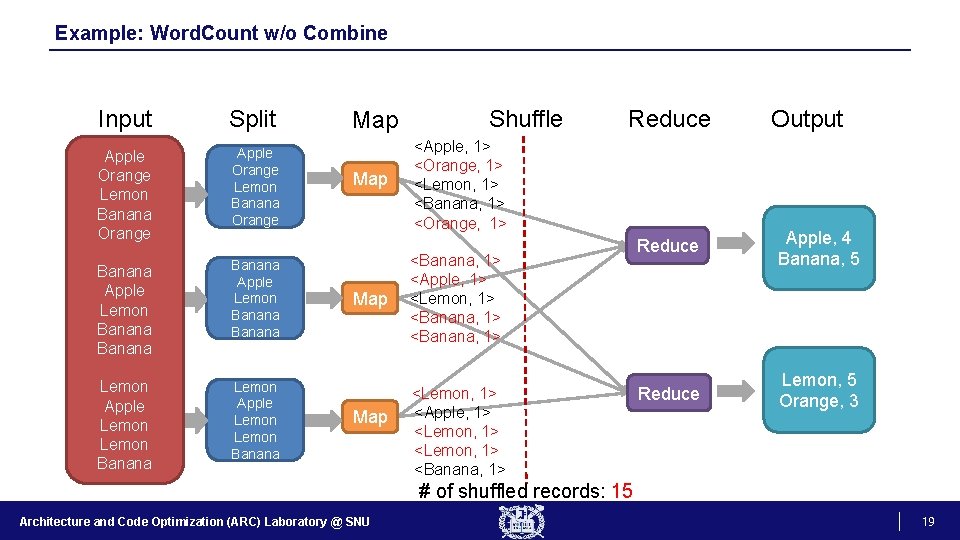

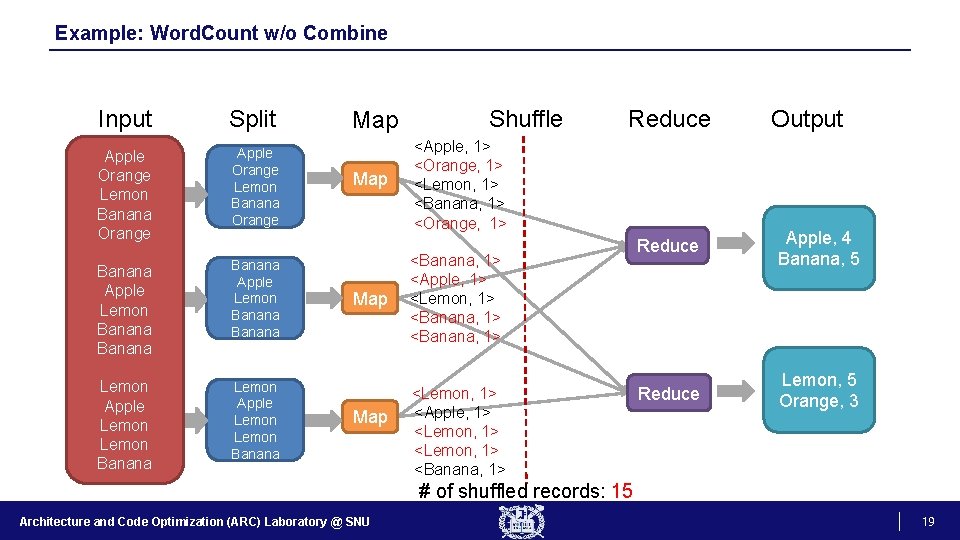

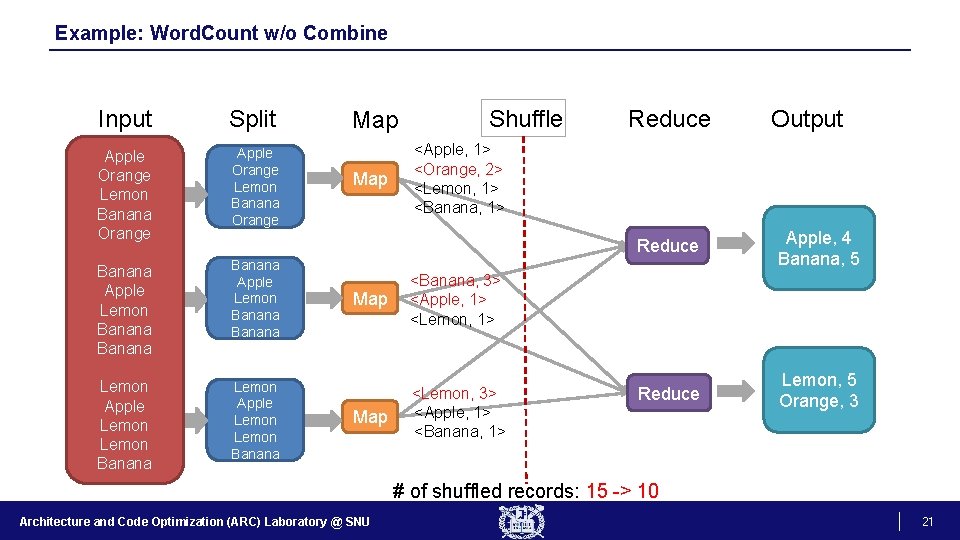

Example: WordCount w/o Combine

Input Split → Map → Shuffle → Reduce → Output

- Map: each map task emits one ⟨word, 1⟩ pair per word in its input split, e.g., ⟨Apple, 1⟩, ⟨Orange, 1⟩, ⟨Lemon, 1⟩, ⟨Banana, 1⟩, ⟨Orange, 1⟩
- Shuffle: every emitted pair is sent over the network
- Output
  - Reduce 1: Apple, 3 and Banana, 5
  - Reduce 2: Lemon, 5 and Orange, 2
- Number of shuffled records: 15

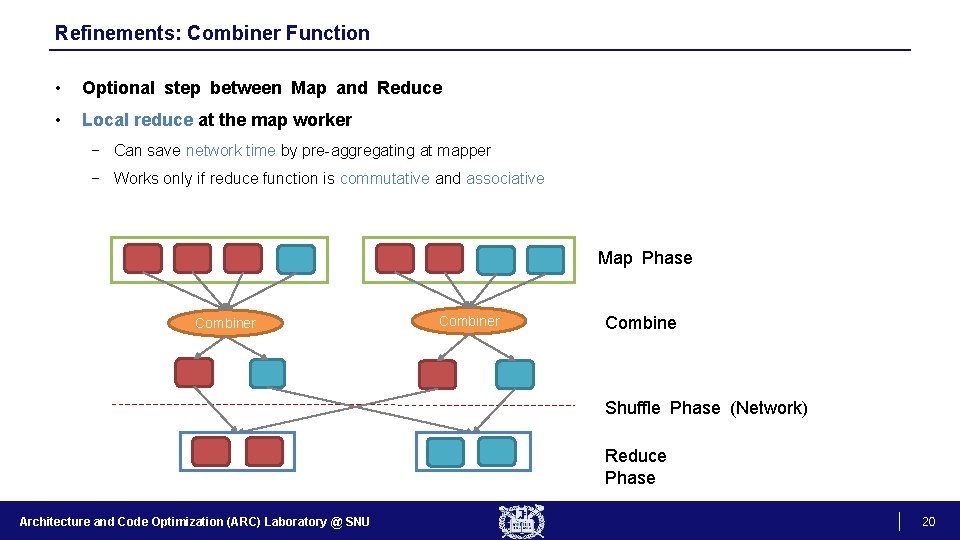

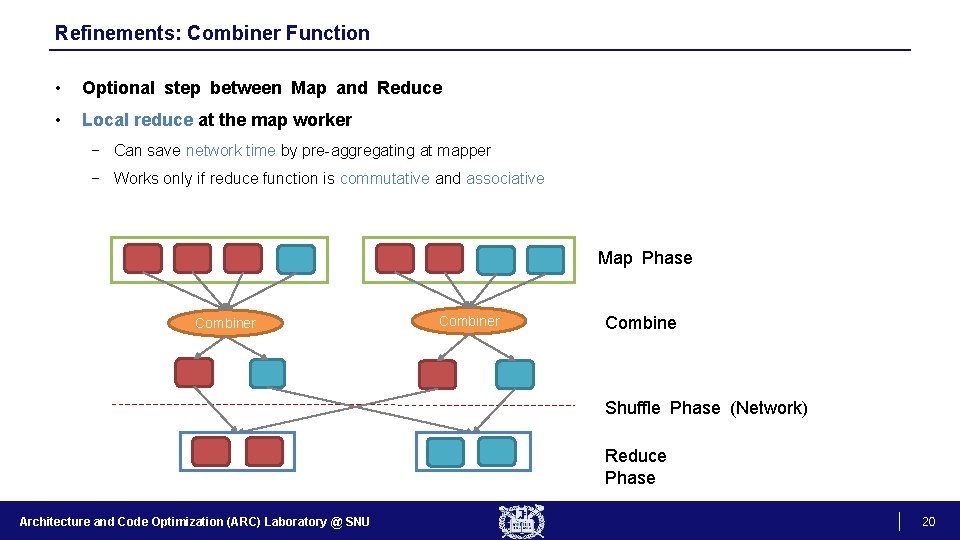

Refinements: Combiner Function

- Optional step between Map and Reduce
- Local reduce at the map worker
  - Can save network time by pre-aggregating at the mapper
  - Works only if the reduce function is commutative and associative

Map Phase → Combine → Shuffle Phase (Network) → Reduce Phase

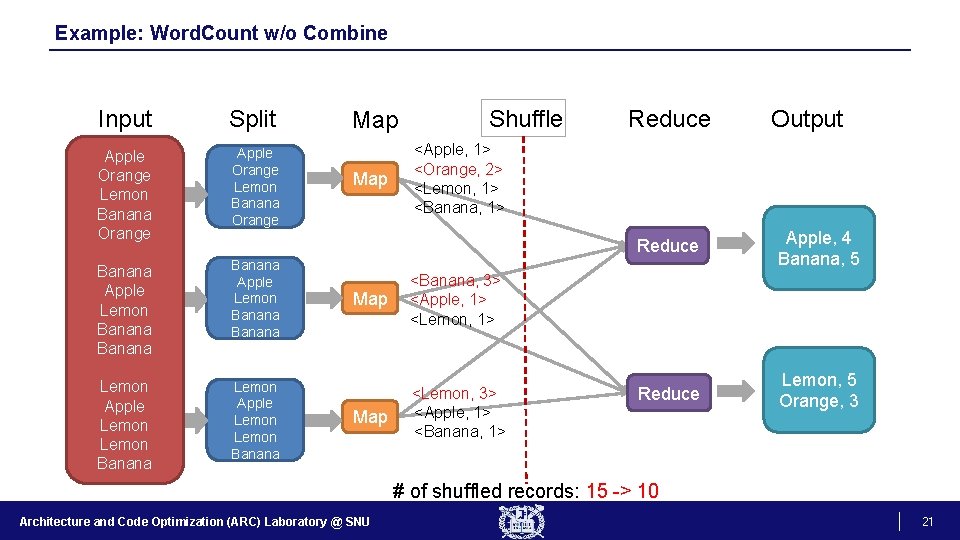

Example: WordCount w/ Combine

Input Split → Map (with Combine) → Shuffle → Reduce → Output

- Combined output of each map task
  - Map 1: ⟨Apple, 1⟩, ⟨Orange, 2⟩, ⟨Lemon, 1⟩, ⟨Banana, 1⟩
  - Map 2: ⟨Banana, 3⟩, ⟨Apple, 1⟩, ⟨Lemon, 1⟩
  - Map 3: ⟨Lemon, 3⟩, ⟨Apple, 1⟩, ⟨Banana, 1⟩
- Output
  - Reduce 1: Apple, 3 and Banana, 5
  - Reduce 2: Lemon, 5 and Orange, 2
- Number of shuffled records: 15 -> 10
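
The effect of the combiner on shuffle volume can be checked with a short Python sketch. The three splits below are an assumption: their per-word counts match the combined pairs listed for each map task, but the word order within each split is made up. All function names are illustrative.

```python
from collections import Counter

# Assumed input splits, one per map task.
splits = [
    ["Apple", "Orange", "Lemon", "Banana", "Orange"],
    ["Banana", "Apple", "Lemon", "Banana", "Banana"],
    ["Lemon", "Apple", "Lemon", "Banana", "Lemon"],
]

def map_words(split):
    # One (word, 1) pair per word occurrence.
    return [(word, 1) for word in split]

def combine(pairs):
    # Local reduce on the map worker: pre-aggregate counts per word.
    local = Counter()
    for word, count in pairs:
        local[word] += count
    return list(local.items())

def reduce_all(shuffled):
    # Sum the counts per word across all shuffled pairs.
    totals = Counter()
    for word, count in shuffled:
        totals[word] += count
    return dict(totals)

without = [p for s in splits for p in map_words(s)]
with_combiner = [p for s in splits for p in combine(map_words(s))]

print(len(without), len(with_combiner))  # 15 10
print(reduce_all(without) == reduce_all(with_combiner))  # True
```

The final counts are identical in both runs because addition is commutative and associative, which is the condition the combiner requires.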

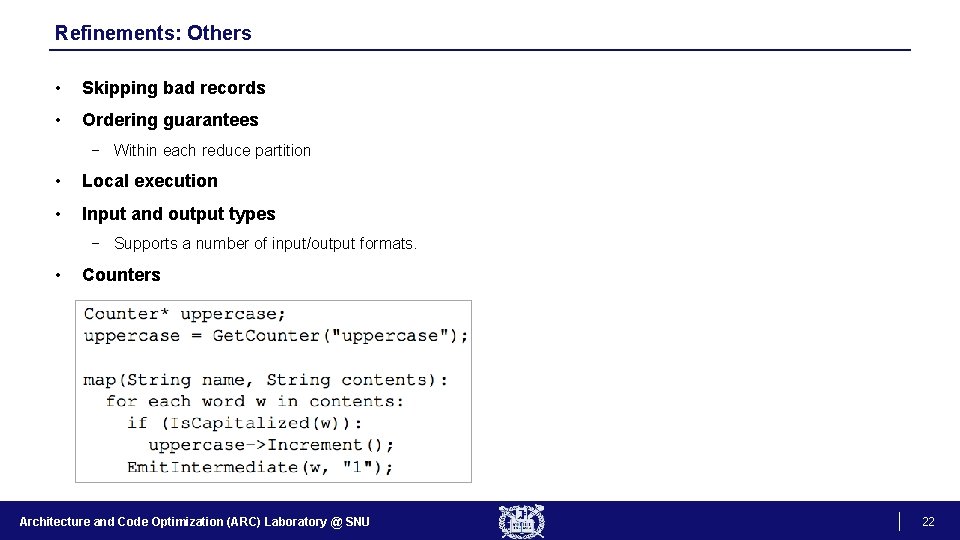

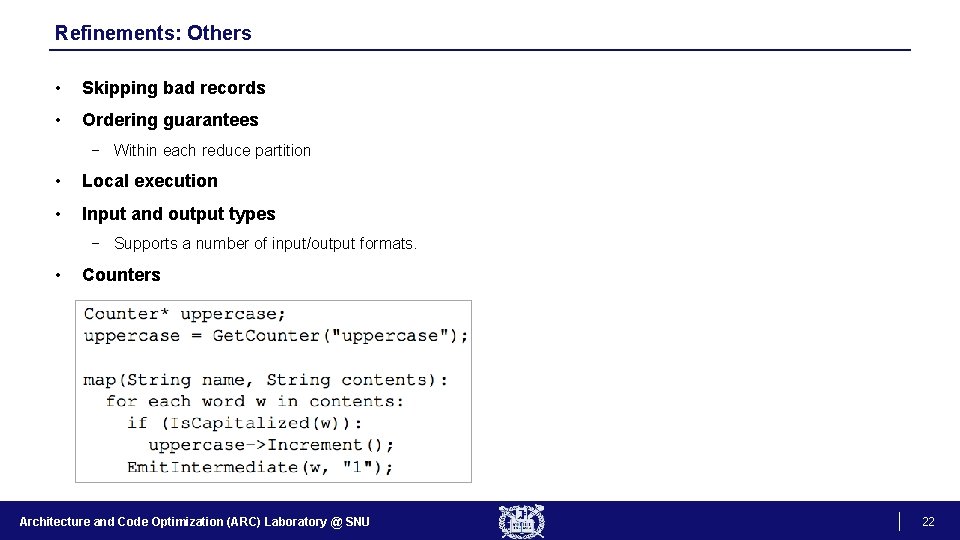

Refinements: Others

- Skipping bad records
- Ordering guarantees
  - Within each reduce partition
- Local execution
- Input and output types
  - Supports a number of input/output formats
- Counters

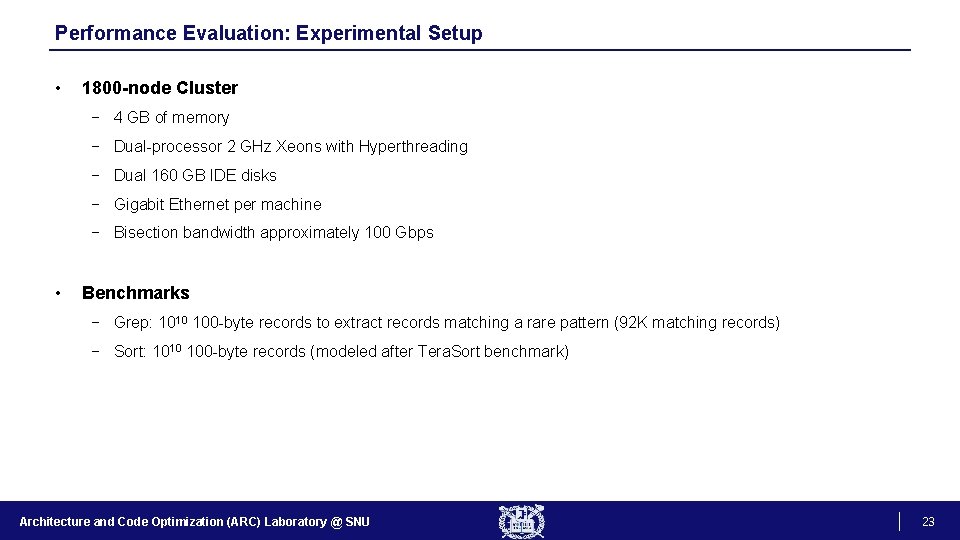

Performance Evaluation: Experimental Setup

- 1800-node cluster
  - 4 GB of memory
  - Dual-processor 2 GHz Xeons with Hyperthreading
  - Dual 160 GB IDE disks
  - Gigabit Ethernet per machine
  - Bisection bandwidth approximately 100 Gbps
- Benchmarks
  - Grep: scans 10^10 100-byte records to extract records matching a rare pattern (92 K matching records)
  - Sort: sorts 10^10 100-byte records (modeled after the TeraSort benchmark)

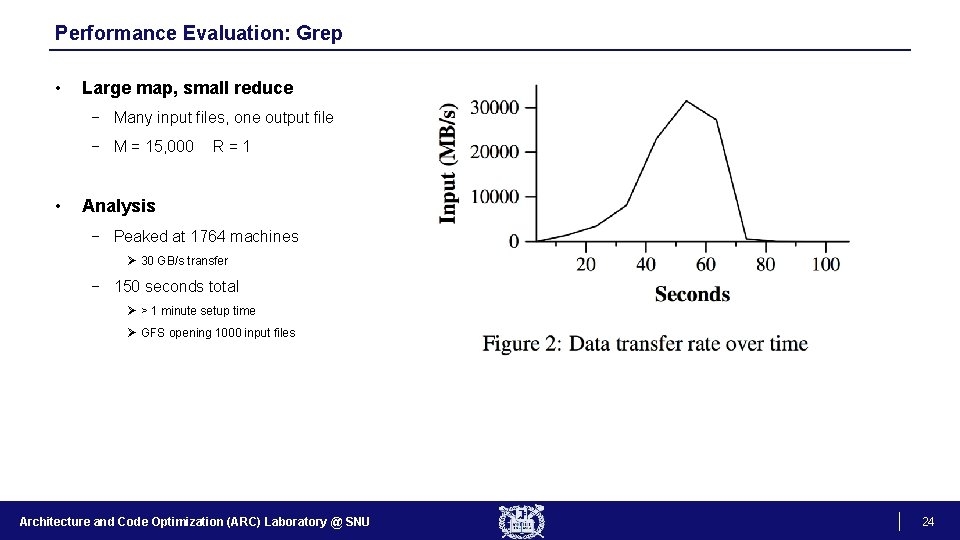

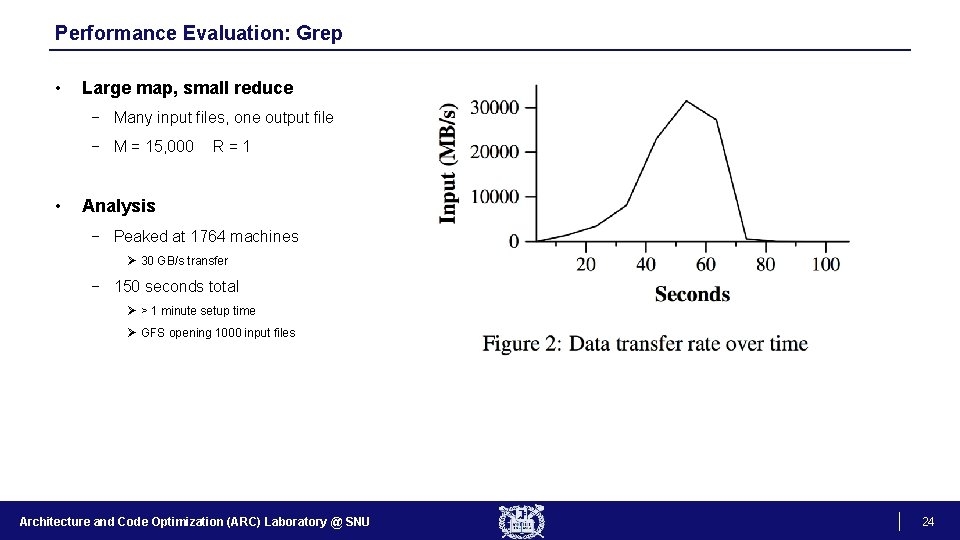

Performance Evaluation: Grep

- Large map, small reduce
  - Many input files, one output file
  - M = 15,000, R = 1
- Analysis
  - Peaked at 1764 machines
    - 30 GB/s transfer
  - 150 seconds total
    - About 1 minute of setup time
    - Includes GFS opening 1000 input files

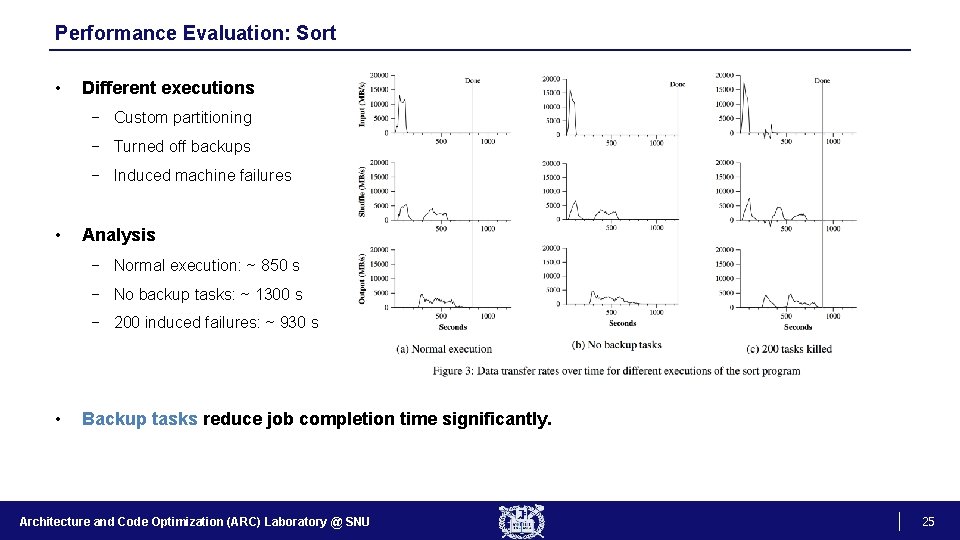

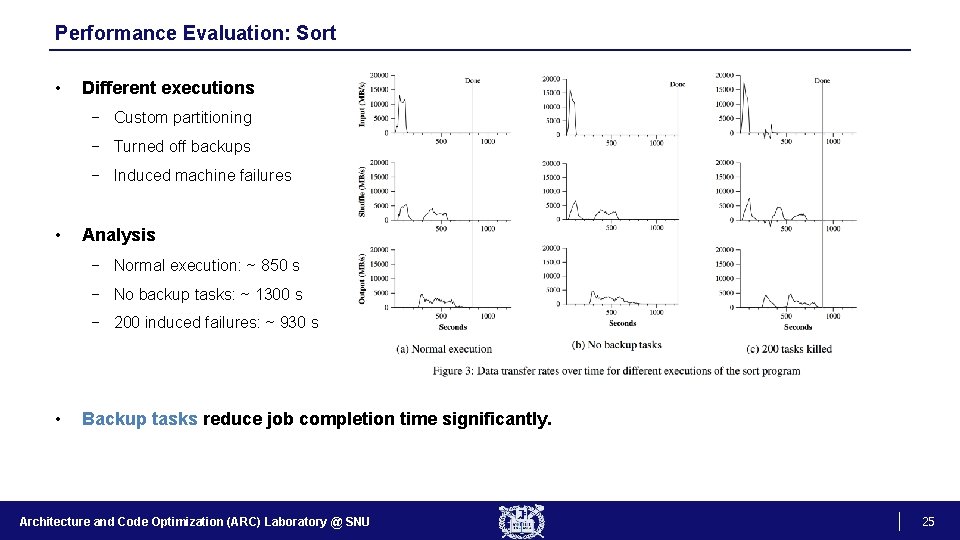

Performance Evaluation: Sort

- Different executions
  - Custom partitioning
  - Turned off backups
  - Induced machine failures
- Analysis
  - Normal execution: ~850 s
  - No backup tasks: ~1300 s
  - 200 induced failures: ~930 s
- Backup tasks reduce job completion time significantly (~850 s with them vs. ~1300 s without).

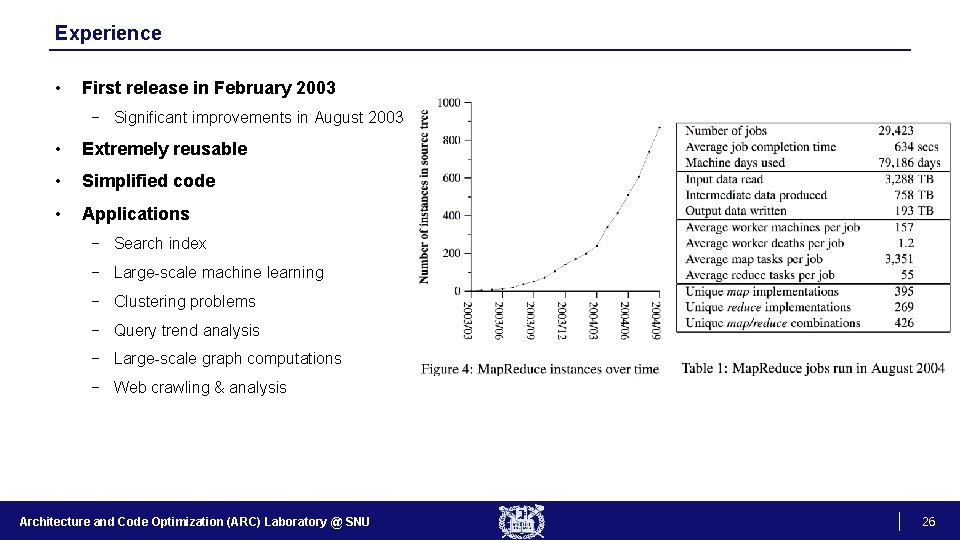

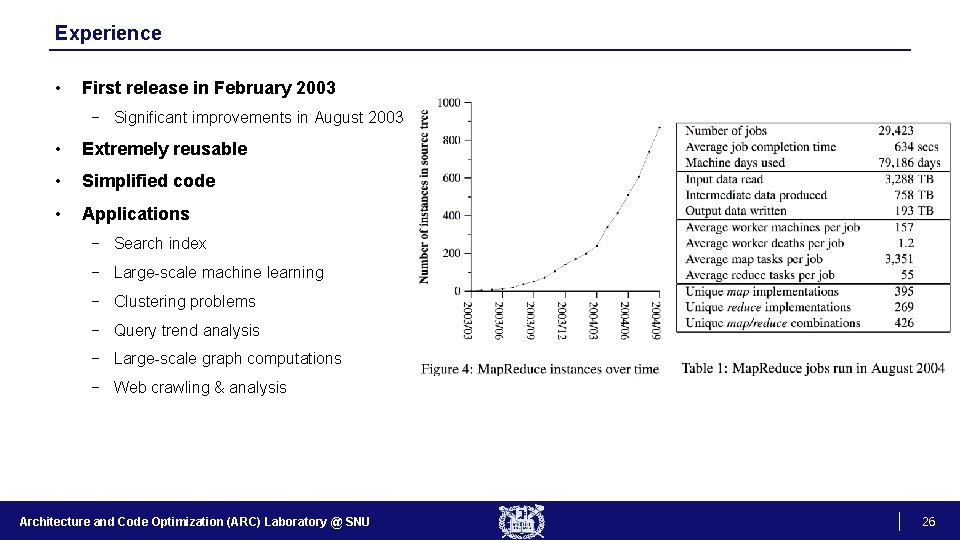

Experience

- First release in February 2003
  - Significant improvements in August 2003
- Extremely reusable
- Simplified code
- Applications
  - Search index
  - Large-scale machine learning
  - Clustering problems
  - Query trend analysis
  - Large-scale graph computations
  - Web crawling & analysis

Conclusion

- Restricted programming model transparently providing:
  - Fault tolerance
  - Parallelization
  - Load balancing
- Benefits
  - Easy to use
  - Applicable to a large variety of problems
  - Scalable
- Key ideas
  - A restricted programming model allows a more robust system
  - Network bandwidth is a scarce resource
  - Redundant execution can alleviate failure or straggler problems

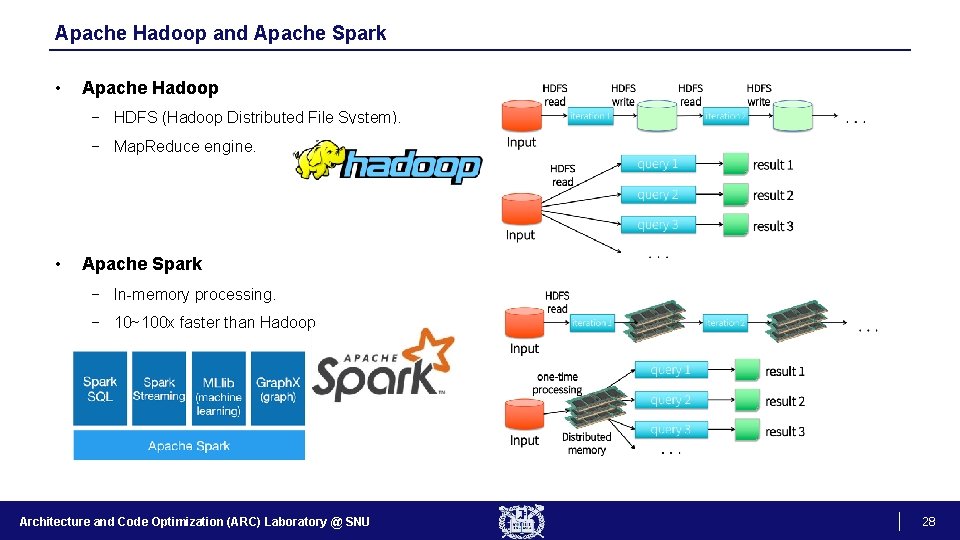

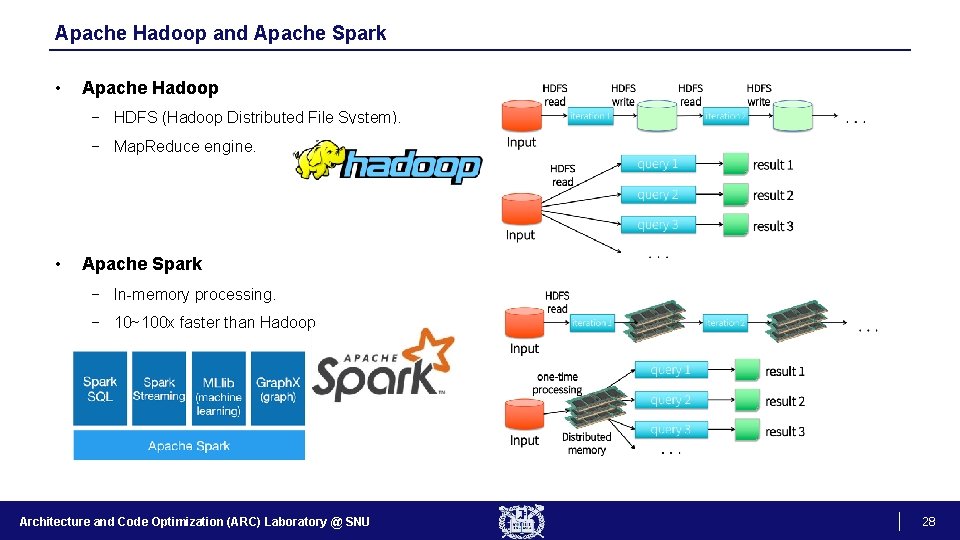

Apache Hadoop and Apache Spark

- Apache Hadoop
  - HDFS (Hadoop Distributed File System)
  - MapReduce engine
- Apache Spark
  - In-memory processing
  - Reported to be 10 to 100x faster than Hadoop MapReduce on some workloads

Thank you!