MapReduce: Simplified Data Processing on Large Clusters

- Slides: 25

MapReduce: Simplified Data Processing on Large Clusters
Jeffrey Dean and Sanjay Ghemawat, Google, Inc.
Presented by Saleh Alnaeli at Kent State University, Fall 2010, Advanced Database Systems course, Prof. Ruoming Jin.

Goals
- Introduction
- Programming Model
- Implementation & model features
- Refinements
- Performance Evaluation
- Conclusion

What is MapReduce?
- A programming model for processing large data sets.
- Consists of two user-supplied functions: Map and Reduce.
- Runs on a large cluster of commodity machines.
- Many real-world tasks are expressible in this model.

Programming Model
- Input and output are sets of key/value pairs.
- The programmer specifies two functions:
  - `map(in_key, in_value) -> list(out_key, intermediate_value)`: processes an input key/value pair and produces a set of intermediate pairs.
  - `reduce(out_key, list(intermediate_value)) -> list(out_value)`: combines all intermediate values for a particular key and produces a set of merged output values (usually just one).
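To make the two signatures concrete, here is a minimal single-process sketch in Python. It is not Google's C++ library: `run_mapreduce`, `length_map` and `length_reduce` are hypothetical names, and the grouping step uses an in-memory dictionary where the real system shuffles intermediate data between machines.

```python
from collections import defaultdict
from typing import Any, Callable, Iterable, Iterator

# map(in_key, in_value) -> list(out_key, intermediate_value)
MapFn = Callable[[Any, Any], Iterable[tuple[Any, Any]]]
# reduce(out_key, list(intermediate_value)) -> list(out_value)
ReduceFn = Callable[[Any, list[Any]], Iterable[Any]]


def run_mapreduce(inputs: Iterable[tuple[Any, Any]],
                  map_fn: MapFn, reduce_fn: ReduceFn) -> dict[Any, list[Any]]:
    """Single-process sketch: map every input pair, group by key, reduce each group."""
    groups: dict[Any, list[Any]] = defaultdict(list)
    # Map phase: each input pair may emit any number of intermediate pairs.
    for in_key, in_value in inputs:
        for out_key, value in map_fn(in_key, in_value):
            groups[out_key].append(value)
    # Reduce phase: one reduce_fn call per distinct intermediate key.
    return {key: list(reduce_fn(key, values)) for key, values in groups.items()}


# Hypothetical usage: group the words of each document by word length.
def length_map(doc_id: str, text: str) -> Iterator[tuple[int, str]]:
    for word in text.split():
        yield len(word), word


def length_reduce(length: int, words: list[str]) -> Iterator[list[str]]:
    yield sorted(words)


result = run_mapreduce([("d1", "a bb cc"), ("d2", "ddd e")], length_map, length_reduce)
print(result)  # {1: [['a', 'e']], 2: [['bb', 'cc']], 3: [['ddd']]}
```

The user code only sees one key/value pair at a time in `map` and one key with all of its values in `reduce`; everything in between belongs to the library.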

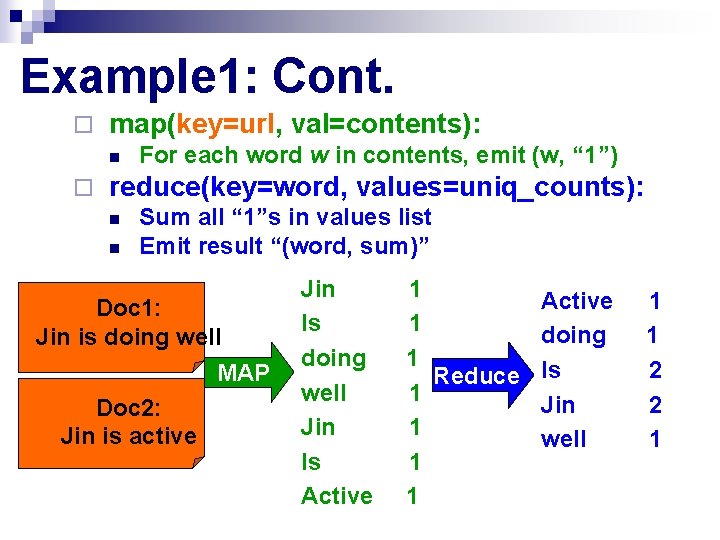

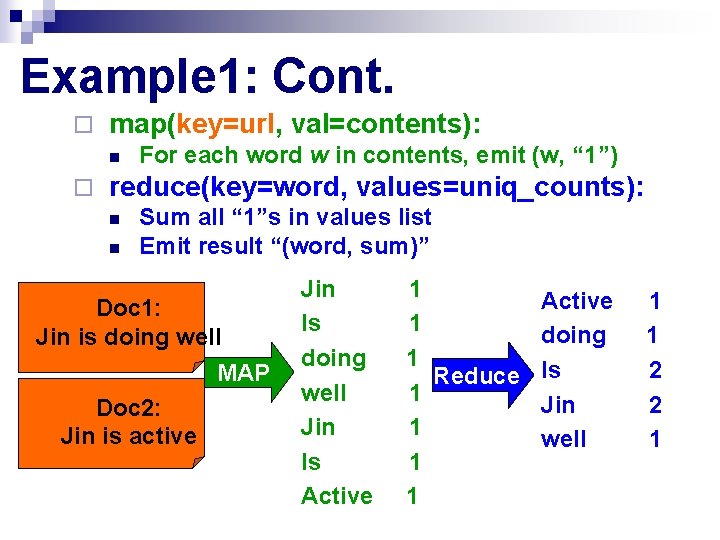

Example 1: Count Words in Documents
- Input consists of (url, contents) pairs.
- `map(key=url, val=contents)`: for each word w in contents, emit (w, "1").
- `reduce(key=word, values=uniq_counts)`: sum all "1"s in the values list and emit the result (word, sum).

Example 1 (cont.)
- Doc 1: "Jin is doing well"; Doc 2: "Jin is active".
- Map emits (Jin, 1), (is, 1), (doing, 1), (well, 1) for Doc 1 and (Jin, 1), (is, 1), (active, 1) for Doc 2.
- Reduce outputs: active 1, doing 1, is 2, Jin 2, well 1.
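The word-count example above can be sketched in Python as follows, assuming whitespace tokenization and integer counts instead of the string "1" used in the slide's pseudocode. `wc_map`, `wc_reduce` and `word_count` are illustrative names, not part of any real API.

```python
from collections import defaultdict
from typing import Iterator


def wc_map(url: str, contents: str) -> Iterator[tuple[str, int]]:
    # Emit (word, 1) for every word occurrence in the document.
    for word in contents.split():
        yield word, 1


def wc_reduce(word: str, counts: list[int]) -> tuple[str, int]:
    # Sum all the 1s emitted for this word.
    return word, sum(counts)


def word_count(docs: dict[str, str]) -> dict[str, int]:
    grouped: dict[str, list[int]] = defaultdict(list)
    for url, contents in docs.items():
        for word, one in wc_map(url, contents):
            grouped[word].append(one)
    return dict(wc_reduce(w, c) for w, c in grouped.items())


docs = {"doc1": "Jin is doing well", "doc2": "Jin is active"}
print(sorted(word_count(docs).items()))
# [('Jin', 2), ('active', 1), ('doing', 1), ('is', 2), ('well', 1)]
```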

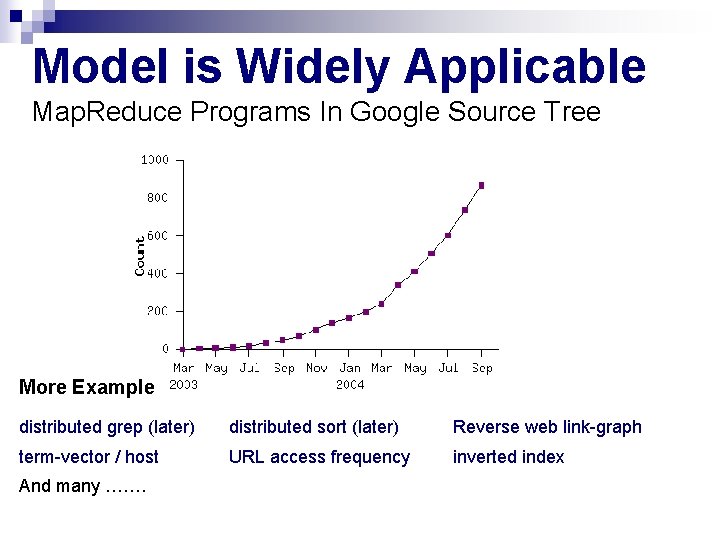

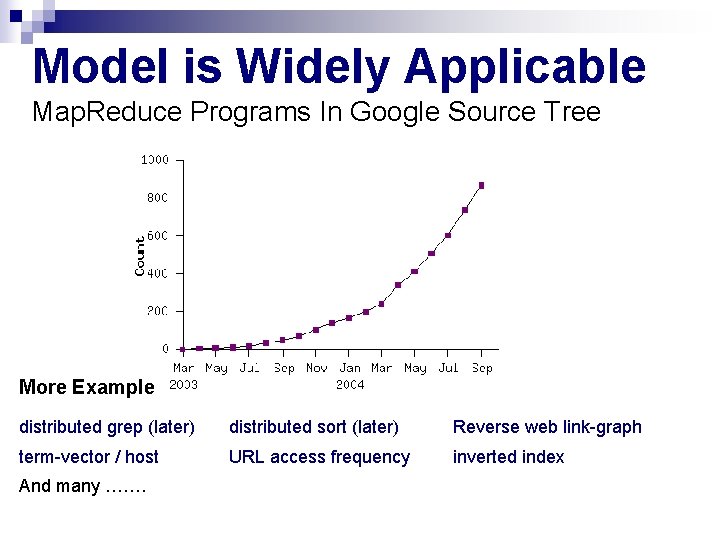

Model is Widely Applicable
MapReduce programs in Google's source tree. More examples:
- distributed grep (later)
- distributed sort (later)
- reverse web-link graph
- term vector per host
- URL access frequency
- inverted index
- and many more
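As one of the listed applications, an inverted index fits the model directly: the paper describes a map function that emits (word, document ID) pairs and a reduce function that sorts the document IDs for each word. A minimal in-memory sketch, assuming lowercase whitespace tokenization and hypothetical function names:

```python
from collections import defaultdict
from typing import Iterator


def index_map(doc_id: str, text: str) -> Iterator[tuple[str, str]]:
    # Emit (word, doc_id) once per distinct word in the document.
    for word in set(text.lower().split()):
        yield word, doc_id


def index_reduce(word: str, doc_ids: list[str]) -> tuple[str, list[str]]:
    # Sort the IDs of the documents that contain this word.
    return word, sorted(doc_ids)


def build_index(docs: dict[str, str]) -> dict[str, list[str]]:
    grouped: dict[str, list[str]] = defaultdict(list)
    for doc_id, text in docs.items():
        for word, d in index_map(doc_id, text):
            grouped[word].append(d)
    return dict(index_reduce(w, ids) for w, ids in grouped.items())


index = build_index({"d2": "Jin is active", "d1": "Jin is doing well"})
print(index["jin"])  # ['d1', 'd2']
```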

Implementation
- Many different implementations are possible; the right choice depends on the environment.
- Typical cluster (in wide use at Google: large clusters of PCs connected via switched networks):
  - hundreds to thousands of dual-processor x86 machines running Linux, with 2 to 4 GB of memory per machine
  - commodity networking hardware with limited bisection bandwidth
  - storage on inexpensive local IDE disks
  - GFS, a distributed file system, manages the data
  - users submit jobs to a scheduling system; each job is a set of tasks that the scheduler maps to available machines in the cluster
- Implemented as a C++ library linked into user programs.

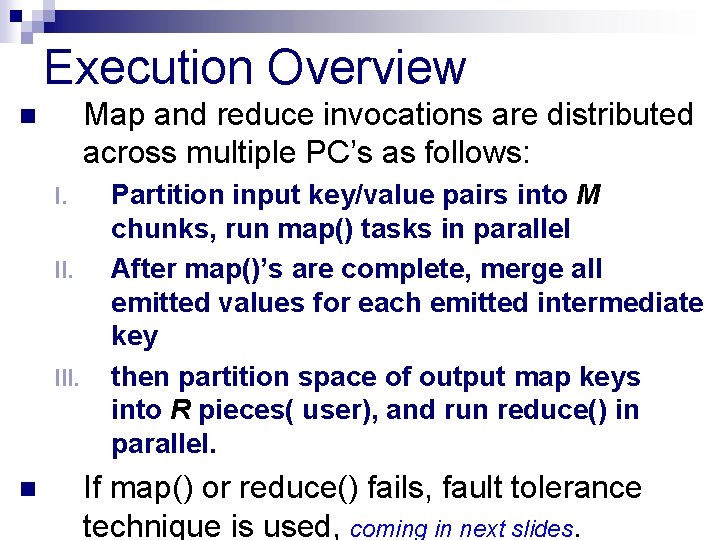

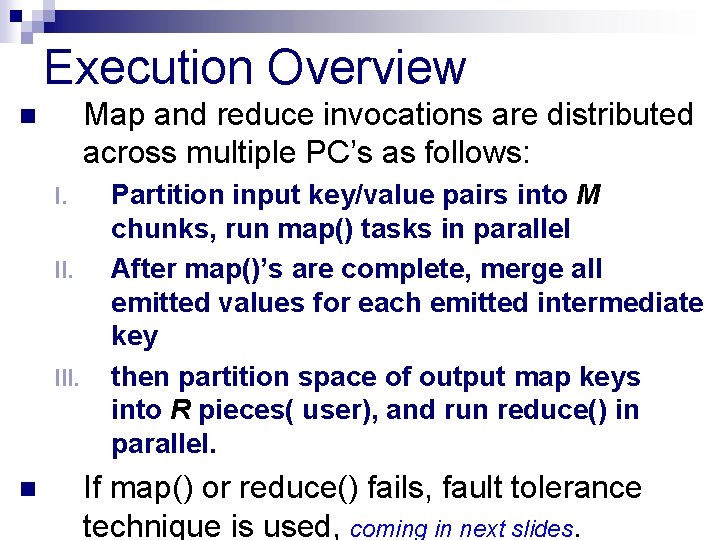

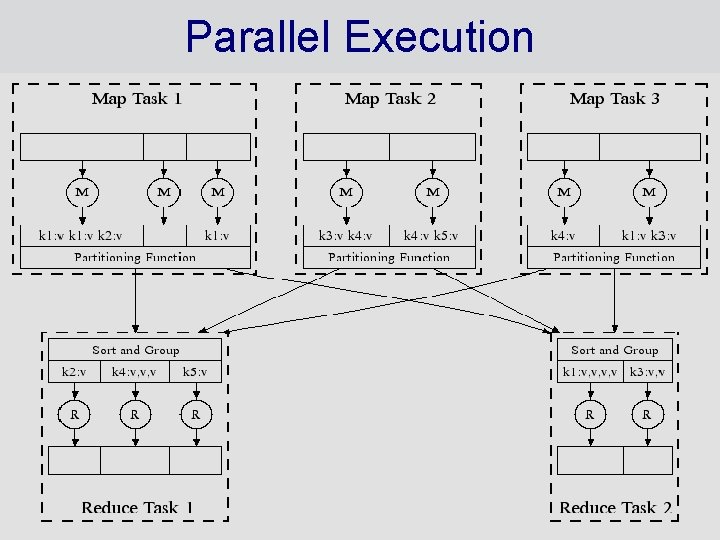

Execution Overview
Map and reduce invocations are distributed across multiple machines as follows:
1. The input key/value pairs are partitioned into M splits, and map() tasks run on them in parallel.
2. After the map() tasks complete, all values emitted for each intermediate key are merged together.
3. The intermediate key space is partitioned into R pieces (R is chosen by the user), and reduce() tasks run in parallel.

If a map() or reduce() task fails, the fault-tolerance mechanism described in the following slides takes over.
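The M input splits and R intermediate partitions can be illustrated with two small helpers. The paper's default partitioning function is hash(key) mod R; this sketch assumes CRC32 as the hash because Python's built-in `hash()` for strings changes between processes. `split_input` and `partition` are hypothetical names.

```python
import zlib


def split_input(records: list[str], m: int) -> list[list[str]]:
    # Divide the input into at most M contiguous splits of roughly equal size.
    size = -(-len(records) // m)  # ceiling division
    return [records[i:i + size] for i in range(0, len(records), size)]


def partition(key: str, r: int) -> int:
    # hash(key) mod R, using a hash that is stable across processes, so every
    # map task sends a given key to the same one of the R reduce tasks.
    return zlib.crc32(key.encode("utf-8")) % r


records = [f"line {i}" for i in range(10)]
splits = split_input(records, m=3)
print([len(s) for s in splits])  # [4, 4, 2]
```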

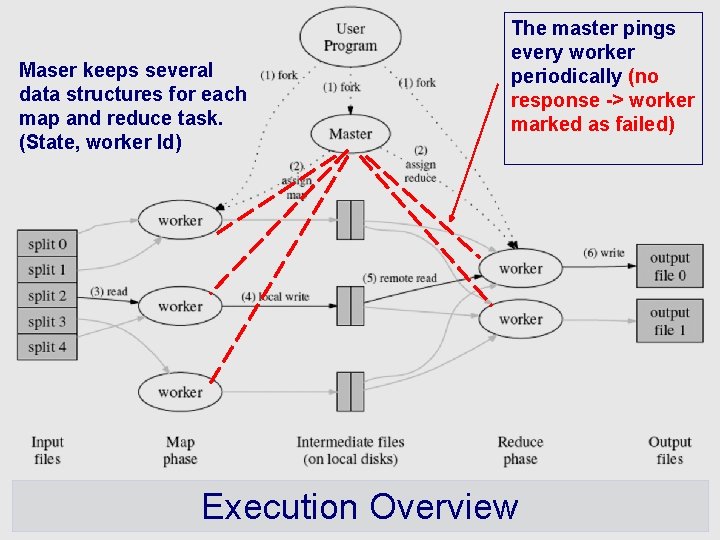

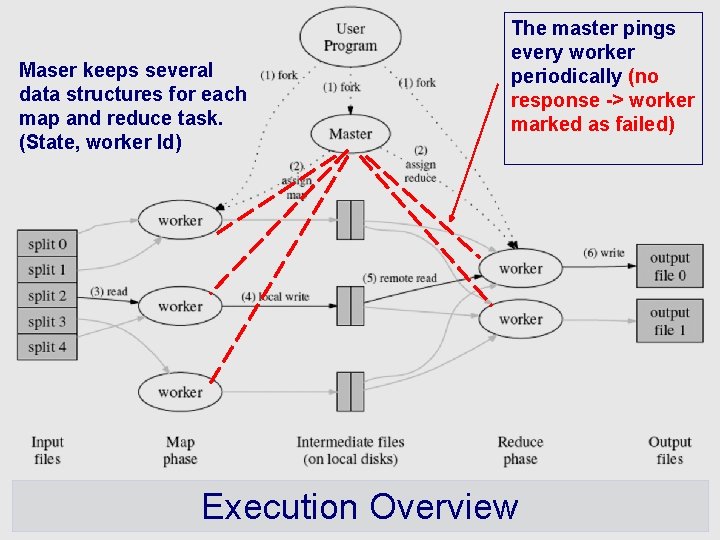

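Execution Overview (cont.)
- The master keeps several data structures: for each map and reduce task it stores the task state (idle, in-progress, or completed) and, for non-idle tasks, the identity of the worker machine.
- The master pings every worker periodically; if a worker does not respond, it is marked as failed.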
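A rough sketch of the master's bookkeeping: one record per task holding its state and worker, plus the time of each worker's last ping reply. The class names, the 10-second timeout and the use of plain seconds as timestamps are assumptions for illustration only.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class State(Enum):
    IDLE = "idle"
    IN_PROGRESS = "in-progress"
    COMPLETED = "completed"


@dataclass
class Task:
    kind: str                        # "map" or "reduce"
    state: State = State.IDLE
    worker: Optional[str] = None     # worker id, set once the task is not idle


@dataclass
class Master:
    tasks: dict[str, Task] = field(default_factory=dict)
    last_reply: dict[str, float] = field(default_factory=dict)
    timeout: float = 10.0            # hypothetical ping timeout, in seconds

    def record_ping_reply(self, worker: str, now: float) -> None:
        # Called whenever a worker answers the master's periodic ping.
        self.last_reply[worker] = now

    def failed_workers(self, now: float) -> set[str]:
        # A worker with no reply within `timeout` seconds is marked as failed.
        return {w for w, t in self.last_reply.items() if now - t > self.timeout}


master = Master()
master.tasks["map-0"] = Task("map", State.IN_PROGRESS, "w1")
master.record_ping_reply("w1", now=0.0)
master.record_ping_reply("w2", now=8.0)
print(master.failed_workers(now=12.0))  # {'w1'}
```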

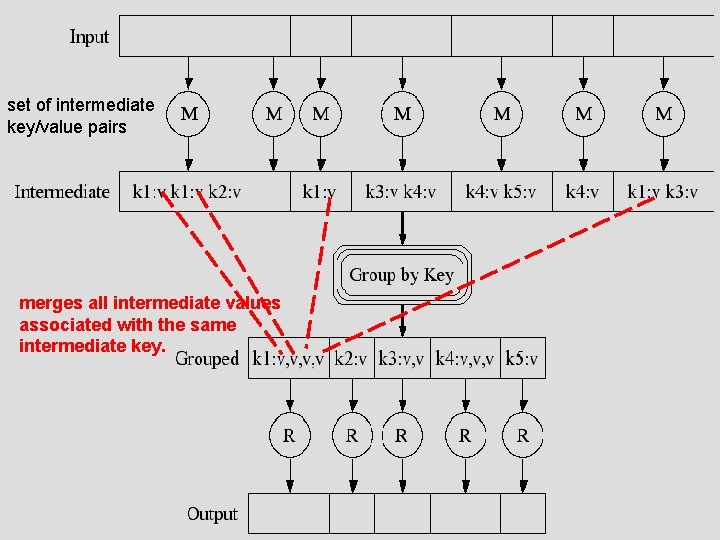

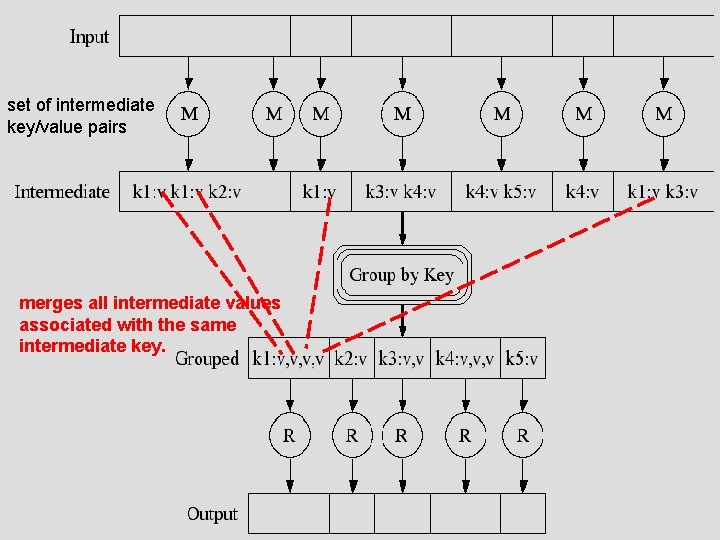

Execution: map produces a set of intermediate key/value pairs; reduce then merges all intermediate values associated with the same intermediate key.
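The merge step can be pictured as a sort followed by a scan: in the paper, a reduce worker sorts the intermediate data it has read by key so that all occurrences of the same key are adjacent. A small sketch with Python's `sorted` and `itertools.groupby` (the function name is hypothetical):

```python
from itertools import groupby
from operator import itemgetter


def group_intermediate(pairs: list[tuple[str, int]]) -> list[tuple[str, list[int]]]:
    # Sort by intermediate key so equal keys become adjacent, then collect
    # each run of equal keys into one (key, values) group.
    ordered = sorted(pairs, key=itemgetter(0))
    return [(key, [v for _, v in group]) for key, group in groupby(ordered, key=itemgetter(0))]


pairs = [("is", 1), ("Jin", 1), ("well", 1), ("is", 1), ("Jin", 1)]
print(group_intermediate(pairs))
# [('Jin', [1, 1]), ('is', [1, 1]), ('well', [1])]
```

`groupby` only merges adjacent items, which is why the sort has to come first.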

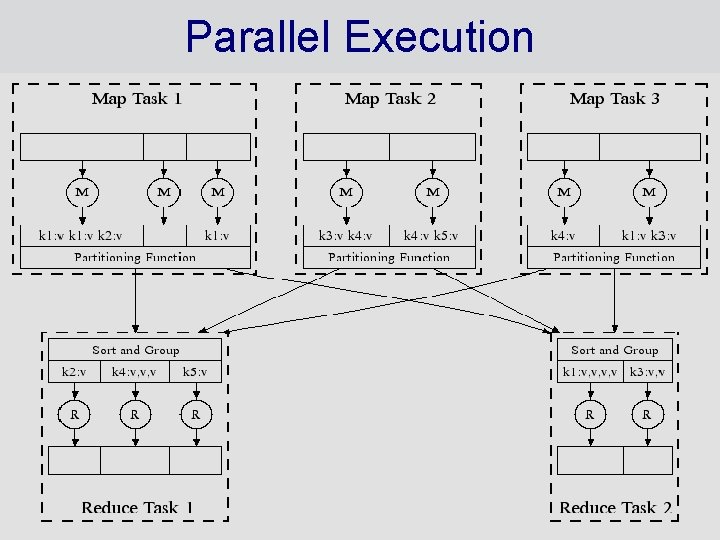

Parallel Execution

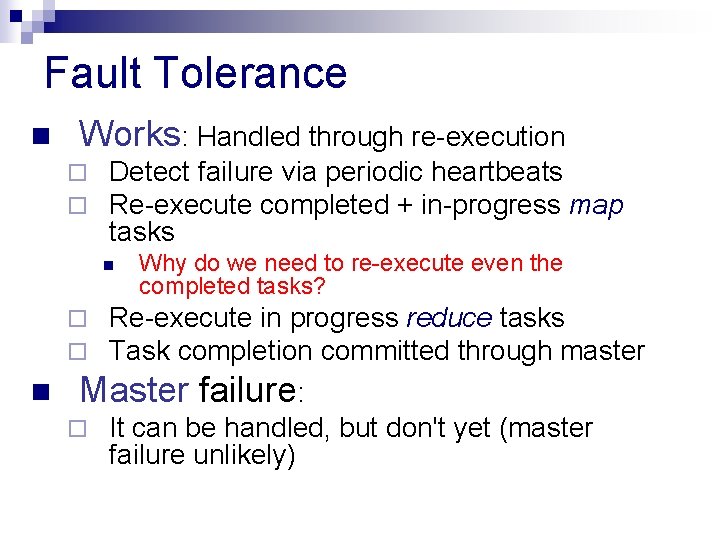

Fault Tolerance
- Worker failure: handled through re-execution.
  - Failures are detected via periodic heartbeats.
  - Completed and in-progress map tasks on the failed worker are re-executed. Why do we need to re-execute even the completed tasks?
  - In-progress reduce tasks are re-executed.
  - Task completion is committed through the master.
- Master failure: it could be handled, but the current implementation does not (master failure is unlikely).
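The slide's question has a short answer in the paper: completed map tasks must be re-executed because their output is stored on the failed machine's local disk and is no longer accessible, whereas completed reduce tasks are not re-executed because their output is already in the global file system. The sketch below applies that rule to a task table; the dictionary layout and function name are assumptions.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"
    IN_PROGRESS = "in-progress"
    COMPLETED = "completed"


def reset_tasks_of_failed_worker(tasks: dict[str, dict], failed: str) -> list[str]:
    """Reset to idle, and return the ids of, tasks lost when `failed` dies."""
    rescheduled = []
    for task_id, task in tasks.items():
        if task["worker"] != failed:
            continue
        # Map output sits on the failed machine's local disk, so even completed
        # map tasks are lost; completed reduce output is in the global file system.
        lost = task["kind"] == "map" or task["state"] is State.IN_PROGRESS
        if lost and task["state"] is not State.IDLE:
            task["state"], task["worker"] = State.IDLE, None
            rescheduled.append(task_id)
    return rescheduled


tasks = {
    "m0": {"kind": "map", "state": State.COMPLETED, "worker": "w1"},
    "m1": {"kind": "map", "state": State.IN_PROGRESS, "worker": "w2"},
    "r0": {"kind": "reduce", "state": State.COMPLETED, "worker": "w1"},
    "r1": {"kind": "reduce", "state": State.IN_PROGRESS, "worker": "w1"},
}
print(reset_tasks_of_failed_worker(tasks, "w1"))  # ['m0', 'r1']
```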

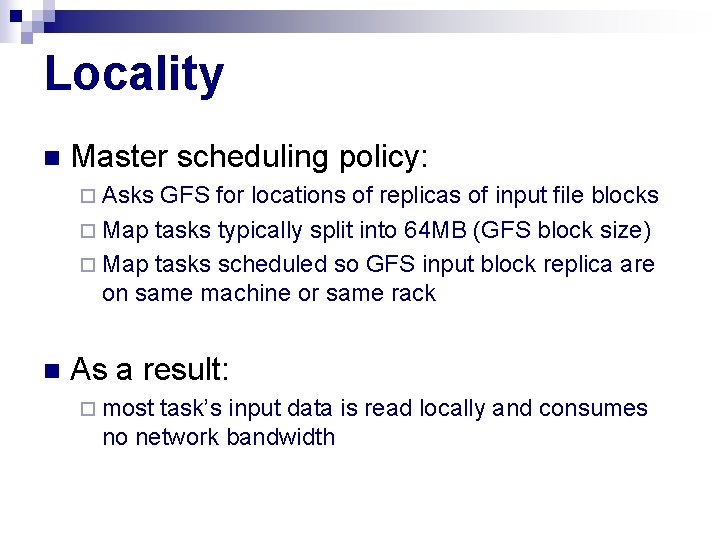

Locality
- Master scheduling policy:
  - Asks GFS for the locations of the replicas of the input file blocks.
  - Map input is typically split into 64 MB pieces (the GFS block size).
  - Map tasks are scheduled so that a replica of the input block is on the same machine or, failing that, in the same rack.
- As a result, most tasks' input data is read locally and consumes no network bandwidth.
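The locality preference can be sketched as a three-step choice of worker for a map task: a machine holding a replica of the input block, else one in the same rack as a replica, else any idle machine. The function signature and the rack lookup table are hypothetical.

```python
from typing import Optional


def pick_worker(replicas: set[str], idle_workers: list[str],
                rack_of: dict[str, str]) -> Optional[str]:
    # 1) Prefer an idle worker that stores a replica of the input block.
    for w in idle_workers:
        if w in replicas:
            return w
    # 2) Otherwise prefer an idle worker in the same rack as some replica.
    replica_racks = {rack_of[r] for r in replicas}
    for w in idle_workers:
        if rack_of[w] in replica_racks:
            return w
    # 3) Fall back to any idle worker (input is then read over the network).
    return idle_workers[0] if idle_workers else None


rack_of = {"a1": "rackA", "a2": "rackA", "b1": "rackB"}
print(pick_worker({"a1"}, ["b1", "a2"], rack_of))  # a2
```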

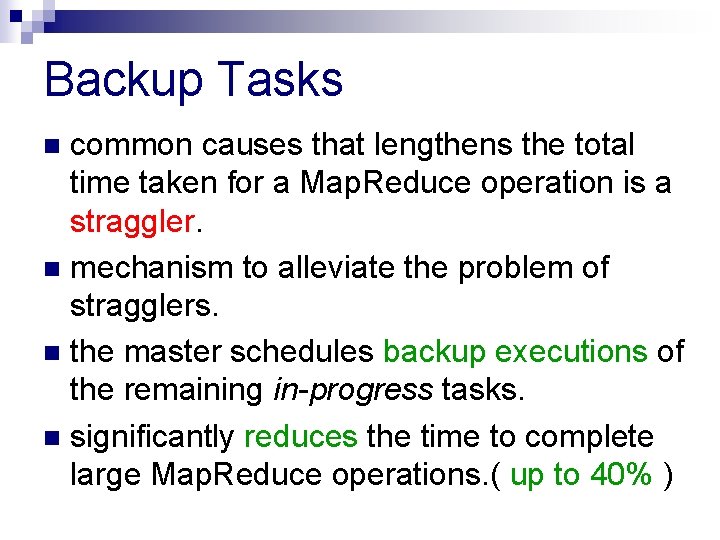

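Backup Tasks
- A common cause that lengthens the total time taken by a MapReduce operation is a straggler: a machine that takes an unusually long time to complete one of the last few tasks.
- Mechanism to alleviate the problem of stragglers: when the operation is close to completion, the master schedules backup executions of the remaining in-progress tasks; a task is marked completed when either the primary or the backup execution finishes.
- This significantly reduces the time to complete large MapReduce operations (in the paper's sort benchmark, disabling backup tasks made the job take 44% longer).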
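A toy simulation shows why backup tasks help. It assumes all tasks start at time 0 on separate workers and that, at a chosen moment, every still-running task gets a backup copy; a task counts as done when either copy finishes. The durations are made-up numbers, not measurements from the paper, and the function name is hypothetical.

```python
from typing import Optional


def job_completion_time(durations: list[float], backup_at: Optional[float] = None,
                        backup_duration: float = 0.0) -> float:
    """Simulated finish time of a job whose tasks all start at t=0 in parallel."""
    finish_times = []
    for d in durations:
        done = d  # time at which the primary execution finishes
        if backup_at is not None and d > backup_at:
            # Still in progress when backups are launched: the task completes
            # as soon as either the primary or the backup copy finishes.
            done = min(d, backup_at + backup_duration)
        finish_times.append(done)
    return max(finish_times)


tasks = [10.0, 11.0, 12.0, 60.0]  # hypothetical durations with one straggler
print(job_completion_time(tasks))                                         # 60.0
print(job_completion_time(tasks, backup_at=11.5, backup_duration=12.0))  # 23.5
```

Without backups the single straggler determines the job time; with backups the job ends when the backup copy of the straggler completes.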

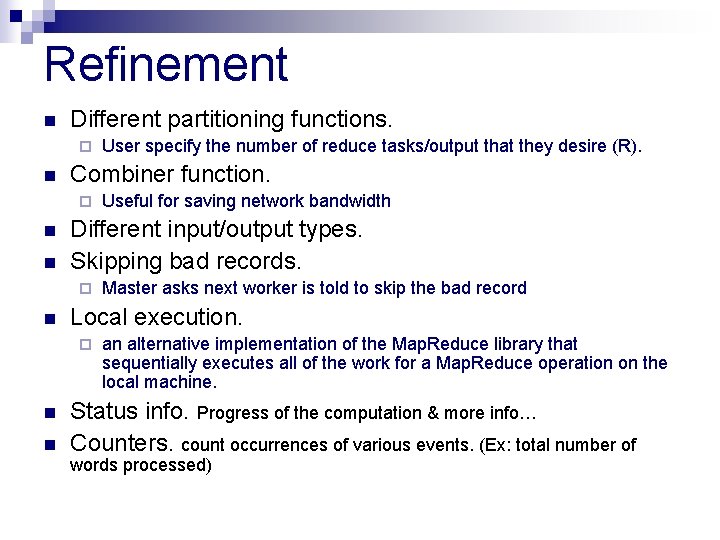

Refinements
- Different partitioning functions: users specify the number of reduce tasks/output files they desire (R) and can supply their own partitioning function.
- Combiner function: useful for saving network bandwidth.
- Different input/output types.
- Skipping bad records: the master tells the next worker to skip the bad record.
- Local execution: an alternative implementation of the MapReduce library that sequentially executes all of the work for a MapReduce operation on the local machine.
- Status information: progress of the computation and other information.
- Counters: count occurrences of various events (e.g., the total number of words processed).
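Two of these refinements in sketch form. The combiner does partial merging on the map worker, here using `collections.Counter` for word counts; the custom partitioner follows the paper's example of hash(Hostname(urlkey)) mod R, so all URLs from one host land in the same output file. The CRC32 hash, the `urlparse`-based host extraction and the function names are assumptions of this sketch.

```python
import zlib
from collections import Counter
from urllib.parse import urlparse


def map_with_combiner(contents: str) -> list[tuple[str, int]]:
    # Without a combiner, the map task would send one (word, 1) pair per
    # occurrence; the combiner sums them locally before anything is sent.
    return sorted(Counter(contents.split()).items())


def host_partition(url_key: str, r: int) -> int:
    # User-supplied partitioning: hash of the URL's host, mod R, so all URLs
    # from the same host go to the same reduce task.
    host = urlparse(url_key).hostname or ""
    return zlib.crc32(host.encode("utf-8")) % r


print(map_with_combiner("the cat saw the dog"))
# [('cat', 1), ('dog', 1), ('saw', 1), ('the', 2)]
```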

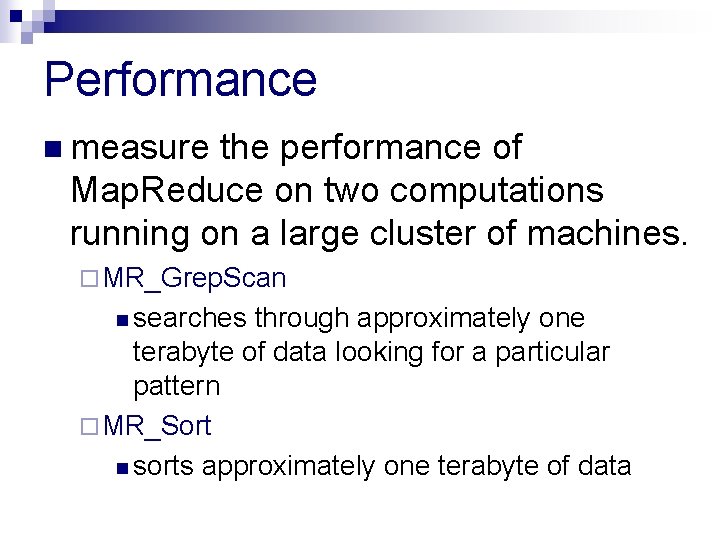

Performance
- We measure the performance of MapReduce on two computations running on a large cluster of machines:
  - MR_Grep (scan): searches through approximately one terabyte of data looking for a particular pattern.
  - MR_Sort: sorts approximately one terabyte of data.
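The paper describes distributed grep as a map function that emits a line if it matches a supplied pattern, plus an identity reduce function. A minimal local sketch, assuming records are keyed by their position and using hypothetical function names:

```python
import re
from typing import Iterator


def grep_map(pattern: re.Pattern, offset: int, line: str) -> Iterator[tuple[int, str]]:
    # Emit the line (keyed by its position) only if it matches the pattern.
    if pattern.search(line):
        yield offset, line


def grep_reduce(offset: int, lines: list[str]) -> Iterator[str]:
    # Identity reduce: copy the intermediate data straight to the output.
    yield from lines


pat = re.compile("xyz")
data = ["abc", "xyzzy", "foo", "axyz"]
matches = [out for i, line in enumerate(data)
           for k, v in grep_map(pat, i, line)
           for out in grep_reduce(k, [v])]
print(matches)  # ['xyzzy', 'axyz']
```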

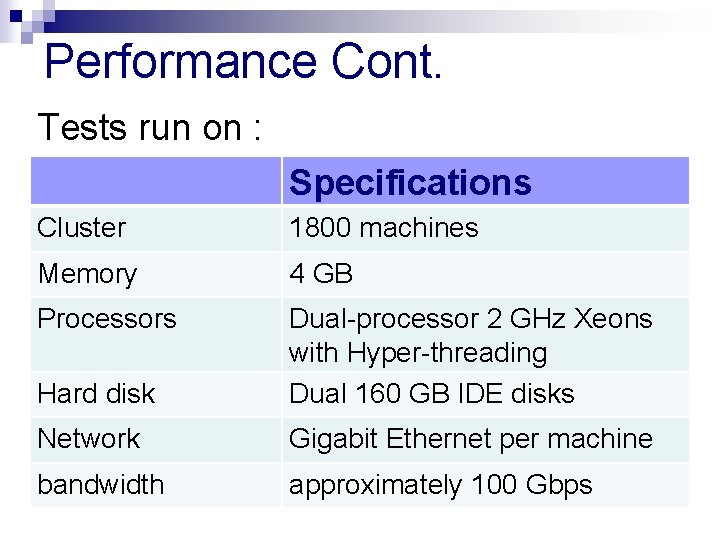

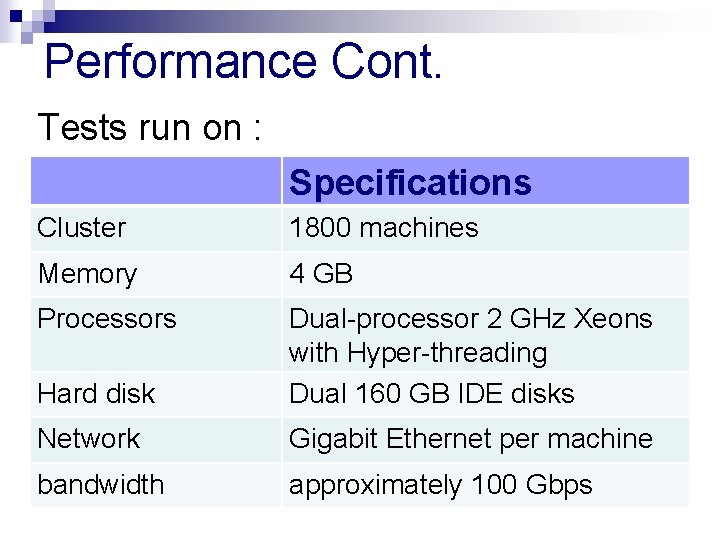

Performance (cont.)
Tests were run on:

| Specification | Value |
|---|---|
| Cluster | 1800 machines |
| Memory | 4 GB per machine |
| Processors | Dual-processor 2 GHz Xeons with Hyper-Threading |
| Hard disk | Dual 160 GB IDE disks |
| Network | Gigabit Ethernet per machine; aggregate bandwidth approximately 100 Gbps |

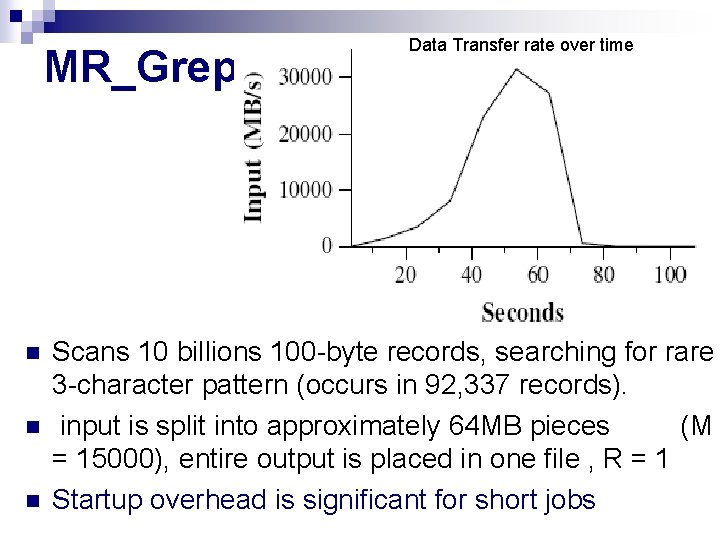

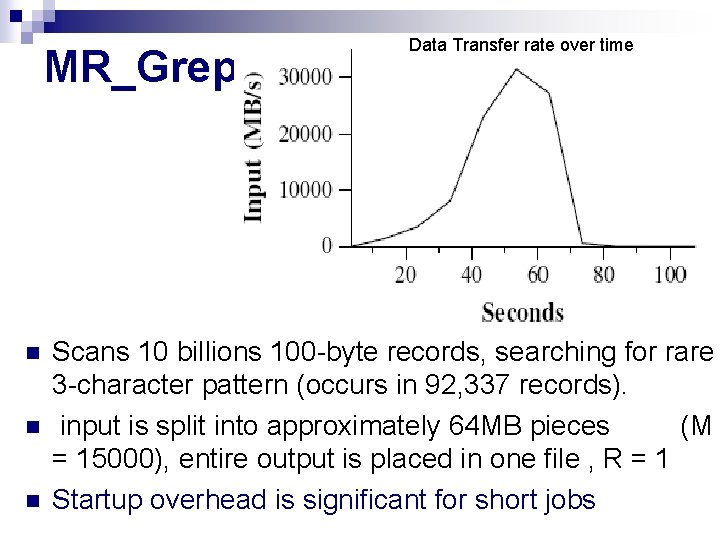

MR_Grep
- Figure: data transfer rate over time.
- Scans 10 billion 100-byte records, searching for a rare three-character pattern (occurs in 92,337 records).
- The input is split into approximately 64 MB pieces (M = 15000); the entire output is placed in one file (R = 1).
- Startup overhead is significant for short jobs.
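The input size and the number of map tasks on this slide are consistent with each other, assuming 64 MB means 64 × 2^20 bytes:

$$
10^{10}\ \text{records} \times 100\ \text{bytes} = 10^{12}\ \text{bytes} \approx 1\ \text{TB},
\qquad
M \approx \frac{10^{12}\ \text{bytes}}{64 \times 2^{20}\ \text{bytes}} = \frac{10^{12}}{67{,}108{,}864} \approx 14{,}901 \approx 15{,}000
$$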

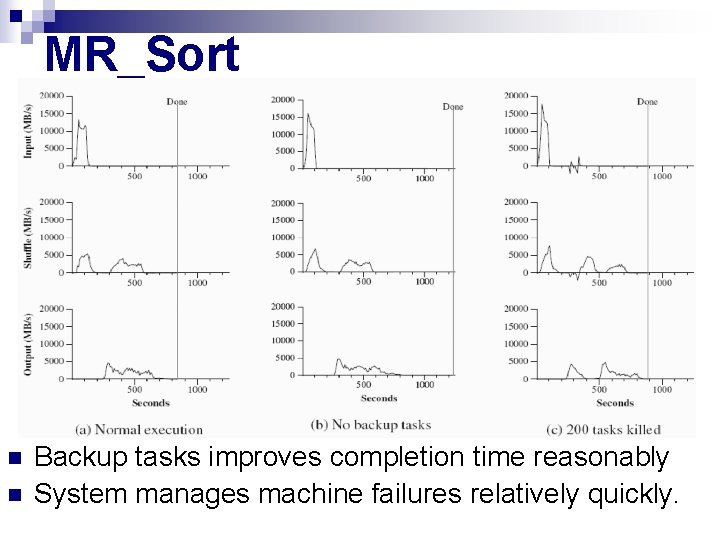

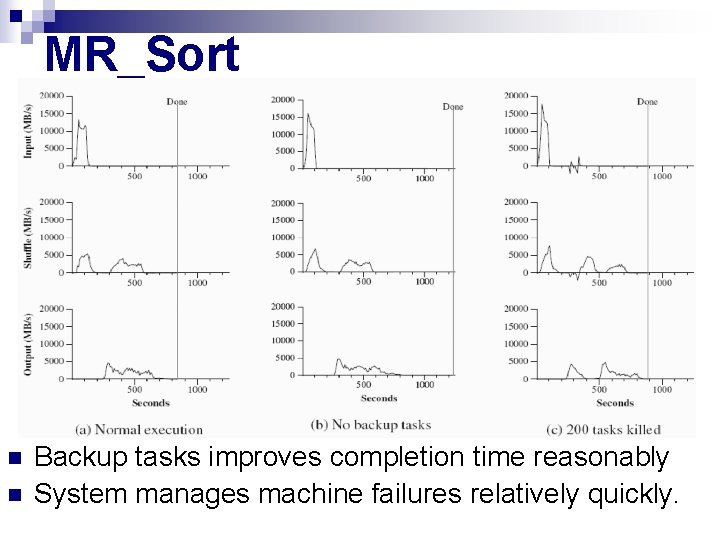

MR_Sort
- Backup tasks noticeably improve completion time.
- The system handles machine failures relatively quickly.
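In the paper's sort benchmark, the map function extracts a 10-byte sorting key from each record and emits (key, record), the reduce function is the identity, and the partitioner uses knowledge of the key distribution so that partition i holds smaller keys than partition i+1. This sketch assumes the split points come from a sample of keys (the approach the paper suggests for a general sort program) and runs everything in one process; all names are hypothetical.

```python
import bisect
import random


def sort_map(line: str) -> tuple[str, str]:
    # Extract the 10-byte sort key and emit (key, original line).
    return line[:10], line


def choose_split_points(sample_keys: list[str], r: int) -> list[str]:
    # R-1 boundaries taken at even positions in a sorted sample of the keys.
    s = sorted(sample_keys)
    return [s[len(s) * i // r] for i in range(1, r)]


def range_partition(key: str, splits: list[str]) -> int:
    # Keys below splits[0] go to partition 0, and so on, so partitions are ordered.
    return bisect.bisect_right(splits, key)


def mr_sort(lines: list[str], r: int) -> list[str]:
    pairs = [sort_map(line) for line in lines]
    splits = choose_split_points([k for k, _ in pairs], r)
    partitions: list[list[tuple[str, str]]] = [[] for _ in range(r)]
    for key, line in pairs:
        partitions[range_partition(key, splits)].append((key, line))
    # Each reduce task sorts its partition by key; the identity reduce emits
    # the lines, and concatenating the R outputs gives a globally sorted result.
    output = []
    for part in partitions:
        output.extend(line for _, line in sorted(part))
    return output


random.seed(0)
lines = [f"{random.randrange(10**9):010d} payload" for _ in range(1000)]
print(mr_sort(lines, 4) == sorted(lines))  # True
```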

Experience & Conclusions
- MapReduce is being used more and more (see the paper).
- MapReduce has proven to be a useful abstraction.
- It greatly simplifies large-scale computations at Google.
- Fun to use: focus on the problem and let the library deal with the messy details.
- Little parallelization expertise is needed (the library relieves the user from dealing with low-level parallelization details).

Disadvantages
- Some problems might be hard to express in MapReduce.
- Data parallelism is key: you need to be able to break a problem up by data chunks.
- Google's MapReduce is closed-source C++; Hadoop is an open-source, Java-based rewrite.

Related Work
- HadoopDB: An Architectural Hybrid of MapReduce and DBMS Technologies for Analytical Workloads [R 4]
  - a hybrid system that takes the best features from both technologies;
  - the prototype approaches parallel databases in performance and efficiency, yet still yields the scalability, fault tolerance, and flexibility of MapReduce-based systems.
- FREERIDE (Framework for Rapid Implementation of Datamining Engines) [R 3]
  - a middleware for rapid development of data mining implementations on large SMPs and clusters of SMPs;
  - the middleware performs distributed-memory parallelization across the cluster and shared-memory parallelization within each node.
- River: processes communicate with each other by sending data over distributed queues; similar in its dynamic load balancing [R 5]

References
- J. Dean and S. Ghemawat. MapReduce: Simplified Data Processing on Large Clusters. In OSDI, 2004. (Paper and slides)
- Dan Weld, U. Washington. (Tutorial & slides)
- Remzi H. Arpaci-Dusseau, Eric Anderson, Noah Treuhaft, David E. Culler, Joseph M. Hellerstein, David Patterson, and Kathy Yelick. Cluster I/O with River: Making the Fast Case Common. In Proceedings of the Sixth Workshop on Input/Output in Parallel and Distributed Systems (IOPADS '99), pages 10-22, Atlanta, Georgia, May 1999. [R 5]
- Azza Abouzeid, Kamil Bajda-Pawlikowski, Daniel Abadi, Avi Silberschatz, and Alexander Rasin. HadoopDB: An Architectural Hybrid of MapReduce and DBMS Technologies for Analytical Workloads. [R 4]
- Ruoming Jin, Ge Yang, and Gagan Agrawal. Shared Memory Parallelization of Data Mining Algorithms: Techniques, Programming Interface, and Performance. (pdf, 2004) [R 3]
- Prof. Demmel. inst.eecs.berkeley.edu/~cs61c
- http://gcu.googlecode.com/files/intermachine-parallelism-lecture.ppt

Thank you! Questions and comments?