
JASMIN Success Stories
NERC Scientific Computing Forum, 29 June 2017
Dr Matt Pritchard, Centre for Environmental Data Analysis, STFC / RAL Space

Outline
• What is JASMIN?
  – What are the services provided to users?
  – How do they access them?
  – Current facts / figures
• Success stories
  – Service provider stories
  – User stories
• Challenges
• Next steps

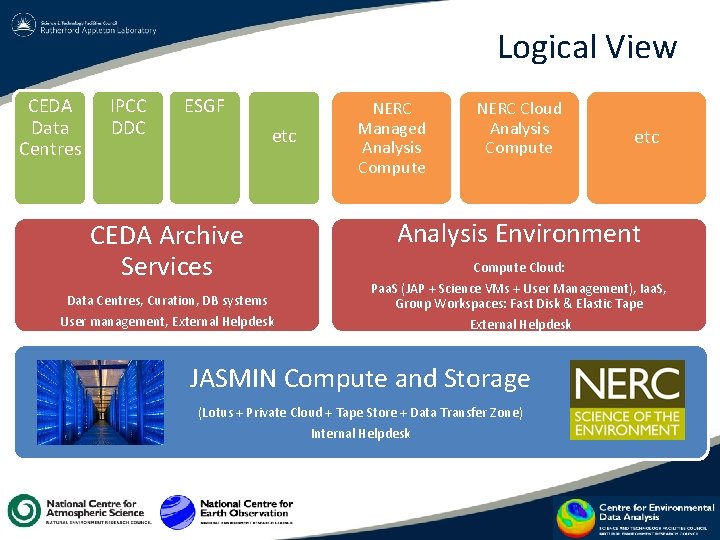

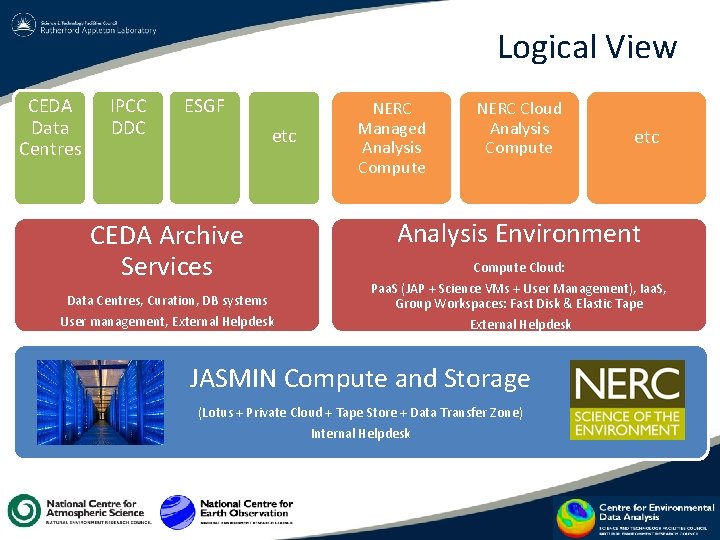

Logical View
• CEDA Data Centres: IPCC DDC, ESGF, etc.
• CEDA Archive Services: Data Centres, Curation, DB systems, User management, External Helpdesk
• NERC Managed Analysis Compute, NERC Cloud Analysis Compute, etc.
• Analysis Environment
  – Compute Cloud: PaaS (JAP + Science VMs + User Management), IaaS
  – Group Workspaces: Fast Disk & Elastic Tape
  – External Helpdesk
• JASMIN Compute and Storage: LOTUS + Private Cloud + Tape Store + Data Transfer Zone
  – Internal Helpdesk

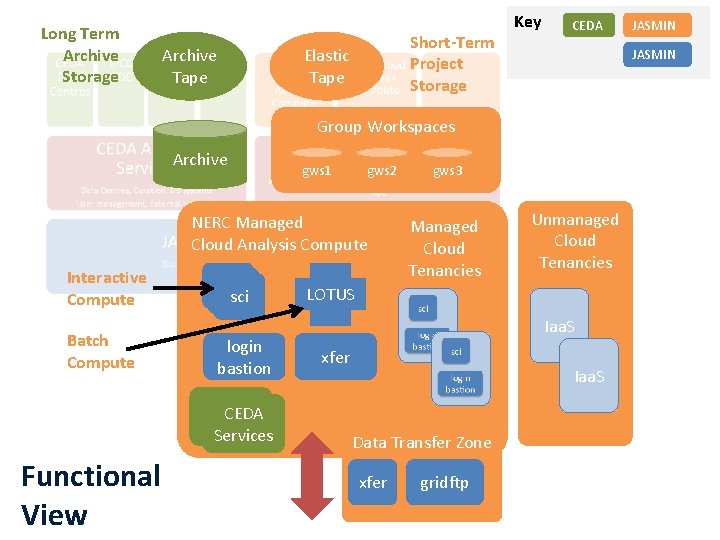

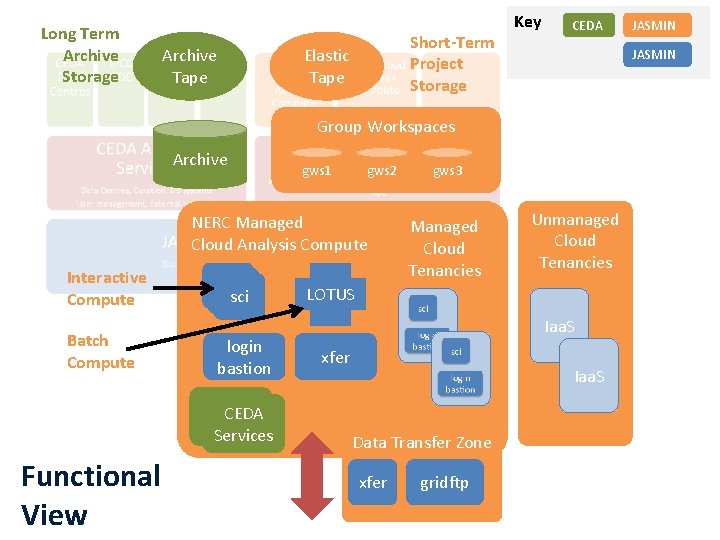

Functional View
• Key: CEDA, JASMIN
• Long Term Archive Storage: Archive, Tape
• Short-Term Project Storage: Group Workspaces (gws 1, gws 2, gws 3), Elastic Tape
• NERC Managed Analysis Compute
  – Interactive Compute: sci, login, bastion, xfer
  – Batch Compute: LOTUS
• Cloud Analysis Compute: Managed Cloud Tenancies, Unmanaged Cloud Tenancies (IaaS)
• CEDA Services
• Data Transfer Zone: xfer, gridftp

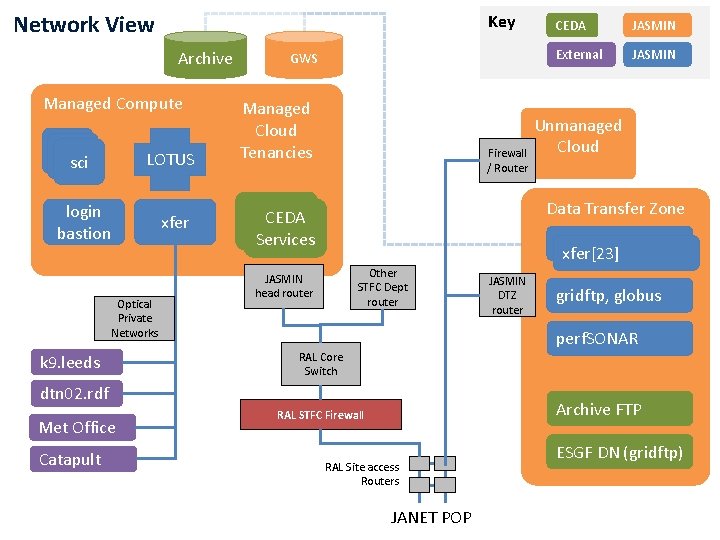

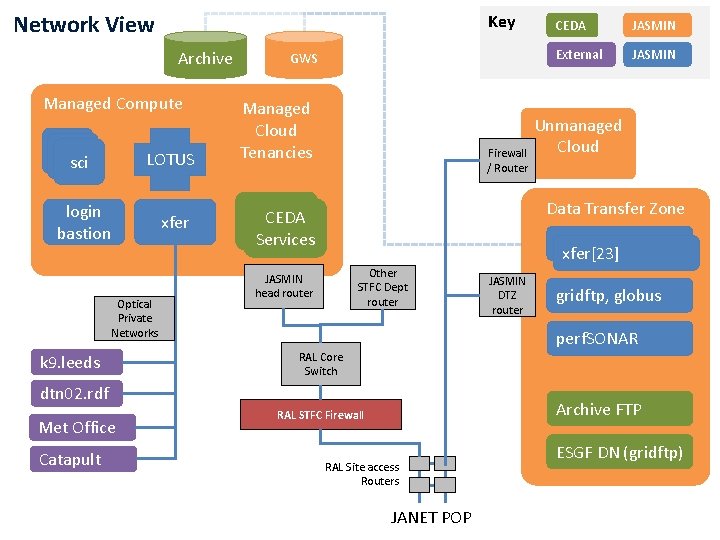

Network View
• JASMIN: Archive, GWS, Managed Compute (sci, LOTUS, login, bastion, xfer), Managed Cloud Tenancies, CEDA Services
• JASMIN Unmanaged Cloud, behind its own firewall / router
• Data Transfer Zone: xfer[23], gridftp, globus, perfSONAR
• Routers: JASMIN head router, JASMIN DTZ router, Other STFC Dept router
• Optical Private Networks: k9.leeds, dtn02.rdf (Met Office)
• Other connections: Catapult, JASMIN External, CEDA Archive FTP, ESGF DN (gridftp)
• RAL site: RAL Core Switch, RAL STFC Firewall, RAL Site access Routers, JANET POP

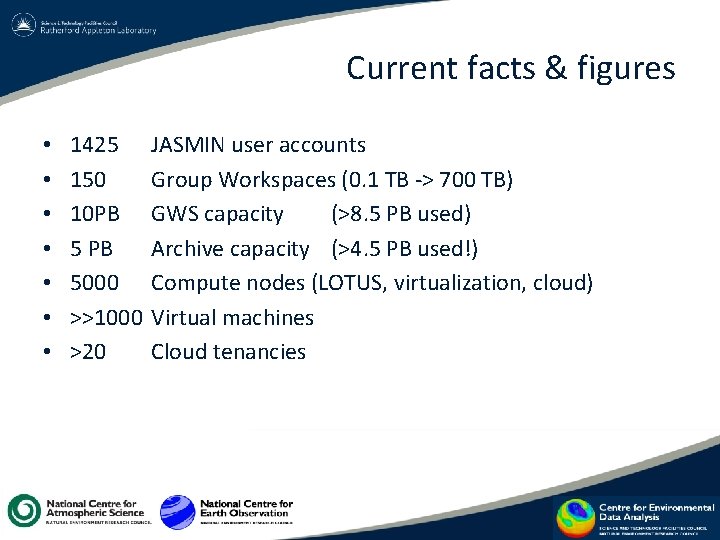

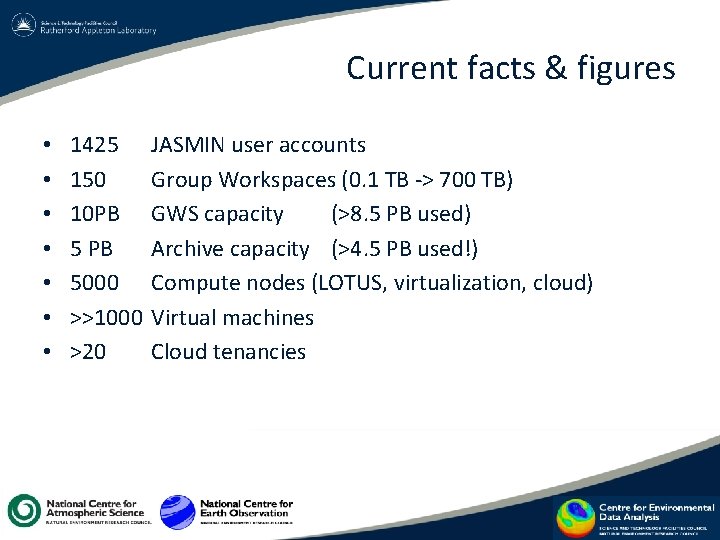

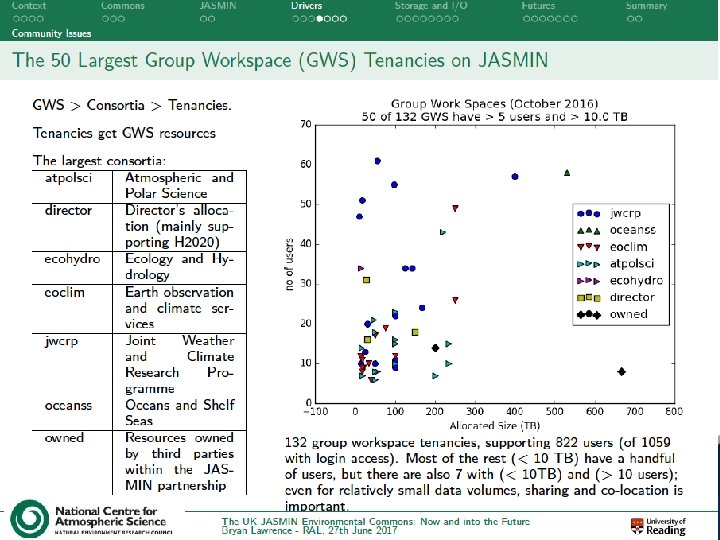

Current facts & figures
1425, 150, 10 PB, 5000, >>1000, >20
JASMIN user accounts; Group Workspaces (0.1 TB -> 700 TB); GWS capacity (>8.5 PB used); Archive capacity (>4.5 PB used!); Compute nodes (LOTUS, virtualization, cloud); Virtual machines; Cloud tenancies

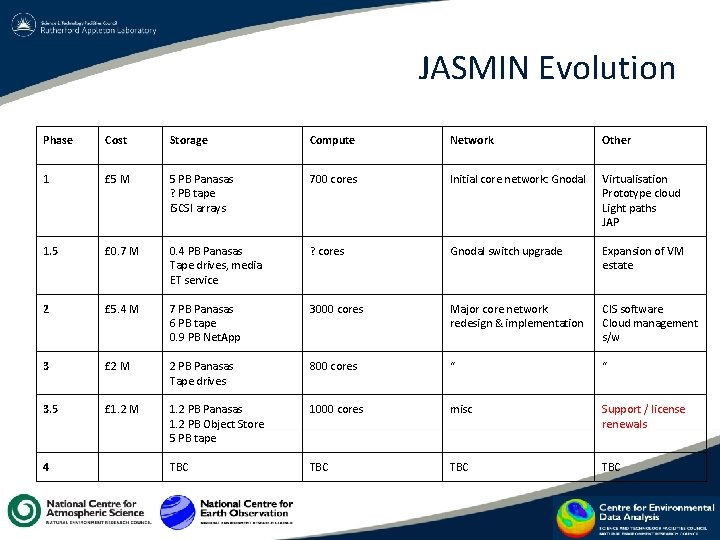

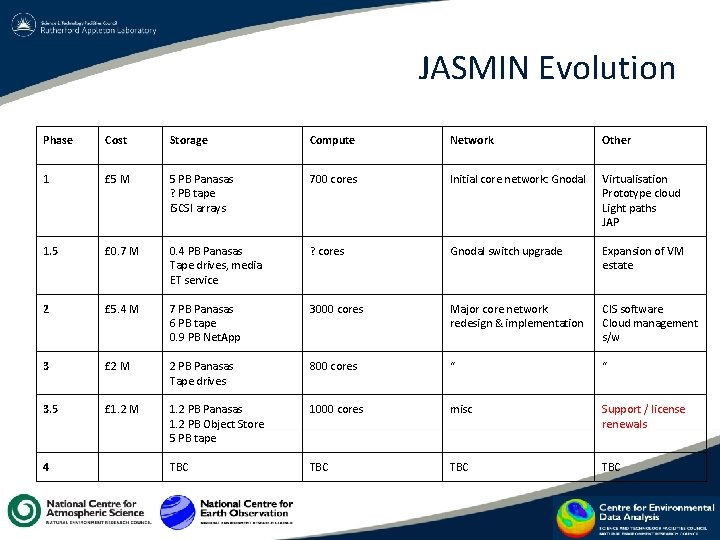

JASMIN Evolution

| Phase | Cost | Storage | Compute | Network | Other |
|---|---|---|---|---|---|
| 1 | £5M | 5 PB Panasas, ? PB tape, iSCSI arrays | 700 cores | Initial core network: Gnodal | Virtualisation, prototype cloud, light paths, JAP |
| 1.5 | £0.7M | 0.4 PB Panasas, tape drives, media, ET service | ? cores | Gnodal switch upgrade | Expansion of VM estate |
| 2 | £5.4M | 7 PB Panasas, 6 PB tape, 0.9 PB NetApp | 3000 cores | Major core network redesign & implementation | CIS software, cloud management s/w |
| 3 | £2M | 2 PB Panasas, tape drives | 800 cores | “ | “ |
| 3.5 | £1.2M | 1.2 PB Panasas, 1.2 PB Object Store, 5 PB tape | 1000 cores | misc | Support / license renewals |
| 4 | TBC | TBC | | | |
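As a rough cross-check on the evolution table, the per-phase cost, Panasas disk and core counts can be totalled. This is a minimal Python sketch: the `PHASES` dictionary layout and the `total` helper are hypothetical, the values are transcribed by hand from the table, and anything shown as "?" or "TBC" is skipped and counted rather than guessed. Only Panasas disk is summed; the tape, NetApp and object-store figures are left out.

```python
# Per-phase figures transcribed by hand from the JASMIN evolution table.
# None marks a value shown as "?" or "TBC" on the slide (not a guess).
PHASES = {
    "1":   {"cost_gbp_m": 5.0,  "panasas_pb": 5.0,  "cores": 700},
    "1.5": {"cost_gbp_m": 0.7,  "panasas_pb": 0.4,  "cores": None},
    "2":   {"cost_gbp_m": 5.4,  "panasas_pb": 7.0,  "cores": 3000},
    "3":   {"cost_gbp_m": 2.0,  "panasas_pb": 2.0,  "cores": 800},
    "3.5": {"cost_gbp_m": 1.2,  "panasas_pb": 1.2,  "cores": 1000},
    "4":   {"cost_gbp_m": None, "panasas_pb": None, "cores": None},
}


def total(field):
    """Sum one field over all phases, skipping unknown values.

    Returns a tuple (total, number_of_phases_with_unknown_value).
    The total is rounded to one decimal place to hide float noise.
    """
    known = [p[field] for p in PHASES.values() if p[field] is not None]
    unknown = sum(1 for p in PHASES.values() if p[field] is None)
    return round(sum(known), 1), unknown


if __name__ == "__main__":
    for field in ("cost_gbp_m", "panasas_pb", "cores"):
        value, unknown = total(field)
        print(f"{field}: {value} (excluding {unknown} phase(s) marked ? or TBC)")
```

Run as a script, it prints one line per field. The "excluding" count matters most for cores: Phase 1.5 and Phase 4 have no core figure on the slide, so the core total is only the sum of the known entries.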

JASMIN data growth

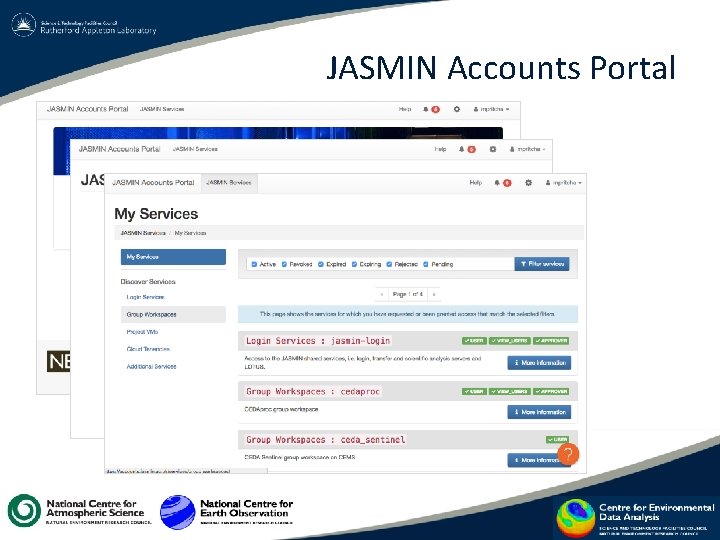

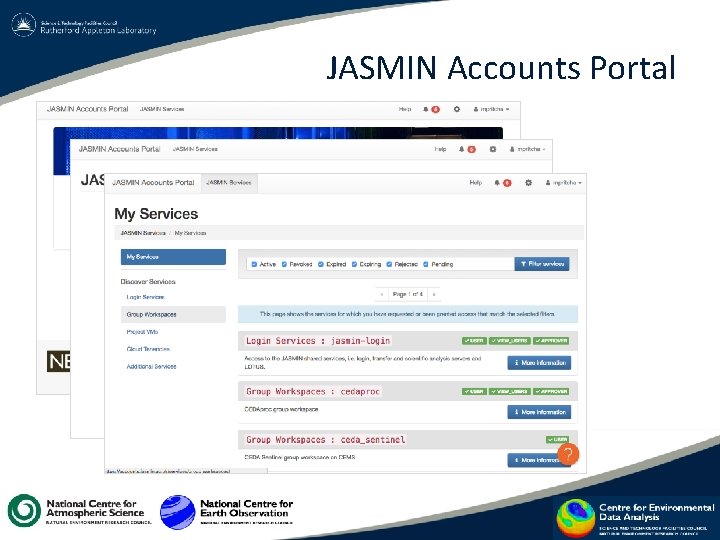

JASMIN Accounts Portal

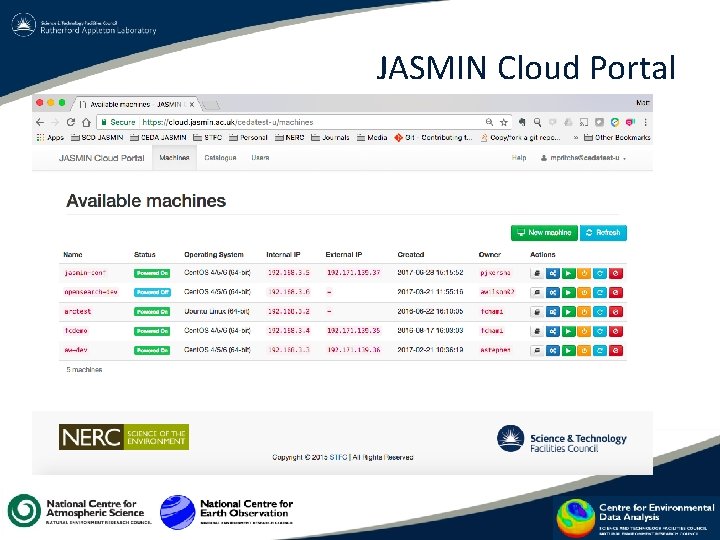

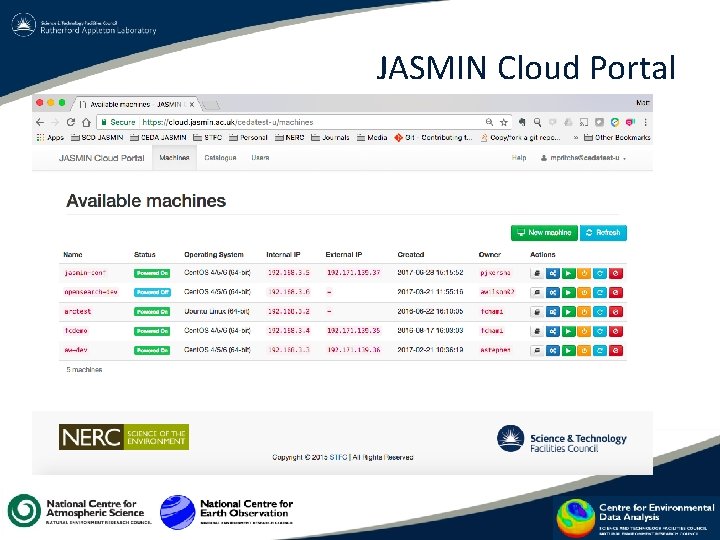

JASMIN Cloud Portal

Data Transfer Zone
• “Science DMZ” concept
• Secure, friction-free path for science data
• Corporate firewall better able to handle “business” traffic

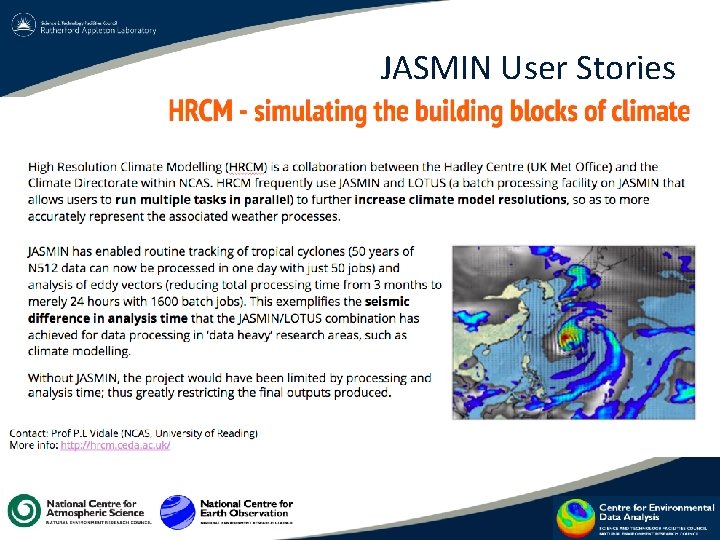

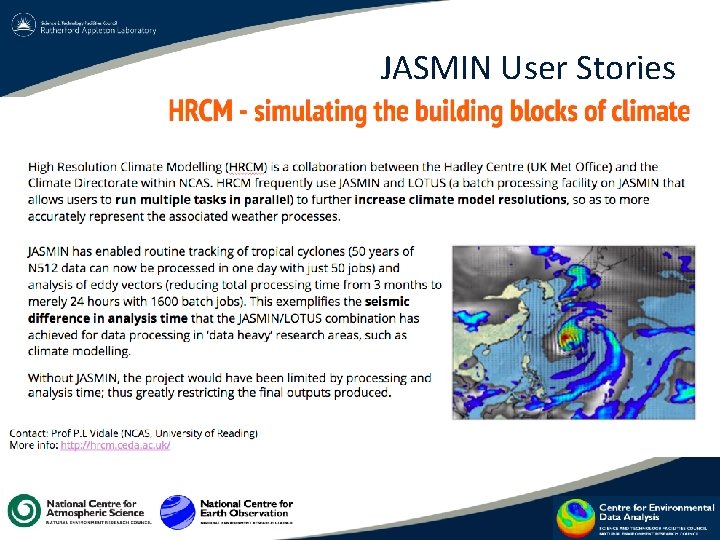

JASMIN User Stories

• COMET: Seismic hazard monitoring with Sentinel-1 InSAR
  “We’ve had fantastic support from the team who have helped us to build a suitable system. The level of support provided has really helped us to achieve our goals and I don’t think a bespoke solution like this would be available anywhere else. Due to the enormous volumes of data we’re dealing with (each image is around 8 GB when zipped), the collocation of the archive with the JASMIN system is essential.”
  Emma Hatton, University of Leeds, JASMIN Conference, June 2017

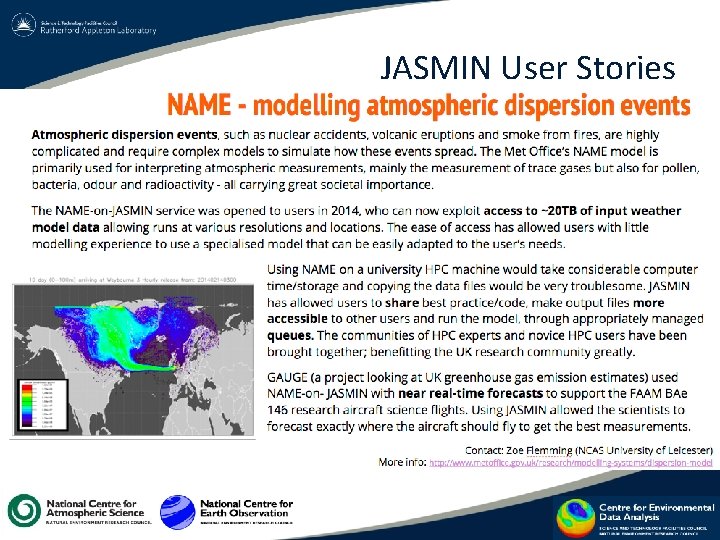

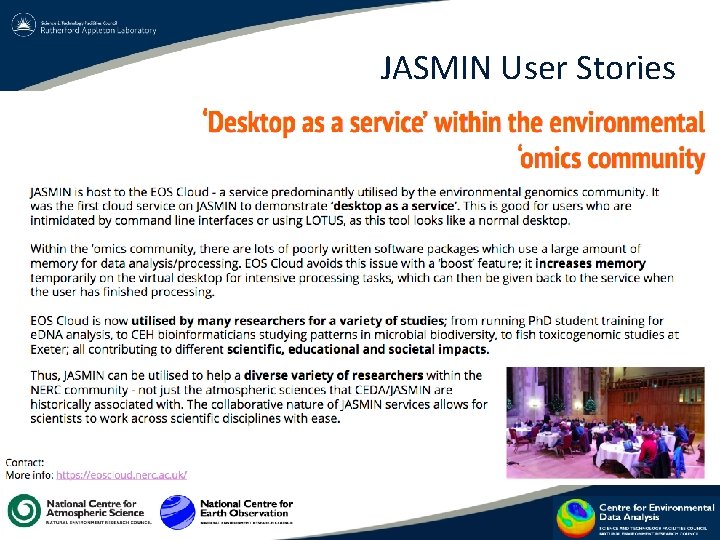


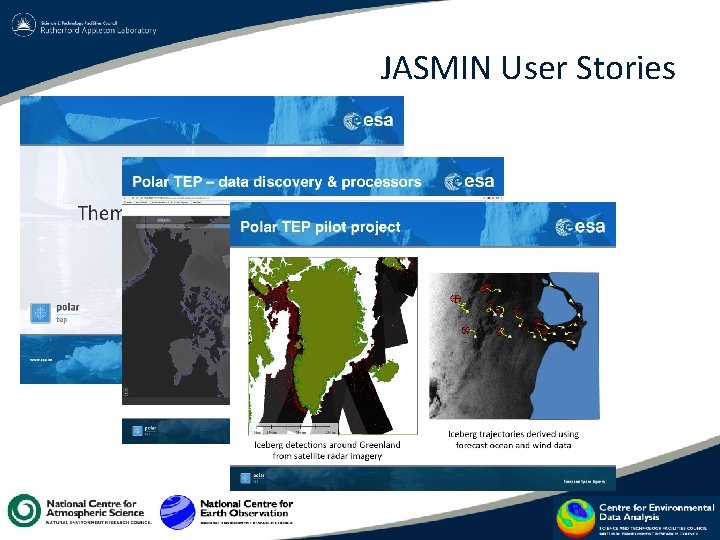


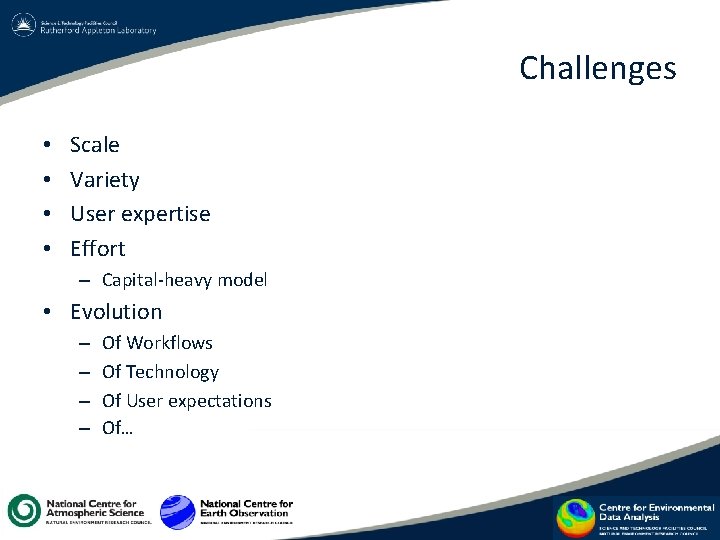

Challenges
• Scale
• Variety
• User expertise
• Effort
  – Capital-heavy model
• Evolution
  – Of workflows
  – Of technology
  – Of user expectations
  – Of…

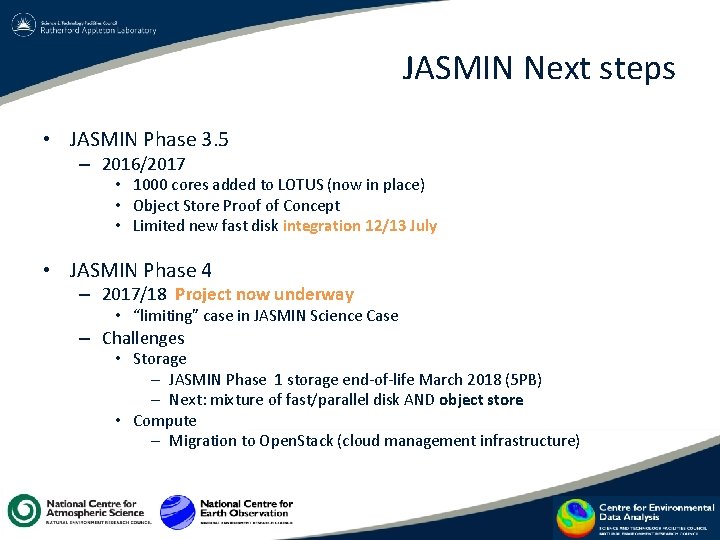

JASMIN Next steps
• JASMIN Phase 3.5 – 2016/2017
  – 1000 cores added to LOTUS (now in place)
  – Object Store Proof of Concept
  – Limited new fast disk integration 12/13 July
• JASMIN Phase 4 – 2017/18
  – Project now underway
  – “limiting” case in JASMIN Science Case
  – Challenges
    – Storage: JASMIN Phase 1 storage end-of-life March 2018 (5 PB); next: mixture of fast/parallel disk AND object store
    – Compute: migration to OpenStack (cloud management infrastructure)

Further information
• JASMIN
  – http://www.jasmin.ac.uk
  – https://accounts.jasmin.ac.uk
  – https://cloud.jasmin.ac.uk
  – https://www.youtube.com/channel/UC11nPZVyjDLjYlS7NvbnlmQ
• Centre for Environmental Data Analysis
  – http://www.ceda.ac.uk
• CEDA & JASMIN help documentation
  – http://help.ceda.ac.uk
• STFC Scientific Computing Department
  – http://www.stfc.ac.uk/SCD/
• JASMIN paper
  – Lawrence, B. N., V. L. Bennett, J. Churchill, M. Juckes, P. Kershaw, S. Pascoe, S. Pepler, M. Pritchard, and A. Stephens. Storing and manipulating environmental big data with JASMIN. Proceedings of IEEE Big Data 2013, pp. 68-75, doi:10.1109/BigData.2013.6691556