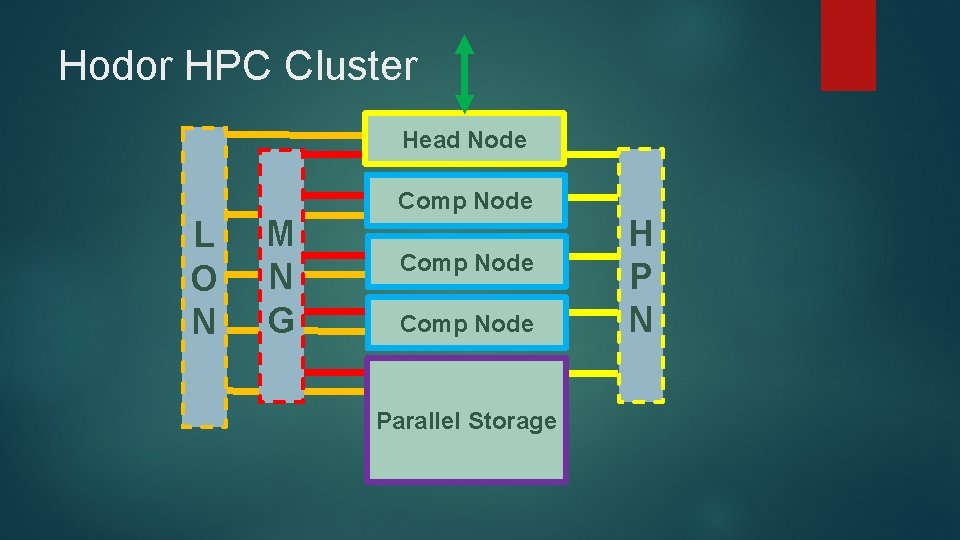

Hodor HPC Cluster

[Diagram: Head Node; network label LON]

- Slides: 15

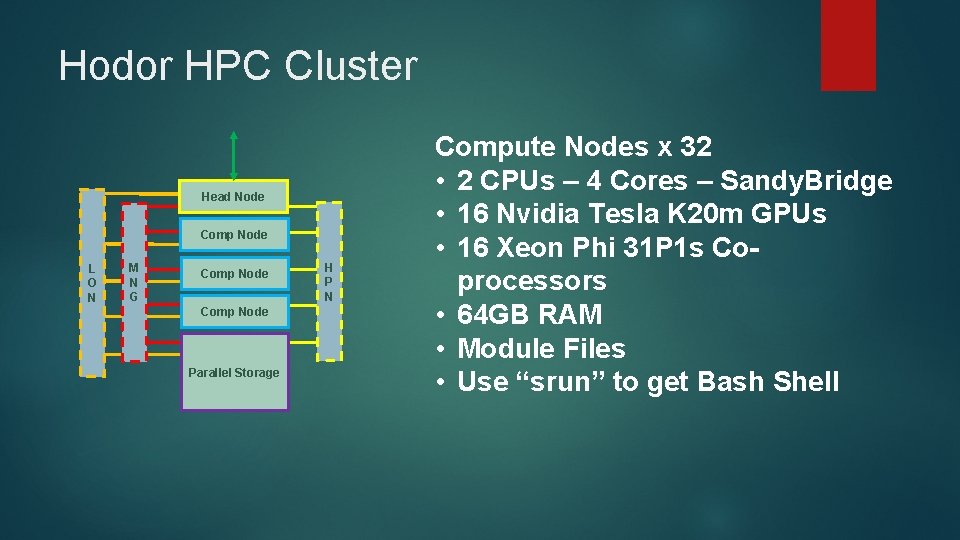

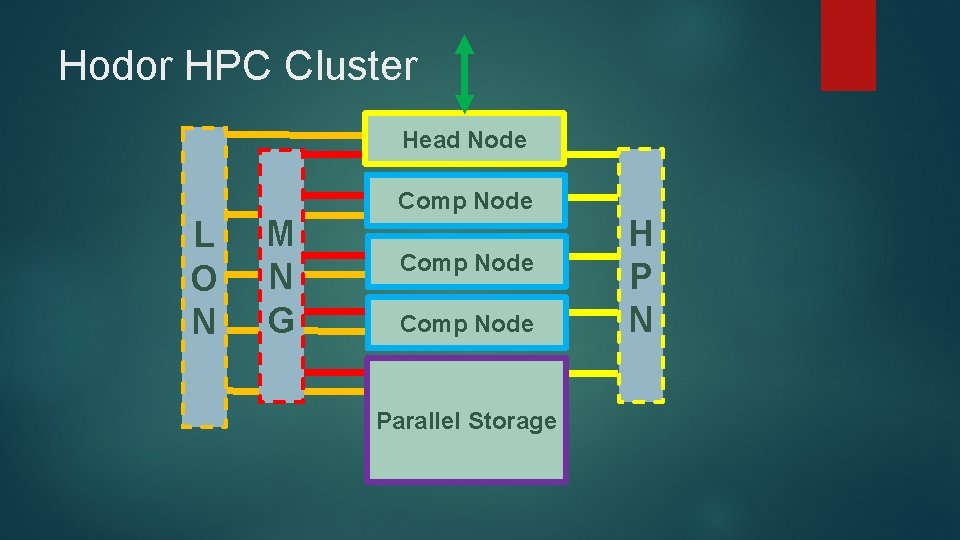

Hodor HPC Cluster

[Diagram: Head Node, Comp Nodes, Parallel Storage; network labels LON, MNG, HPN]

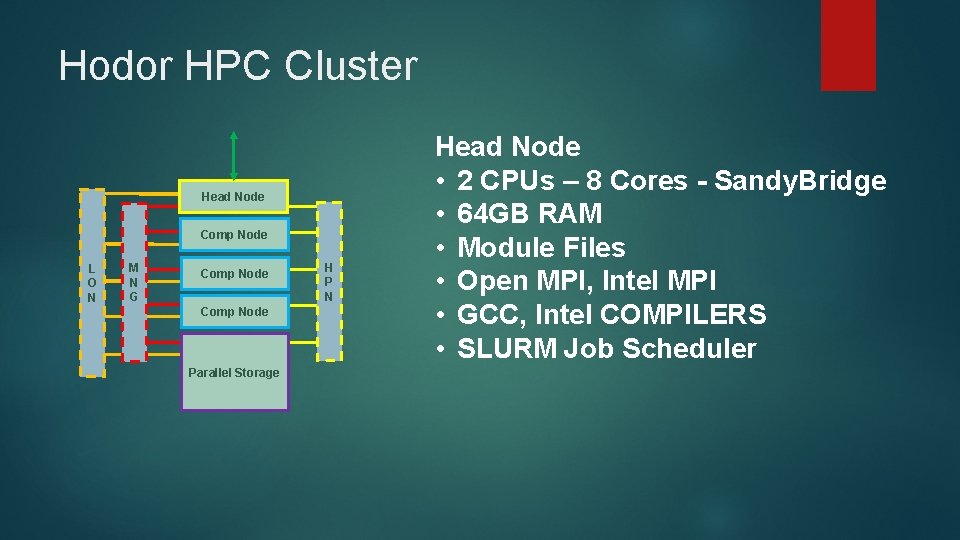

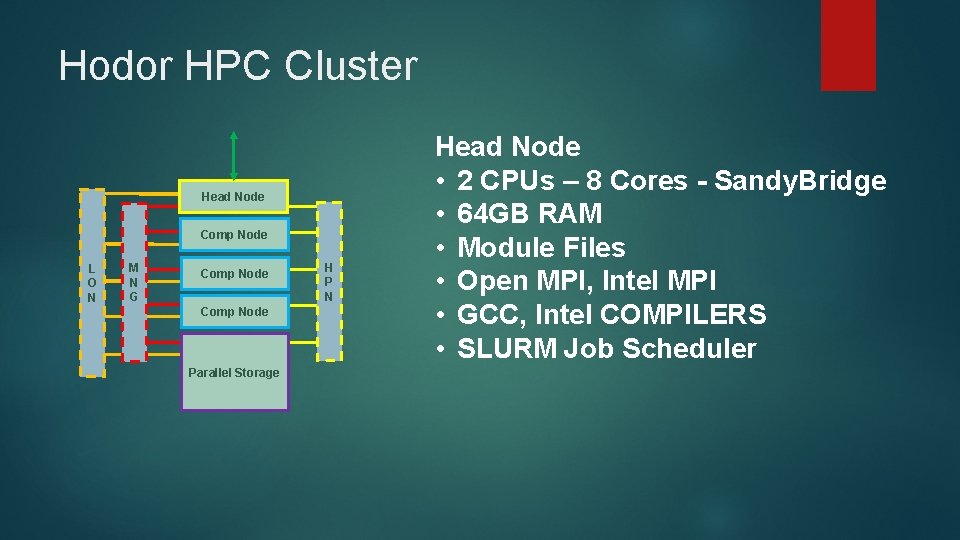

Hodor HPC Cluster: Head Node

- 2 CPUs, 8 cores, Sandy Bridge
- 64 GB RAM
- Module files
- Open MPI, Intel MPI
- GCC, Intel compilers
- SLURM job scheduler

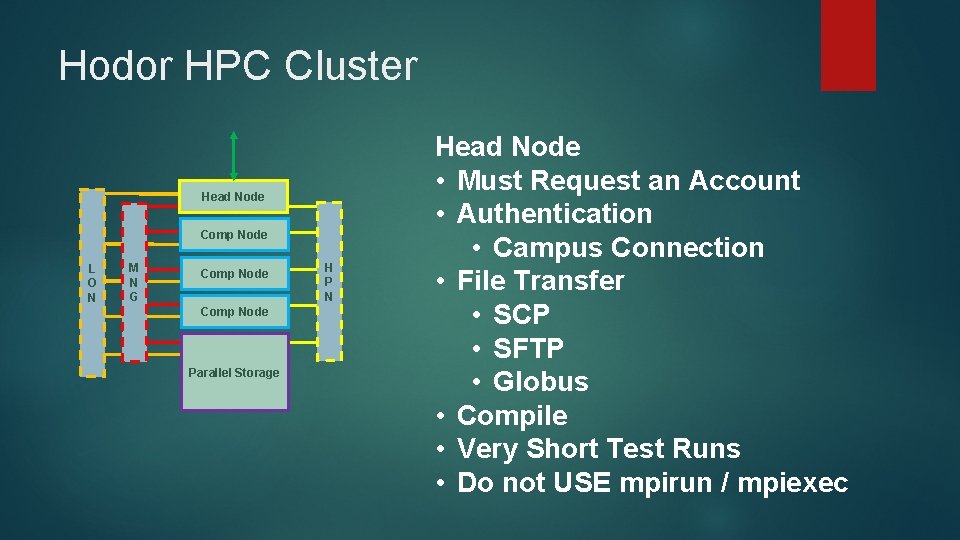

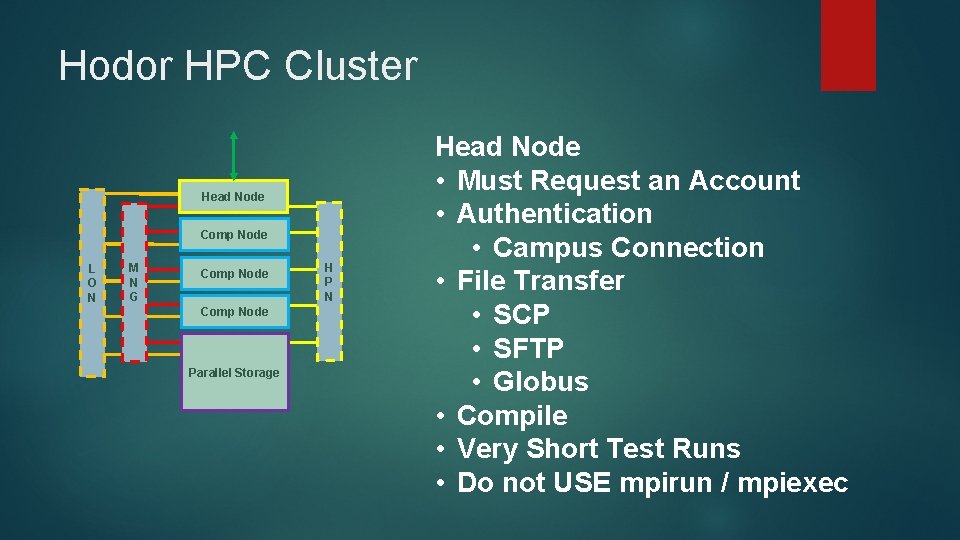

Hodor HPC Cluster: Head Node

- Must request an account
- Authentication
- Campus connection
- File transfer
  - SCP
  - SFTP
  - Globus
- Compile
- Very short test runs
- Do not use mpirun / mpiexec

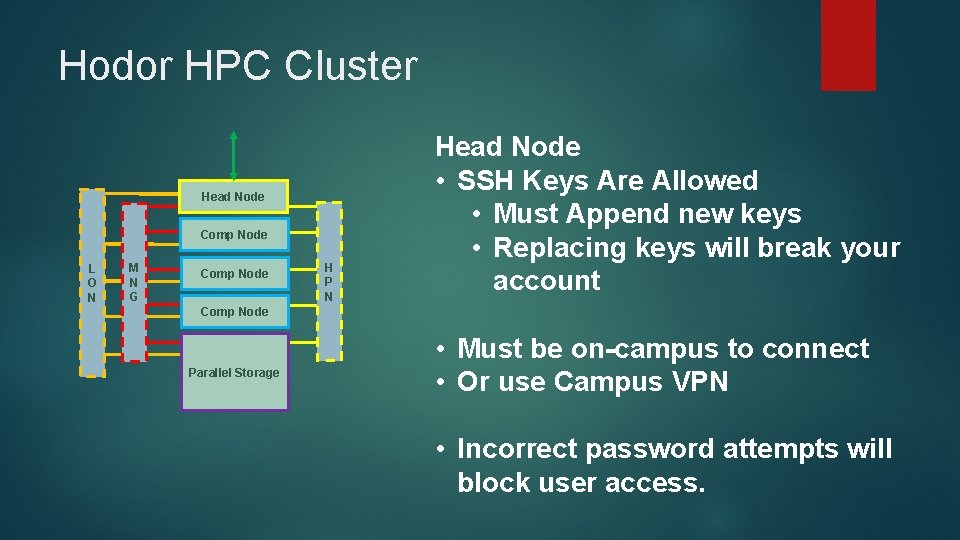

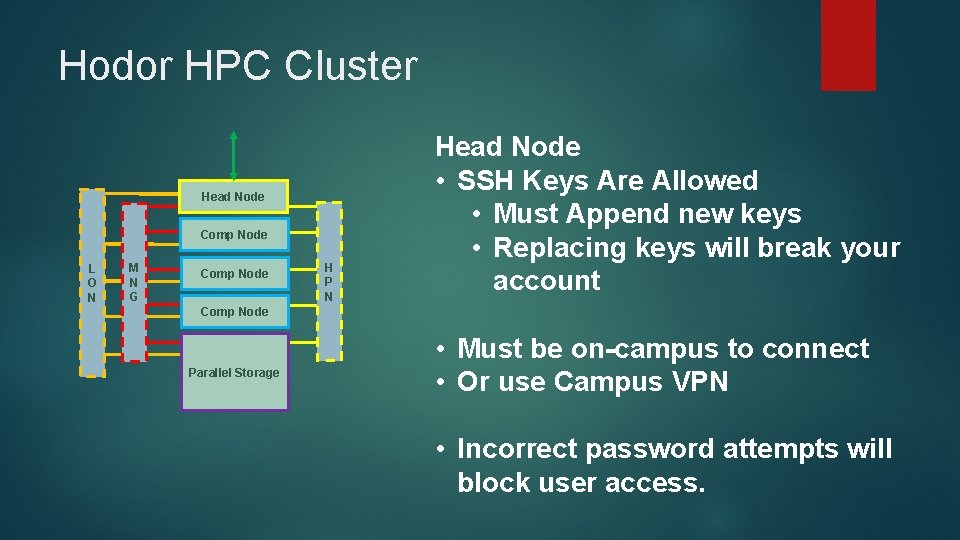

Hodor HPC Cluster: Head Node

- SSH keys are allowed
  - Must append new keys
  - Replacing keys will break your account
- Must be on campus to connect
  - Or use the campus VPN
- Incorrect password attempts will block user access.
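Since new SSH keys must be appended rather than written over the existing ones, a small helper can make the difference explicit. This is a minimal sketch, not a Hodor-provided tool: the function name `append_key` and the default path `~/.ssh/authorized_keys` are assumptions for illustration.

```shell
#!/bin/bash
# Append a public key to an authorized_keys file without touching existing entries.
# append_key and its default path are illustrative assumptions.
append_key() {
    local pubkey_file="$1"
    local auth_file="${2:-$HOME/.ssh/authorized_keys}"
    local key
    key="$(cat "$pubkey_file")" || return 1

    # Make sure the target file exists and is private to the user.
    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"
    chmod 600 "$auth_file"

    # Skip keys that are already present so reruns do not duplicate lines.
    if grep -qxF -- "$key" "$auth_file"; then
        return 0
    fi

    # If the file does not end in a newline, add one so keys stay on separate lines.
    if [ -s "$auth_file" ] && [ -n "$(tail -c 1 "$auth_file")" ]; then
        echo >> "$auth_file"
    fi

    # '>>' appends; a single '>' would replace the file and drop the existing keys.
    printf '%s\n' "$key" >> "$auth_file"
}
```

A call such as `append_key ~/new_key.pub` (a hypothetical key file) leaves every existing line of the file in place.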

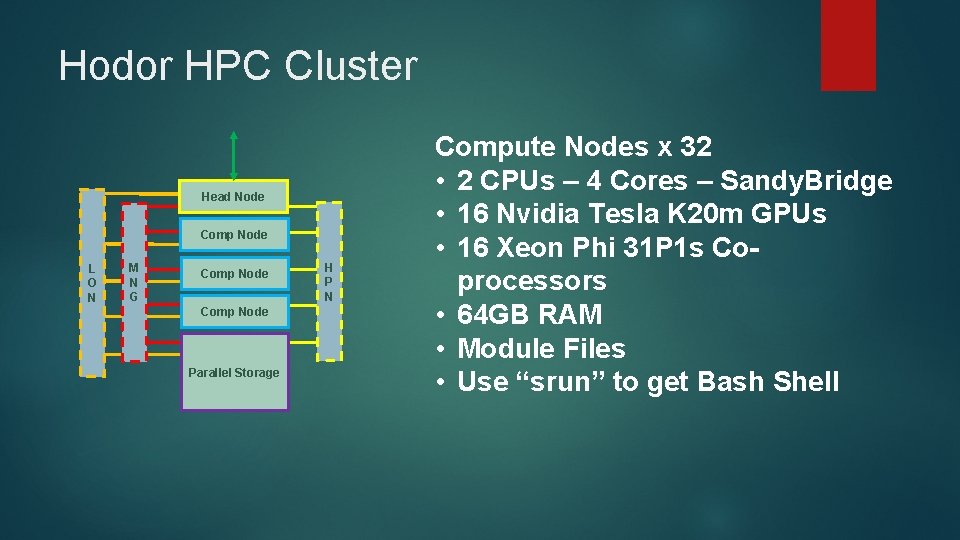

Hodor HPC Cluster: Compute Nodes x 32

- 2 CPUs, 4 cores, Sandy Bridge
- 16 Nvidia Tesla K20m GPUs
- 16 Xeon Phi 31S1P coprocessors
- 64 GB RAM
- Module files
- Use “srun” to get a Bash shell

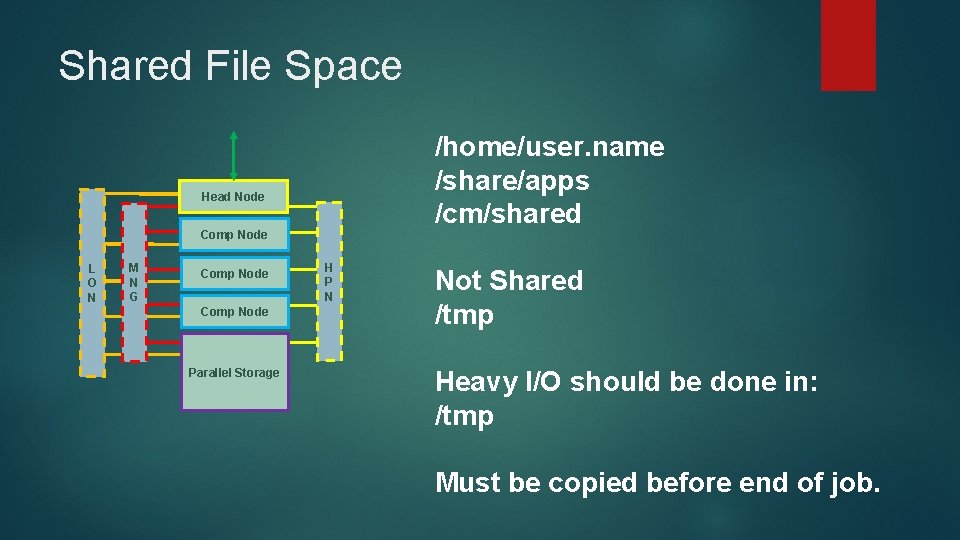

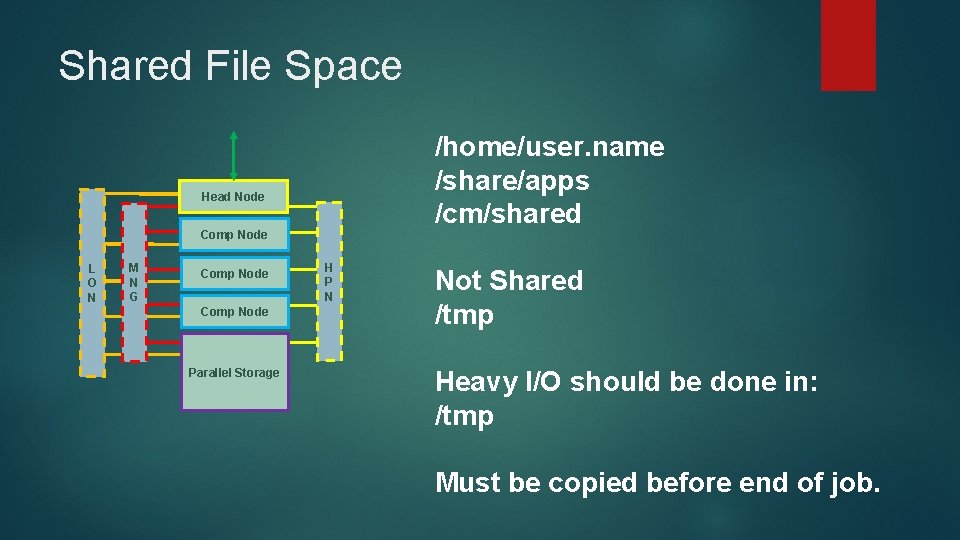

Shared File Space

- /home/user.name
- /share/apps
- /cm/shared

Not Shared

- /tmp

Heavy I/O should be done in /tmp. Files written there must be copied out before the end of the job.
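The /tmp guidance can be sketched as a stage-out pattern: do the heavy I/O in node-local /tmp, then copy the results to shared space before the job finishes. The directory naming scheme and the placeholder output file are assumptions, and the fallbacks for the SLURM variables exist only so the sketch also runs outside a job.

```shell
#!/bin/bash
# Stage heavy I/O through node-local /tmp, then copy results back to shared space.
# SLURM sets SLURM_JOB_ID and SLURM_SUBMIT_DIR inside a job; the fallbacks below
# are only there so this sketch can be tried outside SLURM.
job_id="${SLURM_JOB_ID:-$$}"
submit_dir="${SLURM_SUBMIT_DIR:-$PWD}"

# Per-job scratch directory (the naming scheme is an assumption).
scratch_dir="$(mktemp -d "/tmp/${USER:-user}_${job_id}_XXXXXX")"

# Placeholder for the real I/O-heavy work: write output into scratch.
echo "result for job ${job_id}" > "${scratch_dir}/output.dat"

# Copy results back to shared space before the job ends, then clean up /tmp.
results_dir="${submit_dir}/results_${job_id}"
mkdir -p "${results_dir}"
cp -r "${scratch_dir}/." "${results_dir}/"
rm -rf "${scratch_dir}"
```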

SLURM Queue Information Commands

- squeue
- sinfo
- qstat -a
- qstat -n
- qstat -f

SLURM Job Submission Commands

- srun: for interactive runs
  - use of “screen”
- sbatch: for batch runs

SLURM Job Submission Commands

Examples: /share/apps/slurm/examples

- sbatch somescript.sh
- srun -N 1 -n --pty bash
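An interactive shell request can be wrapped in a small function so the node and task counts are explicit. This is a sketch only: the function name `interactive_shell` is hypothetical, and the default of one node and one task is an assumption, not a value given on the slide.

```shell
#!/bin/bash
# Request an interactive Bash shell on a compute node through SLURM.
# interactive_shell is an illustrative name; the defaults (1 node, 1 task)
# are assumptions.
interactive_shell() {
    local nodes="${1:-1}"
    local tasks="${2:-1}"
    # --pty attaches a pseudo-terminal so the remote bash behaves like a login shell.
    srun -N "$nodes" -n "$tasks" --pty bash
}
```

Starting `screen` on the head node first and calling `interactive_shell` inside it lets the session be reattached with `screen -r` if the connection drops.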

Important sbatch arguments

- #SBATCH -N 8
- #SBATCH --ntasks-per-node=8
- #SBATCH -t 00:10:00
- #SBATCH -o ./out_test.txt
- #SBATCH -e ./err_test.txt

Important sbatch environment variables

- $SLURM_SUBMIT_DIR: Directory from which sbatch was invoked.
- $SLURM_NTASKS: Total number of tasks in your job.
- $SLURM_JOB_ID: ID number identifying your SLURM job.
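Putting the sbatch arguments and environment variables together, a batch script might look like the sketch below. With 8 nodes and 8 tasks per node, the job has 64 tasks in total. The fallback values and the commented launch line (`my_mpi_program` is a hypothetical binary) are assumptions; the right launcher depends on which MPI module is loaded.

```shell
#!/bin/bash
#SBATCH -N 8
#SBATCH --ntasks-per-node=8
#SBATCH -t 00:10:00
#SBATCH -o ./out_test.txt
#SBATCH -e ./err_test.txt

# 8 nodes x 8 tasks per node = 64 tasks; this mirrors the directives above.
nodes=8
tasks_per_node=8
expected_tasks=$((nodes * tasks_per_node))

# SLURM sets these inside a job; the fallbacks only allow a dry run outside SLURM.
submit_dir="${SLURM_SUBMIT_DIR:-$PWD}"
ntasks="${SLURM_NTASKS:-$expected_tasks}"
job_id="${SLURM_JOB_ID:-none}"

# Work from the directory where sbatch was invoked.
cd "${submit_dir}" || exit 1
echo "Job ${job_id}: ${ntasks} tasks, submitted from ${submit_dir}"

# Launch step, left commented because the program name is hypothetical:
# srun ./my_mpi_program
```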

SLURM Job Delete Commands

- scancel ####
- qdel ####
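Because scancel needs the job ID, it can help to capture the ID at submission time. The sketch below uses sbatch's `--parsable` option, which prints only the job ID (optionally followed by `;cluster`). The function names `submit_job` and `cancel_job` are illustrative, not part of SLURM.

```shell
#!/bin/bash
# Submit a batch script and print just its job ID.
# submit_job and cancel_job are illustrative helper names.
submit_job() {
    local out
    # --parsable prints "jobid" or "jobid;cluster" instead of the usual message.
    out="$(sbatch --parsable "$1")" || return 1
    # Strip the optional ";cluster" suffix.
    echo "${out%%;*}"
}

# Cancel a job by its ID.
cancel_job() {
    scancel "$1"
}

# Example use on the cluster:
#   job_id="$(submit_job somescript.sh)"
#   squeue -j "$job_id"
#   cancel_job "$job_id"
```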

Modulefile Commands

- module avail
- module load
- module list
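To narrow the output of `module avail` to one package, it can be filtered with grep. On many installations `module avail` writes to standard error, so the sketch below redirects it first. The helper name `find_modules` is hypothetical, and the actual module names on Hodor are not listed here.

```shell
#!/bin/bash
# List available modules whose names match a pattern (case-insensitive).
# find_modules is an illustrative helper name.
find_modules() {
    # 'module avail' commonly prints to stderr, so merge it into stdout before filtering.
    module avail 2>&1 | grep -i -- "$1"
}

# Example use on the cluster:
#   find_modules mpi
#   module load <name-from-the-list>
#   module list
```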

Hodor Help Info

Website: www.crc.und.edu

Tutorials & Desktop Software > Linux HPC Cluster (Hodor)

Hodor does not have a job submission queue called “test”.

Get Hodor Account

Send email to: Aaron.Bergstrom@und.edu