HighPerformance Matrix Multiplication Tyler Smith and Jianyu Huang

- Slides: 67

High-Performance Matrix Multiplication Tyler Smith and Jianyu Huang 1

Organization • Introduction • A simple blocked algorithm • State-of-the-art GEMM – Blocking for caches (temporal locality) – Packing (spatial locality) – Optimized kernels 2

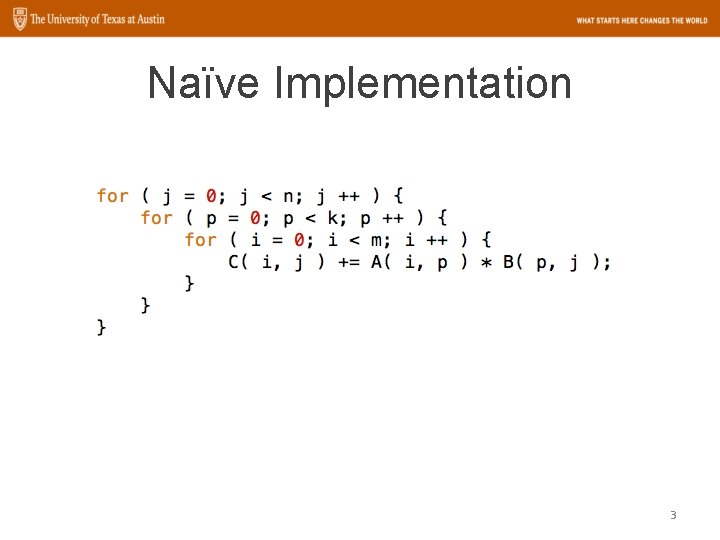

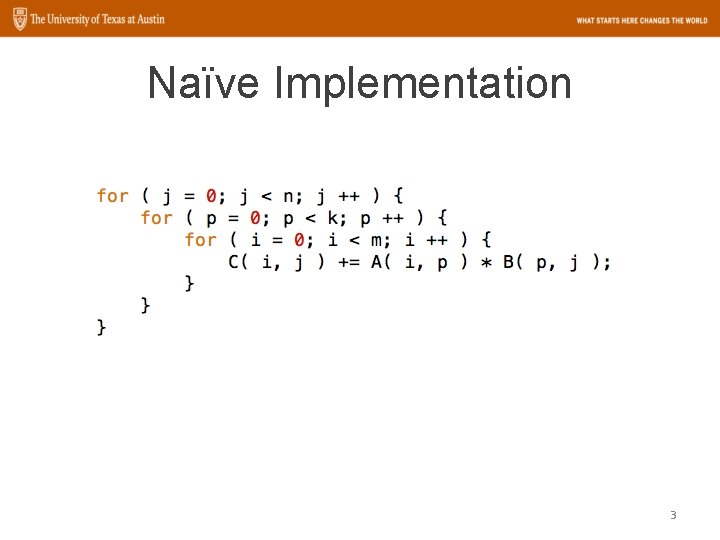

Naïve Implementation 3

Naïve Implementation 4

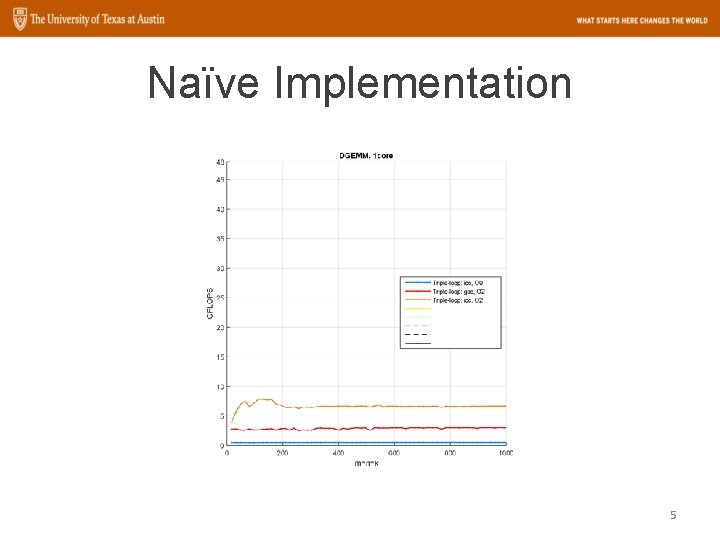

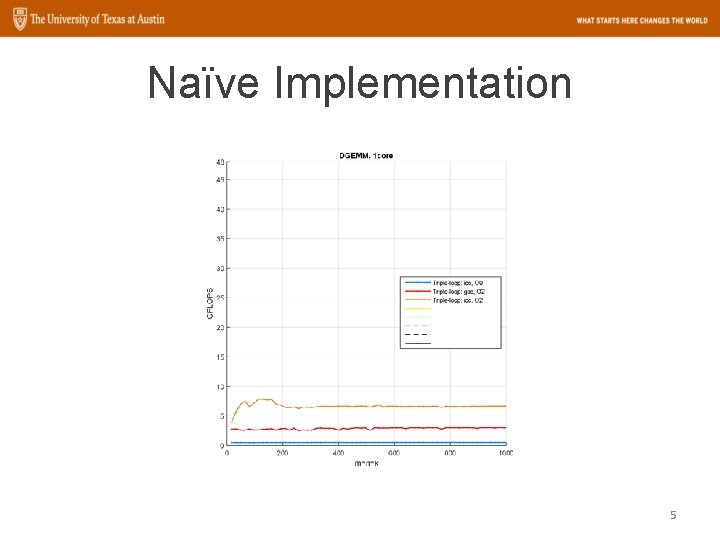

Naïve Implementation 5

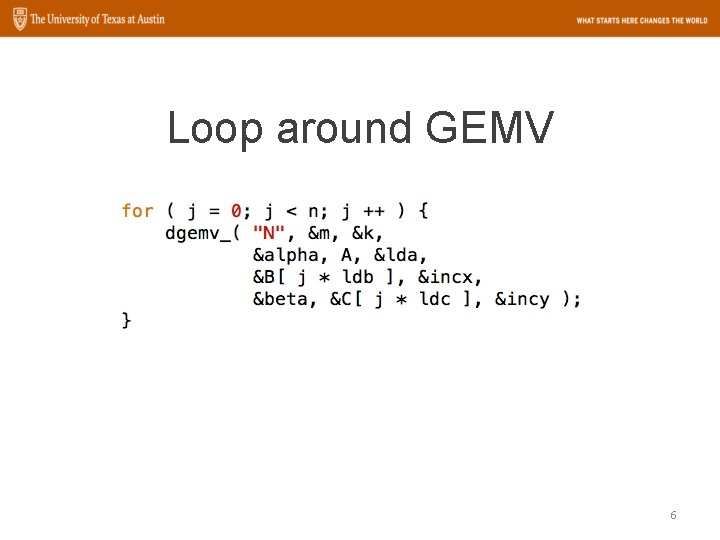

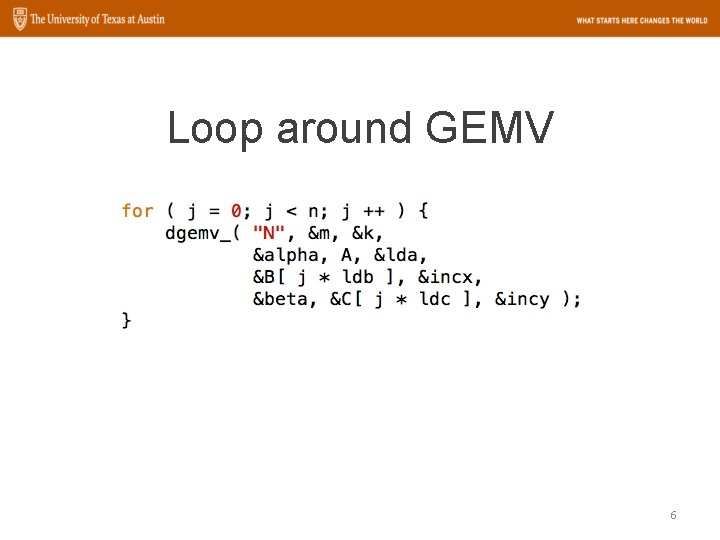

Loop around GEMV 6

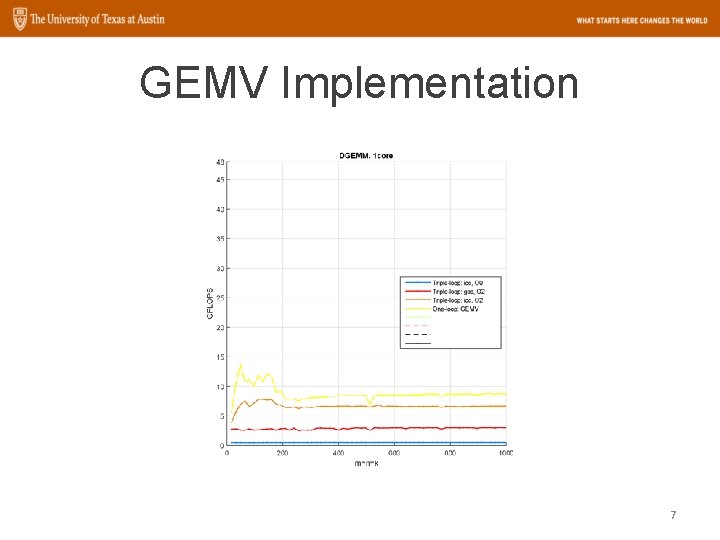

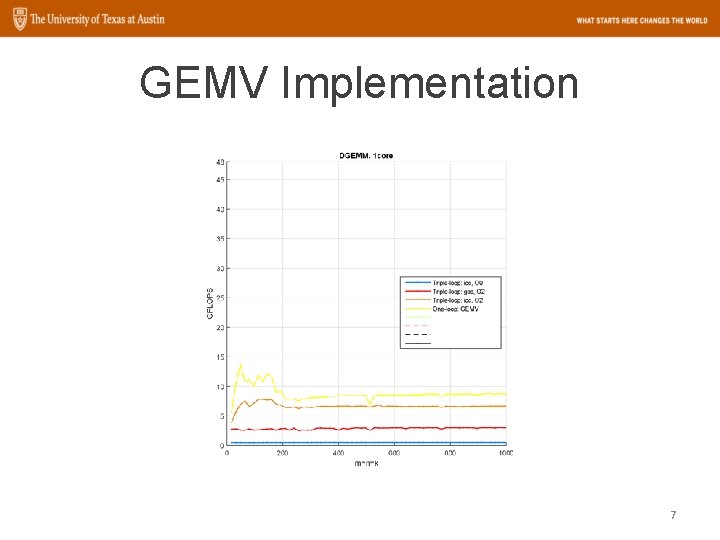

GEMV Implementation 7

Organization • Introduction • A simple blocked algorithm • State-of-the-art GEMM – Blocking for caches (temporal locality) – Packing (spatial locality) – Optimized kernels 8

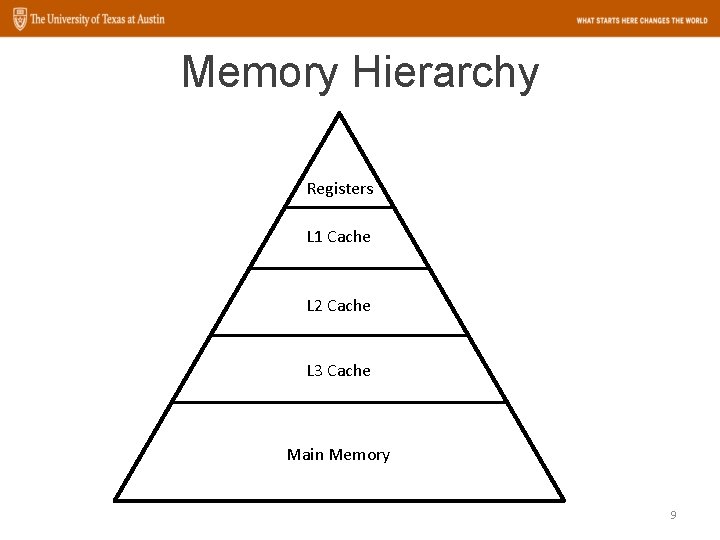

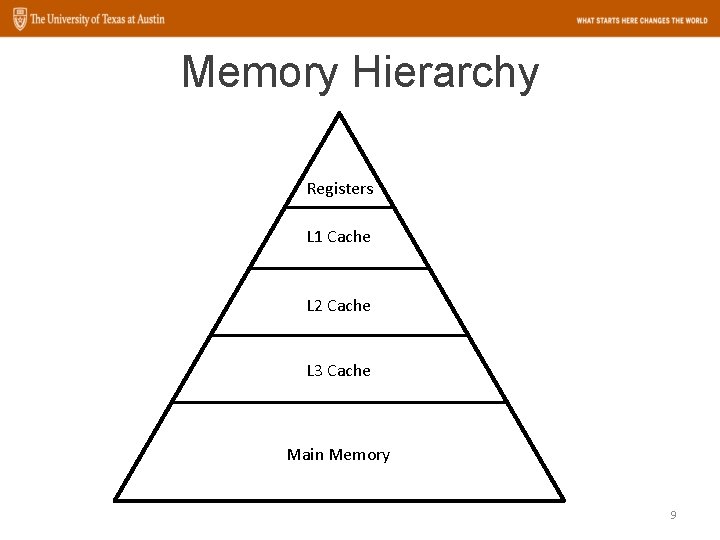

Memory Hierarchy Registers L 1 Cache L 2 Cache L 3 Cache Main Memory 9

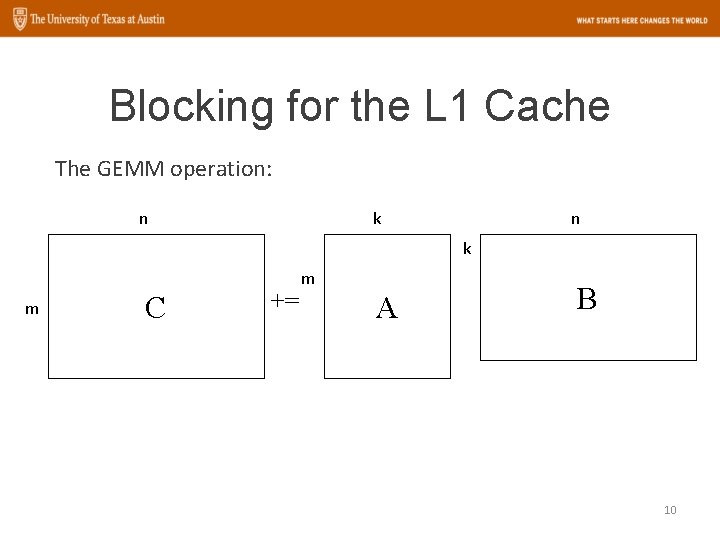

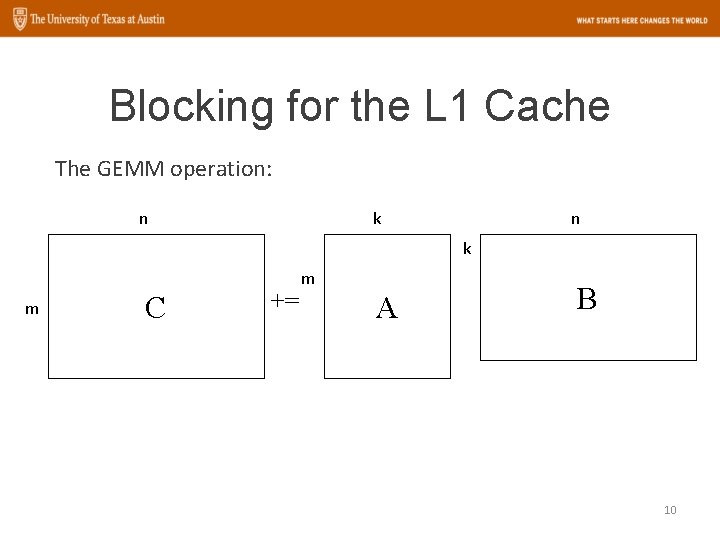

Blocking for the L 1 Cache The GEMM operation: n k m C += m A B 10

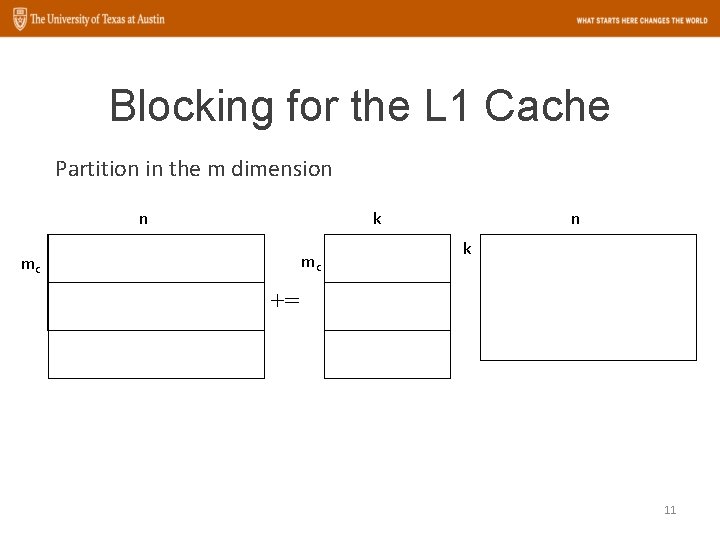

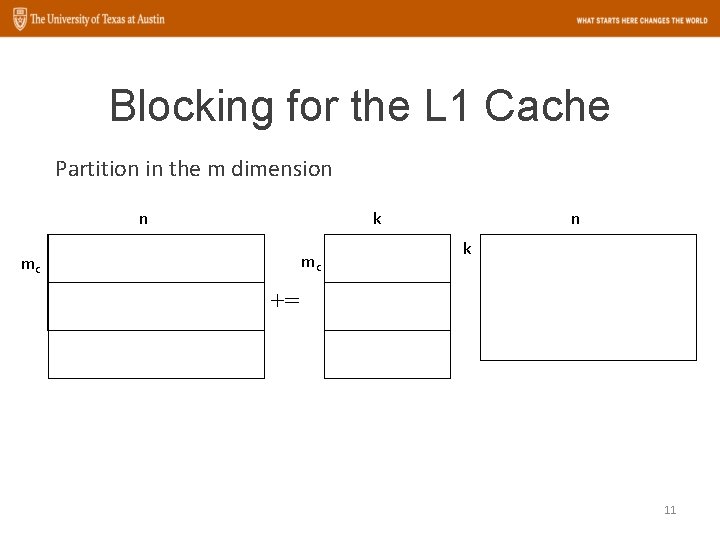

Blocking for the L 1 Cache Partition in the m dimension n k mc mc n k += 11

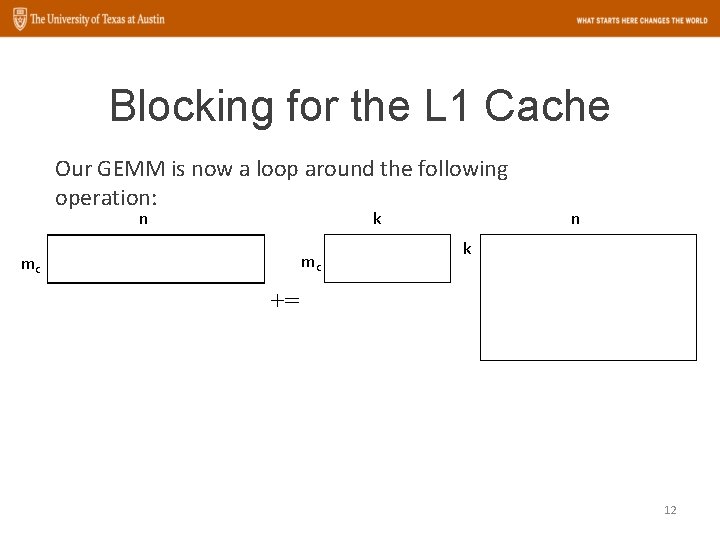

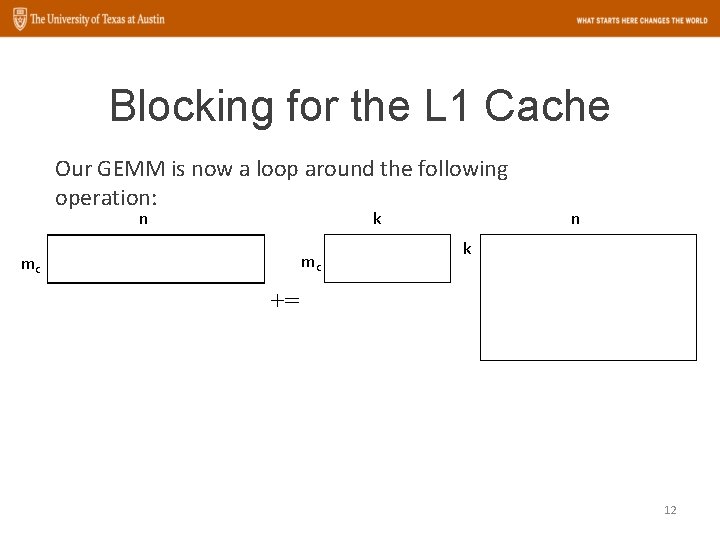

Blocking for the L 1 Cache Our GEMM is now a loop around the following operation: n k mc mc n k += 12

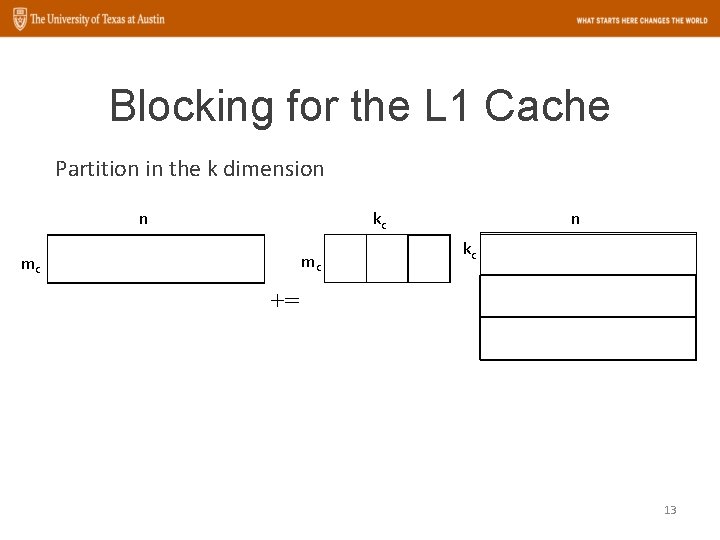

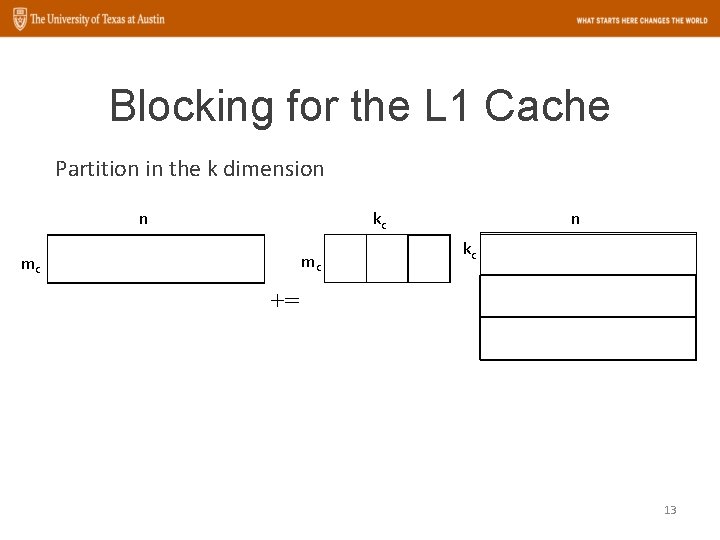

Blocking for the L 1 Cache Partition in the k dimension n kc mc mc n kc += 13

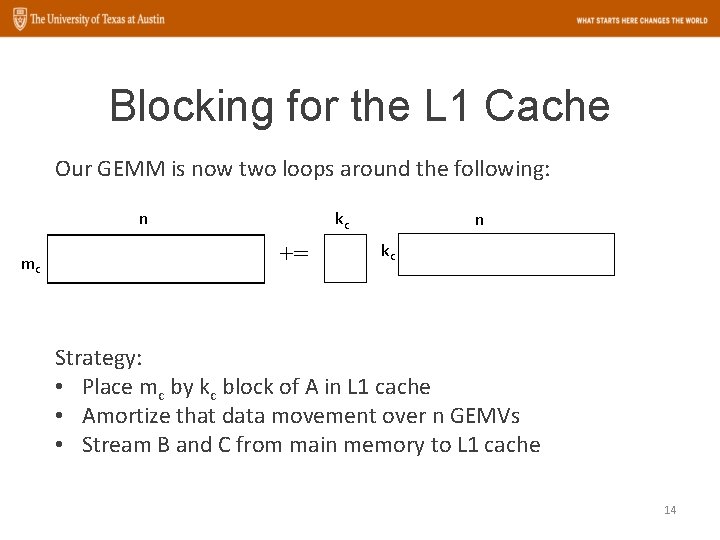

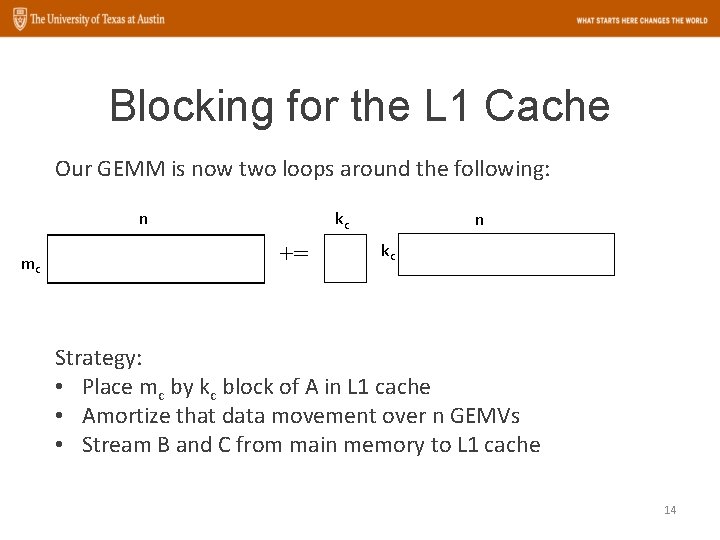

Blocking for the L 1 Cache Our GEMM is now two loops around the following: n mc kc += n kc Strategy: • Place mc by kc block of A in L 1 cache • Amortize that data movement over n GEMVs • Stream B and C from main memory to L 1 cache 14

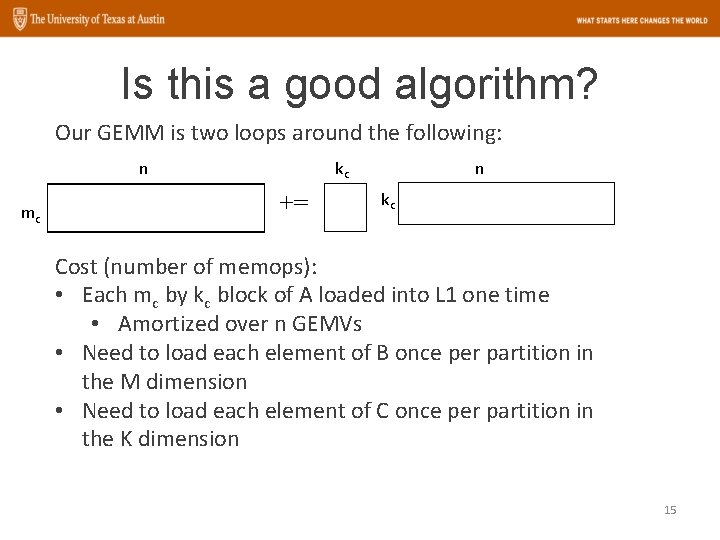

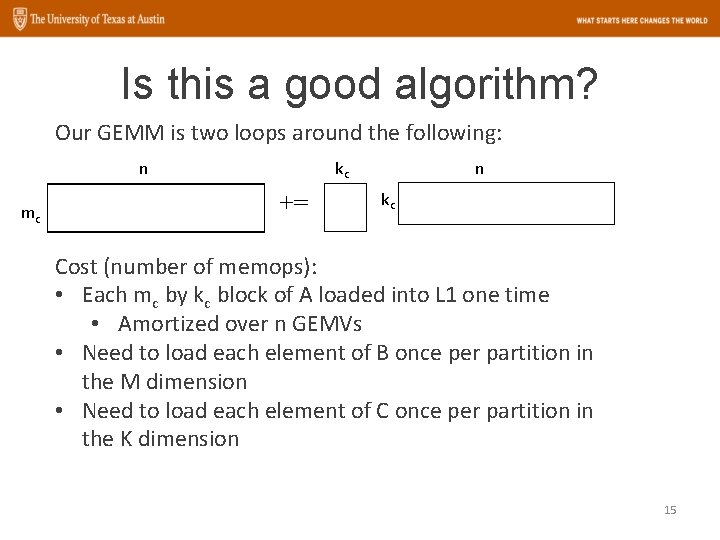

Is this a good algorithm? Our GEMM is two loops around the following: n mc kc += n kc Cost (number of memops): • Each mc by kc block of A loaded into L 1 one time • Amortized over n GEMVs • Need to load each element of B once per partition in the M dimension • Need to load each element of C once per partition in the K dimension 15

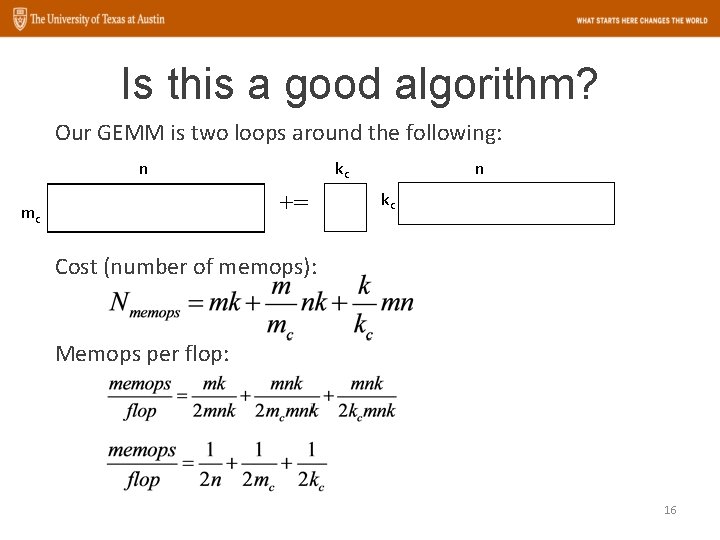

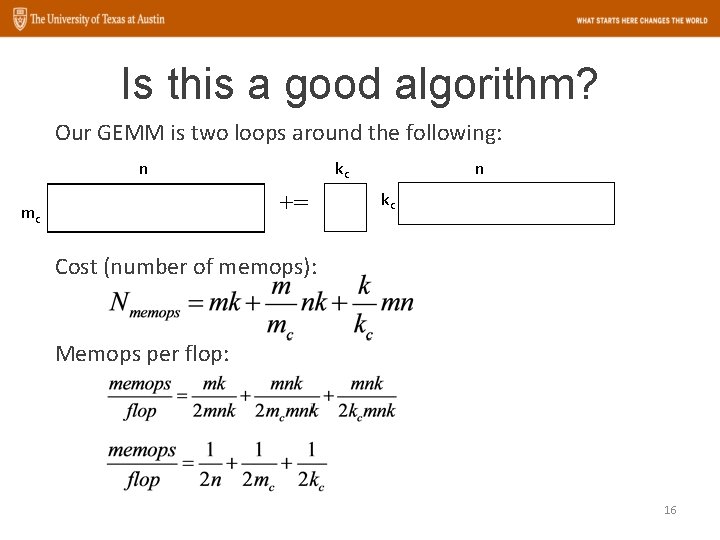

Is this a good algorithm? Our GEMM is two loops around the following: n kc += mc n kc Cost (number of memops): Memops per flop: 16

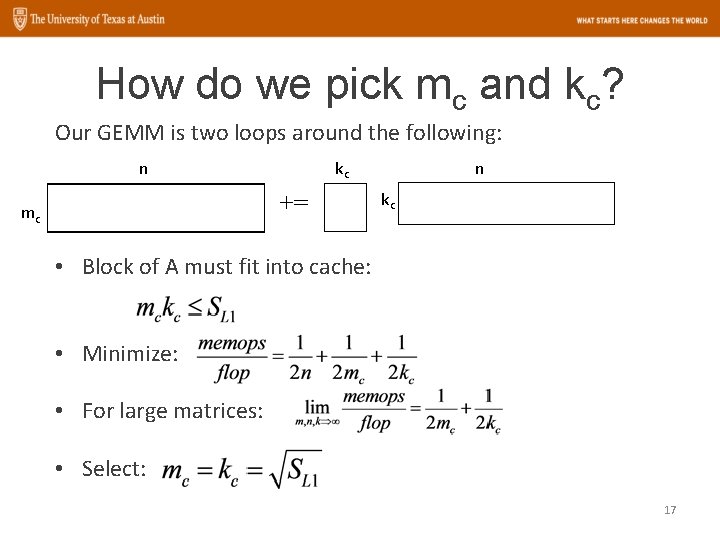

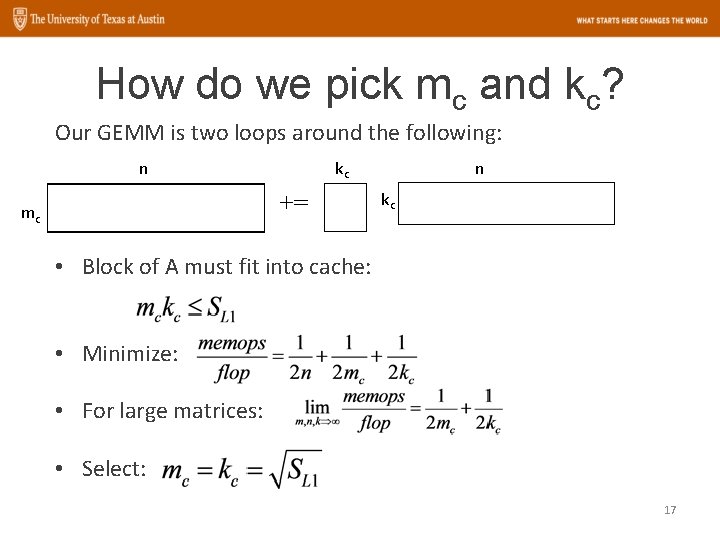

How do we pick mc and kc? Our GEMM is two loops around the following: n kc += mc n kc • Block of A must fit into cache: • Minimize: • For large matrices: • Select: 17

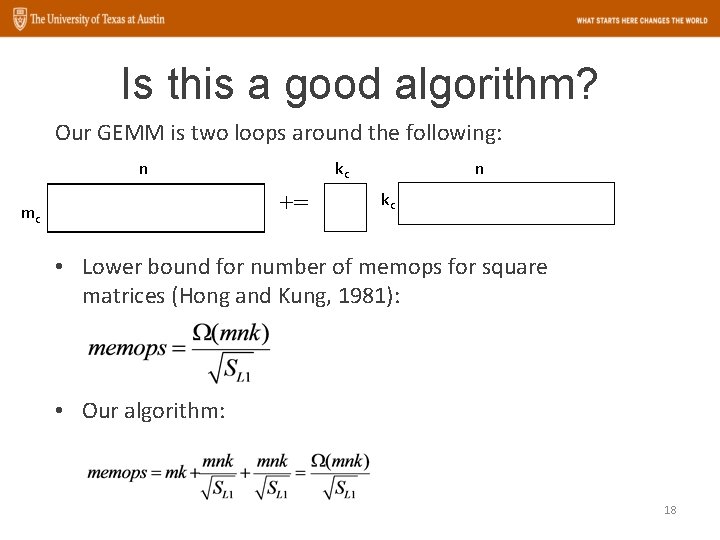

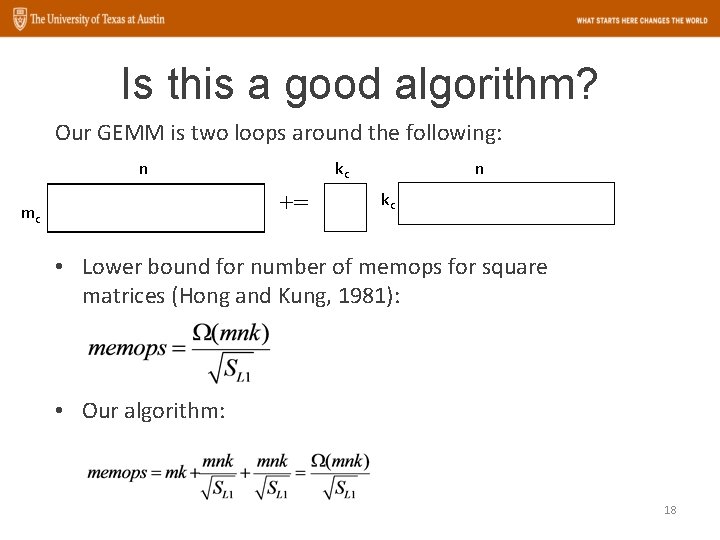

Is this a good algorithm? Our GEMM is two loops around the following: n kc += mc n kc • Lower bound for number of memops for square matrices (Hong and Kung, 1981): • Our algorithm: 18

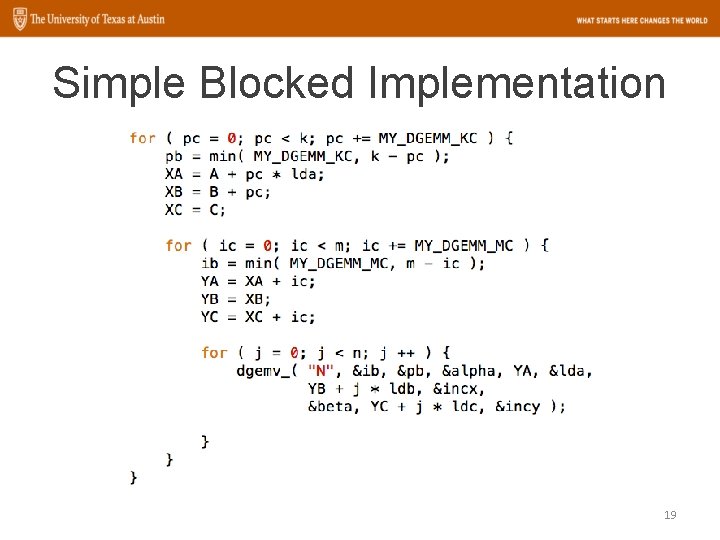

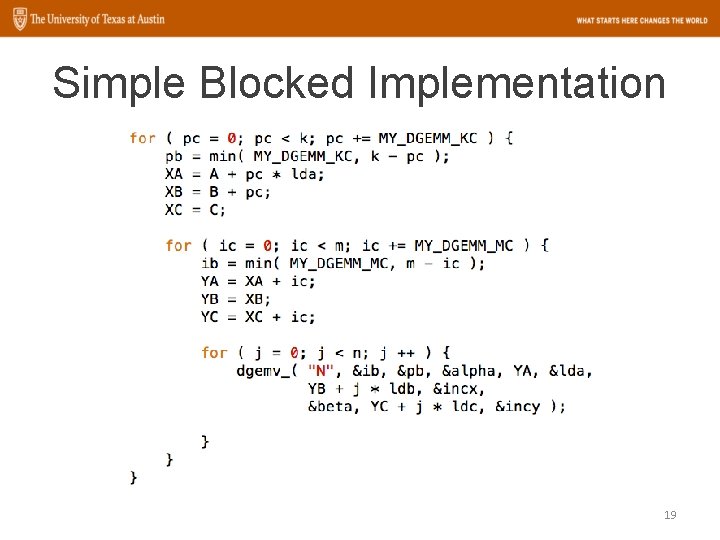

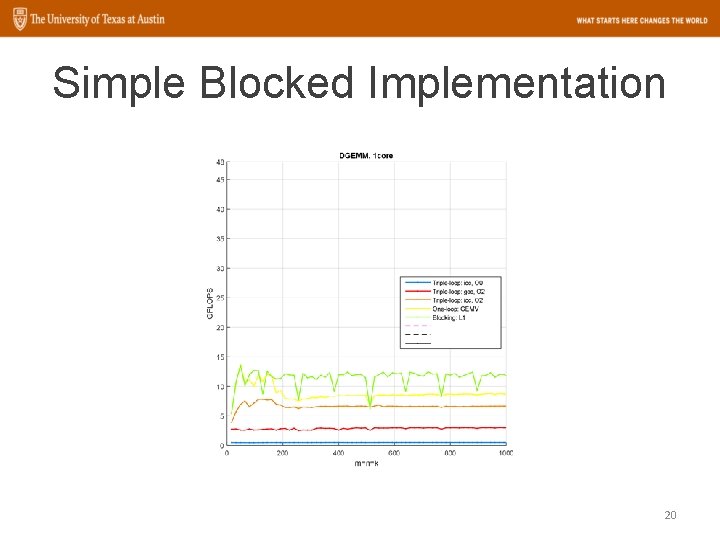

Simple Blocked Implementation 19

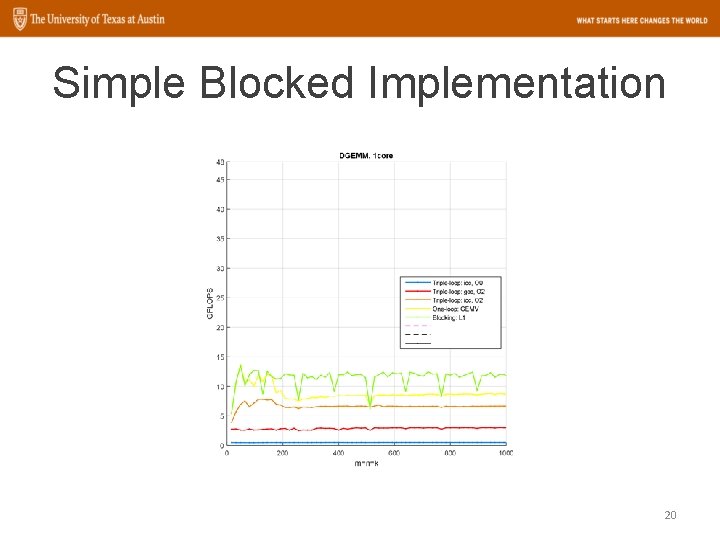

Simple Blocked Implementation 20

Organization • Introduction • A simple blocked algorithm • State-of-the-art GEMM – Blocking for caches (temporal locality) – Packing (spatial locality) – Optimized kernels 21

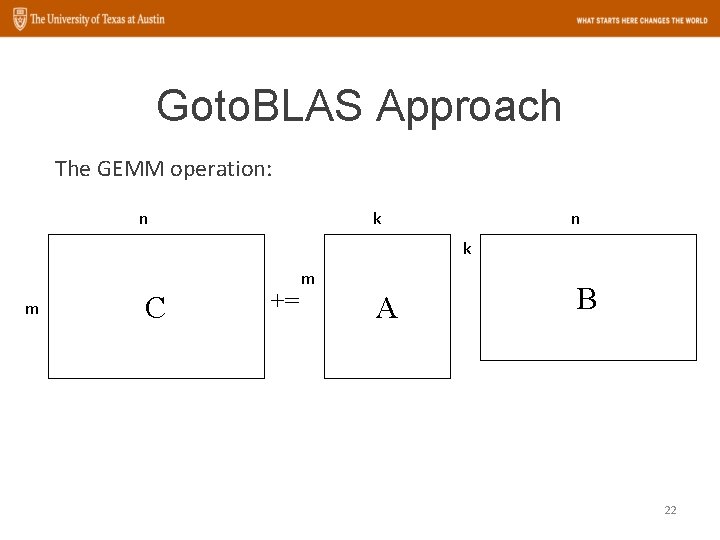

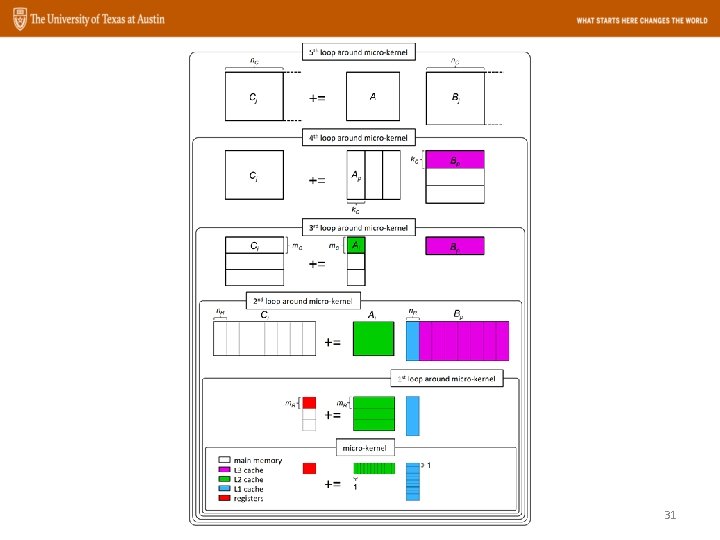

Goto. BLAS Approach The GEMM operation: n k m C += m A B 22

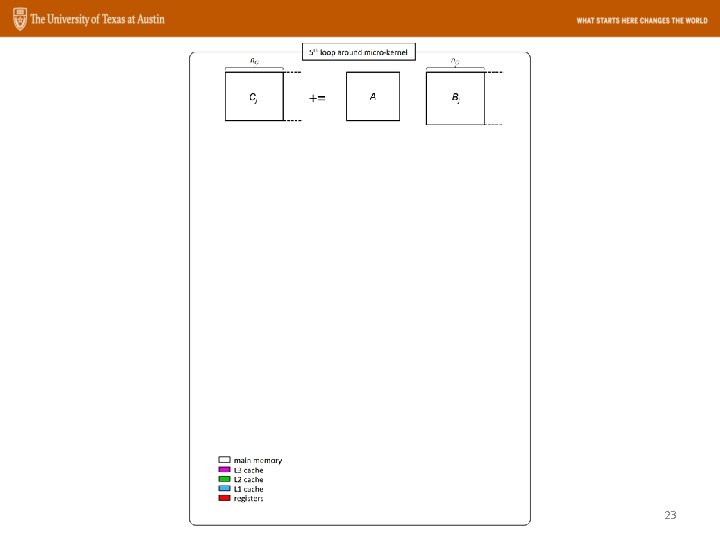

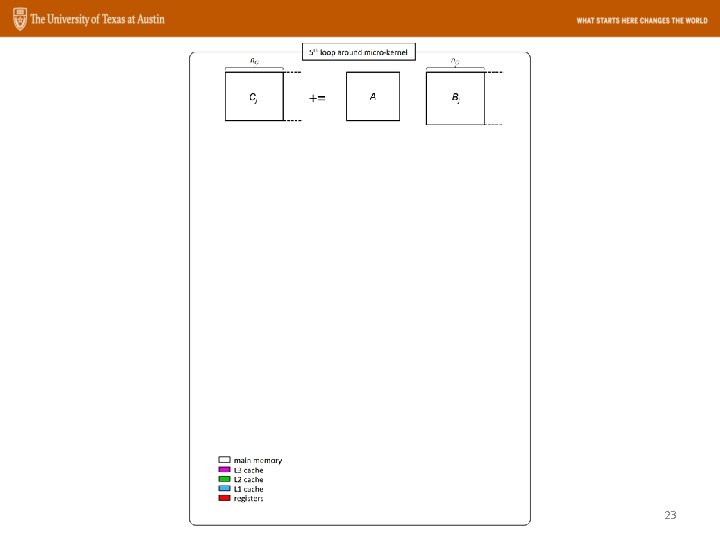

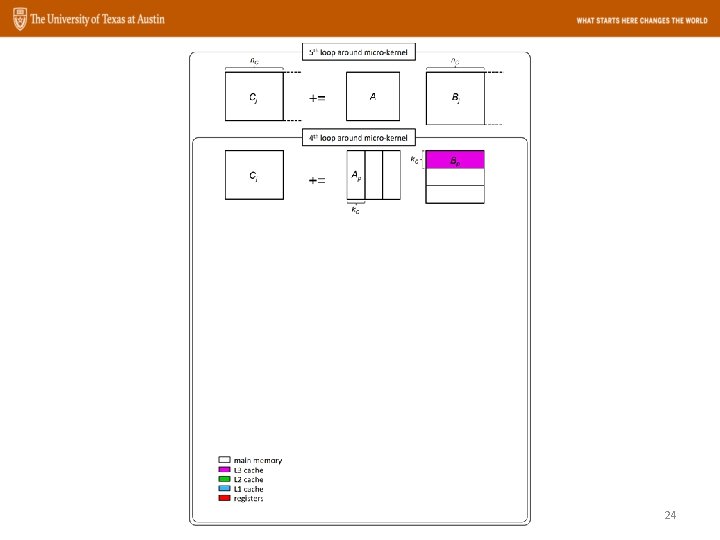

23

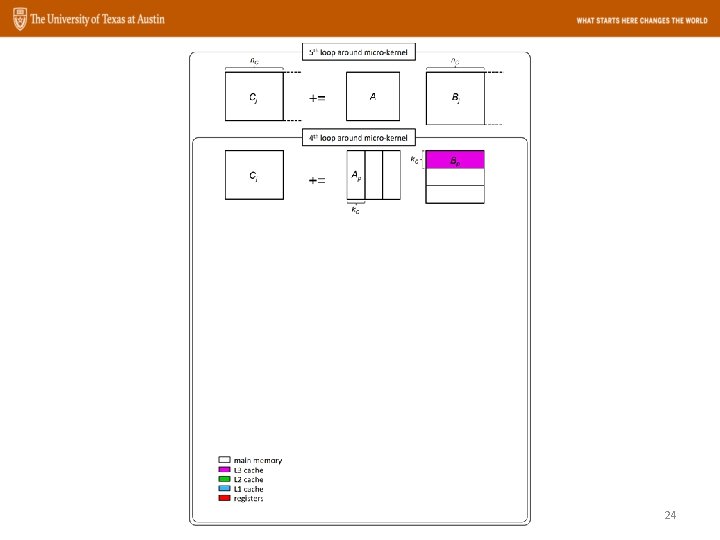

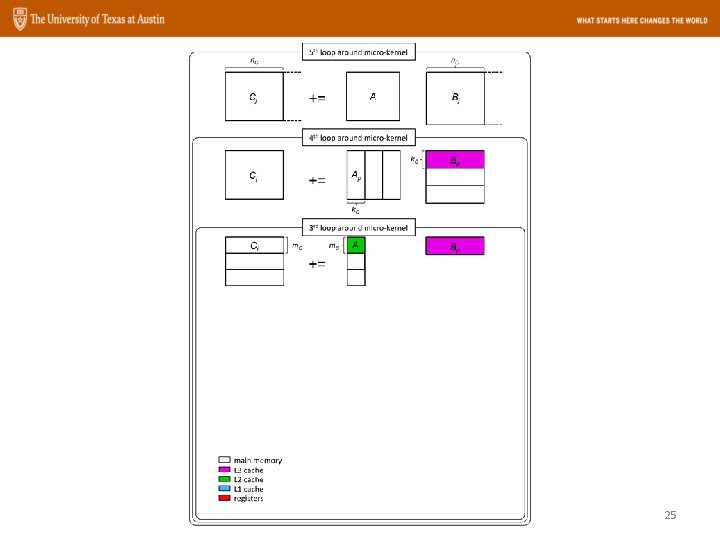

24

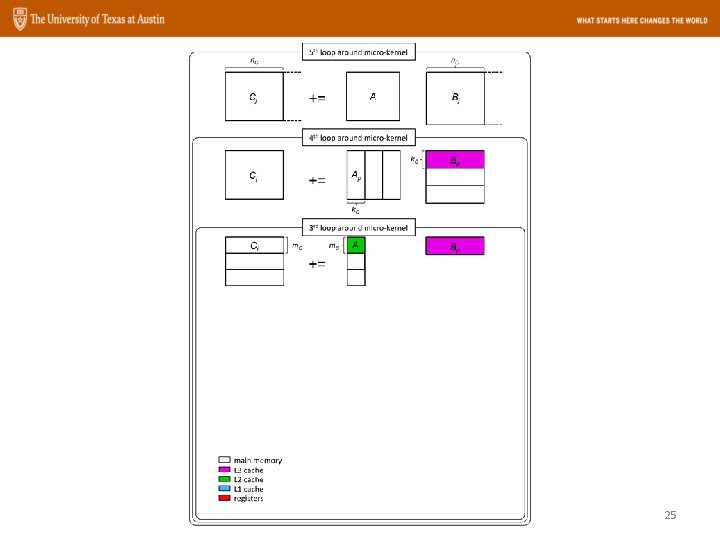

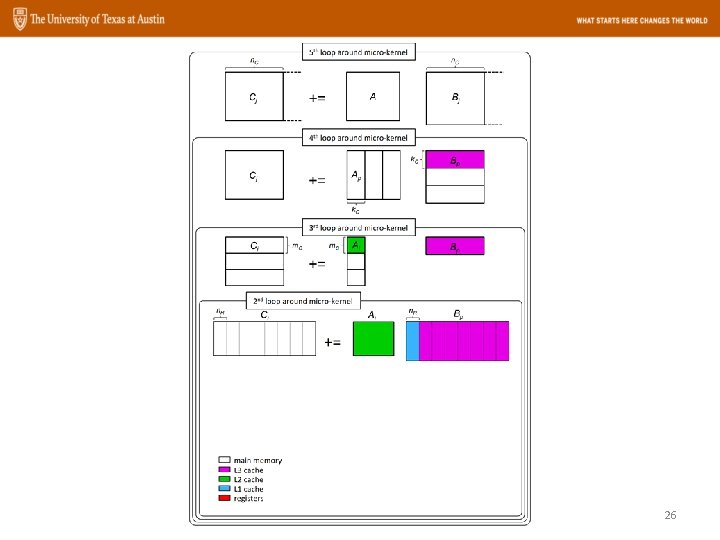

25

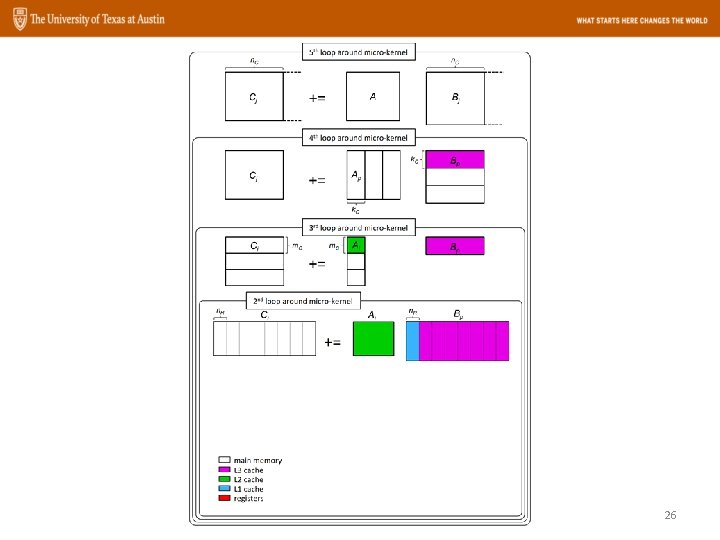

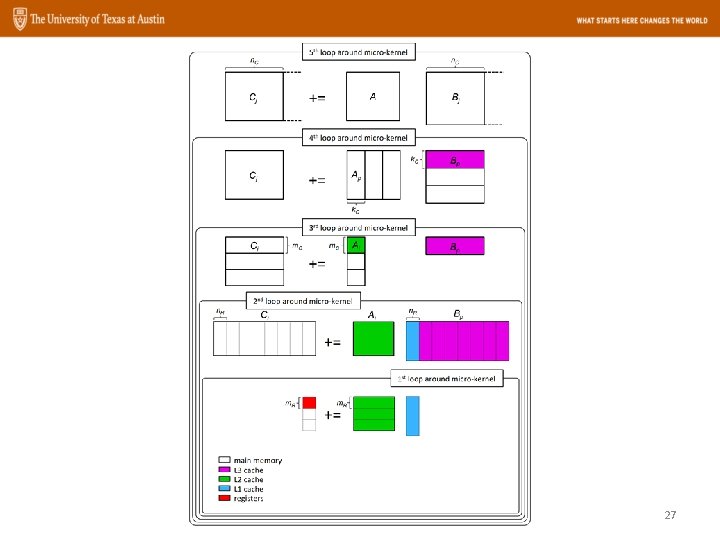

26

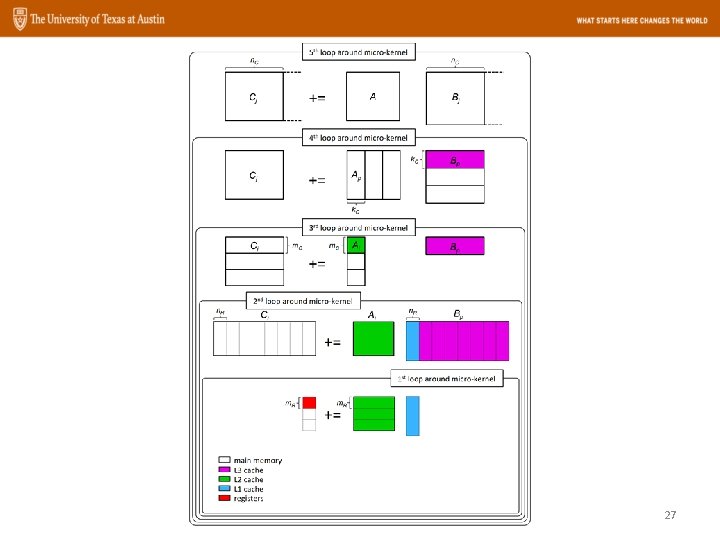

27

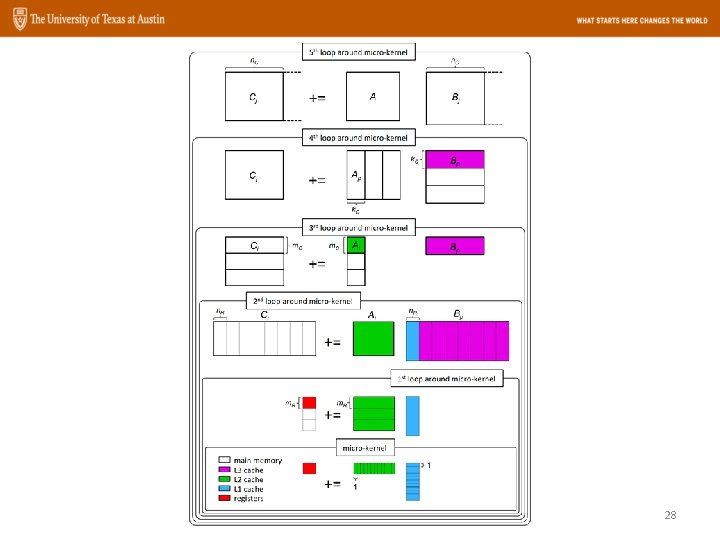

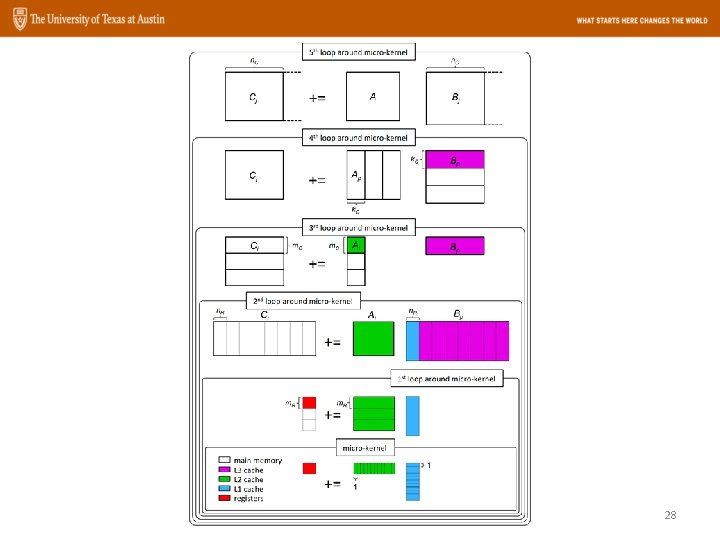

28

Organization • Introduction • A simple blocked algorithm • State-of-the-art GEMM – Blocking for caches (temporal locality) – Packing (spatial locality) – Optimized kernels 29

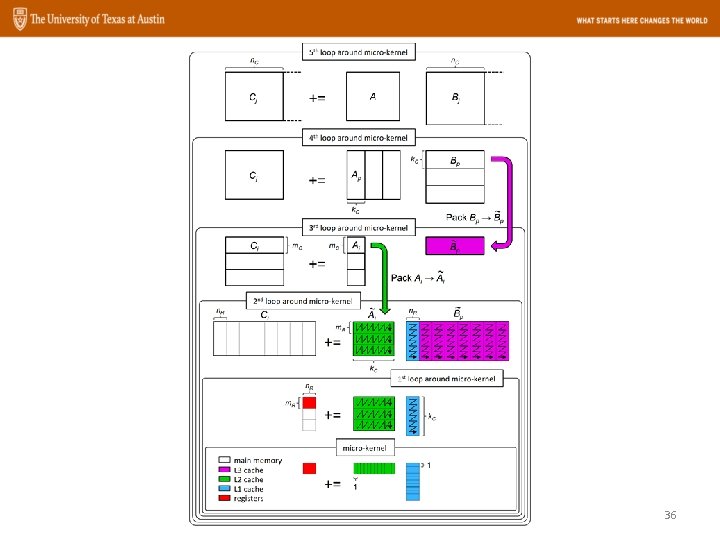

Packing • Better spatial locality • More friendly for prefetching • Reduce: – Conflict misses • Easier SIMD parallelization 30

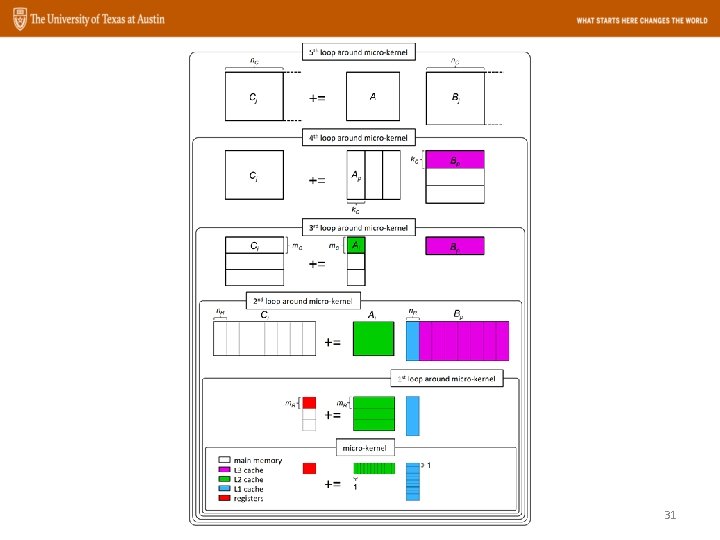

31

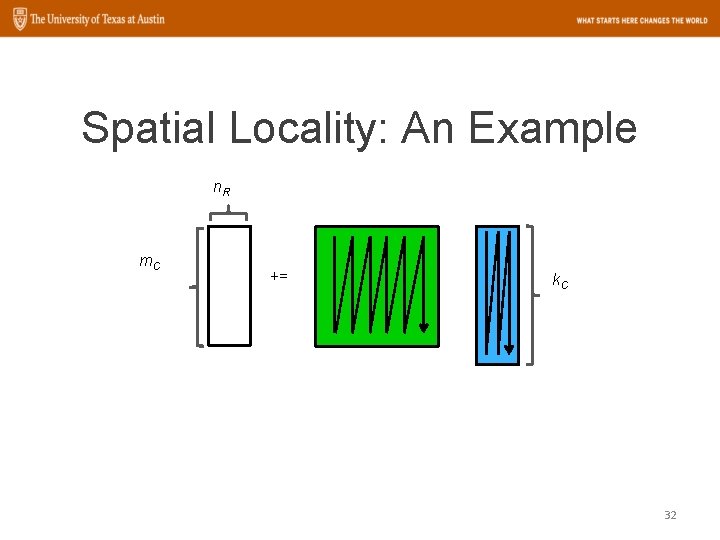

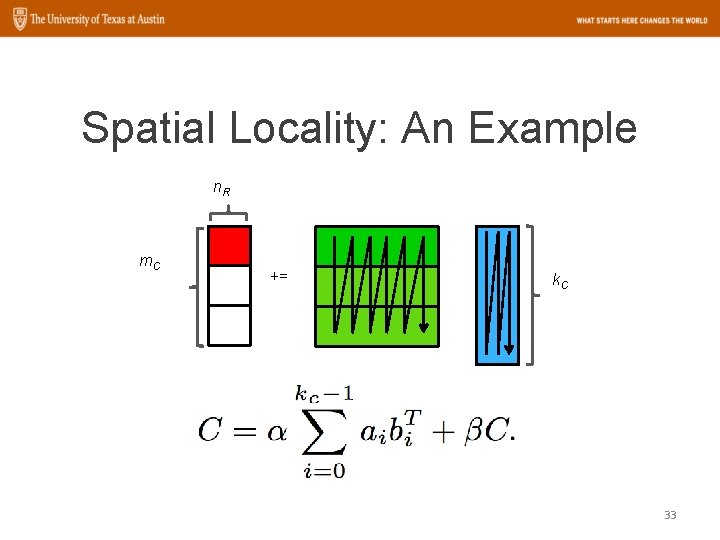

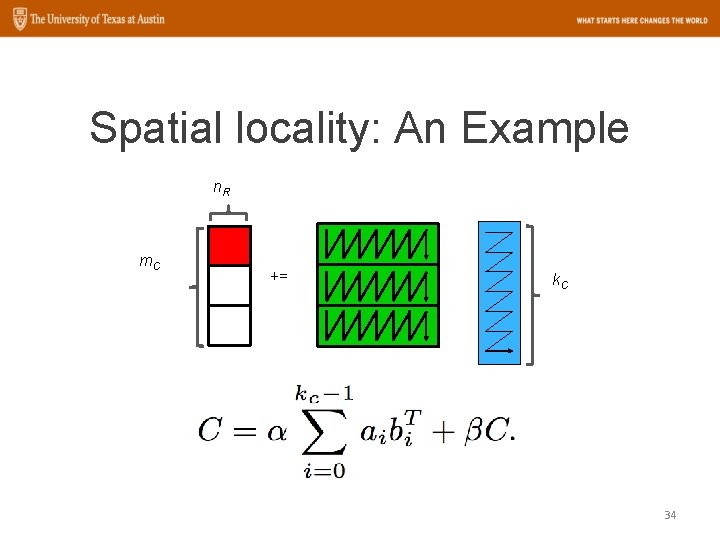

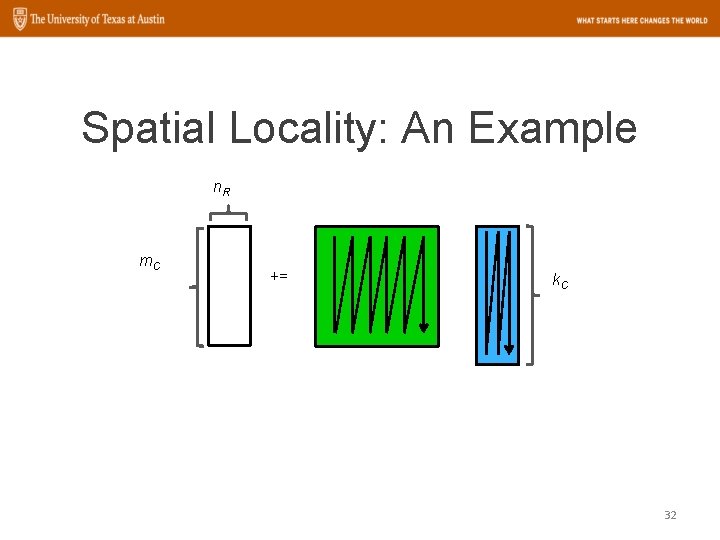

Spatial Locality: An Example n. R m. C += k. C 32

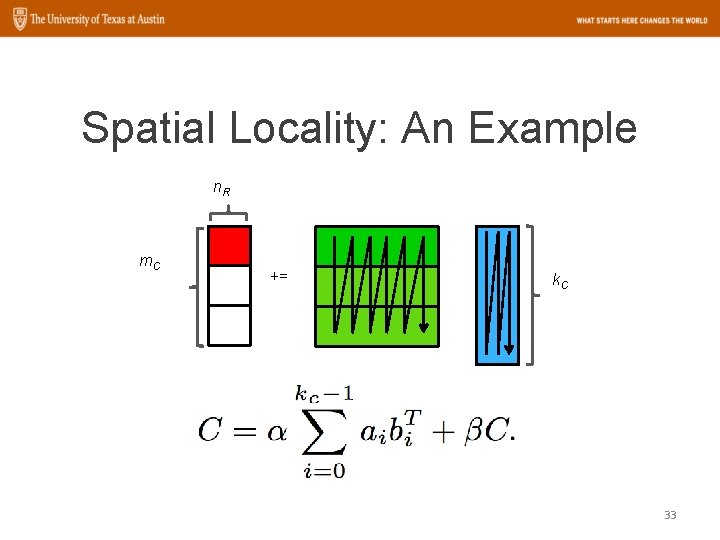

Spatial Locality: An Example n. R m. C += k. C 33

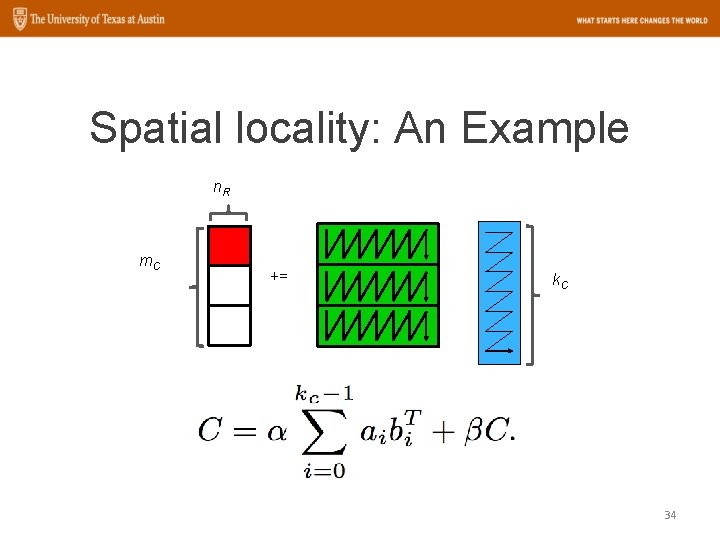

Spatial locality: An Example n. R m. C += k. C 34

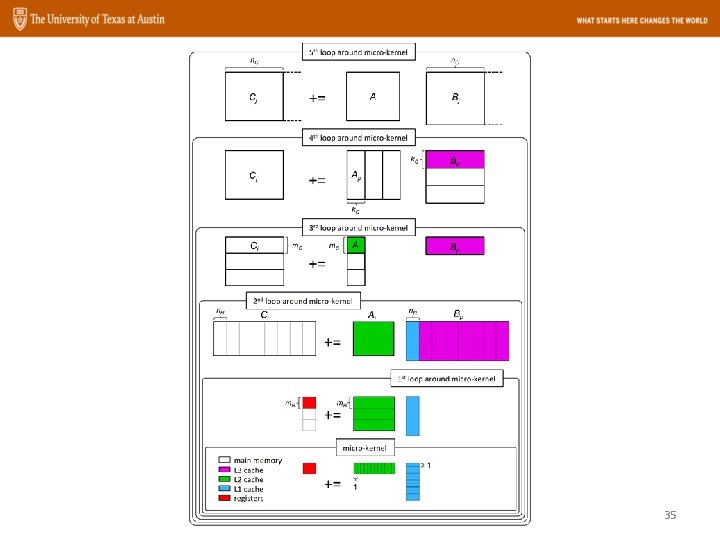

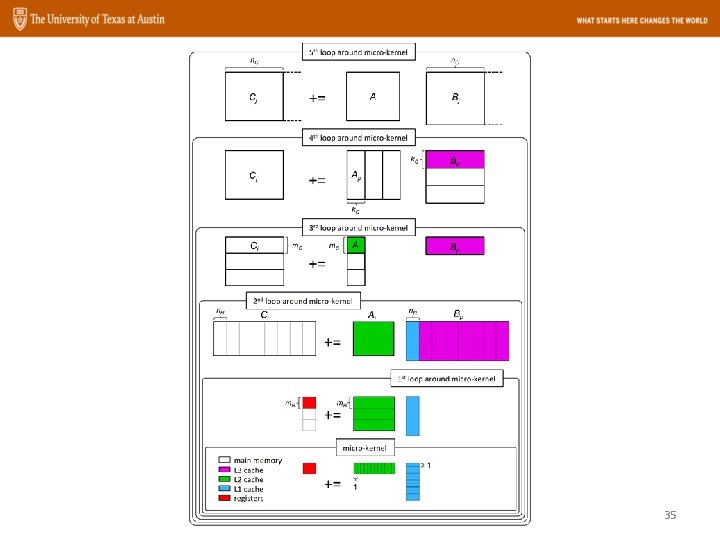

35

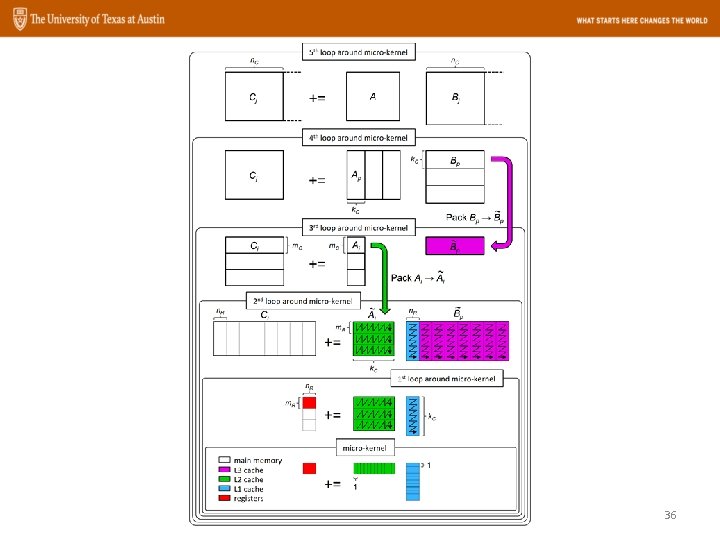

36

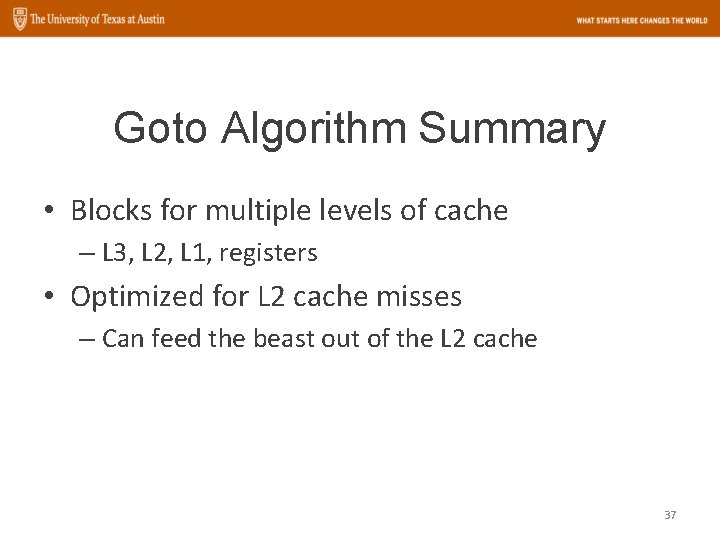

Goto Algorithm Summary • Blocks for multiple levels of cache – L 3, L 2, L 1, registers • Optimized for L 2 cache misses – Can feed the beast out of the L 2 cache 37

Organization • Introduction • A simple blocked algorithm • State-of-the-art GEMM – Blocking for caches (temporal locality) – Packing (spatial locality) – Optimized kernels 38

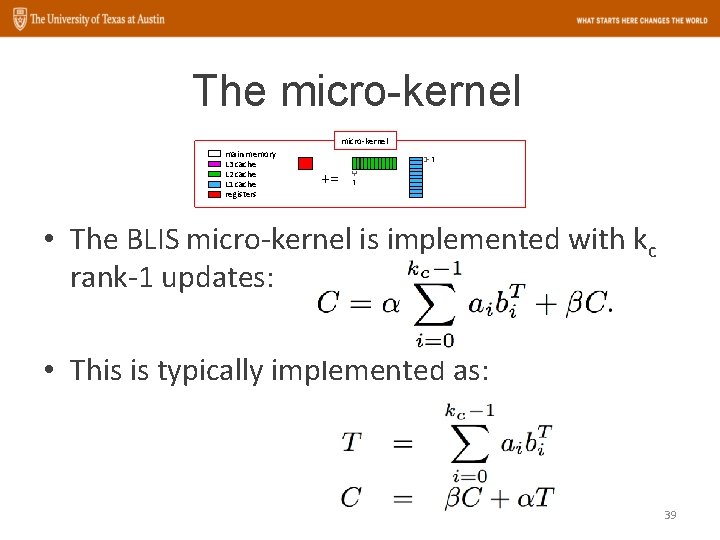

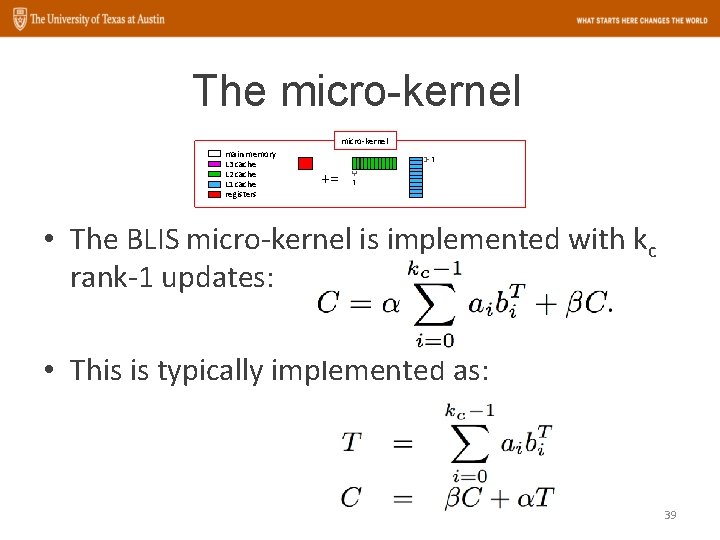

The micro-kernel main memory L 3 cache L 2 cache L 1 cache registers 1 += 1 • The BLIS micro-kernel is implemented with kc rank-1 updates: • This is typically implemented as: 39

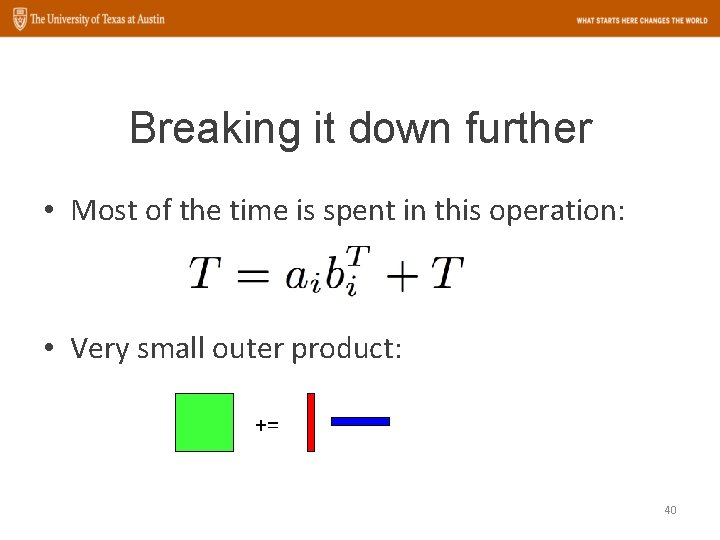

Breaking it down further • Most of the time is spent in this operation: • Very small outer product: += 40

Implementing the outer product • We need to use SIMD • A CPU has some vector length, v – Vector registers can hold v elements – Vector instruction can operate on v elements • We need to think about: – Load, permute and arithmetic instructions 41

SIMD Loads • Typically, we need to load elements into registers from memory before use • A packed SIMD load instruction will load v consecutive elements from memory into the v positions within a register 42

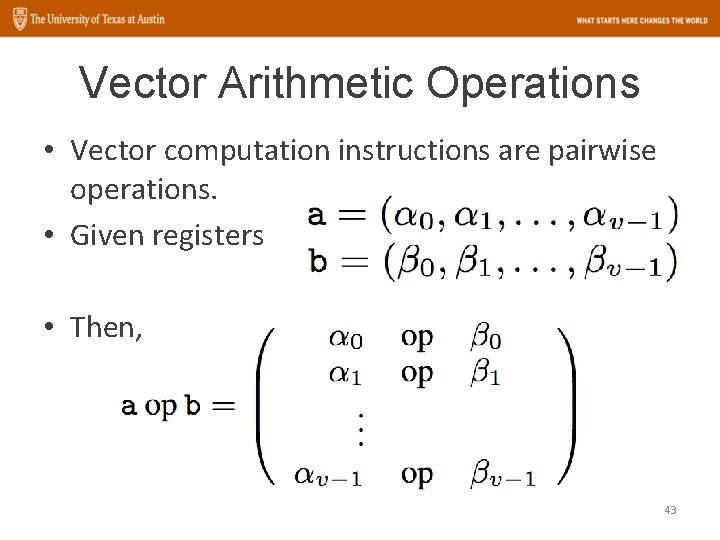

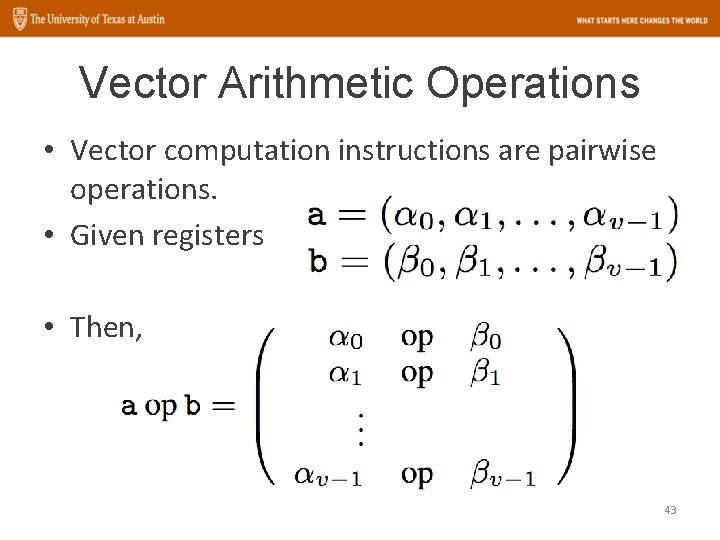

Vector Arithmetic Operations • Vector computation instructions are pairwise operations. • Given registers • Then, 43

Permutation Operations • Permutation instructions will shuffle elements within a register • Necessary because computation instructions are pairwise – We need to multiply every element of a with every element of b 44

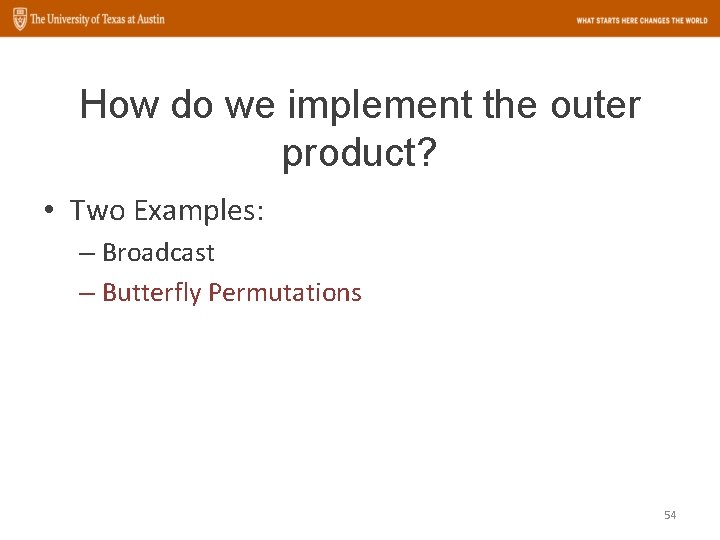

How do we implement the outer product? • Two Examples: – Broadcast – Butterfly Permutations 45

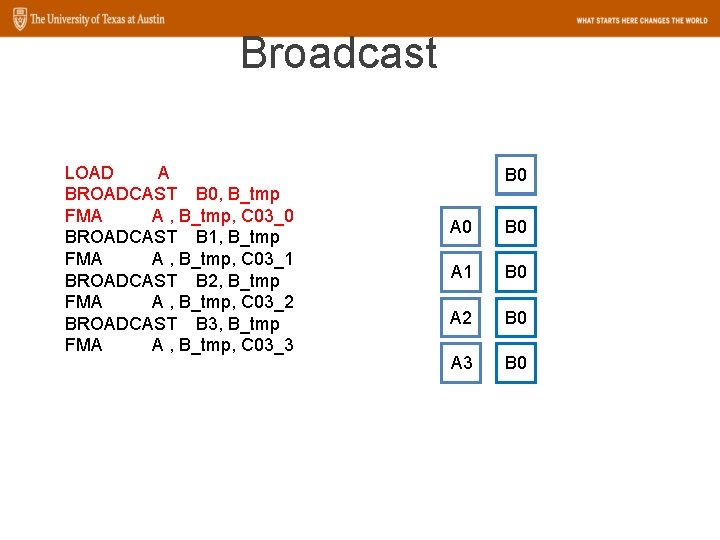

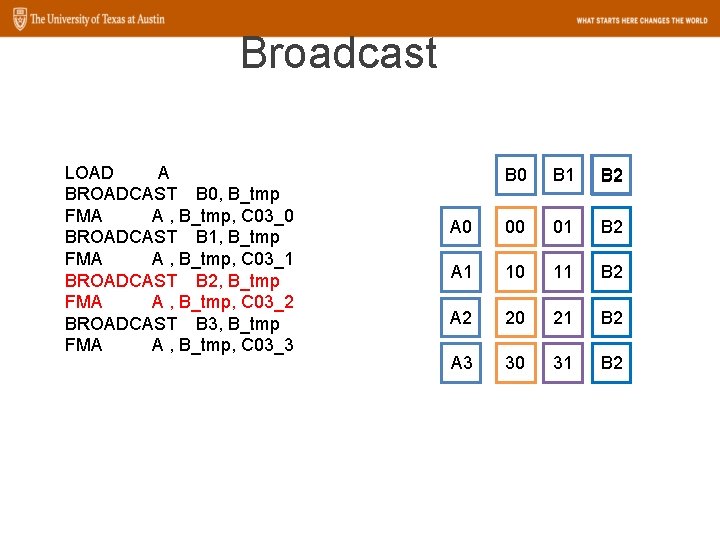

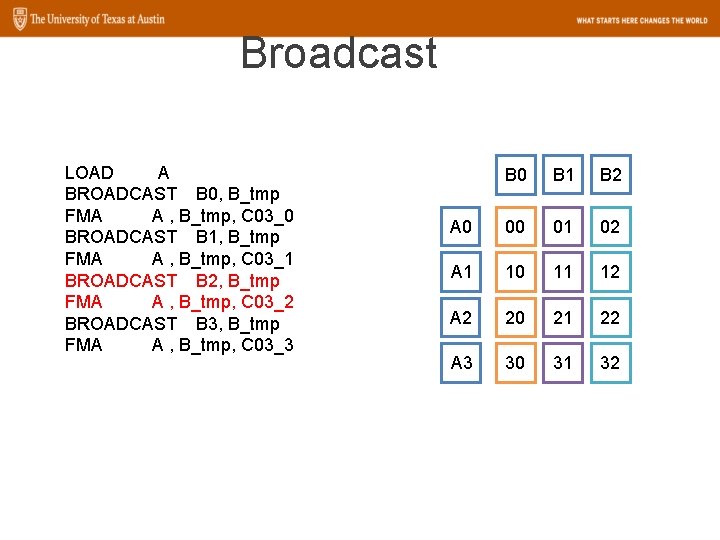

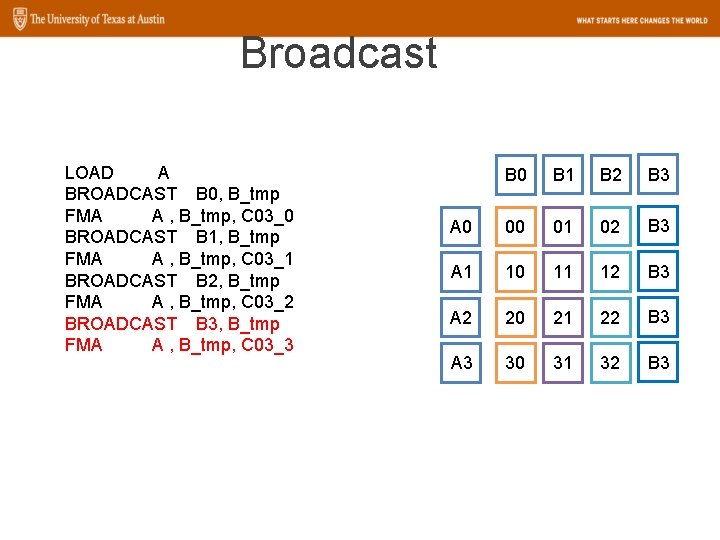

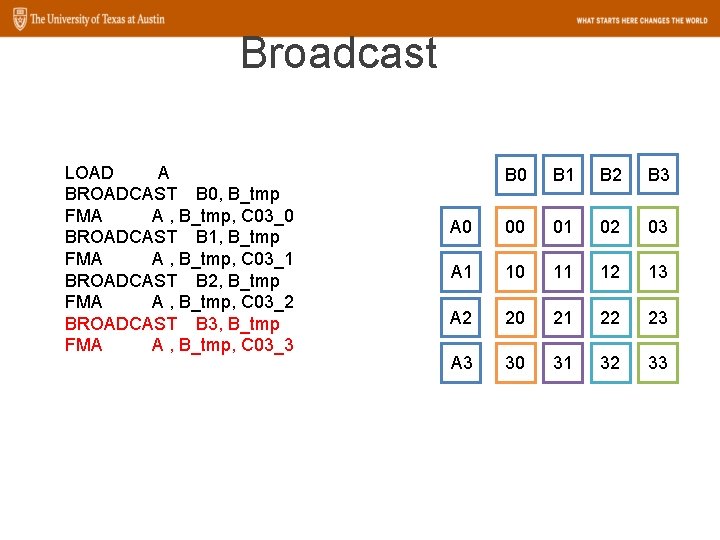

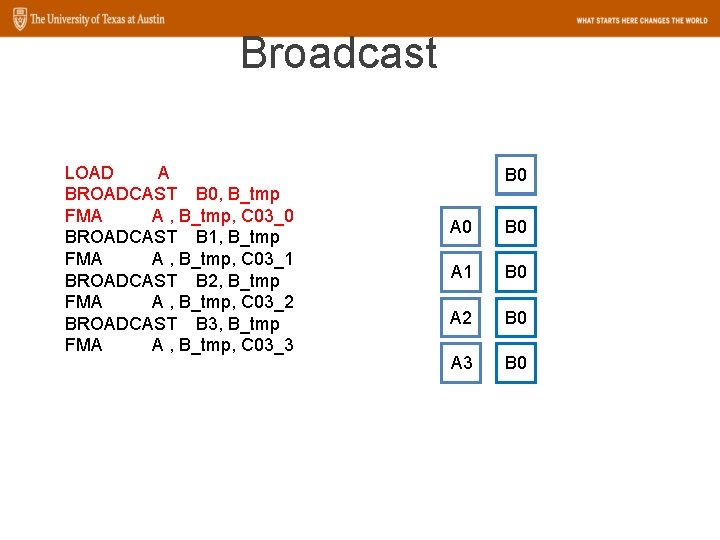

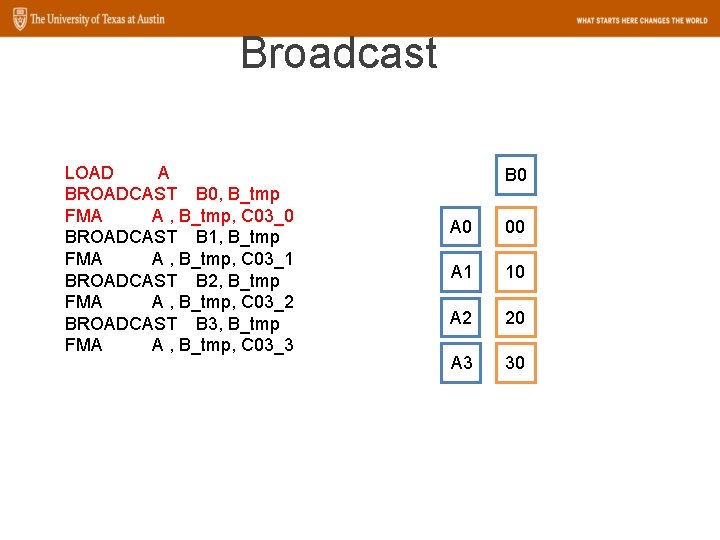

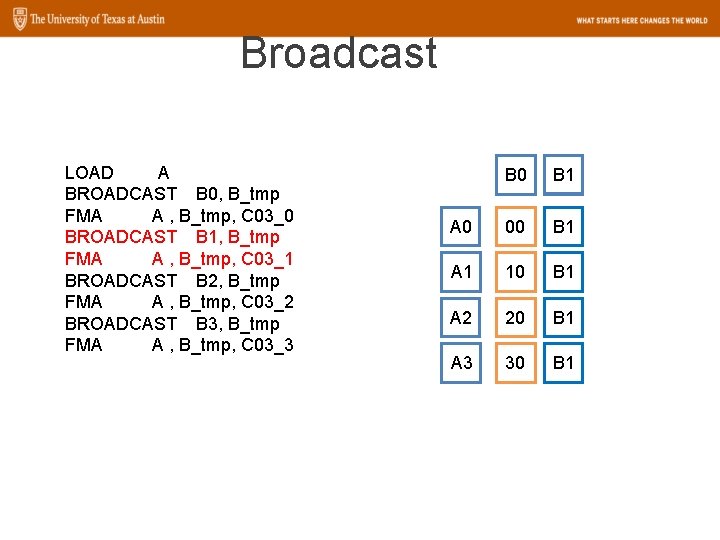

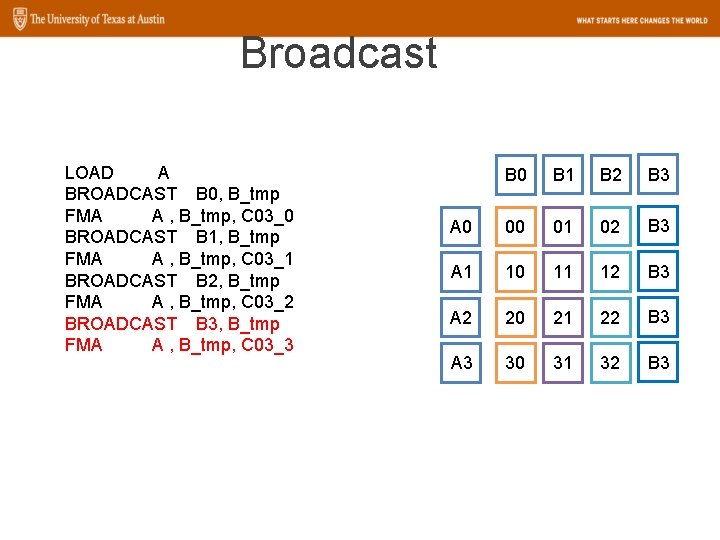

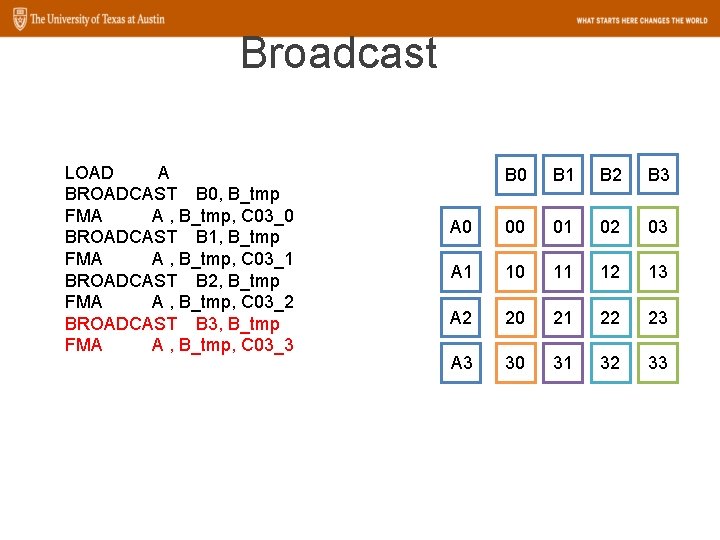

Broadcast LOAD A BROADCAST B 0, B_tmp FMA A , B_tmp, C 03_0 BROADCAST B 1, B_tmp FMA A , B_tmp, C 03_1 BROADCAST B 2, B_tmp FMA A , B_tmp, C 03_2 BROADCAST B 3, B_tmp FMA A , B_tmp, C 03_3 B 0 A 0 B 0 A 1 B 0 A 2 B 0 A 3 B 0

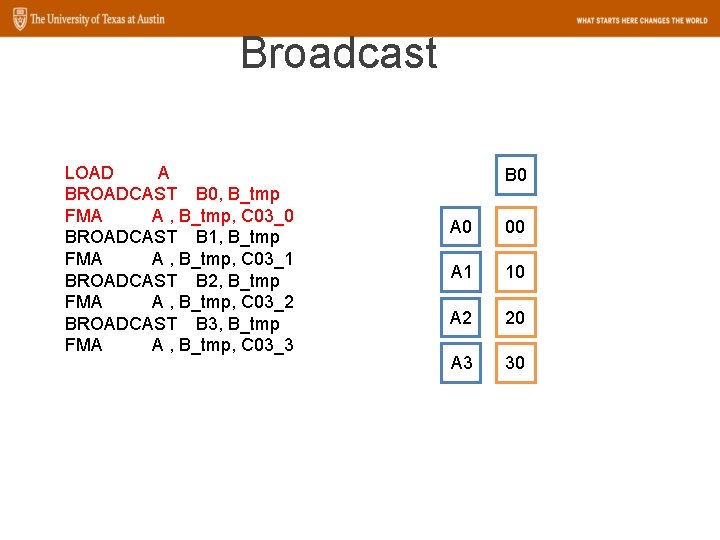

Broadcast LOAD A BROADCAST B 0, B_tmp FMA A , B_tmp, C 03_0 BROADCAST B 1, B_tmp FMA A , B_tmp, C 03_1 BROADCAST B 2, B_tmp FMA A , B_tmp, C 03_2 BROADCAST B 3, B_tmp FMA A , B_tmp, C 03_3 B 0 A 0 00 A 1 10 A 2 20 A 3 30

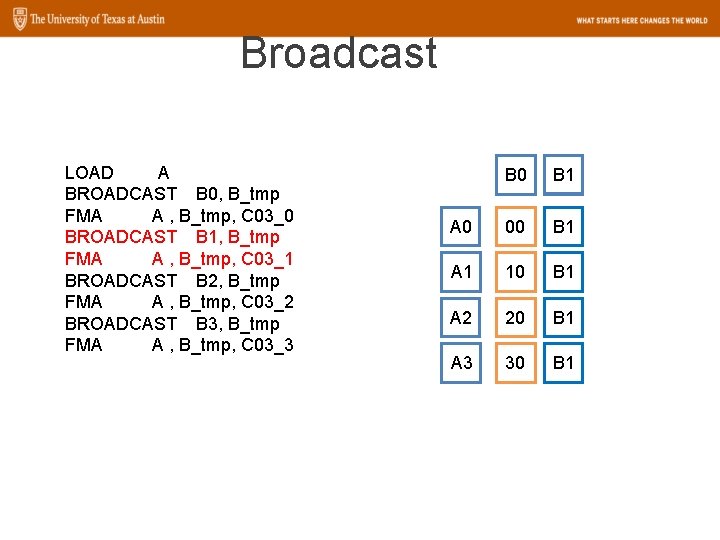

Broadcast LOAD A BROADCAST B 0, B_tmp FMA A , B_tmp, C 03_0 BROADCAST B 1, B_tmp FMA A , B_tmp, C 03_1 BROADCAST B 2, B_tmp FMA A , B_tmp, C 03_2 BROADCAST B 3, B_tmp FMA A , B_tmp, C 03_3 B 0 B 1 A 0 00 B 1 A 1 10 B 1 A 2 20 B 1 A 3 30 B 1

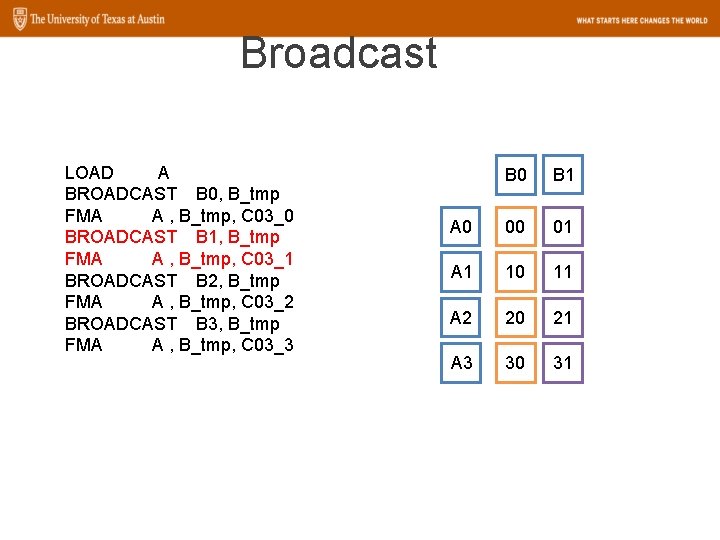

Broadcast LOAD A BROADCAST B 0, B_tmp FMA A , B_tmp, C 03_0 BROADCAST B 1, B_tmp FMA A , B_tmp, C 03_1 BROADCAST B 2, B_tmp FMA A , B_tmp, C 03_2 BROADCAST B 3, B_tmp FMA A , B_tmp, C 03_3 B 0 B 1 A 0 00 01 A 1 10 11 A 2 20 21 A 3 30 31

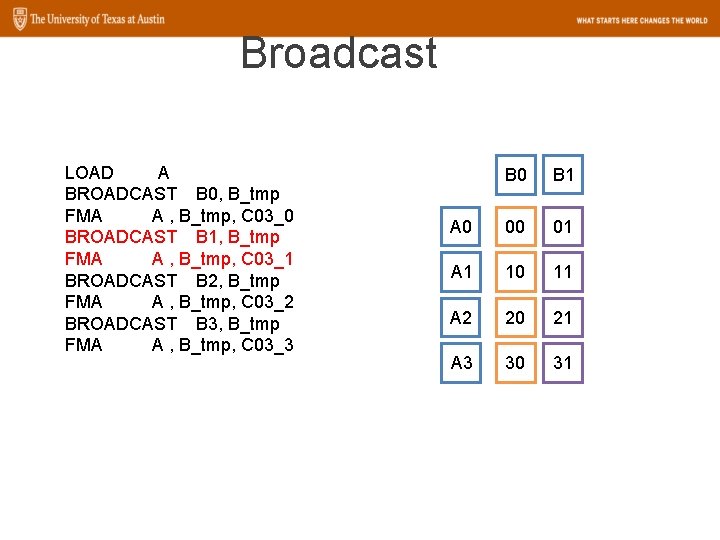

Broadcast LOAD A BROADCAST B 0, B_tmp FMA A , B_tmp, C 03_0 BROADCAST B 1, B_tmp FMA A , B_tmp, C 03_1 BROADCAST B 2, B_tmp FMA A , B_tmp, C 03_2 BROADCAST B 3, B_tmp FMA A , B_tmp, C 03_3 B 0 B 1 B 2 A 0 00 01 B 2 A 1 10 11 B 2 A 2 20 21 B 2 A 3 30 31 B 2

Broadcast LOAD A BROADCAST B 0, B_tmp FMA A , B_tmp, C 03_0 BROADCAST B 1, B_tmp FMA A , B_tmp, C 03_1 BROADCAST B 2, B_tmp FMA A , B_tmp, C 03_2 BROADCAST B 3, B_tmp FMA A , B_tmp, C 03_3 B 0 B 1 B 2 A 0 00 01 02 A 1 10 11 12 A 2 20 21 22 A 3 30 31 32

Broadcast LOAD A BROADCAST B 0, B_tmp FMA A , B_tmp, C 03_0 BROADCAST B 1, B_tmp FMA A , B_tmp, C 03_1 BROADCAST B 2, B_tmp FMA A , B_tmp, C 03_2 BROADCAST B 3, B_tmp FMA A , B_tmp, C 03_3 B 0 B 1 B 2 B 3 A 0 00 01 02 B 3 A 1 10 11 12 B 3 A 2 20 21 22 B 3 A 3 30 31 32 B 3

Broadcast LOAD A BROADCAST B 0, B_tmp FMA A , B_tmp, C 03_0 BROADCAST B 1, B_tmp FMA A , B_tmp, C 03_1 BROADCAST B 2, B_tmp FMA A , B_tmp, C 03_2 BROADCAST B 3, B_tmp FMA A , B_tmp, C 03_3 B 0 B 1 B 2 B 3 A 0 00 01 02 03 A 1 10 11 12 13 A 2 20 21 22 23 A 3 30 31 32 33

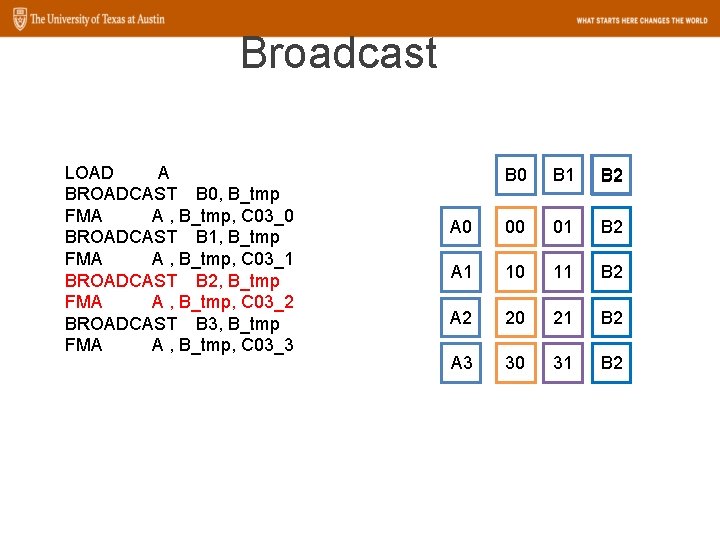

How do we implement the outer product? • Two Examples: – Broadcast – Butterfly Permutations 54

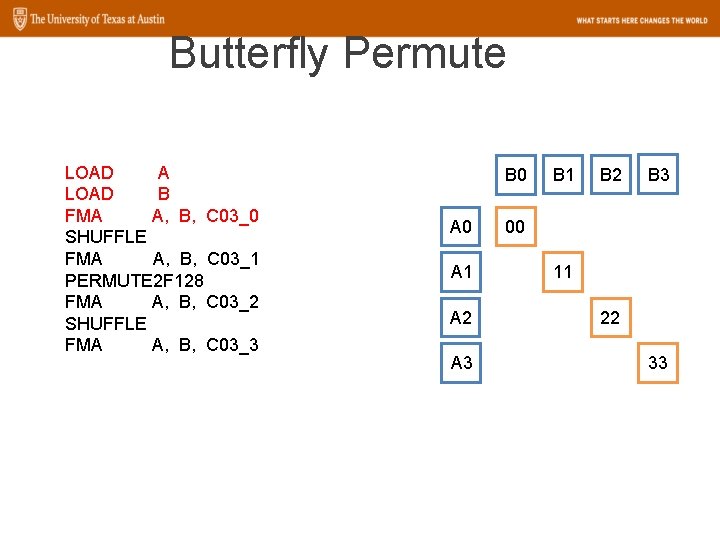

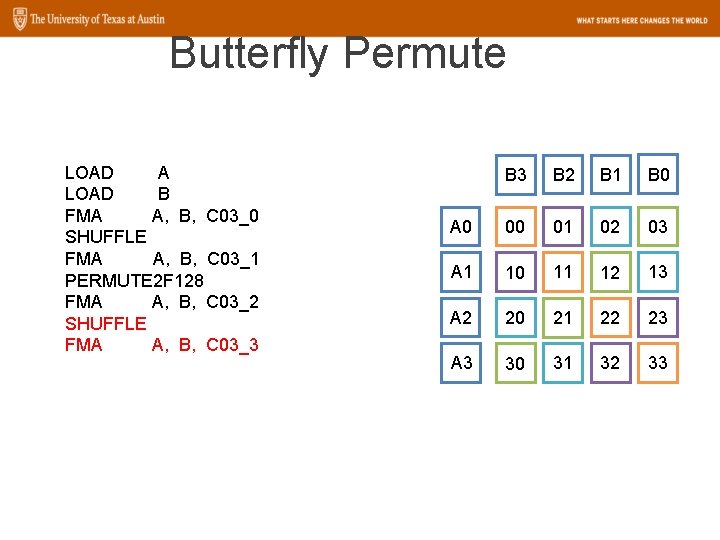

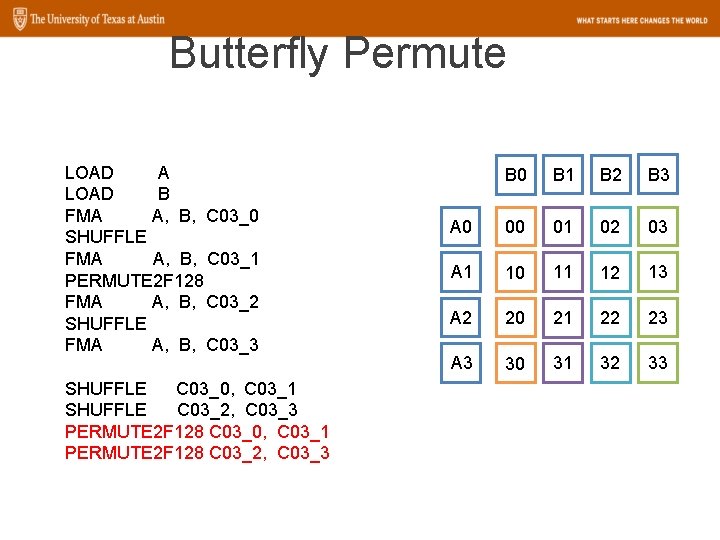

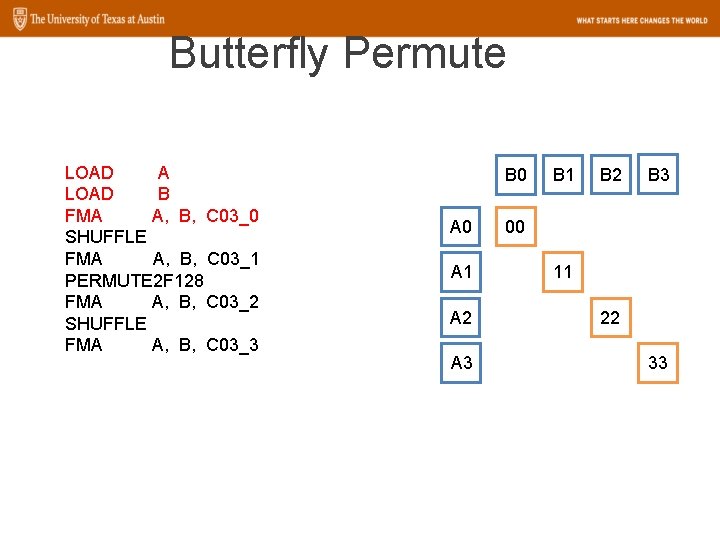

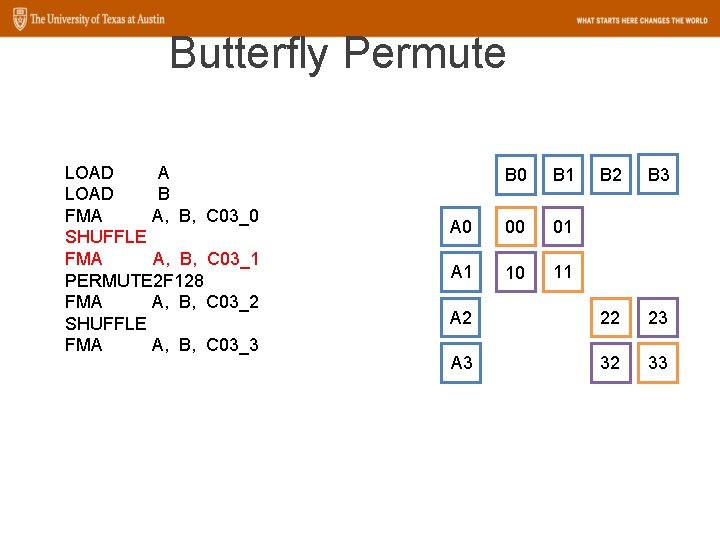

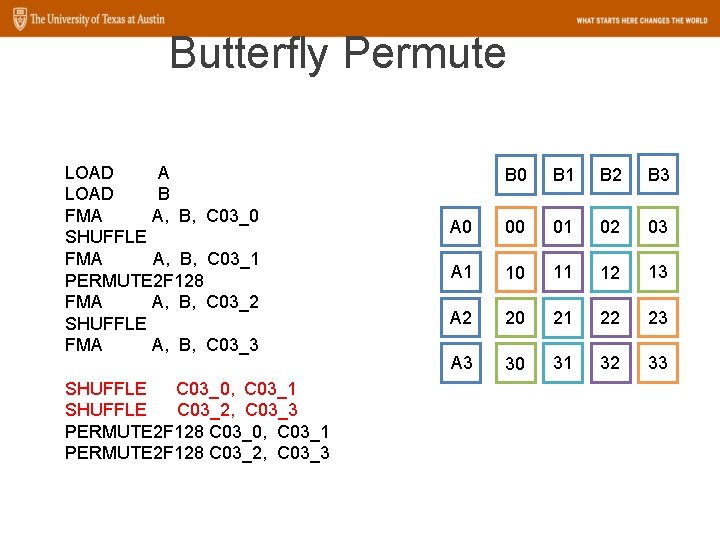

Butterfly Permute LOAD A LOAD B FMA A, B, C 03_0 SHUFFLE FMA A, B, C 03_1 PERMUTE 2 F 128 FMA A, B, C 03_2 SHUFFLE FMA A, B, C 03_3 B 0 A 1 A 2 A 3 B 1 B 2 B 3 00 11 22 33

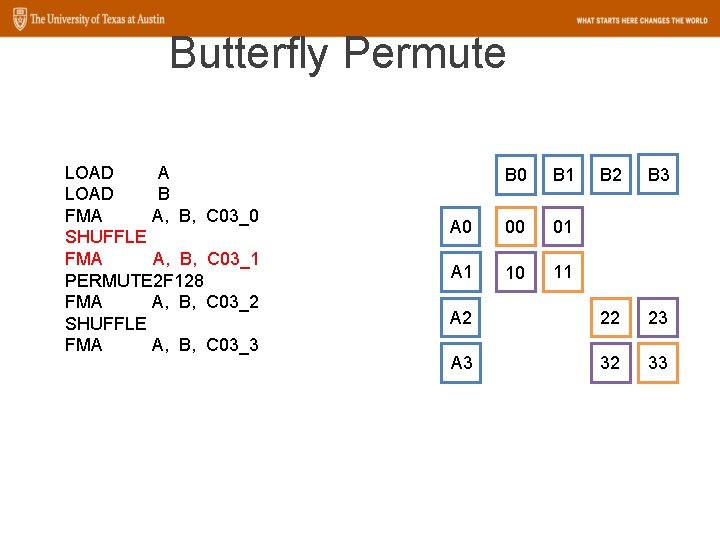

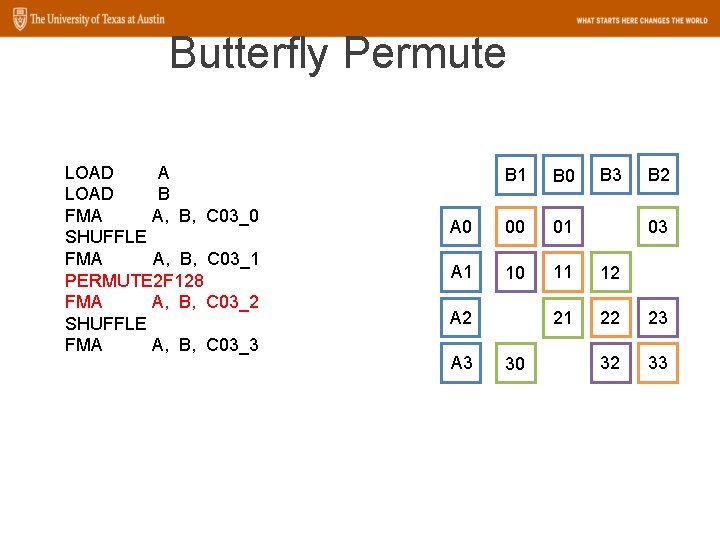

Butterfly Permute LOAD A LOAD B FMA A, B, C 03_0 SHUFFLE FMA A, B, C 03_1 PERMUTE 2 F 128 FMA A, B, C 03_2 SHUFFLE FMA A, B, C 03_3 B 2 B 3 A 2 22 23 A 3 32 33 B 0 B 1 A 0 00 01 A 1 10 11

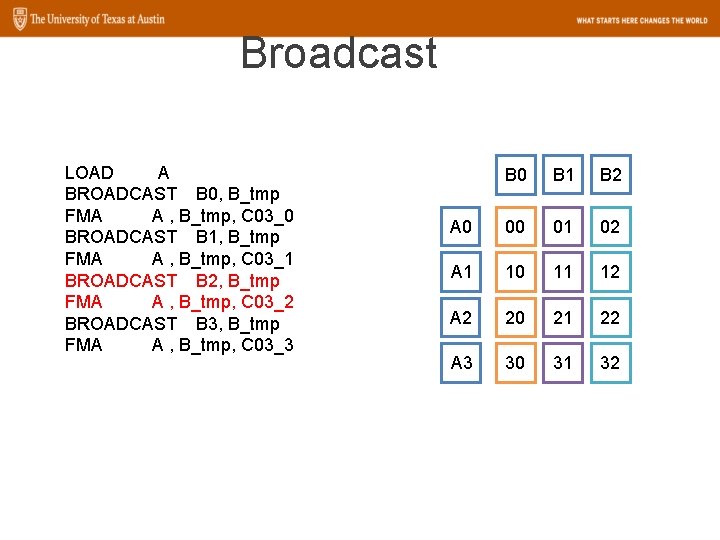

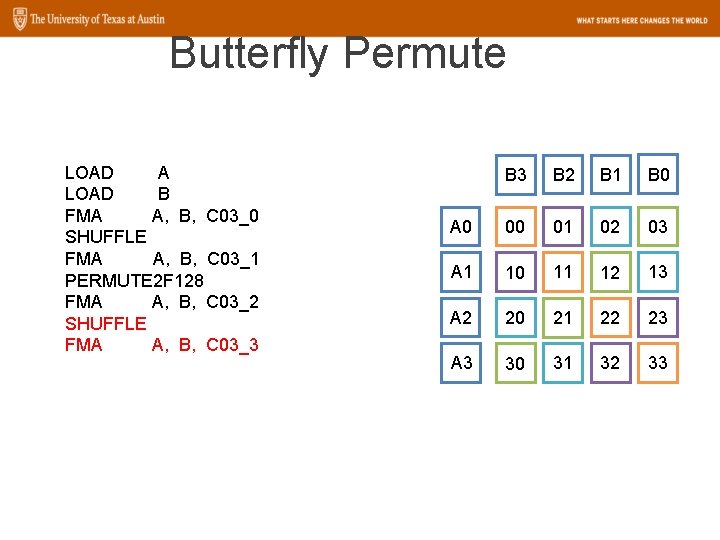

Butterfly Permute LOAD A LOAD B FMA A, B, C 03_0 SHUFFLE FMA A, B, C 03_1 PERMUTE 2 F 128 FMA A, B, C 03_2 SHUFFLE FMA A, B, C 03_3 B 2 B 1 B 0 A 0 00 01 A 1 10 11 12 21 22 23 32 33 A 2 A 3 30 03

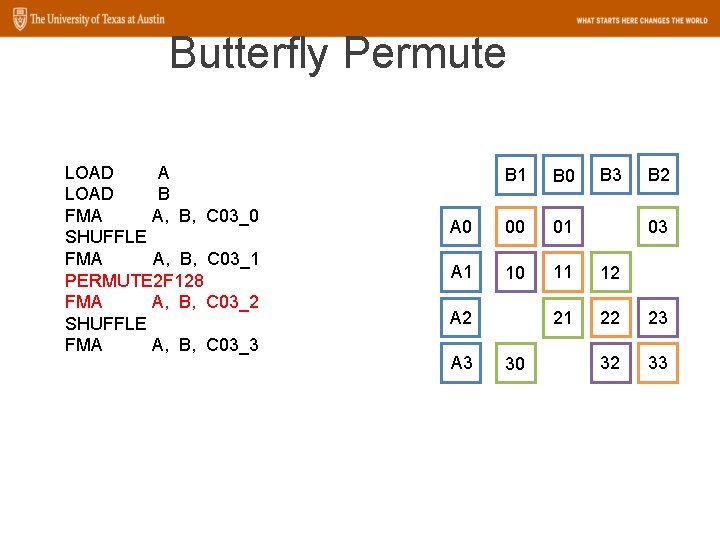

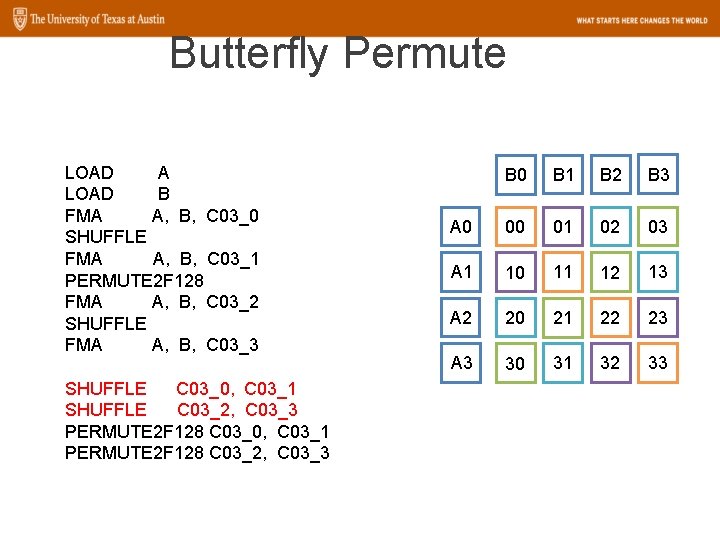

Butterfly Permute LOAD A LOAD B FMA A, B, C 03_0 SHUFFLE FMA A, B, C 03_1 PERMUTE 2 F 128 FMA A, B, C 03_2 SHUFFLE FMA A, B, C 03_3 B 2 B 1 B 0 A 0 00 01 02 03 A 1 10 11 12 13 A 2 20 21 22 23 A 3 30 31 32 33

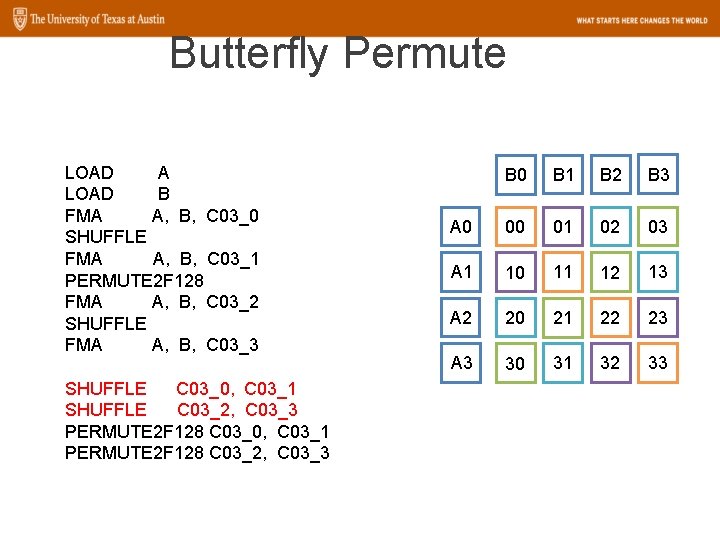

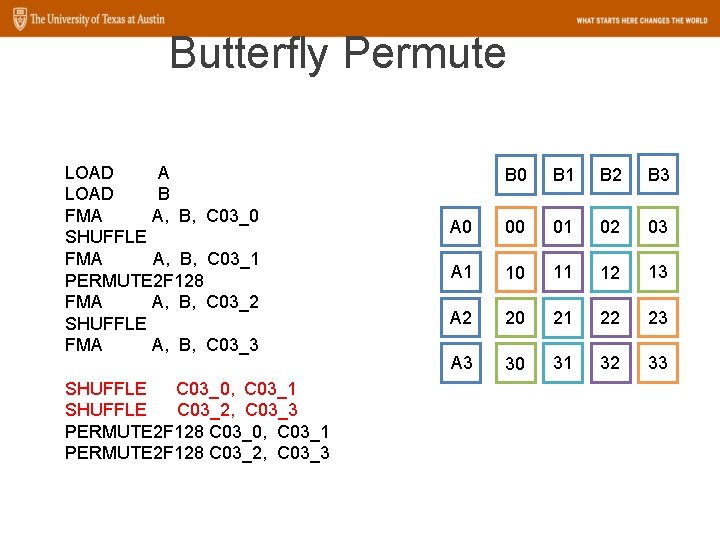

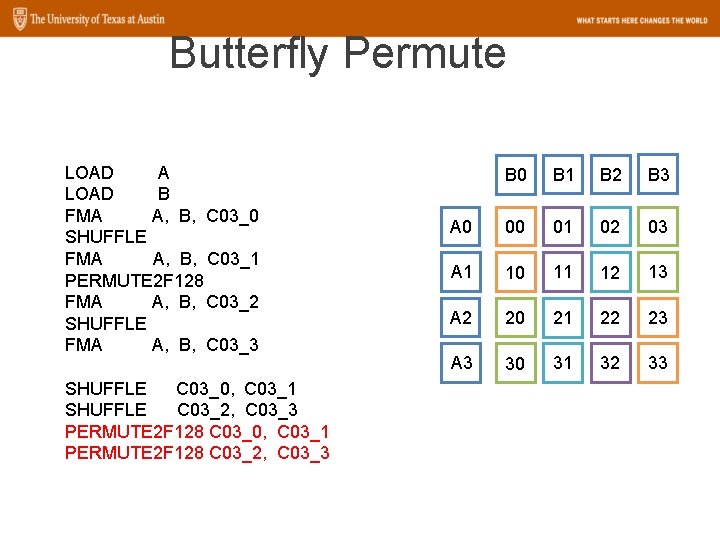

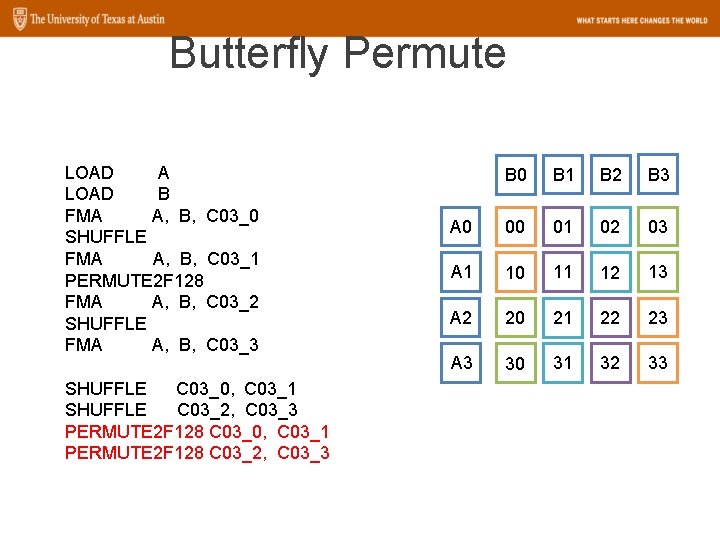

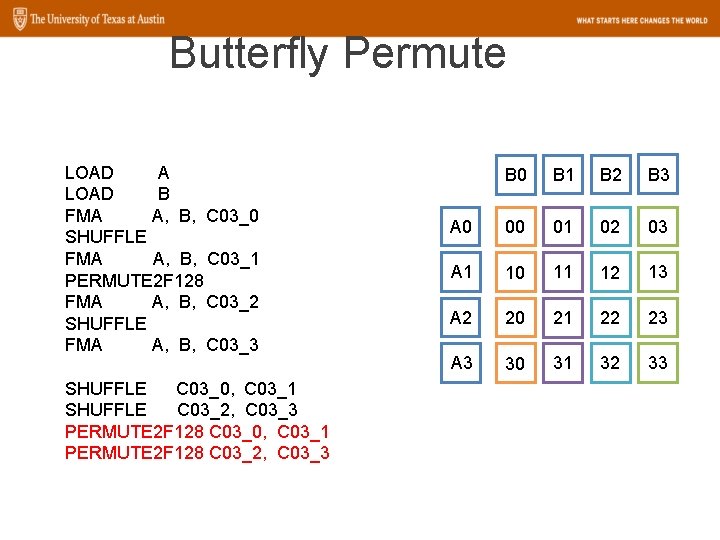

Butterfly Permute LOAD A LOAD B FMA A, B, C 03_0 SHUFFLE FMA A, B, C 03_1 PERMUTE 2 F 128 FMA A, B, C 03_2 SHUFFLE FMA A, B, C 03_3 SHUFFLE C 03_0, C 03_1 SHUFFLE C 03_2, C 03_3 PERMUTE 2 F 128 C 03_0, C 03_1 PERMUTE 2 F 128 C 03_2, C 03_3 B 0 B 1 B 2 B 3 A 0 00 01 02 03 A 1 10 11 12 13 A 2 20 21 22 23 A 3 30 31 32 33

Butterfly Permute LOAD A LOAD B FMA A, B, C 03_0 SHUFFLE FMA A, B, C 03_1 PERMUTE 2 F 128 FMA A, B, C 03_2 SHUFFLE FMA A, B, C 03_3 SHUFFLE C 03_0, C 03_1 SHUFFLE C 03_2, C 03_3 PERMUTE 2 F 128 C 03_0, C 03_1 PERMUTE 2 F 128 C 03_2, C 03_3 B 0 B 1 B 2 B 3 A 0 00 01 02 03 A 1 10 11 12 13 A 2 20 21 22 23 A 3 30 31 32 33

Butterfly Permute LOAD A LOAD B FMA A, B, C 03_0 SHUFFLE FMA A, B, C 03_1 PERMUTE 2 F 128 FMA A, B, C 03_2 SHUFFLE FMA A, B, C 03_3 SHUFFLE C 03_0, C 03_1 SHUFFLE C 03_2, C 03_3 PERMUTE 2 F 128 C 03_0, C 03_1 PERMUTE 2 F 128 C 03_2, C 03_3 B 0 B 1 B 2 B 3 A 0 00 01 02 03 A 1 10 11 12 13 A 2 20 21 22 23 A 3 30 31 32 33

Butterfly Permute LOAD A LOAD B FMA A, B, C 03_0 SHUFFLE FMA A, B, C 03_1 PERMUTE 2 F 128 FMA A, B, C 03_2 SHUFFLE FMA A, B, C 03_3 SHUFFLE C 03_0, C 03_1 SHUFFLE C 03_2, C 03_3 PERMUTE 2 F 128 C 03_0, C 03_1 PERMUTE 2 F 128 C 03_2, C 03_3 B 0 B 1 B 2 B 3 A 0 00 01 02 03 A 1 10 11 12 13 A 2 20 21 22 23 A 3 30 31 32 33

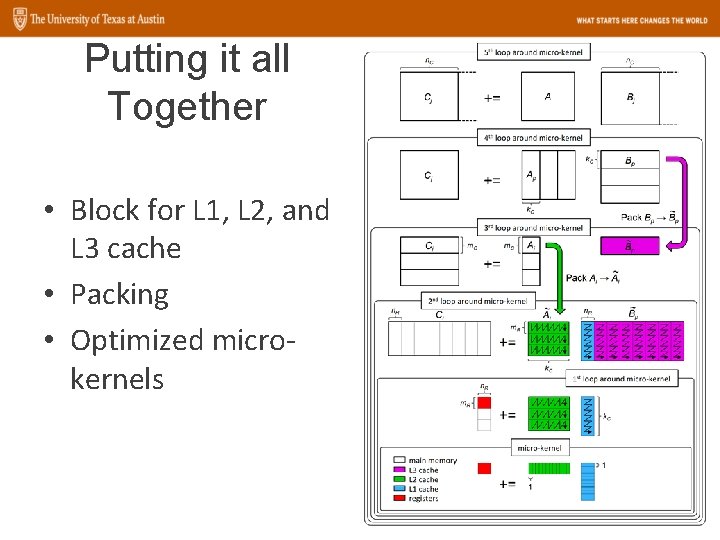

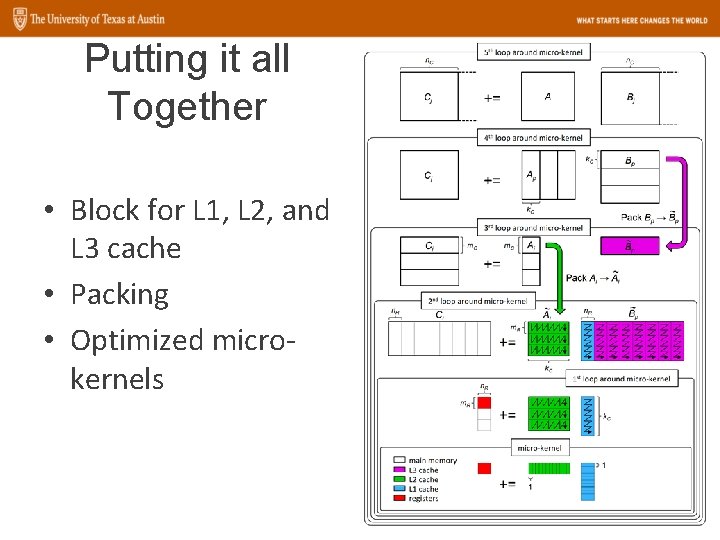

Putting it all Together • Block for L 1, L 2, and L 3 cache • Packing • Optimized microkernels 63

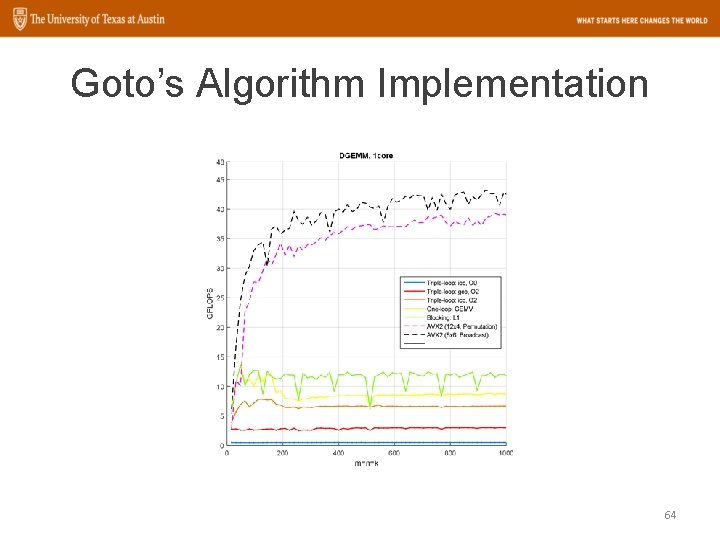

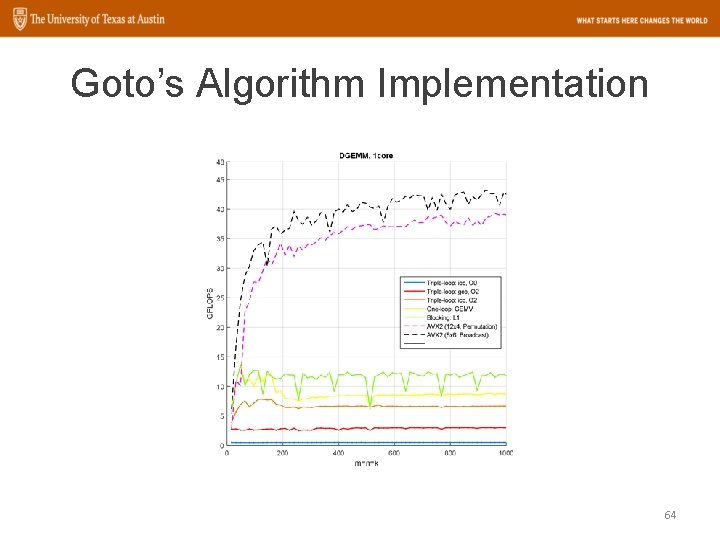

Goto’s Algorithm Implementation 64

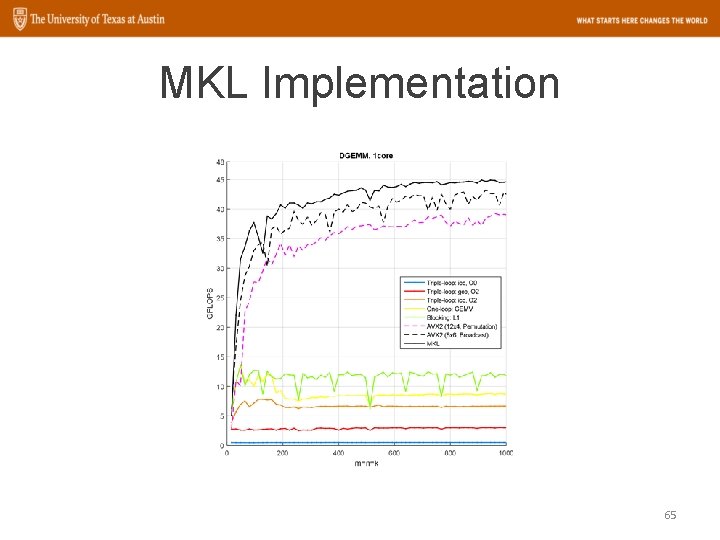

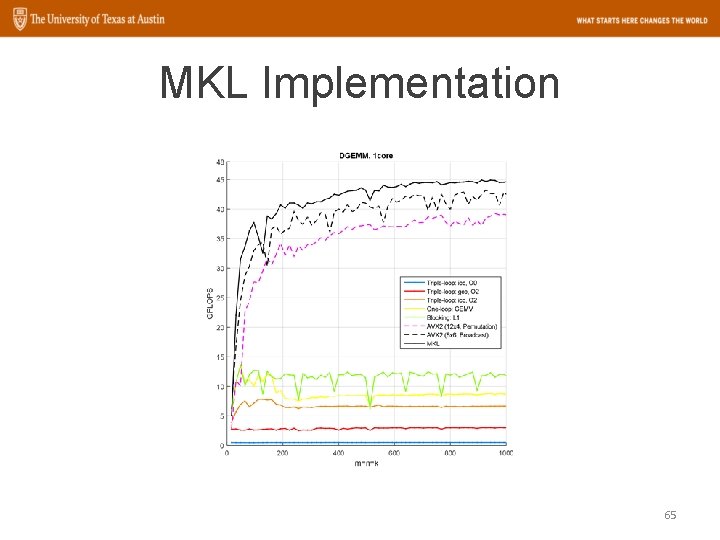

MKL Implementation 65

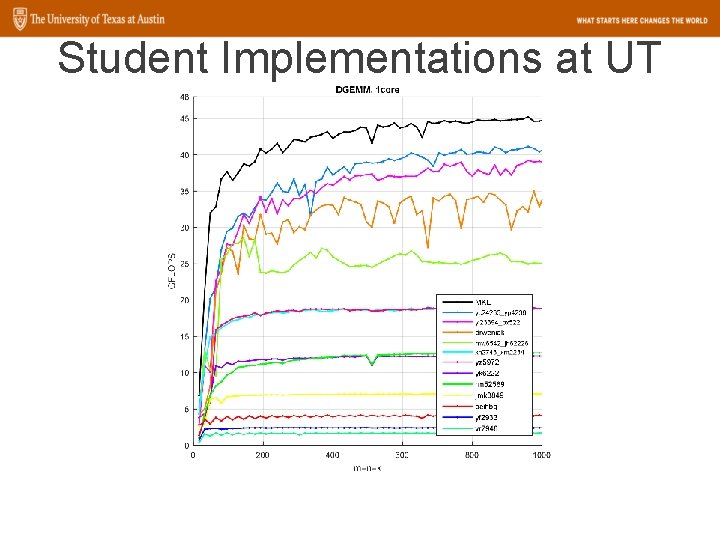

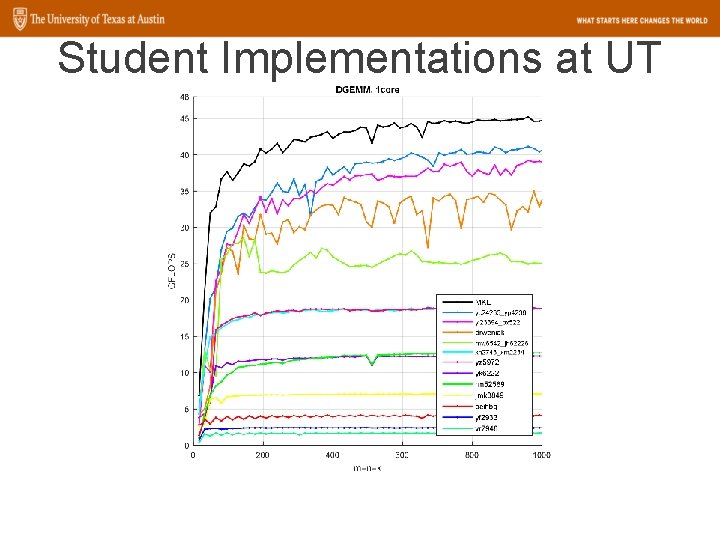

Student Implementations at UT

Thank You! • Tyler Smith – tms@cs. utexas. edu • Jianyu Huang – jianyu@cs. utexas. edu • Get BLIS: – github. com/flame/blis 67