Get To The Point: Summarization with Pointer-Generator Networks

- Slides: 29

Get To The Point: Summarization with Pointer-Generator Networks • Abigail See, Peter J. Liu, Christopher D. Manning • Presented by: Matan Eyal

Agenda • Introduction • Word Embeddings • RNNs • Sequence-to-Sequence • Attention • Pointer Networks • Coverage Mechanism

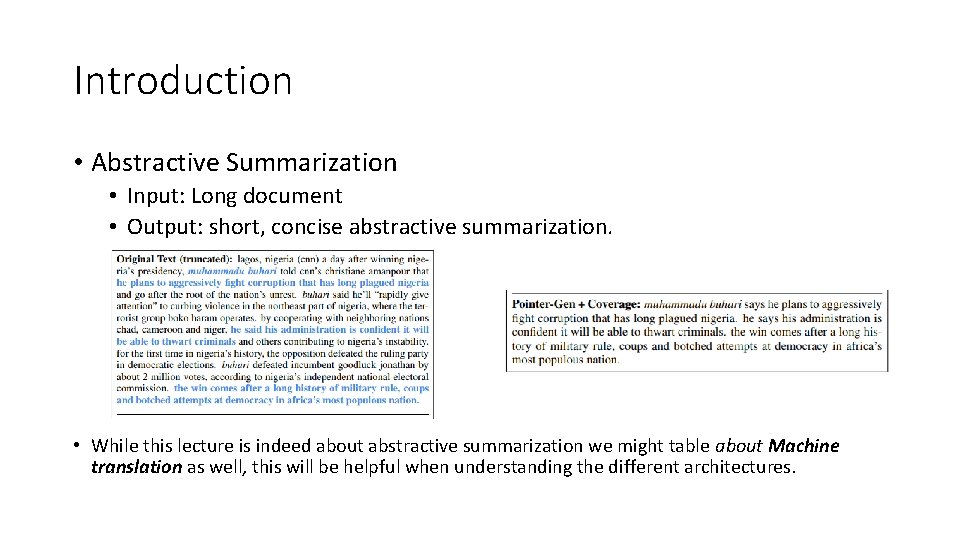

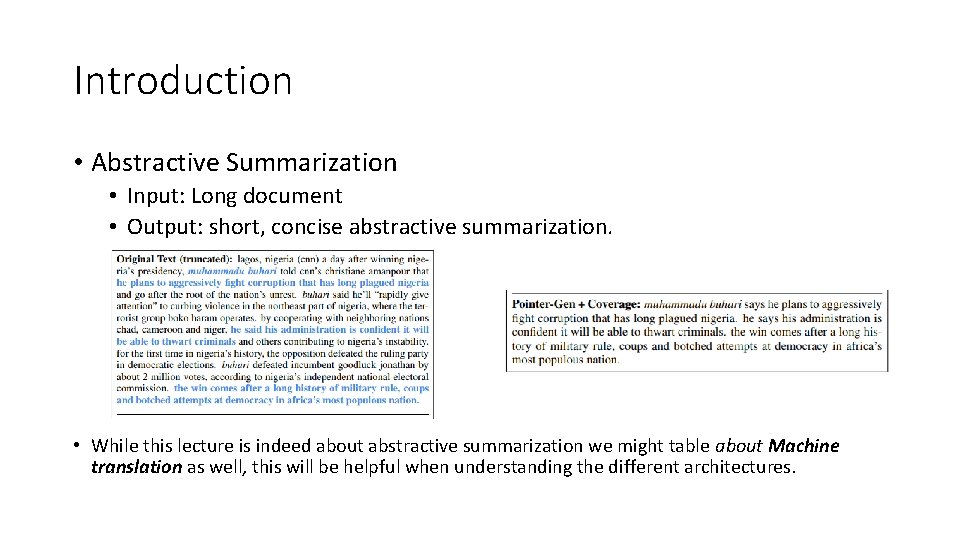

Introduction • Abstractive Summarization • Input: long document • Output: short, concise abstractive summary. • Although this lecture is about abstractive summarization, we will also talk about machine translation, which helps in understanding the different architectures.

Word embeddings • Deep Learning Architectures are incapable of processing strings or plain text in their raw form. • They require numbers as inputs to perform any sort of job. • How can we encode words?

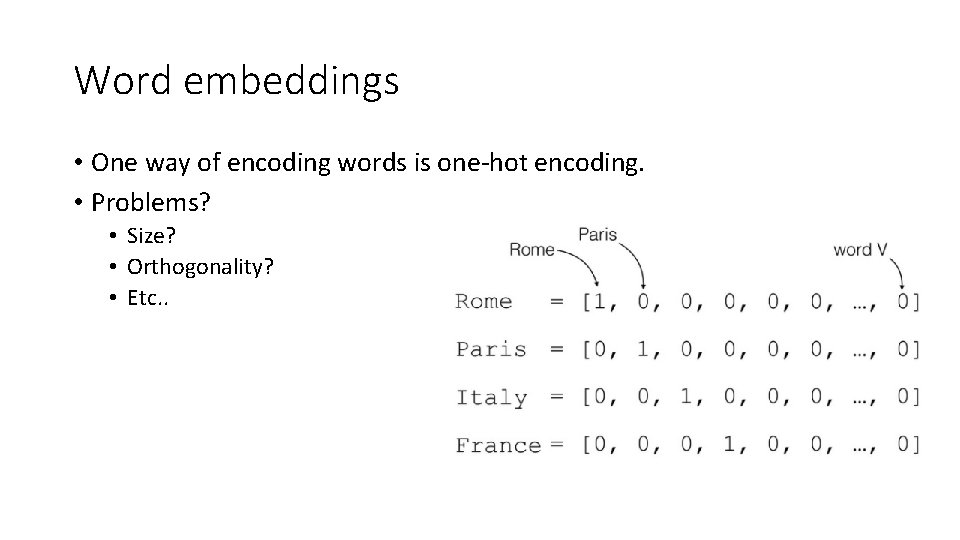

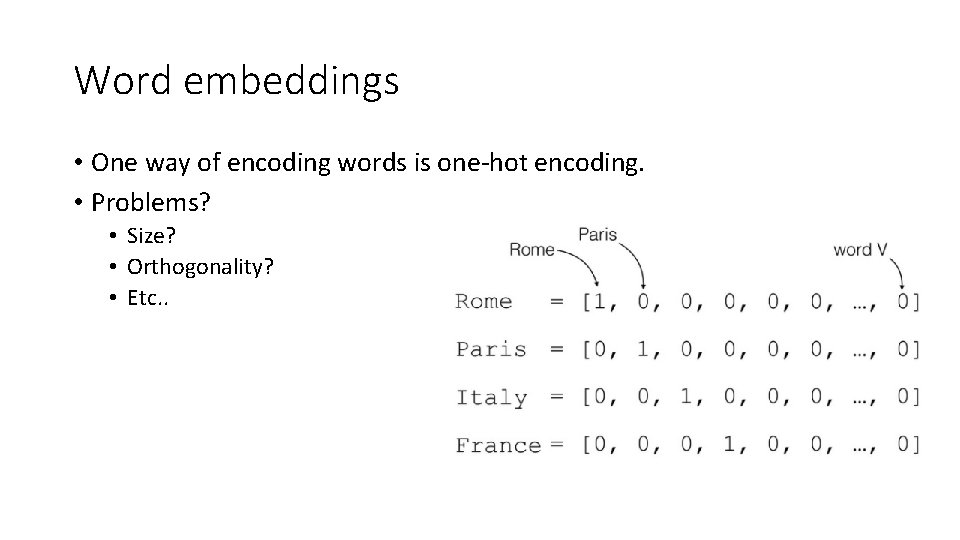

Word embeddings • One way of encoding words is one-hot encoding. • Problems? • Size? • Orthogonality? • Etc.
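The two problems named on this slide can be seen in a minimal sketch. The four-word vocabulary and the helper names `one_hot` and `dot` are assumptions made for illustration, not something taken from the slides.

```python
# Toy vocabulary (hypothetical, for illustration only).
vocab = ["cat", "dog", "car", "truck"]
index = {word: i for i, word in enumerate(vocab)}

def one_hot(word):
    """Return a vector with a single 1 at the word's vocabulary index."""
    vec = [0] * len(vocab)
    vec[index[word]] = 1
    return vec

def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

cat, dog, car = one_hot("cat"), one_hot("dog"), one_hot("car")

# Size: each vector is as long as the whole vocabulary.
vector_length = len(cat)

# Orthogonality: any two distinct words have dot product 0,
# so "cat" is exactly as unrelated to "dog" as it is to "car".
cat_dog = dot(cat, dog)
cat_car = dot(cat, car)
```

With a realistic vocabulary of tens of thousands of words, every vector grows to that length, and the encoding still carries no notion of similarity.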

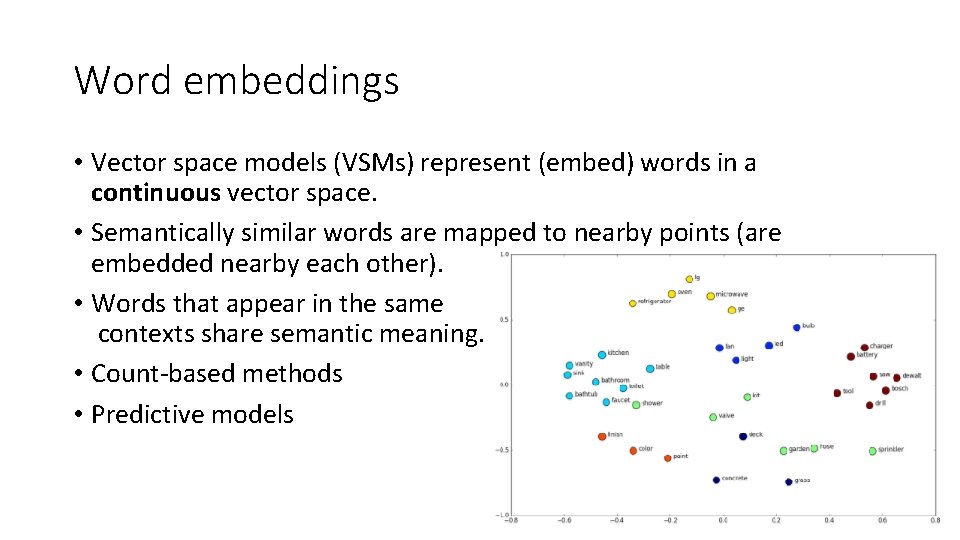

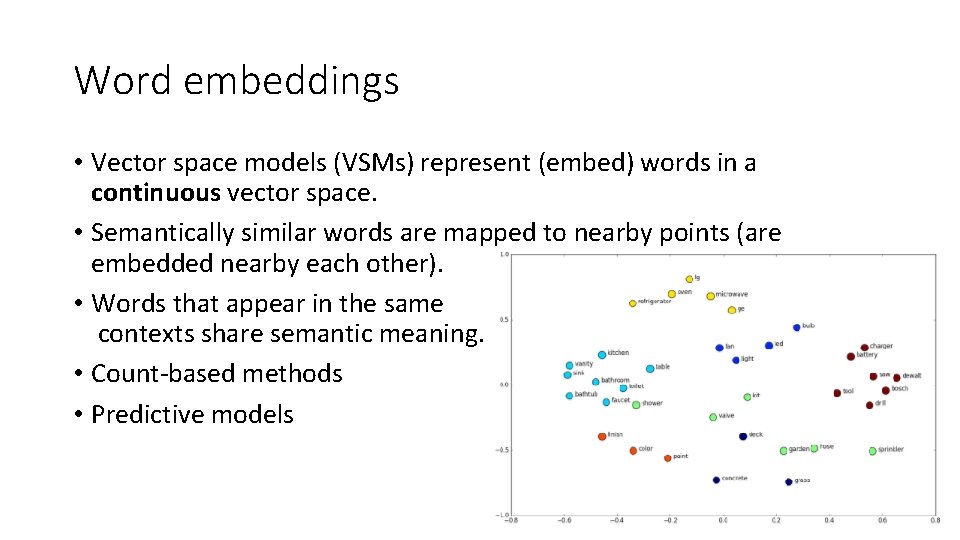

Word embeddings • Vector space models (VSMs) represent (embed) words in a continuous vector space. • Semantically similar words are mapped to nearby points (embedded near each other). • Words that appear in the same contexts tend to share semantic meaning. • Count-based methods • Predictive models
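A small sketch of what "nearby points" means in practice, using cosine similarity as the closeness measure. The three-dimensional vectors below are made-up values chosen for illustration; real embeddings are learned and usually have hundreds of dimensions.

```python
import math

# Hypothetical embeddings, hand-picked so that "cat" and "dog" point
# in a similar direction while "car" points elsewhere.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

def cosine(u, v):
    """Cosine similarity: dot product divided by the product of norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

sim_cat_dog = cosine(embeddings["cat"], embeddings["dog"])
sim_cat_car = cosine(embeddings["cat"], embeddings["car"])
# Under these toy values, sim_cat_dog is much larger than sim_cat_car.
```

Unlike one-hot vectors, dense embeddings allow graded similarity between words.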

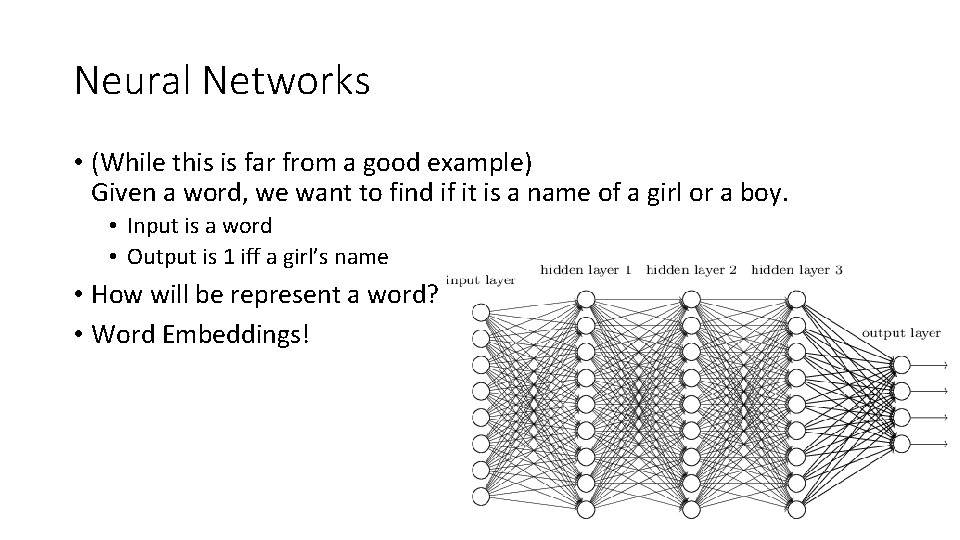

Neural Networks • (While this is far from a good example) Given a word, we want to decide whether it is a girl's name or a boy's name. • Input is a word • Output is 1 iff it is a girl's name • How will we represent a word? • Word Embeddings!

I understand Word Embeddings perfectly fine, what does it have to do with summarization? Tomas Mikolov

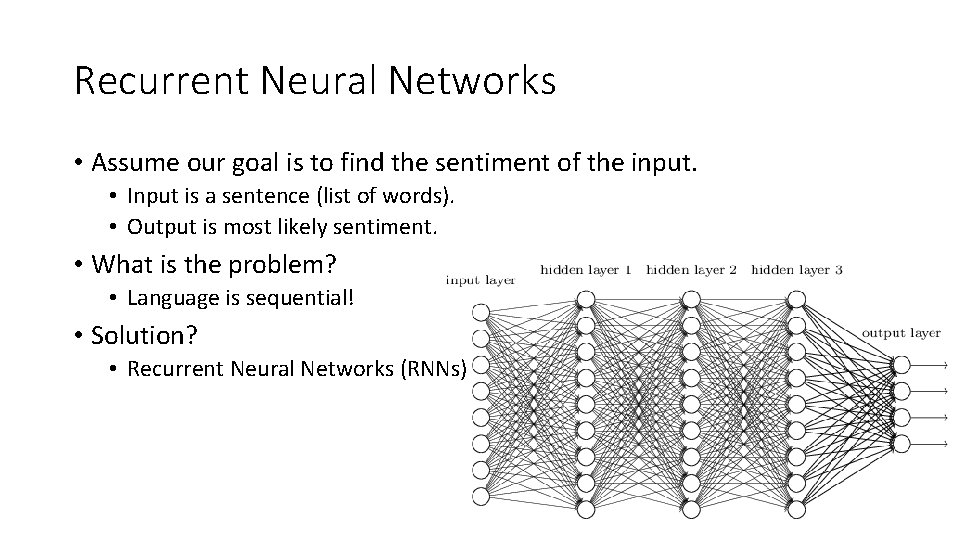

Recurrent Neural Networks • Assume our goal is to find the sentiment of the input. • Input is a sentence (list of words). • Output is most likely sentiment. • What is the problem? • Language is sequential! • Solution? • Recurrent Neural Networks (RNNs)

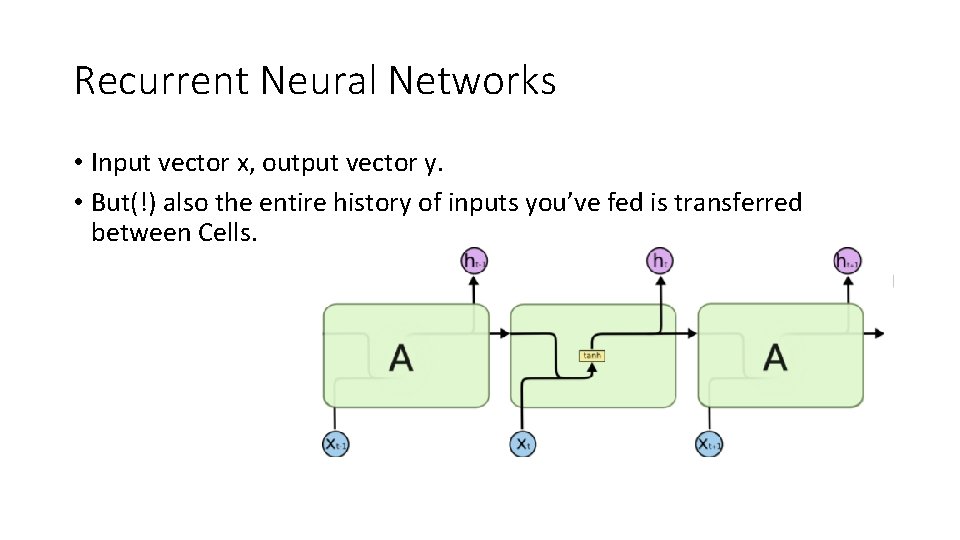

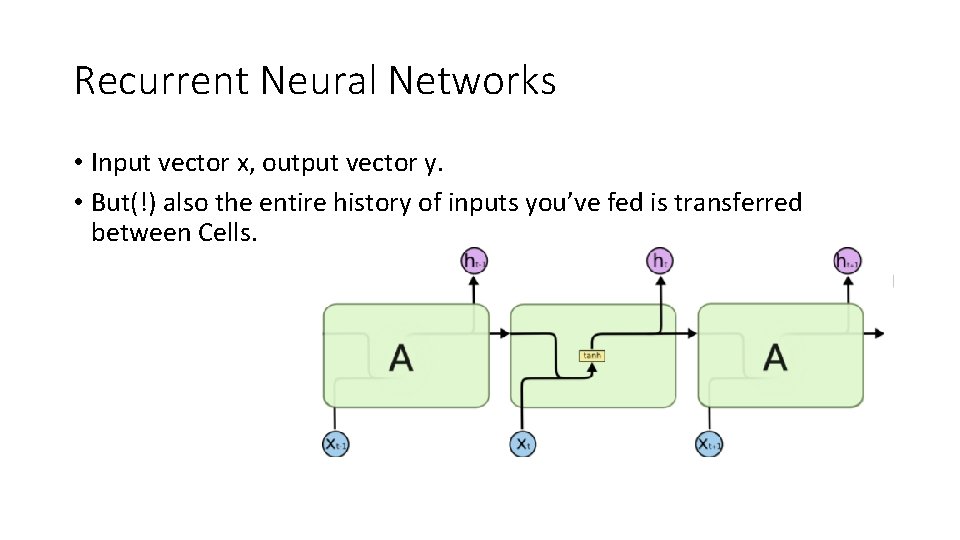

Recurrent Neural Networks • Input vector x, output vector y. • But(!) a hidden state summarizing the entire history of inputs fed so far is also passed between cells.
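The idea of history being carried between cells can be sketched with a vanilla (Elman-style) RNN step. The weight values, dimensions, and the names `rnn_step` and `run` are assumptions for illustration; a trained network would learn the weights, and the slides' figures may show a different cell.

```python
import math

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rnn_step(x, h_prev, W_xh, W_hh, b):
    """One vanilla RNN cell: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    from_input = matvec(W_xh, x)
    from_history = matvec(W_hh, h_prev)
    return [math.tanh(i + r + bias)
            for i, r, bias in zip(from_input, from_history, b)]

# Hypothetical fixed weights: 2-d inputs, 2-d hidden state.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b = [0.0, 0.0]

def run(sequence):
    """Feed a sequence through the cell, carrying the hidden state along."""
    h = [0.0, 0.0]
    for x in sequence:
        h = rnn_step(x, h, W_xh, W_hh, b)
    return h

word_a, word_b = [1.0, 0.0], [0.0, 1.0]
h_ab = run([word_a, word_b])
h_ba = run([word_b, word_a])
# The same two inputs in a different order give a different final state,
# because each step depends on what came before.
```

For a task like sentiment analysis, the final hidden state would typically be fed to a classifier.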

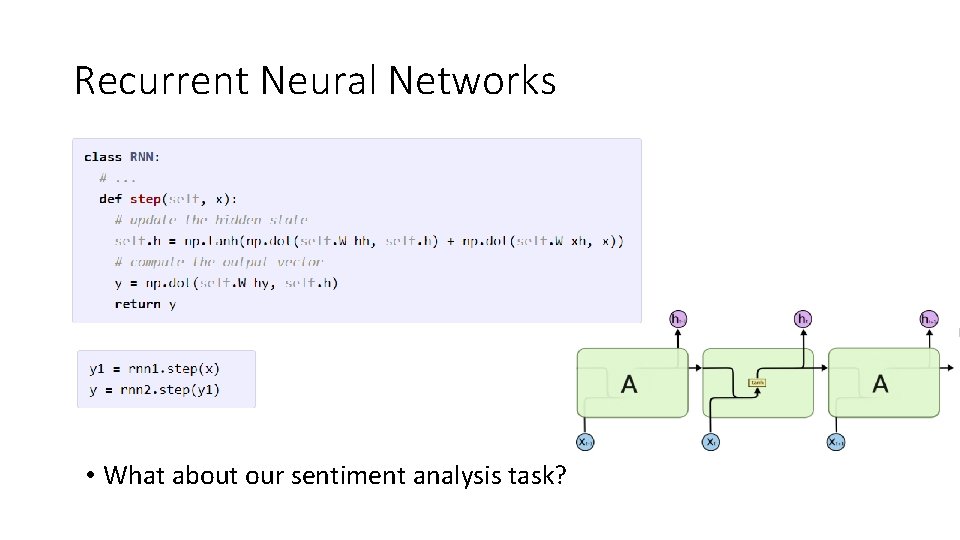

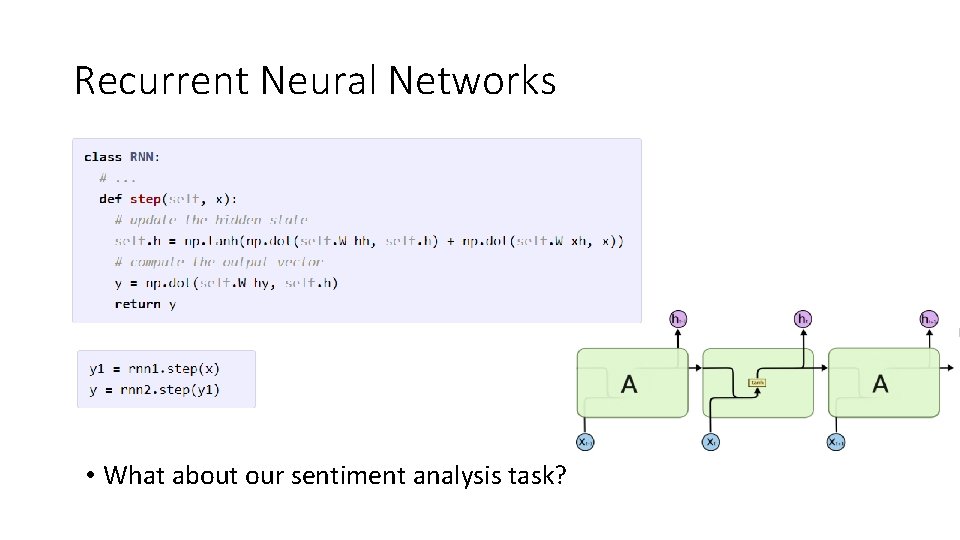

Recurrent Neural Networks • What about our sentiment analysis task?

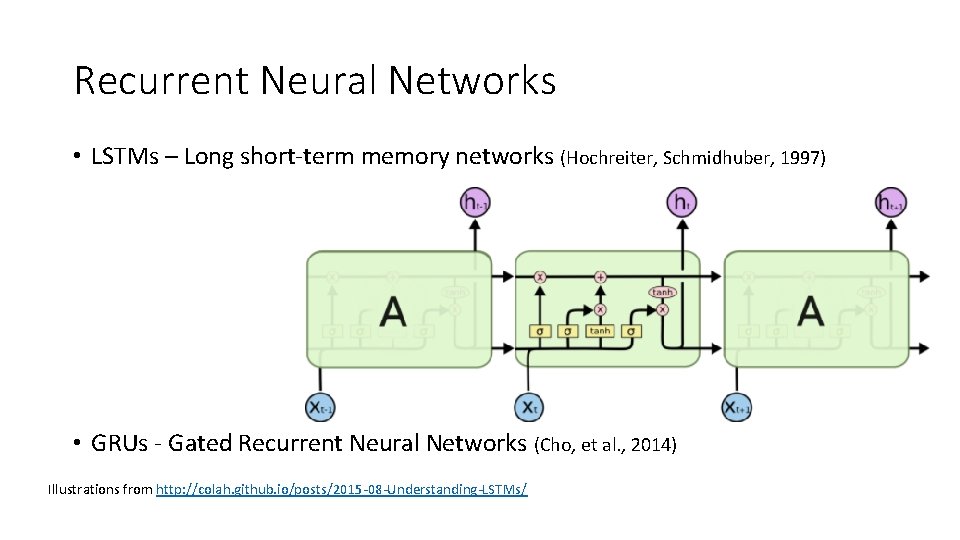

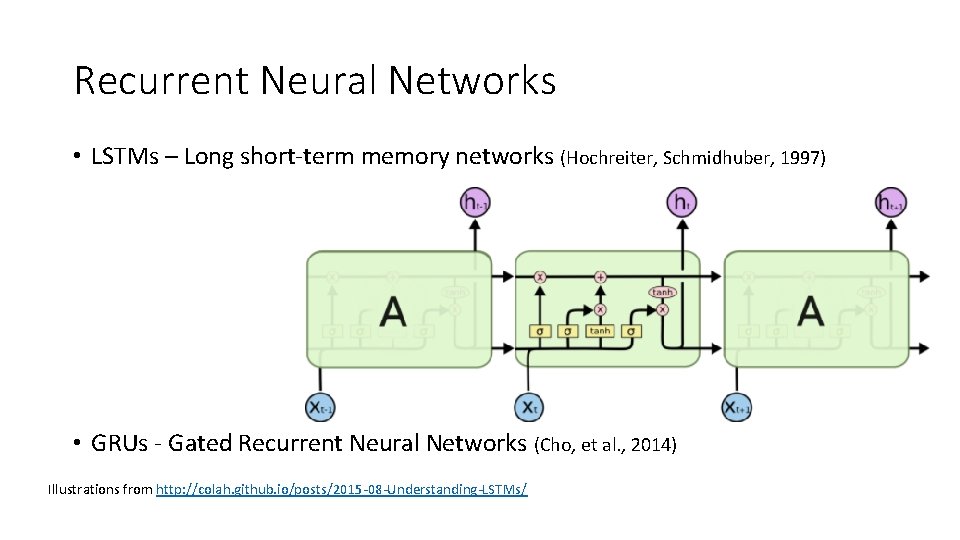

Recurrent Neural Networks • LSTMs: Long Short-Term Memory networks (Hochreiter and Schmidhuber, 1997) • GRUs: Gated Recurrent Units (Cho et al., 2014) • Illustrations from http://colah.github.io/posts/2015-08-Understanding-LSTMs/

Oh, awesome! What can I do with this? Jürgen Schmidhuber

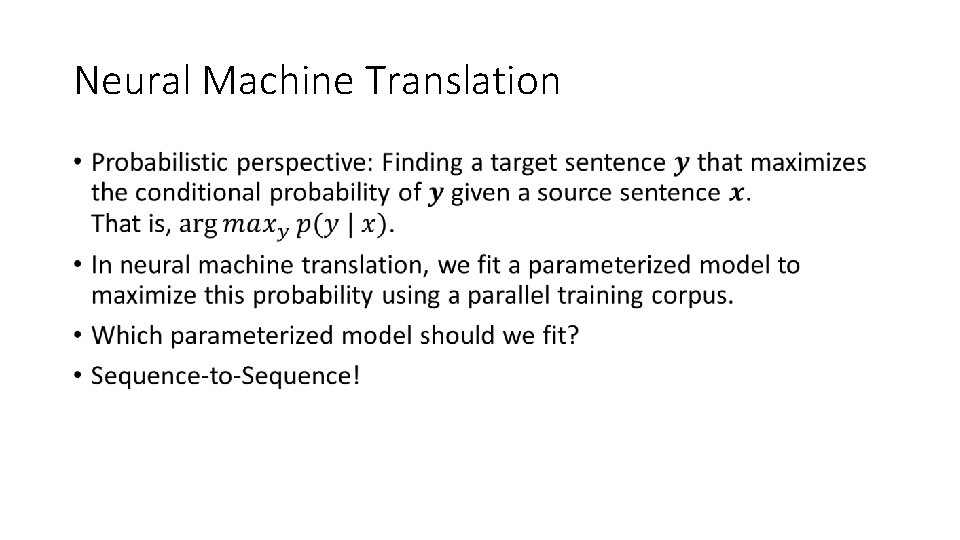

Neural Machine Translation

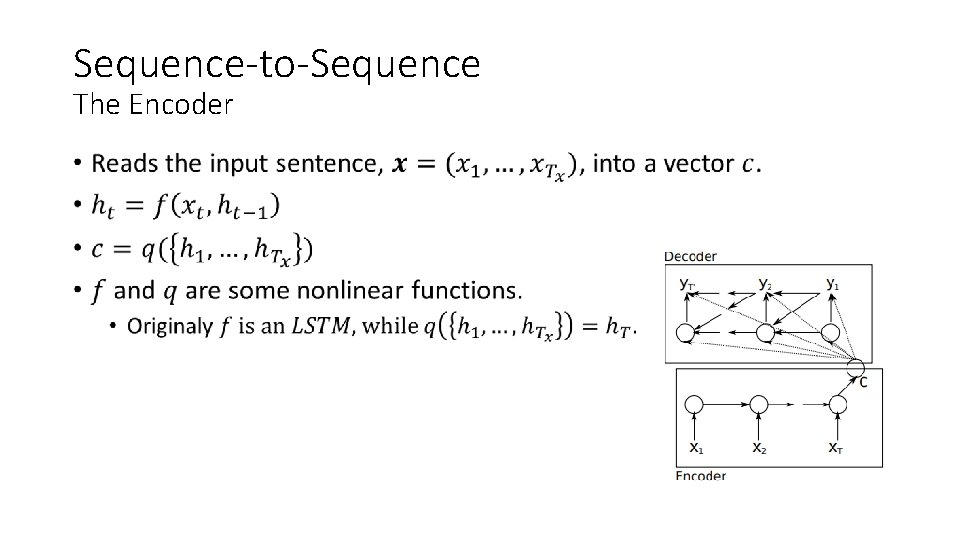

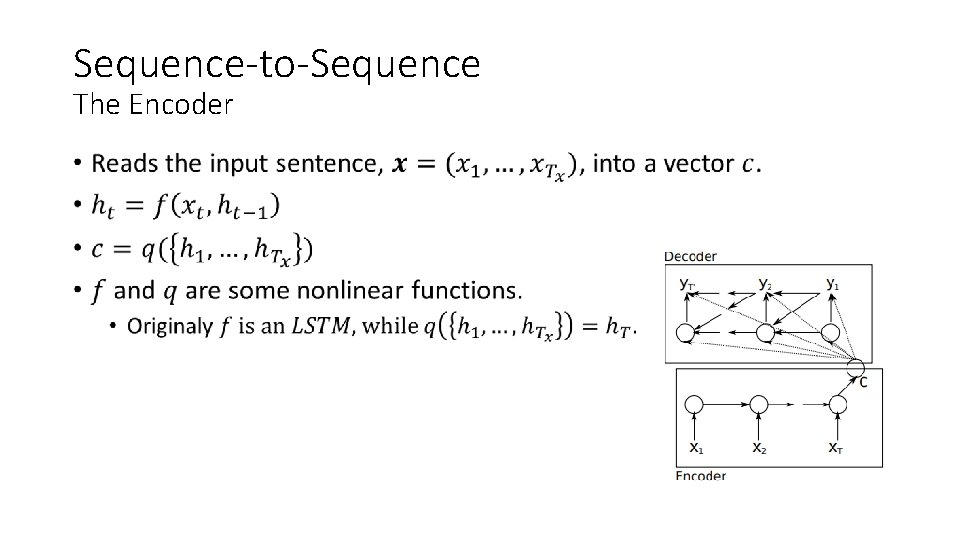

Sequence-to-Sequence: The Encoder

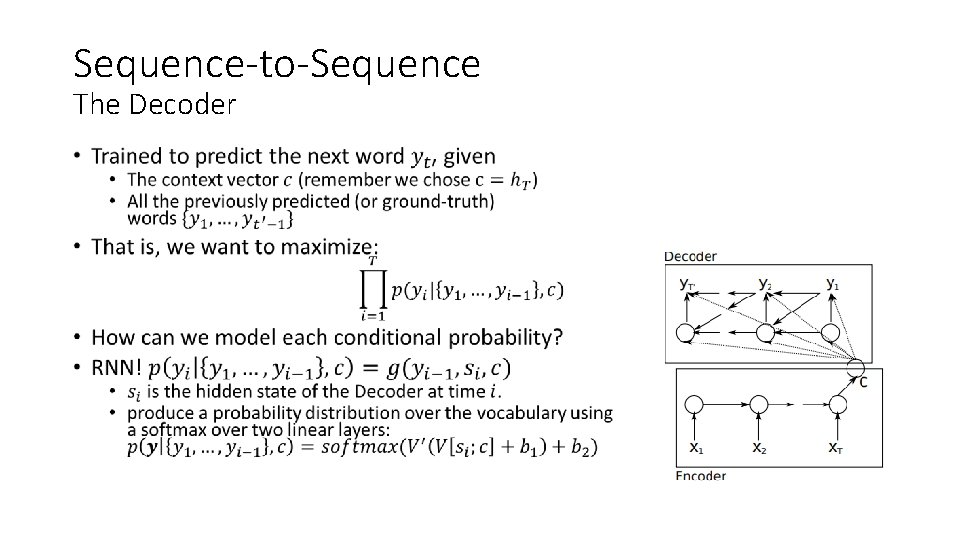

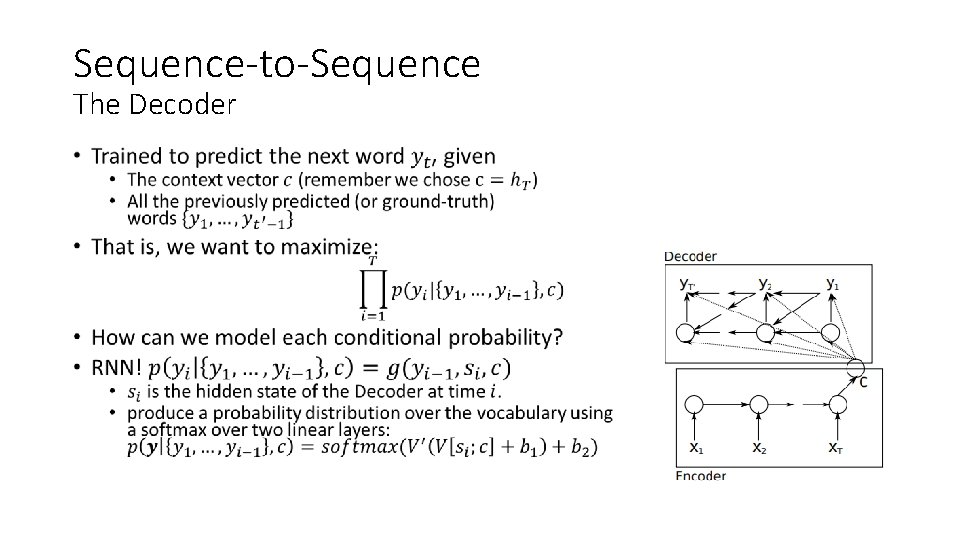

Sequence-to-Sequence: The Decoder

The concept of attention is the most interesting recent architectural innovation in neural networks. (Andrej Karpathy)

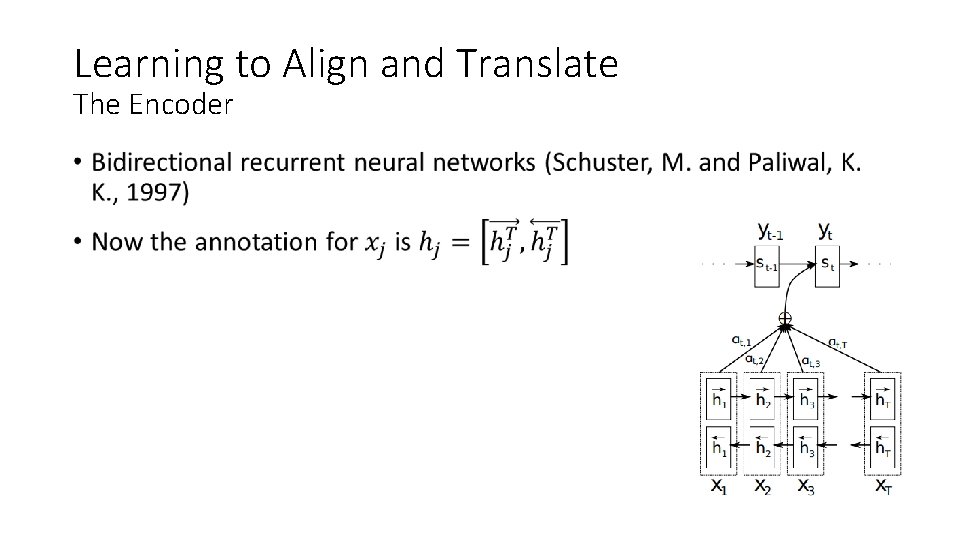

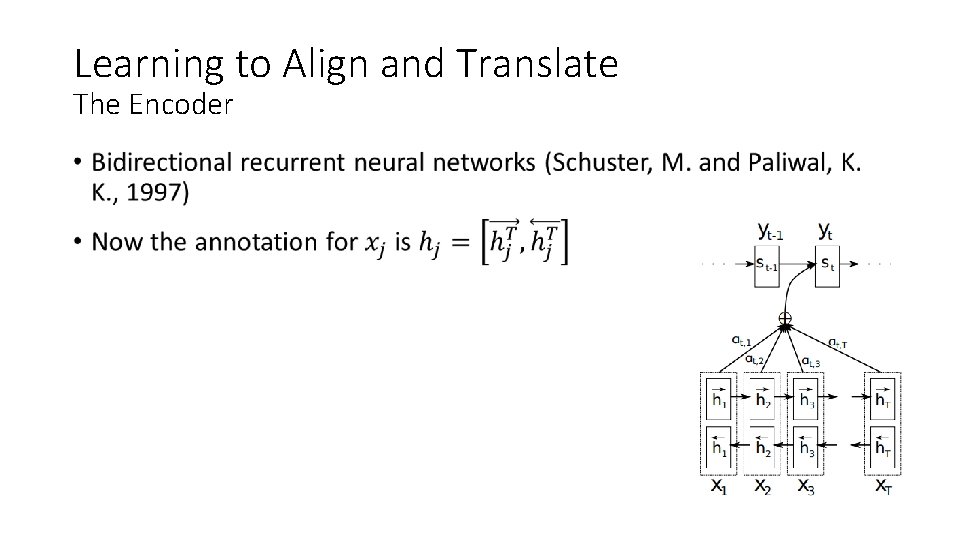

Learning to Align and Translate: The Encoder

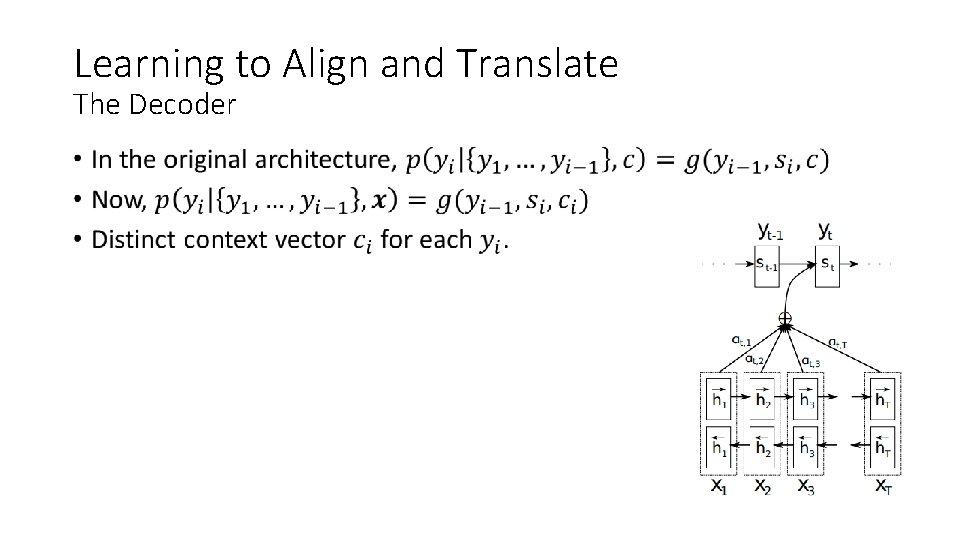

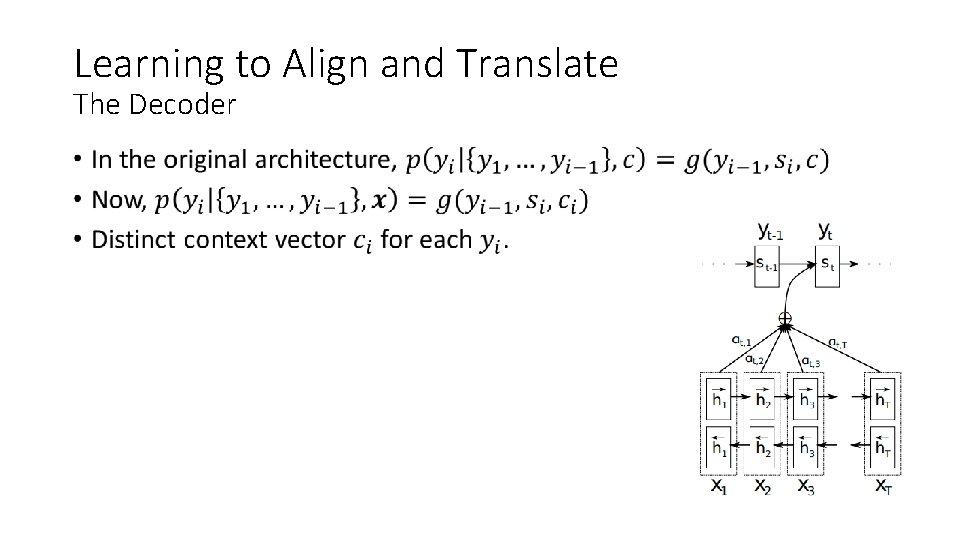

Learning to Align and Translate: The Decoder

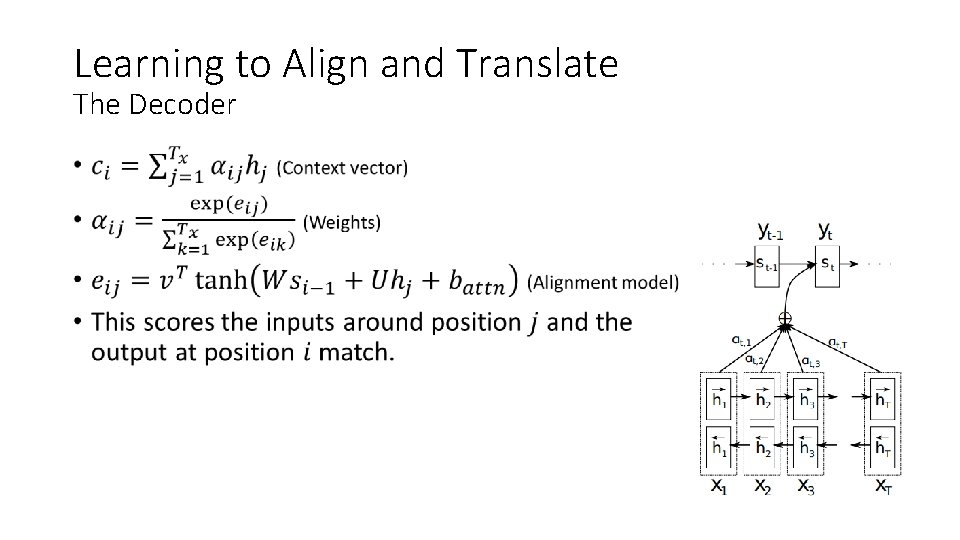

Learning to Align and Translate: The Decoder

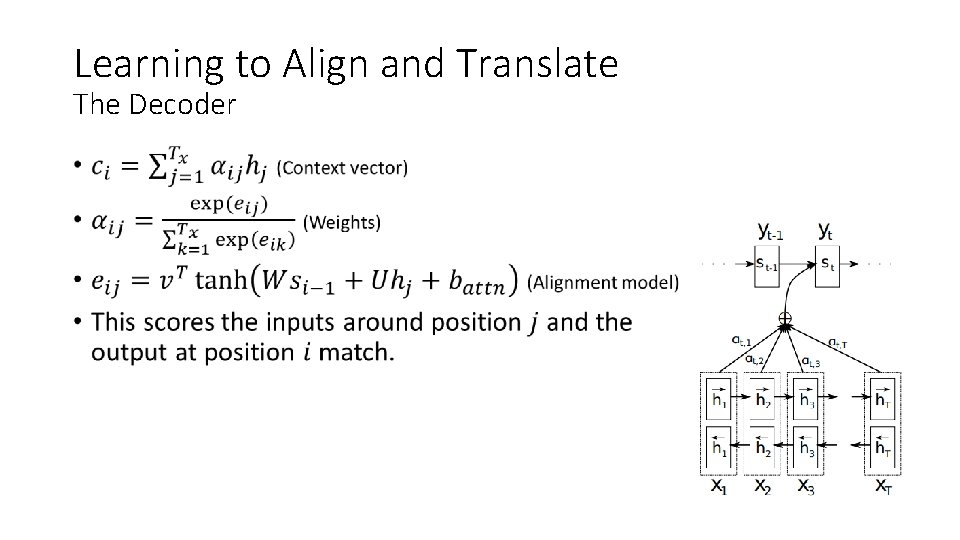

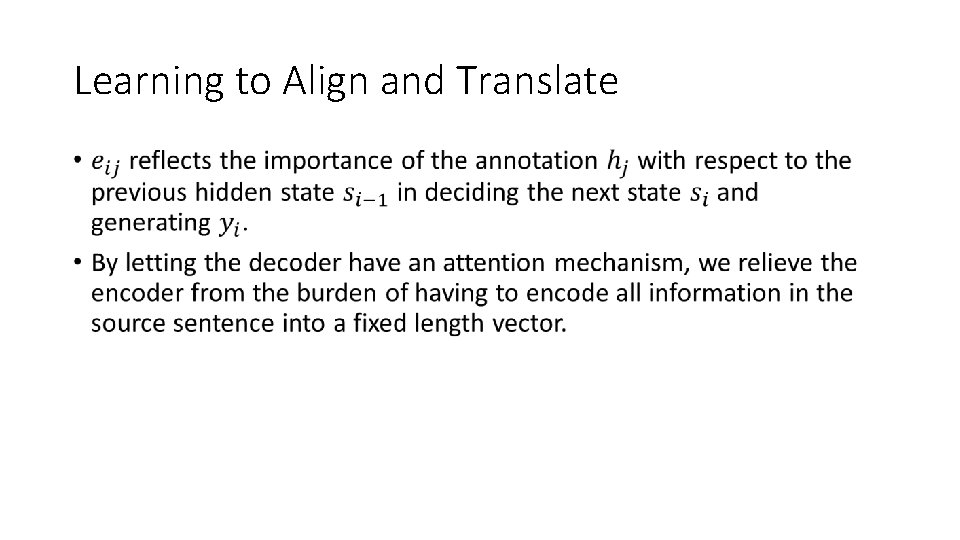

Learning to Align and Translate

Learning to Align and Translate
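The slide content here did not survive extraction, so the following is only a sketch of additive attention in the style associated with "learning to align and translate": score each encoder state against the current decoder state, normalize the scores with a softmax, and take the weighted sum as the context vector. All weights, dimensions, and function names below are assumptions for illustration.

```python
import math

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def additive_attention(enc_states, dec_state, W_h, W_s, v):
    """Score e_i = v . tanh(W_h h_i + W_s s), then a = softmax(e)."""
    projected_dec = matvec(W_s, dec_state)
    scores = []
    for h in enc_states:
        hidden = [math.tanh(a + b)
                  for a, b in zip(matvec(W_h, h), projected_dec)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    weights = softmax(scores)
    dim = len(enc_states[0])
    # Context vector: attention-weighted sum of the encoder states.
    context = [sum(w * h[k] for w, h in zip(weights, enc_states))
               for k in range(dim)]
    return weights, context

# Hypothetical encoder states (one per source word) and decoder state.
enc_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
dec_state = [0.5, -0.5]
W_h = [[1.0, 0.0], [0.0, 1.0]]
W_s = [[1.0, 0.0], [0.0, 1.0]]
v = [1.0, 1.0]

weights, context = additive_attention(enc_states, dec_state, W_h, W_s, v)
```

The weights form a probability distribution over source positions, which is what later lets a pointer mechanism reuse them as a copy distribution.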

Summarization is also a mapping from input sequence to a (shorter) output sequence Alexander Rush

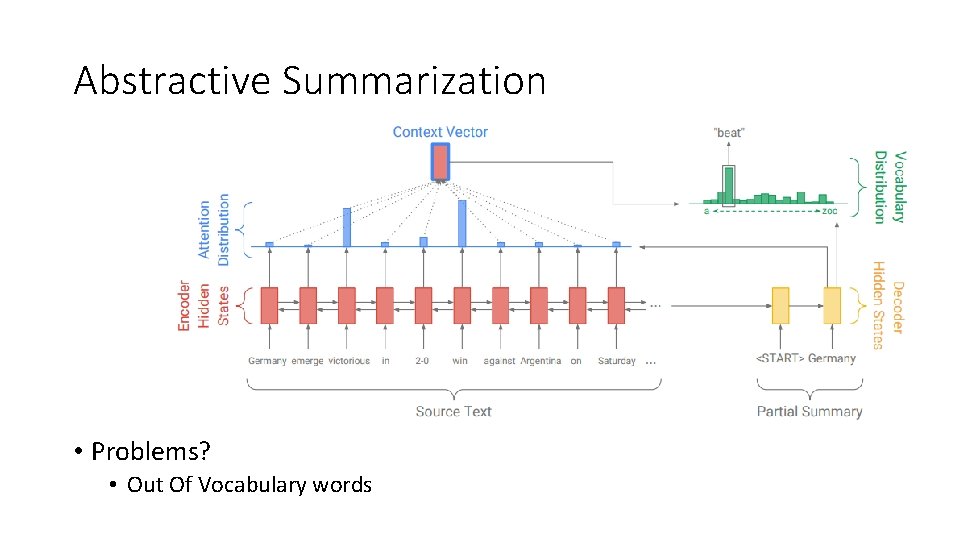

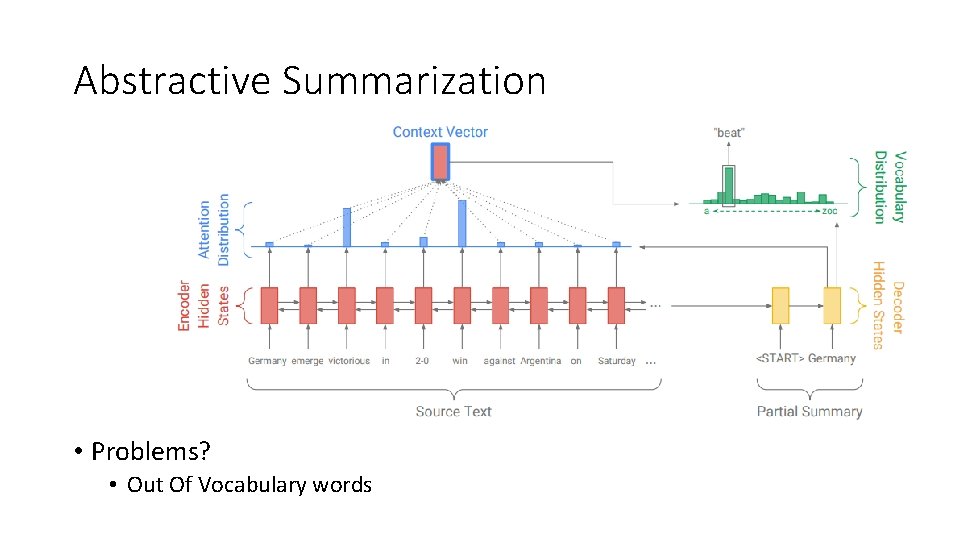

Abstractive Summarization • Problems? • Out Of Vocabulary words

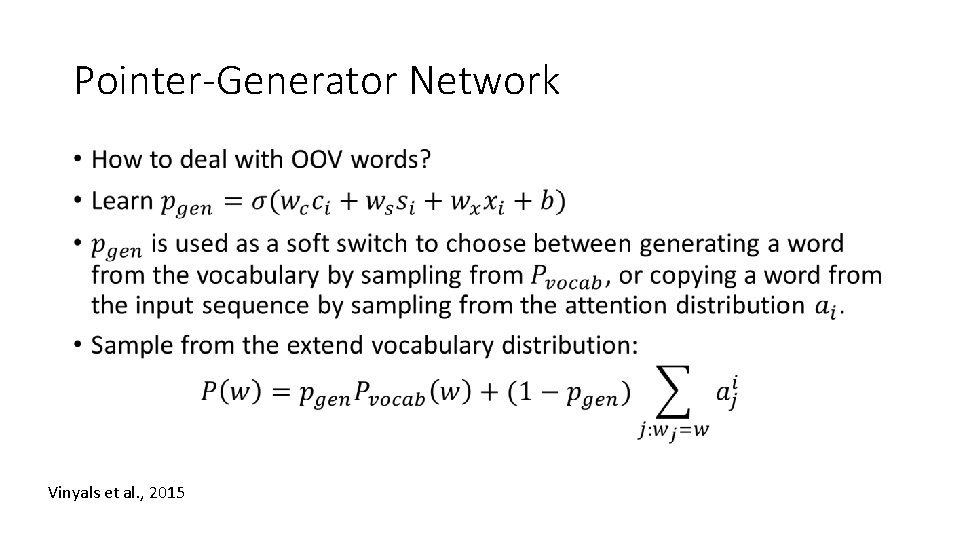

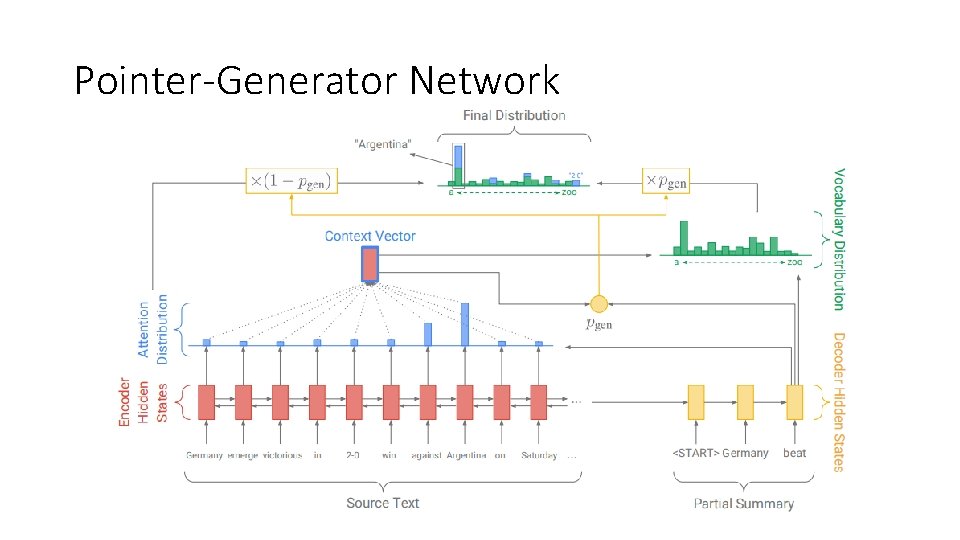

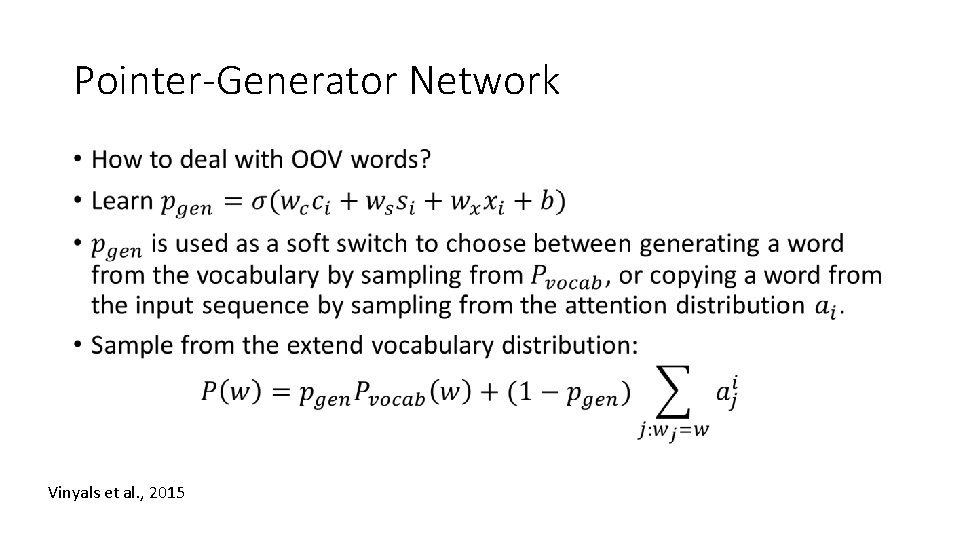

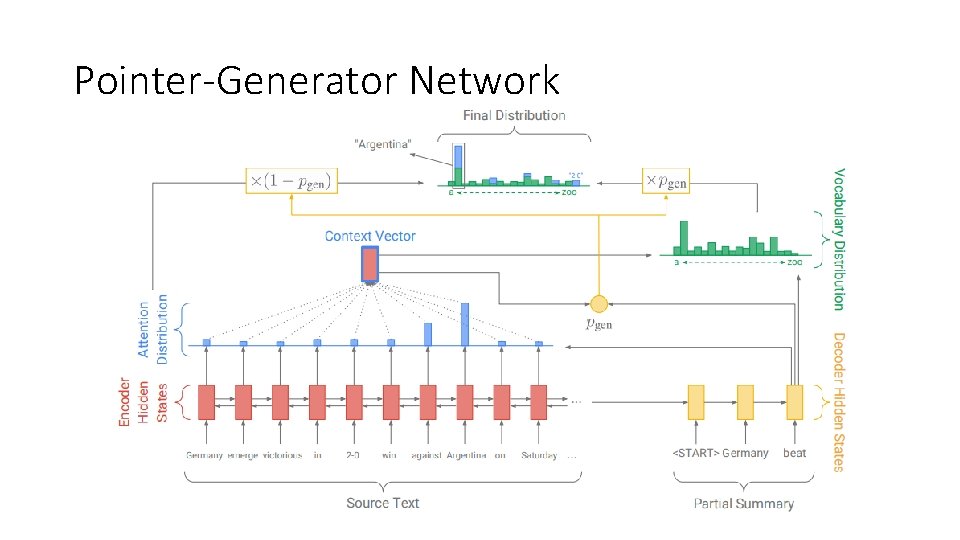

Pointer-Generator Network • Vinyals et al., 2015

Pointer-Generator Network
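As a sketch of how a pointer-generator handles out-of-vocabulary words, the final word distribution in the See et al. paper mixes the generator's vocabulary distribution with the attention distribution over source positions: P(w) = p_gen * P_vocab(w) + (1 - p_gen) * (sum of attention on source positions holding w). The vocabulary, source sentence, probabilities, and the name `final_distribution` are made-up values for illustration.

```python
def final_distribution(p_gen, p_vocab, attention, source_tokens):
    """Mix generation and copying into one distribution over words."""
    # Generation part: scale the fixed-vocabulary distribution by p_gen.
    final = {word: p_gen * prob for word, prob in p_vocab.items()}
    # Copy part: add (1 - p_gen) * attention for every source position,
    # creating entries for words that are not in the fixed vocabulary.
    for token, weight in zip(source_tokens, attention):
        final[token] = final.get(token, 0.0) + (1.0 - p_gen) * weight
    return final

# Hypothetical example: "munster" is not in the fixed vocabulary.
p_vocab = {"the": 0.5, "team": 0.3, "won": 0.2}
source_tokens = ["the", "munster", "team", "won"]
attention = [0.1, 0.6, 0.2, 0.1]

final = final_distribution(0.4, p_vocab, attention, source_tokens)
# "munster" gets (1 - 0.4) * 0.6 of the probability mass purely by copying.
```

Because both inputs are probability distributions, the mixture still sums to 1, and an OOV source word can be produced whenever attention focuses on it.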

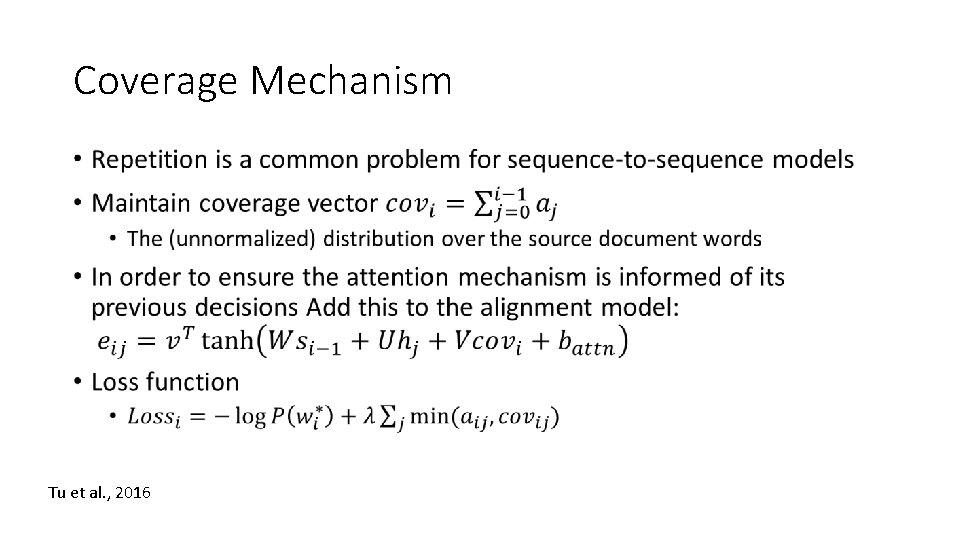

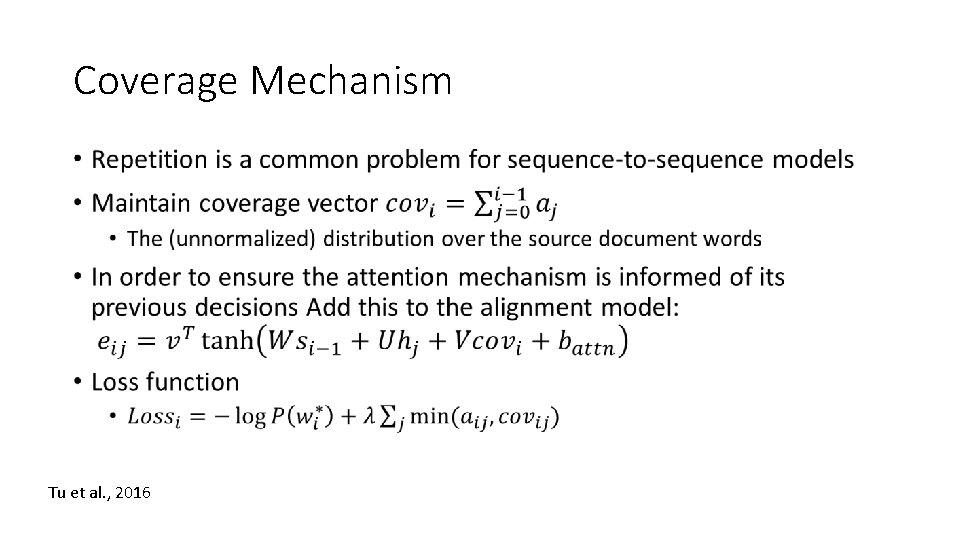

Coverage Mechanism • Tu et al., 2016
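A sketch of the coverage idea as formulated in the See et al. paper, which adapts the coverage model of Tu et al.: the coverage vector at step t is the sum of attention distributions from all previous decoder steps, and the coverage loss at step t is the sum over source positions of min(attention, coverage). The attention values and the name `coverage_losses` are assumptions for illustration.

```python
def coverage_losses(attention_steps):
    """Return per-step coverage losses and the final coverage vector."""
    coverage = [0.0] * len(attention_steps[0])
    losses = []
    for attention in attention_steps:
        # Penalize attending again to positions that are already covered.
        losses.append(sum(min(a, c) for a, c in zip(attention, coverage)))
        # Accumulate this step's attention into the coverage vector.
        coverage = [c + a for c, a in zip(coverage, attention)]
    return losses, coverage

# Hypothetical decoder runs over a 3-word source.
repetitive = [[0.9, 0.1, 0.0], [0.9, 0.1, 0.0]]  # looks at word 0 twice
spread = [[0.9, 0.1, 0.0], [0.0, 0.1, 0.9]]      # moves on to word 2

rep_losses, rep_coverage = coverage_losses(repetitive)
spread_losses, spread_coverage = coverage_losses(spread)
# The second step of the repetitive run is penalized far more heavily.
```

The first step always has zero loss because nothing has been covered yet; repetition is what the penalty discourages.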

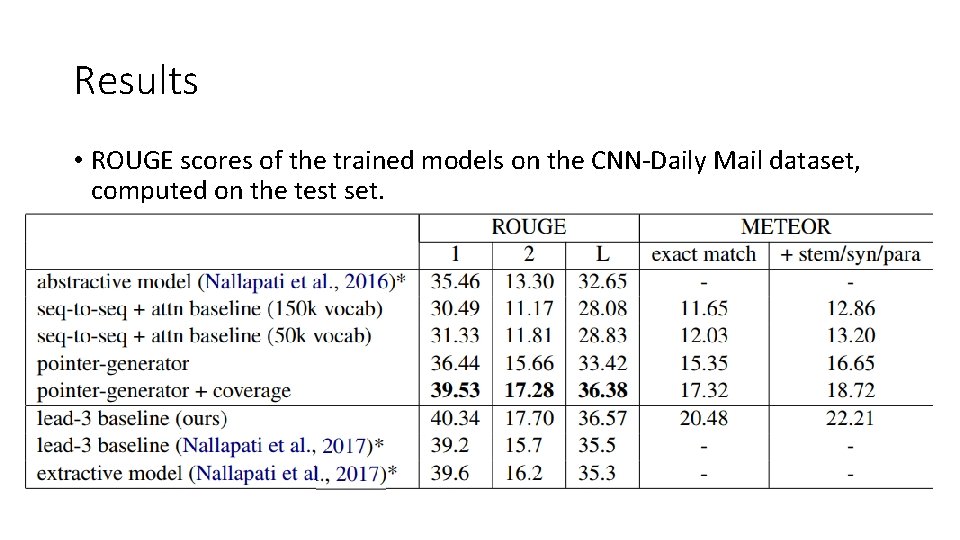

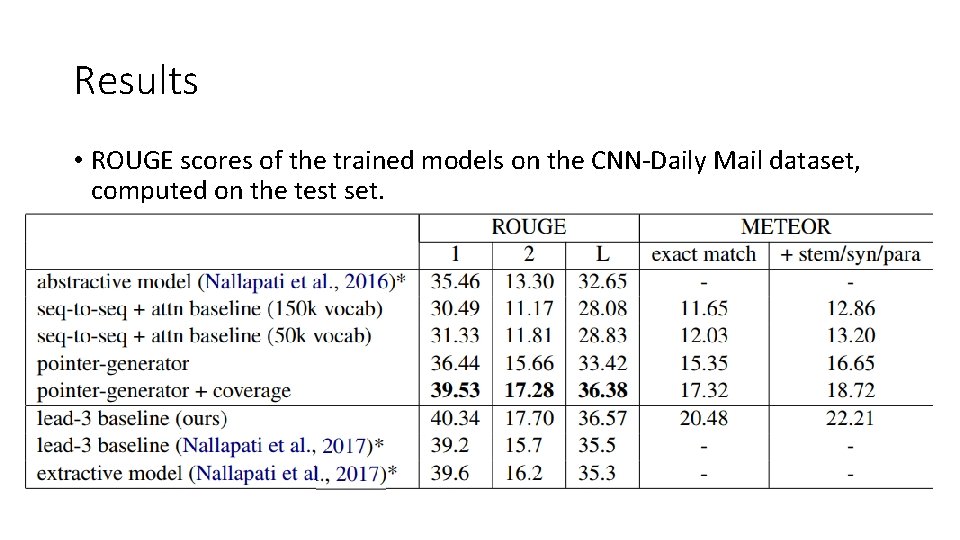

Results • ROUGE scores of the trained models on the CNN-Daily Mail dataset, computed on the test set.
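For readers unfamiliar with ROUGE, here is a simplified sketch of ROUGE-N as clipped n-gram overlap between a candidate summary and a reference. It is an assumption-laden illustration: it splits on whitespace and skips the stemming and other preprocessing of the official ROUGE toolkit, so its numbers are not comparable to published scores. The sentences and the name `rouge_n` are made up.

```python
from collections import Counter

def rouge_n(candidate, reference, n=1):
    """Return (precision, recall, F1) from clipped n-gram overlap."""
    def ngrams(text):
        tokens = text.split()
        return Counter(tuple(tokens[i:i + n])
                       for i in range(len(tokens) - n + 1))

    cand, ref = ngrams(candidate), ngrams(reference)
    # Counter intersection keeps the minimum count of each shared n-gram.
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    if overlap == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical candidate and reference summaries.
p1, r1, f1 = rouge_n("the cat sat on the mat", "the cat lay on the mat", n=1)
p2, r2, f2 = rouge_n("the cat sat on the mat", "the cat lay on the mat", n=2)
```

Here five of six unigrams match but only three of five bigrams do, which is why ROUGE-2 is usually the stricter score.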