
Frontiers in User-Computer Interaction

June 1999

Andries van Dam
Brown University and the NSF Science and Technology Center for Computer Graphics and Scientific Visualization

Roadmap for Talk
• Introduction
  – background and goals of UIs
  – motivation for post-WIMP UIs
  – pros and cons of WIMP (Windows, Icons, Menus, and Point-and-click) GUIs (Graphical User Interfaces), e.g., MS Windows
• Post-WIMP interaction: from gesture and speech recognition to multi-modal and Perceptual UIs (PUIs)
• Research problems
• Note: interaction is hard to describe with static slides, even with video. You really must experience it…

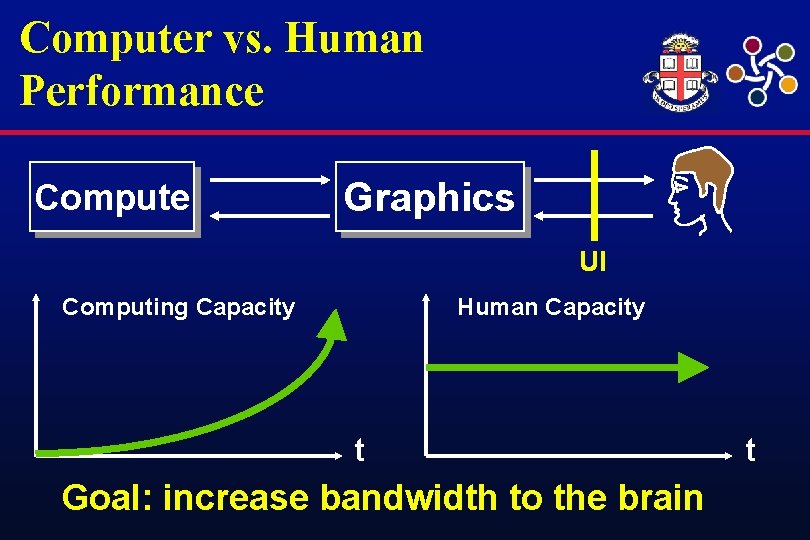

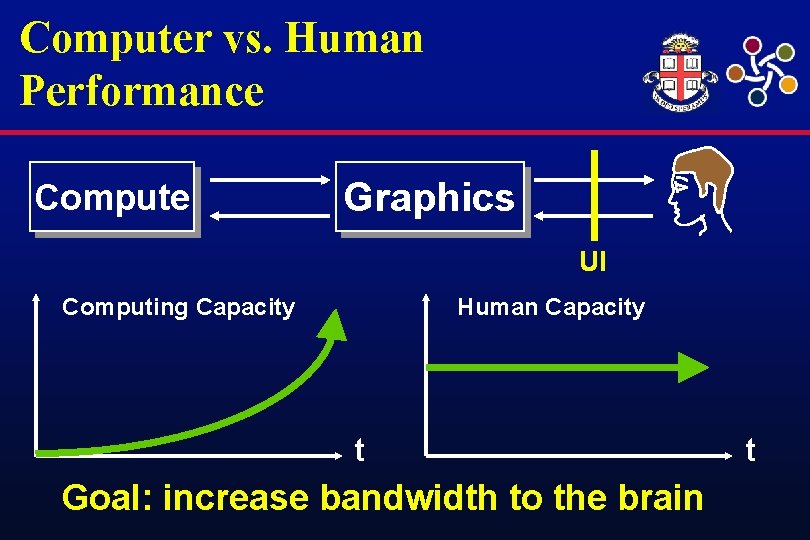

Computer vs. Human Performance

[Figure: computing capacity and human capacity plotted against time (t); labels: Compute, Graphics, UI]

Goal: increase bandwidth to the brain

The Ultimate User Interface
• None! UIs are a necessary evil
• Want human-style interactions with other humans, intelligent assistants, and objects in the real and/or virtual world
  – an intelligent assistant (IA) may be manifested as an agent with a “social interface”/avatar (see Apple’s Knowledge Navigator video)
  – prediction: when IAs become vastly superior to what is available today, they will mediate the majority of transactions

Intelligent Assistants: Understand Your Context
• Combine continuous speech recognition and natural language understanding with knowledge management and processing
• May have customizable social interfaces/avatars
• May run both synchronously (under direct user control) and asynchronously (communicating with other agents)

Today’s Best UI
• Goal: transparency
  – minimal cognitive processing, relying mostly on motor skills and subconscious perception
  – focus on the task, not the technology (e.g., bicycle, car, and our Sketch examples; MS PowerPoint is a negative example!)
  – ease of learning is not the same as ease of use
  – context determines how much of an investment the user is willing to make (e.g., playing a musical instrument)

What to Use Moore’s Law For?
• Computers are now fast enough for most applications
• Increasingly, we can afford to concentrate on human productivity rather than machine productivity (8 CDs for Office 2000!)
• How? Use compute power to make dramatic improvements in user interfaces and capabilities (new modes of expression)
  – today, the majority of code in desktop productivity applications (e.g., MS Office) is in the GUI, and we’re only tinkering
  – we need a radical departure, e.g., the SILK interfaces Raj Reddy proposed in the early ’80s, which are not yet here
    o speech, images, language, knowledge base

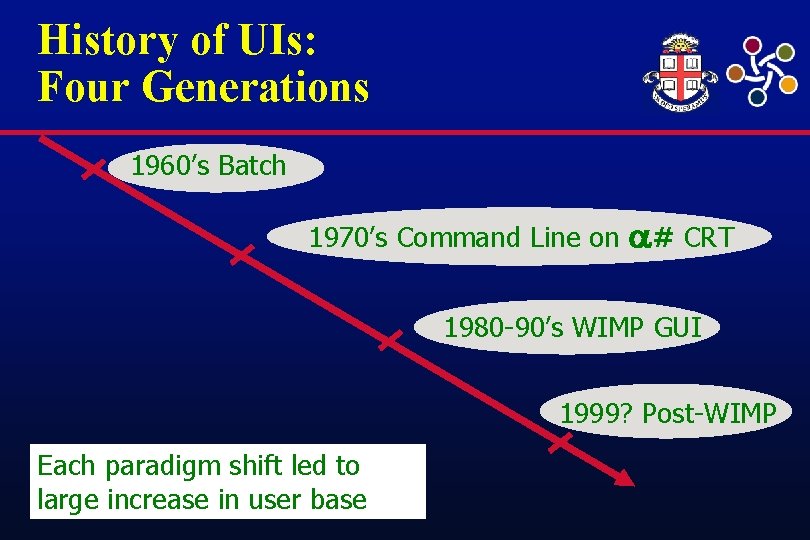

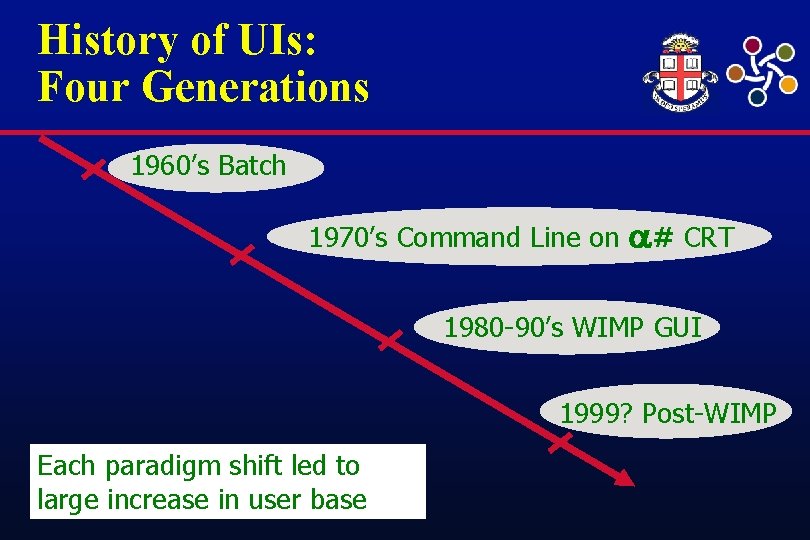

History of UIs: Four Generations
• 1960s: batch
• 1970s: command line on CRT
• 1980s-90s: WIMP GUI
• 1999?: post-WIMP
Each paradigm shift led to a large increase in the user base

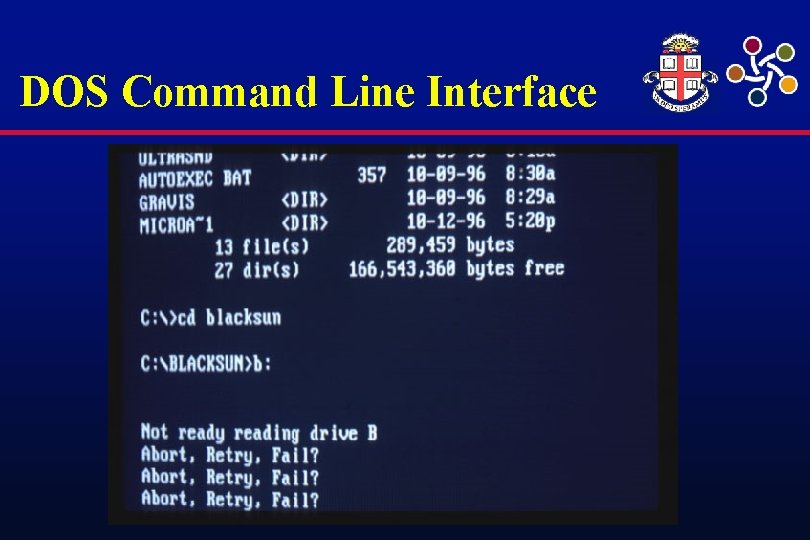

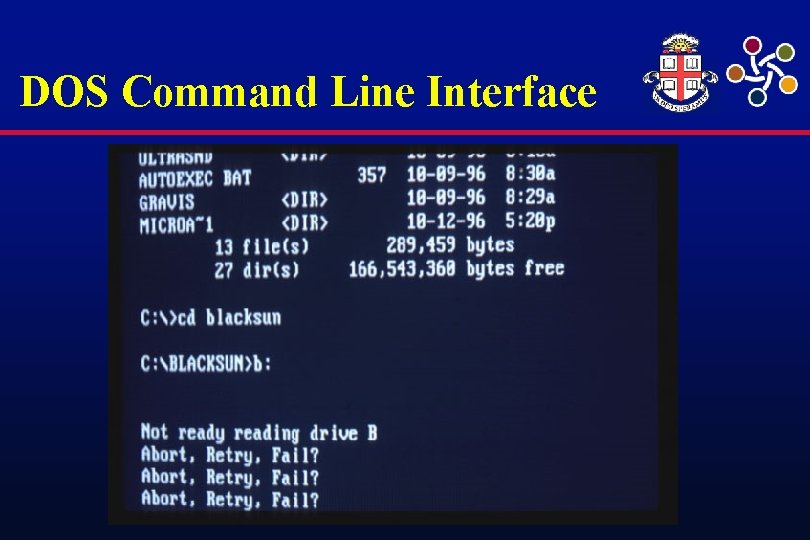

DOS Command Line Interface

GUIs Reduce Typing

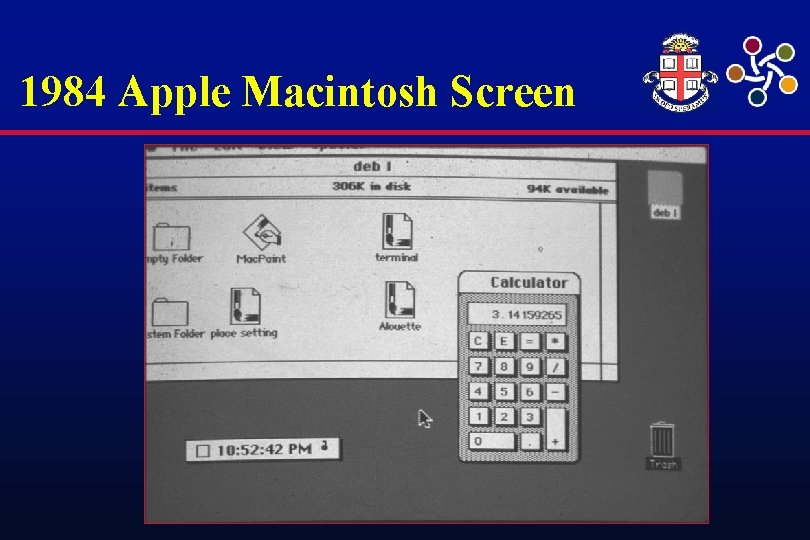

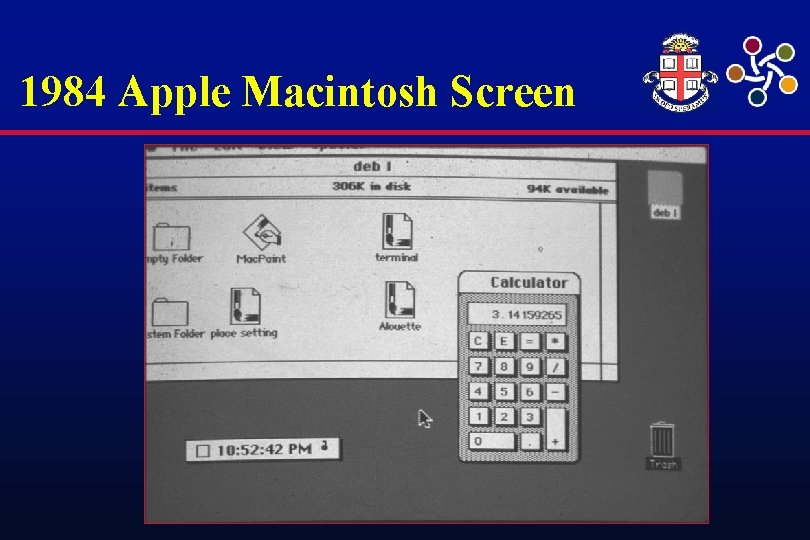

1984 Apple Macintosh Screen

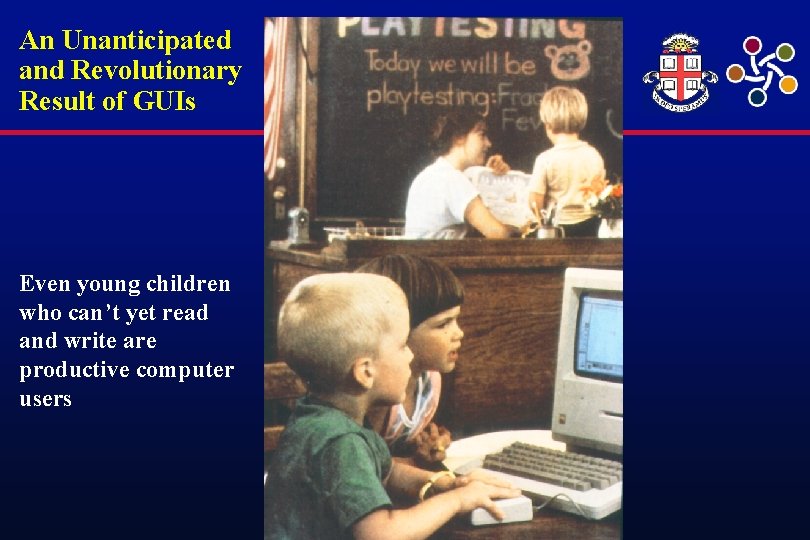

An Unanticipated and Revolutionary Result of GUIs
Even young children who can’t yet read and write are productive computer users

Advantages of WIMP GUIs
• Xerox PARC’s legacy provides a “standard” => ease of x for users
• But not everyone can or wants to use a mouse...
• Layers of support software => ease of implementation, maintainability
  – toolkits
  – interface builders
  – UIMSs (User Interface Management Systems)

Limitations of WIMP GUI
• Imposes a sequential “ping-pong” dialog model: mouse and keyboard input, 2D graphics (sound?) output
  – deterministic and discrete
  – difficult to handle simultaneous input, even two mice
  – pure WIMP doesn’t use other senses: hearing, touch, ...
  – >50% of our neurons are in the visual cortex, but as humans we find it very difficult to communicate without speech, sound...
• Not usable for immersive VR (e.g., head-mounted display or Cave) where you are “in” the scene: no keyboard, mouse…

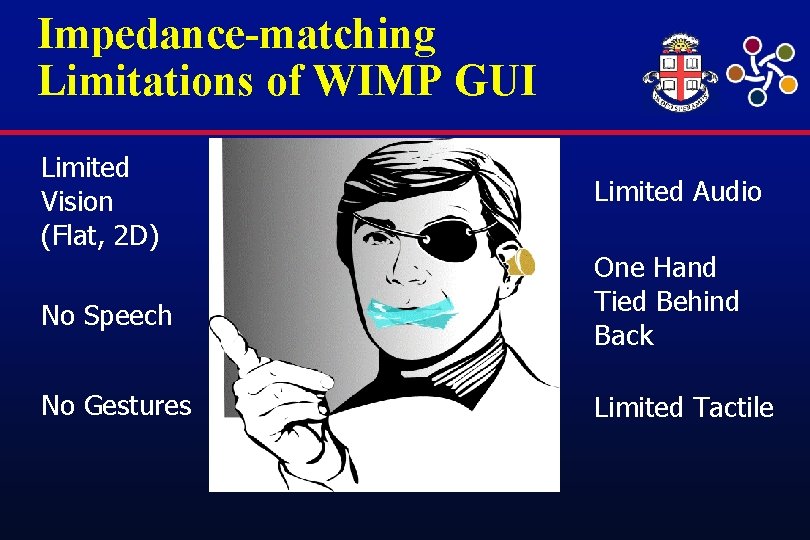

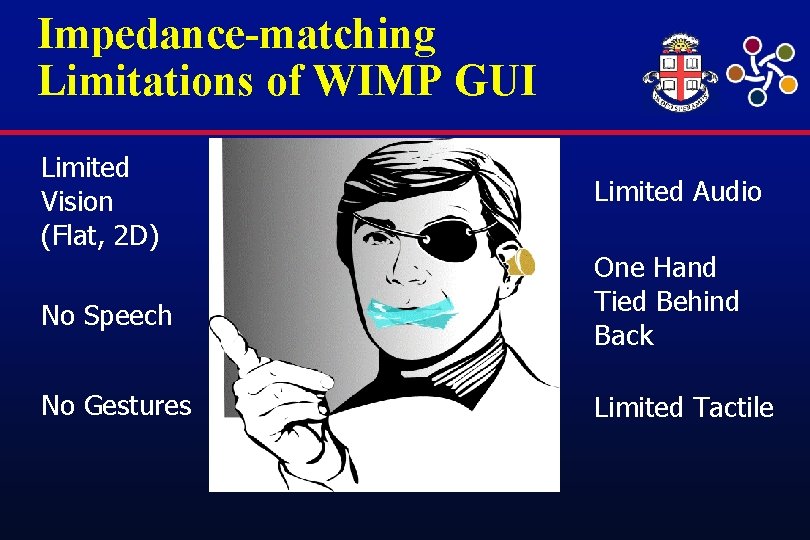

Impedance-matching Limitations of WIMP GUI

[Figure labels: Limited Vision (Flat, 2D); No Speech; No Gestures; Limited Audio; One Hand Tied Behind Back; Limited Tactile]

Brown’s 2D NotePads: Simple Post-WIMP UIs via Gestures
• Both based on today’s tablet technology; visual plus tactile feedback
• NotePad
  – for note-taking, annotation
  – extends the WIMP GUI with appropriate marking menus and gestures
  – designed for both stand-alone use and integration into a larger environment such as a Cave
• Musical Notepad
  – for musical notation and simple composition
  – purely gestural electronic music paper
  – integrated with full computer editing and MIDI facilities

Environment of Tomorrow: Push/Pull
• Driven by Moore’s Law and clever device engineering (e.g., micro- and nano-technology), we’ll have a profusion of form factors:
  – wearable computers, information appliances, e-books (based on digital paper)
  – office/home (inexpensive) Virtual Reality
  – smart rooms, furniture, clothing, jewelry… (Gershenfeld’s “When Things Start to Think”)
  – micro- and even nano-devices
  – sensors & actuators/“displays”
• Ubiquitous/pervasive computing with many devices per user
  – personal computing won’t depend on “the personal computer”
  – users will be mobile, location- and device-independent, so they can concentrate on the task
• Next century: bionic humans, neural implants; human-machine fusion? (Kurzweil’s “Age of Spiritual Machines”)

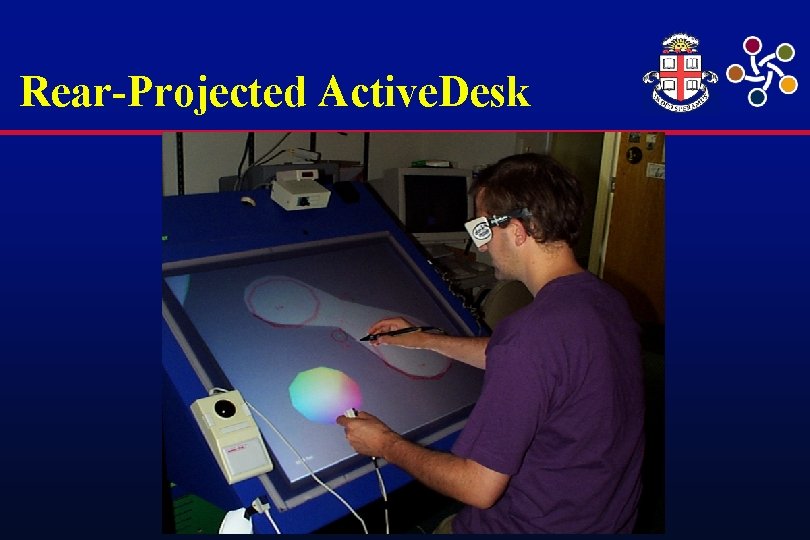

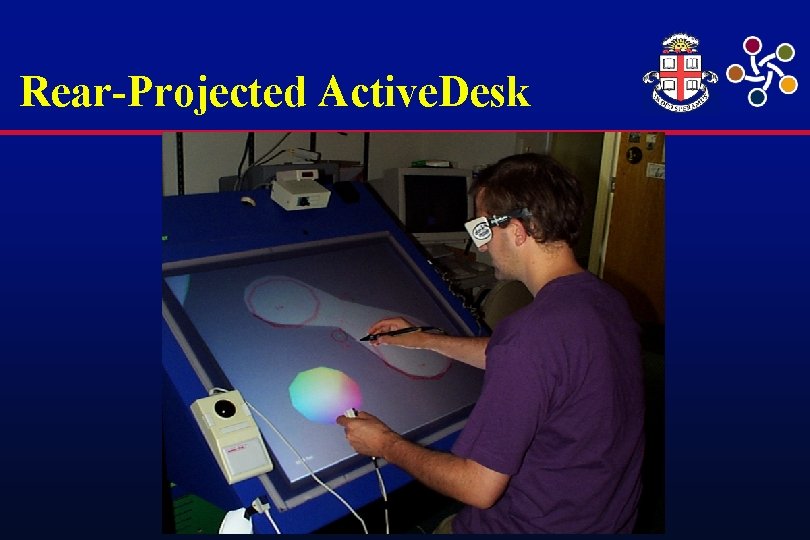

Rear-Projected ActiveDesk

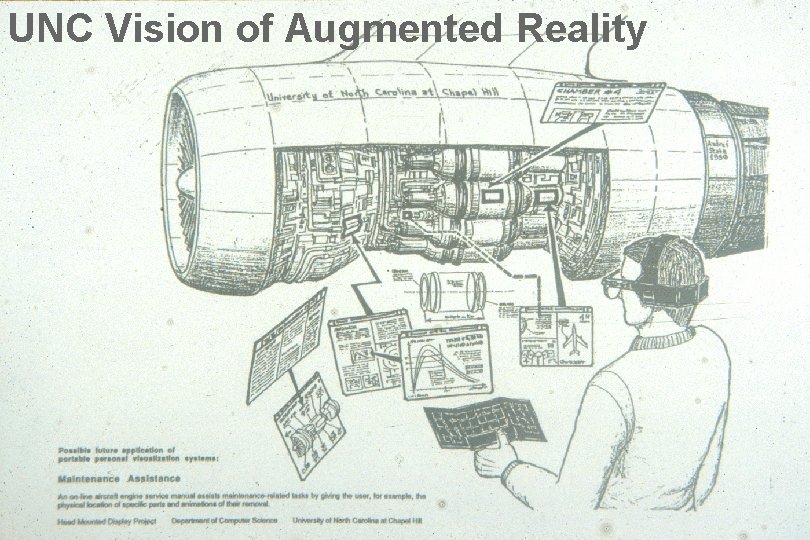

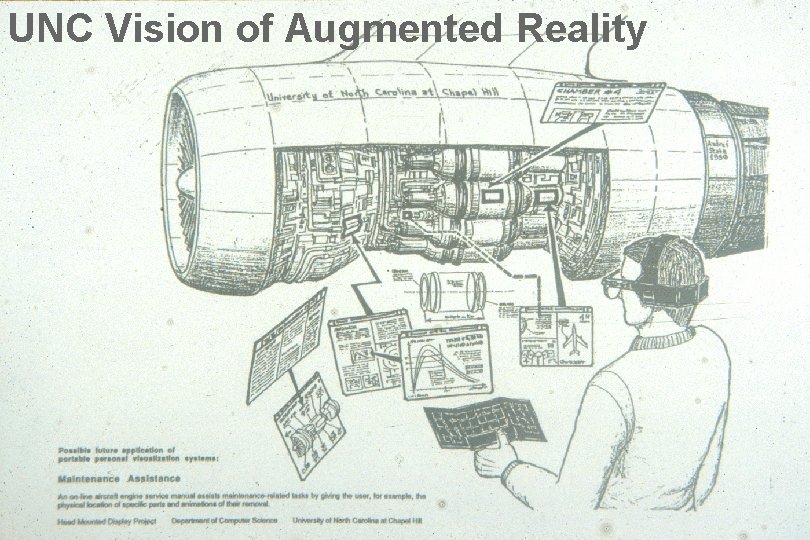

UNC Vision of Augmented Reality

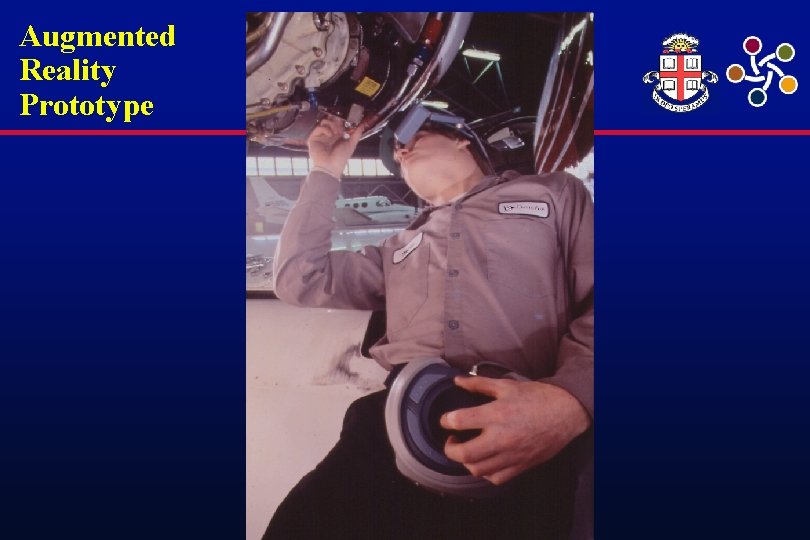

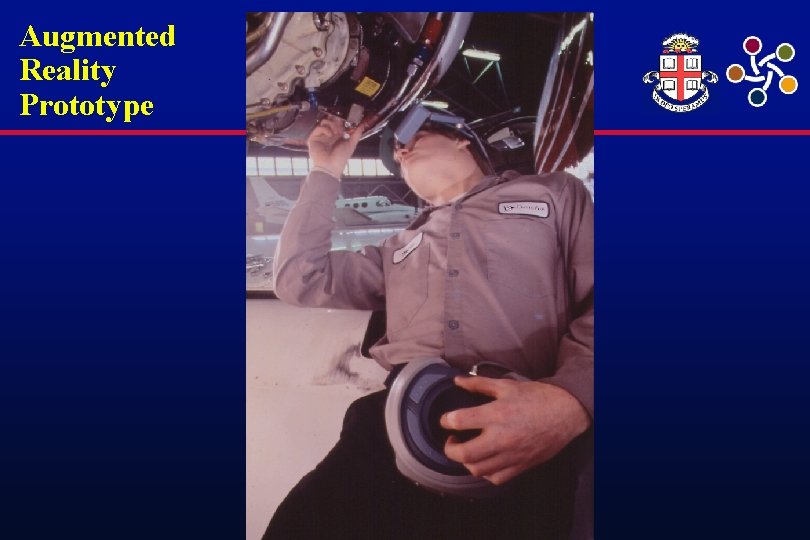

Augmented Reality Prototype

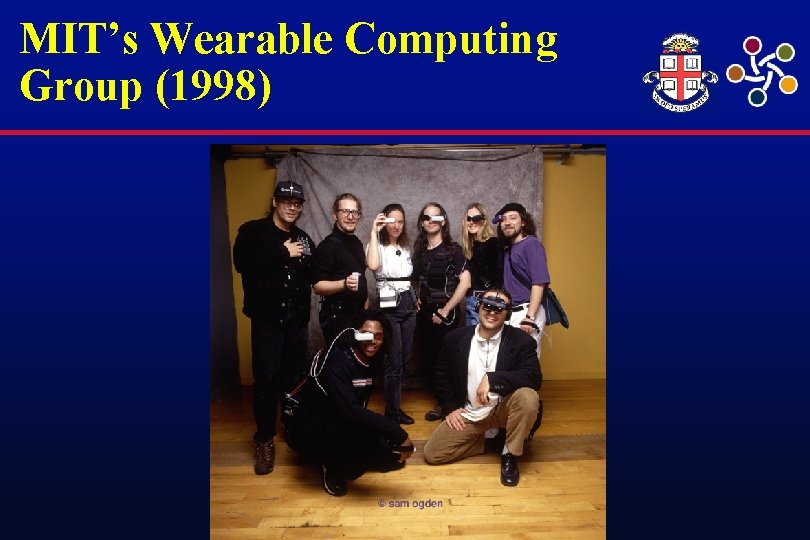

MIT’s Wearable Computing Group (1998)

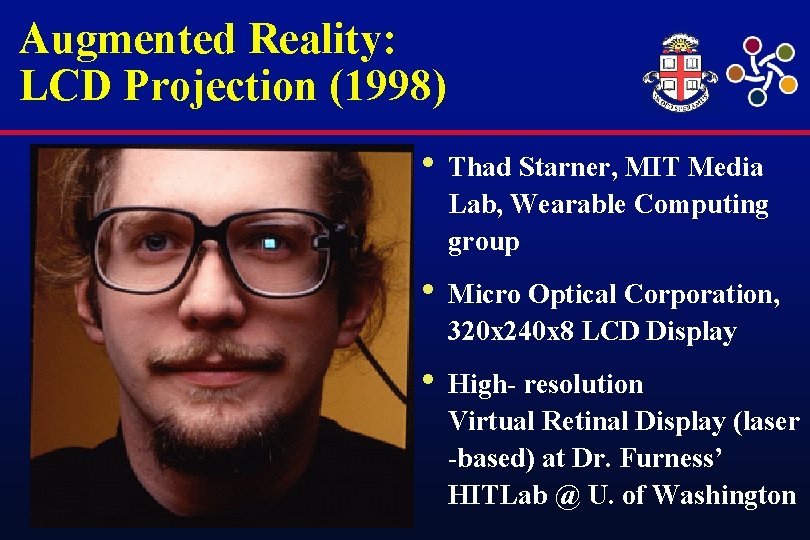

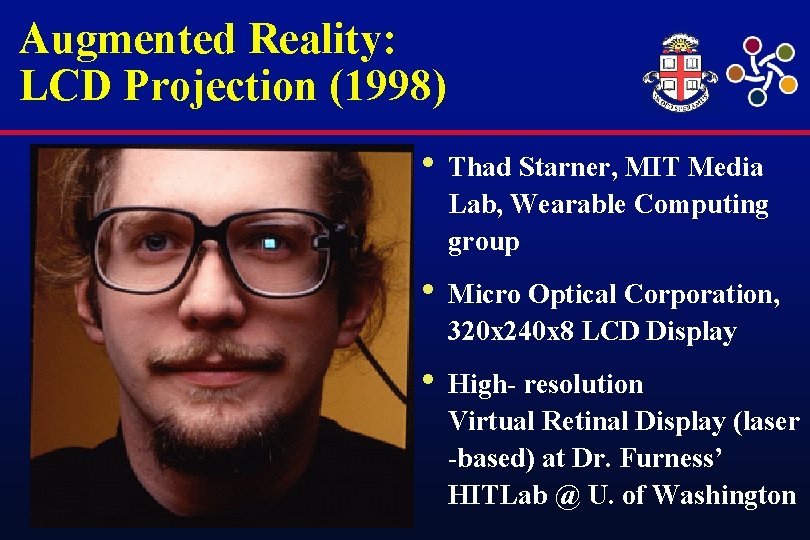

Augmented Reality: LCD Projection (1998)
• Thad Starner, MIT Media Lab, Wearable Computing group
• Micro Optical Corporation, 320 x 240 x 8 LCD display
• High-resolution Virtual Retinal Display (laser-based) at Dr. Furness’ HITLab @ U. of Washington

Post-WIMP User Interface Characteristics (1/2)
• Multiple simultaneous devices, sensory channels, or humans
  – 2-handed, with gesture recognition (e.g., Brown’s Sketching demos)
  – multi-modal UI: multiple integrated and reinforcing modes
    o output: display + spatial sound
    o input: gesture + speech recognition
  – perceptual UI: goal is to imitate multi-modal human-human dialog
    o transparent, passive sensing: the computer is aware of and models user state (location, position, and possibly even mood) so it can respond intelligently
  – multiple humans can collaborate, compete, or be adversarial…

Post-WIMP User Interface Characteristics (2/2)
• High-bandwidth, continuous input
  – body part tracking (head, gaze, hand...), e.g., for immersive VR (which requires a head-tracked, wide-field-of-view, low-latency stereo display)
  – gesture and speech recognition => probabilistic disambiguation
    o ex: handwriting, gesture recognition in 2D and 3D/VR
• Autonomous objects in an active world are common
• Examples: the “Put that there” demo from the ’80s at the MIT Media Lab, many VR demos
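The “Put that there” style of interaction can be sketched as time alignment between recognized speech and a pointing stream. This is a minimal illustration under stated assumptions, not the original MIT system: it assumes a speech recognizer that timestamps each word and a tracker that reports what the user is pointing at, and the `Word`, `PointSample`, and `resolve_deictics` names are hypothetical. Each deictic word is simply bound to the pointing sample nearest in time; a real post-WIMP system would weigh several probabilistic hypotheses from each recognizer instead.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class Word:
    """Hypothetical speech-recognizer output: one word and when it was heard."""
    text: str
    t: float  # seconds


@dataclass
class PointSample:
    """Hypothetical tracker output: what the hand/ray points at, and when."""
    t: float  # seconds
    target: str


DEICTICS = {"that", "this", "there", "here"}


def resolve_deictics(words, samples):
    """Bind each deictic word to the pointing sample closest to it in time."""
    samples = sorted(samples, key=lambda s: s.t)
    times = [s.t for s in samples]
    resolved = []
    for w in words:
        if w.text.lower() not in DEICTICS or not samples:
            continue  # ordinary words (e.g. "put") carry no pointing reference
        i = bisect_left(times, w.t)
        # Only the samples just before and just after the word can be nearest.
        candidates = [samples[j] for j in (i - 1, i) if 0 <= j < len(samples)]
        best = min(candidates, key=lambda s: abs(s.t - w.t))
        resolved.append((w.text, best.target))
    return resolved


if __name__ == "__main__":
    words = [Word("put", 0.0), Word("that", 0.4), Word("there", 1.2)]
    samples = [PointSample(0.35, "blue square"),
               PointSample(0.8, "table"),
               PointSample(1.25, "upper left corner")]
    print(resolve_deictics(words, samples))
```

Nearest-in-time matching is the simplest possible fusion rule; it breaks down when the user points late or sweeps across several objects, which is one reason the slide calls for probabilistic disambiguation.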

WIMP GUIs Will Be Augmented, Not Replaced
• UI spectrum
  – direct control (direct manipulation, by-example...)
  – indirect control (smart X, agents, social interfaces)
• WIMP enhanced by
  – 3D GUI “widgets” (e.g., 3D translate/rotate/scale boxes with handles)
  – speech & gesture recognition (tablets, wands, gloves, body and scene extraction via video-based computer vision technology…)
  – haptics (e.g., force-feedback joysticks, augmented mice)
  – smart X, agents/wizards

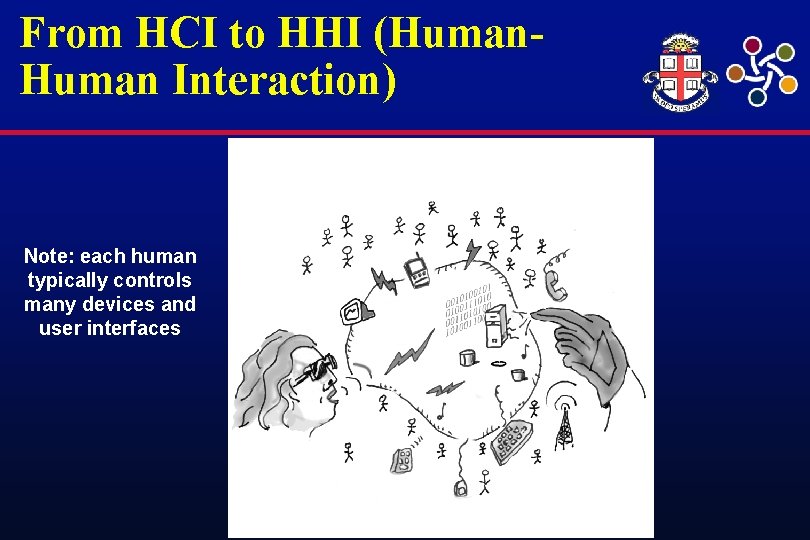

From HCI to HHI (Human-Human Interaction)
Note: each human typically controls many devices and user interfaces

Multiple, Interconnected Devices and UIs per User (1/3)
• Office application
  – wall displays + personal notepad that provides private space in addition to any shared space(s)
  – user is video-tracked for identification, location, gaze, gesture
  – furniture: chair is instrumented to help detect posture and adjust to the user’s preferred position
  – user may wear prostheses (e.g., hearing aid) and health monitors (pulse and blood pressure, to monitor stress)
  – user’s speech is decoded: continuous speech recognition + natural language understanding + intelligent information processing allows dialog with an intelligent assistant

Multiple, Interconnected Devices and UIs per User (2/3)
• Health-care application
  – scaled-down office computing environment for the home
  – electro-chemical monitors, probes (increasingly less obtrusive)
    o smart toilet to monitor bodily wastes
  – prostheses (today, heart pacemakers, hearing aids, cochlear implants, voice boxes, artificial joints and organs…)
  – avatars of
    o health-care providers
    o family and friends, support group

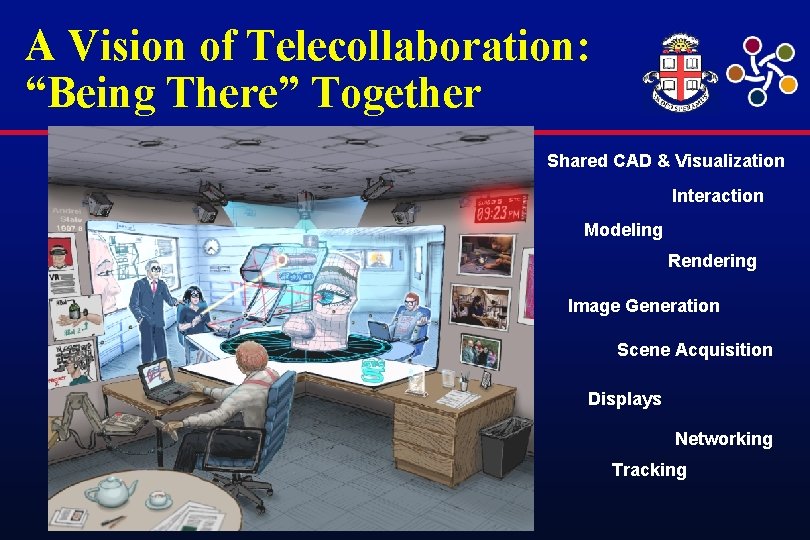

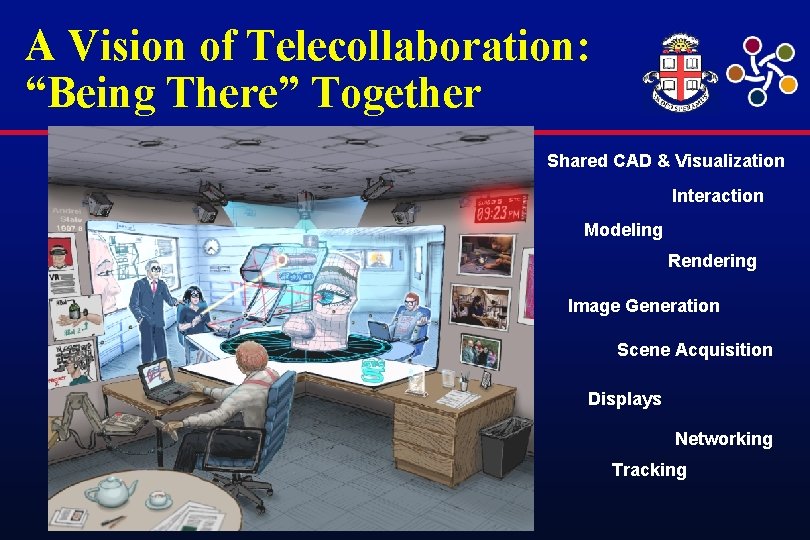

Multiple, Interconnected Devices and UIs per User (3/3)
• Telecollaboration for multi-disciplinary industrial design
  – immersive VR environment
  – emphasis on small-team collaboration
  – scene composed of CAD objects and objects in the environment, including co-workers
    o requires a combination of geometry-based rendering (polygons, surfaces) and image-based rendering of geometry acquired via scene extraction based on computer vision techniques
    o this is a major paradigm shift in computer graphics
  – huge distributed systems problems, e.g., minimizing latency and its effects (cybersickness!)

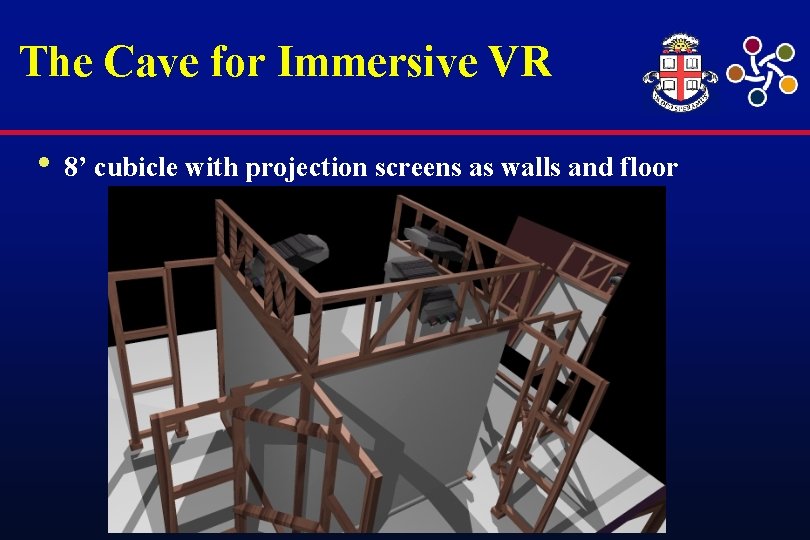

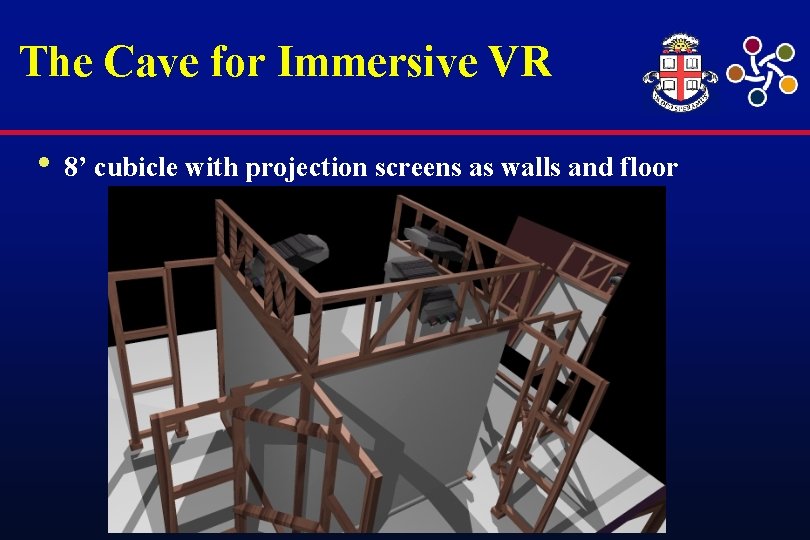

The Cave for Immersive VR
• 8’ cubicle with projection screens as walls and floor

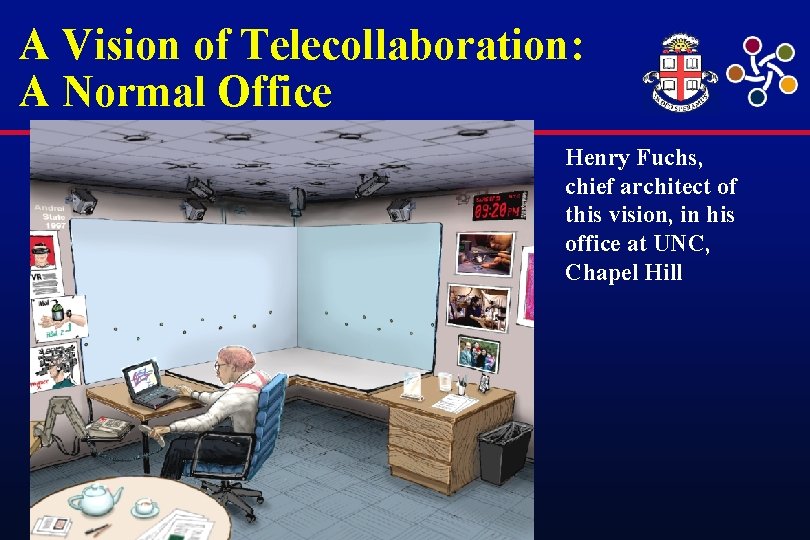

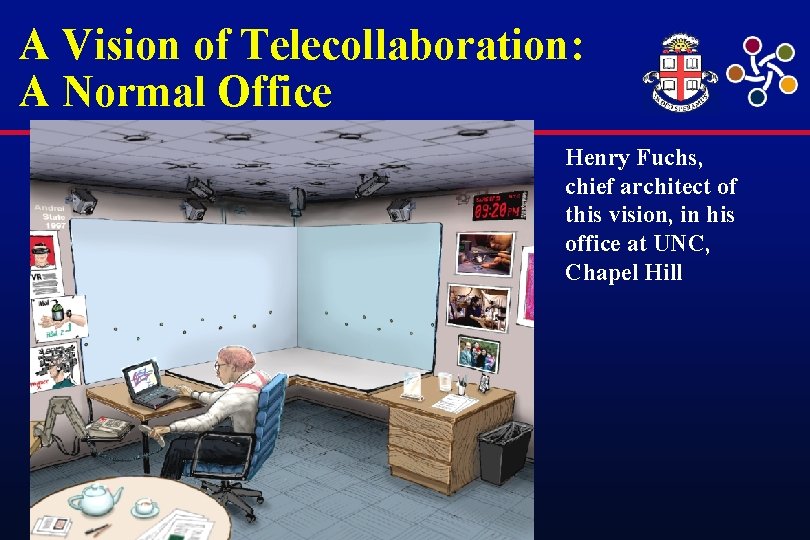

A Vision of Telecollaboration: A Normal Office
Henry Fuchs, chief architect of this vision, in his office at UNC Chapel Hill

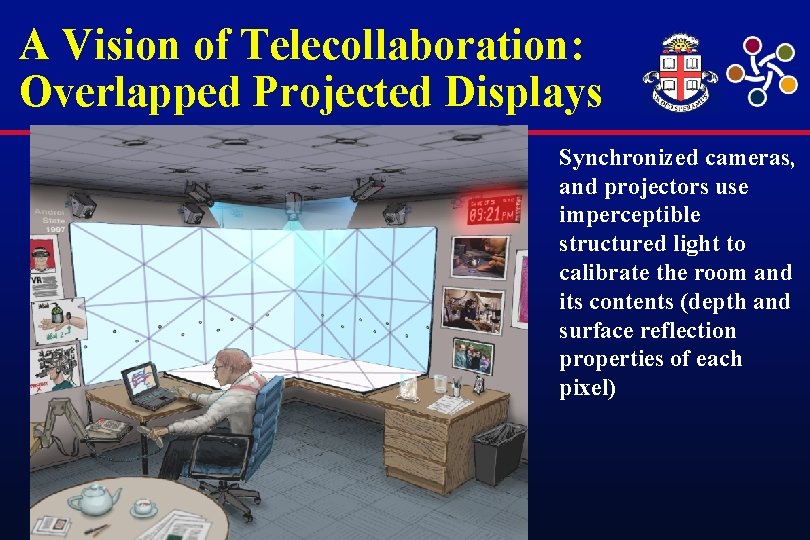

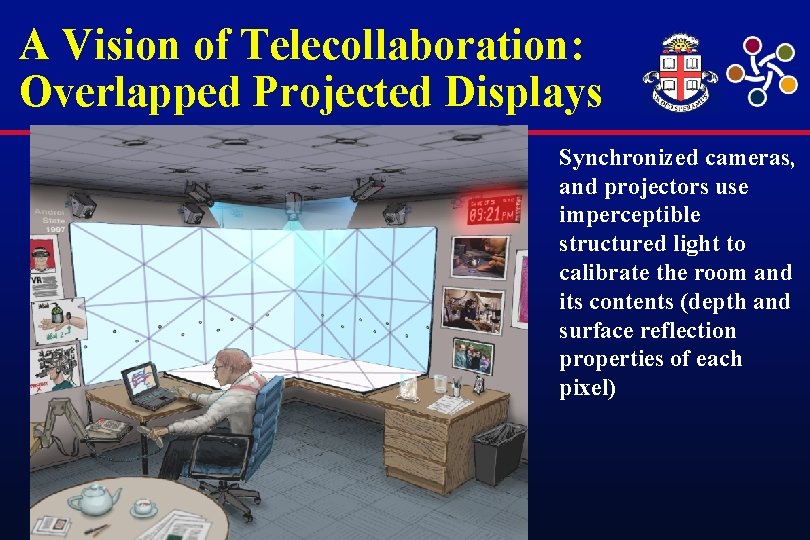

A Vision of Telecollaboration: Overlapped Projected Displays
Synchronized cameras and projectors use imperceptible structured light to calibrate the room and its contents (depth and surface reflection properties of each pixel)
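The slide names structured light without specifying the patterns. One common scheme, assumed here purely for illustration, projects a sequence of binary-reflected Gray-code stripe patterns: each camera pixel records one bright/dark bit per frame, and that bit sequence identifies the projector column that lit it. The resulting camera-pixel-to-projector-column correspondence is what triangulation later turns into depth. All function names are hypothetical, and the sketch omits triangulation, thresholding of real camera images, and whatever technique makes the patterns imperceptible.

```python
def gray_encode(n: int) -> int:
    """Binary-reflected Gray code: neighboring integers differ in exactly one bit."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Invert gray_encode by XOR-ing together all right shifts of g."""
    n = g
    shift = g >> 1
    while shift:
        n ^= shift
        shift >>= 1
    return n


def stripe_patterns(num_columns: int):
    """One list of on/off values per projected frame, most significant bit first.

    Frame k lights column c if bit k (counted from the MSB) of gray_encode(c) is 1.
    """
    bits = max(1, (num_columns - 1).bit_length())
    return [[(gray_encode(c) >> (bits - 1 - k)) & 1 for c in range(num_columns)]
            for k in range(bits)]


def decode_pixel(observed_bits):
    """Recover the projector column from the bright(1)/dark(0) bits one camera
    pixel saw across the frames, most significant bit first."""
    g = 0
    for b in observed_bits:
        g = (g << 1) | b
    return gray_decode(g)


if __name__ == "__main__":
    patterns = stripe_patterns(1024)  # 10 frames cover 1024 projector columns
    column = 357
    seen = [frame[column] for frame in patterns]  # an ideal, noise-free pixel
    print(len(patterns), decode_pixel(seen))
```

Gray code is used rather than plain binary because adjacent columns differ in a single bit, so a pixel that straddles a stripe boundary is misassigned by at most one column rather than by a large power of two.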

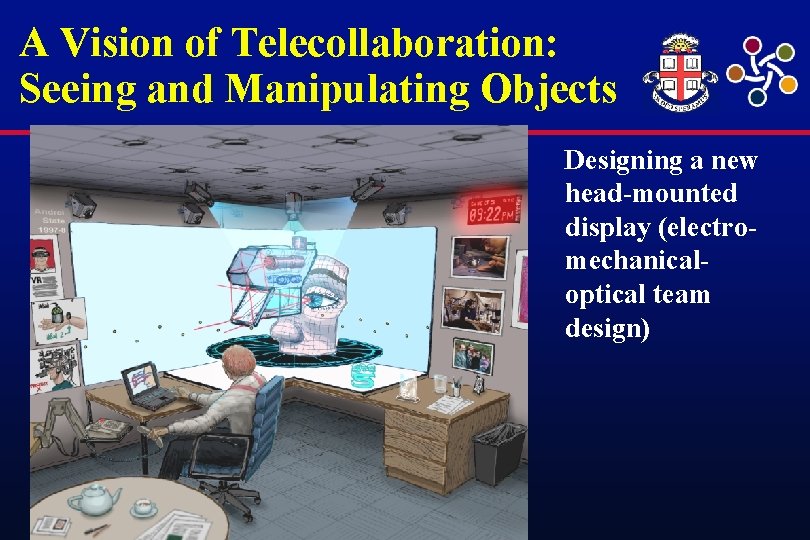

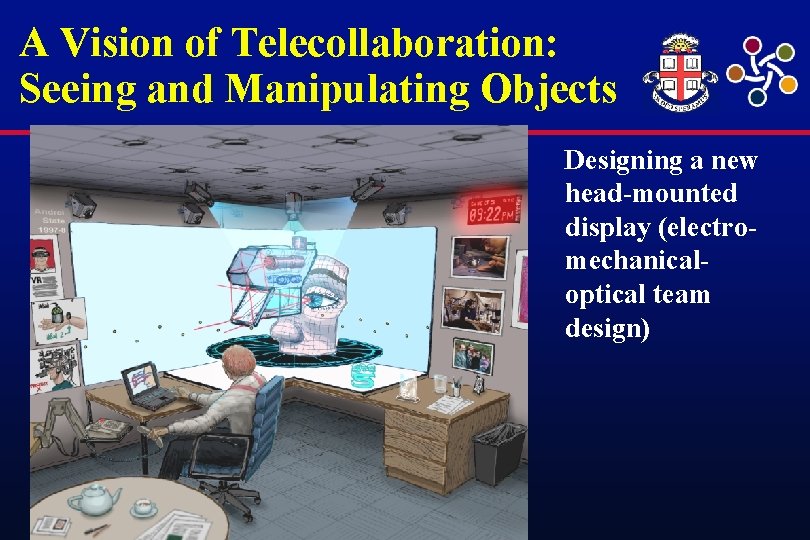

A Vision of Telecollaboration: Seeing and Manipulating Objects
Designing a new head-mounted display (electro-mechanical-optical team design)

A Vision of Telecollaboration: “Being There” Together

[Diagram components: Shared CAD & Visualization; Interaction; Modeling; Rendering; Image Generation; Scene Acquisition; Displays; Networking; Tracking]

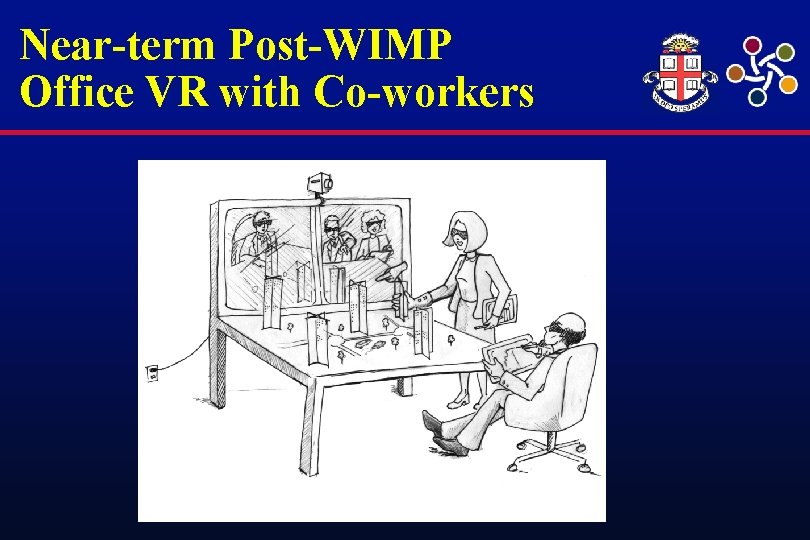

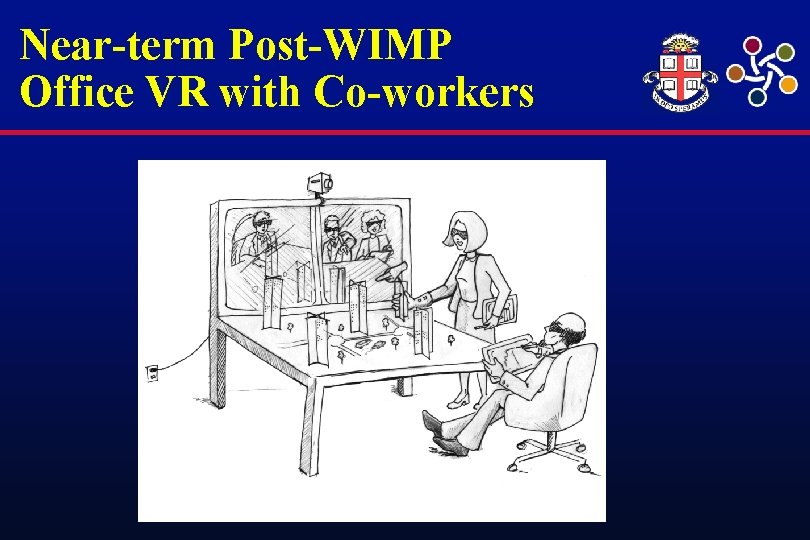

Near-term Post-WIMP Office VR with Co-workers

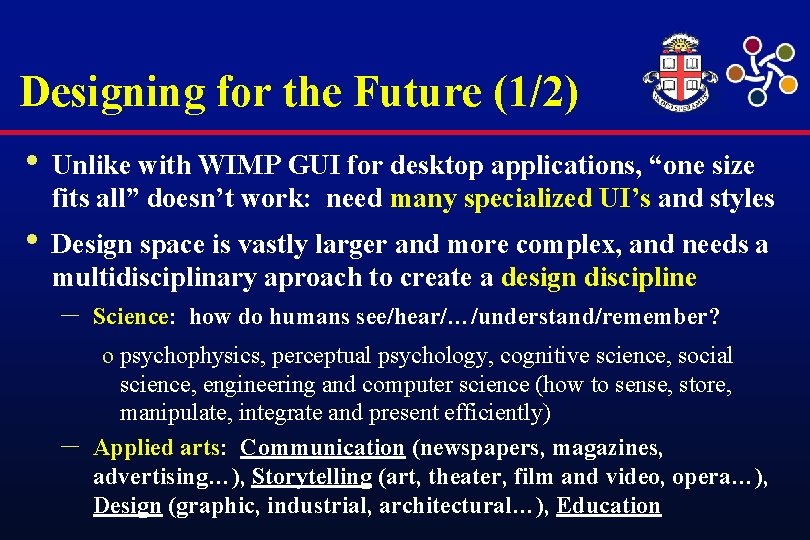

Designing for the Future (1/2)
• Unlike with the WIMP GUI for desktop applications, “one size fits all” doesn’t work: we need many specialized UIs and styles
• The design space is vastly larger and more complex, and needs a multidisciplinary approach to create a design discipline
  – Science: how do humans see/hear/…/understand/remember?
    o psychophysics, perceptual psychology, cognitive science, social science, engineering and computer science (how to sense, store, manipulate, integrate and present efficiently)
  – Applied arts: Communication (newspapers, magazines, advertising…), Storytelling (art, theater, film and video, opera…), Design (graphic, industrial, architectural…), Education

Designing for the Future (2/2)
• 3D virtual worlds are far more complex than 2D “worlds,” and they are not the same as the real world. For example,
  – physical space and virtual space needn’t have the same metrics
  – rules for behavior can be simpler or more complex than in the real world
  – social interfaces are not the same as people
  – cybersickness in VR (latency-induced) isn’t the same as motion sickness in the real world
• We should avoid the trap of designing “horseless carriages”
  – these are new media, neither the real world nor the 2D desktop
  – they will have their own idioms and metaphors, design discipline, and development environments

Some Research Challenges for Post-WIMP Interfaces (1/3)
• Much better device technology, for both input (e.g., pointers, trackers) and output (e.g., visual and aural displays, haptic force and tactile feedback), greatly improving
  – accuracy, comfort, safety (avoid cybersickness)
  – non-obtrusiveness, spatial and temporal resolution, robustness (for handling, mutual interference, etc.)
  – cost effectiveness
• Metaphors for navigation, selection, manipulation, feedback
  – some based on the real world, others not (like teleportation)
  – animation for feedback

Some Research Challenges for Post-WIMP Interfaces (2/3)
• Augmentation of display with other sensory channels (e.g., haptics, sound); “unification” algorithms for mutual disambiguation and reinforcement, e.g., for gesture + voice
• Scaling of interaction techniques
• Platform independence (desktop vs. VR; small-team vs. large-scale simulations vs. online communities)
  – how can we factor out commonality?
• Integration of direct control with indirect control (e.g., through agents)
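Mutual disambiguation of gesture + voice can be illustrated with a minimal “late fusion” sketch. It assumes each recognizer returns an n-best list of hypotheses with probabilities, and that a hand-written table says which spoken commands are semantically compatible with which gestures. The table, the command and gesture names, and the `fuse` function are all hypothetical, and multiplying the two scores (treating the recognizers as independent) is a simplifying assumption rather than a method from the talk.

```python
from itertools import product

# Hypothetical compatibility table: which spoken command may pair with which gesture.
COMPATIBLE = {
    ("delete", "cross_out"),
    ("select", "circle"),
    ("move", "arrow"),
}


def fuse(speech_nbest, gesture_nbest, compatible=COMPATIBLE):
    """Return (command, gesture, score) for the best jointly consistent
    interpretation, or None if no speech/gesture pair is compatible.

    speech_nbest and gesture_nbest map hypothesis -> recognizer probability.
    """
    best = None
    for (cmd, p_s), (gst, p_g) in product(speech_nbest.items(), gesture_nbest.items()):
        if (cmd, gst) not in compatible:
            continue  # semantically inconsistent pair: each mode rules it out
        score = p_s * p_g  # naive independence assumption between recognizers
        if best is None or score > best[2]:
            best = (cmd, gst, score)
    return best


if __name__ == "__main__":
    speech = {"select": 0.55, "delete": 0.45}   # speech alone slightly prefers "select"
    gesture = {"cross_out": 0.6, "circle": 0.4}
    print(fuse(speech, gesture))
```

In the usage example the only consistent pairings are (select, circle), scoring 0.55 × 0.4 = 0.22, and (delete, cross_out), scoring 0.45 × 0.6 = 0.27, so the gesture evidence overrides the speech recognizer’s top choice and the fused result is “delete”. That reinforcement in both directions is what “mutual” disambiguation refers to.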

Some Research Challenges for Post-WIMP Interfaces (3/3)
• Powerful integrated development environment
  – visual programming and debugging tools?!?
• Usability studies, especially for long-term effects (negative training, safety...)
• Design discipline for post-WIMP UI design
  – interdisciplinary
  – based on verifiable theories, metrics
  – produce design handbooks with design patterns (rules) and exemplars of good design

Where are We Today?

Resources
• Basic UI textbooks, oriented towards the traditional WIMP world but useful foundation material
  – Laurel, B. “The Art of Human-Computer Interface Design”, 1990
  – Newman, W. M. and Lamming, M. G. “Interactive System Design”, 1995
  – Preece, J. “Human-Computer Interaction”, 1994
  – Shneiderman, B. “Designing the User Interface”, 1997
• EC/NSF Research Frontiers in Virtual Environments and Human-Centered Computing Workshop (June 1999) report
  – to be published in ACM Computer Graphics (SIGGRAPH issue) and IEEE Computer Graphics and Applications
• Resources Website: http://www.cs.brown.edu/research/graphics/UIResources/
  – further information about EC/NSF Workshop report publication will be posted here

Acknowledgements
• Bill Buxton (SGI Alias|Wavefront)
• Tom Furness (University of Washington Human Interface Technology Laboratory)
• Ben Shneiderman (University of Maryland)
• Rosemary M. Simpson (Brown University)
• Matthew Turk (Microsoft Research)
• Turner Whitted (Microsoft Research)

• THE END