Error Detection and Correction in Spoken Dialogue Systems

- Slides: 31


Outline
- Avoiding errors
- Detecting errors
  - From the user side: what cues does the user provide to indicate an error?
  - From the system side: how likely is it the system made an error?
- Dealing with errors: what can the system do when it thinks an error has occurred?
- Evaluating SDS: evaluating ‘problem’ dialogues

Avoiding misunderstandings
- The problem
- By imitating human performance
  - Timing and grounding (Clark ’03)
  - Confirmation strategies
  - Clarification and repair subdialogues


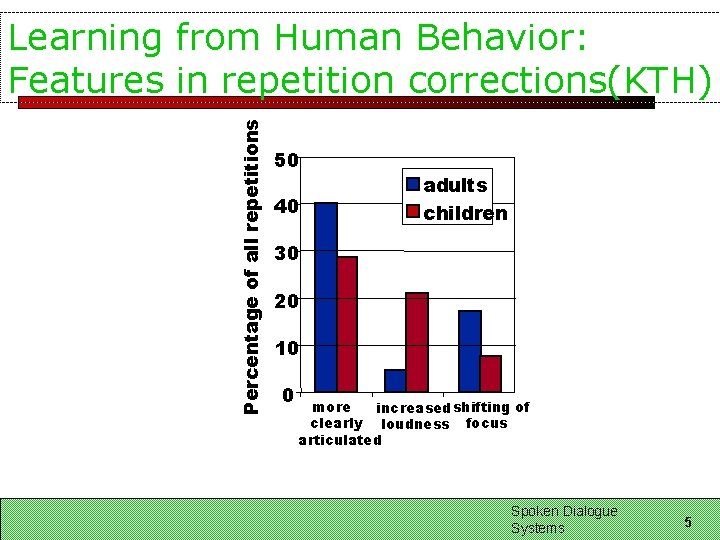

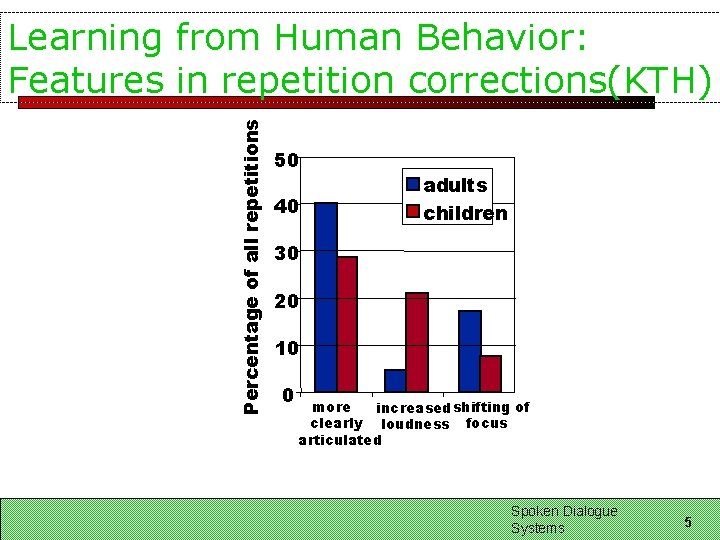

Learning from Human Behavior: Features in repetition corrections (KTH)

[Figure: bar chart of the percentage of all repetitions (axis 0 to 50) showing each feature, for adults and children. Features: more clearly articulated, increased loudness, shifting of focus.]

Learning from Human Behavior (Krahmer et al. ’01)
- ‘Go on’ and ‘go back’ signals in grounding situations (implicit/explicit verification)
  - Positive: short turns, unmarked word order, confirmation, answers, no corrections or repetitions, new info
  - Negative: long turns, marked word order, disconfirmation, no answer, corrections, repetitions, no new info
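The negative cues listed on this slide could be turned into a simple ‘go back’ detector. The following is only a minimal sketch: it assumes each cue has already been annotated as a boolean per user turn, and the equal weighting and the `threshold` parameter are illustrative assumptions, not part of Krahmer et al.'s analysis.

```python
from dataclasses import dataclass, astuple


@dataclass
class TurnCues:
    """Negative ('go back') cues for one user turn, assumed pre-annotated."""
    long_turn: bool = False
    marked_word_order: bool = False
    disconfirmation: bool = False
    no_answer: bool = False
    correction: bool = False
    repetition: bool = False
    no_new_info: bool = False


def count_negative_cues(cues: TurnCues) -> int:
    # Each cue counts equally; this weighting is an assumption.
    return sum(astuple(cues))


def grounding_signal(cues: TurnCues, threshold: int = 1) -> str:
    """Return 'go back' if at least `threshold` negative cues are present."""
    return "go back" if count_negative_cues(cues) >= threshold else "go on"


if __name__ == "__main__":
    print(grounding_signal(TurnCues()))
    print(grounding_signal(TurnCues(correction=True, repetition=True)))
```

A turn with none of the negative cues is treated as a ‘go on’ signal, mirroring the positive list on the slide.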

Hypotheses supported, but…
- Can these cues be identified automatically?
- How might they affect the design of SDS?


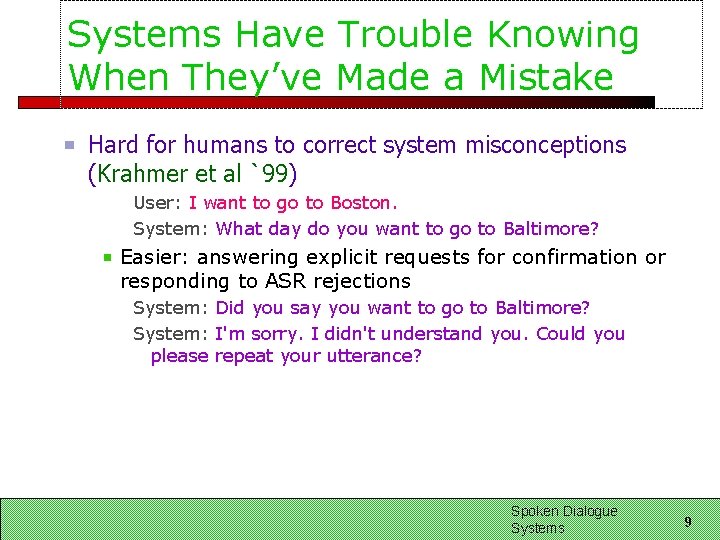

Systems Have Trouble Knowing When They’ve Made a Mistake
- Hard for humans to correct system misconceptions (Krahmer et al. ’99)
  - User: I want to go to Boston.
  - System: What day do you want to go to Baltimore?
- Easier: answering explicit requests for confirmation or responding to ASR rejections
  - System: Did you say you want to go to Baltimore?
  - System: I'm sorry. I didn't understand you. Could you please repeat your utterance?

But constant confirmation or over-cautious rejection lengthens dialogue and decreases user satisfaction

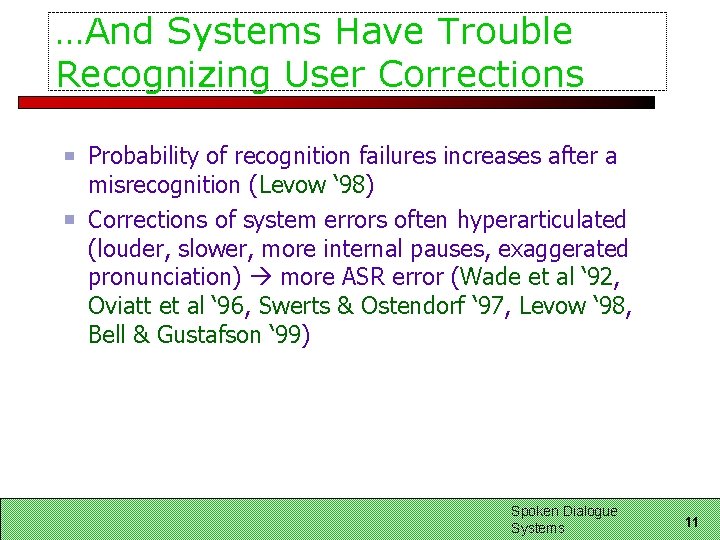

…And Systems Have Trouble Recognizing User Corrections
- Probability of recognition failures increases after a misrecognition (Levow ’98)
- Corrections of system errors are often hyperarticulated (louder, slower, more internal pauses, exaggerated pronunciation), which leads to more ASR errors (Wade et al. ’92, Oviatt et al. ’96, Swerts & Ostendorf ’97, Levow ’98, Bell & Gustafson ’99)

Can Prosodic Information Help Systems Perform Better?
- If errors occur where speaker turns are prosodically ‘marked’…
  - Can we recognize turns that will be misrecognized by examining their prosody?
  - Can we modify our dialogue and recognition strategies to handle corrections more appropriately?

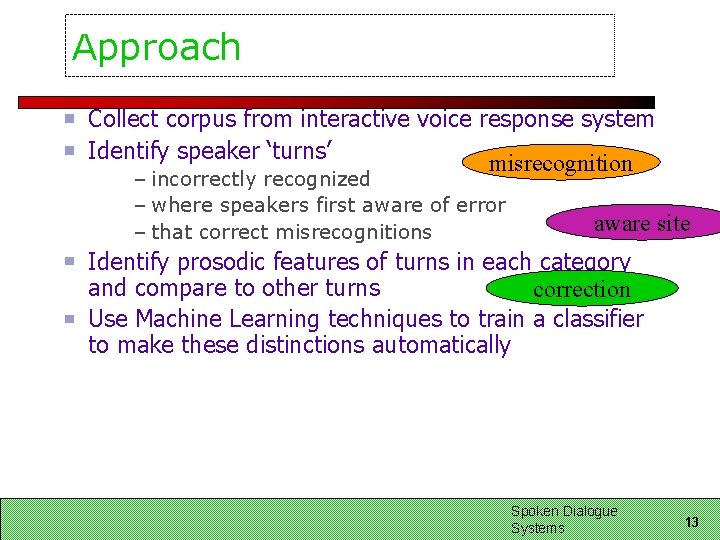

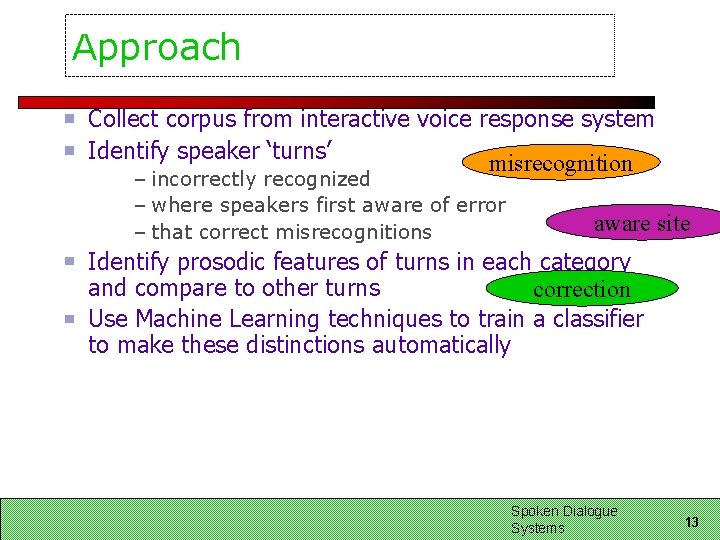

Approach
- Collect corpus from interactive voice response system
- Identify speaker ‘turns’
  - incorrectly recognized (misrecognition)
  - where speakers are first aware of error (aware site)
  - that correct misrecognitions (correction)
- Identify prosodic features of turns in each category and compare to other turns
- Use machine learning techniques to train a classifier to make these distinctions automatically

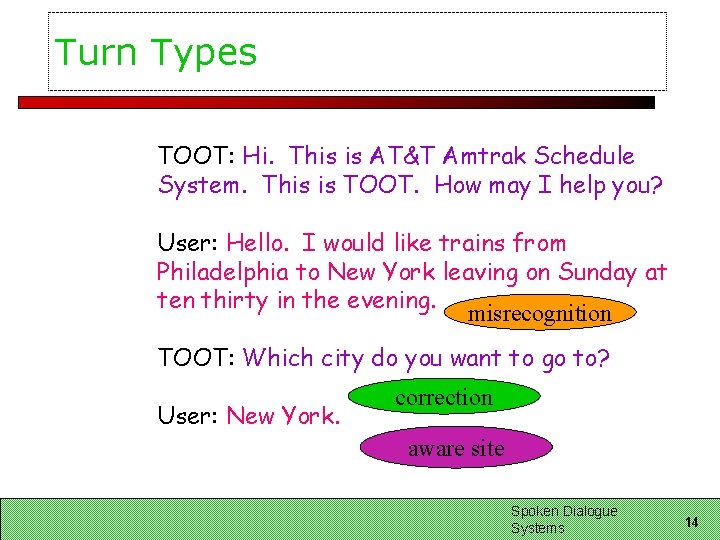

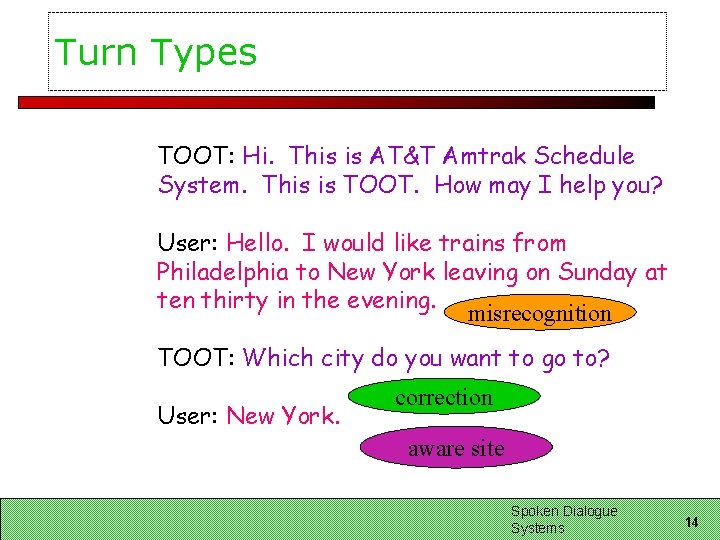

Turn Types
- TOOT: Hi. This is AT&T Amtrak Schedule System. This is TOOT. How may I help you?
- User: Hello. I would like trains from Philadelphia to New York leaving on Sunday at ten thirty in the evening. [misrecognition]
- TOOT: Which city do you want to go to?
- User: New York. [correction, aware site]

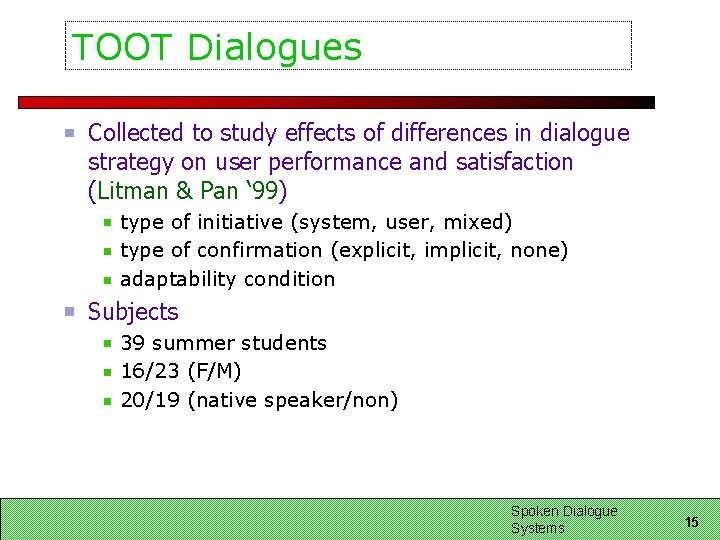

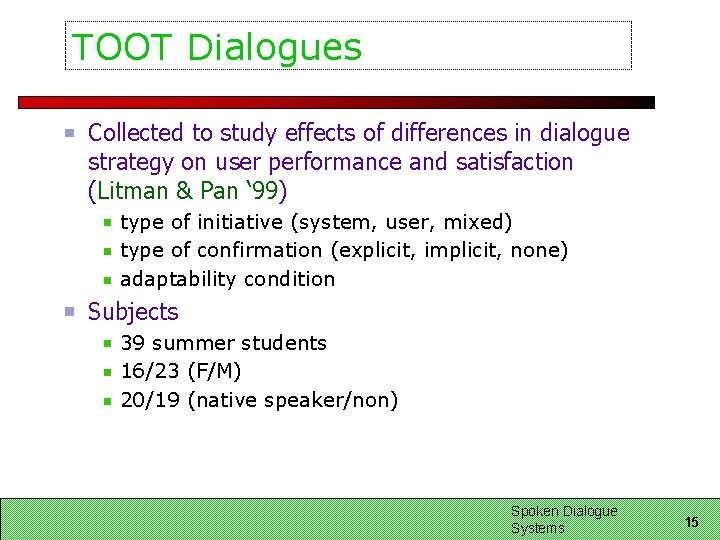

TOOT Dialogues
- Collected to study effects of differences in dialogue strategy on user performance and satisfaction (Litman & Pan ’99)
  - type of initiative (system, user, mixed)
  - type of confirmation (explicit, implicit, none)
  - adaptability condition
- Subjects
  - 39 summer students
  - 16/23 (F/M)
  - 20/19 (native speaker/non)

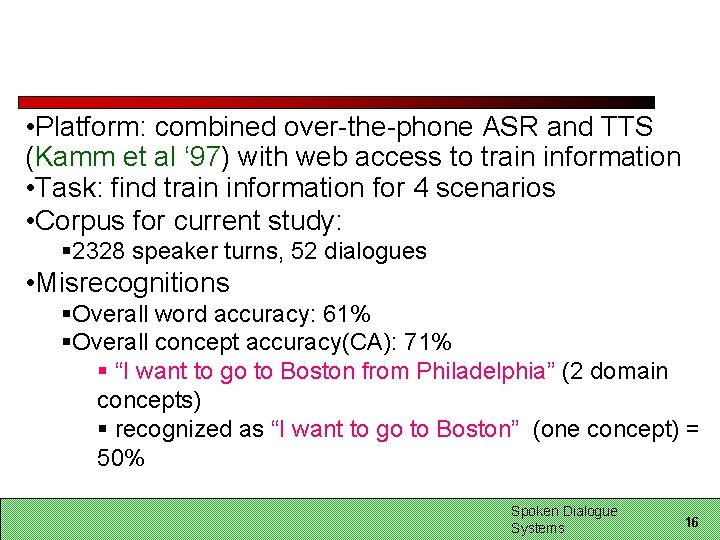

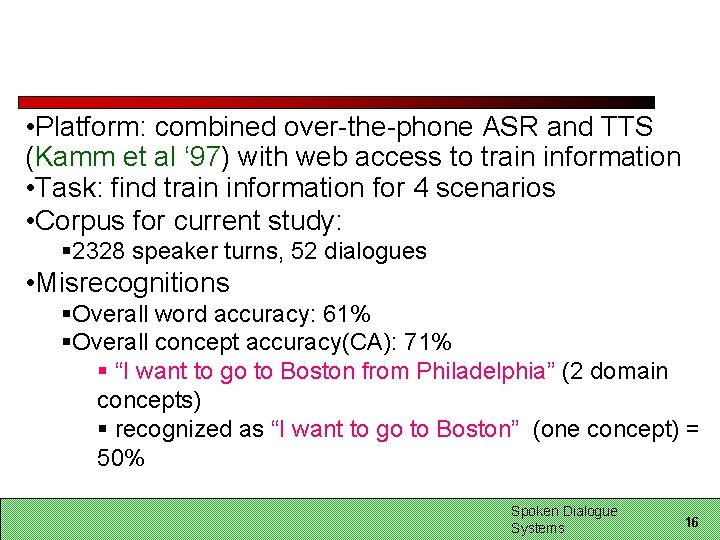

- Platform: combined over-the-phone ASR and TTS (Kamm et al. ’97) with web access to train information
- Task: find train information for 4 scenarios
- Corpus for current study: 2328 speaker turns, 52 dialogues
- Misrecognitions
  - Overall word accuracy: 61%
  - Overall concept accuracy (CA): 71%
  - Example: “I want to go to Boston from Philadelphia” (2 domain concepts) recognized as “I want to go to Boston” (one concept) = 50%
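The concept accuracy example on this slide can be worked through in a few lines. This is a sketch under stated assumptions: concepts are represented as slot/value pairs, the slot names `destination` and `origin` are hypothetical, and CA is taken as the fraction of reference concepts that were recognized correctly. The scoring actually used for the corpus may treat insertions or substitutions differently.

```python
def concept_accuracy(reference: dict, recognized: dict) -> float:
    """Fraction of reference concepts whose value was recognized correctly."""
    if not reference:
        raise ValueError("reference must contain at least one concept")
    correct = sum(
        1 for slot, value in reference.items() if recognized.get(slot) == value
    )
    return correct / len(reference)


if __name__ == "__main__":
    # "I want to go to Boston from Philadelphia": two domain concepts.
    reference = {"destination": "Boston", "origin": "Philadelphia"}
    # Recognized as "I want to go to Boston": one concept survives.
    recognized = {"destination": "Boston"}
    print(concept_accuracy(reference, recognized))  # 0.5
```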

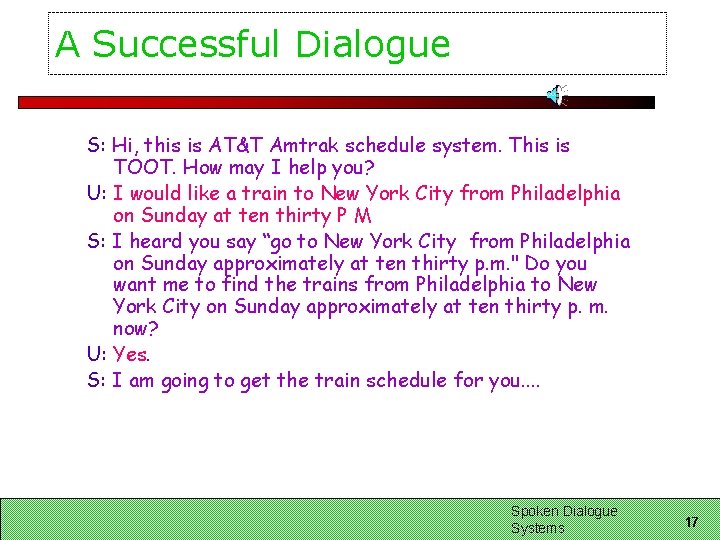

A Successful Dialogue
- S: Hi, this is AT&T Amtrak schedule system. This is TOOT. How may I help you?
- U: I would like a train to New York City from Philadelphia on Sunday at ten thirty P M
- S: I heard you say “go to New York City from Philadelphia on Sunday approximately at ten thirty p.m.” Do you want me to find the trains from Philadelphia to New York City on Sunday approximately at ten thirty p.m. now?
- U: Yes.
- S: I am going to get the train schedule for you.

Do Misrecognitions, Awares, and Corrections Differ from Other Turns?
- For each type of turn:
  - For each speaker and each prosodic feature, calculate mean values, e.g. for all correctly recognized speaker turns and for all incorrectly recognized turns
  - Perform paired t-tests on these speaker pairs of means (e.g., for each speaker, pairing mean values for correctly and incorrectly recognized turns)
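The per-speaker pairing described on this slide can be sketched as follows. The turn tuples and feature values are made-up illustration data, and the function names are hypothetical. The code computes only the paired t statistic and its degrees of freedom; looking up a p-value would need a t-distribution table or a statistics library.

```python
import math
from collections import defaultdict
from statistics import mean, stdev


def per_speaker_means(turns):
    """turns: iterable of (speaker, misrecognized, feature_value).

    Returns two aligned lists of per-speaker means (misrecognized, correct),
    keeping only speakers who have turns of both kinds.
    """
    buckets = defaultdict(lambda: {True: [], False: []})
    for speaker, misrecognized, value in turns:
        buckets[speaker][bool(misrecognized)].append(value)
    mis_means, ok_means = [], []
    for speaker in sorted(buckets):
        groups = buckets[speaker]
        if groups[True] and groups[False]:
            mis_means.append(mean(groups[True]))
            ok_means.append(mean(groups[False]))
    return mis_means, ok_means


def paired_t(xs, ys):
    """Paired t statistic and degrees of freedom for two aligned samples."""
    diffs = [x - y for x, y in zip(xs, ys)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two pairs")
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / (sd / math.sqrt(n)), n - 1


if __name__ == "__main__":
    # Illustrative f0-maximum values only, not corpus data.
    turns = [
        ("a", True, 240.0), ("a", False, 200.0), ("a", False, 210.0),
        ("b", True, 310.0), ("b", False, 280.0),
        ("c", True, 190.0), ("c", False, 185.0),
    ]
    mis, ok = per_speaker_means(turns)
    print(paired_t(mis, ok))
```

Pairing within speaker removes between-speaker differences in baseline pitch or loudness, which is why the slide tests pairs of means rather than pooled turns.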

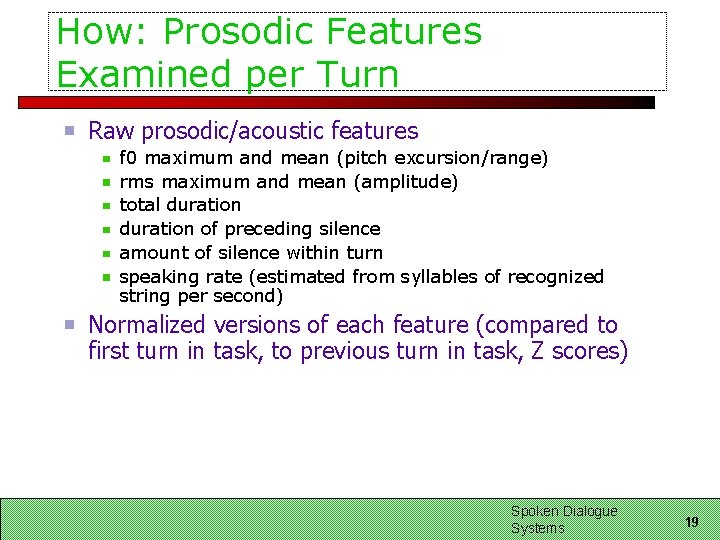

How: Prosodic Features Examined per Turn
- Raw prosodic/acoustic features
  - f0 maximum and mean (pitch excursion/range)
  - rms maximum and mean (amplitude)
  - total duration of preceding silence
  - amount of silence within turn
  - speaking rate (estimated from syllables of recognized string per second)
- Normalized versions of each feature (compared to first turn in task, to previous turn in task, z-scores)
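The three normalizations named on this slide might look like the sketch below. The slide does not say whether the comparison to the first or previous turn is a ratio or a difference, nor over which population the z-scores are computed. Here a ratio is assumed, and z-scores are taken over the list passed in (for example, one speaker's turns in one task) using the population standard deviation. All names are hypothetical.

```python
from statistics import mean, pstdev


def _ratio(value, base):
    """Ratio to a baseline value, or None when the baseline is zero."""
    return value / base if base != 0 else None


def normalize_feature(values):
    """values: raw values of one feature for consecutive turns in one task.

    Returns one dict per turn with the raw value and three normalized forms.
    """
    if not values:
        return []
    mu = mean(values)
    sigma = pstdev(values)
    rows = []
    for i, value in enumerate(values):
        rows.append({
            "raw": value,
            # Relative to the first turn in the task (assumed to be a ratio).
            "vs_first": _ratio(value, values[0]),
            # Relative to the previous turn; undefined for the first turn.
            "vs_prev": _ratio(value, values[i - 1]) if i > 0 else None,
            # z-score over the turns passed in.
            "z": (value - mu) / sigma if sigma > 0 else 0.0,
        })
    return rows


if __name__ == "__main__":
    for row in normalize_feature([100.0, 200.0, 150.0]):
        print(row)
```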

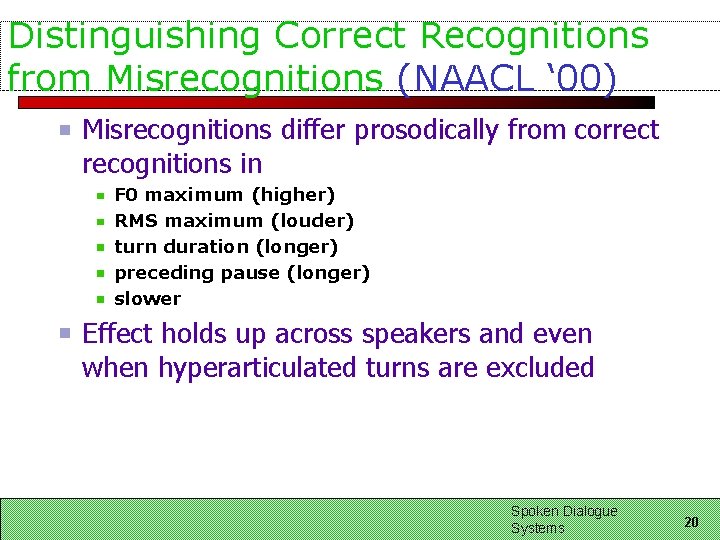

Distinguishing Correct Recognitions from Misrecognitions (NAACL ’00)
- Misrecognitions differ prosodically from correct recognitions in
  - F0 maximum (higher)
  - RMS maximum (louder)
  - turn duration (longer)
  - preceding pause (longer)
  - speaking rate (slower)
- Effect holds up across speakers and even when hyperarticulated turns are excluded

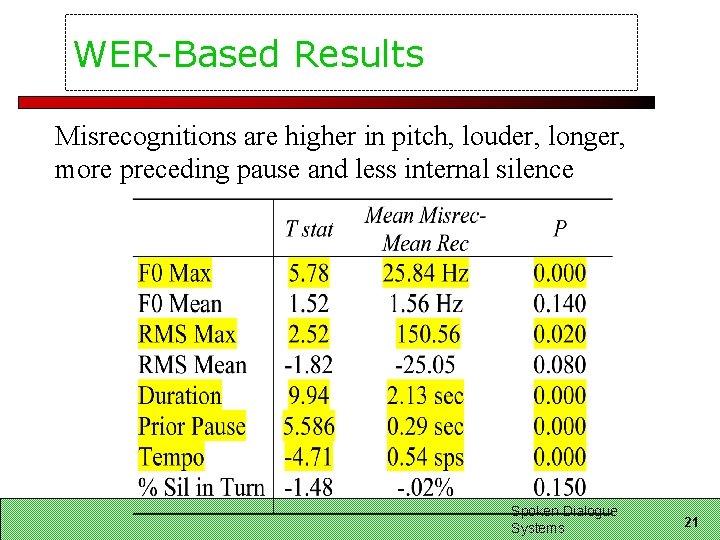

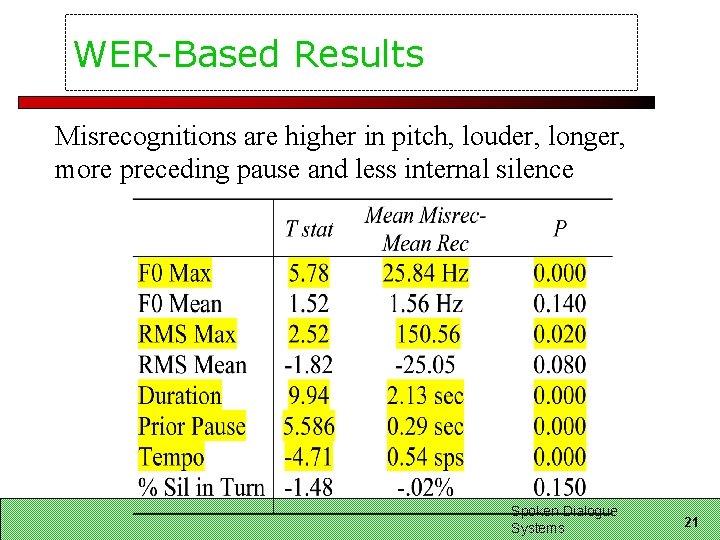

WER-Based Results
- Misrecognitions are higher in pitch, louder, and longer, with more preceding pause and less internal silence

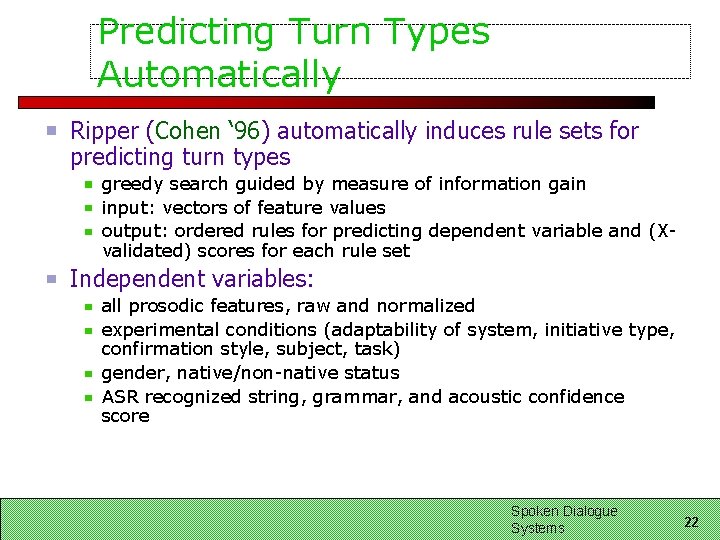

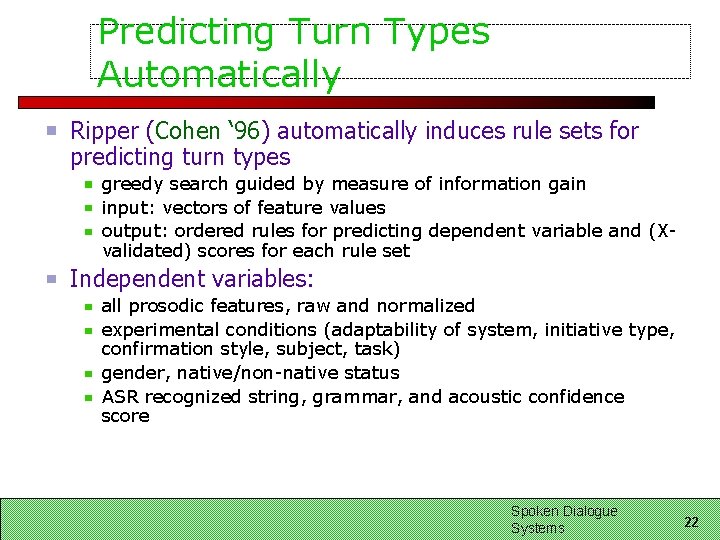

Predicting Turn Types Automatically
- Ripper (Cohen ’96) automatically induces rule sets for predicting turn types
  - greedy search guided by measure of information gain
  - input: vectors of feature values
  - output: ordered rules for predicting dependent variable and (cross-validated) scores for each rule set
- Independent variables:
  - all prosodic features, raw and normalized
  - experimental conditions (adaptability of system, initiative type, confirmation style, subject, task)
  - gender, native/non-native status
  - ASR recognized string, grammar, and acoustic confidence score
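To make “greedy search guided by measure of information gain” concrete, the sketch below scores candidate conditions of the form `feature <= v` with a FOIL-style gain and picks the best one. This is an assumption about the kind of gain measure involved, and it shows a single greedy step only. It is not an implementation of Ripper, which also prunes and optimizes its rule sets. The toy confidence scores are invented.

```python
import math


def foil_gain(p0, n0, p1, n1):
    """FOIL-style gain of refining a rule.

    p0/n0: target/non-target examples covered before adding the condition.
    p1/n1: target/non-target examples covered after adding it.
    """
    if p0 == 0 or p1 == 0:
        return 0.0
    before = math.log2(p0 / (p0 + n0))
    after = math.log2(p1 / (p1 + n1))
    return p1 * (after - before)


def best_threshold(examples, feature):
    """examples: list of (features_dict, is_target). Tries 'feature <= v'."""
    p0 = sum(1 for _, is_target in examples if is_target)
    n0 = len(examples) - p0
    best = None
    for v in sorted({features[feature] for features, _ in examples}):
        covered = [t for features, t in examples if features[feature] <= v]
        p1 = sum(1 for t in covered if t)
        n1 = len(covered) - p1
        gain = foil_gain(p0, n0, p1, n1)
        if best is None or gain > best[1]:
            best = (v, gain)
    return best


if __name__ == "__main__":
    # Toy data: target = misrecognized turn, feature = ASR confidence.
    data = [
        ({"conf": -5.0}, True), ({"conf": -4.0}, True), ({"conf": -3.0}, True),
        ({"conf": -2.0}, False), ({"conf": -1.0}, False), ({"conf": -0.5}, False),
    ]
    print(best_threshold(data, "conf"))
```

On the toy data the selected condition is `conf <= -3.0`, the tightest threshold that still covers all misrecognized turns and no correctly recognized ones.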

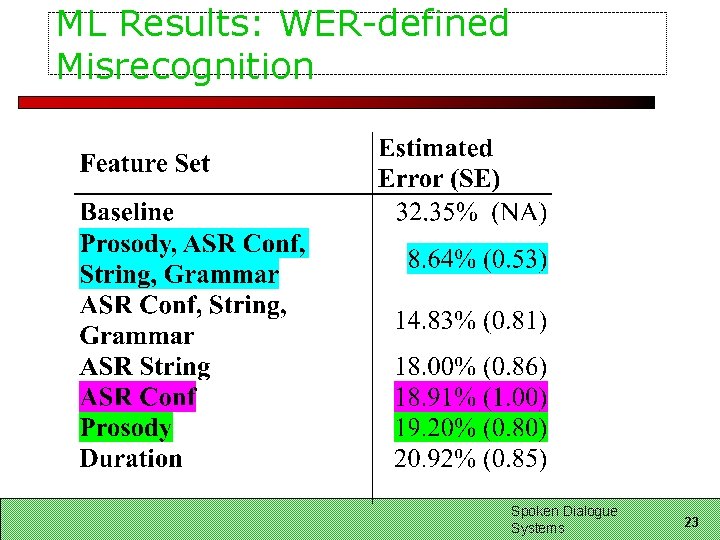

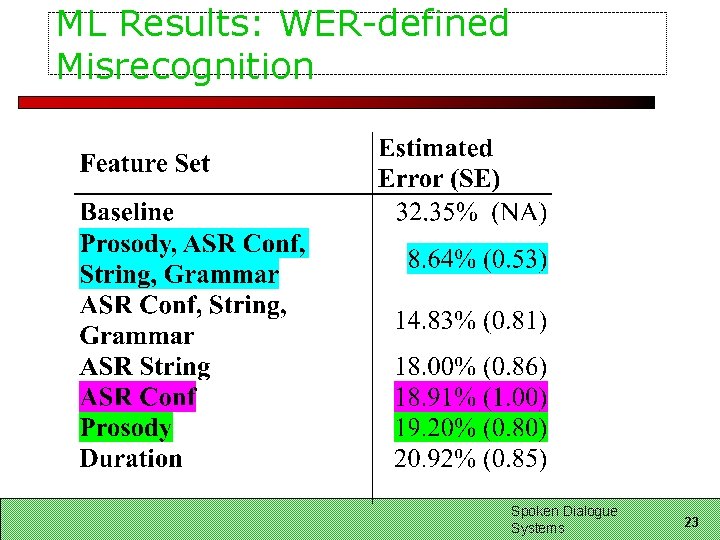

ML Results: WER-defined Misrecognition

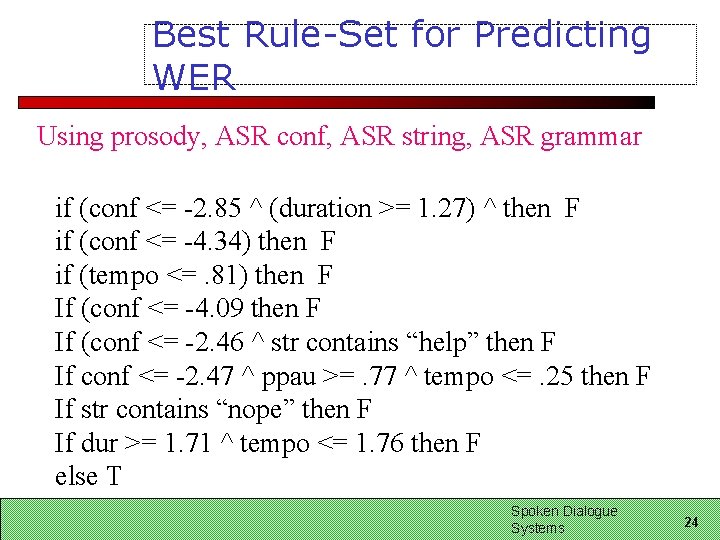

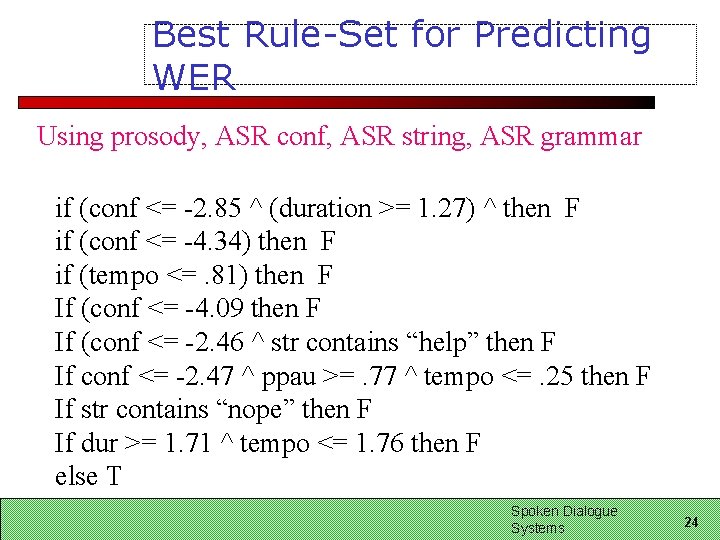

Best Rule-Set for Predicting WER

Using prosody, ASR conf, ASR string, ASR grammar
- if (conf <= -2.85) ^ (duration >= 1.27) ^ then F
- if (conf <= -4.34) then F
- if (tempo <= .81) then F
- if (conf <= -4.09) then F
- if (conf <= -2.46) ^ (str contains “help”) then F
- if (conf <= -2.47) ^ (ppau >= .77) ^ (tempo <= .25) then F
- if (str contains “nope”) then F
- if (dur >= 1.71) ^ (tempo <= 1.76) then F
- else T
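The ordered rules on this slide can be applied as a simple first-match classifier. The sketch below copies the thresholds as printed and makes several assumptions. `duration` and `dur` are treated as the same feature. “str contains” is read as a whole-word match on the recognized string. The first rule appears to have lost a final conjunct in the source, so only its two visible conditions are used. The labels are kept as T and F, and reading F as “misrecognized” is an interpretation. The function name is hypothetical.

```python
def predict_turn(conf, duration, tempo, ppau, string):
    """Apply the slide's rules in order; any matching rule yields "F"."""
    words = string.lower().split()
    rules = [
        # Trailing conjunct of this rule is missing in the source.
        conf <= -2.85 and duration >= 1.27,
        conf <= -4.34,
        tempo <= 0.81,
        conf <= -4.09,
        conf <= -2.46 and "help" in words,
        conf <= -2.47 and ppau >= 0.77 and tempo <= 0.25,
        "nope" in words,
        duration >= 1.71 and tempo <= 1.76,
    ]
    # Every rule predicts "F", so first-match evaluation reduces to any().
    return "F" if any(rules) else "T"


if __name__ == "__main__":
    print(predict_turn(conf=-1.0, duration=1.0, tempo=3.0, ppau=0.1, string="yes"))
    print(predict_turn(conf=-5.0, duration=1.0, tempo=3.0, ppau=0.1, string="yes"))
```

Taken literally, some rules overlap (a turn with `conf <= -4.34` also satisfies `conf <= -4.09`); the sketch keeps them all so that it mirrors the slide.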


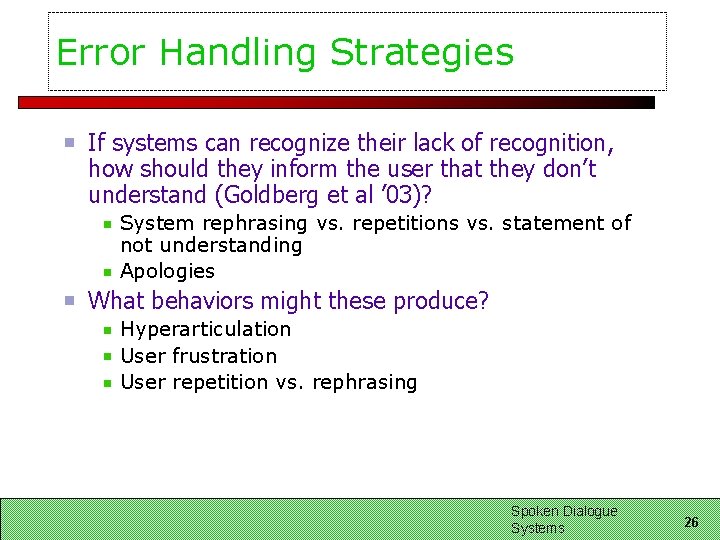

Error Handling Strategies
- If systems can recognize their lack of recognition, how should they inform the user that they don’t understand (Goldberg et al. ’03)?
  - System rephrasing vs. repetitions vs. statement of not understanding
  - Apologies
- What behaviors might these produce?
  - Hyperarticulation
  - User frustration
  - User repetition vs. rephrasing

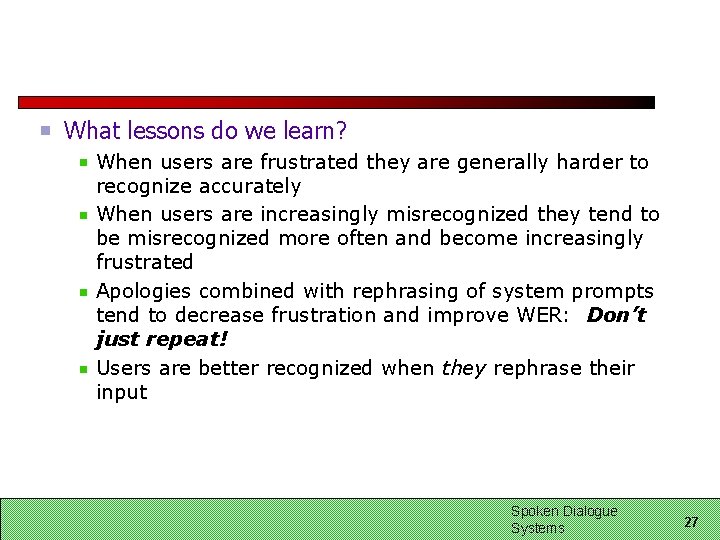

What lessons do we learn?
- When users are frustrated they are generally harder to recognize accurately
- When users are increasingly misrecognized they tend to be misrecognized more often and become increasingly frustrated
- Apologies combined with rephrasing of system prompts tend to decrease frustration and improve WER: don’t just repeat!
- Users are better recognized when they rephrase their input

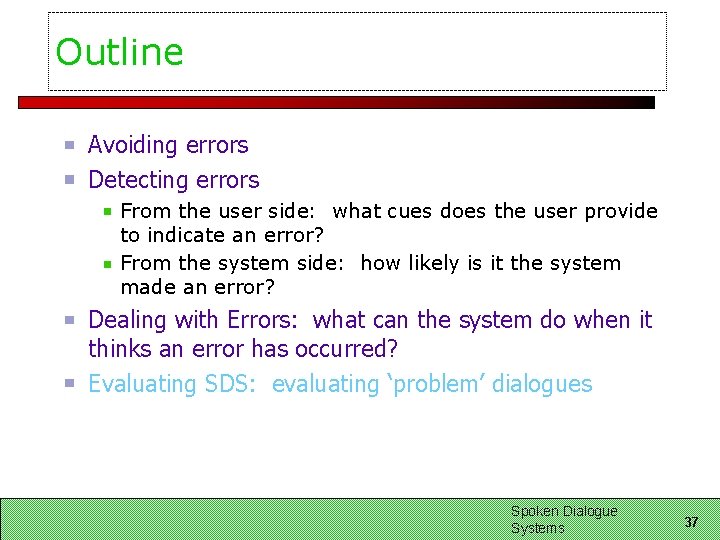


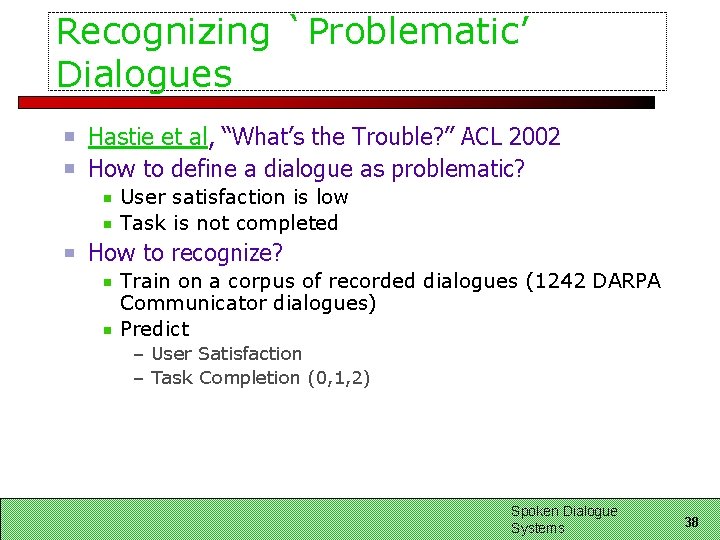

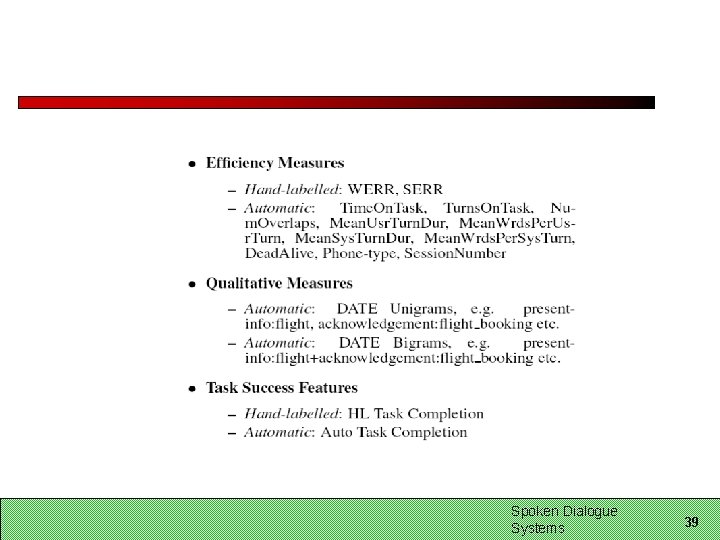

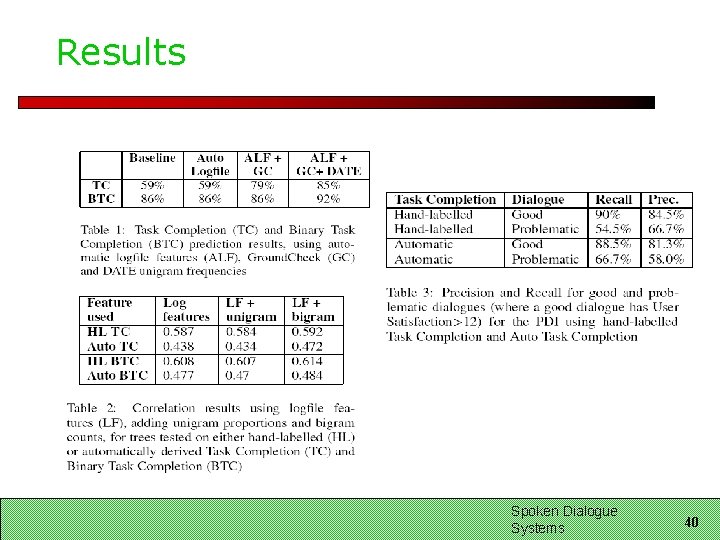

Recognizing ‘Problematic’ Dialogues
- Hastie et al., “What’s the Trouble?”, ACL 2002
- How to define a dialogue as problematic?
  - User satisfaction is low
  - Task is not completed
- How to recognize?
  - Train on a corpus of recorded dialogues (1242 DARPA Communicator dialogues)
  - Predict
    - User Satisfaction
    - Task Completion (0, 1, 2)

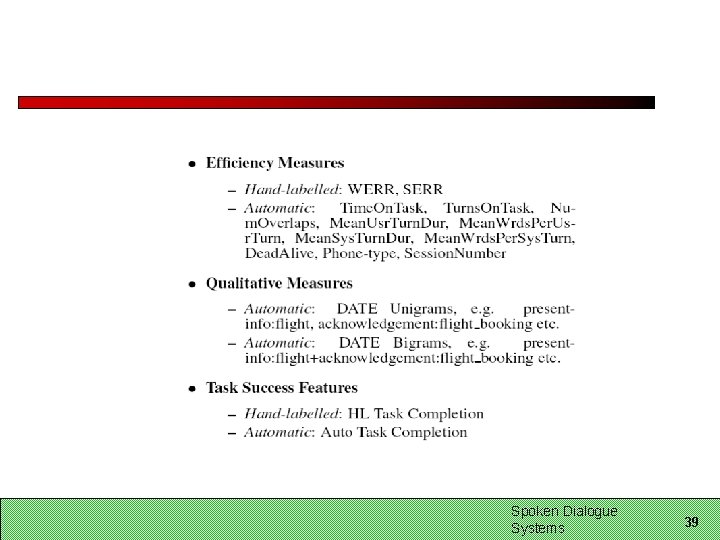


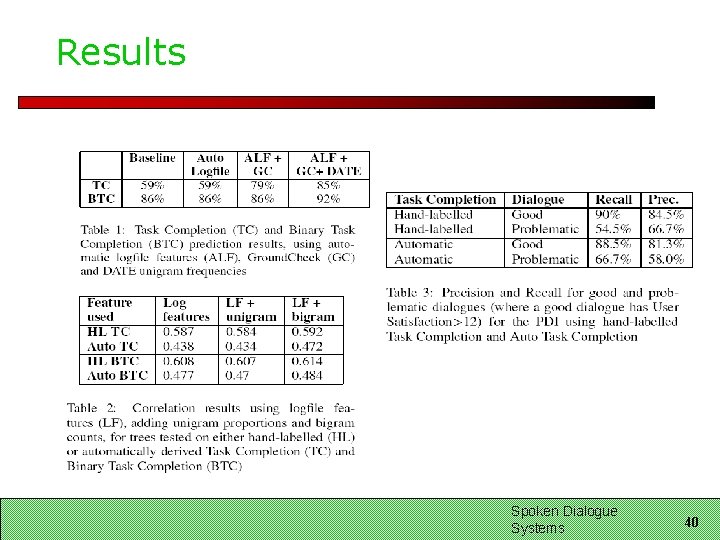

Results