Error detection in spoken dialogue systems

GSLT Dialogue Systems, 5 p
Gabriel Skantze
TT Centrum för talteknologi

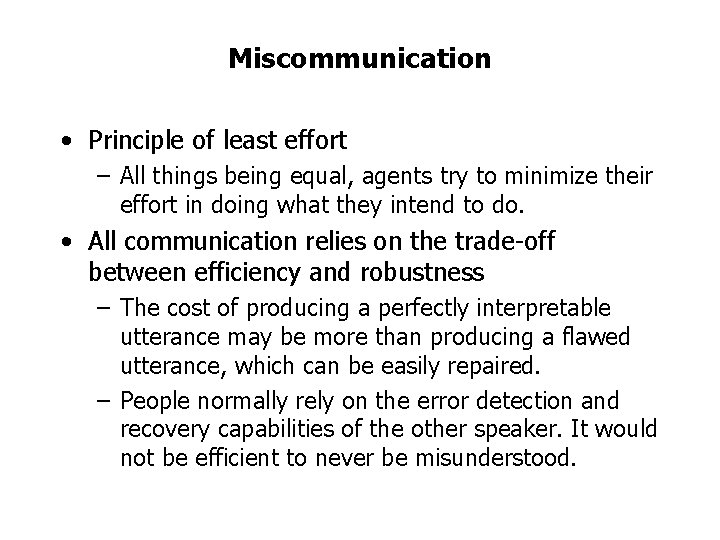

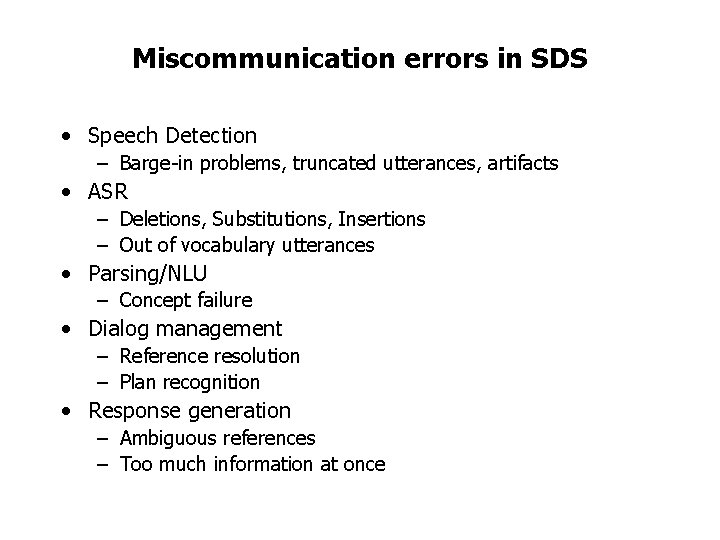

Grounding in conversation
• Communication: “making something common”
• Common ground: the mutual understanding of the participants in a joint action
• Grounding: establish something as part of common ground well enough for current purposes
• The grounding acts will depend on
  – Confidence of understanding/prior groundedness
  – The grounding criterion (current purposes)
    • Cost of task failure
  – Cost of grounding
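
One way to make the trade-off above concrete is a decision-theoretic reading, which is an assumption of this sketch and not something the slide states: ground an item when the expected cost of an undetected misunderstanding, (1 − confidence) × cost of task failure, exceeds the cost of grounding. The function name and the cost units are hypothetical.

```python
def should_ground(confidence: float, cost_of_failure: float,
                  cost_of_grounding: float) -> bool:
    """Hypothetical decision rule: ground when the expected cost of an
    undetected misunderstanding exceeds the cost of grounding it.

    confidence: estimated probability that the understanding is correct (0..1).
    cost_of_failure: cost if a misunderstanding goes unnoticed (task failure).
    cost_of_grounding: cost of an extra grounding act, e.g. a confirmation turn.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    expected_failure_cost = (1.0 - confidence) * cost_of_failure
    return expected_failure_cost > cost_of_grounding


# Same confidence, different stakes: ground the costly item, not the cheap one.
print(should_ground(0.9, cost_of_failure=100.0, cost_of_grounding=5.0))  # True
print(should_ground(0.9, cost_of_failure=10.0, cost_of_grounding=5.0))   # False
```

With this reading, a higher grounding criterion simply means a higher cost of task failure, so the same confidence score leads to more grounding.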

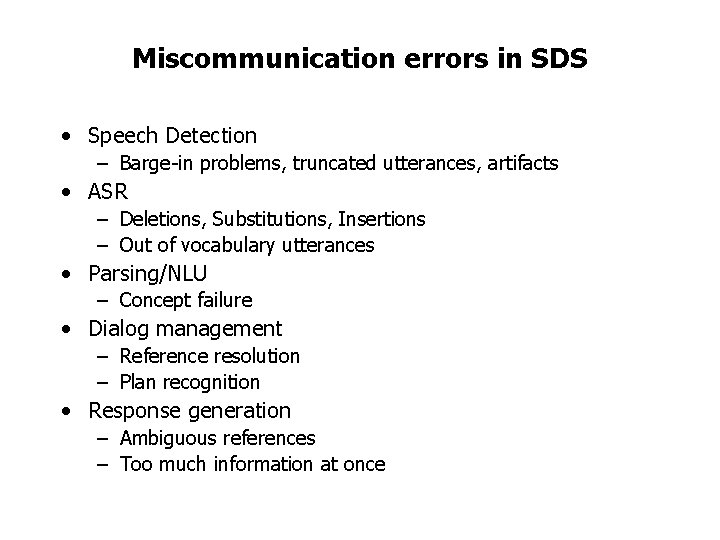

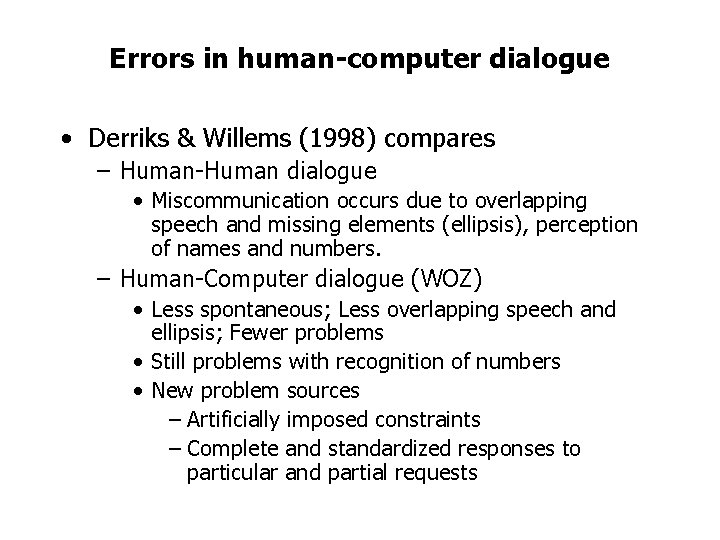

Miscommunication
• Principle of least effort
  – All things being equal, agents try to minimize their effort in doing what they intend to do.
• All communication relies on the trade-off between efficiency and robustness
  – The cost of producing a perfectly interpretable utterance may be higher than the cost of producing a flawed utterance that can easily be repaired.
  – People normally rely on the error detection and recovery capabilities of the other speaker. It would not be efficient never to be misunderstood.

Miscommunication errors in SDS
• Speech detection
  – Barge-in problems, truncated utterances, artifacts
• ASR
  – Deletions, substitutions, insertions
  – Out-of-vocabulary utterances
• Parsing/NLU
  – Concept failure
• Dialogue management
  – Reference resolution
  – Plan recognition
• Response generation
  – Ambiguous references
  – Too much information at once
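
The three ASR error types above are usually counted by aligning the recognized words with a reference transcription using minimum edit distance. Their sum divided by the number of reference words is the word error rate (WER) that also appears in the Litman et al. results below. The sketch uses the standard Levenshtein alignment; the function name is illustrative.

```python
def word_errors(reference: str, hypothesis: str) -> dict:
    """Count substitutions (S), deletions (D) and insertions (I) with a
    minimum-edit-distance alignment and return WER = (S + D + I) / N,
    where N is the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    n, m = len(ref), len(hyp)
    # table[i][j] = (errors, S, D, I) for aligning ref[:i] with hyp[:j]
    table = [[(0, 0, 0, 0)] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        table[i][0] = (i, 0, i, 0)  # every reference word deleted
    for j in range(1, m + 1):
        table[0][j] = (j, 0, 0, j)  # every hypothesis word inserted
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            e, s, d, ins = table[i - 1][j - 1]
            if ref[i - 1] == hyp[j - 1]:
                diag = (e, s, d, ins)                 # match
            else:
                diag = (e + 1, s + 1, d, ins)         # substitution
            e, s, d, ins = table[i - 1][j]
            up = (e + 1, s, d + 1, ins)               # deletion
            e, s, d, ins = table[i][j - 1]
            left = (e + 1, s, d, ins + 1)             # insertion
            table[i][j] = min(diag, up, left)         # fewest errors first
    errors, s, d, ins = table[n][m]
    return {"S": s, "D": d, "I": ins, "WER": errors / n}


print(word_errors("i want to travel to stocksund",
                  "i want to travel to stockholm"))
# {'S': 1, 'D': 0, 'I': 0, 'WER': 0.16666666666666666}
```

Out-of-vocabulary words typically surface in this count as substitutions, since the recognizer can only output words it knows.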

Errors in human-computer dialogue
• Derriks & Willems (1998) compare
  – Human-human dialogue
    • Miscommunication occurs due to overlapping speech, missing elements (ellipsis), and the perception of names and numbers.
  – Human-computer dialogue (Wizard-of-Oz, WOZ)
    • Less spontaneous; less overlapping speech and ellipsis; fewer problems
    • Still problems with recognition of numbers
    • New problem sources
      – Artificially imposed constraints
      – Complete and standardized responses to particular and partial requests

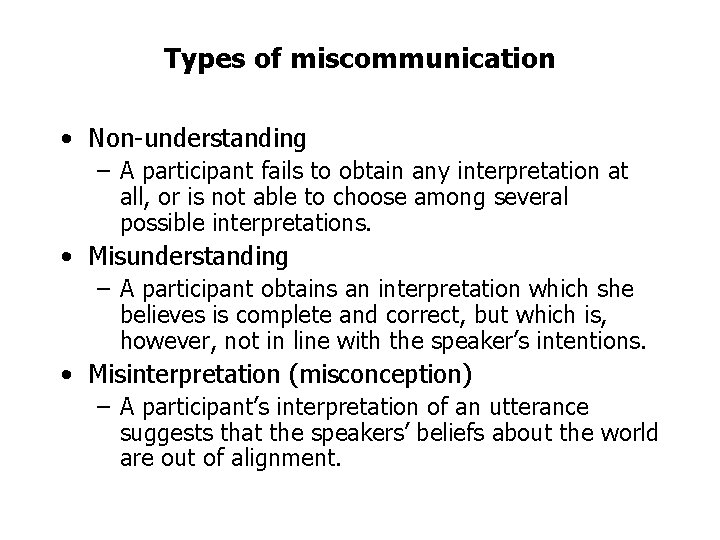

Types of miscommunication
• Non-understanding
  – A participant fails to obtain any interpretation at all, or is not able to choose among several possible interpretations.
• Misunderstanding
  – A participant obtains an interpretation that she believes is complete and correct, but which is not in line with the speaker’s intentions.
• Misinterpretation (misconception)
  – A participant’s interpretation of an utterance suggests that the speakers’ beliefs about the world are out of alignment.
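
The first two definitions can be applied mechanically when labeling logged turns, provided the speaker's intended meaning is known, for example from a hand annotation. The sketch below is a hypothetical annotation helper; misinterpretation is left out, because it concerns the participants' beliefs about the world rather than the interpretation of a single turn.

```python
from enum import Enum
from typing import Optional, Sequence


class Outcome(Enum):
    UNDERSTOOD = "understood"
    NON_UNDERSTANDING = "non-understanding"
    MISUNDERSTANDING = "misunderstanding"


def label_turn(interpretations: Sequence[str], chosen: Optional[str],
               intended: str) -> Outcome:
    """Label one logged user turn (hypothetical annotation helper).

    interpretations: candidate interpretations the system produced.
    chosen: the interpretation the system committed to, or None if it could
            not choose among the candidates.
    intended: the speaker's actual meaning, e.g. from a hand annotation.
    """
    if not interpretations or chosen is None:
        return Outcome.NON_UNDERSTANDING  # no interpretation, or no choice made
    if chosen != intended:
        return Outcome.MISUNDERSTANDING   # committed, but to the wrong meaning
    return Outcome.UNDERSTOOD


print(label_turn(["to:Merano"], "to:Merano", intended="to:Milano"))
# Outcome.MISUNDERSTANDING
```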

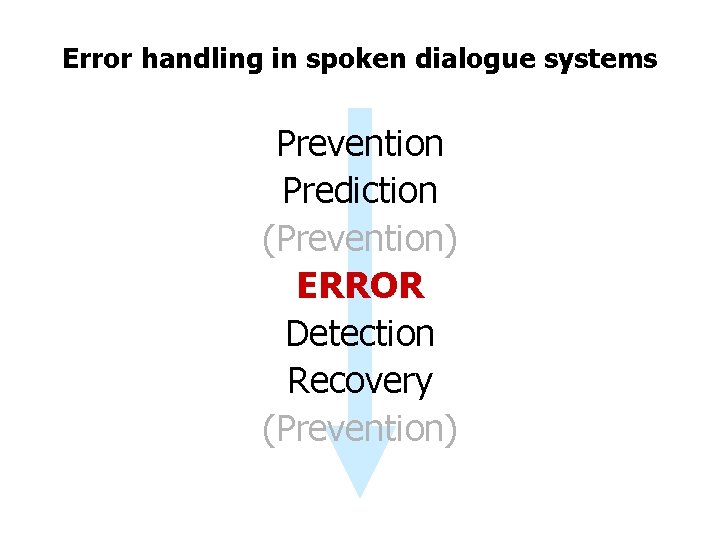

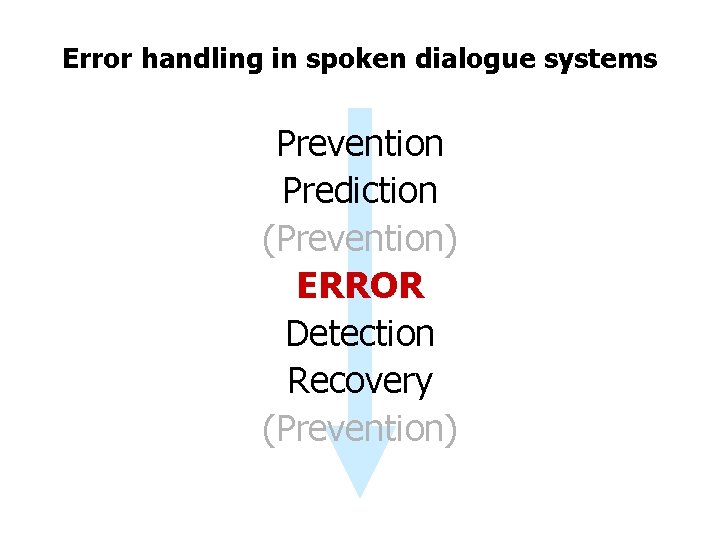

Error handling in spoken dialogue systems
[Diagram: Prevention; Prediction (Prevention); ERROR; Detection; Recovery (Prevention)]

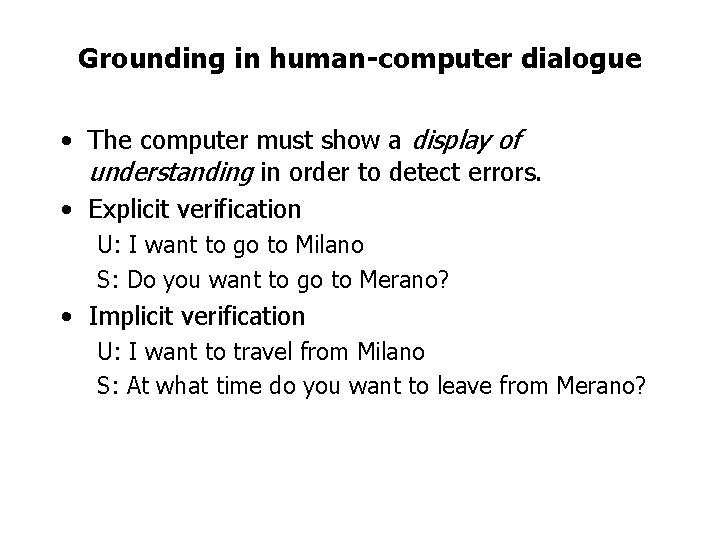

Grounding in human-computer dialogue
• The computer must display its understanding in order to detect errors.
• Explicit verification
  U: I want to go to Milano
  S: Do you want to go to Merano?
• Implicit verification
  U: I want to travel from Milano
  S: At what time do you want to leave from Merano?
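
The two verification styles differ only in how the recognized value is displayed: explicitly, as a yes/no question, or implicitly, embedded in the next question. A minimal template-based sketch follows; the slot names and templates are hypothetical.

```python
# Hypothetical prompt templates keyed by slot name.
EXPLICIT = {
    "destination": "Do you want to go to {value}?",
    "origin": "Do you want to leave from {value}?",
}
# Implicit verification embeds the recognized value in the next question.
IMPLICIT = {
    "origin": "At what time do you want to leave from {value}?",
    "destination": "At what time do you want to arrive in {value}?",
}


def verification_prompt(slot: str, value: str, explicit: bool) -> str:
    """Render an explicit or implicit verification of a recognized slot value."""
    templates = EXPLICIT if explicit else IMPLICIT
    return templates[slot].format(value=value)


# The recognizer heard "Merano" although the user said "Milano":
print(verification_prompt("destination", "Merano", explicit=True))
# Do you want to go to Merano?
print(verification_prompt("origin", "Merano", explicit=False))
# At what time do you want to leave from Merano?
```

Explicit verification costs an extra turn but yields a clear yes/no answer. Implicit verification saves the turn but relies on the user to object, which is why its errors tend to be detected late.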

![Firstturn repair U I want to travel to Stockh Detection disfluency Stocksund First-turn repair U: I want to travel to Stockh. . [Detection: disfluency] Stocksund](https://slidetodoc.com/presentation_image/c7b885fc93da6ccd4ee9ee13fc9e48c8/image-9.jpg)

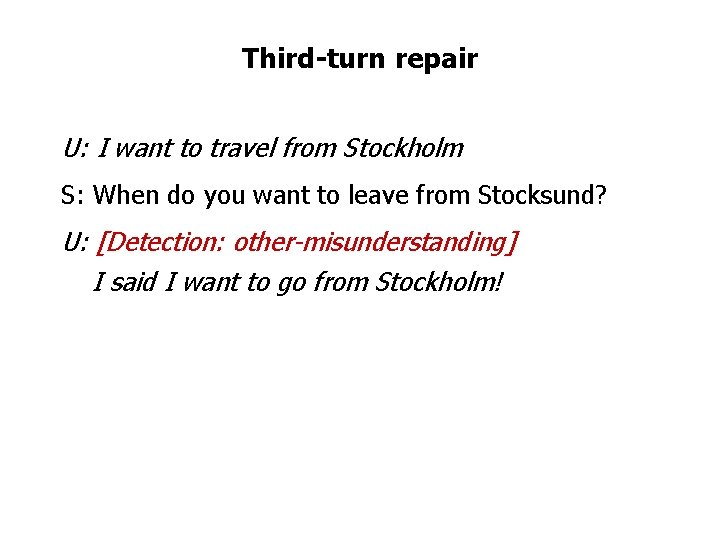

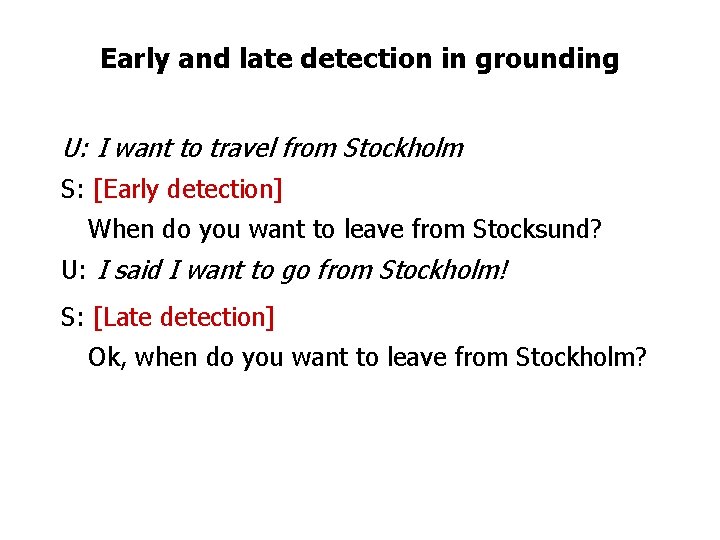

First-turn repair
U: I want to travel to Stockh... [Detection: disfluency] Stocksund
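
A very simple way to act on a detected self-repair is to drop the abandoned fragment and keep what follows. The sketch assumes, hypothetically, that the recognizer marks a cut-off word with a trailing "-" (e.g. "Stockh-"); real recognizers may not provide such a marker.

```python
from typing import List


def drop_fragments(tokens: List[str]) -> List[str]:
    """Drop word fragments that the speaker abandoned and repaired.

    Assumes (hypothetically) that the recognizer marks a cut-off word with a
    trailing '-', e.g. 'Stockh-'. A fragment followed by more speech is taken
    to be replaced by what follows; a fragment at the very end is kept.
    """
    cleaned: List[str] = []
    for i, tok in enumerate(tokens):
        is_fragment = len(tok) > 1 and tok.endswith("-")
        if is_fragment and i + 1 < len(tokens):
            continue  # the following word is the speaker's repair
        cleaned.append(tok)
    return cleaned


print(drop_fragments("I want to travel to Stockh- Stocksund".split()))
# ['I', 'want', 'to', 'travel', 'to', 'Stocksund']
```

The rule deliberately does not require the repair to start with the fragment: in the example, "Stockh-" (heading towards Stockholm) is replaced by "Stocksund", which shares only the first five letters.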

![Secondturn repair U I want to travel to Stocksund S Detection nonunderstanding Sorry I Second-turn repair U: I want to travel to Stocksund. S: [Detection: non-understanding] Sorry, I](https://slidetodoc.com/presentation_image/c7b885fc93da6ccd4ee9ee13fc9e48c8/image-10.jpg)

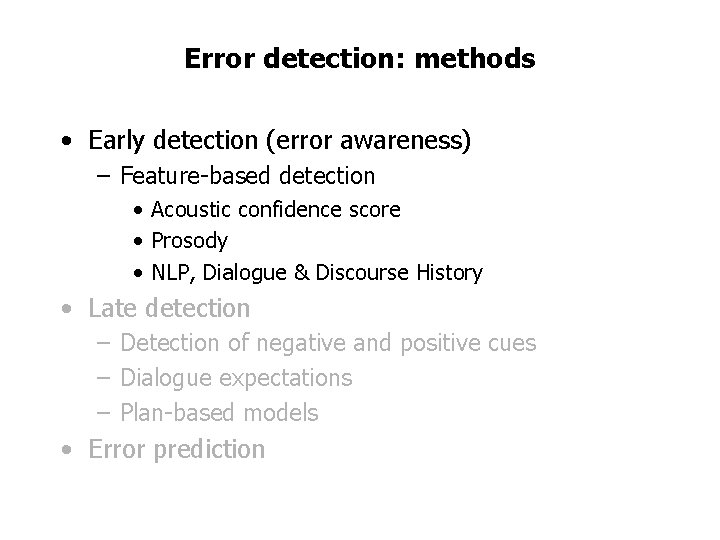

Second-turn repair
U: I want to travel to Stocksund.
S: [Detection: non-understanding] Sorry, I didn’t understand. Please repeat.
U: I want to travel to Stocksund.
S: [Detection: self-misunderstanding] Did you say Stockholm?

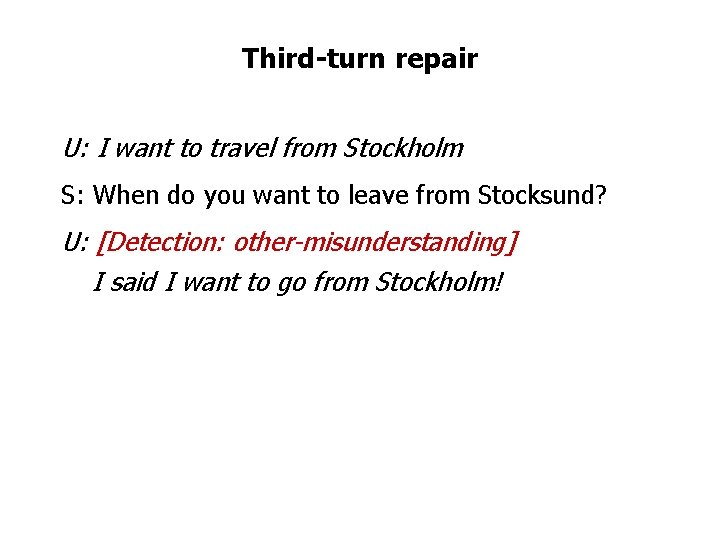

Third-turn repair
U: I want to travel from Stockholm
S: When do you want to leave from Stocksund?
U: [Detection: other-misunderstanding] I said I want to go from Stockholm!

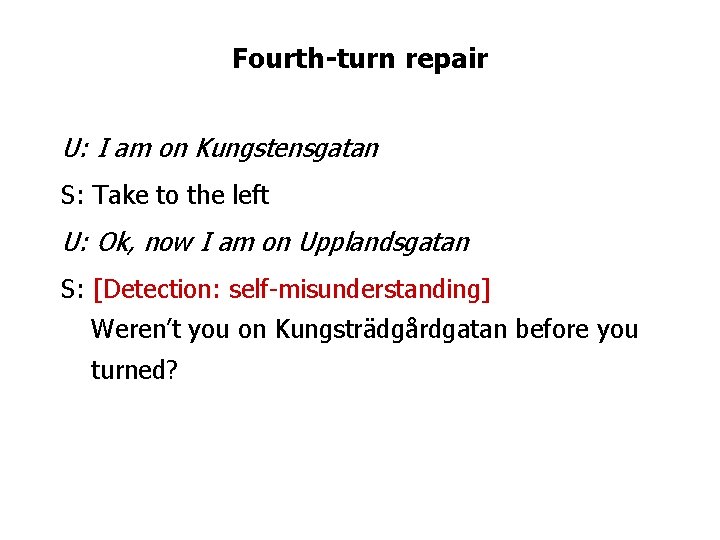

Fourth-turn repair
U: I am on Kungstensgatan
S: Turn left
U: Ok, now I am on Upplandsgatan
S: [Detection: self-misunderstanding] Weren’t you on Kungsträdgårdgatan before you turned?

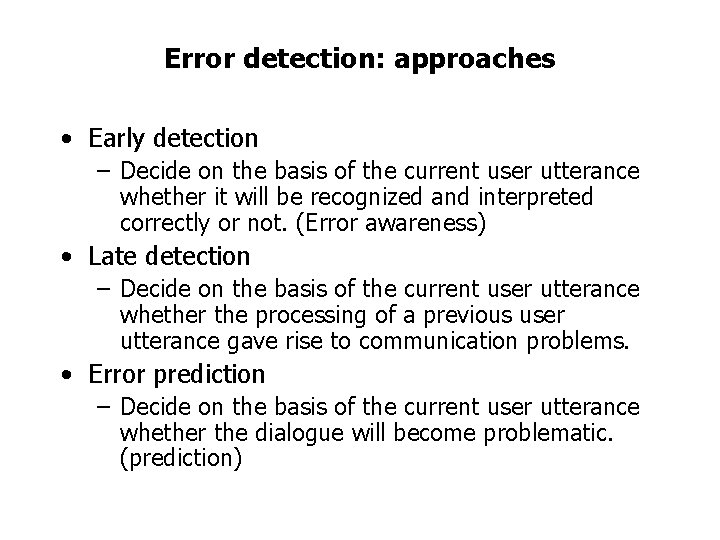

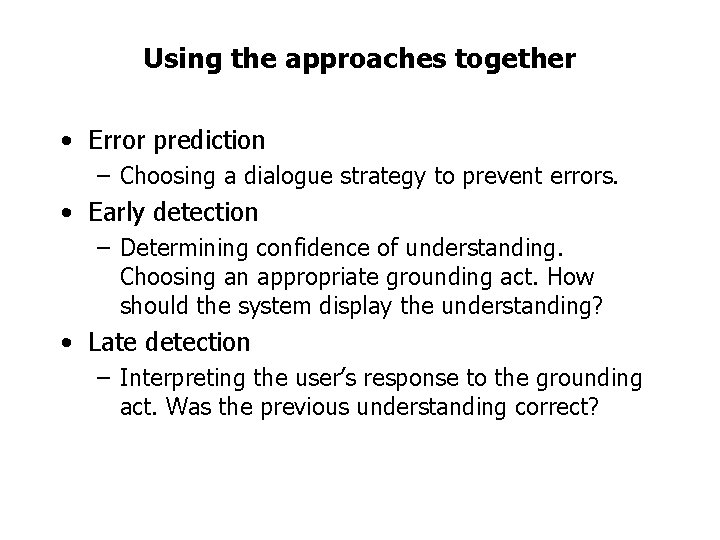

Error detection: approaches
• Early detection
  – Decide on the basis of the current user utterance whether it will be recognized and interpreted correctly or not (error awareness).
• Late detection
  – Decide on the basis of the current user utterance whether the processing of a previous user utterance gave rise to communication problems.
• Error prediction
  – Decide on the basis of the current user utterance whether the dialogue will become problematic (prediction).

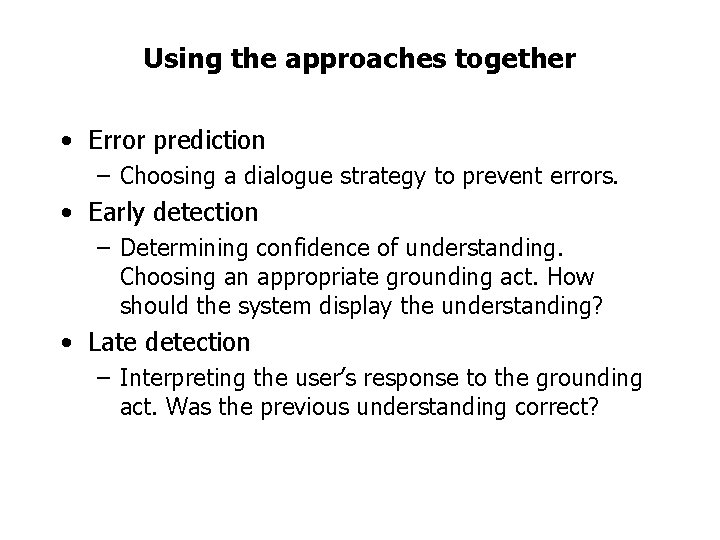

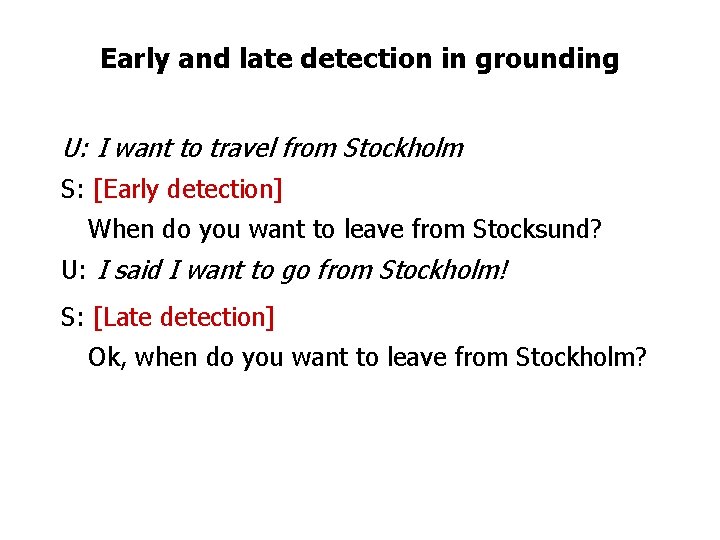

Using the approaches together
• Error prediction
  – Choosing a dialogue strategy to prevent errors.
• Early detection
  – Determining confidence of understanding. Choosing an appropriate grounding act. How should the system display the understanding?
• Late detection
  – Interpreting the user’s response to the grounding act. Was the previous understanding correct?
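
For early detection, a common way to choose a grounding act is to compare a confidence score with two thresholds: reject (non-understanding), verify explicitly, or verify implicitly. Both threshold values below are hypothetical and would have to be tuned on data.

```python
def choose_grounding_act(confidence: float, reject_below: float = 0.3,
                         accept_above: float = 0.8) -> str:
    """Map an early-detection confidence score to a grounding act.

    Both thresholds are hypothetical; in practice they are tuned on data and
    can be raised when the cost of task failure is high.
    """
    if reject_below > accept_above:
        raise ValueError("reject_below must not exceed accept_above")
    if confidence < reject_below:
        return "reject"    # non-understanding: "Sorry, I didn't understand."
    if confidence < accept_above:
        return "explicit"  # "Did you say Stockholm?"
    return "implicit"      # "When do you want to leave from Stockholm?"


for c in (0.1, 0.5, 0.95):
    print(c, choose_grounding_act(c))
# 0.1 reject
# 0.5 explicit
# 0.95 implicit
```

Even the highest-confidence branch still displays the understanding, so that late detection remains possible in the next user turn.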

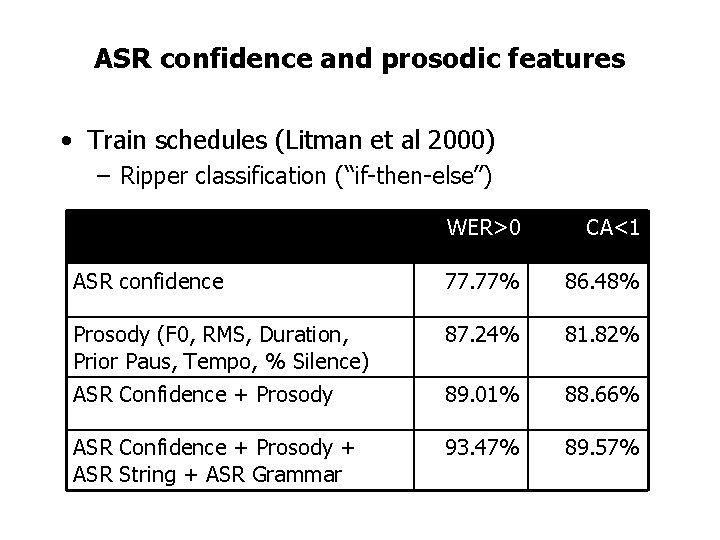

Early and late detection in grounding
U: I want to travel from Stockholm
S: [Early detection] When do you want to leave from Stocksund?
U: I said I want to go from Stockholm!
S: [Late detection] Ok, when do you want to leave from Stockholm?
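
The late-detection step in this exchange can be sketched as a comparison between the slot values the system just displayed and the values parsed from the user's reply: a different value for a displayed slot is treated as a correction. The parser output format is a hypothetical assumption.

```python
from typing import Dict, Tuple


def late_detection(displayed: Dict[str, str],
                   user_slots: Dict[str, str]) -> Tuple[Dict[str, str], bool]:
    """Interpret the user's reply to an implicit verification.

    displayed: slot values shown in the system's last grounding act.
    user_slots: slot values parsed from the user's reply (hypothetical parser output).
    Returns the updated slots and True if the reply gives a *different*
    value for a displayed slot, i.e. a late-detected misunderstanding.
    """
    updated = dict(displayed)
    misunderstood = False
    for slot, value in user_slots.items():
        if slot in displayed and displayed[slot] != value:
            misunderstood = True
        updated[slot] = value
    return updated, misunderstood


# S: "When do you want to leave from Stocksund?"  U: "I said ... from Stockholm!"
print(late_detection({"origin": "Stocksund"}, {"origin": "Stockholm"}))
# ({'origin': 'Stockholm'}, True)
```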

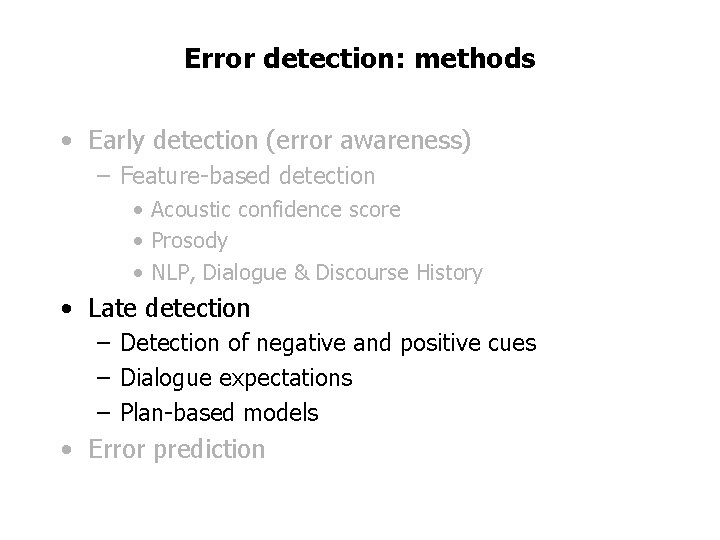

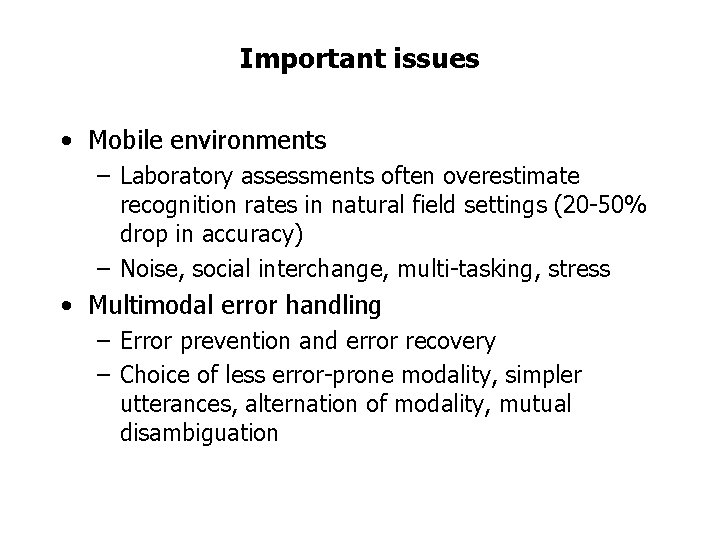

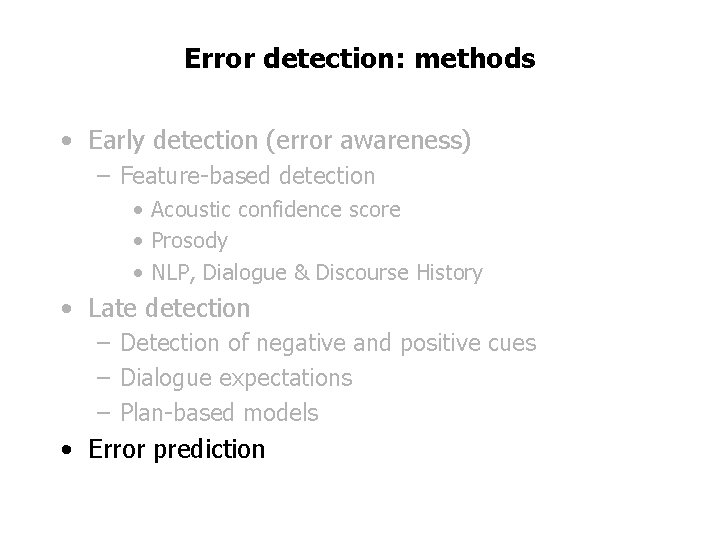

Error detection: methods
• Early detection (error awareness)
  – Feature-based detection
    • Acoustic confidence score
    • Prosody
    • NLP, dialogue & discourse history
• Late detection
  – Detection of negative and positive cues
  – Dialogue expectations
  – Plan-based models
• Error prediction

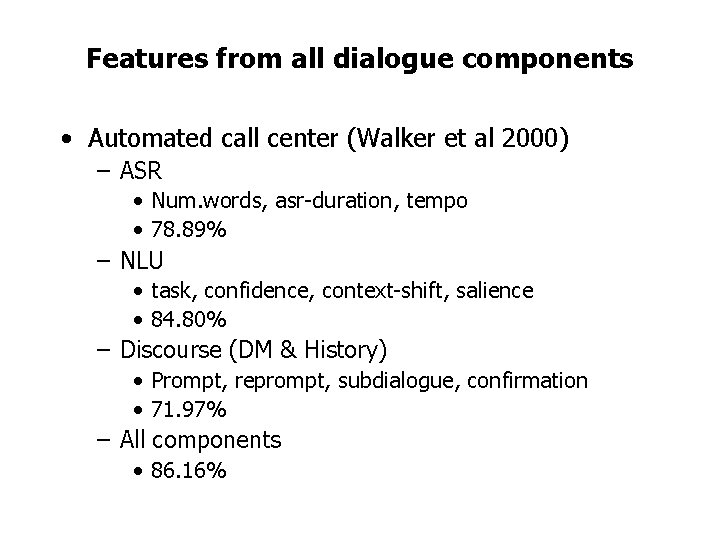

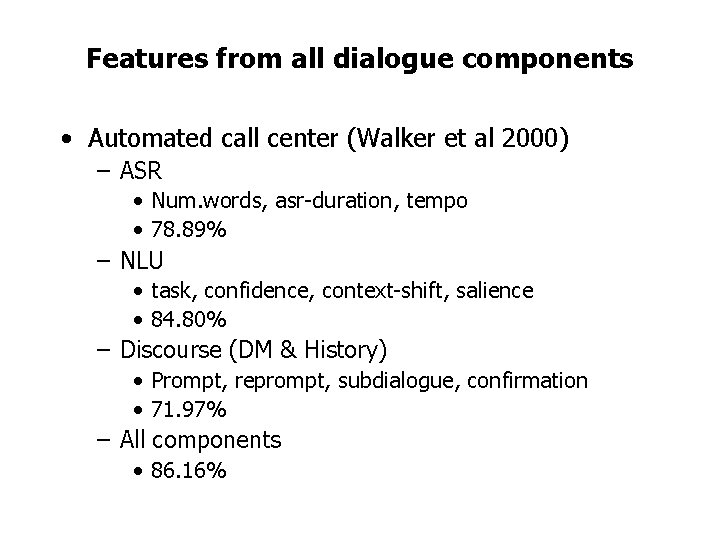

ASR confidence and prosodic features
• Train schedules (Litman et al. 2000)
  – Ripper classification (“if-then-else” rules)

| Features | WER > 0 | CA < 1 |
|---|---|---|
| ASR confidence | 77.77% | 86.48% |
| Prosody (F0, RMS, duration, prior pause, tempo, % silence) | 87.24% | 81.82% |
| ASR confidence + prosody | 89.01% | 88.66% |
| ASR confidence + prosody + ASR string + ASR grammar | 93.47% | 89.57% |
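
Ripper produces an ordered list of if-then rules with a default class: the first rule whose condition holds decides the label. The sketch shows only how such a rule list is applied to one utterance's features. The feature names echo the table, but the rules and thresholds are invented for illustration and are not the rules Litman et al. learned.

```python
from typing import Callable, Dict, List, Tuple

Features = Dict[str, float]
Rule = Tuple[Callable[[Features], bool], str]

# Invented rules and thresholds for illustration only; a learner such as
# Ripper would induce them from labeled utterances.
RULES: List[Rule] = [
    (lambda f: f["asr_confidence"] < 0.4, "misrecognized"),
    (lambda f: f["duration"] > 4.0 and f["f0_max"] > 250.0, "misrecognized"),
]
DEFAULT = "correct"


def classify(features: Features, rules: List[Rule] = RULES,
             default: str = DEFAULT) -> str:
    """Apply an ordered if-then-else rule list: the first matching rule wins."""
    for condition, label in rules:
        if condition(features):
            return label
    return default


print(classify({"asr_confidence": 0.9, "duration": 5.2, "f0_max": 280.0}))
# misrecognized
```

Rule lists of this kind are easy to inspect, which is one reason they are attractive for analyzing which features signal recognition errors.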

Features from all dialogue components
• Automated call center (Walker et al. 2000)
  – ASR: number of words, asr-duration, tempo: 78.89%
  – NLU: task, confidence, context-shift, salience: 84.80%
  – Discourse (DM & history): prompt, reprompt, subdialogue, confirmation: 71.97%
  – All components: 86.16%

Error detection: methods • Early detection (error awareness) – Feature-based detection • Acoustic confidence score • Prosody • NLP, Dialogue & Discourse History • Late detection – Detection of negative and positive cues – Dialogue expectations – Plan-based models • Error prediction

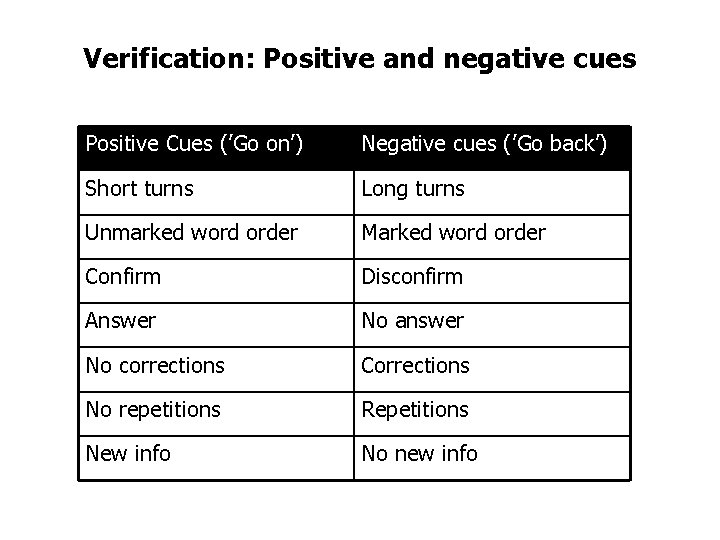

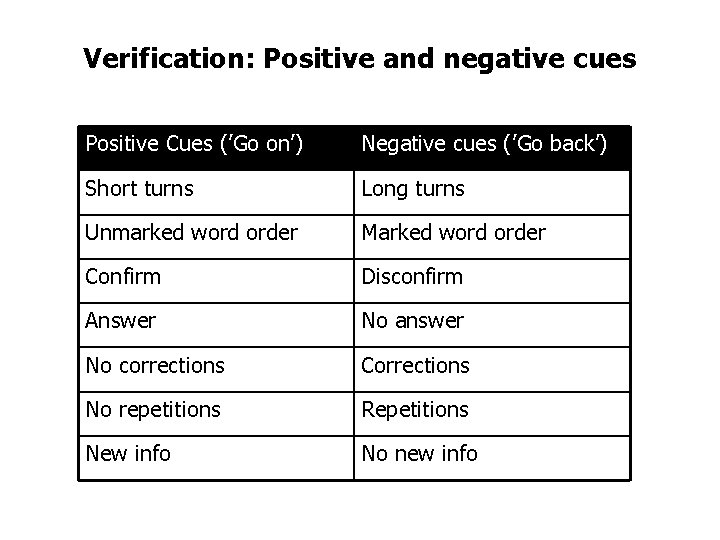

Verification: positive and negative cues

| Positive cues (‘Go on’) | Negative cues (‘Go back’) |
|---|---|
| Short turns | Long turns |
| Unmarked word order | Marked word order |
| Confirm | Disconfirm |
| Answer | No answer |
| No corrections | Corrections |
| No repetitions | Repetitions |
| New info | No new info |
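
A minimal way to use these cues for late detection is to count the negative ones in the user's reply and flag a problem above a threshold. The observation fields, the long-turn limit and the threshold are hypothetical; marked word order is left out because detecting it needs syntactic analysis.

```python
from dataclasses import dataclass


@dataclass
class Reply:
    """Observations about a user reply to a verification (all hypothetical
    features that other components would have to supply)."""
    num_words: int
    disconfirms: bool       # e.g. "no", "not Stocksund"
    answers_question: bool
    corrects_slot: bool     # gives a new value for a displayed slot
    repeats_previous: bool  # largely repeats the user's previous turn
    adds_new_info: bool


def negative_cue_count(r: Reply, long_turn: int = 8) -> int:
    """Count 'go back' cues from the cue list above, one point each."""
    cues = [
        r.num_words > long_turn,   # long turn
        r.disconfirms,             # disconfirm
        not r.answers_question,    # no answer
        r.corrects_slot,           # corrections
        r.repeats_previous,        # repetitions
        not r.adds_new_info,       # no new info
    ]
    return sum(cues)


def signals_problem(r: Reply, threshold: int = 2) -> bool:
    """Hypothetical decision: flag a problem at `threshold` or more negative cues."""
    return negative_cue_count(r) >= threshold


yes = Reply(1, False, True, False, False, False)         # "Yes."
correction = Reply(8, False, False, True, True, False)   # "I said I want to go from Stockholm!"
print(signals_problem(yes), signals_problem(correction))  # False True
```

A plain "yes" carries no new information but still passes, because a single negative cue stays below the threshold.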

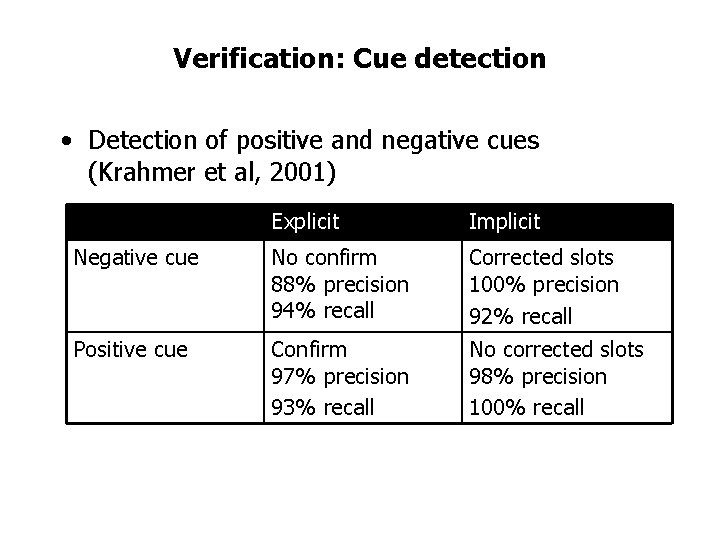

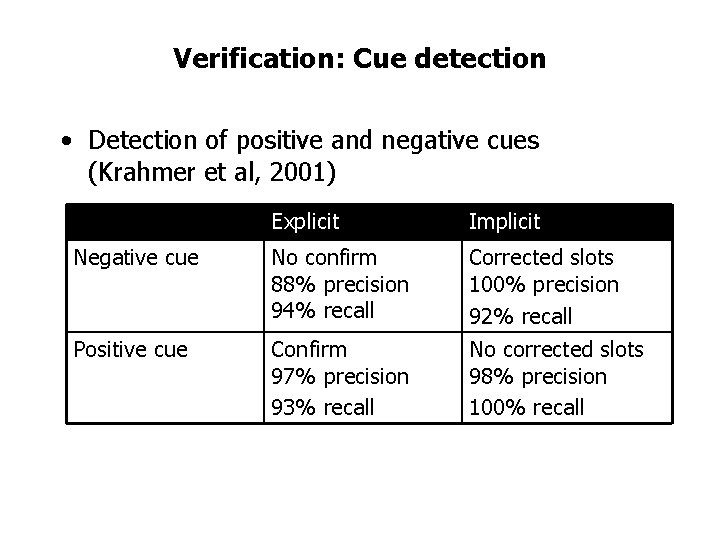

Verification: cue detection
• Detection of positive and negative cues (Krahmer et al. 2001)

| | Explicit verification | Implicit verification |
|---|---|---|
| Negative cue | No confirm: 88% precision, 94% recall | Corrected slots: 100% precision, 92% recall |
| Positive cue | Confirm: 97% precision, 93% recall | No corrected slots: 98% precision, 100% recall |
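
The figures above measure each cue as a detector: precision is TP / (TP + FP), the share of flagged turns that really were problems, and recall is TP / (TP + FN), the share of real problems that were flagged. A small sketch of the computation; the labels are toy data, not Krahmer et al.'s corpus.

```python
from typing import Sequence, Tuple


def precision_recall(predicted: Sequence[bool],
                     actual: Sequence[bool]) -> Tuple[float, float]:
    """Precision and recall of a cue used as a problem detector.

    precision = TP / (TP + FP): how often a flagged turn really was a problem.
    recall    = TP / (TP + FN): how many real problems were flagged.
    """
    if len(predicted) != len(actual):
        raise ValueError("sequences must have equal length")
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy labels: True = cue present / turn really was a problem.
flagged = [True, True, False, True, False]
problem = [True, False, False, True, True]
print(precision_recall(flagged, problem))  # (0.6666666666666666, 0.6666666666666666)
```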

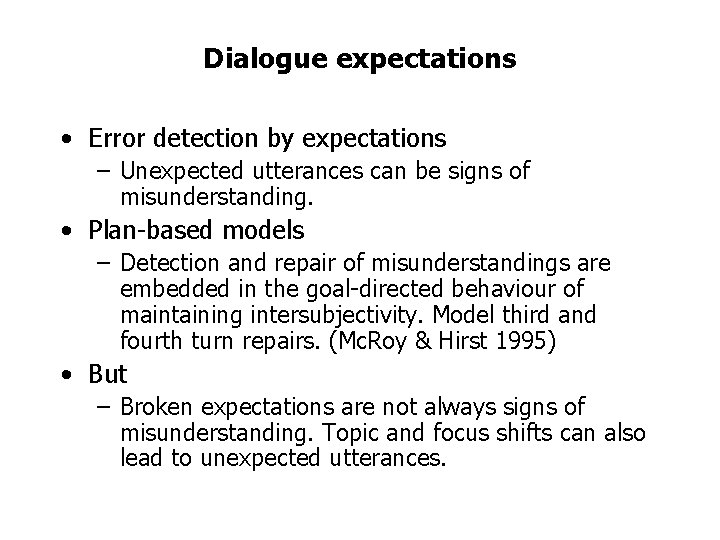

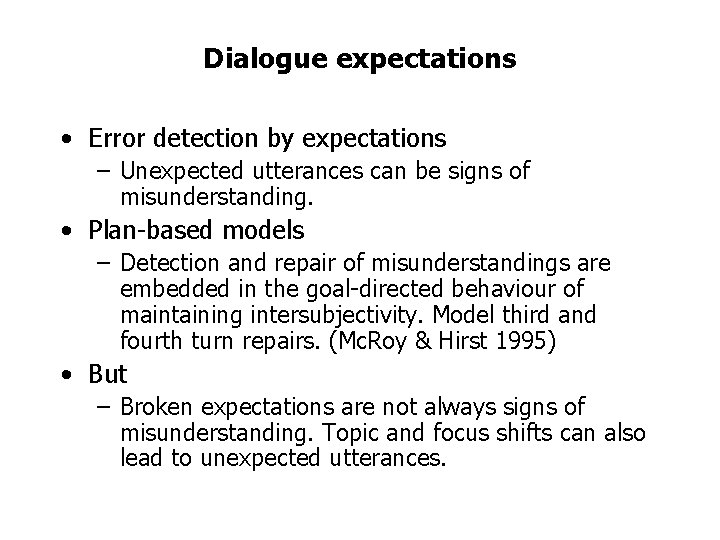

Dialogue expectations
• Error detection by expectations
  – Unexpected utterances can be signs of misunderstanding.
• Plan-based models
  – Detection and repair of misunderstandings are embedded in the goal-directed behaviour of maintaining intersubjectivity. They model third- and fourth-turn repairs (McRoy & Hirst 1995).
• But
  – Broken expectations are not always signs of misunderstanding. Topic and focus shifts can also lead to unexpected utterances.
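
Expectation-based detection can be sketched as a table from the system's last dialogue act to the user acts it expects, with anything outside that set flagged. The act names are hypothetical, and the flag only marks a possible misunderstanding, since topic and focus shifts also break expectations.

```python
from typing import Dict, Set

# Hypothetical map from the system's last dialogue act to expected user acts.
EXPECTED: Dict[str, Set[str]] = {
    "ask_departure_time": {"give_time"},
    "explicit_verify": {"confirm", "disconfirm"},
}


def is_unexpected(system_act: str, user_act: str) -> bool:
    """Flag a user act outside the expected set as a *possible* misunderstanding.

    Topic and focus shifts also produce unexpected acts, so this flag should be
    weighed together with other cues rather than trusted on its own.
    """
    expected = EXPECTED.get(system_act)
    if expected is None:
        return False  # no expectation defined, so nothing can be violated
    return user_act not in expected


# S: "When do you want to leave from Stocksund?"  U: "I said ... from Stockholm!"
print(is_unexpected("ask_departure_time", "give_origin"))  # True
```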

Error prediction
• Approach:
  – Decide on the basis of the current user utterance(s) whether the dialogue will be problematic.
• Walker et al. (2000):
  – Dialogues were classified as “problematic” (36%) or “task success” (64%; baseline)
  – Trained on features from ASR, NLU and DM
  – First turn: 72%
  – Second turn: 80%
  – Whole dialogue: 87%
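
The 64% baseline is the accuracy of a trivial predictor that always outputs the majority class, "task success". The toy label list below only reproduces the class proportions from the slide, not the actual corpus.

```python
from collections import Counter
from typing import Sequence


def majority_baseline(labels: Sequence[str]) -> float:
    """Accuracy of always predicting the most frequent class."""
    if not labels:
        raise ValueError("need at least one label")
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


labels = ["task success"] * 64 + ["problematic"] * 36
print(majority_baseline(labels))  # 0.64
```

Read against this baseline, predicting from the first turn alone (72%) is already 8 percentage points better than always guessing "task success".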

Important issues
• Mobile environments
  – Laboratory assessments often overestimate recognition rates in natural field settings (20-50% drop in accuracy)
  – Noise, social interchange, multi-tasking, stress
• Multimodal error handling
  – Error prevention and error recovery
  – Choice of less error-prone modality, simpler utterances, alternation of modality, mutual disambiguation
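
Mutual disambiguation can be sketched as jointly rescoring the n-best lists of two modalities and keeping only compatible pairs, so that one modality can rescue a lower-ranked but correct hypothesis in the other. The product-of-scores combination and the equality test for compatibility are simplifying assumptions of this sketch.

```python
from itertools import product
from typing import Callable, List, Optional, Tuple

Hypothesis = Tuple[str, float]  # (interpretation, score) from one modality's n-best list


def mutual_disambiguation(
    speech: List[Hypothesis],
    gesture: List[Hypothesis],
    compatible: Callable[[str, str], bool],
) -> Optional[Tuple[str, str, float]]:
    """Return the best-scoring compatible (speech, gesture) pair, or None.

    Joint score = product of the two scores, a simplifying assumption that
    the modalities err independently; incompatible pairs are discarded.
    """
    best: Optional[Tuple[str, str, float]] = None
    for (s, s_score), (g, g_score) in product(speech, gesture):
        if not compatible(s, g):
            continue
        joint = s_score * g_score
        if best is None or joint > best[2]:
            best = (s, g, joint)
    return best


speech = [("Stockholm", 0.6), ("Stocksund", 0.4)]  # top speech hypothesis is wrong
pointing = [("Stocksund", 0.7), ("Solna", 0.3)]    # map gesture
print(mutual_disambiguation(speech, pointing, lambda s, g: s == g))
```

Here the pointing gesture lifts "Stocksund", only the second speech hypothesis, to the top of the joint ranking.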