EECS 262a Advanced Topics in Computer Systems


EECS 262a Advanced Topics in Computer Systems, Lecture 13: M-CBS (Con't) and DRF. October 10th, 2012. John Kubiatowicz and Anthony D. Joseph, Electrical Engineering and Computer Sciences, University of California, Berkeley. http://www.eecs.berkeley.edu/~kubitron/cs262

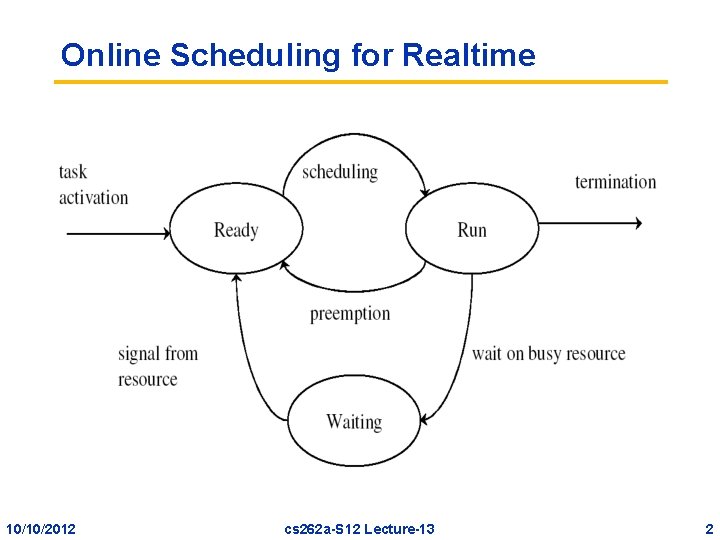

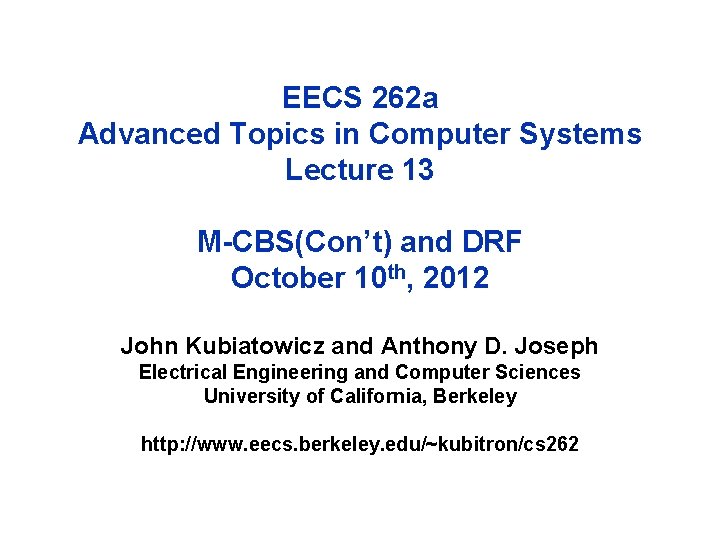

Online Scheduling for Realtime

10/10/2012 cs262a-S12 Lecture-13

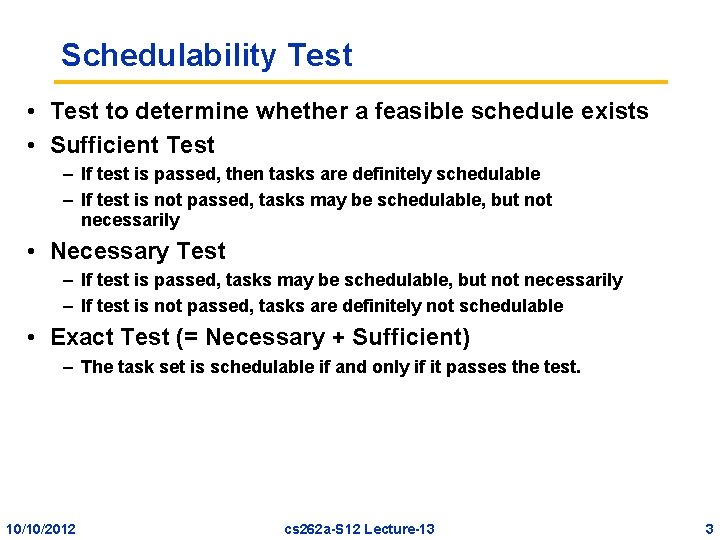

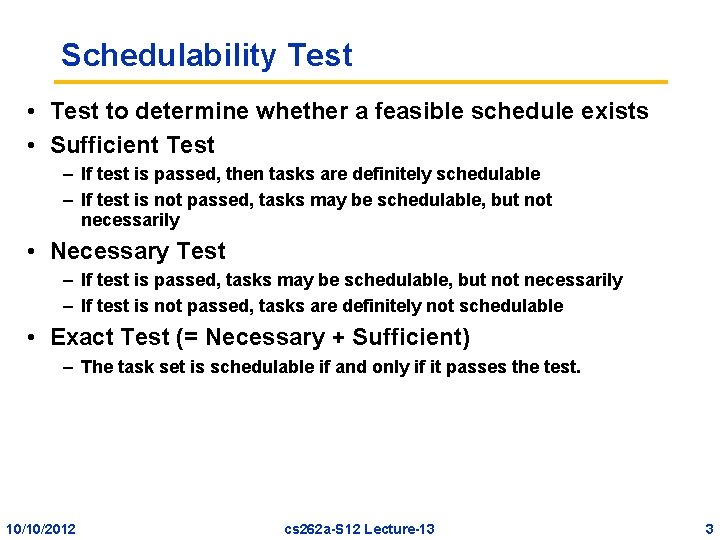

Schedulability Test

- Test to determine whether a feasible schedule exists
- Sufficient test
  - If the test is passed, then the tasks are definitely schedulable
  - If the test is not passed, the tasks may be schedulable, but not necessarily
- Necessary test
  - If the test is passed, the tasks may be schedulable, but not necessarily
  - If the test is not passed, the tasks are definitely not schedulable
- Exact test (= necessary + sufficient)
  - The task set is schedulable if and only if it passes the test

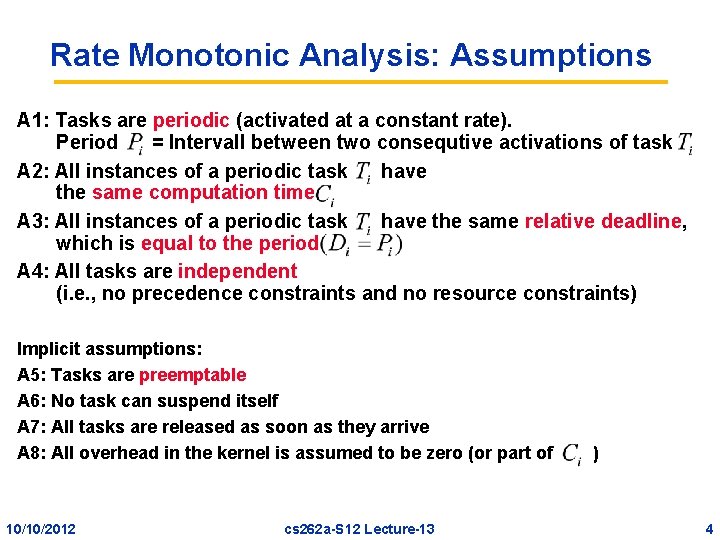

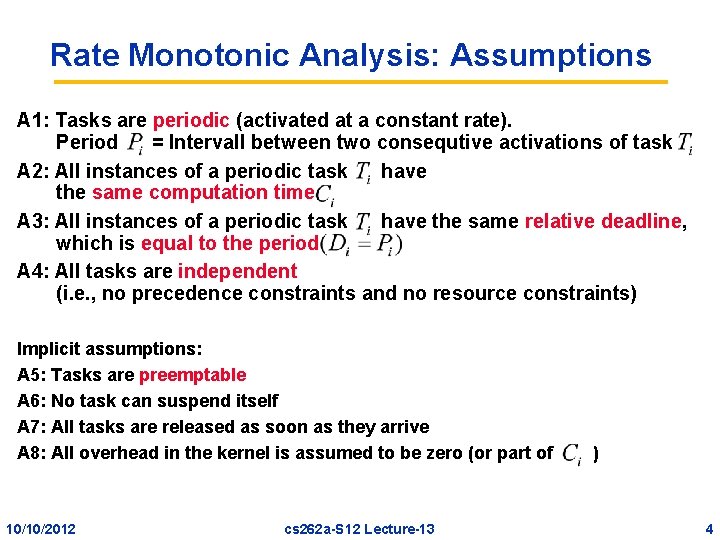

Rate Monotonic Analysis: Assumptions

- A1: Tasks are periodic (activated at a constant rate). Period = interval between two consecutive activations of a task
- A2: All instances of a periodic task have the same computation time
- A3: All instances of a periodic task have the same relative deadline, which is equal to the period
- A4: All tasks are independent (i.e., no precedence constraints and no resource constraints)

Implicit assumptions:

- A5: Tasks are preemptable
- A6: No task can suspend itself
- A7: All tasks are released as soon as they arrive
- A8: All overhead in the kernel is assumed to be zero (or part of the task's computation time)

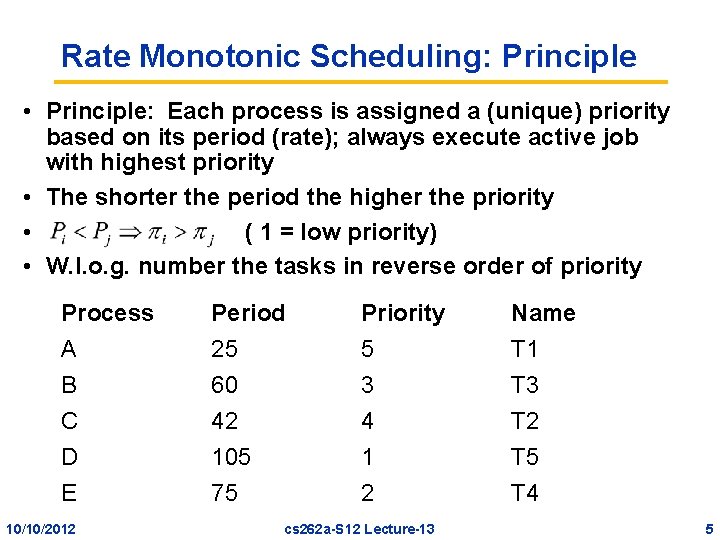

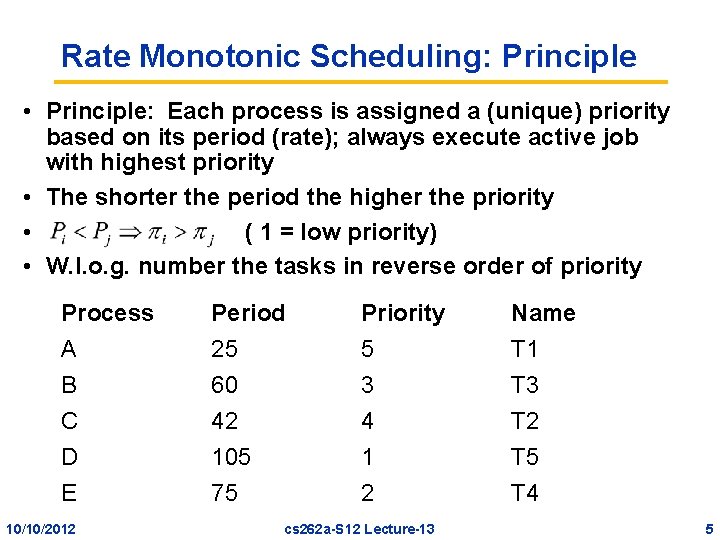

Rate Monotonic Scheduling: Principle

- Principle: each process is assigned a (unique) priority based on its period (rate); always execute the active job with the highest priority
- The shorter the period, the higher the priority (1 = low priority)
- W.l.o.g. number the tasks in reverse order of priority

| Process | Period | Priority | Name |
|---------|--------|----------|------|
| A       | 25     | 5        | T1   |
| B       | 60     | 3        | T3   |
| C       | 42     | 4        | T2   |
| D       | 105    | 1        | T5   |
| E       | 75     | 2        | T4   |
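
The priority assignment on this slide can be reproduced in a few lines. This is a minimal sketch, not part of the lecture; the function name `rate_monotonic_priorities` is hypothetical, and it follows the slide's convention that 1 is the lowest priority.

```python
def rate_monotonic_priorities(periods):
    """Map each process to a priority; 1 is the lowest (longest period)."""
    # Sort longest period first, so the longest period receives priority 1
    # and the shortest period receives the highest number.
    by_period = sorted(periods, key=periods.get, reverse=True)
    return {name: rank for rank, name in enumerate(by_period, start=1)}


# Periods taken from the table on the slide.
periods = {"A": 25, "B": 60, "C": 42, "D": 105, "E": 75}
priorities = rate_monotonic_priorities(periods)
print(priorities)
```

Process A has the shortest period (25) and therefore ends up with the highest priority, 5, as in the table.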

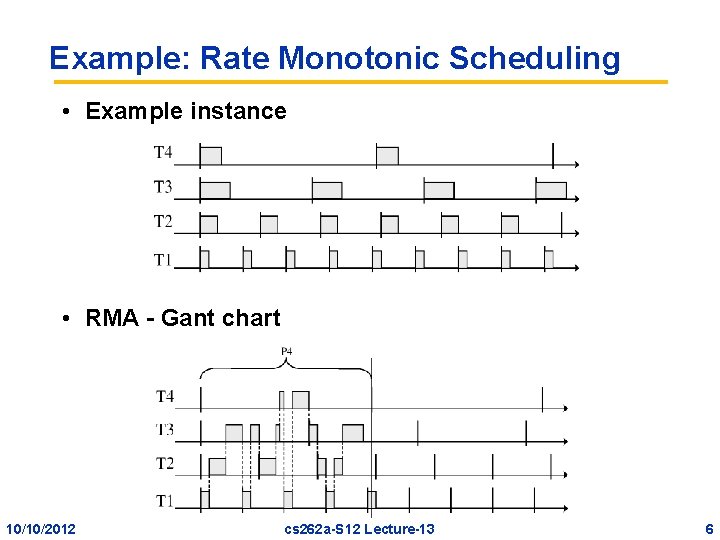

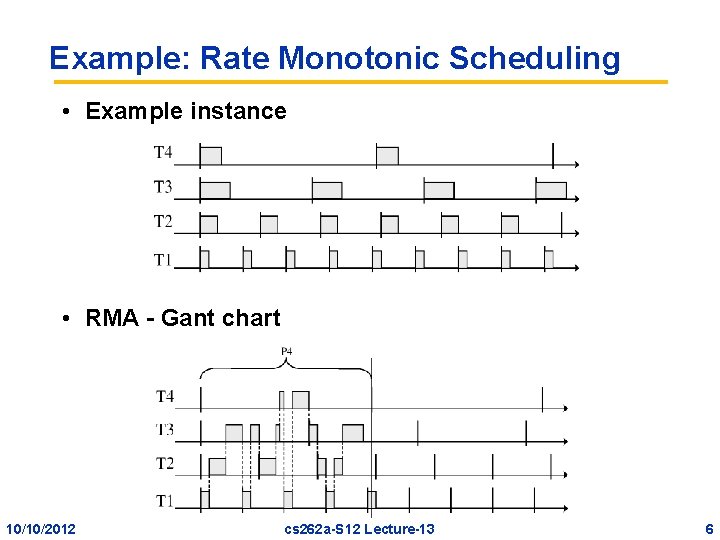

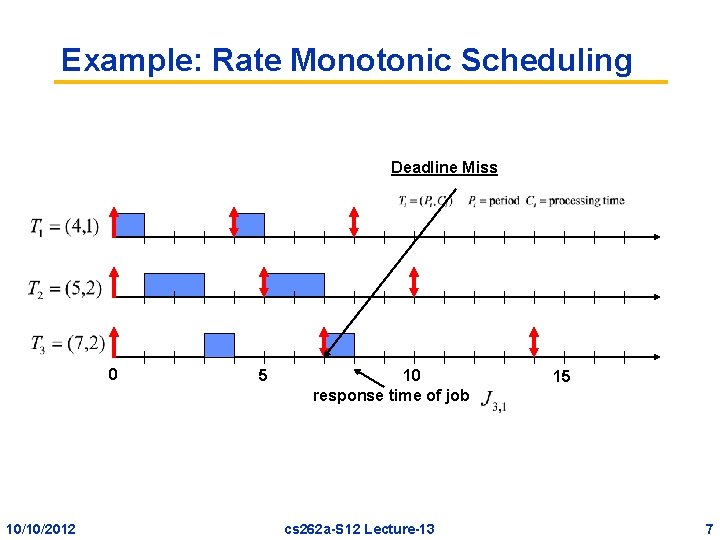

Example: Rate Monotonic Scheduling

- Example instance
- RMA: Gantt chart

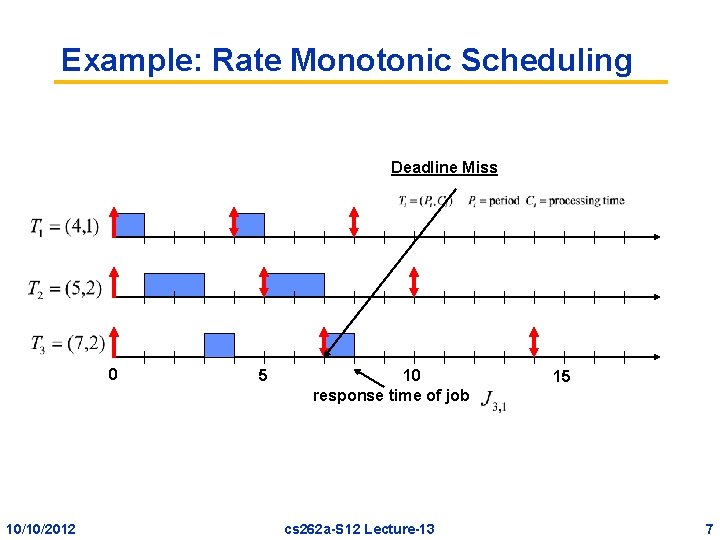

Example: Rate Monotonic Scheduling (figure: timeline from t = 0 to 15 showing the response time of a job and a deadline miss)

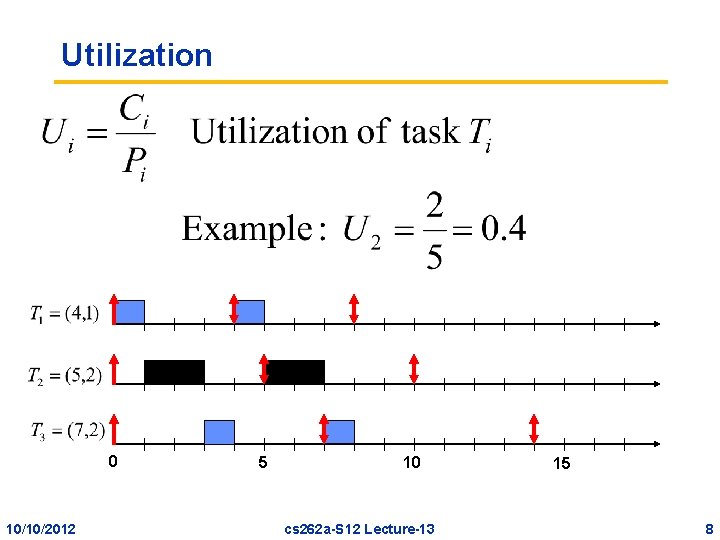

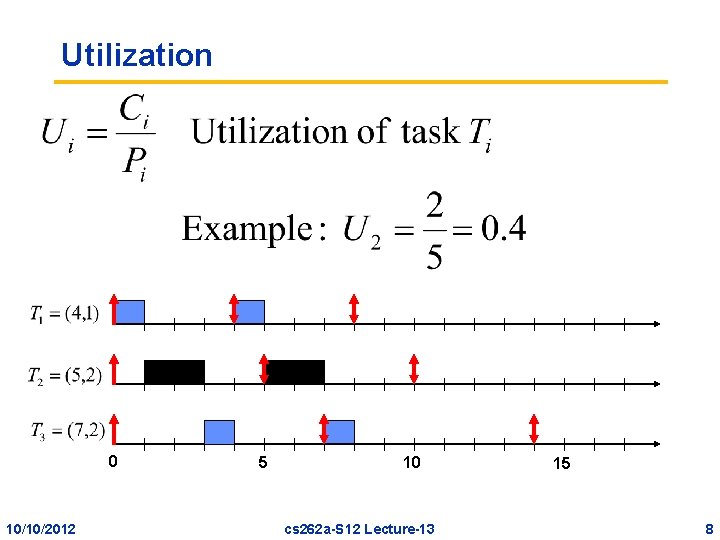

Utilization (figure: timeline from t = 0 to 15)

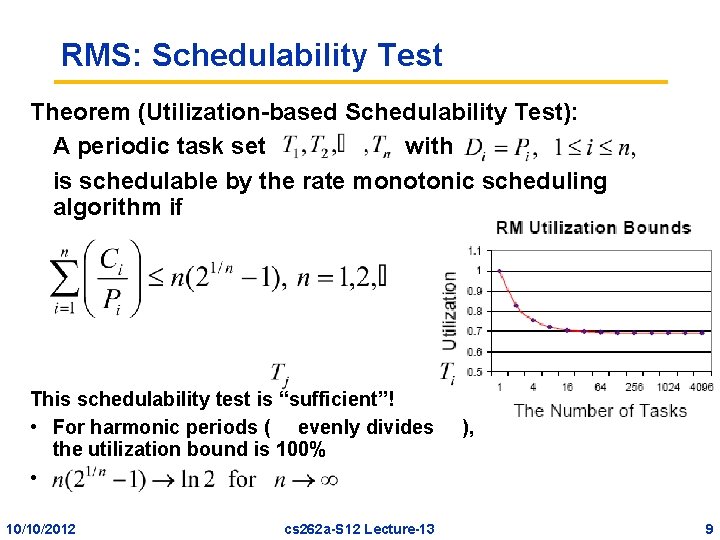

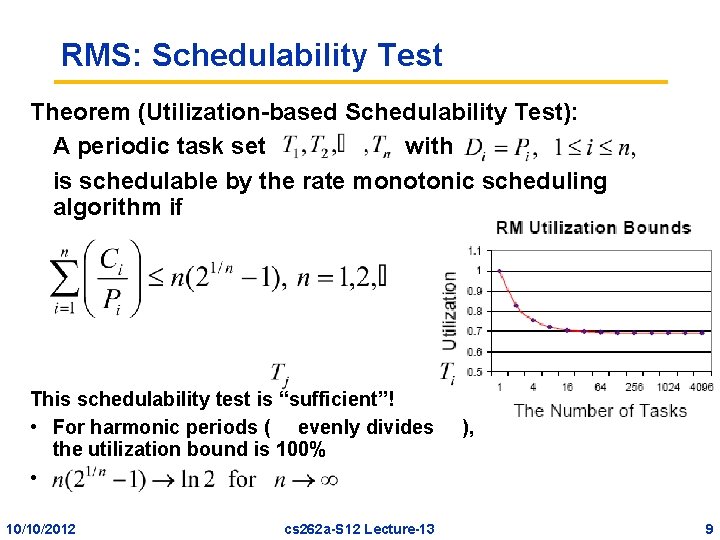

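RMS: Schedulability Test

Theorem (Utilization-based Schedulability Test): A periodic task set of $n$ tasks with computation times $C_i$, periods $T_i$, and relative deadlines equal to the periods is schedulable by the rate monotonic scheduling algorithm if

$$U = \sum_{i=1}^{n} \frac{C_i}{T_i} \le n\left(2^{1/n} - 1\right)$$

This schedulability test is “sufficient”!

- For harmonic periods (every shorter period evenly divides every longer one), the utilization bound is 100%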
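
The utilization-based test is easy to evaluate numerically. The sketch below assumes tasks are given as `(C, T)` pairs (computation time, period); the function names are hypothetical. The bound is the Liu and Layland value n(2^(1/n) - 1), which tends to ln 2 (about 0.693) as n grows.

```python
def rms_utilization_bound(n):
    """Liu-Layland bound n * (2^(1/n) - 1) for n tasks."""
    return n * (2 ** (1 / n) - 1)


def rms_sufficient_test(tasks):
    """tasks: list of (C, T) pairs. True means definitely schedulable by RMS.

    False is inconclusive, because the test is sufficient but not necessary.
    """
    utilization = sum(c / t for c, t in tasks)
    return utilization <= rms_utilization_bound(len(tasks))


print(rms_sufficient_test([(1, 4), (1, 5), (2, 10)]))  # U = 0.65, bound ~ 0.78
print(rms_sufficient_test([(2, 4), (2, 5)]))           # U = 0.90, bound ~ 0.83
```

The second task set fails the test, which only means the test cannot decide; it does not prove that the set is unschedulable.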

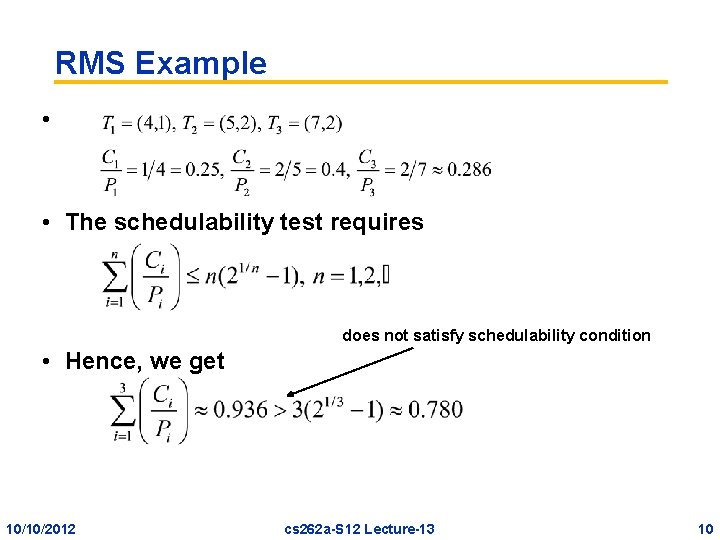

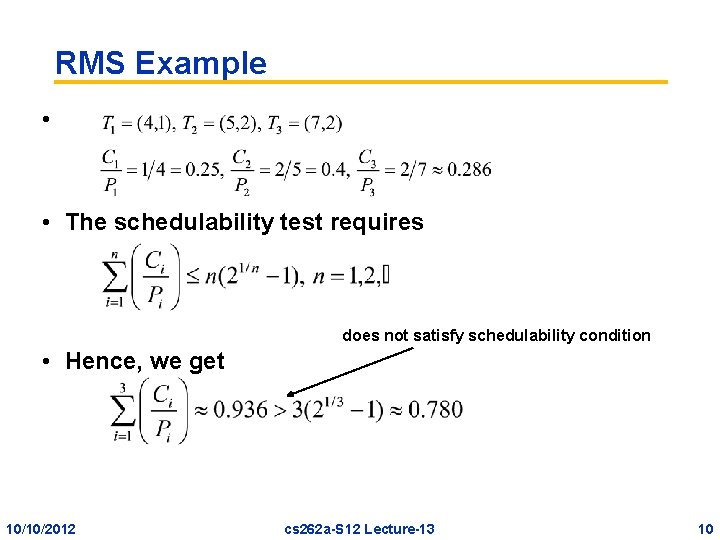

RMS Example • The schedulability test requires does not satisfy schedulability condition • Hence, we get

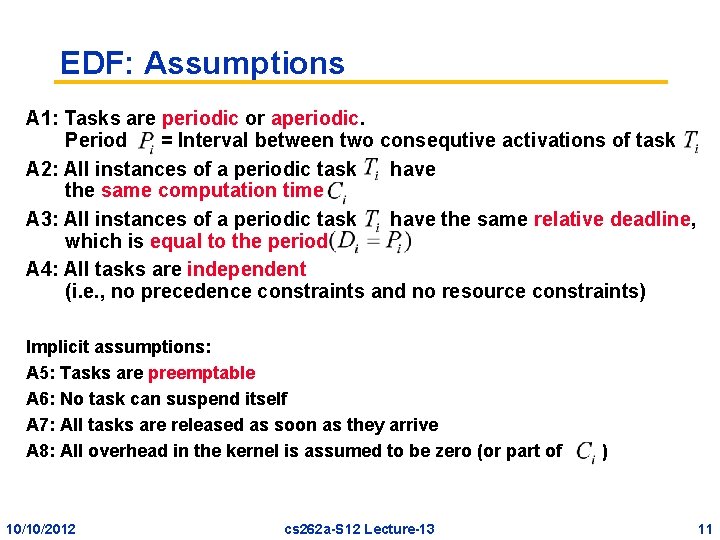

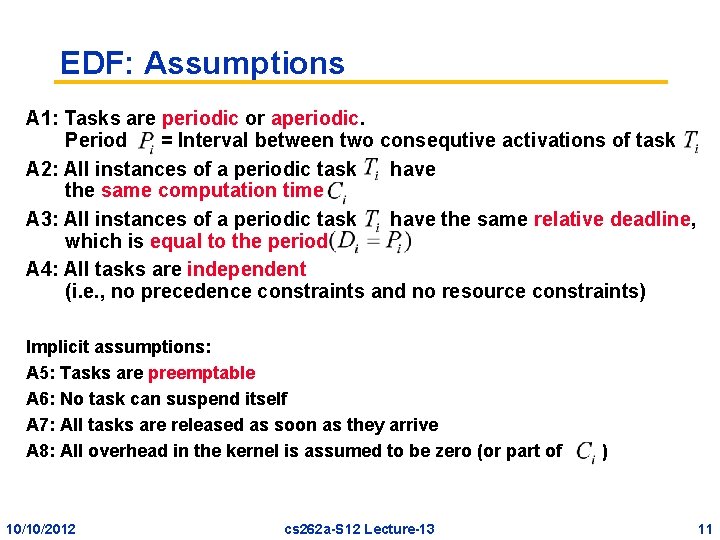

EDF: Assumptions

- A1: Tasks are periodic or aperiodic. Period = interval between two consecutive activations of a task
- A2: All instances of a periodic task have the same computation time
- A3: All instances of a periodic task have the same relative deadline, which is equal to the period
- A4: All tasks are independent (i.e., no precedence constraints and no resource constraints)

Implicit assumptions:

- A5: Tasks are preemptable
- A6: No task can suspend itself
- A7: All tasks are released as soon as they arrive
- A8: All overhead in the kernel is assumed to be zero (or part of the task's computation time)

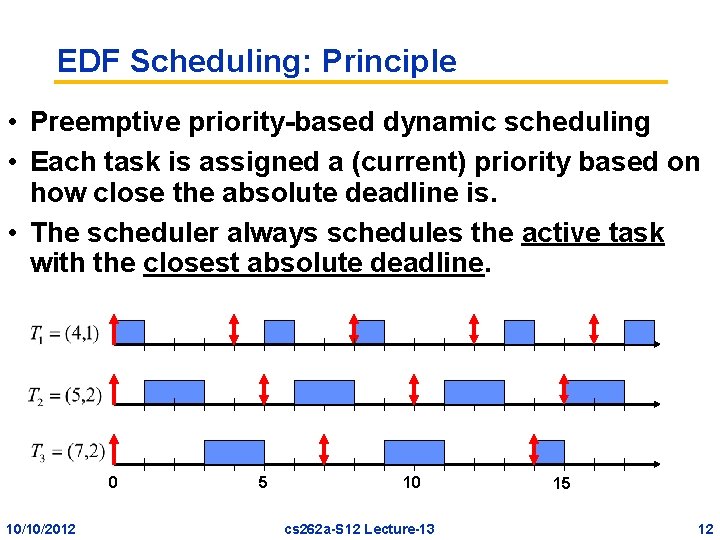

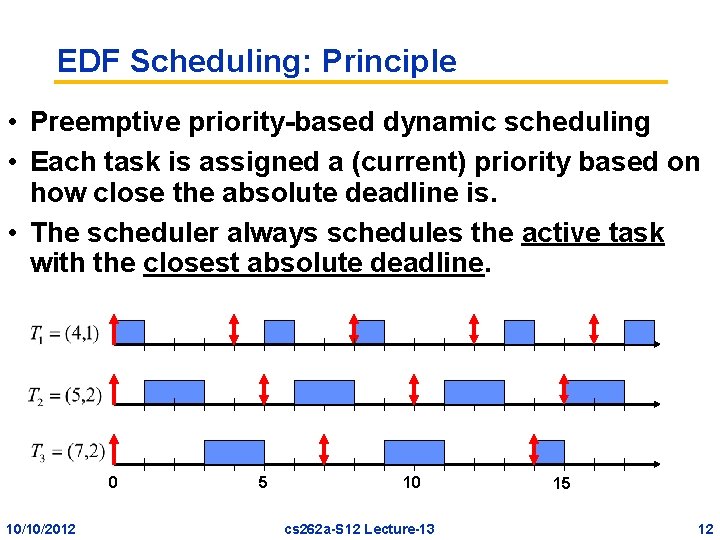

EDF Scheduling: Principle

- Preemptive priority-based dynamic scheduling
- Each task is assigned a (current) priority based on how close its absolute deadline is
- The scheduler always schedules the active task with the closest absolute deadline

(figure: example schedule on a timeline from t = 0 to 15)
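
To make the principle concrete, here is a small discrete-time simulation. It is a sketch under stated assumptions, not the lecture's code: time advances in unit slots, all parameters are integers, every task is periodic with deadline equal to period, and the name `edf_schedule` is hypothetical.

```python
def edf_schedule(tasks, horizon):
    """Simulate preemptive EDF in unit time slots.

    tasks: dict name -> (C, T), with relative deadline equal to the period T.
    Returns the task name run in each slot (None when idle).
    Raises RuntimeError if a job is still pending at its absolute deadline.
    """
    jobs = []      # pending jobs as [absolute_deadline, name, remaining_time]
    timeline = []
    for t in range(horizon):
        # Release a new job of every task whose period starts now.
        for name, (c, period) in tasks.items():
            if t % period == 0:
                jobs.append([t + period, name, c])
        # Any job that reaches its deadline unfinished has missed it.
        for deadline, name, _remaining in jobs:
            if deadline <= t:
                raise RuntimeError(f"{name} missed deadline {deadline}")
        if not jobs:
            timeline.append(None)
            continue
        job = min(jobs)  # closest absolute deadline; ties broken by name
        job[2] -= 1
        timeline.append(job[1])
        if job[2] == 0:
            jobs.remove(job)
    return timeline


timeline = edf_schedule({"T1": (2, 4), "T2": (2, 5)}, 20)
print(timeline)
```

Because priorities are recomputed in every slot, a newly released job with an earlier deadline preempts the running one. The example task set has utilization 0.9, so two of the twenty slots stay idle.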

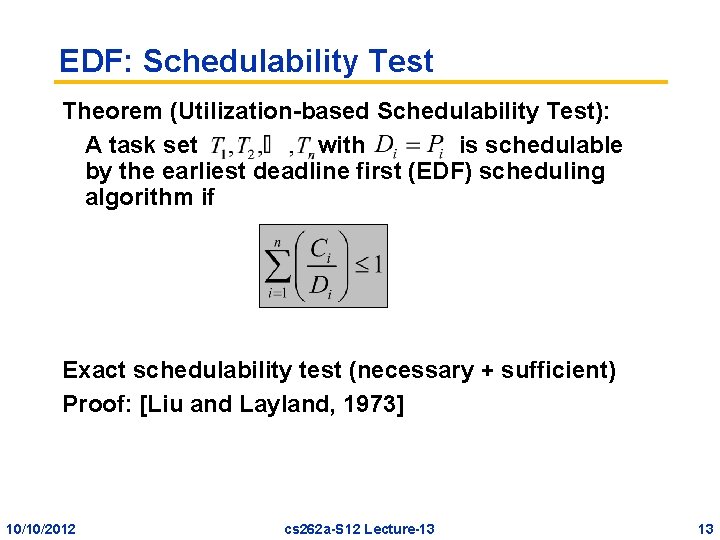

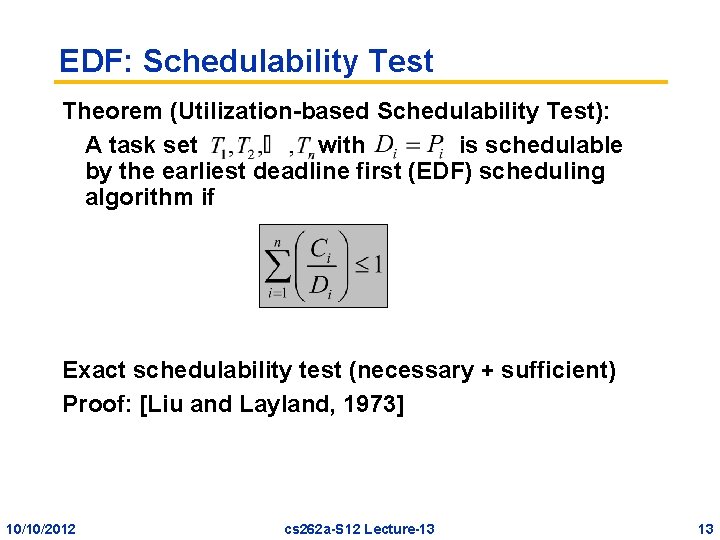

EDF: Schedulability Test

Theorem (Utilization-based Schedulability Test): A task set with computation times $C_i$, periods $T_i$, and relative deadlines equal to the periods is schedulable by the earliest deadline first (EDF) scheduling algorithm if

$$U = \sum_{i=1}^{n} \frac{C_i}{T_i} \le 1$$

Exact schedulability test (necessary + sufficient). Proof: [Liu and Layland, 1973]
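
A minimal sketch of the EDF utilization test, assuming integer `(C, T)` pairs with deadline equal to period; `edf_schedulable` is a hypothetical name. Exact rational arithmetic is used so that a utilization of exactly 1 is not rejected by rounding.

```python
from fractions import Fraction


def edf_schedulable(tasks):
    """tasks: list of integer (C, T) pairs. Exact test: U <= 1."""
    utilization = sum(Fraction(c, t) for c, t in tasks)
    return utilization <= 1


print(edf_schedulable([(2, 4), (2, 5)]))          # U = 9/10
print(edf_schedulable([(1, 2), (1, 3), (1, 6)]))  # U = 1 exactly
print(edf_schedulable([(3, 4), (2, 5)]))          # U = 23/20
```

Unlike the rate monotonic bound, a negative answer here is conclusive on a uniprocessor under the stated assumptions.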

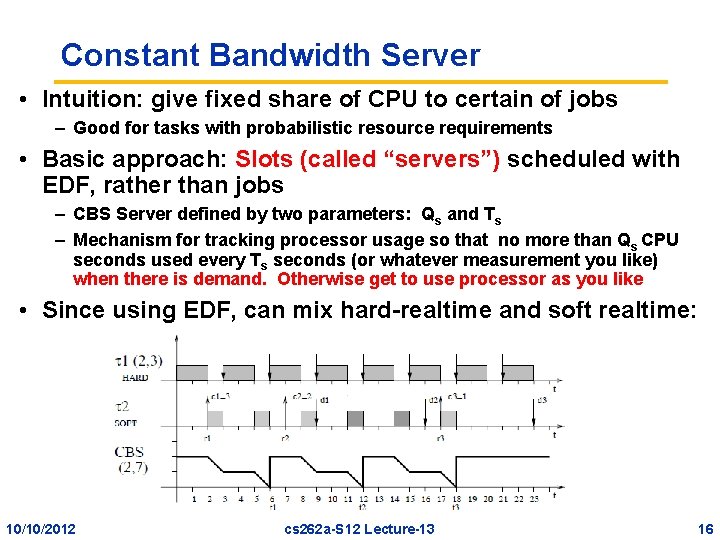

EDF Optimality

EDF properties:

- EDF is optimal with respect to feasibility (i.e., schedulability)
- EDF is optimal with respect to minimizing the maximum lateness

![EDF Example Domino Effect EDF minimizes lateness of the most tardy task Dertouzos 1974 EDF Example: Domino Effect EDF minimizes lateness of the “most tardy task” [Dertouzos, 1974]](https://slidetodoc.com/presentation_image_h2/4e4bb6a2fed810ca7a373516e44bd154/image-15.jpg)

EDF Example: Domino Effect. EDF minimizes lateness of the “most tardy task” [Dertouzos, 1974]. (Frank Drews, Real-Time Systems)

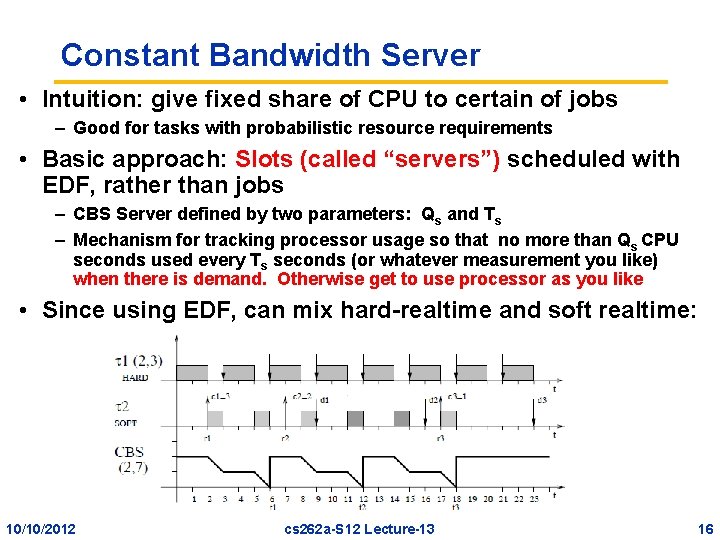

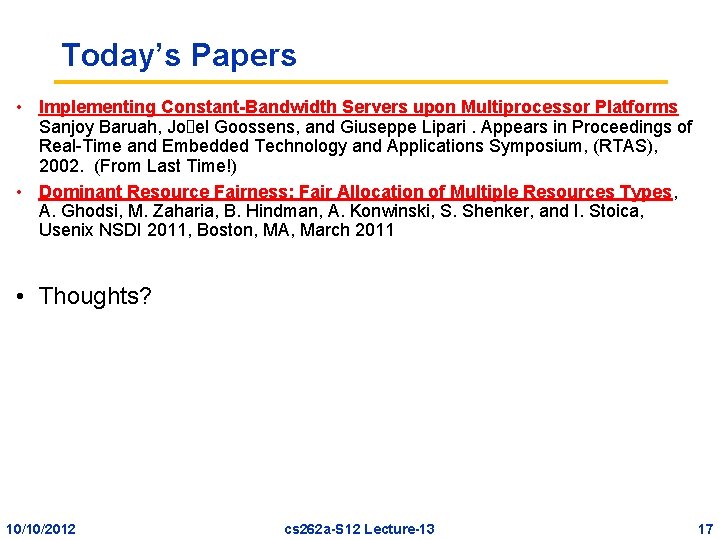

Constant Bandwidth Server

- Intuition: give a fixed share of the CPU to certain jobs
  - Good for tasks with probabilistic resource requirements
- Basic approach: slots (called “servers”) are scheduled with EDF, rather than jobs
  - A CBS server is defined by two parameters: Qs and Ts
  - Mechanism for tracking processor usage so that no more than Qs CPU seconds are used every Ts seconds (or whatever measurement you like) when there is demand. Otherwise the server gets to use the processor as it likes
- Since EDF is used, hard-realtime and soft-realtime tasks can be mixed
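
The budget-tracking mechanism can be sketched as a small state machine. The slide does not spell out the rules, so the code below assumes the CBS rules as usually stated in the literature: the server's deadline is postponed by Ts and its budget is recharged to Qs whenever the budget runs out, and the budget is reset on arrival only if the leftover budget would exceed bandwidth Qs/Ts. The class and method names are hypothetical.

```python
from fractions import Fraction


class ConstantBandwidthServer:
    """Tracks the budget and EDF deadline of one server (Qs, Ts)."""

    def __init__(self, budget_q, period_t):
        self.q = Fraction(budget_q)
        self.t = Fraction(period_t)
        self.budget = Fraction(0)
        self.deadline = Fraction(0)

    def on_arrival(self, now):
        """A job arrives at time `now` while the server is idle."""
        now = Fraction(now)
        # If the leftover budget cannot be spent before the current deadline
        # without exceeding bandwidth Qs/Ts, start a fresh server period.
        if self.budget >= (self.deadline - now) * self.q / self.t:
            self.deadline = now + self.t
            self.budget = self.q

    def consume(self, amount):
        """Charge executed CPU time to the server."""
        self.budget -= Fraction(amount)
        # Exhausted budget: recharge and postpone the deadline, which lowers
        # the server's EDF priority instead of stopping it.
        while self.budget <= 0:
            self.budget += self.q
            self.deadline += self.t


server = ConstantBandwidthServer(2, 10)
server.on_arrival(0)
server.consume(2)
print(server.budget, server.deadline)
```

The EDF scheduler would then pick, among all servers with pending work, the one with the earliest `deadline`. A server that overruns keeps running but with a later deadline, so it cannot take more than Qs/Ts of the processor from the others.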

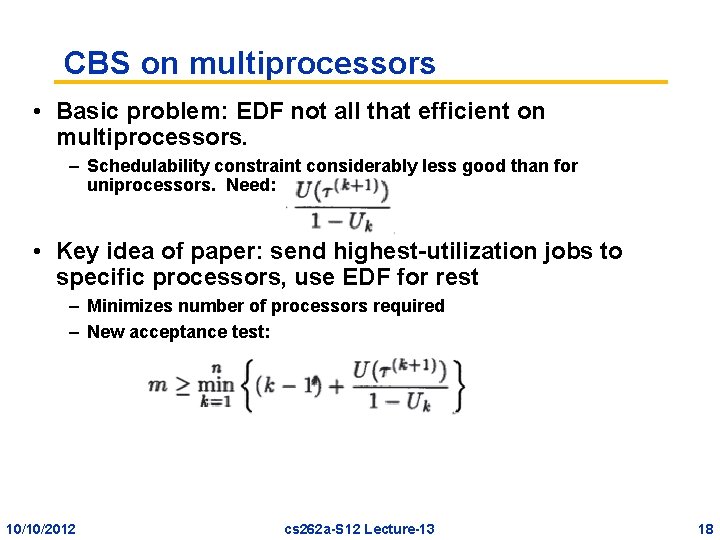

Today’s Papers

- Implementing Constant-Bandwidth Servers upon Multiprocessor Platforms. Sanjoy Baruah, Joël Goossens, and Giuseppe Lipari. Appears in Proceedings of the Real-Time and Embedded Technology and Applications Symposium (RTAS), 2002. (From last time!)
- Dominant Resource Fairness: Fair Allocation of Multiple Resource Types. A. Ghodsi, M. Zaharia, B. Hindman, A. Konwinski, S. Shenker, and I. Stoica. Usenix NSDI 2011, Boston, MA, March 2011
- Thoughts?

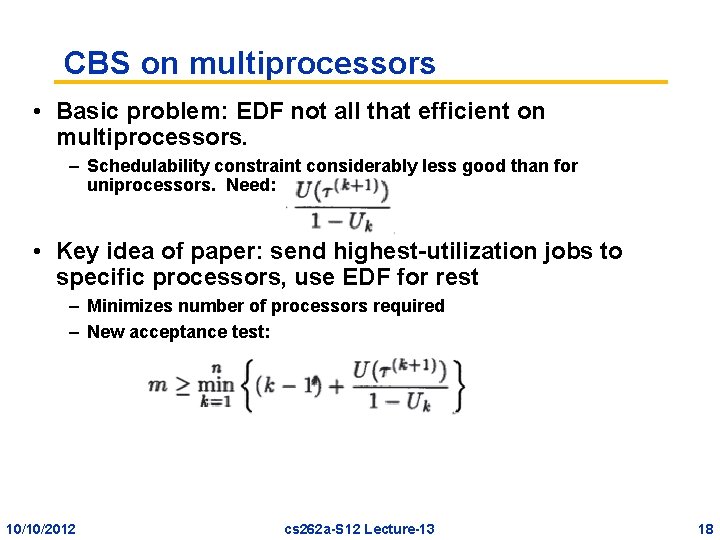

CBS on multiprocessors

- Basic problem: EDF is not all that efficient on multiprocessors
  - The schedulability constraint is considerably weaker than for uniprocessors. Need:
- Key idea of paper: send the highest-utilization jobs to specific processors, use EDF for the rest
  - Minimizes the number of processors required
  - New acceptance test:

Is this a good paper?

- What were the authors’ goals?
- What about the evaluation/metrics?
- Did they convince you that this was a good system/approach?
- Were there any red flags?
- What mistakes did they make?
- Does the system/approach meet the “Test of Time” challenge?
- How would you review this paper today?

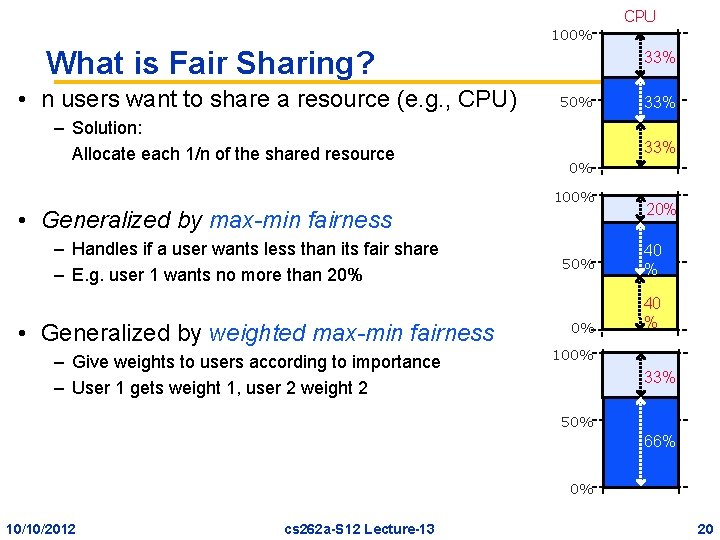

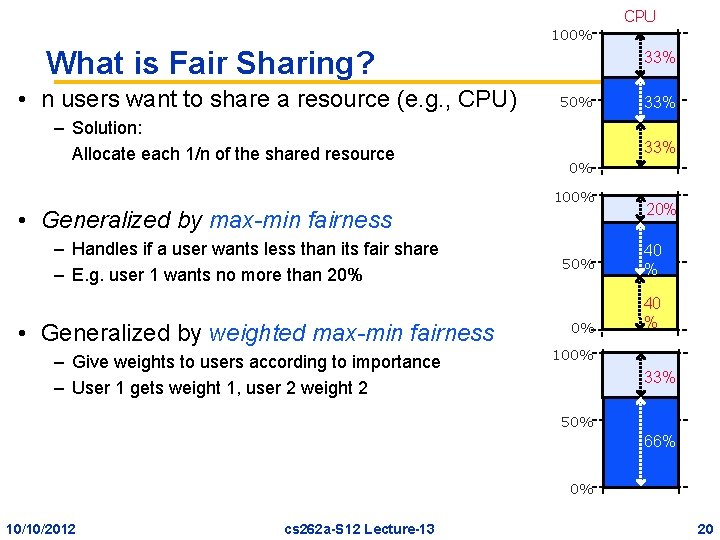

What is Fair Sharing?

- n users want to share a resource (e.g., CPU)
  - Solution: allocate each user 1/n of the shared resource (figure: CPU split 33% / 33% / 33%)
- Generalized by max-min fairness
  - Handles the case where a user wants less than her fair share
  - E.g., user 1 wants no more than 20% (figure: CPU split 20% / 40% / 40%)
- Generalized by weighted max-min fairness
  - Give weights to users according to importance
  - E.g., user 1 gets weight 1, user 2 weight 2 (figure: CPU split 33% / 66%)
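
The three cases on this slide (equal split, capped demand, weights) are all instances of one water-filling computation. The sketch below is illustrative only; `max_min_fair` is a hypothetical name, and demands and capacity are expressed in percent of the CPU.

```python
def max_min_fair(capacity, demands, weights=None):
    """Weighted max-min fair split of a single resource (water-filling)."""
    n = len(demands)
    weights = list(weights) if weights else [1.0] * n
    alloc = [0.0] * n
    active = set(range(n))
    remaining = float(capacity)
    while active and remaining > 1e-12:
        total_weight = sum(weights[i] for i in active)
        share = {i: remaining * weights[i] / total_weight for i in active}
        # Users who want no more than their current share get their demand.
        satisfied = {i for i in active if demands[i] <= share[i]}
        if not satisfied:
            # Everyone left wants more than their share: split by weight.
            for i in active:
                alloc[i] = share[i]
            break
        for i in satisfied:
            alloc[i] = float(demands[i])
            remaining -= demands[i]
        active -= satisfied
    return alloc


print(max_min_fair(100, [20, 100, 100]))        # user 1 wants at most 20%
print(max_min_fair(100, [100, 100], [1, 2]))    # weights 1 and 2
```

The first call reproduces the 20% / 40% / 40% split: the capacity user 1 does not want is redistributed equally among the others. The second call gives roughly one third and two thirds.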

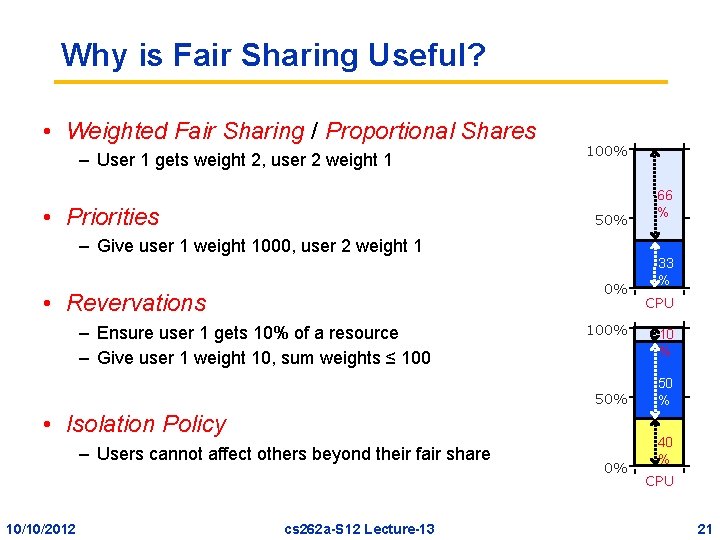

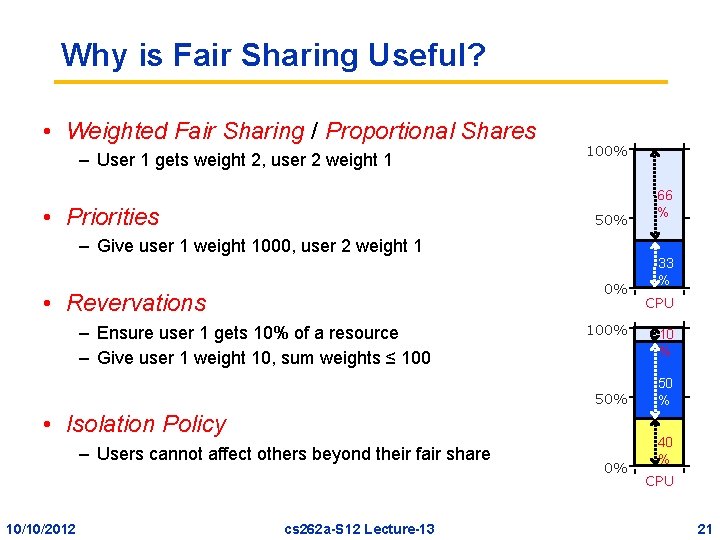

Why is Fair Sharing Useful?

- Weighted fair sharing / proportional shares
  - User 1 gets weight 2, user 2 weight 1 (figure: CPU split 66% / 33%)
- Priorities
  - Give user 1 weight 1000, user 2 weight 1
- Reservations
  - Ensure user 1 gets 10% of a resource
  - Give user 1 weight 10, sum of weights ≤ 100 (figure: CPU split 10% / 50% / 40%)
- Isolation policy
  - Users cannot affect others beyond their fair share

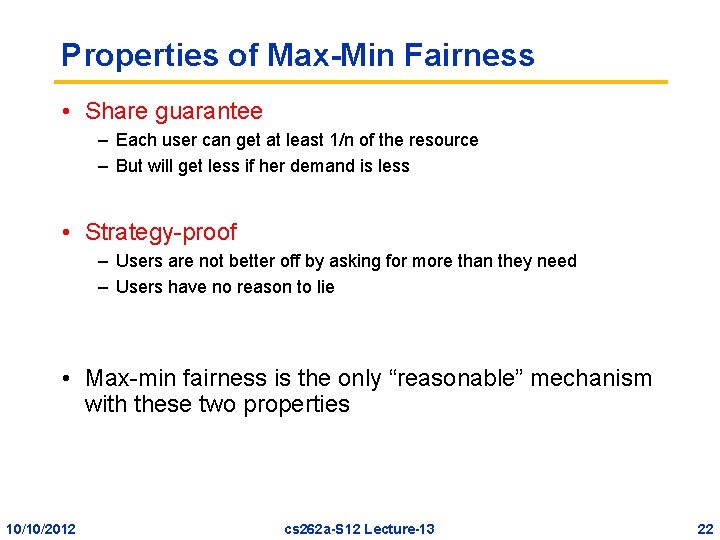

Properties of Max-Min Fairness

- Share guarantee
  - Each user can get at least 1/n of the resource
  - But will get less if her demand is less
- Strategy-proof
  - Users are not better off by asking for more than they need
  - Users have no reason to lie
- Max-min fairness is the only “reasonable” mechanism with these two properties

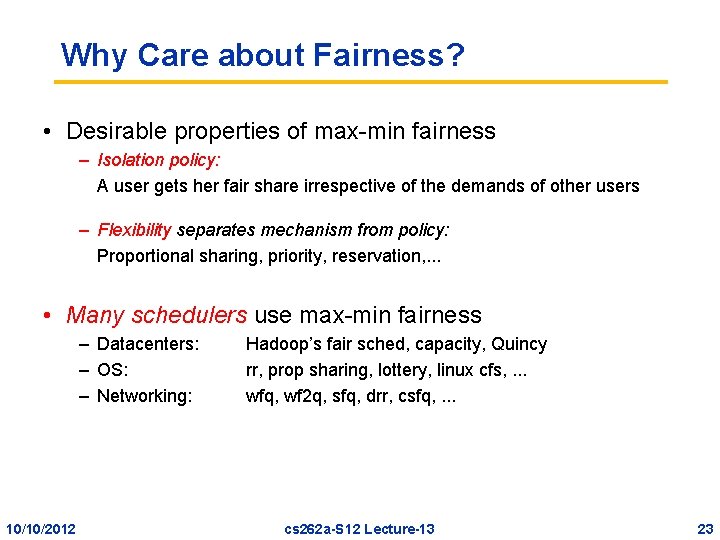

Why Care about Fairness?

- Desirable properties of max-min fairness
  - Isolation policy: a user gets her fair share irrespective of the demands of other users
  - Flexibility separates mechanism from policy: proportional sharing, priority, reservation, ...
- Many schedulers use max-min fairness
  - Datacenters: Hadoop’s fair scheduler, capacity scheduler, Quincy
  - OS: round-robin, proportional sharing, lottery, Linux CFS, ...
  - Networking: WFQ, WF2Q, SFQ, DRR, CSFQ, ...

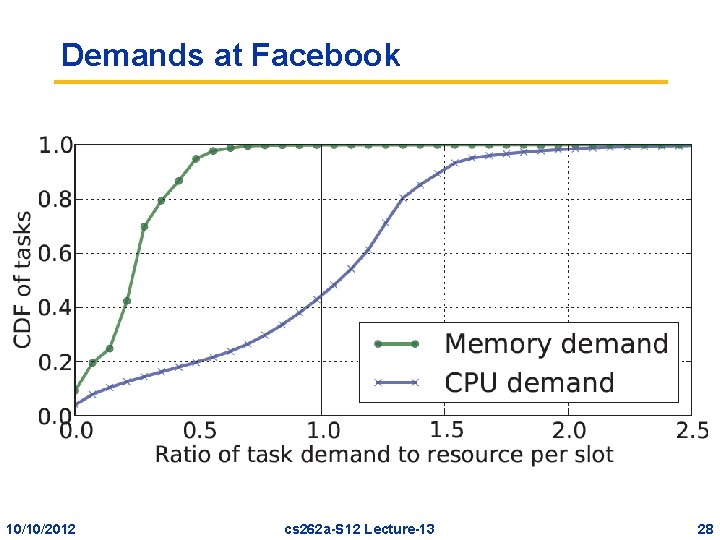

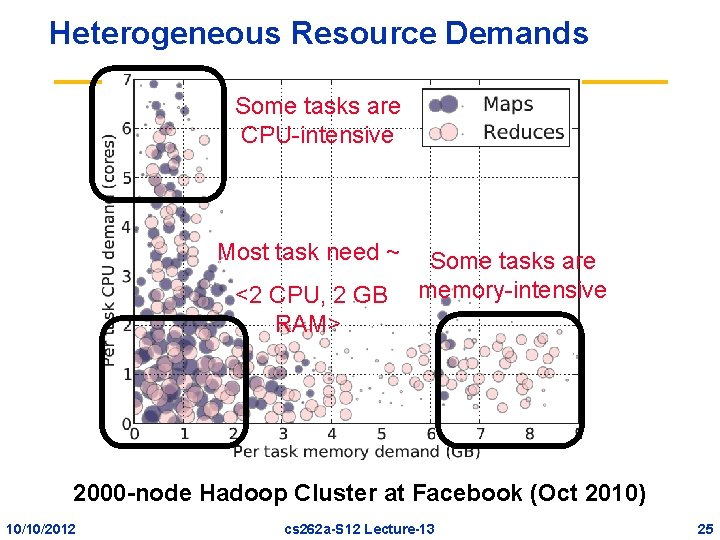

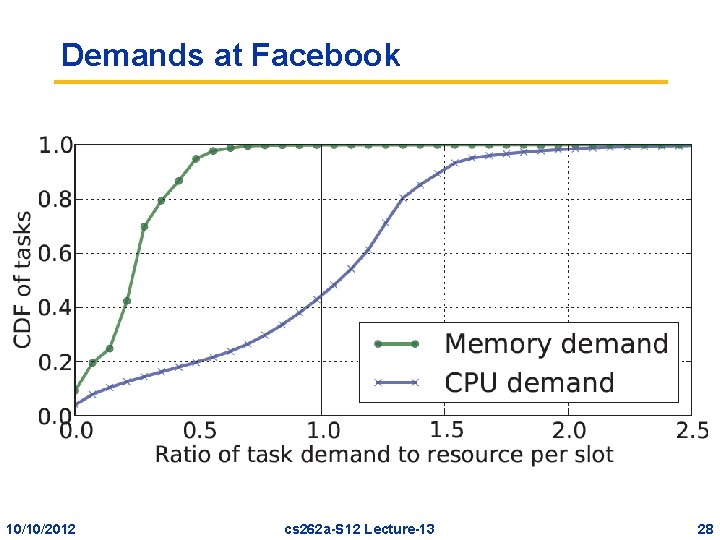

When is Max-Min Fairness not Enough?

- Need to schedule multiple, heterogeneous resources
  - Example: task scheduling in datacenters
    - Tasks consume more than just CPU: CPU, memory, disk, and I/O
- What are today’s datacenter task demands?

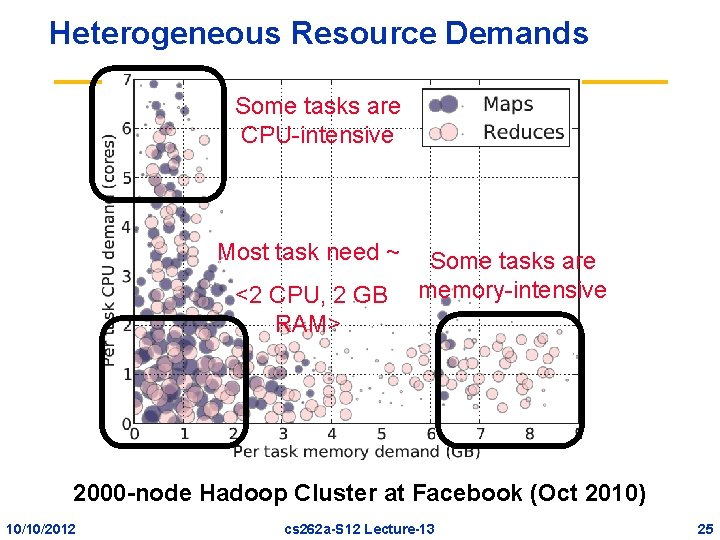

Heterogeneous Resource Demands (figure: per-task CPU and memory demands in a 2000-node Hadoop cluster at Facebook, Oct 2010)

- Some tasks are CPU-intensive
- Some tasks are memory-intensive
- Most tasks need about <2 CPU, 2 GB RAM>

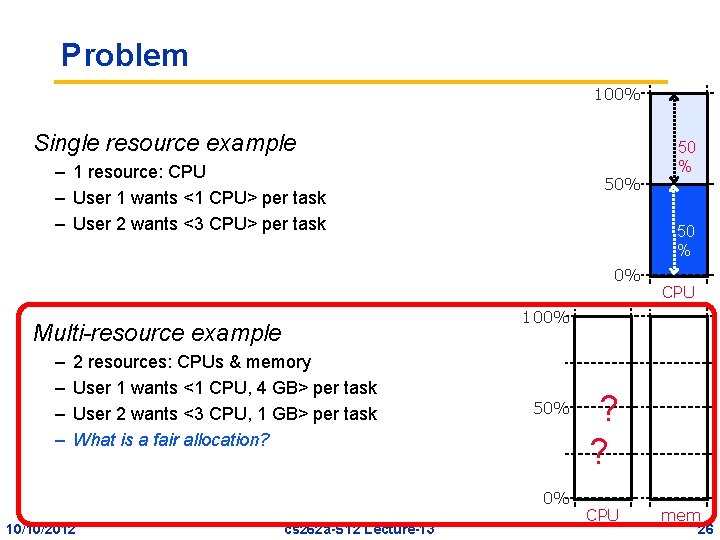

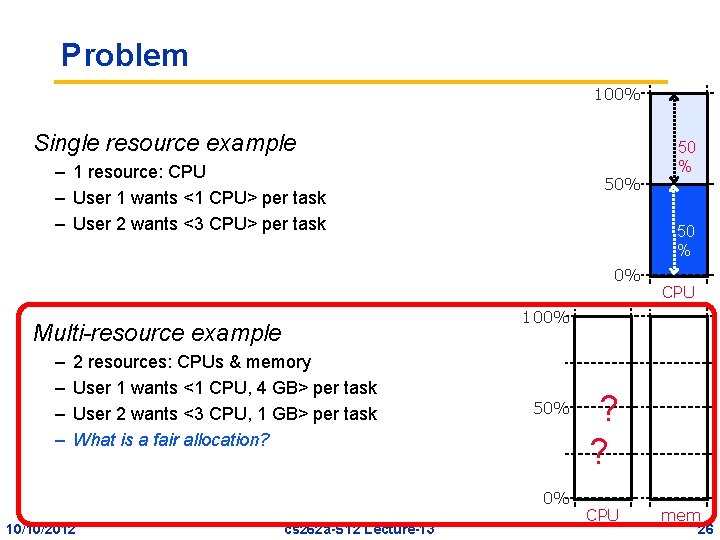

Problem

- Single-resource example
  - 1 resource: CPU
  - User 1 wants <1 CPU> per task
  - User 2 wants <3 CPU> per task
  - (figure: CPU split 50% / 50%)
- Multi-resource example
  - 2 resources: CPUs and memory
  - User 1 wants <1 CPU, 4 GB> per task
  - User 2 wants <3 CPU, 1 GB> per task
  - What is a fair allocation? (figure: CPU and memory bars marked with question marks)

Problem definition: How to fairly share multiple resources when users have heterogeneous demands on them?

Demands at Facebook

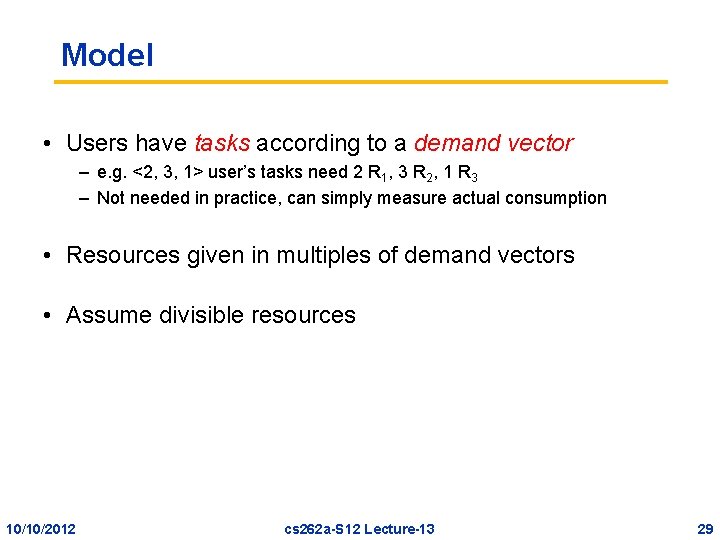

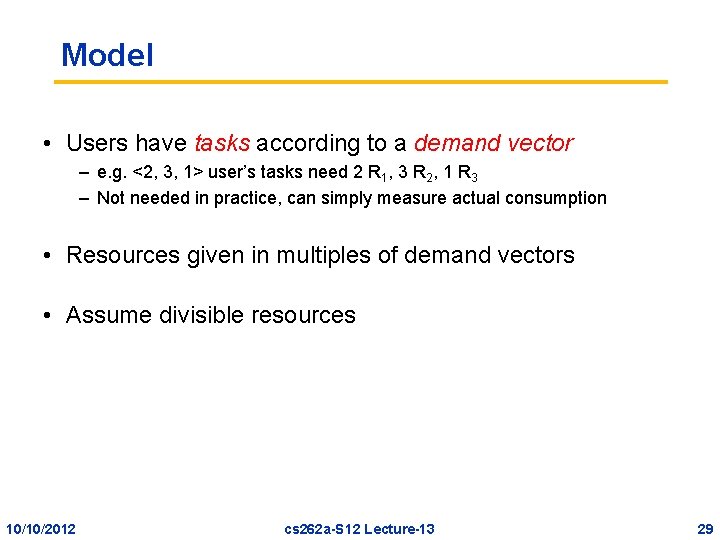

Model

- Users have tasks according to a demand vector
  - E.g., <2, 3, 1>: the user’s tasks need 2 R1, 3 R2, 1 R3
  - Not needed in practice; can simply measure actual consumption
- Resources are given in multiples of demand vectors
- Assume divisible resources

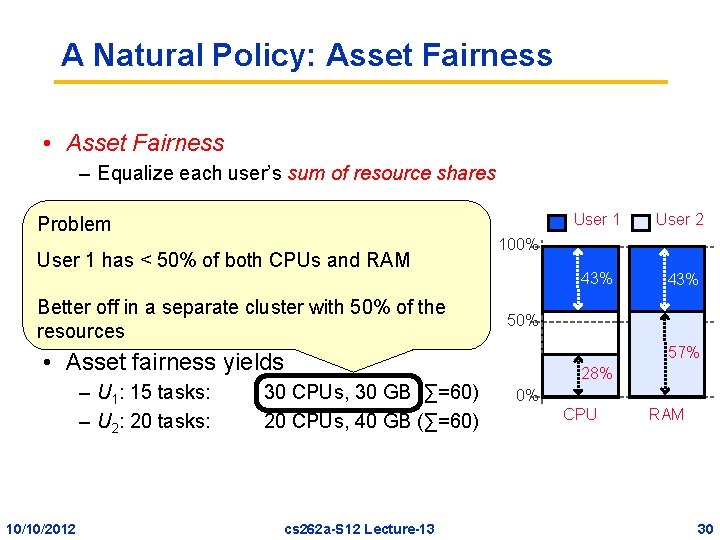

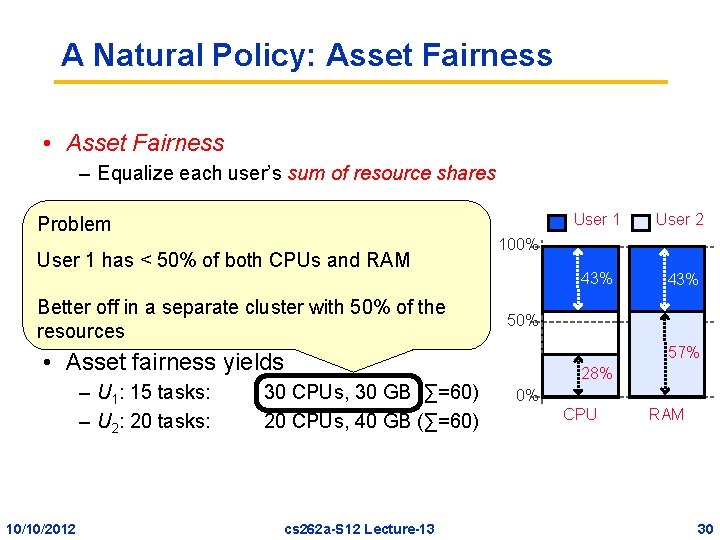

A Natural Policy: Asset Fairness

- Asset fairness
  - Equalize each user’s sum of resource shares
- Cluster with 70 CPUs, 70 GB RAM
  - U1 needs <2 CPU, 2 GB RAM> per task
  - U2 needs <1 CPU, 2 GB RAM> per task
- Asset fairness yields
  - U1: 15 tasks: 30 CPUs, 30 GB (∑=60)
  - U2: 20 tasks: 20 CPUs, 40 GB (∑=60)
- Problem: User 1 has < 50% of both CPUs and RAM
  - Better off in a separate cluster with 50% of the resources
- (figure: CPU split 43% User 1 / 28% User 2; RAM split 43% User 1 / 57% User 2)
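
The task counts on this slide can be checked with a short calculation. This is a sketch, assuming divisible tasks and that every resource is demanded by at least one user; `asset_fair_tasks` is a hypothetical name.

```python
from fractions import Fraction


def asset_fair_tasks(capacity, demands):
    """Task counts that equalize each user's sum of resource shares."""
    # Sum of resource shares consumed by one task of each user.
    cost = [sum(Fraction(d, c) for d, c in zip(dem, capacity)) for dem in demands]
    # With a common asset share s, user i runs s / cost[i] tasks.
    # The largest feasible s is fixed by the first resource to saturate.
    s = min(
        Fraction(c) / sum(Fraction(dem[r]) / cost[i] for i, dem in enumerate(demands))
        for r, c in enumerate(capacity)
    )
    return [s / k for k in cost]


tasks = asset_fair_tasks((70, 70), [(2, 2), (1, 2)])
print(tasks)  # U1 and U2 task counts
```

With 15 tasks, User 1 holds 30 of 70 CPUs and 30 of 70 GB, about 43% of each, which is why she would prefer a private cluster with half of the resources.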

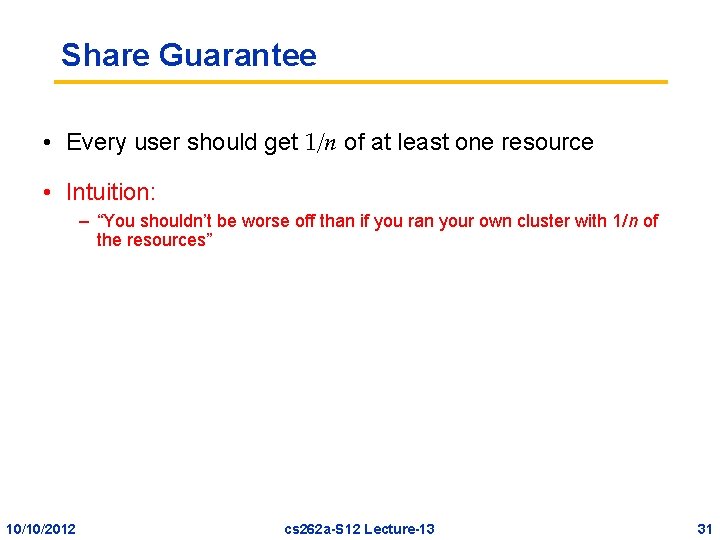

Share Guarantee

- Every user should get 1/n of at least one resource
- Intuition: “You shouldn’t be worse off than if you ran your own cluster with 1/n of the resources”

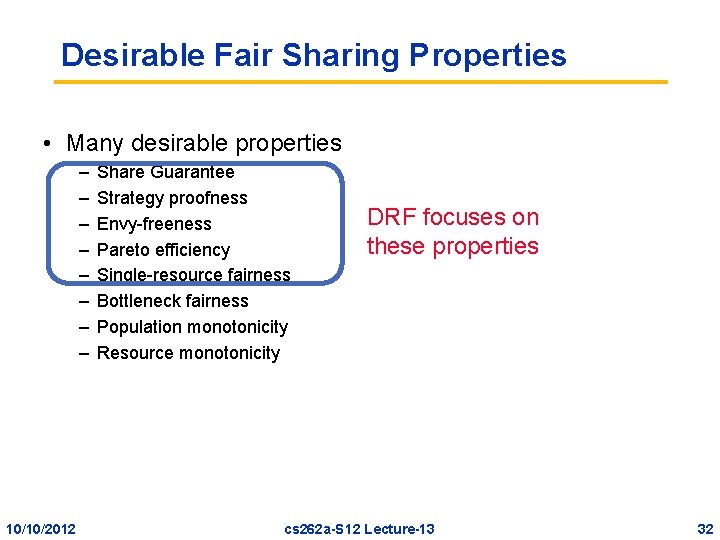

Desirable Fair Sharing Properties

- Many desirable properties
  - Share guarantee
  - Strategy-proofness
  - Envy-freeness
  - Pareto efficiency
  - Single-resource fairness
  - Bottleneck fairness
  - Population monotonicity
  - Resource monotonicity
- DRF focuses on these properties

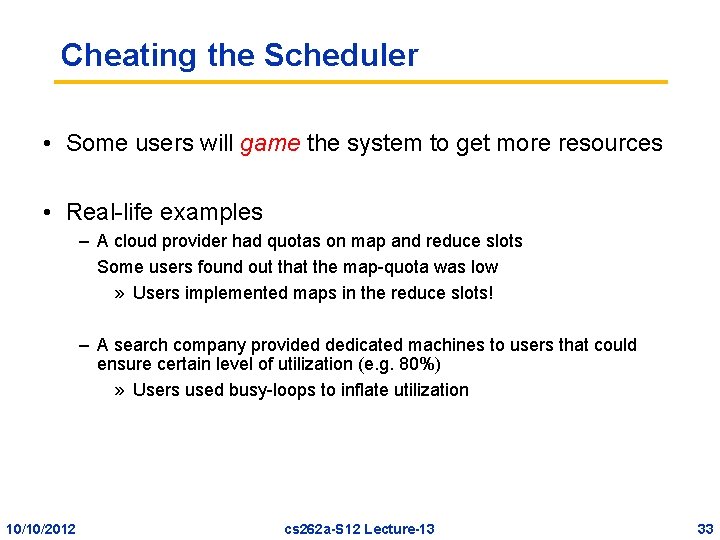

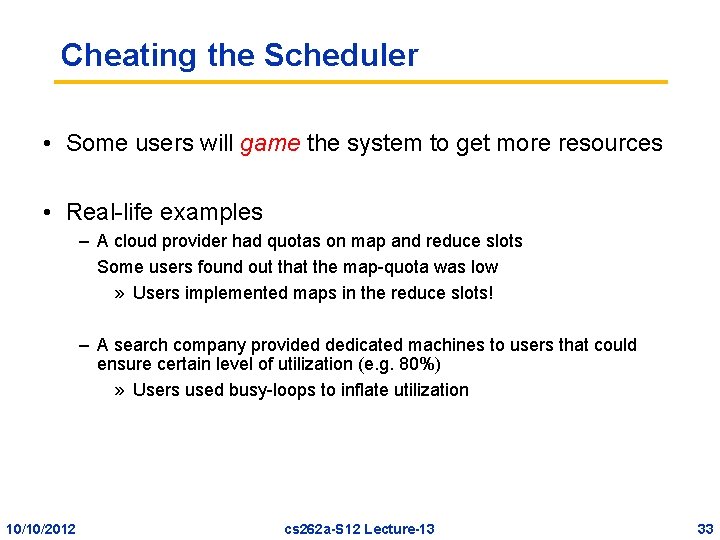

Cheating the Scheduler

- Some users will game the system to get more resources
- Real-life examples
  - A cloud provider had quotas on map and reduce slots. Some users found out that the map quota was low
    - Users implemented maps in the reduce slots!
  - A search company provided dedicated machines to users that could ensure a certain level of utilization (e.g., 80%)
    - Users used busy-loops to inflate utilization

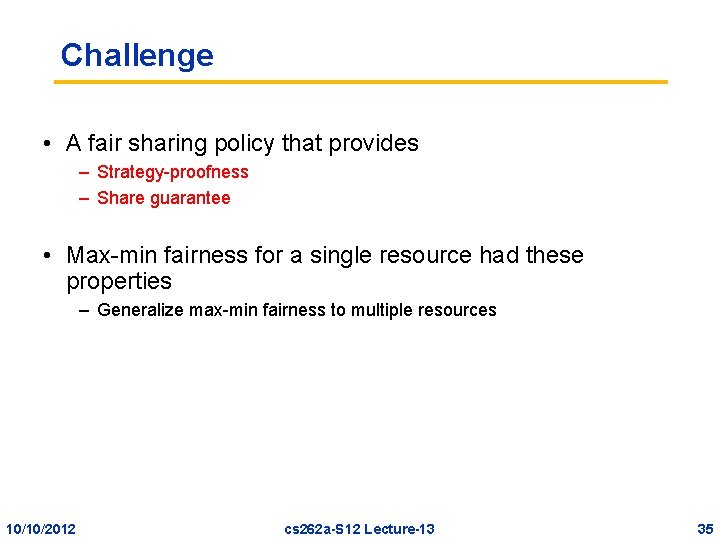

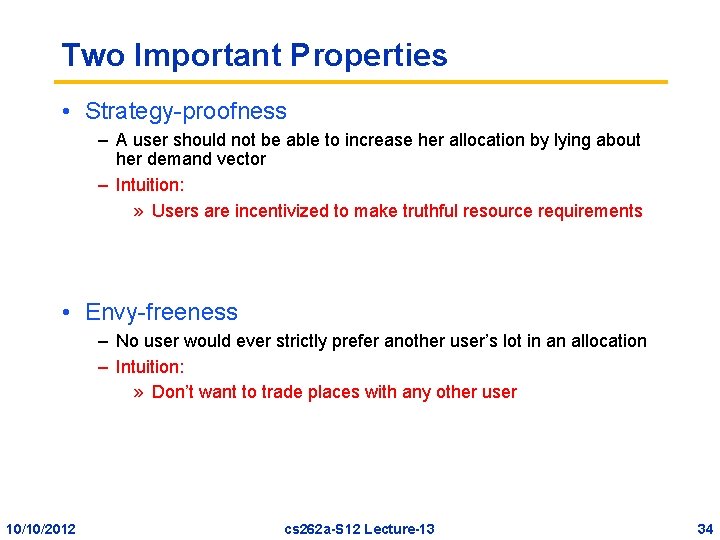

Two Important Properties

- Strategy-proofness
  - A user should not be able to increase her allocation by lying about her demand vector
  - Intuition: users are incentivized to state truthful resource requirements
- Envy-freeness
  - No user would ever strictly prefer another user’s lot in an allocation
  - Intuition: no user wants to trade places with any other user
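
Envy-freeness can be checked mechanically once "prefer" is made precise. The sketch below assumes a user prefers whichever allocation lets her run more tasks of her own demand vector; the function names are hypothetical, and the first allocation is the one from the DRF example in these slides (9 CPUs and 18 GB in total).

```python
from fractions import Fraction


def tasks_supported(allocation, demand):
    """How many (fractional) tasks with this demand fit in an allocation."""
    return min(Fraction(a, d) for a, d in zip(allocation, demand) if d > 0)


def is_envy_free(allocations, demands):
    """True if no user could run strictly more tasks with another's lot."""
    users = range(len(demands))
    return all(
        tasks_supported(allocations[j], demands[i])
        <= tasks_supported(allocations[i], demands[i])
        for i in users
        for j in users
    )


demands = [(1, 4), (3, 1)]  # <CPU, GB> per task
print(is_envy_free([(3, 12), (6, 2)], demands))   # DRF allocation
print(is_envy_free([(1, 4), (8, 14)], demands))   # lopsided allocation
```

In the second allocation User 1 can run one task with her own lot but 3.5 with User 2's, so she envies User 2.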

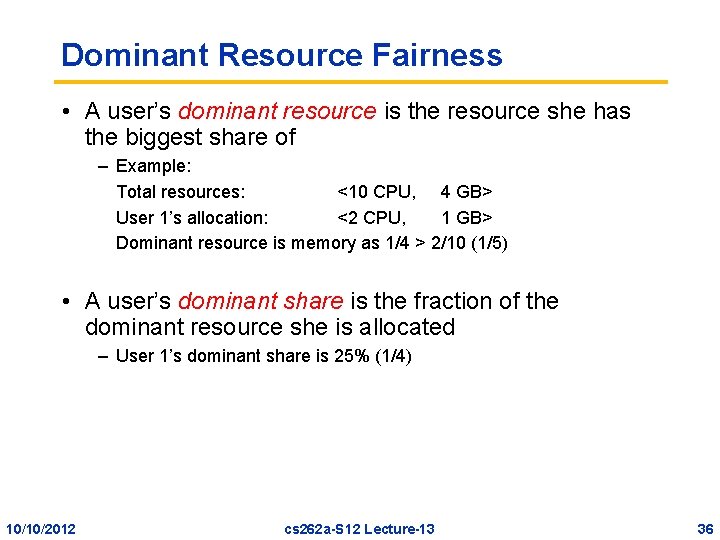

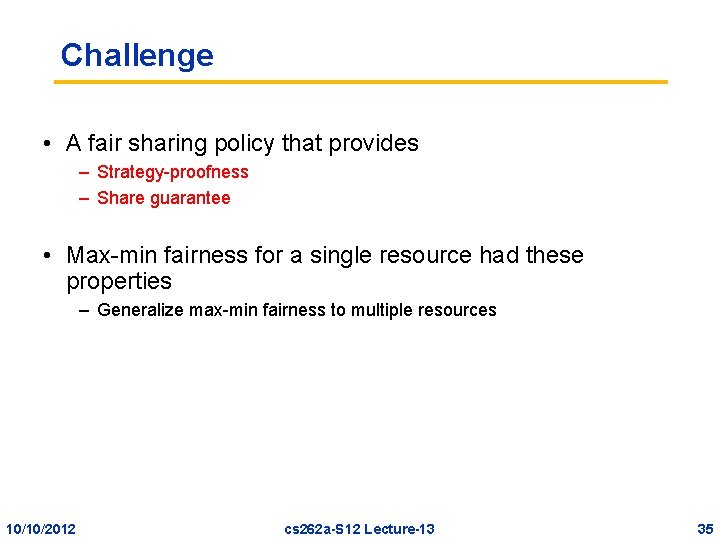

Challenge

- A fair sharing policy that provides
  - Strategy-proofness
  - Share guarantee
- Max-min fairness for a single resource had these properties
  - Generalize max-min fairness to multiple resources

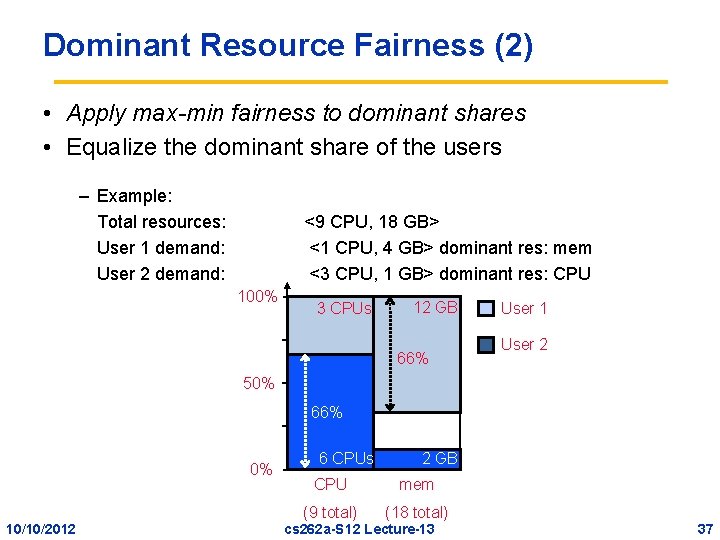

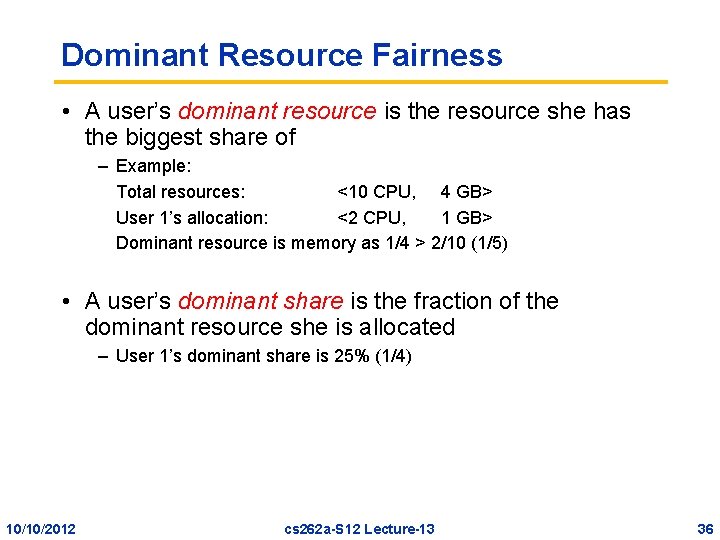

Dominant Resource Fairness

- A user’s dominant resource is the resource she has the biggest share of
  - Example: total resources <10 CPU, 4 GB>, User 1’s allocation <2 CPU, 1 GB>
  - The dominant resource is memory, as 1/4 > 2/10 (1/5)
- A user’s dominant share is the fraction of the dominant resource she is allocated
  - User 1’s dominant share is 25% (1/4)
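
The definition translates directly into code. A minimal sketch with a hypothetical function name, using the numbers from this slide:

```python
from fractions import Fraction


def dominant_share(total, allocation):
    """Return (index of dominant resource, dominant share) for one user."""
    shares = [Fraction(a, t) for a, t in zip(allocation, total)]
    index = max(range(len(shares)), key=shares.__getitem__)
    return index, shares[index]


# Resources are ordered as (CPU, memory in GB).
index, share = dominant_share((10, 4), (2, 1))
print(index, share)
```

Index 1 is memory, and the dominant share is 1/4, matching the 25% on the slide.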

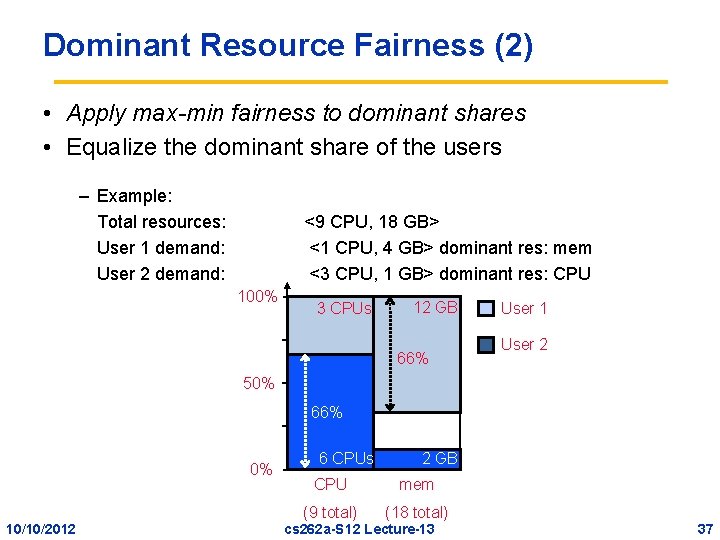

Dominant Resource Fairness (2)

- Apply max-min fairness to dominant shares
- Equalize the dominant share of the users
  - Example:
    - Total resources: <9 CPU, 18 GB>
    - User 1 demand: <1 CPU, 4 GB>, dominant resource: memory
    - User 2 demand: <3 CPU, 1 GB>, dominant resource: CPU
  - Result (figure): User 1 gets 3 CPUs and 12 GB (66% of memory); User 2 gets 6 CPUs and 2 GB (66% of CPU)
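
The example allocation can be derived by raising a common dominant share until the first resource saturates. This is a sketch under the assumptions of divisible tasks and strictly positive demands (in which case the allocation freezes at the first saturation); `drf_allocation` is a hypothetical name, not the paper's algorithm listing.

```python
from fractions import Fraction


def drf_allocation(total, demands):
    """Equal-dominant-share allocation for positive demand vectors.

    Returns (dominant share, list of task counts per user).
    """
    # Dominant share consumed by a single task of each user.
    per_task = [max(Fraction(d, t) for d, t in zip(dem, total)) for dem in demands]
    # With a common dominant share s, user i runs s / per_task[i] tasks.
    # Grow s until the first resource is fully used.
    s = min(
        Fraction(t) / sum(Fraction(dem[r]) / per_task[i] for i, dem in enumerate(demands))
        for r, t in enumerate(total)
    )
    return s, [s / p for p in per_task]


share, tasks = drf_allocation((9, 18), [(1, 4), (3, 1)])
print(share, tasks)
```

The result is a dominant share of 2/3 with 3 tasks for User 1 (3 CPUs, 12 GB) and 2 tasks for User 2 (6 CPUs, 2 GB). CPU is the resource that saturates.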

DRF is Fair

- DRF is strategy-proof
- DRF satisfies the share guarantee
- DRF allocations are envy-free

See the DRF paper for proofs

Online DRF Scheduler

Whenever there are available resources and tasks to run: schedule a task for the user with the smallest dominant share

- O(log n) time per decision using binary heaps
- Need to determine demand vectors
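
A heap-based version of this loop might look as follows. It is a sketch, not the scheduler from the paper: it assumes every user always has more tasks to run, uses integer demands, and drops a user from the heap once her next task no longer fits (an assumption made here so that the remaining users can still be served). The name `online_drf` is hypothetical.

```python
import heapq
from fractions import Fraction


def online_drf(total, demands):
    """Launch tasks one at a time for the user with the smallest dominant share.

    Returns (tasks launched per user, resources used).
    """
    used = [0] * len(total)
    tasks = [0] * len(demands)
    heap = [(Fraction(0), user) for user in range(len(demands))]
    heapq.heapify(heap)
    while heap:
        _share, user = heapq.heappop(heap)  # O(log n) per decision
        demand = demands[user]
        if any(u + d > t for u, d, t in zip(used, demand, total)):
            continue  # next task does not fit; stop considering this user
        used = [u + d for u, d in zip(used, demand)]
        tasks[user] += 1
        share = max(Fraction(tasks[user] * d, t) for d, t in zip(demand, total))
        heapq.heappush(heap, (share, user))
    return tasks, used


print(online_drf((9, 18), [(1, 4), (3, 1)]))
```

On the example with <9 CPU, 18 GB>, the loop launches 3 tasks for the first user and 2 for the second, using all 9 CPUs and 14 GB.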

Alternative: Use an Economic Model

- Approach
  - Set prices for each good
  - Let users buy what they want
- How do we determine the right prices for different goods?
- Let the market determine the prices
- Competitive Equilibrium from Equal Incomes (CEEI)
  - Give each user 1/n of every resource
  - Let users trade in a perfectly competitive market
- Not strategy-proof!

Determining Demand Vectors

- They can be measured
  - Look at the actual resource consumption of a user
- They can be provided by the user
  - What is done today
- In both cases, strategy-proofness incentivizes users to consume resources wisely

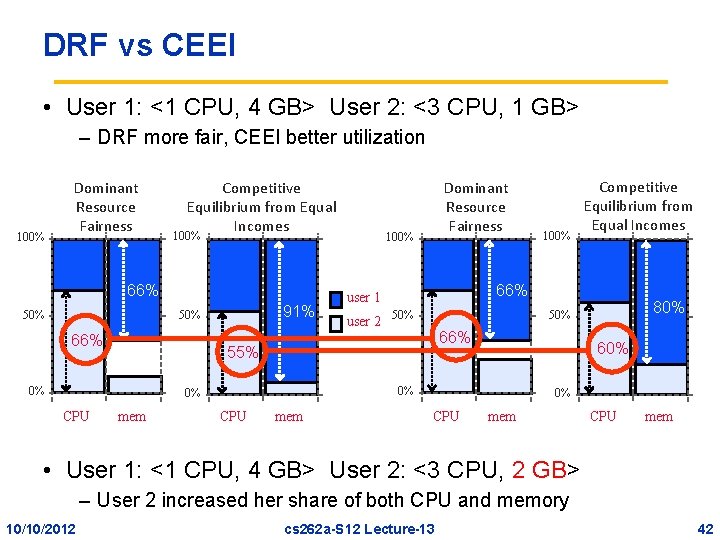

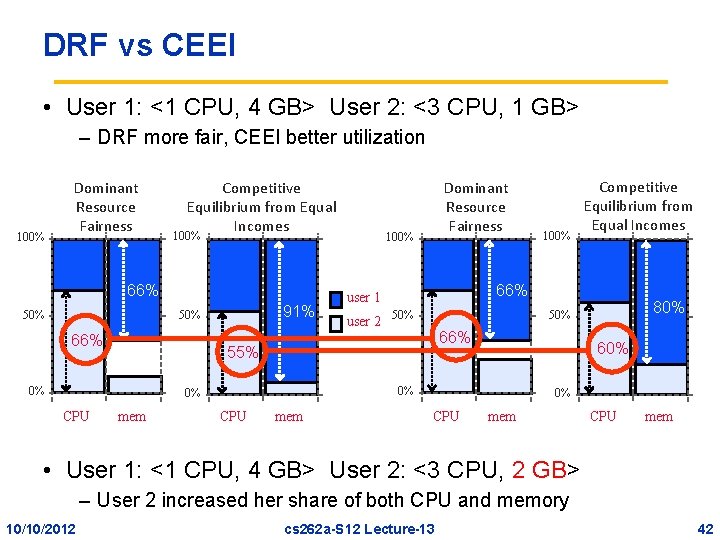

DRF vs CEEI

- User 1: <1 CPU, 4 GB>, User 2: <3 CPU, 1 GB>
  - DRF more fair, CEEI better utilization
- User 1: <1 CPU, 4 GB>, User 2: <3 CPU, 2 GB>
  - User 2 increased her share of both CPU and memory

(figure: CPU and memory shares of user 1 and user 2 under Dominant Resource Fairness and under Competitive Equilibrium from Equal Incomes, for both demand settings)

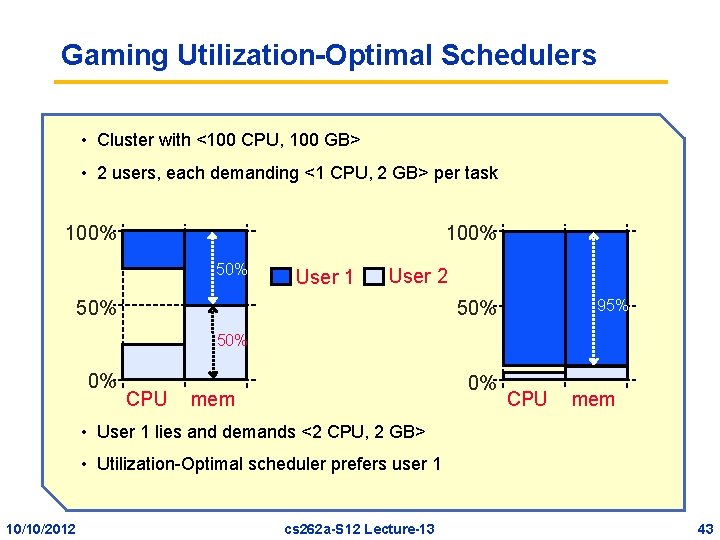

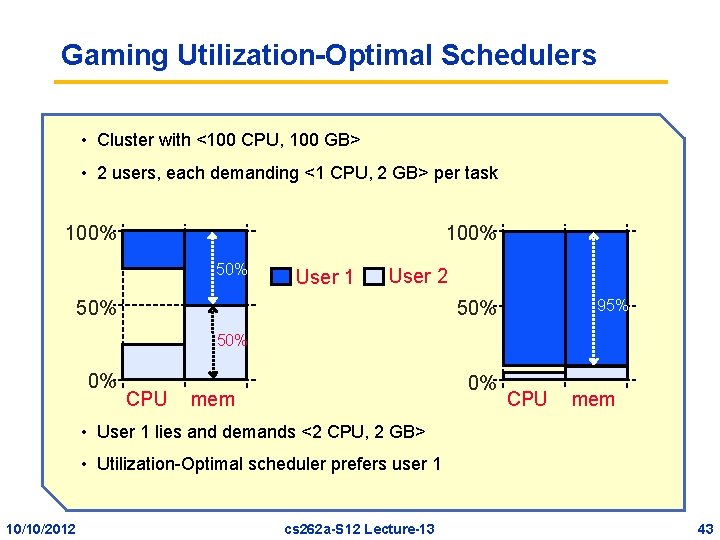

Gaming Utilization-Optimal Schedulers

- Cluster with <100 CPU, 100 GB>
- 2 users, each demanding <1 CPU, 2 GB> per task
- User 1 lies and demands <2 CPU, 2 GB>
- A utilization-optimal scheduler prefers user 1

(figure: CPU and memory shares of User 1 and User 2 before and after the lie)

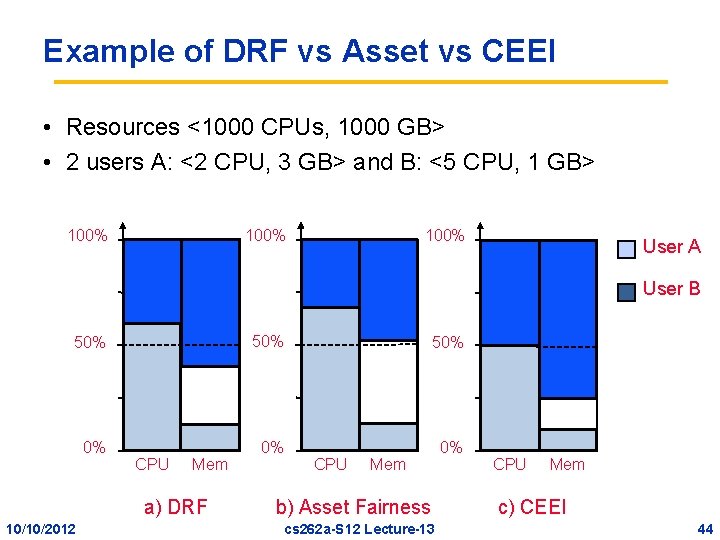

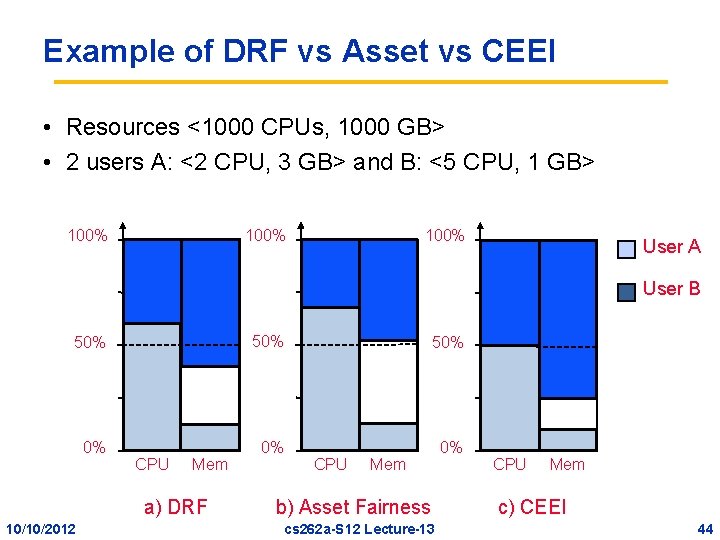

Example of DRF vs Asset vs CEEI

- Resources: <1000 CPUs, 1000 GB>
- 2 users, A: <2 CPU, 3 GB> and B: <5 CPU, 1 GB>

(figure: CPU and memory shares of User A and User B under a) DRF, b) Asset Fairness, c) CEEI)

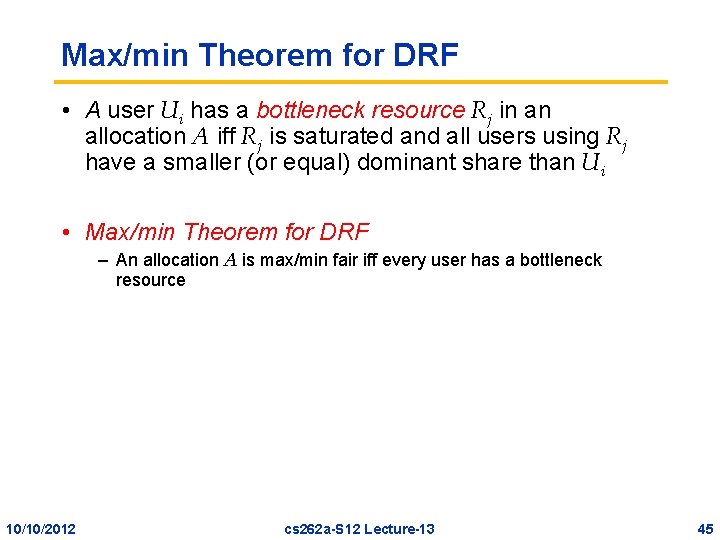

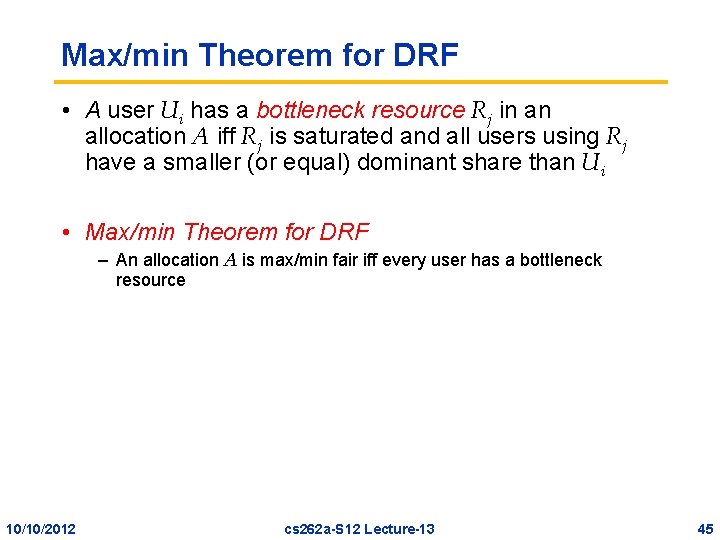

Max/min Theorem for DRF

- A user Ui has a bottleneck resource Rj in an allocation A iff Rj is saturated and all users using Rj have a smaller (or equal) dominant share than Ui
- Max/min theorem for DRF
  - An allocation A is max/min fair iff every user has a bottleneck resource

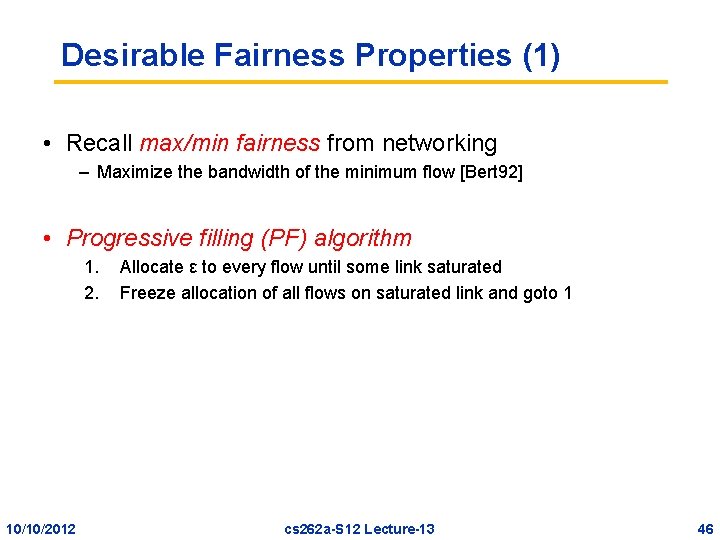

Desirable Fairness Properties (1)

- Recall max/min fairness from networking
  - Maximize the bandwidth of the minimum flow [Bert 92]
- Progressive filling (PF) algorithm
  1. Allocate ε to every flow until some link is saturated
  2. Freeze the allocation of all flows on the saturated link and go to 1
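
Progressive filling for network flows can be sketched without the ε loop by jumping straight to the next saturation point. The code assumes every flow crosses at least one link and all capacities are positive; the function name and the toy topology are hypothetical.

```python
from fractions import Fraction


def progressive_filling(capacity, routes):
    """Max/min fair flow rates.

    capacity: dict link -> capacity; routes: dict flow -> list of links.
    """
    rate = {flow: Fraction(0) for flow in routes}
    residual = {link: Fraction(c) for link, c in capacity.items()}
    active = set(routes)
    while active:
        # Number of unfrozen flows crossing each link.
        load = {l: sum(1 for f in active if l in routes[f]) for l in capacity}
        # Largest equal increment before some link saturates (step 1).
        step = min(residual[l] / n for l, n in load.items() if n > 0)
        for f in active:
            rate[f] += step
        for l, n in load.items():
            residual[l] -= step * n
        # Freeze all flows on saturated links, then repeat (step 2).
        saturated = {l for l, n in load.items() if n > 0 and residual[l] == 0}
        active = {f for f in active if not saturated & set(routes[f])}
    return rate


rates = progressive_filling(
    {"A": 10, "B": 4},
    {"f1": ["A"], "f2": ["A", "B"], "f3": ["B"]},
)
print(rates)
```

Link B saturates first at rate 2, which freezes f2 and f3; f1 then grows alone until link A is full. DRF applies the same idea to dominant shares instead of flow rates.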

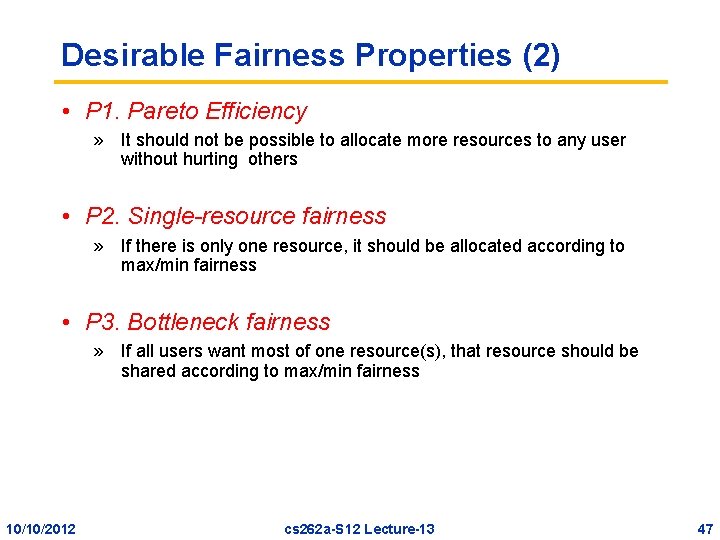

Desirable Fairness Properties (2)

- P1. Pareto efficiency
  - It should not be possible to allocate more resources to any user without hurting others
- P2. Single-resource fairness
  - If there is only one resource, it should be allocated according to max/min fairness
- P3. Bottleneck fairness
  - If all users want most of one resource, that resource should be shared according to max/min fairness

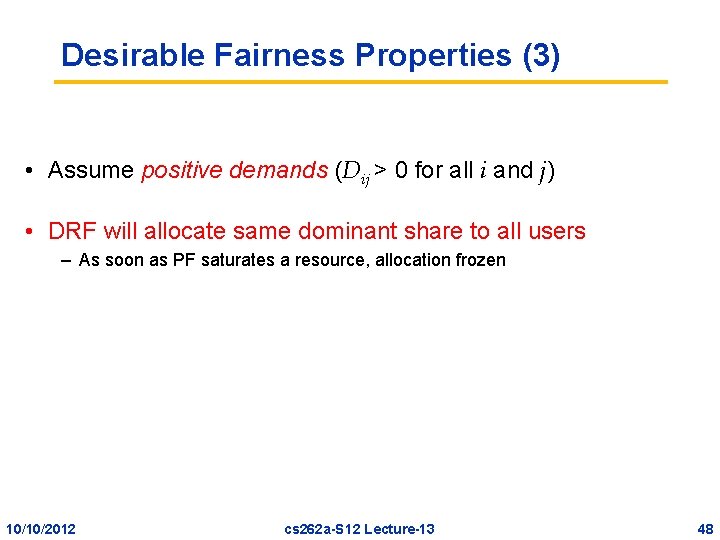

Desirable Fairness Properties (3)

- Assume positive demands (Dij > 0 for all i and j)
- DRF will allocate the same dominant share to all users
  - As soon as PF saturates a resource, the allocation is frozen

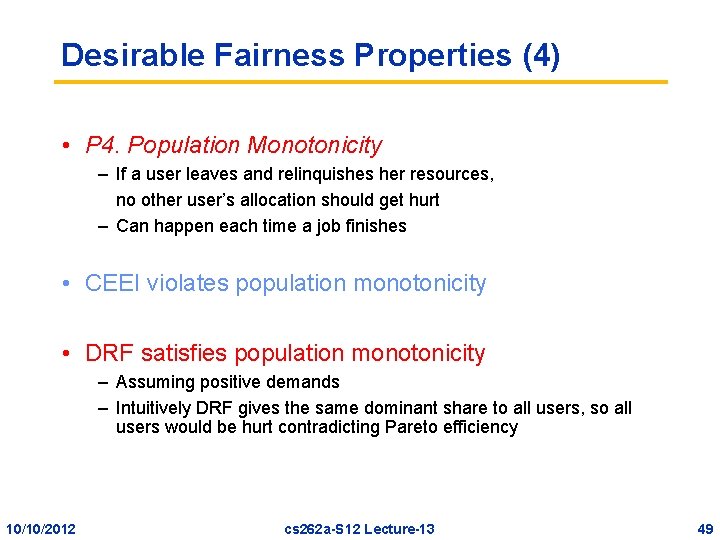

Desirable Fairness Properties (4)

- P4. Population monotonicity
  - If a user leaves and relinquishes her resources, no other user’s allocation should get hurt
  - Can happen each time a job finishes
- CEEI violates population monotonicity
- DRF satisfies population monotonicity
  - Assuming positive demands
  - Intuitively, DRF gives the same dominant share to all users, so if one user were hurt, all users would be hurt, contradicting Pareto efficiency

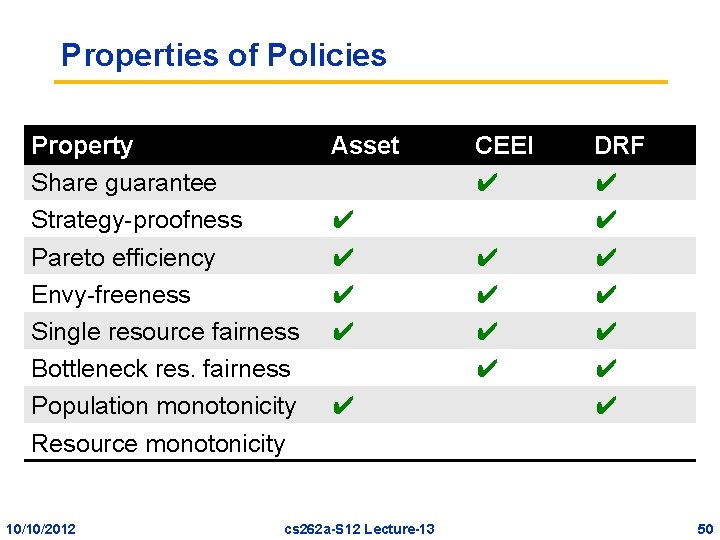

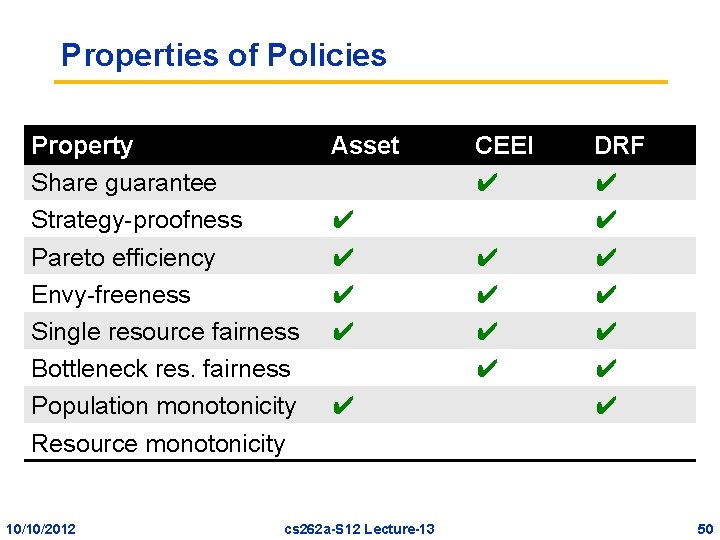

Properties of Policies (table: which of share guarantee, strategy-proofness, Pareto efficiency, envy-freeness, single-resource fairness, bottleneck-resource fairness, population monotonicity, and resource monotonicity are satisfied by Asset Fairness, CEEI, and DRF)

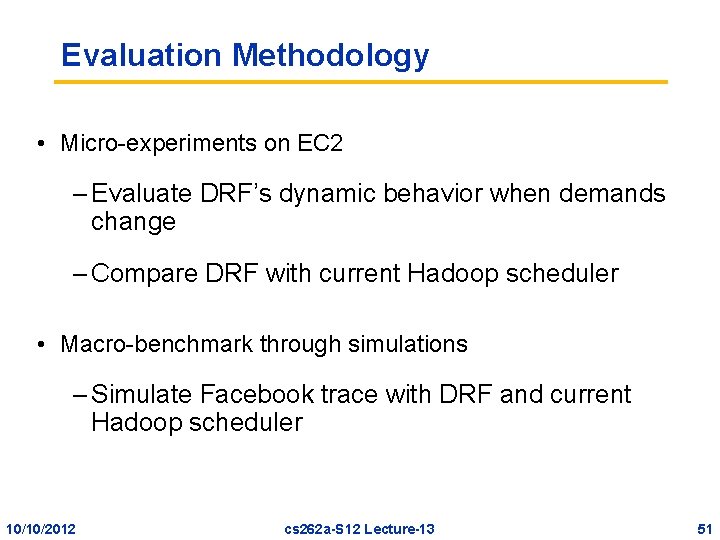

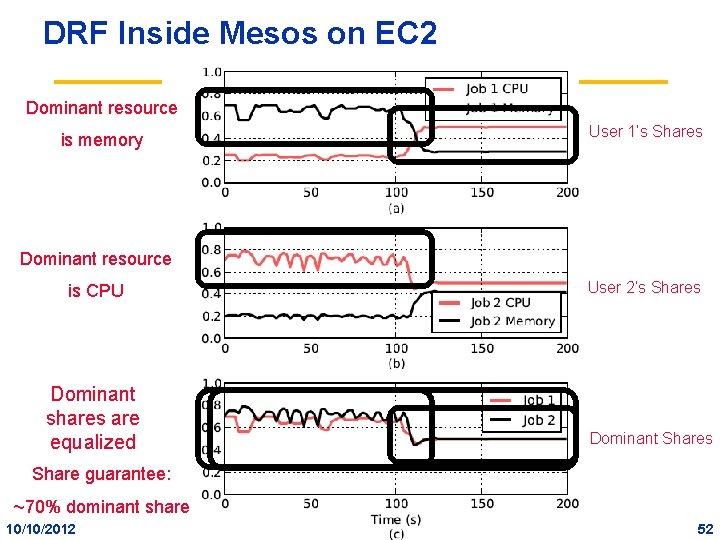

Evaluation Methodology

- Micro-experiments on EC2
  - Evaluate DRF’s dynamic behavior when demands change
  - Compare DRF with the current Hadoop scheduler
- Macro-benchmark through simulations
  - Simulate a Facebook trace with DRF and the current Hadoop scheduler

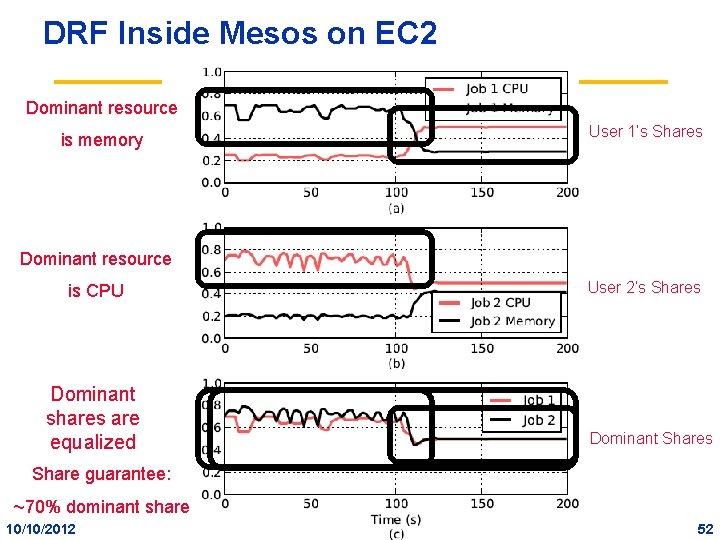

DRF Inside Mesos on EC2 (figure, three panels: User 1’s shares, where the dominant resource is memory; User 2’s shares, where the dominant resource is CPU; dominant shares, which are equalized. Share guarantee: ~70% dominant share)

Fairness in Today’s Datacenters

- Hadoop Fair Scheduler/capacity/Quincy
  - Each machine consists of k slots (e.g., k=14)
  - Run at most one task per slot
  - Give jobs an “equal” number of slots, i.e., apply max-min fairness to slot count
- This is what the DRF paper compares against

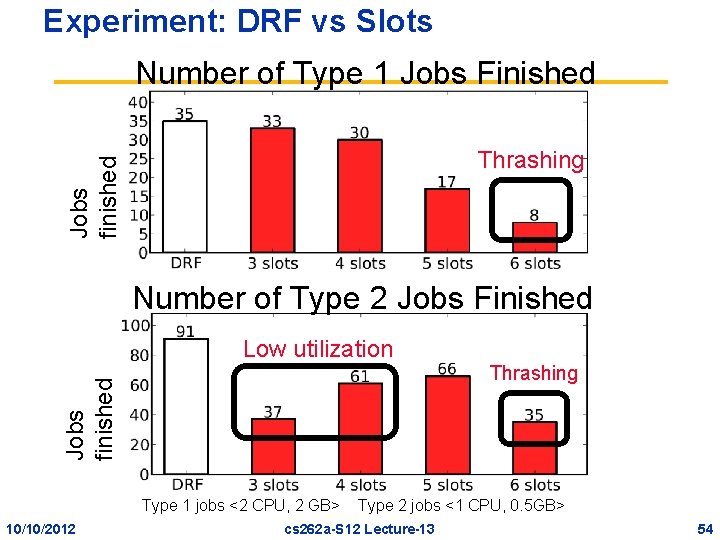

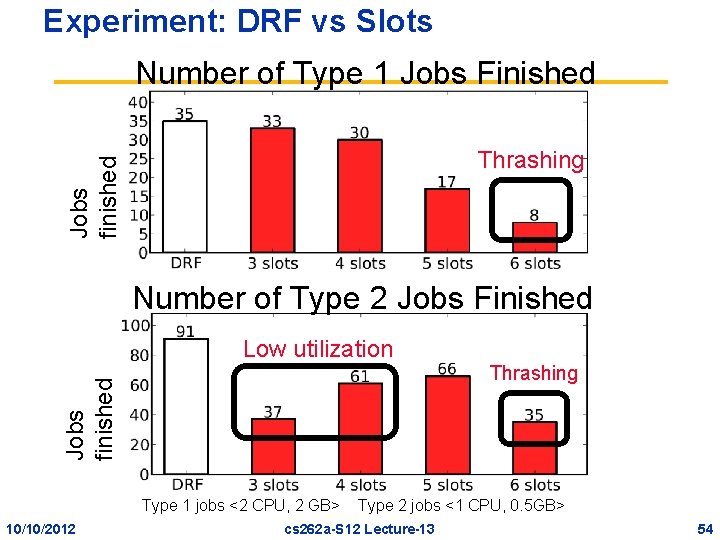

Experiment: DRF vs Slots (figure, two panels: number of Type 1 jobs finished and number of Type 2 jobs finished; annotations: low utilization, thrashing)

- Type 1 jobs: <2 CPU, 2 GB>
- Type 2 jobs: <1 CPU, 0.5 GB>

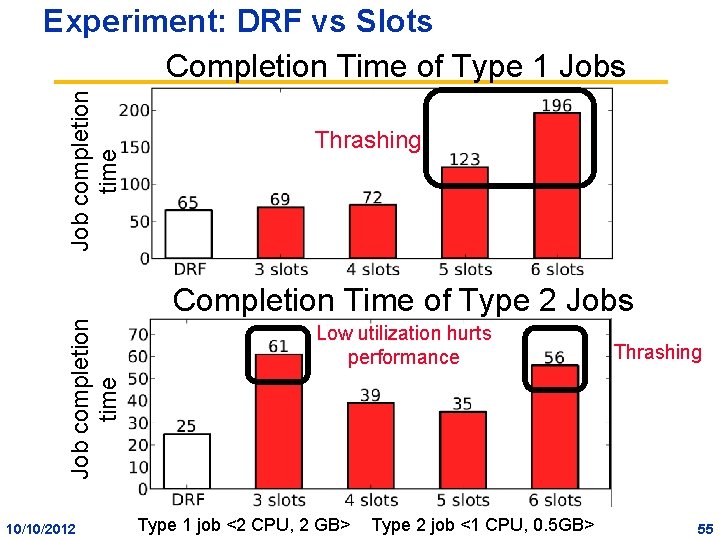

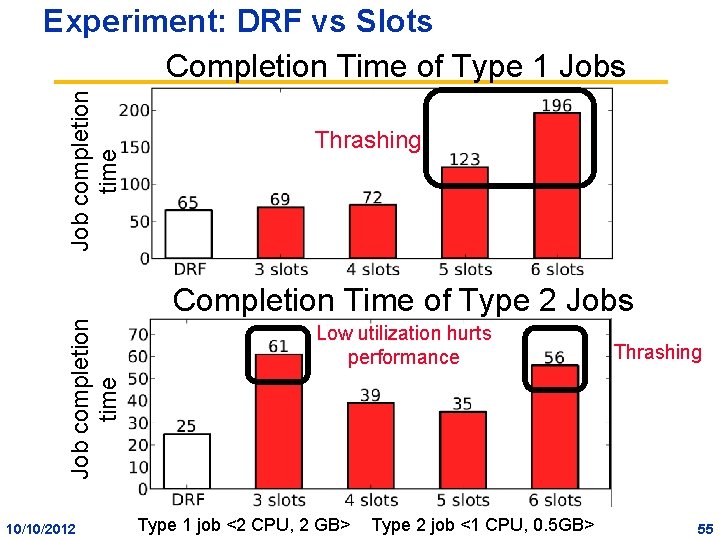

Experiment: DRF vs Slots (figure, two panels: completion time of Type 1 jobs and completion time of Type 2 jobs; annotations: low utilization hurts performance, thrashing)

- Type 1 jobs: <2 CPU, 2 GB>
- Type 2 jobs: <1 CPU, 0.5 GB>

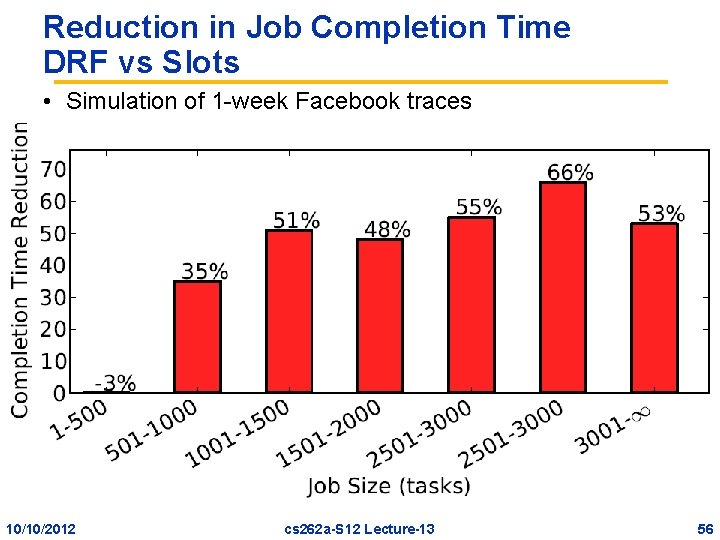

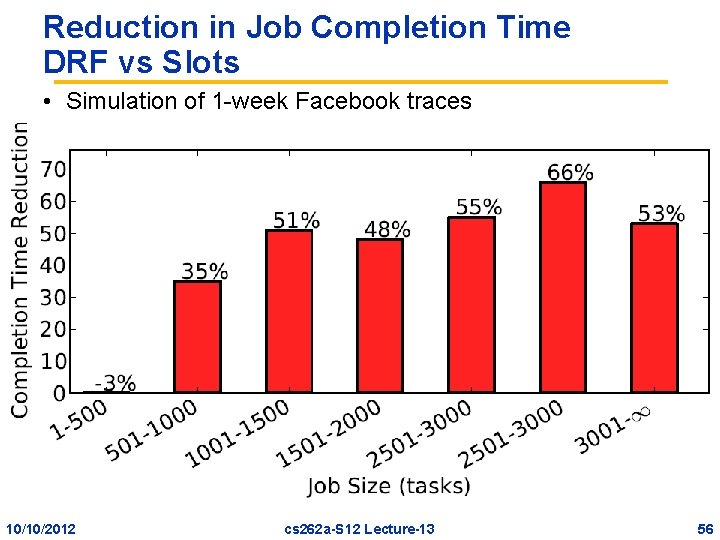

Reduction in Job Completion Time: DRF vs Slots

- Simulation of 1-week Facebook traces

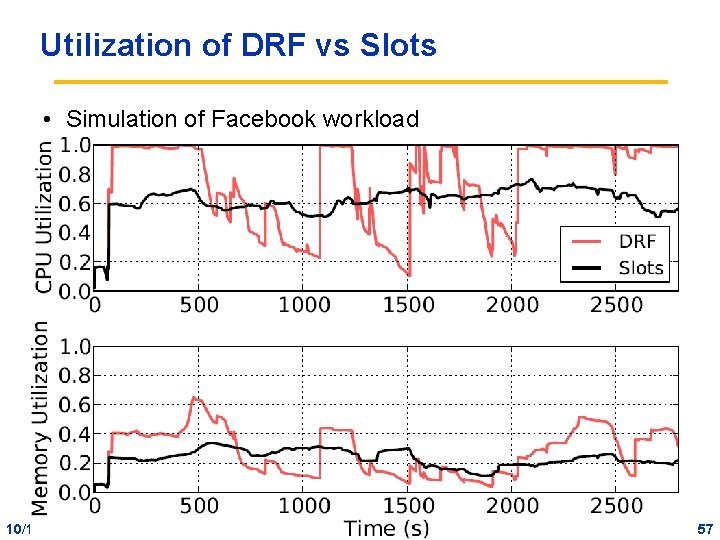

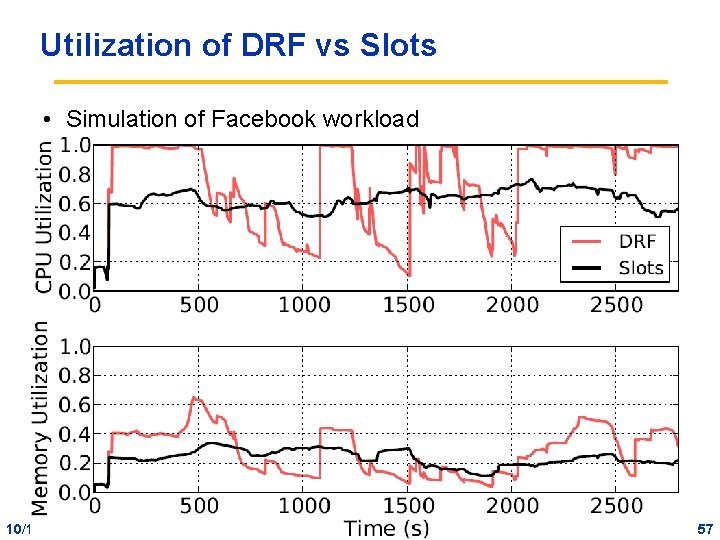

Utilization of DRF vs Slots

- Simulation of Facebook workload

alig@cs.berkeley.edu

Summary

- DRF provides multiple-resource fairness in the presence of heterogeneous demand
  - First generalization of max-min fairness to multiple resources
- DRF’s properties
  - Share guarantee: at least 1/n of one resource
  - Strategy-proofness: lying can only hurt you
  - Performs better than current approaches

Is this a good paper?

- What were the authors’ goals?
- What about the evaluation/metrics?
- Did they convince you that this was a good system/approach?
- Were there any red flags?
- What mistakes did they make?
- Does the system/approach meet the “Test of Time” challenge?
- How would you review this paper today?