EECS 262a Advanced Topics in Computer Systems

- Slides: 36

EECS 262a Advanced Topics in Computer Systems
Lecture 15: Agile VM Migration / Global Data Plane / DataCapsules
October 11th, 2018
John Kubiatowicz
Electrical Engineering and Computer Sciences, University of California, Berkeley
http://www.eecs.berkeley.edu/~kubitron/cs262

Today’s Papers
• You Can Teach Elephants to Dance. Kiryong Ha, Yoshihisa Abe, Thomas Eiszler, Zhuo Chen, Wenlu Hu, Brandon Amos, Rohit Upadhyaya, Padmanabhan Pillai, Mahadev Satyanarayanan, 2017
• Toward a Global Data Infrastructure. Nitesh Mor, Ben Zhang, John Kolb, Douglas S. Chan, Nikhil Goyal, Nicholas Sun, Ken Lutz, Eric Allman, John Wawrzynek, Edward A. Lee, and John Kubiatowicz, 2016
• Thoughts?
10/11/2018 cs262a-F18 Lecture-15

Recall: Edge Computing
• Wikipedia: Edge computing is a distributed computing paradigm in which computation is largely or completely performed on distributed device nodes known as smart devices or edge devices, as opposed to primarily taking place in a centralized cloud environment.
• Why compute on the edge?
– Latency: Importance of human interactivity
– Privacy: Keep sensitive data local
– Reliability: Keep computing during network partitions

Why VM Encapsulation at Edge?
• Lightweight mechanisms give performance and scalability
– Docker, Linux containers, other in-kernel mechanisms
– Focus on lightweight encapsulation
• Other attributes to consider (that favor VMs):
– Safety: Protect infrastructure from potentially malicious software
– Isolation: Hide actions of mutually untrusting executions from one another on a multi-tenant cloudlet
– Transparency: Ability to run unmodified app code without recompiling or relinking. Allows reuse of existing software for rapid deployment
– Deployability: The ability to easily maintain cloudlets in the field and create mobile apps that have a high likelihood of finding a software-compatible cloudlet anywhere in the world
• In short
– VMs have many advantages in the edge environment
– Can you get the above advantages without a full guest OS?

Applications explored
• App 1: periodically sends accelerometer readings from a mobile device to a Linux back-end that performs a compute-intensive physics simulation, and returns an image to be rendered
• App 2: ships an image from a mobile device to a Windows back-end, where face recognition is performed and labels corresponding to identified faces are returned
• App 3: an augmented reality application that ships an image from a mobile device to a Windows back-end that identifies landmarks, and returns a modified image with these annotations
• App 4: ships an image from a mobile device to a Linux back-end that performs object detection, and returns the labels of identified objects

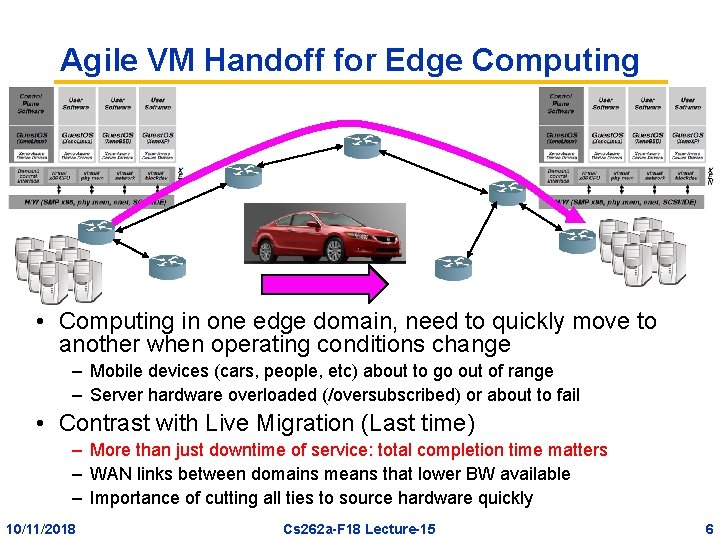

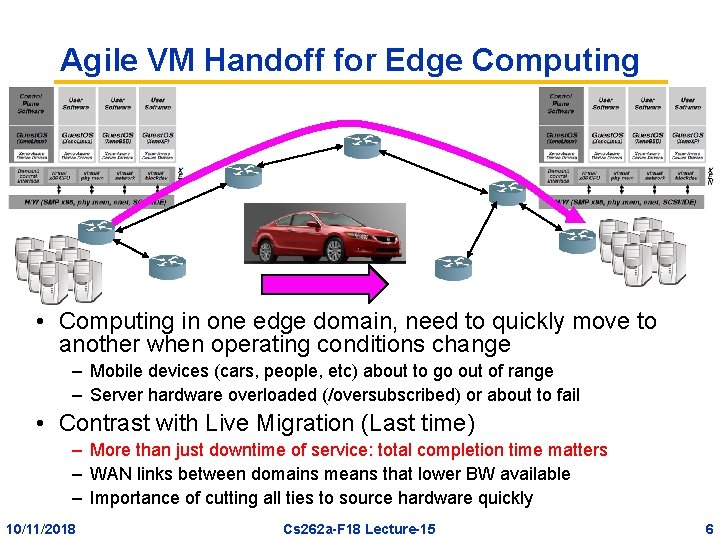

Agile VM Handoff for Edge Computing
• Computing in one edge domain, need to quickly move to another when operating conditions change
– Mobile devices (cars, people, etc.) about to go out of range
– Server hardware overloaded/oversubscribed or about to fail
• Contrast with Live Migration (last time)
– More than just downtime of service: total completion time matters
– WAN links between domains mean that less BW is available
– Importance of cutting all ties to source hardware quickly

Basic Design and Implementation
• VM Handoff principles:
– Every non-transfer is a win: Use deduplication, compression and delta encoding to eliminate transfers
– Keep the network busy: Network bandwidth is a precious resource, and should be kept at the highest level of utilization
– Go with the flow: Adapt at fine time granularity to network bandwidth and cloudlet compute resources
• Contrast with Live Migration:
– Live Migration tries to balance impact on network resources
– Live Migration tries to minimize downtime
– Live Migration assumes source hardware can stay up longer
– Live Migration assumes destination has no common “base” from which to start transfer process (and enable deduplication from base)
• Note that Data Deduplication is an essential element of WAN optimization appliances
– Extremely successful at reducing network transmission
– Done on transmitted data (“read-only”), thus does not affect consistency

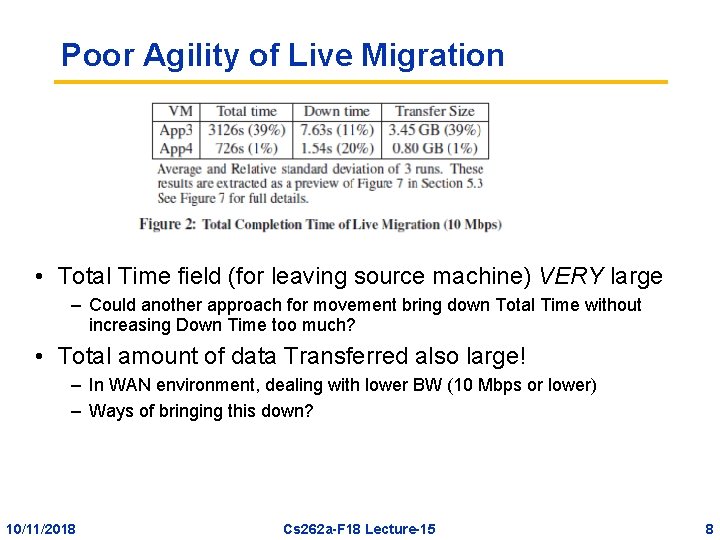

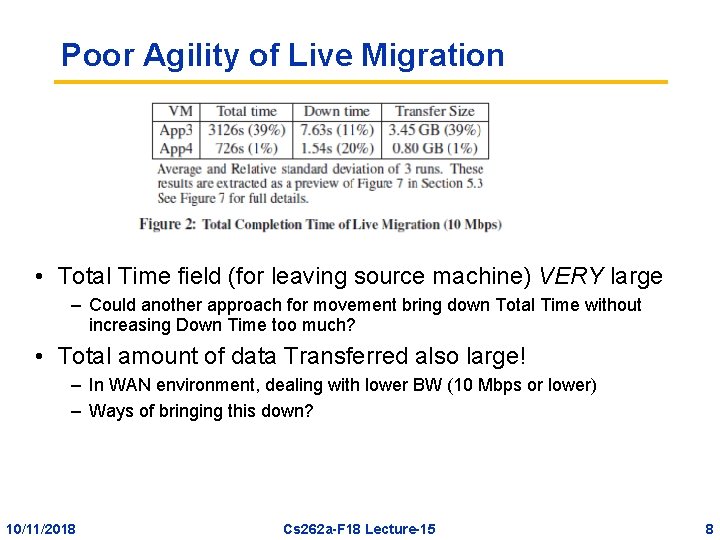

Poor Agility of Live Migration
• Total Time field (for leaving source machine) VERY large
– Could another approach for movement bring down Total Time without increasing Down Time too much?
• Total amount of data transferred also large!
– In WAN environment, dealing with lower BW (10 Mbps or lower)
– Ways of bringing this down?

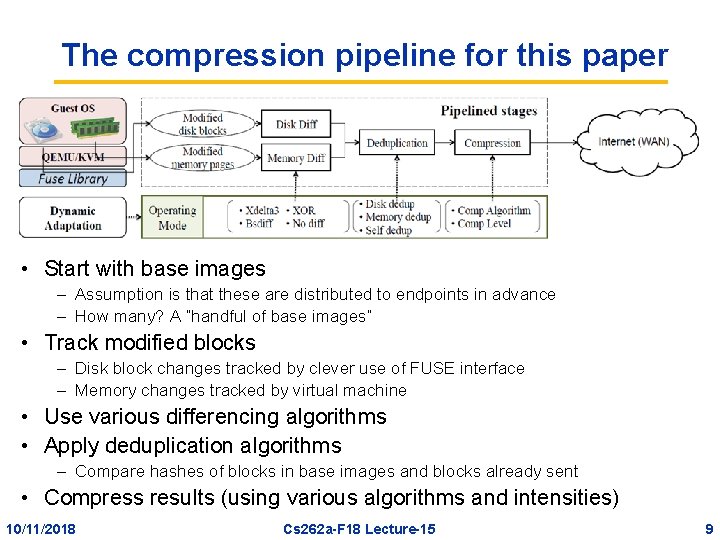

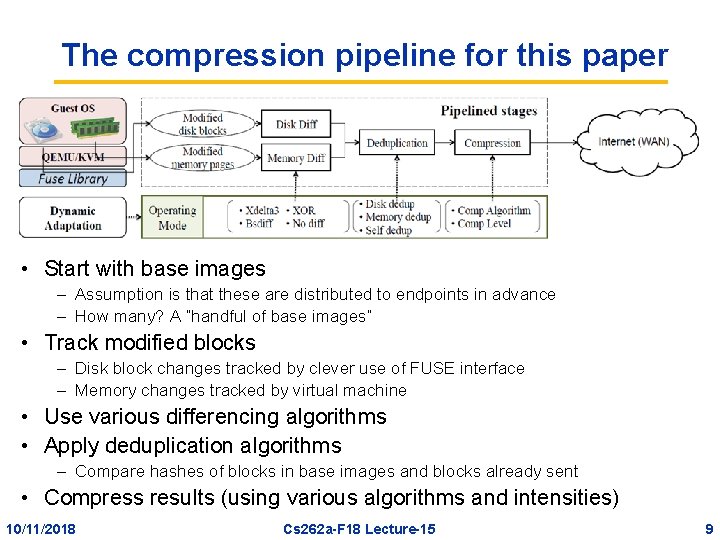

The compression pipeline for this paper
• Start with base images
– Assumption is that these are distributed to endpoints in advance
– How many? A “handful of base images”
• Track modified blocks
– Disk block changes tracked by clever use of FUSE interface
– Memory changes tracked by virtual machine
• Use various differencing algorithms
• Apply deduplication algorithms
– Compare hashes of blocks in base images and blocks already sent
• Compress results (using various algorithms and intensities)
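The stages above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: it assumes base and current images of equal size, reduces the differencing step to skipping unmodified blocks (no xdelta-style delta within a block), uses SHA-256 for block hashes and zlib for compression, and the names `encode`, `decode`, and `split_blocks` are hypothetical.

```python
import hashlib
import zlib

BLOCK = 4096  # bytes per block (assumed; matches the 4K granularity on the slides)


def split_blocks(data: bytes) -> list:
    """Split data into fixed-size blocks."""
    return [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]


def encode(base: bytes, current: bytes, level: int = 6) -> list:
    """Describe `current` relative to `base` as (index, kind, value) records."""
    if len(base) != len(current):
        raise ValueError("sketch assumes equal-sized images")
    base_blocks = split_blocks(base)
    # Hash index over base-image blocks, assumed to exist at the destination too.
    known = {hashlib.sha256(b).digest(): ("base", i)
             for i, b in enumerate(base_blocks)}
    records = []
    for i, blk in enumerate(split_blocks(current)):
        if blk == base_blocks[i]:
            continue  # unmodified block: nothing to transfer
        digest = hashlib.sha256(blk).digest()
        if digest in known:
            # Deduplicated: send only a pointer to a base block or an earlier sent block.
            records.append((i, "ref", known[digest]))
        else:
            records.append((i, "data", zlib.compress(blk, level)))
            known[digest] = ("sent", i)
    return records


def decode(base: bytes, records: list) -> bytes:
    """Rebuild the current image at the destination from base + records."""
    base_blocks = split_blocks(base)
    out = list(base_blocks)
    for i, kind, value in records:
        if kind == "data":
            out[i] = zlib.decompress(value)
        else:
            source, j = value
            out[i] = base_blocks[j] if source == "base" else out[j]
    return b"".join(out)
```

Only `data` records carry payload bytes; `ref` records cost a few bytes each, which is where the "every non-transfer is a win" principle shows up.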

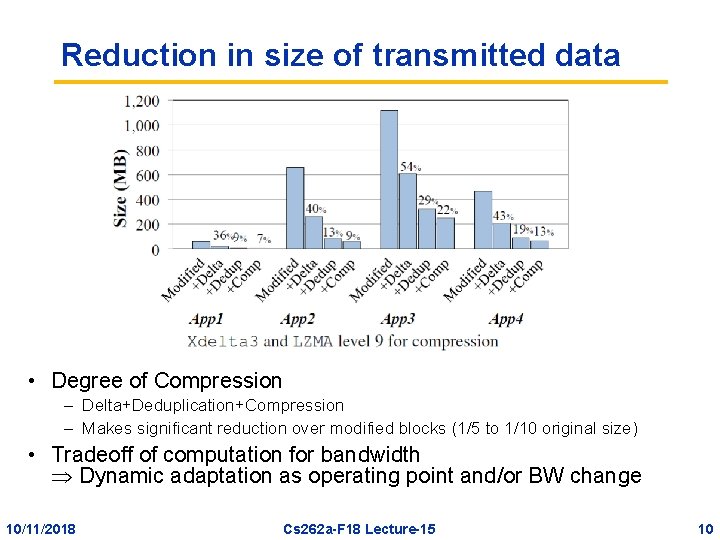

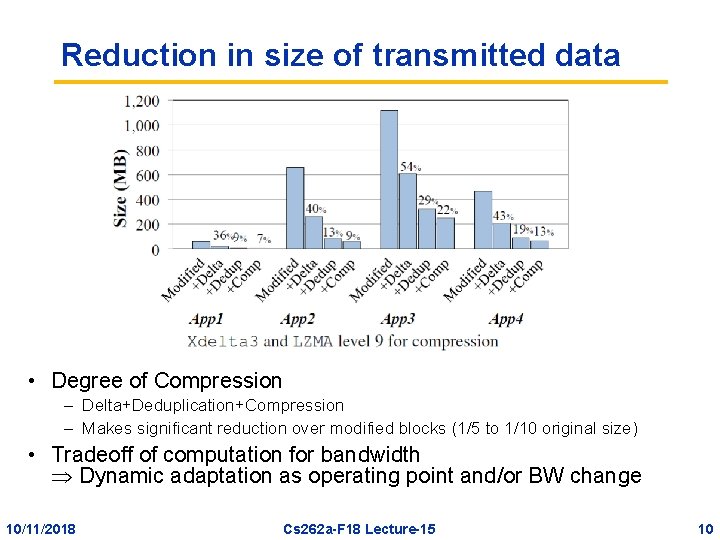

Reduction in size of transmitted data
• Degree of Compression
– Delta + Deduplication + Compression
– Makes significant reduction over modified blocks (1/5 to 1/10 original size)
• Tradeoff of computation for bandwidth
– Dynamic adaptation as operating point and/or BW change

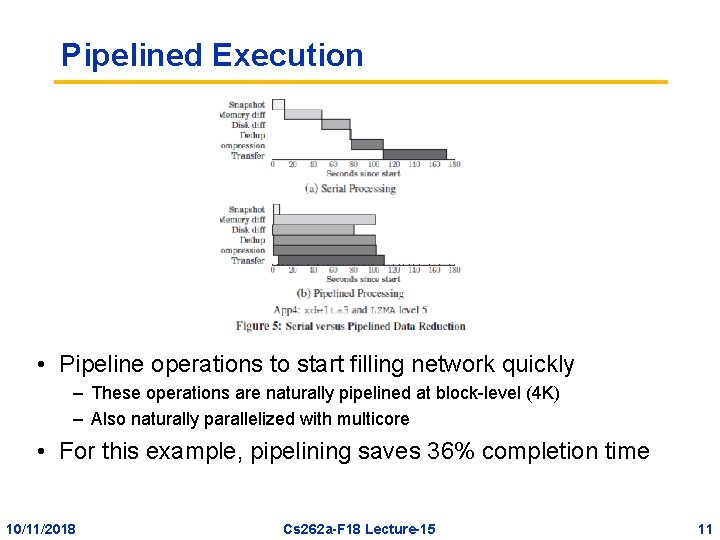

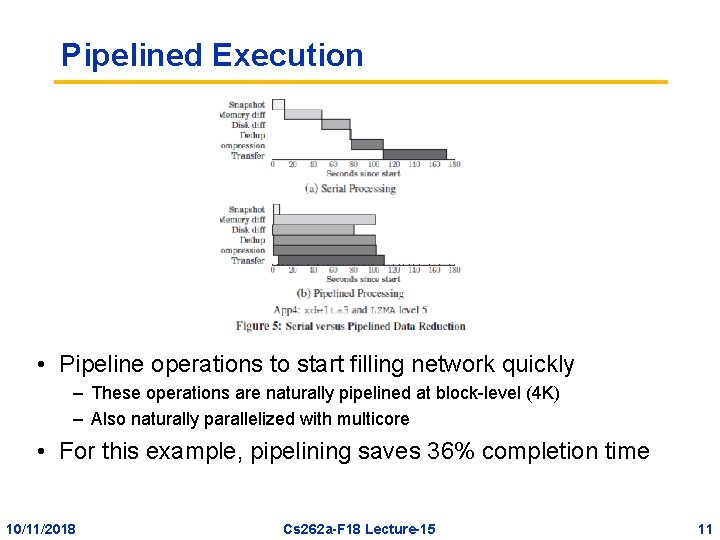

Pipelined Execution
• Pipeline operations to start filling network quickly
– These operations are naturally pipelined at block level (4K)
– Also naturally parallelized with multicore
• For this example, pipelining saves 36% completion time
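A rough sketch of block-level pipelining, assuming a thread pool as the parallel processing stage and a plain list as a stand-in for the network send. The function names and the worker count are illustrative, not taken from the paper.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

BLOCK = 4096  # bytes per block (assumed)


def compressed_stream(data: bytes, workers: int = 4):
    """Yield (index, compressed block) in order while later blocks are still in flight."""
    chunks = [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() submits every block up front and yields results in input order,
        # so the consumer can start sending before all blocks are processed.
        for index, packed in enumerate(pool.map(zlib.compress, chunks)):
            yield index, packed


def send_all(data: bytes) -> list:
    """Consume the stream; appending to a list stands in for a socket send."""
    sent = []
    for index, packed in compressed_stream(data):
        sent.append((index, packed))
    return sent
```

The point of the structure is that the first block can leave as soon as it is compressed, rather than waiting for the whole image to pass through each stage in turn.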

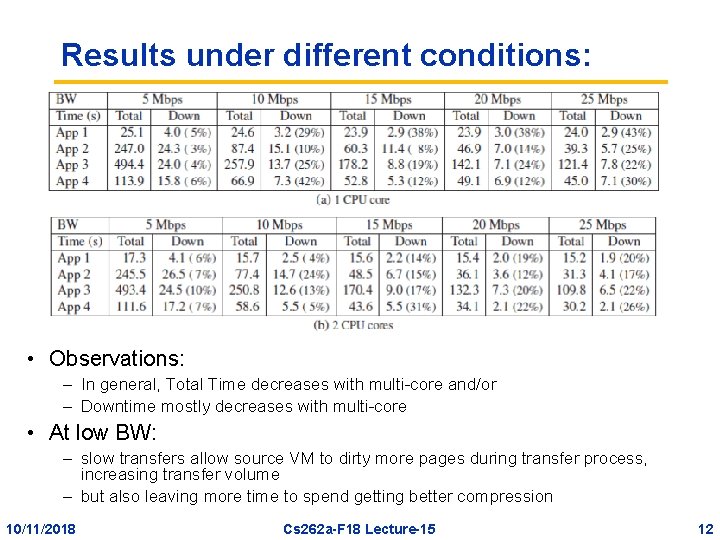

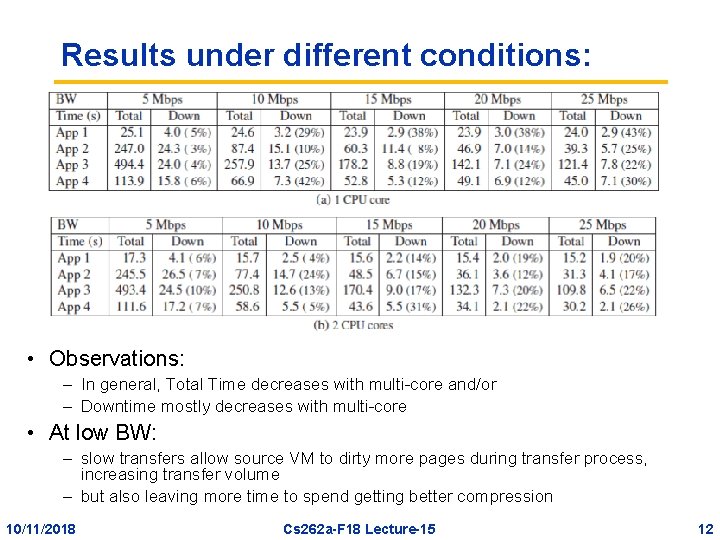

Results under different conditions:
• Observations:
– In general, Total Time decreases with multi-core and/or
– Downtime mostly decreases with multi-core
• At low BW:
– slow transfers allow source VM to dirty more pages during transfer process, increasing transfer volume
– but also leaving more time to spend getting better compression

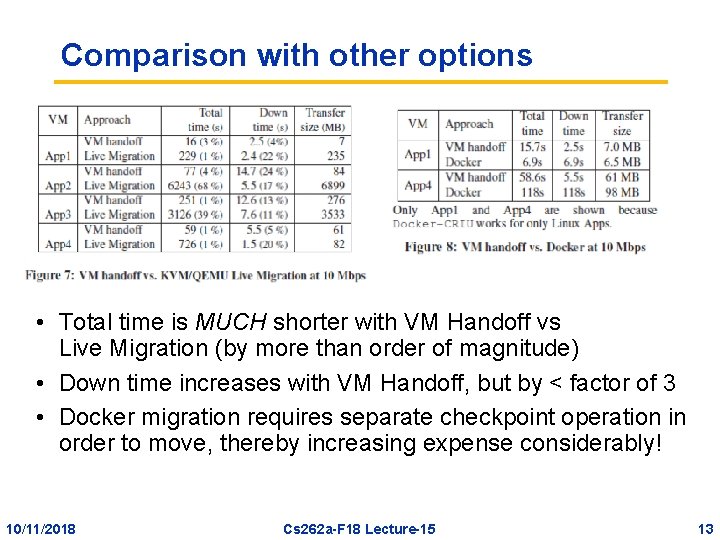

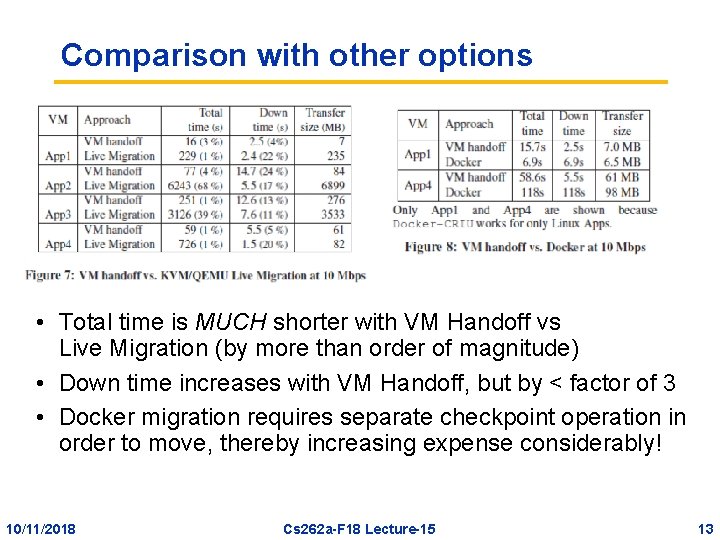

Comparison with other options
• Total time is MUCH shorter with VM Handoff vs Live Migration (by more than an order of magnitude)
• Down time increases with VM Handoff, but by less than a factor of 3
• Docker migration requires a separate checkpoint operation in order to move, thereby increasing expense considerably!

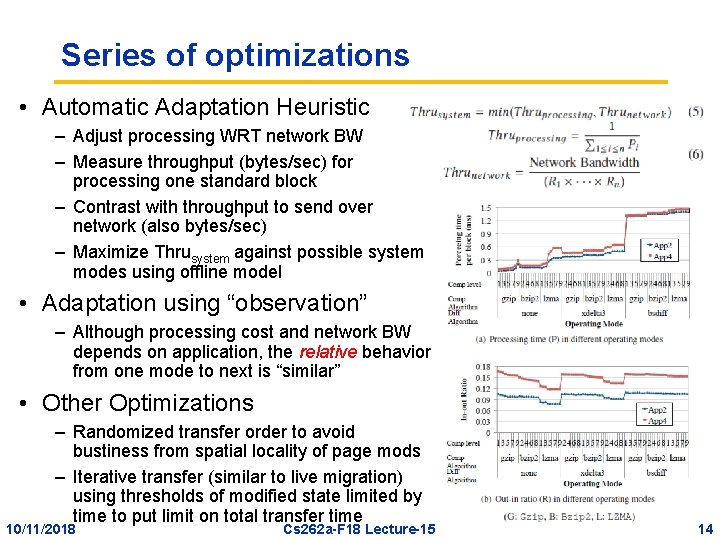

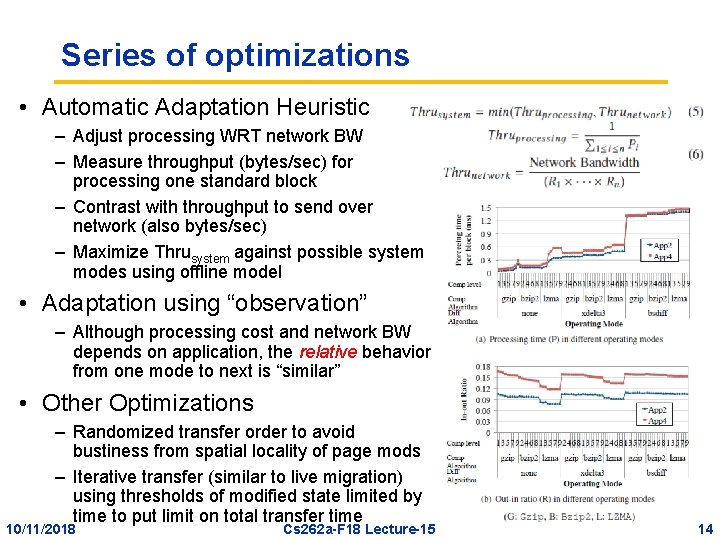

Series of optimizations
• Automatic Adaptation Heuristic
– Adjust processing with respect to network BW
– Measure throughput (bytes/sec) for processing one standard block
– Contrast with throughput to send over network (also bytes/sec)
– Maximize Thru_system against possible system modes using offline model
• Adaptation using “observation”
– Although processing cost and network BW depend on application, the relative behavior from one mode to the next is “similar”
• Other Optimizations
– Randomized transfer order to avoid burstiness from spatial locality of page modifications
– Iterative transfer (similar to live migration) using thresholds of modified state, limited by time to put a bound on total transfer time
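One way to read the heuristic, sketched under assumptions: each mode has a measured processing throughput and an output/input size ratio, the network can absorb input at bandwidth divided by that ratio, and the system throughput of a mode is the smaller of the two. That formula, the `Mode` type, and all numbers below are illustrative assumptions rather than values from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mode:
    name: str
    processing_mbps: float  # input processed per second by this mode (hypothetical)
    out_in_ratio: float     # output size / input size after processing (hypothetical)


def system_throughput(mode: Mode, network_mbps: float) -> float:
    """Input consumed per second: limited by the slower of processing and network."""
    network_side = network_mbps / mode.out_in_ratio
    return min(mode.processing_mbps, network_side)


def pick_mode(modes: list, network_mbps: float) -> Mode:
    """Choose the mode that maximizes system throughput at the current bandwidth."""
    return max(modes, key=lambda m: system_throughput(m, network_mbps))


# Hypothetical operating points for two modes.
MODES = [
    Mode("gzip-1", processing_mbps=400.0, out_in_ratio=0.5),
    Mode("lzma-9", processing_mbps=20.0, out_in_ratio=0.2),
]
```

With these made-up numbers, a 5 Mbps link favors the heavy mode (the network is the bottleneck, so better compression pays off), while a 100 Mbps link favors the light mode (processing becomes the bottleneck).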

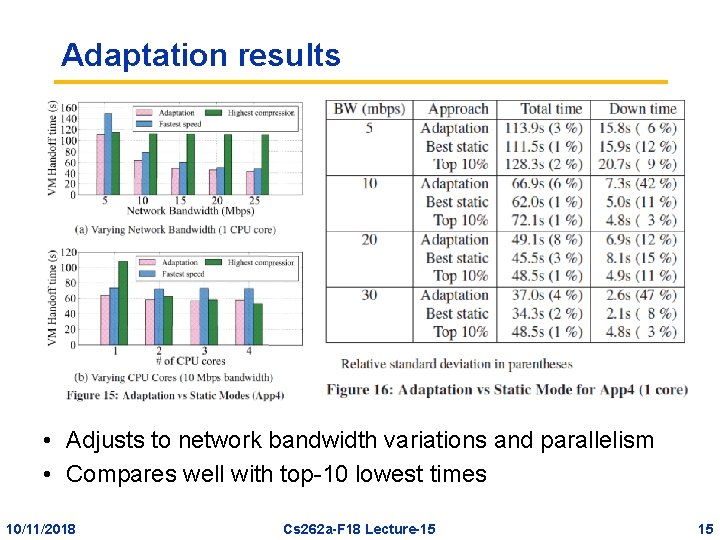

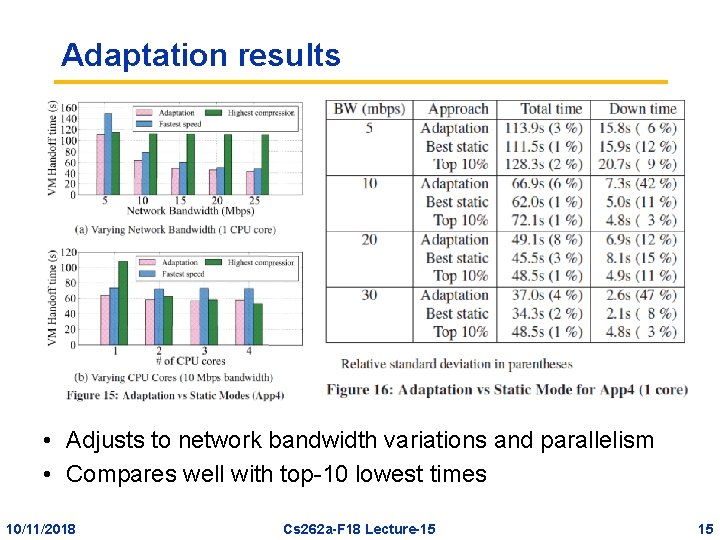

Adaptation results
• Adjusts to network bandwidth variations and parallelism
• Compares well with top-10 lowest times

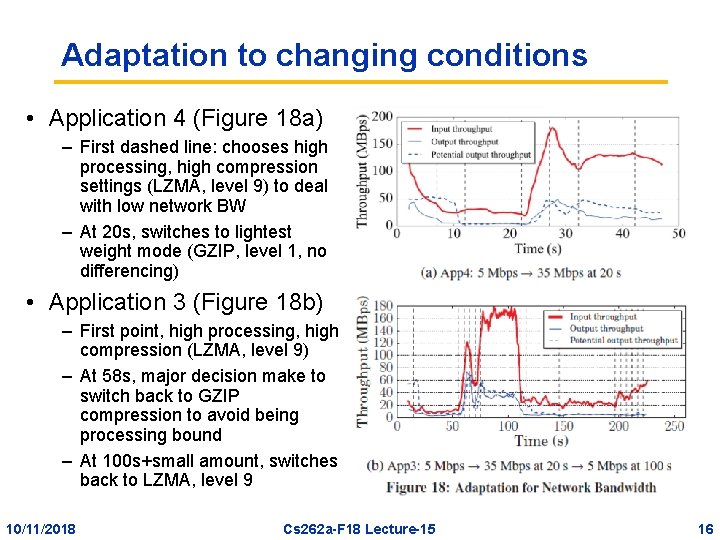

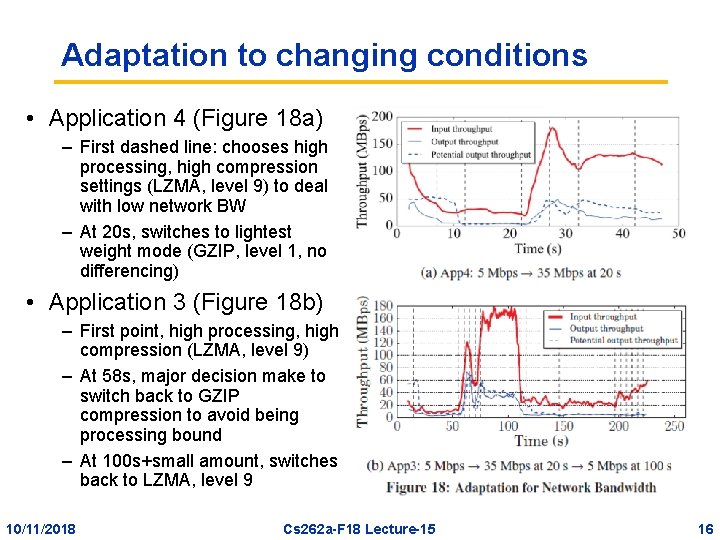

Adaptation to changing conditions
• Application 4 (Figure 18a)
– First dashed line: chooses high processing, high compression settings (LZMA, level 9) to deal with low network BW
– At 20 s, switches to lightest weight mode (GZIP, level 1, no differencing)
• Application 3 (Figure 18b)
– First point: high processing, high compression (LZMA, level 9)
– At 58 s, major decision made to switch back to GZIP compression to avoid being processing bound
– Shortly after 100 s, switches back to LZMA, level 9

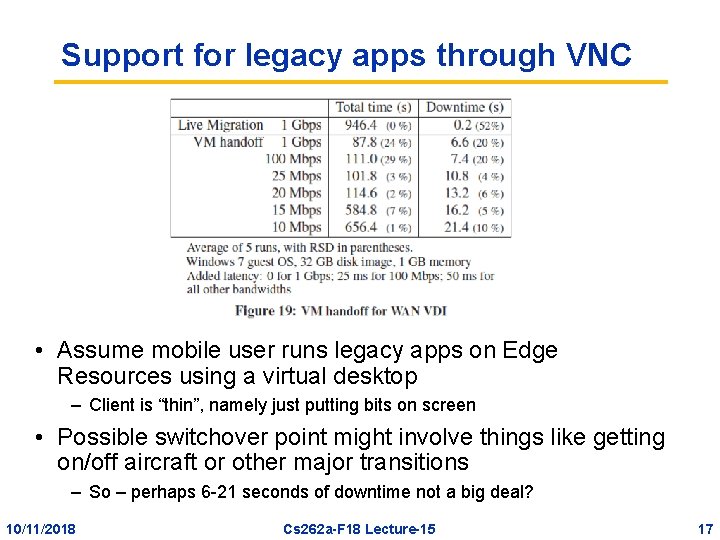

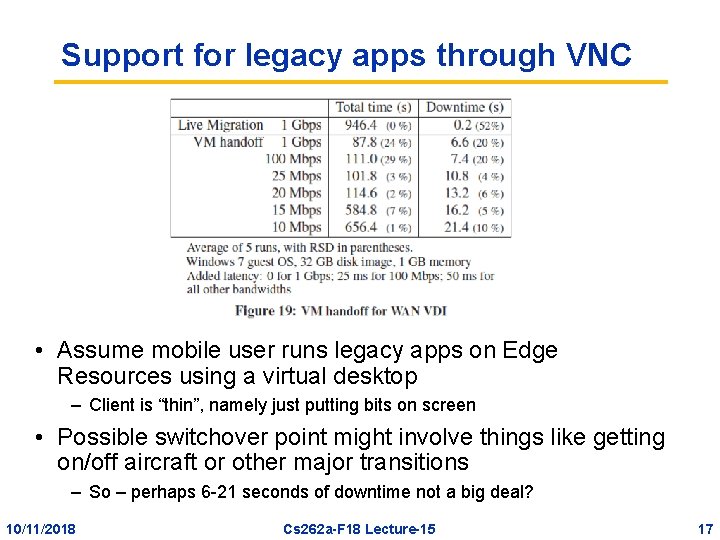

Support for legacy apps through VNC
• Assume mobile user runs legacy apps on Edge Resources using a virtual desktop
– Client is “thin”, namely just putting bits on screen
• Possible switchover point might involve things like getting on/off aircraft or other major transitions
– So perhaps 6-21 seconds of downtime is not a big deal?

Summary
• VM encapsulation at the edge
– For safety, isolation, transparency, deployability
• Optimize for total migration time
– The important metric is not necessarily downtime
• Aggressive use of data deduplication and adaptive compression
– Assume that a variety of “base” images are available to use for eliminating unnecessary traffic
– Very similar to WAN optimization devices

Is this a good paper?
• What were the authors’ goals?
• What about the evaluation/metrics?
• Did they convince you that this was a good system/approach?
• Were there any red flags?
• What mistakes did they make?
• Does the system/approach meet the “Test of Time” challenge?
• How would you review this paper today?

BREAK

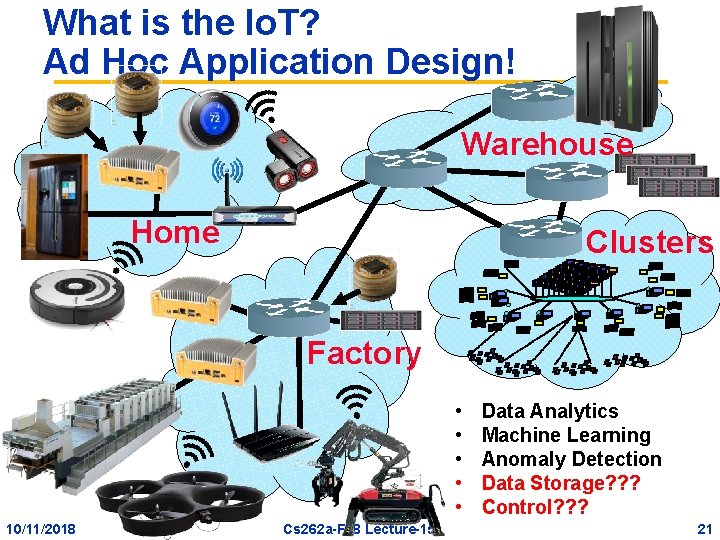

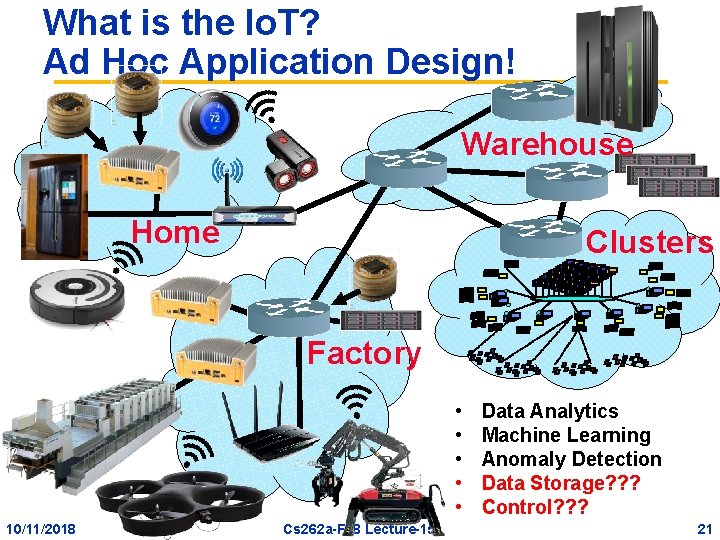

What is the IoT? Ad Hoc Application Design!
Figure labels: Warehouse, Home, Clusters, Factory; Data Analytics, Machine Learning, Anomaly Detection; Data Storage???; Control???

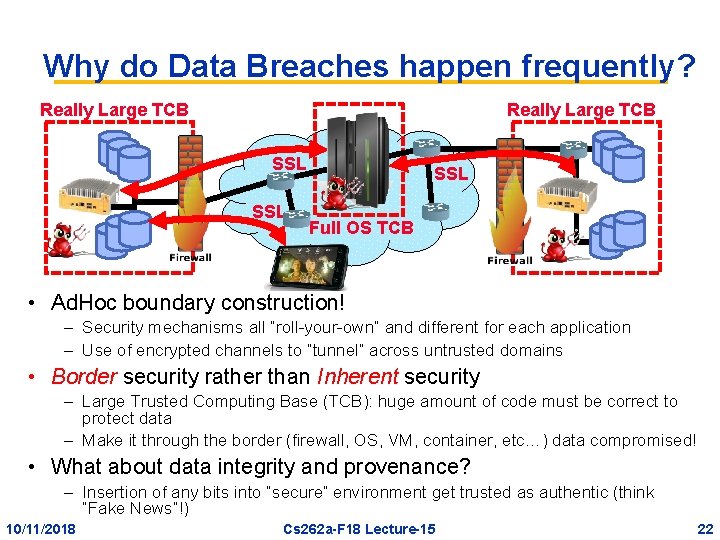

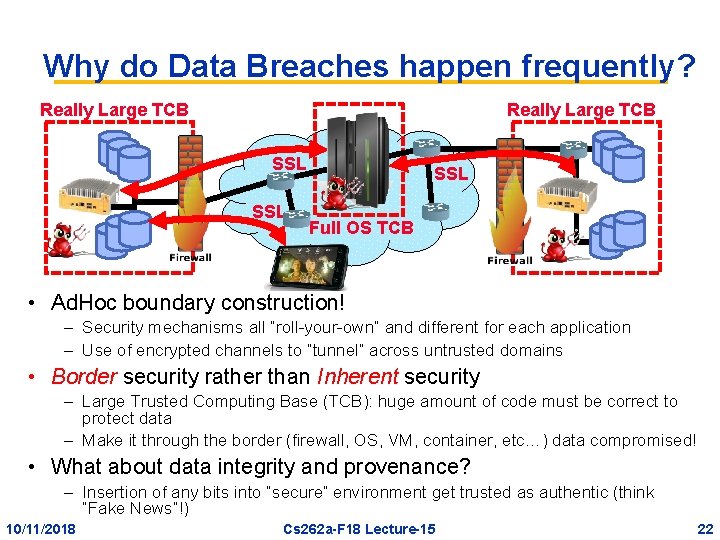

Why do Data Breaches happen frequently?
Figure labels: Really Large TCB; SSL; Full OS TCB
• Ad-hoc boundary construction!
– Security mechanisms all “roll-your-own” and different for each application
– Use of encrypted channels to “tunnel” across untrusted domains
• Border security rather than inherent security
– Large Trusted Computing Base (TCB): huge amount of code must be correct to protect data
– Make it through the border (firewall, OS, VM, container, etc.) and data is compromised!
• What about data integrity and provenance?
– Any bits inserted into the “secure” environment get trusted as authentic (think “Fake News”!)

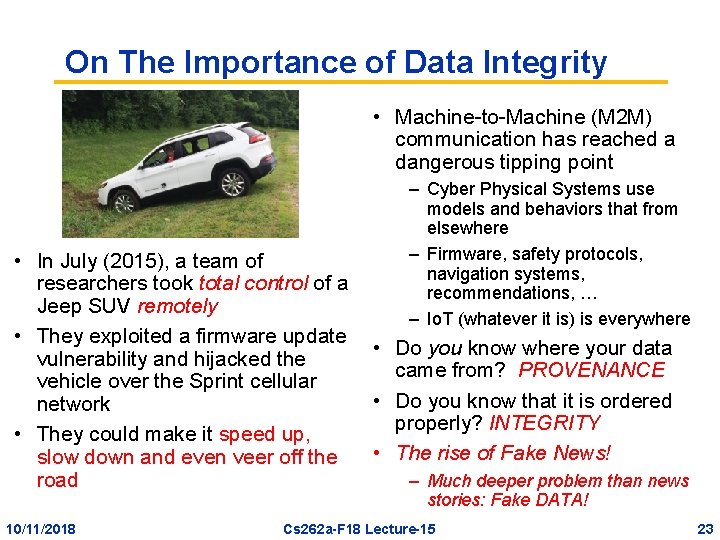

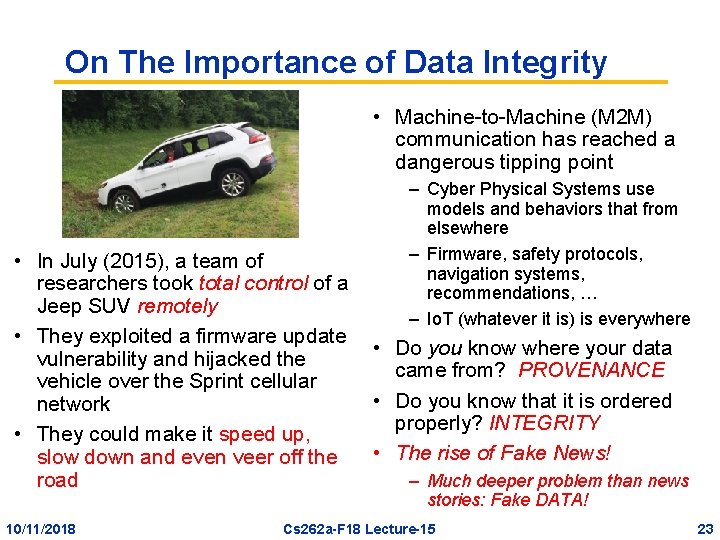

On The Importance of Data Integrity
• Machine-to-Machine (M2M) communication has reached a dangerous tipping point
– Cyber Physical Systems use models and behaviors that come from elsewhere
– Firmware, safety protocols, navigation systems, recommendations, …
– IoT (whatever it is) is everywhere
• Do you know where your data came from? PROVENANCE
• Do you know that it is ordered properly? INTEGRITY
• The rise of Fake News!
– Much deeper problem than news stories: Fake DATA!
Sidebar:
• In July (2015), a team of researchers took total control of a Jeep SUV remotely
• They exploited a firmware update vulnerability and hijacked the vehicle over the Sprint cellular network
• They could make it speed up, slow down and even veer off the road

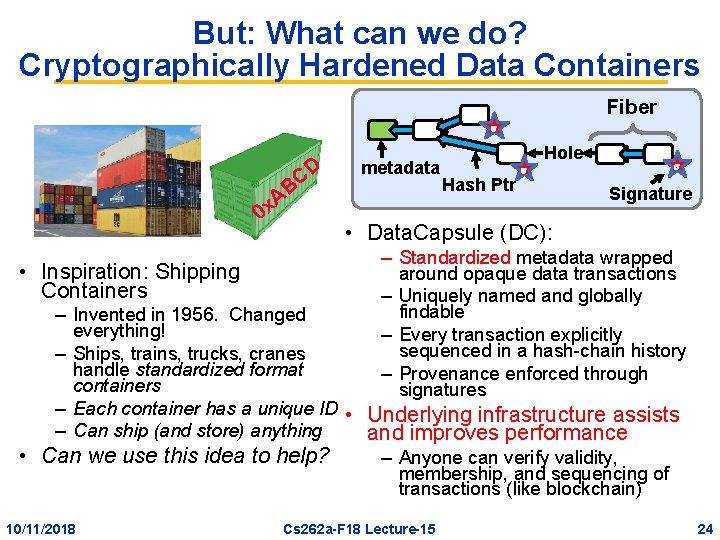

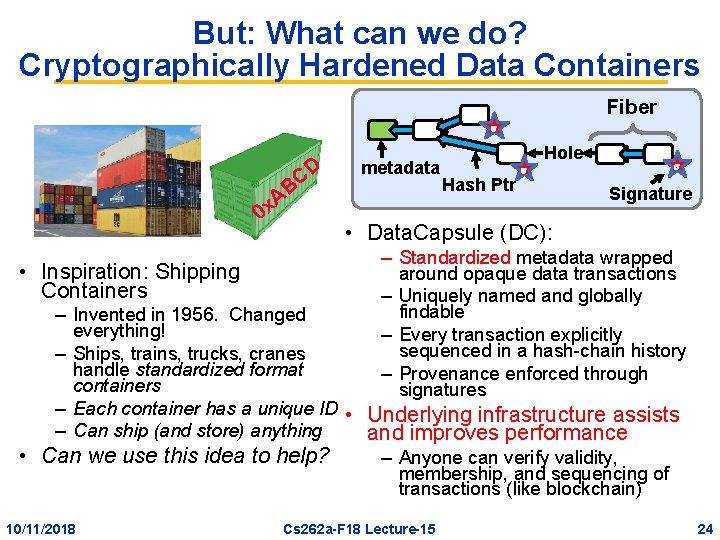

But: What can we do? Cryptographically Hardened Data Containers
• Inspiration: Shipping Containers
– Invented in 1956. Changed everything!
– Ships, trains, trucks, cranes handle standardized format containers
– Each container has a unique ID
• Underlying infrastructure assists
– Can ship (and store) anything and improves performance
• Can we use this idea to help?
• DataCapsule (DC):
– Standardized metadata wrapped around opaque data transactions
– Uniquely named and globally findable
– Every transaction explicitly sequenced in a hash-chain history
– Provenance enforced through signatures
– Anyone can verify validity, membership, and sequencing of transactions (like blockchain)
Figure labels: Fiber, metadata, Hash Ptr, Hole, Signature
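The hash-chain and signature ideas can be illustrated with a small sketch. It is not the GDP record format: the field names are hypothetical, and an HMAC with a shared key stands in for the writer's signature because the Python standard library has no public-key signatures. A real design would use public-key signatures so that anyone, not just key holders, can verify.

```python
import hashlib
import hmac
import json


def _digest(body: dict) -> str:
    """Hash a record body deterministically."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append(capsule: list, payload: str, key: bytes) -> dict:
    """Append a record that points at the hash of its predecessor."""
    prev = capsule[-1]["hash"] if capsule else None
    body = {"seq": len(capsule), "prev": prev, "payload": payload}
    record_hash = _digest(body)
    # HMAC is a stand-in for the writer's signature (assumption, see lead-in).
    sig = hmac.new(key, record_hash.encode(), hashlib.sha256).hexdigest()
    record = {**body, "hash": record_hash, "sig": sig}
    capsule.append(record)
    return record


def verify(capsule: list, key: bytes) -> bool:
    """Check sequencing, hash pointers, record hashes, and signatures."""
    prev = None
    for seq, rec in enumerate(capsule):
        body = {"seq": rec["seq"], "prev": rec["prev"], "payload": rec["payload"]}
        if rec["seq"] != seq or rec["prev"] != prev:
            return False
        if _digest(body) != rec["hash"]:
            return False
        expected = hmac.new(key, rec["hash"].encode(), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, rec["sig"]):
            return False
        prev = rec["hash"]
    return True
```

Because each record commits to its predecessor's hash, changing, removing, or reordering any earlier record causes verification to fail from that point on.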

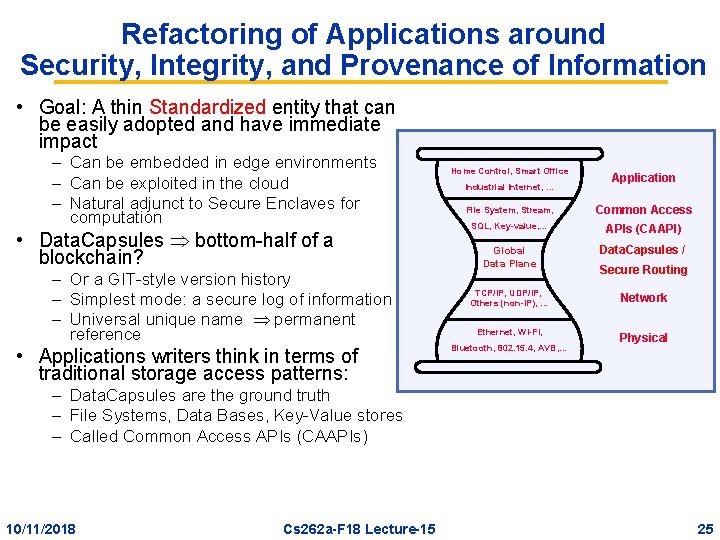

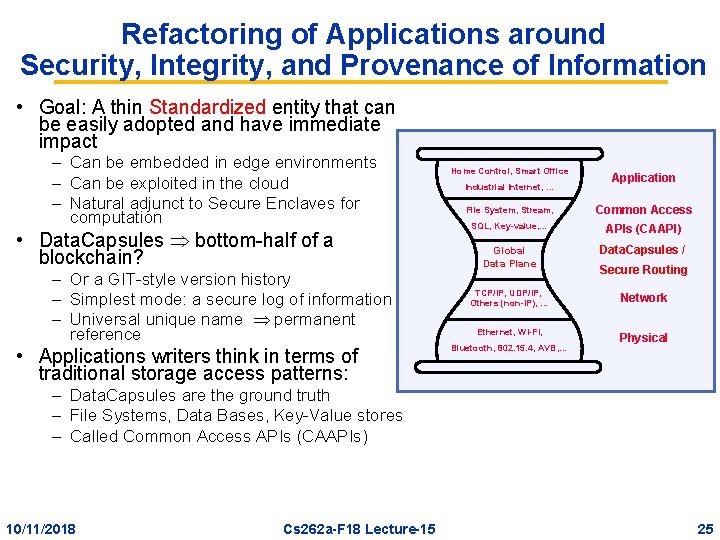

Refactoring of Applications around Security, Integrity, and Provenance of Information
• Goal: A thin standardized entity that can be easily adopted and have immediate impact
– Can be embedded in edge environments
– Can be exploited in the cloud
– Natural adjunct to Secure Enclaves for computation
• DataCapsules: bottom half of a blockchain?
– Or a GIT-style version history
– Simplest mode: a secure log of information
– Universal unique name, permanent reference
• Application writers think in terms of traditional storage access patterns:
– File Systems, Data Bases, Key-Value stores
– Called Common Access APIs (CAAPIs)
– DataCapsules are the ground truth
Figure (layer stack, top to bottom):
– Application: Home Control, Smart Office, Industrial Internet, …
– Common Access APIs (CAAPI): File System, Stream, SQL, Key-value, …
– Global Data Plane: DataCapsules / Secure Routing
– Network: TCP/IP, UDP/IP, Others (non-IP), …
– Physical: Ethernet, WI-FI, Bluetooth, 802.15.4, AVB, …

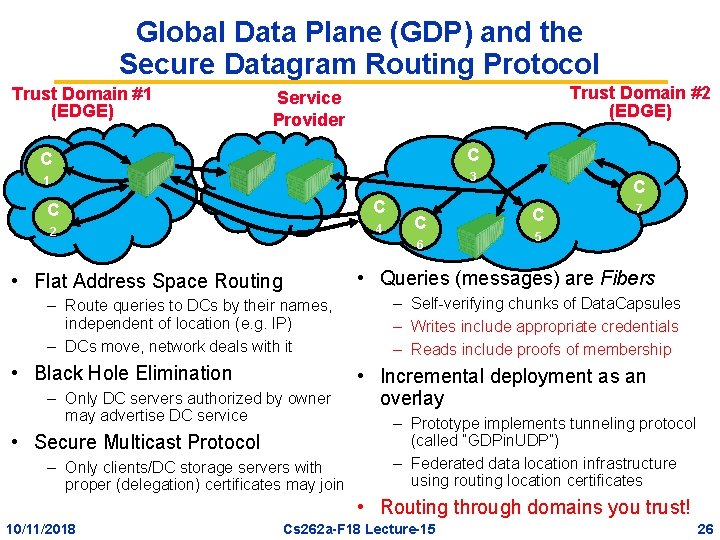

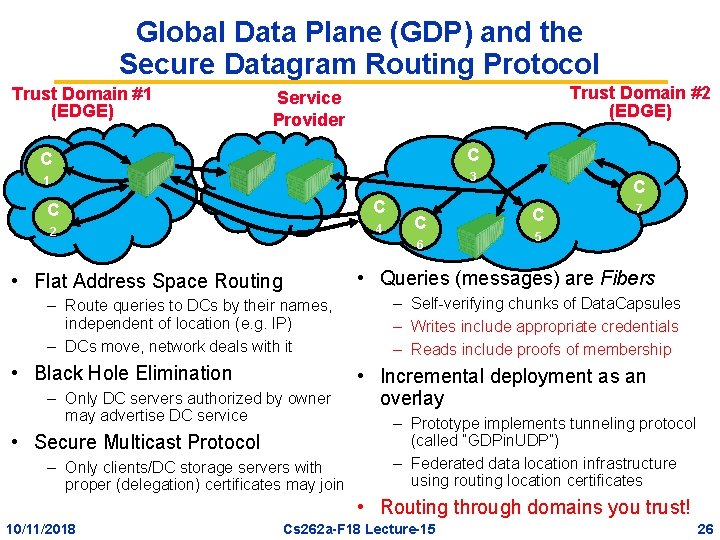

Global Data Plane (GDP) and the Secure Datagram Routing Protocol
Figure labels: Trust Domain #1 (EDGE), Trust Domain #2 (EDGE), Service Provider, nodes C1 to C7
• Queries (messages) are Fibers
• Flat Address Space Routing
– Route queries to DCs by their names, independent of location (e.g. IP)
– DCs move, network deals with it
• Black Hole Elimination
– Only DC servers authorized by owner may advertise DC service
• Secure Multicast Protocol
– Only clients/DC storage servers with proper (delegation) certificates may join
– Self-verifying chunks of DataCapsules
– Writes include appropriate credentials
– Reads include proofs of membership
• Incremental deployment as an overlay
– Prototype implements tunneling protocol (called “GDPinUDP”)
– Federated data location infrastructure using routing location certificates
• Routing through domains you trust!

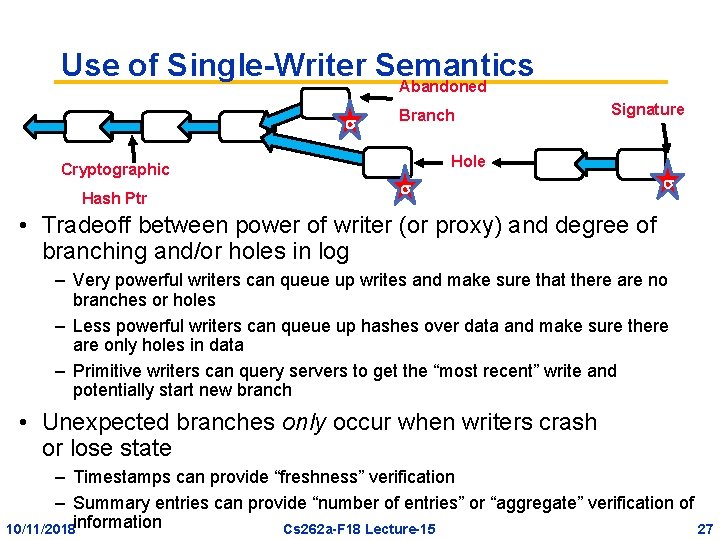

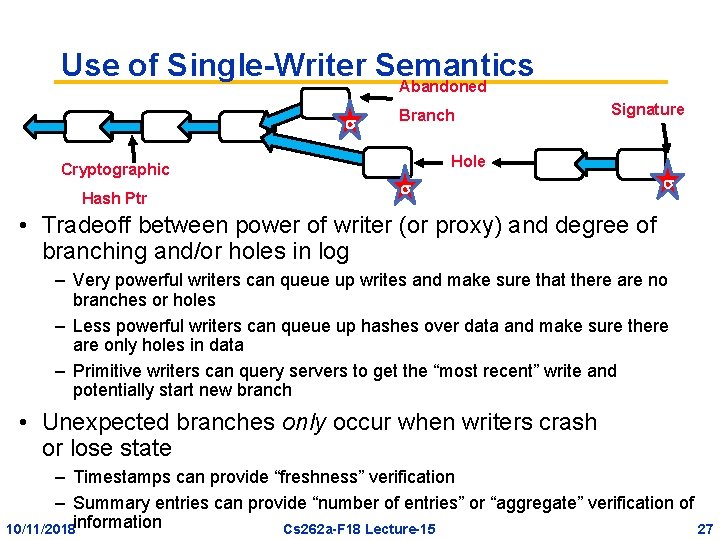

Use of Single-Writer Semantics
Figure labels: Abandoned Branch, Cryptographic Hash Ptr, Signature, Hole
• Tradeoff between power of writer (or proxy) and degree of branching and/or holes in log
– Very powerful writers can queue up writes and make sure that there are no branches or holes
– Less powerful writers can queue up hashes over data and make sure there are only holes in data
– Primitive writers can query servers to get the “most recent” write and potentially start new branch
• Unexpected branches only occur when writers crash or lose state
– Timestamps can provide “freshness” verification
– Summary entries can provide “number of entries” or “aggregate” verification of information

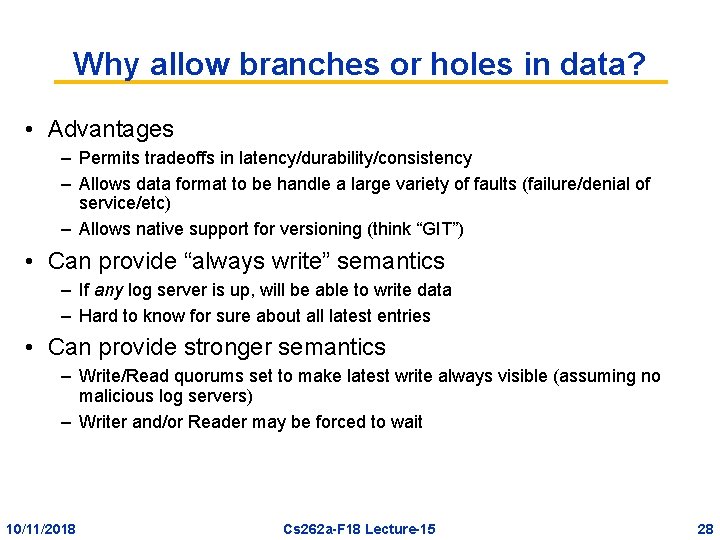

Why allow branches or holes in data?
• Advantages
– Permits tradeoffs in latency/durability/consistency
– Allows data format to handle a large variety of faults (failure, denial of service, etc.)
– Allows native support for versioning (think “GIT”)
• Can provide “always write” semantics
– If any log server is up, will be able to write data
– Hard to know for sure about all latest entries
• Can provide stronger semantics
– Write/Read quorums set to make latest write always visible (assuming no malicious log servers)
– Writer and/or Reader may be forced to wait
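The quorum point can be checked by brute force. The slide does not state a formula; the sketch below assumes the standard quorum-intersection condition (with N log servers, write quorum W and read quorum R, every read overlaps every write exactly when R + W > N) and verifies it by enumerating all quorum pairs. The function name is hypothetical.

```python
from itertools import combinations


def latest_always_visible(n: int, w: int, r: int) -> bool:
    """True if every possible read quorum shares a server with every write quorum."""
    servers = range(n)
    return all(
        set(write_set) & set(read_set)
        for write_set in combinations(servers, w)
        for read_set in combinations(servers, r)
    )
```

With W = 1 the writer can proceed whenever any single server is up ("always write"), but unless R = N a reader may miss the latest entry, which is the tradeoff the slide describes.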

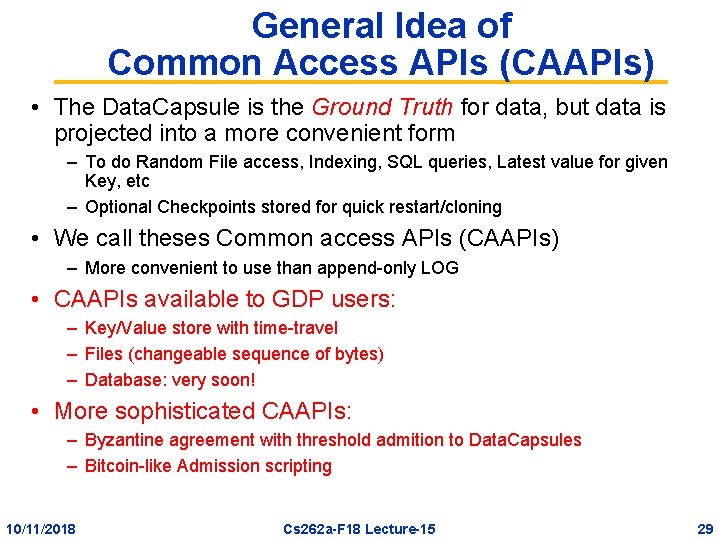

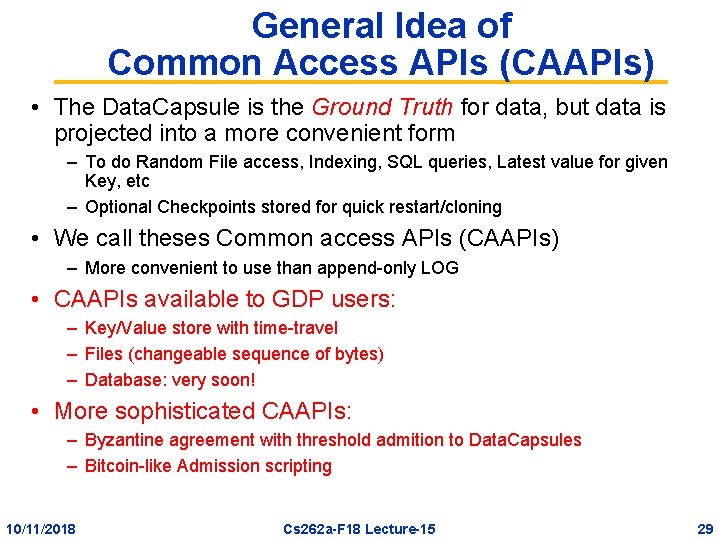

General Idea of Common Access APIs (CAAPIs)
• The DataCapsule is the Ground Truth for data, but data is projected into a more convenient form
– To do random file access, indexing, SQL queries, latest value for given key, etc.
– Optional checkpoints stored for quick restart/cloning
• We call these Common Access APIs (CAAPIs)
– More convenient to use than append-only LOG
• CAAPIs available to GDP users:
– Key/Value store with time-travel
– Files (changeable sequence of bytes)
– Database: very soon!
• More sophisticated CAAPIs:
– Byzantine agreement with threshold admission to DataCapsules
– Bitcoin-like admission scripting
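A minimal sketch of the projection idea, assuming an in-memory list as the append-only log and a per-key index as the projection. The class and method names are hypothetical and do not reflect the GDP client API.

```python
import bisect


class TimeTravelKV:
    """Key/value view projected from an append-only log of (key, value) entries."""

    def __init__(self):
        self.log = []    # ground truth: append-only sequence of (key, value)
        self.index = {}  # projection: key -> sorted list of log positions

    def put(self, key, value) -> int:
        """Append a write to the log and return its sequence number."""
        self.log.append((key, value))
        seq = len(self.log) - 1
        self.index.setdefault(key, []).append(seq)
        return seq

    def get(self, key, as_of=None):
        """Return the value of `key` as of log position `as_of` (default: latest)."""
        positions = self.index.get(key, [])
        if as_of is None:
            as_of = len(self.log) - 1
        i = bisect.bisect_right(positions, as_of)
        return self.log[positions[i - 1]][1] if i else None

    @classmethod
    def rebuild(cls, log):
        """Recreate the projection by replaying the log, e.g. after a restart."""
        kv = cls()
        for key, value in log:
            kv.put(key, value)
        return kv
```

The index can always be thrown away and rebuilt from the log, which is the sense in which the log is the ground truth and the key/value view is only a projection.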

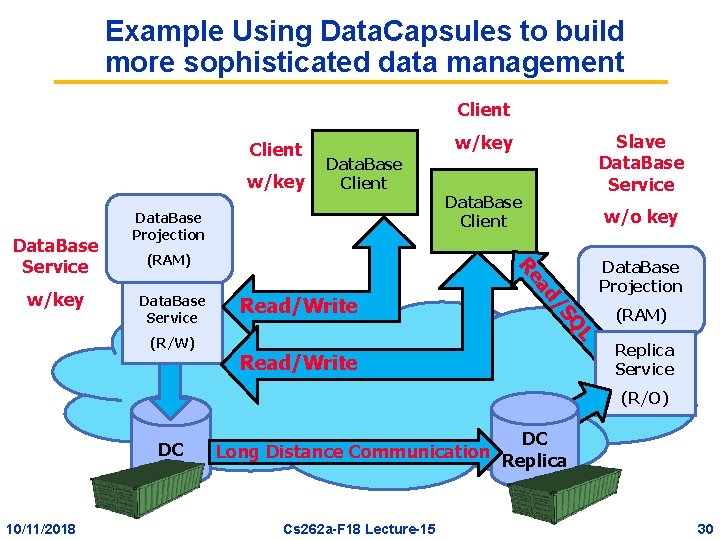

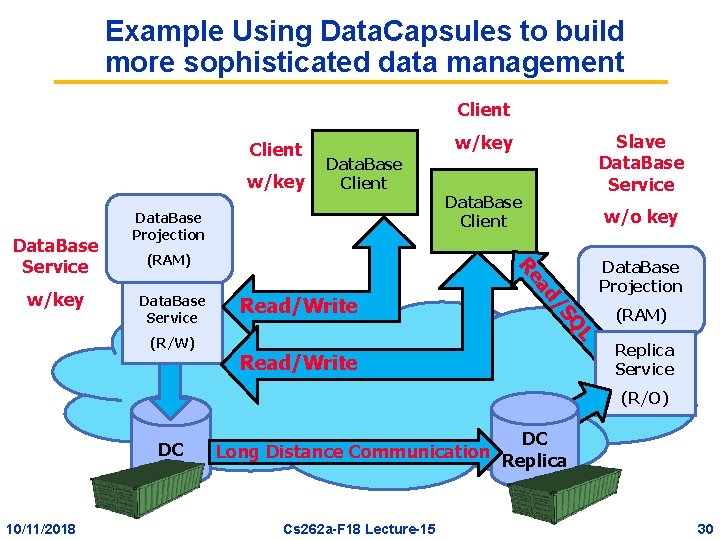

Example: Using DataCapsules to build more sophisticated data management
Figure labels: DataBase Client (w/key); DataBase Client (w/o key); DataBase Service (w/key, Read/Write) with DataBase Projection (RAM) (R/W); Slave DataBase Service (w/key) with DataBase Projection (RAM) and Replica Service (R/O); DC; DC Replica; Long Distance Communication

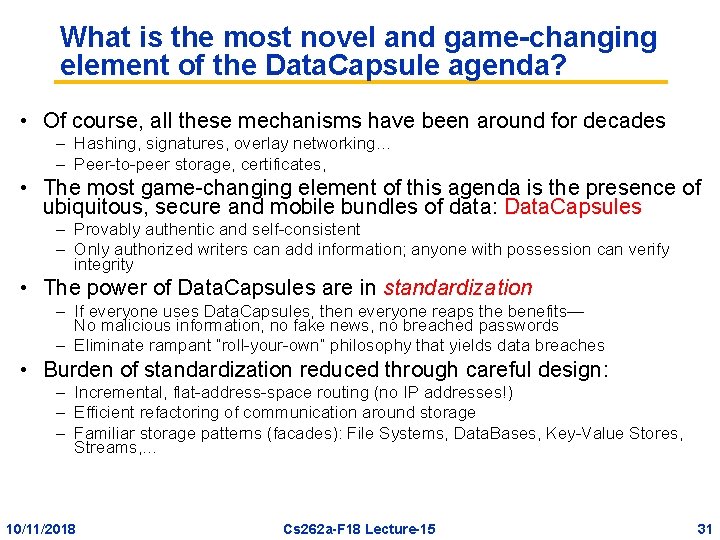

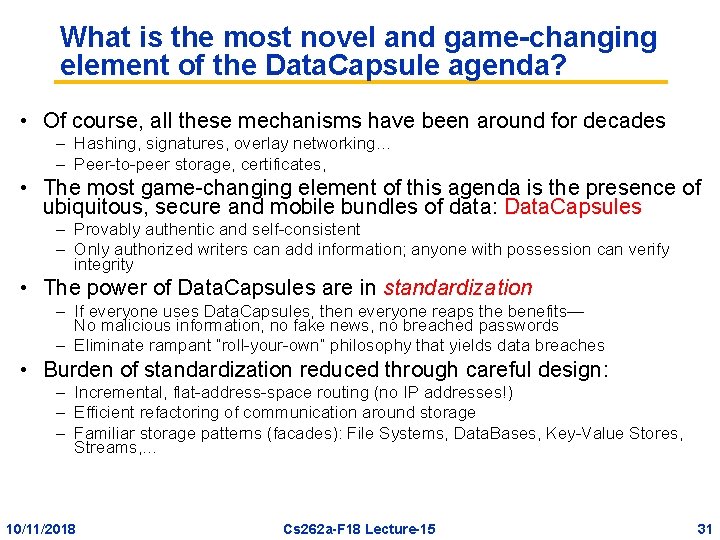

What is the most novel and game-changing element of the DataCapsule agenda?
• Of course, all these mechanisms have been around for decades
– Hashing, signatures, overlay networking, …
– Peer-to-peer storage, certificates, …
• The most game-changing element of this agenda is the presence of ubiquitous, secure and mobile bundles of data: DataCapsules
– Provably authentic and self-consistent
– Only authorized writers can add information; anyone with possession can verify integrity
• The power of DataCapsules is in standardization
– If everyone uses DataCapsules, then everyone reaps the benefits: no malicious information, no fake news, no breached passwords
– Eliminate rampant “roll-your-own” philosophy that yields data breaches
• Burden of standardization reduced through careful design:
– Incremental, flat-address-space routing (no IP addresses!)
– Efficient refactoring of communication around storage
– Familiar storage patterns (facades): File Systems, DataBases, Key-Value Stores, Streams, …

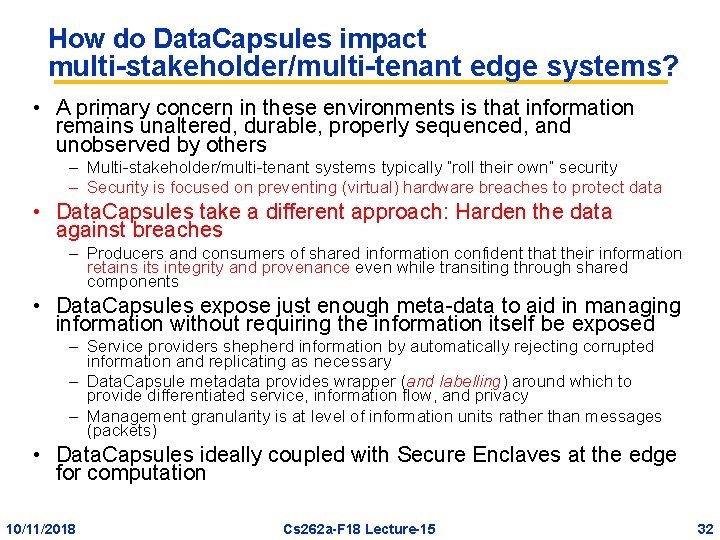

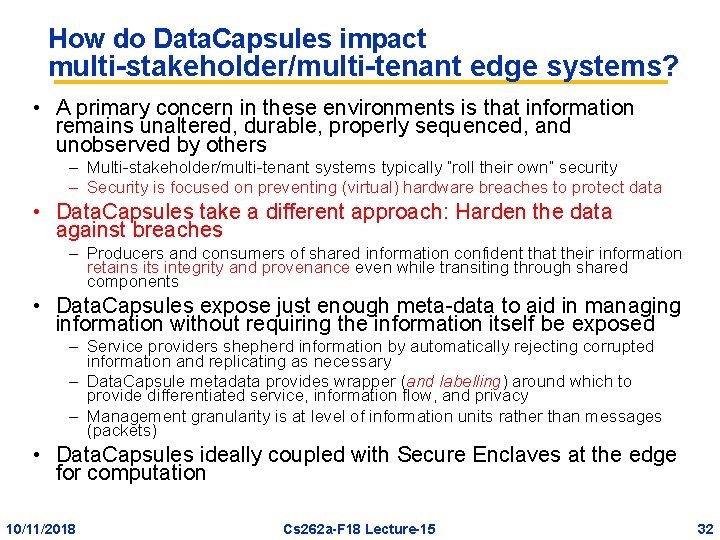

How do DataCapsules impact multi-stakeholder/multi-tenant edge systems?
• A primary concern in these environments is that information remains unaltered, durable, properly sequenced, and unobserved by others
– Multi-stakeholder/multi-tenant systems typically “roll their own” security
– Security is focused on preventing (virtual) hardware breaches to protect data
• DataCapsules take a different approach: Harden the data against breaches
– Producers and consumers of shared information can be confident that their information retains its integrity and provenance even while transiting through shared components
• DataCapsules expose just enough metadata to aid in managing information without requiring the information itself be exposed
– Service providers shepherd information by automatically rejecting corrupted information and replicating as necessary
– DataCapsule metadata provides a wrapper (and labelling) around which to provide differentiated service, information flow, and privacy
– Management granularity is at the level of information units rather than messages (packets)
• DataCapsules ideally coupled with Secure Enclaves at the edge for computation

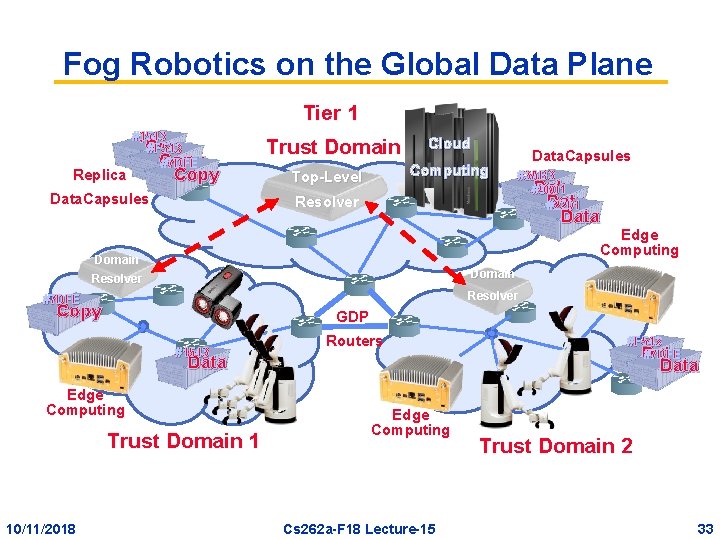

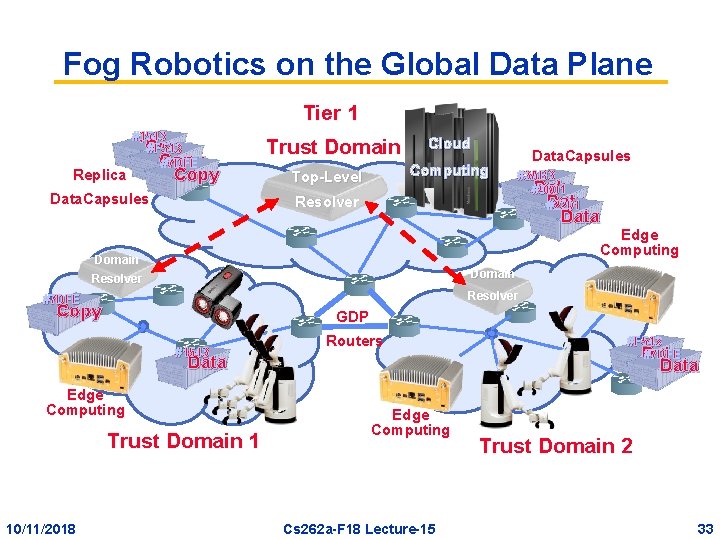

Fog Robotics on the Global Data Plane
Figure labels: Cloud Computing Trust Domain; Top-Level Resolver; Tier 1; Domain Resolver; GDP Routers; Edge Computing Trust Domain 1; Edge Computing Trust Domain 2; DataCapsules with replicas and copies (#1543, #F543, #7DFE, #3AB3, #9001, #2201)

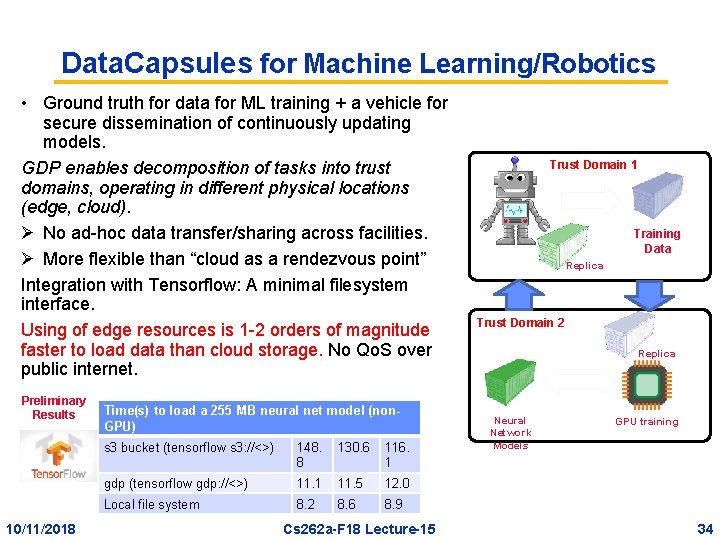

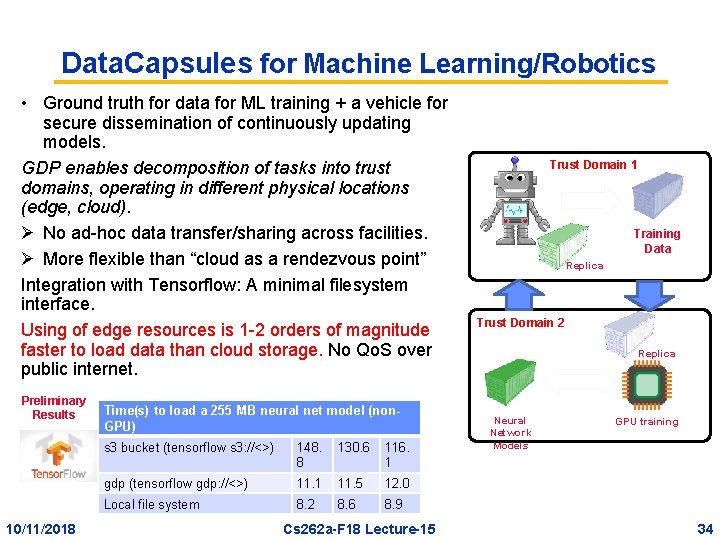

DataCapsules for Machine Learning/Robotics
• Ground truth for data for ML training + a vehicle for secure dissemination of continuously updating models
• GDP enables decomposition of tasks into trust domains, operating in different physical locations (edge, cloud)
– No ad-hoc data transfer/sharing across facilities
– More flexible than “cloud as a rendezvous point”
• Integration with TensorFlow: a minimal filesystem interface
• Use of edge resources is 1-2 orders of magnitude faster to load data than cloud storage. No QoS over public internet
Preliminary Results: Time (s) to load a 255 MB neural net model (non-GPU)
– S3 bucket (tensorflow s3://<>): 148.8, 130.6, 116.1
– GDP (tensorflow gdp://<>): 11.1, 11.5, 12.0
– Local file system: 8.2, 8.6, 8.9
Figure labels: Trust Domain 1, Trust Domain 2, Training Data, Replica, Neural Network Models, GPU training
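The ratio behind the speed claim can be worked out from the numbers above. This sketch assumes the three values in each row are repeated measurements of the same load (the column labels did not survive in the slide text) and simply compares row means; the variable names are illustrative.

```python
from statistics import mean

# Load times in seconds for a 255 MB model, copied from the slide.
LOAD_TIMES = {
    "s3": [148.8, 130.6, 116.1],
    "gdp": [11.1, 11.5, 12.0],
    "local": [8.2, 8.6, 8.9],
}

means = {name: mean(values) for name, values in LOAD_TIMES.items()}

# How much faster GDP at the edge is than the S3 bucket, on average.
speedup_vs_s3 = means["s3"] / means["gdp"]

# How much slower GDP is than the local file system, on average.
overhead_vs_local = means["gdp"] / means["local"]
```

Under that assumption the GDP load is roughly 11 times faster than S3 for this model, about one order of magnitude, while costing roughly a third more time than the local file system.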

What does short- and long-term success look like?
• Short-term success: DataCapsules in IoT
– TerraSwarm IoT applications: DataCapsules+GDP were the Great Integrator
– For low-power devices: simple gateway talks to devices with Bluetooth, ZigBee, WiFi and speaks GDP protocols to infrastructure
• Short-term success: working distributed applications that could be experimentally tested under a variety of conditions and threats
– Fog Robotics: Robots using and generating models at the edge
– Smart manufacturing in which a 3D additive fabrication facility (at the edge) communicates securely with a digital twin, demonstrating secure and timely exchange of data between the facility and the cloud
• Long-term success: widespread usage
– Adoption of DataCapsules and the GDP as the underpinning for many devices and applications both on the edge and in the cloud
– Example: Adoption of DataCapsules as new standard for IoT devices and applications
– Similar to the widespread use of shipping containers in ports today

Is this a good paper?
• What were the authors’ goals?
• What about the evaluation/metrics?
• Did they convince you that this was a good system/approach?
• Were there any red flags?
• What mistakes did they make?
• Does the system/approach meet the “Test of Time” challenge?
• How would you review this paper today?