ECE 454 Computer Systems Programming
Memory Performance (Part III: Virtual Memory and Prefetching)
Ding Yuan, ECE Dept., University of Toronto
http://www.eecg.toronto.edu/~yuan

Contents
• Virtual Memory (review (hopefully))
• Prefetching
2013-10-14, Ding Yuan, ECE 454

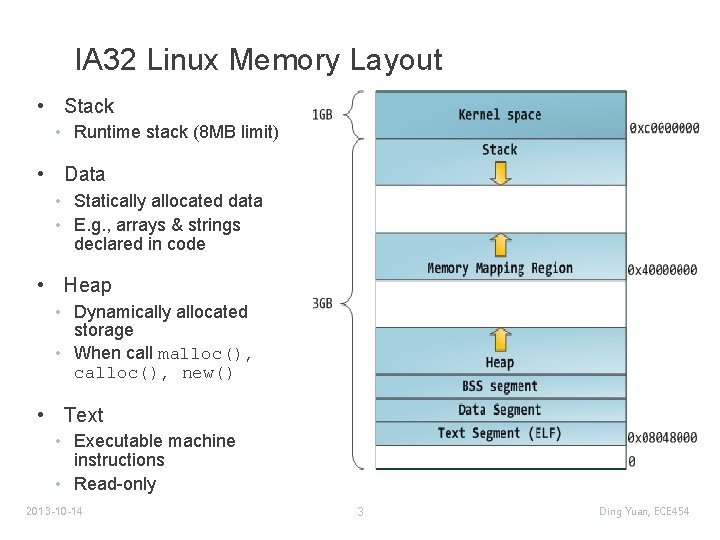

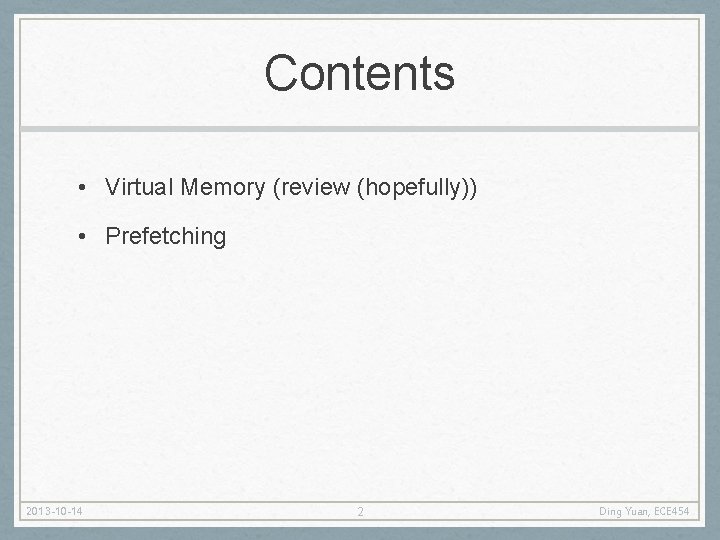

IA32 Linux Memory Layout
• Stack
  • Runtime stack (8 MB limit)
• Data
  • Statically allocated data
  • E.g., arrays and strings declared in code
• Heap
  • Dynamically allocated storage
  • Allocated by calls to malloc(), calloc(), new
• Text
  • Executable machine instructions
  • Read-only

Virtual Memory
• Programs refer to virtual memory addresses
  • Conceptually a very large array of bytes (4 GB for IA32, 16 exabytes for 64-bit)
  • Each byte has its own address
  • System provides an address space private to each “process”
• Allocation: compiler and run-time system
  • Decide where different program objects should be stored
  • All allocation happens within a single virtual address space
• But why virtual memory?
• Why not physical memory?

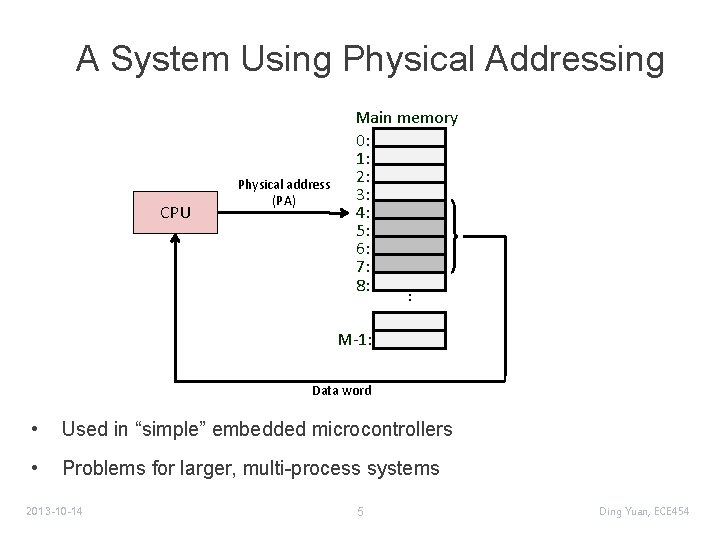

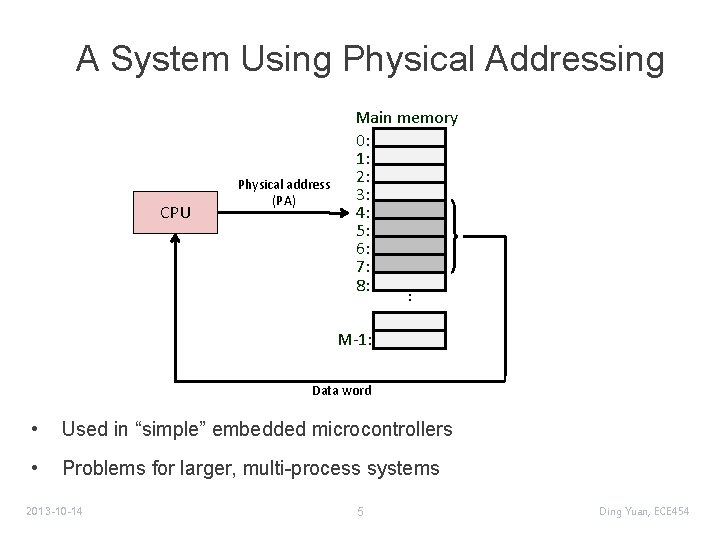

A System Using Physical Addressing
[Diagram: the CPU sends a physical address (PA) directly to main memory (locations 0 to M-1), which returns a data word.]
• Used in “simple” embedded microcontrollers
• Problems for larger, multi-process systems

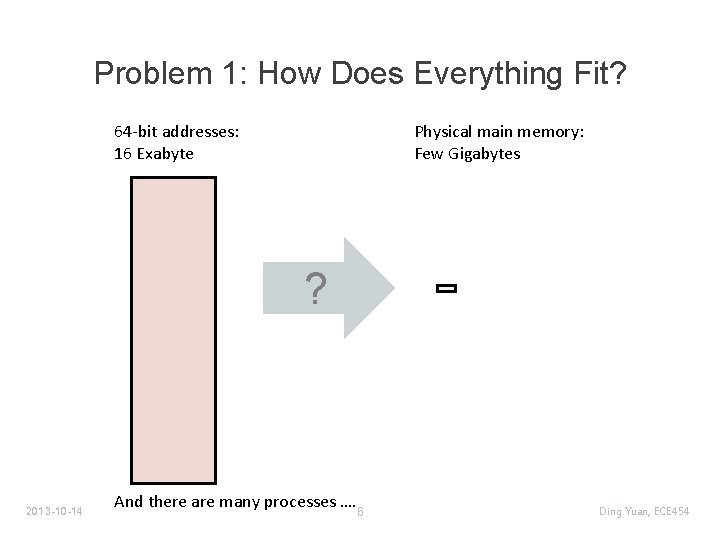

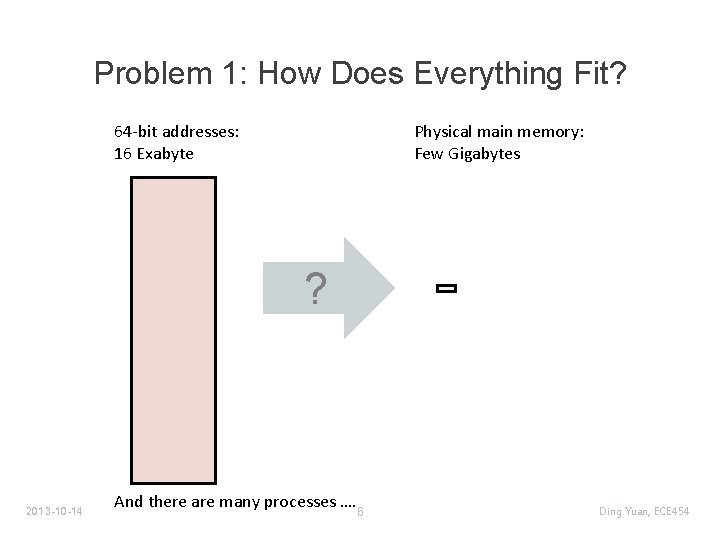

Problem 1: How Does Everything Fit?
[Diagram: 64-bit addresses span 16 exabytes, while physical main memory is only a few gigabytes.]
And there are many processes…

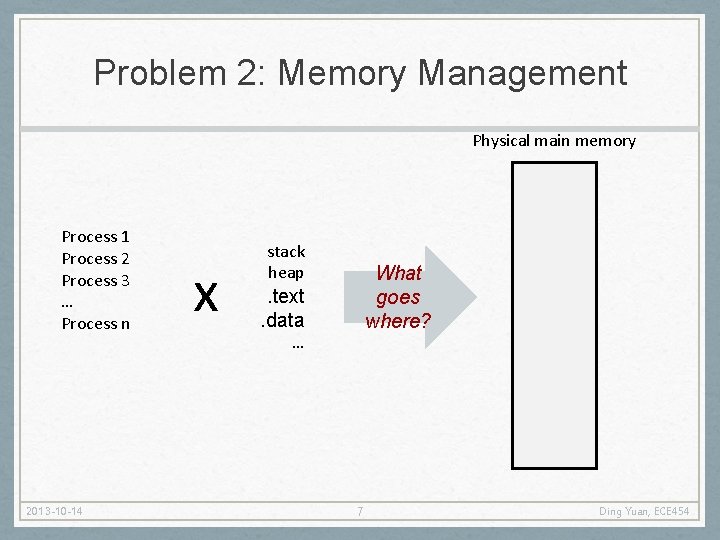

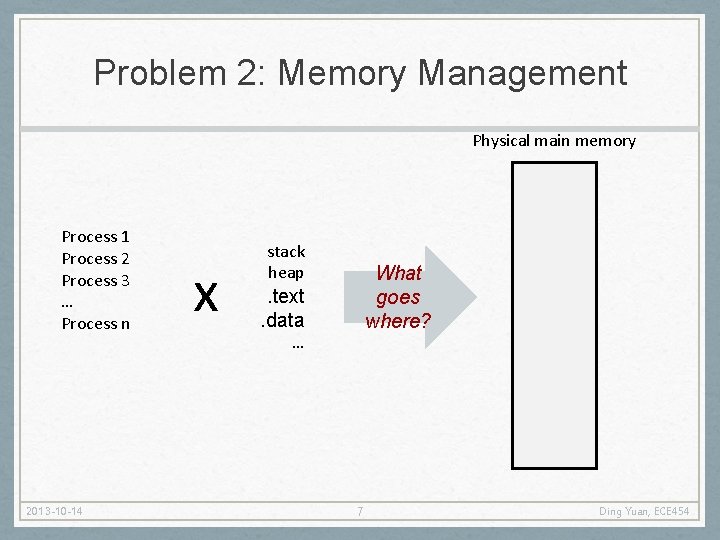

Problem 2: Memory Management
[Diagram: processes 1 to n, each with a stack, heap, .text, and .data, all competing for one physical main memory.]
What goes where?

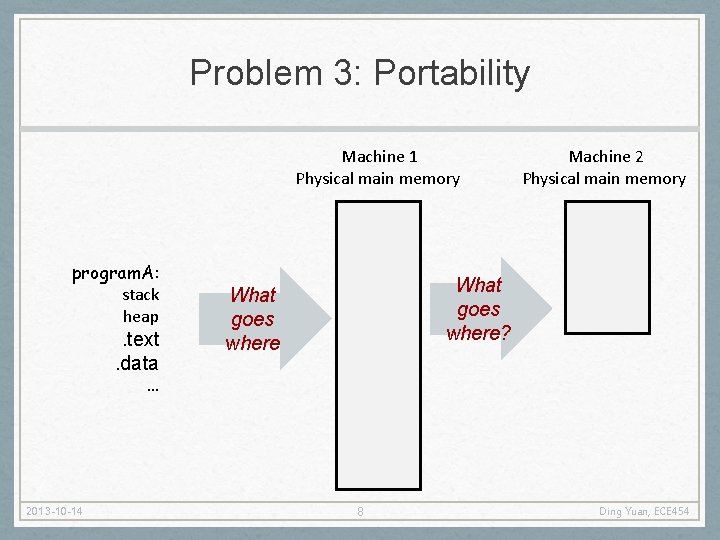

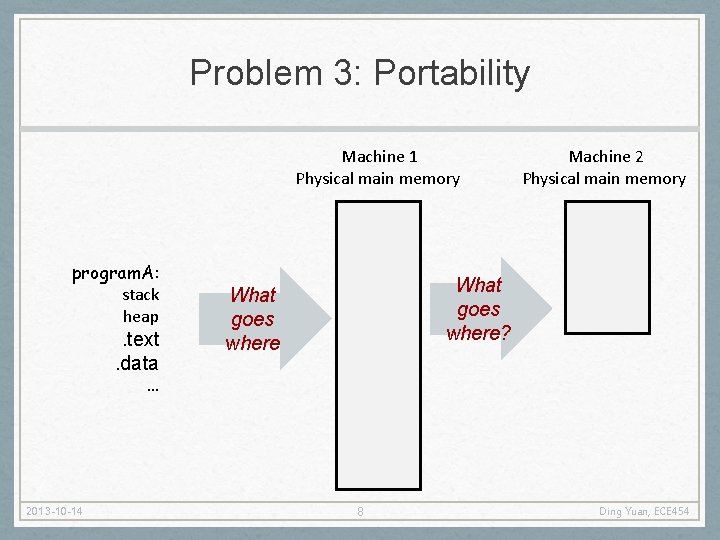

Problem 3: Portability
[Diagram: program A (stack, heap, .text, .data) must be placed in the physical main memory of Machine 1 and of Machine 2.]
What goes where on each machine?

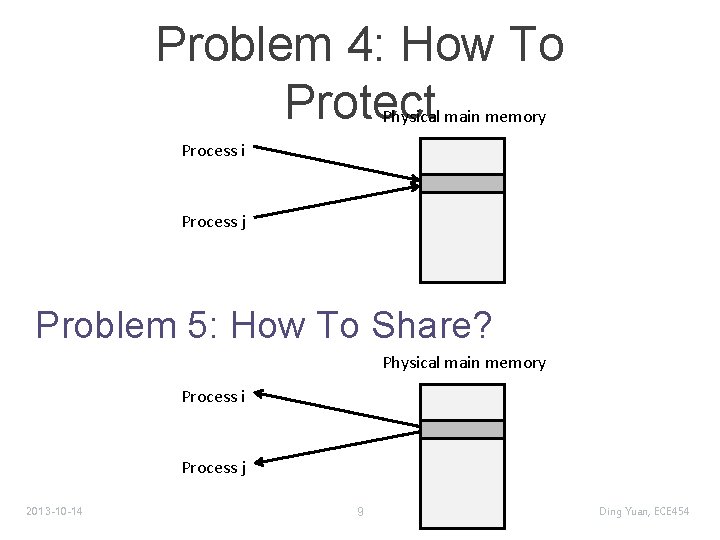

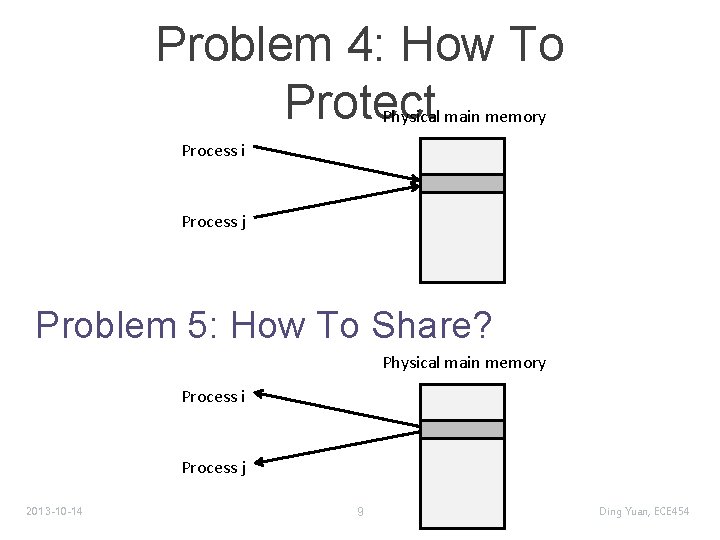

Problem 4: How To Protect?
[Diagram: physical main memory holding process i and process j.]
Problem 5: How To Share?
[Diagram: physical main memory holding process i and process j.]

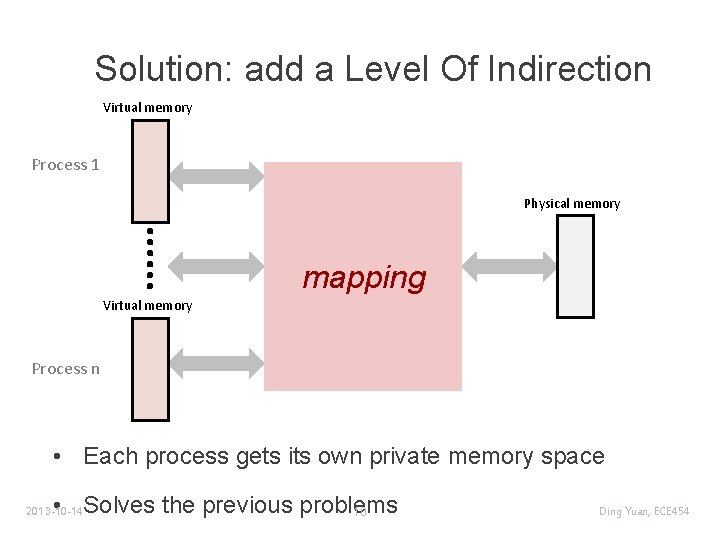

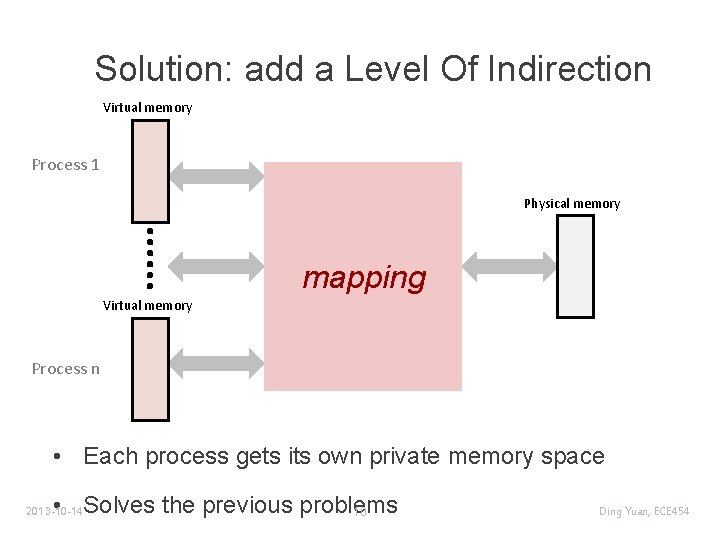

Solution: Add a Level of Indirection
[Diagram: the virtual memories of processes 1 to n are each mapped onto physical memory.]
• Each process gets its own private memory space
• Solves the previous problems

A System Using Virtual Addressing
[Diagram: on the CPU chip, the CPU sends a virtual address (VA) to the MMU, which sends a physical address (PA) to main memory (locations 0 to M-1); memory returns a data word.]
• MMU = Memory Management Unit
• MMU keeps the mapping of VAs -> PAs in a “page table”

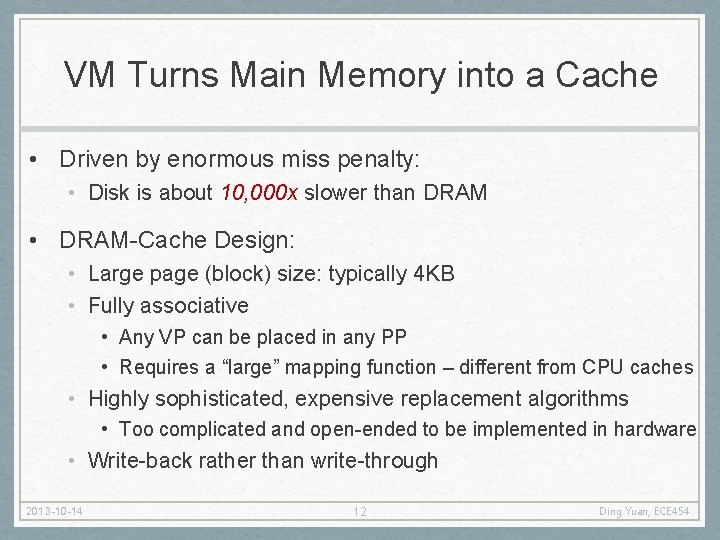

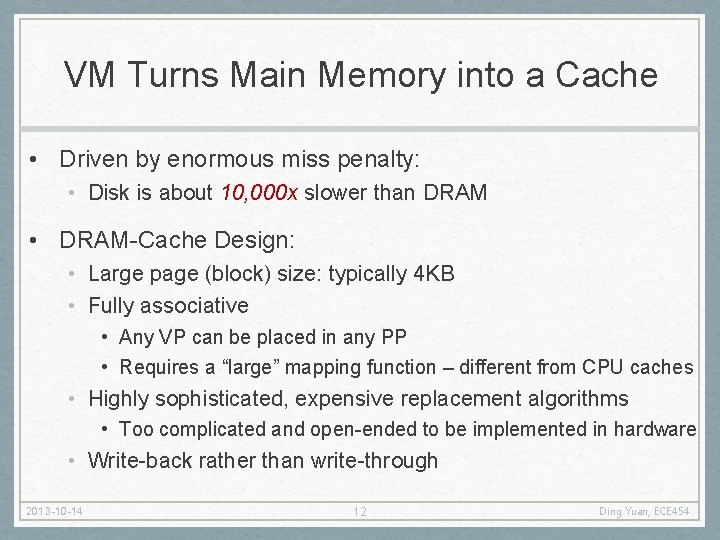

VM Turns Main Memory into a Cache
• Driven by enormous miss penalty:
  • Disk is about 10,000x slower than DRAM
• DRAM-cache design:
  • Large page (block) size: typically 4 KB
  • Fully associative
    • Any virtual page (VP) can be placed in any physical page (PP)
    • Requires a “large” mapping function, different from CPU caches
  • Highly sophisticated, expensive replacement algorithms
    • Too complicated and open-ended to be implemented in hardware
  • Write-back rather than write-through

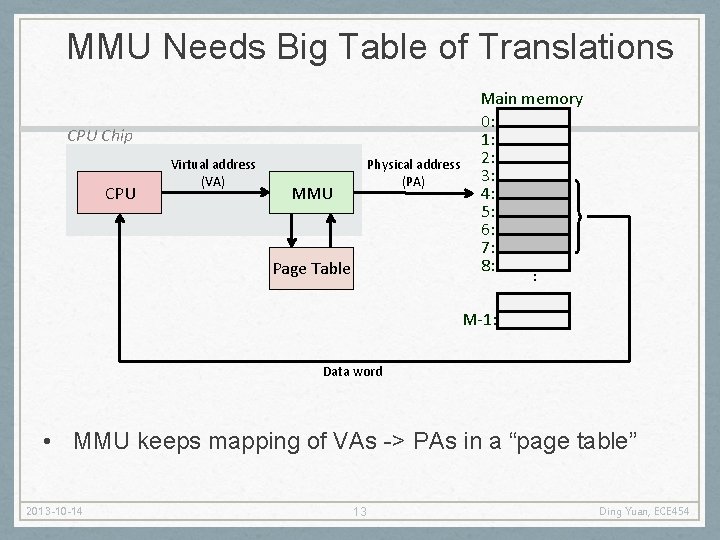

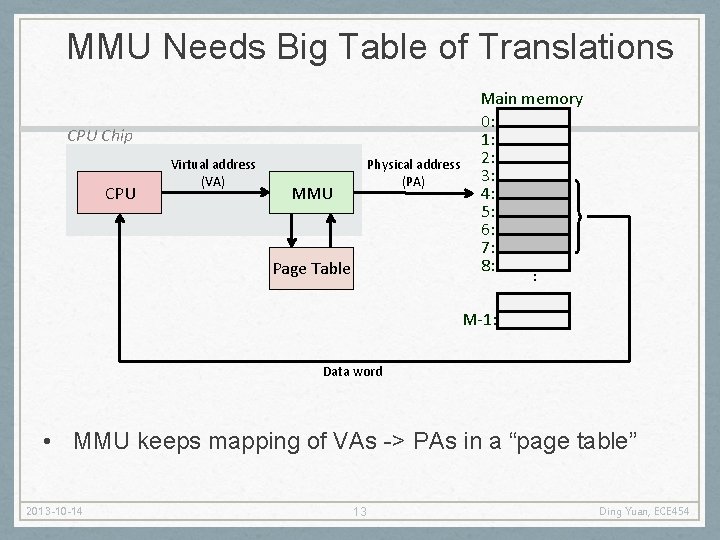

MMU Needs Big Table of Translations
[Diagram: on the CPU chip, the CPU sends a VA to the MMU, which consults its page table and sends a PA to main memory (locations 0 to M-1); memory returns a data word.]
• MMU keeps the mapping of VAs -> PAs in a “page table”

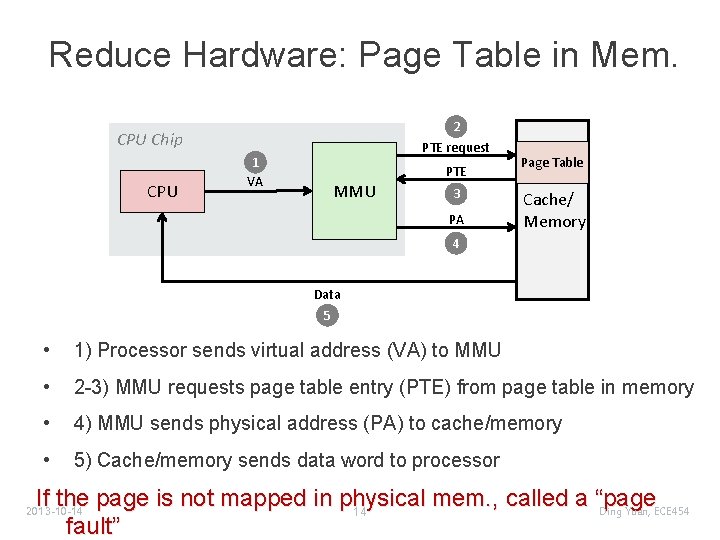

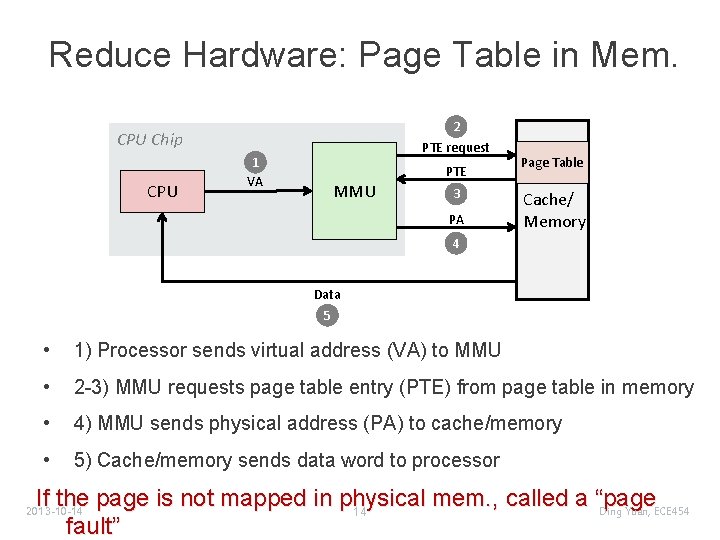

Reduce Hardware: Page Table in Memory
[Diagram: CPU and MMU on the CPU chip, with the page table held in cache/memory; arrows are numbered 1 to 5 to match the steps below.]
• 1) Processor sends virtual address (VA) to MMU
• 2-3) MMU requests page table entry (PTE) from page table in memory
• 4) MMU sends physical address (PA) to cache/memory
• 5) Cache/memory sends data word to processor
If the page is not mapped in physical memory, this is called a “page fault”.
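The translation steps can be sketched in software as a toy, single-level page table lookup. This is only an illustration of the idea: the `pte_t` layout, the 16-page address space, and the `translate` function are hypothetical names chosen for this sketch, and a real MMU performs this walk in hardware, usually over a multi-level table.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT 12u                  /* 4 KB pages, as on the slides */
#define PAGE_SIZE  (1u << PAGE_SHIFT)
#define NUM_VPAGES 16u                  /* toy address space (assumption) */

/* Hypothetical page table entry: a valid bit plus a physical page number. */
typedef struct {
    bool     valid;
    uint32_t ppn;
} pte_t;

/* Translate va through page_table. On success, stores the physical address
 * in *pa and returns true. Returns false to signal a page fault. */
bool translate(const pte_t page_table[NUM_VPAGES], uint32_t va, uint32_t *pa)
{
    assert(page_table != NULL && pa != NULL);
    uint32_t vpn    = va >> PAGE_SHIFT;        /* virtual page number */
    uint32_t offset = va & (PAGE_SIZE - 1u);   /* byte offset within the page */

    if (vpn >= NUM_VPAGES || !page_table[vpn].valid)
        return false;                          /* page not mapped: page fault */

    *pa = (page_table[vpn].ppn << PAGE_SHIFT) | offset;
    return true;
}
```

With virtual page 2 mapped to physical page 7, `translate` turns VA 0x2ABC into PA 0x7ABC: the page number changes while the 12-bit offset is copied through unchanged.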

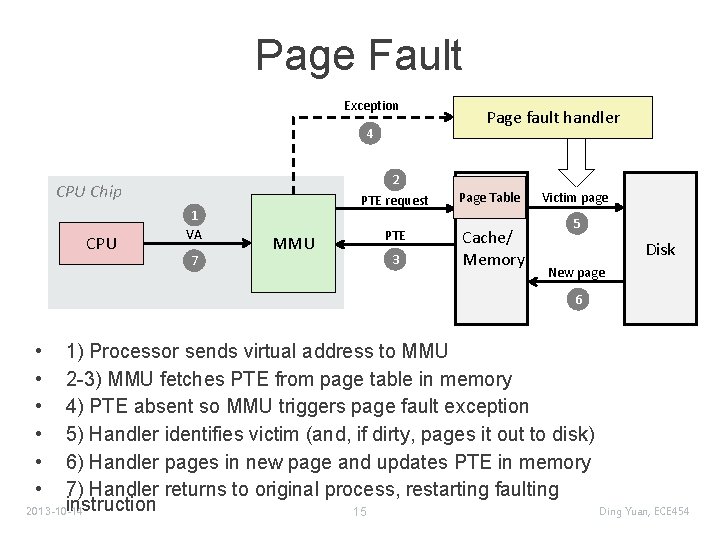

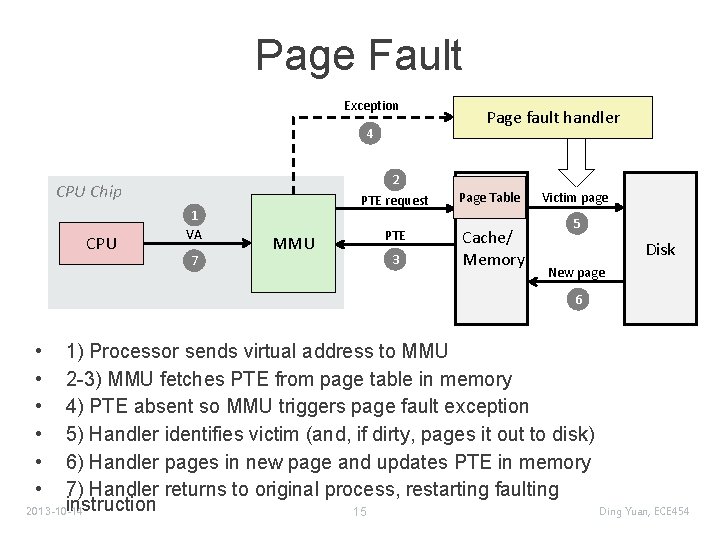

Page Fault Exception
[Diagram: CPU, MMU, and page fault handler, with the page table in cache/memory and pages on disk; arrows are numbered 1 to 7 to match the steps below. The victim page moves from memory to disk and the new page from disk to memory.]
• 1) Processor sends virtual address to MMU
• 2-3) MMU fetches PTE from page table in memory
• 4) PTE absent so MMU triggers page fault exception
• 5) Handler identifies victim (and, if dirty, pages it out to disk)
• 6) Handler pages in new page and updates PTE in memory
• 7) Handler returns to original process, restarting faulting instruction
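A minimal simulation of steps 4 to 6 is sketched below. It assumes a hypothetical machine with two physical frames and FIFO victim selection; real operating systems use far more sophisticated replacement policies, and all names here (`vm_t`, `vm_access`, and so on) are made up for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define NUM_VPAGES 8   /* toy virtual address space (assumption) */
#define NUM_FRAMES 2   /* toy physical memory (assumption) */

typedef struct { bool valid; bool dirty; int frame; } pte_t;

typedef struct {
    pte_t pt[NUM_VPAGES];
    int   frame_owner[NUM_FRAMES]; /* virtual page held by each frame, -1 if free */
    int   next_victim;             /* FIFO pointer: frame to reuse next */
    int   page_faults;
    int   writebacks;              /* dirty victims paged out to "disk" */
} vm_t;

void vm_init(vm_t *vm)
{
    assert(vm != NULL);
    for (int i = 0; i < NUM_VPAGES; i++)
        vm->pt[i] = (pte_t){ false, false, -1 };
    for (int f = 0; f < NUM_FRAMES; f++)
        vm->frame_owner[f] = -1;
    vm->next_victim = 0;
    vm->page_faults = 0;
    vm->writebacks  = 0;
}

/* Access virtual page vpn; a write marks the page dirty. */
void vm_access(vm_t *vm, int vpn, bool is_write)
{
    assert(vm != NULL && vpn >= 0 && vpn < NUM_VPAGES);
    if (!vm->pt[vpn].valid) {                      /* step 4: page fault */
        vm->page_faults++;
        int frame  = vm->next_victim;              /* step 5: pick a victim */
        vm->next_victim = (vm->next_victim + 1) % NUM_FRAMES;
        int victim = vm->frame_owner[frame];
        if (victim >= 0) {
            if (vm->pt[victim].dirty)
                vm->writebacks++;                  /* write-back only if dirty */
            vm->pt[victim] = (pte_t){ false, false, -1 };
        }
        vm->frame_owner[frame] = vpn;              /* step 6: page in, update PTE */
        vm->pt[vpn] = (pte_t){ true, false, frame };
    }
    if (is_write)
        vm->pt[vpn].dirty = true;
}
```

Writing page 0, reading page 1, and then reading page 2 causes three faults but only one write-back, because only the dirty victim has to be copied out.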

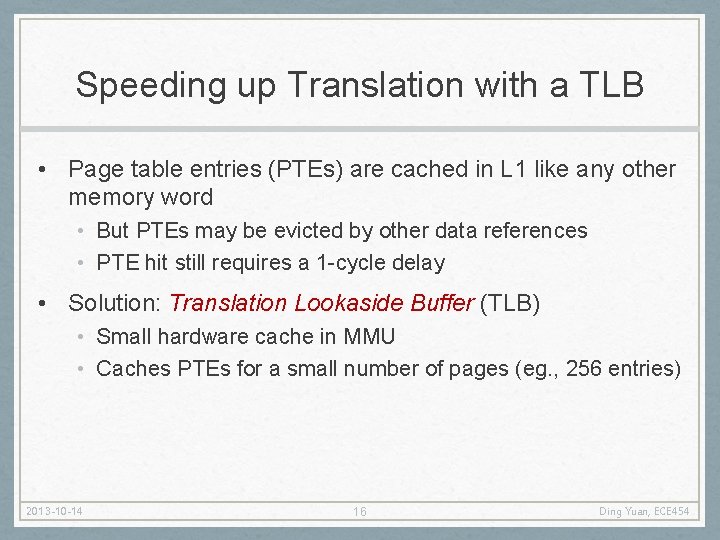

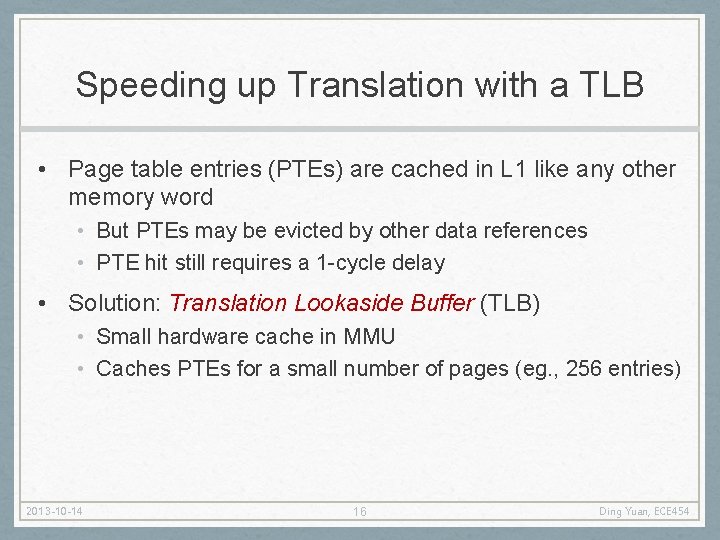

Speeding up Translation with a TLB
• Page table entries (PTEs) are cached in L1 like any other memory word
  • But PTEs may be evicted by other data references
  • PTE hit still requires a 1-cycle delay
• Solution: Translation Lookaside Buffer (TLB)
  • Small hardware cache in MMU
  • Caches PTEs for a small number of pages (e.g., 256 entries)

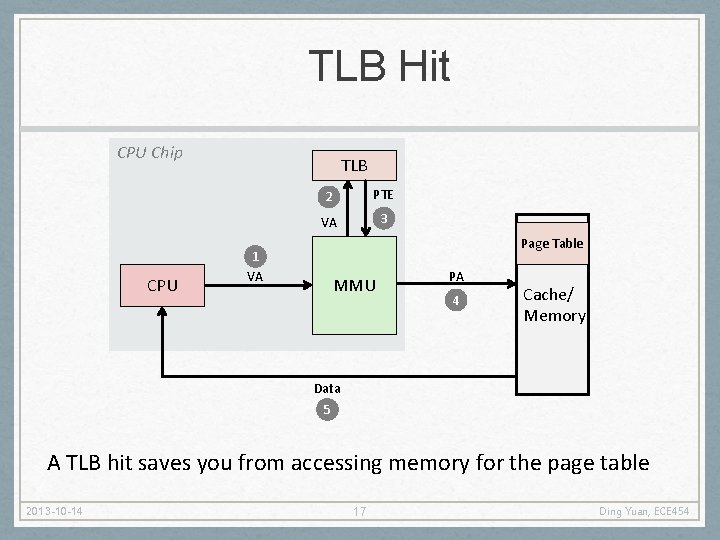

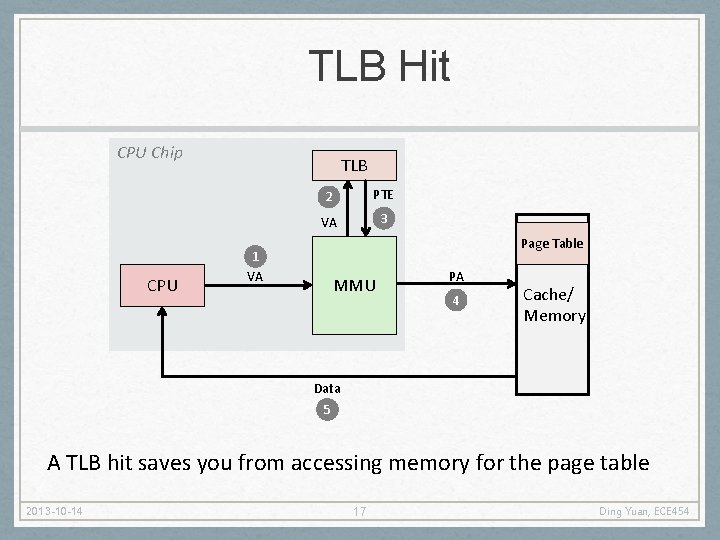

TLB Hit
[Diagram: 1) the CPU sends the VA to the MMU; 2) the MMU looks the VA up in the TLB; 3) the TLB returns the PTE; 4) the MMU sends the PA to cache/memory; 5) cache/memory returns the data to the CPU.]
A TLB hit saves you from accessing memory for the page table.

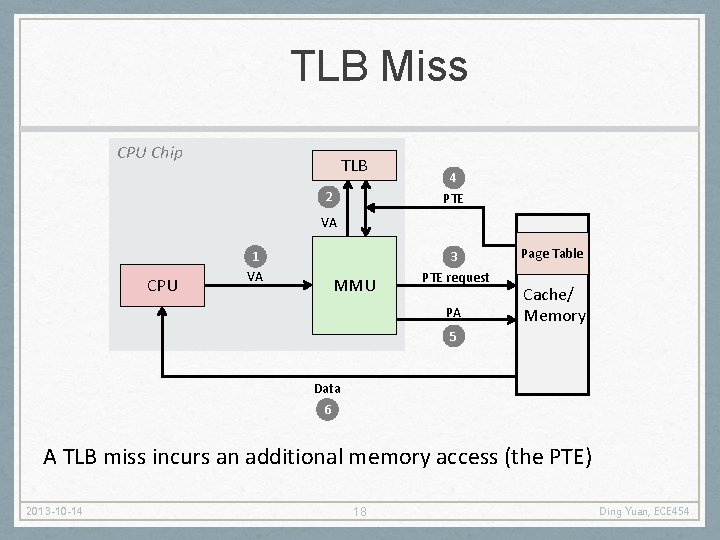

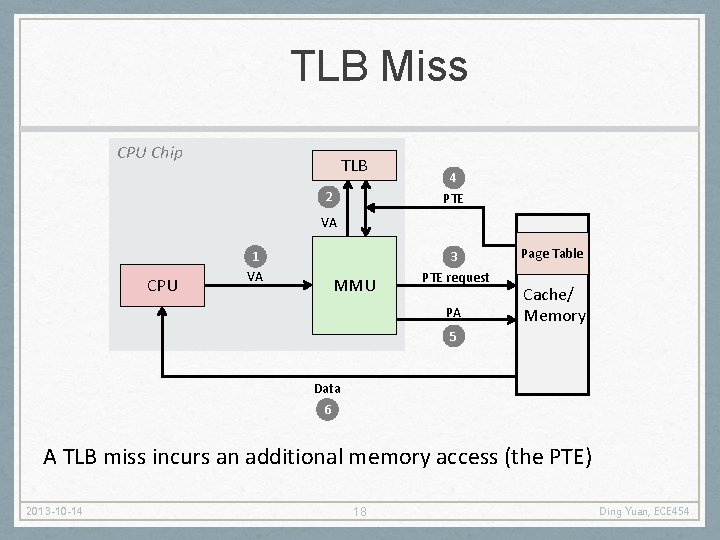

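TLB Miss
[Diagram: 1) the CPU sends the VA to the MMU; 2) the MMU looks the VA up in the TLB and misses; 3) the MMU sends a PTE request to the page table in cache/memory; 4) the PTE is returned and placed in the TLB; 5) the MMU sends the PA to cache/memory; 6) cache/memory returns the data to the CPU.]
A TLB miss incurs an additional memory access (the PTE).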
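The hit and miss paths can be mimicked with a tiny software TLB model. The sketch assumes a 4-entry, fully associative TLB with round-robin replacement, and `toy_page_walk` is a made-up stand-in for the page table access in memory; none of this reflects the organisation of any particular CPU.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TLB_ENTRIES 4   /* deliberately tiny (assumption) */

typedef struct { bool valid; uint32_t vpn; uint32_t ppn; } tlb_entry_t;

typedef struct {
    tlb_entry_t e[TLB_ENTRIES];
    unsigned    next;          /* round-robin replacement pointer */
    unsigned    hits, misses;
} tlb_t;

void tlb_init(tlb_t *t)
{
    assert(t != NULL);
    for (int i = 0; i < TLB_ENTRIES; i++)
        t->e[i].valid = false;
    t->next = 0;
    t->hits = t->misses = 0;
}

/* Hypothetical page table walk: stands in for the extra memory access. */
uint32_t toy_page_walk(uint32_t vpn) { return vpn + 100u; }

/* Return the physical page number for vpn, consulting the TLB first. */
uint32_t tlb_translate(tlb_t *t, uint32_t vpn, uint32_t (*walk)(uint32_t))
{
    assert(t != NULL && walk != NULL);
    for (int i = 0; i < TLB_ENTRIES; i++) {
        if (t->e[i].valid && t->e[i].vpn == vpn) {
            t->hits++;                 /* TLB hit: no page table access */
            return t->e[i].ppn;
        }
    }
    t->misses++;                       /* TLB miss: fetch the PTE from memory */
    uint32_t ppn = walk(vpn);
    t->e[t->next] = (tlb_entry_t){ true, vpn, ppn };
    t->next = (t->next + 1) % TLB_ENTRIES;
    return ppn;
}
```

Touching five distinct pages in turn overflows the four entries, so returning to the first page misses again. This is the effect that shows up once a program's working set has more pages than the TLB has entries.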


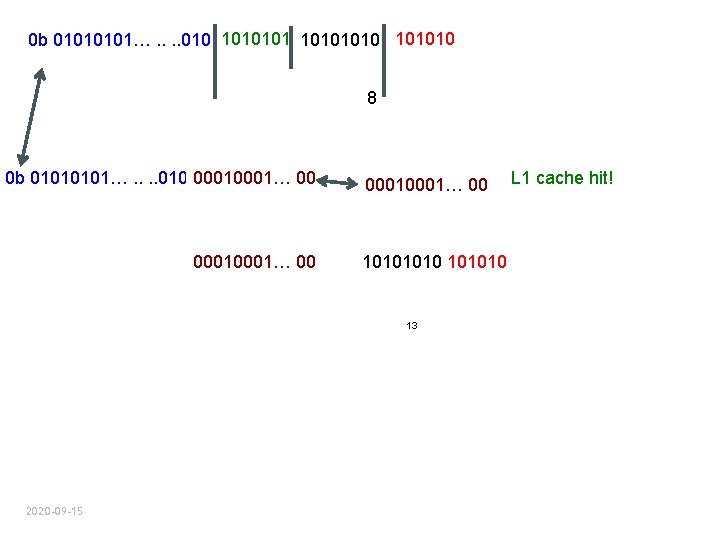

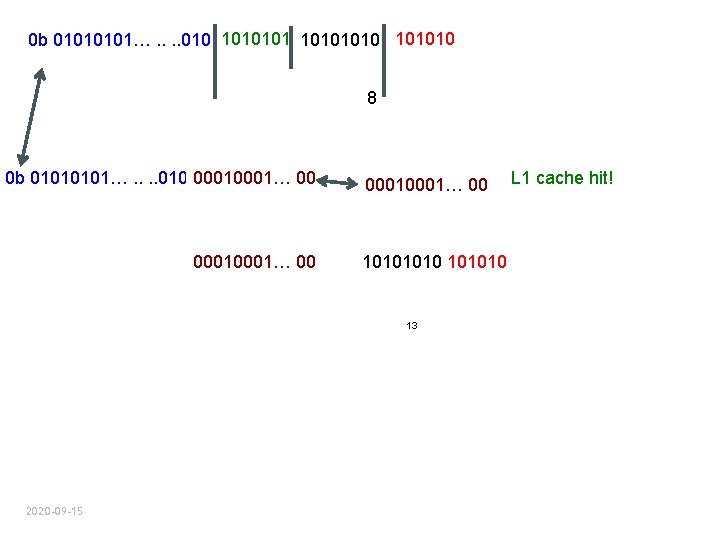

[Figure: example binary addresses (0b0101…010 …) with the annotation “L1 cache hit!”]

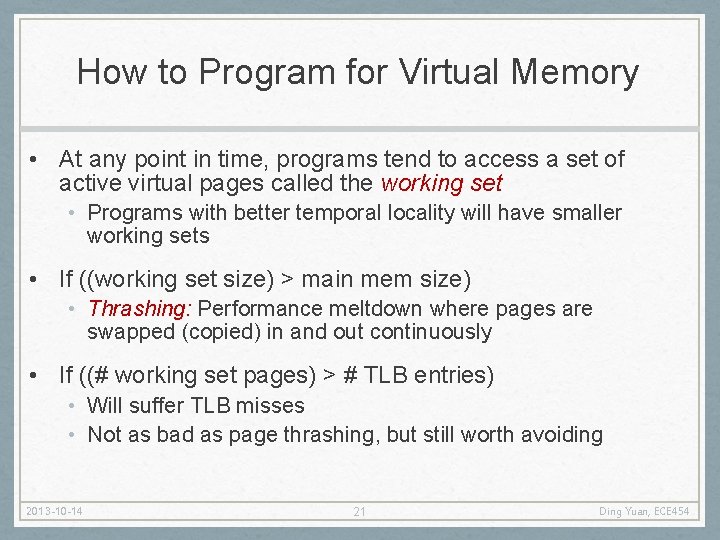

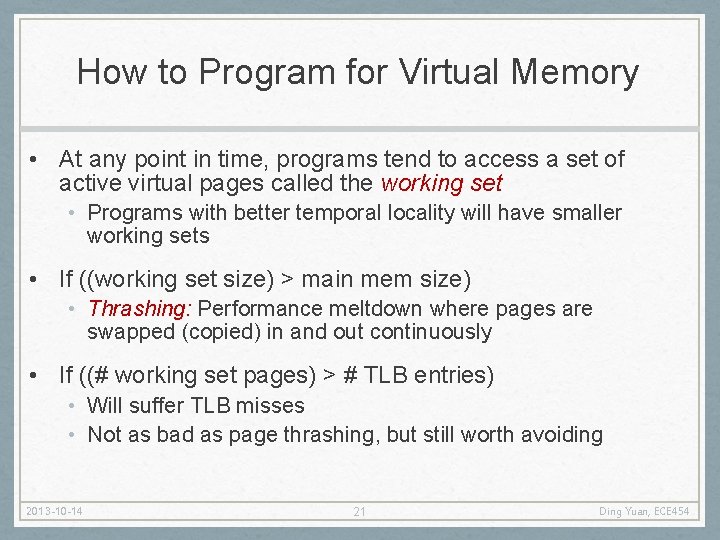

How to Program for Virtual Memory
• At any point in time, programs tend to access a set of active virtual pages called the working set
  • Programs with better temporal locality will have smaller working sets
• If (working set size) > (main memory size)
  • Thrashing: performance meltdown where pages are swapped (copied) in and out continuously
• If (# working set pages) > (# TLB entries)
  • Will suffer TLB misses
  • Not as bad as page thrashing, but still worth avoiding
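To make the link between access order and pages touched concrete, the sketch below counts how often consecutive accesses land on a different page when an n x n matrix of 8-byte elements is traversed row by row versus column by column. It only does address arithmetic (no memory is allocated); the function name and the assumption of a page-aligned, row-major matrix are ours.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define PAGE_BYTES 4096u   /* 4 KB pages, as on the slides */
#define ELEM_BYTES 8u      /* size of one matrix element (assumption) */

/* Count page changes between consecutive accesses to an n x n row-major
 * matrix that starts on a page boundary. */
size_t page_switches(size_t n, bool row_major)
{
    assert(n > 0);
    size_t switches  = 0;
    size_t prev_page = 0;
    bool   first     = true;

    for (size_t outer = 0; outer < n; outer++) {
        for (size_t inner = 0; inner < n; inner++) {
            size_t row  = row_major ? outer : inner;
            size_t col  = row_major ? inner : outer;
            size_t page = ((row * n + col) * ELEM_BYTES) / PAGE_BYTES;
            if (!first && page != prev_page)
                switches++;
            prev_page = page;
            first = false;
        }
    }
    return switches;
}
```

For n = 512 each row is exactly one page, so the row-by-row traversal changes page 511 times while the column-by-column traversal changes page on every access (262143 times). The second order therefore cycles through 512 pages, more than a 256-entry TLB can hold.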

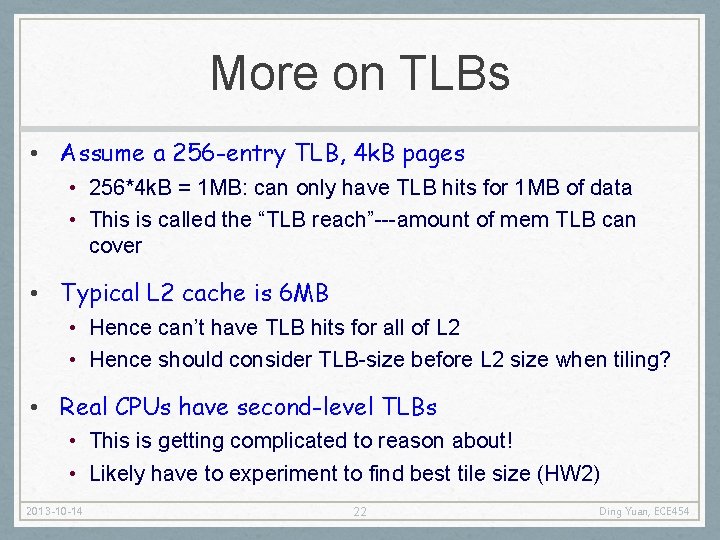

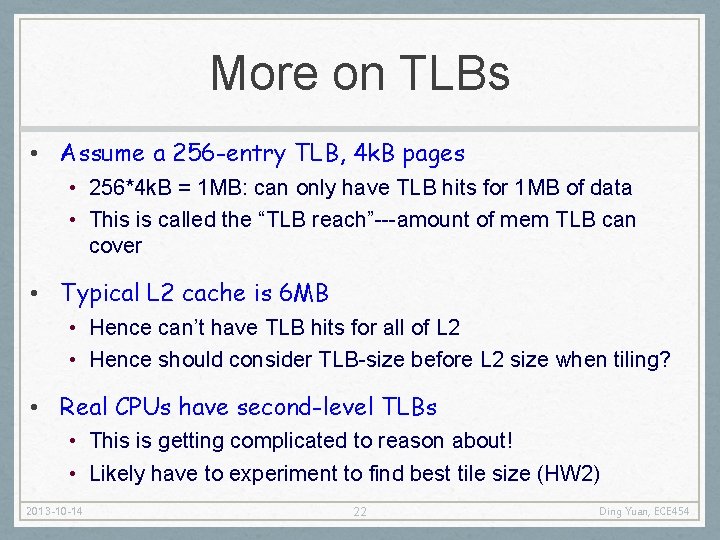

More on TLBs
• Assume a 256-entry TLB, 4 kB pages
  • 256 * 4 kB = 1 MB: can only have TLB hits for 1 MB of data
  • This is called the “TLB reach”: the amount of memory the TLB can cover
• Typical L2 cache is 6 MB
  • Hence can’t have TLB hits for all of L2
  • Hence should consider TLB size before L2 size when tiling?
• Real CPUs have second-level TLBs
  • This is getting complicated to reason about!
  • Likely have to experiment to find best tile size (HW 2)
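The TLB-reach arithmetic is easy to turn into a helper for checking a candidate working set. This sketch assumes a single-level TLB and a contiguous, page-aligned working set; the function names are illustrative only.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* TLB reach = number of entries * page size. */
size_t tlb_reach_bytes(size_t entries, size_t page_bytes)
{
    assert(page_bytes > 0 && entries <= SIZE_MAX / page_bytes);
    return entries * page_bytes;
}

/* Pages needed to hold ws_bytes of contiguous, page-aligned data. */
size_t pages_needed(size_t ws_bytes, size_t page_bytes)
{
    assert(page_bytes > 0);
    return ws_bytes / page_bytes + (ws_bytes % page_bytes != 0);
}

/* True if every page of the working set can have a TLB entry at once. */
bool working_set_fits_tlb(size_t ws_bytes, size_t entries, size_t page_bytes)
{
    return pages_needed(ws_bytes, page_bytes) <= entries;
}
```

With the slide's numbers, `tlb_reach_bytes(256, 4096)` is 1 MB, while a 6 MB L2-sized working set needs 1536 pages and so does not fit in 256 entries.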

Prefetching

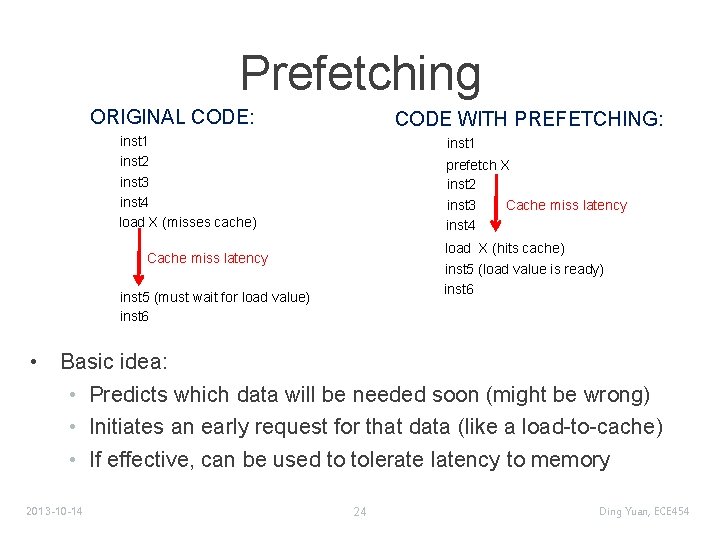

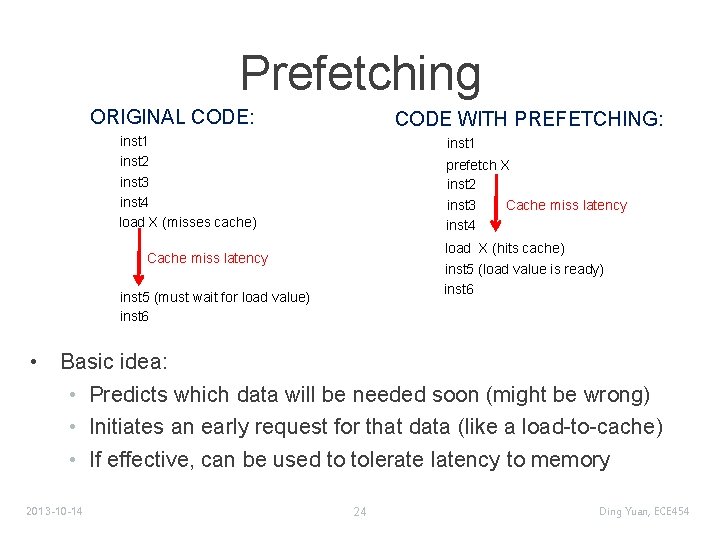

Prefetching
ORIGINAL CODE:
  inst1
  inst2
  inst3
  inst4
  load X (misses cache)
  [cache miss latency]
  inst5 (must wait for load value)
  inst6
CODE WITH PREFETCHING:
  inst1
  prefetch X
  inst2 [cache miss latency overlaps inst2 to inst4]
  inst3
  inst4
  load X (hits cache)
  inst5 (load value is ready)
  inst6
• Basic idea:
  • Predicts which data will be needed soon (might be wrong)
  • Initiates an early request for that data (like a load-to-cache)
  • If effective, can be used to tolerate latency to memory

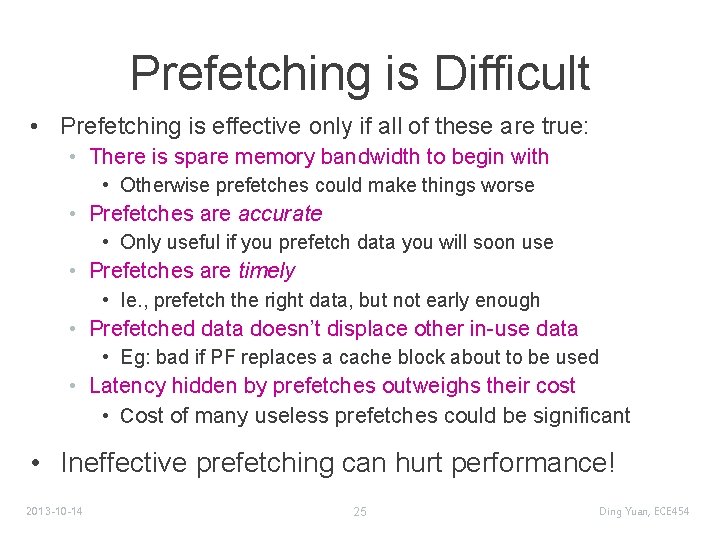

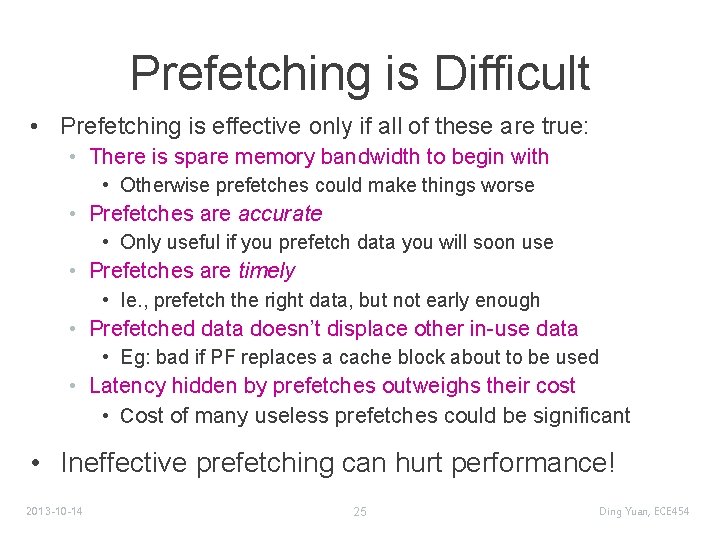

Prefetching is Difficult
• Prefetching is effective only if all of these are true:
  • There is spare memory bandwidth to begin with
    • Otherwise prefetches could make things worse
  • Prefetches are accurate
    • Only useful if you prefetch data you will soon use
  • Prefetches are timely
    • I.e., it does not help to prefetch the right data, but not early enough
  • Prefetched data doesn’t displace other in-use data
    • E.g., bad if a prefetch replaces a cache block about to be used
  • Latency hidden by prefetches outweighs their cost
    • Cost of many useless prefetches could be significant
• Ineffective prefetching can hurt performance!
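Timeliness is often reasoned about as a prefetch distance: how many loop iterations ahead a prefetch must be issued so that the data arrives before it is used. The sketch below uses the common rule of thumb ceil(miss latency / cycles per iteration). The function names are hypothetical, and `__builtin_prefetch` is the GCC/Clang builtin, so this assumes one of those compilers. Issuing a prefetch on every element is more than needed (one per cache block would do), and a hardware prefetcher would likely catch this sequential pattern anyway; the loop only shows where the distance is applied.

```c
#include <assert.h>
#include <stddef.h>

/* Iterations to run ahead: ceil(miss_latency_cycles / cycles_per_iter). */
size_t prefetch_distance(size_t miss_latency_cycles, size_t cycles_per_iter)
{
    assert(cycles_per_iter > 0);
    return miss_latency_cycles / cycles_per_iter
         + (miss_latency_cycles % cycles_per_iter != 0);
}

/* Sum a[0..n-1], prefetching the element dist iterations ahead. */
long sum_with_prefetch(const long *a, size_t n, size_t dist)
{
    assert(a != NULL || n == 0);
    long sum = 0;
    for (size_t i = 0; i < n; i++) {
        if (dist < n - i)                            /* keep a[i + dist] in bounds */
            __builtin_prefetch(&a[i + dist], 0, 3);  /* read, keep in cache */
        sum += a[i];
    }
    return sum;
}
```

For example, with an assumed 200-cycle miss latency and 50 cycles of work per iteration, the distance is 4 iterations. A smaller distance leaves part of the latency exposed, and a much larger one risks the block being evicted before it is used.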

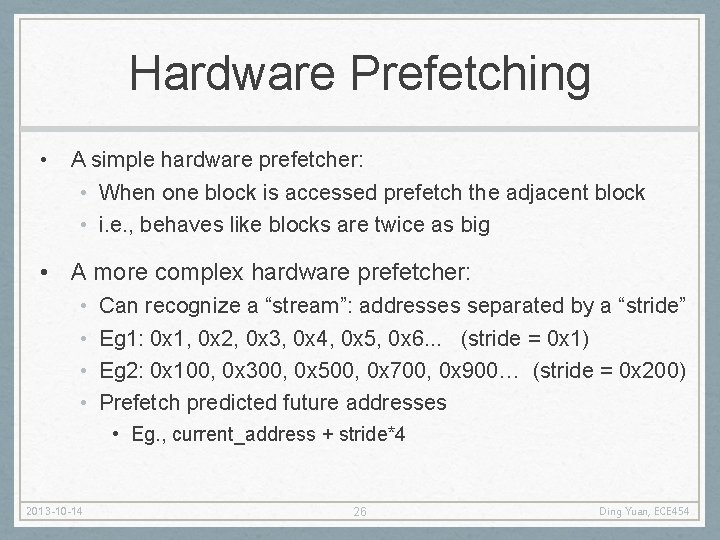

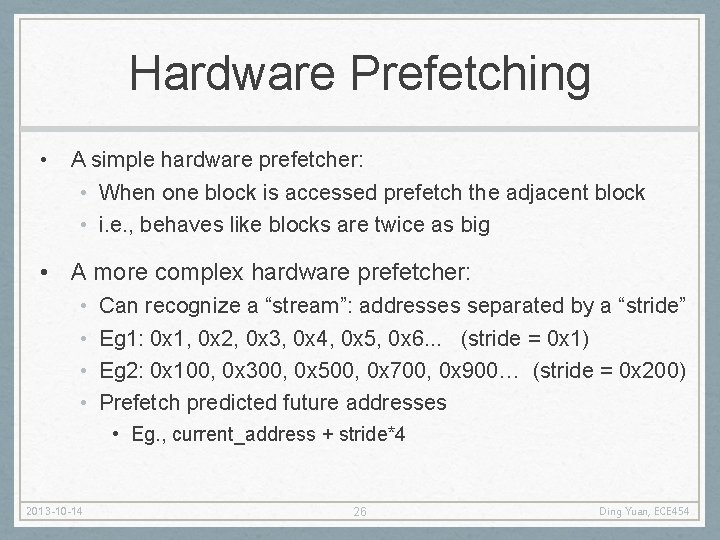

Hardware Prefetching
• A simple hardware prefetcher:
  • When one block is accessed, prefetch the adjacent block
  • I.e., behaves like blocks are twice as big
• A more complex hardware prefetcher:
  • Can recognize a “stream”: addresses separated by a “stride”
    • Eg 1: 0x1, 0x2, 0x3, 0x4, 0x5, 0x6, ... (stride = 0x1)
    • Eg 2: 0x100, 0x300, 0x500, 0x700, 0x900, ... (stride = 0x200)
  • Prefetch predicted future addresses
    • E.g., current_address + stride*4
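A stride detector of the kind described can be modelled in a few lines. The sketch assumes a single stream and predicts as soon as the same non-zero stride is seen twice in a row; real prefetchers track several streams and their exact policies are not public. The struct and function names are made up for this example, and the lookahead of 4 strides follows the slide's current_address + stride*4.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PREFETCH_DISTANCE 4   /* strides to look ahead, as on the slide */

typedef struct {
    uint64_t last_addr;
    int64_t  stride;      /* most recently observed stride */
    bool     have_last;   /* false until the first address is seen */
} stride_pf_t;

/* Feed one accessed address to the detector. Returns true and stores the
 * predicted address in *prefetch_addr when the stride repeated. */
bool stride_pf_observe(stride_pf_t *pf, uint64_t addr, uint64_t *prefetch_addr)
{
    assert(pf != NULL && prefetch_addr != NULL);
    bool predict = false;

    if (pf->have_last) {
        int64_t s = (int64_t)(addr - pf->last_addr);
        if (s != 0 && s == pf->stride) {          /* same stride twice: a stream */
            *prefetch_addr = addr + (uint64_t)(s * PREFETCH_DISTANCE);
            predict = true;
        }
        pf->stride = s;
    }
    pf->last_addr = addr;
    pf->have_last = true;
    return predict;
}
```

Fed the sequence 0x100, 0x300, 0x500, the detector stays silent for the first two addresses and, on the third, predicts 0x500 + 4 * 0x200 = 0xD00.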

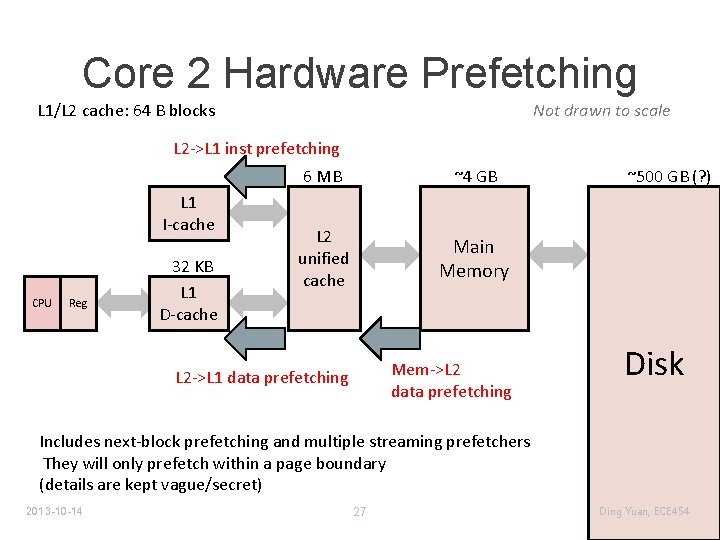

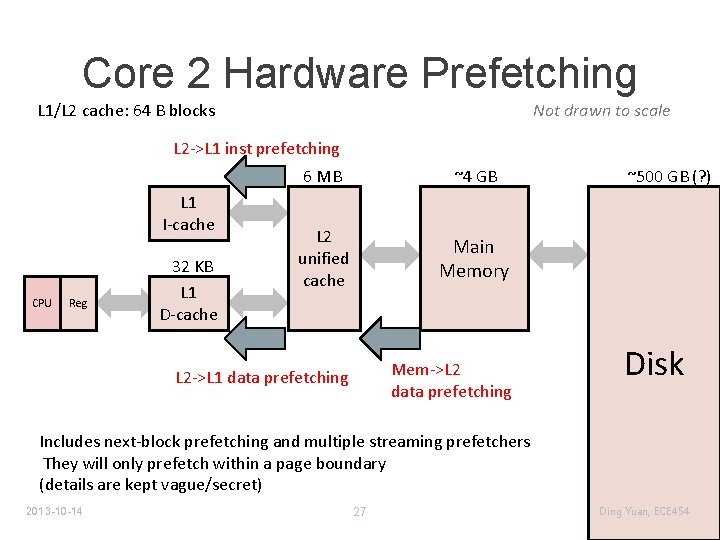

Core 2 Hardware Prefetching
[Diagram, not drawn to scale: CPU registers; L1 I-cache and 32 KB L1 D-cache; 6 MB unified L2 cache; ~4 GB main memory; ~500 GB (?) disk. L1/L2 caches use 64 B blocks. Prefetch paths shown: L2->L1 instruction prefetching, L2->L1 data prefetching, Mem->L2 data prefetching.]
• Includes next-block prefetching and multiple streaming prefetchers
• They will only prefetch within a page boundary (details are kept vague/secret)

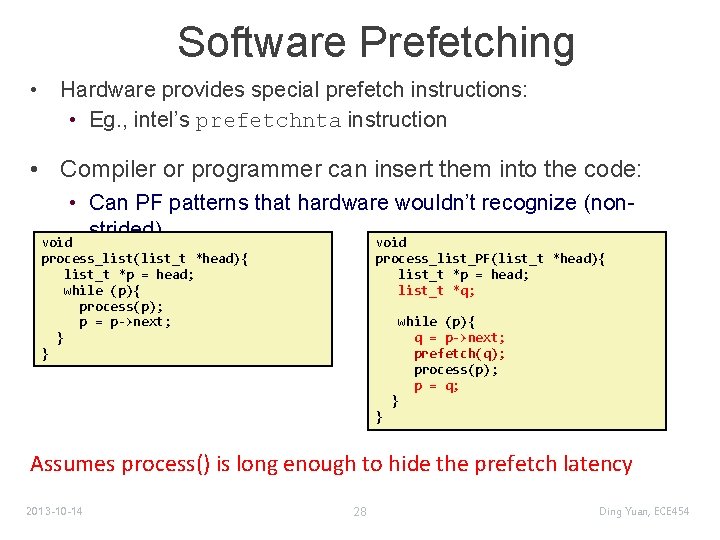

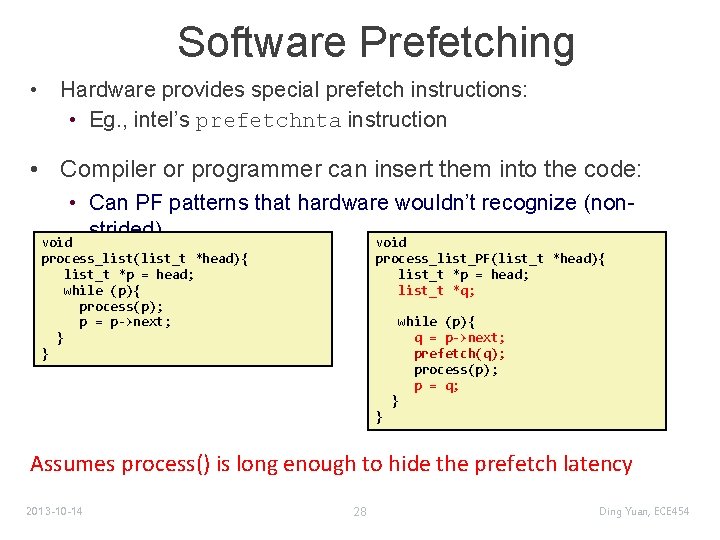

Software Prefetching
• Hardware provides special prefetch instructions:
  • E.g., Intel’s prefetchnta instruction
• Compiler or programmer can insert them into the code:
  • Can prefetch patterns that hardware wouldn’t recognize (non-strided)

Original list traversal:

    void process_list(list_t *head){
        list_t *p = head;
        while (p){
            process(p);
            p = p->next;
        }
    }

List traversal with prefetching:

    void process_list_PF(list_t *head){
        list_t *p = head;
        list_t *q;
        while (p){
            q = p->next;    /* find the next node first */
            prefetch(q);    /* start fetching it early */
            process(p);     /* work on the current node meanwhile */
            p = q;
        }
    }

Assumes process() is long enough to hide the prefetch latency.
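A compilable variant of the list traversal is sketched here using `__builtin_prefetch`, the GCC/Clang builtin, in place of the slide's generic prefetch(). The node type, the helper `list_from_array`, and the summing "work" are all hypothetical stand-ins. The locality hint 0 asks for a non-temporal prefetch, which x86 compilers may lower to prefetchnta, but that mapping is up to the compiler.

```c
#include <assert.h>
#include <stddef.h>

typedef struct node {
    long         value;
    struct node *next;
} node_t;

/* Link nodes[0..n-1] into a list holding values[0..n-1]; returns the head. */
node_t *list_from_array(node_t *nodes, const long *values, size_t n)
{
    assert(n == 0 || (nodes != NULL && values != NULL));
    for (size_t i = 0; i < n; i++) {
        nodes[i].value = values[i];
        nodes[i].next  = (i + 1 < n) ? &nodes[i + 1] : NULL;
    }
    return n ? &nodes[0] : NULL;
}

/* Sum the list, prefetching the next node while working on the current one. */
long sum_list_prefetch(const node_t *head)
{
    long sum = 0;
    const node_t *p = head;
    while (p != NULL) {
        const node_t *q = p->next;
        __builtin_prefetch(q, 0, 0);  /* read access, no temporal locality */
        sum += p->value;              /* stands in for process(p) */
        p = q;
    }
    return sum;
}
```

Because the per-node work here is a single addition, this version is unlikely to hide much latency. As with the slide's version, the benefit depends on the work per node being long enough to overlap with the fetch.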

Summary: Optimizing for a Modern Memory Hierarchy

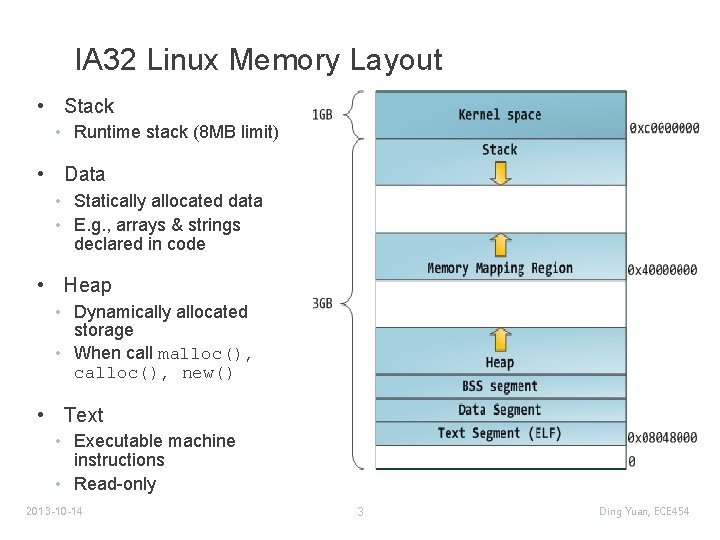

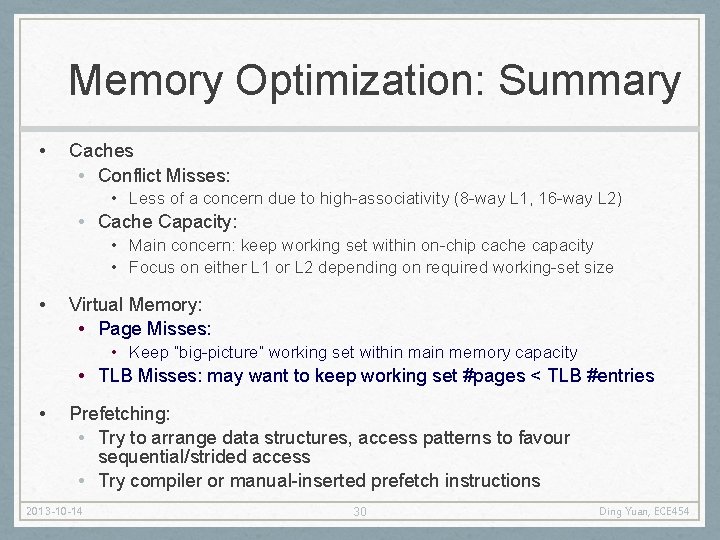

Memory Optimization: Summary
• Caches
  • Conflict misses:
    • Less of a concern due to high associativity (8-way L1, 16-way L2)
  • Cache capacity:
    • Main concern: keep working set within on-chip cache capacity
    • Focus on either L1 or L2 depending on required working-set size
• Virtual memory:
  • Page misses:
    • Keep “big-picture” working set within main memory capacity
  • TLB misses: may want to keep working set #pages < TLB #entries
• Prefetching:
  • Try to arrange data structures and access patterns to favour sequential/strided access
  • Try compiler-inserted or manually inserted prefetch instructions