Dieter Kranzlmüller: Scientific Computing @ LRZ, June 16th

- Slides: 32

Dieter Kranzlmüller, Scientific Computing @ LRZ, June 16th, 2011, CC-IN2P3 Prospective Days, Aix-les-Bains, France

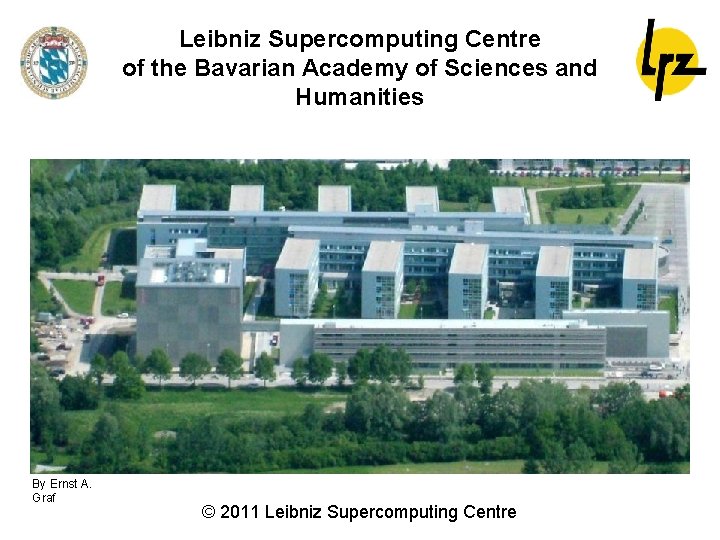

Leibniz Supercomputing Centre of the Bavarian Academy of Sciences and Humanities By Ernst A. Graf © 2011 Leibniz Supercomputing Centre

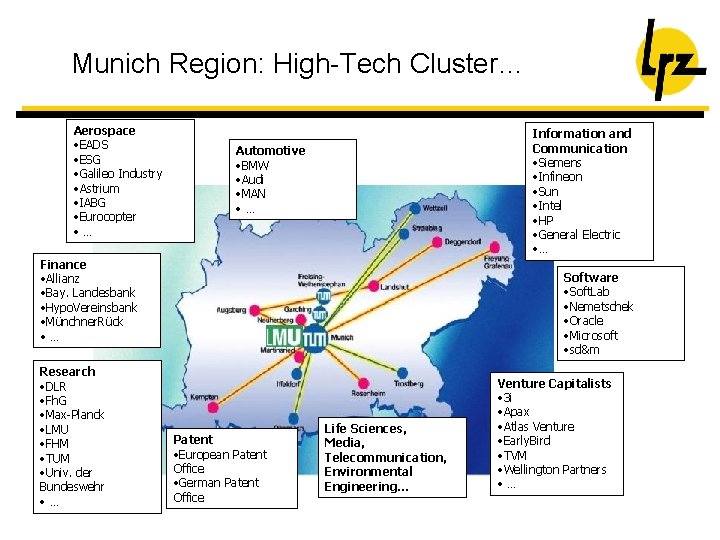

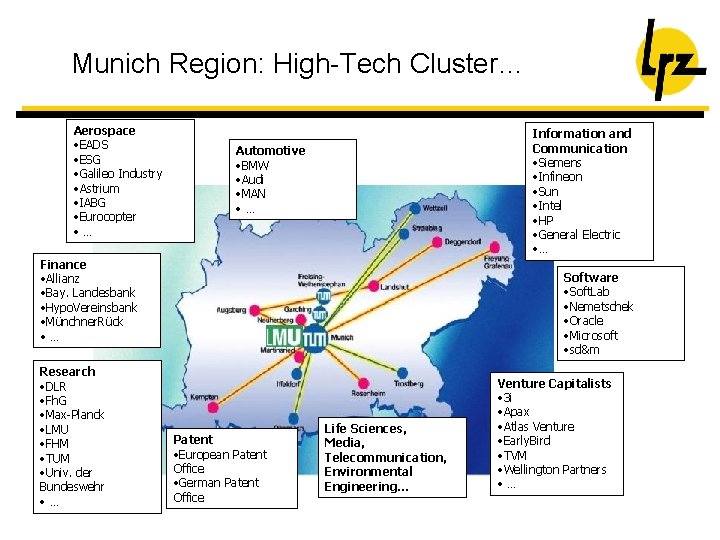

Munich Region: High-Tech Cluster…

- Aerospace: EADS, ESG, Galileo Industry, Astrium, IABG, Eurocopter, …
- Information and Communication: Siemens, Infineon, Sun, Intel, HP, General Electric, …
- Automotive: BMW, Audi, MAN, …
- Finance: Allianz, Bay. Landesbank, HypoVereinsbank, Münchner Rück, …
- Research: DLR, FhG, Max-Planck, LMU, FHM, TUM, Univ. der Bundeswehr, …
- Software: Softlab, Nemetschek, Oracle, Microsoft, sd&m
- Patent: European Patent Office, German Patent Office
- Life Sciences, Media, Telecommunication, Environmental Engineering…
- Venture Capitalists: 3i, Apax, Atlas Venture, Earlybird, TVM, Wellington Partners, …

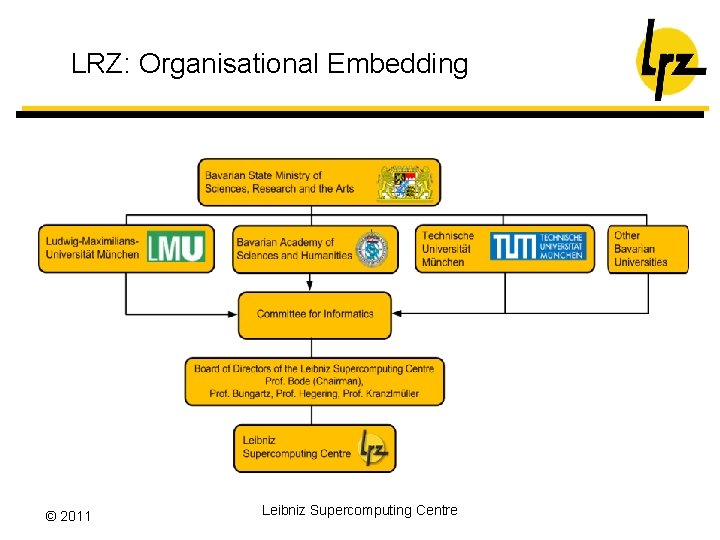

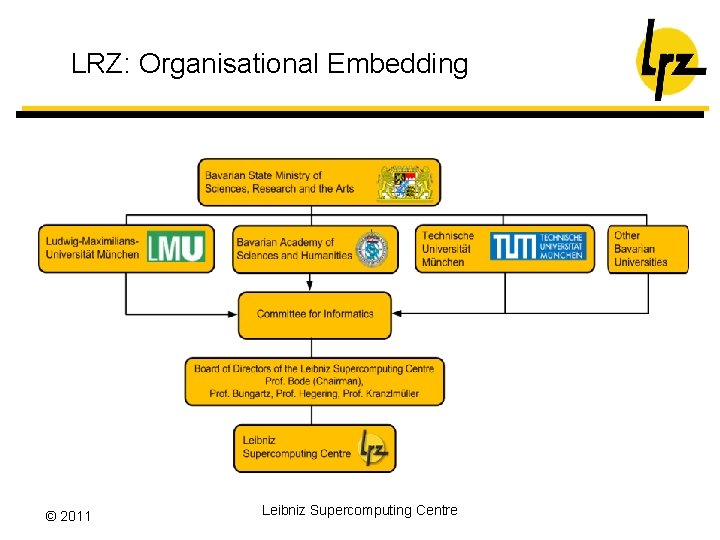

LRZ: Organisational Embedding

The Leibniz Supercomputing Centre is…

- Computer Centre for all Munich Universities
  - With 156 employees and 38 extra staff
  - For more than 90,000 students and
  - For more than 30,000 employees
  - including 8,500 scientists
- Regional Computer Centre for all Bavarian Universities
  - Capacity computing
  - Special equipment
  - Backup and Archiving Centre (15 petabytes, more than 9 billion files)
  - Distributed File Systems
  - Competence centre (Networks, HPC, Grid Computing, IT Management)
- National Supercomputing Centre
  - Gauss Centre for Supercomputing
  - Integrated in European HPC and Grid projects

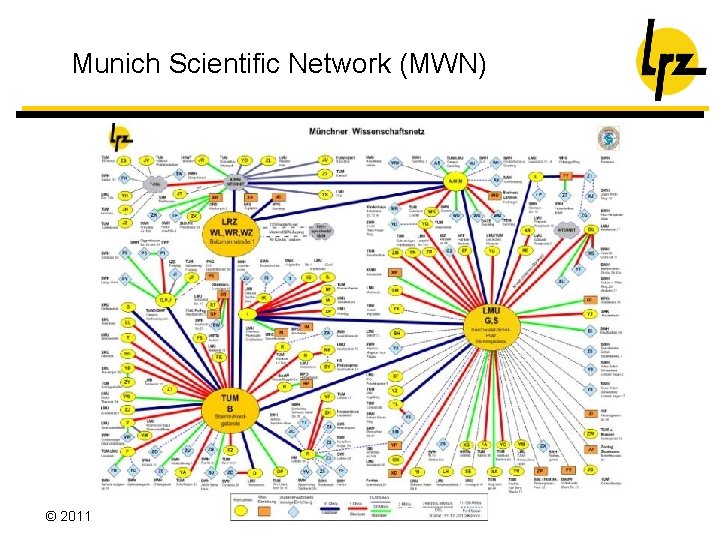

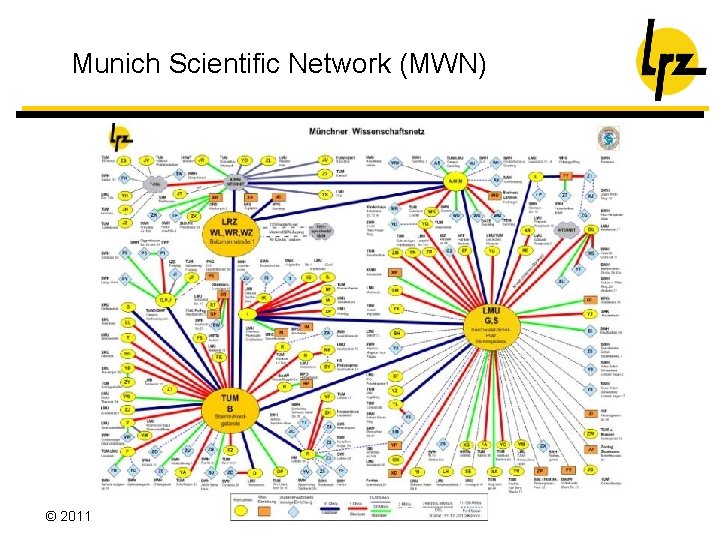

Munich Scientific Network (MWN)

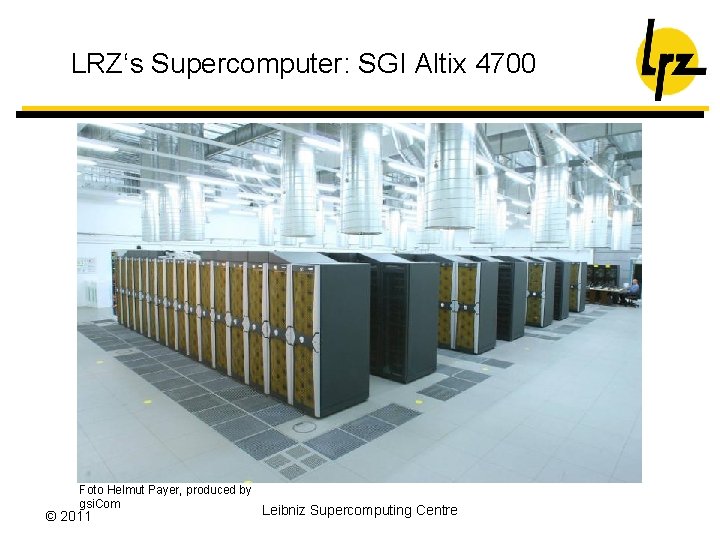

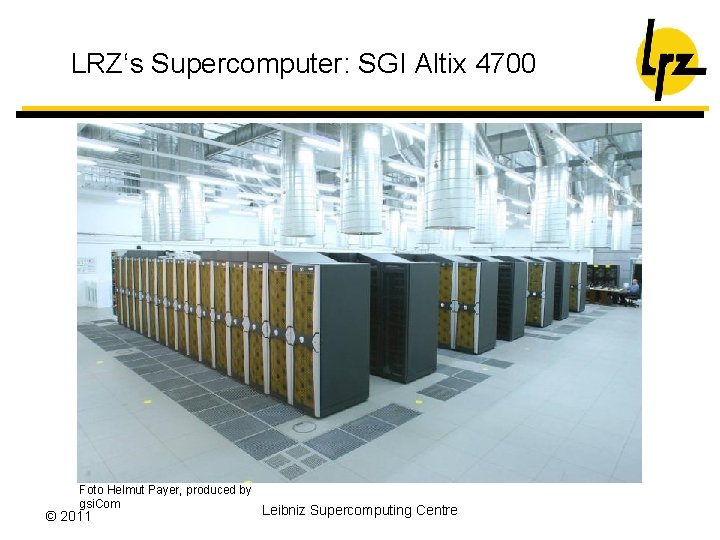

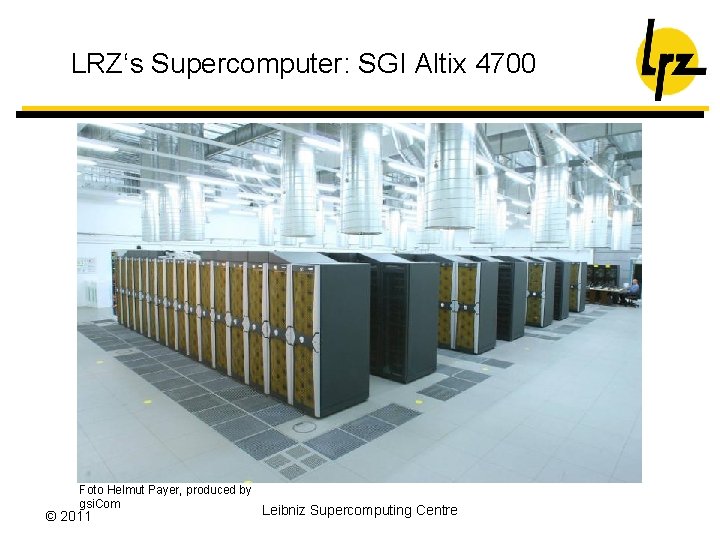

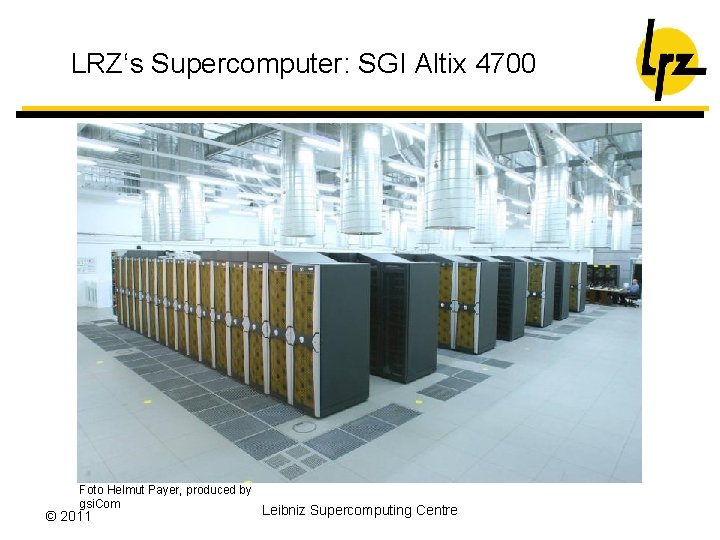

LRZ's Supercomputer: SGI Altix 4700. Photo: Helmut Payer, produced by gsiCom

Evolution of Peak Performance and Memory (Sum over all LRZ systems)

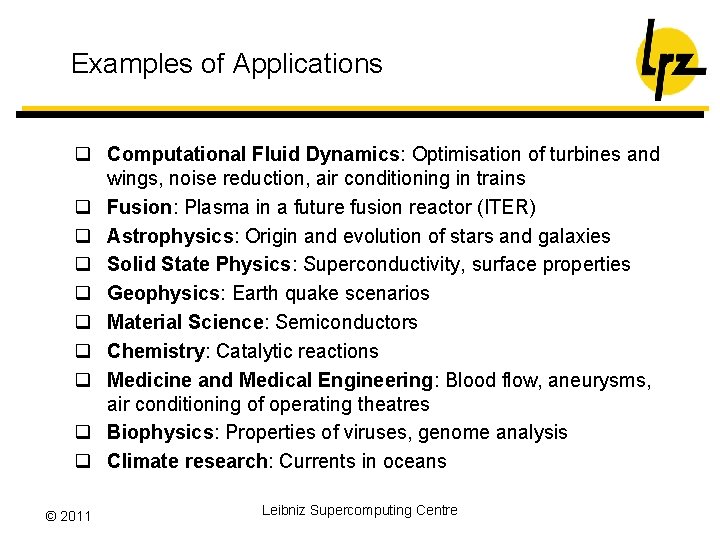

Examples of Applications

- Computational Fluid Dynamics: Optimisation of turbines and wings, noise reduction, air conditioning in trains
- Fusion: Plasma in a future fusion reactor (ITER)
- Astrophysics: Origin and evolution of stars and galaxies
- Solid State Physics: Superconductivity, surface properties
- Geophysics: Earthquake scenarios
- Material Science: Semiconductors
- Chemistry: Catalytic reactions
- Medicine and Medical Engineering: Blood flow, aneurysms, air conditioning of operating theatres
- Biophysics: Properties of viruses, genome analysis
- Climate research: Currents in oceans

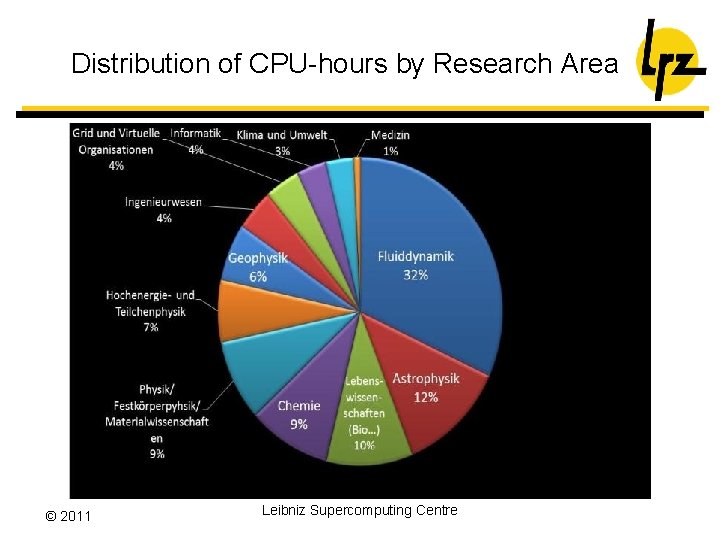

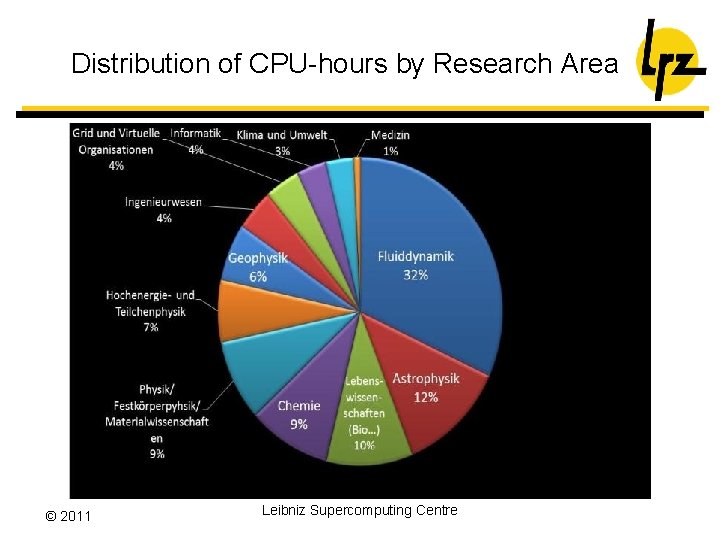

Distribution of CPU-hours by Research Area

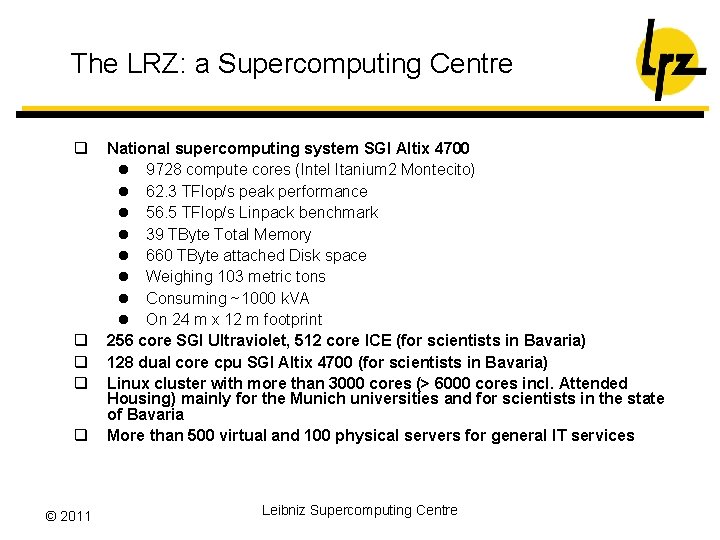

The LRZ: a Supercomputing Centre

- National supercomputing system SGI Altix 4700
  - 9728 compute cores (Intel Itanium 2 Montecito)
  - 62.3 TFlop/s peak performance
  - 56.5 TFlop/s Linpack benchmark
  - 39 TByte total memory
  - 660 TByte attached disk space
  - Weighing 103 metric tons
  - Consuming ~1000 kVA
  - On a 24 m x 12 m footprint
- 256 core SGI Ultraviolet, 512 core ICE (for scientists in Bavaria)
- 128 dual core CPU SGI Altix 4700 (for scientists in Bavaria)
- Linux cluster with more than 3000 cores (> 6000 cores incl. Attended Housing), mainly for the Munich universities and for scientists in the state of Bavaria
- More than 500 virtual and 100 physical servers for general IT services
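As a rough illustration of how the quoted Altix 4700 figures relate to each other, the following Python sketch derives the Linpack efficiency (measured result divided by theoretical peak) and the peak performance per core from the numbers on the slide. The function and variable names are illustrative and not taken from the talk.

```python
# Figures quoted on the slide for the SGI Altix 4700.
PEAK_TFLOPS = 62.3      # theoretical peak performance
LINPACK_TFLOPS = 56.5   # measured Linpack benchmark result
CORES = 9728            # compute cores (Intel Itanium 2 Montecito)


def linpack_efficiency(linpack_tflops: float, peak_tflops: float) -> float:
    """Fraction of the theoretical peak achieved in the Linpack run."""
    return linpack_tflops / peak_tflops


def peak_per_core_gflops(peak_tflops: float, cores: int) -> float:
    """Theoretical peak per core, converted from TFlop/s to GFlop/s."""
    return peak_tflops * 1000.0 / cores


efficiency = linpack_efficiency(LINPACK_TFLOPS, PEAK_TFLOPS)
per_core = peak_per_core_gflops(PEAK_TFLOPS, CORES)

print(f"Linpack efficiency: {efficiency:.1%}")
print(f"Peak per core: {per_core:.2f} GFlop/s")
```

With the slide's values this gives a Linpack efficiency of roughly 91% and a peak of about 6.4 GFlop/s per core.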

Research at LRZ (1/2)

- IT Management (Methods, Architecture, Tools): Service Management (Impact Analysis, CSM, SLA Mgmt, Monitoring), ITIL (Process Refinements, Mapping to tools, Benchmarking), Cross-Domain Management, Security Management in Federations, Virtualization
- Piloting new Network Technologies
  - 100 Gbit Ethernet WANs
- Long-term Archiving
  - Hierarchical Storage Management Systems
  - Backup Strategies and Technologies

Research at LRZ (2/2)

- Grids: Management of Virtual Organizations (VO Mgmt), Grid Monitoring, Grid Middleware (Globus), Security and Intrusion Detection on the Grid, Meta-Scheduling, Service Level Agreements (SLA), Global Shared Filesystems
- Computational Science: Operations Strategies for Petaflop Systems, New Programming Paradigms for Petaflop Systems, Scaling of Applications, Visualization of Large Data Sets, Virtual Reality

Munich Computational Sciences Centre

- Partners
  - Max Planck Society (MPG)
  - Computing Centre Garching of MPG (RZG)
  - Bavarian Academy of Sciences and Humanities
  - Leibniz Supercomputing Centre
  - University of Munich
  - Technical University of Munich
- Goals
  - Support the development of algorithms and applications suitable for high performance computing, as well as data processing and visualization in various scientific areas
  - Bundle LRZ's and RZG's competence in the areas of high performance computing, large scale data handling and archiving, high performance networking, and optimization of HPC applications
  - Provide a quality of scientific computing unique throughout Germany, and on an internationally competitive level


European e-Infrastructures

- Pan-European Data Network
- European Grid Infrastructure + National Grid Initiatives + European/National Cloud Initiatives + European/National Data Initiatives
- Partnership for Advanced Computing in Europe

The Gauss Centre for Supercomputing (GCS)

A joint activity of the three German National HPC Centres

- The three German national supercomputing centres at Jülich, Garching and Stuttgart joined forces in the Gauss Centre for Supercomputing
  - John von Neumann Institut für Computing (NIC)
  - Leibniz Supercomputing Centre (LRZ)
  - Höchstleistungsrechenzentrum Stuttgart (HLRS)
- The largest and most powerful supercomputer infrastructure in Europe
- Foundation of GCS (e.V.): April 13th, 2007
- Principal Partner in EU-Project PRACE (Partnership for Advanced Computing in Europe)

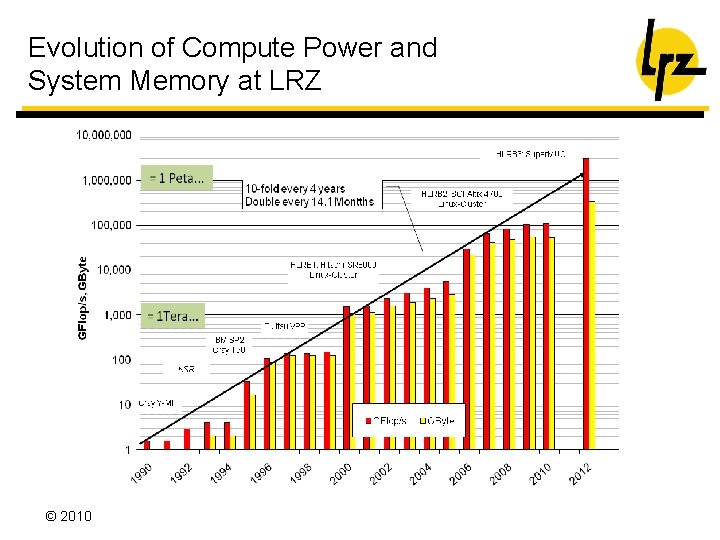

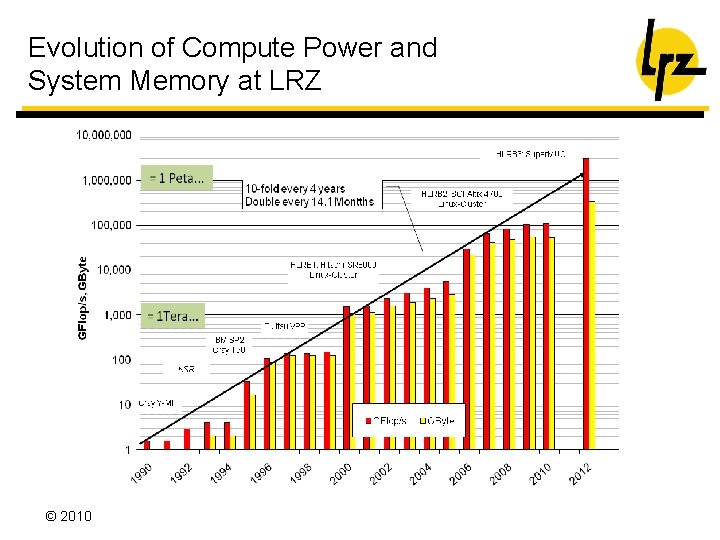

Evolution of Compute Power and System Memory at LRZ

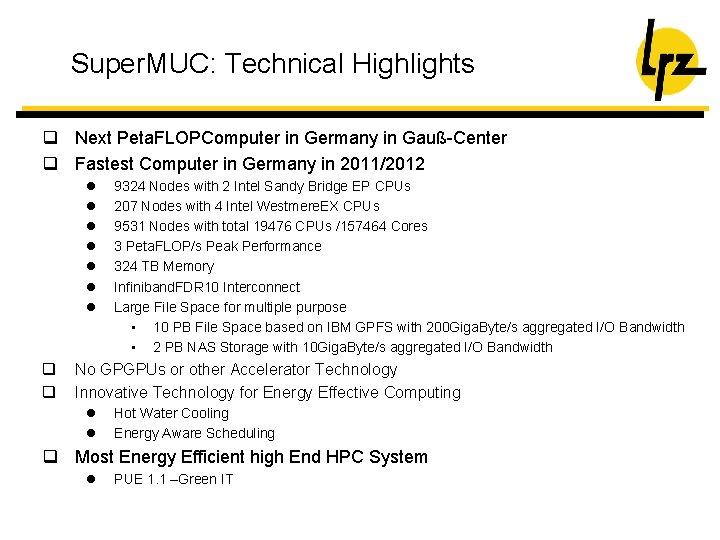

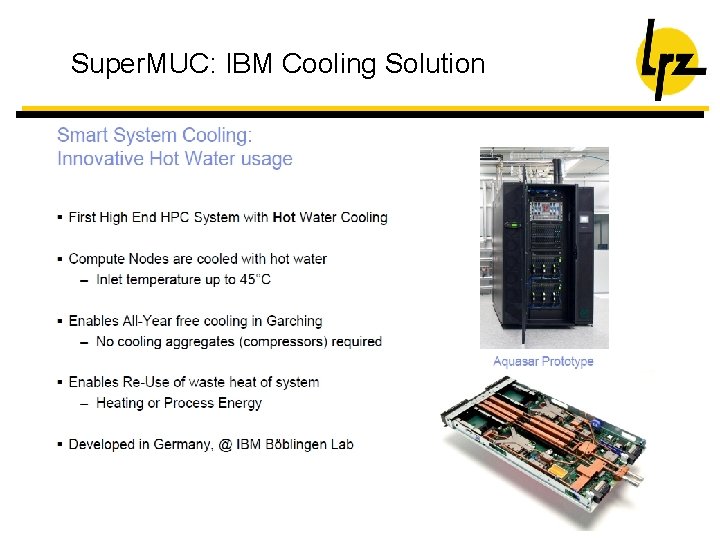

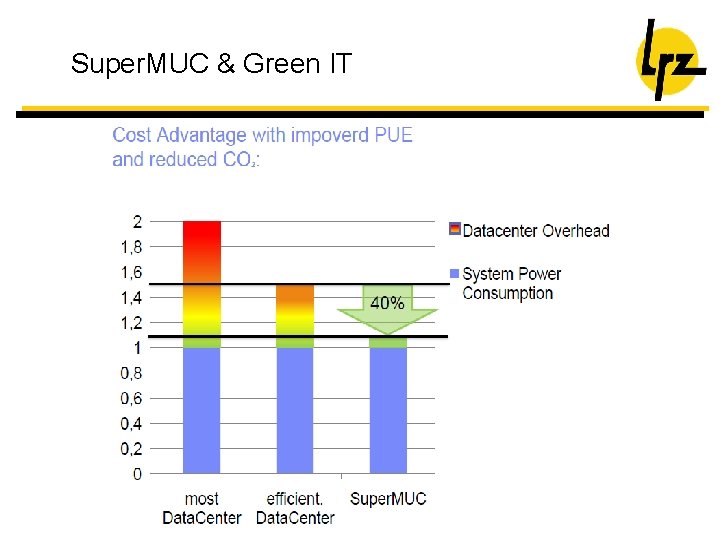

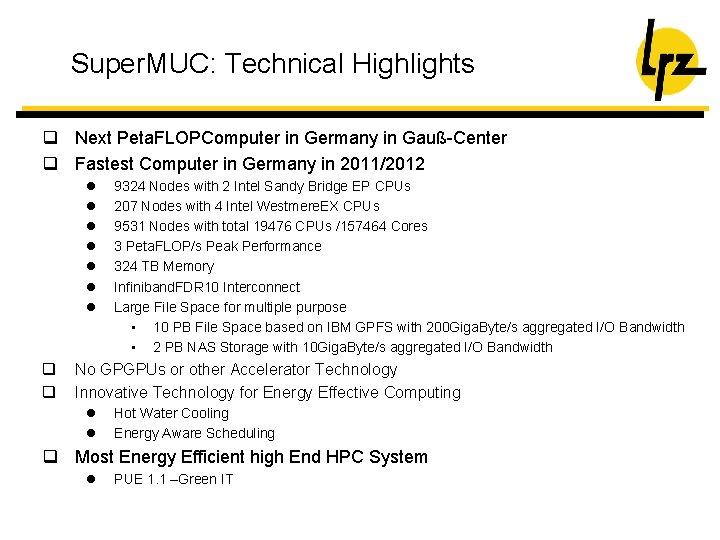

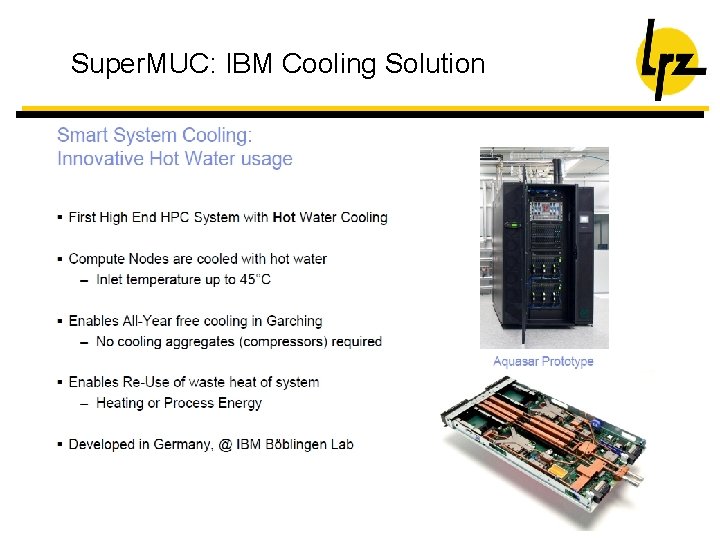

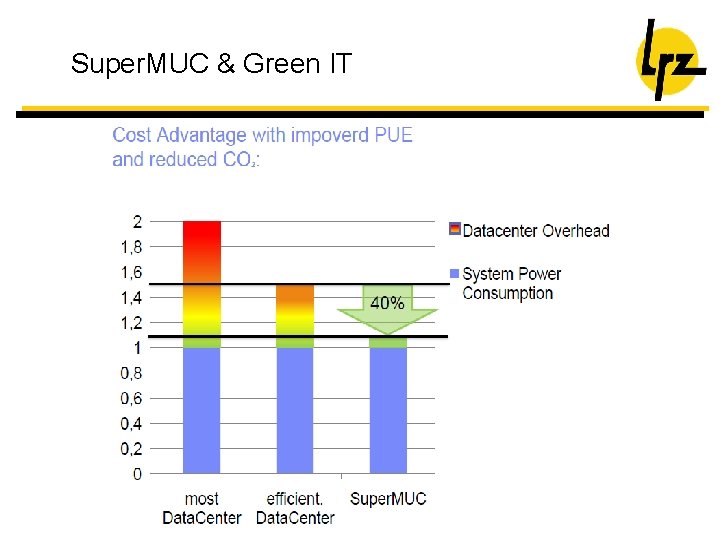

SuperMUC: Technical Highlights

- Next PetaFLOP computer in Germany in the Gauß-Center
- Fastest computer in Germany in 2011/2012
  - 9324 nodes with 2 Intel Sandy Bridge EP CPUs
  - 207 nodes with 4 Intel Westmere EX CPUs
  - 9531 nodes with a total of 19476 CPUs / 157464 cores
  - 3 PetaFLOP/s peak performance
  - 324 TB memory
  - Infiniband FDR10 interconnect
- Large file space for multiple purposes
  - 10 PB file space based on IBM GPFS with 200 GigaByte/s aggregated I/O bandwidth
  - 2 PB NAS storage with 10 GigaByte/s aggregated I/O bandwidth
- No GPGPUs or other accelerator technology
- Innovative technology for energy-effective computing
  - Hot water cooling
  - Energy-aware scheduling
- Most energy-efficient high-end HPC system
  - PUE 1.1 (Green IT)
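The node, CPU and core totals quoted for SuperMUC can be cross-checked with a short calculation. The sketch below assumes 8 cores per Sandy Bridge EP CPU and 10 cores per Westmere EX CPU. These per-CPU core counts are not stated on the slide, but under that assumption the sums reproduce the quoted 9531 nodes, 19476 CPUs and 157464 cores. The class and variable names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    name: str
    nodes: int           # from the slide
    cpus_per_node: int   # from the slide
    cores_per_cpu: int   # ASSUMPTION: not stated on the slide

    @property
    def cpus(self) -> int:
        return self.nodes * self.cpus_per_node

    @property
    def cores(self) -> int:
        return self.cpus * self.cores_per_cpu


NODE_TYPES = [
    NodeType("Sandy Bridge EP", nodes=9324, cpus_per_node=2, cores_per_cpu=8),
    NodeType("Westmere EX", nodes=207, cpus_per_node=4, cores_per_cpu=10),
]

total_nodes = sum(t.nodes for t in NODE_TYPES)
total_cpus = sum(t.cpus for t in NODE_TYPES)
total_cores = sum(t.cores for t in NODE_TYPES)

print(f"nodes={total_nodes} cpus={total_cpus} cores={total_cores}")
```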

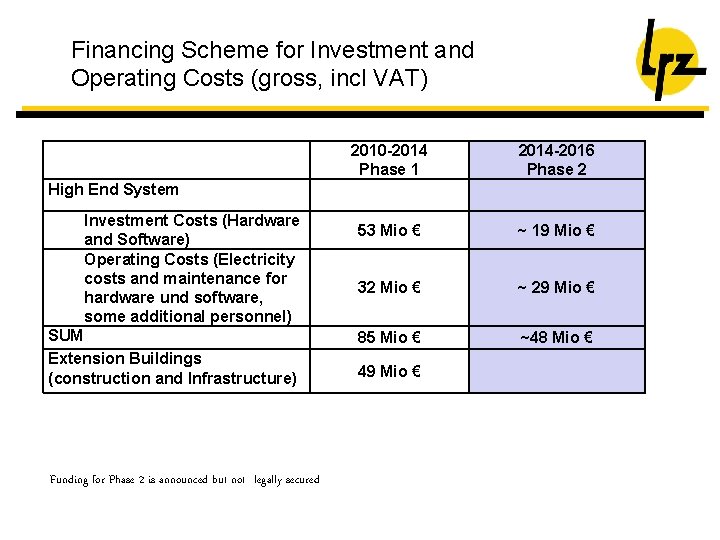

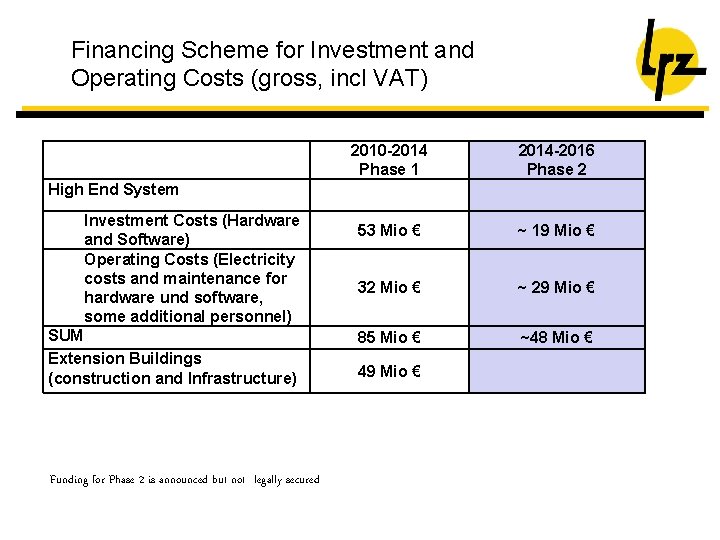

Financing Scheme for Investment and Operating Costs (gross, incl. VAT)

| | 2010-2014 (Phase 1) | 2014-2016 (Phase 2) |
| --- | --- | --- |
| High End System Investment Costs (hardware and software) | 53 million € | ~19 million € |
| Operating Costs (electricity costs and maintenance for hardware and software, some additional personnel) | 32 million € | ~29 million € |
| SUM | 85 million € | ~48 million € |

Extension Buildings (construction and infrastructure): 49 million €

Funding for Phase 2 is announced but not legally secured.

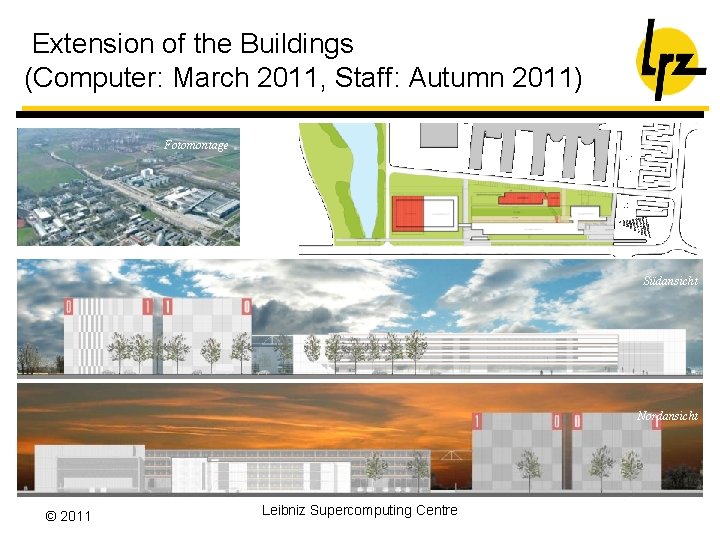

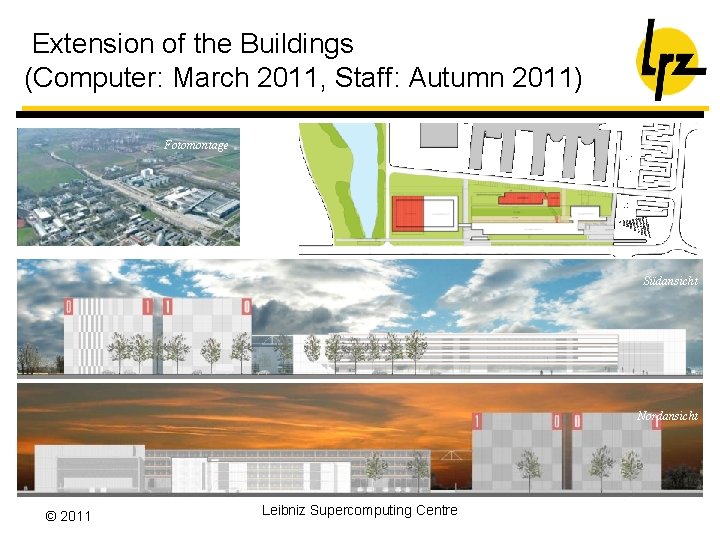

Extension of the Buildings (Computer: March 2011, Staff: Autumn 2011). Photomontage: south view, north view

The LRZ building, summer 2010. Photo: Ernst A. Graf

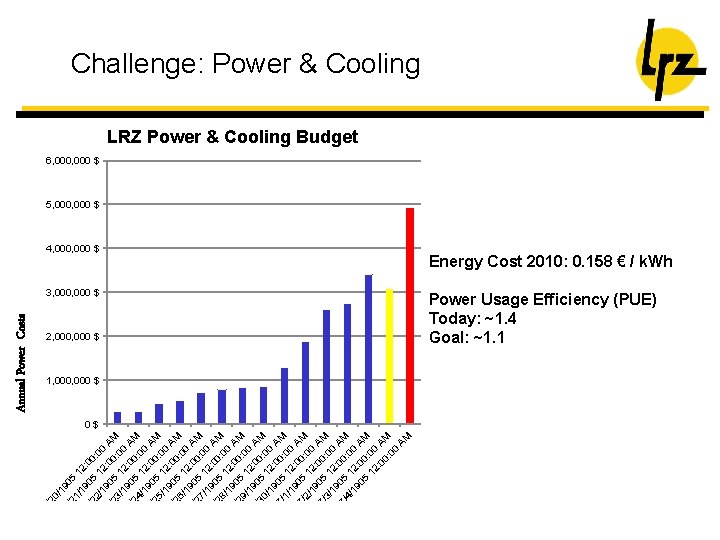

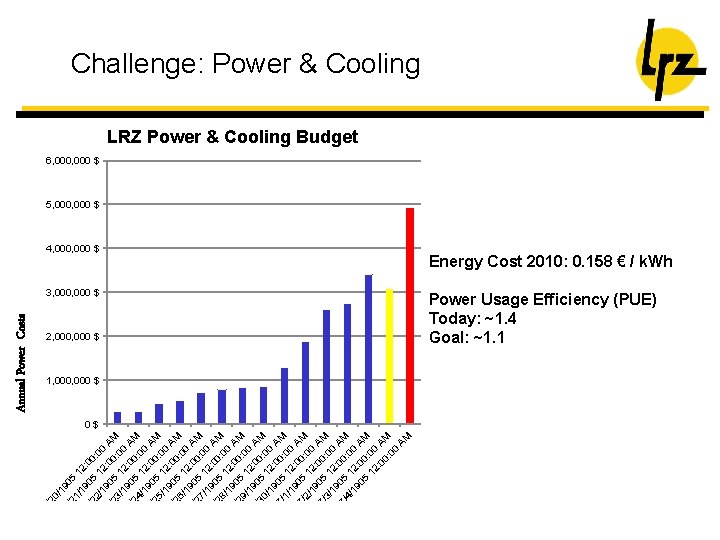

Challenge: Power & Cooling

[Figure: LRZ Power & Cooling Budget, annual power costs]

- Energy cost 2010: 0.158 €/kWh
- Power Usage Effectiveness (PUE): today ~1.4, goal ~1.1
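To make the PUE goal more concrete, the following sketch estimates annual electricity cost as IT load × PUE × hours per year × energy price. The energy price (0.158 €/kWh) and the two PUE values (~1.4 today, ~1.1 as the goal) are taken from the slide. The 1000 kW IT load is a hypothetical value chosen purely for illustration and is not a measured LRZ figure.

```python
HOURS_PER_YEAR = 24 * 365          # 8760 h, ignoring leap years
PRICE_EUR_PER_KWH = 0.158          # energy cost 2010, from the slide
PUE_TODAY = 1.4                    # from the slide
PUE_GOAL = 1.1                     # from the slide
IT_LOAD_KW = 1000.0                # HYPOTHETICAL IT load, for illustration only


def annual_cost_eur(it_load_kw: float, pue: float,
                    price_eur_per_kwh: float = PRICE_EUR_PER_KWH) -> float:
    """Annual electricity cost for a constant IT load at a given PUE.

    PUE is total facility power divided by IT equipment power, so the
    facility draws it_load_kw * pue.
    """
    return it_load_kw * pue * HOURS_PER_YEAR * price_eur_per_kwh


cost_today = annual_cost_eur(IT_LOAD_KW, PUE_TODAY)
cost_goal = annual_cost_eur(IT_LOAD_KW, PUE_GOAL)
relative_saving = 1.0 - cost_goal / cost_today

print(f"Cost at PUE {PUE_TODAY}: {cost_today:,.0f} EUR/year")
print(f"Cost at PUE {PUE_GOAL}: {cost_goal:,.0f} EUR/year")
print(f"Relative saving: {relative_saving:.1%}")
```

The relative saving depends only on the ratio of the two PUE values (1 - 1.1/1.4, about 21%), so it holds for any constant IT load and energy price.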

SuperMUC: IBM Cooling Solution

SuperMUC & Green IT

Outlook

- European Supercomputing Center for Computational Science & Engineering
- Challenges:
  - Scalability Peta/Exascale
  - Energy efficiency
  - User-driven service catalogue
- Integrating Support Technologies:
  - IT Management
  - Grid Computing: integration with EGI
  - Virtualization and Cloud Computing
  - Visualization and Virtual Reality Center

gSLM: EC-funded FP7 Project

- Consortium partners: EMERGENCE TECH LTD.
- Start date: 2010-09-01
- Duration: 24 months
- Total budget: 475,000 EUR
- Web site: www.gslm.eu

Dieter Kranzlmüller: Grids, Clouds, and e-Infrastructures: New challenges for IT management

Observations

- e-Infrastructures provide value to their (scientific and business) users, but are focused on utility more than on warranty today.
- For the success of tomorrow's e-Infrastructures in research (and industry), it is important to adapt ITSM processes and concepts for the new types of e-Infrastructures.
- Today, effective and generally applicable solutions/frameworks in support of service delivery management (SDM) and service level management (SLM) for e-Infrastructures are not available.

gSLM: EC-funded FP7 Project

Core Objectives of gSLM:

- Foster the cross-disciplinary scientific exchange between the Grid and the IT Service Management communities.
- Initiate and hold regular meetings and public workshops on the management of e-Infrastructures (Grids).
- Produce a scientific roadmap as a basis for future developments and research efforts.

gSLM: Vision

The gSLM Roadmap describes …

- … the potential of applying ITSM concepts in Grids.
- … a gap analysis showing where ITSM-related concepts have already been implemented in Grids, and with what degree of success.
- … recommendations on how to establish ITSM in Grids in the future.

gSLM will provide …

- … a freely available (online) tutorial on ITSM for Grids
- … guidance and recommendations on SLM and SDM in Grids in a "good practice" fashion

Outlook

- European Supercomputing Center for Computational Science & Engineering
- Challenges:
  - Scalability Peta/Exascale
  - Energy efficiency
  - User-driven service catalogue
- Integrating Support Technologies:
  - Grid Computing: integration with EGI
  - Virtualization and Cloud Computing
  - Visualization and Virtual Reality Center
  - IT Management

Scientific Computing @ LRZ, Dieter Kranzlmüller, kranzlmueller@lrz.de