Derived Datatypes and Related Features Self Test with solution

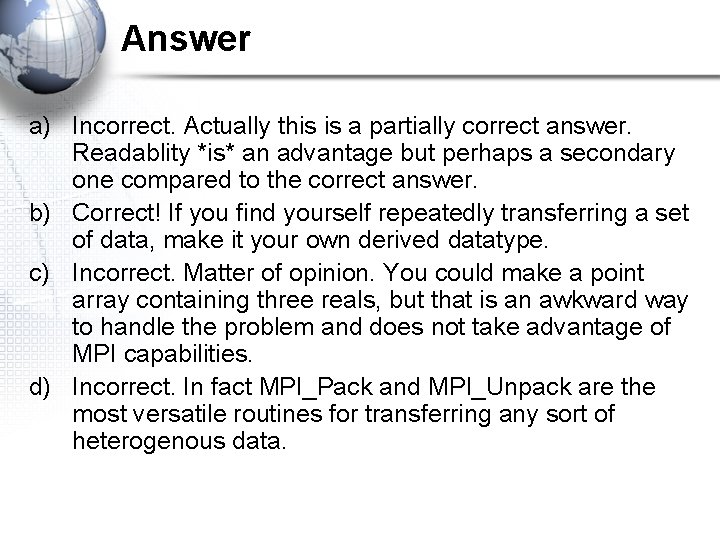

Self Test 1. You are writing a parallel program to be run on 100 processors. Each processor is working with only one section of a skeleton outline of a 3-D model of a house. In the course of constructing the model house, each processor often has to send the three Cartesian coordinates (x, y, z) of nodes that are to be used to make the boundaries between the house sections. Each coordinate will be a real value. Why would it be advantageous for you to define a new data type called Point that contains the three coordinates?

- a) My program will be more readable and self-commenting.
- b) Since many x, y, z values will be used in MPI communication routines, they can be sent as a single Point type entity instead of packing and unpacking three reals each time.
- c) Since all three values are real, there is no purpose in making a derived data type.
- d) It would be impossible to use MPI_Pack and MPI_Unpack to send the three real values.

Answer

- a) Incorrect. Actually, this is a partially correct answer. Readability *is* an advantage, but perhaps a secondary one compared to the correct answer.
- b) Correct! If you find yourself repeatedly transferring a set of data, make it your own derived datatype.
- c) Incorrect. Matter of opinion. You could make a point array containing three reals, but that is an awkward way to handle the problem and does not take advantage of MPI capabilities.
- d) Incorrect. In fact, MPI_Pack and MPI_Unpack are the most versatile routines for transferring any sort of heterogeneous data.

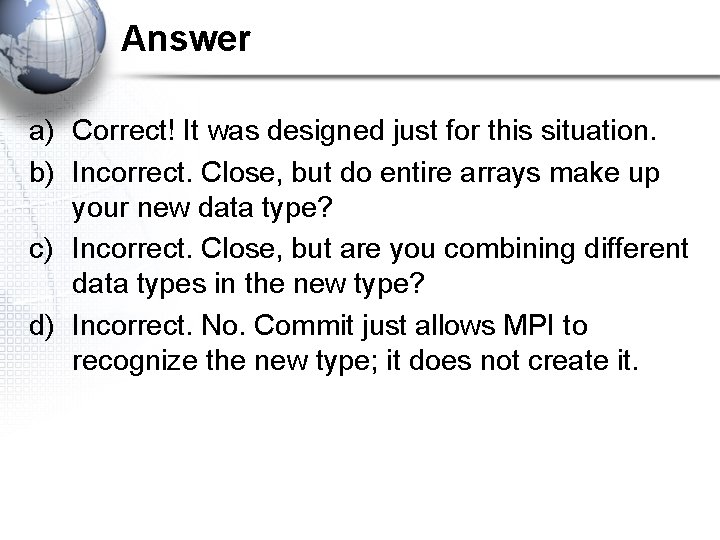

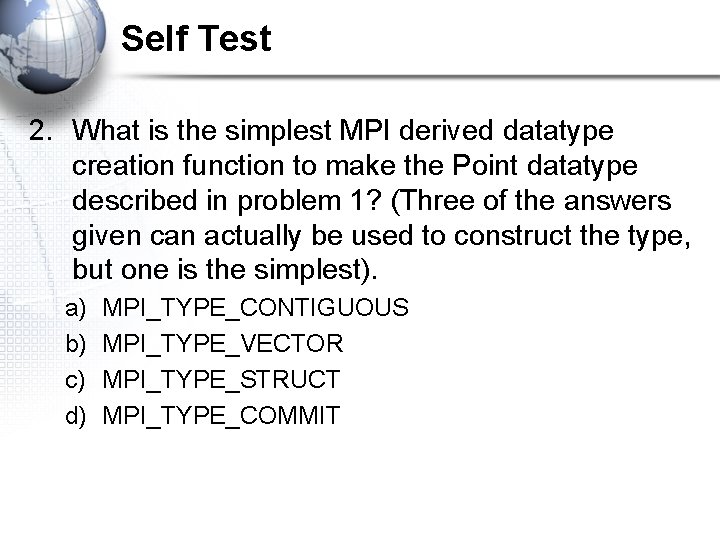

Self Test 2. What is the simplest MPI derived datatype creation function to make the Point datatype described in problem 1? (Three of the answers given can actually be used to construct the type, but one is the simplest.)

- a) MPI_TYPE_CONTIGUOUS
- b) MPI_TYPE_VECTOR
- c) MPI_TYPE_STRUCT
- d) MPI_TYPE_COMMIT

Answer

- a) Correct! It was designed just for this situation.
- b) Incorrect. Close, but do entire arrays make up your new data type?
- c) Incorrect. Close, but are you combining different data types in the new type?
- d) Incorrect. No. Commit just allows MPI to recognize the new type; it does not create it.

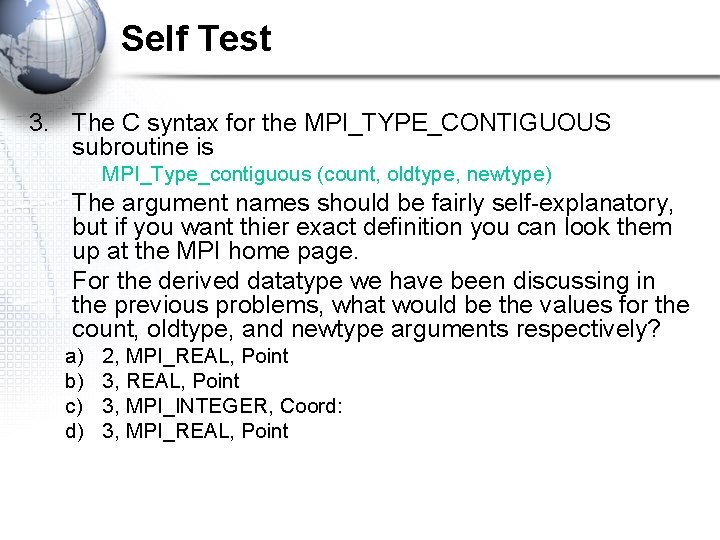

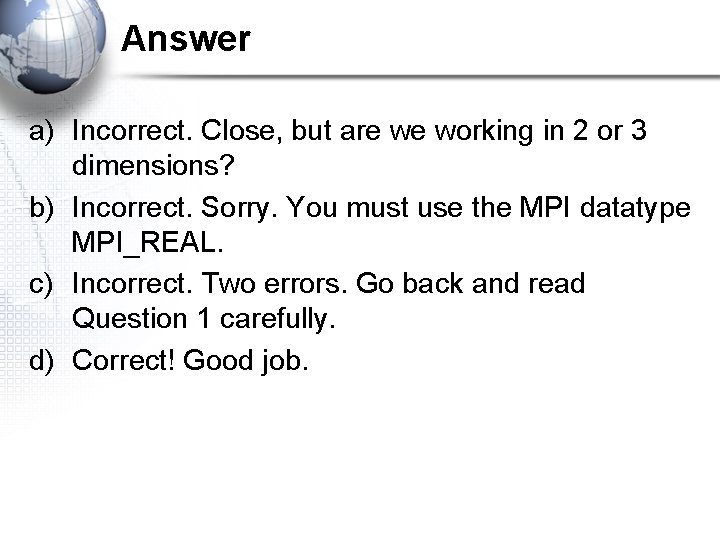

Self Test 3. The C syntax for the MPI_TYPE_CONTIGUOUS subroutine is MPI_Type_contiguous(count, oldtype, newtype). The argument names should be fairly self-explanatory, but if you want their exact definitions you can look them up at the MPI home page. For the derived datatype we have been discussing in the previous problems, what would be the values for the count, oldtype, and newtype arguments, respectively?

- a) 2, MPI_REAL, Point
- b)
- c) 3, MPI_INTEGER, Coord
- d) 3, MPI_REAL, Point

Answer

- a) Incorrect. Close, but are we working in 2 or 3 dimensions?
- b) Incorrect. Sorry. You must use the MPI datatype MPI_REAL.
- c) Incorrect. Two errors. Go back and read Question 1 carefully.
- d) Correct! Good job.

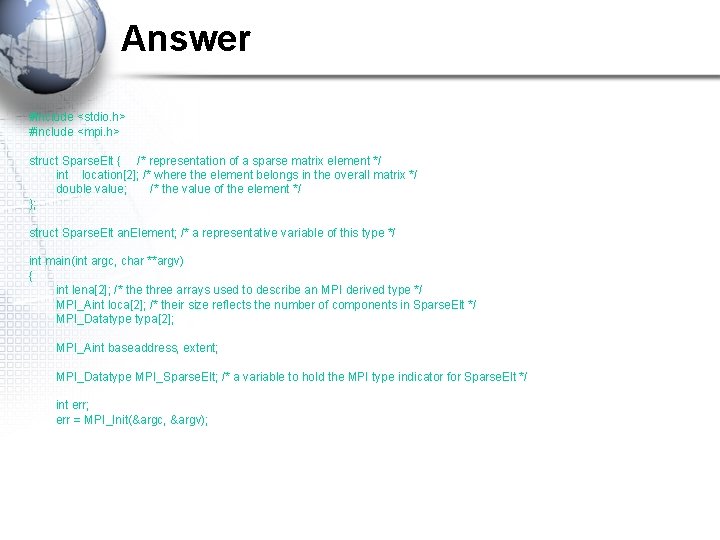

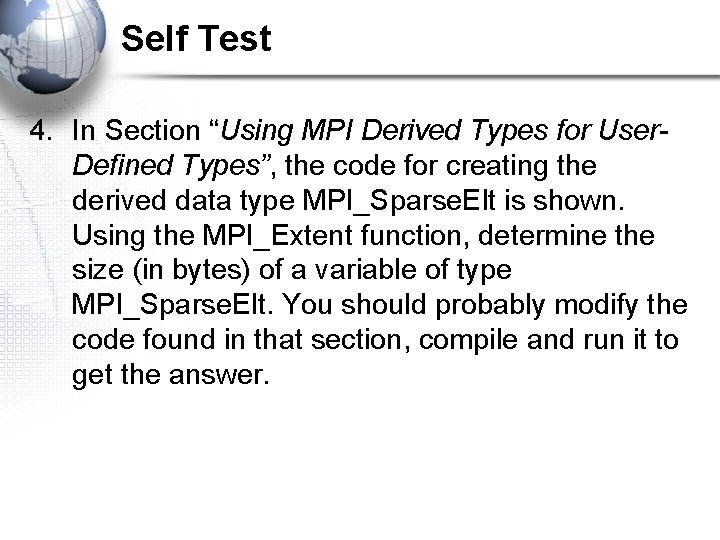

Self Test 4. In Section “Using MPI Derived Types for User-Defined Types”, the code for creating the derived data type MPI_SparseElt is shown. Using the MPI_Type_extent function, determine the size (in bytes) of a variable of type MPI_SparseElt. You should probably modify the code found in that section, then compile and run it to get the answer.

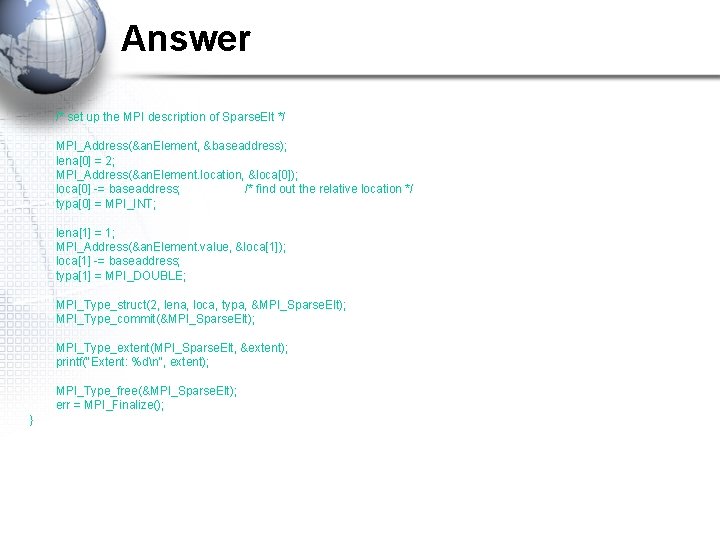

Answer #include <stdio. h> #include <mpi. h> struct Sparse. Elt { /* representation of a sparse matrix element */ int location[2]; /* where the element belongs in the overall matrix */ double value; /* the value of the element */ }; struct Sparse. Elt an. Element; /* a representative variable of this type */ int main(int argc, char **argv) { int lena[2]; /* the three arrays used to describe an MPI derived type */ MPI_Aint loca[2]; /* their size reflects the number of components in Sparse. Elt */ MPI_Datatype typa[2]; MPI_Aint baseaddress, extent; MPI_Datatype MPI_Sparse. Elt; /* a variable to hold the MPI type indicator for Sparse. Elt */ int err; err = MPI_Init(&argc, &argv);

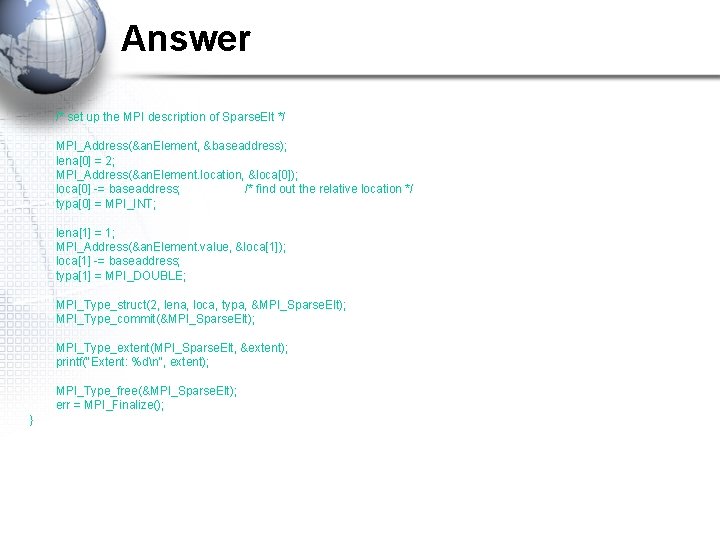

Answer /* set up the MPI description of Sparse. Elt */ MPI_Address(&an. Element, &baseaddress); lena[0] = 2; MPI_Address(&an. Element. location, &loca[0]); loca[0] -= baseaddress; /* find out the relative location */ typa[0] = MPI_INT; lena[1] = 1; MPI_Address(&an. Element. value, &loca[1]); loca[1] -= baseaddress; typa[1] = MPI_DOUBLE; MPI_Type_struct(2, lena, loca, typa, &MPI_Sparse. Elt); MPI_Type_commit(&MPI_Sparse. Elt); MPI_Type_extent(MPI_Sparse. Elt, &extent); printf("Extent: %dn", extent); MPI_Type_free(&MPI_Sparse. Elt); err = MPI_Finalize(); }

The correct value for the extent will depend on the system on which you ran this exercise. On most systems, the extent is 16 bytes (4 for each of the two ints and 8 for the double), but other values are possible. For example, on a T3E, where the ints are 8 bytes each rather than 4, the extent is 24.
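The byte counts quoted above can be cross-checked without MPI by asking the compiler how it lays out the struct. The following is a small sketch, not part of the original answer; the helper name `print_sparse_elt_layout` is hypothetical. On a system with 4-byte ints and 8-byte doubles it should report offsets 0 and 8 and a total of 16 bytes, though the extent that MPI reports is determined by the MPI library and need not match `sizeof` on every platform.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

struct SparseElt {
    int location[2];   /* where the element belongs in the overall matrix */
    double value;      /* the value of the element */
};

/* Hypothetical helper (not from the self test): prints where each member
 * sits inside the struct and returns the struct's total size in bytes. */
size_t print_sparse_elt_layout(void)
{
    size_t loc_off = offsetof(struct SparseElt, location);
    size_t val_off = offsetof(struct SparseElt, value);

    assert(loc_off == 0);   /* C places the first member at offset 0 */

    printf("location: offset %zu, %zu bytes\n", loc_off, 2 * sizeof(int));
    printf("value:    offset %zu, %zu bytes\n", val_off, sizeof(double));
    printf("struct:   %zu bytes in total\n", sizeof(struct SparseElt));

    return sizeof(struct SparseElt);
}
```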

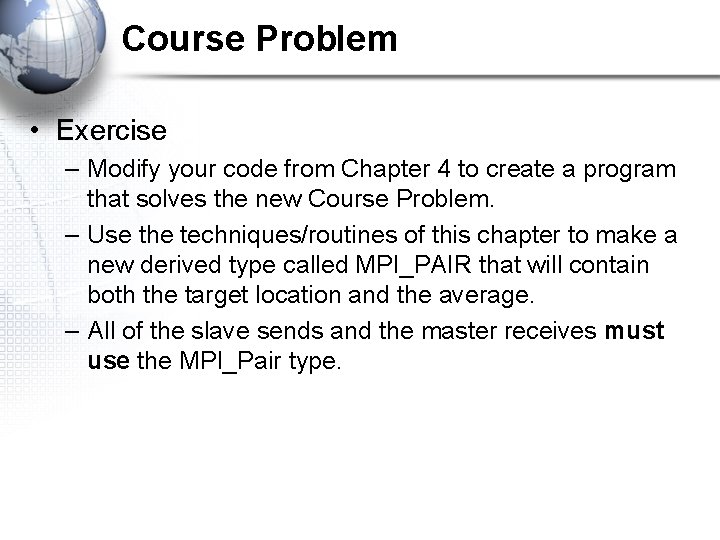

Course Problem

Description

- The new problem still implements a parallel search of an integer array.
- The program should find all occurrences of a certain integer, which will be called the target.
- It should then calculate the average of the target value and its index.
- Both the target location and the average should be written to an output file.
- In addition, the program should read both the target value and all the array elements from an input file.

Exercise

- Modify your code from Chapter 4 to create a program that solves the new Course Problem.
- Use the techniques/routines of this chapter to make a new derived type called MPI_Pair that will contain both the target location and the average.
- All of the slave sends and the master receives must use the MPI_Pair type.
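Before building the MPI type, it can help to write down in plain C what one MPI_Pair message carries. The sketch below is an illustration under stated assumptions, not the course's code: the names `struct Pair` and `make_pair` are hypothetical, the workers are assumed to be ranks 1 and up, each is assumed to search an equally sized chunk of the array, and indices are assumed to be reported 1-based.

```c
#include <assert.h>

/* Hypothetical plain-C view of the data one MPI_Pair message carries. */
struct Pair {
    int   location;   /* 1-based global index where the target was found */
    float average;    /* average of that index and the target value */
};

/* Hypothetical helper: converts a hit at local_index (0-based) on a given
 * worker rank into the pair to report. Assumes workers are ranks 1, 2, ...
 * and each searches `chunk` consecutive elements. */
struct Pair make_pair(int rank, int local_index, int chunk, int target)
{
    struct Pair p;

    assert(rank >= 1 && local_index >= 0 && local_index < chunk);

    p.location = (rank - 1) * chunk + local_index + 1;
    p.average  = (p.location + target) / 2.0f;
    return p;
}
```

For example, under these assumptions a hit at local index 61 on worker 1 with a chunk size of 100 and a target of 10 gives location 62 and average 36.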

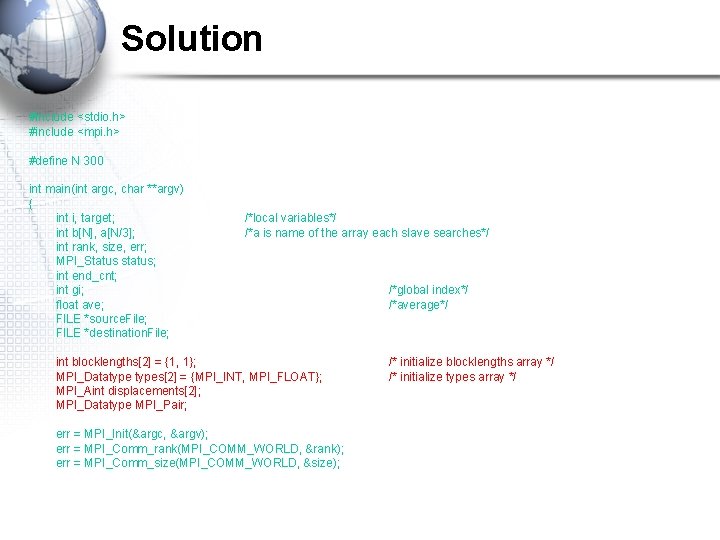

Solution #include <stdio. h> #include <mpi. h> #define N 300 int main(int argc, char **argv) { int i, target; int b[N], a[N/3]; int rank, size, err; MPI_Status status; int end_cnt; int gi; float ave; FILE *source. File; FILE *destination. File; /*local variables*/ /*a is name of the array each slave searches*/ int blocklengths[2] = {1, 1}; MPI_Datatypes[2] = {MPI_INT, MPI_FLOAT}; MPI_Aint displacements[2]; MPI_Datatype MPI_Pair; err = MPI_Init(&argc, &argv); err = MPI_Comm_rank(MPI_COMM_WORLD, &rank); err = MPI_Comm_size(MPI_COMM_WORLD, &size); /*global index*/ /*average*/ /* initialize blocklengths array */ /* initialize types array */

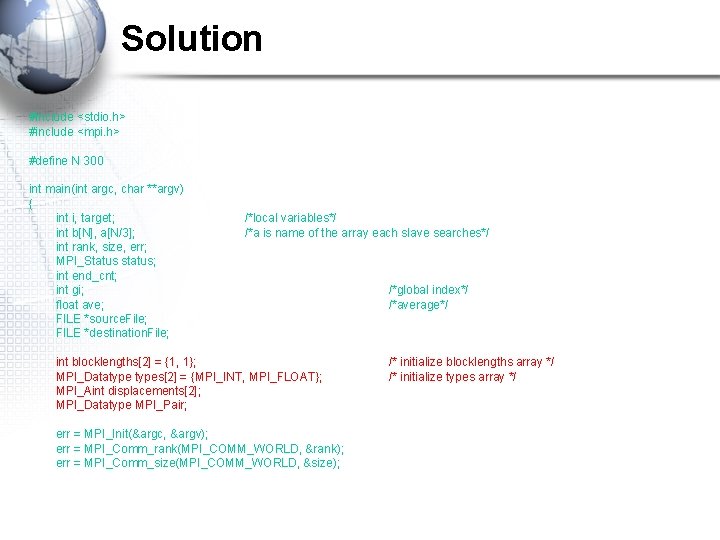

![Solution Initialize displacements array with err MPIAddressgi displacements0 memory addresses Solution /* Initialize displacements array with */ err = MPI_Address(&gi, &displacements[0]); /* memory addresses](https://slidetodoc.com/presentation_image_h2/10bc70d4e7f3e8846917bb927f93b251/image-17.jpg)

Solution /* Initialize displacements array with */ err = MPI_Address(&gi, &displacements[0]); /* memory addresses */ err = MPI_Address(&ave, &displacements[1]); /* This routine creates the new data type MPI_Pair */ err = MPI_Type_struct(2, blocklengths, displacements, types, &MPI_Pair); /* This routine allows it to be used in communication */ err = MPI_Type_commit(&MPI_Pair); if(size != 4) { printf("Error: You must use 4 processes to run this program. n"); return 1; } if (rank == 0) { /* File b. data has the target value on the first line */ /* The remaining 300 lines of b. data have the values for the b array */ source. File = fopen("b. data", "r"); /* File found. data will contain the indices of b where the target is */ destination. File = fopen("found. data", "w"); if(source. File==NULL) { printf("Error: can't access file. c. n"); return 1; } else if(destination. File==NULL) { printf("Error: can't create file for writing. n"); return 1;

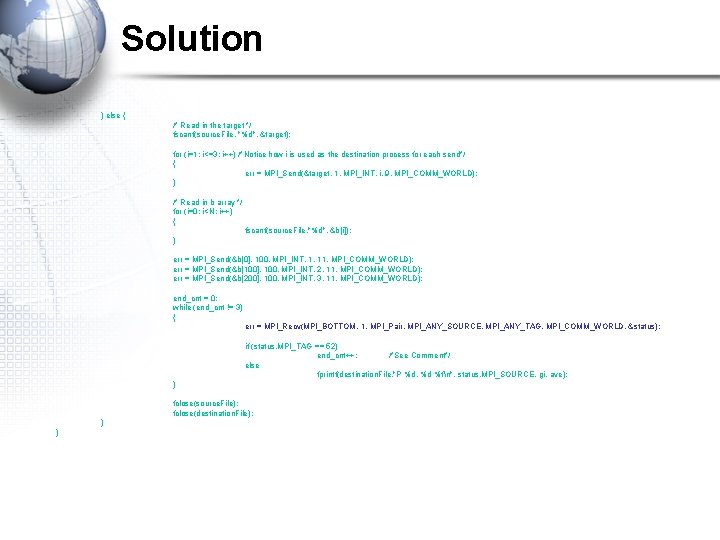

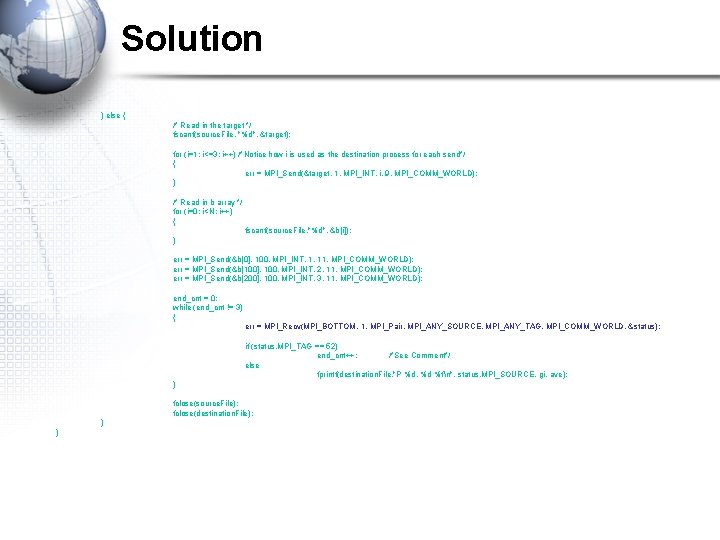

Solution } else { /* Read in the target */ fscanf(source. File, "%d", &target); for (i=1; i<=3; i++) /*Notice how i is used as the destination process for each send*/ { err = MPI_Send(&target, 1, MPI_INT, i, 9, MPI_COMM_WORLD); } /* Read in b array */ for (i=0; i<N; i++) { fscanf(source. File, "%d", &b[i]); } err = MPI_Send(&b[0], 100, MPI_INT, 1, 11, MPI_COMM_WORLD); err = MPI_Send(&b[100], 100, MPI_INT, 2, 11, MPI_COMM_WORLD); err = MPI_Send(&b[200], 100, MPI_INT, 3, 11, MPI_COMM_WORLD); end_cnt = 0; while (end_cnt != 3) { err = MPI_Recv(MPI_BOTTOM, 1, MPI_Pair, MPI_ANY_SOURCE, MPI_ANY_TAG, MPI_COMM_WORLD, &status); if (status. MPI_TAG == 52) end_cnt++; /*See Comment*/ else fprintf(destination. File, "P %d, %d %fn", status. MPI_SOURCE, gi, ave); } fclose(source. File); fclose(destination. File); } }

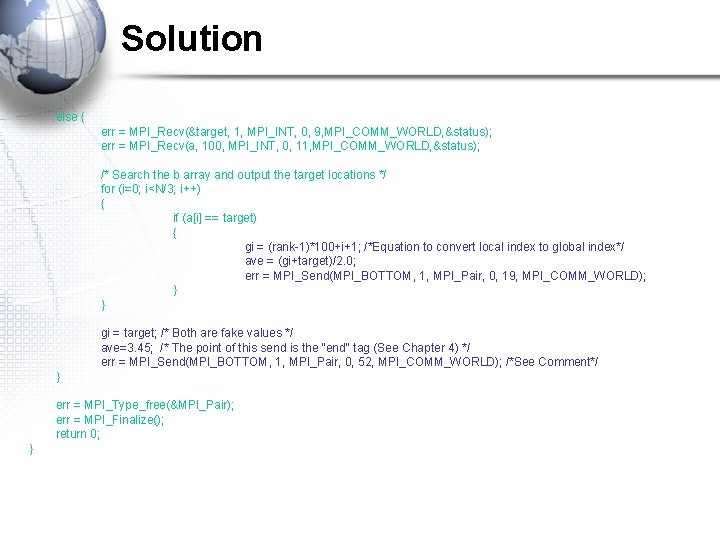

Solution else { err = MPI_Recv(&target, 1, MPI_INT, 0, 9, MPI_COMM_WORLD, &status); err = MPI_Recv(a, 100, MPI_INT, 0, 11, MPI_COMM_WORLD, &status); /* Search the b array and output the target locations */ for (i=0; i<N/3; i++) { if (a[i] == target) { gi = (rank-1)*100+i+1; /*Equation to convert local index to global index*/ ave = (gi+target)/2. 0; err = MPI_Send(MPI_BOTTOM, 1, MPI_Pair, 0, 19, MPI_COMM_WORLD); } } gi = target; /* Both are fake values */ ave=3. 45; /* The point of this send is the "end" tag (See Chapter 4) */ err = MPI_Send(MPI_BOTTOM, 1, MPI_Pair, 0, 52, MPI_COMM_WORLD); /*See Comment*/ } err = MPI_Type_free(&MPI_Pair); err = MPI_Finalize(); return 0; }

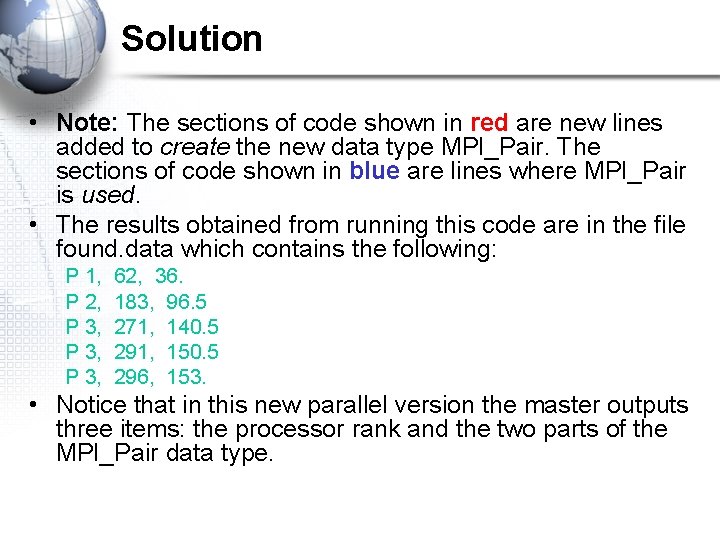

Note: The sections of code shown in red are new lines added to create the new data type MPI_Pair. The sections of code shown in blue are lines where MPI_Pair is used.

The results obtained from running this code are in the file found.data, which contains the following:

    P 1, 62, 36.
    P 2, 183, 96.5
    P 3, 271, 140.5
    P 3, 291, 150.5
    P 3, 296, 153.

Notice that in this new parallel version the master outputs three items: the processor rank and the two parts of the MPI_Pair data type.