DataTAG project: Status & Perspectives, Olivier MARTIN, CERN

- Slides: 28

DataTAG project: Status & Perspectives. Olivier MARTIN, CERN. GNEW 2004 workshop, 15 March 2004, CERN, Geneva.

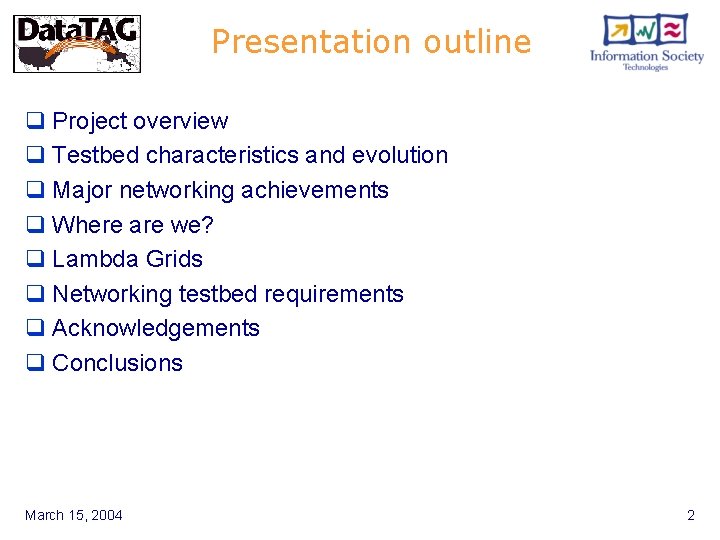

Presentation outline

- Project overview
- Testbed characteristics and evolution
- Major networking achievements
- Where are we?
- Lambda Grids
- Networking testbed requirements
- Acknowledgements
- Conclusions

March 15, 2004; Final DataTAG Review, 24 March 2004

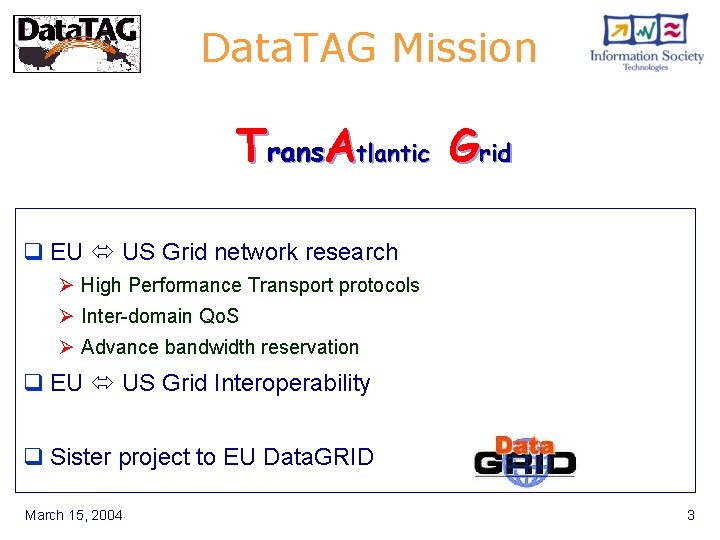

DataTAG Mission: TransAtlantic Grid

- EU-US Grid network research
  - High performance transport protocols
  - Inter-domain QoS
  - Advance bandwidth reservation
- EU-US Grid interoperability
- Sister project to EU DataGrid

Project partners (http://www.datatag.org)

Funding agencies and cooperating networks

EU collaborators: Brunel University, CERN, CLRC, CNAF, NIKHEF, PPARC, UvA, University of Manchester, University of Padova, University of Milano, University of Torino, UCL, DANTE, INFN, INRIA

US collaborators: ANL, Caltech, Fermilab, FSU, Northwestern University, University of Chicago, University of Michigan, UIC, Globus, Indiana, SLAC, Wisconsin, StarLight

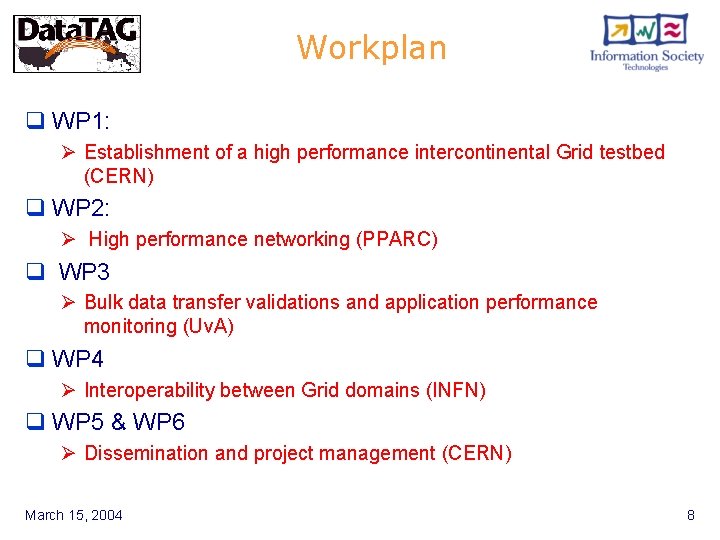

Workplan

- WP1: Establishment of a high performance intercontinental Grid testbed (CERN)
- WP2: High performance networking (PPARC)
- WP3: Bulk data transfer validations and application performance monitoring (UvA)
- WP4: Interoperability between Grid domains (INFN)
- WP5 & WP6: Dissemination and project management (CERN)

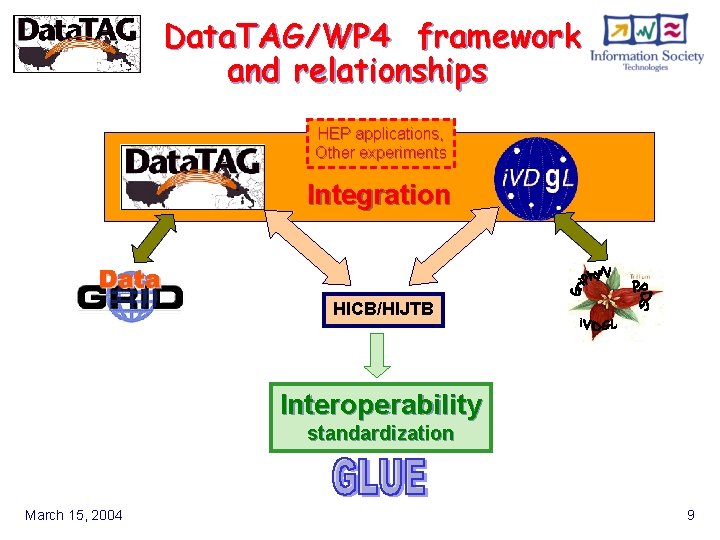

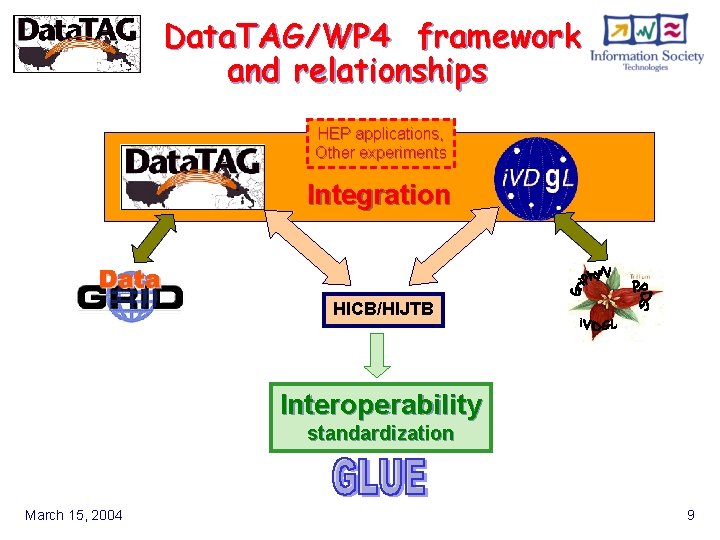

DataTAG/WP4 framework and relationships (diagram: HEP applications and other experiments; integration; HICB/HIJTB; interoperability; standardization)

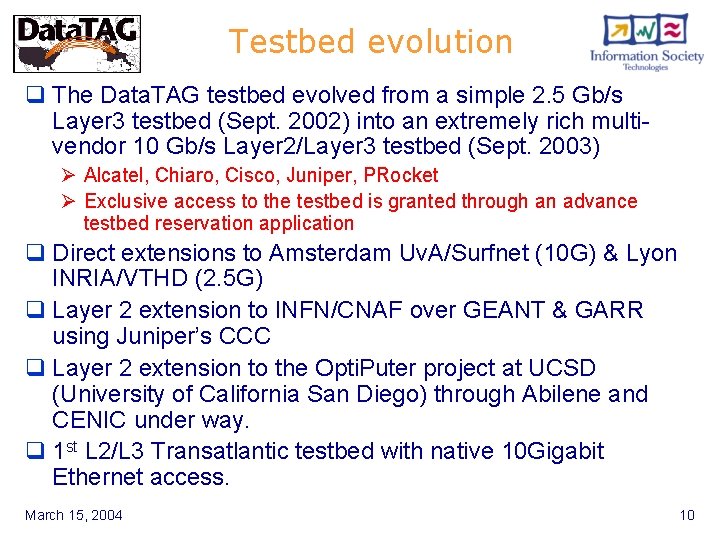

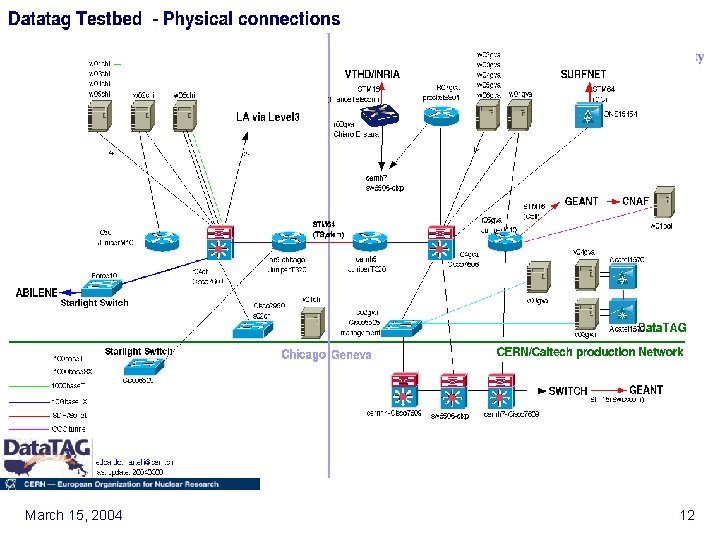

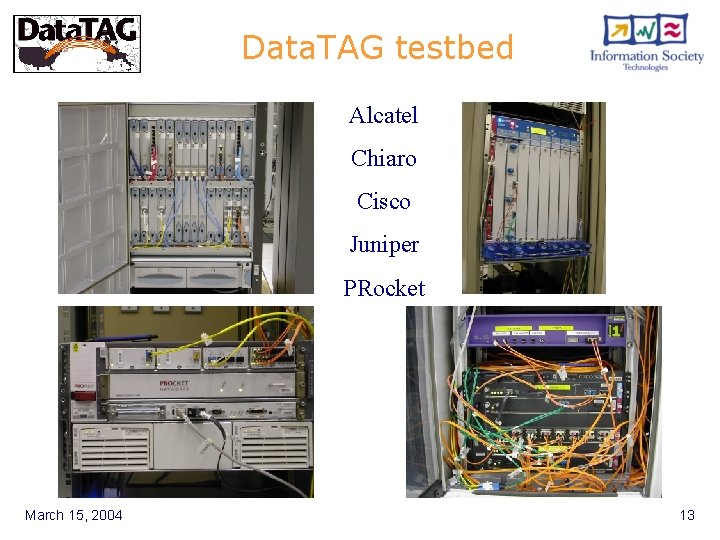

Testbed evolution

- The DataTAG testbed evolved from a simple 2.5 Gb/s Layer 3 testbed (Sept. 2002) into an extremely rich multivendor 10 Gb/s Layer 2/Layer 3 testbed (Sept. 2003)
  - Alcatel, Chiaro, Cisco, Juniper, PRocket
  - Exclusive access to the testbed is granted through an advance testbed reservation application
- Direct extensions to Amsterdam UvA/SURFnet (10G) & Lyon INRIA/VTHD (2.5G)
- Layer 2 extension to INFN/CNAF over GEANT & GARR using Juniper's CCC
- Layer 2 extension to the OptiPuter project at UCSD (University of California San Diego) through Abilene and CENIC under way
- 1st L2/L3 transatlantic testbed with native 10 Gigabit Ethernet access
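Exclusive access through an advance reservation application boils down to one check: refuse any booking whose time slot overlaps an existing one. The sketch below illustrates that check under simple assumptions (half-open time intervals, one team at a time); the class and method names are hypothetical and this is not the DataTAG reservation application.

```python
from datetime import datetime


class ReservationCalendar:
    """Hypothetical exclusive-access calendar: at most one team holds the testbed at a time."""

    def __init__(self):
        self.bookings = []  # (start, end, team) tuples, each a half-open interval [start, end)

    def is_free(self, start, end):
        # Two half-open intervals overlap iff each one starts before the other ends.
        return all(not (start < b_end and b_start < end)
                   for b_start, b_end, _ in self.bookings)

    def reserve(self, start, end, team):
        if end <= start:
            raise ValueError("end must be later than start")
        if not self.is_free(start, end):
            return False  # would overlap an existing exclusive booking
        self.bookings.append((start, end, team))
        return True


cal = ReservationCalendar()
print(cal.reserve(datetime(2003, 9, 15, 8), datetime(2003, 9, 15, 20), "TCP tests"))  # True
print(cal.reserve(datetime(2003, 9, 15, 19), datetime(2003, 9, 16, 2), "QoS tests"))  # False
print(cal.reserve(datetime(2003, 9, 15, 20), datetime(2003, 9, 16, 2), "QoS tests"))  # True
```

Using half-open intervals lets one team's slot end exactly when the next one starts, which is why the third request succeeds.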

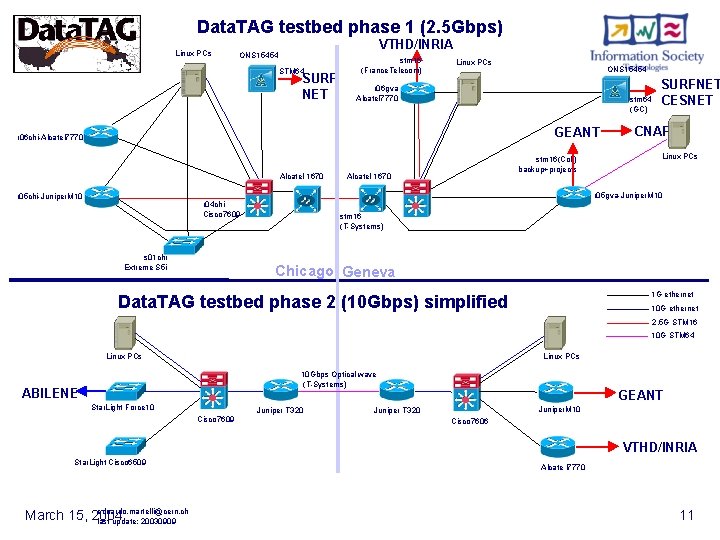

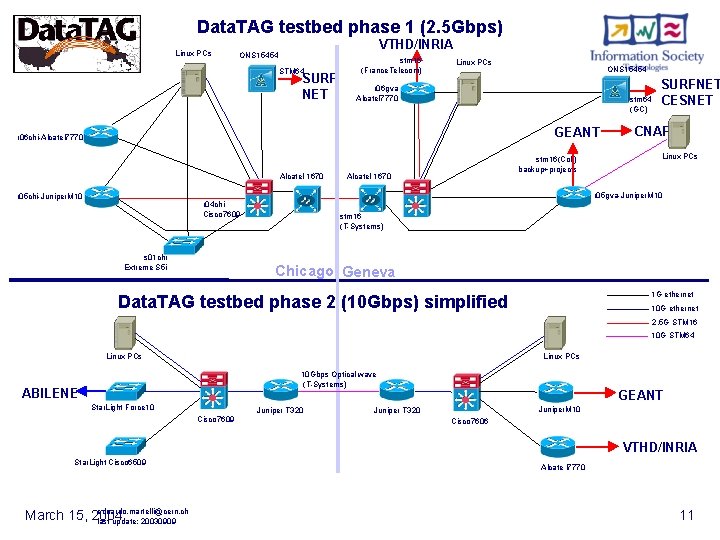

Network diagrams: DataTAG testbed phase 1 (2.5 Gbps: STM-16 circuits between Geneva and Chicago; Alcatel 7770 and 1670, Juniper M10, Cisco 7609, Extreme S5i; Linux PCs; connections to GEANT, SURFnet, CESNET, CNAF and VTHD/INRIA) and DataTAG testbed phase 2 (10 Gbps, simplified: 10 Gbps optical wave from T-Systems; Juniper T320, Cisco 7606, 7609 and 6509, Force10, Alcatel 7770; Linux PCs; connections to Abilene, StarLight, GEANT and VTHD/INRIA). Diagram by edoardo.martelli@cern.ch, last update 2003-09-09.


DataTAG testbed: Alcatel, Chiaro, Cisco, Juniper, PRocket

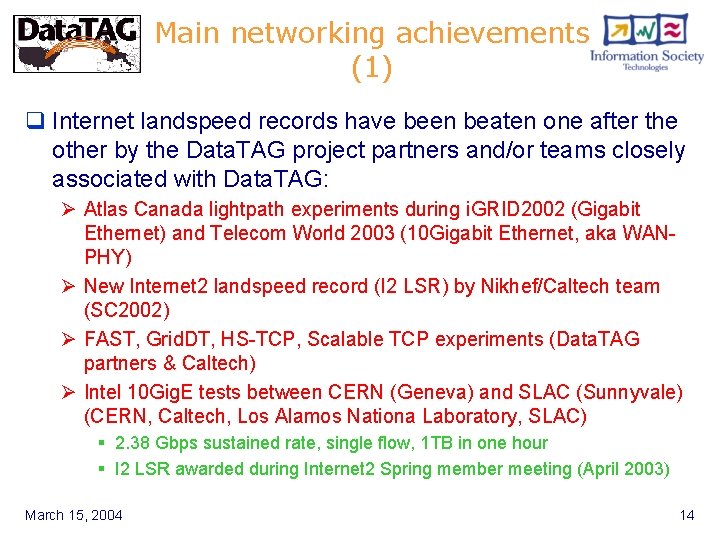

Main networking achievements (1)

- Internet land speed records have been beaten one after the other by DataTAG project partners and/or teams closely associated with DataTAG:
  - ATLAS Canada lightpath experiments during iGRID 2002 (Gigabit Ethernet) and Telecom World 2003 (10 Gigabit Ethernet, aka WAN PHY)
  - New Internet2 Land Speed Record (I2LSR) by the NIKHEF/Caltech team (SC2002)
  - FAST, GridDT, HS-TCP, Scalable TCP experiments (DataTAG partners & Caltech)
  - Intel 10GigE tests between CERN (Geneva) and SLAC (Sunnyvale) (CERN, Caltech, Los Alamos National Laboratory, SLAC)
    - 2.38 Gbps sustained rate, single flow, 1 TB in one hour
    - I2LSR awarded during the Internet2 Spring Member Meeting (April 2003)

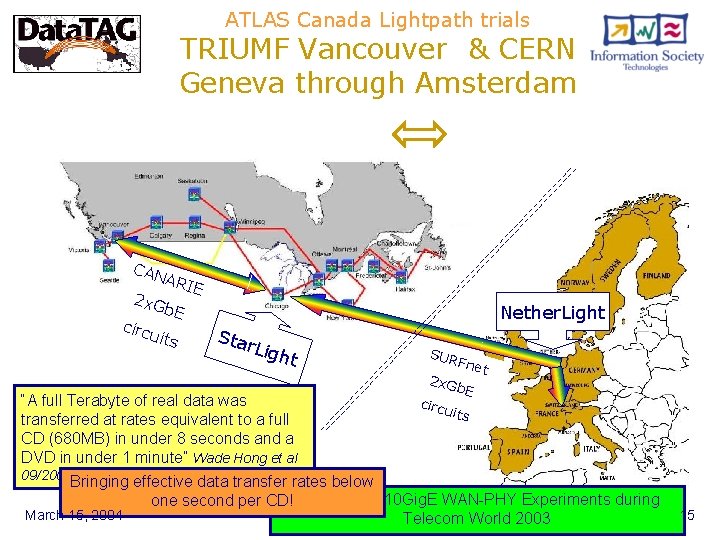

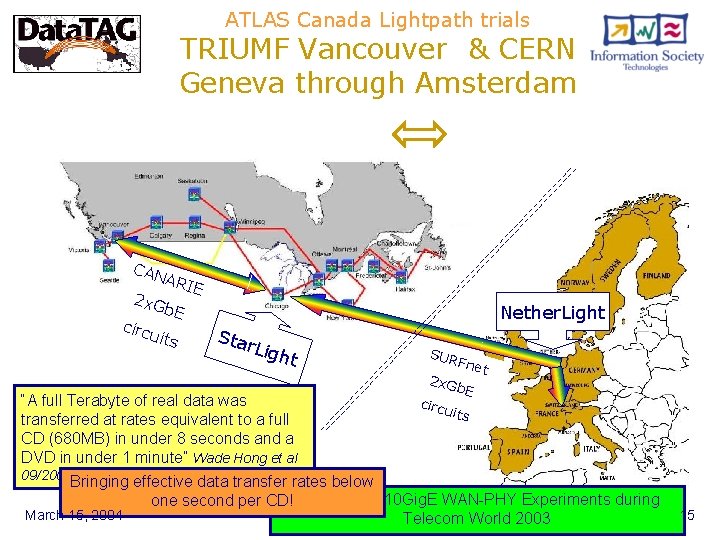

ATLAS Canada Lightpath trials: TRIUMF Vancouver & CERN Geneva through Amsterdam (diagram: 2 x GbE circuits over CANARIE and 2 x GbE circuits over SURFnet, via StarLight and NetherLight)

"A full Terabyte of real data was transferred at rates equivalent to a full CD (680 MB) in under 8 seconds and a DVD in under 1 minute" (Wade Hong et al., 09/2002)

Bringing effective data transfer rates below one second per CD! Subsequent 10GigE WAN PHY experiments during Telecom World 2003.

10GigE Data Transfer Trial: On Feb. 27-28, 2003, a terabyte of data was transferred in 3700 seconds by S. Ravot of Caltech between the Level 3 PoP in Sunnyvale near SLAC and CERN, through the TeraGrid router at StarLight, from memory to memory with a single TCP/IPv4 stream. This corresponds to an average rate of 2.38 Gbps (using large windows and 9 kB "jumbo frames"). It beat the former record by a factor of ~2.5 and used the 2.5 Gb/s link at 99% efficiency. A huge distributed effort: 10-15 highly skilled people monopolized for several weeks!
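The quoted average rate can be checked from the transfer size and duration. Reading "a terabyte" as 2^40 bytes reproduces 2.38 Gbps, whereas 10^12 bytes would give about 2.16 Gbps. The second function estimates how large a TCP window ("large windows") must be, as the bandwidth-delay product; the 180 ms round-trip time is an assumption, not a figure from the slide.

```python
TB_BINARY = 2 ** 40   # bytes; reading "a terabyte" as 2**40 bytes reproduces the quoted rate
TB_DECIMAL = 10 ** 12


def avg_rate_gbps(n_bytes, seconds):
    """Average throughput in Gb/s (10**9 bits per second)."""
    return n_bytes * 8 / seconds / 1e9


def window_bytes(rate_gbps, rtt_s):
    """Bandwidth-delay product: bytes that must be in flight to sustain rate_gbps."""
    return rate_gbps * 1e9 * rtt_s / 8


rate = avg_rate_gbps(TB_BINARY, 3700)
print(f"{rate:.2f} Gb/s")                             # 2.38 Gb/s
print(f"{avg_rate_gbps(TB_DECIMAL, 3700):.2f} Gb/s")  # 2.16 Gb/s

RTT_S = 0.18  # ASSUMED Geneva-Sunnyvale round-trip time; not given on the slide
print(f"TCP window >= {window_bytes(rate, RTT_S) / 1e6:.0f} MB")  # about 53 MB
```

A window of tens of megabytes is far beyond the 64 KB limit of TCP without window scaling, which is why large windows had to be configured explicitly.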

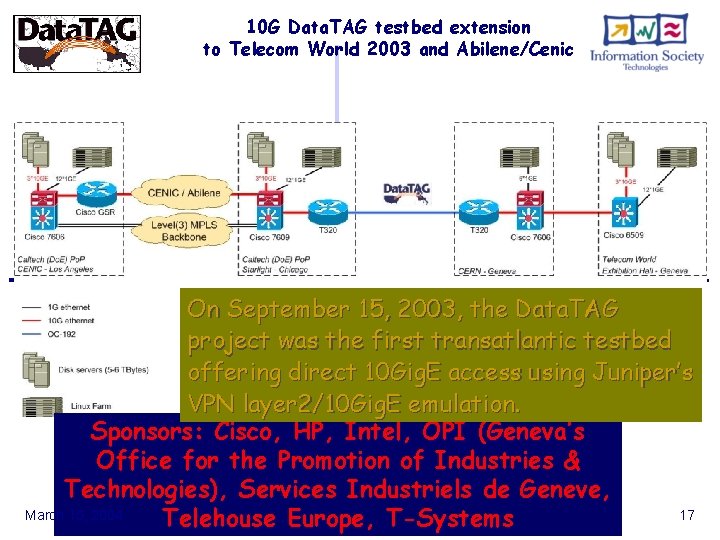

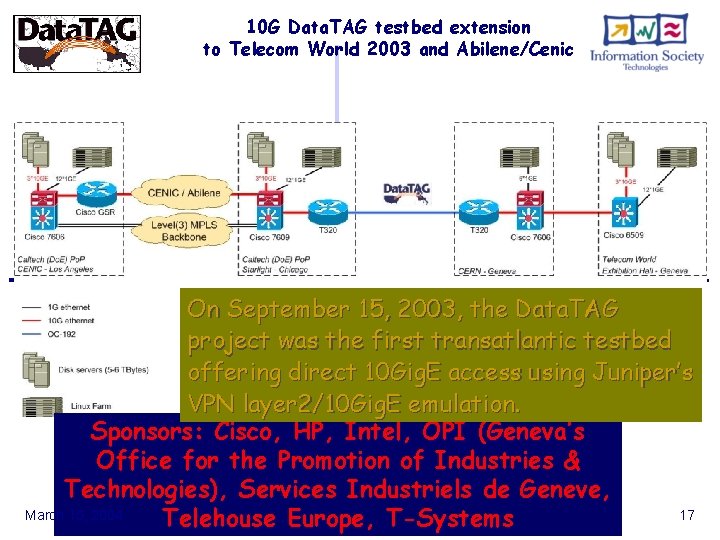

10G DataTAG testbed extension to Telecom World 2003 and Abilene/CENIC: On September 15, 2003, the DataTAG project was the first transatlantic testbed offering direct 10GigE access using Juniper's VPN layer 2/10GigE emulation. Sponsors: Cisco, HP, Intel, OPI (Geneva's Office for the Promotion of Industries & Technologies), Services Industriels de Genève, Telehouse Europe, T-Systems.

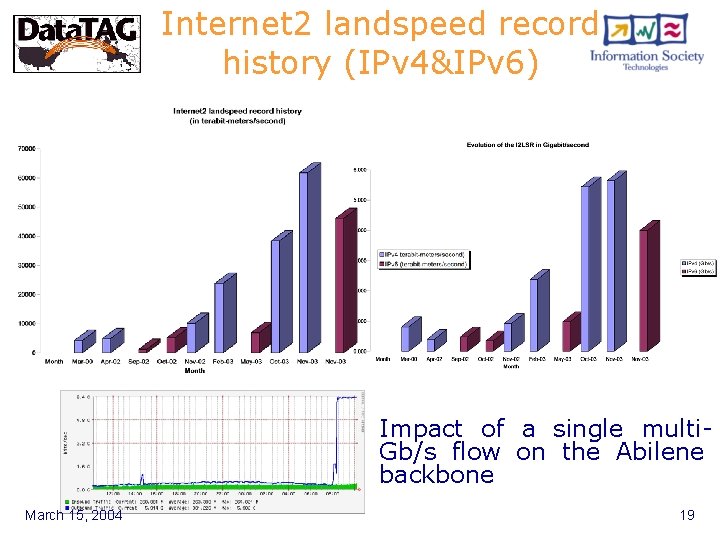

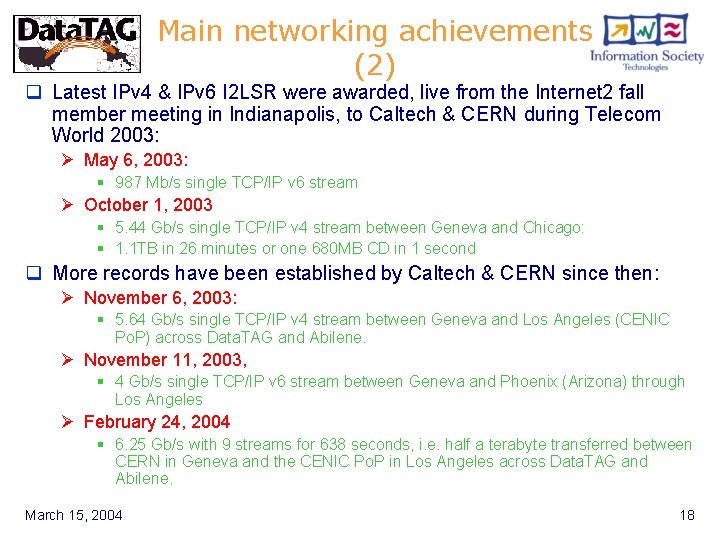

Main networking achievements (2)

- The latest IPv4 & IPv6 I2LSRs were awarded to Caltech & CERN during Telecom World 2003, live from the Internet2 Fall Member Meeting in Indianapolis:
  - May 6, 2003: 987 Mb/s single TCP/IPv6 stream
  - October 1, 2003: 5.44 Gb/s single TCP/IPv4 stream between Geneva and Chicago: 1.1 TB in 26 minutes, or one 680 MB CD in 1 second
- More records have been established by Caltech & CERN since then:
  - November 6, 2003: 5.64 Gb/s single TCP/IPv4 stream between Geneva and Los Angeles (CENIC PoP) across DataTAG and Abilene
  - November 11, 2003: 4 Gb/s single TCP/IPv6 stream between Geneva and Phoenix (Arizona) through Los Angeles
  - February 24, 2004: 6.25 Gb/s with 9 streams for 638 seconds, i.e. half a terabyte transferred between CERN in Geneva and the CENIC PoP in Los Angeles across DataTAG and Abilene
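The figures on this slide can be cross-checked with simple arithmetic, assuming decimal units (1 MB = 10^6 bytes, 1 TB = 10^12 bytes). At 5.44 Gb/s a 680 MB CD takes one second, 26 minutes moves about 1.06 TB (consistent with the quoted 1.1 TB), and 6.25 Gb/s for 638 seconds moves just under half a terabyte.

```python
def volume_tb(rate_gbps, seconds):
    """Decimal terabytes (10**12 bytes) moved at a constant rate."""
    return rate_gbps * 1e9 * seconds / 8 / 1e12


def seconds_for(n_bytes, rate_gbps):
    """Time needed to move n_bytes at rate_gbps."""
    return n_bytes * 8 / (rate_gbps * 1e9)


CD_BYTES = 680e6  # the slides' 680 MB CD

print(f"{seconds_for(CD_BYTES, 5.44):.2f} s per CD")   # 1.00 s per CD
print(f"{volume_tb(5.44, 26 * 60):.2f} TB in 26 min")  # 1.06 TB in 26 min
print(f"{volume_tb(6.25, 638):.2f} TB in 638 s")       # 0.50 TB in 638 s
```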

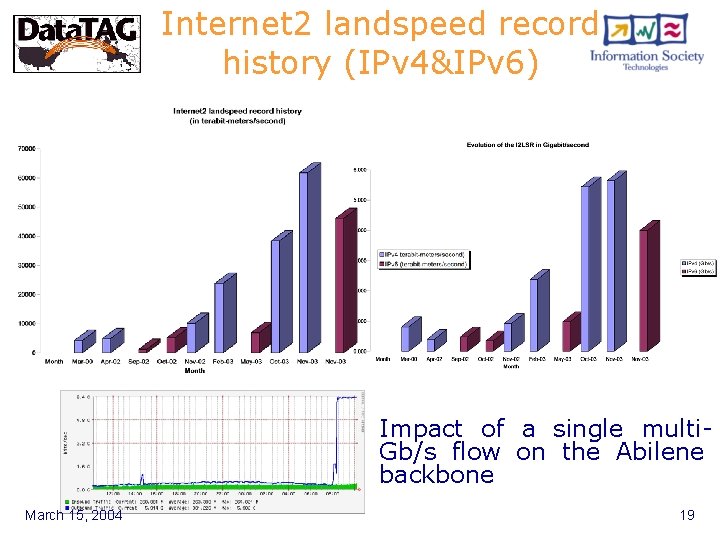

Internet2 land speed record history (IPv4 & IPv6); impact of a single multi-Gb/s flow on the Abilene backbone

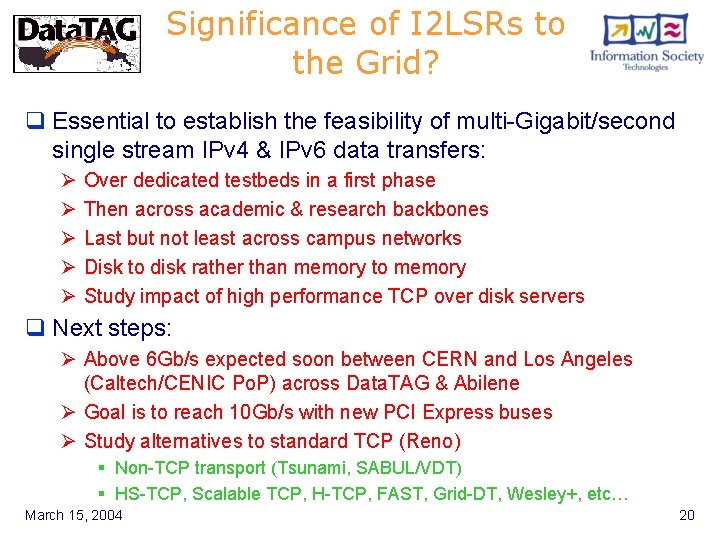

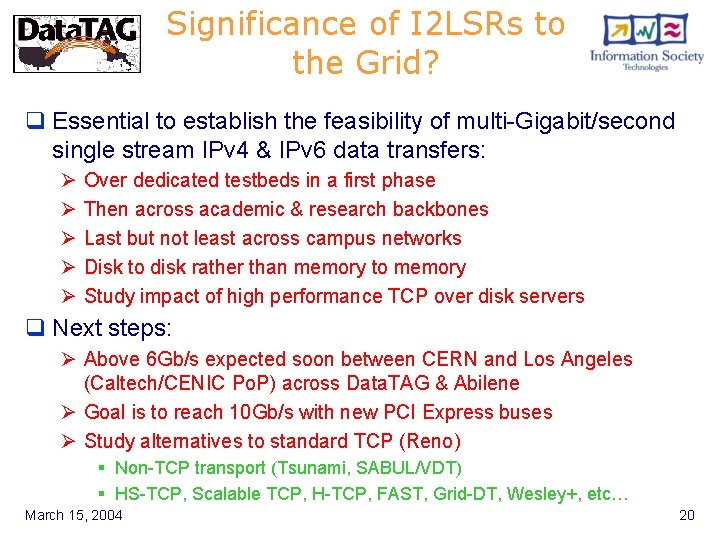

Significance of I2LSRs to the Grid?

- Essential to establish the feasibility of multi-Gigabit/second single stream IPv4 & IPv6 data transfers:
  - Over dedicated testbeds in a first phase
  - Then across academic & research backbones
  - Last but not least, across campus networks
  - Disk to disk rather than memory to memory
  - Study the impact of high performance TCP on disk servers
- Next steps:
  - Above 6 Gb/s expected soon between CERN and Los Angeles (Caltech/CENIC PoP) across DataTAG & Abilene
  - Goal is to reach 10 Gb/s with new PCI Express buses
  - Study alternatives to standard TCP (Reno)
    - Non-TCP transport (Tsunami, SABUL/UDT)
    - HS-TCP, Scalable TCP, H-TCP, FAST, GridDT, Westwood+, etc.
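The case for alternatives to standard TCP (Reno) can be made concrete by comparing how long each algorithm needs to regain full speed after a single packet loss. This is a textbook approximation, not a measurement: Reno halves its window and then grows by one segment per round trip, while Scalable TCP (with its published parameters a = 0.01 and b = 0.125) grows multiplicatively, so its recovery time does not depend on the window size. The 120 ms Geneva-Chicago round-trip time and the 1460-byte segment size are assumptions.

```python
import math


def window_segments(rate_bps, rtt_s, mss_bytes):
    """Congestion window, in segments, needed to sustain rate_bps."""
    return rate_bps * rtt_s / (mss_bytes * 8)


def reno_recovery_s(rate_bps, rtt_s, mss_bytes=1460):
    # Reno halves cwnd on loss, then adds one segment per RTT: W/2 RTTs to regain W.
    w = window_segments(rate_bps, rtt_s, mss_bytes)
    return (w / 2) * rtt_s


def scalable_recovery_s(rtt_s, a=0.01, b=0.125):
    # Scalable TCP: cwnd += a per ACK (roughly x(1 + a) per RTT), cwnd *= (1 - b) on loss.
    # The number of RTTs to climb back is independent of the window size.
    rtts = math.ceil(math.log(1 / (1 - b)) / math.log(1 + a))
    return rtts * rtt_s


RTT_S = 0.12  # ASSUMED Geneva-Chicago round-trip time
print(f"Reno at 10 Gb/s: {reno_recovery_s(10e9, RTT_S) / 3600:.1f} h")
print(f"Scalable TCP:    {scalable_recovery_s(RTT_S):.1f} s")
```

Under these assumptions Reno needs well over an hour to recover from one loss at 10 Gb/s, while Scalable TCP needs 14 round trips, a little under two seconds.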

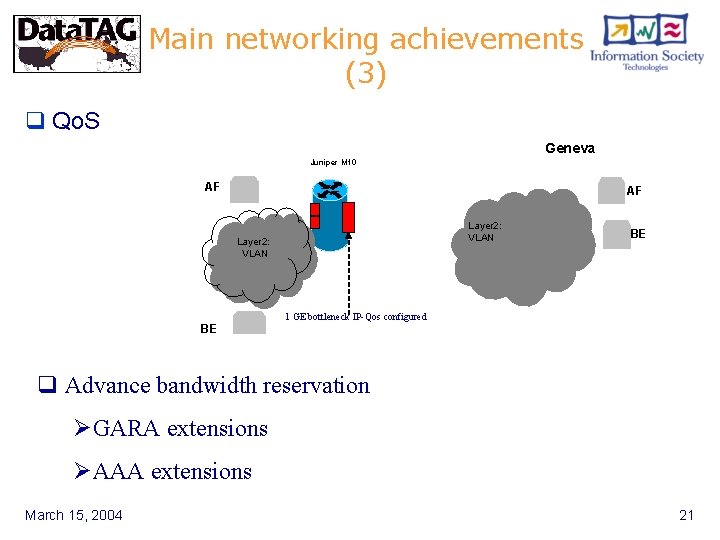

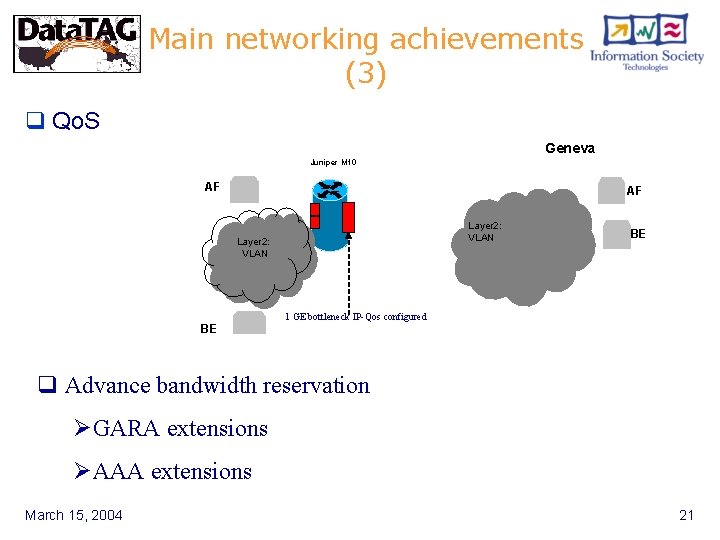

Main networking achievements (3)

- QoS (diagram: Geneva Juniper M10; AF and BE traffic over Layer 2 VLANs; IP QoS configured at a 1 GE bottleneck)
- Advance bandwidth reservation
  - GARA extensions
  - AAA extensions
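At its core, advance bandwidth reservation is an admission-control problem: a request for a bandwidth share over a future time window is accepted only if, together with the reservations already granted, it never exceeds the link capacity. The following is a minimal sketch of that check; the function names and the (start, end, Mb/s) tuple layout are hypothetical and do not reproduce the GARA interface. The 1000 Mb/s capacity mirrors the 1 GE bottleneck above.

```python
def load_at(reservations, t):
    """Total reserved bandwidth (Mb/s) active at time t; intervals are half-open [start, end)."""
    return sum(bw for s, e, bw in reservations if s <= t < e)


def peak_load(reservations, start, end):
    # Load is piecewise constant and only rises where a reservation starts,
    # so the peak over [start, end) occurs at `start` or at a reservation start inside it.
    points = [start] + [s for s, _, _ in reservations if start < s < end]
    return max(load_at(reservations, t) for t in points)


def admit(reservations, request, capacity_mbps):
    """Grant the (start, end, bandwidth) request only if capacity is never exceeded."""
    start, end, bw = request
    if peak_load(reservations, start, end) + bw <= capacity_mbps:
        reservations.append(request)
        return True
    return False


granted = []
print(admit(granted, (0, 10, 600), 1000))   # True
print(admit(granted, (5, 15, 500), 1000))   # False: 600 + 500 > 1000 from t = 5
print(admit(granted, (10, 20, 500), 1000))  # True: the first reservation has ended
```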

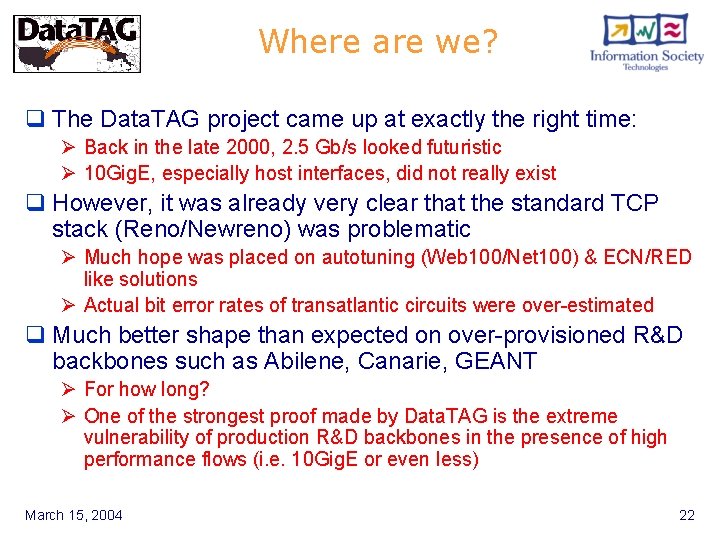

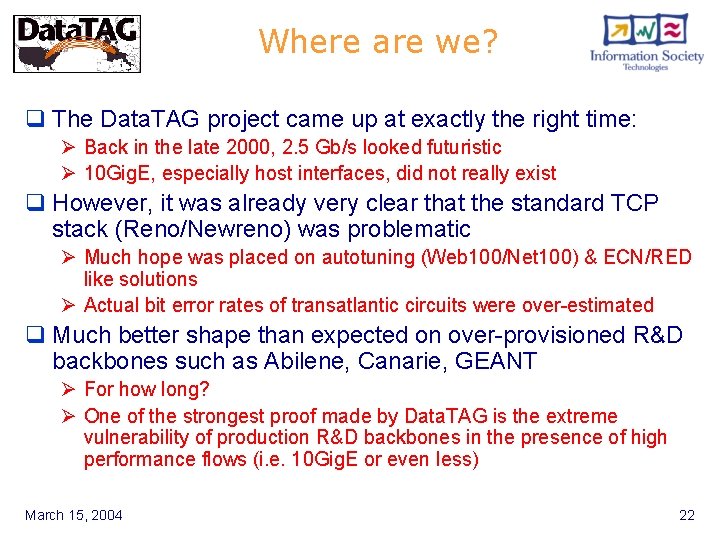

Where are we?

- The DataTAG project came up at exactly the right time:
  - Back in late 2000, 2.5 Gb/s looked futuristic
  - 10GigE, especially host interfaces, did not really exist
- However, it was already very clear that the standard TCP stack (Reno/NewReno) was problematic
  - Much hope was placed on autotuning (Web100/Net100) & ECN/RED-like solutions
  - Actual bit error rates of transatlantic circuits were over-estimated
- Over-provisioned R&D backbones such as Abilene, CANARIE and GEANT are in much better shape than expected
  - For how long?
  - One of the strongest demonstrations made by DataTAG is the extreme vulnerability of production R&D backbones in the presence of high performance flows (i.e. 10GigE or even less)
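Why the standard TCP stack was a concern, and why the real error rates of transatlantic circuits mattered, can be illustrated with the well-known Mathis et al. approximation for Reno throughput, rate ≈ (MSS/RTT) · C/√p with C = √(3/2). Inverting it gives the largest packet loss rate p that still permits a target rate. The 120 ms round-trip time is an assumption; the two segment sizes correspond to standard 1500-byte frames and 9000-byte jumbo frames minus 40 bytes of IPv4 and TCP headers.

```python
import math

C = math.sqrt(3 / 2)  # constant of the Mathis et al. model (periodic loss, no delayed ACKs)


def mathis_rate_bps(mss_bytes, rtt_s, loss):
    """Approximate steady-state Reno throughput for a given loss probability."""
    return (mss_bytes * 8 / rtt_s) * C / math.sqrt(loss)


def max_loss_for(rate_bps, mss_bytes, rtt_s):
    """Invert the model: largest loss probability that still allows rate_bps."""
    return (C * mss_bytes * 8 / (rtt_s * rate_bps)) ** 2


RTT_S = 0.12  # ASSUMED transatlantic round-trip time
for mss in (1460, 8960):  # 1500-byte vs 9000-byte frames, minus 40 bytes of headers
    p = max_loss_for(10e9, mss, RTT_S)
    print(f"MSS {mss}: loss <= {p:.1e}, i.e. at most 1 packet in {1 / p:,.0f}")
```

Because the tolerable loss rate scales with the square of the segment size, jumbo frames relax the requirement by (8960/1460)² ≈ 38 in this model.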

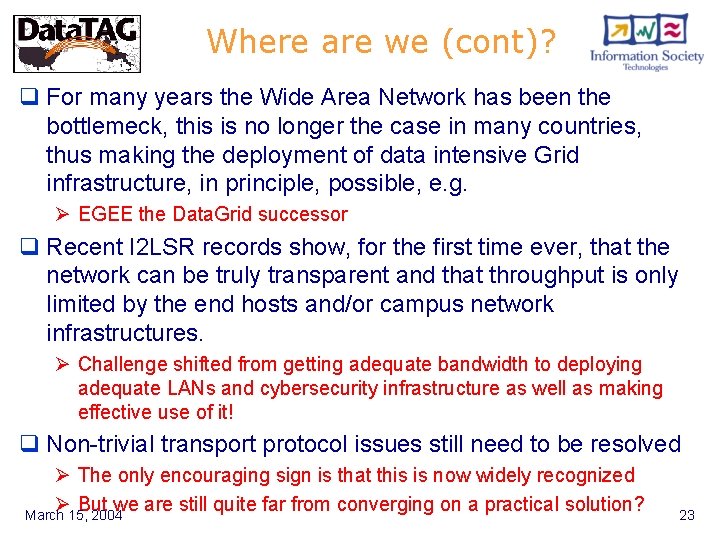

Where are we (cont)?

- For many years the Wide Area Network has been the bottleneck; this is no longer the case in many countries, which in principle makes the deployment of data intensive Grid infrastructure possible, e.g.
  - EGEE, the DataGrid successor
- Recent I2LSR records show, for the first time ever, that the network can be truly transparent and that throughput is only limited by the end hosts and/or campus network infrastructures.
  - The challenge has shifted from getting adequate bandwidth to deploying adequate LANs and cybersecurity infrastructure, as well as making effective use of it!
- Non-trivial transport protocol issues still need to be resolved
  - The only encouraging sign is that this is now widely recognized
  - But we are still quite far from converging on a practical solution

Layer 1/2/3 networking (1)

- Conventional layer 3 technology is no longer fashionable because of:
  - High associated costs, e.g. 200/300 KUSD for a 10G router interface
  - Implied use of shared backbones
- The use of layer 1 or layer 2 technology is very attractive because it helps to solve a number of problems, e.g.
  - 1500-byte Ethernet frame size (layer 1)
  - Protocol transparency (layer 1 & layer 2)
  - Minimum functionality, hence, in theory, much lower costs (layer 1 & 2)

Layer 1/2/3 networking (2)

- « Lambda Grids » are becoming very popular:
  - Pros:
    - Circuit-oriented model like the telephone network, hence no need for complex transport protocols
    - Lower equipment costs (i.e. « in theory » a factor of 2 or 3 per layer)
    - The concept of a dedicated end-to-end lightpath is very elegant
  - Cons:
    - « End to end » is still very loosely defined, i.e. site to site, cluster to cluster or really host to host
    - Higher circuit costs, scalability, additional middleware to deal with circuit setup/teardown, etc.
    - Extending dynamic VLAN functionality to the campus network is a potential nightmare!

« Lambda Grids »: what does it mean?

- Clearly different things to different people, hence the « apparently easy » consensus!
- Conservatively, on-demand « site to site » connectivity
  - Where is the innovation?
  - What does it solve in terms of transport protocols?
  - Where are the savings?
    - Fewer interfaces needed (customer) but more standby/idle circuits needed (provider)
    - Economics from the service provider vs the customer perspective?
      - Traditionally, switched services have been very expensive:
        - Usage vs flat charge
        - Break even, switched vs leased: a few hours/day
        - Why would this change?
    - If there are no savings, why bother?
- More advanced: cluster to cluster
  - Implies even more active circuits in parallel
- Even more advanced: host to host
  - All optical
  - Is it realistic?
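The break-even argument (usage-charged switched circuits versus flat-rate leased circuits) is simple arithmetic. The sketch below uses hypothetical prices, chosen only to illustrate the calculation, and assumes a 30-day month; with these numbers a switched service is only cheaper below four hours of use per day.

```python
def break_even_hours_per_day(leased_per_month, switched_per_hour, days_per_month=30):
    """Daily usage above which a flat-rate leased circuit becomes cheaper than a switched one."""
    return leased_per_month / (switched_per_hour * days_per_month)


def cheaper_option(hours_per_day, leased_per_month, switched_per_hour, days_per_month=30):
    """Compare the monthly cost of usage-based switching against the flat leased charge."""
    switched_cost = hours_per_day * days_per_month * switched_per_hour
    return "switched" if switched_cost < leased_per_month else "leased"


# HYPOTHETICAL prices, for illustration only (not from the slides).
LEASED_PER_MONTH = 30_000
SWITCHED_PER_HOUR = 250

print(break_even_hours_per_day(LEASED_PER_MONTH, SWITCHED_PER_HOUR))  # 4.0
print(cheaper_option(2, LEASED_PER_MONTH, SWITCHED_PER_HOUR))         # switched
print(cheaper_option(8, LEASED_PER_MONTH, SWITCHED_PER_HOUR))         # leased
```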

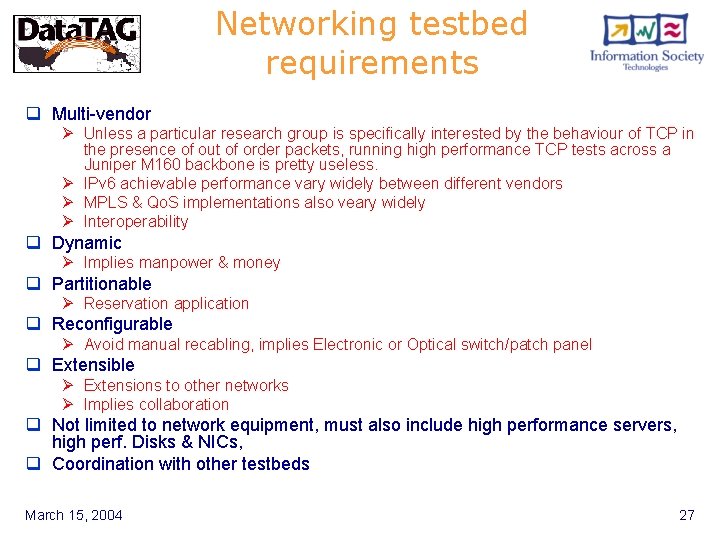

Networking testbed requirements

- Multi-vendor
  - Unless a particular research group is specifically interested in the behaviour of TCP in the presence of out-of-order packets, running high performance TCP tests across a Juniper M160 backbone is pretty useless.
  - Achievable IPv6 performance varies widely between vendors
  - MPLS & QoS implementations also vary widely
  - Interoperability
- Dynamic
  - Implies manpower & money
- Partitionable
  - Reservation application
- Reconfigurable
  - Avoid manual recabling; implies an electronic or optical switch/patch panel
- Extensible
  - Extensions to other networks
  - Implies collaboration
- Not limited to network equipment: must also include high performance servers, high performance disks & NICs
- Coordination with other testbeds
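The remark about out-of-order packets comes from how TCP detects loss: the receiver acknowledges the next segment it expects, and a standard sender treats three duplicate acknowledgements as a loss signal and retransmits (fast retransmit), also halving its congestion window. The toy model below is an illustration only, not a model of any particular router; it shows how a segment that is merely overtaken by three later ones is retransmitted even though nothing was lost.

```python
def cumulative_acks(arrivals):
    """Receiver side: after each arriving segment, ACK the next segment number still missing."""
    received, next_expected, acks = set(), 0, []
    for seg in arrivals:
        received.add(seg)
        while next_expected in received:
            next_expected += 1
        acks.append(next_expected)
    return acks


def fast_retransmits(acks, dupthresh=3):
    """Sender side: segments retransmitted once `dupthresh` duplicate ACKs are seen."""
    retransmitted, last, dups = [], None, 0
    for ack in acks:
        if ack == last:
            dups += 1
            if dups == dupthresh:
                retransmitted.append(ack)
        else:
            last, dups = ack, 0
    return retransmitted


in_order = [0, 1, 2, 3, 4, 5, 6, 7]
reordered = [0, 2, 3, 4, 1, 5, 6, 7]  # segment 1 overtaken by three later segments
print(fast_retransmits(cumulative_acks(in_order)))   # []
print(fast_retransmits(cumulative_acks(reordered)))  # [1], a spurious retransmission
```

A single swap of adjacent segments produces only one duplicate ACK and is harmless; deeper reordering of the kind described above is what triggers spurious retransmissions and window reductions.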

Acknowledgements

- The project would not have accumulated so many successes without the active participation of our North American colleagues, in particular:
  - Caltech/DoE
  - University of Illinois/NSF
  - iVDGL
  - StarLight
  - Internet2/Abilene
  - CANARIE
- and our European sponsors and colleagues as well, in particular:
  - European Union's IST program
  - DANTE/GEANT
  - GARR
  - SURFnet
  - VTHD
- The GNEW 2004 workshop is yet another example of successful collaboration between Europe and the USA