
- Slides: 32

CSE 160 – Lecture 2

Today’s Topics

- Flynn’s Taxonomy
- Bit-Serial, Vector, Pipelined Processors
- Interconnection Networks
  - Topologies
  - Routing
  - Embedding
- Network Bisection

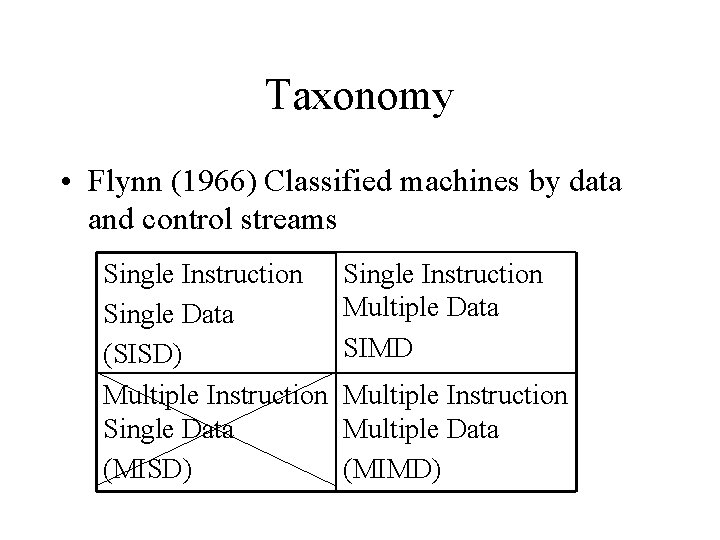

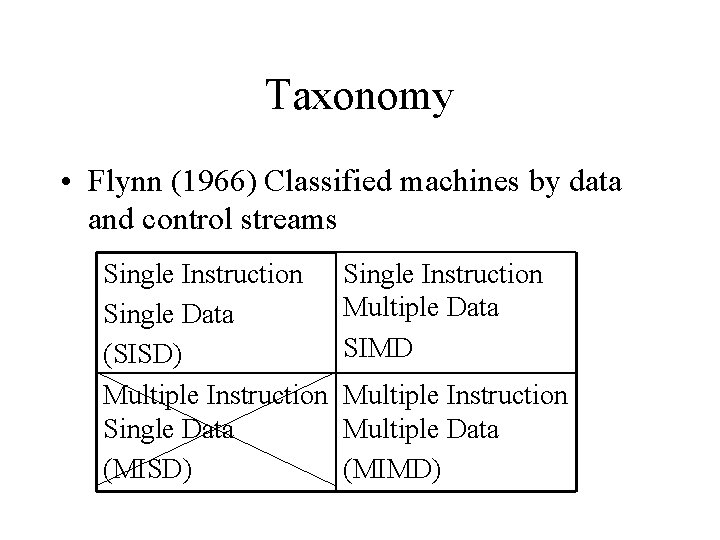

Taxonomy

- Flynn (1966) classified machines by their data and control (instruction) streams
  - Single Instruction Single Data (SISD)
  - Multiple Instruction Single Data (MISD)
  - Single Instruction Multiple Data (SIMD)
  - Multiple Instruction Multiple Data (MIMD)

SIMD

- All processors execute the same program in lockstep
  - The data that each processor sees is different
  - Single control processor
  - Individual processors can be turned on/off at each cycle
- Illiac IV, CM-2, MasPar are some examples
- Silicon Graphics Reality Graphics engine
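To make "lockstep with per-processor on/off" concrete, here is a minimal sketch that models one SIMD cycle as the same operation applied to every element whose enable bit is set. The function name `simd_step` and the 8-processor example are hypothetical illustrations, not the programming model of any particular machine.

```python
from typing import Callable, List

def simd_step(data: List[int], enabled: List[bool], op: Callable[[int], int]) -> List[int]:
    """One lockstep cycle: every enabled processor applies the same op to its own element."""
    return [op(x) if on else x for x, on in zip(data, enabled)]

# Hypothetical example: 8 processors, one element each; compute absolute values.
data = [3, -1, 4, -1, -5, 9, -2, 6]
mask = [x < 0 for x in data]                # broadcast compare sets each processor's enable bit
data = simd_step(data, mask, lambda x: -x)  # only enabled processors negate
print(data)  # [3, 1, 4, 1, 5, 9, 2, 6]
```

Branches in SIMD code are typically handled this way: both sides of an `if` are broadcast, and the mask decides which processors actually act on each side.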

MIMD

- All processors execute their own set of instructions
- Processors operate on separate data streams
- No centralized clock implied
- SP-2, T3E, clusters, Crays, etc.

SPMD/MPMD

- Single/Multiple Program Multiple Data
- In SPMD, all processors run the same program, but they do not necessarily run in lockstep
- Very popular and scalable programming style
- MPMD is similar, except that different processors run different programs
  - The PVM distribution has some simple examples
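A minimal SPMD sketch: every "processor" runs the same function and uses its rank to pick its share of the work. The names `spmd_program` and `run_spmd` are hypothetical, and the ranks run one after another here purely for illustration; a real SPMD code (e.g., under PVM or MPI) would launch separate processes and combine results with messages.

```python
from typing import List

def spmd_program(rank: int, size: int, data: List[int]) -> int:
    """The single program every rank runs; rank selects which block of data to sum."""
    chunk = (len(data) + size - 1) // size
    lo, hi = rank * chunk, min((rank + 1) * chunk, len(data))
    return sum(data[lo:hi])

def run_spmd(size: int, data: List[int]) -> int:
    # Stand-in for launching `size` processes; partial results are combined at the end,
    # standing in for a message-passing reduction.
    partials = [spmd_program(r, size, data) for r in range(size)]
    return sum(partials)

print(run_spmd(4, list(range(1, 101))))  # 5050
```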

Processor Types

- Four types
  - Bit serial
  - Vector
  - Cache-based, pipelined
  - Custom (e.g., Tera MTA or KSR-1)

Bit Serial

- Only seen in SIMD machines like the CM-2 or MasPar
- Each clock cycle, one bit of the data is loaded/written
  - Simplifies the memory system and memory trace count
- Popular for very dense (64K) processor arrays
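As an illustration of what "one bit per clock cycle" means for arithmetic, here is a sketch of bit-serial addition: a 1-bit full adder plus a carry bit processes the operands least significant bit first, so a w-bit add takes w cycles. The function `bit_serial_add` is a hypothetical model, not the CM-2's actual datapath.

```python
from typing import Tuple

def bit_serial_add(a: int, b: int, width: int) -> Tuple[int, int]:
    """Add two unsigned `width`-bit numbers one bit per clock cycle, LSB first.

    Returns (sum modulo 2**width, clock cycles used). Only a 1-bit full adder
    and a 1-bit carry register are used.
    """
    carry = 0
    result = 0
    cycles = 0
    for i in range(width):                         # one iteration = one clock cycle
        abit = (a >> i) & 1                        # load one bit of each operand
        bbit = (b >> i) & 1
        result |= (abit ^ bbit ^ carry) << i       # write one bit of the sum
        carry = (abit & bbit) | (carry & (abit ^ bbit))
        cycles += 1
    return result, cycles

print(bit_serial_add(13, 7, 8))  # (20, 8)
```

The trade-off is visible in the return value: the hardware per processor is tiny, but the cycle count grows with the word width.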

Cache-based, Pipelined

- Garden-variety microprocessors
  - SPARC, Intel x86, MC68xxx, MIPS, …
  - Register-based ALUs and FPUs
  - Registers are of scalar type
- Pipelined execution improves the performance of individual chips
  - Splits basic operations such as addition into stages
  - More stages give more potential speedup, but also more problems with branches and data/control hazards
- Per-processor caches make it challenging to build SMPs (cache coherence issues)
- Now dominates the high-end market
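The "more stages, more speedup" claim can be quantified with the textbook ideal-pipeline model, sketched below under the assumption of no hazards and one cycle per stage: a k-stage pipeline finishes n independent operations in k + n - 1 cycles instead of n·k. Function names are hypothetical.

```python
def pipelined_cycles(n_ops: int, stages: int) -> int:
    """Ideal pipeline (no hazards): `stages` cycles to fill, then one result per cycle."""
    return 0 if n_ops == 0 else stages + n_ops - 1

def unpipelined_cycles(n_ops: int, stages: int) -> int:
    """Each operation passes through all stages before the next one starts."""
    return n_ops * stages

def speedup(n_ops: int, stages: int) -> float:
    return unpipelined_cycles(n_ops, stages) / pipelined_cycles(n_ops, stages)

# Speedup approaches the number of stages as the operation count grows.
for k in (2, 5, 10):
    print(k, "stages:", round(speedup(1000, k), 2))
```

Hazards and branch mispredictions add stall cycles to `pipelined_cycles`, which is why deeper pipelines do not deliver their ideal speedup in practice.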

Vector Processors

- Very specialized (i.e., very expensive) machines
- Registers are true vectors with power-of-2 lengths
- Designed to efficiently perform matrix-style operations
  - Ax = b (b(I) = Σ_J A(I, J)*x(J))
  - Vector registers V1, V2, V3
    - V1 = A(I, *), V2 = x(*)
    - MULV V3, V1, V2, then sum the elements of V3 to get b(I)
- Strip-mining handles vectors longer than the vector registers; "chaining" feeds the result of one vector operation directly into the next
- Cray, Hitachi, SGI (now Cray SV-1) are examples
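The sketch below shows the matrix-vector product from the slide with strip-mining: each row is processed in register-sized pieces of `VL` elements. `VL = 64` and the helper `mulv_sum` (standing for a MULV followed by a sum of the result register) are assumptions for illustration, not a real vector ISA.

```python
from typing import List

VL = 64  # assumed vector register length (a power of 2, as on the slide)

def mulv_sum(v1: List[float], v2: List[float]) -> float:
    """Model of MULV V3, V1, V2 followed by summing V3; inputs fit in one register."""
    assert len(v1) <= VL and len(v2) <= VL
    return sum(a * b for a, b in zip(v1, v2))

def matvec_strip_mined(A: List[List[float]], x: List[float]) -> List[float]:
    """b(I) = sum over J of A(I, J) * x(J), processing VL elements at a time."""
    n = len(x)
    b = []
    for row in A:                        # V1 = A(I, *)
        acc = 0.0
        for start in range(0, n, VL):    # strip-mining loop over register-sized pieces
            acc += mulv_sum(row[start:start + VL], x[start:start + VL])  # V2 = x(*)
        b.append(acc)
    return b

A = [[1.0] * 100, [float(j) for j in range(100)]]
print(matvec_strip_mined(A, [1.0] * 100))  # [100.0, 4950.0]
```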

Some Custom Processors

- Denelcor HEP/Tera MTA
  - Multiple register sets
    - Stack pointer, instruction pointer, frame pointer, etc.
    - Facilitates hardware threads
  - Switches to a different register set each clock cycle
    - Why? A stall to the memory subsystem in one thread can be hidden by running the other threads concurrently
- KSR-1
  - Cache-only memory architecture (COMA)
  - Basically 2 generations behind standard micros
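Why switching register sets every cycle hides memory stalls can be seen in a toy model. The sketch assumes every instruction is a memory reference that blocks its thread for `mem_latency` cycles, and that each cycle the processor issues from the next ready thread in round-robin order. This is a simplification for illustration, not the real HEP/MTA pipeline; `mta_utilization` is a hypothetical name.

```python
def mta_utilization(threads: int, mem_latency: int, cycles: int) -> float:
    """Fraction of cycles in which some thread issues an instruction (toy model)."""
    ready_at = [0] * threads  # cycle at which each thread may issue again
    next_thread = 0
    issued = 0
    for cycle in range(cycles):
        # Round-robin search for a ready register set, starting after the last one used.
        for k in range(threads):
            t = (next_thread + k) % threads
            if ready_at[t] <= cycle:
                issued += 1
                ready_at[t] = cycle + mem_latency  # thread blocks on its memory reference
                next_thread = (t + 1) % threads
                break
    return issued / cycles

for t in (1, 2, 4, 8):
    print(t, "threads:", mta_utilization(t, mem_latency=4, cycles=100))
```

In this model, utilization reaches 100% once the number of threads is at least the memory latency in cycles, which is the intuition behind giving such machines many register sets.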

Going Parallel

- In the late 70s, even the vector “monsters” started to go parallel
- For parallel processing to work, individual processors must synchronize
  - SIMD: synchronize every clock cycle
  - MIMD: explicit synchronization
    - Message passing
    - Semaphores, monitors, fetch-and-increment
- Focus on interconnection networks for the rest of the lecture
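As one example of explicit MIMD synchronization, here is a sketch of fetch-and-increment used to hand out loop iterations to workers (self-scheduling). Hardware provides fetch-and-increment as a single atomic operation; in this sketch a `threading.Lock` stands in for that atomicity, and the class and function names are hypothetical.

```python
import threading
from typing import List

class FetchAndIncrement:
    """Software stand-in for a hardware fetch-and-increment: atomically returns
    the old value and adds one. A lock provides the atomicity here."""

    def __init__(self, start: int = 0) -> None:
        self._value = start
        self._lock = threading.Lock()

    def fetch_and_increment(self) -> int:
        with self._lock:
            old = self._value
            self._value += 1
            return old

def self_scheduled_loop(n_items: int, n_workers: int) -> List[int]:
    """Workers claim iterations via fetch-and-increment; returns how often each ran."""
    counter = FetchAndIncrement()
    runs = [0] * n_items

    def worker() -> None:
        while True:
            i = counter.fetch_and_increment()
            if i >= n_items:
                return
            runs[i] += 1  # each index is claimed by exactly one worker

    threads = [threading.Thread(target=worker) for _ in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return runs

print(self_scheduled_loop(1000, 4) == [1] * 1000)  # True
```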

Characterizing Networks

- Bandwidth
- Device/switch latency
- Switching types
  - Circuit switched (e.g., telephone)
  - Packet switched (e.g., Internet)
    - Store and forward
    - Virtual cut through
    - Wormhole routed
- Topology
  - Number of connections
  - Diameter (how many hops through switches)

Latency

- Latency is the time between starting an action and seeing its first effect
  - Pushing on the gas pedal before the car moves forward
  - The time from entering a line until the cashier starts on your job
  - From the moment the first bit leaves computer A until the first bit arrives at computer B, OR
  - (Message latency) from the moment the first bit leaves computer A until the last bit arrives at computer B
- Startup latency is the time taken to send a zero-length message

Bandwidth

- Bits/second that can travel through a connection
- A really simple model for the time to send a message of N bytes:
  - Time = latency + N/bandwidth (with N and bandwidth in matching units, e.g., bytes and bytes/second)
- Bisection is the minimum number of wires (links) that must be cut to divide a network of machines into two equal halves
- Bisection bandwidth is the total bandwidth through the bisection
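The simple timing model and the bisection bandwidth definition translate directly into code. This sketch assumes all links have the same bandwidth; the latency and bandwidth numbers are hypothetical values chosen only for illustration.

```python
def message_time(n_bytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Simple model from the slide: time = latency + N / bandwidth."""
    return latency_s + n_bytes / bandwidth_bytes_per_s

def bisection_bandwidth(links_cut: int, link_bandwidth: float) -> float:
    """Total bandwidth across the bisection, assuming every link has the same bandwidth."""
    return links_cut * link_bandwidth

# Hypothetical numbers: 10 microsecond latency, 100 MB/s links.
lat, bw = 10e-6, 100e6
print(message_time(0, lat, bw))          # a zero-length message costs just the startup latency
print(message_time(1_000_000, lat, bw))  # a 1 MB message is dominated by N / bandwidth
print(bisection_bandwidth(2, bw))        # e.g., a network whose bisection cuts 2 links
```

For small messages the latency term dominates; for large ones the bandwidth term does, which is why both numbers are quoted for an interconnect.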

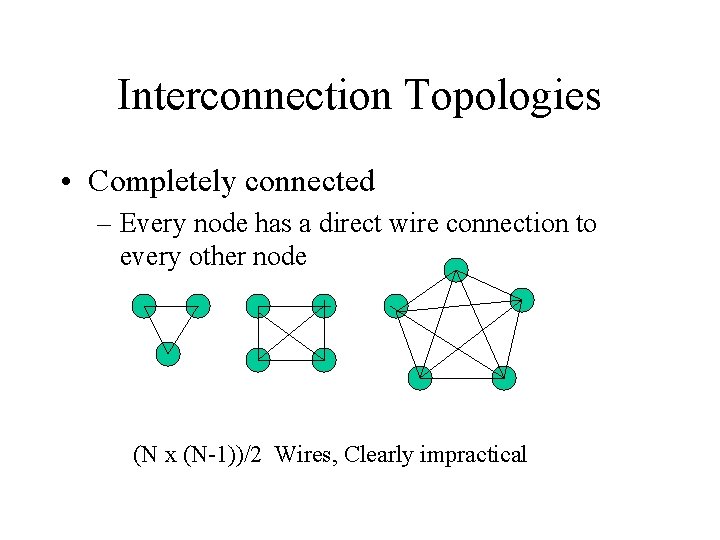

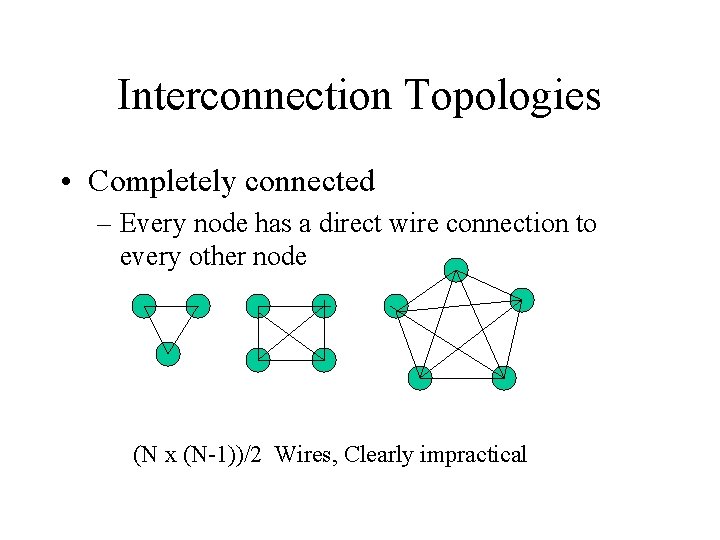

Interconnection Topologies

- Completely connected
  - Every node has a direct wire connection to every other node
  - Requires (N × (N-1))/2 wires for N nodes, which is clearly impractical for large N
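A quick calculation shows why full connectivity does not scale: both the total wire count and the number of ports each node needs grow with N. The function name is hypothetical.

```python
def complete_graph_links(n: int) -> int:
    """Wires needed to connect every pair of n nodes directly: N(N-1)/2."""
    return n * (n - 1) // 2

for n in (8, 64, 1024):
    print(n, "nodes:", complete_graph_links(n), "links,", n - 1, "ports per node")
```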

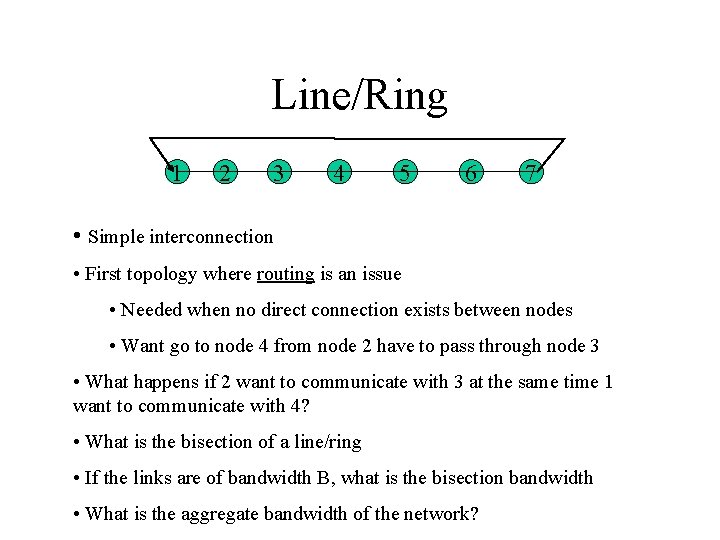

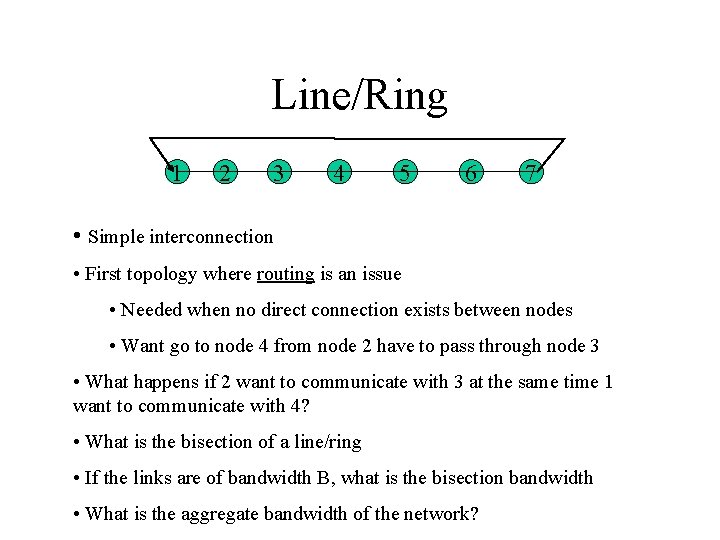

Line/Ring (figure: nodes 1 through 7)

- Simple interconnection
- First topology where routing is an issue
  - Needed when no direct connection exists between nodes
  - To go from node 2 to node 4, a message must pass through node 3
- What happens if node 2 wants to communicate with node 3 at the same time that node 1 wants to communicate with node 4?
- What is the bisection of a line/ring?
- If the links have bandwidth B, what is the bisection bandwidth?
- What is the aggregate bandwidth of the network?
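Routing on a line or ring is simple enough to write out: on a line there is one path, and on a bidirectional ring a message can take the shorter of the two directions. The sketch below (hypothetical function names; ring nodes are labeled 0..n-1 as an assumption) also lists the links each route uses, which makes link contention between simultaneous messages easy to check.

```python
from typing import List, Set, Tuple

def line_route(src: int, dst: int) -> List[int]:
    """Only path on a line: step toward dst one node at a time."""
    step = 1 if dst >= src else -1
    return list(range(src, dst + step, step))

def ring_route(src: int, dst: int, n: int) -> List[int]:
    """Shortest path on a bidirectional ring of n nodes labeled 0..n-1."""
    clockwise = (dst - src) % n
    step = 1 if clockwise <= n - clockwise else -1
    path, node = [src], src
    while node != dst:
        node = (node + step) % n
        path.append(node)
    return path

def links(path: List[int]) -> Set[Tuple[int, int]]:
    """Undirected links traversed by a path."""
    return {(min(a, b), max(a, b)) for a, b in zip(path, path[1:])}

print(line_route(2, 4))                               # [2, 3, 4]
print(ring_route(1, 6, 7))                            # [1, 0, 6]: going "backwards" is shorter
print(links(line_route(2, 3)) & links(line_route(1, 4)))  # {(2, 3)}: both messages need this link
```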

Mesh/Torus

- Generalization of line/ring to multiple dimensions
- More routes between nodes
- What is the bisection of this network?
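For small networks, the bisection can be found by brute force: try every split of the nodes into two equal halves and count the links that cross. The sketch below does this for lines, rings, meshes, and tori built by a hypothetical `grid_edges` helper (node (i, j) gets index i*cols + j). It is exponential in the node count and meant only for checking small examples by hand.

```python
from itertools import combinations
from typing import List, Tuple

def grid_edges(rows: int, cols: int, wrap: bool = False) -> List[Tuple[int, int]]:
    """Links of a rows x cols mesh; wrap=True adds wraparound links (torus/ring)."""
    edges = set()
    for i in range(rows):
        for j in range(cols):
            for ni, nj in ((i + 1, j), (i, j + 1)):
                if wrap:
                    ni, nj = ni % rows, nj % cols
                elif ni >= rows or nj >= cols:
                    continue
                u, v = i * cols + j, ni * cols + nj
                if u != v:
                    edges.add((min(u, v), max(u, v)))
    return sorted(edges)

def bisection_width(n: int, edges: List[Tuple[int, int]]) -> int:
    """Minimum links cut over all splits of n (even) nodes into equal halves."""
    assert n % 2 == 0
    best = len(edges)
    for half in combinations(range(1, n), n // 2 - 1):
        side = {0, *half}  # fix node 0 on one side to skip mirror-image splits
        cut = sum((u in side) != (v in side) for u, v in edges)
        best = min(best, cut)
    return best

print(bisection_width(8, grid_edges(1, 8)))              # line of 8: 1
print(bisection_width(8, grid_edges(1, 8, wrap=True)))   # ring of 8: 2
print(bisection_width(16, grid_edges(4, 4)))             # 4x4 mesh: 4
print(bisection_width(16, grid_edges(4, 4, wrap=True)))  # 4x4 torus: 8
```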

Hop Count

- Networks are measured by diameter
  - The diameter is the number of hops a message must traverse on a shortest path between the two nodes that are furthest apart
  - Line: diameter = N-1
  - 2-D (N×M) mesh: diameter = N+M-2
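The diameter formulas can be checked by running a breadth-first search from every node and taking the largest shortest-path distance. This sketch reuses the same hypothetical `grid_edges` construction (a 1 x N grid is a line, or a ring with `wrap=True`) so that it stands alone.

```python
from collections import deque
from typing import List, Tuple

def grid_edges(rows: int, cols: int, wrap: bool = False) -> List[Tuple[int, int]]:
    """Links of a rows x cols mesh; wrap=True adds wraparound links (torus/ring)."""
    edges = set()
    for i in range(rows):
        for j in range(cols):
            for ni, nj in ((i + 1, j), (i, j + 1)):
                if wrap:
                    ni, nj = ni % rows, nj % cols
                elif ni >= rows or nj >= cols:
                    continue
                u, v = i * cols + j, ni * cols + nj
                if u != v:
                    edges.add((min(u, v), max(u, v)))
    return sorted(edges)

def diameter(n: int, edges: List[Tuple[int, int]]) -> int:
    """Largest shortest-path hop count over all node pairs (BFS from every node)."""
    adj: List[List[int]] = [[] for _ in range(n)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    best = 0
    for s in range(n):
        dist = [-1] * n
        dist[s] = 0
        q = deque([s])
        while q:
            u = q.popleft()
            for w in adj[u]:
                if dist[w] < 0:
                    dist[w] = dist[u] + 1
                    q.append(w)
        best = max(best, max(dist))
    return best

print(diameter(7, grid_edges(1, 7)))             # line, N = 7: N-1 = 6
print(diameter(15, grid_edges(3, 5)))            # 3x5 mesh: N+M-2 = 6
print(diameter(7, grid_edges(1, 7, wrap=True)))  # ring of 7: 3
print(diameter(24, grid_edges(4, 6, wrap=True))) # 4x6 torus: 2 + 3 = 5
```

The wraparound links roughly halve the diameter in each dimension, which is the main payoff of a torus over a mesh.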

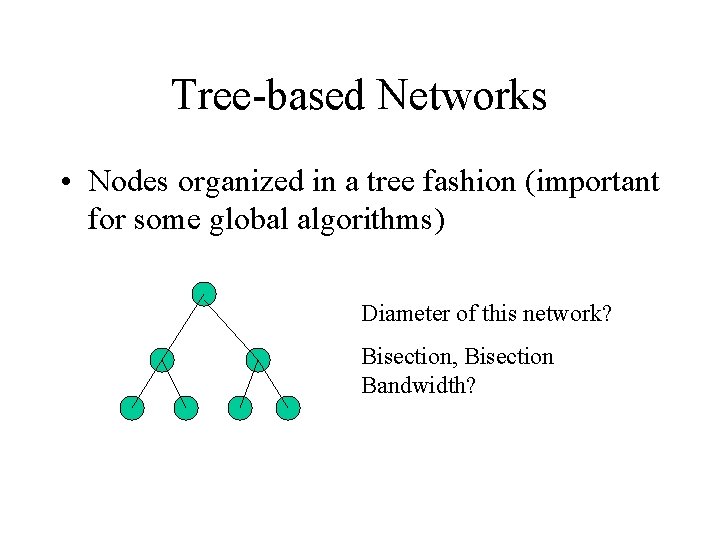

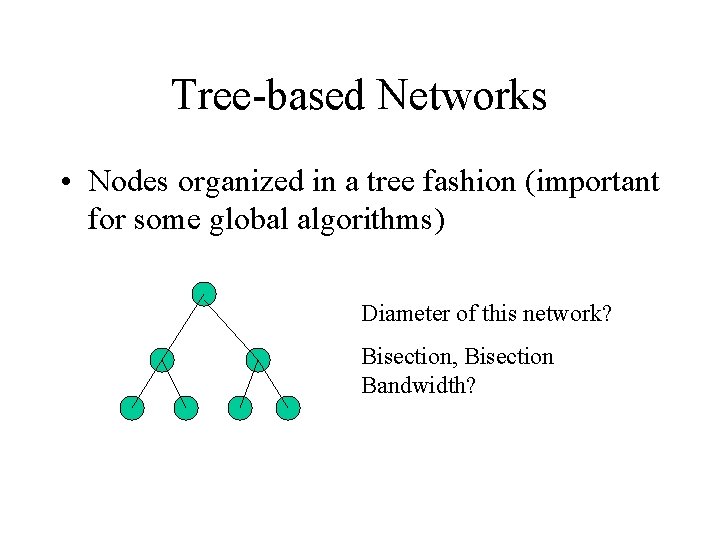

Tree-based Networks

- Nodes organized in a tree fashion (important for some global algorithms)
- Diameter of this network?
- Bisection, bisection bandwidth?
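One way to work out the tree questions is to build a complete binary tree and measure it. The sketch assumes heap numbering (children of node i are 2i+1 and 2i+2) and uses the double-BFS trick, which is valid for trees: the node farthest from any start node is one end of a longest path. For the bisection, note that removing one of the root's two links already splits, for example, 15 nodes into 7 and 8, so the tree's bisection is a single link. Function names are hypothetical.

```python
from collections import deque
from typing import List, Tuple

def binary_tree_edges(levels: int) -> Tuple[int, List[Tuple[int, int]]]:
    """Complete binary tree with `levels` levels, heap-numbered from the root 0."""
    n = 2 ** levels - 1
    return n, [((i - 1) // 2, i) for i in range(1, n)]

def farthest(n: int, edges: List[Tuple[int, int]], start: int) -> Tuple[int, int]:
    """BFS from start; returns (farthest node, its hop distance)."""
    adj: List[List[int]] = [[] for _ in range(n)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    dist = [-1] * n
    dist[start] = 0
    q = deque([start])
    while q:
        u = q.popleft()
        for w in adj[u]:
            if dist[w] < 0:
                dist[w] = dist[u] + 1
                q.append(w)
    far = max(range(n), key=lambda k: dist[k])
    return far, dist[far]

def tree_diameter(n: int, edges: List[Tuple[int, int]]) -> int:
    a, _ = farthest(n, edges, 0)  # one end of a longest path
    _, d = farthest(n, edges, a)  # distance to the other end
    return d

print(tree_diameter(*binary_tree_edges(4)))  # 15 nodes: leaf to leaf through the root, 6 hops
```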

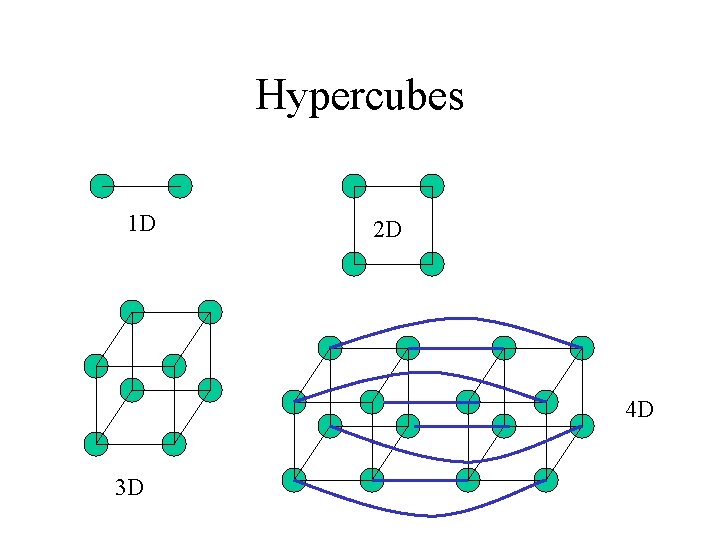

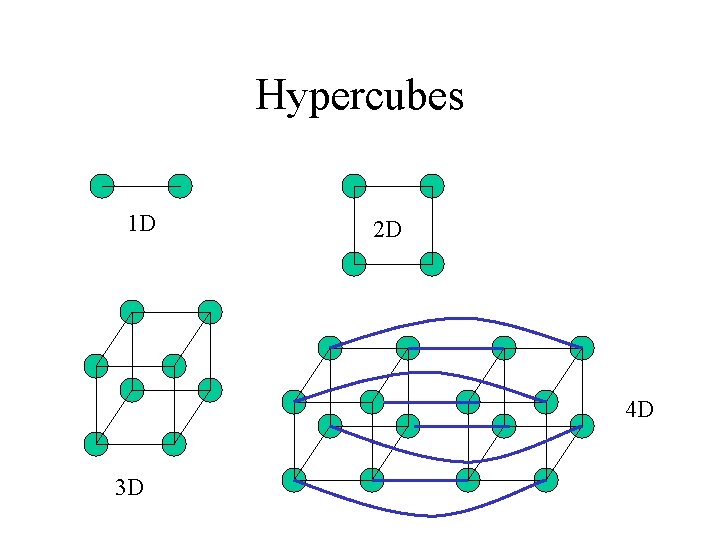

Hypercubes (figure: 1-D, 2-D, 3-D, and 4-D hypercubes)

Hypercubes 2

- A dimension-N hypercube is constructed by connecting the corresponding “corners” of two dimension-(N-1) hypercubes
- Relatively low wire count to build large networks
- Multiple routes from any source to any destination
- Exercise for the reader: what is the diameter of a K-dimensional hypercube?
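The recursive construction can be written directly: take two copies of the (d-1)-cube, relabel the second copy by adding 2^(d-1), and link each corner to its counterpart. With that labeling, every link joins two nodes whose labels differ in exactly one bit, and a d-cube has 2^d nodes and d·2^(d-1) links. The function `hypercube` is a hypothetical sketch.

```python
from typing import List, Tuple

def hypercube(d: int) -> Tuple[int, List[Tuple[int, int]]]:
    """(number of nodes, links) of a d-dimensional hypercube, built recursively."""
    if d == 0:
        return 1, []                                     # a single node
    n, edges = hypercube(d - 1)
    upper = [(u + n, v + n) for u, v in edges]           # second copy, labels offset by 2**(d-1)
    corners = [(i, i + n) for i in range(n)]             # connect matching corners
    return 2 * n, edges + upper + corners

for d in range(1, 5):
    n, e = hypercube(d)
    print(d, "D:", n, "nodes,", len(e), "links")
```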

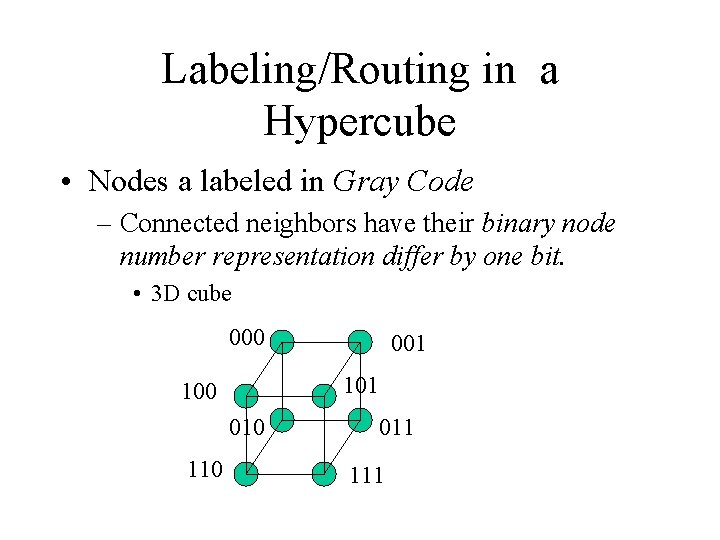

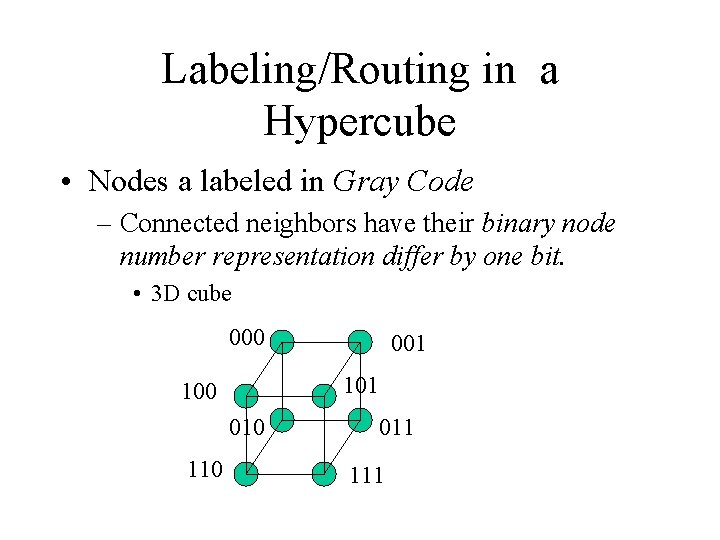

Labeling/Routing in a Hypercube

- Nodes are labeled in Gray code
  - Connected neighbors have binary node numbers that differ in exactly one bit
- 3-D cube (figure): nodes labeled 000, 101, 100, 010, 110, 001, 011, 111
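Because neighbors differ in exactly one bit, a node's neighbors can be computed rather than stored: flip each of the d bits in turn with an exclusive-or. A minimal sketch (hypothetical function name):

```python
from typing import List

def hypercube_neighbors(v: int, d: int) -> List[int]:
    """Neighbors of node v in a d-dimensional hypercube: flip one bit at a time."""
    return [v ^ (1 << i) for i in range(d)]

# Node 101 in the 3-D cube is linked to 100, 111, and 001.
print([format(u, "03b") for u in hypercube_neighbors(0b101, 3)])  # ['100', '111', '001']
```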

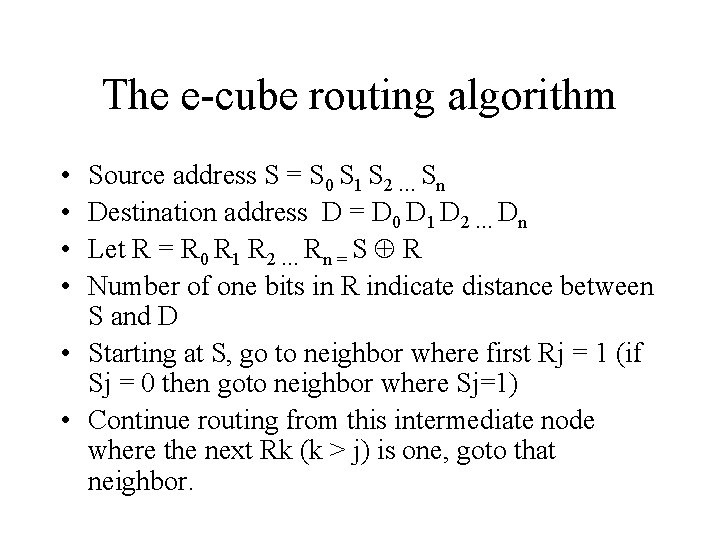

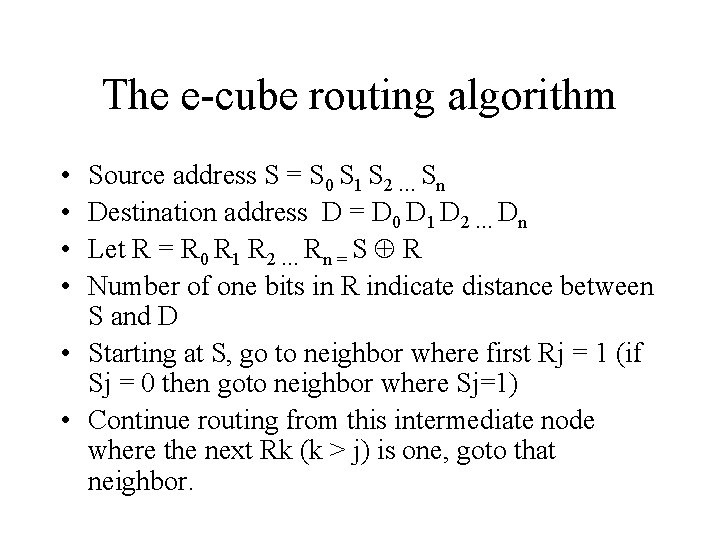

The e-cube routing algorithm

- Source address S = S0 S1 S2 … Sn; destination address D = D0 D1 D2 … Dn
- Let R = R0 R1 R2 … Rn = S ⊕ D (bitwise exclusive OR)
  - The number of one bits in R is the distance between S and D
- Starting at S, go to the neighbor across the first dimension j where Rj = 1 (the neighbor whose address differs from S only in bit j)
- From this intermediate node, find the next k > j where Rk = 1 and go to that neighbor; continue until reaching D

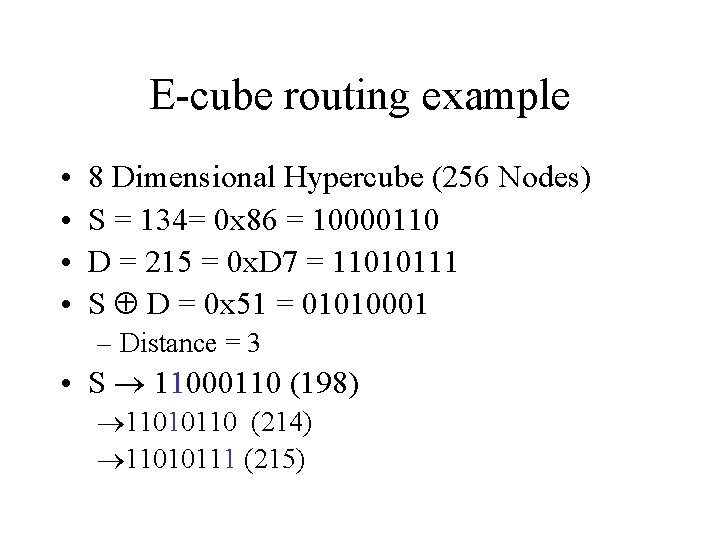

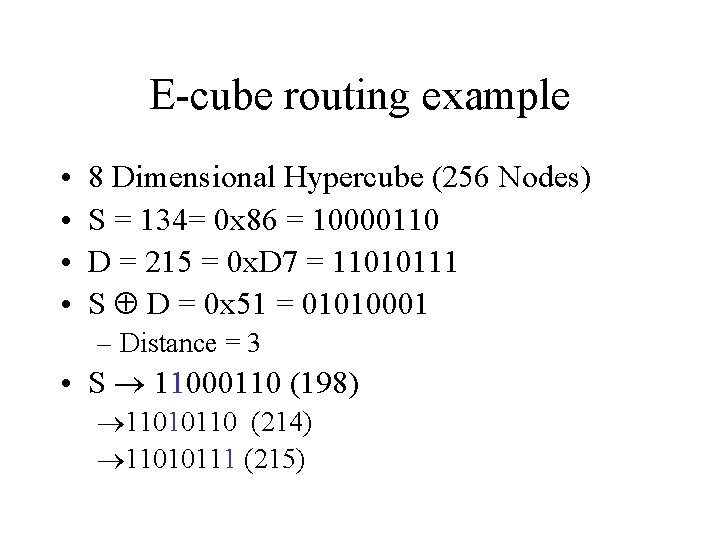

E-cube routing example

- 8-dimensional hypercube (256 nodes)
  - S = 134 = 0x86 = 10000110
  - D = 215 = 0xD7 = 11010111
  - S ⊕ D = 0x51 = 01010001, so distance = 3
- Route: 10000110 (134) → 11000110 (198) → 11010110 (214) → 11010111 (215)
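The e-cube algorithm and the worked example can be reproduced in a few lines. Following the example, bit S0 is taken to be the most significant (leftmost) bit, so the differing bits of R = S ⊕ D are corrected from left to right. The function name `ecube_route` is hypothetical.

```python
from typing import List

def ecube_route(s: int, d: int, dims: int) -> List[int]:
    """Nodes visited by e-cube routing from s to d in a `dims`-dimensional hypercube."""
    r = s ^ d                          # R = S xor D: the dimensions that must be crossed
    path = [s]
    node = s
    for j in range(dims - 1, -1, -1):  # leftmost bit (S0) first
        if r & (1 << j):
            node ^= 1 << j             # move to the neighbor across this dimension
            path.append(node)
    return path

route = ecube_route(134, 215, 8)
print(route)                                        # [134, 198, 214, 215]
print([format(v, "08b") for v in route])
print(len(route) - 1 == bin(134 ^ 215).count("1"))  # True: hops = number of 1 bits in R
```

Because every message crosses dimensions in the same fixed order, e-cube is a deterministic, dimension-ordered routing scheme.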

Embedding

- A network is embeddable in a target network if its nodes and links can be mapped onto the target network
- A mesh is embeddable in a hypercube
  - There is a mapping of mesh nodes and links to hypercube nodes and links
- The dilation of an embedding is the largest number of target-network links that any single link of the embedded network is mapped onto
  - Perfect embeddings have dilation 1
- Embedding a tree into a mesh has a dilation of 2 (see example in book)
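The standard way to embed a ring or a power-of-two mesh in a hypercube is through the reflected Gray code g(i) = i xor (i >> 1): consecutive integers map to labels that differ in one bit, so every ring or mesh link becomes a single hypercube link (dilation 1). For a 2^a × 2^b mesh, node (i, j) maps to g(i) concatenated with g(j). The sketch measures dilation as the largest Hamming distance between the images of linked nodes; it assumes the mapping is one-to-one and uses hypothetical names.

```python
from typing import Dict, Hashable, List, Tuple

def gray(i: int) -> int:
    """Reflected binary Gray code."""
    return i ^ (i >> 1)

def dilation(edges: List[Tuple[Hashable, Hashable]], mapping: Dict[Hashable, int]) -> int:
    """Max hypercube distance (Hamming distance of labels) over the embedded links."""
    return max(bin(mapping[u] ^ mapping[v]).count("1") for u, v in edges)

def ring_edges(n: int) -> List[Tuple[int, int]]:
    return [(i, (i + 1) % n) for i in range(n)]

def mesh_in_hypercube(a: int, b: int) -> int:
    """Dilation of embedding a 2^a x 2^b mesh into an (a+b)-cube via Gray codes."""
    rows, cols = 1 << a, 1 << b
    mapping: Dict[Hashable, int] = {}
    edges = []
    for i in range(rows):
        for j in range(cols):
            mapping[(i, j)] = (gray(i) << b) | gray(j)  # row code, then column code
            if i + 1 < rows:
                edges.append(((i, j), (i + 1, j)))
            if j + 1 < cols:
                edges.append(((i, j), (i, j + 1)))
    return dilation(edges, mapping)

print(dilation(ring_edges(8), {i: gray(i) for i in range(8)}))  # Gray code: 1
print(dilation(ring_edges(8), {i: i for i in range(8)}))        # plain binary: 3 (e.g., 011 to 100)
print(mesh_in_hypercube(2, 3))                                  # 4x8 mesh in a 5-cube: 1
```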

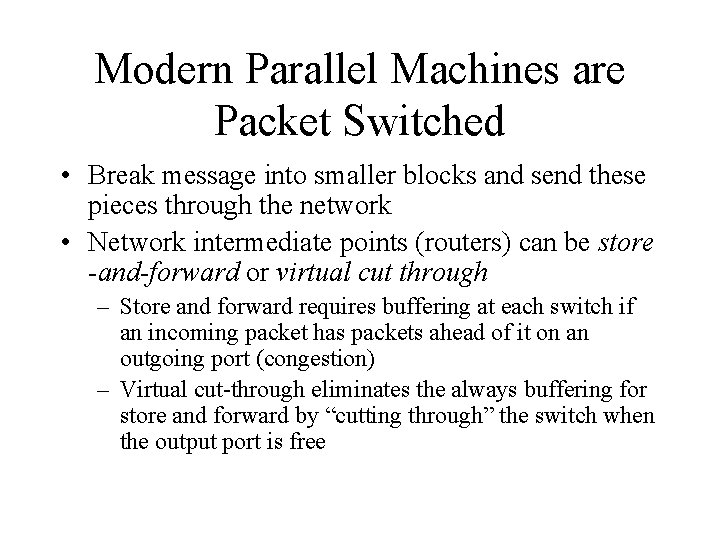

Modern Parallel Machines are Packet Switched

- Break a message into smaller blocks (packets) and send these pieces through the network
- Intermediate points in the network (routers) can be store-and-forward or virtual cut-through
  - Store-and-forward receives and buffers each entire packet at every switch before forwarding it
  - Virtual cut-through avoids this unconditional buffering by “cutting through” the switch when the output port is free; a packet is buffered only under congestion, when packets ahead of it hold the output port

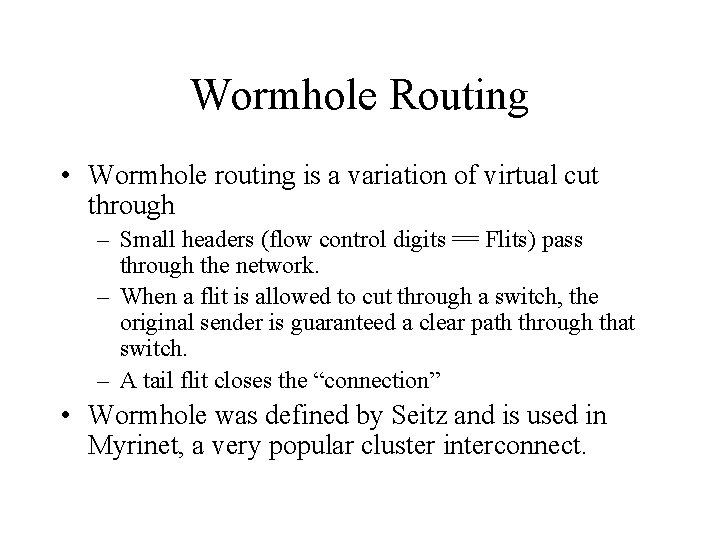

Wormhole Routing

- Wormhole routing is a variation of virtual cut-through
  - Packets travel as small flow-control units (flow control digits, or flits), led by a header flit
  - When the header flit is allowed to cut through a switch, the sender is guaranteed a clear path through that switch for the flits that follow
  - A tail flit closes the “connection”
- Wormhole routing was defined by Seitz and is used in Myrinet, a very popular cluster interconnect

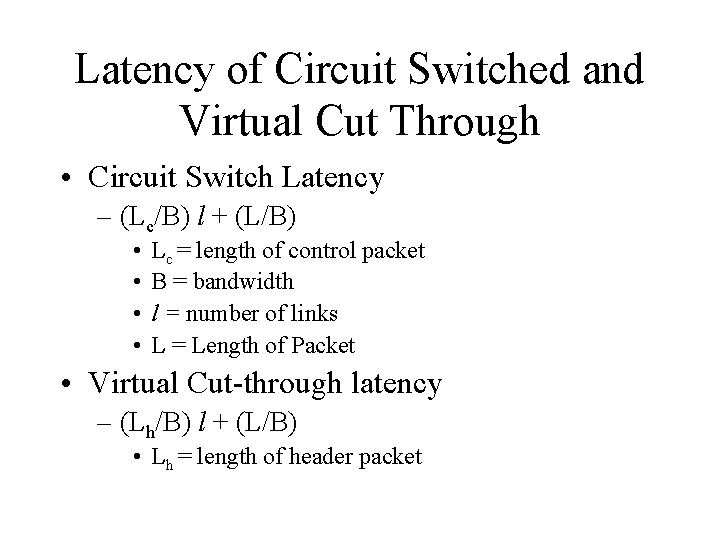

Latency of Circuit Switched and Virtual Cut Through

- Circuit-switch latency: (Lc/B) × l + L/B
  - Lc = length of control packet
  - B = bandwidth
  - l = number of links
  - L = length of packet
- Virtual cut-through latency: (Lh/B) × l + L/B
  - Lh = length of header packet

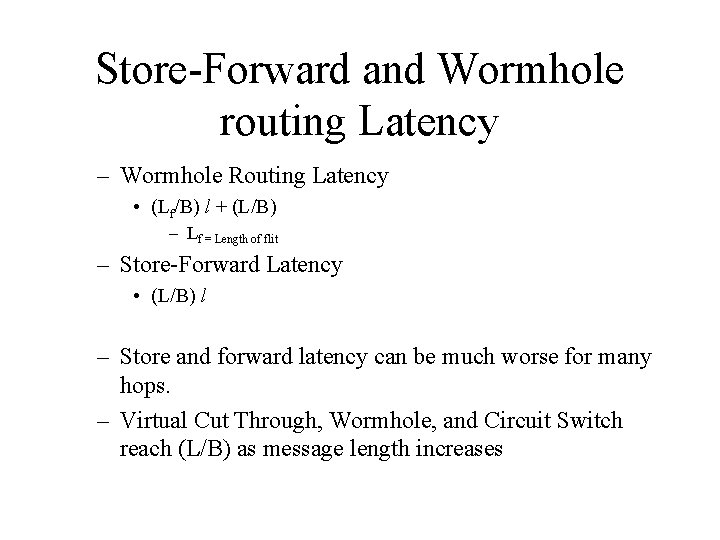

Store-Forward and Wormhole Routing Latency

- Wormhole routing latency: (Lf/B) × l + L/B
  - Lf = length of flit
- Store-and-forward latency: (L/B) × l
  - Store-and-forward latency can be much worse for many hops
- Virtual cut-through, wormhole, and circuit switching all approach L/B as message length increases, because their per-hop term depends only on the control, header, or flit length, not on L
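The four latency formulas from these slides, written as functions so they can be compared for a given message size and hop count. Units are whatever the caller chooses, as long as lengths and bandwidth agree (e.g., bytes and bytes per nanosecond); the example values are hypothetical.

```python
def circuit_switched(Lc: float, L: float, B: float, l: int) -> float:
    """(Lc/B) * l + L/B: control packet sets up l links, then the data streams through."""
    return (Lc / B) * l + L / B

def virtual_cut_through(Lh: float, L: float, B: float, l: int) -> float:
    """(Lh/B) * l + L/B: only the header pays the per-hop cost."""
    return (Lh / B) * l + L / B

def wormhole(Lf: float, L: float, B: float, l: int) -> float:
    """(Lf/B) * l + L/B: only the header flit pays the per-hop cost."""
    return (Lf / B) * l + L / B

def store_and_forward(L: float, B: float, l: int) -> float:
    """(L/B) * l: the whole packet is received at each hop before moving on."""
    return (L / B) * l

# Hypothetical values: 1024-byte packet, 8-byte header/flit, 1 byte per time unit, 10 hops.
L, B, hops = 1024, 1.0, 10
print("store-and-forward:", store_and_forward(L, B, hops))
print("wormhole:         ", wormhole(8, L, B, hops))
print("cut-through:      ", virtual_cut_through(8, L, B, hops))
```

With these numbers, store-and-forward pays the full packet time at every hop, while the other schemes pay it once plus a small per-hop header cost.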

Deadlock/Livelock

- Livelock and deadlock are potential problems in any network design
- Livelock occurs in adaptive routing algorithms when a packet keeps moving but never reaches its destination
- Deadlock occurs when packets cannot be forwarded because they are waiting for other packets to move out of the way, while the blocking packets are themselves waiting for the blocked packets to move
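The "blocking packet waits for the blocked packet" condition is a cycle in a wait-for graph. The sketch below detects such a cycle with a colored depth-first search; the packet names and the `has_deadlock` function are hypothetical. Applied to a graph of network channels instead of individual packets, the same idea (no cycles means no deadlock) is the usual argument for why a routing algorithm is deadlock-free.

```python
from typing import Dict, List

def has_deadlock(waits_for: Dict[str, List[str]]) -> bool:
    """True if the wait-for graph contains a cycle (DFS with white/gray/black colors)."""
    WHITE, GRAY, BLACK = 0, 1, 2
    color: Dict[str, int] = {}

    def visit(p: str) -> bool:
        color[p] = GRAY                   # p is on the current DFS path
        for q in waits_for.get(p, []):
            c = color.get(q, WHITE)
            if c == GRAY:                 # back edge: p waits, indirectly, on itself
                return True
            if c == WHITE and visit(q):
                return True
        color[p] = BLACK                  # fully explored, no cycle through p
        return False

    return any(color.get(p, WHITE) == WHITE and visit(p) for p in list(waits_for))

print(has_deadlock({"A": ["B"], "B": ["C"], "C": ["A"]}))  # True: A -> B -> C -> A
print(has_deadlock({"A": ["B"], "B": ["C"]}))              # False: C can move, then B, then A
```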

Next Time …

- All about clusters
- Introduction to PVM (and MPI)

Flynn's classification diagram

Flynn's classification diagram Sisd architecture

Sisd architecture Flynn's classical taxonomy

Flynn's classical taxonomy Kendall's taxonomy

Kendall's taxonomy 01:640:244 lecture notes - lecture 15: plat, idah, farad

01:640:244 lecture notes - lecture 15: plat, idah, farad Todays objective

Todays objective Midlands 2 west (north)

Midlands 2 west (north) Todays lab

Todays lab Rprefer

Rprefer Todays price of asda shares

Todays price of asda shares Todays jeopardy

Todays jeopardy Todays worldld

Todays worldld Date frui

Date frui Handcuff nomenclature quiz

Handcuff nomenclature quiz Today's objective

Today's objective Todays concept

Todays concept Todays science

Todays science Chapter 13 marketing in today's world answer key

Chapter 13 marketing in today's world answer key Todays objective

Todays objective Today planetary position

Today planetary position Todays generations

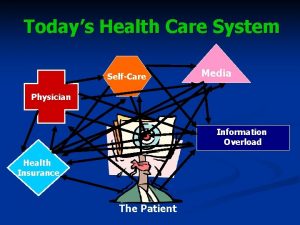

Todays generations Todays health

Todays health Organelle jeopardy

Organelle jeopardy Todays class com

Todays class com Teacher good morning student student

Teacher good morning student student Whats todays temperature

Whats todays temperature Todays objective

Todays objective Todays weather hull

Todays weather hull Todays whether

Todays whether Whats todays temperature

Whats todays temperature Todays class

Todays class Todays objective

Todays objective Todays plan

Todays plan