CS 241 Section Week 9 040909 Topics LMP

- Slides: 85

CS 241 Section Week #9 (04/09/09)

Topics • LMP 2 Overview • Memory Management • Virtual Memory • Page Tables

LMP 2 Overview

LMP 2 Overview • LMP 2 attempts to encode or decode a number of files in the following way: – encode: %> ./mmap -e -b 16 file1 [file2 ...] – decode: %> ./mmap -d -b 8 file1 [file2 ...] • It takes the following parameters: – whether to encode (‘-e’) or decode (‘-d’); – the number of bytes (rw_units) for each read/write from the file;

LMP 2 Overview • You have TWO weeks to complete and submit LMP 2. We have divided LMP 2 into two stages: – Stage 1: • Implement a simple virtual memory. • It is recommended that you implement the my_mmap() function during this week. • You will need to complete various data structures to deal with the file mapping table, the page table, the physical memory, etc.

LMP 2 Overview • You have TWO weeks to complete and submit LMP 2. We have divided LMP 2 into two stages: – Stage 2: • Implement various functions for memory-mapped files, including: – my_mread(), my_mwrite() and my_munmap() • Handle page faults in your my_mread() and my_mwrite() functions • Implement two simple manipulations on files: – encoding – decoding

Memory Management

Memory • Contiguous allocation and compaction • Paging and page replacement algorithms

Fragmentation • External Fragmentation – Free space becomes divided into many small pieces – Caused over time by allocating and freeing storage of different sizes • Internal Fragmentation – Result of reserving space but never using part of it – Caused by allocating storage in fixed-size blocks

Contiguous Allocation • Memory is allocated in monolithic segments or blocks • Public enemy #1: external fragmentation – We can solve this by periodically rearranging the contents of memory

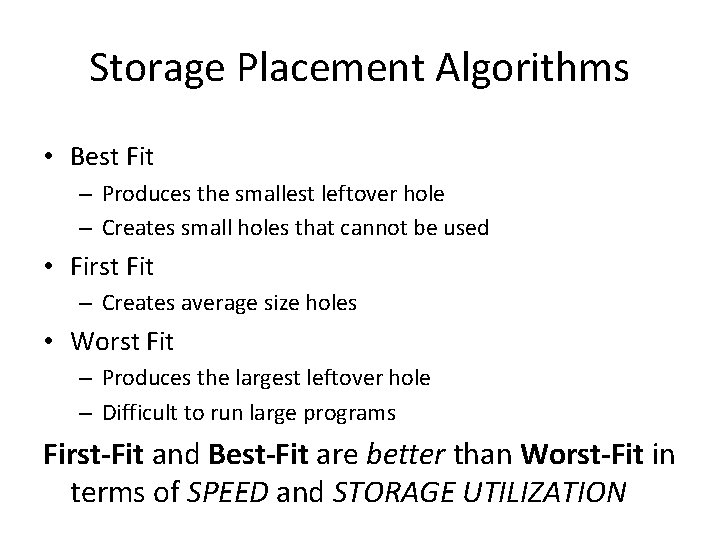

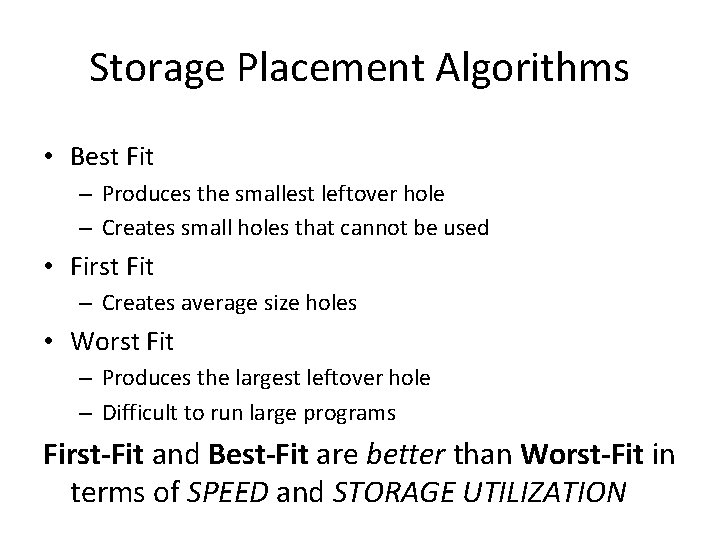

Storage Placement Algorithms • Best Fit – Produces the smallest leftover hole – Creates small holes that cannot be used • First Fit – Creates average size holes • Worst Fit – Produces the largest leftover hole – Difficult to run large programs • First-Fit and Best-Fit are better than Worst-Fit in terms of SPEED and STORAGE UTILIZATION

Exercise • Consider a swapping system in which memory consists of the following hole sizes in memory order: 10 KB, 4 KB, 20 KB, 18 KB, 7 KB, 9 KB, 12 KB, and 15 KB. Which hole is taken for successive segment requests of (a) 12 KB, (b) 10 KB, (c) 9 KB for – First Fit? 20 KB, 10 KB and 18 KB – Best Fit? 12 KB, 10 KB and 9 KB – Worst Fit? 20 KB, 18 KB and 15 KB
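The exercise can be checked mechanically. The following is a minimal sketch, not part of the original slides: the function name `place` and the `fit_policy` enum are hypothetical, and it assumes that a chosen hole simply shrinks by the size of the request.

```c
#include <assert.h>
#include <stddef.h>

enum fit_policy { FIRST_FIT, BEST_FIT, WORST_FIT };

/* Choose a hole for a request of `need` KB under the given policy.
 * Returns the index of the chosen hole, or -1 if no hole is big enough.
 * The chosen hole shrinks by `need` (assumption: leftovers stay in place). */
int place(int holes[], size_t n, int need, enum fit_policy policy)
{
    int chosen = -1;

    assert(need > 0);
    for (size_t i = 0; i < n; i++) {
        if (holes[i] < need)
            continue;                        /* hole is too small */
        if (chosen < 0) {
            chosen = (int)i;                 /* first hole that fits */
            if (policy == FIRST_FIT)
                break;
        } else if (policy == BEST_FIT && holes[i] < holes[chosen]) {
            chosen = (int)i;                 /* tighter fit found */
        } else if (policy == WORST_FIT && holes[i] > holes[chosen]) {
            chosen = (int)i;                 /* larger hole found */
        }
    }
    if (chosen >= 0)
        holes[chosen] -= need;
    return chosen;
}
```

Calling `place` three times on the hole list 10, 4, 20, 18, 7, 9, 12, 15 with requests of 12, 10 and 9 KB selects the 20, 10 and 18 KB holes under first fit, the 12, 10 and 9 KB holes under best fit, and the 20, 18 and 15 KB holes under worst fit.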

malloc Revisited • Free storage is kept as a list of free blocks – Each block contains a size, a pointer to the next block, and the space itself • When a request for space is made, the free list is scanned until a big-enough block can be found – Which storage placement algorithm is used? • If the block is found, return it and adjust the free list. Otherwise, another large chunk is obtained from the OS and linked into the free list

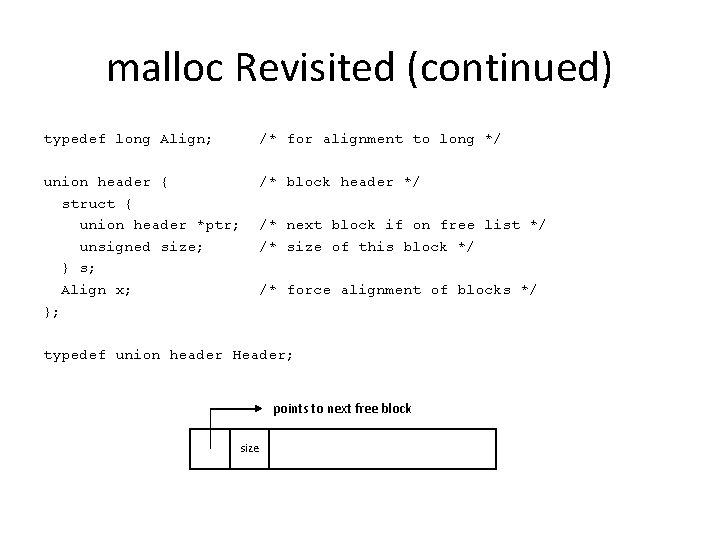

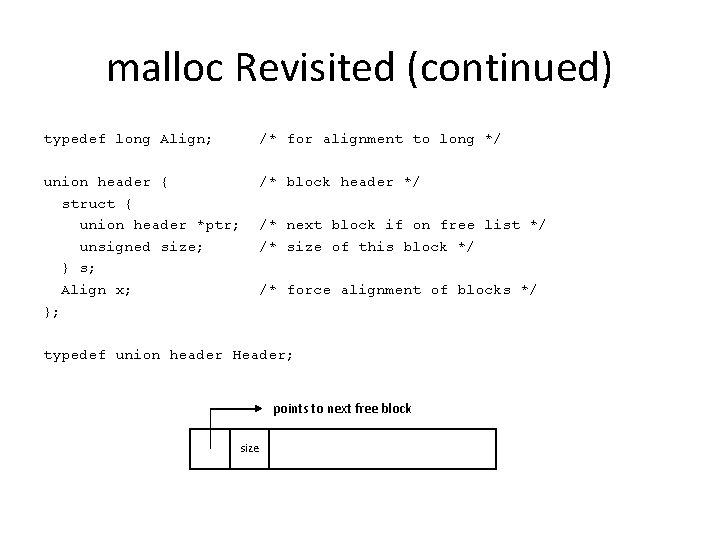

malloc Revisited (continued)

    typedef long Align;            /* for alignment to long */

    union header {                 /* block header */
        struct {
            union header *ptr;     /* next block if on free list */
            unsigned size;         /* size of this block */
        } s;
        Align x;                   /* force alignment of blocks */
    };

    typedef union header Header;

• ptr: points to next free block • size: size of this block
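To show how the free list is scanned with this header, here is a minimal sketch that assumes a first-fit scan over a fixed static pool. The names `arena`, `ARENA_UNITS`, `arena_init` and `arena_alloc` are hypothetical, sizes are counted in header-sized units, and freeing or asking the OS for more memory is left out.

```c
#include <assert.h>
#include <stddef.h>

typedef long Align;                /* for alignment to long */

union header {                     /* block header */
    struct {
        union header *ptr;         /* next block if on free list */
        unsigned size;             /* size of this block, in header units */
    } s;
    Align x;                       /* force alignment of blocks */
};
typedef union header Header;

#define ARENA_UNITS 64             /* hypothetical pool size, in header units */
static Header arena[ARENA_UNITS];
static Header *free_list = NULL;

/* Start with one free block that covers the whole pool. */
void arena_init(void)
{
    arena[0].s.ptr = NULL;
    arena[0].s.size = ARENA_UNITS;
    free_list = &arena[0];
}

/* First-fit allocation: take the first free block that is large enough. */
void *arena_alloc(size_t nbytes)
{
    /* Round up to whole units, plus one unit for the block's own header. */
    unsigned nunits =
        (unsigned)((nbytes + sizeof(Header) - 1) / sizeof(Header)) + 1;
    Header **link = &free_list;

    for (Header *p = free_list; p != NULL; link = &p->s.ptr, p = p->s.ptr) {
        if (p->s.size < nunits)
            continue;                  /* too small, keep scanning */
        if (p->s.size == nunits) {
            *link = p->s.ptr;          /* exact fit: unlink the whole block */
        } else {
            p->s.size -= nunits;       /* shrink the free block ... */
            p += p->s.size;            /* ... and carve the tail end off it */
            p->s.size = nunits;
        }
        return (void *)(p + 1);        /* usable space starts after the header */
    }
    return NULL;                       /* no block fits in this fixed pool */
}
```

Carving the allocation from the tail of a free block means the free list itself only changes on an exact fit, which keeps the bookkeeping short.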

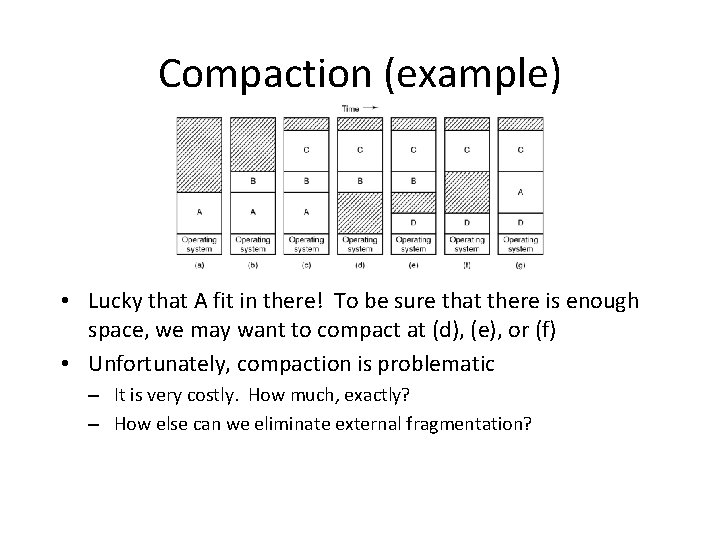

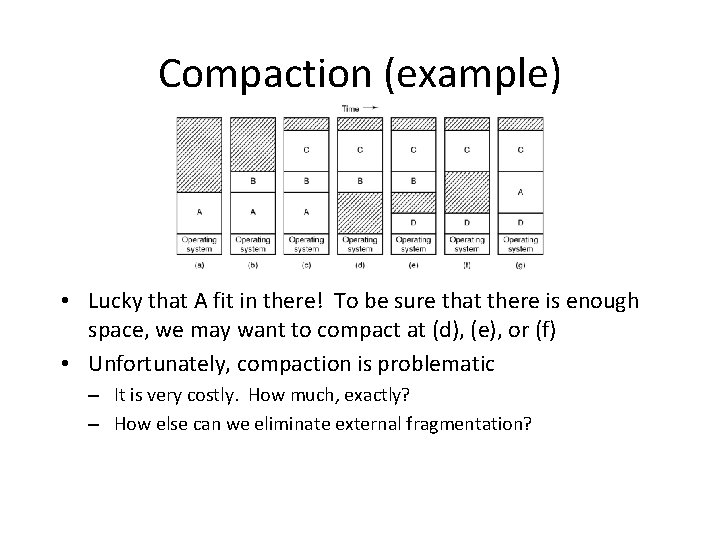

Compaction • After numerous malloc() and free() calls, our memory will have many holes – Total free memory is much greater than the largest contiguous free chunk • We can compact our allocated memory – Shift allocations to one end of memory, and all holes to the other end • Temporarily eliminates external fragmentation
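A toy model makes the effect of compaction concrete. This sketch is an illustration under simplifying assumptions: memory is an array of cells, each cell stores the id of the allocation that owns it (0 means free), and the names `compact` and `largest_hole` are hypothetical. A real system would also have to update every pointer into the moved blocks.

```c
#include <assert.h>
#include <stddef.h>

/* Length of the longest run of free (zero) cells. */
size_t largest_hole(const int mem[], size_t n)
{
    size_t best = 0, run = 0;

    assert(mem != NULL);
    for (size_t i = 0; i < n; i++) {
        run = (mem[i] == 0) ? run + 1 : 0;
        if (run > best)
            best = run;
    }
    return best;
}

/* Slide every allocated cell toward index 0, preserving order.
 * Returns the size of the single free hole left at the high end. */
size_t compact(int mem[], size_t n)
{
    size_t next = 0;                   /* next slot to fill */

    assert(mem != NULL);
    for (size_t i = 0; i < n; i++) {
        if (mem[i] != 0)
            mem[next++] = mem[i];      /* copy allocated cell downward */
    }
    for (size_t i = next; i < n; i++)
        mem[i] = 0;                    /* everything above is now free */
    return n - next;
}
```

In this model the cost is one pass over all of memory, since every allocated cell may have to be copied.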

Compaction (example) • Lucky that A fit in there! To be sure that there is enough space, we may want to compact at (d), (e), or (f) • Unfortunately, compaction is problematic – It is very costly. How much, exactly? – How else can we eliminate external fragmentation?

Paging • Divide memory into pages of equal size – We don’t need to assign contiguous chunks – Internal fragmentation can only occur on the last page assigned to a process – External fragmentation cannot occur at all – Need to map contiguous logical memory addresses to disjoint pages

Page Replacement • We may not have enough space in physical memory for all pages of every process at the same time. • But which pages shall we keep? – Use the history of page accesses to decide – Also useful to know the dirty pages

Page Replacement Strategies • It takes two disk operations to replace a dirty page, so: – Keep track of dirty bits, attempt to replace clean pages first – Write dirty pages to disk during idle disk time • We try to approximate the optimal strategy but can seldom achieve it, because we don’t know what order a process will use its pages. – Best we can do is run a program multiple times, and track which pages it accesses

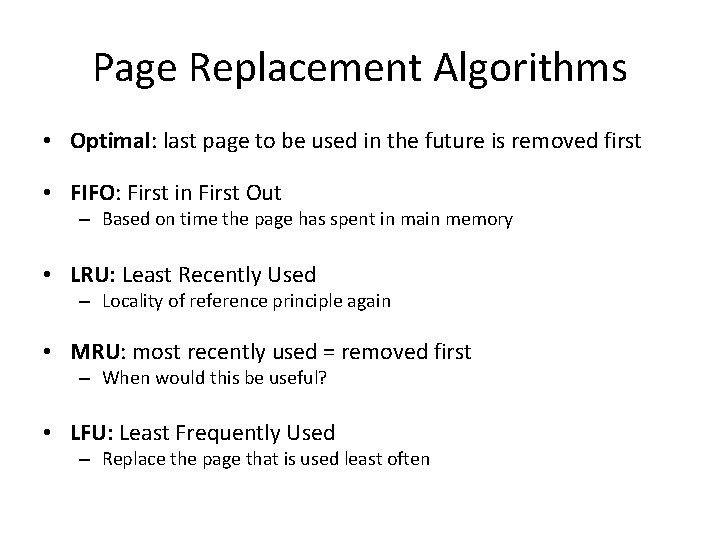

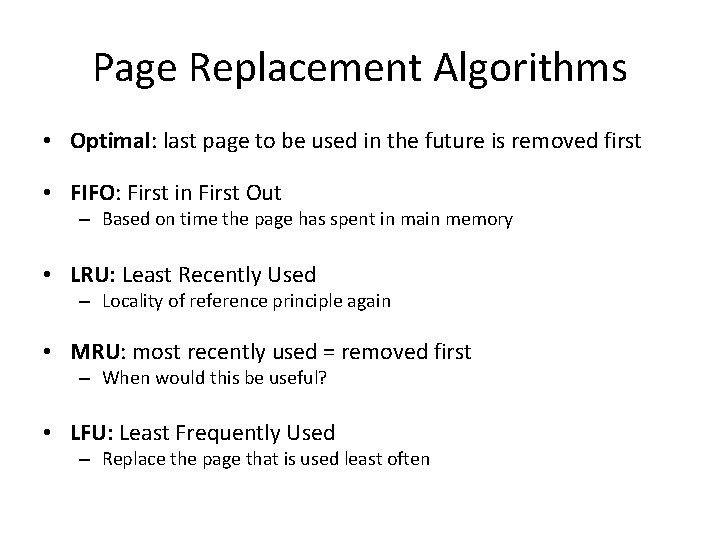

Page Replacement Algorithms • Optimal: the page that will not be used for the longest time in the future is removed first • FIFO: First In First Out – Based on the time the page has spent in main memory • LRU: Least Recently Used – Locality of reference principle again • MRU: Most Recently Used – The most recently used page is removed first – When would this be useful? • LFU: Least Frequently Used – Replace the page that is used least often

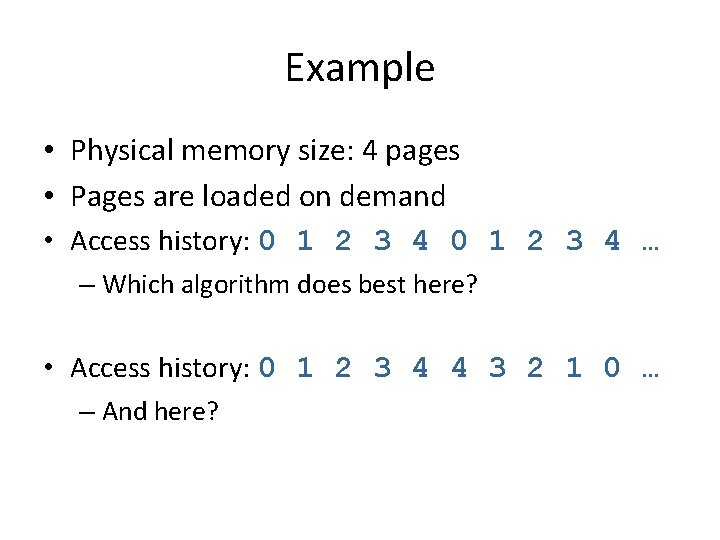

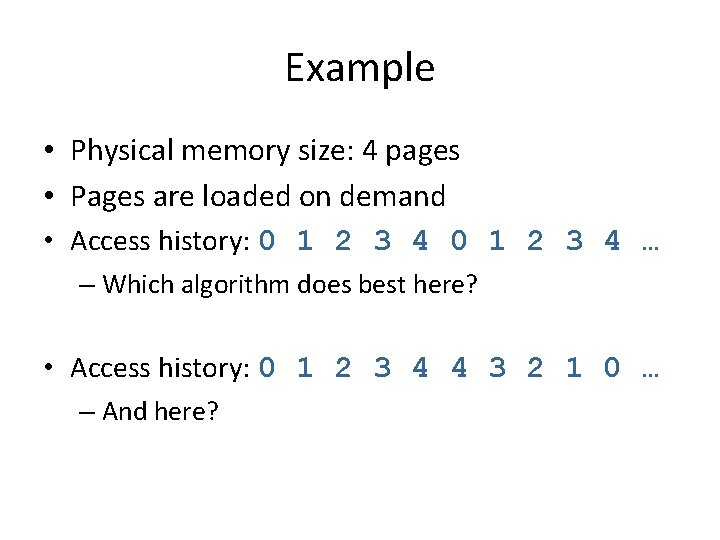

Example • Physical memory size: 4 pages • Pages are loaded on demand • Access history: 0 1 2 3 4 … – Which algorithm does best here? • Access history: 0 1 2 3 4 4 3 2 1 0 … – And here?
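One way to explore these questions is to count page faults with a small simulator. The sketch below is an illustration only: `count_faults`, the `policy` enum and `MAX_FRAMES` are hypothetical names, and it covers FIFO, LRU and MRU but not Optimal or LFU. The slides do not say how the access histories continue after the "…", so any continuation you feed in is an assumption.

```c
#include <assert.h>
#include <stddef.h>

enum policy { FIFO, LRU, MRU };

#define MAX_FRAMES 16                       /* hypothetical upper bound */

/* Count page faults for a reference string under demand paging. */
int count_faults(const int refs[], size_t nrefs, size_t nframes,
                 enum policy pol)
{
    int page[MAX_FRAMES];                   /* page held by each frame */
    long stamp[MAX_FRAMES];                 /* load time (FIFO) or last use */
    size_t used = 0;
    int faults = 0;

    assert(nframes > 0 && nframes <= MAX_FRAMES);
    for (size_t t = 0; t < nrefs; t++) {
        size_t slot = used;                 /* "not found" marker */
        for (size_t f = 0; f < used; f++) {
            if (page[f] == refs[t]) {
                slot = f;
                break;
            }
        }
        if (slot < used) {                  /* hit */
            if (pol != FIFO)
                stamp[slot] = (long)t;      /* LRU and MRU track last use */
            continue;
        }
        faults++;
        if (used < nframes) {
            slot = used++;                  /* a frame is still empty */
        } else {
            slot = 0;                       /* pick a victim by timestamp */
            for (size_t f = 1; f < nframes; f++) {
                int older = stamp[f] < stamp[slot];
                if (pol == MRU ? !older : older)
                    slot = f;
            }
        }
        page[slot] = refs[t];
        stamp[slot] = (long)t;
    }
    return faults;
}
```

If the first history is assumed to repeat cyclically (0 1 2 3 4 0 1 2 3 4 0 1 2 3 4), FIFO and LRU fault on all 15 accesses with 4 frames while MRU faults only 7 times. On the ten accesses shown for the second history, all three policies fault 6 times, so the differences there only appear in whatever follows.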

Virtual Memory

Why Virtual Memory? • Use main memory as a Cache for the Disk – Address space of a process can exceed physical memory size – Sum of address spaces of multiple processes can exceed physical memory • Simplify Memory Management – Multiple processes resident in main memory. • Each process with its own address space – Only “active” code and data is actually in memory • Provide Protection – One process can’t interfere with another. • because they operate in different address spaces. – User process cannot access privileged information • different sections of address spaces have different permissions.

Principle of Locality • Program and data references within a process tend to cluster • Only a few pieces of a process will be needed over a short period of time (active data or code) • Possible to make intelligent guesses about which pieces will be needed in the future • This suggests that virtual memory may work efficiently

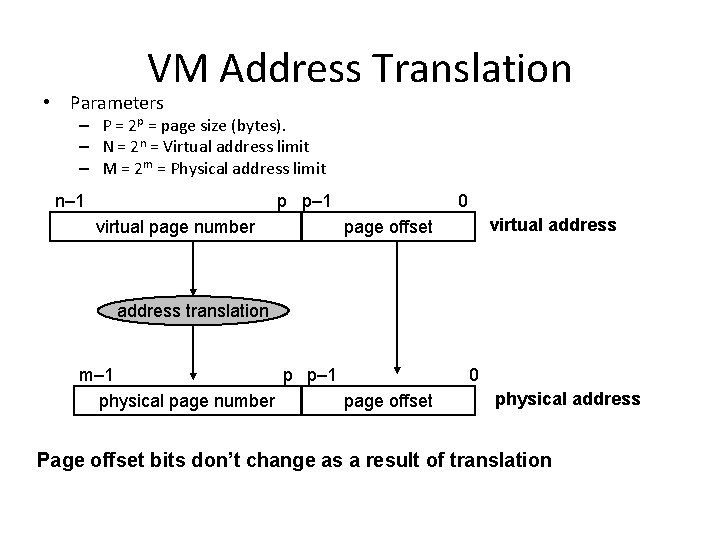

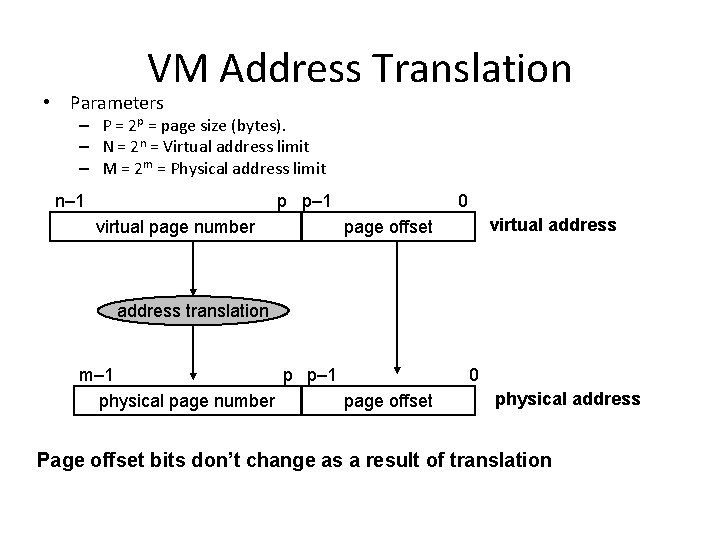

VM Address Translation • Parameters – P = 2^p = page size (bytes) – N = 2^n = virtual address limit – M = 2^m = physical address limit • Virtual address: bits n–1 down to p hold the virtual page number, bits p–1 down to 0 hold the page offset • Physical address: bits m–1 down to p hold the physical page number, bits p–1 down to 0 hold the page offset • Page offset bits don’t change as a result of translation

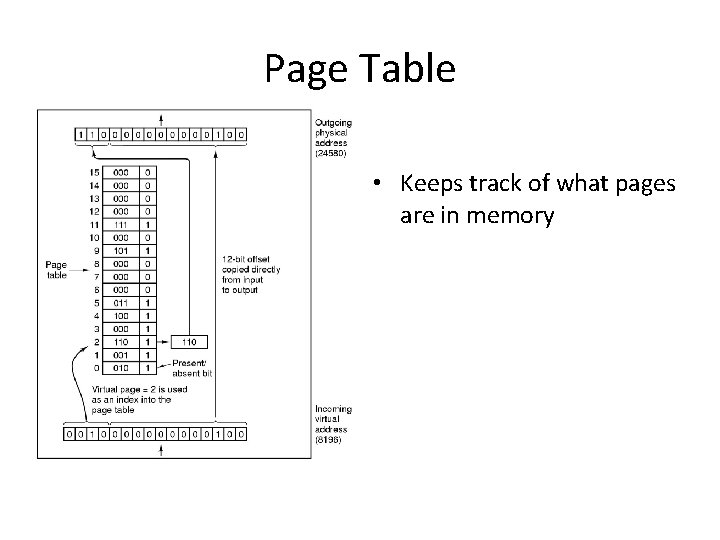

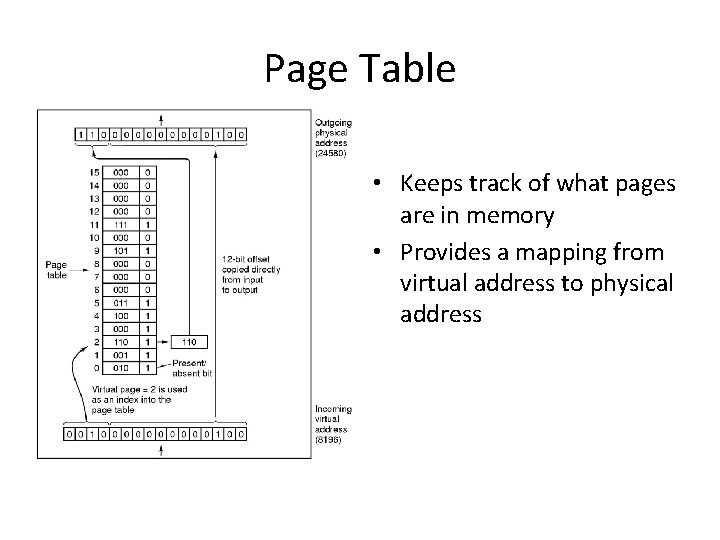

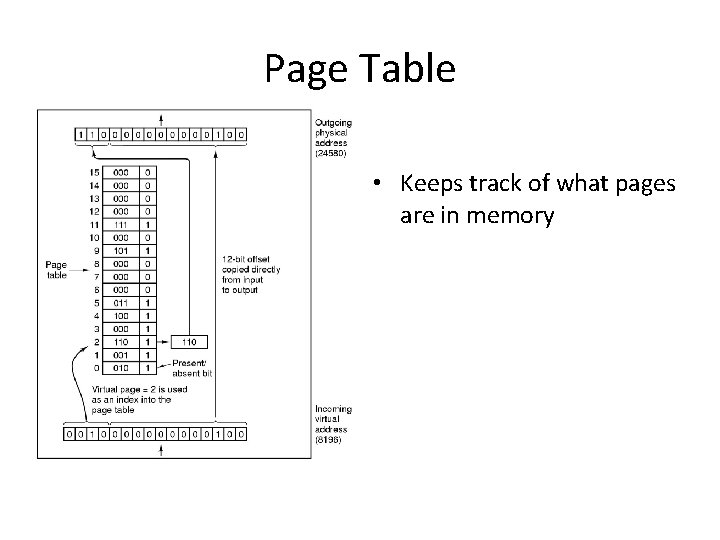

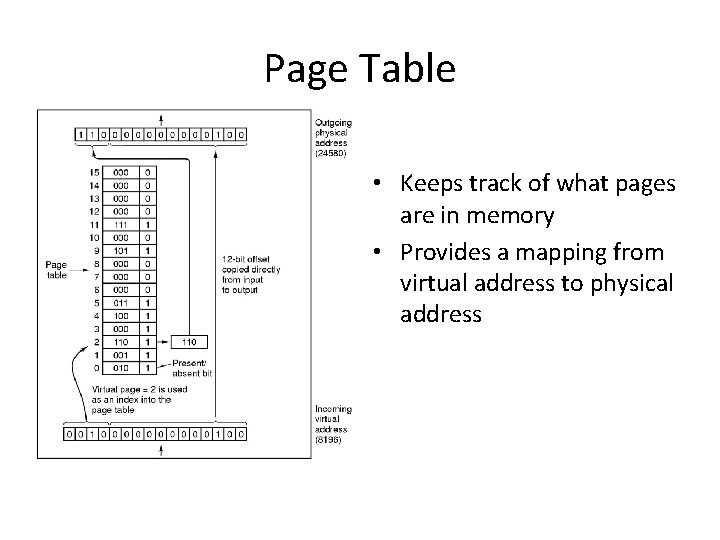

Page Table • Keeps track of what pages are in memory • Provides a mapping from virtual address to physical address

Handling a Page Fault • Page fault – Look for an empty page in RAM • May need to write a page to disk and free it – Load the faulted page into that empty page – Modify the page table
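The three steps can be sketched in a few lines of C. This is an illustration under stated assumptions, not the LMP 2 solution: the structure layout, the sizes `NPAGES` and `NFRAMES`, the round-robin victim choice and the `disk_writes` counter (standing in for real disk I/O) are all hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define NPAGES  8                      /* hypothetical virtual pages */
#define NFRAMES 2                      /* hypothetical physical frames */

struct pte {
    bool present;                      /* is the page in RAM? */
    bool dirty;                        /* modified since it was loaded? */
    int frame;                         /* frame number when present */
};

static struct pte page_table[NPAGES];
static int frame_owner[NFRAMES];       /* page in each frame, -1 if empty */
static int next_victim;                /* round-robin eviction pointer */
static int disk_writes;                /* stands in for writes to disk */

void vm_init(void)
{
    for (int p = 0; p < NPAGES; p++)
        page_table[p] = (struct pte){ false, false, -1 };
    for (int f = 0; f < NFRAMES; f++)
        frame_owner[f] = -1;
    next_victim = 0;
    disk_writes = 0;
}

/* Bring `page` into RAM and return the frame it now occupies. */
int handle_page_fault(int page)
{
    int frame = -1;

    assert(page >= 0 && page < NPAGES && !page_table[page].present);

    /* Step 1: look for an empty frame. */
    for (int f = 0; f < NFRAMES; f++) {
        if (frame_owner[f] < 0) {
            frame = f;
            break;
        }
    }
    if (frame < 0) {                   /* none free: evict a victim */
        frame = next_victim;
        next_victim = (next_victim + 1) % NFRAMES;
        struct pte *old = &page_table[frame_owner[frame]];
        if (old->dirty)
            disk_writes++;             /* dirty page must be written back */
        old->present = false;
        old->dirty = false;
    }

    /* Step 2: the faulted page would be read from disk into `frame` here. */

    /* Step 3: modify the page table. */
    frame_owner[frame] = page;
    page_table[page].present = true;
    page_table[page].dirty = false;
    page_table[page].frame = frame;
    return frame;
}
```

Only dirty victims cost a write, which is why replacing a clean page is cheaper than replacing a dirty one.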

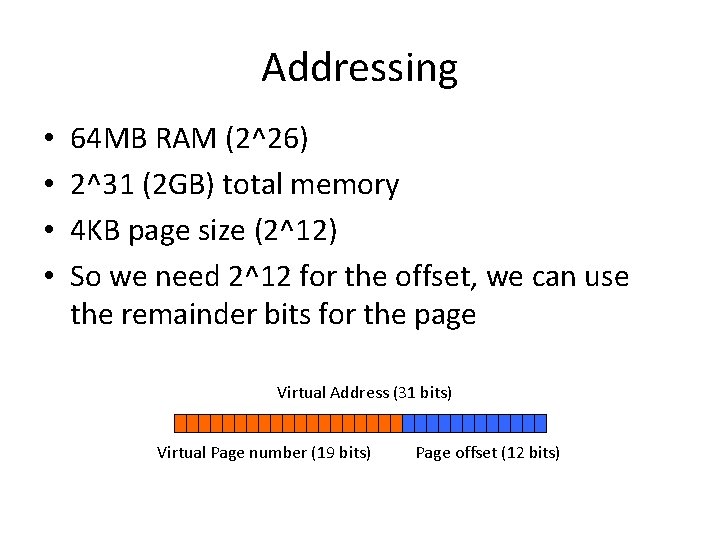

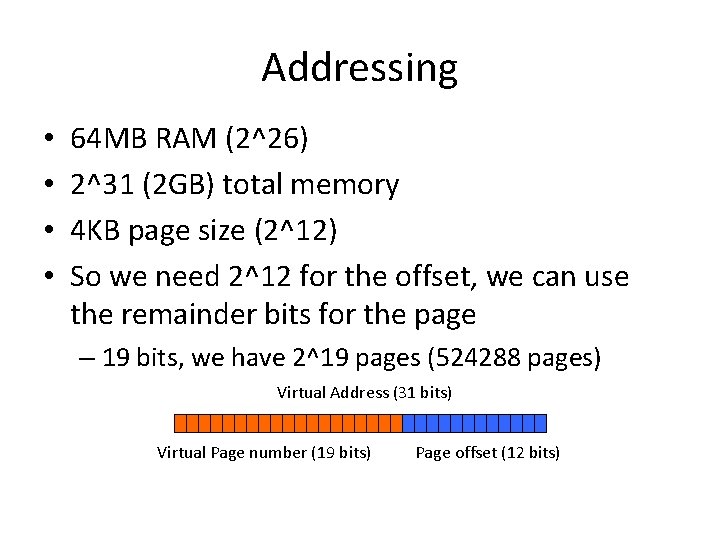

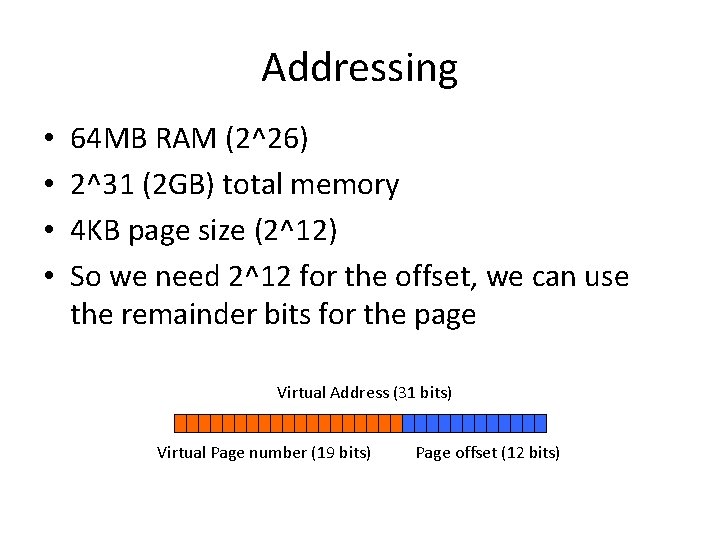

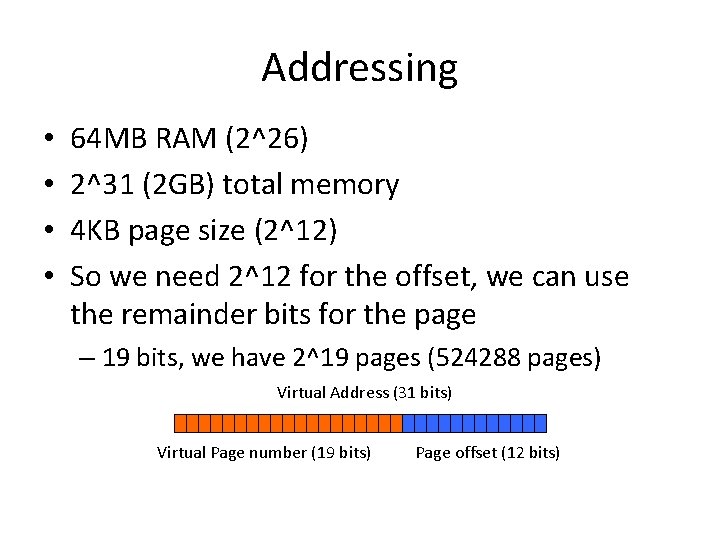

Addressing • 64 MB RAM (2^26) • 2^31 (2 GB) total memory • 4 KB page size (2^12) • So we need 12 bits for the offset; we can use the remaining bits for the page number – 19 bits, so we have 2^19 pages (524288 pages) • Virtual Address (31 bits) = Virtual Page number (19 bits) + Page offset (12 bits)
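The bit split for this example can be written with shifts and masks. A minimal sketch follows; the function names are hypothetical, and the 14-bit frame number is derived from 2^26 bytes of RAM divided into 2^12-byte pages.

```c
#include <assert.h>
#include <stdint.h>

#define OFFSET_BITS 12u                /* 4 KB pages */
#define VPN_BITS    19u                /* 31-bit virtual address minus offset */
#define FRAME_BITS  14u                /* 2^26 bytes of RAM / 2^12 per page */

/* Upper 19 bits of the 31-bit virtual address. */
uint32_t vpn_of(uint32_t va)
{
    return (va >> OFFSET_BITS) & ((1u << VPN_BITS) - 1u);
}

/* Lower 12 bits, which translation leaves unchanged. */
uint32_t offset_of(uint32_t va)
{
    return va & ((1u << OFFSET_BITS) - 1u);
}

/* Rebuild a physical address from a frame number and a page offset. */
uint32_t physical_address(uint32_t frame, uint32_t offset)
{
    assert(frame < (1u << FRAME_BITS));
    assert(offset < (1u << OFFSET_BITS));
    return (frame << OFFSET_BITS) | offset;
}
```

For the virtual address 0x12345678, the page number is 0x12345 and the offset is 0x678; mapping that page to frame 0x3FFF would give the physical address 0x3FFF678.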

Address Conversion • That 19 bit page address can be optimized in a variety of ways – Translation Look-aside Buffer

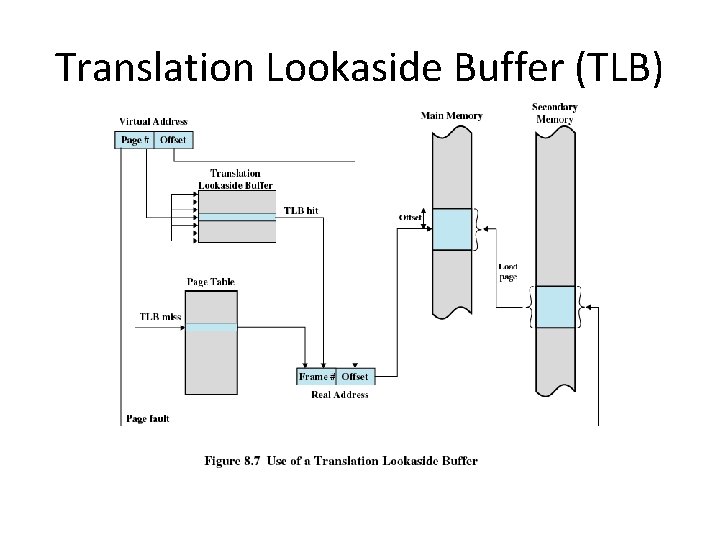

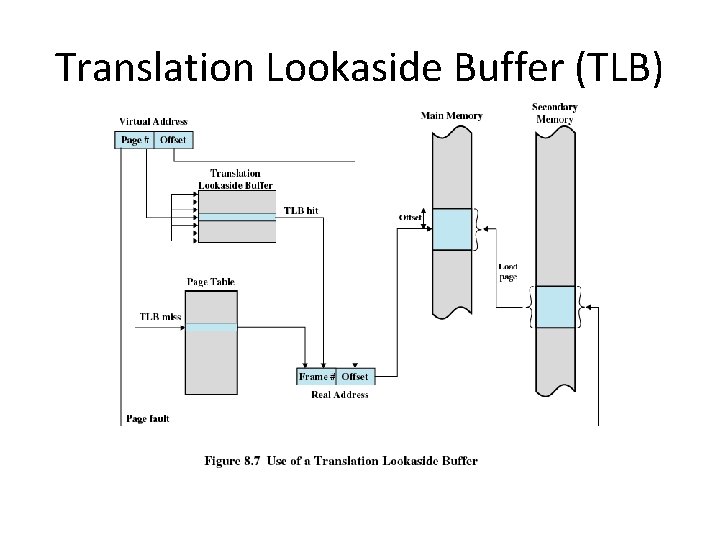

Translation Lookaside Buffer (TLB) • Each virtual memory reference can cause two physical memory accesses – One to fetch the page table entry – One to fetch the data • To overcome this problem, a high-speed cache is set up for page table entries • It contains the page table entries that have been most recently used (a cache for the page table)

Translation Lookaside Buffer (TLB)

Address Conversion • That 19 bit page address can be optimized in a variety of ways – Translation Look-aside Buffer – Multilevel Page Table

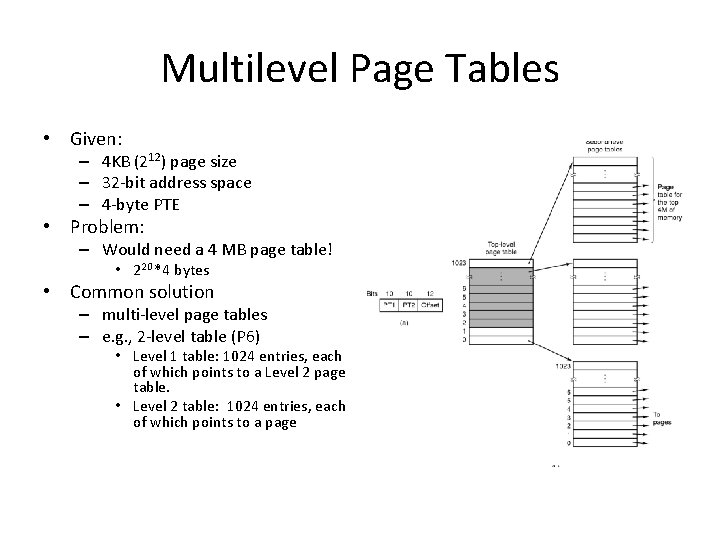

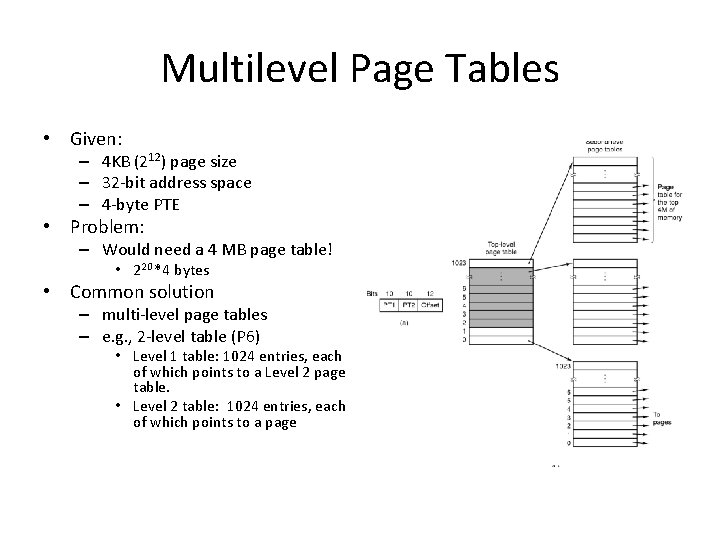

Multilevel Page Tables • Given: – 4 KB (2^12) page size – 32-bit address space – 4-byte PTE • Problem: – Would need a 4 MB page table! • 2^20 * 4 bytes • Common solution – multi-level page tables – e.g., 2-level table (P6) • Level 1 table: 1024 entries, each of which points to a Level 2 page table. • Level 2 table: 1024 entries, each of which points to a page

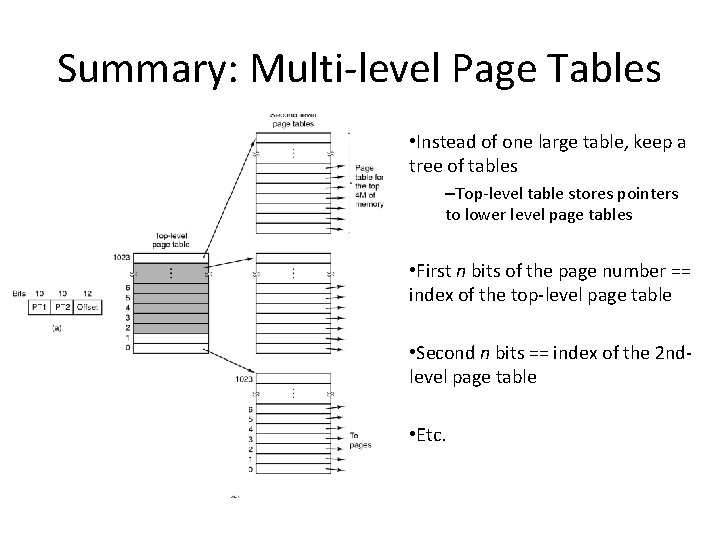

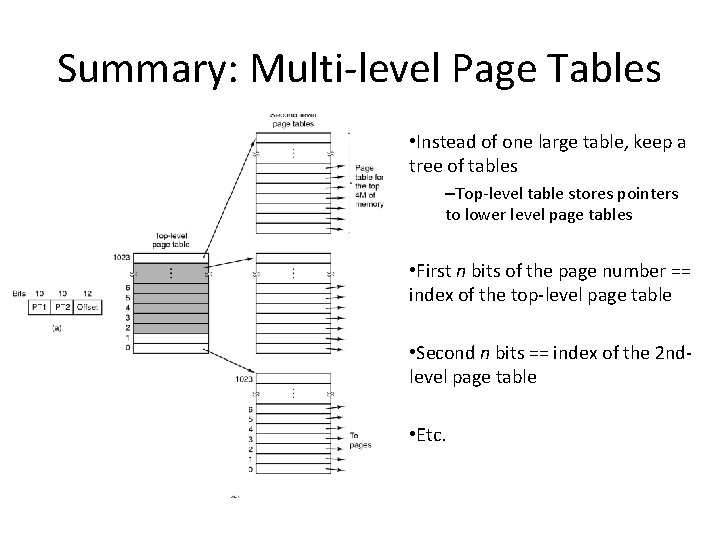

Summary: Multi-level Page Tables • Instead of one large table, keep a tree of tables – Top-level table stores pointers to lower-level page tables • First n bits of the page number == index of the top-level page table • Second n bits == index of the 2nd-level page table • Etc.

Example: Two-level Page Table • 32-bit address space (4 GB) • 12-bit page offset (4 kB pages) • 20-bit page address – First 10 bits index the top-level page table – Second 10 bits index the 2nd-level page table – 10 bits == 1024 entries * 4 bytes == 4 kB == 1 page • Need three memory accesses to read a memory location
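The 10/10/12 split and the resulting table walk can be sketched as follows. The structure names and the separate `present` array are hypothetical simplifications; real page table entries pack the frame number and flag bits into one 4-byte word.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define ENTRIES 1024u                       /* 2^10 entries per table */

struct l2_table {
    uint32_t frame[ENTRIES];                /* physical frame numbers */
    uint8_t present[ENTRIES];               /* 1 if the page is in memory */
};

struct l1_table {
    struct l2_table *child[ENTRIES];        /* NULL if no 2nd-level table */
};

/* Walk the two levels. Returns 1 and stores the physical address in *pa,
 * or returns 0 if the walk hits a missing table or a non-present page. */
int translate(const struct l1_table *root, uint32_t va, uint32_t *pa)
{
    assert(root != NULL && pa != NULL);

    /* Memory access 1: top-level table, indexed by the first 10 bits. */
    const struct l2_table *l2 = root->child[va >> 22];
    if (l2 == NULL)
        return 0;

    /* Memory access 2: 2nd-level table, indexed by the next 10 bits. */
    uint32_t i2 = (va >> 12) & 0x3FFu;
    if (!l2->present[i2])
        return 0;

    /* Memory access 3 would be the read of the data at *pa itself. */
    *pa = (l2->frame[i2] << 12) | (va & 0xFFFu);
    return 1;
}
```

For example, the address 0x00403025 uses top-level index 1, 2nd-level index 3 and offset 0x025, so if that entry maps to frame 0x2A the result is 0x2A025.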

Why use multi-level page tables? • Split one large page table into many page-sized chunks – The single large table is typically 4 or 8 MB for a 32-bit address space • Advantage: less memory must be reserved for the page tables – Can swap out unused or not recently used tables • Disadvantage: increased access time on TLB miss – n+1 memory accesses for n-level page tables

Address Conversion • That 19 bit page address can be optimized in a variety of ways – Translation Look-aside Buffer – Multilevel Page Table – Inverted Page Table

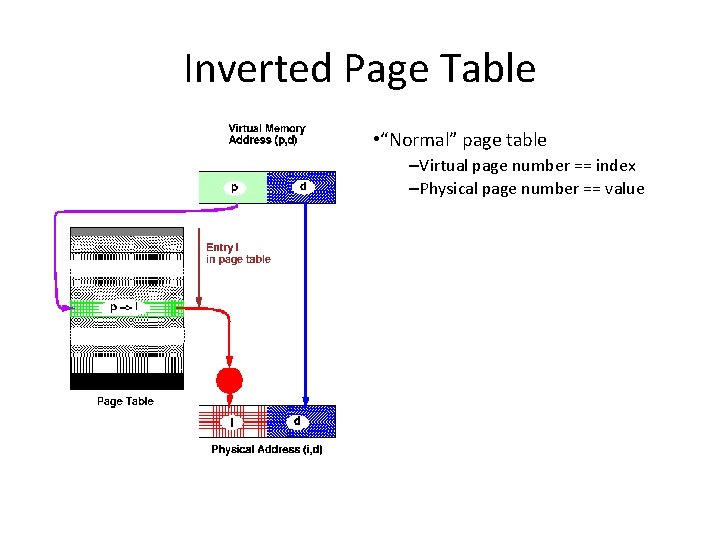

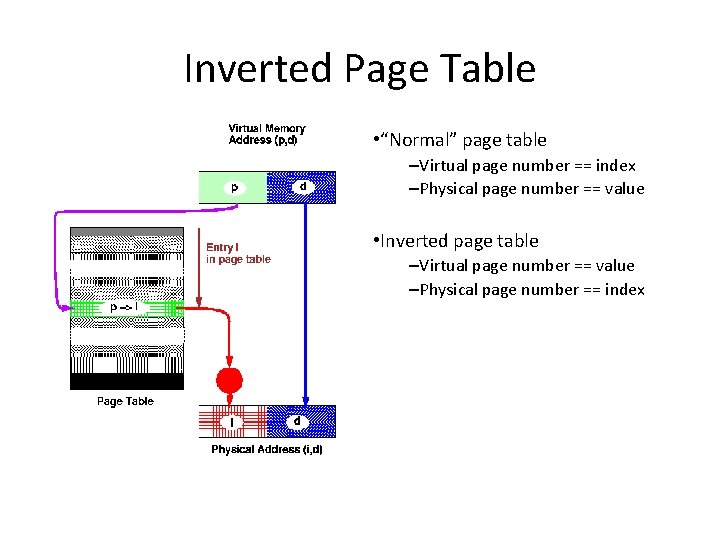

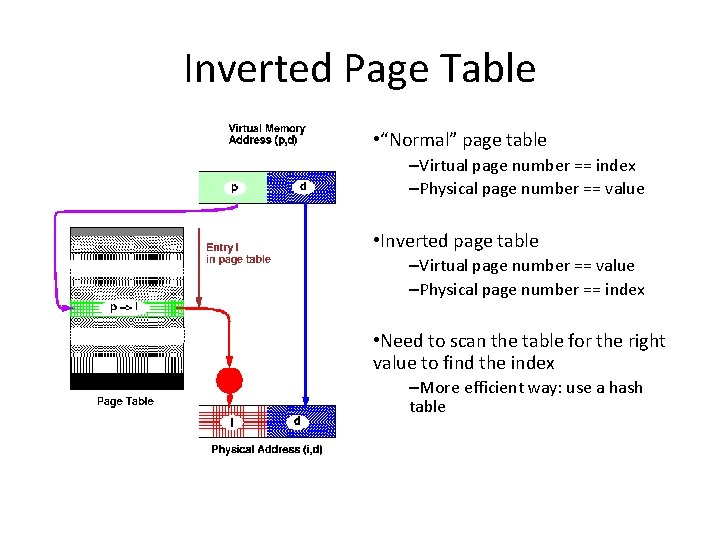

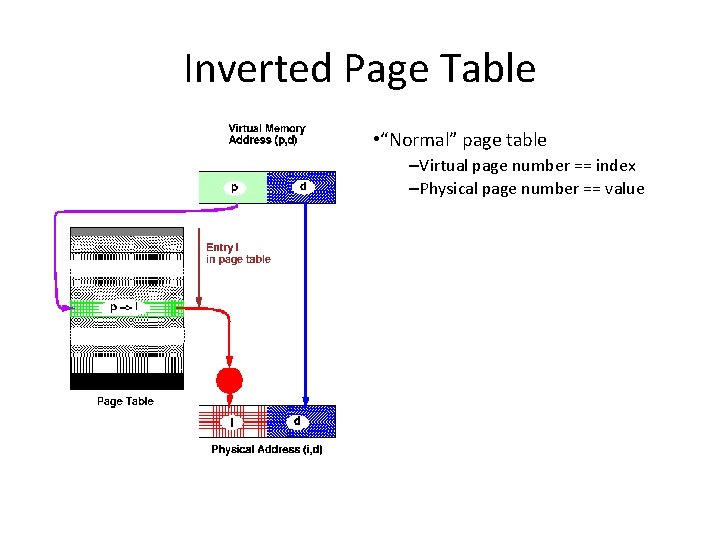

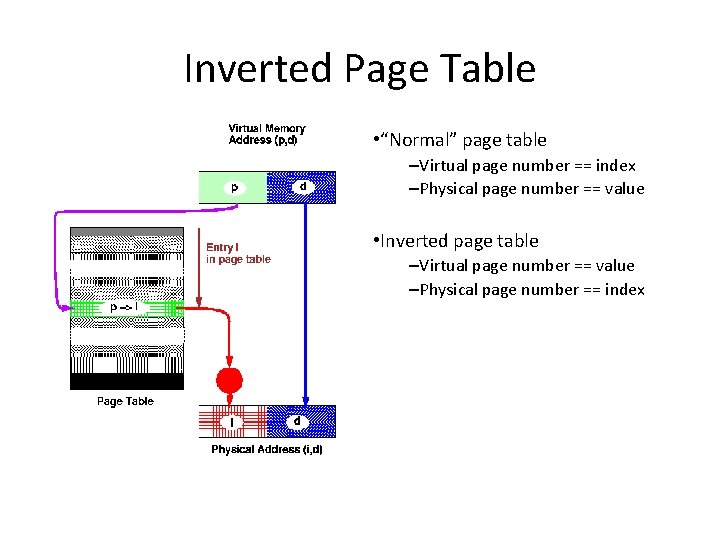

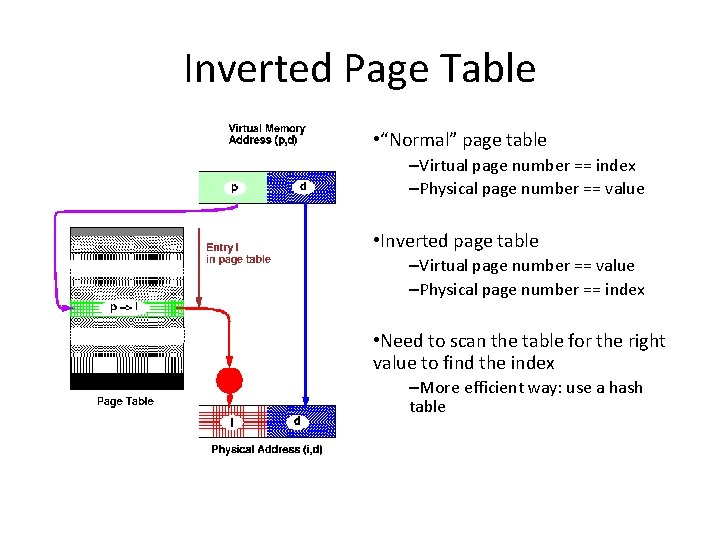

Inverted Page Table • “Normal” page table – Virtual page number == index – Physical page number == value • Inverted page table – Virtual page number == value – Physical page number == index • Need to scan the table for the right value to find the index – More efficient way: use a hash table

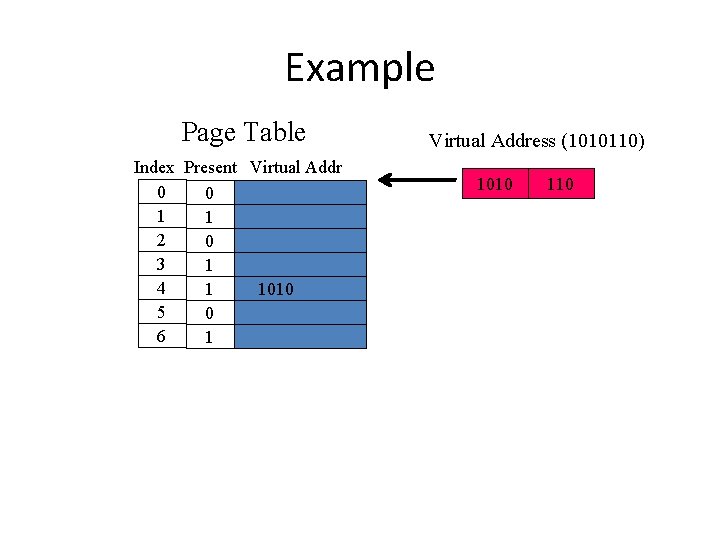

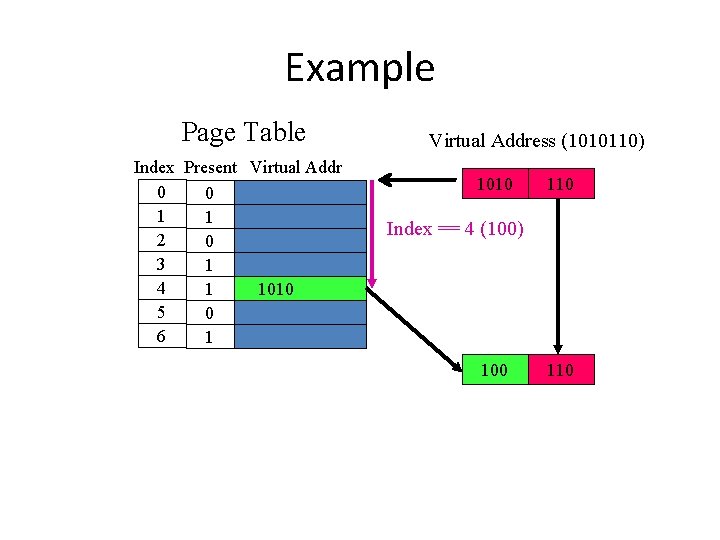

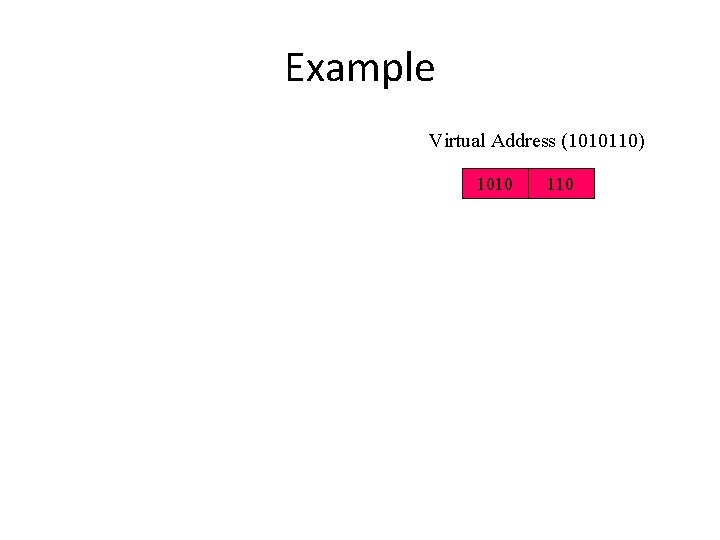

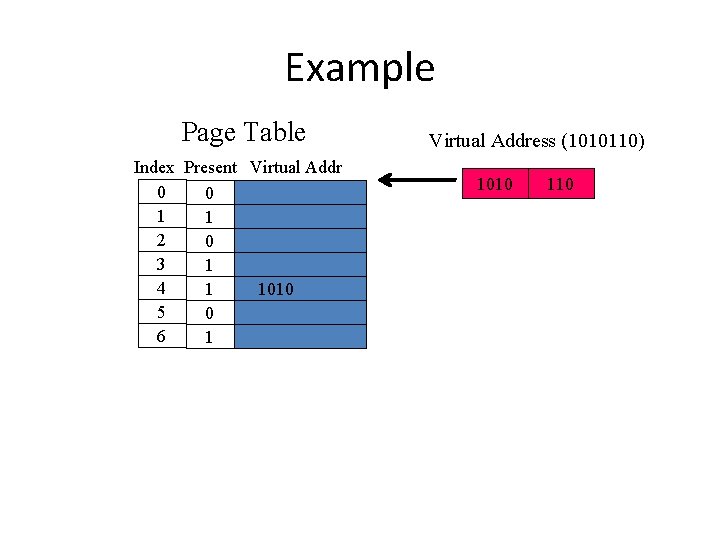

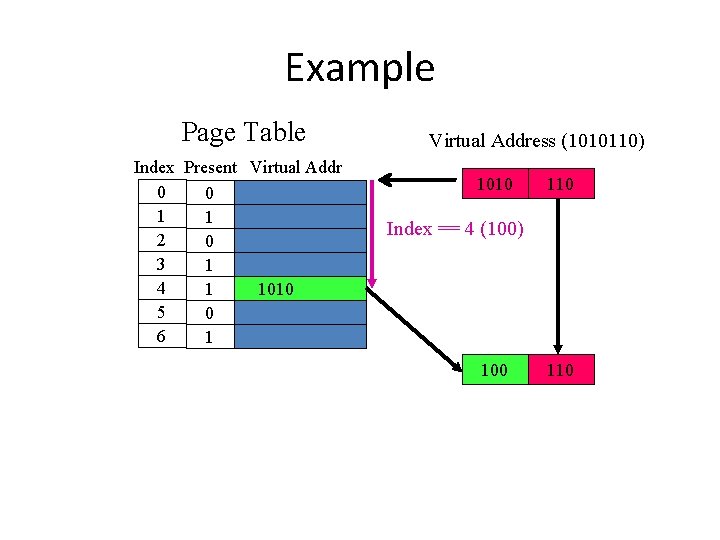

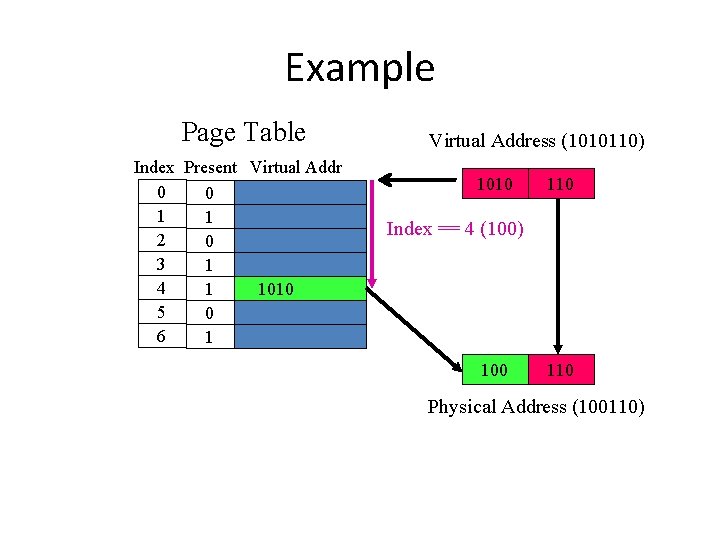

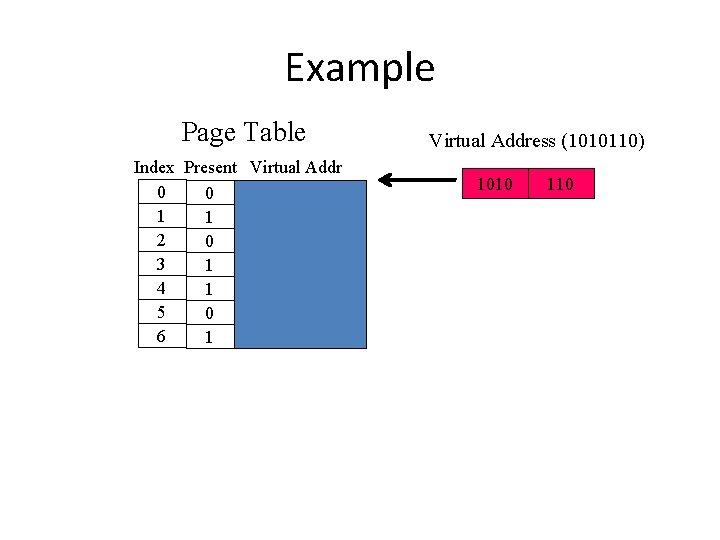

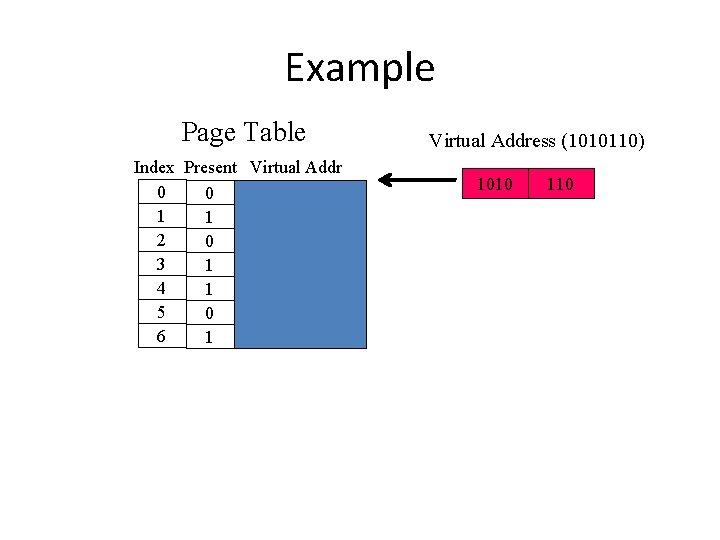

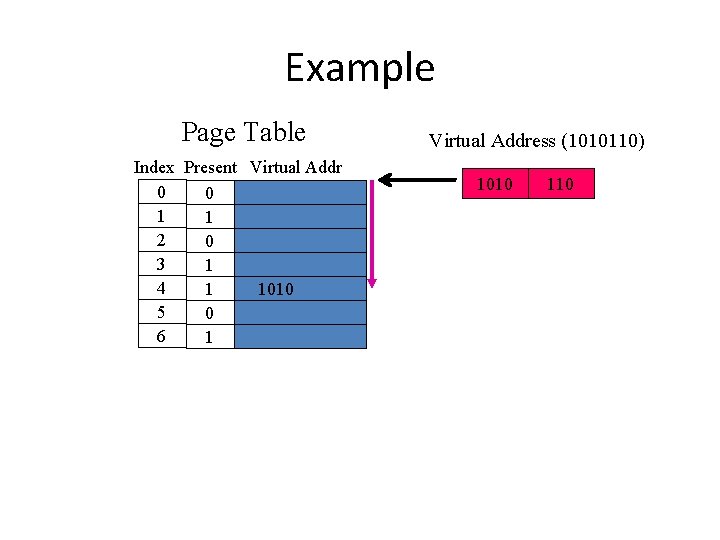

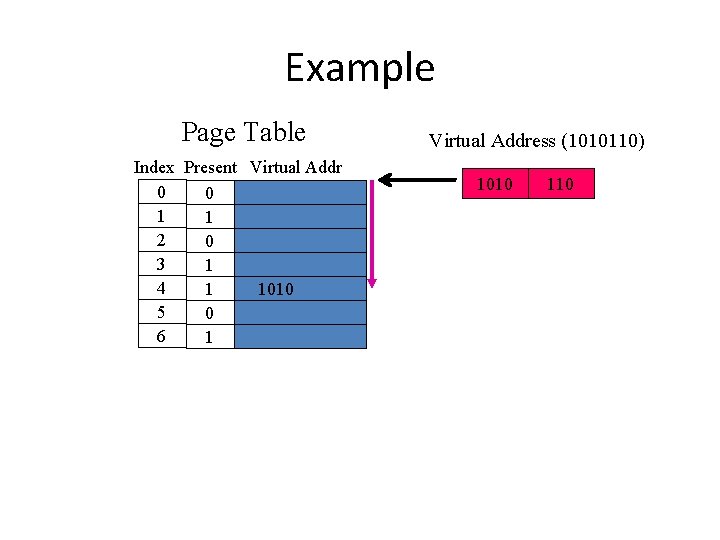

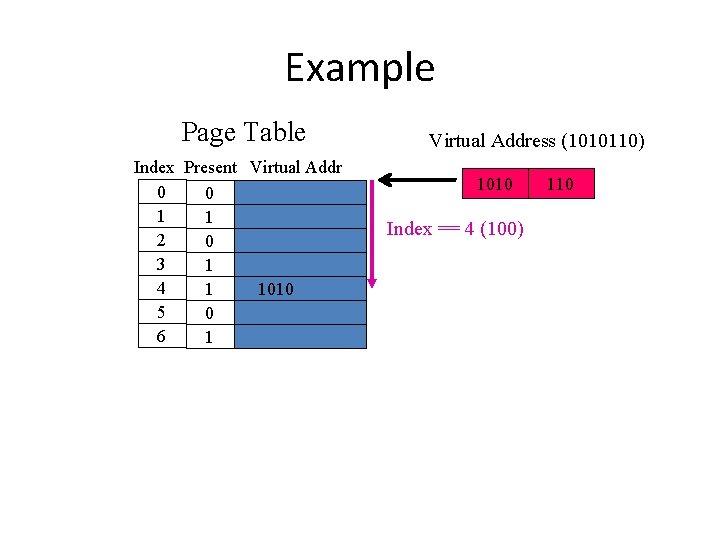

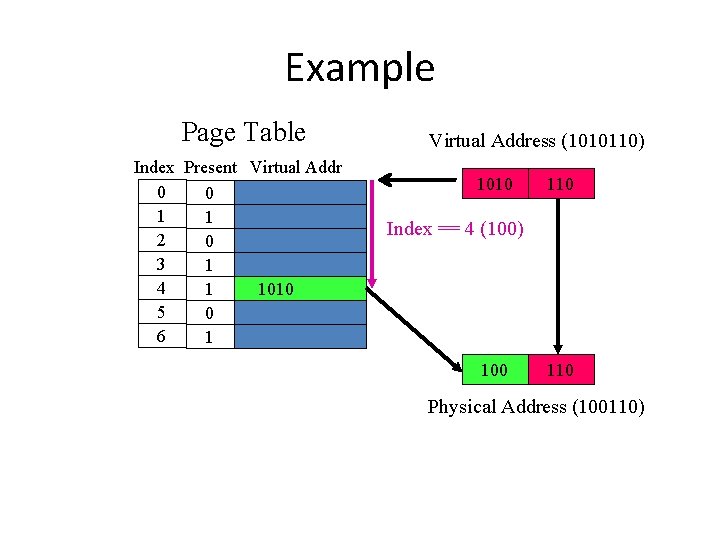

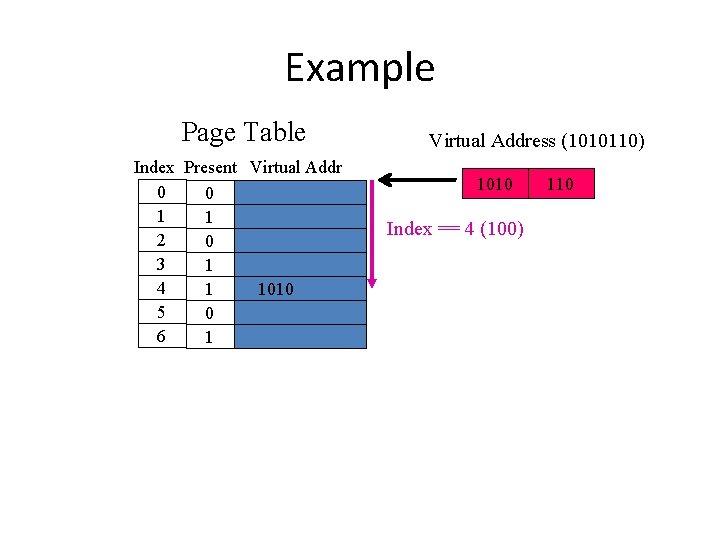

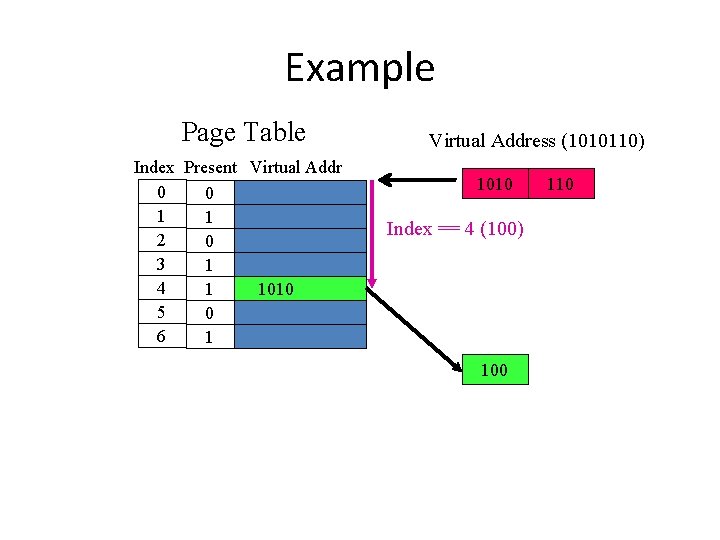

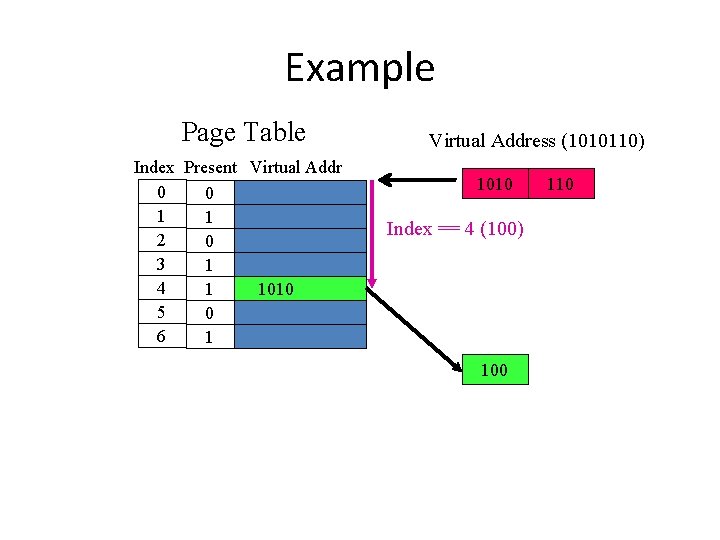

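Example • Page Table:

| Index | Present | Virtual Addr |
|-------|---------|--------------|
| 0     | 0       |              |
| 1     | 1       |              |
| 2     | 0       |              |
| 3     | 1       |              |
| 4     | 1       | 1010         |
| 5     | 0       |              |
| 6     | 1       |              |

• Virtual Address (1010110): page number 1010, offset 110 • Page number 1010 is found at Index == 4 (100) • Physical Address (100110): index 100 followed by offset 110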

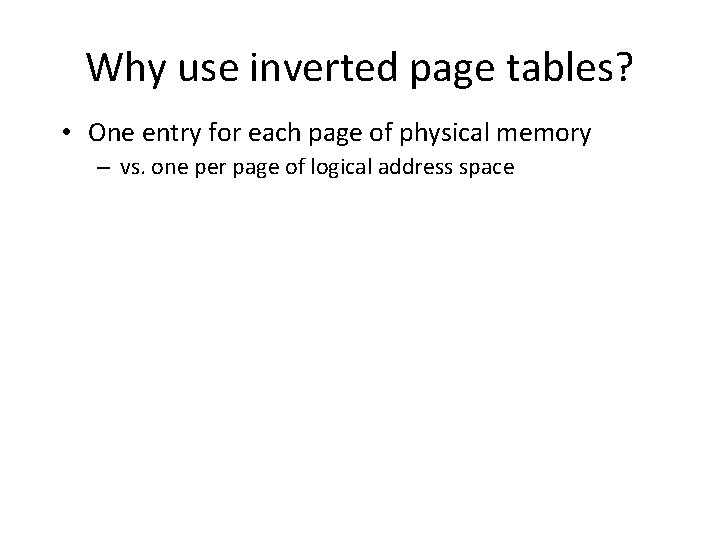

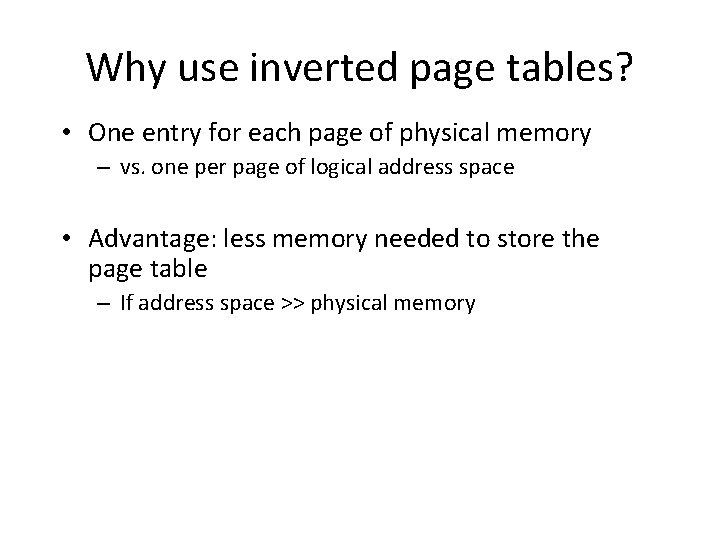

Why use inverted page tables? • One entry for each page of physical memory – vs. one per page of logical address space • Advantage: less memory needed to store the page table – If address space >> physical memory • Disadvantage: increased access time on TLB miss – Use a hash table to limit the search to one, or at most a few, extra memory accesses

Summary: Address Conversion • That 19 bit page address can be optimized in a variety of ways – Translation Look-aside Buffer • $m$ = memory cycle, $\alpha$ = hit ratio, $\epsilon$ = TLB lookup time • Effective access time (EAT): $\mathrm{EAT} = (m + \epsilon)\alpha + (2m + \epsilon)(1 - \alpha) = 2m + \epsilon - m\alpha$ – Multilevel Page Table • Similar to indirect pointers in i-nodes • Split the 19 bits into multiple sections – Inverted Page Table • Much smaller, but slower and more difficult to look up
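A quick numeric check of the effective access time can help. This sketch assumes a single-level page table, so a TLB hit costs one memory cycle plus the TLB lookup and a miss costs two memory cycles plus the lookup. The function and parameter names are hypothetical.

```c
#include <assert.h>

/* Weighted form: a hit costs (mem_cycle + tlb_time),
 * a miss costs (2 * mem_cycle + tlb_time). */
double eat_weighted(double mem_cycle, double tlb_time, double hit_ratio)
{
    assert(hit_ratio >= 0.0 && hit_ratio <= 1.0);
    return (mem_cycle + tlb_time) * hit_ratio
         + (2.0 * mem_cycle + tlb_time) * (1.0 - hit_ratio);
}

/* Simplified form obtained by expanding the expression above. */
double eat_simplified(double mem_cycle, double tlb_time, double hit_ratio)
{
    assert(hit_ratio >= 0.0 && hit_ratio <= 1.0);
    return 2.0 * mem_cycle + tlb_time - mem_cycle * hit_ratio;
}
```

With a 100 ns memory cycle, a 20 ns TLB lookup and an 80% hit ratio, both forms give 140 ns: 120 × 0.8 + 220 × 0.2 = 96 + 44.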

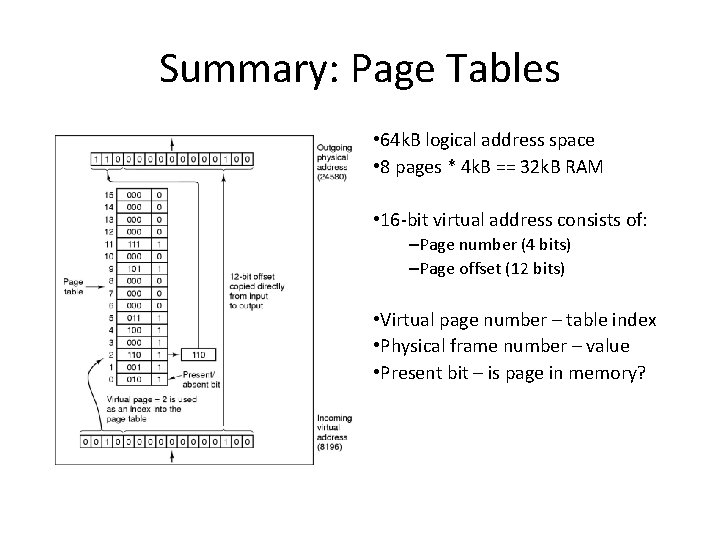

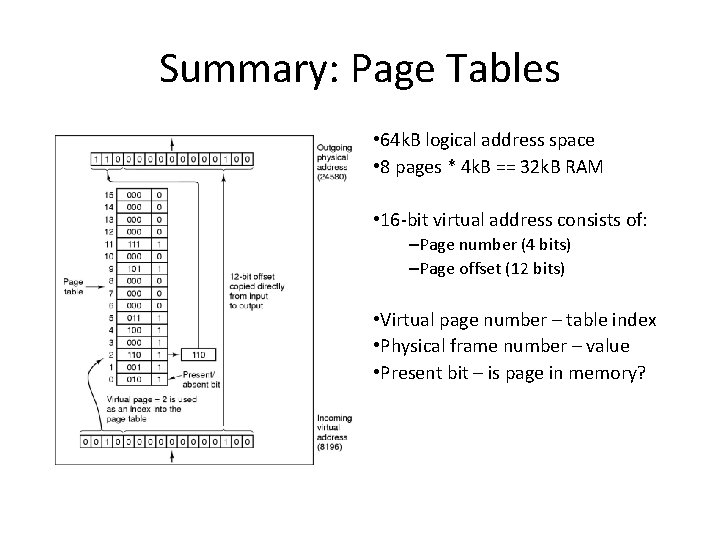

Summary: Page Tables • 64 kB logical address space • 8 pages * 4 kB == 32 kB RAM • 16-bit virtual address consists of: – Page number (4 bits) – Page offset (12 bits) • Virtual page number – table index • Physical frame number – value • Present bit – is page in memory?
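Putting the summary together, a single-level lookup for this 16-bit layout might look like the sketch below. The `pte` layout and the name `translate16` are hypothetical; a return value of -1 stands in for a page fault.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NUM_PAGES 16                        /* 4-bit virtual page number */

struct pte {
    uint8_t present;                        /* is the page in memory? */
    uint8_t frame;                          /* physical frame number, 0..7 */
};

/* Translate a 16-bit virtual address to a 15-bit physical address.
 * Returns -1 when the present bit is clear. */
int translate16(const struct pte table[NUM_PAGES], uint16_t va)
{
    unsigned vpn = va >> 12;                /* table index */
    unsigned offset = va & 0xFFFu;          /* unchanged by translation */

    assert(table != NULL);
    if (!table[vpn].present)
        return -1;
    return (int)(((unsigned)table[vpn].frame << 12) | offset);
}
```

If virtual page 2 is present in frame 6, the virtual address 0x2004 maps to the physical address 0x6004.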

Summary: Virtual Memory • RAM is expensive (but fast), disk is cheap (but slow) • Need to find a way to use the cheaper memory – Store memory that isn’t frequently used on disk – Swap pages between disk and memory as needed • Treat main memory as a cache for pages on disk