COSC 6335: Other Classification Techniques

- Slides: 22

COSC 6335: Other Classification Techniques
1. Neural Networks
2. Nearest Neighbor Classifiers
3. Support Vector Machines

09/14/2020 Introduction to Data Mining, 2nd Edition, with a few slides added by Dr. Eick

COSC 6335 NN Lecture(s) Organization
1. Video by Amplified Partners/3Blue1Brown, "What is a NN?": https://www.bing.com/videos/search?q=neural+network+video&view=detail&mid=54402D363ABB8903202F54402D363ABB8903202F&FORM=VIRE (8 million views; only the first 15:18 of this video will be shown).
2. Followed by the slides in this slideshow.
3. The last lecture in the course, “New Trends in Data Mining”, will give, among other things, a brief introduction to deep learning.

Remark: Due to the overlap with the graduate AI and graduate Machine Learning courses, only limited emphasis is placed on these classification techniques in this course. If this were a standalone course, NNs and SVMs would receive more coverage and ensemble approaches would be skipped.

Neural Networks
Lecture Notes for Chapter 4: Artificial Neural Networks
Introduction to Data Mining, 2nd Edition
by Tan, Steinbach, Karpatne, Kumar
Slides 9 and 13-23 added by Dr. Eick

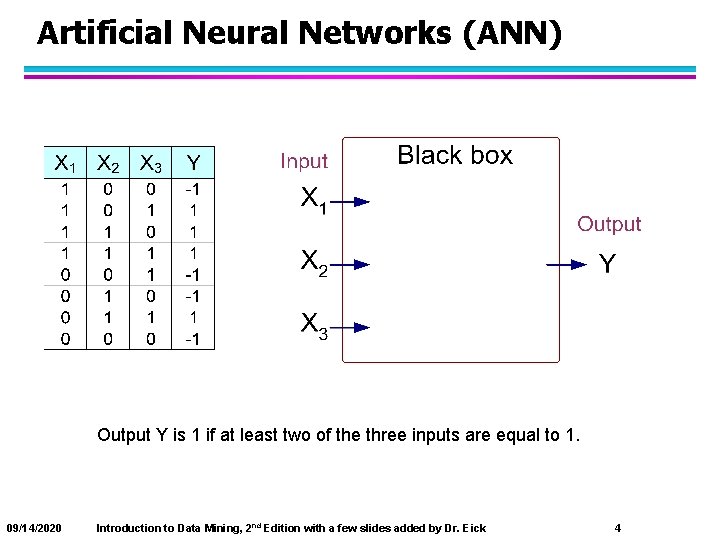

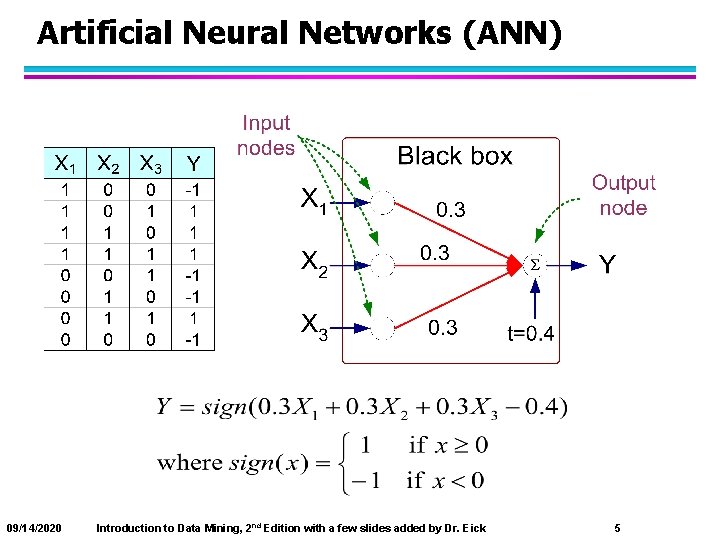

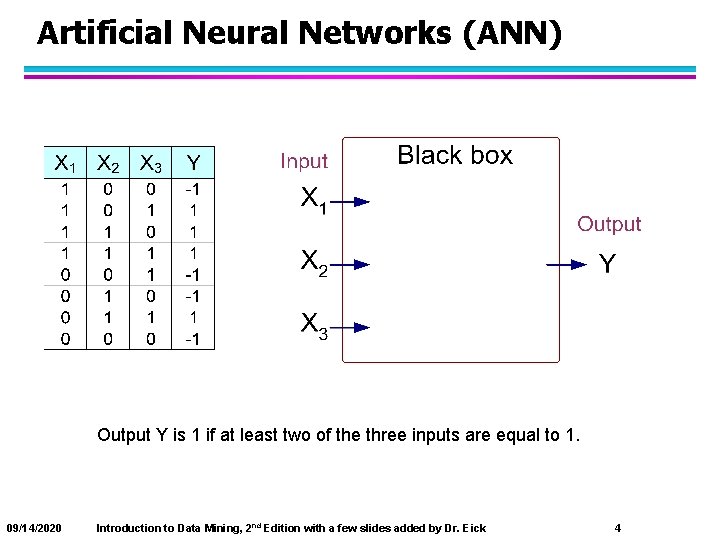

Artificial Neural Networks (ANN)
Output Y is 1 if at least two of the three inputs are equal to 1.
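To make the target concrete, the following minimal Python sketch enumerates the full truth table of this three-input function. The function name `majority_of_three` is hypothetical and used only for illustration.

```python
from itertools import product

def majority_of_three(x1: int, x2: int, x3: int) -> int:
    """Target function: Y = 1 iff at least two of the three inputs equal 1."""
    return 1 if x1 + x2 + x3 >= 2 else 0

# Enumerate all 2^3 input combinations to build the training table
table = [(x, majority_of_three(*x)) for x in product([0, 1], repeat=3)]
for inputs, y in table:
    print(inputs, "->", y)
```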

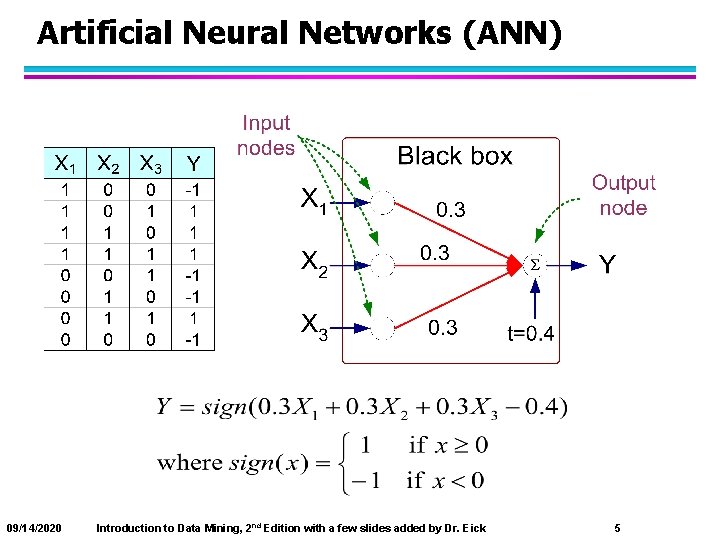

Artificial Neural Networks (ANN)

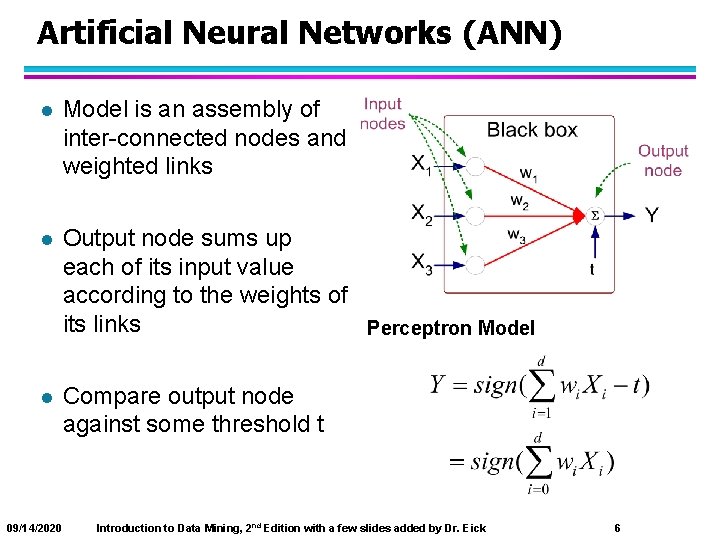

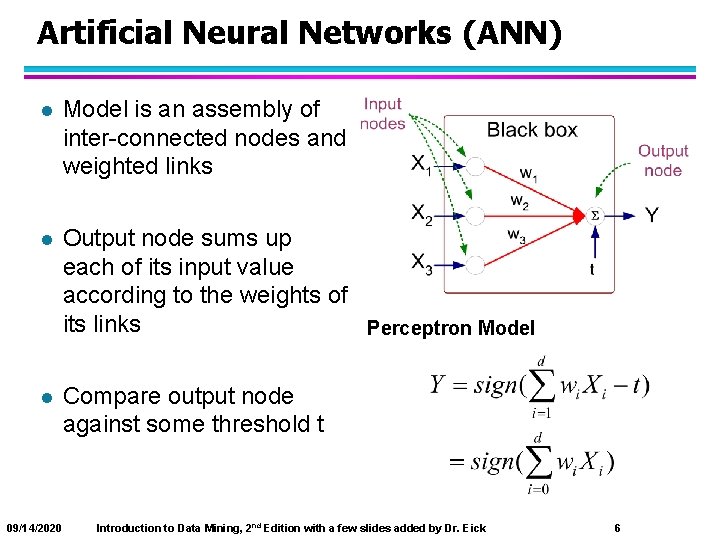

Artificial Neural Networks (ANN)
• Model is an assembly of inter-connected nodes and weighted links
• Output node sums up each of its input values according to the weights of its links
• Compare output node against some threshold t
[Figure: Perceptron Model]
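The perceptron described above can be sketched in a few lines of Python. This is an illustration under stated assumptions: the output is coded as 0/1 (rather than the ±1 of a sign function), and the weights 0.3 and threshold t = 0.4 are assumed values chosen so that the perceptron reproduces the "at least two of three inputs" example; any weights for which one active input stays below t but two exceed it would work as well.

```python
from itertools import product

def perceptron(x, w, t):
    """Perceptron model: output 1 if the weighted input sum exceeds threshold t, else 0."""
    weighted_sum = sum(wi * xi for wi, xi in zip(w, x))
    return 1 if weighted_sum - t > 0 else 0

# Assumed illustrative parameters: equal weights of 0.3 and threshold t = 0.4
w, t = (0.3, 0.3, 0.3), 0.4

for x in product([0, 1], repeat=3):
    # One active input gives 0.3 < 0.4; two or three give 0.6 or 0.9 > 0.4
    print(x, perceptron(x, w, t))
```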

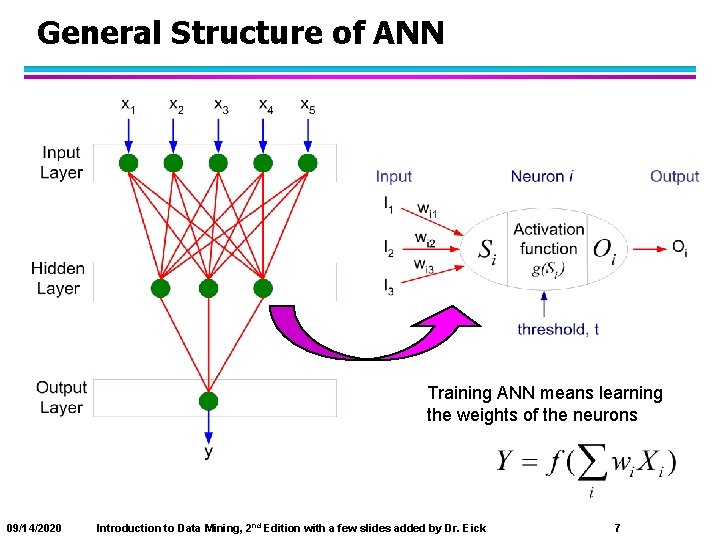

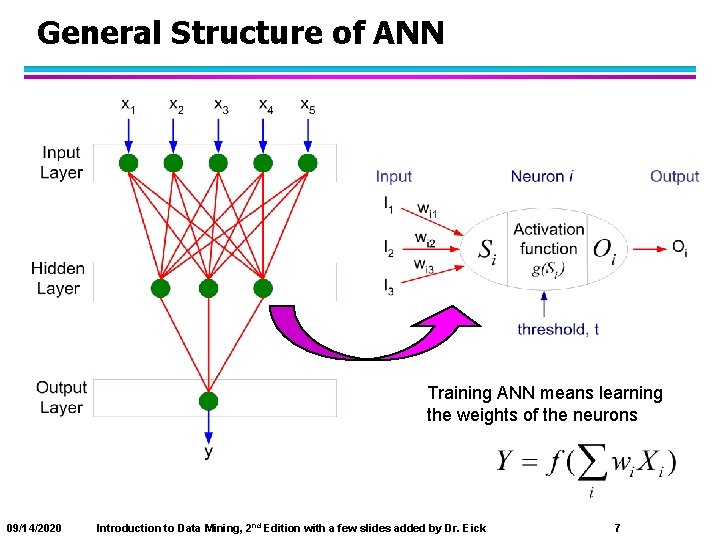

General Structure of ANN
Training an ANN means learning the weights of the neurons.

Multilayer Neural Network
• Hidden layers: intermediary layers between the input and output layers
• More general activation functions (sigmoid, linear, etc.)
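Why hidden layers matter can be seen on XOR, which is not linearly separable and therefore cannot be computed by a single perceptron. The sketch below is a forward pass through one hidden layer of sigmoid units; the weights are hand-picked, hypothetical values (not learned), chosen so that hidden node 1 behaves like OR, hidden node 2 like AND, and the output like "OR and not AND".

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum per node, then the activation function."""
    return [activation(sum(w * x for w, x in zip(w_row, inputs)) + b)
            for w_row, b in zip(weights, biases)]

# Hypothetical hand-picked weights: hidden node 1 ~ OR, hidden node 2 ~ AND
W_hidden = [[20.0, 20.0], [20.0, 20.0]]
b_hidden = [-10.0, -30.0]
# Output node ~ "OR and not AND", i.e. XOR
W_out = [[20.0, -20.0]]
b_out = [-10.0]

def forward(x):
    h = layer(x, W_hidden, b_hidden, sigmoid)   # hidden layer
    return layer(h, W_out, b_out, sigmoid)[0]   # single output node

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, round(forward(x)))
```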

Neural Network Terminology
• A neural network is composed of a number of units (nodes) that are connected by links. Each link has a weight associated with it. Each unit has an activation level and a means to compute the activation level at the next step in time.
• Most neural networks are composed of a linear component, called the input function, and a non-linear component, called the activation function. Popular activation functions include the step function, the sign function, and the sigmoid function.
• The architecture of a neural network determines how units are connected and which activation functions are used for the network computations. Architectures are subdivided into feed-forward and recurrent networks. Moreover, single-layer and multi-layer neural networks (which contain hidden units) are distinguished.
• Learning in the context of neural networks centers on finding “good” weights for a given architecture so that the error in performing a particular task is minimized. Most approaches learn a function from a set of training examples and use hill-climbing, typically steepest-descent hill-climbing, to find the weight values that lead to the “lowest error”.
• Loss functions compute a NN’s error on a training set; NN training searches for the weights that minimize this function.
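The decomposition of a unit into a linear input function and a non-linear activation function, together with a loss function, can be sketched as follows. Assumptions: the sum of squared errors is used as one common example of a loss function, and `step` and `sign` return 1 at exactly 0 by convention; the function names are hypothetical.

```python
import math

# Linear component: the input function (weighted sum of incoming activations)
def input_function(weights, activations):
    return sum(w * a for w, a in zip(weights, activations))

# Non-linear component: the three activation functions named above
def step(z, threshold=0.0):
    return 1 if z >= threshold else 0

def sign(z):
    return 1 if z >= 0 else -1

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def unit_output(weights, activations, activation=sigmoid):
    """A unit's activation level = activation(input_function(...))."""
    return activation(input_function(weights, activations))

# Loss function: sum of squared errors over a training set (one common choice)
def sse_loss(targets, outputs):
    return sum((t - o) ** 2 for t, o in zip(targets, outputs))

print(unit_output([0.5, -1.0], [1.0, 1.0], step))  # weighted sum -0.5
print(sse_loss([1, 0], [0.5, 0.5]))
```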

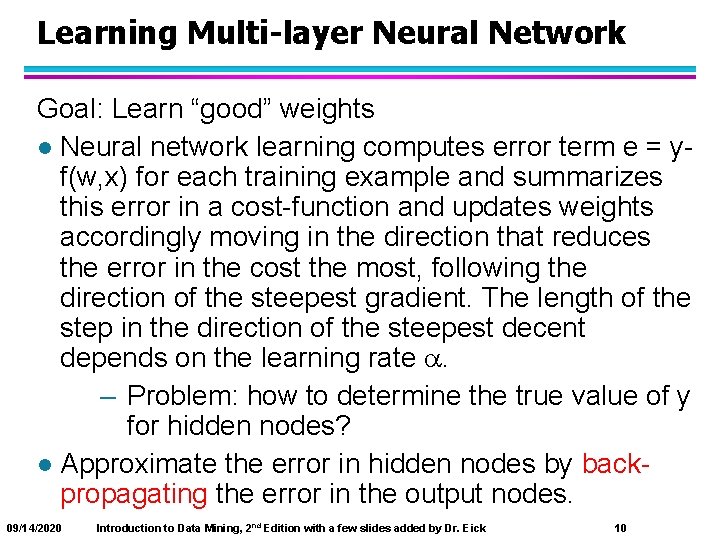

Learning Multi-layer Neural Network
Goal: Learn “good” weights
• Neural network learning computes the error term $e = y - f(w, x)$ for each training example, summarizes these errors in a cost function, and updates the weights by moving in the direction that reduces the cost the most, i.e., against the steepest gradient. The length of the step in the direction of steepest descent depends on the learning rate.
   – Problem: how to determine the true value of y for hidden nodes?
• Approximate the error in hidden nodes by backpropagating the error in the output nodes.
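A minimal sketch of this learning loop, assuming the simplest possible model, a single linear unit $f(w, x) = w \cdot x$, and a squared-error cost $\frac{1}{2}\sum e^2$ (neither choice is fixed by the slide). The toy data set is hypothetical and generated by $y = 2x_1 - x_2$, so the weights should approach (2, -1).

```python
def f(w, x):
    """Model output; assumed here to be a single linear unit f(w, x) = w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))

def cost(w, data):
    """Cost function summarizing the per-example errors e = y - f(w, x)."""
    return 0.5 * sum((y - f(w, x)) ** 2 for x, y in data)

def gradient_step(w, data, learning_rate):
    # dCost/dw_j = -sum(e * x_j); move against the gradient (steepest descent)
    grad = [0.0] * len(w)
    for x, y in data:
        e = y - f(w, x)
        for j, xj in enumerate(x):
            grad[j] -= e * xj
    return [wj - learning_rate * gj for wj, gj in zip(w, grad)]

# Hypothetical toy data generated by y = 2*x1 - x2
data = [((1.0, 0.0), 2.0), ((0.0, 1.0), -1.0), ((1.0, 1.0), 1.0)]
w = [0.0, 0.0]
for _ in range(200):
    w = gradient_step(w, data, learning_rate=0.1)
print(w, cost(w, data))  # w approaches [2, -1]
```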

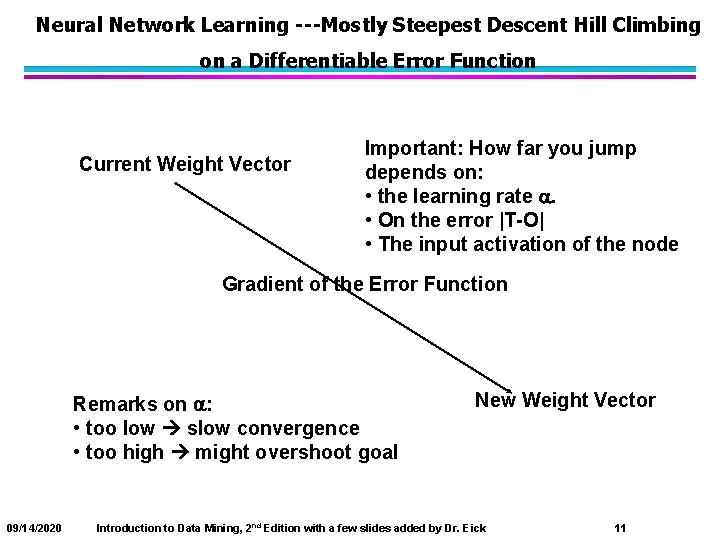

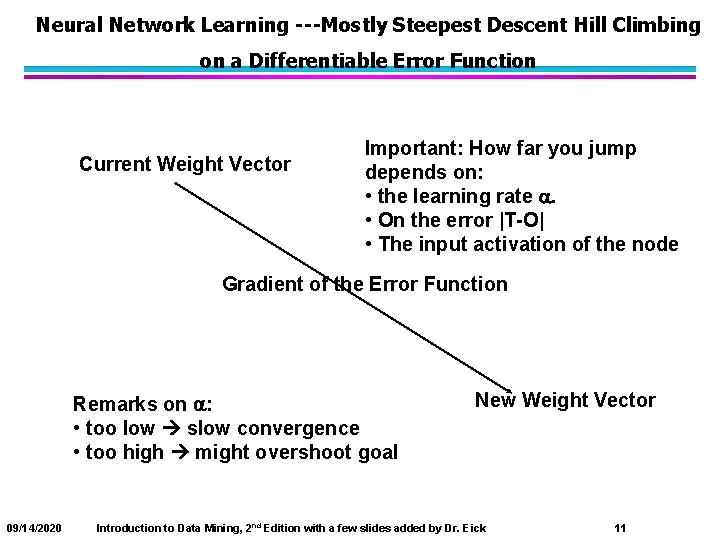

Neural Network Learning: Mostly Steepest Descent Hill Climbing on a Differentiable Error Function
[Figure: current weight vector, gradient of the error function, new weight vector]
Important: How far you jump depends on:
• the learning rate α
• the error |T - O|
• the input activation of the node
Remarks on α:
• too low: slow convergence
• too high: might overshoot the goal
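The effect of the learning rate can be seen on a one-weight toy error function. The sketch below assumes $E(w) = w^2$ (gradient $2w$, minimum at 0) and runs 20 gradient steps for three illustrative learning rates: one too low, one reasonable, and one too high.

```python
def descend(learning_rate, w0=1.0, steps=20):
    """Gradient descent on E(w) = w**2 (gradient 2w); returns the weight trajectory."""
    w = w0
    path = [w]
    for _ in range(steps):
        w = w - learning_rate * 2 * w
        path.append(w)
    return path

for lr in (0.01, 0.4, 1.1):
    path = descend(lr)
    print(f"learning rate {lr}: final w = {path[-1]:.4f}")
```

With 0.01 the weight is still far from 0 after 20 steps (slow convergence); with 1.1 every step jumps past 0 and lands farther away (overshooting).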

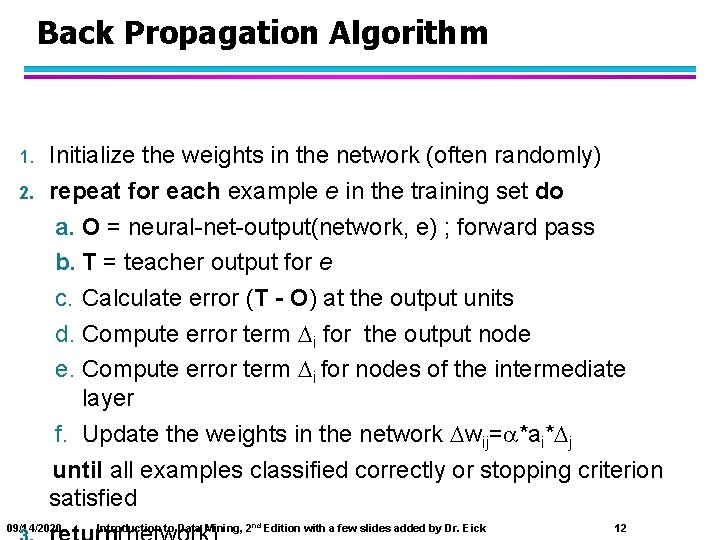

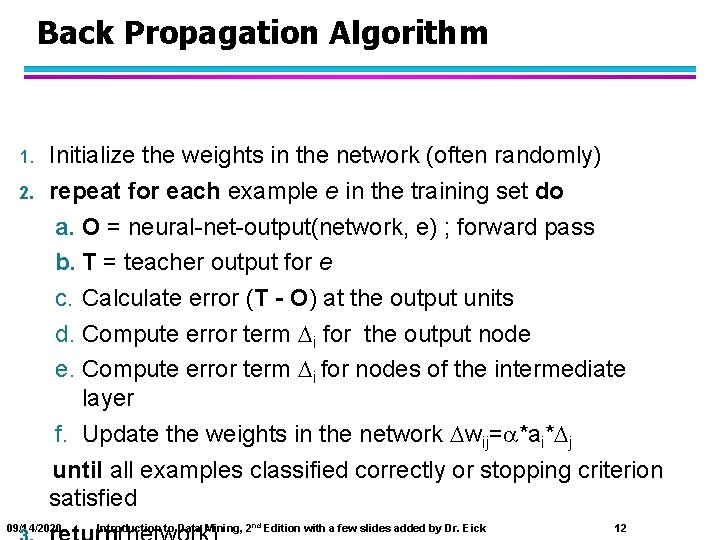

Back Propagation Algorithm
1. Initialize the weights in the network (often randomly)
2. repeat
   for each example e in the training set do
   a. O = neural-net-output(network, e) ; forward pass
   b. T = teacher output for e
   c. Calculate the error (T - O) at the output units
   d. Compute the error term $\Delta_i$ for the output node
   e. Compute the error terms $\Delta_j$ for the nodes of the intermediate layer
   f. Update the weights in the network: $\Delta w_{ij} = \alpha \cdot a_i \cdot \Delta_j$
   until all examples are classified correctly or a stopping criterion is satisfied
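The steps above can be sketched in plain Python for a small network with one hidden layer. Assumptions: sigmoid activations everywhere (so $g'(z) = o(1 - o)$), a bias weight per node with its input fixed at 1, and a maximum number of epochs as the stopping criterion; the class and function names are hypothetical. The training data is the "at least two of three inputs" example from earlier in the slides.

```python
import math
import random
from itertools import product

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TwoLayerNet:
    """Hypothetical minimal network: n_in inputs -> n_hidden sigmoid units -> 1 sigmoid output.
    The last weight in each row is a bias whose input is fixed at 1."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        # 1. Initialize the weights (randomly)
        self.W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
        self.W2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]

    def forward(self, x):
        a_in = list(x) + [1.0]
        a_hid = [sigmoid(sum(w * a for w, a in zip(row, a_in))) for row in self.W1] + [1.0]
        o = sigmoid(sum(w * a for w, a in zip(self.W2, a_hid)))
        return a_in, a_hid, o

    def backprop_step(self, x, t, alpha):
        a_in, a_hid, o = self.forward(x)            # a. forward pass (b. t = teacher output)
        delta_out = o * (1 - o) * (t - o)           # c./d. g'(z) * (T - O), sigmoid' = o(1 - o)
        delta_hid = [a_hid[k] * (1 - a_hid[k]) * self.W2[k] * delta_out
                     for k in range(len(self.W1))]  # e. error terms of the hidden nodes (old weights)
        for k, a in enumerate(a_hid):               # f. delta_w_ij = alpha * a_i * Delta_j
            self.W2[k] += alpha * a * delta_out
        for k, row in enumerate(self.W1):
            for i, a in enumerate(a_in):
                row[i] += alpha * a * delta_hid[k]

def train(net, examples, alpha=0.5, max_epochs=5000):
    for epoch in range(1, max_epochs + 1):          # 2. repeat ...
        for x, t in examples:                       #    for each example e
            net.backprop_step(x, t, alpha)
        if all(round(net.forward(x)[2]) == t for x, t in examples):
            return epoch                            # all examples classified correctly
    return max_epochs                               # stopping criterion: epoch budget

# Usage: the "at least two of three inputs" function as training data
examples = [(x, 1 if sum(x) >= 2 else 0) for x in product([0, 1], repeat=3)]
net = TwoLayerNet(n_in=3, n_hidden=2)
epochs = train(net, examples)
print("epochs used:", epochs)
```

Per-example (online) updates mirror the "for each example e" loop on the slide; a batch variant would accumulate the weight changes over all examples before applying them.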

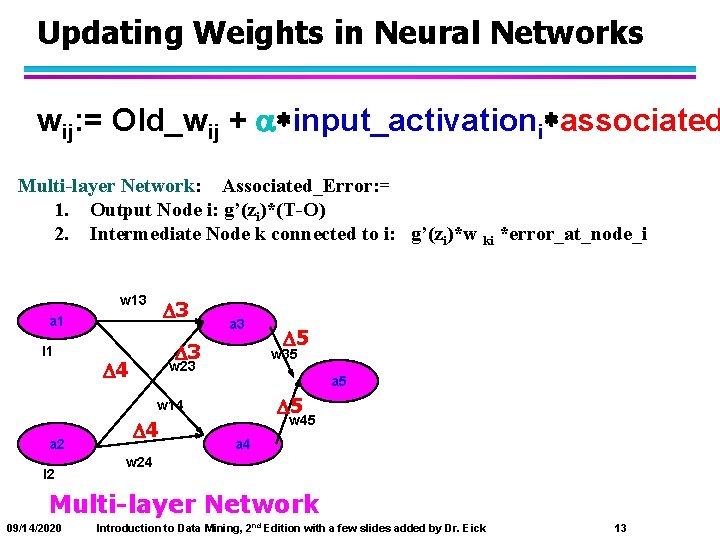

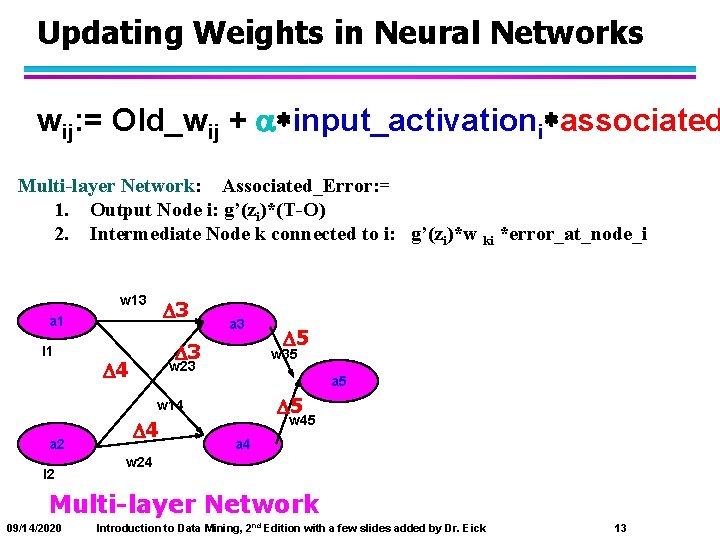

Updating Weights in Neural Networks
$w_{ij} := w_{ij} + \alpha \cdot a_i \cdot \Delta_j$ (new weight = old weight + learning rate × input activation of node i × associated error term of node j)
Multi-layer network, associated error term:
1. Output node i: $\Delta_i = g'(z_i)\,(T - O)$
2. Intermediate node k connected to output nodes i: $\Delta_k = g'(z_k) \sum_i w_{ki}\, \Delta_i$
[Figure: multi-layer network with input nodes I1 and I2 (activations a1, a2), hidden nodes 3 and 4 (activations a3, a4; error terms Δ3, Δ4), output node 5 (activation a5; error term Δ5), and weights w13, w14, w23, w24, w35, w45]
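These error terms can be checked numerically on the 2-2-1 network from the figure. The sketch assumes sigmoid activations, no bias weights (as drawn), the error function $E = \frac{1}{2}(T - O)^2$, and hypothetical values for the weights and inputs. Under these assumptions the update rule implies $-\partial E / \partial w_{ij} = a_i \Delta_j$, which the code compares against a central finite-difference estimate.

```python
import math

def g(z):
    return 1.0 / (1.0 + math.exp(-z))

def g_prime(z):
    return g(z) * (1.0 - g(z))

# Hypothetical weights and inputs for the 2-2-1 network in the figure
w = {"13": 0.1, "14": -0.2, "23": 0.4, "24": 0.3, "35": 0.5, "45": -0.6}
I1, I2, T = 1.0, 0.5, 1.0

def forward(w):
    z3 = w["13"] * I1 + w["23"] * I2
    z4 = w["14"] * I1 + w["24"] * I2
    a3, a4 = g(z3), g(z4)
    z5 = w["35"] * a3 + w["45"] * a4
    return z3, z4, z5, a3, a4, g(z5)

def error(w):
    return 0.5 * (T - forward(w)[5]) ** 2

z3, z4, z5, a3, a4, O = forward(w)
D5 = g_prime(z5) * (T - O)          # output node: g'(z_5) * (T - O)
D3 = g_prime(z3) * w["35"] * D5     # hidden node 3: g'(z_3) * w_35 * Delta_5
D4 = g_prime(z4) * w["45"] * D5     # hidden node 4: g'(z_4) * w_45 * Delta_5

# a_i * Delta_j for every weight w_ij (a_1 = I1, a_2 = I2 for the input nodes)
predicted = {"13": I1 * D3, "23": I2 * D3, "14": I1 * D4, "24": I2 * D4,
             "35": a3 * D5, "45": a4 * D5}

# Central finite-difference estimate of -dE/dw for comparison
eps = 1e-6
for key in w:
    w_plus, w_minus = dict(w), dict(w)
    w_plus[key] += eps
    w_minus[key] -= eps
    numeric = -(error(w_plus) - error(w_minus)) / (2 * eps)
    print(key, round(predicted[key], 8), round(numeric, 8))
```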

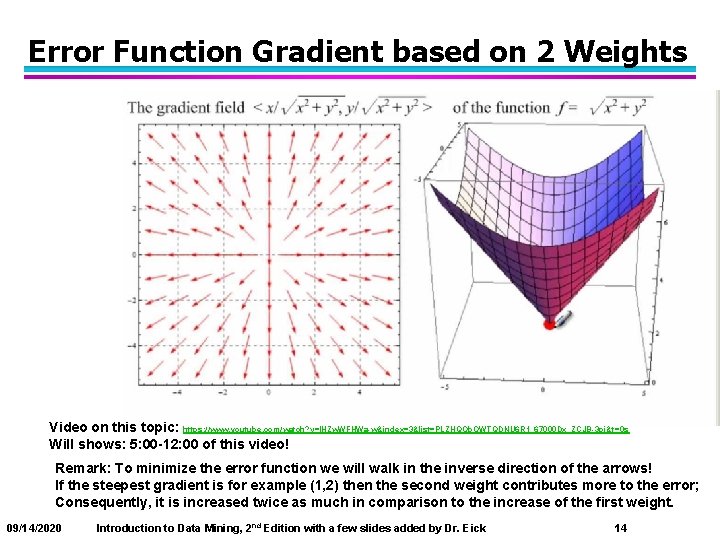

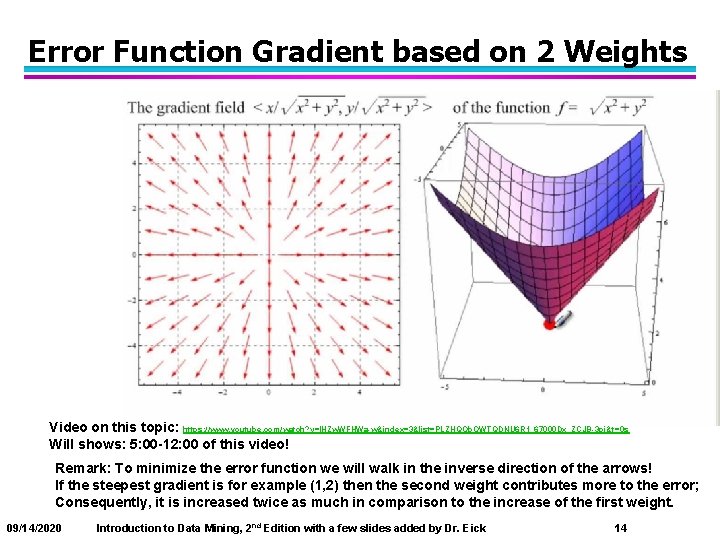

Error Function Gradient based on 2 Weights
Video on this topic: https://www.youtube.com/watch?v=IHZwWFHWa-w&index=3&list=PLZHQObOWTQDNU6R1_67000Dx_ZCJB-3pi&t=0s (we will show 5:00-12:00 of this video).
Remark: To minimize the error function, we walk in the direction opposite to the arrows. If the steepest gradient is, for example, (1, 2), then the second weight contributes more to the error; consequently, it is decreased twice as much as the first weight.
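A single steepest-descent step with two weights illustrates the remark. The sketch assumes a hypothetical error function $E(w_1, w_2) = \frac{1}{2}w_1^2 + \frac{1}{2}w_2^2$, whose gradient at the point (1, 2) is exactly (1, 2), and an assumed learning rate α = 0.1.

```python
def grad_E(w1, w2):
    """Gradient of a hypothetical error function E(w1, w2) = 0.5*w1**2 + 0.5*w2**2."""
    return (w1, w2)

alpha = 0.1
w1, w2 = 1.0, 2.0
g1, g2 = grad_E(w1, w2)          # steepest gradient at this point: (1, 2)
w1_new = w1 - alpha * g1         # walk against the gradient
w2_new = w2 - alpha * g2
print(w1_new - w1, w2_new - w2)  # both decrease; the second weight changes twice as much
```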

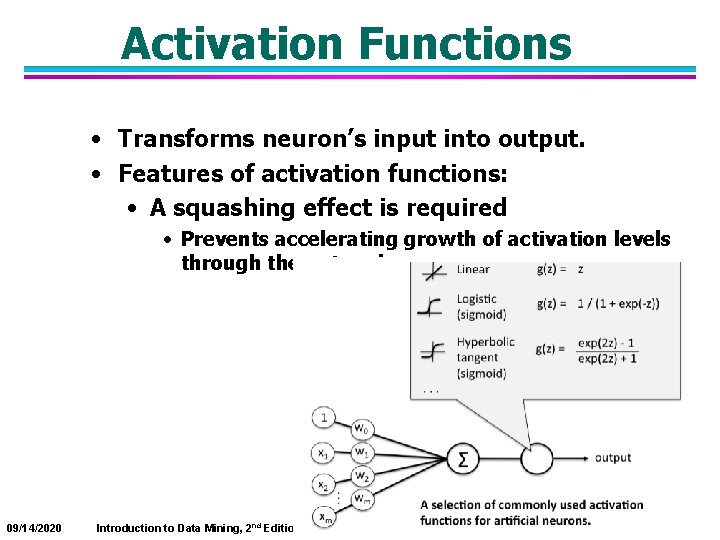

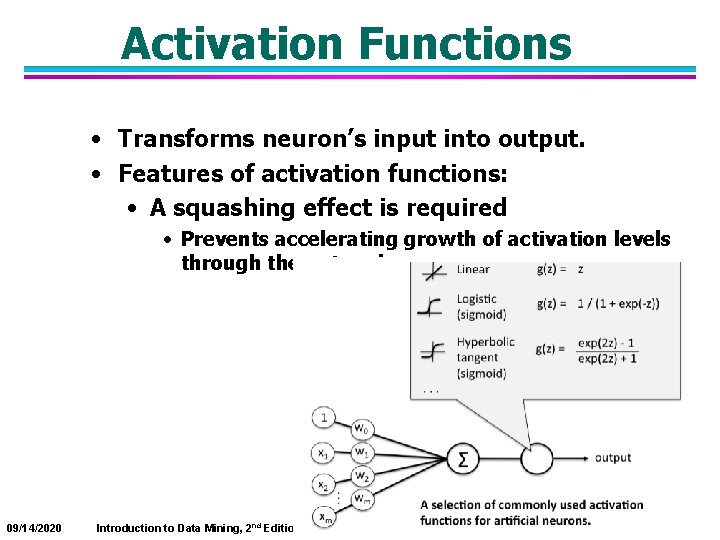

Activation Functions
• Transforms a neuron’s input into its output.
• Features of activation functions:
   • A squashing effect is required
   • Prevents accelerating growth of activation levels through the network.
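The squashing requirement can be illustrated with a chain of single-unit layers. The sketch assumes every link has weight 2 and compares an identity activation (no squashing) with the sigmoid; the helper `propagate` is hypothetical.

```python
import math

def propagate(x, layers, weight, activation):
    """Feed x through a chain of single-unit layers, each link having the same weight."""
    for _ in range(layers):
        x = activation(weight * x)
    return x

def identity(z):
    return z                            # no squashing

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))   # squashes into (0, 1)

print(propagate(1.0, 10, 2.0, identity))  # 1024.0: grows as 2**10
print(propagate(1.0, 10, 2.0, sigmoid))   # stays inside (0, 1)
```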

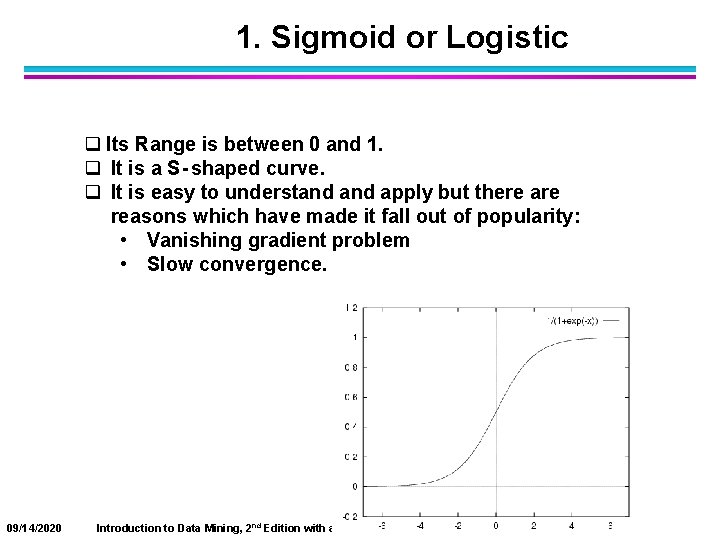

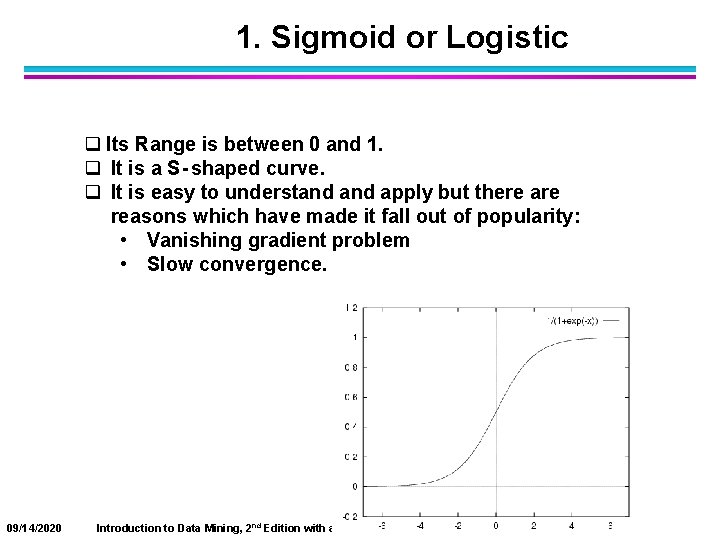

1. Sigmoid or Logistic
• Its range is between 0 and 1.
• It is an S-shaped curve.
• It is easy to understand and apply, but it has fallen out of popularity for the following reasons:
   – Vanishing gradient problem
   – Slow convergence
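The vanishing gradient problem follows from the sigmoid's derivative, $\sigma'(z) = \sigma(z)(1 - \sigma(z))$, which is at most 0.25 and nearly 0 for large $|z|$. The short sketch below prints both, plus the best-case product of ten such factors (an assumed depth of 10 layers), since backpropagation multiplies one derivative per layer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)   # maximum value 0.25, reached at z = 0

for z in (-10, -2, 0, 2, 10):
    print(z, round(sigmoid(z), 4), round(sigmoid_prime(z), 6))

# Even in the best case (0.25 per layer) the factor shrinks geometrically with depth
print(0.25 ** 10)
```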

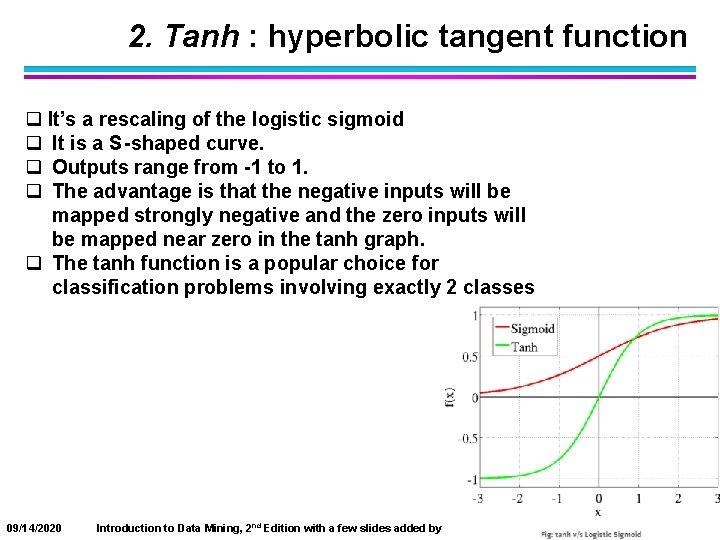

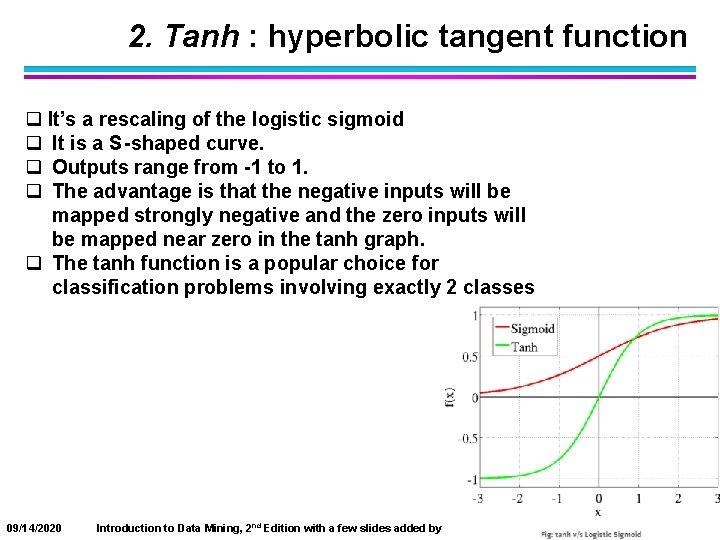

2. Tanh: hyperbolic tangent function
• It is a rescaling of the logistic sigmoid.
• It is an S-shaped curve.
• Outputs range from -1 to 1.
• The advantage is that negative inputs are mapped strongly negative and inputs near zero are mapped near zero (tanh(0) = 0).
• The tanh function is a popular choice for classification problems involving exactly 2 classes.
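The "rescaling of the logistic sigmoid" can be made precise by the identity $\tanh(z) = 2\sigma(2z) - 1$. The sketch below checks it against Python's `math.tanh` at a few sample points.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def tanh_via_sigmoid(z):
    # tanh(z) = 2*sigmoid(2z) - 1: the logistic curve stretched to the range (-1, 1)
    return 2.0 * sigmoid(2.0 * z) - 1.0

for z in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(z, round(math.tanh(z), 6), round(tanh_via_sigmoid(z), 6))
```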

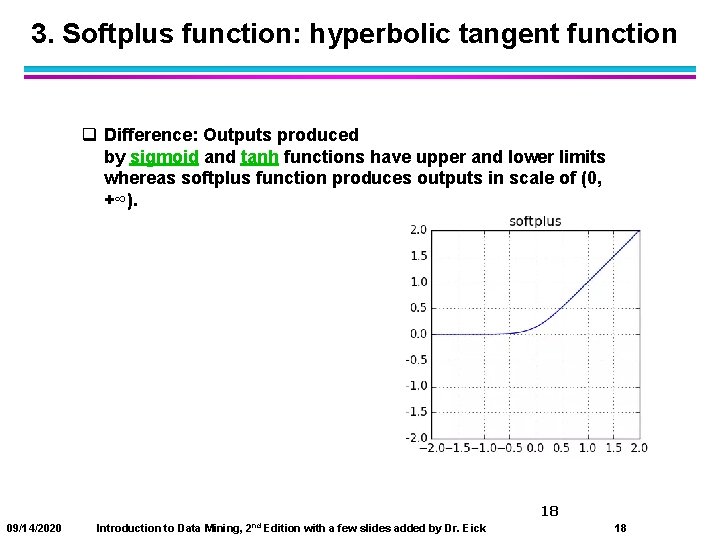

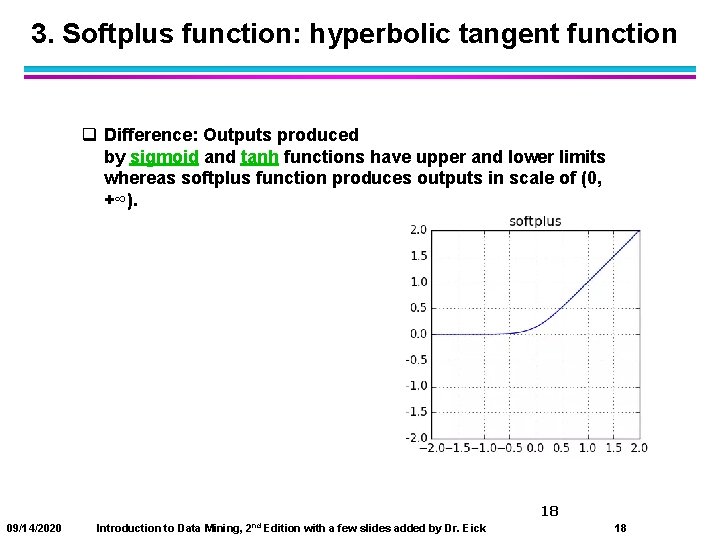

3. Softplus function: $f(x) = \ln(1 + e^{x})$
• Difference: outputs produced by the sigmoid and tanh functions have upper and lower limits, whereas the softplus function produces outputs in the range (0, +∞).
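A minimal softplus sketch. It uses the algebraically equivalent form $\max(z, 0) + \ln(1 + e^{-|z|})$ to avoid overflow for large inputs (an implementation choice, not part of the slide). A useful fact shown in the test is that the derivative of softplus is the logistic sigmoid.

```python
import math

def softplus(z):
    # ln(1 + e^z), written stably as max(z, 0) + log1p(e^{-|z|}) to avoid overflow
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

for z in (-20.0, -1.0, 0.0, 1.0, 20.0):
    print(z, softplus(z))   # always > 0, no upper limit (close to z for large z)
```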

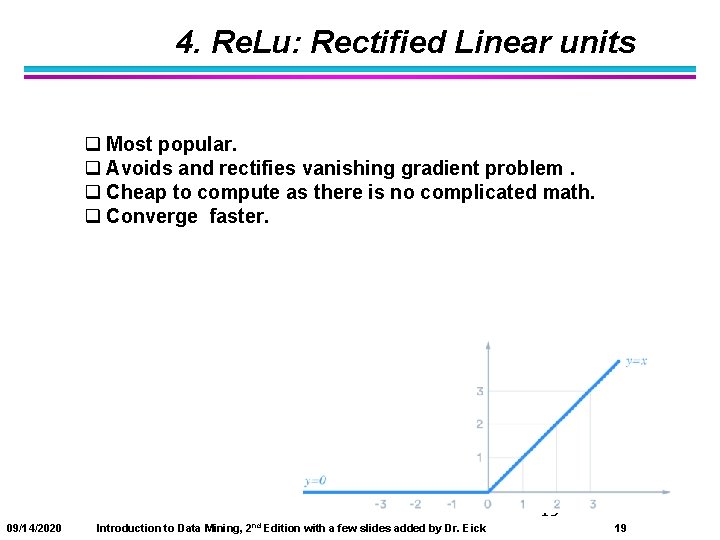

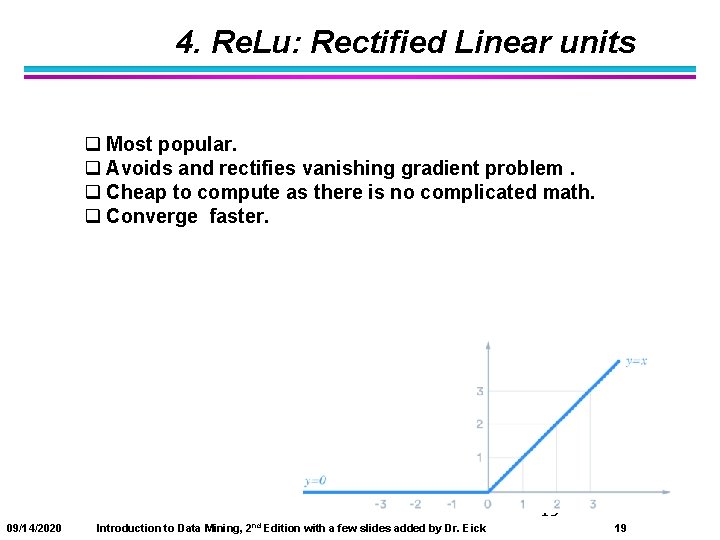

4. ReLU: Rectified Linear Units
• The most popular activation function.
• Mitigates the vanishing gradient problem, since its gradient is 1 for every positive input.
• Cheap to compute, as it requires no complicated math.
• Networks using it often converge faster.
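A minimal ReLU sketch, $f(z) = \max(0, z)$, with its derivative. Assumptions: the derivative at exactly 0 is taken as 0 (a convention), and a chain of 10 layers with positive inputs is used to contrast with the sigmoid's best-case factor of 0.25 per layer.

```python
def relu(z):
    return max(0.0, z)

def relu_prime(z):
    # Gradient is 1 for positive inputs and 0 otherwise (the value at exactly 0 is a convention)
    return 1.0 if z > 0 else 0.0

# Chain of 10 layers with positive pre-activations: ReLU passes the gradient through unchanged,
# while the sigmoid's factor would be at most 0.25 per layer
relu_chain = 1.0
for _ in range(10):
    relu_chain *= relu_prime(2.0)
print(relu_chain, 0.25 ** 10)
```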

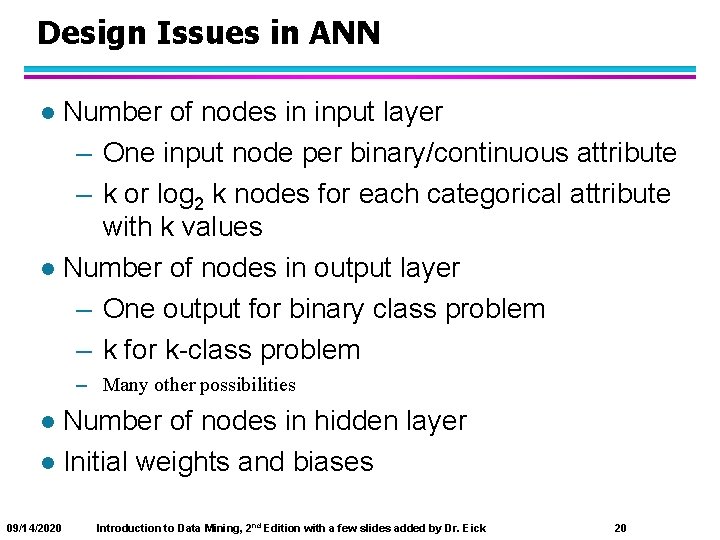

Design Issues in ANN
• Number of nodes in input layer
   – One input node per binary/continuous attribute
   – k or ⌈log2 k⌉ nodes for each categorical attribute with k values
• Number of nodes in output layer
   – One output for binary class problem
   – k for k-class problem
   – Many other possibilities
• Number of nodes in hidden layer
• Initial weights and biases
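The two input encodings for a categorical attribute with k values can be sketched as follows: one node per value (one-hot, k nodes) or the value's index written in binary (⌈log2 k⌉ nodes). The attribute `colors` and the function names are hypothetical examples.

```python
import math

def one_hot(value, categories):
    """k input nodes: exactly one node is 1."""
    return [1 if value == c else 0 for c in categories]

def binary_code(value, categories):
    """ceil(log2 k) input nodes: the category's index written in binary."""
    n_bits = max(1, math.ceil(math.log2(len(categories))))
    index = categories.index(value)
    return [(index >> b) & 1 for b in reversed(range(n_bits))]

colors = ["red", "green", "blue", "yellow", "white"]   # hypothetical attribute, k = 5
print(one_hot("blue", colors))      # 5 input nodes
print(binary_code("blue", colors))  # 3 input nodes
```

One-hot encoding uses more nodes but imposes no artificial similarity between values; the binary code is more compact but makes some values share input bits.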

Characteristics of ANN
• Multilayer ANNs are universal approximators but can suffer from overfitting if the network is too complex or the number of training examples is too small.
• Gradient descent may converge to a local minimum.
• Model building can be very time-consuming, but prediction/classification is very fast.
• Sensitive to noise in training data.
• Difficult to handle missing attributes and symbolic attributes.

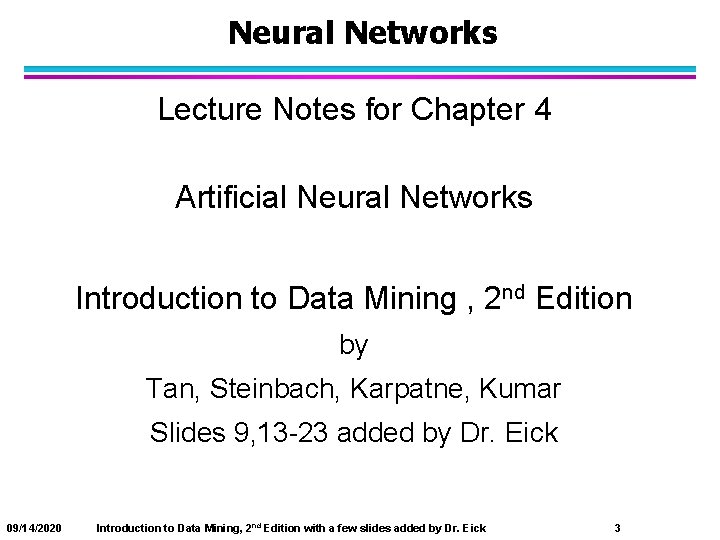

Recent Noteworthy Developments in ANN
• Use in deep learning and unsupervised feature learning
   – Seek to automatically learn a good representation of the input from unlabeled data
• Google Brain project
   – Learned the concept of a ‘cat’ by looking at unlabeled pictures from YouTube
   – One-billion-connection network
• A little more about deep learning in the last lecture of the course on Dec. 4.