Cognitive Computer Vision: Kingsley Sage (khs20@sussex.ac.uk)

- Slides: 23

Cognitive Computer Vision
Kingsley Sage (khs20@sussex.ac.uk) and Hilary Buxton (hilaryb@sussex.ac.uk)
Prepared under ECVision Specific Action 8-3
http://www.ecvision.org

Lecture 10
- Task based control
- ActIPret project (case study)

In this lecture we shall see how some of the techniques we have seen thus far can be used to build real Cognitive Vision Systems.

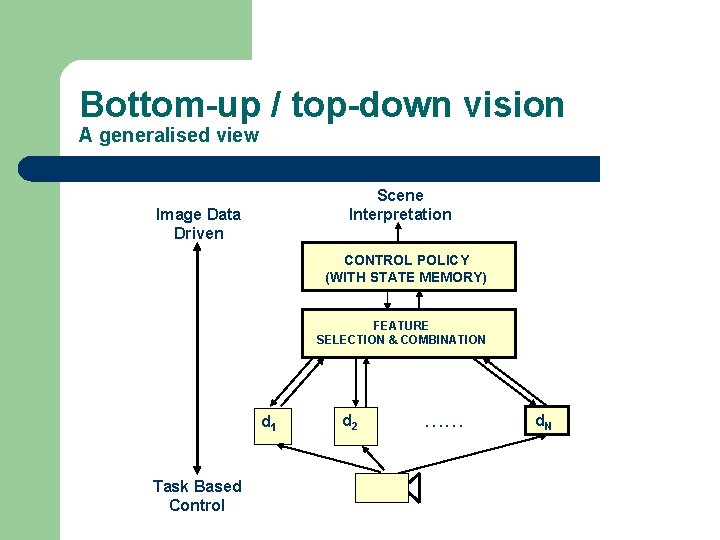

Task based visual control
- The key notion is that we see “for a purpose” rather than see “as is”
- Perception is guided by expectation
- We use a computational model of expectation to guide our processing of the scene
- Bottom-up (data driven) processing is constrained by top-down (task based) control

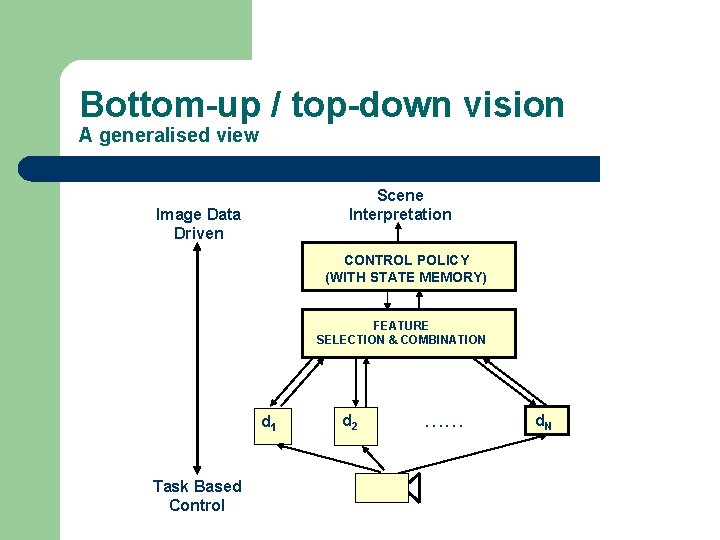

Bottom-up / top-down vision: a generalised view

[Diagram labels: Image; Data Driven; Feature Selection & Combination (d1, d2, …, dN); Control Policy (with state memory); Task Based Control; Scene Interpretation]

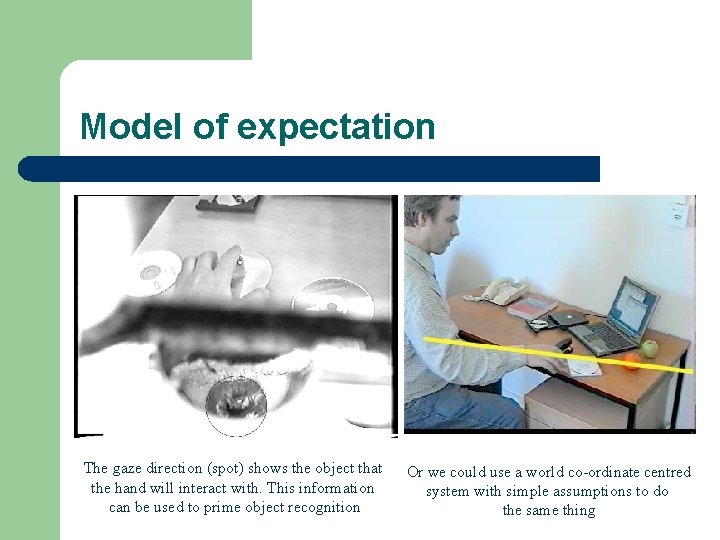

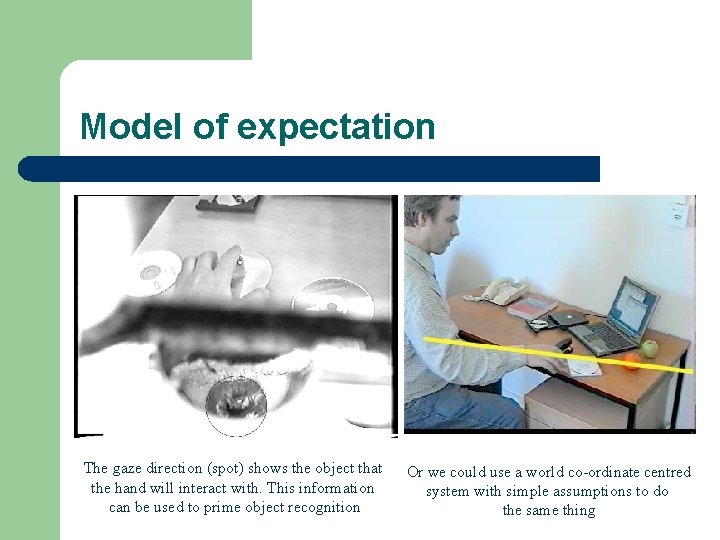

Model of expectation
- The gaze direction (spot) shows the object that the hand will interact with. This information can be used to prime object recognition
- Or we could use a world co-ordinate centred system with simple assumptions to do the same thing

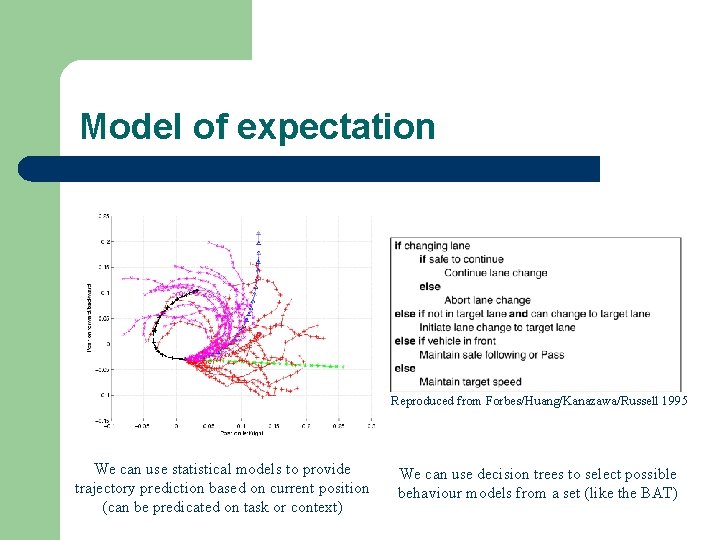

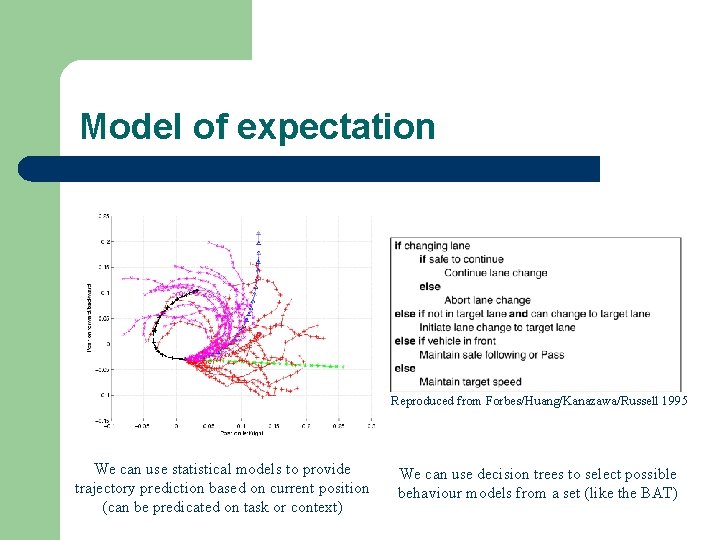

Model of expectation
Reproduced from Forbes/Huang/Kanazawa/Russell 1995
- We can use statistical models to provide trajectory prediction based on current position (can be predicated on task or context)
- We can use decision trees to select possible behaviour models from a set (like the BAT)

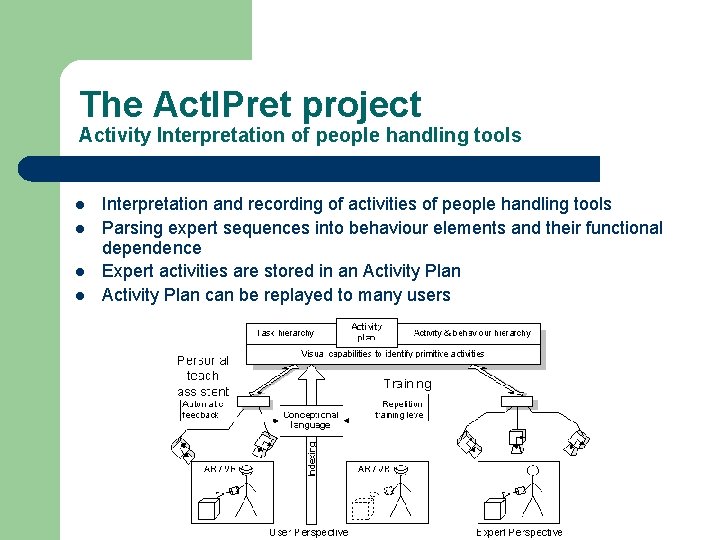

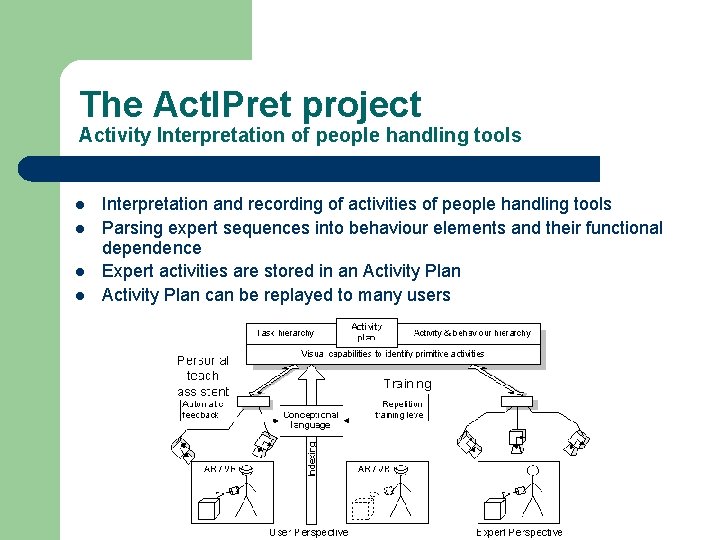

The ActIPret project
Activity Interpretation of people handling tools
- Interpretation and recording of activities of people handling tools
- Parsing expert sequences into behaviour elements and their functional dependence
- Expert activities are stored in an Activity Plan that can be replayed to many users

The ActIPret project
The promo video!!

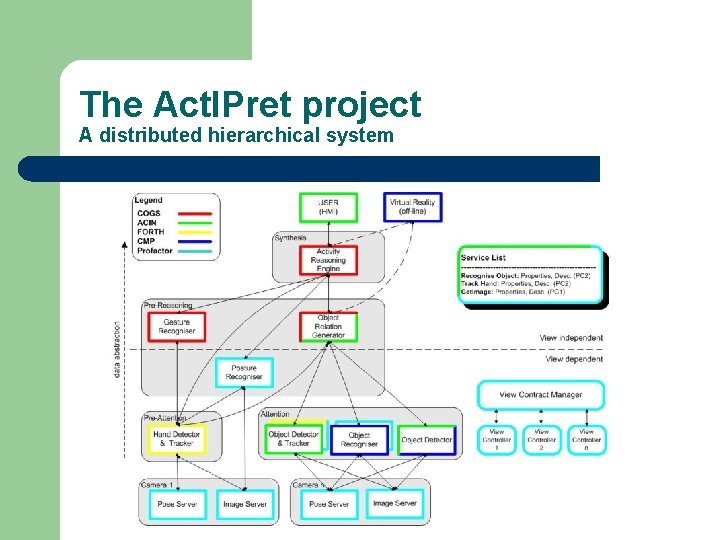

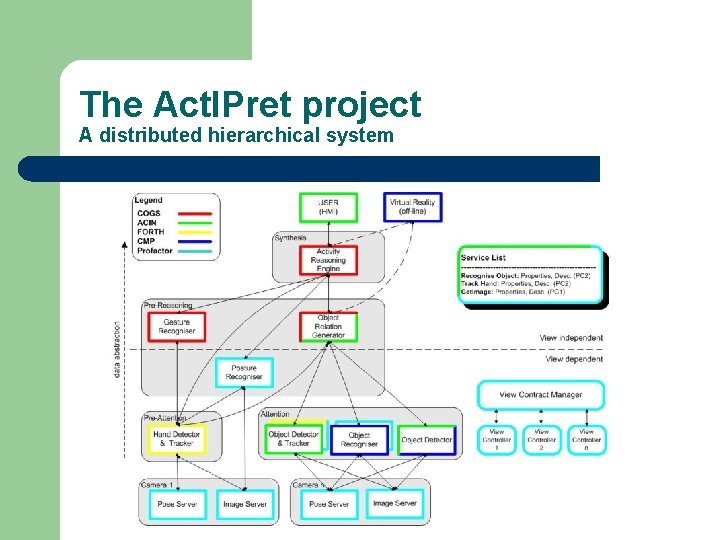

The ActIPret project
A distributed hierarchical system

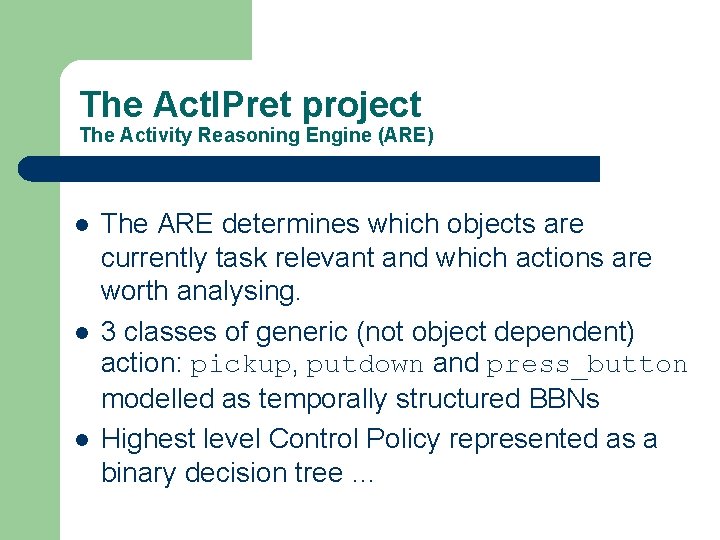

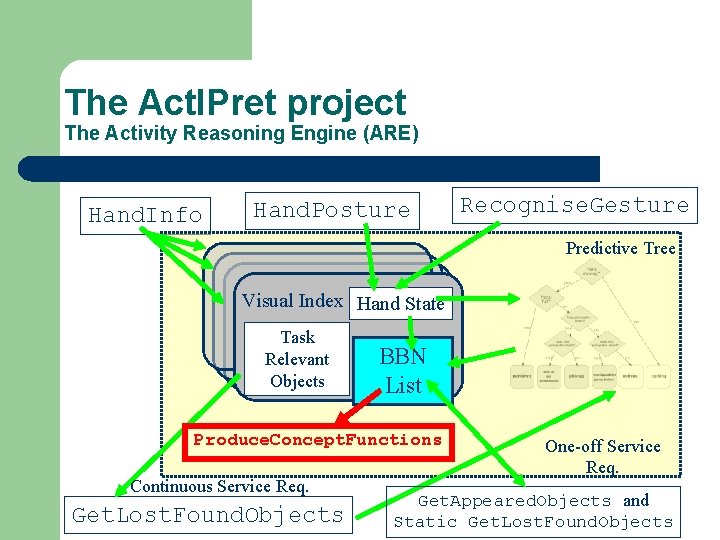

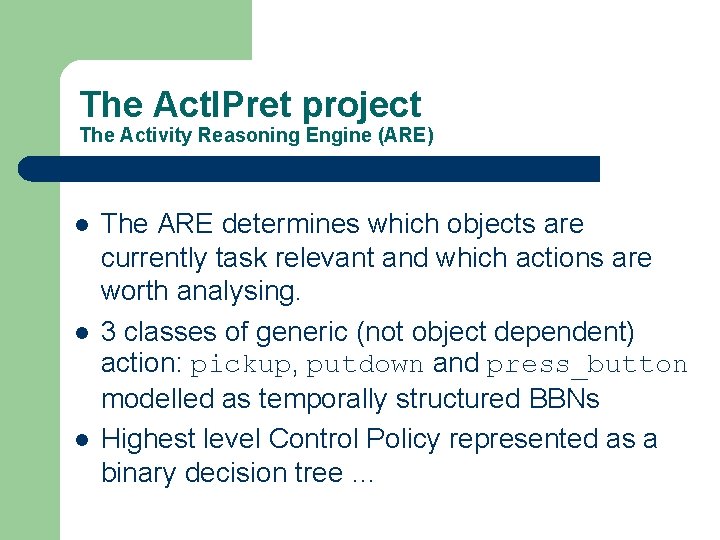

The ActIPret project
The Activity Reasoning Engine (ARE)
- The ARE determines which objects are currently task relevant and which actions are worth analysing
- Three classes of generic (not object dependent) action: pickup, putdown and press_button, modelled as temporally structured BBNs
- Highest level Control Policy represented as a binary decision tree …

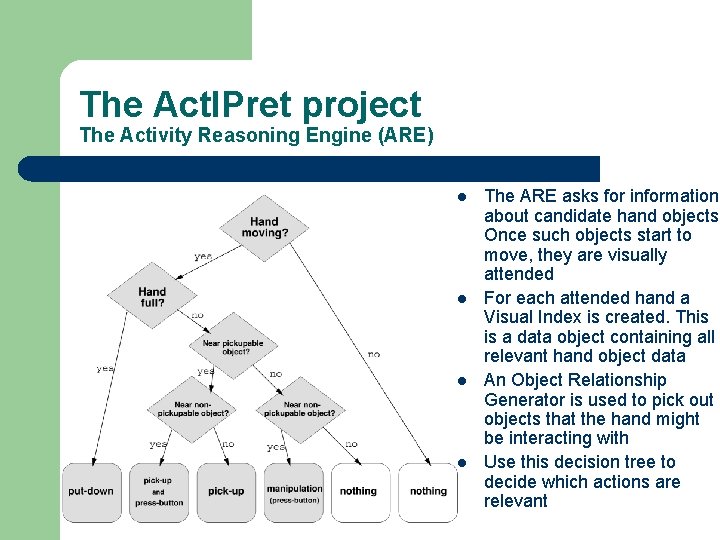

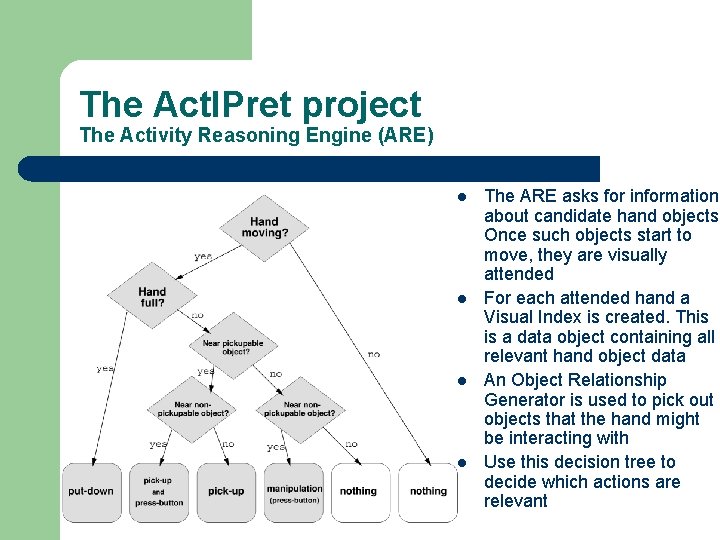

The ActIPret project
The Activity Reasoning Engine (ARE)
- The ARE asks for information about candidate hand objects. Once such objects start to move, they are visually attended
- For each attended hand a Visual Index is created. This is a data object containing all relevant hand object data
- An Object Relationship Generator (ORG) is used to pick out objects that the hand might be interacting with
- Use this decision tree to decide which actions are relevant
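As a rough illustration of the ideas on this slide, the sketch below represents a Visual Index as a small data object and walks a binary decision tree to decide which generic actions are worth analysing. The field names, the tests in the tree and the shape of the policy are all hypothetical; the slides do not give the actual ActIPret data structures.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class VisualIndex:
    """Hypothetical per-hand record; the field names are assumptions."""
    hand_id: int
    holding_object: bool                      # is the hand currently holding something?
    objects_in_scope: List[str] = field(default_factory=list)


@dataclass
class Node:
    """Binary decision tree node: a test with two children, or a leaf."""
    test: Optional[Callable[[VisualIndex], bool]] = None
    if_true: Optional["Node"] = None
    if_false: Optional["Node"] = None
    actions: Optional[List[str]] = None       # set only on leaf nodes


def relevant_actions(node: Node, vi: VisualIndex) -> List[str]:
    """Walk the tree from the root until a leaf is reached."""
    while node.actions is None:
        node = node.if_true if node.test(vi) else node.if_false
    return node.actions


# Illustrative policy only: no objects in scope means nothing to analyse;
# a hand that holds something can only put it down; otherwise it may
# pick something up or press a button.
POLICY = Node(
    test=lambda vi: len(vi.objects_in_scope) > 0,
    if_true=Node(
        test=lambda vi: vi.holding_object,
        if_true=Node(actions=["putdown"]),
        if_false=Node(actions=["pickup", "press_button"]),
    ),
    if_false=Node(actions=[]),
)
```

The returned list would then determine which action BBNs receive evidence from this hand's Visual Index.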

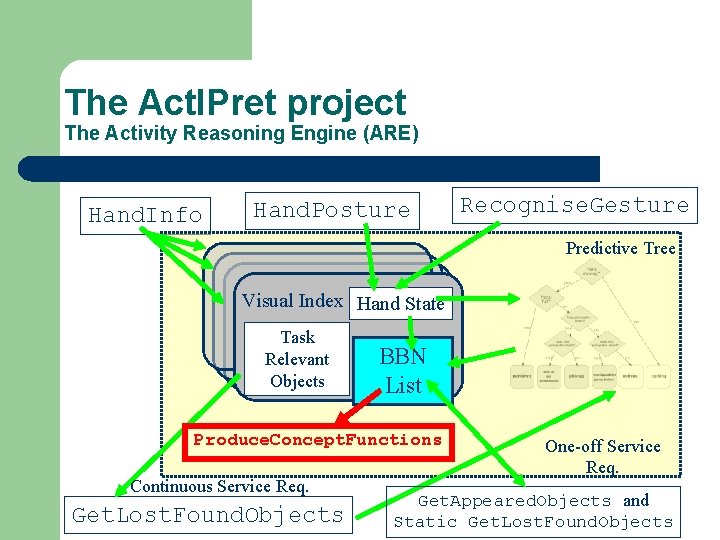

The ActIPret project
The Activity Reasoning Engine (ARE)

[Diagram labels: HandInfo; HandPosture; RecogniseGesture; Predictive Tree; Visual Index; Hand State; Task Relevant Objects; BBN List; ProduceConceptFunctions; Continuous Service Req.; GetLostFoundObjects; One-off Service Req.; GetAppearedObjects and Static; GetLostFoundObjects]

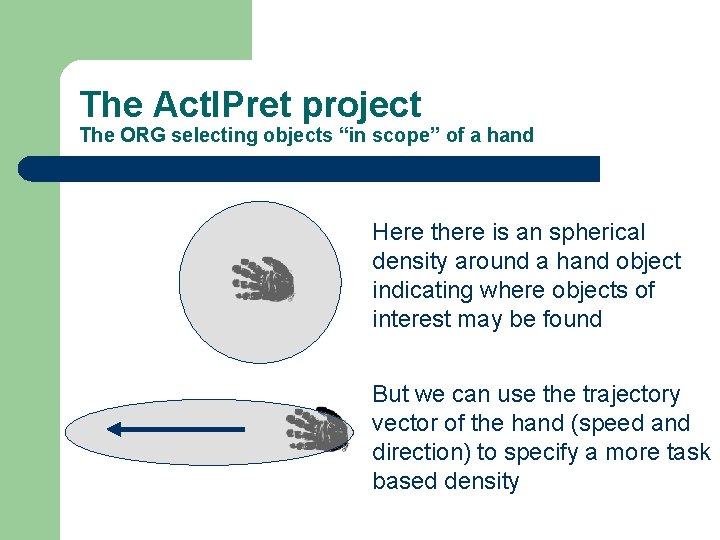

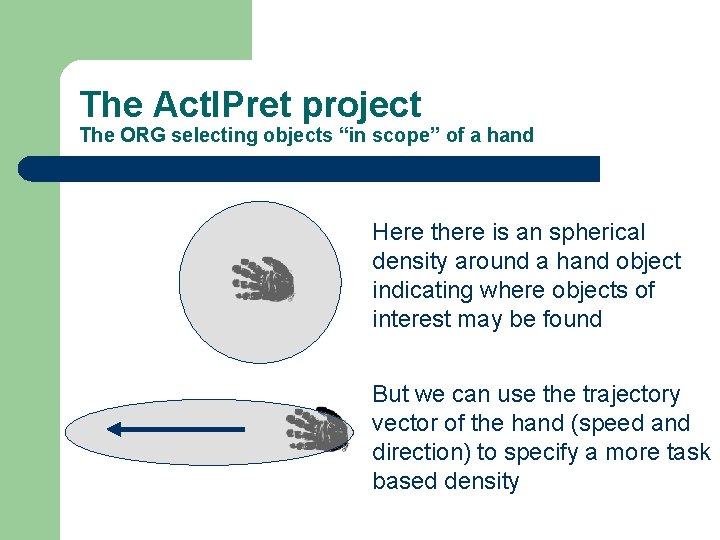

The ActIPret project
The ORG selecting objects “in scope” of a hand
- Here there is a spherical density around a hand object indicating where objects of interest may be found
- But we can use the trajectory vector of the hand (speed and direction) to specify a more task based density
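The contrast between the two densities can be sketched as follows. This is one possible reading, not the ORG's actual formulation: the spherical density is taken to be an isotropic Gaussian centred on the hand, and the task based density is the same Gaussian shifted along the hand's velocity vector. The parameters `sigma` and `lookahead` are assumptions introduced for illustration.

```python
import math


def spherical_score(hand, obj, sigma=0.2):
    """Isotropic Gaussian score centred on the hand position."""
    d2 = sum((o - h) ** 2 for h, o in zip(hand, obj))
    return math.exp(-d2 / (2 * sigma ** 2))


def trajectory_score(hand, velocity, obj, sigma=0.2, lookahead=0.5):
    """Same Gaussian, but centred where the hand is heading.

    The centre is moved `lookahead` seconds along the velocity vector,
    so both speed and direction change which objects are in scope.
    """
    centre = tuple(h + lookahead * v for h, v in zip(hand, velocity))
    return spherical_score(centre, obj, sigma)


hand = (0.0, 0.0, 0.0)
velocity = (1.0, 0.0, 0.0)          # moving along +x
ahead = (0.5, 0.0, 0.0)             # object in front of the hand
behind = (-0.5, 0.0, 0.0)           # object behind the hand
```

With the spherical density the two objects score the same because they are equidistant from the hand; with the trajectory based density the object ahead of the hand scores much higher.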

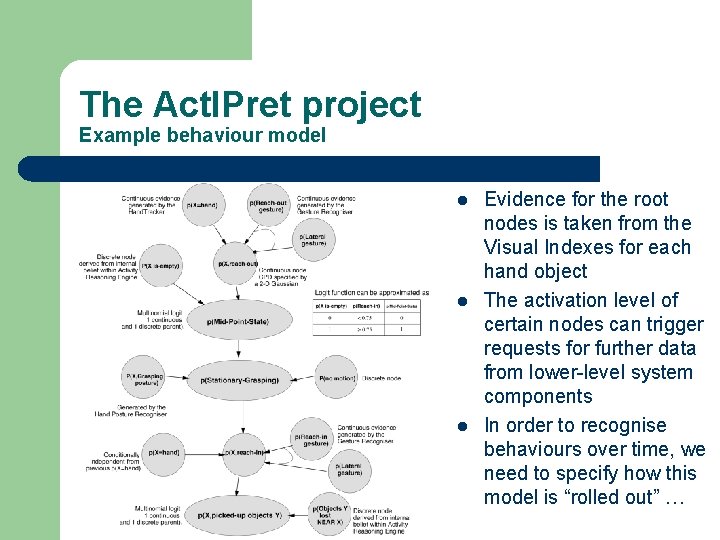

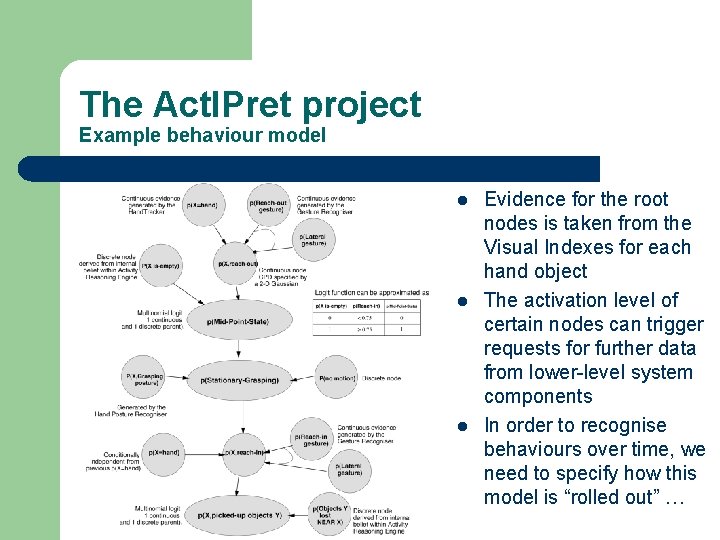

The ActIPret project
Example behaviour model
- Evidence for the root nodes is taken from the Visual Indexes for each hand object
- The activation level of certain nodes can trigger requests for further data from lower-level system components
- In order to recognise behaviours over time, we need to specify how this model is “rolled out” …

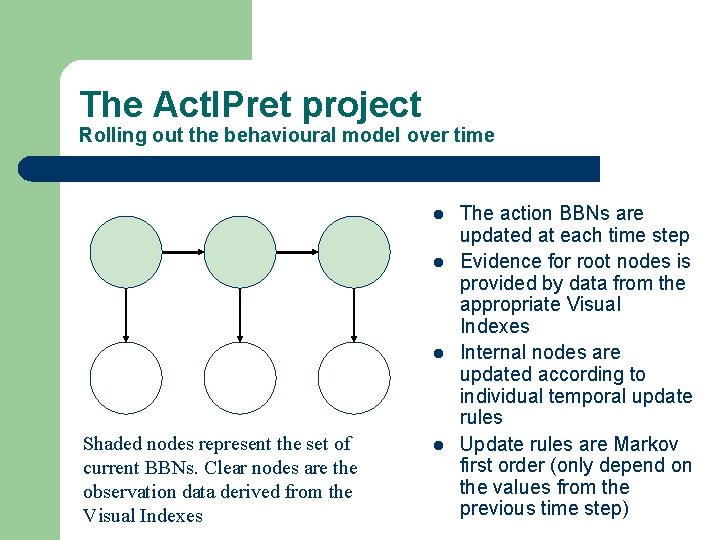

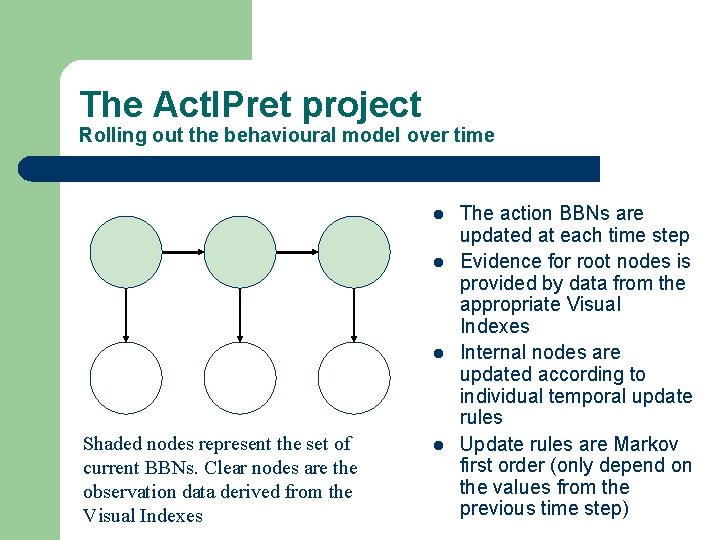

The ActIPret project
Rolling out the behavioural model over time
- Shaded nodes represent the set of current BBNs. Clear nodes are the observation data derived from the Visual Indexes
- The action BBNs are updated at each time step
- Evidence for root nodes is provided by data from the appropriate Visual Indexes
- Internal nodes are updated according to individual temporal update rules
- Update rules are first-order Markov (they depend only on the values from the previous time step)

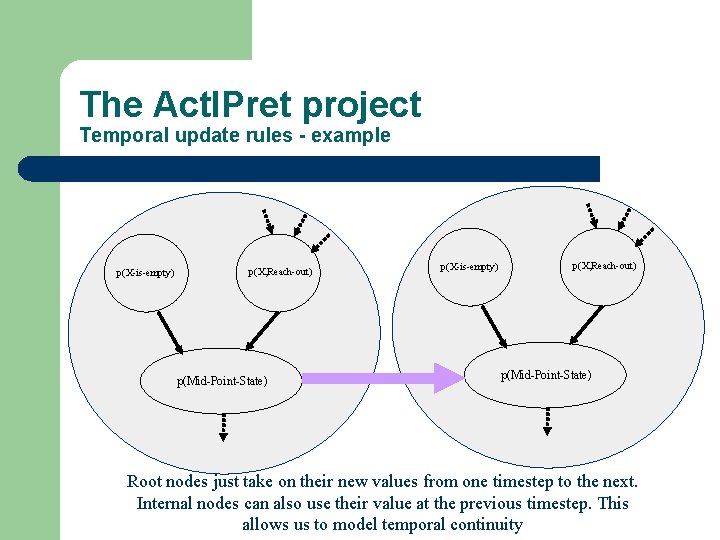

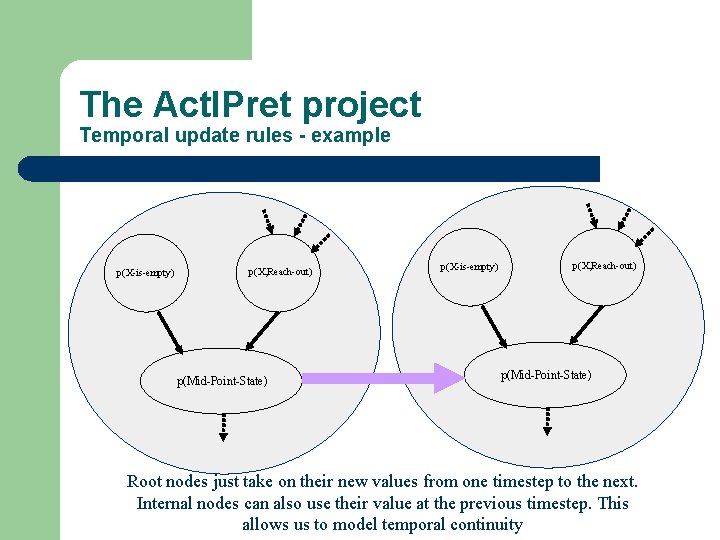

The ActIPret project
Temporal update rules - example

[Node labels: p(X-is-empty); p(X, Reach-out); p(Mid-Point-State)]

Root nodes just take on their new values from one timestep to the next. Internal nodes can also use their value at the previous timestep. This allows us to model temporal continuity.
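A minimal sketch of the distinction between root and internal nodes, assuming a simple linear blend for the internal node. The blend and the `persistence` parameter are illustrative assumptions; the slides do not state the actual update rules.

```python
def update_root(observation: float) -> float:
    """Root nodes simply take on the newly observed value."""
    return observation


def update_internal(previous: float, current: float, persistence: float = 0.7) -> float:
    """Internal nodes mix the new estimate with their previous value.

    Only the previous time step is used, so the rule is first-order Markov.
    """
    return persistence * previous + (1.0 - persistence) * current


def roll_out(observations, persistence=0.7):
    """Apply the two rules over a sequence of observations."""
    belief = 0.0
    history = []
    for obs in observations:
        root = update_root(obs)
        belief = update_internal(belief, root, persistence)
        history.append(belief)
    return history
```

Because the internal node carries its previous value forward, its belief rises and falls gradually rather than jumping with each observation, which is one way to obtain temporal continuity.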

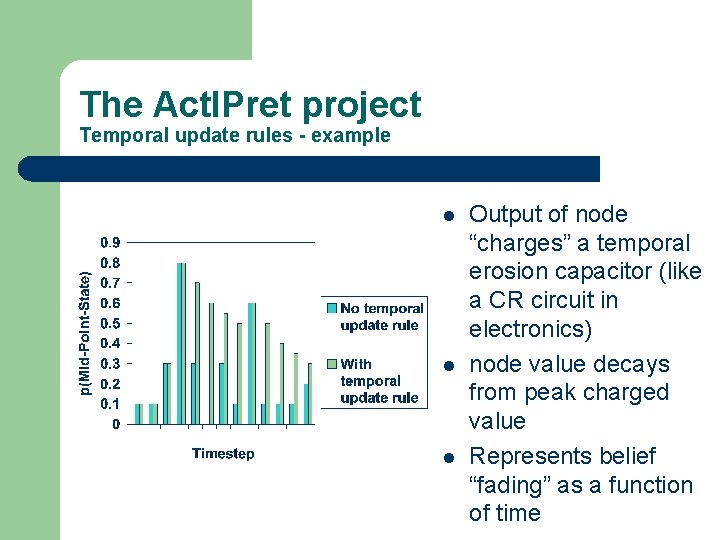

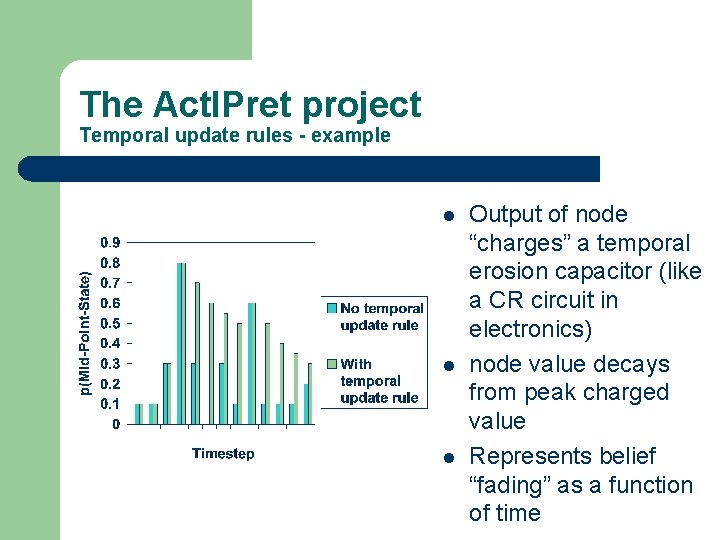

The ActIPret project
Temporal update rules - example
- Output of node “charges” a temporal erosion capacitor (like a CR circuit in electronics)
- Node value decays from peak charged value
- Represents belief “fading” as a function of time
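The capacitor analogy can be sketched as a peak-hold with exponential decay. This is an assumption about how the erosion might be realised: the names `dt` and `time_constant` are hypothetical, with `time_constant` playing the role of the CR product in the circuit analogy.

```python
import math


def eroded_belief(inputs, dt=0.1, time_constant=1.0):
    """Hold each peak of the node output and let it decay exponentially.

    At every step the stored value decays by exp(-dt / time_constant);
    a larger incoming value recharges the store immediately.
    """
    decay = math.exp(-dt / time_constant)
    held = 0.0
    output = []
    for value in inputs:
        held = max(value, held * decay)
        output.append(held)
    return output
```

For example, a single strong activation followed by silence gives a belief that fades smoothly towards zero instead of dropping at once.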

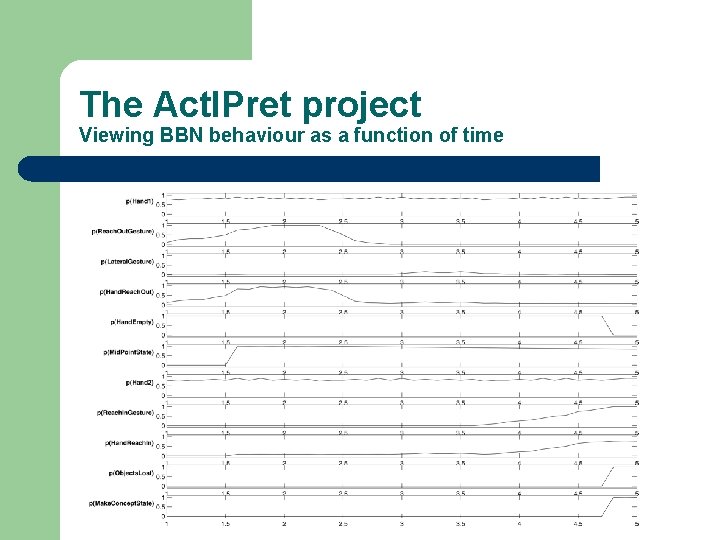

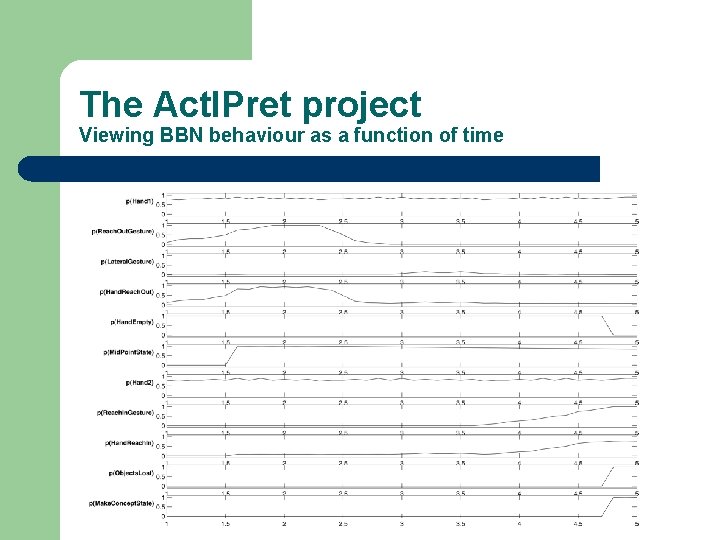

The ActIPret project
Viewing BBN behaviour as a function of time

The ActIPret project
Example Activity Plan for CD scenario shown in video

Raw Concepts:
Line 0, Time 5.683: 'PRESSBUTTON, Hand 1, Object ejectButton1'
Line 1, Time 13.004: 'PICKUP, Hand 3, Object cd-intel7, Location undefined'
Line 2, Time 15.232: 'PUTDOWN, Hand 3, Object cd-intel7, Location tray14'
Line 3, Time 15.372: 'PRESSBUTTON, Hand 1, Object ejectButton1'

Natural Language Version:
Using the first hand, press the ejectButton; with the second hand, pick up the cd-intel from anywhere; with the second hand, put down the cd-intel on the tray; and with the first hand, press the ejectButton.

‘cd-intel’ could be replaced with ‘the Intel CD’.
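The mapping from raw concepts to a natural language description could look roughly like the sketch below. The parsing rules, the phrase templates and the convention of naming hands in order of first appearance are assumptions made to reproduce the example; they are not taken from the ActIPret implementation.

```python
import re

RAW_PLAN = [
    (5.683, "PRESSBUTTON, Hand 1, Object ejectButton1"),
    (13.004, "PICKUP, Hand 3, Object cd-intel7, Location undefined"),
    (15.232, "PUTDOWN, Hand 3, Object cd-intel7, Location tray14"),
    (15.372, "PRESSBUTTON, Hand 1, Object ejectButton1"),
]
ORDINALS = ["first", "second", "third", "fourth"]


def strip_id(name):
    """Drop the trailing instance number, e.g. 'tray14' -> 'tray'."""
    return re.sub(r"\d+$", "", name)


def parse(concept):
    """Split 'ACTION, Key value, ...' into a dict with lower-case keys."""
    parts = [p.strip() for p in concept.split(",")]
    fields = {"action": parts[0]}
    for part in parts[1:]:
        key, value = part.split(" ", 1)
        fields[key.lower()] = value
    return fields


def describe(plan):
    """Return one phrase per raw concept, in plan order."""
    hand_names = {}                      # hand id -> 'first', 'second', ...
    phrases = []
    for _time, concept in plan:
        f = parse(concept)
        if f["hand"] not in hand_names:
            hand_names[f["hand"]] = ORDINALS[len(hand_names)]
        hand = hand_names[f["hand"]]
        obj = strip_id(f["object"])
        if f["action"] == "PRESSBUTTON":
            phrase = f"with the {hand} hand, press the {obj}"
        elif f["action"] == "PICKUP":
            loc = f.get("location", "undefined")
            source = "anywhere" if loc == "undefined" else f"the {strip_id(loc)}"
            phrase = f"with the {hand} hand, pick up the {obj} from {source}"
        elif f["action"] == "PUTDOWN":
            target = strip_id(f["location"])
            phrase = f"with the {hand} hand, put down the {obj} on the {target}"
        else:
            raise ValueError(f"unknown action: {f['action']}")
        phrases.append(phrase)
    return phrases
```

Joining the phrases returned by `describe(RAW_PLAN)` with commas gives a sentence close to the natural language version on the slide.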

The ActIPret project
Future challenges …
- Current generic BBNs and Control Policy are hand coded, although general
- Activity Plan is learned and can be used to store and index scenarios and for VR reconstruction
- Would be better to learn BBNs for behaviour primitives (actions) from repeated examples by the user (non-trivial)

Further reading
- “Recognition of Action, Activity and Behaviour in the ActIPret project”, K. H. Sage, A. J. Howell and H. Buxton, Knowledge Representation (forthcoming)
- The ActIPret project website at: http://actipret.infa.tuwien.ac.at/

Summary
- Task-based visual control requires a model of expectation to drive the processing
- In the ActIPret project, the main aim is to interpret the activities of experts in the form of an Activity Plan
- This involves the Control Policy (encoded as a binary decision tree), Visual Indexes for relevant objects and temporally structured BBNs for the actions in the Activity Reasoning Engine

Next time …
- Learning in Hidden Markov Models …
