An Introduction to Cache Design (2021/12/20, CPEG 323)

- Slides: 45

CPEG 323-08F, Topic 7a

Cache: “A safe place for hiding and storing things.” (Webster Dictionary)

“Even with the inclusion of cache, almost all CPUs are still mostly strictly limited by the cache access time: in most cases, if the cache access time were decreased, the machine would speed up accordingly.” (Alan Smith) Even more so for multiprocessors (MPs)!

“While one can imagine reference patterns that can defeat existing cache memory designs, it is the author’s experience that cache memories improve performance for any program or workload which actually does useful computation.”

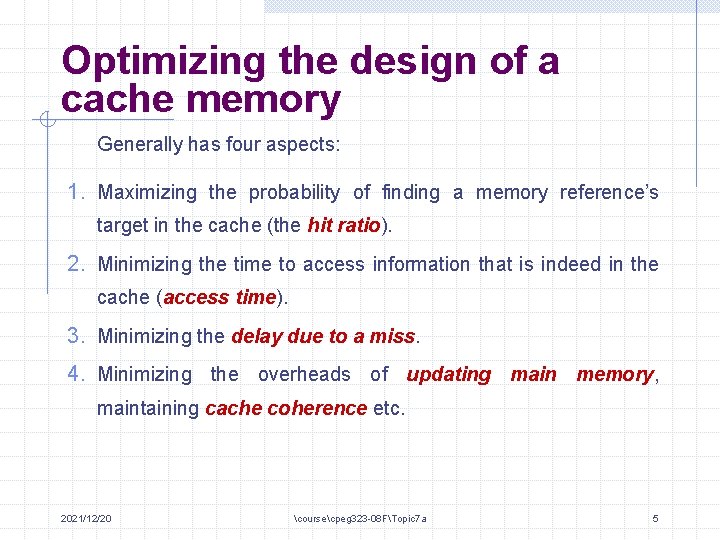

Optimizing the design of a cache memory generally involves four aspects:

1. Maximizing the probability of finding a memory reference’s target in the cache (the hit ratio).
2. Minimizing the time to access information that is indeed in the cache (access time).
3. Minimizing the delay due to a miss.
4. Minimizing the overheads of updating main memory, maintaining cache coherence, etc.

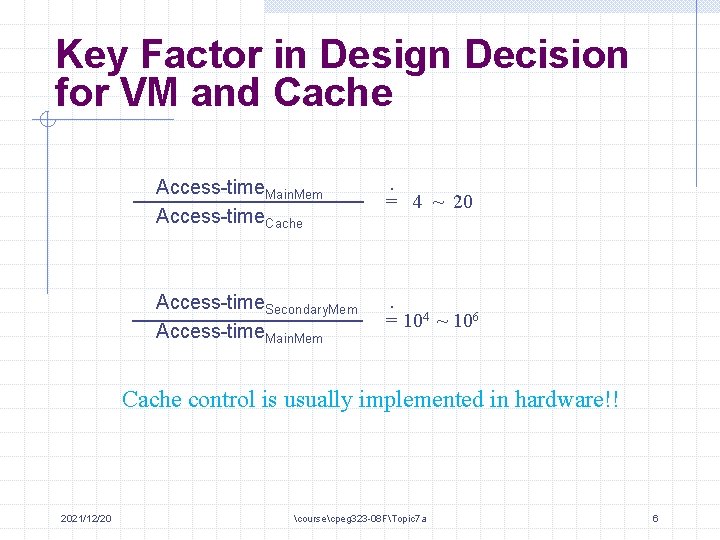

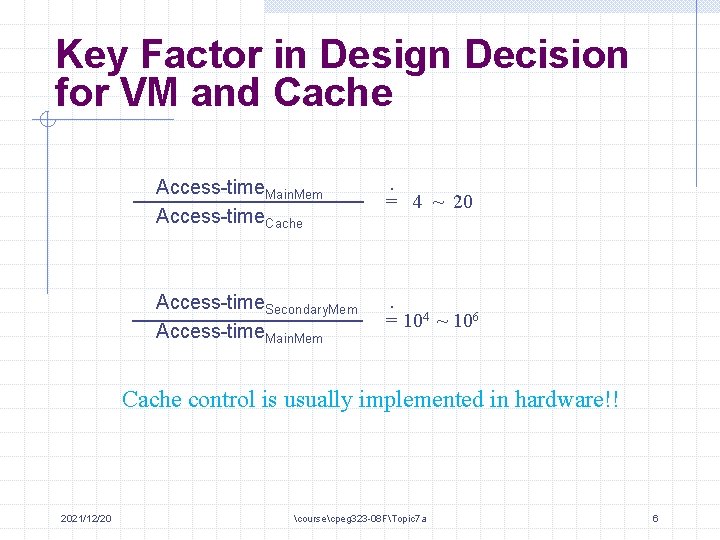

Key factor in design decisions for VM and cache:

$$\frac{t_{\text{main}}}{t_{\text{cache}}} \approx 4 \text{ to } 20, \qquad \frac{t_{\text{secondary}}}{t_{\text{main}}} \approx 10^4 \text{ to } 10^6$$

Cache control is usually implemented in hardware!

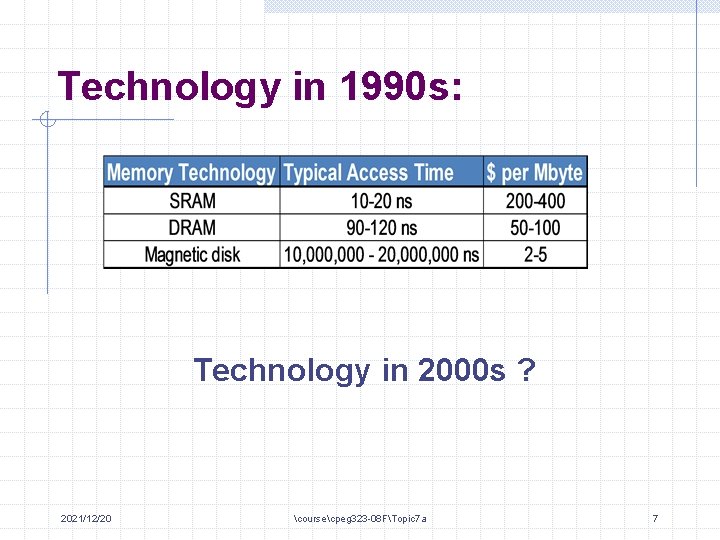

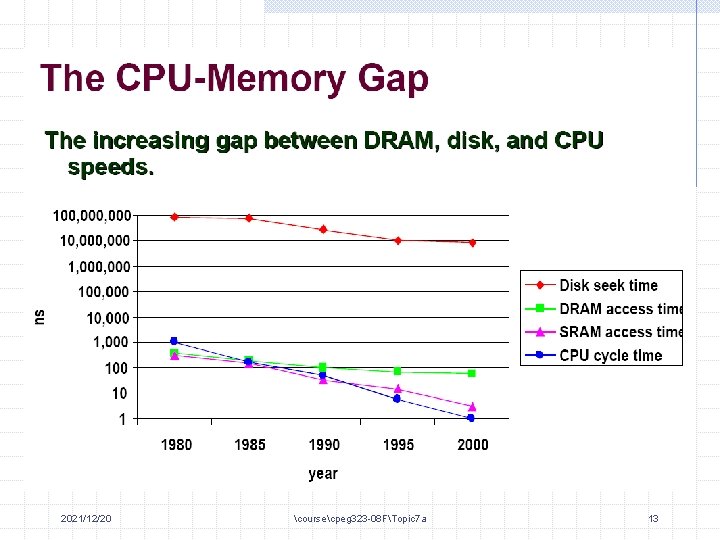

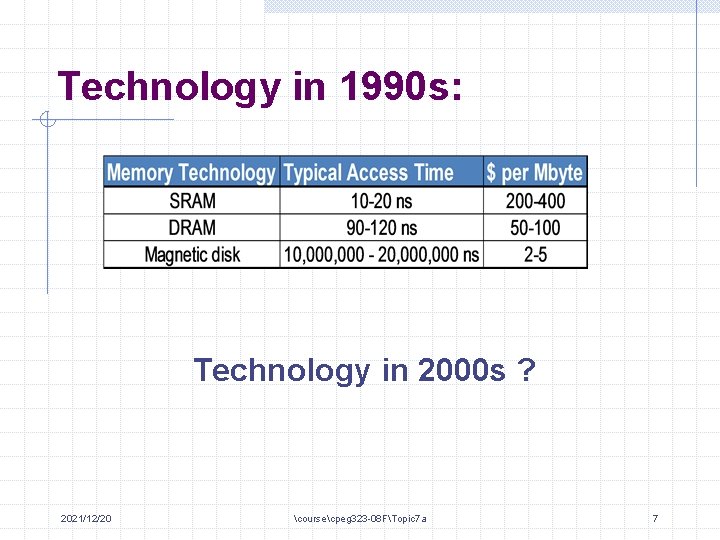

Technology in the 1990s; technology in the 2000s?

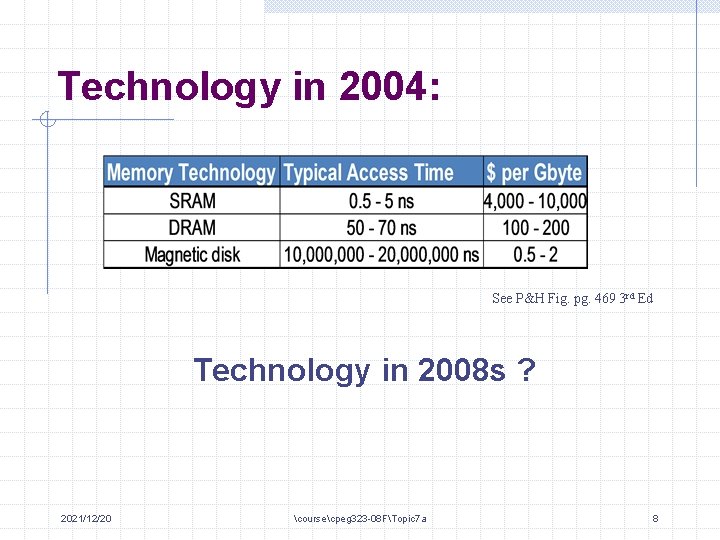

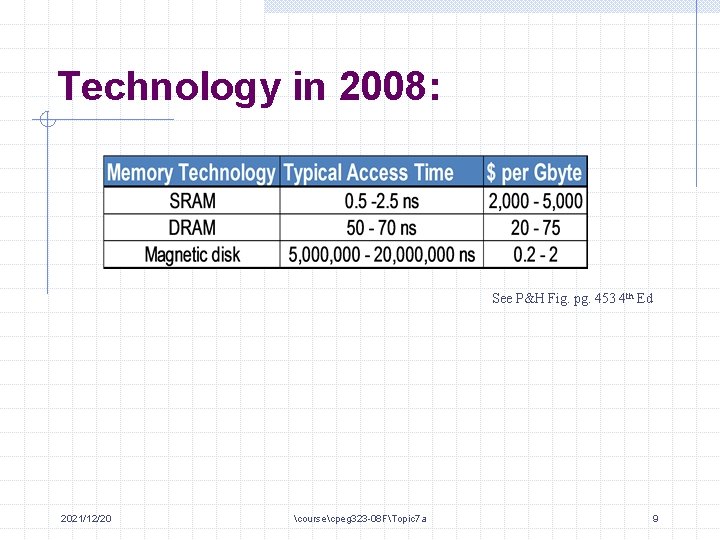

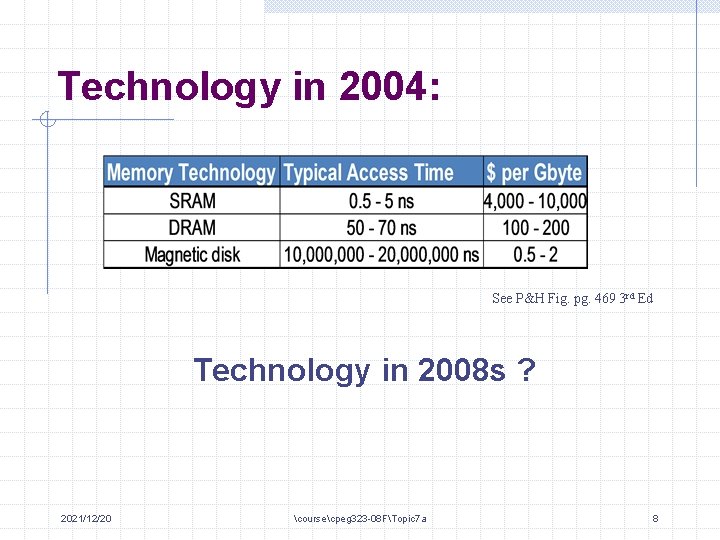

Technology in 2004: see P&H 3rd Ed., figure on p. 469. Technology in 2008?

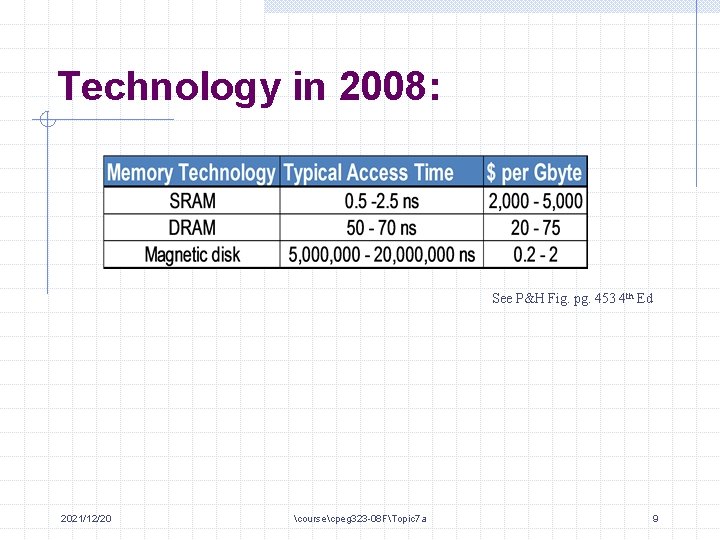

Technology in 2008: see P&H 4th Ed., figure on p. 453.

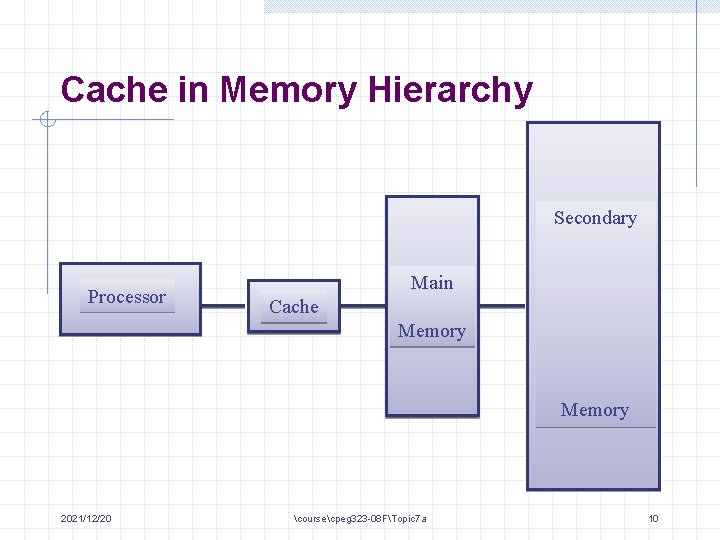

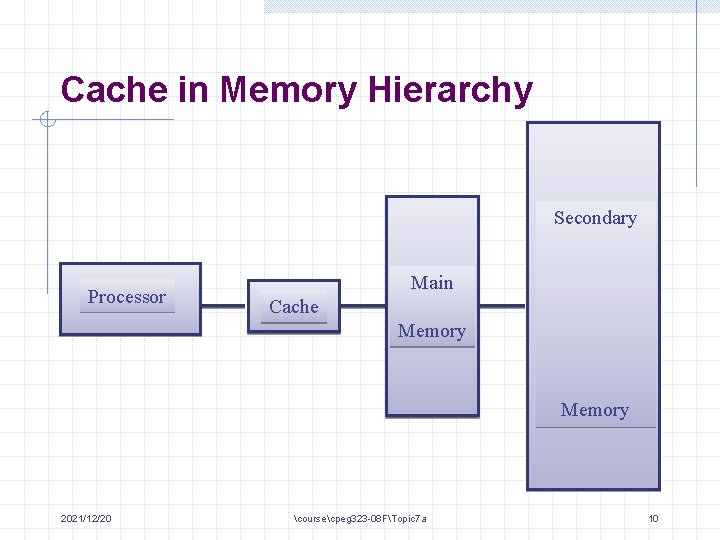

Cache in the memory hierarchy (figure: Processor, Cache, Main Memory, Secondary Memory)

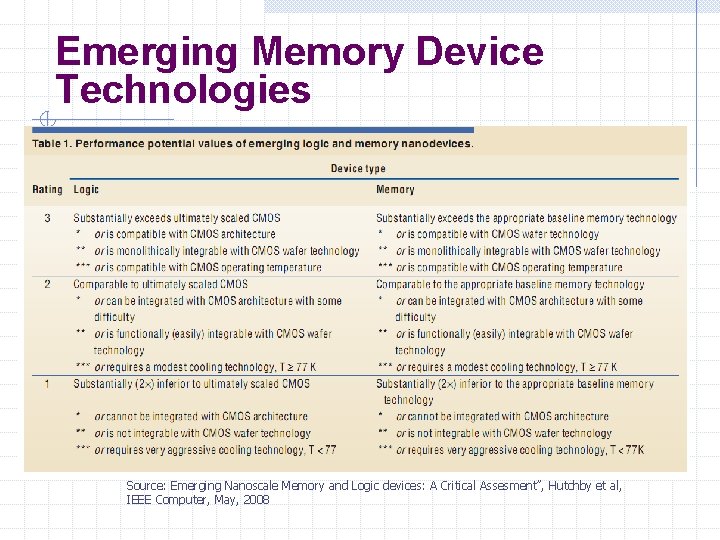

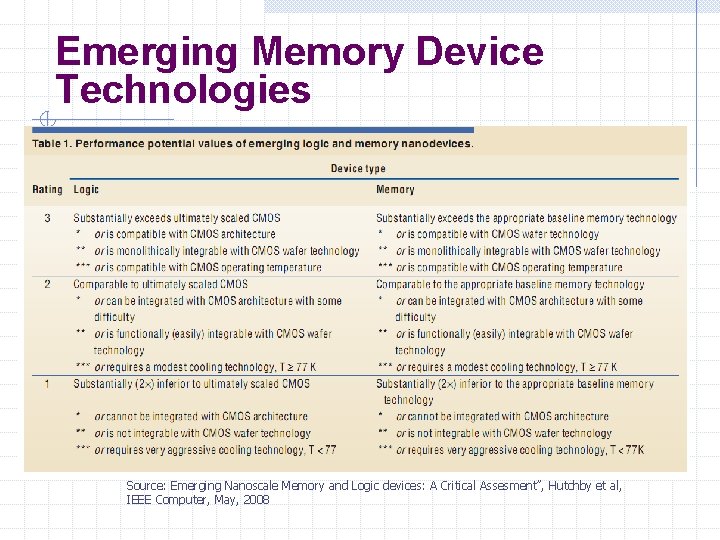

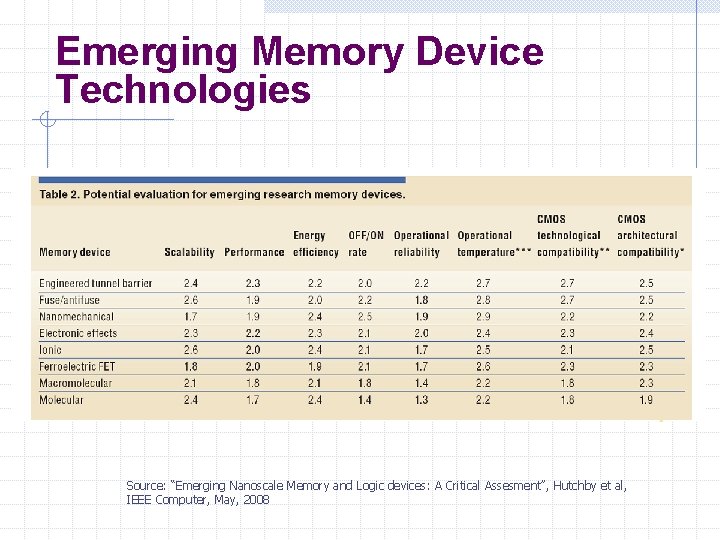

Emerging Memory Device Technologies. Source: “Emerging Nanoscale Memory and Logic Devices: A Critical Assessment”, Hutchby et al., IEEE Computer, May 2008

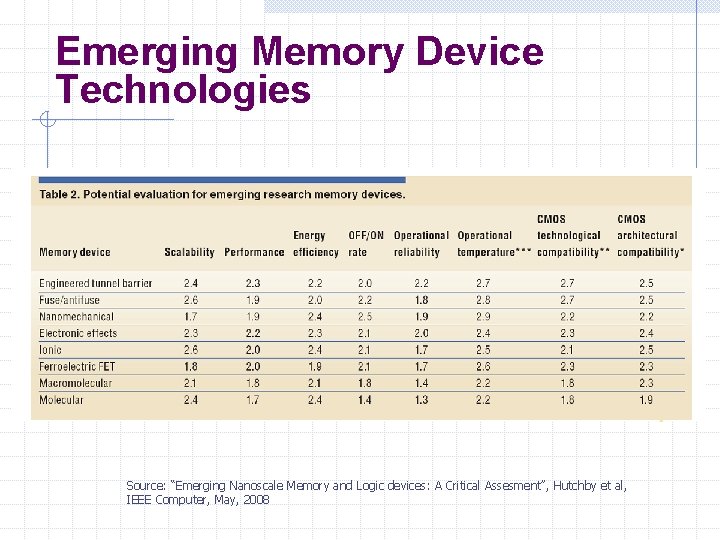

Emerging Memory Device Technologies (cont’d). Source: “Emerging Nanoscale Memory and Logic Devices: A Critical Assessment”, Hutchby et al., IEEE Computer, May 2008

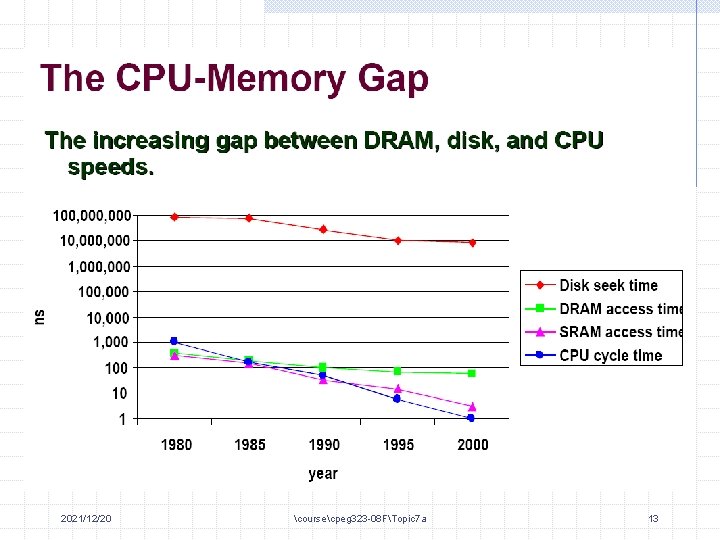


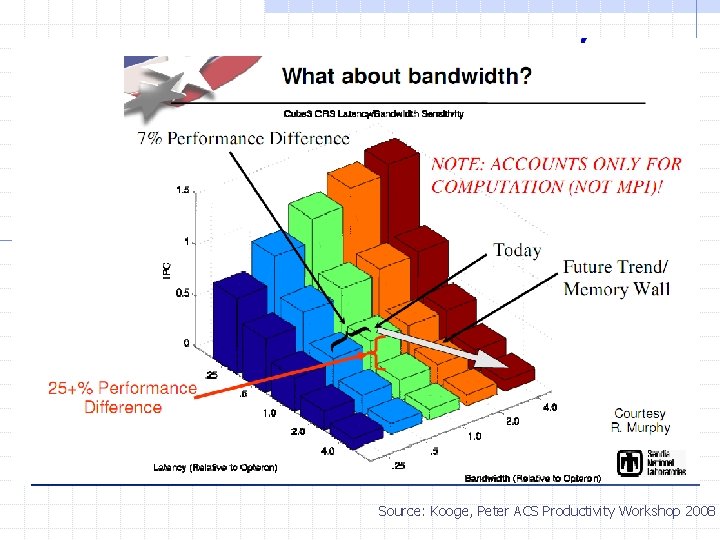

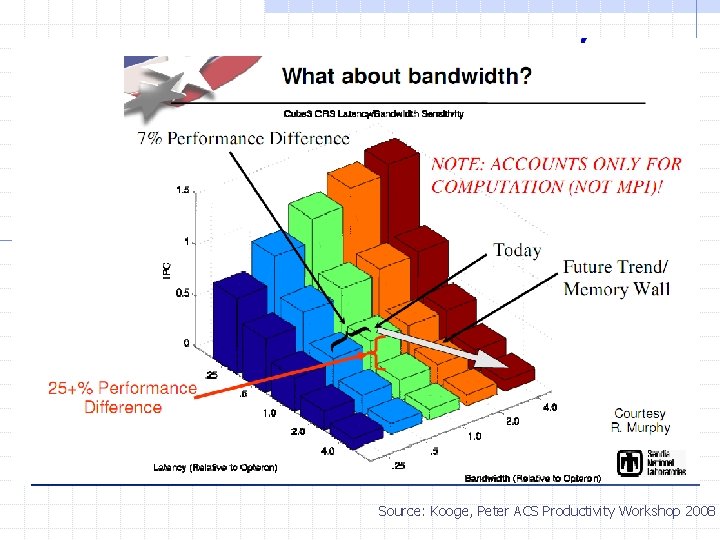

Source: Peter Kogge, ACS Productivity Workshop, 2008

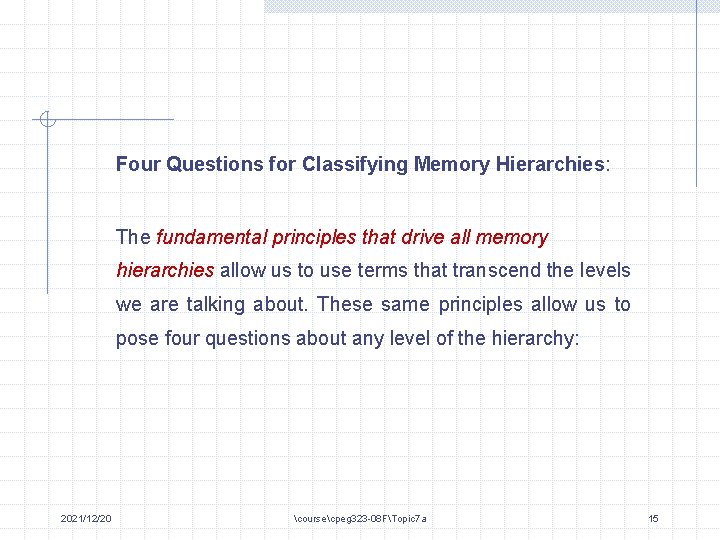

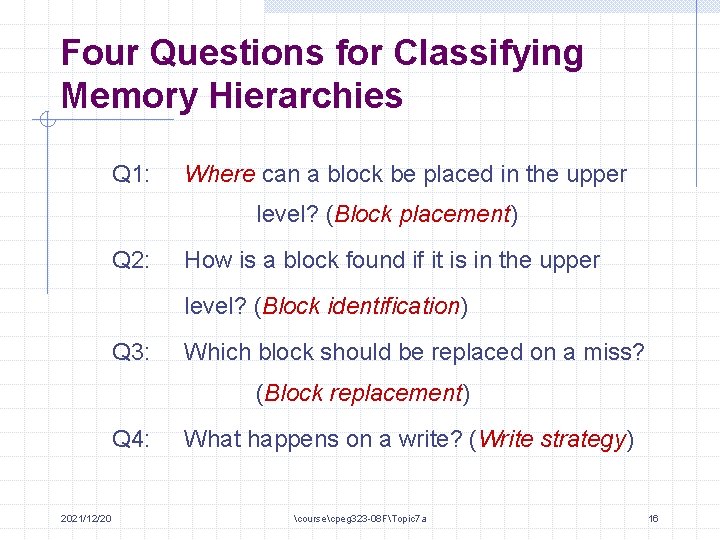

Four questions for classifying memory hierarchies: the fundamental principles that drive all memory hierarchies allow us to use terms that transcend the levels we are talking about. These same principles allow us to pose four questions about any level of the hierarchy:

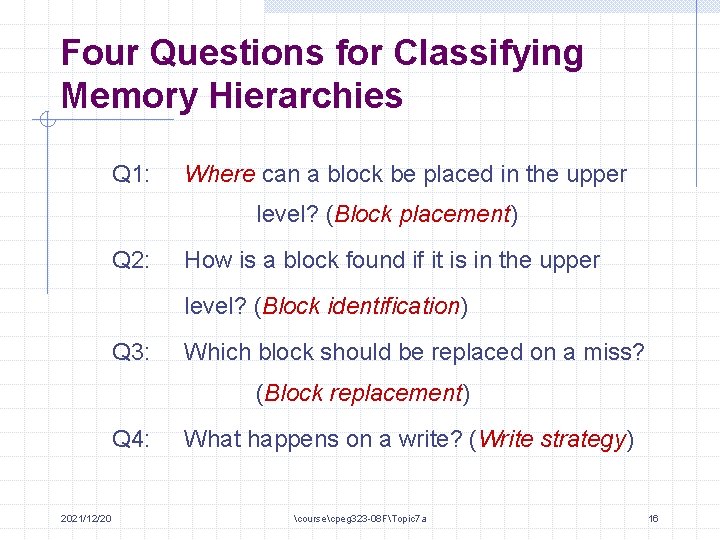

Four questions for classifying memory hierarchies:

- Q1: Where can a block be placed in the upper level? (Block placement)
- Q2: How is a block found if it is in the upper level? (Block identification)
- Q3: Which block should be replaced on a miss? (Block replacement)
- Q4: What happens on a write? (Write strategy)

These questions will help us gain an understanding of the different tradeoffs demanded by the relationships of memories at different levels of a hierarchy.

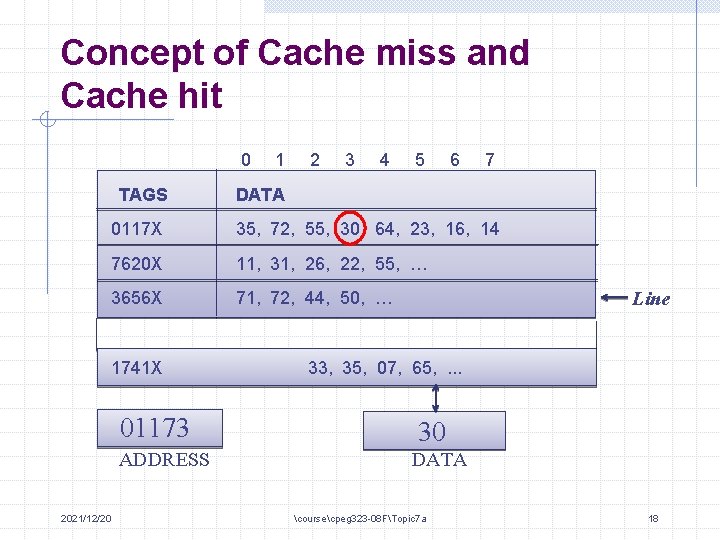

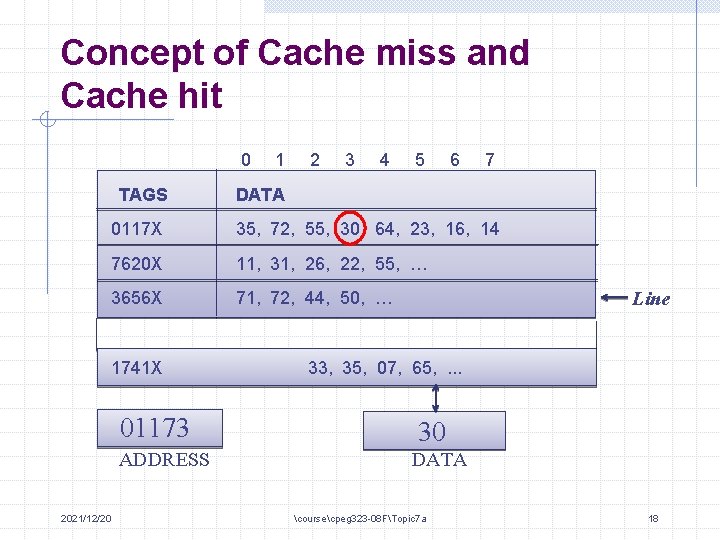

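Concept of cache miss and cache hit (figure: each cache line holds a tag and 8 data words, numbered 0 to 7):

| Tag | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| 0117 | 35 | 72 | 55 | 30 | 64 | 23 | 16 | 14 |
| 7620 | 11 | 31 | 26 | 22 | 55 | … | | |
| 3656 | 71 | 72 | 44 | 50 | … | | | |
| 1741 | 33 | 35 | 07 | 65 | … | | | |

Address 01173 yields data 30: its tag 0117 matches a cache line (a hit), and word 3 of that line holds 30.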
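A toy Python model of the hit/miss figure may help. It assumes, as the example address 01173 suggests, that the last digit of an address selects one of the 8 words in a line and the remaining digits form the tag. Only the line shown in full (tag 0117) is populated, and the names `CACHE` and `lookup` are hypothetical.

```python
# Toy model of the tag/data figure. Assumption: the last address digit is
# the word index (0..7) within a line; the leading digits are the tag.
CACHE = {
    "0117": [35, 72, 55, 30, 64, 23, 16, 14],  # the one line shown in full
}


def lookup(address: str):
    """Return ("hit", data) if the tag is present, else ("miss", None)."""
    tag, word = address[:-1], int(address[-1])
    line = CACHE.get(tag)          # tag match against the stored tags
    if line is None:
        return ("miss", None)      # the data would come from main memory
    return ("hit", line[word])     # select the word within the line


print(lookup("01173"))  # ('hit', 30)
print(lookup("25004"))  # ('miss', None)
```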

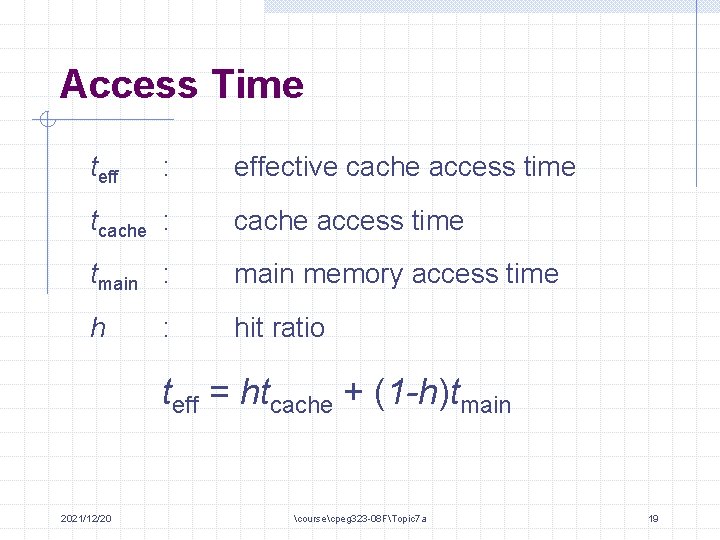

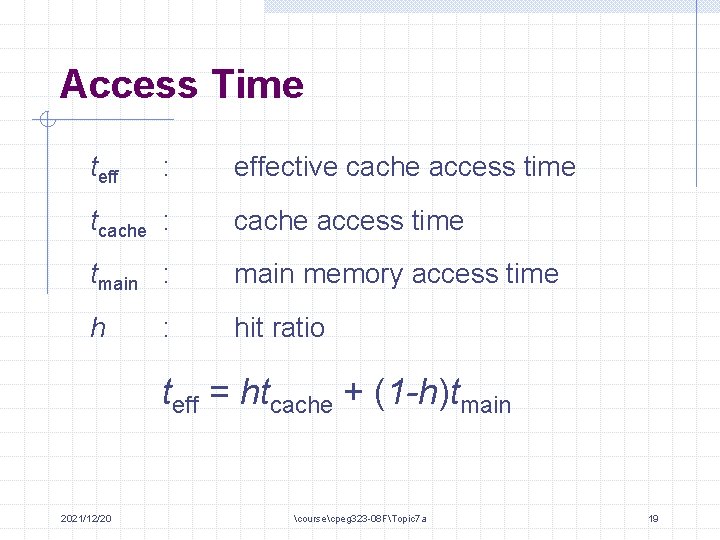

Access time:

- $t_{\text{eff}}$: effective cache access time
- $t_{\text{cache}}$: cache access time
- $t_{\text{main}}$: main memory access time
- $h$: hit ratio

$$t_{\text{eff}} = h\,t_{\text{cache}} + (1-h)\,t_{\text{main}}$$

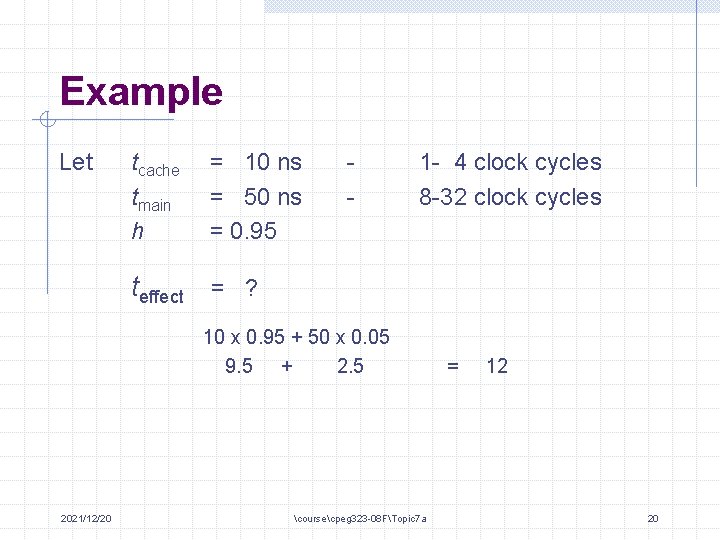

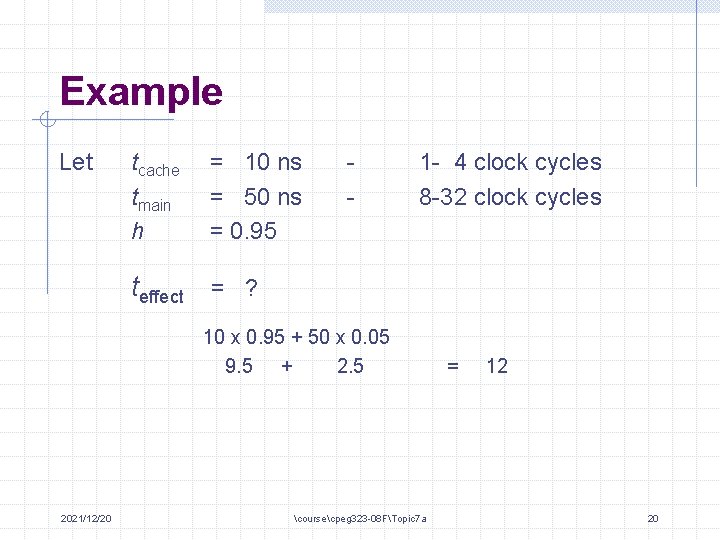

Example: let $t_{\text{cache}} = 10$ ns (1 to 4 clock cycles), $t_{\text{main}} = 50$ ns (8 to 32 clock cycles), and $h = 0.95$. Then

$$t_{\text{eff}} = 10 \times 0.95 + 50 \times 0.05 = 9.5 + 2.5 = 12 \text{ ns}$$
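The effective-access-time formula can be checked numerically. A minimal Python sketch, assuming the simple model above (each access costs either $t_{\text{cache}}$ on a hit or $t_{\text{main}}$ on a miss); `effective_access_time` is a hypothetical helper name.

```python
def effective_access_time(h: float, t_cache: float, t_main: float) -> float:
    """t_eff = h * t_cache + (1 - h) * t_main, all times in the same unit."""
    if not 0.0 <= h <= 1.0:
        raise ValueError("hit ratio h must lie in [0, 1]")
    return h * t_cache + (1.0 - h) * t_main


t_eff = effective_access_time(h=0.95, t_cache=10.0, t_main=50.0)
print(f"t_eff = {t_eff:.1f} ns")  # t_eff = 12.0 ns
```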

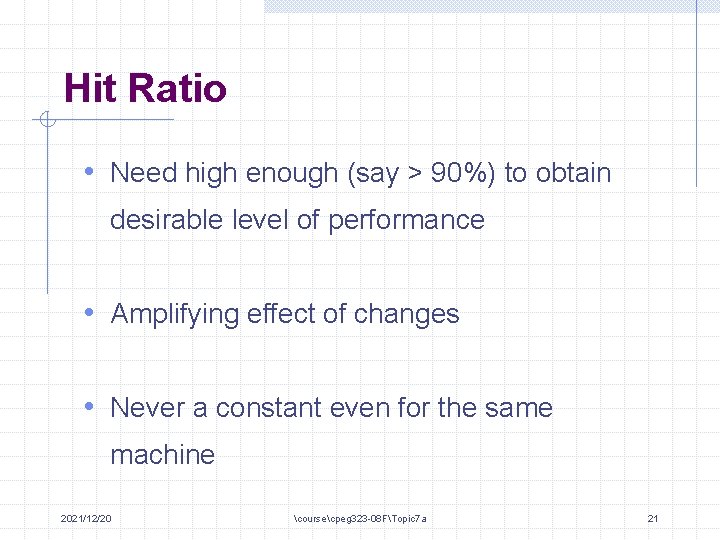

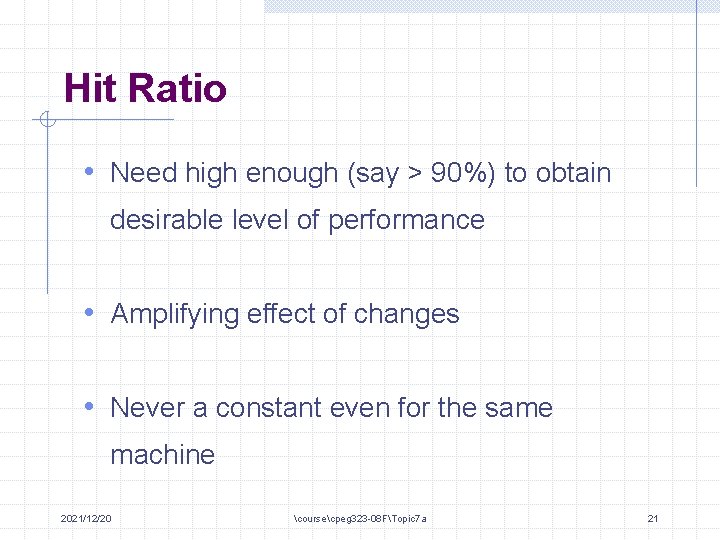

Hit ratio

- Must be high enough (say > 90%) to obtain a desirable level of performance.
- Changes in the hit ratio have an amplified effect on performance.
- Never a constant, even for the same machine.

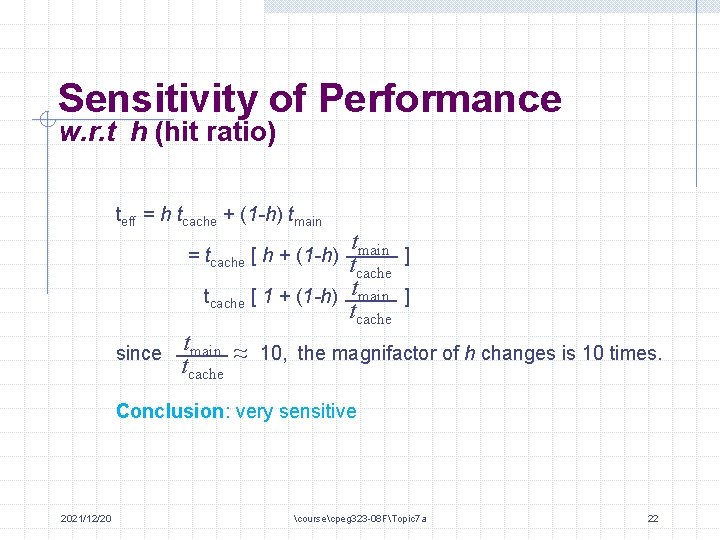

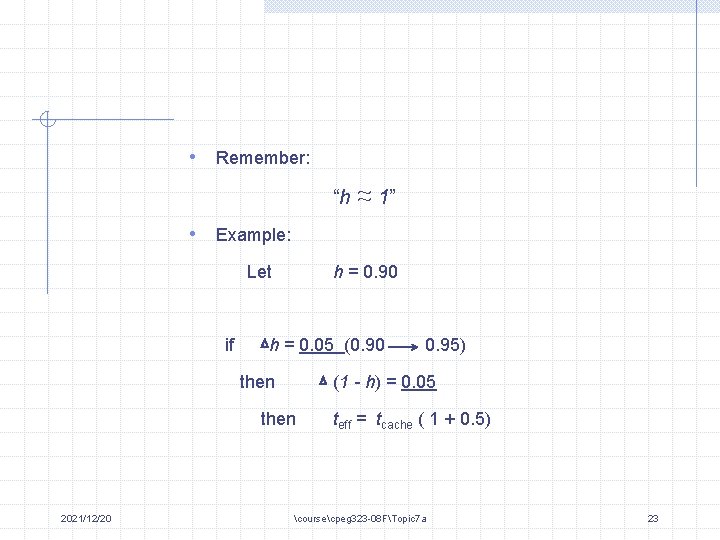

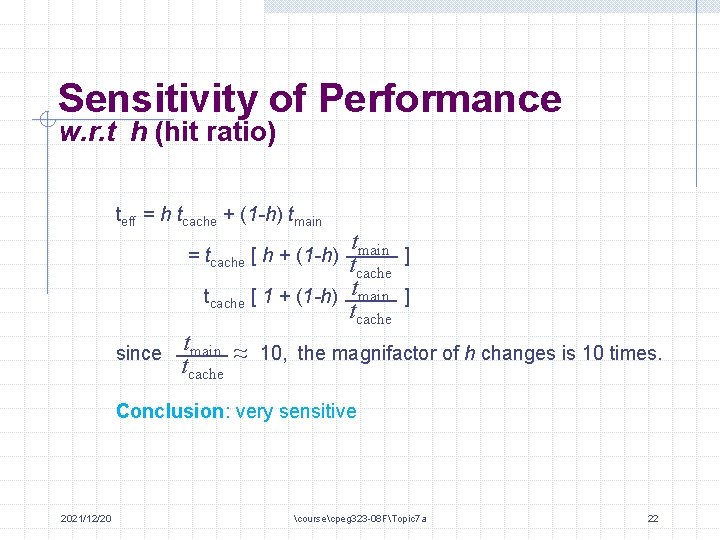

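Sensitivity of performance with respect to $h$ (hit ratio):

$$t_{\text{eff}} = h\,t_{\text{cache}} + (1-h)\,t_{\text{main}} = t_{\text{cache}}\left[h + (1-h)\frac{t_{\text{main}}}{t_{\text{cache}}}\right] \approx t_{\text{cache}}\left[1 + (1-h)\frac{t_{\text{main}}}{t_{\text{cache}}}\right]$$

where the approximation uses $h \approx 1$. Since $t_{\text{main}}/t_{\text{cache}} \approx 10$, any change in $(1-h)$ is magnified 10 times. Conclusion: performance is very sensitive to $h$.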

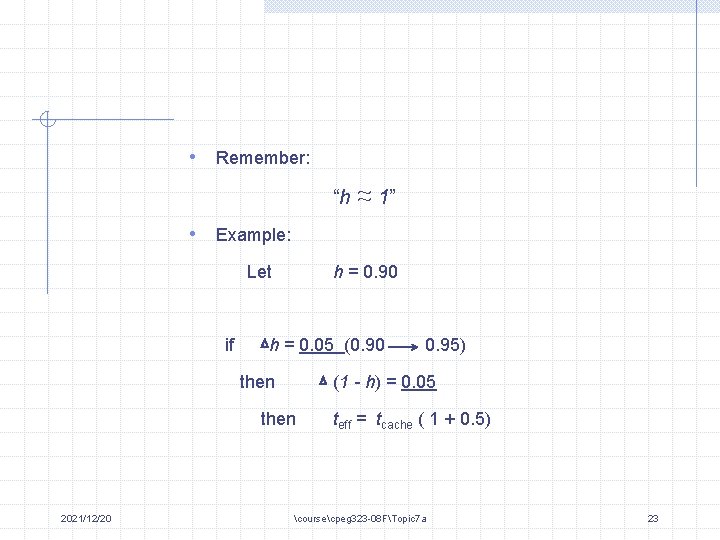

- Remember: $h \approx 1$.
- Example: if $h = 0.95$, then $(1-h) = 0.05$ and $t_{\text{eff}} \approx t_{\text{cache}}(1 + 0.5)$; if $h$ drops to $0.90$, then $(1-h) = 0.10$ and $t_{\text{eff}} \approx t_{\text{cache}}(1 + 1.0)$.
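To see the amplifying effect, compare the exact ratio $t_{\text{eff}}/t_{\text{cache}} = h + (1-h)r$ with the approximation $1 + (1-h)r$, where $r = t_{\text{main}}/t_{\text{cache}}$. A short Python sketch, assuming $r = 10$ as on the slide; `slowdown` is a hypothetical name.

```python
def slowdown(h: float, r: float, approximate: bool = False) -> float:
    """Return t_eff / t_cache, where r = t_main / t_cache."""
    if approximate:
        return 1.0 + (1.0 - h) * r  # relies on h being close to 1
    return h + (1.0 - h) * r        # exact for the simple hit/miss model


for h in (0.95, 0.90):
    exact = slowdown(h, 10.0)
    approx = slowdown(h, 10.0, approximate=True)
    print(f"h={h:.2f}: exact {exact:.2f}, approx {approx:.2f}")
# h=0.95: exact 1.45, approx 1.50
# h=0.90: exact 1.90, approx 2.00
```

Lowering $h$ by only 0.05 raises the approximate slowdown from 1.5 to 2.0, which is the "very sensitive" conclusion in numbers.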

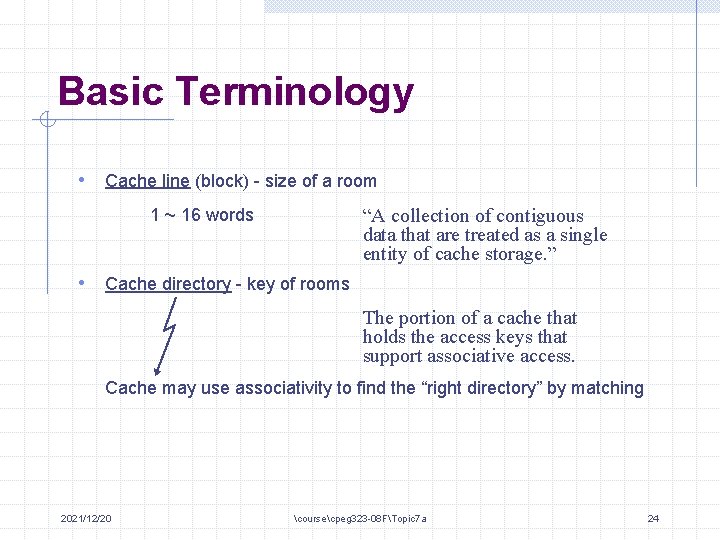

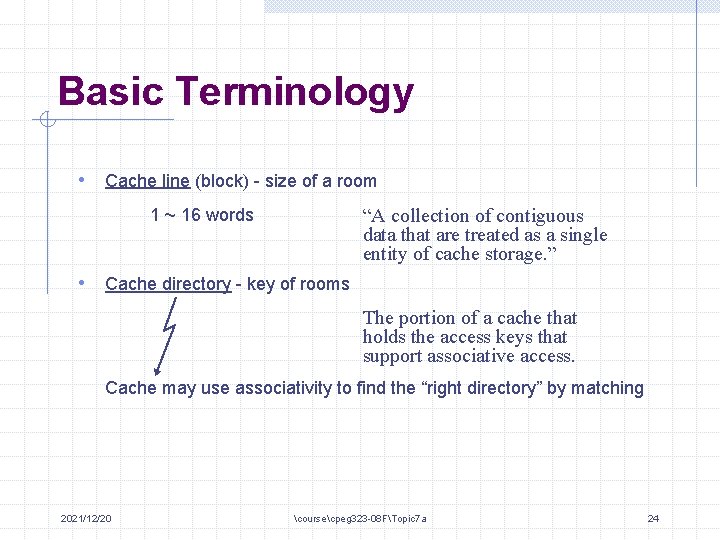

Basic terminology

- Cache line (block), the “size of a room”: “A collection of contiguous data that are treated as a single entity of cache storage.” (1 to 16 words)
- Cache directory, the “key of rooms”: the portion of a cache that holds the access keys that support associative access. The cache may use associativity to find the “right directory” entry by matching

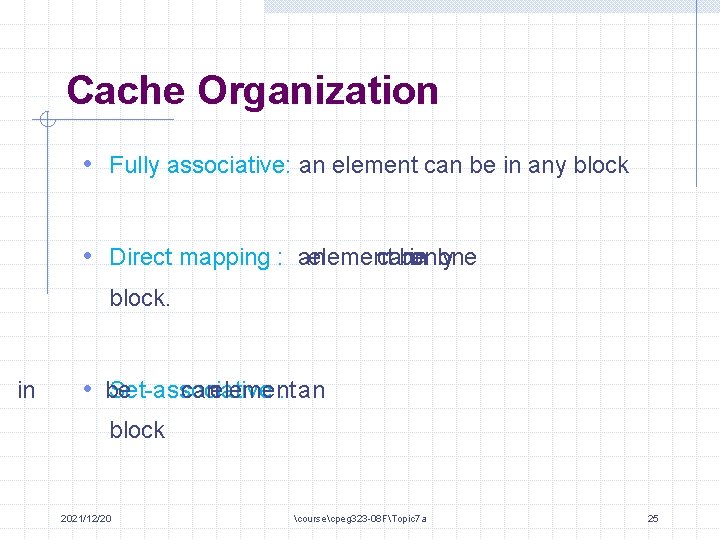

Cache organization

- Fully associative: an element can be in any block.
- Direct mapping: an element can be in only one block.
- Set-associative: an element can be in any block of one set.
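The three organizations differ only in how many block frames a given memory block may occupy. A minimal Python sketch, assuming a cache of `num_frames` frames grouped into `num_frames // ways` sets, with set `s` occupying frames `s*ways` through `s*ways + ways - 1` (a layout chosen only for illustration). Here `ways = 1` gives direct mapping and `ways = num_frames` gives full associativity; the function name is hypothetical.

```python
def candidate_frames(block: int, num_frames: int, ways: int) -> list:
    """Frames in which memory block `block` may be placed."""
    if ways < 1 or num_frames % ways:
        raise ValueError("ways must be a positive divisor of num_frames")
    num_sets = num_frames // ways
    s = block % num_sets                        # set index
    return list(range(s * ways, (s + 1) * ways))


# An 8-frame cache, memory block 12:
print(candidate_frames(12, 8, 1))  # direct mapped: [4]
print(candidate_frames(12, 8, 2))  # 2-way set associative: [0, 1]
print(candidate_frames(12, 8, 8))  # fully associative: [0, 1, 2, 3, 4, 5, 6, 7]
```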

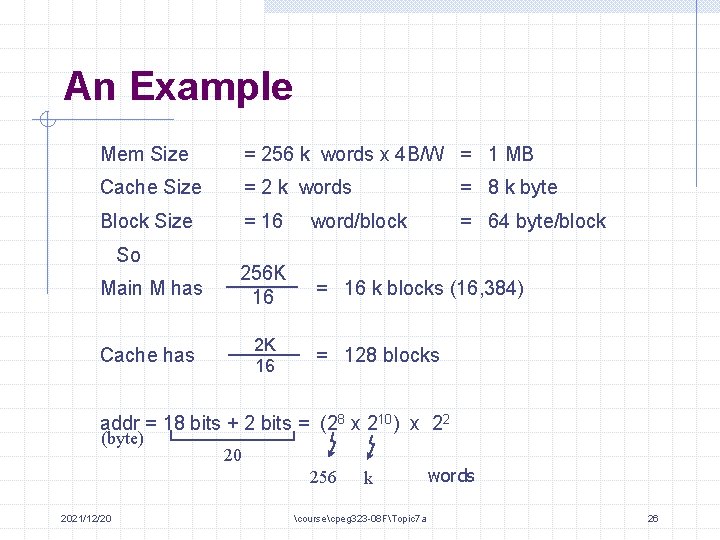

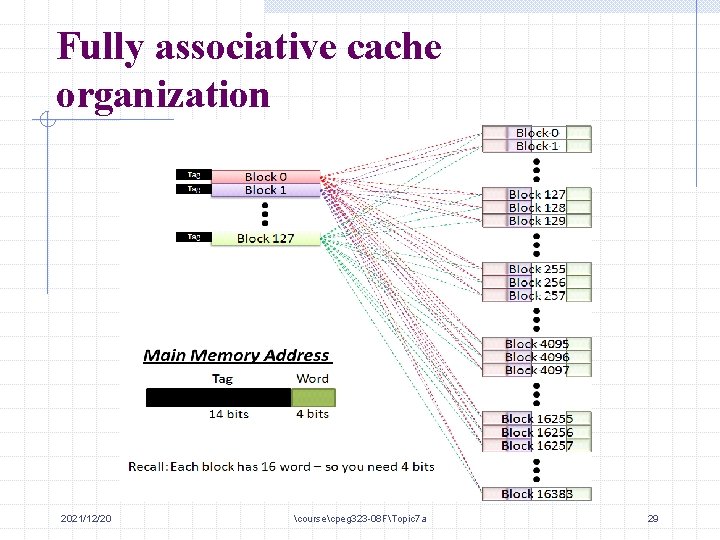

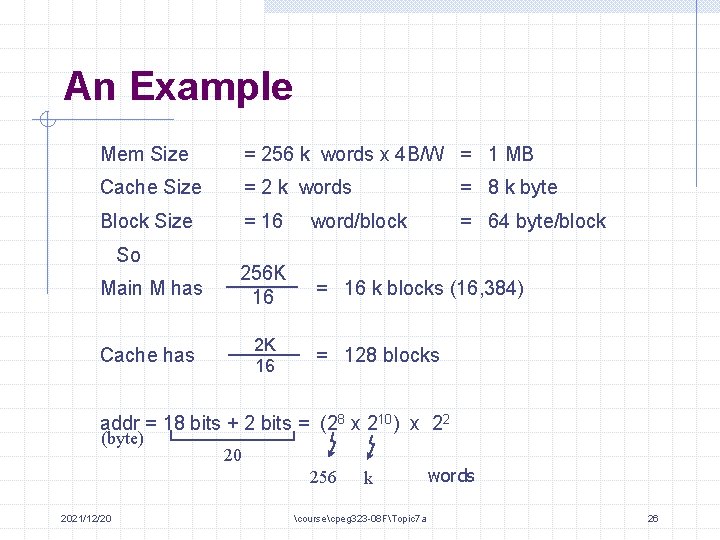

An example

- Memory size = 256K words × 4 B/word = 1 MB
- Cache size = 2K words = 8 KB
- Block size = 16 words/block = 64 bytes/block
- Main memory has 256K / 16 = 16K blocks (16,384)
- Cache has 2K / 16 = 128 blocks
- Address = 18 bits (word address) + 2 bits (byte within word) = 20 bits, since 256K words = $2^8 \times 2^{10} = 2^{18}$ words and $2^{18} \times 2^2 = 2^{20}$ bytes
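The sizes above follow from a few powers of two. A Python sketch that recomputes them, assuming (as the slide does) a byte-addressable memory with 4-byte words; the helper `log2_exact` is a hypothetical name.

```python
def log2_exact(n: int) -> int:
    """log2 of a power of two, computed without floating point."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a power of two")
    return n.bit_length() - 1


WORD_BYTES = 4
MEM_WORDS = 256 * 1024     # 256K words
CACHE_WORDS = 2 * 1024     # 2K words
BLOCK_WORDS = 16

mem_blocks = MEM_WORDS // BLOCK_WORDS                      # 16,384
cache_blocks = CACHE_WORDS // BLOCK_WORDS                  # 128
word_addr_bits = log2_exact(MEM_WORDS)                     # 18
byte_addr_bits = word_addr_bits + log2_exact(WORD_BYTES)   # 20

print(mem_blocks, cache_blocks, word_addr_bits, byte_addr_bits)  # 16384 128 18 20
print(MEM_WORDS * WORD_BYTES, CACHE_WORDS * WORD_BYTES)          # 1048576 8192
```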

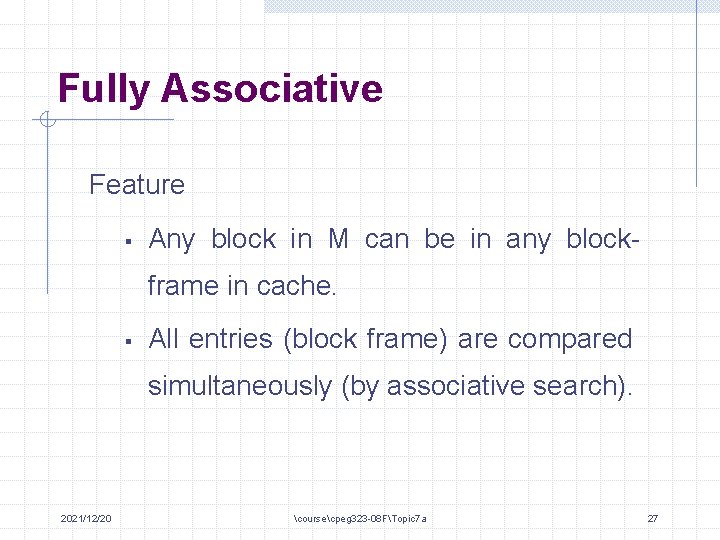

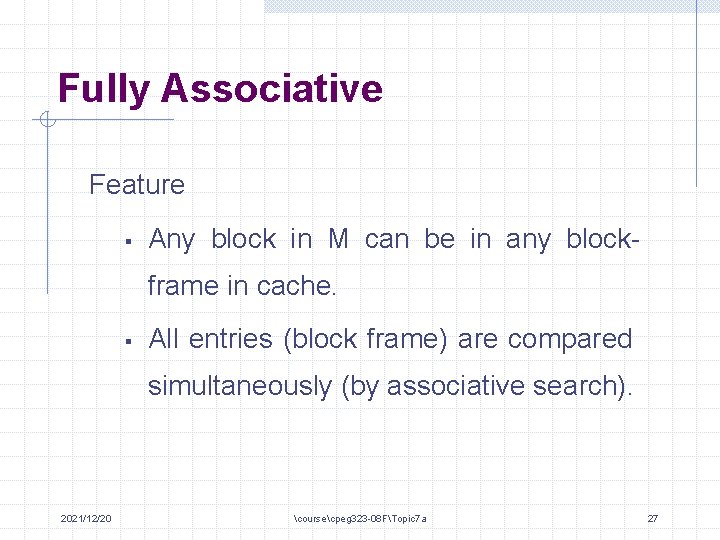

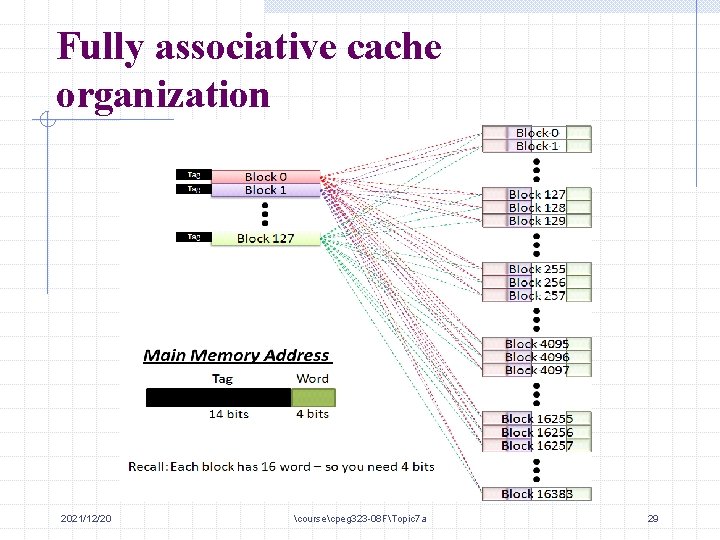

Fully associative

- Feature: any block in memory can be in any block frame in the cache.
- All entries (block frames) are compared simultaneously (by associative search).

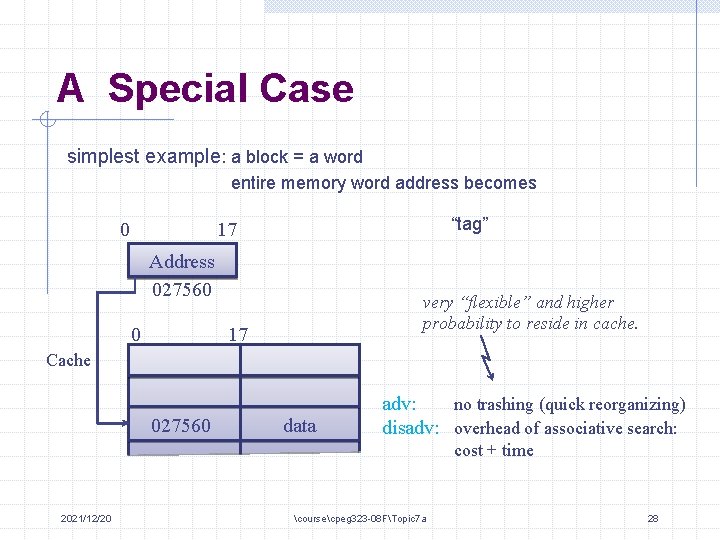

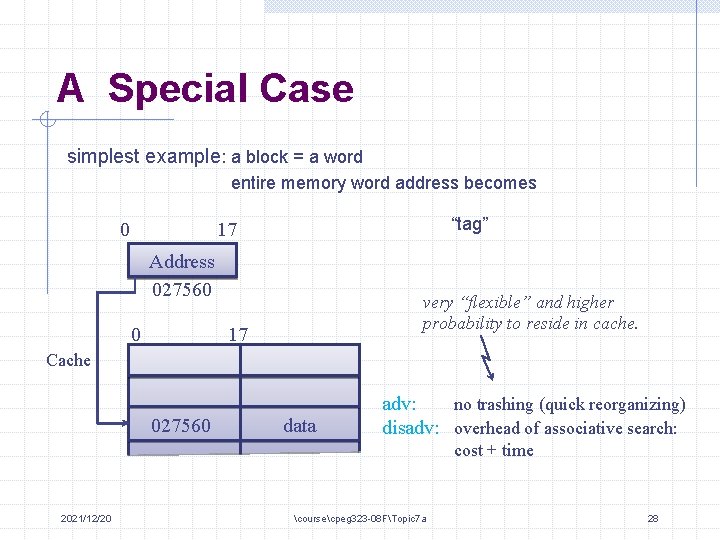

A special case. Simplest example: a block = a word, so the entire memory word address becomes the “tag” (figure: the 18-bit address 027560, bits 0 to 17, stored as the tag next to its data in the cache).

- Very “flexible”, with a higher probability that a block resides in the cache.
- Advantage: no thrashing (quick reorganizing).
- Disadvantage: overhead of the associative search (cost + time).
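The special case can be modeled in a few lines of Python. The loop only imitates the associative search, which hardware performs on all entries simultaneously. The class name, the round-robin replacement used once the cache is full, and the stored data value are assumptions for illustration, since no replacement policy is specified here; reading 027560 as an 18-bit octal word address (6 octal digits = 18 bits) is also an assumption.

```python
class FullyAssociativeCache:
    """One-word blocks: the entire word address serves as the tag."""

    def __init__(self, num_frames: int):
        self.frames = [None] * num_frames  # each entry: (tag, data) or None
        self.next_victim = 0               # round-robin pointer (assumption)

    def lookup(self, word_addr: int):
        # Compare the tag against every frame (done in parallel in hardware).
        for entry in self.frames:
            if entry is not None and entry[0] == word_addr:
                return entry[1]            # hit
        return None                        # miss

    def fill(self, word_addr: int, data) -> None:
        # Any frame may hold any block; use an empty frame if there is one.
        for i, entry in enumerate(self.frames):
            if entry is None:
                self.frames[i] = (word_addr, data)
                return
        self.frames[self.next_victim] = (word_addr, data)
        self.next_victim = (self.next_victim + 1) % len(self.frames)


cache = FullyAssociativeCache(num_frames=4)
cache.fill(0o027560, 1234)                               # hypothetical data
print(cache.lookup(0o027560), cache.lookup(0o000001))    # 1234 None
```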

Fully associative cache organization

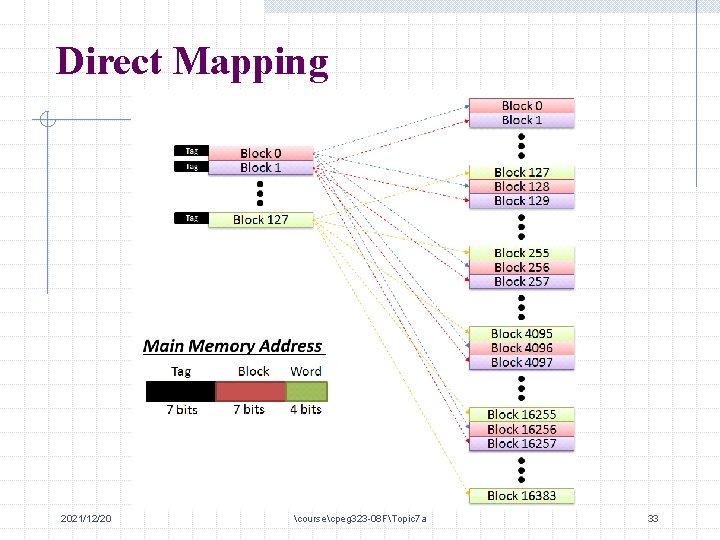

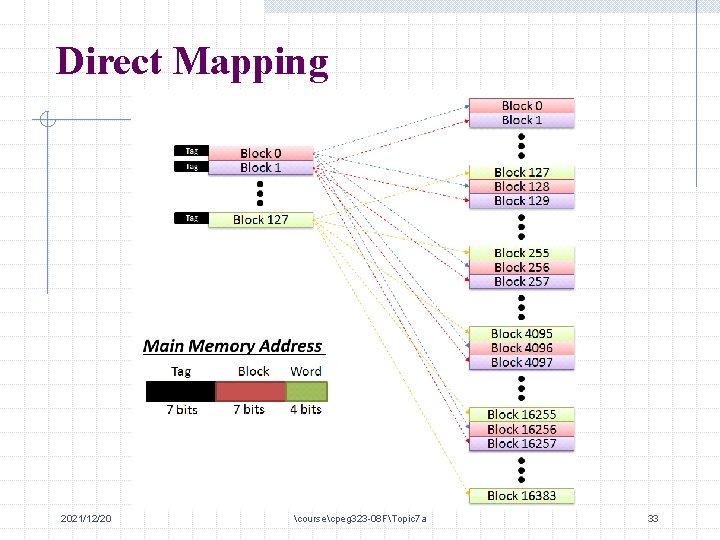

Direct mapping

- No associative match.
- From the memory address, the block is “directly” indexed to the block frame in the cache where it must be located. A comparison is then used to determine whether the access is a miss or a hit.

Direct mapping (cont’d)

- Advantages: simplest; fast (less logic); low cost (only one tag comparator is needed, hence the cache can be built from standard memory).
- Disadvantage: “thrashing”.

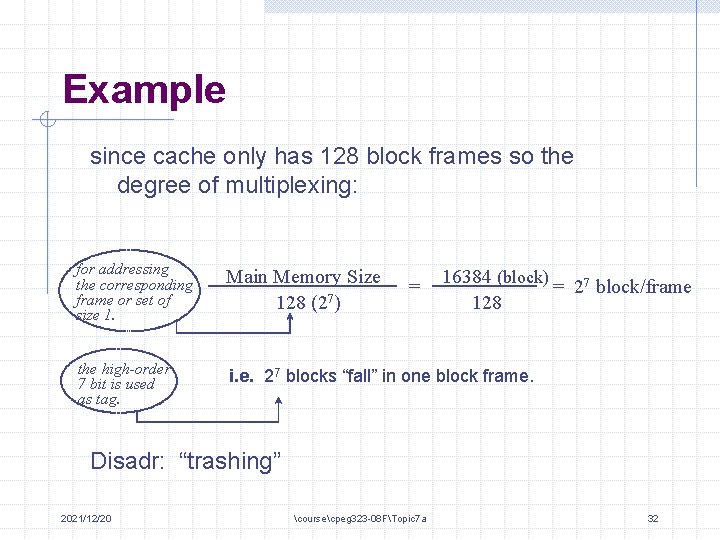

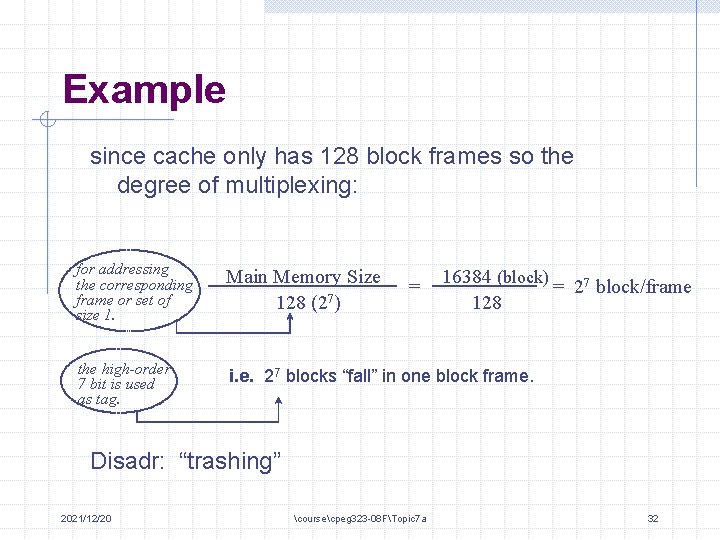

Example: since the cache has only 128 ($2^7$) block frames, the degree of multiplexing is

$$\frac{\text{main memory size}}{\text{number of block frames}} = \frac{16384 \text{ blocks}}{128} = 2^7 \text{ blocks/frame},$$

i.e., $2^7$ memory blocks “fall” into each block frame (a set of size 1). The low-order 7 bits of the block address select the corresponding frame, and the high-order 7 bits are used as the tag. Disadvantage: “thrashing”.

Direct Mapping

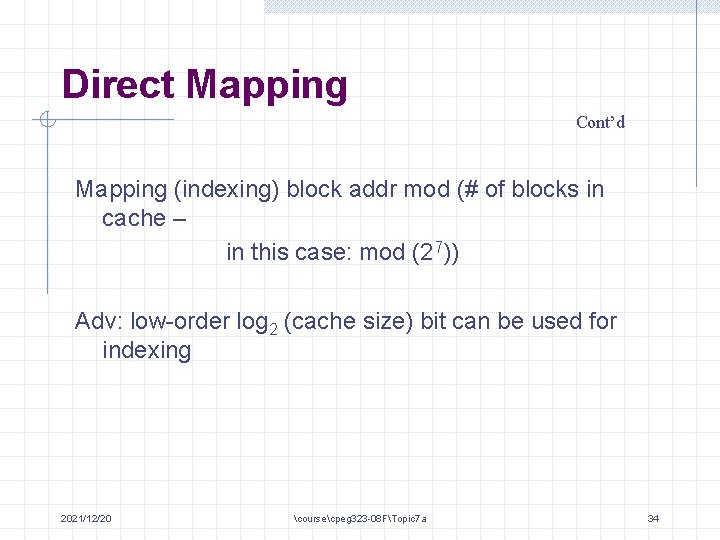

Direct mapping (cont’d)

- Mapping (indexing): block address mod (number of blocks in cache), in this case mod $2^7$.
- Advantage: the low-order $\log_2(\text{number of blocks in cache})$ bits of the block address can be used directly for indexing.
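For the running example (20-bit byte address, 16-word blocks, 128 block frames), the direct-mapped fields can be extracted with shifts and masks. A Python sketch; the field layout tag | index | word | byte follows the example above, while the function name is hypothetical.

```python
BYTE_BITS, WORD_BITS, INDEX_BITS = 2, 4, 7   # 4 B/word, 16 words/block, 128 frames
NUM_FRAMES = 1 << INDEX_BITS


def split_direct(byte_addr: int):
    """Split a 20-bit byte address into (tag, index, word, byte)."""
    byte = byte_addr & ((1 << BYTE_BITS) - 1)
    word = (byte_addr >> BYTE_BITS) & ((1 << WORD_BITS) - 1)
    block_addr = byte_addr >> (BYTE_BITS + WORD_BITS)   # 14-bit block address
    index = block_addr % NUM_FRAMES                     # same as the low-order 7 bits
    tag = block_addr >> INDEX_BITS                      # high-order 7 bits
    return tag, index, word, byte


# Blocks 5 and 5 + 128 compete for the same frame (a source of thrashing):
print(split_direct(5 << 6), split_direct((5 + 128) << 6))  # (0, 5, 0, 0) (1, 5, 0, 0)
```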

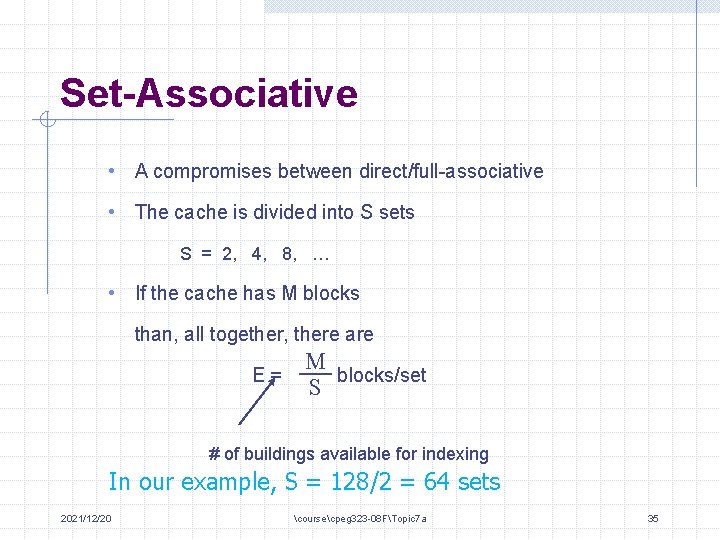

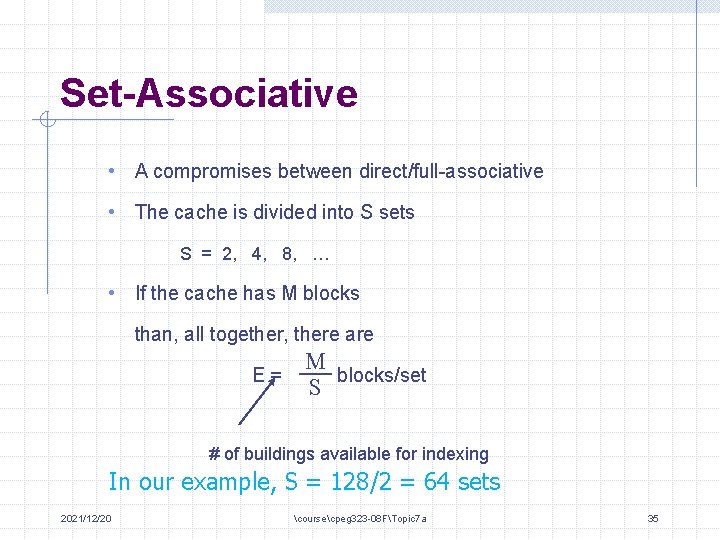

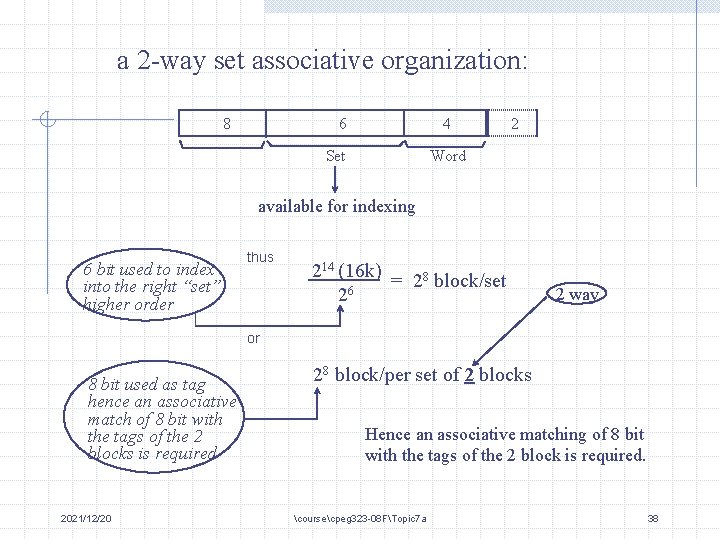

Set-associative

- A compromise between direct mapping and full associativity.
- The cache is divided into S sets, S = 2, 4, 8, …
- If the cache has M blocks, then altogether there are E = M/S blocks per set; S is the number of “buildings” available for indexing.
- In our example (2 blocks per set), S = 128/2 = 64 sets.

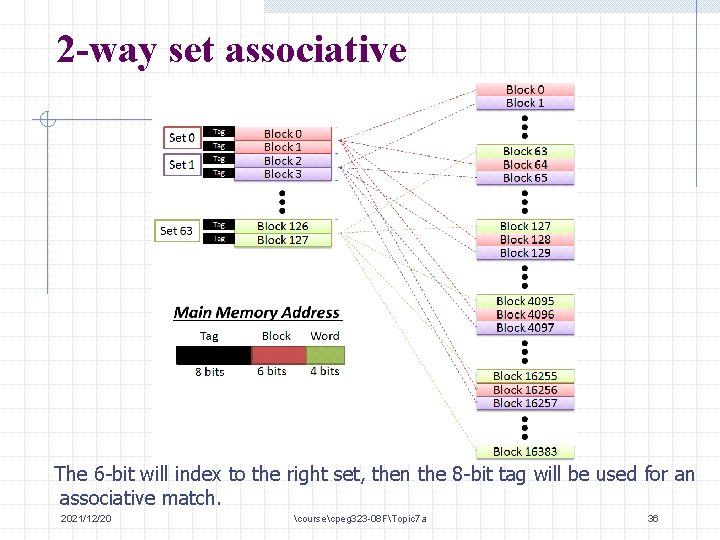

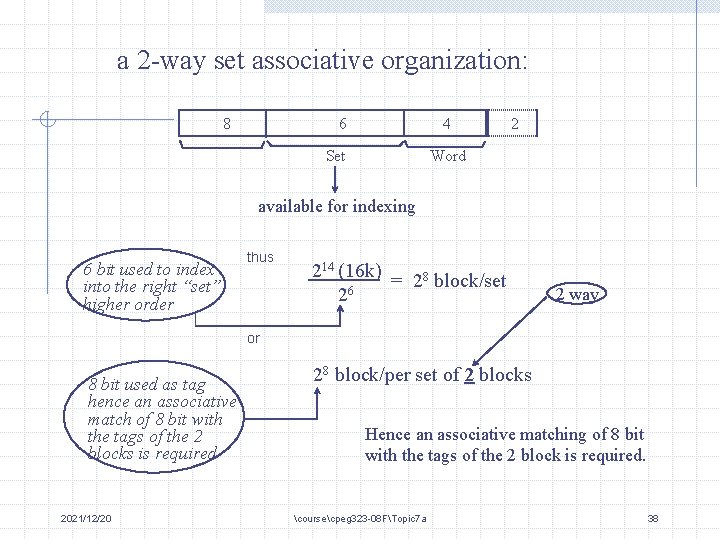

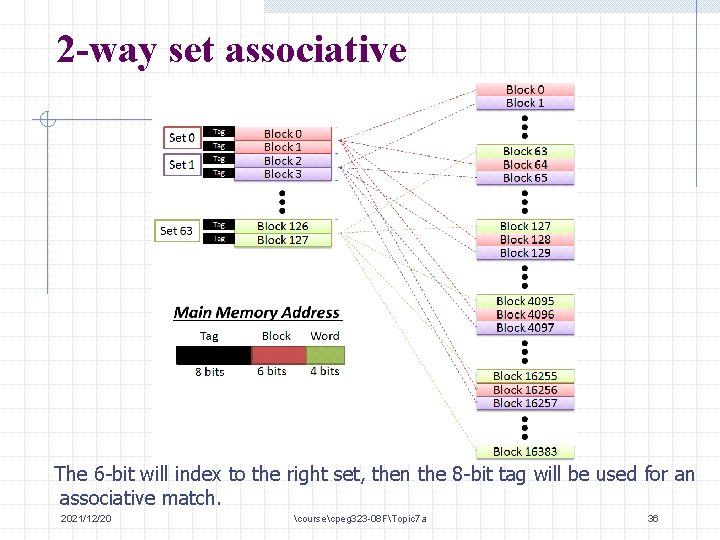

2-way set associative: the 6-bit set field indexes the right set, then the 8-bit tag is used for an associative match.

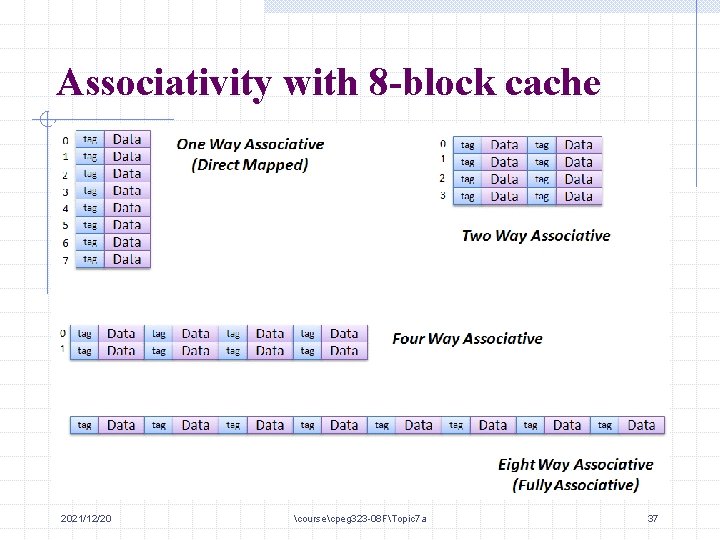

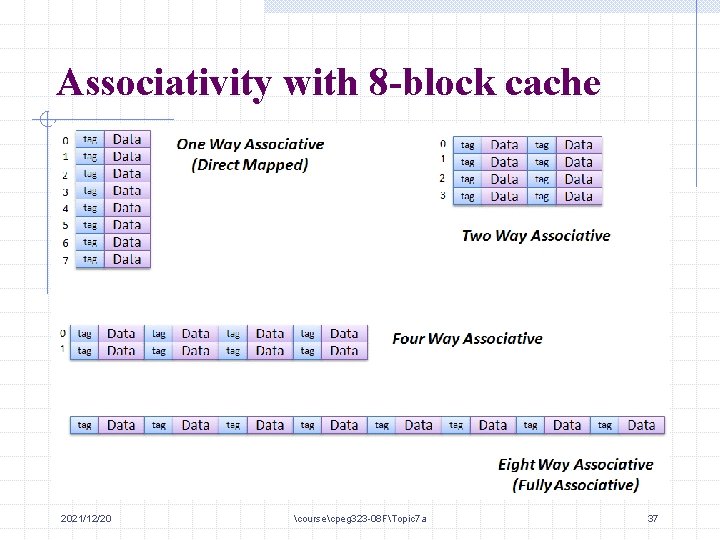

Associativity with an 8-block cache

A 2-way set-associative organization, with address fields Tag (8 bits) | Set (6 bits) | Word (4 bits):

- The 6 set bits index into the right set.
- There are $2^{14}$ (16K) memory blocks and $2^6$ sets, so $2^{14}/2^6 = 2^8$ memory blocks map to each set of 2 block frames; hence the high-order 8 bits are used as the tag.
- An associative match of the 8-bit tag against the tags of the 2 blocks in the set is therefore required.
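A 2-way version of the running example: 64 sets, an 8-bit tag, and a 4-bit word field in the 18-bit word address. This Python sketch keeps only tags (no data) and, as an assumption since replacement is not specified at this point, evicts the least recently used of the two blocks in a set. Class and function names are hypothetical.

```python
NUM_SETS, WAYS, WORD_BITS, SET_BITS = 64, 2, 4, 6


def split_2way(word_addr: int):
    """Split an 18-bit word address into (tag, set_index, word)."""
    word = word_addr & ((1 << WORD_BITS) - 1)
    block_addr = word_addr >> WORD_BITS          # 14-bit block address
    set_index = block_addr & (NUM_SETS - 1)      # 6 bits
    tag = block_addr >> SET_BITS                 # 8 bits
    return tag, set_index, word


class TwoWayCache:
    def __init__(self):
        # Per set: tags ordered from least to most recently used.
        self.sets = [[] for _ in range(NUM_SETS)]

    def access(self, word_addr: int) -> bool:
        """Return True on a hit, False on a miss (the block is then loaded)."""
        tag, s, _ = split_2way(word_addr)
        tags = self.sets[s]
        if tag in tags:              # associative match over the set's tags
            tags.remove(tag)
            tags.append(tag)         # mark as most recently used
            return True
        if len(tags) == WAYS:
            tags.pop(0)              # evict the LRU block (assumption)
        tags.append(tag)
        return False


# Blocks 0 and 128 collide when direct mapped (both index 0) but coexist here:
c = TwoWayCache()
print([c.access(b << WORD_BITS) for b in (0, 128, 0, 128)])  # [False, False, True, True]
```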

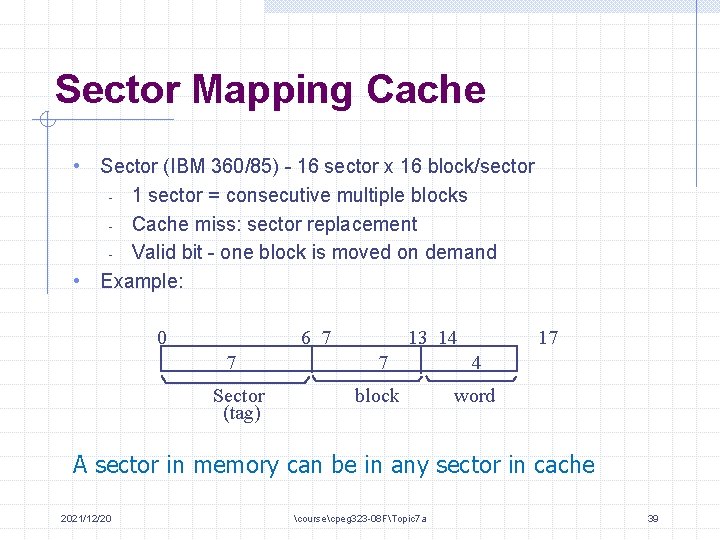

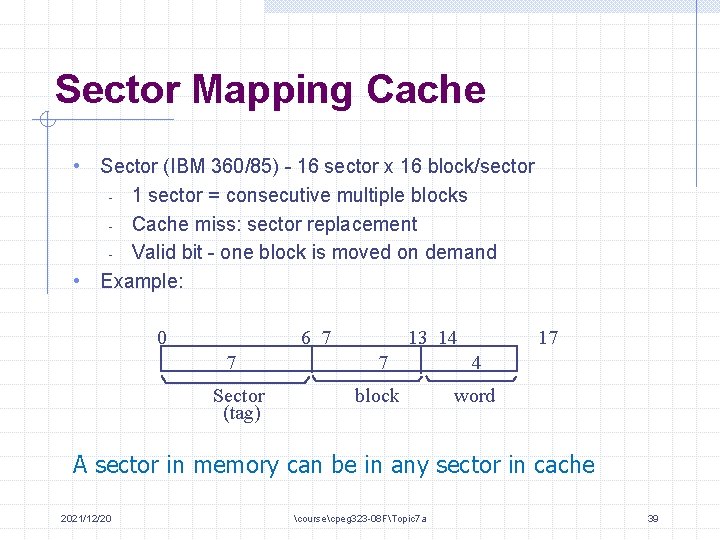

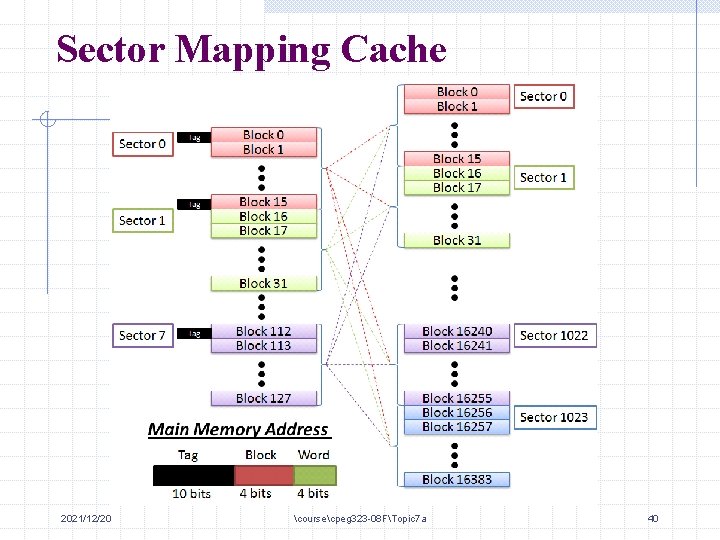

Sector mapping cache

- Sector (IBM 360/85): 16 sectors × 16 blocks/sector; 1 sector = multiple consecutive blocks.
- Cache miss: sector replacement.
- Valid bit per block: only one block is moved on demand.
- Example address format (figure): sector (tag) | block | word.
- A sector in memory can be in any sector in the cache.

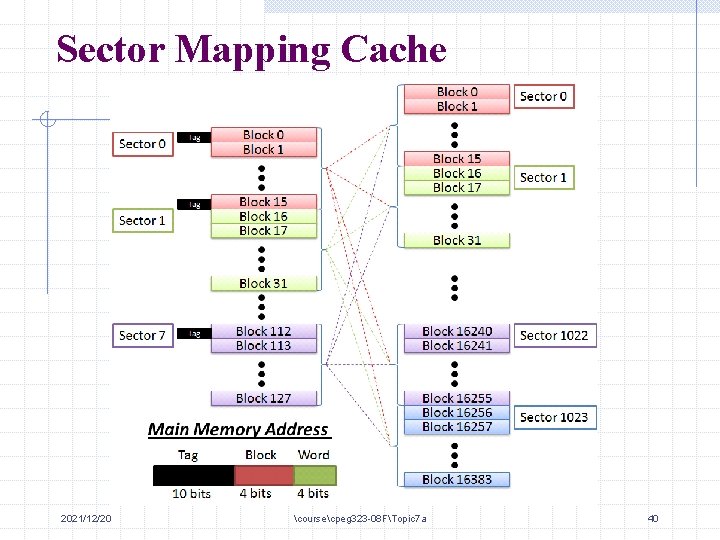

Sector Mapping Cache

Sector mapping cache (cont’d): the cache has 128 blocks at 16 blocks/sector, i.e., 128/16 = 8 sectors; main memory has 16K blocks / 16 = 1K sectors.
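A Python sketch of the sector-mapped version, assuming the running example’s 18-bit word address splits into a 10-bit sector tag (1K sectors), a 4-bit block-within-sector field, and a 4-bit word field. Any memory sector may occupy any of the 8 sector frames, and a per-block valid bit lets a miss load just the requested block. The round-robin choice of victim sector is an assumption, and all names are hypothetical.

```python
SECTOR_FRAMES, BLOCKS_PER_SECTOR, WORDS_PER_BLOCK = 8, 16, 16


def split_sector(word_addr: int):
    """Split an 18-bit word address into (sector_tag, block, word)."""
    word = word_addr % WORDS_PER_BLOCK
    block = (word_addr // WORDS_PER_BLOCK) % BLOCKS_PER_SECTOR
    sector = word_addr // (WORDS_PER_BLOCK * BLOCKS_PER_SECTOR)  # 10-bit tag
    return sector, block, word


class SectorCache:
    def __init__(self):
        self.tags = [None] * SECTOR_FRAMES
        self.valid = [[False] * BLOCKS_PER_SECTOR for _ in range(SECTOR_FRAMES)]
        self.victim = 0  # round-robin replacement pointer (assumption)

    def access(self, word_addr: int) -> str:
        sector, block, _ = split_sector(word_addr)
        if sector in self.tags:                  # sector present in cache
            f = self.tags.index(sector)
            if self.valid[f][block]:
                return "hit"
            self.valid[f][block] = True          # fetch only this block
            return "block miss"
        f = self.victim                          # sector miss: replace a sector
        self.victim = (self.victim + 1) % SECTOR_FRAMES
        self.tags[f] = sector
        self.valid[f] = [False] * BLOCKS_PER_SECTOR
        self.valid[f][block] = True              # still only one block is moved
        return "sector miss"


c = SectorCache()
print(c.access(0), c.access(5), c.access(16))  # sector miss hit block miss
```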

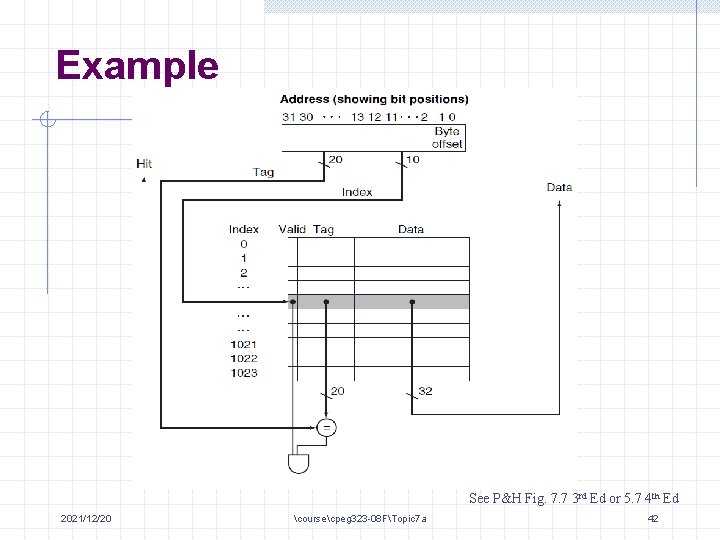

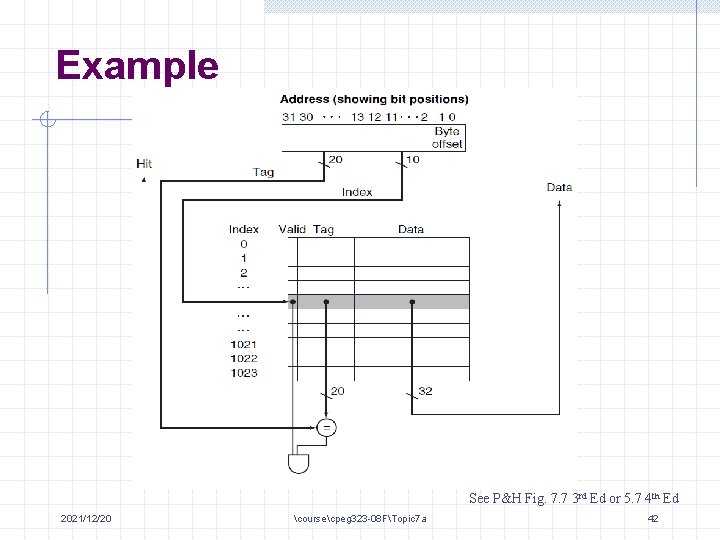

Example: see P&H Fig. 7.7 (3rd Ed.) or Fig. 5.7 (4th Ed.)

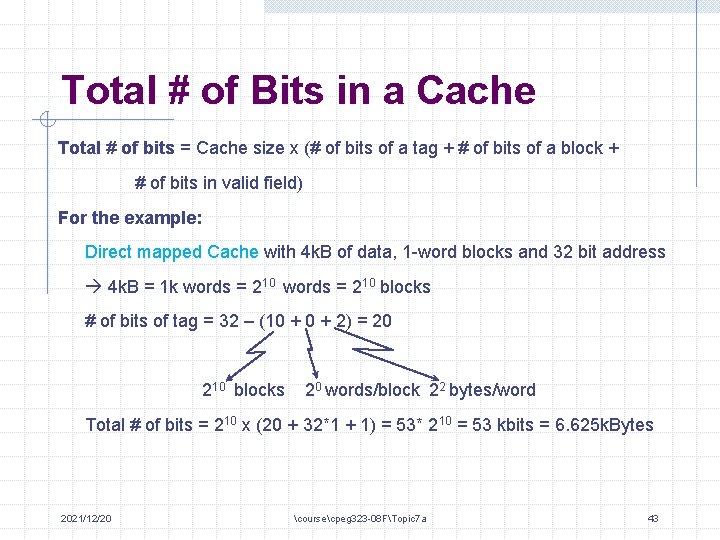

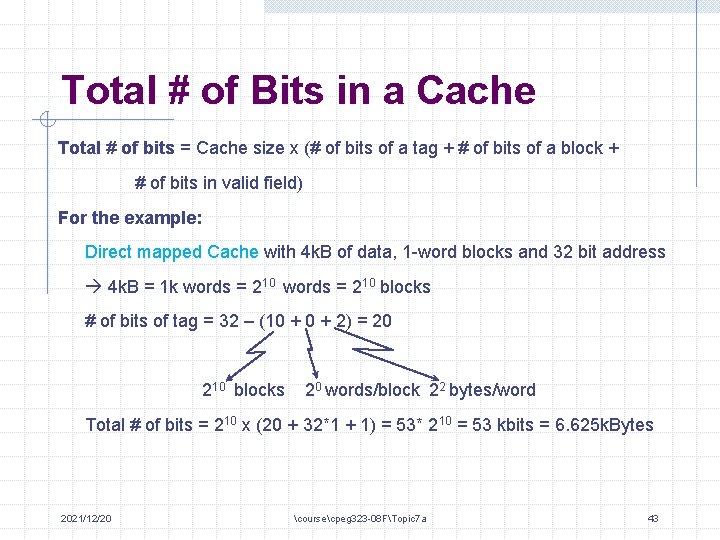

Total number of bits in a cache:

Total number of bits = number of blocks × (tag bits + data bits per block + valid bits)

Example: a direct-mapped cache with 4 KB of data, 1-word blocks, and 32-bit addresses.

- 4 KB = 1K words = $2^{10}$ blocks
- Address fields: $2^{10}$ blocks (10 index bits), $2^0$ words/block (0 bits), $2^2$ bytes/word (2 bits)
- Tag bits = 32 - (10 + 2) = 20
- Total number of bits = $2^{10} \times (20 + 32 \times 1 + 1) = 53 \times 2^{10}$ = 53 Kbits = 6.625 KB

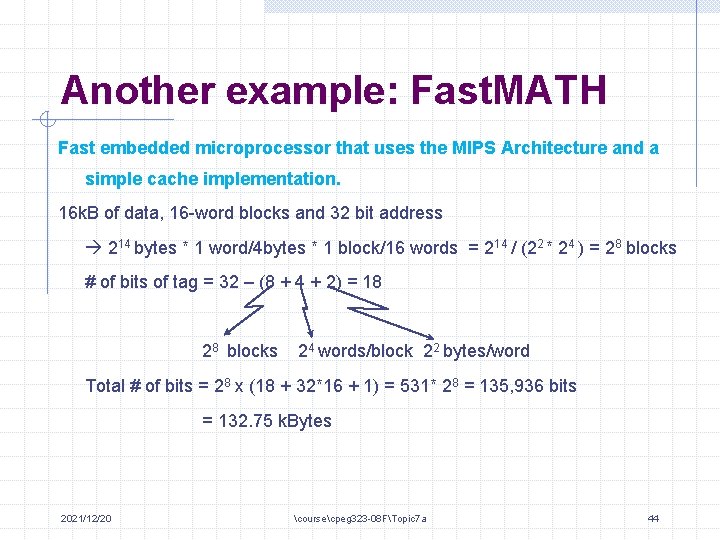

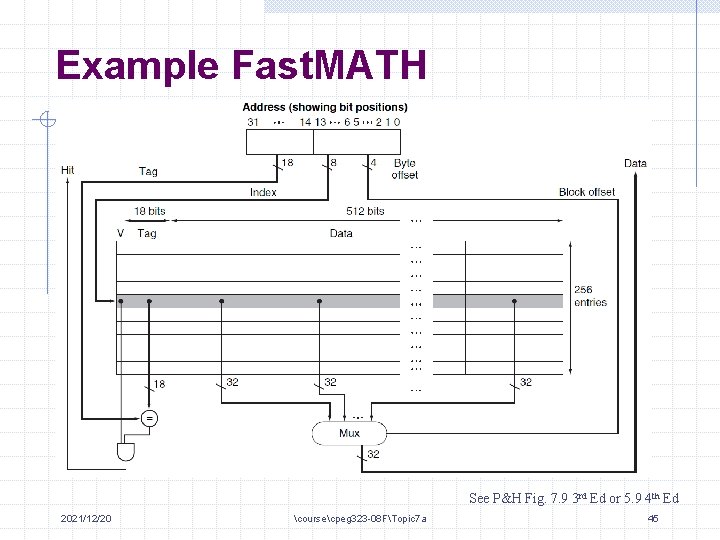

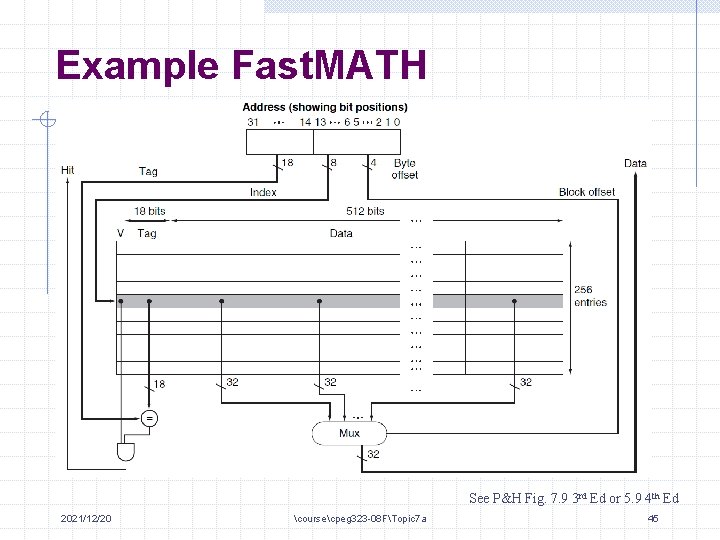

Another example: FastMATH, a fast embedded microprocessor that uses the MIPS architecture and a simple cache implementation. 16 KB of data, 16-word blocks, and 32-bit addresses:

- Blocks: $2^{14}$ bytes × (1 word / 4 bytes) × (1 block / 16 words) = $2^{14} / (2^2 \times 2^4) = 2^8$ blocks
- Tag bits = 32 - (8 + 4 + 2) = 18 (for $2^8$ blocks, $2^4$ words/block, $2^2$ bytes/word)
- Total number of bits = $2^8 \times (18 + 32 \times 16 + 1) = 531 \times 2^8$ = 135,936 bits = 132.75 Kbits (about 16.6 KB)
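Both bit counts can be reproduced with a small Python function. It assumes a direct-mapped cache with power-of-two sizes, 4-byte (32-bit) words, and one valid bit per block, as in the two examples; `cache_total_bits` and `log2_exact` are hypothetical names.

```python
def log2_exact(n: int) -> int:
    """log2 of a power of two, computed without floating point."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a power of two")
    return n.bit_length() - 1


def cache_total_bits(data_bytes, words_per_block, addr_bits=32, word_bytes=4):
    """Return (blocks, tag_bits, total_bits) for a direct-mapped cache."""
    blocks = data_bytes // (word_bytes * words_per_block)
    index_bits = log2_exact(blocks)
    offset_bits = log2_exact(words_per_block) + log2_exact(word_bytes)
    tag_bits = addr_bits - index_bits - offset_bits
    bits_per_block = tag_bits + 8 * word_bytes * words_per_block + 1  # tag + data + valid
    return blocks, tag_bits, blocks * bits_per_block


for kb, wpb in ((4, 1), (16, 16)):
    blocks, tag, total = cache_total_bits(kb * 1024, wpb)
    print(f"{kb} KB, {wpb}-word blocks: {blocks} blocks, {tag}-bit tag, "
          f"{total} bits = {total / 1024} Kbits = {total / 8 / 1024} KB")
# 4 KB, 1-word blocks: 1024 blocks, 20-bit tag, 54272 bits = 53.0 Kbits = 6.625 KB
# 16 KB, 16-word blocks: 256 blocks, 18-bit tag, 135936 bits = 132.75 Kbits = 16.59375 KB
```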

Example FastMATH: see P&H Fig. 7.9 (3rd Ed.) or Fig. 5.9 (4th Ed.)